Abstract

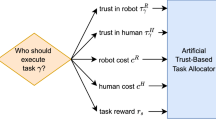

There is growing excitement about the potential of artificial intelligence (AI) to overcome some of the outstanding barriers to the full deployment of robots in daily life. However, acting and sensing in the physical world pose greater and different challenges for AI than analysing data in isolation, and it is important to reflect on which AI approaches are most likely to be applied successfully to robots. One question to address, among others, is how AI models can be adapted to specific robot designs, tasks and environments. This Perspective offers an assessment of what AI has achieved for robotics since the 1990s and proposes a research roadmap with challenges and promises. These range from maintaining large, up-to-date datasets that are representative of the diversity of tasks that robots may have to perform and of the environments they may encounter, to designing AI algorithms that are tailored specifically to robotics problems yet generic enough to apply to a wide range of applications and to transfer easily to a variety of robotic platforms. For robots to collaborate effectively with humans, they must predict human behaviour without relying on bias-based profiling. Explainability and transparency in AI-driven robot control are essential for building trust, preventing misuse and attributing responsibility in accidents. We close by describing what are, in our view, the primary long-term challenges: designing robots capable of lifelong learning, guaranteeing safe deployment and usage, and ensuring sustainable development.

Author information

Contributions

A.B. led the writing and editing of the paper, and the creation of the images. A.A.-S., M.B., W.B., P.C., M.C., R.D., D.K., K.G., Y.N. and D.S. contributed to the writing of the paper. All authors gave final approval for submission.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Machine Intelligence thanks Soheil Habibian and Luis J. Manso for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Billard, A., Albu-Schaeffer, A., Beetz, M. et al. A roadmap for AI in robotics. Nat Mach Intell 7, 818–824 (2025). https://doi.org/10.1038/s42256-025-01050-6

DOI: https://doi.org/10.1038/s42256-025-01050-6