Abstract

Importance

Worldwide, glaucoma is a leading cause of irreversible blindness. Timely detection is paramount yet challenging, particularly in resource-limited settings. A novel, computer vision-based model for glaucoma screening using fundus images could enhance early and accurate disease detection.

Objective

To develop and validate a generalised deep-learning-based algorithm for screening glaucoma using fundus image.

Design, setting and participants

The glaucomatous fundus data were collected from 20 publicly accessible databases worldwide, resulting in 18,468 images from multiple clinical settings, of which 10,900 were classified as healthy and 7568 as glaucoma. All the data were evaluated and downsized to fit the model’s input requirements. The potential model was selected from 20 pre-trained models and trained on the whole dataset except Drishti-GS. The best-performing model was further trained to classify healthy and glaucomatous fundus images using Fastai and PyTorch libraries.

Main outcomes and measures

The model’s performance was compared against the actual class using the area under the receiver operating characteristic (AUROC), sensitivity, specificity, accuracy, precision and the F1-score.

Results

The high discriminative ability of the best-performing model was evaluated on a dataset comprising 1364 glaucomatous discs and 2047 healthy discs. The model reflected robust performance metrics, with an AUROC of 0.9920 (95% CI: 0.9920–0.9921) for both the glaucoma and healthy classes. The sensitivity, specificity, accuracy, precision, recall and F1-scores were consistently higher than 0.9530 for both classes. The model performed well on an external validation set of the Drishti-GS dataset, with an AUROC of 0.8751 and an accuracy of 0.8713.

Conclusions and relevance

This study demonstrated the high efficacy of our classification model in distinguishing between glaucomatous and healthy discs. However, the model’s accuracy slightly dropped when evaluated on unseen data, indicating potential inconsistencies among the datasets—the model needs to be refined and validated on larger, more diverse datasets to ensure reliability and generalisability. Despite this, our model can be utilised for screening glaucoma at the population level.

Similar content being viewed by others

Introduction

Glaucoma is a multifactorial optic neuropathy that affects millions of people worldwide [1]. It is characterised by progressive degeneration of the optic nerve head (ONH), leading to irreversible vision loss if left undiagnosed and untreated in a timely manner [2, 3]. The early stages of the disease are usually asymptomatic, thus, proactive and effective screening methods are essential for preventing substantial vision loss. However, present glaucoma detection methods are restricted by improved access to comprehensive eye care, particularly in low- and middle-income countries where access to advanced diagnostic equipment and trained glaucoma specialists is limited [4, 5]. Moreover, current screening methods have a significant false-positive rate, which can cause unnecessary anxiety and burden [6, 7]. These challenges highlight the need for innovative approaches in glaucoma screening, including advancements in artificial intelligence.

Traditional techniques for glaucoma detection typically include measuring intraocular pressure (IOP), assessing the ONH using optical coherence tomography (OCT) and performing visual field tests. However, these methods can be time-consuming, expensive and prone to variability [8]. Measurement of IOP, while useful, often misses cases of normal-tension glaucoma where optic neuropathy occurs despite statistically normal pressure levels [9]. Furthermore, these clinical tests require interpretation by an experienced clinician, introducing subjective bias and limiting accessibility in resource-constrained settings. Although conventional diagnostic procedures are valuable, they serve as a ground for utilising more advanced technologies. Further, the need for in-person testing limits accessibility for patients in remote or underserved areas. These challenges underscore the need for more effective, accessible and unbiased approaches to screen glaucoma.

Computer vision-based models to diagnose glaucoma using fundus images [10]—a widely used and non-invasive imaging technique that can provide valuable details about ONH conditions—have shown promise. Fundus images reveal the early signs of glaucoma, which are relevant for screening and managing glaucoma [11, 12]. This imaging modality can be integrated with deep-learning algorithms to automatically extract glaucomatous features from fundus images. These algorithms have the potential to enhance the efficiency of glaucoma screening and diagnosis [13]. Nevertheless, most existing studies focus on specific populations or regions with limited data that lack the potential to be a generalisable model for glaucoma screening [14,15,16,17].

In this study, we sought to develop and validate a generalised deep-learning-based algorithm from 20 publicly accessible glaucomatous fundus data using the top-performing model out of 20 pre-trained models to ensure an accurate screening model for the disease, making it more useful in ophthalmic practice. This algorithm may provide a practical and cost-efficient way for screening glaucoma at the population level.

Methods

Description of datasets

We used 20 publicly accessible glaucoma datasets from cohorts across the world (eFig. 1 in Supplementary) [18, 19]. A full description of the cohorts is contained in the Supplementary Text. In brief, these datasets involved people from diverse ethnic groups, with retinal images being obtained using various cameras at differing resolutions. Our study design depends on pre-existing and anonymised data. Nevertheless, all investigations followed relevant guidelines and regulations, ensuring high ethical compliance standards throughout the study. All the datasets, excluding the Drishti-GS cohort, were used to train the classification model. The Drishti-GS dataset was used for external validation of our model [20]. The dataset’s ground truth was constructed by four experts with varying clinical experience (3–20 years), making it an ideal option for external validation.

Data pre-processing and augmentation

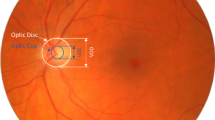

We utilised a diverse set of fundus images from 20 publicly accessible databases. These databases exhibited considerable variability in fundus images; some datasets had only ONH disc images, while others contained complete retinal fundus images. We pre-processed different image types to create a uniform set of ONH disc images—using OpenCV to identify and isolate circular objects (ONH) in fundus images [21]. This involved converting to grayscale, applying a Gaussian blur and using the Hough Circle Transform to improve circle detection [22]. The ONH images were then automatically cropped and resized to a uniform resolution of 512 × 512. If any image did not detect the ONH or if the ONH was off-centre, the image was manually cropped, focusing on the most clinically significant aspects of the fundus images [23]. After cropping, the image was downsized to 224 × 224 with three channels to ensure consistent dimensions for input into our deep-learning models for glaucoma screening.

Acknowledging the constraints of limited high-quality, publicly accessible fundus images, we increased our training dataset via data augmentation—a technique pivotal to preventing model overfitting and enabling robustness and generalisability [24]. To enhance our dataset’s diversity, various data augmentation techniques were employed, including random rotation, cropping, flipping, scaling, lighting adjustment, affine transformations and zoom, coupled with normalisation using ImageNet statistics, as shown in eTable 1 in Supplementary. Importantly, these augmentation techniques undistorted the critical features of the ONH disc images relevant for glaucoma diagnosis.

Model selection and training

Our study compared 20 deep-learning architectures for classifying healthy and glaucomatous disc images. This experiment included a variety of models, including ResNets (18, 34, 50, 101, 152 layers), VGG (16, 19 layers with batch normalisation), AlexNet, DenseNets (121, 161, 169, 201 layers), SqueezeNets (1.0, 1.1), GoogLeNet, ShuffleNet, ResNext (50_32x4d, 101_32x8d) and Wide ResNets (50_2, 101_2). We utilised the Fastai library using the cnn_learner function to create a learner object combining model, data, loss functions, and class weights [25]. All the models were trained for three epochs using a fine-tuning approach, and then we evaluated the accuracy of each model on our validation set, which consisted of 20% of the total 18,366 images. The process was repeated three times using randomly split data for training and testing purposes. For the comparative performance of the different models, we plotted a bar chart of the accuracies in eFig. 2 in Supplementary. The best-performing model (vgg19_bn using pre-trained weights from ImageNet [26]) was selected based on its performance and complexity. The vgg19_bn incorporates batch normalisation, which accelerates training and increases model stability by reducing internal covariate shifts [27]. The model’s parameters were altered to better fit our dataset by fine-tuning it for 15 epochs until the validation loss stopped decreasing. Following fine-tuning, we used the one-cycle policy to train the model, which adjusts the learning rate and momentum for more efficient training [28]. The training was terminated when the validation loss failed to improve over two consecutive epochs, enabled by a callback function.

The degree of agreement with the established ground truth of publicly available datasets varied considerably across datasets [29]. Subsequently, we employed the ImageClassifierCleaner from the Fastai library to review and clean our dataset [30]—excluding around 1% of the total images the model had incorrectly predicted. We repeated the shuffling and training process seven times and removed ~7% of the images to improve the overall quality of the dataset. The data-cleaning process effectively reduced the number of misclassified images from the dataset. The clean dataset showed a marked classification imbalance between healthy and glaucomatous fundus images: 59.7% healthy and 40.3% glaucomatous. We employed a weighted cross-entropy, a loss function commonly utilised in training a classification model, to address the problem of imbalanced data [31]. The model was re-trained on this refined data, which is more consistent and less likely to mislead the model. Finally, we evaluated the model’s performance on unseen data.

Model decision visualisation

We employed Gradient-weighted Class Activation Mapping (Grad-CAM) to improve the interpretability and transparency of deep-learning models—Grad-CAM provides valuable insights into the decision-making process by highlighting the important regions in an image relevant to predicting the healthy and glaucomatous disc images [32]. Grad-CAM calculates the gradient of the image score for a particular class and estimates the gradient of the final classification score concerning the weights of the last convolutional layer. These visualisations help uncover the underlying patterns and features that influence the model’s decision for clinicians, making it easier to validate and understand its predictions.

Statistical analyses

To evaluate the performance of our deep-learning model in distinguishing between healthy and glaucomatous discs, we applied various evaluation metrics: the AUROC, sensitivity (or recall), specificity, accuracy, precision and the F1-score. These metrics provide a comprehensive measure of the model’s accuracy, ability to avoid false positives and balance between precision and recall. The model’s prediction probabilities and true labels produced by the model were transformed into numpy arrays to facilitate subsequent computations. To validate the performance of these measurements, we utilised a bootstrap resampling method [33]. This method involved 4000 iterations of resampling with replacement from our dataset, which allowed for calculating 95% confidence intervals for each performance metric.

The experiment was performed on a virtual Ubuntu desktop (version 22.04) using NVIDIA A100 with 40GB of GPU RAM at Nectar Research Cloud [34]. Python (version 3.10.6) along with PyTorch (version 2.0.0+cu117), Fastai (version 2.7.12), TorchVision (version 0.15.1+cu117), Matplotlib (version 3.5.1) and Scikit-learn (version 1.2.2) libraries were employed [35,36,37].

Results

Data description

A total of 117,152 fundus images were retrieved from 20 databases with 12 unique countries and two unknown countries (Table 1). Non-referral glaucomatous images were excluded from the EyePACS dataset to prevent bias towards the healthy group, and 512 ungradable images were also excluded from the datasets. Our deep-learning model was trained and evaluated on an extensive dataset of 18,468 disc images from multiple clinical settings worldwide; 10,900 images were healthy, while 7568 had glaucoma.

Model performance

Our best-performing model demonstrated robust performance in discriminating between healthy and glaucomatous disc images, as shown in Table 2. The model achieved high accuracy, precision, recall and specificity in classifying the images. It successfully maintained a balance between sensitivity and specificity, as indicated by the AUROC of 0.9920, showing a strong ability to accurately identify glaucoma and healthy discs. Moreover, the F1 scores, which consider both precision and recall, were higher than 96% for both classes.

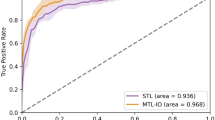

Generalisability of the model

To ensure the applicability of our model across populations, we validated it on the DrishtiI-GS dataset, which was excluded from the model’s training and validation steps. The model demonstrated significant generalisability, as highlighted by the performance metrics in Fig. 1. The model attained an overall accuracy of 87.13% (0.8713) and AUROC of 87.51% (0.8751) for distinguishing between healthy and glaucomatous discs. This performance on the DRISHTI-GS dataset suggests our best-performing model (vgg19_bn) has potential for glaucoma screening. However, further testing and validation on multiple datasets are essential to confirm its generalisability and reliability across different populations.

a Displays the confusion matrix from the validation data. b Illustrates the AUROC curve on the validation set, reflecting the model’s discriminative power between healthy and glaucomatous discs. For external validation on the Drishti dataset, (c) presents another confusion matrix showcasing the model’s predictive performance, while (d) features a plot summarising all key performance metrics such as sensitivity, specificity, accuracy, precision and F1-score.

Model errors: insights from the top losses

Our model for glaucoma screening had made some incorrect predictions on the validation set; 47 healthy images were wrongly categorised as having glaucoma (false positives), and 65 actual cases of glaucoma were missed (false negatives) (Fig. 1). Examination of the 47 misclassified healthy images revealed certain features, such as optic disc cupping, which could be associated with glaucoma. Among the 65 actual glaucoma cases the model missed, several could be early or borderline cases where pathological changes in the optic disc are less distinct. The conclusions from this error analysis provide valuable insights into areas for improving our model. To further understand the nature of our model’s misclassifications, we utilised the top_losses function from the Fastai library to visualise the instances with the highest loss, shown in Fig. 2. This analysis provides a comprehensive picture of our model’s strengths and weaknesses, guiding us in refining our model for improved accuracy in glaucoma screening.

Gradient-weighted class activation mapping (Grad-CAM)

Our classification model achieved promising results, exhibiting a robust capacity to screen glaucoma at a population level using fundus images. However, to further evaluate the decision-making process of our deep-learning model, we utilised Grad-CAM to visualise which regions in the fundus images influenced the model’s classification results. Grad-CAM heatmaps helped visualise the critical areas of fundus images that the model focused on to discriminate between healthy and glaucomatous discs. The heatmaps, represented in terms of higher-intensity-coloured regions superimposed on the fundus images, provided an intuitive understanding of the salient features recognised by our model. Moreover, localised areas around the optic nerve and retinal nerve fibre layer, where early signs of glaucomatous damage commonly occur, were also emphasised in the heatmaps (Fig. 3).

The Grad-CAM visualisations provided valuable insights into classifying fundus images as healthy or glaucomatous. As expected, in glaucomatous images, the heatmaps frequently highlighted the ONH, a critical region for diagnosing glaucoma due to characteristic features such as increased cup-to-disc ratio and neuroretinal rim thinning—which suggests the model correctly focused on clinically relevant areas when detecting glaucoma. Interestingly, healthy images demonstrated a diffused heatmap, implying that the model’s decision was guided more by the absence of pathological traits than the presence of specific healthy features. Saliency maps are primarily clustered at the ONH of glaucomatous discs.

Discussion

In this study, we aimed to develop and validate a generalised glaucoma screening model. The best-performing model (vgg19_bn) achieved promising accuracy, highlighting the potential of our approach to revolutionise the landscape of glaucoma screening. To the best of our understanding, no prior study had attempted to use extensive glaucomatous fundus data from diverse demographics and ethnicities, with images from various fundus cameras with different resolutions. Most deep-learning-based models were trained on a limited dataset for classifying healthy and glaucomatous fundus images from a single institution, which made the models non-generalisable for different populations and settings [10]. Our training dataset included 7,498 glaucoma cases and 10,869 healthy cases, gathered from 19 different datasets. This dataset—one of the most extensive clusters of fundus images ever used to develop a generalised glaucoma screening model—represents a wide range of ethnic groups and fundus cameras used, which could improve our model’s performance and make it more applicable globally. The best-performing model was selected in this study out of 20 pre-trained models (eFig. 2 in Supplementary)—choosing the right deep-learning architecture for a specific task is extremely important [38]. This extensive and diverse dataset using the potential deep-learning architecture can enhance the model’s generalisability, making it a versatile and practical tool for glaucoma screening in diverse populations.

Our best-performing model exhibited exceptional discriminative ability between glaucomatous and healthy discs; the model learned glaucomatous features from heterogeneous data. The vgg19_bn attained a high degree of AUROC of 99.2%, which was exceeded by ophthalmologists (82.0) and deep-learning systems (97.0) [39], demonstrating its potential for practical use in glaucoma screening. Li et al. trained and validated their model on 31,745 and 8000 fundus images, respectively [40]. The model performed exceptionally well, achieving an AUC of 0.986 with a sensitivity of 95.6%, a specificity of 92.0% and an accuracy of 92.9% for identifying referable glaucomatous optic neuropathy. Our model maintained balance across all performance metrics, as revealed in Table 2, for both glaucoma and healthy cases. The model was unbiased towards any particular class, making it a reliable tool for glaucoma screening for wider populations of glaucoma.

Furthermore, we implemented the DenseNet201, ResNet101 and DenseNet161 architectures (eTable 2 in Supplementary). The DenseNet201 demonstrated a classification accuracy of 96%, with an AUROC of 99%. Steen et al. employed the same DenseNet201 architecture, but their model achieved an accuracy of 87.60%, precision of 87.55%, recall of 87.60% and an F1 score of 87.57% on publicly available datasets containing 7299 non-glaucoma and 4617 glaucoma images [41]. Our study has a unique strength in balancing sensitivity and specificity, evident from our model’s high AUROC values—a significant advantage in real-world clinical settings. Many previous models had difficulty maintaining this balance, resulting in high false positive or false negative rates [16, 17, 42].

Liu et al. trained a CNN algorithm for automatically detecting glaucomatous optic neuropathy using a massive dataset of 241,032 images from 68,013 patients, and the model’s performance was impressive [43]. However, the model struggled with multiethnic data (7877) and images of varying quality (884), revealing a drop in AUC with 7.3% and 17.3%, respectively. In contrast, our model demonstrated a modest decline in accuracy, ~9.6%, when tested on the DRISHTI-GS dataset. We suspect that part of this performance shift might be due to inconsistencies and the lack of a clearly defined protocol for glaucoma classification across the publicly available dataset. We discovered specific variances in the classification criteria for glaucoma within the dataset (Fig. 2), which may have contributed to the slight drop in accuracy. Despite this, the model’s accuracy remained potent, indicating a strong generalisation capability. Nevertheless, it would be useful to evaluate the model’s performance across different datasets to confirm its reliability and generality further.

Investigating our model’s top losses, we ascertained two significant insights. First, the model did not perform well in classifying borderline cases, suggesting a need for advanced training techniques to handle such intricacies. Second, we identified potential mislabelling of fundus images within our dataset. This mislabelling could introduce confusion during the model’s learning phase, thereby decreasing performance. Both findings highlight the need for robust data quality checks and expert verification during dataset preparation. To improve the generalisability of the CNN model for glaucoma screening, we should consider accurate data labelled for training a model by glaucoma experts based on clinical tests rather than expanding the fundus data from multiethnic populations. Only five datasets (ACRIMA, REFUGE1, LAG, KEH and PAPILA) classify glaucoma based on comprehensive clinical examinations. In contrast, the remaining datasets used in this study either lacked detailed information regarding their study-specific classification of glaucomatous fundus images, or the diagnosis was solely based on fundus images. Given that accurate data labelling based on clinical tests is essential for training a robust model, the heterogeneity of disease classification may have impacted our model. Additional work profiling the validity and accuracy of these datasets is required.

We explored the decision-making process of our deep-learning model employing Grad-CAM to create heatmaps for the input fundus images. Heatmaps generated using Grad-CAM highlighted the regions of the fundus images that the model analysed when determining the presence or absence of glaucoma. Interestingly, the model’s emphasis areas align well with those that ophthalmologists would typically examine, such as the optic disc and cup, strengthening the clinical relevance of our model. These visual insights add a layer of transparency to our deep-learning model and provide a key link between automated classification and clinical understanding. These insights from the Grad-CAM heatmaps will be invaluable in ensuring the model’s decision-making process correlates with the clinical indicators of glaucoma. This can build clinicians’ trust in these algorithms, allowing for wide adoption in clinical practices.

Although our study demonstrates promising results, there are several limitations. First, our dataset had mislabelled fundus images, which could impact our model’s learning process and accuracy. We employed a data-cleaning procedure to address these challenges, removing 1306 images using ImageClassifierCleaner [30]. This process led to a cleaner and more reliable dataset, upon which we re-trained our model. This refinement considerably enhanced the model’s robustness and improved its generalisation ability to unseen data. Second, we observed that class imbalance could reduce the model’s effectiveness; however, we utilised class weight balance techniques to address this. Furthermore, a data augmentation technique was used in the training phase that could be different from the actual clinical images. Next, our Grad-CAM heatmaps indicated that the model occasionally focused on non-relevant regions for classification decisions, implying that the model might be learning from noise or artifacts within the images. Despite this limitation, the heatmaps confirmed that the model based its predictions on clinically interpretable features. Next, a substantial imbalance in the dataset (eTable 3 in Supplementary), with Non-Caucasians representing the majority of healthy cases (76.4%) and Caucasians accounting for nearly half of the glaucoma cases (47.4%), while Hispanics are underrepresented. This may impact our model’s performance and generalisability across different populations. Our study also acknowledges the publicly unavailable glaucomatous fundus data from the African continent (eFigure 1 in Supplementary) for our model’s training and validation. Incorporating glaucoma datasets from African countries could be highly beneficial to further enhance our model’s generalisability, especially in under-resourced areas. Finally, our model’s external validation was conducted solely on the DRISHTI-GS dataset. Future studies should aim to validate the model across multiple datasets, diverse populations and varied imaging devices to ensure broader applicability. Additionally, our model did not integrate clinical data, such as patients’ glaucoma history or IOP measurements, and visual field data, which could further enhance its predictive capabilities. Despite these limitations, the potential of our refined model for automated glaucoma screening remains significant and provides exciting prospects for future enhancements.

This research used fundus images to develop a robust computer vision model for glaucoma screening. The best-performing model (vgg19_bn) ascertained high values across multiple evaluation metrics for discerning disease status between glaucoma and healthy cohorts. This model offers a fast, cost-effective and highly accurate tool that can assist eye care practitioners in the decision-making process, ultimately improving patient outcomes and reducing the socioeconomic burden of glaucoma. However, the broad applicability of our model still needs to be validated on more ethnically diverse datasets to ensure its reliability and generalisability. Future studies should focus on the collation of images from people of diverse backgrounds.

Summary

What was known before

-

Glaucoma detection methods are restricted by improved access to comprehensive eye care, particularly in low- and middle-income countries. Current screening methods have a significant false-positive rate, which can cause unnecessary anxiety and burden for patients. Existing studies focus on specific populations or regions with limited data that lack the potential to be a generalisable computer vision model for glaucoma screening.

What this study adds

-

A robust computer vision model for glaucoma screening using fundus images. This study collected data from 20 publicly accessible databases and a potential model was selected from 20 pre-trained architectures. A computer vision model can assist ophthalmologists and optometrists in the decision-making process, improving patient outcomes and reducing socioeconomic burden. Identification of potential inconsistencies among datasets and the need for refining and validating the model on larger, more diverse datasets to ensure reliability and generalisability.

Data availability

All data used in this study are publicly available, with links provided in the supplementary materials.

References

Zhang N, Wang J, Li Y, Jiang B. Prevalence of primary open angle glaucoma in the last 20 years: a meta-analysis and systematic review. Sci Rep. 2021;11:1–12.

Medeiros FA, Zangwill LM, Bowd C, Mansouri K, Weinreb RN. The structure and function relationship in glaucoma: implications for detection of progression and measurement of rates of change. Investig Ophthalmol Vis Sci. 2012;53:6939–46.

Stein JD, Khawaja AP, Weizer JS. Glaucoma in adults—screening, diagnosis, and management: a review. JAMA. 2021;325:164–74.

Hamid S, Desai P, Hysi P, Burr JM, Khawaja AP. Population screening for glaucoma in UK: current recommendations and future directions. Eye. 2022;36:504–9.

Kolomeyer NN, Katz LJ, Hark LA, Wahl M, Gajwani P, Aziz K, et al. Lessons learned from 2 large community-based glaucoma screening studies. J Glaucoma. 2021;30:875–7. https://doi.org/10.1097/IJG.0000000000001920.

Forbes H, Sutton M, Edgar DF, Lawrenson J, Spencer AF, Fenerty C, et al. Impact of the Manchester glaucoma enhanced referral scheme on NHS costs. BMJ Open Ophthalmol. 2019;4:000278. https://doi.org/10.1136/bmjophth-2019-000278.

Moyer VA. Screening for glaucoma: U.S. Preventive Services Task Force recommendation statement. Ann Intern Med. 2013;159:484–9. https://doi.org/10.7326/0003-4819-159-6-201309170-00686.

Sharma P, Sample PA, Zangwill LM, Schuman JS. Diagnostic tools for glaucoma detection and management. Surv Ophthalmol. 2008;53:S17–32.

Killer HE, Pircher A. Normal tension glaucoma: review of current understanding and mechanisms of the pathogenesis. Eye. 2018;32:924–30.

Chaurasia AK, Greatbatch CJ, Hewitt AW. Diagnostic accuracy of artificial intelligence in glaucoma screening and clinical practice. J Glaucoma. 2022;31:285–99. https://doi.org/10.1097/IJG.0000000000002015.

Sihota R, Sidhu T, Dada T. The role of clinical examination of the optic nerve head in glaucoma today. Curr Opin Ophthalmol. 2021;32:83–91.

Bourne RRA. The optic nerve head in glaucoma. Community Eye Health. 2012;25:55–7.

Zedan MJM, Zulkifley MA, Ibrahim AA, Moubark AM, Kamari N, Abdani SR. Automated glaucoma screening and diagnosis based on retinal fundus images using deep learning approaches: a comprehensive review. Diagnostics. 2023;13. Epub ahead of print July 2023. https://doi.org/10.3390/diagnostics13132180.

Li L, Xu M, Liu H, Li Y, Wang X, Jiang L, et al. A large-scale database and a CNN model for attention-based glaucoma detection. IEEE Trans Med Imaging. 2020;39:413–24. https://doi.org/10.1109/TMI.2019.2927226.

Gheisari S, Shariflou S, Phu J, Kennedy PJ, Agar A, Kalloniatis M, et al. A combined convolutional and recurrent neural network for enhanced glaucoma detection. Sci Rep. 2021;11:1945 https://doi.org/10.1038/s41598-021-81554-4.

Hemelings R, Elen B, Barbosa-Breda J, Lemmens S, Meire M, Pourjavan S, et al. Accurate prediction of glaucoma from colour fundus images with a convolutional neural network that relies on active and transfer learning. Acta Ophthalmol. 2020;98:94. https://doi.org/10.1111/aos.14193.

Hung KH, Kao YC, Tang YH, Chen YT, Wang CH, Wang YC, et al. Application of a deep learning system in glaucoma screening and further classification with colour fundus photographs: a case control study. BMC Ophthalmol. 2022;22:483 https://doi.org/10.1186/s12886-022-02730-2.

Khan SM, Liu X, Nath S, Korot E, Faes L, Wagner SK, et al. A global review of publicly available datasets for ophthalmological imaging: barriers to access, usability, and generalisability. Lancet Digit Health. 2021;3:e51–66.

glaucoma-dataset-metadata/README.md at main · TheBeastCoding/glaucoma-dataset-metadata. GitHub, https://github.com/TheBeastCoding/glaucoma-dataset-metadata/blob/main/README.md. Accessed 18 July 2023.

Drishti-GS Dataset Webpage. http://cvit.iiit.ac.in/projects/mip/drishti-gs/mip-dataset2/Dataset_description.php. Accessed 18 July 2023.

OpenCV Library. OpenCV—open computer vision library. OpenCV. 2021. https://opencv.org/. Accessed 23 May 2023.

Bapat K Hough Transform using OpenCV. LearnOpenCV—Learn OpenCV, PyTorch, Keras, Tensorflow with examples and tutorials. 2019. https://learnopencv.com/hough-transform-with-opencv-c-python/. Accessed 30 July 2023.

Jonas JB, Budde WM. Diagnosis and pathogenesis of glaucomatous optic neuropathy: morphological aspects. Prog Retin Eye Res. 2000;19. https://doi.org/10.1016/s1350-9462(99)00002-6.

Goceri E. Medical image data augmentation: techniques, comparisons and interpretations. Artif Intell Rev. 2023;1:1–45.

Howard J, Gugger S. Fastai: a layered API for deep learning. Information. 2020;11:108.

vgg19_bn—Torchvision 0.15 documentation. https://pytorch.org/vision/stable/models/generated/torchvision.models.vgg19_bn.html?highlight=vgg19_bn#torchvision.models.vgg19_bn. Accessed 21 July 2023.

Ioffe S, Szegedy C. Batch normalization: accelerating deep network training by reducing internal covariate shift. 2015. http://arxiv.org/abs/1502.03167. Accessed 21 July 2023.

Howard J, Gugger S. Deep learning for coders with fastai and PyTorch. ‘O’Reilly Media, Inc.’. 2020. https://play.google.com/store/books/details?id=wATuDwAAQBAJ.

Amjadian E, Ardali MR, Kiefer R, Abid M, Steen J. Ground truth validation of publicly available datasets utilized in artificial intelligence models for glaucoma detection. Invest Ophthalmol Vis Sci. 2023;64:392.

Vision widgets. https://docs.fast.ai/vision.widgets.html. Accessed 6 Jun 2023.

Ho Y, Wookey S. The real-world-weight cross-entropy loss function: modeling the costs of mislabeling, https://ieeexplore.ieee.org/abstract/document/8943952. Accessed 13 July 2023.

Selvaraju RR, Cogswell M, Das A, Vedantam R, Parikh D, Batra D. Grad-CAM: visual explanations from deep networks via gradient-based localization. Int J Comput Vis. 2019;128:336–59.

Hesterberg TC. What teachers should know about the bootstrap: resampling in the undergraduate statistics curriculum. Am Stat. 2015;69:371–86.

Login - Nectar Dashboard. https://dashboard.rc.nectar.org.au/dashboard_home/. Accessed 22 May 2023.

PyTorch 2.0. https://pytorch.org/get-started/pytorch-2.0/. Accessed 22 May 2023.

torchvision. PyPI. https://pypi.org/project/torchvision/. Accessed 22 May 2023.

Installing. scikit-learn. https://scikit-learn.org/stable/install.html. Accessed 22 May 2023.

Alzubaidi L, Zhang J, Humaidi AJ, Al-Dujaili A, Duan Y, Al-Shamma O, et al. Review of deep learning: concepts, CNN architectures, challenges, applications, future directions. J Big Data. 2021;8:1–74.

Buisson M, Navel V, Labbé A, Watson SL, Baker JS, Murtagh P, et al. Deep learning versus ophthalmologists for screening for glaucoma on fundus examination: a systematic review and meta-analysis. Clin Exp Ophthalmol. 2021;49:1027–38. https://doi.org/10.1111/ceo.14000.

Li Z, He Y, Keel S, Meng W, Chang RT, He M. Efficacy of a deep learning system for detecting glaucomatous optic neuropathy based on color fundus photographs. Ophthalmology. 2018;125:1199–206. https://doi.org/10.1016/j.ophtha.2018.01.023.

Steen J, Kiefer R, Ardali M, Abid M, Amjadian E. Standardized and open-access glaucoma dataset for artificial intelligence applications. Investig Ophthalmol Vis Sci. 2023;64:384.

Diaz-Pinto A, Morales S, Naranjo V, Köhler T, Mossi JM, Navea A. CNNs for automatic glaucoma assessment using fundus images: an extensive validation. Biomed Eng Online. 2019;18:29 https://doi.org/10.1186/s12938-019-0649-y.

Liu H, Li L, Wormstone IM, Qiao C, Zhang C, Liu P, et al. Development and validation of a deep learning system to detect glaucomatous optic neuropathy using fundus photographs. JAMA Ophthalmol. 2019;137:1353–60. https://doi.org/10.1001/jamaophthalmol.2019.3501.

Lehrstuhl für Mustererkennung. Friedrich-Alexander-Universität Erlangen-Nürnberg. High-Resolution Fundus (HRF) Image Database. https://www5.cs.fau.de/research/data/fundus-images/. Accessed 18 July 2023.

CNNs for Automatic Glaucoma Assessment using Fundus Images: An Extensive Validation. figshare, https://figshare.com/s/c2d31f850af14c5b5232. Accessed 18 July 2023.

iChallenge-GON数据集 - 飞桨AI Studio, https://aistudio.baidu.com/aistudio/datasetdetail/177198. Accessed 19 July 2023.

Almazroa A. Retinal fundus images for glaucoma analysis: the RIGA dataset. https://doi.org/10.7302/Z23R0R29.

Website, http://medimrg.webs.ull.es/.

GitHub - cvblab/retina_dataset: Retina dataset containing 1) normal 2) cataract 3) glaucoma 4) retina disease. GitHub. https://github.com/cvblab/retina_dataset. Accessed 19 July 2023.

DRIONS-DB: RETINAL IMAGE DATABASE. http://www.ia.uned.es/~ejcarmona/DRIONS-DB.html. Accessed 19 July 2023.

Zhang E. Glaucoma detection. 2022. https://www.kaggle.com/sshikamaru/glaucoma-detection. Accessed 19 July 2023.

GitHub - smilell/AG-CNN: The model of ‘attention based glaucoma detection: a large-scale database with a CNN model’ (CVPR2019). GitHub. https://github.com/smilell/AG-CNN. Accessed 19 July 2023.

1000 Fundus images with 39 categories. 2019. https://www.kaggle.com/linchundan/fundusimage1000. Accessed 19 July 2023.

Raja H. Data on OCT and fundus images. Epub ahead of print 27 January 2020. https://doi.org/10.17632/2rnnz5nz74.2.

Deep-Learning-Based-Glaucoma-Detection-with-Cropped-Optic-Cup-and-Disc-and-Blood-Vessel-Segmentation/Dataset at master · mirtanvirislam/Deep-Learning-Based-Glaucoma-Detection-with-Cropped-Optic-Cup-and-Disc-and-Blood-Vessel-Segmentation. GitHub. https://github.com/mirtanvirislam/Deep-Learning-Based-Glaucoma-Detection-with-Cropped-Optic-Cup-and-Disc-and-Blood-Vessel-Segmentation/tree/master/Dataset. Accessed 19 July 2023.

GitHub—ProfMKD/Glaucoma-dataset: glaucoma dataset—Labelled data for fundus images. GitHub. https://github.com/ProfMKD/Glaucoma-dataset. Accessed 19 July 2023.

Orlando JI, Breda JB, Van Keer K, Blaschko MB, Blanco PJ, Bulant CA. LES-AV dataset. Epub ahead of print 14 February 2020. https://doi.org/10.6084/m9.figshare.11857698.v1.

Bajwa MN, Singh GAP, Neumeier W, Malik MI, Dengel A, Ahmed S. G1020: a benchmark retinal fundus image dataset for computer-aided glaucoma detection. 2020:1–7. http://arxiv.org/abs/2006.09158. Accessed 19 July 2023.

Kovalyk O, Morales-Sánchez J, Verdú-Monedero R, Sellés-Navarro I, Palazón-Cabanes A, Sancho-Gómez JL. PAPILA. Epub ahead of print 29 April 2022. https://doi.org/10.6084/m9.figshare.14798004.v1.

Kim U. Machine learn for glaucoma. Epub ahead of print 15 November 2018. https://doi.org/10.7910/DVN/1YRRAC.

AIROGS - Grand Challenge. grand-challenge.org. https://airogs.grand-challenge.org/data-and-challenge/. Accessed 19 July 2023.

Drishti-GS Dataset Webpage. http://cvit.iiit.ac.in/projects/mip/drishti-gs/mip-dataset2/Home.php. Accessed 19 July 2023.

Acknowledgements

This research was supported by a Program Grant from the National Health and Medical Research Council (NHMRC; GNT1150144). NHMRC fellowships support SM, JEC, DAM, and AWH and AKC is supported by a Research Training Program Scholarship from the University of Tasmania.

Funding

Open Access funding enabled and organized by CAUL and its Member Institutions.

Author information

Authors and Affiliations

Contributions

AKC contributed to the research design, data analysis and preparation of the manuscript. GSL was involved in research design and interpretation. CJG was involved in manuscript preparation and editing. PG provided interpretation, while JEC contributed to interpretation and critically reviewed the manuscript. DAM was involved in the study design, interpretation and critically reviewing the manuscript. SM provided interpretation and critically reviewed the manuscript. AWH contributed to the research design, interpretation and provided final approval of the manuscript. All authors reviewed and approved the final version of the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Chaurasia, A.K., Liu, GS., Greatbatch, C.J. et al. A generalised computer vision model for improved glaucoma screening using fundus images. Eye 39, 109–117 (2025). https://doi.org/10.1038/s41433-024-03388-4

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1038/s41433-024-03388-4