Abstract

Goal-directed visual attention is a fundamental cognitive process that reflects the brain’s ability to prioritize visual information relevant to specific tasks or objectives. Despite its importance, comprehensive datasets for investigating the underlying neural mechanisms are lacking. Here, we present a large naturalistic visual search dataset in which rhesus macaques used voluntary eye movements to search for targets defined by object category among natural stimuli. The stimulus set included 40 images per category across four categories: faces, houses, flowers, and hands. We recorded activity from 6871 units in area V4, 8641 units in the inferior temporal cortex (IT), 5622 units in the orbitofrontal cortex (OFC), and 9916 units in the lateral prefrontal cortex (LPFC). These units exhibited diverse receptive fields and selectivity for visual categories. Together, this dataset provides a rich neuronal population across multiple brain areas, enabling comprehensive analyses of the neural processes underlying goal-directed visual attention.


Background & Summary

Visual attention refers to the cognitive process of selectively focusing on specific elements of a visual scene while filtering out irrelevant information, enabling efficient perception and decision-making in complex environments1,2,3. Goal-directed visual search is a critical aspect of visual attention, involving the deliberate and focused scanning of a visual environment to locate specific targets based on current goals and expectations. Natural visual search involves feature-based attention, which enhances the representation of relevant stimuli across the visual field, and spatial attention, which leverages foveal visual processing while the periphery is continuously scanned4,5. Behavioral studies have demonstrated that both peripheral and foveal vision are essential for visual search: peripheral vision supports target selection and guides the search, whereas foveal vision supports object analysis, observation, and recognition6,7,8,9,10,11 (see also fMRI evidence12). However, neural evidence at single-unit resolution that encompasses both foveal and peripheral populations remains limited.

Most neuronal-level visual search studies have recorded activity from units with receptive fields (RFs) confined to either the peripheral/parafoveal4,13,14,15,16,17 or the central/foveal18,19,20 visual field. Moreover, many studies employed simplified or constrained stimuli: for example, combinations of eight colors and eight shapes4, eight object images14, a letter “T” as the target and “+” as the distractor15, circular image patches from black-and-white photographs13,16, and letter-like figures (e.g., T, I, E, L, F, O, Z)17 (but see19 for 70 natural and man-made objects and18,20 for 20 visual search arrays, each containing 24 items). These limitations have hindered a comprehensive understanding of visual and cognitive processes in brain regions essential for visual attention. Notably, no publicly available single-unit dataset encompasses a large population of both foveal and peripheral units during naturalistic visual search.

To address this gap, we present a large neuronal dataset to the research community, offering several significant advantages. First, we recorded a total of 6871 units from V4, 8641 units from IT (including both TE and TEO), 5622 units from the OFC, and 9916 units from the LPFC. Among these, 5070 units from V4, 5051 units from IT, 1470 units from the OFC, and 2997 units from the LPFC were visually responsive. These units had RFs covering the foveal region, the periphery, or both, and showed selectivity across visual categories. This diversity in neuronal properties, together with the large number of recorded units, is critical for a comprehensive understanding and modeling of visual attention. Second, we used naturalistic stimuli and trained monkeys to perform a visual search based on four object categories, with 160 images in total. To the best of our knowledge, this is one of the few natural object category-based visual search tasks used with monkeys, enabling insights into how the primate brain performs complex visual attention tasks. Third, unlike most monkey studies, which constrain gaze to a limited number of fixed positions through behavioral controls, we employed a free-gaze visual search task with fewer behavioral constraints, allowing voluntary eye movements. With these advantages, the dataset supports investigations of the neural mechanisms underlying the ___domain specificity of social attention21, the properties of RFs22, and the interplay between visual feature coding and attention23. Together, this paradigm and the extensive neuronal recordings can advance our understanding of the neural mechanisms underlying natural visual search and contribute to more accurate computational models of visual search.

Methods

Animal welfare statement

Non-human primate research represents a small yet essential component of neuroscience. The scientists involved in this study acknowledge their responsibility to conduct high-quality research while minimizing any discomfort or distress experienced by the animals. Sharing the study data in a standardized, open-access format aligns with this commitment, promoting transparency, accessibility, and optimal use of the data. This approach adheres to the principles of the 3Rs and supports international efforts to enhance the reporting and accessibility of biomedical research data.

All animal procedures in this study adhered to the guidelines of the Institutional Animal Care and Use Committee (IACUC) and were approved by the Animal Care and Use Committee at the Shenzhen Institute of Advanced Technology (SIAT), Chinese Academy of Sciences, under permit number SIAT-IRB-160223-NS-ZHH-A0187-003.

In accordance with SIAT’s protocol for the care and use of laboratory animals, the psychological and veterinary welfare of the animals was carefully monitored throughout the study by veterinarians, facility staff, and researchers specializing in non-human primates. After the experiment concluded, the animals remained in their original housing with free access to drinking water and food, as well as regular health checks and quarantine.

Animals and general procedures

Two male rhesus macaques (Macaca mulatta), weighing 12 kg and 15 kg on average, were used in this study (see Table 1 for details). The monkeys were trained to perform a category-based visual search task and a visually guided saccade task. The animals were head-fixed (using a headpost) while performing the tasks. Training began with familiarizing them with sitting in a primate chair. Once acclimated, they were introduced to the laboratory for task-specific training. The training started with a simplified version of the task and gradually progressed to the full task. The training phase was considered complete when the monkeys achieved stable performance above 85%.

Under aseptic conditions, each monkey was implanted with a head post for fixation and two recording chambers, providing access to brain regions including V4, IT, OFC, and LPFC. Localization of the chambers was based on MRI scans obtained before surgery. Surgeries were performed under sterile conditions using gas anesthesia following intramuscular injection induction, with pain levels assessed throughout. Specifically, before the surgery, atropine and ketamine were administered to induce muscle relaxation and anesthesia, followed by isoflurane gas to maintain anesthesia. During the surgery, various measurements were taken using an anesthesia monitor, including heart rate (ECG), blood oxygen saturation, respiratory rate, and rectal temperature. Post-operative monitoring and care, including pain relief, fluid supplementation, and infection prevention, were provided along with clinical assessments.

Daily routine

The animals were housed in a standard facility alongside other macaque monkeys, with controlled lighting and free access to food, water, and fruits. Veterinarians and caretakers conducted daily observations of both the animals and the facilities. During a typical workday, each animal was removed from its cage, placed in a primate chair for weighing, and then brought to the laboratory for training or recording sessions. Successful task performance was rewarded with juice, and sessions concluded based on the animal’s engagement. After each session, the animal was returned to its cage, and its daily water intake was monitored to ensure adequacy; additional water was provided in the cage if necessary. Food was freely available at all times.

Experimental setup and neural recordings

The experimental setup included electrophysiological recording hardware and software, an infrared eye-tracking system, a primate chair, a reward system, and an experimental control computer. Behavioral experiments were managed using a computer running MonkeyLogic software (University of Chicago, IL, USA; https://monkeylogic.nimh.nih.gov/index.html)24, which presented stimuli, monitored eye movements, and controlled reward delivery via PCIe-6321 multifunction DAQ cards connected to a BNC-2090A breakout box (National Instruments, Austin, TX, USA). Eye movements were tracked using an infrared eye-tracking system (iViewX Hi-Speed, SensoMotoric Instruments [SMI], Teltow, Germany), with a sampling rate of 500 Hz. The reward system comprised a graduated cylinder filled with juice, connected to a solenoid valve that regulated juice delivery through a feeding tube.

Extracellular recordings were performed daily using a 128-channel recording system (Cerebus System, Blackrock Microsystems, Salt Lake City, UT, USA; Table 2) with 24- or 32-contact electrodes (V-Probe or S-Probe, Plexon Inc., Dallas, TX, USA; Table 3). The electrodes were inserted through the dura mater within a protective guide tube and advanced to the targeted brain area using an electrode manipulator (Narishige Scientific Instrument, Tokyo, Japan). To obtain spikes, signals were filtered between 250 Hz and 5 kHz, amplified, and digitized at 30 kHz. After each recording, spikes from different units were isolated offline using Offline Sorter software (Plexon Inc., Dallas, TX, USA; https://plexon.com/products/offline-sorter/). Single-unit and multi-unit spikes were acquired from V4, IT, OFC, and LPFC, with two or three of these areas recorded simultaneously in most sessions. Recording locations were verified with MRI (Fig. 1 and Table 4). Task event codes and eye position signals were also sent to the Cerebus system in parallel via digital and analog inputs, respectively.
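The text states that signals were filtered between 250 Hz and 5 kHz and digitized at 30 kHz. For readers who want a comparable spike-band signal from a wideband trace, a minimal SciPy sketch follows. The fourth-order Butterworth design, the zero-phase filtering, and the function name `spike_band` are assumptions for illustration, not the filter actually implemented in the Cerebus system.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt

FS_HZ = 30_000                     # sampling rate stated in the text
SPIKE_BAND_HZ = (250.0, 5_000.0)   # pass band stated in the text


def spike_band(raw, fs=FS_HZ, band=SPIKE_BAND_HZ, order=4):
    """Zero-phase Butterworth band-pass of a wideband voltage trace.

    The order (4) and zero-phase design are assumptions of this sketch.
    """
    sos = butter(order, band, btype="bandpass", fs=fs, output="sos")
    return sosfiltfilt(sos, np.asarray(raw, dtype=float))


# Usage: a 20 Hz (LFP-range) component is removed, a 1 kHz component is kept.
t = np.arange(FS_HZ) / FS_HZ               # 1 s of samples
slow = np.sin(2 * np.pi * 20 * t)
fast = np.sin(2 * np.pi * 1_000 * t)
filtered = spike_band(slow + fast)
core = slice(FS_HZ // 4, 3 * FS_HZ // 4)   # ignore filter edge effects
residual = np.std(filtered[core] - fast[core])
```

Second-order sections (`output="sos"`) are used because they are numerically more robust than transfer-function coefficients for band-pass designs at high sampling rates.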

MRI images of typical recording sites and all sites overlaid on the rhesus monkey brain atlas in stereotaxic coordinates28. The red arrow points to the electrodes. The red and blue lines represent the estimated spatial range of recordings in monkey S and monkey E, respectively. The numbers below indicate the rostral (+) or caudal (−) distances of the slices from the Ear Bar Zero.

Behavioral tasks

Monkeys were trained to perform a category-based free-gaze visual search task (Fig. 2a) using 160 natural object images from four categories (40 faces, 40 houses, 40 flowers, and 40 hands), each subtending approximately 2° × 2° of visual angle. After 400 ms of fixation on a central spot, a cue stimulus was presented at the center of the screen. After a random interval of 500 to 1300 ms, the cue was followed by the central spot. After another 500 ms of fixation on the central spot, a search array of 11 items, including two targets, appeared. The 11 items were placed at 11 locations randomly selected from 20 predefined positions (Fig. 2b).

Task sequence and predefined search array locations. (a) Monkeys initiated the trial by fixating on a central point for 400 ms. A cue was then presented for 500 to 1300 ms. After a delay of 500 ms, the search array with 11 items appeared. Monkeys were required to fixate on one of the two search targets that belonged to the same category as the cue for at least 800 ms to receive a juice reward. The white trace indicates gaze position. (b) The 20 possible stimulus locations in the visual search task and the visually guided saccade task. Peripheral receptive fields (RFs) were mapped using the visually guided saccade task, which used the same 20 possible stimulus locations as the visual search task.

The two targets belonged to the same category as the cue but were always different images from the cue. The cue was drawn randomly from the face or house stimuli with equal probability. The other nine items in the search array belonged to the other three categories. Monkeys were required to start the search within 4000 ms, find one of the two targets, and maintain fixation on it for 800 ms to receive a juice reward. No constraints were placed on search behavior, allowing the animals to search naturally.
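The trial rules above can be summarized as a small generator. This Python sketch is hypothetical: the task itself was run in MonkeyLogic, images are assumed to be indexed 0 to 39 within each category, and the nine distractors are assumed to be drawn uniformly from the pooled images of the three non-cue categories, a detail the text does not specify. The name `make_trial` is ours.

```python
import random

CATEGORIES = ("face", "house", "flower", "hand")
N_PER_CATEGORY = 40   # 40 images per category
N_LOCATIONS = 20      # predefined array positions
N_ITEMS = 11          # items per search array (2 targets + 9 distractors)


def make_trial(rng: random.Random) -> dict:
    """Draw one hypothetical search trial following the rules in the text."""
    # Cue: a face or a house with equal probability.
    cue_cat = rng.choice(("face", "house"))
    cue_id = rng.randrange(N_PER_CATEGORY)

    # Two distinct targets from the cue category, never the cue image itself.
    candidates = [i for i in range(N_PER_CATEGORY) if i != cue_id]
    targets = [(cue_cat, i) for i in rng.sample(candidates, 2)]

    # Nine distractors from the other three categories (pooled sampling is an assumption).
    pool = [(c, i) for c in CATEGORIES if c != cue_cat for i in range(N_PER_CATEGORY)]
    distractors = rng.sample(pool, N_ITEMS - 2)

    # Eleven of the twenty predefined locations, chosen at random.
    locations = rng.sample(range(N_LOCATIONS), N_ITEMS)
    items = targets + distractors
    rng.shuffle(items)
    return {"cue": (cue_cat, cue_id), "array": list(zip(locations, items))}


trial = make_trial(random.Random(7))
```

Passing an explicit `random.Random` instance keeps the draw reproducible, which is convenient when simulating chance-level search behavior against the recorded arrays.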

Before search array onset, monkeys were required to maintain central fixation, keeping their gaze within a circular window of 2° radius around the central spot/cue (the “fixation window”). The 20 predefined locations (Fig. 2b) covered eccentricities from 5.00° to 11.18° and included 18 locations distributed symmetrically across the left and right visual fields (nine on each side) and two locations on the vertical midline. Each ___location was at least 5° from every other ___location. Thus, when the animal fixated an item during the search, the nearest surrounding items were at least 5° away, facilitating the analysis of fixations after array onset.
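The geometric quantities in this paragraph reduce to Euclidean distances in degrees of visual angle. In the sketch below, the coordinates are hypothetical examples on a 5° grid (the actual 20 positions are shown in Fig. 2b) and the function names are ours. It shows, for instance, that a position 5° horizontally and 10° vertically from fixation has an eccentricity of √(5² + 10²) ≈ 11.18°, which equals the upper end of the stated range.

```python
import math
from itertools import combinations

FIXATION_WINDOW_DEG = 2.0  # radius of the fixation window stated in the text


def eccentricity(x_deg: float, y_deg: float) -> float:
    """Distance of a position from the central fixation point, in degrees."""
    return math.hypot(x_deg, y_deg)


def min_separation(points) -> float:
    """Smallest pairwise distance between item positions, in degrees."""
    return min(math.dist(p, q) for p, q in combinations(points, 2))


def in_fixation_window(gaze, center=(0.0, 0.0), radius=FIXATION_WINDOW_DEG) -> bool:
    """True if a gaze sample lies inside the circular fixation window."""
    return math.dist(gaze, center) <= radius


# Hypothetical positions on a 5-degree grid (not the published layout).
example_positions = [(5.0, 0.0), (5.0, 5.0), (10.0, 5.0), (5.0, 10.0)]
```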

A visually guided saccade task was employed to map the RFs of the recorded units. Following a 400 ms central fixation, a stimulus randomly appeared in one of the 20 peripheral locations, and monkeys were required to make a saccade to the stimulus within 500 ms and maintain fixation on it for 300 ms to receive a reward.

Receptive field

The visual response to the cue and the search array in the free-gaze visual search task was assessed by comparing the firing rate during the post-stimulus period (50 to 200 ms after cue/array onset) to the corresponding baseline (−150 to 0 ms relative to cue/array onset) using a Wilcoxon rank-sum test. Based on these responses, we classified units into three categories of RFs:

(i) Units with a focal foveal RF: These units responded solely to the cue in the foveal region (P < 0.05) but not to the search array that included items in the periphery (P > 0.05).

(ii) Units with a broad foveal RF: These units responded to both the cue and the search array.

(iii) Units with a peripheral RF: These units only responded to the search array (P < 0.05) but not to the cue (P > 0.05).
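The three-way classification can be written as two rank-sum tests per unit. The Python sketch below uses `scipy.stats.ranksums` on per-trial firing rates; the inputs (rates in the 50 to 200 ms and −150 to 0 ms windows) are assumed to be computed already, the function names are hypothetical, and requiring the post-stimulus mean to exceed the baseline mean is our reading of "significantly greater" in the Firing rate section, not a documented step of the authors' MATLAB code.

```python
import numpy as np
from scipy.stats import ranksums


def is_responsive(post_rates, base_rates, alpha=0.05) -> bool:
    """Rank-sum test of post-stimulus (50-200 ms) vs baseline (-150-0 ms) rates.

    Requiring an increase over baseline is an assumption of this sketch.
    """
    post = np.asarray(post_rates, dtype=float)
    base = np.asarray(base_rates, dtype=float)
    return bool(ranksums(post, base).pvalue < alpha and post.mean() > base.mean())


def classify_rf(cue_post, cue_base, array_post, array_base, alpha=0.05) -> str:
    """Assign one of the three RF types from cue and search-array responses."""
    cue = is_responsive(cue_post, cue_base, alpha)
    array = is_responsive(array_post, array_base, alpha)
    if cue and not array:
        return "focal foveal"
    if cue and array:
        return "broad foveal"
    if array:
        return "peripheral"
    return "not visually responsive"


# Usage with simulated spike counts converted to rates (150 ms windows).
rng = np.random.default_rng(0)
baseline = rng.poisson(1.0, size=60) / 0.15
strong = rng.poisson(4.0, size=60) / 0.15
```

For example, `classify_rf(strong, baseline, baseline, baseline)` describes a unit driven only by the foveal cue.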

We used a visually guided saccade task to further map the peripheral RFs of units. In this task, we used the same face and house images, presented at the same 20 predefined locations as in the visual search task. For each ___location, we compared responses in a window of 50 to 200 ms after stimulus onset with the baseline (−150 to 0 ms relative to stimulus onset) using a two-tailed Wilcoxon rank-sum test to determine whether the unit responded significantly to stimuli at that peripheral ___location.
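Repeating the rank-sum test at each ___location yields a unit's peripheral RF and its size, that is, the number of locations whose stimuli activated the unit (the measure reported in the Firing rate section). In this hypothetical sketch, rates are passed as dictionaries keyed by ___location index 0 to 19, and counting only increases over baseline as "activation" is an assumption; the text specifies only a two-tailed test.

```python
import numpy as np
from scipy.stats import ranksums


def map_peripheral_rf(post_by_loc, base_by_loc, alpha=0.05):
    """Return the locations (sorted) where the unit responded above baseline.

    post_by_loc / base_by_loc: dict mapping ___location index -> per-trial rates
    in the 50-200 ms and -150-0 ms windows (hypothetical input layout).
    """
    rf = []
    for loc in sorted(post_by_loc):
        post = np.asarray(post_by_loc[loc], dtype=float)
        base = np.asarray(base_by_loc[loc], dtype=float)
        p = ranksums(post, base).pvalue  # two-sided, as in the text
        if p < alpha and post.mean() > base.mean():  # "activated": assumption
            rf.append(loc)
    return rf


# Usage: a simulated unit driven by stimuli at locations 3, 4 and 5 only.
rng = np.random.default_rng(1)
base = {loc: rng.poisson(1.0, 40) / 0.15 for loc in range(20)}
post = {loc: (rng.poisson(5.0, 40) / 0.15 if loc in (3, 4, 5) else base[loc])
        for loc in range(20)}
rf_locations = map_peripheral_rf(post, base)
rf_size = len(rf_locations)
```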

Category selectivity

We determined the category selectivity of each unit by comparing responses to face cues versus house cues in a window of 50 to 200 ms after cue onset (Wilcoxon rank-sum test, P < 0.05). We further imposed a second criterion using a selectivity index similar to indices employed in previous IT studies25,26. For each unit with a foveal RF, the response to face stimuli (Rface) or house stimuli (Rhouse) was calculated from the visual search task by subtracting the mean baseline activity (−150 to 0 ms relative to cue onset) from the mean response to the face or house cue (50 to 200 ms after cue onset). For each unit with a peripheral RF, Rface and Rhouse were calculated from the visually guided saccade task by subtracting the mean baseline activity (−150 to 0 ms relative to peripheral stimulus onset) from the mean response to the saccade target (50 to 200 ms after saccade target onset). The selectivity index (SI) was then defined as (Rface − Rhouse) / (Rface + Rhouse). SI was set to 1 when Rface > 0 and Rhouse < 0, and to −1 when Rface < 0 and Rhouse > 0. Face-selective units were required to have an Rface at least 130% of Rhouse (i.e., SI > 0.13; for positive responses, Rface = 1.3 × Rhouse gives SI = 0.3/2.3 ≈ 0.13). Similarly, house-selective units were required to have an Rhouse at least 130% of Rface (i.e., SI < −0.13). Units were labeled as non-category-selective if the responses to face and house cues did not differ significantly (P > 0.05). The remaining units were classified as undefined (i.e., the difference was significant but the SI criterion was not met).
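The two-stage category rule can be sketched as follows. The function names are hypothetical, the P value is assumed to come from the face-versus-house rank-sum test, and two cases the text leaves open are filled by assumption: SI is set to 0 when Rface + Rhouse = 0, and when neither sign-based override applies and the responses are not both positive, the plain formula is used unchanged. A P value of exactly 0.05 is treated as non-significant here.

```python
def selectivity_index(r_face: float, r_house: float) -> float:
    """SI = (Rface - Rhouse) / (Rface + Rhouse), with the sign-based overrides."""
    if r_face > 0 and r_house < 0:
        return 1.0
    if r_face < 0 and r_house > 0:
        return -1.0
    total = r_face + r_house
    if total == 0:
        return 0.0  # undefined in the text; 0 is an assumption
    return (r_face - r_house) / total


def category_label(p_value: float, r_face: float, r_house: float,
                   alpha: float = 0.05, threshold: float = 0.13) -> str:
    """Combine the rank-sum criterion and the SI criterion into one label."""
    if p_value >= alpha:  # not significant: non-category-selective
        return "non-category-selective"
    si = selectivity_index(r_face, r_house)
    if si > threshold:
        return "face-selective"
    if si < -threshold:
        return "house-selective"
    return "undefined"  # significant difference, SI criterion not met
```

For example, `category_label(0.01, 13, 10)` returns `"face-selective"` because SI = 3/23 ≈ 0.1304, just above the 0.13 threshold, while `category_label(0.01, 11, 10)` returns `"undefined"`.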

Data Records

The dataset is publicly available on OSF (osf.io/sdgkr)27 and includes the stimuli used in the experiments, behavioral data, events, neuronal spikes, local field potentials (LFPs), and preprocessing code. The data are organized into five folders: two contain data from the two monkeys (RM006 for monkey S and RM008 for monkey E); the ‘spkLFPmatrix’ folder contains simultaneously recorded spikes and LFPs, with filenames indicating the monkey ID and brain region; the ‘imageset’ folder contains the stimuli used in the tasks; and the ‘code’ folder contains MATLAB scripts for data preprocessing (to analyze RF and category selectivity and store the results in the ‘cell list <region>’ files described below) and for producing the figures (the scripts under ‘code for Distinct visual processing networks for foveal and peripheral visual fields’ specifically produce the figures in22).

The subject-specific directory (RM00<label>) contains the units (in the ‘neurons’ directory) and the metadata for the units (in the ‘cell list’ directory). Each file in the ‘neurons’ directory is stored in MATLAB ‘.mat’ format and contains variables related to trial events (‘TrlInfoMatrix’), search array stimuli (‘SearchSti’), saccade behavior (‘SearchEye’), and spike timing (‘neuron’) for all trials completed by the monkey. These files are organized by monkey, task, recording session, and unit, which can be identified from their filenames. For example, in the filename ‘m06cat003spk001a’, the first three characters represent the monkey (‘m06’ for monkey S and ‘m08’ for monkey E), the next three characters represent the task (‘cat’ for the category-based free-gaze visual search task and ‘det’ for the visually guided saccade task), the following three digits represent the session ID, and the final seven characters represent the unit ID. Each file in the ‘cell list’ directory is stored in ‘.txt’ format and contains metadata, including unit ID, recording site, and RF and category selectivity for each unit. These files are organized by brain area and named ‘cell list <region>’, covering five regions: V4, TEO, TE, OFC, and VPA (denoting LPFC). Note that IT is separated into two subregions, TEO and TE.
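Because unit files encode their metadata in fixed-width fields, they can be indexed without opening them. A minimal Python sketch, assuming file stems follow exactly the 3 + 3 + 3 + 7 character layout described above; the function name, dictionary keys, and the example directory path are illustrative, not part of the release.

```python
import os
import re

MONKEYS = {"m06": "monkey S", "m08": "monkey E"}
TASKS = {"cat": "category-based free-gaze visual search",
         "det": "visually guided saccade"}
# monkey (3) + task (3) + session ID (3 digits) + unit ID (7 characters)
UNIT_FILE_RE = re.compile(r"^(m\d{2})([a-z]{3})(\d{3})(\w{7})$")


def parse_unit_filename(path: str) -> dict:
    """Split a unit filename such as 'm06cat003spk001a.mat' into its fields."""
    stem = os.path.splitext(os.path.basename(path))[0]
    match = UNIT_FILE_RE.match(stem)
    if match is None:
        raise ValueError(f"unexpected unit filename: {path!r}")
    monkey, task, session, unit = match.groups()
    return {
        "monkey": MONKEYS.get(monkey, monkey),  # fall back to the raw code
        "task": TASKS.get(task, task),
        "session": session,
        "unit": unit,
    }
```

The session ID is kept as a zero-padded string so that it round-trips to the original filename unchanged.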

The stimuli directory ‘imageset’ contains all the stimuli, with each file stored in ‘.bmp’ format and named with four digits. The first digit indicates the category of the stimulus, where 1, 2, 3, and 4 correspond to flower, house, monkey face, and monkey hand, respectively. The remaining three digits serve as unique identifiers for each image within its category.
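The stimulus naming scheme maps directly to a lookup table. In this sketch the function name and the example filename ‘2017.bmp’ are hypothetical; they illustrate the rule rather than naming a specific file in the release.

```python
import os

STIMULUS_CATEGORIES = {"1": "flower", "2": "house", "3": "monkey face", "4": "monkey hand"}


def parse_stimulus_filename(path: str) -> tuple:
    """Return (category, within-category ID) for a four-digit '.bmp' stimulus."""
    stem, ext = os.path.splitext(os.path.basename(path))
    if ext.lower() != ".bmp" or len(stem) != 4 or not stem.isdigit():
        raise ValueError(f"unexpected stimulus filename: {path!r}")
    if stem[0] not in STIMULUS_CATEGORIES:
        raise ValueError(f"unknown category digit in {path!r}")
    return STIMULUS_CATEGORIES[stem[0]], stem[1:]
```

Note that this numbering (1 = flower, 2 = house, 3 = monkey face, 4 = monkey hand) is specific to the image filenames; it need not match any category coding used inside the ‘.mat’ files.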

Technical Validation

Behavior

The monkeys performed well on this task, with accuracy rates of 91.37% ± 2.93% (mean ± SD across sessions) and 92.25% ± 1.94% for finding faces and houses, respectively, for monkey S, and 86.55% ± 3.78% and 82.74% ± 3.61% for monkey E (Fig. 3a,b). The mean reaction time (RT), measured from the onset of the search array to the onset of the last fixation, was 462.04 ± 36.14 ms (mean ± SD across sessions) and 469.53 ± 38.98 ms for faces and houses, respectively, for monkey S, and 321.65 ± 24.48 ms and 368.53 ± 26.62 ms for monkey E (Fig. 3c,d).

Accuracy and reaction time. (a,b) Accuracy for face search and house search trials in two monkeys. On each box, the central mark is the median, the edges of the box are the 25th and 75th percentiles, the whiskers extend to the most extreme data points the algorithm considers to be not outliers, and the crosses denote the outliers. All box plots in this and subsequent figures follow the same convention. (c,d) Cumulative distribution of the reaction times (from the onset of the search array to the onset of the last fixation).

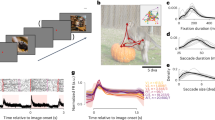

Monkeys completed the search with free eye movements, either after fixating on multiple targets (Fig. 4a,b) or as soon as they first found a target (Fig. 4c). The mean number of fixations when searching for faces and houses was 2.47 ± 1.46 (mean ± SD across trials) and 2.52 ± 1.50, respectively, for monkey S, and 1.80 ± 0.95 and 2.02 ± 1.05 for monkey E (Fig. 4d). Notably, our search paradigm differs from many prior studies in that each trial included two targets among nine distractors. Under a serial search strategy that inspects items in random order without revisits, this configuration would require on average (11 + 1)/(2 + 1) = 4 saccades to reach a target. However, both monkeys reached a target in about two saccades, suggesting the involvement of a parallel or partially parallel search mechanism. This finding is consistent with the idea that, particularly in trained subjects, multiple items in the array may be processed simultaneously to guide efficient target selection. The mean fixation duration was 208.24 ± 153.77 ms (mean ± SD across fixations), with 216.93 ± 162.91 ms for monkey S and 184.27 ± 121.97 ms for monkey E (Fig. 4e). In trials with multiple fixations on targets, 57.91 ± 4.79% and 53.18 ± 4.94% (mean ± SD across sessions) of fixations immediately returned to the same target when searching for faces and houses, respectively, for monkey S, and 39.91 ± 7.90% and 38.85 ± 6.35% for monkey E (Fig. 4f). The percentage of fixations that eventually returned to the same target was 63.23 ± 4.29% and 59.22 ± 3.77% for faces and houses, respectively, for monkey S, and 41.89 ± 7.54% and 41.86 ± 6.26% for monkey E (Fig. 4g).
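The serial-search benchmark of about four saccades rests on a standard result: if k targets are placed at random among n items that are inspected one at a time in random order without revisits, the expected position of the first target is (n + 1)/(k + 1). The Python sketch below checks this closed form against exhaustive enumeration; the no-revisit serial model is an idealization used only for this comparison, and the function names are ours.

```python
from fractions import Fraction
from itertools import combinations


def expected_first_target_closed_form(n_items: int, n_targets: int) -> Fraction:
    """Expected number of items inspected until the first target is reached."""
    return Fraction(n_items + 1, n_targets + 1)


def expected_first_target_enumerated(n_items: int, n_targets: int) -> Fraction:
    """Same quantity by averaging over every placement of the targets in the
    inspection order (all placements are equally likely under random search)."""
    placements = list(combinations(range(1, n_items + 1), n_targets))
    return Fraction(sum(min(p) for p in placements), len(placements))


# Usage: 11 items with 2 targets, as in each search array.
serial_expectation = expected_first_target_closed_form(11, 2)
```

Both functions return `Fraction(4, 1)` for n = 11 and k = 2; exact fractions avoid any floating-point doubt in the comparison.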

Eye movement during visual search. (a–c) Sample search trials for faces. The white trace indicates gaze position. The red square indicates the position of the central fixation point (shown during search). (d) Distribution of the number of fixations for two monkeys. (e) Cumulative distribution of fixation duration. (f) Percentage of target (T) fixations that were immediately revisited (with only one item in between; only the same target item was considered). (g) Percentage of target (T) fixations that were eventually revisited (with any number of items in between; only the same target item was considered).

Firing rate

We recorded a total of 6871 units from V4, 8641 units from IT (including both TE and TEO), 5622 units from the OFC, and 9916 units from the LPFC. Of these, 5070 units from V4, 5051 units from IT, 1470 units from the OFC, and 2997 units from the LPFC had a significant visually evoked response (i.e., the response to the cue or search array was significantly greater than the baseline response; Wilcoxon rank-sum test, P < 0.05). Among these visually responsive units, 1624 units from V4, 1419 units from IT, 888 units from the OFC, and 32 units from the LPFC had a focal foveal RF, while 781 units from V4, 268 units from IT, no units from the OFC, and 514 units from the LPFC had a localized peripheral RF.

In our trials, stimuli from different categories were presented at various locations relative to the units’ RFs, eliciting strong, weak, or no spiking activity depending on each unit’s RF and category selectivity. Consequently, firing rates were expected to be higher for stimuli presented within a unit’s RF and for stimuli belonging to its preferred category. Additionally, response latencies were expected to be shorter in the visual cortex than in the prefrontal cortex (PFC). We plotted peri-stimulus time histograms (PSTHs) for stimuli presented at different locations and from different categories in both the visual cortex and the PFC; Figs. 5 and 6 illustrate these trends. A paired t-test revealed a significantly higher average firing rate for the preferred ___location (Fig. 5; 43.37 ± 33.75 Hz vs. 28.91 ± 22.83 Hz; t(5896) = 62.4, P < 0.001). The identified foveal category-selective units from both monkeys differentiated fixations on faces and houses, showing higher firing rates for stimuli from their preferred category (Fig. 6a,b). Moreover, both foveal units (Fig. 6c,d) and peripheral units (Fig. 6e,f) distinguished targets from distractors, demonstrating a feature-based attention effect. We characterized the RFs of peripheral units using the visually guided saccade task. As expected, peripheral RFs (quantified by the number of stimulus locations that activated the unit; Fig. 7) were smaller in V4 (2.72 ± 2.03 [mean ± SD] stimuli) than in IT (5.62 ± 3.07 stimuli; two-tailed two-sample t-test across units, t(1047) = 17.51, P = 1.95 × 10^−60) and the LPFC (7.11 ± 2.95 stimuli; t(1293) = 31.75, P = 5.03 × 10^−164).
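PSTHs like those described here summarize spike counts aligned to an event. A minimal NumPy sketch of that step follows, including normalization of each unit’s rates to its maximum across conditions, as the figure caption below states. The function names, the 10 ms bin width, the window, and the absence of smoothing are assumptions, not the parameters used for the published figures.

```python
import numpy as np


def psth(spike_times_by_trial, event_times, window=(-0.15, 0.5), bin_s=0.01):
    """Trial-averaged firing rate (Hz) in bins aligned to an event.

    spike_times_by_trial: one array of spike times (s) per trial.
    event_times: event time (s) for each trial, e.g. cue or array onset.
    """
    n_bins = int(round((window[1] - window[0]) / bin_s))
    edges = np.linspace(window[0], window[1], n_bins + 1)
    counts = np.zeros(n_bins)
    for spikes, t0 in zip(spike_times_by_trial, event_times):
        counts += np.histogram(np.asarray(spikes, dtype=float) - t0, bins=edges)[0]
    rate = counts / (len(event_times) * bin_s)
    centers = (edges[:-1] + edges[1:]) / 2
    return centers, rate


def normalize_to_max(rates_by_condition):
    """Divide every condition's PSTH by the unit's peak rate across conditions."""
    peak = max(float(np.max(r)) for r in rates_by_condition.values())
    if peak <= 0:
        return dict(rates_by_condition)  # silent unit: leave unscaled
    return {cond: r / peak for cond, r in rates_by_condition.items()}
```

Normalizing per unit before averaging keeps high-rate units from dominating the population PSTH, which matters when pooling thousands of units with very different baseline rates.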

Receptive field (RF) of units from the visual cortex and PFC. (a–d) Trials are aligned to the foveal cue onset for all stimuli. (e–h) Trials are aligned to the peripheral search array onset for all stimuli. The shaded area denotes ± SEM across units. The spike rate of each unit was normalized to its maximum spike rate across conditions.

The RFs of peripheral units were mapped using the visually guided saccade task. (a,b) Histogram of the number of stimulus locations that activated the peripheral RF in two monkeys. (c,d) The aggregated tuning regions of all units with a peripheral RF. Color bars show the number of units with tuning regions in a given stimulus ___location. Recordings were made from the right side of the brain for both monkeys.

Usage Notes

Our data are primarily organized by animal, with each monkey having its own dedicated folder. The code for data preprocessing and figure production can be found in the corresponding ‘code’ directory. For example, to define RFs, refer to ‘/code/data_preprocessing/RF*.m’; to define category selectivity, refer to ‘/code/category_selectivity*.m’. The files ‘cell list <region>.txt’ contain key information about each unit.
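For users working outside MATLAB, a unit file can be opened with SciPy. This sketch assumes the files are saved in a MATLAB format that `scipy.io.loadmat` can read (it does not read v7.3, HDF5-based files, which would need an HDF5 reader such as h5py). It only checks for the four variable names listed in Data Records; the internal layout of those variables is not described here, so the sketch does not interpret them. The function name `load_unit_file` is ours.

```python
import scipy.io

# Variable names listed in the Data Records section.
EXPECTED_VARIABLES = ("TrlInfoMatrix", "SearchSti", "SearchEye", "neuron")


def load_unit_file(path):
    """Load one unit '.mat' file and report which expected variables it holds.

    Returns (found, missing): a dict of the variables present and a list of
    the expected names that were absent.
    """
    data = scipy.io.loadmat(path, squeeze_me=True)
    found = {name: data[name] for name in EXPECTED_VARIABLES if name in data}
    missing = [name for name in EXPECTED_VARIABLES if name not in data]
    return found, missing
```

For example, `found, missing = load_unit_file("RM006/neurons/m06cat003spk001a.mat")` (an illustrative path) returns the arrays that are present and names any that are absent rather than failing, which is useful if files from the two tasks differ in content.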

Code availability

The source code is included as part of the dataset. All code is implemented using MATLAB (MathWorks Inc).

References

Kastner, S. & Ungerleider, L. G. Mechanisms of visual attention in the human cortex. Annual Review of Neuroscience 23, 315–341 (2000).

Petersen, S. E. & Posner, M. I. The Attention System of the Human Brain: 20 Years After. Annual Review of Neuroscience 35, 73–89, https://doi.org/10.1146/annurev-neuro-062111-150525 (2012).

Corbetta, M. & Shulman, G. L. Control of goal-directed and stimulus-driven attention in the brain. Nat Rev Neurosci 3, 201–215, https://doi.org/10.1038/nrn755 (2002).

Bichot, N. P., Rossi, A. F. & Desimone, R. Parallel and serial neural mechanisms for visual search in macaque area V4. Science 308, 529–534, https://doi.org/10.1126/science.1109676 (2005).

Wolfe, J. M. Guided Search 6.0: An updated model of visual search. Psychon Bull Rev 28, 1060–1092, https://doi.org/10.3758/s13423-020-01859-9 (2021).

Bertera, J. H. & Rayner, K. Eye movements and the span of the effective stimulus in visual search. Percept Psychophys 62, 576–585, https://doi.org/10.3758/Bf03212109 (2000).

Chen, X. & Zelinsky, G. J. Real-world visual search is dominated by top-down guidance. Vision Res 46, 4118–4133, https://doi.org/10.1016/j.visres.2006.08.008 (2006).

Nuthmann, A. On the visual span during object search in real-world scenes. Vis Cogn 21, 803–837, https://doi.org/10.1080/13506285.2013.832449 (2013).

Malcolm, G. L. & Henderson, J. M. The effects of target template specificity on visual search in real-world scenes: evidence from eye movements. J Vis 9, 1–13, https://doi.org/10.1167/9.11.8 (2009).

Hooge, I. T. & Erkelens, C. J. Peripheral vision and oculomotor control during visual search. Vision Res 39, 1567–1575, https://doi.org/10.1016/s0042-6989(98)00213-2 (1999).

Laubrock, J., Cajar, A. & Engbert, R. Control of fixation duration during scene viewing by interaction of foveal and peripheral processing. J Vis 13, 11, https://doi.org/10.1167/13.12.11 (2013).

Cukur, T., Nishimoto, S., Huth, A. G. & Gallant, J. L. Attention during natural vision warps semantic representation across the human brain. Nat Neurosci 16, 763–770, https://doi.org/10.1038/nn.3381 (2013).

David, S. V., Hayden, B. Y., Mazer, J. A. & Gallant, J. L. Attention to stimulus features shifts spectral tuning of V4 neurons during natural vision. Neuron 59, 509–521, https://doi.org/10.1016/j.neuron.2008.07.001 (2008).

Bichot, N. P., Heard, M. T., DeGennaro, E. M. & Desimone, R. A Source for Feature-Based Attention in the Prefrontal Cortex. Neuron 88, 832–844, https://doi.org/10.1016/j.neuron.2015.10.001 (2015).

Mirpour, K., Bolandnazar, Z. & Bisley, J. W. Suppression of frontal eye field neuronal responses with maintained fixation. Proc Natl Acad Sci USA 115, 804–809, https://doi.org/10.1073/pnas.1716315115 (2018).

Mazer, J. A. & Gallant, J. L. Goal-related activity in V4 during free viewing visual search. Evidence for a ventral stream visual salience map. Neuron 40, 1241–1250, https://doi.org/10.1016/s0896-6273(03)00764-5 (2003).

Motter, B. C. Saccadic momentum and attentive control in V4 neurons during visual search. J Vis 18, 16, https://doi.org/10.1167/18.11.16 (2018).

Wang, S., Mamelak, A. N., Adolphs, R. & Rutishauser, U. Abstract goal representation in visual search by neurons in the human pre-supplementary motor area. Brain 142, 3530–3549, https://doi.org/10.1093/brain/awz279 (2019).

Sheinberg, D. L. & Logothetis, N. K. Noticing familiar objects in real world scenes: the role of temporal cortical neurons in natural vision. J Neurosci 21, 1340–1350, https://doi.org/10.1523/JNEUROSCI.21-04-01340.2001 (2001).

Wang, S., Mamelak, A. N., Adolphs, R. & Rutishauser, U. Encoding of Target Detection during Visual Search by Single Neurons in the Human Brain. Curr Biol 28, 2058–2069.e4, https://doi.org/10.1016/j.cub.2018.04.092 (2018).

Zhang, J., Zhu, X., Zhou, H. & Wang, S. Behavioral and Neural Mechanisms of Face-Specific Attention during Goal-Directed Visual Search. The Journal of Neuroscience 44, e1299242024, https://doi.org/10.1523/JNEUROSCI.1299-24.2024 (2024).

Zhang, J., Zhou, H. & Wang, S. Distinct visual processing networks for foveal and peripheral visual fields. Commun Biol 7, 1259, https://doi.org/10.1038/s42003-024-06980-2 (2024).

Zhang, J., Cao, R., Zhu, X., Zhou, H. & Wang, S. Distinct attentional characteristics of neurons with visual feature coding in the primate brain. Sci Adv 11 https://doi.org/10.1126/sciadv.adq0332 (2025).

Asaad, W. F., Santhanam, N., McClellan, S. & Freedman, D. J. High-performance execution of psychophysical tasks with complex visual stimuli in MATLAB. J Neurophysiol 109, 249–260, https://doi.org/10.1152/jn.00527.2012 (2013).

Freiwald, W. A. & Tsao, D. Y. Functional Compartmentalization and Viewpoint Generalization Within the Macaque Face-Processing System. Science 330, 845, https://doi.org/10.1126/science.1194908 (2010).

Freiwald, W. A., Tsao, D. Y. & Livingstone, M. S. A face feature space in the macaque temporal lobe. Nat Neurosci 12, 1187–1196, https://doi.org/10.1038/nn.2363 (2009).

Zhang, J., Zhu, X., Zhou, H. & Wang, S. Data release for “A large neuronal dataset for natural category-based free-gaze visual search in macaques”. OSF https://doi.org/10.17605/OSF.IO/SDGKR (2025).

Saleem, K. S. & Logothetis, N. K. A combined MRI and histology atlas of the rhesus monkey brain in stereotaxic coordinates. (Academic Press, 2012).

Acknowledgements

This research was supported by the NSF (BCS-1945230), NIH (R01MH129426), and AFOSR (FA9550-21-1-0088). The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

Author information


Contributions

J.Z., X.Z. and H.Z. designed research. J.Z. and X.Z. performed experiments. J.Z. and S.W. analyzed data. J.Z., H.Z. and S.W. wrote the paper. All authors discussed the results and contributed toward the manuscript.


Ethics declarations

Competing interests

The authors have declared that no competing interests exist.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Zhang, J., Zhu, X., Zhou, H. et al. A large neuronal dataset for natural category-based free-gaze visual search in macaques. Sci Data 12, 779 (2025). https://doi.org/10.1038/s41597-025-05130-5
