Abstract

This paper presents a novel approach to agricultural disease diagnostics that integrates Deep Learning (DL) techniques with Visual Question Answering (VQA) systems, specifically targeting the detection of wheat rust. Wheat rust is a pervasive and destructive disease that significantly reduces wheat production worldwide. Traditional diagnostic methods often require expert knowledge and time-consuming processes, making rapid and accurate detection challenging. We compiled a new dataset, WheatRustDL2024 (7998 images of healthy and infected leaves), specifically designed for VQA in the context of wheat rust detection, and used it to obtain the initial weights on the federated learning server. This dataset comprises high-resolution images of wheat plants, annotated with detailed questions and answers pertaining to the presence, type, and severity of rust infections. It also contains images collected from various sources that cover a wide range of conditions under which a wheat image may be taken (different lighting, obstructions in the image, etc.), which helps the model generalize. The trained model was federated using Flower. Following extensive analysis, ResNet was chosen as the central model. Our fine-tuned ResNet achieved an accuracy of 97.69% on the existing data. We also implemented BLIP (Bootstrapping Language-Image Pre-training) methods, which enable the model to understand complex visual and textual inputs, thereby improving the accuracy and relevance of the generated answers. The dual attention mechanism, combined with BLIP techniques, allows the model to simultaneously focus on relevant image regions and pertinent parts of the questions. We also created a custom dataset, WheatRustVQA, containing 1800 augmented images and their associated question-answer pairs. The model achieves an average BLEU score of 0.6235 on the test partition of this dataset.
This federated model is lightweight and can be integrated into mobile phones, drones, and similar devices without specialized hardware. Our results indicate that integrating deep learning with VQA for agricultural disease diagnostics not only accelerates the detection process but also reduces dependency on human experts, making it a valuable tool for farmers and agricultural professionals. This approach holds promise for broader applications in plant pathology and precision agriculture and could help address food security issues.


Introduction

Agricultural productivity is critically dependent on effective disease management strategies. Wheat rust, caused by various species of the Puccinia fungus, is one of the most devastating diseases affecting wheat crops worldwide. It can lead to significant yield losses, posing a threat to food security1. Traditional diagnostic methods for wheat rust involve manual inspection and expert analysis, which are time-consuming and do not scale to large agricultural fields2,3. There is therefore an urgent need for rapid, accurate, and scalable diagnostic tools. Deep learning (DL) methods have been successfully adapted for wheat rust image detection and classification, achieving classification accuracies in the range of 96–99%4,5,6. Sharma et al. proposed a deep learning-based framework using multilayer perceptrons, achieving an accuracy of 96.24%7. Various deep learning approaches to plant disease detection, such as that demonstrated by Sladojevic et al.8, have also been employed successfully for this purpose. Nigam et al.9 compared different computer vision models to identify the one with the highest accuracy on wheat rust data. Lu et al.10 made a significant advance by modifying the traditional VGGNet deep learning architecture to create an in-field wheat disease detection system, which achieved an accuracy of 97.95%. Notably, Singh et al.11 applied thermal imaging to the problem, studying the results of Maximum Likelihood Estimation, Support Vector Machines, Neural Networks, and other methods. Mi et al.12 proposed a model with an embedded attention mechanism that outperformed ResNet.

Across these DL methods, the most common and effective technique is transfer learning4, in which a model pre-trained on a large dataset is re-trained with ___domain-specific images. Because of this prior training on a large dataset, the model is more likely to generalize well and less likely to overfit. This approach enables the efficient transfer of knowledge from related tasks, and DL models are capable of processing complex, large images, making them well suited to analyzing high-resolution images5. However, these methods require a large amount of labeled training data and may not be suitable for unseen diseases. Furthermore, DL models are computationally expensive, which may be a limitation for some applications13. Li et al.14 performed semantic segmentation of wheat rust images to highlight the infected regions, opening a distinct line of research in the field. Singhi et al.6 developed an integrated pretrained model to detect wheat rust severity levels. Additionally, Maqsood et al.15 observed improved DL-based detection results when using Super-Resolution Generative Adversarial Networks (SRGANs) to enhance image quality. Mohanty et al.2 conducted an extensive study of the models available for transfer learning in plant disease detection, using a large dataset spanning 14 crop species and 26 diseases. All these studies simply classify wheat images by type or severity level. There is a lack of interactive systems that offer a detailed understanding of wheat rust images through a question-answering mechanism.

Visual Question Answering (VQA) models have gained significant attention in recent years as a means of enabling machines to understand and respond to natural language queries about visual content. VQA16 combines the computer vision and natural language processing domains: a VQA model takes an image and a question about the image as input and generates a relevant answer as output. According to Antol et al.17, VQA models can be categorized into two main types: open-ended and multiple-choice. Open-ended VQA models generate a free-form answer, while multiple-choice models select an answer from a set of predefined options. Recent advances in VQA have been driven by deep learning-based models, such as CNN-LSTM18 and Bilinear Attention Networks19, which have achieved state-of-the-art performance on benchmark datasets such as VQA v2.020. Integrating VQA systems with deep learning has the potential to transform agricultural diagnostics by providing detailed, context-aware information about plant diseases directly from images. This can significantly aid farmers and agricultural professionals in making timely and informed decisions. By providing rapid and accurate diagnostics, such an approach can help mitigate the impact of diseases like wheat rust, improving crop yields and contributing to global food security.

In this paper, we present a visual question answering system for wheat rust detection and diagnostics by integrating deep learning with VQA. To enhance the performance of our VQA system, we implement Bootstrapping Language-Image Pre-training (BLIP) methods16. BLIP leverages large-scale pre-training on diverse image-text pairs to improve the model’s ability to understand complex visual and textual inputs. A dual attention mechanism is used to focus on relevant regions in the image and pertinent parts of the question simultaneously. We introduce a new WheatRustVQA dataset specifically designed for this task, which includes images of wheat plants annotated with questions and answers related to rust infection. Our dataset covers various environmental conditions, plant growth stages, and rust types, ensuring the robustness and generalizability of the models trained on it. The primary objectives of the paper include:

- Creating and applying a specialized WheatRustVQA dataset.
- Assessing the performance of the fine-tuned BLIP model in accurately answering questions about wheat rust symptoms and severity.
- Comparing our fine-tuned model with ChatGPT, highlighting the strengths of ___domain-specific training.

Proposed work

This study included an evaluation of state-of-the-art Convolutional Neural Network (CNN) models for classification. Although previous studies have addressed similar problems, the dataset used in this study is more diverse, which helps the model generalize better. The models were evaluated, and the most accurate model was chosen as the central model. In parallel, text data was generated and carefully vetted by industry professionals and agricultural research scientists, and our Visual Question Answering model was trained on it, creating a conversational interface for the detection of wheat rust. Figures 1a and 1b show the overall procedure and the detailed core model structure adopted in the study.

We use federated learning to further enhance the classification system. Federated learning improves data privacy, because raw data can remain on the farms where it is collected and only model updates are shared. It can also be used to integrate machine learning models into drones for real-time crop monitoring and analysis. This also minimizes data transmission and central storage. However, federated learning increases the complexity of model training and deployment. In addition, model drift may occur, in which decentralized models gradually diverge from one another.

Experimental setup

Both the federated learning and the Visual Question Answering models were trained on an NVIDIA RTX™ A6000 GPU with 48 GB of memory.

Novelty of the proposed approach

Dataset

A distinctive aspect of our approach to wheat rust detection is the use of a wide-ranging dataset. This represents a departure from previous investigations that used narrow datasets or analyzed only similar samples. For example, our WheatRustDL2024 dataset includes images of wheat leaves showing different forms and degrees of rust infection, as well as healthy leaves, taken under varying lighting conditions, orientations, and resolutions, among other factors. This diversity exposes the model to many more real-world situations during training, which strengthens the system’s robustness, generality, and adaptability. With this approach, our model can detect and classify wheat rust accurately across a wide range of environmental conditions, contributing to better disease control methods in agriculture.

Visual question answering

A further novel aspect of our research is the deployment of a Visual Question Answering (VQA) system tailored specifically for wheat rust detection. VQA lies at the intersection of computer vision and natural language processing, enabling machines to comprehend and respond to questions posed about images. Our VQA model is trained on a diverse dataset of wheat rust images encompassing various rust severity levels, leaf orientations, and lighting conditions. By leveraging deep learning architectures and attention mechanisms, the VQA system analyzes images of rust-infected wheat leaves and provides detailed answers to questions about their condition. Applying VQA to agricultural disease detection holds promise for rapid and accurate assessment of crop health, helping farmers and agricultural experts make informed decisions about disease management and crop protection.

Study Data: images and text

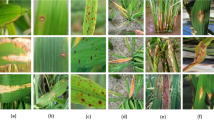

The WheatRustDL2024 dataset (Fig. 2), a carefully curated collection of images from around the world, was compiled to facilitate the development of robust machine learning models for wheat rust detection. The dataset also includes images gathered by the authors from agricultural fields across India.

Image data

This dataset comprises 7998 images carefully selected from diverse sources, including field-captured images and existing datasets. The images exhibit a range of conditions, including varying lighting, orientations, and resolutions, and thus represent the diverse scenarios under which a wheat leaf may be examined. The inclusion of images of healthy leaves, as well as leaves infected with stripe rust and brown rust, enables the development of a well-generalized model capable of distinguishing between these conditions. The WheatRustDL2024 dataset (Table 1) provides a robust foundation for training and evaluating machine learning models for wheat rust detection and diagnosis. All images were resized to a uniform 224 × 224 pixels and normalized, and image flips, random zooming, and rotations were used to augment the dataset.
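The preprocessing and augmentation steps above can be sketched in plain Python. This is a minimal illustration, not the pipeline used in the study: it assumes grayscale images stored as nested lists, nearest-neighbour resampling, a hypothetical normalization mean and standard deviation of 0.5, a zoom factor of up to 1.2×, and rotations of up to ±15°, since the actual parameters are not reported. In practice an image library such as torchvision would normally be used.

```python
import math
import random

Image = list[list[float]]  # grayscale image as rows of pixel values in [0, 255]


def resize_nearest(img: Image, out_h: int = 224, out_w: int = 224) -> Image:
    """Nearest-neighbour resize to a fixed size (224 x 224 as in the text)."""
    in_h, in_w = len(img), len(img[0])
    return [[img[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
            for r in range(out_h)]


def hflip(img: Image) -> Image:
    """Mirror the image left to right."""
    return [row[::-1] for row in img]


def random_zoom(img: Image, rng: random.Random, max_zoom: float = 1.2) -> Image:
    """Zoom in by cropping a central window of random scale and resizing it back."""
    h, w = len(img), len(img[0])
    scale = rng.uniform(1.0, max_zoom)
    ch, cw = max(1, int(h / scale)), max(1, int(w / scale))
    top, left = (h - ch) // 2, (w - cw) // 2
    crop = [row[left:left + cw] for row in img[top:top + ch]]
    return resize_nearest(crop, h, w)


def rotate(img: Image, degrees: float, fill: float = 0.0) -> Image:
    """Rotate about the centre: each output pixel samples its nearest source pixel
    (inverse mapping); positions that map outside the image get `fill`."""
    h, w = len(img), len(img[0])
    cy, cx = (h - 1) / 2, (w - 1) / 2
    t = math.radians(degrees)
    cos_t, sin_t = math.cos(t), math.sin(t)
    out = [[fill] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            y, x = r - cy, c - cx
            sr = round(cy + y * cos_t - x * sin_t)
            sc = round(cx + y * sin_t + x * cos_t)
            if 0 <= sr < h and 0 <= sc < w:
                out[r][c] = img[sr][sc]
    return out


def normalize(img: Image, mean: float = 0.5, std: float = 0.5) -> Image:
    """Scale pixels to [0, 1], then standardise with an assumed mean and std."""
    return [[(p / 255.0 - mean) / std for p in row] for row in img]


def augment(img: Image, rng: random.Random) -> Image:
    """Resize, randomly flip, zoom and rotate, then normalise."""
    out = resize_nearest(img)
    if rng.random() < 0.5:
        out = hflip(out)
    out = random_zoom(out, rng)
    out = rotate(out, rng.uniform(-15, 15))
    return normalize(out)
```

Flips, zooms, and small rotations are treated as label-preserving here, on the assumption that rust type and severity do not depend on leaf orientation or framing.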

Text data

The text data used in this study was gathered through a careful process: questions relevant to farmers were created, and corresponding answers were generated using ChatGPT. A template was crafted from the generated answers and then factually corrected to ensure accuracy. The corrected descriptions were forwarded to industry specialists, agricultural researchers, and scientists, who vetted each description. Each description was written for a group of images showing leaves with the same type and severity level of rust. These descriptions served as ground truth for each batch of images, facilitating the training and evaluation of a visual question-answering interface for wheat rust detection. This process was repeated systematically for each class of rust, producing a comprehensive and reliable dataset for model development and testing.

Dataset Collection and Annotation

To create a robust VQA system for wheat rust detection, we developed a comprehensive dataset comprising high-resolution images of wheat plants. The dataset includes images captured under various environmental conditions and at different plant growth stages to ensure diversity and generalizability. Each image is annotated with multiple questions and corresponding answers related to the presence, type, and severity of rust infections.

Source

Images were collected from agricultural fields, research institutions, and publicly available datasets.

Diversity

The dataset includes three classes of wheat leaves (“yellow rust”, “brown rust”, and “healthy leaves”). Samples from each class are shown in Fig. 2.

Resolution

The images are high-resolution, capturing the fine details necessary for accurate diagnosis.

Questions

Each image is annotated with questions (Table 2) that could be asked by farmers or agricultural professionals, such as “Is there rust on this plant?”, “What type of rust is present?”, and “What is the potential impact on the yield?”

Answers

Corresponding answers are provided based on expert analysis and validated by multiple reviewers to ensure accuracy. Answers generated by ChatGPT-4 are also included for comparison.

WheatRustVQA dataset

A total of 40 high-resolution images were collected, comprising 10 images each of yellow rust, brown rust, black rust-infested leaves, and healthy leaves (Table 3). For each of these images, 15 questions and their corresponding accurate answers were meticulously curated, resulting in an initial dataset of 600 data points, where each data point consists of an image, a question, and the subsequent answer. To augment the dataset and enhance model training, image augmentation techniques were applied, including horizontal and vertical flips of all images. This augmentation increased the dataset to a final size of 1800 image-text pairs, thus improving the robustness and generalizability of the model.
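The counts above follow from pairing each image variant with its question-answer pairs: 40 images × 15 pairs gives 600 data points, and adding a horizontally and a vertically flipped copy of each image triples this to 1800. The sketch below reproduces that arithmetic; the `VQASample` record, the class and transform names, and the placeholder questions are hypothetical, and the real questions are those curated for the study.

```python
from dataclasses import dataclass

CLASSES = ["yellow_rust", "brown_rust", "black_rust", "healthy"]
TRANSFORMS = ["original", "hflip", "vflip"]  # each image plus its two flips


@dataclass(frozen=True)
class VQASample:
    image_id: str   # hypothetical identifier of the source image
    transform: str  # which flip (if any) is applied when the image is loaded
    question: str
    answer: str


def apply_transform(pixels: list[list[float]], transform: str) -> list[list[float]]:
    """Apply the named flip to an image stored as rows of pixels."""
    if transform == "hflip":
        return [row[::-1] for row in pixels]
    if transform == "vflip":
        return pixels[::-1]
    return pixels


def build_dataset(qa_by_image: dict[str, list[tuple[str, str]]]) -> list[VQASample]:
    """Pair every image variant with every curated question-answer pair.

    Flips do not change rust type or severity, so the answers are reused unchanged.
    """
    samples = []
    for image_id, qa_pairs in qa_by_image.items():
        for transform in TRANSFORMS:
            for question, answer in qa_pairs:
                samples.append(VQASample(image_id, transform, question, answer))
    return samples


# Placeholder annotations: 10 images per class, 15 question-answer pairs per image.
qa_by_image = {
    f"{cls}_{i:02d}": [(f"question {q}", f"answer {q}") for q in range(15)]
    for cls in CLASSES
    for i in range(10)
}
dataset = build_dataset(qa_by_image)
print(len(qa_by_image) * 15, len(dataset))  # 600 1800
```

Keeping `image_id` on every sample makes it easy to split by source image, so that flipped copies of a test image do not also appear in the training set.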

Central model

Transfer learning

Nigam et al.9 studied the behavior of CNN models on wheat rust classification; a major conclusion was that applying transfer learning to pretrained models gave better results than training a model from scratch on the dataset. Training from scratch also took significantly longer, making it less feasible. Therefore, in this study, we aim for higher accuracy by fine-tuning state-of-the-art computer vision models. The central model was chosen after a holistic evaluation of state-of-the-art CNN classification models. The unique characteristics of the dataset led to insights that contradicted findings from previous studies. The evaluated models included ResNet21, VGG22, EfficientNet23, and InceptionNet24, each of which was assessed on accuracy, precision, recall, and F1 score. A brief description of these models is given in Table 4. In this evaluation, ResNet demonstrated superior performance across these metrics and was therefore chosen as the central model for the federated setting.
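The model comparison relies on accuracy, precision, recall, and F1 score. The text does not state how per-class scores were averaged, so the sketch below assumes macro averaging (an unweighted mean over the three classes); weighted averaging would instead weight each class by its number of samples. Labels are integer class indices, and the small example is invented purely for illustration.

```python
def classification_metrics(y_true: list[int], y_pred: list[int], n_classes: int) -> dict[str, float]:
    """Accuracy plus macro-averaged precision, recall and F1 from label lists."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and of equal length")
    precisions, recalls, f1s = [], [], []
    for k in range(n_classes):
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == k and p == k)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != k and p == k)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t == k and p != k)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        precisions.append(precision)
        recalls.append(recall)
        f1s.append(f1)
    accuracy = sum(1 for t, p in zip(y_true, y_pred) if t == p) / len(y_true)
    return {
        "accuracy": accuracy,
        "precision": sum(precisions) / n_classes,
        "recall": sum(recalls) / n_classes,
        "f1": sum(f1s) / n_classes,
    }


# 0 = Yellow Rust, 1 = Brown Rust, 2 = Healthy Leaf (label order is an assumption)
y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 1, 1, 1, 2, 0]
print(classification_metrics(y_true, y_pred, n_classes=3))
```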

Fine-tuning Process. We fine-tuned generalized state-of-the-art Convolutional Neural Network models for our specific use case: classifying wheat leaf images into three classes (Yellow Rust, Brown Rust, and Healthy Leaf). The WheatRustDL2024 dataset, with 7998 images across the three classes, was used for fine-tuning with an 80/20 train/test split. In all models, the last layer was unfrozen and a final three-class classification layer was added to suit the scope of our study. The optimiser used for fine-tuning was Stochastic Gradient Descent (SGD) with a learning rate of 0.001, a momentum of 0.85, and a weight decay of 1e-5 for regularisation. Cross-entropy was used as the loss function for all models.
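The optimiser settings above can be illustrated with a small, self-contained sketch of SGD with momentum and L2 weight decay training a new three-class softmax layer under cross-entropy loss. The update follows the convention used by PyTorch's `torch.optim.SGD`: weight decay is added to the gradient, then `v = momentum * v + g`, then `w = w - lr * v`. As simplifying assumptions, the backbone is treated as frozen and represented by hypothetical 2-D feature vectors, training is full-batch, and the toy data are invented; in the study the features come from the pretrained CNNs.

```python
import math


def softmax(logits: list[float]) -> list[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def forward(W, b, x):
    """Class probabilities of a linear softmax head for one feature vector."""
    return softmax([sum(wj * xj for wj, xj in zip(wk, x)) + bk for wk, bk in zip(W, b)])


def cross_entropy(W, b, X, y) -> float:
    """Mean negative log-probability of the true class."""
    return -sum(math.log(forward(W, b, x)[label]) for x, label in zip(X, y)) / len(X)


def train_head(X, y, n_classes=3, epochs=200, lr=0.001, momentum=0.85, weight_decay=1e-5):
    """Full-batch SGD with momentum and L2 weight decay (PyTorch-style update)."""
    d = len(X[0])
    W = [[0.0] * d for _ in range(n_classes)]
    b = [0.0] * n_classes
    vW = [[0.0] * d for _ in range(n_classes)]
    vb = [0.0] * n_classes
    for _ in range(epochs):
        gW = [[0.0] * d for _ in range(n_classes)]
        gb = [0.0] * n_classes
        for x, label in zip(X, y):
            p = forward(W, b, x)
            for k in range(n_classes):
                # d(cross-entropy)/d(logit_k) = p_k - 1[k == label], averaged over the batch
                err = (p[k] - (1.0 if k == label else 0.0)) / len(X)
                gb[k] += err
                for j in range(d):
                    gW[k][j] += err * x[j]
        for k in range(n_classes):
            for j in range(d):
                g = gW[k][j] + weight_decay * W[k][j]
                vW[k][j] = momentum * vW[k][j] + g
                W[k][j] -= lr * vW[k][j]
            g = gb[k] + weight_decay * b[k]
            vb[k] = momentum * vb[k] + g
            b[k] -= lr * vb[k]
    return W, b


# Hypothetical frozen-backbone features: 0 = Yellow Rust, 1 = Brown Rust, 2 = Healthy Leaf
X = [[2.0, 0.0], [1.8, 0.3], [0.0, 2.0], [0.2, 1.7], [-2.0, -2.0], [-1.7, -2.2]]
y = [0, 0, 1, 1, 2, 2]
W, b = train_head(X, y)
print(cross_entropy(W, b, X, y))  # lower than the initial loss ln(3)
```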

Subsequently, the model was federated using Flower25, a framework that integrates mechanisms for detecting and mitigating Byzantine attacks. This helps ensure the integrity of model aggregation in decentralized environments, further enhancing the robustness and reliability of the federated learning system.

Federating strategy

Federated averaging (FedAvg), a distributed optimization algorithm central to our federated learning approach, enables collaborative model training while preserving data privacy. Table a represents the federated averaging algorithm used in this study. Each device, such as one monitoring wheat rust in the field, refines a local model on its own data and sends model updates, not raw data, to a central server. The server aggregates these updates by averaging, improving the global model without exposing individual data. This enables robust learning across diverse data sources, which is crucial for the privacy and data security requirements of our agricultural application. The mathematical representation of FedAvg can be expressed as Eq. (1)

where α is a hyperparameter that sets the weight of each client’s local update, i.e., how strongly that update influences the central model on the server. In this study, α is chosen to be proportional to the number of samples in the client’s dataset. Therefore, for a local client with a dataset of \(N\) images, \(\alpha \propto N\).
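A minimal sketch of the standard FedAvg aggregation step is shown below, assuming the client weights are normalized to sum to one, \(\alpha_k = N_k / \sum_j N_j\), which matches the choice \(\alpha \propto N\) above. The server then computes \(w_{t+1} = \sum_k \alpha_k \, w_{t+1}^{k}\), where \(w_{t+1}^{k}\) are the parameters returned by client \(k\). This is the textbook form and may differ in detail from Eq. (1); parameters are flattened into plain lists of floats, and the client sizes in the example are hypothetical.

```python
def fedavg(client_weights: list[list[float]], client_sizes: list[int]) -> list[float]:
    """Sample-weighted average of client parameter vectors (standard FedAvg)."""
    if len(client_weights) != len(client_sizes) or not client_weights:
        raise ValueError("need one size per client and at least one client")
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("total number of samples must be positive")
    aggregated = [0.0] * len(client_weights[0])
    for weights, n_samples in zip(client_weights, client_sizes):
        alpha = n_samples / total  # alpha proportional to the client's dataset size N
        for i, value in enumerate(weights):
            aggregated[i] += alpha * value
    return aggregated


# Two hypothetical farms: the one with 300 images gets three times the weight.
global_weights = fedavg([[1.0, 2.0], [3.0, 4.0]], [100, 300])
print(global_weights)  # [2.5, 3.5]
```

Flower’s built-in `FedAvg` strategy (`flwr.server.strategy.FedAvg`) similarly weights the parameters returned by clients by their reported number of training examples, so this rule does not need to be re-implemented when using that framework.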

VQA Model Architecture

Our proposed method for visual question answering in agricultural disease diagnostics, specifically for wheat rust, builds on the BLIP (Bootstrapping Language-Image Pre-training)16 model, which we chose as our foundation because of its strong performance on vision-language tasks. BLIP is a unified vision-language model that performs well on a range of tasks, including visual question answering. Its architecture consists of a vision transformer (ViT) for image encoding, a text transformer for language understanding and generation, and a multimodal fusion module that combines visual and textual features (Fig. 3). This architecture allows effective joint reasoning over image and text inputs, making it suitable for our agricultural disease diagnostics task.

Fine-tuning Process. We fine-tuned the pre-trained BLIP model on our custom WheatRustVQA dataset. Data preprocessing involves resizing and normalizing each image to match BLIP’s input requirements and tokenizing the questions and answers with BLIP’s tokenizer. We use the AdamW optimizer with a learning rate of \(10^{-5}\) and a batch size of 32. We employ a combination of cross-entropy loss for answer prediction and contrastive loss to enhance the alignment between visual and textual features. We initially freeze the vision transformer to preserve its learned visual features and then gradually unfreeze layers, allowing ___domain-specific visual feature adaptation. By fine-tuning BLIP on our specialized wheat rust VQA dataset, we aim to create a model that accurately interprets visual cues in wheat plant images and provides relevant answers to diagnostic questions, thereby assisting in the identification and assessment of wheat rust infections.
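The combined objective described above can be sketched as the sum of a token-level cross-entropy on the ground-truth answer and a symmetric image-text contrastive (InfoNCE-style) term over a batch. This is an illustrative sketch, not BLIP’s own implementation: the weighting factor `lam`, the temperature of 0.07, and the toy embeddings and logits are assumptions, and in practice these losses would be computed on model outputs within a deep learning framework.

```python
import math


def log_softmax(z: list[float]) -> list[float]:
    m = max(z)
    log_sum = m + math.log(sum(math.exp(v - m) for v in z))
    return [v - log_sum for v in z]


def answer_cross_entropy(step_logits: list[list[float]], target_ids: list[int]) -> float:
    """Mean negative log-likelihood of the ground-truth answer tokens."""
    nll = [-log_softmax(logits)[t] for logits, t in zip(step_logits, target_ids)]
    return sum(nll) / len(nll)


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def contrastive_loss(img_emb, txt_emb, temperature: float = 0.07) -> float:
    """Symmetric InfoNCE: the i-th image and the i-th text form the positive pair."""
    n = len(img_emb)
    sim = [[cosine(i, t) / temperature for t in txt_emb] for i in img_emb]
    image_to_text = sum(-log_softmax(sim[r])[r] for r in range(n)) / n
    text_to_image = sum(-log_softmax([sim[r][c] for r in range(n)])[c] for c in range(n)) / n
    return (image_to_text + text_to_image) / 2


def total_loss(step_logits, target_ids, img_emb, txt_emb, lam: float = 1.0) -> float:
    """Answer cross-entropy plus a weighted image-text alignment term."""
    return answer_cross_entropy(step_logits, target_ids) + lam * contrastive_loss(img_emb, txt_emb)


# Toy batch of two image-question pairs with 2-D embeddings and a 4-token vocabulary.
images = [[1.0, 0.0], [0.0, 1.0]]
texts = [[0.9, 0.1], [0.1, 0.9]]
logits = [[2.0, 0.1, 0.1, 0.1], [0.1, 0.1, 2.0, 0.1]]
print(total_loss(logits, [0, 2], images, texts))
```

The contrastive term is small when each image is most similar to its own text and large when pairs are mismatched, which is what pushes visual and textual features into alignment.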

In this fine-tuning process, the model’s generated answers for the WheatRustVQA image-question pairs were trained against the ground-truth answers. Because the dataset is small, it was split into a training set of 1750 tuples and a test set of 50 tuples.

Benchmarking

We benchmark our VQA model using the BLEU (Bilingual Evaluation Understudy) score. BLEU measures the similarity between machine-generated and human-written text by computing the modified (clipped) precision of n-grams in the generated text with respect to the reference text, combined with a brevity penalty for overly short outputs. The score ranges from 0 (no similarity) to 1 (perfect match).
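The BLEU computation described above can be sketched as follows for a single reference answer: clipped n-gram precisions for n = 1 to 4 are combined by a geometric mean and multiplied by the brevity penalty. This is a minimal, unsmoothed sentence-level version with whitespace tokenization. The study’s exact BLEU configuration (tokenization, maximum n-gram order, smoothing, averaging over the test set) is not specified, so established implementations such as NLTK’s `sentence_bleu` or sacreBLEU can give different numbers.

```python
import math
from collections import Counter


def ngram_counts(tokens: list[str], n: int) -> Counter:
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def sentence_bleu(reference: str, candidate: str, max_n: int = 4) -> float:
    """Unsmoothed BLEU of one candidate against one reference, in [0, 1]."""
    ref, cand = reference.split(), candidate.split()
    if not cand:
        return 0.0
    log_precision_sum = 0.0
    for n in range(1, max_n + 1):
        cand_counts = ngram_counts(cand, n)
        ref_counts = ngram_counts(ref, n)
        total = sum(cand_counts.values())
        # Clip each candidate n-gram count by its count in the reference.
        clipped = sum(min(count, ref_counts[g]) for g, count in cand_counts.items())
        if total == 0 or clipped == 0:
            return 0.0
        log_precision_sum += math.log(clipped / total)
    geometric_mean = math.exp(log_precision_sum / max_n)
    # Brevity penalty: penalise candidates shorter than the reference.
    bp = 1.0 if len(cand) > len(ref) else math.exp(1 - len(ref) / len(cand))
    return bp * geometric_mean


reference = "the leaf shows severe yellow rust infection"
print(sentence_bleu(reference, "the leaf shows severe yellow rust"))  # about 0.846
```

In the example every n-gram of the candidate appears in the reference, so the score is reduced only by the brevity penalty. Without smoothing, any answer shorter than four tokens, or with no matching 4-gram, scores 0, which is why sentence-level evaluations often apply a smoothing method.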


Results

Model evaluation results

Central Model

The study began with a thorough analysis of state-of-the-art deep learning models to select the central model. This initial study covered InceptionNet v3, VGG 16, ResNet 50 and 152, and EfficientNet B0, B1, B2, and B3. The accuracies of these models on the raw dataset and on the main dataset after augmentation, WheatRustDL2024, are given in Table 5.

For illustration, Figs. 4 and 5 show the accuracy and loss curves for ResNet 50 and 152 and EfficientNet B0 and B1. Initially, the models were trained on raw data. The InceptionNet v3 model achieved a training accuracy of 67.68% and a validation accuracy of 67.98%. The corresponding [training, validation] values for VGG 16, ResNet 50, ResNet 152, EfficientNet B0, EfficientNet B1, EfficientNet B2, and EfficientNet B3 are [88.30%, 88.81%], [98.67%, 95.81%], [98.41%, 94.68%], [96.50%, 95.68%], [95.26%, 94.81%], [95.09%, 94.18%], and [67.27%, 75.38%], respectively. Most models performed better on the main dataset with preprocessing and augmentation. Notably, the ResNet models each improved in accuracy by 4–5%, and, even more notably, EfficientNet B3 improved by almost 20% over the model trained on raw data. Accuracy on raw data is generally lower than accuracy on the WheatRustDL2024 dataset. This difference can be attributed to the complexity of the raw data and the challenges of accurately labelling and processing it; the WheatRustDL2024 dataset may have been preprocessed or labelled more accurately, leading to improved model performance. The key determinant of high accuracy is therefore the model’s ability to generalize, which was limited for models trained on raw data and considerably better for models trained on augmented data. Owing to its high accuracy on WheatRustDL2024, ResNet-50 was chosen as the central model in the federated environment. The model has downloadable weights and is continually updated through federated averaging.

Analysis results of visual question answering

Figure 6 illustrates some of the questions and answers used in the VQA model. The fine-tuned VQA model reached an average language-modelling loss of 0.0071 and a test BLEU score of 0.6235 on our dataset of images and question-answer pairs. Fig. 7 presents a snippet from our model testing, in which a random image of a wheat leaf was input along with prompts requesting more details about it. To reduce the risk of overfitting, image augmentation was applied to increase the dataset size. Given the small dataset, we chose to fine-tune a pre-trained model, and the results were tested on a separate set of images. The loss curve for the proposed VQA model, plotting the total loss across the dataset after each epoch, is presented in Fig. 7.

Discussions

Wheat rust detection remains a crucial problem. Stem rusts, in particular, are a significant threat to wheat production, and as global food security becomes increasingly important, ensuring the health of cereal crops like wheat is essential. Accurate detection allows growers to make timely management decisions, preventing further spread and minimizing yield losses. In this study, we therefore provided a federated setting for a deep learning model and a Visual Question Answering system that extend current research on tackling wheat rust.

Federated Deep Learning with better accuracy using varied datasets

This study began with a comparison of state-of-the-art convolutional neural network models, in which we achieved notable accuracies with the ResNet and EfficientNet architectures. Previous studies on wheat rust identification26,27 used a narrow range of classes and highly specific datasets. Nigam et al.9 conducted a thorough analysis with a larger dataset, although it was less diverse, achieving model accuracies of 95–96% with EfficientNet architectures (the highest being 95.76%) and 95.69% with ResNet-152. They also reported an accuracy of 90.89% for VGGNet 19, comparable to the 88.36% obtained with VGGNet 16 in our study, a lighter model with 16 layers compared with the 19 in VGGNet 19.

Among deep learning applications in wheat rust detection, Chang et al.28 proposed an improved DenseNet architecture with an added attention network, evaluated on the Wheat Common Disease Dataset, the Wheat Rusts Dataset, and the Common Poaceae Disease Dataset, achieving accuracies of 98.32%, 96.31%, and 95.00%, respectively. Adem et al.29 conducted a comparative analysis of deep learning parameters such as layers, activation functions, and optimisers, concluding that a 5-layer CNN with the Leaky ReLU activation function and the Nadam optimiser gave the highest accuracy in their study on a custom dataset, at 97.36%, an improvement from 90.87% with their default parameters. Liu et al.30 worked on leaf segmentation, creating a mobile-based deep learning model that achieved a Mean Intersection over Union (MIoU) of 98.65%. Khan et al.31 implemented a Convolutional Neural Network on a dataset of 407 wheat rust images, aiming to integrate the model into edge devices, and achieved an accuracy of 98.77% on their test set. Nigam et al.32 added convolutional block attention mechanisms to the EfficientNet B0 model to create a custom deep learning architecture, which achieved an accuracy of 98.70% and an F1 score of 98% on their test dataset. Broadly, the high performance of deep learning architectures in our study is consistent with previous analyses of deep learning architectures for wheat rust classification, while our study emphasizes a more generalized dataset covering varied conditions.

The higher efficiency associated with federated learning observed in this study is in line with previous studies on disease detection. Federated learning is a natural step forward in the deep learning paradigm and one of the few efficient training approaches that account for both privacy preservation and accessibility. It has already seen widespread adoption, for example in medical disease detection, where it keeps sensitive patient data private. For instance, a federated learning approach was used to predict hospitalizations for cardiac events using a binary classification supervised model33. In advertising, Facebook has used federated learning to rebuild its ad system, running algorithms locally on phones to determine which ads a user finds interesting while respecting user privacy34.

Beyond general industry applications, federated learning has also been applied to plant disease identification. Kabala et al.35 analyzed computer vision models in a federated setting with Federated Averaging (FedAvg) as the strategy. They additionally tested Vision Transformers (ViTs), which are less relevant to this study because our data contain fewer background features, making a transformer layer redundant. Similarly, Hari et al.36 proposed an improved federated learning model for plant leaf disease detection by analyzing FedAvg and FedAdam.

Federated learning is a step forward in the detection and classification of disease in agriculture, since it allows the use of decentralized data and achieves better model performance. Past studies in agriculture have supported this idea because federated learning addresses data privacy35,36,37,38. These studies have demonstrated the feasibility of federated learning for agricultural tasks such as crop yield prediction and disease diagnosis. Our research raises the question of data privacy in agricultural contexts and underlines the need for agricultural data to be processed securely and in a decentralized manner. Moreover, our study demonstrates the versatility of federated learning on edge devices, which can provide real-time processing of data reflecting farming activities. Because our model is trained on a diverse, general dataset, it is intended for robust and precise disease detection across different agricultural settings. Our work differs from the federated learning studies mentioned above in that it concentrates on wheat rust, a disease that affects wheat crops worldwide. The study thus examines the applicability of federated learning to wheat rust detection, contributing to better and more efficient disease management strategies in the agricultural sector.

To the best of our knowledge, federated learning has not previously been applied to wheat rust detection, where multiple agricultural bodies could collaborate to improve a centralized architecture using their respective diverse datasets while preserving their data privacy. Additionally, the model’s lightweight nature makes it suitable for integration with devices such as smartphones.

In this study, we proposed a federated central model that naturally improves on the Federated Averaging (FedAvg) strategy, as it gives a higher weight to clients that provide larger datasets. Future work in this field could explore other federating strategies, or a simulation in which the dataset is divided among multiple clients to identify an optimal strategy. Apart from Federated Averaging, several other federated learning strategies have been proposed to improve the performance and efficiency of distributed machine learning. For instance, Federated Stochastic Gradient Descent (FedSGD) has been shown to converge faster than FedAvg in certain scenarios39. Another approach is Federated Proximal (FedProx), which incorporates a proximal term to handle heterogeneous data distributions across clients40. Additionally, Federated Multi-Task Learning (FedMTL) has been proposed to learn multiple related tasks simultaneously, leveraging shared knowledge across tasks41. These alternative strategies offer different trade-offs between communication efficiency, model accuracy, and robustness to non-IID data.

Another open direction in Federated Learning is the choice of topology. In this study, we work with a centralised model that each client can access locally. Several topologies exist in Federated Learning; this study focuses on the standard federated learning topology, in which a central server aggregates client updates into a single parent model. Experimentation with other topologies, such as star, tree and decentralised topologies, may also be a fruitful direction for efficient wheat rust detection.
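As one illustration of how a tree topology relates to the standard centralised one: if each intermediate aggregator (for example, one per region) forwards its sample-weighted average together with its total example count, the root's weighted average equals flat FedAvg over all clients. The sketch below checks this on toy data; the regional grouping, names and values are hypothetical, and real hierarchical systems may aggregate differently (for example asynchronously or with partial participation).

```python
from typing import Dict, List, Tuple

# (flattened model parameters, number of training examples behind them)
Update = Tuple[List[float], int]


def weighted_average(updates: List[Update]) -> Update:
    """Sample-weighted mean of parameters, returned with the summed example count."""
    total = sum(n for _, n in updates)
    dim = len(updates[0][0])
    avg = [sum(p[i] * n for p, n in updates) / total for i in range(dim)]
    return avg, total


def flat_aggregate(clients: List[Update]) -> List[float]:
    """Standard centralised topology: one server averages every client directly."""
    return weighted_average(clients)[0]


def tree_aggregate(regions: Dict[str, List[Update]]) -> List[float]:
    """Two-level tree: each regional aggregator averages its clients and forwards
    (average, example count); the root then averages the regional results."""
    regional = [weighted_average(clients) for clients in regions.values()]
    return weighted_average(regional)[0]


if __name__ == "__main__":
    # Hypothetical grouping of clients into two regions.
    regions = {
        "region_a": [([1.0, 0.0], 50), ([2.0, 1.0], 150)],
        "region_b": [([4.0, 2.0], 300)],
    }
    all_clients = [c for clients in regions.values() for c in clients]
    print(flat_aggregate(all_clients), tree_aggregate(regions))  # same parameters
```

The equivalence holds only because example counts travel up the tree; if intermediate nodes forwarded unweighted averages, regions with few images would be over-represented.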

Multi-modal interactive interfaces

Interactive AI systems have revolutionized various industries by enabling dynamic human-machine interactions. These systems leverage natural language processing (NLP) and machine learning techniques to understand and respond to user queries, commands, and requests in real time. They are deployed in virtual assistants, customer service chatbots, educational platforms, and smart home devices, enhancing user experiences and streamlining tasks. In particular, Visual Question Answering (VQA) models have emerged as a powerful application of interactive AI, allowing users to pose questions about visual content and receive natural-language answers.

The importance of the VQA approach alongside federated classification in this study is highlighted by Ryo et al.42, who emphasized the need to incorporate social and human perspectives into Artificial Intelligence. Their study noted an abundance of research using CNNs for image analysis and recurrent neural networks for time-series analysis, and they advocated multimodal AI, that is, models that take data of different types such as images, audio, text and smell. VQA models follow this direction by combining image features and text features within a single trained model. Natural Language Processing has also been applied to plant disease detection: Guofeng et al.43 proposed a fine-tuned BERT for question classification in a question answering system for common crop diseases, and Jain et al.44 proposed an agricultural question answering system. These are purely NLP applications and do not integrate image data to achieve multimodality.

Arora et al.45 applied Long Short-Term Memory (LSTM) Recurrent Neural Network architectures together with a Convolutional Neural Network for classification, thereby achieving multimodality; however, unlike in VQA models, the two modalities are not integrated into a single model. Along similar lines, Shang et al.46 applied LSTMs together with CNN models in agriculture. For fruit tree disease estimation and subsequent decision-making, Lan et al.47 created a Visual Question Answering model that uses Tucker decomposition to fuse image features with text features. Similarly, Lu et al.48 worked towards a multimodal interface for agricultural disease detection by applying multimodal transformer architectures to build a question-answering model.
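Tucker-decomposition fusion, the technique attributed to Lan et al.47 above, replaces a full bilinear interaction between a question vector q and an image vector v with a small core tensor: each input is projected to a low rank, the two projections interact through the core, and the result is mapped to the output space. The numpy sketch below shows only this fusion step with random weights; the dimensions, the tanh nonlinearity on the projections and all names are assumptions for illustration, and it is not a reconstruction of Lan et al.'s trained model.

```python
import numpy as np


def tucker_fusion(q, v, W_q, W_v, core, W_o):
    """Tucker-style bilinear fusion of a question vector q and an image vector v.

    q: (d_q,), v: (d_v,)
    W_q: (d_q, r_q), W_v: (d_v, r_v)   factor matrices projecting each modality
    core: (r_q, r_v, r_o)              small core tensor holding the interactions
    W_o: (r_o, d_out)                  output factor matrix
    """
    q_proj = np.tanh(q @ W_q)  # question features projected to rank r_q
    v_proj = np.tanh(v @ W_v)  # image features projected to rank r_v
    # Contract both projections with the core: t_k = sum_ij q_i * v_j * core_ijk
    t = np.einsum("i,j,ijk->k", q_proj, v_proj, core)
    return t @ W_o  # fused vector mapped to (hypothetical) answer-space scores


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    d_q, d_v, r_q, r_v, r_o, d_out = 300, 512, 32, 32, 16, 10  # hypothetical sizes
    z = tucker_fusion(
        rng.standard_normal(d_q), rng.standard_normal(d_v),
        rng.standard_normal((d_q, r_q)), rng.standard_normal((d_v, r_v)),
        rng.standard_normal((r_q, r_v, r_o)), rng.standard_normal((r_o, d_out)),
    )
    print(z.shape)  # (10,)
```

The core holds r_q · r_v · r_o parameters (here 16,384) instead of d_q · d_v · d_out (over 1.5 million) for an unfactorised bilinear map, which is the motivation for the decomposition.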

In the context of this study, generalisability refers to the model's ability to correctly handle unseen data that is similar to the data it was trained on. Our dataset supports this in that it contains images of crops with similar diseases captured from different camera angles and under different lighting conditions, with varying levels of disease severity. The model is therefore trained on the different conditions under which a farmer or sensor might capture an image of a wheat leaf, including diverse environmental factors and image qualities. This matters because it allows the model to be applied in different agricultural settings, from small-scale farms to large-scale industrial operations, to detect wheat rust in a variety of scenarios. However, to restate the scope of this study, generalisability does not refer to generalisation across domains, such as (but not limited to) plant diseases other than wheat rust or other types of crops. The models in this study are trained on a wheat-rust-specific dataset; they are therefore generalisable within the scope of wheat rust detection and are expected to perform well at detecting wheat rust in varied images, but may not perform well at detecting other types of plant disease.
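Because generalisability is claimed within the scope of wheat rust images captured under varied conditions, one way to check it is to break held-out accuracy down by capture condition rather than report a single figure. The sketch below assumes each test image carries a hypothetical condition tag (for example lighting or occlusion); such tags, the label strings and the helper names are illustrative assumptions, not fields of WheatRustDL2024.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# (hypothetical capture-condition tag, true label, predicted label)
Record = Tuple[str, str, str]


def accuracy_by_condition(records: Iterable[Record]) -> Dict[str, float]:
    """Accuracy computed separately for each capture condition."""
    correct: Dict[str, int] = defaultdict(int)
    total: Dict[str, int] = defaultdict(int)
    for condition, truth, pred in records:
        total[condition] += 1
        correct[condition] += int(truth == pred)
    return {c: correct[c] / total[c] for c in total}


def worst_condition(acc: Dict[str, float]) -> Tuple[str, float]:
    """Condition with the lowest accuracy, i.e. the weakest point of generalisation."""
    name = min(acc, key=acc.get)
    return name, acc[name]


if __name__ == "__main__":
    predictions = [
        ("low_light", "yellow_rust", "yellow_rust"),
        ("low_light", "brown_rust", "yellow_rust"),
        ("occluded", "healthy", "healthy"),
        ("occluded", "brown_rust", "brown_rust"),
    ]
    acc = accuracy_by_condition(predictions)
    print(acc)  # {'low_light': 0.5, 'occluded': 1.0}
    print(worst_condition(acc))  # ('low_light', 0.5)
```

Reporting the worst condition alongside the overall figure makes it visible when a high average accuracy hides a capture setting the model has not generalised to.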

Additionally, robustness refers to the model's ability to maintain its performance even when the input data is noisy, incomplete or corrupted. In this study, our model is designed to be robust to variations in image quality, such as different resolutions and compression levels. This matters because, in real-world agricultural settings, images of wheat leaves may be captured under varying conditions, for example with cameras and sensors of different quality. By training the model on a dataset that includes these variations, we aim for it to detect wheat rust accurately even when the input data is slightly corrupted. However, robustness here does not imply that the model is robust to every possible type of noise or corruption; it refers to the variations commonly encountered in agricultural settings because of differences in equipment quality. Specifically, the model is robust to the image-quality variations typical of images captured in the field, but it may not be robust to more extreme types of noise or corruption that are uncommon in agricultural settings.
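A simple way to probe this kind of robustness is to compare accuracy on clean images with accuracy on artificially degraded copies. The numpy sketch below simulates a lower-resolution sensor (block averaging followed by upsampling) and additive Gaussian sensor noise; compression artefacts are not simulated here (a JPEG round trip through an imaging library could be added). The functions, degradation levels and the toy brightness-threshold classifier are hypothetical and are not the evaluation protocol used in this study.

```python
import numpy as np


def reduce_resolution(img: np.ndarray, factor: int) -> np.ndarray:
    """Simulate a lower-resolution sensor: average factor x factor blocks,
    then repeat each block value to restore the (cropped) original size."""
    h, w = img.shape[:2]
    h2, w2 = h - h % factor, w - w % factor  # crop so the blocks tile exactly
    x = img[:h2, :w2].astype(np.float64)
    blocks = x.reshape(h2 // factor, factor, w2 // factor, factor, *x.shape[2:])
    small = blocks.mean(axis=(1, 3))
    return np.repeat(np.repeat(small, factor, axis=0), factor, axis=1)


def add_sensor_noise(img: np.ndarray, sigma: float,
                     rng: np.random.Generator) -> np.ndarray:
    """Add zero-mean Gaussian noise (a crude stand-in for a noisier sensor)."""
    noisy = img.astype(np.float64) + rng.normal(0.0, sigma, size=img.shape)
    return np.clip(noisy, 0.0, 255.0)


def robustness_gap(predict, images, labels, degrade) -> float:
    """Accuracy on clean images minus accuracy on degraded copies."""
    clean = np.mean([predict(x) == y for x, y in zip(images, labels)])
    degraded = np.mean([predict(degrade(x)) == y for x, y in zip(images, labels)])
    return float(clean - degraded)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Hypothetical stand-ins: random RGB "leaf images" and a brightness-threshold
    # "classifier"; a real check would use the trained classification model.
    images = [rng.integers(0, 256, size=(64, 64, 3)) for _ in range(8)]
    predict = lambda x: int(x.mean() > 127)
    labels = [predict(x) for x in images]
    for name, degrade in [
        ("4x lower resolution", lambda x: reduce_resolution(x, 4)),
        ("sensor noise sigma=25", lambda x: add_sensor_noise(x, 25.0, rng)),
    ]:
        print(name, robustness_gap(predict, images, labels, degrade))
```

A small gap at the degradation levels typical of field equipment, and a growing gap at more extreme levels, is the pattern the scoping above would predict.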

This study explores a newer field of research in wheat rust detection. Alongside the Federated Learning interface, we explore VQA models to create an interactive interface for further research. The initial VQA model was trained using 15 question-answer pairs for each of 40 images across four classes, including yellow rust, brown rust and healthy leaves, giving a custom dataset of 600 data points. VQA models can be broadly classified by the type of answer they produce: multiple-choice, short-answer and long-answer. A multiple-choice model has less scope in this particular field, although it would make the model more objective and correspondingly more accurate; given a suitable problem statement in wheat rust detection, such a model could be applied to an appropriate dataset. Alternatively, many existing models work with short answers.
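The 600 data points follow from pairing every image with every question (40 × 15). The sketch below shows one way such (image, question, answer) records could be generated when the answer to each question depends on the image's class; the record type, the placeholder class names and the answer bank are hypothetical and do not reproduce the actual WheatRustVQA questions or file format.

```python
from dataclasses import dataclass
from itertools import product
from typing import Dict, List


@dataclass(frozen=True)
class VQARecord:
    image_id: str
    question: str
    answer: str


def build_vqa_records(image_labels: Dict[str, str],
                      answer_bank: Dict[str, Dict[str, str]]) -> List[VQARecord]:
    """Pair every image with every question; each answer depends on the image's class.

    image_labels: image_id -> class name
    answer_bank:  question -> {class name -> answer}   (hypothetical structure)
    """
    return [
        VQARecord(img, q, answer_bank[q][image_labels[img]])
        for img, q in product(image_labels, answer_bank)
    ]


if __name__ == "__main__":
    classes = [f"class_{k}" for k in range(4)]  # placeholder class names
    images = {f"img_{i:02d}": classes[i % 4] for i in range(40)}
    questions = {f"question_{j:02d}": {c: f"answer for {c}" for c in classes}
                 for j in range(15)}
    records = build_vqa_records(images, questions)
    print(len(records))  # 600
```

Keeping answers keyed by class makes the short-answer labels consistent across images of the same class; a multiple-choice variant would additionally store the candidate options with each record.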

Conclusions

This study introduces a novel approach to wheat rust diagnostics by integrating deep learning-based visual question answering (VQA) techniques. We established a baseline for this critical agricultural application by fine-tuning the BLIP model on our newly created WheatRustVQA dataset. Our research demonstrates the potential of VQA systems in plant pathology and provides valuable insights through a comparative analysis with ChatGPT. This work has significant implications for enhancing disease diagnostic capabilities in agriculture through image-based question answering. It also paves the way for more accessible expert-level diagnostics in regions with limited access to plant pathologists.

We report the performance of our system using standard VQA metrics, which serve as a baseline for wheat rust VQA. Our results indicate that integrating BLIP methods with VQA models significantly enhances diagnostic accuracy, reducing the need for expert intervention. The integration of deep learning with VQA for agricultural disease diagnostics represents a significant advancement in precision agriculture. By providing rapid and accurate diagnostics, our approach can help mitigate the impact of diseases such as wheat rust, improving crop yields and contributing to global food security. This paper lays the groundwork for future research and applications in plant pathology, highlighting the potential of deep learning and VQA to transform agricultural practices.
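The abstract reports an average BLEU score for the generated answers. For readers unfamiliar with the metric, the following pure-Python sketch computes single-reference sentence BLEU: clipped n-gram precisions, their geometric mean, and a brevity penalty. It uses uniform weights, lowercased whitespace tokenisation and no smoothing; these are assumptions, and the sketch is not necessarily the configuration (n-gram order, smoothing, tokenisation) used to obtain the reported score.

```python
import math
from collections import Counter
from typing import Sequence


def ngram_counts(tokens: Sequence[str], n: int) -> Counter:
    """Count the n-grams (as tuples) in a token sequence."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def sentence_bleu(reference: str, candidate: str, max_n: int = 4) -> float:
    """Single-reference sentence BLEU with uniform weights and no smoothing.

    Computes clipped n-gram precisions for n = 1..max_n, takes their geometric
    mean, and multiplies by the brevity penalty. Any zero precision gives 0.
    """
    ref, cand = reference.lower().split(), candidate.lower().split()
    if not cand:
        return 0.0
    log_precision_sum = 0.0
    for n in range(1, max_n + 1):
        cand_ngrams, ref_ngrams = ngram_counts(cand, n), ngram_counts(ref, n)
        total = sum(cand_ngrams.values())
        # Clipping: a candidate n-gram is credited at most as often as it occurs in the reference.
        overlap = sum(min(c, ref_ngrams[g]) for g, c in cand_ngrams.items())
        if total == 0 or overlap == 0:
            return 0.0
        log_precision_sum += math.log(overlap / total)
    # Brevity penalty: penalise candidates that are shorter than the reference.
    bp = 1.0 if len(cand) > len(ref) else math.exp(1.0 - len(ref) / len(cand))
    return bp * math.exp(log_precision_sum / max_n)


if __name__ == "__main__":
    print(sentence_bleu("the leaf shows yellow rust", "the leaf shows yellow rust"))  # 1.0
    print(sentence_bleu("yellow rust", "yellow rust pustules", max_n=1))
```

Because VQA answers are often only a few words long, unsmoothed BLEU-4 is zero for any answer shorter than four tokens, even an exact match, which is why the n-gram order and smoothing should be stated alongside a BLEU score; NLTK's `nltk.translate.bleu_score.sentence_bleu` exposes weights and smoothing functions for this purpose.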

While our results are promising, limitations include the need for further validation in varied field conditions and potential challenges in generalizing to other crop diseases. Future work should focus on expanding the dataset, exploring multi-disease models, and integrating this technology into mobile applications for real-time field use. In conclusion, this research provides a basis for applying AI to agricultural disease diagnostics. As we continue to refine these models and methodologies, the integration of VQA in agriculture promises to play a crucial role in addressing plant disease challenges and contributing to global food security.

Data availability

• This study introduces a Federated Learning model and a Visual Question Answering model. Both are available on this study's GitHub repository (https://github.com/aknnvt/FL-VQA-in-Wheat-Rust).
• The custom datasets curated for this study, WheatRustDL2024 (https://bitspilaniac-my.sharepoint.com/:f:/g/personal/f20212378_pilani_bits-pilani_ac_in/EvwjsY_JT4FIu7ZTU8zyXOMB8Ywk4OXgO6LYwTk8dOiN_Q?e=45eTOD) and WheatRustVQA (https://docs.google.com/document/d/1EvVdrMi7W-JZkeeePEkNmVIEJ1dn7eln/edit?usp=sharing&ouid=114090611032812705334&rtpof=true&sd=true), are available for public use. The images in WheatRustVQA are available at https://drive.google.com/drive/folders/1izs5ZVmi9V__ixk4ODiJAyachAulP3RL?usp=drive_link. The raw version of the answers can be found in the GitHub repository.

References

Abebe, W. Wheat Leaf Rust Disease Management: a review. J. Plant. Pathol. Microbiol. 12, 1–8 (2021).

Mohanty, S. P., Hughes, D. P. & Salathé, M. Using deep learning for image-based plant disease detection. Front. Plant Sci. https://doi.org/10.3389/fpls.2016.01419 (2016).

Bradhurst, R., Spring, D., Stanaway, M., Milner, J. & Kompas, T. A Generalised and Scalable Framework for Modelling Incursions, Surveillance and Control of Plant and Environmental Pests. Environmental Modelling & Software 139, 105004 (2021).

Too, E. C., Yujian, L., Njuki, S. & Yingchun, L. A comparative study of fine-tuning deep learning models for plant disease identification. Comput. Electron. Agric. 161, 272–279 (2019).

Ullah, A., Muhammad, K., Haq, I. U. & Baik, S. W. Action recognition using optimized deep autoencoder and CNN for surveillance data streams of non-stationary environments. Future Generation Computer Systems. https://doi.org/10.1016/j.future.2019.01.029 (2019).

Singhi, V., Kumar, D. & Kukreja, V. Integrated YOLOv4 Deep Learning Pretrained Model for Accurate Estimation of Wheat Rust Disease Severity. 2023 2nd International Conference on Applied Artificial Intelligence and Computing (ICAAIC), 489–494 (2023).

Sharma, R., Kukreja, V. & Gupta, R. Enhancing Wheat Crop Resilience: An Efficient Deep Learning Framework for the Detection and Classification of Rust Disease. 2023 4th International Conference for Emerging Technology (INCET). https://doi.org/10.1109/incet57972.2023.10170566 (2023).

Sladojevic, S., Arsenovic, M., Anderla, A., Culibrk, D. & Stefanovic, D. Deep neural networks based Recognition of Plant diseases by Leaf Image classification. Comput. Intell. Neurosci. https://doi.org/10.1155/2016/3289801 (2016).

Nigam, S. et al. Deep transfer learning model for disease identification in wheat crop. Ecological Informatics. https://doi.org/10.1016/j.ecoinf.2023.102068 (2023).

Lu, J., Hu, J., Zhao, G., Mei, F. & Zhang, C. An in-field automatic wheat disease diagnosis system. Computers and Electronics in Agriculture. https://doi.org/10.1016/j.compag.2017.09.012 (2017).

Singh, R., Krishnan, P., Singh, V. K. & Banerjee, K. Application of thermal and visible imaging to estimate stripe rust disease severity in wheat using supervised image classification methods. Ecological Informatics. https://doi.org/10.1016/j.ecoinf.2022.101774 (2022).

Mi, Z., Zhang, X., Su, J., Han, D. & Su, B. Wheat stripe rust grading by deep learning with attention mechanism and images from Mobile devices. Front. Plant Sci. https://doi.org/10.3389/fpls.2020.558126 (2020).

Shoaib, M. et al. An advanced deep learning models-based plant disease detection: A review of recent research. Frontiers in Plant Science. https://doi.org/10.3389/fpls.2023.1158933 (2023).

Li, Y. et al. Semantic Segmentation of Wheat Stripe Rust Images Using Deep Learning. Agronomy. https://doi.org/10.3390/agronomy12122933 (2022).

Maqsood, M. H. et al. Super Resolution Generative Adversarial Network (SRGANs) for Wheat Stripe Rust Classification. Sensors. https://doi.org/10.3390/s21237903 (2021).

Li, J., Li, D., Xiong, C. & Hoi, S. C. BLIP: Bootstrapping Language-Image Pre-training for Unified Vision-Language Understanding and Generation. International Conference on Machine Learning (2022).

Antol, S. et al. VQA: Visual Question Answering. 2015 IEEE International Conference on Computer Vision (ICCV). https://doi.org/10.1109/iccv.2015.279 (2015).

Kafle, K. & Kanan, C. Visual question answering: Datasets, algorithms, and future challenges. Computer Vision and Image Understanding. https://doi.org/10.1016/j.cviu.2017.06.005 (2017).

Kim, J., Jun, J. & Zhang, B. Bilinear Attention Networks (Neural Information Processing Systems, 2018).

Goyal, Y., Khot, T., Summers-Stay, D., Batra, D. & Parikh, D. Making the V in VQA Matter: elevating the role of image understanding in visual question answering. Int. J. Comput. Vision. 127, 398–414 (2016).

He, K., Zhang, X., Ren, S. & Sun, J. Deep Residual Learning for Image Recognition. 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 770–778 (2016).

Simonyan, K. & Zisserman, A. Very deep Convolutional Networks for large-scale image recognition. CoRR. abs/1409.1556 (2014).

Tan, M. & Le, Q. V. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. ArXiv abs/1905.11946 (2019).

Szegedy, C. et al. Going deeper with convolutions. 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 1–9 (2015).

Beutel, D. J. et al. Flower: A Friendly Federated Learning Research Framework (2020).

Genaev, M. A. et al. Image-Based Wheat Fungi Diseases Identification by Deep Learning. Plants. https://doi.org/10.3390/plants10081500 (2021).

Nigam, S. et al. Automating yellow rust disease identification in wheat using artificial intelligence. Indian J. Agricultural Sci. 91 (9), 1391–1395. https://doi.org/10.56093/ijas.v91i9.116097 (2021).

Shenglong, C. et al. Recognition of wheat rusts in a field environment based on improved DenseNet. Biosyst. Eng. https://doi.org/10.1016/j.biosystemseng.2023.12.016 (2024).

Kemal, A., Kavalcı, E., Fatih, Y., Kübra, Ö. & Halit, Ç. Bakır. A comparative analysis of Deep Learning parameters for enhanced detection of yellow rust in wheat. Uluslararası Mühendislik Araştırma ve Geliştirme Dergisi. https://doi.org/10.29137/umagd.1390763 (2024).

Weizhen, L. et al. StripeRust-Pocket: Mobile-based Deep Learning Application for Efficient Disease Severity Assessment of Wheat Stripe Rust. Plant Phenomics. https://doi.org/10.34133/plantphenomics.0201 (2024).

Aqeel, Ahmed, K. et al. Amin. Deep Learning-Based Classification of Wheat Leaf Diseases for Edge Devices. 1–6. https://doi.org/10.1109/etecte59617.2023.10396676 (2023).

Sapna, N. et al. EfficientNet architecture and attention mechanism-based wheat disease identification model. Procedia Comput. Sci. 235, 383–393. https://doi.org/10.1016/j.procs.2024.04.038 (2024).

Brisimi, T. S. et al. Federated learning of predictive models from federated Electronic Health Records. International Journal of Medical Informatics. https://doi.org/10.1016/j.ijmedinf.2018.01.007 (2018).

Hard, A. S. et al. Federated Learning for Mobile Keyboard Prediction. ArXiv abs/1811.03604 (2018).

Kabala, D. M., Hafiane, A., Bobelin, L. & Canals, R. Image-based crop disease detection with federated learning. Scientific Reports. https://doi.org/10.1038/s41598-023-46218-5 (2023).

Hari, P., Singh, M. P. & Singh, A. K. An improved federated deep learning for plant leaf disease detection. Multimedia Tools and Applications. https://doi.org/10.1007/s11042-024-18867-9 (2024).

Khan, F. et al. Federated learning-based UAVs for the diagnosis of Plant diseases. 1–6. https://doi.org/10.1109/ICEET56468.2022.10007133 (2022).

Shiva, M. & Satvik, V. K. K. Vats. Advancing Agricultural practices: Federated Learning-based CNN for Mango Leaf Disease Detection. 1–6. https://doi.org/10.1109/conit59222.2023.10205850 (2023).

McMahan, H. B., Moore, E., Ramage, D., Hampson, S. & Arcas, B. A. Communication-Efficient Learning of Deep Networks from Decentralized Data. International Conference on Artificial Intelligence and Statistics (2016).

Li, T. et al. Federated Optimization in Heterogeneous Networks (2018).

Smith, V., Chiang, C. K., Sanjabi, M. & Talwalkar, A. Federated Multi-Task Learning. arXiv. https://arxiv.org/abs/1705.10467 (2017).

Ryo, M. et al. Deep learning for sustainable agriculture needs ecology and human involvement. J. Sustainable Agric. Environ. https://doi.org/10.1002/sae2.12036 (2022).

Guofeng, Y. & Yong, Y. Question classification of common crop disease question answering system based on BERT. Journal of Computer Applications. https://doi.org/10.11772/j.issn.1001-9081.2019111951 (2020).

Jain, N. et al. AgriBot: Agriculture-Specific Question Answer System (2019).

Arora, B. et al. Agribot: A Natural Language Generative Neural Networks Engine for Agricultural Applications. 28–33. https://doi.org/10.1109/IC3A48958.2020.233263 (2020).

Shang, L. et al. Surrogate modelling of a detailed farm-level model using deep learning. J. Agric. Econ. 75 (1), 235–260. https://doi.org/10.1111/1477-9552.12543 (2023).

Lan, Y. et al. Visual question answering model for fruit tree disease decision-making based on multimodal deep learning. Frontiers in Plant Science. https://doi.org/10.3389/fpls.2022.1064399 (2023).

Lu, Y. et al. Application of Multimodal Transformer Model in Intelligent Agricultural Disease Detection and Question-Answering Systems. Plants. https://doi.org/10.3390/plants13070972 (2024).

Acknowledgements

The authors would like to thank the Birla Institute of Technology and Science, Pilani, India, for funding this project (Grant Reference: C1/23/118) through the Cross Disciplinary Research Fund (CDRF) scheme. We also thank the Indian Agricultural Research Institute (IARI), New Delhi, India, for collaborative work and for providing some of the datasets used in the study.

Funding

Open access funding provided by Birla Institute of Technology and Science.

Author information


Contributions

AN: Methodology, Software, Data curation, Writing - Original draft preparation, Investigation, Visualization.
RS: Methodology, Software, Data curation, Writing - Original draft preparation, Investigation, Visualization.
BP: Methodology, Software, Data curation, Writing - Original draft preparation, Investigation, Visualization.
OG: Methodology, Software, Data curation, Writing - Original draft preparation, Investigation, Visualization.
SR: Supervision, Conceptualization, Data curation, Methodology, Software, Writing - Original draft preparation, Investigation, Visualization, Writing - Reviewing and Editing.
MM: Supervision, Conceptualization, Data curation, Methodology, Software, Investigation, Visualization, Writing - Reviewing and Editing.
VKS: Data curation, Writing - Reviewing and Editing, Conceptualization, Visualization, Investigation.
PN: Supervision, Conceptualization, Data curation, Writing - Reviewing and Editing, Visualization, Investigation.
VC: Supervision, Conceptualization, Data curation, Writing - Reviewing and Editing, Visualization, Investigation.


Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Nanavaty, A., Sharma, R., Pandita, B. et al. Integrating deep learning for visual question answering in Agricultural Disease Diagnostics: Case Study of Wheat Rust. Sci Rep 14, 28203 (2024). https://doi.org/10.1038/s41598-024-79793-2


DOI: https://doi.org/10.1038/s41598-024-79793-2