Abstract

To aid in the diagnosis, monitoring, and surveillance of bladder carcinoma, this study aimed to develop and test an algorithm that creates a referenceable bladder map rendered from standard cystoscopy videos without the need for specialized equipment. A vision-based algorithm was developed to generate 2D bladder maps by sequentially stitching individual video frames based on matching surface features, and to subsequently localize and track frames during re-evaluation. The algorithm was developed and calibrated in a 2D model and a 3D anthropomorphic bladder phantom. Its performance was evaluated in vivo in swine and with a retrospective clinical cystoscopy video. Results showed that the algorithm was capable of capturing and stitching intravesical images with different sweeping patterns. Between 93% and 99% of frames had sufficient features for bladder map generation. Upon re-evaluation, the algorithm accurately localized a cystoscope frame within 4.5 s. In swine, a virtual mucosal surface map was generated that matched the explant anatomy. A surface map could also be generated from archived patient cystoscopy images. This tool could aid in recording and referencing pathologic findings and biopsy or treatment locations for subsequent procedures, and may have utility in patients with metachronous bladder cancer and in low-resource settings.

Introduction

Cystoscopy plays a central role in the diagnosis, treatment, and follow-up of patients with bladder cancer and other pathologies. Evaluating, reporting, and re-evaluating specific bladder locations identified under cystoscopy require a high degree of spatial awareness and training. Cystoscopic views can be limited by variable bladder volume and anatomy, which may lead to incomplete surveillance and further complicate sequential localization of specific regions of interest upon re-evaluation, especially for inexperienced operators.

During cystoscopy, specific regions of interest are often documented with generic two-dimensional (2D) bladder diagrams1, which lack spatial context or detailed and reproducible ___location descriptors. The inability to provide a comprehensive record and standardized reference images poses challenges in communication between operators, making re-identification of prior resection margins or areas of interest difficult. Full video files provide optimal spatial context but are not included in medical records and are not easily transmittable. While structured reporting software can improve the level of care2, a standardized method to fully convey 3D cystoscopy spatial information outside of verbal or written reports has not yet been adopted.

To overcome this, image stitching algorithms and endoscopic tracking techniques have been developed3,4,5,6,7,8,9,10. Such tracking methodologies may benefit less experienced users and improve inter-operator communication, enabling standardized and reproducible navigation guidance. In low-resource settings, the ability to reduce inter-operator variability and allow remote experts to view or compare results is particularly advantageous. However, previously proposed methods for generating 3D anatomic atlases3,4,5,6,7 or mosaic and panoramic images, which are more easily shared8,9, generally require specialized tracking systems, equipment, or high-resolution cameras10.

Therefore, we developed an image stitching algorithm that aggregates and orients frames from videos to create a representative 2D map of the visualized field while maintaining lesion orientation and frame of reference (Figs. 1, 2 and 3). Importantly, the image stitching process requires no additional hardware or specialized equipment and can use images recorded during the cystoscopy session without changing standard workflows. The algorithm was developed in 2D and 3D phantoms, tested in vivo in swine, and finally verified with human data. This technology has the potential to facilitate referencing and documentation in repeat cystoscopic procedures via enhanced tracking and localization, which are critical in the longitudinal management of metachronous bladder lesions.

Fig. 1. Bladder image stitching algorithm based on feature detection and matching of sequential images. Features are detected in the initial frame (\(\mathrm{Frame}_{M_i}\)) and the subsequent frame (\(\mathrm{Frame}_{M_{i+1}}\)), matched, and the transform (\(H_{M_i}^{M_{i+1}}\)) is calculated to form a stitched, composite image.

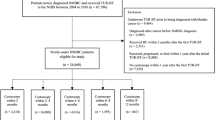

Fig. 2. Flowchart of the bladder stitching algorithm: (a) feature detection and image transform calculation, (b) bladder map generation, (c) drift correction algorithm, and (d) “revisit” algorithm, which enables video frames from repeat cystoscopy to be oriented to an original bladder map.

Fig. 3. 2D bladder image and 3D bladder phantom used in algorithm development and testing. (a) 2D experimental setup, (b) image calibration to correct for distortion and “fisheye” appearance with uncorrected (top) and corrected images (bottom), (c) test bladder image used in the 2D study, (d) illustrated sweep patterns used for original bladder map generation and revisit sweep, (e) 3D phantom, (f) example cystoscope image of 3D phantom, (g) illustration of two different sweep patterns performed to generate bladder maps, sweep 1 (yellow line) and sweep 2 (dashed black line).

2D image accuracy was tested using an 18 cm × 18 cm bladder image placed approximately 10 cm away from the cystoscope lens. Two perpendicular sweep patterns were made to cover the entire image by moving the image as illustrated in Fig. 3a.

Results

On both the 2D and 3D phantom models, images were accurately stitched and used to aid localization of specific features during revisits (follow-up cystoscopy), which used a distinct sweeping pattern. In the single study of the 2D phantom, a stitched map was created using 73 video frames (Fig. 4a), and drift correction was applied (Fig. 4b). The revisit sweep, which followed a different pattern from the original sweep (Fig. 4c), included 80 frames. It took 7 s to locate the position of the first image in the 2D map and an average of 0.76 s to localize each successive frame. Image surface features were identified and mapped in 79/80 (99%) of frames. As shown by the localization and correct orientation of the cystoscope, the algorithm accurately identified the ___location of an image in the 2D phantom, supporting the ability to reference prior locations and navigate to lesions or tumors upon repeat assessment (Fig. 4d).

Fig. 4. Bladder map generation from a 2D bladder image. Bladder map (a) before and (b) after drift correction. (c) Cystoscope sweep pattern used in bladder map generation. (d) Revisit sweep overlaid on the original, drift-corrected map. The red line indicates the pattern of the revisit sweep, the green dots indicate the predicted ___location of the cystoscope within the map, and the blue arrows indicate the predicted rotation of the cystoscope with respect to the initial frame of the bladder map (top-most right frame).

Using a single sweep of the 3D phantom, 37 frames were acquired to create a map of half the bladder. A subsequent sweep, used to evaluate the ability to revisit locations in the initial map, generated a full map using 30 frames (Fig. 5). It took 4.5 s to localize the position of the first image frame and an average of 0.35 s for each successive frame. Of the 30 frames, 28 (93%) had sufficient image features to perform feature matching.

Fig. 5. Assessment of the bladder mapping algorithm on the 3D bladder phantom. The left column is unprocessed, the middle column is filtered to eliminate background shadows, and the right column is filtered to show only the vascular patterns. Blue and yellow arrows mark common bifurcations between the original and the stitched, processed images, highlighting boundaries and vessels. (a) 3D bladder phantom with lines separating the half-bladder into 9 segments. (b) Images stitched together after a longitudinal sweeping image capture (sweep 1 illustrated in Fig. 3g). (c) Images stitched together after rotational image capture (sweep 2 illustrated in Fig. 3g).

In the swine study, 294 frames were acquired to generate a bladder map. Sufficient imaging features were identified and mapped in 271/294 (92%) frames. The bladder map was generated in 8 s. In the clinical data, a high-quality rigid cystoscope (image size 730 × 730 pixels) was used, and the bladder map was generated in 168 s (Fig. 6, Supplemental Video 1). The resulting video was 33 MB, and the final stitched frame measured 9837 × 8585 pixels. Of the 168 frames, 161 (96%) had sufficient imaging features to perform feature matching. While the proportion of frames with a sufficient number of surface features was similar across all studies and cystoscopes, localizing the first image took longer with the clinical data acquired using the high-quality rigid cystoscope, which is related to the higher resolution and larger number of image frames.
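The percentages above follow directly from the reported frame counts. As a quick arithmetic check, the sketch below recomputes them and compares each against the > 90% level that the Discussion suggests is sufficient for stitching. The dictionary labels are ours, and the 90% value is a heuristic drawn from this small sample, not a validated cutoff.

```python
# Reported (frames with sufficient features, total frames) per experiment.
REPORTED = {
    "2D phantom, revisit sweep": (79, 80),
    "3D phantom, revisit sweep": (28, 30),
    "Swine": (271, 294),
    "Clinical": (161, 168),
}

# Heuristic level suggested in the Discussion; not a validated threshold.
SUFFICIENT_PERCENT = 90.0


def feature_rate(ok: int, total: int) -> float:
    """Percentage of frames with enough features for matching."""
    return 100.0 * ok / total


if __name__ == "__main__":
    for name, (ok, total) in REPORTED.items():
        rate = feature_rate(ok, total)
        flag = "above" if rate > SUFFICIENT_PERCENT else "at or below"
        print(f"{name}: {ok}/{total} = {rate:.1f}% ({flag} {SUFFICIENT_PERCENT:.0f}%)")
```

Rounded to whole percentages, these reproduce the 99%, 93%, 92%, and 96% figures quoted in the text.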

Discussion

Bladder cancer is among the top ten leading causes of cancer-related deaths worldwide11 and carries a significant lifetime treatment cost due to its metachronous nature, high rate of recurrence, and need for longitudinal surveillance12,13. Bladder cancer incidence is speculated to be higher in nations with a high Human Development Index; however, high mortality is noted across North Africa in men and sub-Saharan Africa in women14. Low- and middle-income countries and rural areas of the United States may have variable and often poor access to expert care such as skilled cystoscopy15. Urothelial cancer care reflects a geographical health disparity because it requires access to multidisciplinary specialists as well as technology and cystoscopy expertise. These geographical differences may be attributable in part to differences in access to care and availability of diagnostic cystoscopy16,17.

We developed a tool that could help to standardize cystoscopy, enhance access and reproducibility, and reduce underdiagnosis, with a focus on the ability to reference and revisit specific anatomic sites of concern during follow-up. Image stitching algorithms have been widely explored with endoscopic imaging sequences10, with the bladder among the most investigated applications due to its relatively predictable shape and feature-rich surface anatomy. Despite this effort, no image stitching algorithm has been deployed clinically. This may be due to the requirement for specialized tracking equipment or a limited range of compatible devices. Some solutions may only work with high-resolution cameras5, rigid cystoscopes4,5, tracking equipment3,5,6, or specialized imaging such as photodynamic diagnosis and tumor fluorescence18. The algorithm presented here was developed with disposable, flexible cystoscopes, whose use does not alter standard workflow, and has a low resource cost relative to these other technologies.

An additional goal of the algorithm presented in this study was to enable standardization, documentation, and sharing of results and data. Many image stitching algorithms aim to generate 3D bladder models, which give a better understanding of the anatomical view of the bladder3,4,6,19,20; however, this limits the ability to share and quickly interpret cystoscopic findings. Kriegmair et al.8 and Groenhuis et al.9 demonstrated the ability to generate 3D atlases or 2D maps of the bladder, with Groenhuis et al. further demonstrating automatic detection of feature changes in successive cystoscopic videos. As with those examples, the tool presented in this study permits sharing of single localized images, rather than videos, that can provide a comprehensive view of the bladder and allow expert or outside review and referencing. At follow-up, accurate cystoscopic ___location information, with documentation of pathological findings relative to landmarks in the bladder, could directly aid re-examination of specific locations based on previously generated bladder maps. Additionally, while the bladder map orientation in these studies was based on the first frame in the sequence, in practice this could be standardized by beginning with an intravesical landmark.

One challenge of creating a whole-bladder map was ensuring that the operator swept the cystoscope comprehensively over the entire bladder. The preclinical and clinical images presented in this study have apparent gaps, which may be due to incomplete sweeps or, potentially, shortcomings of the algorithm. Gong et al. developed a “mosaicking” method that allowed generation of a stitched image from low-quality, feature-poor medical images, with applications in the retina and elsewhere21. Likewise, Phan et al. used a structure-from-motion (SfM) approach and optical flow techniques to accommodate sparse or distorted features20. Future iterations of this algorithm could explore such techniques to address this unmet technical need.

Soper et al. introduced a “bladder surveillance system” that coupled an image stitching algorithm, an ultrathin and flexible endoscope, and robotics to improve standardization and ensure full video coverage of the bladder6. This type of technology could allow for the collection of cystoscopic images without requiring the physical presence of a urologist. Still, the introduction of such specialized equipment may be cost-prohibitive in resource-scarce settings or low-middle income countries.

Our study has multiple limitations, including retrospective application to only a single clinical case. The robustness of the algorithm will need to be further developed, validated, and tested prospectively in clinical cases. For future clinical implementation, recommendations and adjustments may be needed to standardize the use of the technology. For instance, urologists may have to fill the bladder to a specified volume, and elastic registration may be required to correlate different maps of the same patient and to accommodate patient movement and non-rigid behavior of the bladder. Further clinical investigations could help to determine the feature density required for the algorithm to perform image stitching and tracking reliably. Good image quality and feature identification are prerequisites for using the technology. Our results suggest that when > 90% of image frames have quality features, the algorithm will successfully stitch a bladder map, yet the limits require further exploration. Additionally, while comparison of a resected bladder to the stitched bladder atlas would be insightful, it is challenging: vasculature, the primary feature used for image stitching, is poorly identifiable post-mortem, and the shape of the excised bladder differs from that of the distended bladder in vivo. Direct comparison of rigid and flexible cystoscopes would also help to define limitations of the algorithm and systems approach.

In conclusion, vision-based image stitching, navigation, and referencing can use mucosal landmarks to successfully orient and guide the urologist during repeat cystoscopy, both in vitro and in vivo. An image stitching algorithm using a conventional cystoscope can generate a composite map of the whole bladder and could be prospectively applied to cystoscopic procedures, potentially facilitating performance metrics in serial procedures for recurrent or metachronous diseases such as bladder cancer. This could prove to be a cost-effective approach to navigation that could be deployed in resource-scarce settings without robotic, electromagnetic, or fiberoptic tracking hardware, and could support less experienced operators, facilitating a more standardized procedure.

Materials and methods

An algorithm was developed to generate 2D maps of stitched video frames for localization and tracking of cystoscopic imaging. The algorithm was evaluated on a 2D model, a 3D anthropomorphic bladder phantom, in vivo in swine, and retrospectively with human patient clinical imaging.

Bladder image stitching algorithm

The algorithm was developed to stitch sequential images from cystoscope videos by matching image surface features (Fig. 1). The sequential images were transformed to compensate for translation, rotation, and zoom of the cystoscope, and a 2D bladder map was constructed. In subsequent cystoscopic videos, image features were matched to the original bladder map, with the goal of defining the ___location and orientation of the cystoscope relative to a point of interest.
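Matching surface features between two frames reduces to pairing their descriptor vectors. The sketch below is a minimal, pure-Python brute-force matcher, assuming descriptors are plain float vectors (SURF descriptors are typically 64-dimensional). The mutual nearest-neighbor ("cross-check") filter is our assumption, analogous to the `crossCheck` option of OpenCV's `BFMatcher`; the text does not state which filtering, if any, was applied. Function names are illustrative.

```python
import math
from typing import List, Sequence, Tuple

Descriptor = Sequence[float]


def nearest(query: Descriptor, candidates: List[Descriptor]) -> Tuple[int, float]:
    """Index and Euclidean distance of the closest candidate descriptor."""
    best_idx, best_dist = -1, math.inf
    for idx, cand in enumerate(candidates):
        dist = math.dist(query, cand)
        if dist < best_dist:
            best_idx, best_dist = idx, dist
    return best_idx, best_dist


def brute_force_match(desc_a: List[Descriptor],
                      desc_b: List[Descriptor]) -> List[Tuple[int, int]]:
    """Compare every descriptor in frame A with every descriptor in frame B.

    A pair (i, j) is kept only if j is i's nearest neighbor in B and i is
    j's nearest neighbor in A (a mutual, or cross-checked, match).
    """
    if not desc_a or not desc_b:
        return []
    matches = []
    for i, d in enumerate(desc_a):
        j, _ = nearest(d, desc_b)
        back, _ = nearest(desc_b[j], desc_a)
        if back == i:
            matches.append((i, j))
    return matches
```

Brute force costs one distance per descriptor pair, which is acceptable for the few hundred keypoints typical of a single cystoscopy frame; the resulting index pairs give the point correspondences used to estimate the frame-to-frame transform.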

To detect features in each cystoscopic frame and calculate the frame transformation, Speeded-Up Robust Features (SURF) and Random Sample Consensus (RANSAC) were used. SURF was used to detect features, which could coincide with mucosal features such as vessels, in the current (\(\mathrm{Frame}_{M_i}\)) and the next sequential cystoscopic video frame (\(\mathrm{Frame}_{M_{i+1}}\)). If enough features (≥ 6) were found in each frame, a brute-force feature matcher and RANSAC were used to calculate the affine transformation (\(H_{M_i}^{M_{i+1}}\)). If an insufficient number of shared features were found between consecutive frames, the transformation of the prior frame was applied (Fig. 2a).
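The transform step can be sketched as follows, assuming matched keypoint coordinates are already available. This is a minimal, illustrative RANSAC for a 2×3 affine transform: each iteration solves the six affine parameters exactly from three random correspondences and keeps the hypothesis with the most inliers. The iteration count, the 2-pixel inlier tolerance, and the helper names are assumptions, and the ≥ 6 gate is applied here to matched pairs as a simplification of the rule described above. Library implementations (for example, OpenCV's `estimateAffine2D`) also refine the model on all inliers, which is omitted here.

```python
import math
import random
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float]
Affine = Tuple[Tuple[float, float, float], Tuple[float, float, float]]

MIN_MATCHES = 6  # mirrors the ">= 6 features" rule in the text (applied to matches here)


def _det3(m: List[List[float]]) -> float:
    """Determinant of a 3x3 matrix."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def affine_from_3(src: Sequence[Point], dst: Sequence[Point]) -> Optional[Affine]:
    """Solve x' = a*x + b*y + c and y' = d*x + e*y + f from 3 pairs (Cramer's rule)."""
    design = [[x, y, 1.0] for x, y in src]
    det = _det3(design)
    if abs(det) < 1e-9:  # collinear sample: parameters are not unique
        return None
    rows = []
    for k in range(2):  # k = 0 -> x' equation, k = 1 -> y' equation
        rhs = [p[k] for p in dst]
        coeffs = []
        for col in range(3):
            m = [r[:] for r in design]
            for r in range(3):
                m[r][col] = rhs[r]  # replace one column with the right-hand side
            coeffs.append(_det3(m) / det)
        rows.append(tuple(coeffs))
    return rows[0], rows[1]


def apply_affine(h: Affine, p: Point) -> Point:
    (a, b, c), (d, e, f) = h
    return a * p[0] + b * p[1] + c, d * p[0] + e * p[1] + f


def ransac_affine(src, dst, iterations=200, tol_px=2.0, seed=0):
    """Best affine (most inliers within tol_px) over random 3-point samples."""
    rng = random.Random(seed)
    best, best_inliers = None, 0
    indices = list(range(len(src)))
    for _ in range(iterations):
        sample = rng.sample(indices, 3)
        h = affine_from_3([src[i] for i in sample], [dst[i] for i in sample])
        if h is None:
            continue
        inliers = sum(1 for s, t in zip(src, dst)
                      if math.dist(apply_affine(h, s), t) <= tol_px)
        if inliers > best_inliers:
            best, best_inliers = h, inliers
    return best, best_inliers


def frame_transform(src, dst, prior: Affine) -> Affine:
    """Frame-to-frame affine, reusing the prior frame's transform as a fallback."""
    if len(src) < MIN_MATCHES:
        return prior
    h, _ = ransac_affine(src, dst)
    return h if h is not None else prior
```

Because each hypothesis is fitted to only three points, a few mismatched features (for example, specular highlights matched to vessels) are voted out rather than averaged into the transform.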

The bladder map orientation was initialized by the first frame (\(M_0\)). Then, the transformation calculated by feature detection was applied to the next consecutive frame (\(M_i\)). The bladder map was resized based on the applied transformation, and the process was repeated for all frames in the video (Fig. 2b).
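Building the map amounts to chaining the pairwise transforms so that every frame is expressed in the coordinate system of the first frame, then growing the map extent to cover each newly transformed frame. The sketch below assumes each pairwise 3×3 matrix maps pixel coordinates of frame i+1 into frame i (the direction convention is our assumption) and tracks only the map's bounding box; an actual canvas would also be shifted when the box extends into negative coordinates, and the frames would be warped and blended into it. Function names are illustrative.

```python
from typing import List, Tuple

Mat3 = List[List[float]]
IDENTITY: Mat3 = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]


def matmul(a: Mat3, b: Mat3) -> Mat3:
    """3x3 matrix product a @ b."""
    return [[sum(a[r][k] * b[k][c] for k in range(3)) for c in range(3)]
            for r in range(3)]


def to_map(h: Mat3, x: float, y: float) -> Tuple[float, float]:
    """Map a pixel (x, y) through an affine transform in homogeneous form."""
    return (h[0][0] * x + h[0][1] * y + h[0][2],
            h[1][0] * x + h[1][1] * y + h[1][2])


def build_map(pairwise: List[Mat3], width: int, height: int):
    """Chain pairwise transforms and grow the map bounding box frame by frame.

    Returns the cumulative frame-to-map transforms (frame 0 defines the map
    axes) and the bounding box (min_x, min_y, max_x, max_y) in map pixels.
    """
    corners = [(0, 0), (width, 0), (0, height), (width, height)]
    cumulative = [IDENTITY]
    min_x, min_y, max_x, max_y = 0.0, 0.0, float(width), float(height)
    for h in pairwise:
        # frame k -> map = (frame k-1 -> map) composed with (frame k -> frame k-1)
        cumulative.append(matmul(cumulative[-1], h))
        for x, y in corners:
            mx, my = to_map(cumulative[-1], x, y)
            min_x, min_y = min(min_x, mx), min(min_y, my)
            max_x, max_y = max(max_x, mx), max(max_y, my)
    return cumulative, (min_x, min_y, max_x, max_y)
```

For example, two consecutive 100-pixel horizontal shifts of a 640 × 426 frame give a cumulative 200-pixel offset for the third frame and a map extent of 840 × 426 pixels. When a frame has too few matches, repeating the prior pairwise matrix (the fallback described above) simply appends that matrix again.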

Drift correction was applied to the bladder maps to adjust and re-calibrate the frame transformations (Fig. 2c). In this step, matching features were identified between spatially adjacent frames rather than temporally sequential frames.
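Drift correction needs candidate pairs of frames that overlap on the map but were not captured back to back, for example where a serpentine sweep passes the same mucosa twice. The text does not say how spatially adjacent frames were selected; the sketch below assumes a hypothetical criterion: frame centers, projected into map coordinates by the cumulative transforms, lying within a chosen radius. Each candidate pair would then go through the same feature matching and RANSAC step, and the extra constraints would be used to re-estimate the frame transforms; that optimization is not shown.

```python
import math
from typing import List, Tuple

Mat3 = List[List[float]]


def center_in_map(h: Mat3, width: int, height: int) -> Tuple[float, float]:
    """Project the frame's center pixel into map coordinates."""
    cx, cy = width / 2.0, height / 2.0
    return (h[0][0] * cx + h[0][1] * cy + h[0][2],
            h[1][0] * cx + h[1][1] * cy + h[1][2])


def spatial_neighbor_pairs(cumulative: List[Mat3], width: int, height: int,
                           radius: float, min_gap: int = 2) -> List[Tuple[int, int]]:
    """Hypothetical candidate selection for drift correction.

    Returns pairs (i, j) with j - i >= min_gap (i.e. not temporally
    consecutive) whose projected centers lie within `radius` map pixels.
    """
    centers = [center_in_map(h, width, height) for h in cumulative]
    pairs = []
    for i in range(len(centers)):
        for j in range(i + min_gap, len(centers)):
            if math.dist(centers[i], centers[j]) <= radius:
                pairs.append((i, j))
    return pairs
```

The radius trades recall against cost: too small and genuine overlaps are missed, too large and many non-overlapping pairs are sent to feature matching only to be rejected.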

Revisit algorithm

A “revisit” referencing algorithm was developed to enable cystoscopic re-evaluation of the bladder by guiding orientation of the new cystoscopic view, based on the original bladder map (Fig. 2d). Features of the first frame of the “revisit” cystoscopic video (\(\mathrm{Frame}_{v_0}\)) were detected by SURF, and a search was conducted for matching features in the original cystoscopic bladder map. A transformation of the “revisit” cystoscopic frame to the bladder map was found, and the orientation of the frame was indicated. The process was repeated for each additional frame in the “revisit” cystoscopic video to enable referencing.
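Once a revisit frame has been registered to the map, the displayed cue (the predicted position and rotation of the cystoscope, as in Fig. 4d) can be read off the frame-to-map transform. The sketch below assumes a 2×3 transform that maps revisit-frame pixels into map coordinates and that the map axes are those of the initial frame; it projects the frame center and extracts the rotation angle and scale. For a similarity transform these values are exact; for a general affine transform they are an approximation. The helper names are illustrative.

```python
import math
from typing import Sequence, Tuple

# 2x3 (or the top two rows of a 3x3) revisit-frame -> map transform
Affine = Sequence[Sequence[float]]


def similarity(angle_deg: float, scale: float, tx: float, ty: float):
    """Build rotation + uniform scale + translation as a 2x3 matrix."""
    t = math.radians(angle_deg)
    c, s = scale * math.cos(t), scale * math.sin(t)
    return ((c, -s, tx), (s, c, ty))


def locate_frame(h: Affine, width: int, height: int) -> Tuple[float, float, float, float]:
    """Predicted center (x, y), rotation in degrees, and scale of a frame in the map."""
    cx, cy = width / 2.0, height / 2.0
    x = h[0][0] * cx + h[0][1] * cy + h[0][2]
    y = h[1][0] * cx + h[1][1] * cy + h[1][2]
    # Rotation of the frame's x-axis relative to the map (initial frame) x-axis.
    angle = math.degrees(math.atan2(h[1][0], h[0][0]))
    scale = math.hypot(h[0][0], h[1][0])
    return x, y, angle, scale
```

In a display, (x, y) would place the dot on the map and the angle would orient the arrow; a scale well above or below 1 indicates the scope is closer to or farther from the wall than during the original sweep.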

Bench development in 2D and 3D models

The accuracy of camera calibration, whole-bladder stitched image generation, and the ability to navigate from stitched images were tested using a 2D bladder image and a 3D-printed bladder phantom (Fig. 3). A single-use flexible cystoscope (Neoflex, Neoscope Inc., San Jose, CA) with two degrees of freedom was used to image the 2D and 3D models, both of which contained unique vascular surface patterns to provide contrast for image feature matching. Each frame measured 640 × 426 pixels at 96 dpi. The cystoscope camera was calibrated once before phantom image collection with a 5 × 7 checkerboard (10 mm squares) to compensate for image distortion caused by the lens curvature (Fig. 3b, top). Single images were acquired with the camera placed at approximately 20 different positions and angles relative to the checkerboard. The intrinsic and extrinsic parameters of the camera were calculated using a standard camera calibration method22 (Fig. 3b, bottom).
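Two details of the calibration step can be made concrete. First, checkerboard calibration routines usually locate inner corners, so a board of 5 × 7 squares yields 4 × 6 = 24 corners; the text does not state whether "5 × 7" counts squares or corners, and the sketch assumes squares. Second, the lens distortion that calibration compensates for is commonly modeled with radial coefficients k1 and k2 in normalized image coordinates, and removing it requires inverting that model, done here by fixed-point iteration with tangential terms ignored. Any numeric coefficients are hypothetical; in practice they come from a calibration routine such as OpenCV's `calibrateCamera`, which implements Zhang's method.

```python
from typing import List, Tuple


def checkerboard_object_points(squares_x: int = 5, squares_y: int = 7,
                               square_mm: float = 10.0) -> List[Tuple[float, float, float]]:
    """Planar (Z = 0) coordinates in mm of the board's inner corners."""
    return [(c * square_mm, r * square_mm, 0.0)
            for r in range(squares_y - 1)
            for c in range(squares_x - 1)]


def distort(x: float, y: float, k1: float, k2: float) -> Tuple[float, float]:
    """Apply the two-coefficient radial model to normalized coordinates."""
    r2 = x * x + y * y
    factor = 1.0 + k1 * r2 + k2 * r2 * r2
    return x * factor, y * factor


def undistort(xd: float, yd: float, k1: float, k2: float,
              iterations: int = 20) -> Tuple[float, float]:
    """Invert `distort` by fixed-point iteration (tangential terms ignored)."""
    x, y = xd, yd
    for _ in range(iterations):
        r2 = x * x + y * y
        factor = 1.0 + k1 * r2 + k2 * r2 * r2
        x, y = xd / factor, yd / factor
    return x, y


def undistort_pixel(u: float, v: float, fx: float, fy: float,
                    cx: float, cy: float, k1: float, k2: float) -> Tuple[float, float]:
    """Pixel -> normalized -> undistorted -> pixel, using intrinsics fx, fy, cx, cy."""
    xd, yd = (u - cx) / fx, (v - cy) / fy
    x, y = undistort(xd, yd, k1, k2)
    return x * fx + cx, y * fy + cy
```

With a negative k1 (barrel, "fisheye"-like distortion), undistortion pushes peripheral pixels outward while leaving the principal point fixed, which is the straightening effect visible in the corrected calibration images.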

3D image accuracy was tested using an idealized, rigid bladder phantom (500 mL, 11 × 8 × 7 cm) which was 3D printed (Ultimaker S3, Geldermalsen, the Netherlands). The interior was painted with vascular patterns, and the area was subdivided into 9 regions which were approximately equal in size (Fig. 3e). Two perpendicular sweep patterns were performed by inserting the cystoscope at the approximate urethral orifice and articulating the cystoscope tip to cover half of the bladder phantom as illustrated in Fig. 3g.

The number of images per sweep, the initial stitching time (the processing time to stitch the initial map from the first image to the last), the drift correction time, and the number of images where no transformation was found were recorded. All processing and computations were performed on an MSI PC running Windows 10, with a 2.2 GHz CPU and 16.0 GB RAM.

Preclinical evaluation in swine

To evaluate the technology in a controlled and systematic setting, one female Yorkshire domestic swine (Oak Hill Genetics, Ewing, Illinois) weighing 75 kg was studied under a protocol approved by the Institutional Animal Care and Use Committee and in compliance with the Public Health Service (PHS) Policy on Humane Care and Use of Laboratory Animals; the study is reported following the ARRIVE guidelines. The subject was sedated with intramuscular ketamine (25 mg/kg), midazolam (0.5 mg/kg), and glycopyrrolate (0.01 mg/kg) and anesthetized with propofol (1 mg/kg intravenously). The subject was intubated and maintained under general anesthesia with isoflurane (1–5%) throughout the procedure. A disposable cystoscope (AMBU Inc., Columbia, MD, image size 360 × 360 pixels, 96 dpi) was inserted into the bladder and used to fill it with saline. Intravesical videos were acquired using at least two different systematic patterns of cystoscope motion. At the conclusion of the study, euthanasia was performed under general anesthesia by intravenous administration of Beuthanasia-D (pentobarbital sodium 390 mg/mL and phenytoin sodium 50 mg/mL). A 2D bladder map was created using stitched images and was qualitatively compared to still images of the excised bladder.

Clinical evaluation

The feasibility of bladder map stitching was retrospectively assessed from a cystoscopy video of a human patient undergoing evaluation for microscopic hematuria or bladder cancer. Institutional Review Board approval was obtained at the National Institutes of Health with a waiver of written informed consent as specified in 45 CFR 46.116 and 46.117, and all studies were performed in accordance with the Declaration of Helsinki. A rigid cystoscope (Karl Storz, Tuttlingen, Germany) was used. After completion of the clinical cystoscopic evaluation, the bladder was fully distended and revisited with the cystoscope. This evaluation was acquired by steadily sweeping the cystoscope in a cranio-caudal pattern, ensuring complete surveillance of the visible bladder mucosa. This final bladder evaluation was used to create the bladder map. The original 96 dpi images were cropped from 1920 × 1080 pixels to 740 × 740 pixels to enable faster image processing.

Data availability

The data analyzed during the current study are available from the corresponding author on reasonable request.

Abbreviations

- 2D: Two-dimensional
- SURF: Speeded-up robust features
- RANSAC: Random sample consensus

References

Babjuk, M. et al. European Association of Urology Guidelines on non-muscle-invasive bladder Cancer (Ta, T1, and carcinoma in situ). Eur. Urol. 81, 75–94. https://doi.org/10.1016/j.eururo.2021.08.010 (2022).

Bostrom, P. J. et al. Point-of-care clinical documentation: Assessment of a bladder cancer informatics tool (eCancerCareBladder): A randomized controlled study of efficacy, efficiency and user friendliness compared with standard electronic medical records. J. Am. Med. Inf. Assoc. 18, 835–841. https://doi.org/10.1136/amiajnl-2011-000221 (2011).

Shevchenko, N. et al. A high resolution bladder wall map: Feasibility study. Annu. Int. Conf. IEEE Eng. Med. Biol. Soc. 2012, 5761–5764. https://doi.org/10.1109/embc.2012.6347303 (2012).

Lurie, K. L., Angst, R., Zlatev, D. V., Liao, J. C. & Ellerbee Bowden, A. K. 3D reconstruction of cystoscopy videos for comprehensive bladder records. Biomed. Opt. Express. 8, 2106–2123. https://doi.org/10.1364/BOE.8.002106 (2017).

Suarez-Ibarrola, R. et al. A novel endoimaging system for endoscopic 3D reconstruction in bladder cancer patients. Minim. Invasive Therapy Allied Technol. 31, 34–41. https://doi.org/10.1080/13645706.2020.1761833 (2022).

Soper, T. D., Porter, M. P. & Seibel, E. J. Surface mosaics of the bladder reconstructed from endoscopic video for automated surveillance. IEEE Trans. Biomed. Eng. 59, 1670–1680. https://doi.org/10.1109/TBME.2012.2191783 (2012).

Hackner, R. et al. Panoramic Imaging Assessment of different bladder phantoms - an evaluation study. Urology 156, e103–e110. https://doi.org/10.1016/j.urology.2021.05.036 (2021).

Kriegmair, M. C. et al. Digital Mapping of the urinary bladder: Potential for standardized Cystoscopy reports. Urology 104, 235–241. https://doi.org/10.1016/j.urology.2017.02.019 (2017).

Groenhuis, V., de Groot, A. G., Cornel, E. B., Stramigioli, S. & Siepel, F. J. 3-D and 2-D reconstruction of bladders for the assessment of inter-session detection of tissue changes: A proof of concept. Int. J. Comput. Assist. Radiol. Surg. https://doi.org/10.1007/s11548-023-02900-7 (2023).

Bergen, T. & Wittenberg, T. Stitching and Surface Reconstruction from endoscopic image sequences: A review of applications and methods. IEEE J. Biomedical Health Inf. 20, 304–321. https://doi.org/10.1109/JBHI.2014.2384134 (2016).

Siegel, R. L., Miller, K. D. & Jemal, A. Cancer statistics, 2020. CA Cancer J. Clin. 70, 7–30. https://doi.org/10.3322/caac.21590 (2020).

Mossanen, M. & Gore, J. L. The burden of bladder cancer care: Direct and indirect costs. Curr. Opin. Urol. 24, 487–491. https://doi.org/10.1097/mou.0000000000000078 (2014).

Sievert, K. D. et al. Economic aspects of bladder cancer: What are the benefits and costs? World J. Urol. 27, 295–300. https://doi.org/10.1007/s00345-009-0395-z (2009).

van Hoogstraten, L. M. C. et al. Global trends in the epidemiology of bladder cancer: Challenges for public health and clinical practice. Nat. Rev. Clin. Oncol. 20, 287–304. https://doi.org/10.1038/s41571-023-00744-3 (2023).

Odisho, A. Y., Fradet, V., Cooperberg, M. R., Ahmad, A. E. & Carroll, P. R. Geographic distribution of urologists throughout the United States using a county level approach. J. Urol. 181, 760–765; discussion 765–766. https://doi.org/10.1016/j.juro.2008.10.034 (2009).

Richters, A., Aben, K. K. H. & Kiemeney, L. A. L. M. The global burden of urinary bladder cancer: An update. World J. Urol. 38, 1895–1904. https://doi.org/10.1007/s00345-019-02984-4 (2020).

Joshi, M., Polimera, H., Krupski, T. & Necchi, A. Geography should not be an oncologic destiny for Urothelial Cancer: Improving Access to Care by removing local, Regional, and International barriers. Am. Soc. Clin. Oncol. Educational Book. 27–340. https://doi.org/10.1200/edbk_350478 (2022).

Behrens, A., Stehle, T., Gross, S. & Aach, T. Local and global panoramic imaging for fluorescence bladder endoscopy. Annu. Int. Conf. IEEE Eng. Med. Biol. Soc. 2009, 6990–6993. https://doi.org/10.1109/iembs.2009.5333854 (2009).

Chen, L., Tang, W., John, N. W., Wan, T. R. & Zhang, J. J. SLAM-based dense surface reconstruction in monocular minimally invasive surgery and its application to augmented reality. Comput. Methods Programs Biomed. 158, 135–146. https://doi.org/10.1016/j.cmpb.2018.02.006 (2018).

Phan, T. B., Trinh, D. H., Wolf, D. & Daul, C. Optical flow-based structure-from-motion for the reconstruction of epithelial surfaces. Patt. Recogn. 105, 107391. https://doi.org/10.1016/j.patcog.2020.107391 (2020).

Gong, C., Brunton, S. L., Schowengerdt, B. T. & Seibel, E. J. Intensity-Mosaic: Automatic panorama mosaicking of disordered images with insufficient features. J. Med. Imaging (Bellingham). 8, 054002. https://doi.org/10.1117/1.Jmi.8.5.054002 (2021).

Zhang, Z. A flexible new technique for camera calibration. IEEE Trans. Pattern Anal. Mach. Intell. 22, 1330–1334. https://doi.org/10.1109/34.888718 (2000).

Acknowledgements

We would like to thank Amel Amalou and Zion Tse for critical input during preliminary in vitro assessment. This work was supported by the Center for Interventional Oncology in the Intramural Research Program of the National Institutes of Health (NIH) by intramural NIH Grants NIH Z01 1ZID BC011242 and CL040015. The NIH and Philips Research have a Cooperative Research and Development Agreement that supported this research.

Funding

Open access funding provided by the National Institutes of Health

Author information

Contributions

M.L.: Conceptualization; Data curation; Formal analysis; Investigation; Methodology; Software; Validation; Visualization; Writing – original draft; Writing – review and editing. N.V.: Conceptualization; Data curation; Formal analysis; Investigation; Methodology; Validation; Visualization; Writing – original draft; Writing – review and editing. S.G.: Methodology; Data curation; Investigation; Resources; Validation; writing – review and editing. D.L.: Conceptualization; Data curation; Methodology; Investigation; writing – review and editing. V.V.: Data curation; Investigation; Resources; writing – review and editing. N.G.: Data curation; Investigation; writing – review and editing. I.B.: Data curation; Investigation; writing – review and editing. S.R.: Conceptualization; Data curation; Investigation; writing – review and editing. W.P.: Data curation; Investigation; Resources; writing – review and editing. J.K.: Data curation; Investigation; Resources; writing – review and editing. S.X.: Conceptualization; Resources; writing – review and editing. B.W.: Conceptualization; Supervision; Funding acquisition; Project administration; Writing – original draft; Writing – review and editing.

Ethics declarations

Competing interests

NV is an employee of Philips. BJW is the Principal Investigator in Cooperative Research and Development Agreements between NIH and the following: BTG Biocompatibles/Boston Scientific, Siemens, NVIDIA, Celsion Corp, Canon Medical, XAct Robotics, and Philips. BJW and NIH are party to Material Transfer or Collaboration Agreements with: Angiodynamics, 3T Technologies, Profound Medical, Exact Imaging, Johnson and Johnson, Endocare/Healthtronics, and Medtronic. Outside the submitted work, BJW is primary inventor on 47 issued patents owned by the NIH (list available upon request), a portion of which have been licensed by NIH to Philips. BJW and NIH report a licensing agreement with Canon Medical on algorithm software with no patent. BJW is joint inventor (assigned to HHS NIH US Government) on patents and pending patents related to drug eluting bead technology, some of which may have joint inventorships with BTG Biocompatibles/Boston Scientific. BJW is primary inventor on patents owned by NIH in the space of drug eluting embolic beads. No other authors have conflicts of interest to declare.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Electronic supplementary material

Supplementary Material 1

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Li, M., Varble, N.A., Gurram, S. et al. Bladder image stitching algorithm for navigation and referencing using a standard cystoscope. Sci Rep 14, 29168 (2024). https://doi.org/10.1038/s41598-024-80284-7

DOI: https://doi.org/10.1038/s41598-024-80284-7