Abstract

Text Graph Representation Learning through Graph Neural Networks (TG-GNN) is a powerful approach in natural language processing and information retrieval, but it faces challenges in computational complexity and interpretability. In this work, we propose CoGraphNet, a novel graph-based model for text classification that addresses these issues. To reduce information loss, we construct separate heterogeneous graphs for words and sentences, capturing multi-tiered contextual information. We improve interpretability by incorporating positional bias weights, which let CoGraphNet highlight the words or sentences that matter most to a prediction. Novel graph structures and the SwiGLU activation function further improve contextual comprehension and accuracy. Experiments on the Ohsumed, MR, R52, and 20NG datasets confirm CoGraphNet’s effectiveness in complex classification tasks.

Introduction

Text Graph Representation Learning via Graph Neural Networks (TG-GNN) plays a crucial role in natural language processing and information retrieval, excelling in text classification. Models such as Text-GCN1 use graph convolutional networks to represent text as graphs, improving performance with limited training data. TensorGCN2 integrates semantic, syntactic, and sequential information through a text graph tensor, enhancing classification. TG-Transformer3 applies Transformer-based heterogeneous graph neural networks to capture complex text structures and relationships effectively.

TextING4 uses Graph Neural Networks to explore complex word relationships, enabling inductive learning for new words. T-VGAE5 combines a topic model with a variational graph autoencoder to reveal latent semantic connections, enhancing interpretability. Frog-GNN6 addresses few-shot text classification by applying multi-perspective aggregation with graph neural networks, improving class similarity and dissimilarity in data-scarce environments. Although these methods may experience some information loss during graph construction, their effectiveness depends on their design and goals. Understanding each method’s strengths, application contexts, and strategies for managing information loss is crucial.

While TG-GNN7,8,9,12,13 has notably improved text classification accuracy across various datasets, it faces limitations in interpretability and accuracy for complex and specialized datasets. The model struggles with deep comprehension and accurate classification of texts with intricate, extended sentences, and its decision-making process remains opaque. These challenges highlight the need for a thorough evaluation of TG-GNN in applications requiring both deep understanding and clear decision rationales, particularly in specialized domains.

This paper presents CoGraphNet, an innovative text classification model that constructs word and sentence structure graphs for each document, treating words and sentences as nodes to form distinct networks. This approach enhances content information beyond what is achievable with other graph models. Leveraging the pre-trained BERT model14,15 for text vectorization, CoGraphNet transforms sentences into embeddings, enriching textual representation. The model assigns edge weights based on a mix of cosine similarity16 and positional deviations, emphasizing the importance of key elements within the document, particularly in initial sentences. To assimilate hierarchical contextual information, we augment the model with a Hierarchical Attention network17,18. Incorporating the SwiGLU activation function19,20 within the GRU layer enhances both utility and interpretability. This comprehensive method addresses information loss and boosts interpretability and context sensitivity, leading to superior document classification outcomes. The primary contributions of this work are as follows:

- We introduce a novel heterogeneous graph structure for text, using words and sentences as nodes.

- We improve interpretability by integrating positional bias weights, which clarify the key elements in classification.

- We use the SwiGLU activation function, which improves accuracy and effectively handles various nodes and edges.

- We analyze computational complexity and introduce attention layer visualization.

Related work

Transitioning from single to heterogeneous graphs

In the realm of graph neural networks, the advent of single graph learning was heralded by the implementation of graph convolutional networks (GCN)21,22, which facilitate node representation learning through the aggregation of information from direct neighbors. Although GCN is effective for homogeneous graphs, it struggles to integrate information from distant nodes. To address this limitation, GraphSAGE23 introduced a novel technique for sampling and aggregating node features, providing a nuanced method for node representation learning. Concurrently, the Graph Attention Network (GAT)24,25,26 incorporated an attention mechanism to dynamically learn node relationships through varying contributions from neighbors. Moreover, Graph Isomorphism Networks (GIN)27,28 utilized MLPs and summation of neighbor node features for learning node representations. However, the inherent limitations of single-graph learning become pronounced in the context of complex, heterogeneous graphs.

To surmount these obstacles, methodologies specifically designed for heterogeneous graphs have been developed. Heterogeneous Graph Neural Networks (H-GNN)25,29,30 are crafted to manage the dynamics between various node types effectively. Metapath2Vec31 leverages random walks to elucidate relationships within heterogeneous graphs by defining meta-paths. GraphSAGE++32 enhances GraphSAGE23 to adeptly navigate the intricacies of heterogeneous graphs. Heterogeneous graph neural networks (HetGNN)33,34 and the Hierarchical Attention Network (HAN)35,36 employ attention mechanisms to refine model representation learning for heterogeneous graphs. Furthermore, the hierarchical heterogeneous graph neural network (HGNN)37,38 explores complex relationships through multi-level representation learning.

A recent study62 proposed a novel graph neural network model that combines part-of-speech (POS) guided syntactic dependency graphs with graph convolutional networks, aiming to overcome the noise issues present in traditional syntactic dependency trees in sentiment classification tasks. By introducing a heterogeneous graph structure, this approach significantly enhances the model’s capability to handle complex syntactic information. The syntactic, semantic and knowledge graph attention network model (SSK-GAT)11,59 further explores how to optimize graph models for complex sentiment analysis tasks by integrating syntactic, semantic, and affective knowledge. These studies indicate that applying heterogeneous graphs to textual data can effectively enhance the model’s performance in fine-grained sentiment classification.

Contrasting with single graph learning, heterogeneous graph learning excels by comprehensively and flexibly managing relationships among diverse node types, thereby adapting more effectively to the complexities of real-world network structures. Our contribution, CoGraphNet, introduces a heterogeneous graph structure for text representation, conceptualizing each word and sentence as a node within a word-sentence heterogeneous graph. This innovation, alongside the employment of Gated Neural Networks for the learning of word node embeddings, not only boosts our model’s efficiency but also deepens the understanding of word relationships within the graphs, offering substantial training efficiency advantages over conventional graph models.

Graphs transitioning from traditional to BERT

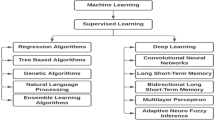

In text classification, the development of contextual information has followed several key phases. Initially, traditional methods employed machine learning algorithms such as Naive Bayes40,41 and Support Vector Machines42,43 along with basic bag-of-words models. The introduction of the N-gram model44,45 marked a significant improvement by capturing local word relationships. Subsequent advancements in word embedding technologies, including Word2Vec46,47 and GloVe48, enhanced the modeling of lexical semantics by representing words in continuous vector spaces, providing deeper contextual insights. HetersumGraph49 further diversified contextual information modeling in text classification by introducing a graph structure with TF-IDF weighted edges connecting word and sentence nodes, though these methods were primarily focused on individual word frequencies, limiting their capacity to understand complex word relationships and contextual subtleties.

The introduction of Convolutional Neural Networks (CNNs)51,52 brought local perception and multi-scaling to the forefront, enhancing the capture of local text features and contextual integration. Despite improvements in sequence data processing by Recurrent Neural Networks (RNNs)53, their effectiveness in managing long-term context relationships was compromised by dependency issues. Long Short-Term Memory Networks (LSTMs) and Gated Recurrent Units (GRUs) effectively addressed these challenges, significantly boosting text classification outcomes. The addition of attention mechanisms allowed models to thoroughly examine various information segments, leading to a more comprehensive modeling of context for text classification.

Recent advancements are marked by the widespread adoption of pre-trained language models like BERT55,56,57 and GPT58,59, with BERT surpassing traditional LSTM approaches. Leveraging extensive pre-training on large textual datasets, BERT excels at deciphering complex relationships and semantic nuances in texts.

The Cross-modal Fine-grained Alignment and Fusion Network (CoolNet)60 improves performance by converting images to textual descriptions and dynamically aligning semantic and syntactic information, showcasing BERT’s strength in multimodal sentiment analysis. The Graph Augmentation Network with Dynamic Sentiment Knowledge and Static External Knowledge Graphs (DSSK-GAN)61 combines dynamic and static knowledge graphs to enhance sentiment modeling of aspect terms. These studies illustrate how integrating graph neural networks with BERT effectively boosts text representation. The Multi-channel Attentive Graph Convolutional Network (MAGCN)62 integrates sentiment knowledge through self-attention and multi-head attention mechanisms, significantly enhancing the performance of cross-modal feature representations. This indicates that multi-channel graph convolutional networks with multi-head attention can effectively incorporate sentiment knowledge when handling multimodal data.

CoGraphNet further improves upon this method by optimizing the multi-head attention mechanism and fusion techniques in graph convolutional networks, achieving higher accuracy and effectiveness in dealing with complex sentiment analysis tasks. Our method improves text representation with BERT-based embeddings and spatial context. By combining cosine similarity with positional adjustments, we highlight key document elements, especially in initial sentences. A hierarchical attention network further enhances text comprehension.

Transitioning from classics to SwiGLU

In text classification, activation functions have evolved significantly. The sigmoid function63 was initially used for binary classification but suffered from vanishing gradients. The softmax function64 addressed multi-category classification but faced gradient explosion in long sequences. The ReLU function65 improved efficiency but had the ’dead neuron’ issue, which was later mitigated by Leaky ReLU66 and Parametric ReLU67 through negative slopes to maintain neuron activity.

The Exponential Linear Unit (ELU)68 improved upon previous functions by incorporating non-zero slopes in the negative domain, addressing the dead unit issue and performing well in text classification. Attention then shifted to gated activation functions like GRU70 and LSTM71, which effectively captured long-term dependencies in complex sequence data. Recent advancements include adaptive activation functions such as Swish73 and Mish74, which combine simplicity and stability for superior text classification results. Choosing the optimal activation function remains a challenge, requiring a balance between traditional and adaptive methods.

The most recent advancement, the SwiGLU activation function19,20,75, synthesizing the strengths of Swish73 and GLU76, has proven to be remarkably effective in complex text representation learning tasks. In our model, integrating SwiGLU substantially improves the management of heterogeneous graphs comprising words and sentences. SwiGLU’s distinctive gated mechanism dynamically fine-tunes its activation response based on input data characteristics, an essential feature for Graph Neural Networks (GNNs) tasked with processing diverse node and edge types. This functionality enables SwiGLU to adeptly differentiate and analyze various node types, such as words and sentences, significantly boosting the model’s accuracy in document classification tasks, particularly within complex heterogeneous graphs featuring a broad array of nodes and edges.
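As a point of reference, the gating described above can be sketched in a few lines of NumPy. This is a minimal illustration of the commonly used SwiGLU definition, Swish(xW + b) multiplied elementwise by (xV + c); the function names, the weight matrices `W` and `V`, the biases `b` and `c`, and the dimensions are illustrative assumptions and are not taken from CoGraphNet's implementation.

```python
import numpy as np

def swish(x, beta=1.0):
    """Swish activation: x * sigmoid(beta * x)."""
    return x / (1.0 + np.exp(-beta * x))

def swiglu(x, W, V, b=None, c=None):
    """SwiGLU(x) = Swish(x W + b) * (x V + c), with an elementwise product.

    W, V, b, c are hypothetical parameters; in a trained model they
    would be learned.
    """
    gate = x @ W + (0.0 if b is None else b)    # branch passed through Swish
    value = x @ V + (0.0 if c is None else c)   # linear branch being gated
    return swish(gate) * value

# Toy usage: 4 node vectors of dimension 8 projected to dimension 16.
rng = np.random.default_rng(0)
x = rng.normal(size=(4, 8))
W = rng.normal(size=(8, 16))
V = rng.normal(size=(8, 16))
out = swiglu(x, W, V)
```

Because the gate depends on the input itself, the same layer can respond differently to word-node and sentence-node features, which is the property the paragraph above relies on.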

Method

Preliminaries

In this section, we introduce CoGraphNet, our innovative model tailored for text classification, which meticulously constructs two fundamental graph structures: the Word Structure Graph and the Sentence Structure Graph. The purposeful amalgamation of these graphs is intended to significantly enhance the efficacy of text analysis. We begin by offering an overview of the process involved in developing the Word Structure Graph, followed by an in-depth discussion on the formation of the Sentence Structure Graph. We then delve into the seamless integration of these two graphs, clarifying how their combination improves classification performance. The architectural design of CoGraphNet is depicted in Fig. 1.

Initially, the graphical representation allows us to visualize the preprocessing stages. The Text Graph Builder facilitates the graph-based representation learning of textual data through a series of steps: selecting pre-trained models, loading data, processing text, creating graph representations, and storing the processed data. Conversely, the “BERT Embedding and Graph” method processes textual data and constructs graph representations by employing the BERT model for word segmentation, calculating sentence similarity, and conducting sentence segmentation.

Leveraging a pre-trained BERT model enables the extraction of semantic information at the sentence level. Concurrently, the construction of a graph representation delineates the interrelationships among texts, with nodes symbolizing the texts and edges indicating the degree of similarity between them. This approach to graph representation learning is applicable to fields such as text mining and information retrieval, offering a rich set of features for subsequent natural language processing (NLP) applications.

Word-based graph construction and interaction and readout

Building upon the graph creation approach outlined in TextING4,8,31, our methodology enhances the model by incorporating a positional weight for words that co-occur within a specified window. In contrast to the technique discussed in the referenced paper, which exclusively considers the co-occurrence within a sliding window to determine edge weights, our model additionally accounts for the relative positioning of words. To achieve this, we introduce an alpha parameter that adjusts the edge weights based on the proximity of the words, enabling the model to capture not only the frequency but also the contextual significance of word interactions.

To integrate this concept of positional weighting into our graph construction, we employ the following formula to calculate the edge weights:

Several quantities determine the positional weighting in the co-occurrence analysis. The term \(W_p\) denotes the positional weight, which reflects the positions of co-occurring words within a specified window. The coefficient \(\alpha\) acts as a regulatory factor, adjusting the impact of positional weighting on edge weight determination. Additionally, \(\rho\) signifies the position of the first co-occurring word, and \(q\) denotes the position of the second co-occurring word within the window. The variable \(L\) defines the window’s length, setting the boundary for the number of words considered in the co-occurrence analysis.

Subsequently, the graph’s edge weight connecting two nodes is calibrated based on their co-occurrence and relative positions, producing a graph that reflects both co-occurrence and positional relationships between words. This enriched representation benefits subsequent learning tasks.
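The construction described above can be sketched as follows. The sketch is illustrative only: the specific positional term, `alpha * (1 - |p - q| / window)`, is an assumption chosen so that closer words receive a larger bonus, and it is not claimed to be the exact formula used in CoGraphNet. The function name `build_word_graph` and the default values are likewise hypothetical.

```python
from collections import defaultdict

def build_word_graph(tokens, window=3, alpha=0.5):
    """Return {(u, v): weight} for an undirected co-occurrence graph.

    ASSUMPTION: each co-occurrence of two words at window positions p and q
    contributes 1 + alpha * (1 - |p - q| / window). The count term captures
    frequency; the alpha term is an illustrative positional bonus.
    """
    weights = defaultdict(float)
    n_windows = max(1, len(tokens) - window + 1)
    for start in range(n_windows):
        span = tokens[start:start + window]
        for p in range(len(span)):
            for q in range(p + 1, len(span)):
                if span[p] == span[q]:
                    continue  # skip self-loops
                w_p = alpha * (1.0 - abs(p - q) / window)
                edge = tuple(sorted((span[p], span[q])))
                weights[edge] += 1.0 + w_p
    return dict(weights)

graph = build_word_graph(["a", "b", "c"], window=3, alpha=0.5)
```

With `alpha=0` the sketch reduces to plain sliding-window co-occurrence counts, which makes the effect of the positional term easy to isolate in an ablation.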

Our methodology is informed by the insights presented in CP-GNN77, which underscores the significant influence of the relative and contextual positions of words on the effectiveness of Graph Neural Networks in text categorization. While CP-GNN developed a Position-aware Graph Attention Module to account for word positional relationships, our approach simplifies this by calculating edge weights as a function of the distance between co-occurring words and their frequency of co-occurrence within a sliding window. Our experimental results validate that this architectural simplification retains the advantages of incorporating positional information into the word representation, thereby simplifying the training process.

Our model advances word node embeddings through the use of Gated Neural Networks, allowing nodes to update their representations by assimilating information from their immediate neighbors. By repeatedly stacking graph layers, our approach facilitates interactions among nodes across multiple hops, capturing complex feature interactions. Diverging from Zhang et al.4,8,31, our innovation lies in integrating the SwiGLU Activation function into the GRU component, enhancing the model’s ability to discern complex patterns in text data.

The Readout Layer effectively synthesizes node features into a cohesive graph-level representation. This is achieved through a strategic assembly of model components, starting with an embedding transformation that processes the inherent attributes of nodes. The application of max and mean pooling techniques further refines the feature aggregation process, ensuring both maximum and average values are captured. Moreover, the adoption of an attention mechanism introduces a nuanced layer of analysis, allowing the model to selectively focus on pertinent features during the graph-level representation phase.

In our model, an attention mechanism calculates attention scores for input features, where \(\sigma\) represents the Sigmoid function, \(x_i\) denotes input features, and \(W_{att}\) along with \(b_{att}\) constitute the attention layer’s weights and bias. These attention scores are then applied to modulate the embeddings, as delineated in the following calculation:

Following this, an activation function, specifically ReLU in our context, is applied to the weighted embeddings, which is defined as follows:

Next, we execute pooling operations on the node features, incorporating max pooling, mean pooling, and employing a multi-layer perceptron (MLP).
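The readout steps above (sigmoid attention, ReLU on the weighted embeddings, max and mean pooling, then an MLP) can be sketched in NumPy. Several details are assumptions made for illustration: the attention score is a single scalar per node, the embedding transformation is one linear layer, the two pooled vectors are combined by summation, and the MLP is reduced to a single linear layer. All parameter names other than `W_att` and `b_att` are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def readout(x, W_att, b_att, W_emb, b_emb, W_out, b_out):
    """Graph-level readout over node features x of shape (n_nodes, d)."""
    att = sigmoid(x @ W_att + b_att)           # (n_nodes, 1) attention scores
    emb = x @ W_emb + b_emb                    # (n_nodes, h) embedding transform
    weighted = np.maximum(0.0, att * emb)      # ReLU on attention-weighted embeddings
    # ASSUMPTION: mean- and max-pooled vectors are summed.
    pooled = weighted.mean(axis=0) + weighted.max(axis=0)
    return pooled @ W_out + b_out              # single linear layer standing in for the MLP

rng = np.random.default_rng(0)
d, h, n_classes = 8, 16, 4
params = (
    rng.normal(size=(d, 1)), np.zeros(1),
    rng.normal(size=(d, h)), np.zeros(h),
    rng.normal(size=(h, n_classes)), np.zeros(n_classes),
)
x = rng.normal(size=(5, d))
logits = readout(x, *params)
```

Both pooling operations are symmetric in the nodes, so the result does not depend on node ordering, which is the usual requirement for a graph-level readout.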

Algorithm 1 details the forward propagation mechanism of the Word Model, which accepts a graph representing a sequence of words as input. It employs a sequence of graph neural network (GNN) layers in conjunction with GRU cells to enable the transfer and extraction of information. Specifically, the SwiGLU Activation function is utilized to activate nodes within the GNN, and the Graph Layer function facilitates information propagation throughout the graph. The process culminates in the Readout Layer function, which aggregates and pools information from the graph nodes to produce the final output tensor.

Sentence-based graph construction and interaction and readout

We delve into the intricacies of text representation within graph neural networks, acknowledging the significance of word nodes in capturing themes and emotions in texts; for instance, positive sentiments are identifiable by key terms such as “like” or “very good.” However, as demonstrated by Vaibhav et al. (2019), the interactions between sentences, particularly in various types of news articles, are crucial for delineating the logical structure of a text. Their research emphasized sentence interactions as the basis for graph construction, proposing a semantic graph neural network model for classifying fake news. Building upon this concept, ConTextING78 integrates word node graphs with BERT embeddings, yielding superior results compared to the use of word graphs in isolation.

Our methodology diverges from these models by establishing separate networks for each document, using words and sentences as nodes without merging these graphs for information dissemination. This strategy lets the model capture the relationships among words and among sentences separately.

This study uses a pre-trained BERT model to convert sentences into embeddings, one per graph node. We compute edge weights by combining cosine similarity with positional bias, which highlights the significance of a sentence’s position within the document. The computation involves four quantities: the positional bias weight, the gated positional weight, the cosine similarity, and the final edge weight.

The positional bias weight is the reciprocal of the position index plus one. The gated position weight \(W_{GP}\) is then derived by applying the hyperbolic tangent (tanh) function to the positional bias weight. The cosine similarity between sentences i and j is the dot product of their embedding vectors divided by the product of their norms. The edge weight \(W_{{edge}(i,j)}\) is the product of these three quantities.
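The edge-weight computation described above can be sketched as follows. The text does not say whether the position index belongs to sentence i or sentence j, nor whether indexing starts at zero; this sketch assumes a zero-based index of the target sentence j, so that early sentences attract stronger incoming edges. The function name and the toy embeddings are hypothetical stand-ins for BERT sentence vectors.

```python
import numpy as np

def sentence_edge_weights(embeddings):
    """Return an (n, n) matrix of edge weights between sentence nodes."""
    emb = np.asarray(embeddings, dtype=float)
    n = emb.shape[0]
    pos_bias = 1.0 / (np.arange(n) + 1.0)      # reciprocal of (position index + 1)
    gated = np.tanh(pos_bias)                  # gated position weight
    norms = np.linalg.norm(emb, axis=1, keepdims=True)
    unit = emb / np.clip(norms, 1e-12, None)   # guard against zero vectors
    cos = unit @ unit.T                        # pairwise cosine similarity
    # ASSUMPTION: the positional terms use the index of the target sentence j.
    weights = cos * (pos_bias * gated)[None, :]
    np.fill_diagonal(weights, 0.0)             # no self-loops in this sketch
    return weights

toy = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
W_edge = sentence_edge_weights(toy)
```

In the toy example, sentences 0 and 1 have identical embeddings, yet the edge pointing at sentence 0 is heavier than the edge pointing at sentence 1, which is how the positional terms emphasize initial sentences.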

In our discussion on training strategies, we draw parallels to the word-level network but with a specific focus on enhancing the GRU module. To encompass both forward and backward contextual semantics within phrases, a bidirectional GRU is employed. The integration of the SwiGLU activation function within the GRU module significantly improves the model’s capability to decode complex sentence structures and semantic subtleties.

Algorithm 2 outlines the forward propagation routine of the Sentence Model, which differs from the Word Model by processing graphs whose nodes represent entire sentences. Echoing Algorithm 1, the GraphLayerSeq function facilitates information transfer across the graph and enables the operation of gated recurrent units via the BiGRUUnit function. The ReadoutLayerMerge function concludes the process by aggregating and pooling the graph nodes and passing the result through an MLP to produce the final output tensor.

Word and sentence graph fusion

During the feature fusion phase, we concentrate on integrating the outputs from each Readout Layer to cohesively train both word-level and sentence-level graph representations. To refine the merging of these features, we introduce two learnable parameters aimed at achieving optimal fusion. This method stands in contrast to TensorGCN67, which uses an intra- and inter-graph propagation approach across three distinct types of graphs. Our model instead learns only how to combine the two sets of features, thereby simplifying the fusion process. Despite our network’s comparative simplicity, it demonstrates marginally better performance than TensorGCN, underscoring the effectiveness of our streamlined feature fusion approach in accurately representing the textual input.

The fused representation is the weighted sum \({\alpha }_1 X_{word} + {\alpha }_2 X_{sen}\), where:

-

\(X_{word}\) represents the output from the word-level graph neural network;

-

\(X_{sen}\) denotes the output from the sentence-level graph neural network;

-

\({\alpha }_1\) and \({\alpha }_2\) are coefficients that respectively weight the contributions of \(X_{word}\) and \(X_{sen}\).
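A minimal sketch of this weighted fusion is given below, assuming that both readout outputs have the same shape (batch size by number of classes) and that a softmax follows the weighted sum to yield normalized probabilities. In training, the two coefficients would be learnable parameters; here they are plain floats, and the function names are hypothetical.

```python
import numpy as np

def softmax(z):
    """Numerically stable softmax over the last axis."""
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def fuse(x_word, x_sen, alpha1, alpha2):
    """Weighted sum of word-level and sentence-level outputs, then softmax.

    ASSUMPTION: x_word and x_sen are class scores of identical shape.
    """
    return softmax(alpha1 * x_word + alpha2 * x_sen)

rng = np.random.default_rng(0)
x_word = rng.normal(size=(2, 4))   # toy word-graph outputs: 2 documents, 4 classes
x_sen = rng.normal(size=(2, 4))    # toy sentence-graph outputs
probs = fuse(x_word, x_sen, alpha1=0.6, alpha2=0.4)
```

Setting one coefficient to zero recovers the single-graph prediction, which gives a simple way to measure each graph's contribution to the fused result.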

Algorithm 3 facilitates the seamless integration of word-level and sentence-level information derived from input graphs. By employing graph-based neural networks alongside bidirectional GRU units within the ‘ModelWord’ and ‘ModelSeq’ functions, it effectively discerns complex contextual relationships. The algorithm amalgamates these insights using weighted parameters, ultimately producing an output tensor that reflects normalized probabilities. In essence, Algorithm 3 provides a streamlined approach to achieving a holistic contextual understanding, pivotal for natural language processing applications.

Time and space complexity analysis

CoGraphNet represents text by constructing word and sentence graphs. This section analyzes the time and space complexity of the model in detail.

Time complexity

The time complexity of the CoGraphNet model mainly consists of the following parts: the construction of word and sentence graphs, the computation of GGNN layers, the computation of GRU and SwiGLU activation functions, and the processing of the fusion layer. We assume that the input document contains \(n_w\) words and \(n_s\) sentences, with each node’s feature dimension being d, and the number of edges in the word and sentence graphs being \(E_w\) and \(E_s\), respectively. The number of GGNN layers is L.

- Construction of Word and Sentence Graphs:
  - The worst-case time complexity for constructing the word graph is \(O\left( n_w^2 \right)\), where each pair of words is connected. The complexity of constructing the sentence graph is \(O \left( n_s^2 \right)\).

- Computational complexity of different neural network layers:
  - For each GGNN layer, node features are propagated through edges, with the computation complexity for each layer of the word and sentence graphs being \(O \left( E_w \cdot d \right)\) and \(O \left( E_s \cdot d \right)\), respectively. The total GGNN computation complexity is \(O(L \cdot (E_w \cdot d + E_s \cdot d))\).
  - GRU operates on each node of the word and sentence graphs, with time complexity of \(O(n_w \cdot d^2)\) (word graph) and \(O(n_s \cdot d^2)\) (sentence graph). Due to the fact that SwiGLU only acts on the node representation of each word or sentence, and its computation relies solely on the feature dimension d of the nodes, there is no significant dependence on the number of nodes \(n_w\) or edges \(E_w\) or \(E_s\). Therefore, when processing large-scale graph data, the computational complexity of SwiGLU is relatively small, and its impact on the overall model time complexity can be ignored.

- Processing of the fusion layer:
  - The fusion layer combines the representations of the word and sentence graphs, with complexity \(O(n_w + n_s)\).

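In summary, summing the terms above, the total time complexity of the model is:

\[
O\left( n_w^2 + n_s^2 + L \cdot d \cdot (E_w + E_s) + (n_w + n_s) \cdot d^2 + n_w + n_s \right)
\]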

Space complexity

The space complexity of the CoGraphNet model mainly consists of the following parts: storage of node features, storage of graph edge information, and storage of GRU and SwiGLU parameters.

The storage space required for the node feature matrices of the word and sentence graphs is \(O(n_w \cdot d)\) and \(O(n_s \cdot d)\), respectively.

The storage space for edge weights in the word and sentence graphs is \(O(E_w)\) and \(O(E_s)\), respectively. The storage space complexity for GRU parameters is \(O(L \cdot d^2)\).

Therefore, summing the terms above, the total space complexity of the model is:

\[
O\left( (n_w + n_s) \cdot d + E_w + E_s + L \cdot d^2 \right)
\]

According to the above analysis, the time complexity of the CoGraphNet model is primarily affected by the graph neural network layers and the GRU, while the space complexity is mainly determined by the storage of node features and GRU parameters. For large-scale text data, this complexity is within the expected range, and the model can handle text classification tasks efficiently in practice.

Experiments

In this section, we rigorously evaluate our model, CoGraphNet, through a comprehensive set of experimental analyses. The primary aim of these experiments is to assess the overall performance and advantages of CoGraphNet relative to existing models. Additionally, we seek to quantify the unique contributions of word and sentence graph structures within our model during the fusion process, thereby elucidating how each component influences the final results.

Experimental settings

Datasets. In our analysis, we use four benchmark datasets, matching those used in the Text GCN study1 and by Lin et al.21. These datasets include: (1) the MR dataset for sentiment analysis of movie reviews, categorizing them into positive or negative sentiments; (2) the R52 dataset for classifying documents from the Reuters newswire into 52 categories; (3) the Ohsumed dataset for classifying medical abstracts into 23 categories of cardiovascular diseases; and (4) the 20NG news dataset, as used in BertGCN21, for the classification of news articles. Table 1 provides detailed statistics and additional information about each dataset.

Implementation details. In the preprocessing of word graph data, we employ tokenization and stop word removal as foundational steps, aligning with the methodologies of Blanco and Lioma (2012) and Rousseau et al. (2015). Additionally, we initialize vertex embeddings with word features \(h \in {\mathbb {R}}^{|V| \times d}\) for each document’s graph to facilitate the contextual propagation of these features during word interactions. For sentence node vectorization, we leverage the sentence segmentation capabilities of the Natural Language Toolkit (NLTK) library, chosen for its comprehensive suite of English text processing tools, including tokenization, parsing, and classification.

Our experimental framework replicates the setup described by Zhang et al.4,8,31, encompassing the dropout rate, optimizer, and learning rate specifications. Sentence graph training utilizes BERT model vectorization with an embedding dimension of d = 768. The models for both word and sentence graphs are trained independently prior to the fusion and optimization phase, maintaining a learning rate of 0.00001 throughout the process.

Baselines. The field of text processing and graph neural networks (GNNs) features a wide array of models, ranging from traditional approaches like CNN and LSTM to versatile methods such as fastText, and cutting-edge graph-based techniques including Text GCN and Text GNN. Transformer-based models such as TG-Transformer, BertGCN, and RoBERTaGCN, which utilize attention mechanisms, and integrations like BertGAT and RoBERTaGAT, which combine BERT embeddings with Graph Attention Networks, exemplify the progressive nature of research bridging text processing with graph-based methodologies. Additionally, specialized models like HieGAT, ConTextING, MSABertGCN, and CP-GNN reflect the diverse explorations into text representation, underscoring the dynamic evolution of the field.

- CNN (Convolutional Neural Network)51: Traditionally utilized in image processing, this neural network architecture can also be adapted for text data analysis through the application of one-dimensional convolutions.
- LSTM (Long Short-Term Memory)71: This recurrent neural network (RNN) architecture is specifically designed to address and capture long-term dependencies in sequential data.
- FastText79: A free, open-source, and lightweight library that enables the learning of text representations and supports text classification tasks.
- Text GCN1: Developed for text data, this graph convolutional network seeks to identify and utilize semantic relationships among words.
- Text GNN (Graph Neural Network)1: This neural network model is tailored for graph-structured data and holds potential for application in text data analysis.
- Tensor GCN67: This graph convolutional network enhances its capabilities by integrating tensor structures, enabling the modeling of more complex relationships.
- TextING4,8,31: This method innovates graph creation from text data, focusing on the co-occurrence and positional information of words to structure graphs.
- TextING-M4,8,31: An adaptation of the original TextING approach, this variant introduces modifications to refine the method further.
- TG-Transformer3: Leveraging transformer technology, this model excels in learning text representation, particularly in capturing long-range dependencies within text.
- BertGCN21: Merging BERT (Bidirectional Encoder Representations from Transformers) with graph convolutional networks, this model achieves a synergistic effect in text processing and analysis.
- RoBERTaGCN21: Building on BertGCN, this model utilizes RoBERTa, an optimized variant of BERT, to enhance text classification and representation.
- BertGAT21: This model combines BERT embeddings with Graph Attention Networks (GAT) for improved attention-driven text analysis.
- RoBERTaGAT21: Similar in approach to BertGAT, it integrates RoBERTa embeddings with GAT for enhanced model performance.
- HieGAT37: A Heterogeneous Graph Attention Network that facilitates the processing of diverse data types within a unified framework.
- ConTextING-BT78: Represents an evolved version of ConTextING, introducing modifications for better performance and adaptability.
- w.GAT-BT78: An updated version of the w.GAT model, introducing enhancements for improved performance.
- ConTextING-RBT78: This model represents an advanced iteration of ConTextING, incorporating modifications to optimize its functionality.
- w.GAT-RBT78: A refined edition of the w.GAT framework, tailored to bolster its analytical capabilities.
- MSABertGCN22: This innovative model merges Multiple Self-Attention mechanisms with BERT and Graph Convolutional Networks, offering a robust approach to text analysis.
- Han-LT80: A sophisticated hierarchical attention network designed to enhance comprehension of extended texts.
- Hypergraph Convolutional Neural Networks with Multi-feature Fusion (HGCNN-MFF)28: Leveraging hypergraph structures, this model integrates multi-feature fusion into convolutional neural networks for enriched data representation.
- CP-GNN (Contextualized Property Graph Neural Network)77: This graph neural network excels by utilizing contextualized information, facilitating nuanced data analysis and interpretation.

Experimental parameter settings: For our experiments, we determined hyperparameters by reviewing pertinent literature and performing preliminary tests. Critical settings, including batch size and learning rate, underwent iterative refinement based on their performance against a validation dataset. This meticulous tuning process, vital for enhancing the model’s precision and computational efficiency, culminated in the selection of the values detailed in Table 2. These parameters are fundamental to the model’s efficacy and have been precisely adjusted to guarantee dependable outcomes.
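The iterative refinement against a validation set can be pictured as a small grid search. The sketch below is purely illustrative: `validation_accuracy` is a hypothetical stand-in for training the model and scoring it on the validation data, and the candidate values are made up rather than taken from Table 2.

```python
from itertools import product

def validation_accuracy(batch_size, learning_rate):
    """Hypothetical stand-in for 'train the model, then score it on the validation set'."""
    # Toy surface peaking at batch_size=32, learning_rate=1e-3 (made-up values).
    return 1.0 - abs(batch_size - 32) / 256 - abs(learning_rate - 1e-3) * 10

def grid_search(batch_sizes, learning_rates):
    """Try every combination and keep the one with the best validation accuracy."""
    best = None
    for bs, lr in product(batch_sizes, learning_rates):
        acc = validation_accuracy(bs, lr)
        if best is None or acc > best[0]:
            best = (acc, bs, lr)
    return best

best_acc, best_bs, best_lr = grid_search([16, 32, 64], [1e-2, 1e-3, 1e-4])
print(best_bs, best_lr)
```

In practice each call to the scoring function is a full training run, so the candidate grid is usually kept small and seeded from values reported in related work.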

Main results

In our analysis, CoGraphNet outperformed other models, as shown in Table 3, particularly enhancing foundational methods like TextING for complex and specialized articles. Our model’s word and sentence graph constructions demonstrated superior performance compared to baseline models due to CoGraphNet’s thorough analysis of local features and semantic relationships, leading to improved classification accuracy. We benchmarked against several foundational models, including Text GCN and TextING, noting their innovative contributions to graph neural networks. Inspired by Tensor GCN’s heterogeneous graphs and using BertGCN for phrase vectorization, our approach integrates sophisticated semantic and contextual relationships, aligning with ConTextING’s exploration of these dimensions.

CoGraphNet excelled on the Ohsumed dataset, which includes challenging medical articles, showcasing the model’s effectiveness in handling specialized content through its dual graph analysis. Additionally, CoGraphNet achieved strong results on the MR dataset, demonstrating its ability to integrate complex ___domain knowledge and nuanced linguistic understanding for accurate text classification.

Specifically, on the Ohsumed dataset, CoGraphNet achieved an accuracy of 73.34%, establishing it as a capable tool for medical document classification. MSABertGCN reached a higher accuracy of 74.72%, while other models such as Text GCN, Text GNN, and Tensor GCN displayed competitive performance.

On the MR dataset, which is used for sentiment analysis of movie reviews, CoGraphNet achieved an accuracy of 83.00%. While BERT-based models such as BertGCN, RoBERTaGCN, and BertGAT demonstrated higher accuracies, CoGraphNet’s performance across various datasets highlights its adaptability and effectiveness in different text classification scenarios. This adaptability is crucial for real-world applications where datasets can vary widely in content and structure. CoGraphNet’s consistent results across these diverse datasets underscore its robustness and generalization capability. Despite not achieving the highest accuracy compared to BERT-based models, CoGraphNet provides a balanced approach, offering strong performance with fewer computational resources and shorter training times.

On the 20NG dataset, CoGraphNet maintained a high accuracy of 91.5%, showcasing its efficacy in document categorization across 20 different newsgroups. This high performance illustrates CoGraphNet’s ability to effectively categorize documents into a broad range of topics. While models like ConTextING-BT, w.GAT-RBT, and Han-LT use various strategies and demonstrate their own strengths, CoGraphNet’s combination of high accuracy, simplicity, and efficiency highlights its practical advantages. The model’s straightforward approach makes it particularly valuable for real-world document categorization tasks, where high performance must be balanced with computational efficiency.

On the R52 dataset, CoGraphNet achieved an accuracy of 96.1%, confirming its effectiveness in classifying news articles into 52 categories. This performance demonstrates CoGraphNet’s capability to manage complex text classification tasks with many categories. Notably, CoGraphNet also offers a balance of high accuracy and resource efficiency. Although models like ConTextING-RBT and Text GCN provide competitive performance, they often require longer training times and more computational resources. CoGraphNet’s efficiency, combined with its high accuracy, makes it a practical choice for text classification tasks, especially in scenarios where computational resources are limited and rapid processing is essential.

Case study

We utilized the CoGraphNet graph neural network model to analyze three distinct datasets: R52, Ohsumed, and MR. This involved examining the distribution of node weights within the GNN after training across these datasets. Our findings are comprehensively illustrated through comparisons and visualizations in Figs. 2, 3, and 4 using t-SNE, providing a clear visualization of the data distribution characteristics. Additionally, Figure 5 employs a density plot to further elucidate the distribution features of the data.

The visualizations underscore that our model, CoGraphNet, delineates clearer clusters with significant distances between different categories, in contrast to the TF-IDF model, whose clusters appear more compact and less defined. This distinction suggests that our model’s weight matrices more effectively encapsulate data features, thereby enhancing classification accuracy.

Figure 5 contrasts the post-training node weight distributions produced by CoGraphNet on the R52, Ohsumed, and MR datasets with those obtained from the traditional TF-IDF approach to classification.

The figure compares word or sentence node weight distributions in R52, Ohsumed, and MR datasets using CoGraphNet and TF-IDF methods. Distributions are distinguished by colors for each method. It highlights differences in node weight allocation across datasets, where nodes correspond to article components.

Density plots from t-SNE analyses of each dataset, comparing CoGraphNet with TF-IDF, reveal distinct node weight distributions. The MR dataset shows a wide distribution of weights, indicating varied node influence on classification. A similar, though slightly different, pattern is observed in the Ohsumed dataset, suggesting unique node influence. The R52 dataset displays an even broader spread, enhancing the model’s ability to identify influential nodes. These plots collectively demonstrate that CoGraphNet fosters a diverse distribution of node weights, reflecting its approach to classification by leveraging a wide range of features rather than a few key nodes. This broad distribution underscores CoGraphNet’s exceptional interpretability and its nuanced handling of dataset complexity.

For document learning with CoGraphNet, we further investigate and visualize the attention layer, specifically the readout function. As illustrated in Fig. 6, the highlighting intensity of each word is proportional to its attention weight, and the most strongly highlighted words correlate positively with the sentiment labels. This visualization helps interpret how CoGraphNet works in sentiment analysis.

CoGraphNet for classification. The model analyzes the text, extracts positive words such as “exciting,” “stellar,” and “remarkable,” and assigns a weight to each word. These weights reflect the importance of each word in predicting the overall sentiment. From the word weights calculated by the model, we compute the overall sentiment prediction value of the text, which quantifies its emotional tendency and determines its class.
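The weighting step described here can be illustrated with a generic attention readout. This is a sketch under stated assumptions, not the paper's readout function: the softmax normalization, the attention scores, and the per-word polarity values are all hypothetical and chosen only to show how word weights combine into one prediction.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of raw scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_readout(words, attn_scores, word_polarity):
    """Weighted sum of per-word polarity using softmax-normalized attention."""
    weights = softmax(attn_scores)
    prediction = sum(w * p for w, p in zip(weights, word_polarity))
    # Rank words by weight so the most influential ones can be highlighted.
    ranked = sorted(zip(words, weights), key=lambda pair: pair[1], reverse=True)
    return prediction, ranked

words = ["an", "exciting", "film", "with", "stellar", "acting"]
attn_scores = [0.1, 2.0, 0.5, 0.1, 2.2, 0.7]      # made-up raw attention scores
word_polarity = [0.0, 0.9, 0.1, 0.0, 0.95, 0.2]   # made-up polarity in [-1, 1]

prediction, ranked = attention_readout(words, attn_scores, word_polarity)
label = "positive" if prediction > 0 else "negative"
print(label, ranked[:2])
```

Ranking the words by weight is what makes a highlighted visualization possible: the top-ranked words are the ones drawn most strongly.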

Ablation study

In this section, regarding the model structure, we executed distinct tests to evaluate the performance under various conditions: utilizing only the Word graph (Word G), employing only the Sentence graph (Sentence G), omitting the SwiGLU activation function from both the Word and Sentence graphs independently, excluding positional bias weights from both graphs separately, and eliminating the bidirectional GRU (Bi-GRU) from the Sentence G. The outcomes of these experiments are detailed in Table 4.
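For readers unfamiliar with the activation removed in this ablation, SwiGLU gates one linear projection of the input with the Swish of another: SwiGLU(x) = Swish(xW) ⊗ (xV). The sketch below implements that standard definition in plain Python; the toy weights are assumptions, biases are omitted, and how the activation is wired into CoGraphNet's layers is not shown.

```python
import math

def swish(z, beta=1.0):
    """Swish (SiLU when beta=1): z * sigmoid(beta * z)."""
    return z / (1.0 + math.exp(-beta * z))

def matvec(x, W):
    """Row vector x (length n) times matrix W (n x m), giving a list of length m."""
    return [sum(x[i] * W[i][j] for i in range(len(x))) for j in range(len(W[0]))]

def swiglu(x, W, V):
    """SwiGLU(x) = Swish(xW) * (xV), elementwise product, biases omitted."""
    gate = [swish(g) for g in matvec(x, W)]
    value = matvec(x, V)
    return [g * v for g, v in zip(gate, value)]

x = [1.0, -2.0]
W = [[0.5, 0.0], [0.0, 0.5]]   # toy gate weights (assumption)
V = [[1.0, 1.0], [0.0, 1.0]]   # toy value weights (assumption)
out = swiglu(x, W, V)
print(out)
```

Removing SwiGLU in an ablation typically means replacing this gated product with a plain activation on a single projection.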

For our model’s training strategies, we investigated various approaches to optimize the combined model:

- Joint Training of Sentence, Word, and Fusion Models: We increased the learning rate to 0.01 and unlocked all parameters. However, this approach led to highly variable results, manifesting in unstable outcomes.
- Locking Word and Sentence Model Parameters, Fine-Tuning Only the Fusion Part: This technique delivered the most consistent outcomes. Although it did not achieve exceptionally high scores, the performance reliably exceeded that of baseline models.
- Locking the Parameters of the Word or Sentence Model and Adjusting the Other Two Components: The outcomes of this method were on par with the second approach, focusing on fine-tuning only the fusion component.
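The second strategy, locking both branches and training only the fusion part, can be sketched with a toy example. Everything here is an assumption made for illustration: the fusion is modeled as a logistic layer over two scalar branch scores, the scores and labels are made up, and the step count and learning rate are arbitrary. Because the branch outputs are constants, only the three fusion parameters are ever updated.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Outputs of the already-trained word and sentence branches for four documents.
# The branches are frozen, so these scores stay constant during fusion training.
word_scores = [2.0, -1.5, 0.5, -0.5]
sent_scores = [1.0, -0.5, -1.0, 1.5]
labels = [1, 0, 0, 1]

def fusion_loss(params):
    """Mean binary cross-entropy of the fusion layer on the fixed branch scores."""
    a, b, c = params
    total = 0.0
    for w, s, y in zip(word_scores, sent_scores, labels):
        p = sigmoid(a * w + b * s + c)
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(labels)

def train_fusion(steps=200, lr=0.1):
    """Gradient descent on the fusion parameters (a, b, c) only."""
    a, b, c = 0.0, 0.0, 0.0
    n = len(labels)
    for _ in range(steps):
        ga = gb = gc = 0.0
        for w, s, y in zip(word_scores, sent_scores, labels):
            err = sigmoid(a * w + b * s + c) - y   # derivative of the loss w.r.t. the logit
            ga += err * w / n
            gb += err * s / n
            gc += err / n
        a, b, c = a - lr * ga, b - lr * gb, c - lr * gc
    return a, b, c

fused = train_fusion()
print(fusion_loss((0.0, 0.0, 0.0)), fusion_loss(fused))
```

With so few trainable parameters the optimization surface is simple, which is one plausible reason a frozen-branch setup gives more stable results than joint training at a high learning rate.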

Despite significant advancements, numerous areas remain unexplored, marking avenues for future research. In constructing word graphs, the optimal balance between word position and co-occurrence frequency as edge weights has yet to be determined. Currently, both elements are weighted equally, but adjusting this balance, including the consideration of words’ left and right positional contexts, could potentially improve model performance. Future studies will aim to identify the most effective combination of these factors. Additionally, we have not yet experimented with progressively unfreezing layers to determine the optimal fine-tuning strategy. Moreover, advanced techniques for integrating word and sentence graphs remain to be explored. Delving into these innovative fusion methods may uncover new strategies to enhance model efficacy.
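The open question about balancing word position against co-occurrence frequency can be made concrete with a small sketch. The formula below is an assumption for illustration, not the edge weighting used in CoGraphNet: co-occurrence is counted in a sliding window, position is approximated by inverse token distance, both terms are normalized by their maximum, and a hypothetical parameter `alpha` sets the balance (0.5 corresponds to equal weighting). Left and right contexts are not distinguished here.

```python
from collections import defaultdict

def edge_weights(tokens, window=3, alpha=0.5):
    """Blend co-occurrence frequency and positional closeness into edge weights.

    alpha=0.5 weights both terms equally; other values shift the balance.
    """
    cooc = defaultdict(float)   # how often a pair shares a window
    prox = defaultdict(float)   # sum of 1/distance over those co-occurrences
    for i, left in enumerate(tokens):
        for j in range(i + 1, min(i + window, len(tokens))):
            right = tokens[j]
            if left == right:
                continue
            pair = tuple(sorted((left, right)))
            cooc[pair] += 1.0
            prox[pair] += 1.0 / (j - i)
    if not cooc:
        return {}
    max_c, max_p = max(cooc.values()), max(prox.values())
    return {
        pair: alpha * cooc[pair] / max_c + (1 - alpha) * prox[pair] / max_p
        for pair in cooc
    }

tokens = ["graph", "neural", "network", "graph", "neural", "model"]
weights = edge_weights(tokens)
for pair, w in sorted(weights.items(), key=lambda kv: -kv[1]):
    print(pair, round(w, 3))
```

Sweeping `alpha` between 0 and 1 and comparing validation accuracy would be one simple way to probe the balance discussed above.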

Conclusion and discussion

The introduction of CoGraphNet provides a meaningful step forward in text categorization. While improving classification accuracy, the model also offers valuable insights into textual data through its word-sentence heterogeneous graph structure. By effectively capturing the relationships between words and sentences, CoGraphNet provides a more comprehensive representation of complex textual information compared to traditional models.

Our experimental results suggest that CoGraphNet performs well, particularly with specialized and complex texts. Its precision in article classification highlights its potential across various datasets, demonstrating encouraging generalization capabilities. Additionally, CoGraphNet contributes to improved interpretability by allowing the identification of key phrases and terms that influence classification outcomes, offering greater transparency in the model’s decision-making process.

In conclusion, CoGraphNet makes a meaningful contribution to text classification by balancing accuracy, interpretability, and versatility. There is still room for further refinement, and future work will focus on optimizing the model and exploring its potential applications across different domains.

Data availability

The datasets used in this study are sourced from relevant institutions or research groups, which have provided publicly accessible datasets. The relevant datasets and processed data can be downloaded from the following links: http://disi.unitn.it/moschitti/corpora.htm and https://github.com/CRIPAC-DIG/TextING/tree/master/data/corpus.

References

Yao, L., Mao, C. & Luo, Y. Graph convolutional networks for text classification. In Proceedings of the AAAI Conference on Artificial Intelligence (2019).

Liu, X., You, X., Zhang, X., Wu, J., & Lv, P. Tensor graph convolutional networks for text classification. In Proceedings of the AAAI Conference on Artificial Intelligence, vol. 34 8409–8416 (2020).

Zhang, H. & Zhang, J. Text graph transformer for document classification. In EMNLP (2020).

Zhang, Y., Yu, X., Cui, Z., Wu, S., Wen, Z. & Wang, L. Every document owns its structure: inductive text classification via graph neural networks. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics (2020).

Xie, Q., Huang, J., Du, P., Peng, M. & Nie, J. Y. Inductive topic variational graph auto-encoder for text classification. In Proceedings of the 2021 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (2021).

Xu, S. & Xiang, Y. Frog-GNN: multi-perspective aggregation-based graph neural network for few-shot text classification. Expert Syst. Appl. 176, 114795 (2021).

Huang, L., Ma, D., Li, S., Zhang, X. & Wang, H. Text level graph neural network for text classification. arXiv preprint arXiv:1910.02356 (2019).

Zhang, Y., Yu, X., Cui, Z., Wu, S., Wen, Z. & Wang, L. Every document owns its structure: Inductive text classification via graph neural networks. arXiv preprint arXiv:2004.13826 (2020).

Wang, Y., Wang, S., Yao, Q. & Dou, D. Hierarchical heterogeneous graph representation learning for short text classification. arXiv preprint arXiv:2111.00180 (2021).

Xiao, L., Xue, Y., Wang, H. & Hu, X. Exploring fine-grained syntactic information for aspect-based sentiment classification with dual graph neural networks. Neurocomputing 471, 48–59 (2021).

Zhang, S., Gong, H. & She, L. An aspect sentiment classification model for graph attention networks incorporating syntactic, semantic, and knowledge (2023).

Zhang, C., Zhu, H., Peng, X., Wu, J. & Xu, K. Hierarchical information matters: Text classification via tree based graph neural network. arXiv preprint arXiv:2110.02047 (2021).

Piao, Y., Lee, S., Lee, D. & Kim, S. Sparse structure learning via graph neural networks for inductive document classification. In Proceedings of the AAAI Conference on Artificial Intelligence, vol. 36 11165–11173 (2022).

Yang, Y. & Cui, X. Bert-enhanced text graph neural network for classification. Entropy 23(11), 1536 (2021).

Yang, X., Yang, K., Cui, T., Chen, M. & He, L. A study of text vectorization method combining topic model and transfer learning. Processes 10(2), 350 (2022).

Liu, B., Li, X., Lee, W. S., & Yu, P. S. Text classification by labeling words. In AAAI, vol. 4 425–430 (2004).

Yang, Z., Yang, D., Dyer, C., He, X., Smola, A. & Hovy, E. Hierarchical attention networks for document classification. In Proceedings of the 2016 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies 1480–1489 (2016).

Wang, T., & Wan, X. Hierarchical attention networks for sentence ordering. In Proceedings of the AAAI Conference on Artificial Intelligence, vol. 33 7184–7191 (2019).

Han, X., & Zhang, Z. Combining interpretability and filtering algorithm for sequence recommendation. In 2022 IEEE 8th International Conference on Computer and Communications (ICCC) 2321–2325 (IEEE, 2022).

Narang, S. et al. Do transformer modifications transfer across implementations and applications?. arXiv preprint arXiv:2102.11972 (2021).

Lin, Y., Meng, Y., Sun, X., Han, Q., Kuang, K., Li, J., & Wu, F. Bertgcn: Transductive text classification by combining gcn and bert. arXiv preprint arXiv:2105.05727 (2021).

Yang, X., & Liu, W. Maximal-semantics-augmented BertGCN for text classification. Int. J. Asian Lang. Process. (2023).

Hajibabaee, P., Malekzadeh, M., Heidari, M., Zad, S., Uzuner, O., & Jones, J. H. An empirical study of the graphsage and word2vec algorithms for graph multiclass classification. In 2021 IEEE 12th Annual Information Technology, Electronics and Mobile Communication Conference (IEMCON) 0515–0522 (IEEE, 2021).

Liu, Y., & Gou, X. A text classification method based on graph attention networks. In 2021 International Conference on Information Technology and Biomedical Engineering (ICITBE) 35–39 (IEEE, 2021).

Yang, T. et al. HGAT: heterogeneous graph attention networks for semi-supervised short text classification. ACM Trans. Inf. Syst. (TOIS) 39(3), 1–29 (2021).

Wang, H. & Li, F. A text classification method based on LSTM and graph attention network. Connect. Sci. 34(1), 2466–2480 (2022).

Choudhary, A., & Arora, A. GIN-FND: Leveraging users’ preferences for graph isomorphic network driven fake news detection. Multimedia Tools Appl. 2023, 1–27 (2023).

Wang, Z. et al. Dynamic multi-task graph isomorphism network for classification of alzheimer’s disease. Appl. Sci. 13(14), 8433 (2023).

Pham, H. V., Thanh, D. H. & Moore, P. Hierarchical pooling in graph neural networks to enhance classification performance in large datasets. Sensors 21(18), 6070 (2021).

Yu, Z., & Gao, H. Molecular representation learning via heterogeneous motif graph neural networks. In International Conference on Machine Learning 25581–25594 (PMLR, 2022).

Zhang, Y., Meng, Y., Huang, J., Xu, F. F., Wang, X., & Han, J. Minimally supervised categorization of text with metadata. In Proceedings of the 43rd International ACM SIGIR Conference on Research and Development in Information Retrieval 1231–1240 (2020).

Huang, J., Tao, N., Chen, H., Deng, Q., Wang, W., & Wang, J. Semi-supervised text classification based on graph attention neural networks. In 2021 4th International Conference on Artificial Intelligence and Big Data (ICAIBD) 325–330 (IEEE, 2021).

Zhang, C., Song, D., Huang, C., Swami, A., & Chawla, N. V. Heterogeneous graph neural network. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining 793–803 (2019).

Li, C., Wang, Z., Zhao, Z., Duan, H. & Zeng, Q. HGNN-ETA: Heterogeneous graph neural network enriched with text attribute. World Wide Web 26(4), 1913–1934 (2023).

You, R., Zhang, Z., Dai, S., & Zhu, S. HAXMLNet: Hierarchical attention network for extreme multi-label text classification. arXiv preprint arXiv:1904.12578 (2019).

Sun, S., Sun, Q., Zhou, K., & Lv, T. Hierarchical attention prototypical networks for few-shot text classification. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP) 476–485 (2019).

Wang, Y., Wang, S., Yao, Q., & Dou, D. Hierarchical heterogeneous graph representation learning for short text classification. arXiv preprint arXiv:2111.00180 (2021).

Xu, X. et al. Short text classification based on hierarchical heterogeneous graph and LDA fusion. Electronics 12(12), 2560 (2023).

Li, Y., Tarlow, D., Brockschmidt, M., & Zemel, R. Gated graph sequence neural networks. arXiv preprint arXiv:1511.05493 (2015).

Zhang, Z., & Hawkins, C. Variational Bayesian inference for robust streaming tensor factorization and completion. In 2018 IEEE International Conference on Data Mining (ICDM) 1446–1451 (IEEE, 2018).

Fang, S., Zhe, S., Lee, K. C., Zhang, K., & Neville, J. Online bayesian sparse learning with spike and slab priors. In 2020 IEEE International Conference on Data Mining (ICDM) 142–151 (IEEE, 2020).

Vapnik, V. N. An overview of statistical learning theory. IEEE Trans. Neural Netw. 10(5), 988–999 (1999).

Tong, S., & Koller, D. Support vector machine active learning with applications to text classification. J. Mach. Learn. Res. 2, 45–66 (2001).

Peng, F., & Schuurmans, D. Combining naive Bayes and n-gram language models for text classification. In European Conference on Information Retrieval 335–350 (Springer, 2003).

Farhoodi, M., Yari, A., & Sayah, A. N-gram based text classification for Persian newspaper corpus. In The 7th International Conference on Digital Content, Multimedia Technology and its Applications 55–59 (IEEE, 2011).

Lilleberg, J., Zhu, Y., & Zhang, Y. Support vector machines and word2vec for text classification with semantic features. In 2015 IEEE 14th International Conference on Cognitive Informatics & Cognitive Computing (ICCI* CC) 136–140 (IEEE, 2015).

Jang, B., Kim, M., Harerimana, G., Kang, S. U. & Kim, J. W. Bi-LSTM model to increase accuracy in text classification: combining Word2vec CNN and attention mechanism. Appl. Sci. 10(17), 5841 (2020).

Dharma, E. M., Gaol, F. L., Warnars, H. L. H. S. & Soewito, B. The accuracy comparison among word2vec, glove, and fasttext towards convolution neural network (cnn) text classification. J. Theor. Appl. Inf. Technol. 100(2), 31 (2022).

Wang, D., Liu, P., Zheng, Y., Qiu, X., & Huang, X. Heterogeneous graph neural networks for extractive document summarization. arXiv preprint arXiv:2004.12393 (2020).

Yun-tao, Z., Ling, G. & Yong-cheng, W. An improved TF-IDF approach for text classification. J. Zhejiang Univ.-Sci. A 6, 49–55 (2005).

Wang, S., Huang, M., & Deng, Z. Densely connected CNN with multi-scale feature attention for text classification. In IJCAI. vol. 18 4468–4474 (2018).

Li, X., Qian, B., Wei, J., Li, A., Liu, X., & Zheng, Q. Classify EEG and reveal latent graph structure with spatio-temporal graph convolutional neural network. In 2019 IEEE International Conference on Data Mining (ICDM) 389–398 (IEEE, 2019).

Das, M., Pratama, M., Savitri, S., & Zhang, J. Muse-rnn: a multilayer self-evolving recurrent neural network for data stream classification. In 2019 IEEE International Conference on Data Mining (ICDM) 110–119 (IEEE, 2019).

Liu, P., Qiu, X., & Huang, X. Recurrent neural network for text classification with multi-task learning. arXiv preprint arXiv:1605.05101 (2016).

Sun, C., Qiu, X., Xu, Y., & Huang, X. How to fine-tune bert for text classification?. In Chinese Computational Linguistics: 18th China National Conference, CCL 2019, Kunming, China, October 18–20, 2019, Proceedings 18 194–206 (Springer International Publishing, 2019).

González-Carvajal, S., & Garrido-Merchán, E. C. Comparing BERT against traditional machine learning text classification. arXiv preprint arXiv:2005.13012 (2020).

Garg, S., & Ramakrishnan, G. Bae: Bert-based adversarial examples for text classification. arXiv preprint arXiv:2004.01970 (2020).

Balkus, S. V., & Yan, D. Improving short text classification with augmented data using GPT-3. Natural Lang. Eng. 2022, 1–30 (2022).

Zhang, R., Wang, Y. S., & Yang, Y. Generation-driven contrastive self-training for zero-shot text classification with instruction-tuned GPT. arXiv preprint arXiv:2304.11872 (2023).

Xiao, L., Wu, X. & Yang, S. Cross-modal fine-grained alignment and fusion network for multimodal aspect-based sentiment analysis. Inf. Process. Manage. 60, 6 (2023).

Liu, H., Li, X. & Lu, W. Graph augmentation networks based on dynamic sentiment knowledge and static external knowledge graphs for aspect-based sentiment analysis. Expert Syst. Appl. (2024).

Xiao, L., Wu, X. & Wu, W. Multi-channel attentive graph convolutional network with sentiment fusion for multimodal sentiment analysis. In ICASSP 2022–2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) (2022).

Daqi, G. & Yan, J. Classification methodologies of multilayer perceptrons with sigmoid activation functions. Pattern Recogn. 38(10), 1469–1482 (2005).

Jiang, M. et al. Text classification based on deep belief network and softmax regression. Neural Comput. Appl. 29, 61–70 (2018).

Agarap, A. F. Deep learning using rectified linear units (relu). arXiv preprint arXiv:1803.08375 (2018).

Mastromichalakis, S. ALReLU: A different approach on Leaky ReLU activation function to improve Neural Networks Performance. arXiv preprint arXiv:2012.07564 (2020).

Liu, Y., Ma, J., Tao, Y., Shi, L., Wei, L., & Li, L. Hybrid neural network text classification combining tcn and gru. In 2020 IEEE 23rd International Conference on Computational Science and Engineering (CSE) 30–35 (IEEE, 2020).

Devi, T., & Deepa, N. A novel intervention method for aspect-based emotion Using Exponential Linear Unit (ELU) activation function in a Deep Neural Network. In 2021 5th International Conference on Intelligent Computing and Control Systems (ICICCS) 1671–1675 (IEEE, 2021).

Zulqarnain, M., Ghazali, R., Hassim, Y. M. M. & Aamir, M. An enhanced gated recurrent unit with auto-encoder for solving text classification problems. Arab. J. Sci. Eng. 46(9), 8953–8967 (2021).

Zulqarnain, M., Ghazali, R., Ghouse, M. G., & Mushtaq, M. F. Efficient processing of GRU based on word embedding for text classification. Int. J. Inf. Visualiz. 3(4), 377–383 (2019).

Zhou, C., Sun, C., Liu, Z., & Lau, F. A C-LSTM neural network for text classification. arXiv preprint arXiv:1511.08630 (2015).

Li, X., Li, Z., Xie, H., & Li, Q. Merging statistical feature via adaptive gate for improved text classification. In Proceedings of the AAAI Conference on Artificial Intelligence, vol. 35 13288–13296 (2021).

Mercioni, M. A., & Holban, S. P-swish: activation function with learnable parameters based on swish activation function in deep learning. In 2020 International Symposium on Electronics and Telecommunications (ISETC) 1–4 (IEEE, 2020).

Xuyang, G., Junyang, Y., & Shuwei, X. Text classification study based on graph convolutional neural networks. In 2021 International Conference on Internet, Education and Information Technology (IEIT) 102–105 (IEEE, 2021).

Nguyen, T. T., Wilson, C., & Dalins, J. Fine-tuning llama 2 large language models for detecting online sexual predatory chats and abusive texts. arXiv preprint arXiv:2308.14683 (2023).

Tan, Z. et al. Dynamic embedding projection-gated convolutional neural networks for text classification. IEEE Trans. Neural Netw. Learn. Syst. 33(3), 973–982 (2021).

Tan, Y., & Wang, J. Word order is considerable: Contextual position-aware graph neural network for text classification. In International Joint Conference on Neural Networks (IJCNN) 1–8 (Padua, Italy, 2022). https://doi.org/10.1109/IJCNN55064.2022.9891895.

Huang, Y.-H., Chen, Y.-H., & Chen, Y.-S. ConTextING: granting document-wise contextual embeddings to graph neural networks for inductive text classification. In Proceedings of the 29th international conference on computational linguistics (2022).

Joulin, A. et al. Bag of tricks for efficient text classification. arXiv preprint arXiv:1607.01759 (2016).

Ai, W. et al. A multi-semantic passing framework for semi-supervised long text classification. Appl. Intell. 53(17), 20174–20190 (2023).

Author information

Contributions

Pengyi Li†: Formal analysis, Investigation, Methodology, Validation, Experimentation, Code, Visualization, Writing-original draft, Writing-review. Xueying Fu†: Conceptualization, Investigation, Methodology, Data collection, Experimentation, Code, Validation, Visualization, Writing-original draft. Juntao Chen*: Methodology, Formal analysis, Software, Visualization, Experimentation, Writing-original draft, Writing-review, Supervision. Junyi Hu: Formal analysis, Data collection, Writing-original draft. † Denotes co-first authors with equal contribution and no specific order.

Ethics declarations

Competing interests

The authors have no competing interests to declare that are relevant to the content of this article.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Li, P., Fu, X., Chen, J. et al. CoGraphNet for enhanced text classification using word-sentence heterogeneous graph representations and improved interpretability. Sci Rep 15, 356 (2025). https://doi.org/10.1038/s41598-024-83535-9

DOI: https://doi.org/10.1038/s41598-024-83535-9
