Abstract

This study explored the attitudes of healthcare professionals and the public toward applying ChatGPT in clinical practice. The successful application of ChatGPT in clinical practice depends not only on technical performance but also, critically, on the attitudes and perceptions of healthcare professionals and the public. The study used a qualitative design supported by artificial intelligence methods and proceeded in five steps: data collection, data cleaning, validation of relevance, sentiment analysis, and content analysis using the K-means algorithm. The dataset comprised 3,130 comments amounting to 1,593,650 words. The dictionary method identified positive and negative emotions, namely good, happy, surprise, anger, disgust, fear, and sadness. Healthcare professionals prioritized ChatGPT’s efficiency but raised ethical and accountability concerns, while the public valued its accessibility and emotional support but expressed worries about privacy and misinformation. Bridging these perspectives by improving reliability, safeguarding privacy, and clearly defining ChatGPT’s role is essential for its practical and ethical integration into clinical practice.

Introduction

Chat Generative Pre-trained Transformer (ChatGPT) is a natural language processing (NLP) tool based on a large-scale pre-trained language model developed by OpenAI1. It is built on the Transformer architecture, which enables it to generate, comprehend, translate, and interpret human language. With the advancement of AI technology, ChatGPT’s potential in the medical field has gradually emerged, especially in health education, disease prevention, and patient management2,3,4,5. In clinical practice, ChatGPT can aid with medical history collection, electronic medical record documentation, clinical decision support, patient health management, medical education, clinical research, and scientific writing3,5.

While ChatGPT exhibits proficiency in data processing and information production, its acceptance by healthcare professionals and the public will significantly influence its widespread application in clinical practice6,7. Healthcare professionals may have concerns about the reliability of the technology in decision-making, particularly in intricate or urgent care situations where dependence on ChatGPT-generated recommendations may increase clinical risk8. Likewise, patient trust is crucial: patients’ willingness to allow ChatGPT into the medical process will depend on their trust in the technology, the safeguarding of data privacy, and the satisfaction of their emotional needs9. For many patients, ChatGPT-generated advice may lack a human touch, particularly in emotionally sensitive healthcare situations.

Therefore, studying the attitudes of healthcare professionals and the public toward the clinical application of ChatGPT may elucidate potential barriers and facilitators. Comprehending these attitudes enables the formulation of effective methods for integrating ChatGPT into clinical practice, ultimately improving healthcare service efficiency and optimizing the medical experience.

Background

The successful application of ChatGPT in clinical practice depends not only on technical performance but also, critically, on the attitudes and perceptions of healthcare professionals and non-healthcare users6.

The attitudes of non-healthcare users, as direct beneficiaries of medical services, will significantly affect the application of ChatGPT in clinical practice10. Researchers have concluded that non-healthcare users’ willingness to use ChatGPT is associated with its expected benefits. Positive sentiments were reflected in younger patients’ willingness to use new technology, particularly for medical information and self-health management; they saw ChatGPT as a source of immediate and convenient advice11. Nevertheless, other research indicates unfavorable attitudes, with patients showing heightened apprehension about the trustworthiness of AI-generated information, particularly concerning privacy protection and data security2,9. Moreover, the standardized recommendations currently produced by ChatGPT may lack a human touch, which affects non-healthcare stakeholders’ participation in healthcare decision-making9. Therefore, understanding non-healthcare users’ attitudes toward applying ChatGPT in clinical practice can help ensure the adoption of this technology.

At the same time, the attitudes of healthcare professionals, as providers of medical services, determine whether ChatGPT can be effectively incorporated into daily clinical work. Positive sentiments are reflected in healthcare professionals’ belief that ChatGPT can significantly improve efficiency in paperwork processing and reduce workload5,7,12,13. However, many healthcare professionals are skeptical of its role in intricate clinical decision-making7,8. Some physicians worry that relying on ChatGPT may weaken their professional judgment and even increase the risk of misdiagnosis. Moreover, ethical issues and the division of responsibility are essential concerns for healthcare professionals, especially in cases of medical error, where ChatGPT may obscure the assignment of accountability5,7,14. Therefore, studying healthcare professionals’ acceptance, concerns, and ethical considerations regarding ChatGPT can help ensure its safe and practical application in clinical environments.

The Internet serves as the primary channel for rapid access to and dissemination of information about ChatGPT, and the Internet community follows the development of ChatGPT most closely. ChatGPT, a hot topic on the Chinese Internet, has attracted extensive attention from healthcare professionals and non-healthcare users since its emergence15. As an open platform for discussion, the Internet enables both groups to quickly access and share experiences and opinions about using ChatGPT, including its advantages and disadvantages in clinical practice. However, current research has yet to explore the attitudes of the Internet community toward the application of ChatGPT in clinical practice, particularly from the separate perspectives of healthcare professionals and non-healthcare users. Therefore, this study analyzes the attitudes of healthcare professionals and non-healthcare users toward using ChatGPT in clinical practice based on Internet data to provide strategic recommendations for the clinical dissemination of ChatGPT.

Methods

Data collection

This study used keyword searches covering the period from November 30, 2022, to September 1, 2024. The keywords were (“CHATGPT” OR “AI”) AND (“Doctor” OR “Nurse” OR “Health” OR “Medical” OR “Medical” OR “Diagnosis” OR “Treatment”) AND (“Intelligent medical” OR “AI consulting” OR “Medical technology innovation” OR “AI-assisted health management”). The social media platforms searched were Zhihu, Weibo, and Red Book; the medical forums were Ding Xiang Yuan and Ai Medicine. The information collected included posting time, user type, text content, number of likes, etc. User type was coded as non-healthcare, health professional, or unknown. Non-healthcare users were further divided into self, family/friend, celebrity, and media organization. Finally, the text from non-healthcare users was treated as public opinion and the text from health professionals as health professional opinion.

Data cleaning

Data cleaning was used to remove irrelevant information and proceeded in four steps: removing irrelevant information, text normalization, language detection and filtering, and handling private information. Firstly, irrelevant information was removed and data redundancy reduced to avoid interfering with the analysis results; this included removing blatant advertisements or spam comments and deleting duplicate comment content. Secondly, text was normalized to ensure consistency and accuracy for data analysis. This part included six operations: case conversion, removal of punctuation, special characters and emoticons, correction of spelling errors in comments, handling of spaces and acronyms, and removal of URLs. Thirdly, language detection and filtering: content in languages other than English and Chinese was detected and filtered out. Fourthly, handling private information: sensitive information (e.g., phone numbers, email addresses, etc.) was deleted or anonymized to protect people’s privacy.
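The normalization and privacy steps above can be illustrated with a minimal sketch. It is not the authors' pipeline: the regular expressions, the `[EMAIL]` and `[PHONE]` placeholders, and the function names `clean_comment` and `deduplicate` are assumptions chosen for illustration, and spelling correction, acronym handling, and language filtering are left out.

```python
import re
import unicodedata

# Illustrative patterns only (assumptions); real platforms need tailored rules.
URL_RE = re.compile(r"https?://\S+|www\.\S+")
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
# Crude phone pattern: it can also catch other long digit runs such as dates.
PHONE_RE = re.compile(r"(?<!\d)\+?\d[\d\s-]{6,}\d(?!\d)")


def clean_comment(text: str) -> str:
    """Normalize one comment: case, URLs, private data, punctuation, spaces."""
    text = unicodedata.normalize("NFKC", text).lower()
    text = URL_RE.sub(" ", text)
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = PHONE_RE.sub("[PHONE]", text)
    # Drop punctuation, special characters, and emoji; keep letters, digits,
    # CJK characters, whitespace, and the placeholder brackets.
    text = re.sub(r"[^\w\s\[\]]", " ", text)
    return re.sub(r"\s+", " ", text).strip()


def deduplicate(comments):
    """Clean every comment and keep the first occurrence of each text."""
    seen, unique = set(), []
    for comment in comments:
        cleaned = clean_comment(comment)
        if cleaned and cleaned not in seen:
            seen.add(cleaned)
            unique.append(cleaned)
    return unique


if __name__ == "__main__":
    print(deduplicate(["ChatGPT is GREAT!!! 😀", "chatgpt is great"]))
```

Anonymizing with placeholders rather than deleting keeps the sentence structure intact for later segmentation; deleting the match outright is an equally valid reading of the step.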

Validation of relevance

To ensure rigor in data cleansing when screening comments on ChatGPT across medical and non-medical topics, a two-person check was used. Two researchers independently examined the data for relevance and usability, targeting common problems such as hidden advertising, misinformation, and irrelevant content. First, the two researchers sifted through the original data set to find irrelevant or low-quality data, including advertising content, exaggerated misinformation, and repetitive or meaningless content. They then reviewed each other’s labeled data, recorded and discussed the items on which they differed, and reached a consensus to reduce subjective bias. New types of issues identified during the review were used to update the data cleansing rules dynamically, further enhancing the accuracy and credibility of the data analysis.

Sentiment analysis

Selection of the sentiment dictionary

The sentiment dictionary of the Dalian University of Technology was used. For each word, this dictionary records the part of speech, emotion category, intensity, and polarity, and it adapts and extends Ekman’s emotion classification system for Chinese16,17,18.

Text segmentation

This part is divided into three steps: Chinese word segmentation, handling synonyms, and creating customized medical word lists. Chinese word segmentation: Jieba, a reliable tool, was used to split the cleaned text into individual words. The part-of-speech information (e.g., nouns, verbs, adjectives, etc.) in the segmentation result was retained for matching emotion words. Handling near-synonyms: a thesaurus was used to replace or tag synonyms to improve vocabulary matching. Creating customized medical word lists: domain-specific word lists were added to improve the accuracy of sentiment analysis.
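To show how a customized word list and a thesaurus interact with segmentation, the sketch below uses a simple forward maximum matching segmenter as a stand-in. This is a swapped-in technique, not Jieba and not the study's implementation; the vocabulary, the synonym map, and the function names `segment` and `normalize_synonyms` are hypothetical.

```python
def segment(text, vocab, max_len=6):
    """Forward maximum matching: take the longest vocabulary word at each
    position and fall back to a single character when nothing matches."""
    tokens, i = [], 0
    while i < len(text):
        for size in range(min(max_len, len(text) - i), 0, -1):
            piece = text[i:i + size]
            if size == 1 or piece in vocab:
                tokens.append(piece)
                i += size
                break
    return tokens


def normalize_synonyms(tokens, synonyms):
    """Map each near-synonym to one canonical form before dictionary lookup."""
    return [synonyms.get(token, token) for token in tokens]


# Hypothetical customized medical word list and thesaurus (assumptions).
MEDICAL_VOCAB = {"人工智能", "诊断", "医生", "大夫"}
SYNONYMS = {"大夫": "医生"}

if __name__ == "__main__":
    tokens = segment("人工智能诊断", MEDICAL_VOCAB)
    print(normalize_synonyms(tokens, SYNONYMS))
```

Without the entry for 人工智能 in the word list, the segmenter would split the term into single characters, which is the kind of error a customized medical word list is meant to prevent.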

Vocabulary matching

This step includes matching sentiment vocabulary, sentiment annotation, and contextual information. First, the segmented text was matched against a predefined sentiment dictionary adapted from the Dalian University of Technology lexicon. Identified words were assigned an emotion category, polarity, and intensity. Second, sentiment words were annotated within the text, linking them to specific emotion categories to facilitate quantification and detailed analysis. Finally, contextual adjustments were made to refine sentiment polarity and intensity, accounting for factors such as negations or amplifiers. For instance, positive words preceded by negations were reassigned to negative polarity, ensuring the accurate representation of emotional nuances. This systematic approach ensured robust and precise sentiment detection, forming the basis for subsequent analysis.
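The matching and contextual adjustment described above can be sketched as follows. The lexicon entries, the negation and amplifier lists, the two-token look-back window, and the name `match_sentiment` are all assumptions made for illustration; English tokens are used only for readability.

```python
# Hypothetical lexicon: word -> (emotion category, polarity, intensity).
LEXICON = {
    "helpful": ("good", 1, 5),
    "relieved": ("happy", 1, 7),
    "misleading": ("anger", -1, 7),
    "risky": ("fear", -1, 5),
}
NEGATIONS = {"not", "never", "no"}            # assumed negation words
AMPLIFIERS = {"very": 1.5, "extremely": 2.0}  # assumed multipliers


def match_sentiment(tokens, window=2):
    """Return (word, category, polarity, weight) for each lexicon hit.

    A negation within `window` tokens before the hit flips its polarity,
    and an amplifier in the same window scales its intensity.
    """
    hits = []
    for i, token in enumerate(tokens):
        if token not in LEXICON:
            continue
        category, polarity, intensity = LEXICON[token]
        weight = float(intensity)
        for previous in tokens[max(0, i - window):i]:
            if previous in NEGATIONS:
                polarity = -polarity
            elif previous in AMPLIFIERS:
                weight *= AMPLIFIERS[previous]
        hits.append((token, category, polarity, weight))
    return hits


if __name__ == "__main__":
    print(match_sentiment(["chatgpt", "is", "not", "very", "helpful"]))
```

A fixed look-back window is the simplest possible context rule; it will misfire on longer constructions, so any real use would need checking against manually annotated comments.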

Emotion scoring method

This study used a combined weighting and counting approach to compute the overall sentiment score of each comment. Firstly, sentiment words identified in the text were assigned intensity values based on the sentiment dictionary; positive words contributed to a positive score, and negative words contributed to a negative score. Secondly, the overall sentiment score was calculated by subtracting the total negative score from the total positive score. Finally, the overall sentiment scores were standardized to a scale (−1 to 1) to ensure consistency and facilitate comparisons. This transformation helped align the results with practical application needs and allowed a more straightforward interpretation of sentiment polarity and intensity.
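A minimal sketch of this scoring rule is given below. The text does not state how scores were standardized, so dividing by the total intensity of all matched words is an assumption, as are the tuple layout of the hits and the name `comment_score`.

```python
def comment_score(hits):
    """Score one comment from (word, category, polarity, weight) hits.

    The raw score is total positive weight minus total negative weight.
    Dividing by the total weight (an assumed standardization) bounds the
    result to the range -1 to 1; a comment with no hits scores 0.
    """
    positive = sum(weight for _, _, polarity, weight in hits if polarity > 0)
    negative = sum(weight for _, _, polarity, weight in hits if polarity < 0)
    total = positive + negative
    if total == 0:
        return 0.0
    return (positive - negative) / total


if __name__ == "__main__":
    example = [("helpful", "good", 1, 5.0), ("misleading", "anger", -1, 3.0)]
    print(comment_score(example))  # (5 - 3) / (5 + 3) = 0.25
```

Other standardizations, such as min-max scaling across the whole corpus, would also map scores to the same range but would make each comment's score depend on the rest of the dataset.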

Testing and optimization

A two-stage validation process was implemented to ensure the reliability and accuracy of sentiment analysis. Firstly, manual annotation: two trained annotators independently labeled a subset of the data with sentiment categories. Annotation consistency was calculated using inter-annotator agreement measures to assess the reliability of the manual labels. Secondly, comparison with automated analysis: the results of the automated sentiment analysis were compared against the manually annotated data. Performance was evaluated using precision, recall, and the F1 score, which balances precision and recall. The study achieved an F1 score of 76, indicating that the automated sentiment analysis met the required standard for accuracy. This iterative process ensured that the sentiment analysis was robust, reliable, and reflective of the original text data.
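Both validation stages can be illustrated with a short sketch. The text does not name the agreement measure, so Cohen's kappa is an assumption here; the labels and the function names are likewise illustrative.

```python
from collections import Counter


def cohen_kappa(labels_a, labels_b):
    """Agreement between two annotators, corrected for chance agreement."""
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    count_a, count_b = Counter(labels_a), Counter(labels_b)
    expected = sum(count_a[label] * count_b[label]
                   for label in count_a.keys() | count_b.keys()) / (n * n)
    if expected == 1:
        return 1.0
    return (observed - expected) / (1 - expected)


def precision_recall_f1(gold, predicted, positive):
    """Precision, recall, and F1 for one class against manual gold labels."""
    pairs = list(zip(gold, predicted))
    tp = sum(g == positive and p == positive for g, p in pairs)
    fp = sum(g != positive and p == positive for g, p in pairs)
    fn = sum(g == positive and p != positive for g, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return precision, recall, f1


if __name__ == "__main__":
    gold = ["pos", "pos", "neg", "neg"]
    auto = ["pos", "neg", "neg", "neg"]
    print(cohen_kappa(gold, auto), precision_recall_f1(gold, auto, "pos"))
```

With several emotion categories, the per-class scores would still need to be averaged, and whether that average is macro or micro is a further choice the text leaves open.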

Content analysis using K-means algorithm

We conducted content analysis using the K-means algorithm to delve deeper into the underlying themes and patterns within the textual data. This method, a widely recognized unsupervised machine learning technique, is particularly effective in identifying clusters within large datasets, enabling the extraction of insightful patterns. To ensure the reliability and applicability of this method in our research, we followed a rigorous, step-by-step process validated through prior optimization efforts19. The structured application of the K-means algorithm was divided into four steps.

Step 1: Initialization: The initial stage involves establishing cluster centers to initiate the clustering process. Specifically, K data points were randomly selected from the dataset as initial centroids. The value of K, representing the number of clusters, was determined through a combination of preliminary analysis and domain-specific knowledge. In certain instances, multiple random initializations were performed to enhance robustness and ensure reliable clustering results.

Step 2: Iterative optimization: The refinement of cluster centers was achieved through an iterative optimization process designed to minimize variance within clusters. This step unfolded in three phases. Firstly, assigning data points to clusters: for each data point, distances to the K centroids were calculated (using Euclidean distance as the standard), and each data point was then assigned to the cluster with the nearest centroid. Secondly, updating cluster centers: after the assignment, the mean of each cluster’s data points was calculated to redefine its centroid. These updated centroids ensured that cluster centers accurately reflected their data points. Thirdly, reassignment and update: this process of assigning data points to clusters and recalculating centroids was repeated iteratively until the clusters stabilized.

Step 3: Termination criteria: The iterative process was concluded when the cluster centers displayed minimal or no significant changes between iterations, signaling convergence. A maximum iteration threshold was also applied to ensure computational efficiency, preventing excessive computation in scenarios where centroids continued to adjust slightly between iterations.

Step 4: Evaluation and interpretation: The clusters were evaluated for quality and relevance using the elbow method, which determines the optimal number of clusters by assessing within-cluster variance against the number of clusters. Each cluster was then analyzed for prevalent topics, including frequently occurring terms and sentiment scores. The clusters were validated against known categories and expected patterns, providing a robust foundation for interpreting the results.
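The four steps can be sketched in plain Python as below. This is a minimal illustration under stated assumptions, not the study's implementation: the toy two-dimensional points stand in for text feature vectors (the text does not describe how comments were vectorized), and the tolerance, iteration cap, seed, and function names are assumed.

```python
import random


def sq_dist(a, b):
    """Squared Euclidean distance between two points."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def assign(points, centroids):
    """Step 2a: index of the nearest centroid for every point."""
    return [min(range(len(centroids)), key=lambda c: sq_dist(p, centroids[c]))
            for p in points]


def kmeans(points, k, max_iter=100, tol=1e-9, seed=0):
    rng = random.Random(seed)
    # Step 1: pick K data points at random as the initial centroids.
    centroids = [list(p) for p in rng.sample(points, k)]
    for _ in range(max_iter):  # Step 3: cap on the number of iterations
        labels = assign(points, centroids)
        updated = []
        for c in range(k):  # Step 2b: move each centroid to its cluster mean
            members = [p for p, lab in zip(points, labels) if lab == c]
            updated.append([sum(dim) / len(members) for dim in zip(*members)]
                           if members else centroids[c])
        shift = max(sq_dist(old, new) for old, new in zip(centroids, updated))
        centroids = updated
        if shift <= tol:  # Step 3: centroids have stopped moving
            break
    labels = assign(points, centroids)
    # Within-cluster sum of squares, the quantity plotted by the elbow method.
    inertia = sum(sq_dist(p, centroids[lab]) for p, lab in zip(points, labels))
    return centroids, labels, inertia


def elbow_curve(points, k_values, seed=0):
    """Step 4: within-cluster variance for each candidate K."""
    return {k: kmeans(points, k, seed=seed)[2] for k in k_values}


if __name__ == "__main__":
    data = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
    print(elbow_curve(data, [1, 2, 3]))
```

On this toy data the within-cluster variance drops sharply from K = 1 to K = 2 and barely changes afterwards, which is the bend the elbow method looks for. Rerunning `kmeans` with several seeds and keeping the run with the lowest variance corresponds to the multiple random initializations mentioned in Step 1.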

Results

This study comprised 3,130 comments, amounting to 1,593,650 words. Non-healthcare professionals contributed 1,856 comments, 92% of which came from the self and family/friend subtypes; healthcare professionals contributed 1,120 comments, and unknown users 154. By year, there were 494 comments for 2022, 1,596 for 2023, and 1,040 for 2024. Tables 1 and 2 present the results of sentiment analysis and content analysis.

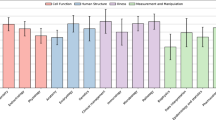

Non-healthcare professionals expressed seven emotions about the use of ChatGPT in clinical practice. Good emotion included anticipations of tangible outcomes, optimization consulting, and trust in potential results. Happy emotions included emotional support, relief of anxiety, and positive acceptance of feelings. Surprise emotions included exceeding expected results, delivery of information, and curiosity about potential applications. Anger emotion included misapplication, therapeutic misinformation, and ethical issues. Disgust emotions included privacy and data risks, questioning accuracy, over-reliance, and complexity. Fear emotion included unknowns, skepticism about responsiveness, and negative medical experiences. Sad emotions included failure to meet therapeutic expectations, failure to alleviate pain, and loneliness.

Healthcare professionals expressed seven emotions about the use of ChatGPT in clinical practice. Good emotion included professionalism, innovative development, and efficiency and accuracy expectations. Happy emotions included application satisfaction and workload relief. Surprise emotions included cautiousness of popularization and innovative trends. Anger emotion included limitations, misinformation regarding therapy, and patient over-reliance. Disgust emotion included reliability in the professional field, ethical and legal risk, and job-loss risk. Fear emotion included a crisis of trust, losing control, and questioning processing capability. Sad emotions included self-blame and powerlessness in the face of technological innovations.

Discussion

This study is the first to explore the sentiments of healthcare and non-healthcare professionals toward implementing ChatGPT in clinical practice and to provide feasible recommendations for AI-assisted clinical practice. This study extracted Good, Happy, Surprise, Anger, Disgust, Fear, and Sad emotions. Healthcare and non-healthcare professionals share concerns about misinformation, over-reliance, trust, expectations of actual results, and possible risks. In particular, healthcare professionals were more concerned about the changes and potential risks that ChatGPT brings to medical work patterns. Non-healthcare professionals were ambivalent, caught between their expectations and actual application, reflecting this group’s perceived need for wider use of ChatGPT in clinical practice.

Sentiment analysis of non-healthcare professionals

Good emotion included anticipations of tangible outcomes, optimization consulting, and trust in potential results. Firstly, the result for anticipation of tangible outcomes is consistent with Platt and Avishek’s findings20,21. Non-healthcare professionals require ChatGPT to deliver accurate and credible medical diagnoses, health information, and further support. Consequently, ChatGPT must be refined to guarantee the precision of its medical information while explaining intricate terminology. Furthermore, its medical knowledge repository should be updated consistently to guarantee alignment with contemporary medical standards. Secondly, the result for optimization consulting is consistent with Cheng, Shang, Scherr, and Alanzi’s findings22,23,24,25. Non-healthcare professionals seek prompt and comprehensive feedback from ChatGPT, particularly in urgent circumstances. This expectation is closely related to their time costs and health status. Therefore, this study suggests that ChatGPT should have real-time response capability to shorten patients’ waiting time and meet urgent health needs. Thirdly, the result for trust in potential results is consistent with Choudhury, Platt, Avishek, and Razdan’s findings20,21,26,27. Non-healthcare professionals’ intention to use ChatGPT strengthens their trust in it, and, conversely, good experiences such as successful medical treatment or health recovery increase that trust. However, this finding is inconsistent with that of Shinnosuke Ikeda, who reported that the selection of outcomes based on expert and AI recommendations is not associated with faith in AI. Consequently, ChatGPT must deliver an optimal user experience and implement a trust mechanism in actual applications.

Happy emotions included emotional support, relief of anxiety, and positive acceptance of feelings. Firstly, the result for emotional support is consistent with Zheng, Sharma, and Alanezi’s findings28,29,30. Non-healthcare professionals expect ChatGPT to contribute to treatment outcomes or health recovery, anticipating that the technology will help alleviate their ailments or identify the appropriate therapy pathway. Consequently, ChatGPT-assisted therapy should be supplemented with human support and psychological assistance for the patient. Secondly, the result for anxiety relief is consistent with Alanezi and Farhat’s findings28,31. Non-healthcare professionals depend on ChatGPT to alleviate tension and promote relaxation when confronted with ambiguous medical concerns. This study proposes that ChatGPT should assist patients in stress management by suggesting resources that alleviate emotional distress. Thirdly, the result for positive acceptance is consistent with Alanezi and Morita’s findings28,32. Non-healthcare professionals are very receptive to new technologies and show considerable motivation and enthusiasm for applying ChatGPT, indicating their aspiration to improve their medical experience through innovative solutions. Consequently, the promotion of ChatGPT should emphasize innovation and practicality while showcasing personalized and intelligent features to meet patients’ expectations of emerging technologies.

Surprise emotions included exceeding expected results, delivery of information, and curiosity about potential applications. Firstly, exceeding expected results is consistent with Platt and Deiana’s findings21,33. Non-healthcare professionals frequently empathize with ChatGPT’s performance in medical applications, particularly when the outcomes surpass their expectations. Consequently, we advocate for gradually familiarizing the public with the application domains and functionalities of ChatGPT. Secondly, information delivery is consistent with Alanezi’s findings28. Non-healthcare professionals may view ChatGPT as markedly distinct from conventional methods of delivering medical information. Consequently, we recommend that ChatGPT implement a more participatory and visual methodology for information dissemination, thereby improving comprehension and involvement through diagrams and sequential instructions. Thirdly, curiosity about potential applications is consistent with Elisabetta, Choudhury, and Lee’s findings26,34,35. Non-healthcare professionals are keenly interested in ChatGPT’s functionalities within medical scenarios, especially since ChatGPT can solve complex medical problems. Therefore, the design process of ChatGPT should emphasize its proficiency in addressing intricate medical issues and encourage patients to engage with the technology by presenting real-world instances of interdisciplinary collaboration, disease forecasting, and the formulation of personalized treatment plans.

Anger emotion included misapplication, misinformation, and ethical issues. Firstly, anger about misapplication originated from discontent or technical faults during the consultation process and in the outcomes delivered by ChatGPT, particularly when expectations were high. This concept has not been reported in previous studies, possibly because the ChatGPT techniques employed had already passed ethical audits or because researchers chose not to disclose such problems. Consequently, we propose that a stringent trial design is necessary when using ChatGPT. Secondly, the misinformation result is consistent with Alanezi, Deiana, Kahambing, De Angelis, Park, Morita, and Li’s findings14,28,32,33,36,37,38. The accuracy of the diagnostic and treatment information provided by ChatGPT may directly affect patient health. This concern arises from patients’ heightened health sensitivity and significant dependence on the precision of information. It is advisable to strengthen the monitoring of information accuracy through a multi-tiered medical data validation system, updates of the medical knowledge repository, and expert audits. In addition, self-correction and disclaimer mechanisms can enhance patient vigilance. Thirdly, the ethical issues result is consistent with Platt and Alanezi’s findings21,28. Non-healthcare professionals are apprehensive that the use of ChatGPT may intensify societal issues, particularly potential inequitable or discriminatory treatment. To mitigate ethical concerns, the design of ChatGPT must adhere to the ideals of equity and transparency. This involves opening the decision-making process, incorporating ethical reviews and patient feedback systems, and continuously identifying and mitigating ethical risks to achieve equity and inclusivity.

Disgust emotions included privacy and data risks, questioning accuracy, over-reliance, and complexity. Firstly, the complexity result has not been mentioned in previous studies. ChatGPT’s usage restrictions in China and the discomfort encountered during interactions may lead the public, particularly people with inadequate information literacy, to abandon it. Consequently, this study indicates that future programs should evaluate participants’ informational capabilities. ChatGPT treatment procedures can be customized by streamlining the interface, incorporating interactive coaching, and minimizing the learning curve. Secondly, the privacy and data risks result is consistent with Kahei Au, Platt, Alanezi, Kahambing, Morita, and Alanzi’s findings21,22,28,32,37,39. Non-healthcare professionals express concerns about privacy and personal data vulnerabilities, disinformation, and the potential inaccuracies of AI in health advising. This study advocates for privacy protection and data security enhancement. Frequent information audits and data security assessments must be performed to guarantee data protection and uniform utilization. Thirdly, the questioning accuracy result is consistent with Platt, Alanezi, Morita, and Alanzi’s findings21,22,28,32. Non-healthcare professionals distrust ChatGPT mainly because of misinformation and the potential for AI to give inaccurate health counseling. Consequently, interdisciplinary medical specialists should collaborate to ensure that the diagnostic and treatment information produced is reliable and has clinical value, and a user feedback mechanism should be implemented to rectify misinformation promptly. Fourthly, the over-reliance result is consistent with Kiyoshi, Hussain, and Chakraborty Samant’s findings40,41,42. Non-healthcare professionals depend on ChatGPT for decision-making and need help comprehending its output. Consequently, the design of ChatGPT should prioritize the role of “assisting decision-making” over “leading decision-making,” clearly delineating its supportive function as a medical instrument. At the same time, explicit reminders and guidance must encourage patients to consult their physicians when making significant medical decisions, mitigating excessive dependence on AI.

Fear emotion included unknowns, skepticism about responsiveness, and negative medical experiences. Firstly, the result for unknowns is consistent with Ikeda’s findings43. Non-healthcare professionals are concerned about ChatGPT’s potential diagnostic or treatment inaccuracies, insufficient communication with their physicians, and care quality and safety. These emotions primarily reflect unfamiliarity with the technology and anxiety that human-computer interaction may diminish emotional support. Therefore, it is advisable to enhance human-computer collaboration and to improve the verbal communication and psychological reassurance features of ChatGPT apps so as to facilitate physicians’ involvement in crucial medical decision-making. Secondly, the result for responsiveness skepticism is consistent with Saeidnia and Scherr’s findings24,44. Non-healthcare professionals are concerned about the inability of ChatGPT technology to manage intricate medical scenarios, particularly when patients are in a precarious condition and need swift decisions. Therefore, it is advisable to enhance ChatGPT’s response and referral systems in intricate situations by collaborating with physicians to promptly acquire patient history and current health information. Thirdly, the fear of negative medical experiences may arise from worries that ChatGPT fails to encompass the entirety of an individual’s medical history or intricate pathology, particularly for high-risk or complex conditions. Previous researchers have not mentioned this idea, possibly because ChatGPT is still rarely used in medical practice. Therefore, this study advocates prioritizing the patient’s healthcare experience in future practice. It is advisable to enhance the integration of historical data and personalized medication in future applications of ChatGPT by refining the patient record management system and condition monitoring mechanisms.

Sad emotions included failure to meet therapeutic expectations, failure to alleviate pain, and loneliness. Firstly, the result for failure to meet therapeutic expectations is consistent with Elshazly’s findings45. Non-healthcare professionals’ primary issue is that ChatGPT is ineffective in medical procedures, particularly regarding disease progression or inadequate treatment outcomes. This result contradicts the conclusions of Xue and Levkovich46,47, who believe that ChatGPT presents a viable option for telemedicine consultations: healthcare professionals may use it for patient education, while patients might use it as a quick tool for health inquiries. We therefore suggest strengthening ChatGPT’s function as a supplementary resource that helps patients understand their illnesses and offers prudent health management guidance. Secondly, the result for failure to alleviate pain is consistent with Elshazly’s findings45. Failure to alleviate pain suggests that ChatGPT may not effectively mitigate patients’ physical discomfort during medical operations. This result is inconsistent with Wen Peng’s findings48; that study suggests that ChatGPT excels in pain management and meets the established criteria. It is advisable to enhance ChatGPT’s functionality in pain evaluation and management by meticulously documenting and monitoring variations in patients’ pain levels. At the same time, collaboration with physicians and medication protocols should be strengthened to guarantee the efficacy of pain management measures. Thirdly, loneliness suggests an absence of interpersonal interaction and emotional engagement in ChatGPT. This idea has not been mentioned in previous studies. Therefore, this study suggests designing a humanized and interactive approach in practice. It is recommended that personalized conversational styles, emotionally supportive language, and interactive care features be added to the design of ChatGPT. Additionally, ChatGPT can work alongside human caregivers to provide emotional support.

Sentiment analysis of healthcare professionals

Good emotion included professionalism, innovative development, and efficiency and accuracy expectations. Firstly, the professionalism result is consistent with Wang and Xie’s findings49,50. Healthcare professionals prioritize ChatGPT’s ability to conform to established standards of care and assist in clinical decision-making, and they emphasize standardization and consistency. Therefore, this study suggests establishing a mechanism to standardize the evaluation and certification of ChatGPT by strictly adhering to medical standards in its design and application. This will allow healthcare professionals to perceive it as compliant with industry standards during use, thereby strengthening their trust in it as an assistive tool. Secondly, the innovative development result is consistent with Jin, Platt, and Qi’s findings21,51,52. Healthcare professionals care about the potential for innovation, particularly in spearheading the advancement and utilization of medical technology, and they seek to enhance treatment outcomes through technological innovation. Therefore, this study suggests promoting the application of ChatGPT in technological innovation, including introducing the latest AI algorithms, data analytics techniques, and intelligent predictive models, to strengthen its cutting-edge position in the medical field. Thirdly, the efficiency and accuracy expectations result is consistent with Xue and Xie’s findings47,50. Healthcare professionals consider ChatGPT’s efficacy and precision crucial indicators for advancing healthcare and refining diagnostic and therapeutic processes. Therefore, this study suggests optimizing processing efficiency and diagnostic accuracy in applying ChatGPT and developing an efficient diagnosis and treatment support tool for specific conditions.

Happy emotions included application satisfaction and workload relief. Firstly, application satisfaction is consistent with Elisabetta, Choudhury, and Avishek’s findings20,35,53. Healthcare professionals prioritize the practical implementation of technology in the medical process, as well as safety and satisfaction. This study indicates that the design and implementation should prioritize optimizing the user experience to ensure a user-friendly interface and intuitive functionality while minimizing operational difficulties. Secondly, workload relief is consistent with Avishek’s findings20. Healthcare professionals anticipate ChatGPT will alleviate the burden of demanding everyday responsibilities and facilitate secure decision-making in intricate situations. Therefore, this study recommends the implementation of automated tasks, intelligent decision support features, and optimized workflow integration in the design and application of ChatGPT.

Surprise emotions included cautiousness of popularization and innovative trends. Firstly, the cautiousness of popularization is consistent with Wardah, Han, Felix, Yuan, and Tian’s findings54,55,56,57,58. Healthcare professionals have been surprised by the performance of ChatGPT in clinical settings. However, they showed more caution in popularizing and applying this non-traditional technique. When advocating for ChatGPT, it is advisable to include comprehensive clinical trial data and empirical studies to bolster physicians’ trust. A phased implementation plan should be employed, commencing with a small-scale pilot and progressively broadening the scope to mitigate risks and gather practical experience. Secondly, innovative trends are consistent with Santiago, Bryan, and Qi’s findings52,59,60. Healthcare professionals see ChatGPT’s capabilities, combined with traditional diagnostic and treatment methods, as a transformative instrument for healthcare. Physicians monitor the advancement of the technology while remaining vigilant. Consequently, we encourage physicians to investigate the capabilities of ChatGPT in enhancing the efficiency of their practice and improving patient management.

Anger emotions included limitations, therapeutic misinformation, and patient over-reliance. Firstly, limitations are consistent with Matthew, Lukas J Meier, and Eric J Beltrami’s findings61,62,63. Healthcare professionals’ anger predominantly stems from perceived constraints of the technology. They may experience dissatisfaction and skepticism regarding the technology’s failure to adequately resolve specific complex therapeutic issues. Therefore, this study recommends that detailed technical documentation and clinical trial data be provided to clarify the scope and limitations of ChatGPT. Secondly, therapeutic misinformation is consistent with Kahambing and Tian’s findings37,57. Healthcare professionals’ anger may arise when ChatGPT provides diagnostic and treatment suggestions that contradict their medical expertise, particularly in complex or rare cases. Healthcare professionals may regard ChatGPT’s diagnostic advice as misleading, resulting in grievances and emotional protests. This study recommends robust quality control measures to evaluate, scrutinize, and validate ChatGPT’s medical diagnostic suggestions. Concurrently, physicians should be encouraged to provide feedback and correct errors so that the precision and dependability of the technology improve continuously. Thirdly, patient over-reliance on ChatGPT signifies a tendency among patients to doubt their physician’s diagnosis in favor of ChatGPT’s therapy suggestions. In this situation, physicians perceive a threat to their professional status, resulting in unhappiness. This idea has not been mentioned in previous studies. However, a similar view is that patients’ reliance on search engines affects physicians’ professional authority. This study suggests that patient education should include stronger instruction on using ChatGPT, clarifying its role as a supportive tool and enhancing patients’ understanding of and trust in doctors’ advice.

Disgust emotions included reliability in the professional field, ethical and legal risk, and job-loss risk. Firstly, reliability in the professional field is consistent with Kahei Au, Tian, Liu, and Harriet’s findings39,57,64,65. Healthcare professionals are skeptical about the use of ChatGPT in specialized medicine. In their view, ChatGPT cannot understand complex medical problems accurately because its training data are not specific to medicine and are updated slowly. They believe that ChatGPT has yet to achieve the accuracy and reliability required for clinical application and cannot replace doctors’ judgment. This is inconsistent with Wang and Meo’s findings49,66, which indicate that ChatGPT is capable of comprehending medical knowledge within the Chinese context and has attained the proficiency and standards necessary for entry into graduate programs in clinical medicine. Therefore, this study recommends updating the medical datasets and conducting clinical trials. Secondly, ethical and legal risk is consistent with Kahei Au, Wang, Liu, and Eric J Beltrami’s findings39,49,61,64. Healthcare professionals are concerned about the legal and ethical risks that ChatGPT may pose in medical practice, especially regarding privacy protection and data leakage. Therefore, this study recommends implementing strict data privacy protection measures, including encryption and anonymization of patient information, alongside creating an ethical review framework. Thirdly, the job-loss risk is consistent with Umar Ali Bukar’s findings67. Healthcare professionals resist using ChatGPT, viewing it as a potential danger to their professional standing. This study advocates professional training for physicians in new technology that explains the features and limitations of ChatGPT, in order to strengthen recognition of physicians’ professionalism.

Fear emotions included a crisis of trust, losing control, and questioning processing capability. Firstly, the crisis of trust is consistent with Kahei Au and Liu’s findings39,64. Healthcare professionals may worry that ChatGPT could undermine their professionalism and authority in the diagnostic process, particularly when its recommendations contradict their clinical judgment, diminishing patient trust. Therefore, this study suggests clarifying the role of ChatGPT as an auxiliary tool in its application to ensure that its recommendations are consistent with physicians’ professional judgment. Secondly, losing control is consistent with Julien and Liu’s findings64,68. Healthcare professionals may be concerned about the likelihood of ChatGPT failing in complex medical decisions or causing severe medical errors due to technical malfunctions. This sentiment is associated with physicians’ long-term duty to focus on patient safety. Therefore, this study suggests implementing rigorous technology testing and quality control measures and developing contingency plans for possible technological failures. Thirdly, questioning processing capability is consistent with Saeidnia, Scherr, Julien, Deanna, and Liu’s findings24,44,64,68,69. Healthcare professionals doubt that ChatGPT can manage intricate clinical scenarios because its training data are not specialized for medicine. This study recommends augmenting ChatGPT’s processing capabilities by incorporating medical domain-specific datasets and algorithmic optimization. Simultaneously, model training and validation should be conducted to improve its capacity to manage intricate clinical situations.

Sad emotions included self-blame and the powerlessness of technological innovations. Firstly, self-blame is consistent with Liu’s findings64. Healthcare professionals may blame themselves for errors or misdiagnoses during medical procedures if they make wrong decisions based on ChatGPT’s output, which may exacerbate their guilt. This concept reflects physicians’ heightened sensitivity to their professional responsibilities. Therefore, it is recommended that the accuracy and reliability of the technology in the application be enhanced to mitigate the escalation of accountability resulting from technological failures. Secondly, the powerlessness of technological innovations is consistent with Christy’s findings70. Healthcare professionals frequently struggle to manage clinical complexity and resource shortages. The advent of ChatGPT may not have mitigated these pressures; instead, it has intensified physicians’ skepticism about the technology’s capacity to resolve the issue entirely. Therefore, this study suggests strengthening physicians’ training on new technologies to increase their acceptance and confidence.

Comparison between health and non-health professionals

Healthcare professionals and non-healthcare individuals both express trust issues and concerns about misinformation, highlighting shared skepticism regarding ChatGPT’s reliability. Positive emotions reflect shared optimism about its potential to improve clinical outcomes and reduce workload. However, healthcare professionals show more caution about ChatGPT’s impact on professional roles and medical errors, emphasizing ethical and legal risks. Non-healthcare individuals focus more on personal experiences, such as emotional support and anxiety relief, highlighting the practical and immediate utility of ChatGPT.

Limitations

This study had three limitations. First, the Internet data was biased, excluding the viewpoints of older persons with limited Internet abilities. The emotional attitudes of the older population, as a group with higher health risks, are valuable. Future studies must validate the attitudes of the older population toward the new technology of ChatGPT. Secondly, although the temporal dimension was incorporated into the analysis, the duration of ChatGPT’s application is relatively brief and requires extended observation. Consequently, future studies may be undertaken to corroborate the findings across multiple time points. Finally, this study exclusively encompassed the content of ChatGPT and clinical medical documents, ignoring the content related to ChatGPT and writing skills. Consequently, we propose that future studies examine the relationship between clinical medical documents and writing skills.

Conclusions

This study revealed distinct yet overlapping sentiments among healthcare professionals and the public toward ChatGPT’s use in clinical practice. Healthcare professionals valued efficiency but voiced concerns about ethical risks and professional accountability. Non-healthcare individuals emphasized emotional support and accessibility while worrying about privacy and misinformation. Addressing these issues, such as enhancing reliability, safeguarding privacy, and defining ChatGPT’s role as a support tool, will be key to its integration. Future research should focus on aligning ChatGPT with clinical standards and user needs to ensure it enhances healthcare delivery while maintaining trust and ethical integrity.

Data availability

The datasets generated and analysed during the current study are not publicly available because the data may be used only for academic research, but they are available from the corresponding author on reasonable request. If the dataset is needed, support can be obtained from the Weibo and Zhihu platforms.

References

OpenAI. GPT-4 Technical Report. OpenAI (2023). https://openai.com/index/gpt-4-research/. Accessed September 19, 2024.

Ateeb Ahmad, P. et al. ChatGPT and global public health: Applications, challenges, ethical considerations and mitigation strategies. Glob. Transit. 5, 50–54 (2023).

Dave, T., Athaluri, S. A. & Singh, S. ChatGPT in medicine: An overview of its applications, advantages, limitations, future prospects, and ethical considerations. Front. Artif. Intell. 6, 1169595 (2023).

Ferres, J. M. L., Weeks, W. B., Chu, L. C., Rowe, S. P. & Fishman, E. K. Beyond chatting: The opportunities and challenges of ChatGPT in medicine and radiology. Diagn. Interv. Imaging 104, 263–264 (2023).

Yan, M., Cerri, G. G. & Moraes, F. Y. ChatGPT and medicine: how AI language models are shaping the future and health related careers. Nat. Biotechnol. 41, 1657–1658 (2023).

Amin, S. M., El‐Gazar, H. E., Zoromba, M. A., El‐Sayed, M. M. & Atta, M. H. R. Sentiment of nurses towards artificial intelligence and resistance to change in healthcare organisations: A mixed‐method study. J. Adv. Nurs. 16435 (2024).

Mu, Y. & He, D. The potential applications and challenges of ChatGPT in the medical field. Int. J. Gen. Med. (2024).

Younis, H. A. et al. A systematic review and meta-analysis of artificial intelligence tools in medicine and healthcare: applications, considerations, limitations, motivation and challenges. Diagn. Basel Switz. 14, 109 (2024).

Haltaufderheide, J. & Ranisch, R. The ethics of ChatGPT in medicine and healthcare: A systematic review on large language models (LLMs). Npj Digit. Med. 7, 1–11 (2024).

Shenhav, A. The affective gradient hypothesis: An affect-centered account of motivated behavior. Trends Cogn. Sci. https://doi.org/10.1016/j.tics.2024.08.003 (2024).

Yang, J. ChatGPTs’ journey in medical revolution: A potential panacea or a hidden pathogen?. Ann. Biomed. Eng. 51, 2356–2358 (2023).

Tan, S., Xin, X. & Wu, D. ChatGPT in medicine: Prospects and challenges: A review article. Int. J. Surg. Lond. Engl. 110, 3701–3706 (2024).

Wójcik, S. et al. Beyond ChatGPT: What does GPT-4 add to healthcare? The dawn of a new era. Cardiol. J. 30, 1018–1025 (2023).

Park, K.-S. & Choi, H. How to harness the power of GPT for scientific research: A comprehensive review of methodologies, applications, and ethical considerations. Nucl. Med. Mol. Imaging 58, 323–331 (2024).

Franze, A., Galanis, C. R. & King, D. L. Social chatbot use (e.g. ChatGPT) among individuals with social deficits: Risks and opportunities. J. Behav. Addict. 12, 871–872 (2023).

Cero, I., Luo, J. & Falligant, J. M. Lexicon-based sentiment analysis in behavioral research. Perspect. Behav. Sci. 47, 283–310 (2024).

Yazdani, A., Shamloo, M., Khaki, M. & Nahvijou, A. Use of sentiment analysis for capturing hospitalized cancer patients’ experience from free-text comments in the Persian language. BMC Med. Inform. Decis. Mak. 23, 275 (2023).

Zhou, Q., Xu, Y., Yang, L. & Menhas, R. Attitudes of the public and medical professionals toward nurse prescribing: A text-mining study based on social medias. Int. J. Nurs. Sci. 11, 99–105 (2024).

Zhou, Q., Lei, Y., Du, H. & Tao, Y. Public concerns and attitudes towards autism on Chinese social media based on K-means algorithm. Sci. Rep. 13, 1–8 (2023).

Choudhury, A., Elkefi, S. & Tounsi, A. Exploring factors influencing user perspective of ChatGPT as a technology that assists in healthcare decision making: A cross sectional survey study. PloS One 19, e0296151 (2024).

Platt, J. et al. Public comfort with the use of ChatGPT and expectations for healthcare. J. Am. Med. Inform. Assoc. JAMIA 31, 1976–1982 (2024).

Alanzi, T. M. et al. Public awareness of obesity as a risk factor for cancer in central Saudi Arabia: feasibility of ChatGPT as an educational intervention. Cureus 15, e50781 (2023).

Cheng, S., Xiao, Y., Liu, L. & Sun, X. Comparative outcomes of AI-assisted ChatGPT and face-to-face consultations in infertility patients: a cross-sectional study. Postgrad. Med. J. 5, 83 (2024).

Scherr, R., Halaseh, F. F., Spina, A., Andalib, S. & Rivera, R. ChatGPT interactive medical simulations for early clinical education: Case study. JMIR Med. Educ. 9, e49877 (2023).

Shang, L., Li, R., Xue, M., Guo, Q. & Hou, Y. Evaluating the application of ChatGPT in China’s residency training education: An exploratory study. Med. Teach. 8, 1–7 (2024).

Choudhury, A. & Shamszare, H. The impact of performance expectancy, workload, risk, and satisfaction on trust in ChatGPT: Cross-sectional survey analysis. JMIR Hum. Fact. 11, e55399 (2024).

Razdan, S., Siegal, A. R., Brewer, Y., Sljivich, M. & Valenzuela, R. J. Assessing ChatGPT’s ability to answer questions pertaining to erectile dysfunction: Can our patients trust it?. Int. J. Impot. Res. https://doi.org/10.1038/s41443-023-00797-z (2023).

Alanezi, F. Assessing the effectiveness of ChatGPT in delivering mental health support: A qualitative study. J. Multidiscip. Healthc. 17, 461–471 (2024).

Sharma, S. et al. A critical review of ChatGPT as a potential substitute for diabetes educators. Cureus 15, e38380 (2023).

Zheng, Y. et al. Enhancing diabetes self-management and education: A critical analysis of ChatGPT’s role. Ann. Biomed. Eng. 52, 741–744 (2024).

Farhat, F. ChatGPT as a complementary mental health resource: A boon or a bane. Ann. Biomed. Eng. 52, 1111–1114 (2024).

Morita, P. P. et al. Applying ChatGPT in public health: A SWOT and PESTLE analysis. Front. Public Health 11, 1225861 (2023).

Deiana, G. et al. Artificial intelligence and public health: Evaluating ChatGPT responses to vaccination myths and misconceptions. Vaccines 11, 1217 (2023).

Lee, S. & Park, G. Exploring the impact of ChatGPT literacy on user satisfaction: The mediating role of user motivations. Cyberpsychol. Behav. Soc. Netw. 26, 913–918 (2023).

Maida, E. et al. ChatGPT vs. neurologists: A cross-sectional study investigating preference, satisfaction ratings and perceived empathy in responses among people living with multiple sclerosis. J. Neurol. 271, 4057–4066 (2024).

De Angelis, L. et al. ChatGPT and the rise of large language models: The new AI-driven infodemic threat in public health. Front. Public Health 11, 1166120 (2023).

Kahambing, J. G. ChatGPT, public health communication and “intelligent patient companionship”. J. Public Health Oxf. Engl. 45, e590 (2023).

Li, L. T. Editorial Commentary: ChatGPT Provides Misinformed Responses to Medical Questions. Arthrosc. J. Arthrosc. Relat. Surg. Off. Publ. Arthrosc. Assoc. N. Am. Int. Arthrosc. Assoc. S0749–8063(24), 00462–00466 (2024).

Au, K. & Yang, W. Auxiliary use of ChatGPT in surgical diagnosis and treatment. Int. J. Surg. Lond. Engl. 109, 3940–3943 (2023).

Arabpur, A., Farsi, Z., Butler, S. & Habibi, H. Comparative effectiveness of demonstration using hybrid simulation versus task-trainer for training nursing students in using pulse-oximeter and suction: A randomized control trial. Nurse Educ. Today 110, 105204 (2022).

Chakraborty Samant, A., Tyagi, I., Vybhavi, J., Jha, H. & Patel, J. ChatGPT dependency disorder in healthcare practice: An editorial. Cureus 16, e66155 (2024).

Hussain, T., Wang, D. & Li, B. The influence of the COVID-19 pandemic on the adoption and impact of AI ChatGPT: Challenges, applications, and ethical considerations. Acta Psychol. (Amst) 246, 104264 (2024).

Ikeda, S. Inconsistent advice by ChatGPT influences decision making in various areas. Sci. Rep. 14, 15876 (2024).

Saeidnia, H. R., Kozak, M., Lund, B. D. & Hassanzadeh, M. Evaluation of ChatGPT’s responses to information needs and information seeking of dementia patients. Sci. Rep. 14, 10273 (2024).

Elshazly, A. M., Shahin, U., Al Shboul, S., Gewirtz, D. A. & Saleh, T. A conversation with ChatGPT on contentious issues in senescence and cancer research. Mol. Pharmacol. 105, 313–327 (2024).

Levkovich, I. & Elyoseph, Z. Identifying depression and its determinants upon initiating treatment: ChatGPT versus primary care physicians. Fam. Med. Commun. Health 11, e002391 (2023).

Xue, Z. et al. Quality and dependability of ChatGPT and DingXiangYuan forums for remote orthopedic consultations: Comparative analysis. J. Med. Internet Res. 26, e50882 (2024).

Peng, W. et al. Evaluating AI in medicine: A comparative analysis of expert and ChatGPT responses to colorectal cancer questions. Sci. Rep. 14, 2840 (2024).

Wang, H., Wu, W., Dou, Z., He, L. & Yang, L. Performance and exploration of ChatGPT in medical examination, records and education in Chinese: Pave the way for medical AI. Int. J. Med. Inf. 177, 105173 (2023).

Xie, S. et al. Utilizing ChatGPT as a scientific reasoning engine to differentiate conflicting evidence and summarize challenges in controversial clinical questions. J. Am. Med. Inform. Assoc. JAMIA 31, 1551–1560 (2024).

Jin, J. Q. & Dobry, A. S. ChatGPT for healthcare providers and patients: Practical implications within dermatology. J. Am. Acad. Dermatol. 89, 870–871 (2023).

Qi, W. & Niu, F. Exploring the unknown: Evaluating ChatGPT’s performance in uncovering novel aspects of plastic surgery and identifying areas for future innovation. Aesthetic. Plast. Surg. https://doi.org/10.1007/s00266-024-04200-0 (2024).

Choudhury, A. & Shamszare, H. Investigating the impact of user trust on the adoption and use of ChatGPT: Survey analysis. J. Med. Internet Res. 25, e47184 (2023).

Busch, F., Adams, L. C. & Bressem, K. K. Spotlight on the biomedical ethical integration of AI in medical education—Response to: “An explorative assessment of ChatGPT as an aid in medical education: Use it with caution”. Med. Teach. 46, 594–595 (2024).

Han, Z., Battaglia, F., Udaiyar, A., Fooks, A. & Terlecky, S. R. An explorative assessment of ChatGPT as an aid in medical education: Use it with caution. Med. Teach. 46, 657–664 (2024).

Rafaqat, W., Chu, D. I. & Kaafarani, H. M. AI and ChatGPT meet surgery: A word of caution for surgeon-scientists. Ann. Surg. 278, e943–e944 (2023).

Tian, S. et al. Opportunities and challenges for ChatGPT and large language models in biomedicine and health. Brief Bioinform. 25, bbad493 (2023).

Yuan, S. et al. Leveraging and exercising caution with ChatGPT and other generative artificial intelligence tools in environmental psychology research. Front. Psychol. 15, 1295275 (2024).

Berrezueta-Guzman, S., Kandil, M., Martín-Ruiz, M.-L., Pau de la Cruz, I. & Krusche, S. Future of ADHD care: Evaluating the efficacy of ChatGPT in therapy enhancement. Healthc. Basel Switz. 12, 683 (2024).

Lim, B. et al. Exploring the unknown: Evaluating ChatGPT’s performance in uncovering novel aspects of plastic surgery and identifying areas for future innovation. Aesthetic. Plast. Surg. 48, 2580–2589 (2024).

Beltrami, E. J. & Grant-Kels, J. M. Consulting ChatGPT: Ethical dilemmas in language model artificial intelligence. J. Am. Acad. Dermatol. 90, 879–880 (2024).

Maksimoski, M., Noble, A. R. & Smith, D. F. Does ChatGPT Answer Otolaryngology Questions Accurately?. The Laryngoscope. 134, 4011–4015 (2024).

Meier, L. J. ChatGPT’s responses to dilemmas in medical ethics: The devil is in the details. Am. J. Bioeth. AJOB 23, 63–65 (2023).

Liu, J., Wang, C. & Liu, S. Utility of ChatGPT in clinical practice. J. Med. Internet Res. 25, e48568 (2023).

Walker, H. L. et al. Reliability of medical information provided by ChatGPT: Assessment against clinical guidelines and patient information quality instrument. J. Med. Internet Res. 25, e47479 (2023).

Meo, S. A., Al-Masri, A. A., Alotaibi, M., Meo, M. Z. S. & Meo, M. O. S. ChatGPT knowledge evaluation in basic and clinical medical sciences: Multiple choice question examination-based performance. Healthc. Basel Switz. 11, 2046 (2023).

Bukar, U. A., Sayeed, M. S., Razak, S. F. A., Yogarayan, S. & Amodu, O. A. An integrative decision-making framework to guide policies on regulating ChatGPT usage. PeerJ Comput. Sci. 10, e1845 (2024).

Haemmerli, J. et al. ChatGPT in glioma adjuvant therapy decision making: ready to assume the role of a doctor in the tumour board?. BMJ Health Care Inform. 30, e100775 (2023).

Palenzuela, D. L., Mullen, J. T. & Phitayakorn, R. AI Versus MD: Evaluating the surgical decision-making accuracy of ChatGPT-4. Surgery 176, 241–245 (2024).

Boscardin, C. K., Gin, B., Golde, P. B. & Hauer, K. E. ChatGPT and generative artificial intelligence for medical education: Potential impact and opportunity. Acad. Med. J. Assoc. Am. Med. Coll. 99, 22–27 (2024).

Acknowledgements

The authors would like to thank Tao Zhou who provided advice and assistance with this study.

Funding

No funding.

Author information

Contributions

All authors participated in the study design, data collection, and data analysis, and drafted the manuscript. QZ critically assessed the manuscript, and all authors read and approved the final manuscript.

Ethics declarations

Ethical approval and consent to participate

Not applicable. The study did not involve human subjects, and the data came from publicly available platforms for academic research. Therefore, after the Institutional Review Board’s approval, the researchers collected only comments that did not involve human subjects’ privacy and did not cause harm to human subjects.

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Lu, L., Zhu, Y., Yang, J. et al. Healthcare professionals and the public sentiment analysis of ChatGPT in clinical practice. Sci Rep 15, 1223 (2025). https://doi.org/10.1038/s41598-024-84512-y

DOI: https://doi.org/10.1038/s41598-024-84512-y