Abstract

Point Transformer can effectively capture both local information and long-range global context in point cloud data. However, its reliance on dividing local areas into separate tokens can result in a loss of instance structure, limiting its feature representation ability in large-scale urban aerial point clouds, especially the fine-grained classification of ground objects. To address these limitations, we propose a novel Point Transformer-based Multi-feature Fusion (PTMF) Network that explicitly integrates geometric features into the Point Transformer architecture to classify large-scale aerial point clouds. The fusion of geometric features effectively complements global contextual feature extraction that is solely based on positional relationships with prior information. Specifically, the PTMF network fuses down-sampled geometric and inherent point cloud features at each stage, using the Transition Up module to up-sample mapping features. Our experiments on the SensatUrban and DALES datasets demonstrate significant improvements, achieving mIoU scores of 63.52% and 82.18%, respectively, outperforming existing methods.

Similar content being viewed by others

Introduction

As a crucial means of acquiring and processing spatial 3D information, 3D point cloud technology demonstrates extensive application potential across multiple domains including autonomous driving1, robotics2, virtual reality (VR)/augmented reality (AR)3, cybersecurity4, adversarial attack5 and industrial applications6. As a vital branch of this technology, aerial point clouds, collected aerially using LiDAR or photogrammetry, are 3D point sets that provide highly accurate characterization of the geometric features of the ground surface and surface features (e.g., buildings, vegetation, terrain)7. These datasets play a pivotal role in advancing the automation and intelligence of 3D geographic information systems. The intelligent classification of aerial point clouds has emerged as a key enabling technology. It is widely adopted in natural resource management for terrain mapping8, smart agriculture and forestry9, high-definition mapping10, digital city modeling11, cultural heritage preservation12, environmental monitoring13, and power infrastructure inspection11. Efficiently extracting valuable insights from these massive aerial point cloud datasets remains a critical research challenge in contemporary geospatial studies.

Currently, point cloud classification methods for aerial point clouds are mainly divided into traditional supervised classification methods and deep learning methods. The traditional supervised classification methods14 first define an appropriate neighborhood for the adjacent points of each point in the point cloud, representing the local context information. Based on this, a mathematical expression is designed to represent the spatial or spectral attributes of the adjacent points. The selected representative samples and corresponding labels are then input into a classifier (such as AdaBoost15, support vector machine (SVM)16, composite kernel SVM17, and random forest (RF)18 for training and testing to classify samples. This method is straightforward and relatively easy to implement, but its performance mainly depends on the definition of neighbors19 and the design of manual features20, which are related to the density and quality of the point clouds, Manual feature design is particularly challenging for large-scale outdoor complex scene point clouds, resulting in limited classification accuracy.

As deep learning continues to be researched in the field of 3D point clouds, deep learning techniques can indirectly learn features from 2D projected images derived from point clouds21 or regular 3D grids22, or directly from point clouds. MVCNN23 pioneered 2D projection methods. It projects the point cloud into different views of the image plane, and then extracts global descriptors from the 2D image through multiple 2D CNNs and maximum pooling layers; Subsequently, Yang et al.24, Tatarchenko et al.25, and FPConv26 have indirectly extracted features from 2D projected images derived from point clouds, but the process of projecting irregular projections of point clouds to regular 2D representations result in the loss of 3D geometric information. Hongwu Kuang et al.27 voxelized 3D point clouds into regular 3D lattice meshes and used 3D convolution to obtain feature information; OctNet28, Brock et al.29, and ResNet30 are all exploring this method, which shows good accuracy, but there are still shortcomings such as high computing cost and poor flexibility. Point-based methods, such as PointNet31 and its successors, maintain the integrity of positional information and can avoid the loss of spatial geometric information inherent in projection methods. PointNet, considered a milestone, effectively copes with the disorder of point clouds, achieving classification and segmentation tasks efficiently. Hu et al. proposed RandLA-Net32, a large-scale point cloud semantic segmentation network based on random sampling, which directly processes large-scale point clouds without block division. It considers the geometric structure of point clouds as a whole, enabling effective calculation with high accuracy and low memory consumption. However, the basic idea of the above method is the deep application of 2D convolutional neural network in the field of 3D point cloud, and there exists the problem of low sensory field, which results in insufficient extraction of point cloud feature information.

The success of Transformers and self-attention mechanisms in natural language processing33 and 2D vision34 has also advanced their application in 3D point cloud classification. The Point Transformer algorithm was the first to apply Transformers and self-attention mechanisms to 3D point cloud data, significantly improving model performance, especially for indoor ultra-large-scale scenes35. However, the need to sample local areas separately without exploring adjacent local areas deeply destroys instance structure information, limiting the Transformer’s ability to enhance point cloud instance feature expression. This issue is even more pronounced for sparse and low-accuracy wide-area aerial point clouds, leading to reduced accuracy in fine-grained classification36. There have been no published articles on the classification of airborne point cloud data in large-scale outdoor scenes, especially when the data is extremely unbalanced.

The above literature analysis reveals that the 3D point cloud classification algorithms have made notable progress, but there is still room for improvement in applying the powerful Point Transformer algorithm to large-scale outdoor scene airborne point cloud data and further using the feature information to improve the model’s generalization. To this end, this paper proposes the novel Transformer-based Multi-feature Fusion Network (PTMF Network) to classify large-scale outdoor scene airborne point cloud datasets. The specific contributions are as follows:

-

1.

We propose a Transformer-based Multi-feature Fusion Network that explicitly integrates geometric features into the Point Transformer architecture for the classification of large-scale outdoor aerial point clouds. The incorporation of local geometric structures effectively complements global contextual feature extraction, which relies solely on positional relationships, by providing prior information.

-

2.

To fuse the geometric and semantic features, we propose a Multi-feature Point Transformer Module that can adaptively capture feature similarity by incorporating geometric features into the self-attention mechanism at multiple layers, thus improving the classification of fine-grained features in a wide range of outdoor scenes.

-

3.

To verify the performance of the proposed network model, we conduct experiments on the large-scale aerial point cloud datasets SensatUrban and DALES, and the experimental results show that the point cloud geometric structural features together with the deep semantic information can effectively improve the classification effect of the aerial point cloud data for large-scale outdoor scenes.

Related works

Deep learning methods for point cloud classification

In recent years, deep learning has made significant progress in the field of point cloud classification, with researchers proposing numerous deep learning-based semantic segmentation models for processing point cloud data. Depending on the input format, these methods are primarily categorized into three types: 2D image-based methods, 3D voxel-based methods, and point-based methods.

2D image-based methods project 3D data onto 2D images and utilize 2D convolutional neural networks to extract features. Representative methods in this category include MVCNN12, GVCNN37, LRMV3DOR38, DSCNN39, PCN40, ShapePFCN41, and LightGBM11. While these methods reduce dimensionality and computational costs, they result in the loss of spatial information and are unable to fully capture 3D geometric and structural information.

3D voxel-based methods, such as VoxNet42, represent unordered point clouds using regular volume grids and learn features from structured 3D data for classification and segmentation tasks. Representative methods in this category include PointGrid43, SegCloud44, SSCN45,O-CNN46, OctNet47 and NormalNet48. These approaches allow the easy application of 3D convolutional methods to unstructured point cloud data, similar to how 2D convolutions are applied to images. However, the contradiction between resolution and computational demand limits the real-time performance and applicability of these methods.

Point-based methods, pioneered by PointNet, directly process point cloud data to maintain the integrity of positional information. Subsequent networks like PointNet + + have built upon this foundation, introducing improvements such as local feature aggregation and attention mechanisms to enhance classification and segmentation performance. Classic algorithms in point-based methods include PointSIFT49, PointConv50, KPConv51, PointCNN52, DGCNN53, PCGCN54, and pointweb55. RandLA-Net56 and MSAAN57 further improve efficiency and accuracy by processing large-scale point clouds as a whole. These algorithms can directly handle raw point cloud data, preserving complete geometric information and featuring high efficiency and flexibility, making them suitable for various tasks such as classification and segmentation. However, these algorithms are sensitive to noise and require large amounts of labeled data. They also face challenges in terms of computational resources and high performance demands when dealing with large-scale or incomplete data.

Self-attentive networks and point cloud transformer

Self-attentive networks and Transformer models have caused a major revolution in the field of natural language processing58 and have further advanced their application in 2D vision (e.g., intensive prediction tasks such as image segmentation and object detection) with great success59. The Point Transformer method60 introduced a self-attention network and Transformer to the 3D point cloud classification work for the first time, which significantly improved the classification accuracy; SortNet61 also utilized the attention mechanism to extract local features from different subspaces. Pu-Transformer62 used a transformer structure to enhance the point and channel relationships for up-sampling point clouds; Pointformer33 designed an attention-based feature extractor as the backbone of different object detection frameworks. All the above methods make use of the characteristics of point cloud sequence data and the highly scalable learning ability of the Transformer to effectively obtain the local features of the point cloud and the global feature information of long threads. The disadvantage of this method is that it only samples local areas in the sampling process, lacks information mining of adjacent local areas, and destroys the instance structure, thus limiting the ability of Transformer to represent the feature enhancement of point cloud instances, especially for sparse and low-precision wide-area airborne point clouds, which will directly lead to the decrease of the accuracy of fine-grained feature classification.

Geometric feature fusion for point cloud classification

Over the past few years, point cloud classification methods that integrate geometric features have achieved significant progress in the fields of deep learning and computer vision. These methods enhance the accuracy and robustness of point cloud classification by combining the geometric properties of point clouds (such as local neighborhood relationships, linear features, normal vectors, and density) with deep learning models. MFFGCNN63 proposed for airborne LiDAR point cloud classification uses traditional point cloud features to promote the effective expression of deep features, thereby improving classification accuracy. CAFF-PointNet64 calculates geometric features of point clouds through spherical neighborhoods and combines them with semantic features extracted by deep learning, significantly improving the accuracy of small object classification. Li et al.8 constructed a geometric attention mechanism network that effectively integrates local geometric features, optimal neighborhood selection, and advanced features of deep learning for ALS point cloud classification to further improve the effectiveness and performance of the point cloud classification task. GSFE65 designs an adaptive feature fusion module, by integrating geometric and deep learning features, this module enhances the model’s perception of point clouds, meeting the demand for high-precision semantic segmentation across different scenarios. While these methods have achieved remarkable success in point cloud classification, their feature extraction relies on specific network architectures, such as convolutional methods. This leads to limited receptive fields and insufficient extraction of local features, restricting their applicability and performance on broader point cloud datasets.

In summary, while existing methods have made significant progress in point cloud classification tasks, several challenges and limitations persist. Therefore, this study aims to propose a novel point cloud classification method. By leveraging traditional geometric features with strong guidance and relevance, it effectively complements the deep semantic features acquired by the Point Transformer. This approach addresses the issue of instance structure destruction caused by the Point Transformer’s sampling of independent local areas. It also delves into the complex geometric morphological structures hidden within point cloud information, enhancing the Transformer’s ability to express enhanced features of point cloud instances. Consequently, this improves the fine - grained classification accuracy of aerial point clouds and provides a more effective and robust solution for point cloud classification.

Method

Overview

In this section, we introduce the proposed PTMF network model, following the Transformer architecture of encoding-decoding60, to fully explore the point cloud objects by inputting geometric features (linear feature, plane feature, spherical feature, and curvature feature) targeting structural information. The geometric structure of point cloud objects can be fully explored to further improve the performance of the 3D point cloud classification network model, which is especially effective in extracting semantic feature information of aerial point cloud data for a wide range of outdoor scenes.

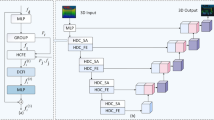

The overall structure of the proposed network model is shown in Fig. 1. It mainly contains the Multi-feature Point Transformer module, Transition Down (TD) module, and Transition UP (TU) module, whose functions and structures are described in detail in “Multi-feature point transformer module” and “Transition down module and transition up module”. Given an input point cloud with N points, firstly, N points are retained by the multilayer perceptron (MLP), and at the same time, a vector of length 32 is generated as the features of the point cloud, and then high-dimensional deep-level feature extraction is accomplished by using the TD module and the stacking of the 5-stage Multi-feature Point Transformer Block, including traditional geometric features and point cloud self-information mined through deep learning networks. The core of the PTMF network is a novel Multi-feature Point Transformer module, which acts as a feature extractor throughout each stage of encoding and decoding, and then interpolates and recovers the output features and the number of point clouds through the TU module. Finally, the MLP is used to map the results linearly to obtain the corresponding dense predictive classification results.

Multi-feature point transformer module

The Multi-feature Point Transformer Module is a key component of the PTMF network and is a combination of Multi-feature Point Transformer Layer and Multi-feature Point Transformer Block. This is achieved by stacking multiple Multi-feature Point Transformer Blocks with an integrated Multi-feature Point Transformer Layer.

Multi-feature point transformer layer

Current point cloud classification networks rely solely on coordinate information and deep learning networks to mine abstract high-level features, producing heterogeneity in classification results for low-density areas and urban target boundaries66. For point clouds data, the manually designed point cloud features used in the traditional supervised classification method are very instructive and targeted for the fine-grained classification of typical features in large-area complex outdoor point cloud scenarios, which can effectively improve the accuracy of feature classification19. Experiments on feature selection using random forests by Sun et al.17 showed that geometric features obtained by covariance matrix computation are more favorable for the classification of aerial point clouds data in urban areas. Since the extraction of geometric features needs to consider the neighborhood spatial characteristics, and according to the characteristics of the feature classes in the study area, this paper chooses the relevant features based on eigenvectors and eigenvalues, including linear feature (Lλ), plane feature (Pλ), spherical feature (Sλ) and curvature feature (Cλ), and the variations of the geometric features of the different features are also different, therefore these features are very useful, repeated experiments have also shown that the combination of Lλ, Pλ, Sλ, and Cλ geometric features is optimal. But how to introduce these useful features into deep learning networks is a key question. In this paper, we draw on the idea of MFFGCNN67 to introduce geometric features into a 3D point cloud convolutional neural network, before the data are inputted into the network, they are obtained based on the eigenvalues λi (λ1 > λ2 > λ3) and eigenvectors vi, which are then obtained by solving the covariance matrix C of the local point cloud in the 3 coordinate directions 68, where C · vi = λi · vi, i ∈ {1,2,3}, the formula for calculating eigenvalues according to the input data are shown in Eqs. (1), (2), (3) and (4). The neighborhood construction and covariance calculation refer to the classical definitions, parameter designs, and physical interpretation of covariance matrices established in seminal works such as Weinmann et al.69 and Demantke et al.70. Specifically, an adaptive algorithm was employed to dynamically adjust the neighborhood extent, effectively mitigating overfitting issues inherent to traditional fixed-neighborhood approaches69. The grid resolution was optimized to 0.25 m through systematic parameter analysis, striking an optimal balance between feature discriminability and computational efficiency70. This configuration ensures robust characterization of geometric features while maintaining practical processing requirements for large-scale point cloud datasets.

where λ1, λ2, λ3 (λ1 > λ2 > λ3) are the eigenvalue; Lλ, Pλ, Sλ, Cλ are linear feature, plane feature, spherical feature and curvature feature, respectively.

Then, Lλ, Pλ, Sλ, and Cλ are combined with the original information of the point cloud (XYZRGB or XYZ) into rich feature representations, which are together used as model inputs into the novel Multi-feature point Transformer to train to get the final point cloud classification results. Specifically, the coordinate matrix of the input point cloud is p: {p1, p2,...pN} ∈ RN×3, the eigenmatrix is F: {f1, f2,...fN} ∈ RN×D. N and D denote the number of point clouds and feature dimensions, respectively. The raw input features of the point cloud are obtained by splicing the normalized X-Y-Z coordinates, raw RGB colors, and geometric features (Lλ, Pλ, Sλ, Cλ ). The Multi-feature Point Transformer Layer consists of two parts, the Position Coding Module and the Multi-feature Self-Attention Module, and the structure is shown in Fig. 2. The local region locations and features are first encoded through the Multi-feature Point Transformer layer.

For any point \({x_i}\), \({x_i}\) ∈ (3,Fi) in the point cloud set, find its k (k = 16)18 nearest neighbour point sets χ(i) \(\subseteq\) χ, where the neighbour points \({x_j}\), \({x_j}\epsilon\)χ(i), for the three-dimensional coordinates of the point \({x_{i~~}}\)and its neighbor point \(\:{x}_{j}\), Pi and Pj, respectively, utilize a multilayer perceptual machine (MLP) function θ that contains two linear layers, one ReLU layer, and a multilayer perceptron (MLP) function θ to achieve position encoding. The position encoding function δ is defined as shown in Eq. (5).

where θ is the MLP function, Pi and Pj are the 3D coordinates of the point \({x_i}\) and its neighbor point \({x_j}\) respectively.

For the features, containing R, G, B, Lλ, Pλ, Sλ, and Cλ, the vector self-attention mechanism is applied to compute the summation of the attention values of the relevant \({x_j}\)neighbors as the feature output \({y_i}\) of the point \({x_i}\), which is expressed as shown in Eq. (6).

where \(\varphi , \psi ,\alpha\) are a point-by-point feature transformer, e.g., linear projection or multilayer perceptron; δ is a ___location coding function; \(\rho\) is a normalization function, e.g., softmax; each point local neighborhood is obtained by self-attention attention to the query \(\varphi \left( {{x_i}} \right)\)(Query, Q), the key \(\psi \left( {{x_j}} \right)\)(Key, K) and the value\(\alpha \left( {{x_j}} \right)\)(Value, V), the ___location coding function δ added to the attention generation branch and feature transformation branch can improve the performance of the model (Point Transformer), through the \(\gamma\) function (MLP) to calculate the feature weights with the value of \(\alpha \left( {{x_j}} \right)\) point multiplication, getting the point \({x_i}\) and its associated neighbor attention value summed up is the high-dimensional feature output of the multi-feature point transformer at point \({x_i}\).

These high-dimensional and deep abstract features include traditional geometric features and point cloud information obtained by mining the point cloud features through the Multi-feature Point Transformer Layer module. In the process of feature information extraction, the geometric features play an important role in digging into the complex geometrical structure hidden in the point cloud information, which can effectively complement the original data and information to further improve the performance of the model. The Multi-feature Point Transformer Layer module is the innovation point of this paper and where this paper differs from the classic Point Transformer Layer in Point Transformer.

Multi-feature point transformer block

The function of the Multi-feature Point Transformer Block module is to integrate the point cloud inputs carrying point cloud positions and features into the Multi-feature Point Transformer Layer to achieve the exchange of information between local feature vectors of the point cloud and to output the new feature vectors of all the data points, and it also can reduce the dimension, accelerating the model processing process, and the aggregated feature information is adapted to both the feature vector content and the 3D layout content. This module mainly constitutes the feature extractor of the PTMF network model through the combination of Linear Layer and Multi-feature Point Transformer Layer and realizes the above function by using the principle of Vector Self-Attention Mechanism introduced in the above “Multi-feature point transformer layer”), and the specific structure is shown in Fig. 3a.

Transition down module and transition up module

The function of the Transition Down module is the same as that of the Transition Down module in the classical Point Transformer, which down-samples the input point cloud set step by step as needed, generates five-stage encoded point set bases of [N, N/4, N/16, N/64, N/256], and outputs the features of the down-sampled point set. The schematic diagram is shown in Fig. 3b. Firstly, for the input point set p, p ∈ (N,3 + F), N denotes the number of points, 3 denotes that each point contains 3D coordinates, and F denotes other feature information, the farthest point sampling method is used to get the downsampled points, and then the sampled points go through the linear layer, the regularisation layer and the ReLU function to get the features of each point after the downsampling, and then the features of the point set are obtained by finding the k (k = 16) nearest neighbors of that point, and then the features of the under-sampled point set are outputted by using the local maximum pooling layer to output the features of the downsampled point set.

The network structure proposed in this paper adopts the encoding-decoding U-Net network structure, and the Transition Up module as a decoder mainly corresponds to the encoder Transition Down, which has the same function as the Transition Down module in the Point Transformer and mainly processes the features output from the Transition Down module to map back to the high-resolution point set. This module has the same function as the Transition Down module in the Point Transformer, which is mainly to process the output features of the Transition Down module so that they can be mapped back to the high-resolution point set, and the specific implementation schematic is shown in Fig. 3c. Firstly, the input point features (including the Transition Down Feature and the original input network features of the point cloud) are processed by linear processing, and then the high-dimensional features are mapped to the high-resolution point set using trilinear interpolation to recover their feature dimensions.

Experiments

We evaluate our model on two large-scale airborne scene datasets: SensatUrban and DALES, featuring diverse types and densely labeled data.

Datasets

To evaluate the effectiveness of the proposed method, we conduct experiments on two large-scale outdoor urban scene airborne datasets.

SensatUrban71. This large-scene outdoor airborne dataset is a photogrammetric point cloud dataset generated by the University of Oxford using an unmanned aerial vehicle (UAV) equipped with the Sensefly Soda 3D sensor to acquire 2D imagery, and the point cloud information includes 3D coordinates and RGB information. The dataset contains a total of 13 semantic classifications of point clouds for the cities of Birmingham (BIR) and Cambridge (CAM), ranging from large objects such as buildings, floors, walls, parks and to very small objects such as bicycles, paths, pavements in the urban scene. The point clouds in both city datasets are useful in this experimental analysis.

DALES 72. The dataset is an airborne ALS dataset acquired by a two-channel airborne mapping system, the Riegl 31, with point cloud information in 3D coordinates only, covering the city of Surrey, Canada, with an area of about 330 km2, containing four scene types: rural, urban, commercial, and suburban, and with eight categories of semantic labeling categories: buildings, cars, trucks, poles, wires, fences, ground, and vegetation.

Data preprocessing

Due to the limitations of network and hardware equipment, most of the current point-based point cloud deep learning networks are designed for indoor scenes or outdoor ground scene point cloud data, so when these networks are used for aerial point cloud data for large-scale outdoor scenes because the point cloud contains complex and varied terrain areas, there are large differences in the scale of the features, the distribution of feature classes is not balanced, the density of the point cloud is sparse, and the resolution of the data is low. If the local features are extracted from the dense point cloud data, the difference between the input data levels will cause the training process to oscillate and affect the classification accuracy. Therefore, in this paper, the adaptability of the fusion model in airborne point cloud data categories and input scales is solved by balancing the point cloud category processing and point cloud partition block processing. Meanwhile, the normalization of the preprocessed data improves the generalization ability and robustness of the model.

Point cloud category balance processing. The difference in the feature scale of airborne point cloud data and low resolution or different point cloud region selection will cause an imbalance in the number of point cloud categories, to ensure that the deep-level feature acquisition in the training process is not affected by the difference in the number of categories in the initial data set, first of all, the point cloud data reading for the number of different categories of point cloud to give different weights, a single category weight is obtained by the total number of points divided by the total number of points in a particular category, the detailed calculation formula is given in Eq. (7)73. Then a complex loss function is set to solve the problem of sample imbalance. Specifically, a composite loss function integrating Weighted Cross Entropy and Focal Loss is designed to dynamically adjust class weights and sample difficulty, mitigating class imbalance and enhancing the model’s focus on hard samples. The mathematical formulation is provided in Eq. (8)74.

where, \({N_{total}}\) denotes the total number of point clouds across all categories in the dataset; \({N_c}\) represents the number of point clouds corresponding to category C; \({w_c}\) is the inverse frequency weight for category C, designed to mitigate class imbalance during training by assigning higher weights to underrepresented classes.

Where, \({w_c}\) denotes the category weight, which is generally inversely proportional to the data distribution (e.g., categories with fewer samples receive larger weights); \({p_c}\) represents the model’s predicted probability for category C; is the focusing parameter (default γ = 2), that suppresses gradient contributions from easily classifiable samples.

Point cloud partition block processing. To ensure the effective extraction of local features, it is necessary to effectively slice the large-scale point cloud to obtain the appropriate scale size when the data are input in batches. In this paper, the large-scale point cloud data is sliced into 100 m × 100 m size point cloud blocks, and then each point cloud block is sampled according to the average of the categories, and the number of points in each category is the total number of points divided by the number of categories, and the categories larger than this are downsampled, and the categories smaller than this are resampled repeatedly, and then the sampled point sets are obtained in the end.

Experimental environment and parameter settings

This experimental environment is AutoDL AI Arithmetic Cloud (https://www.autodl.com/home) with 48vCPU Xeon(R) Platinum 8255 C CPU @ 2.50 GHz, Nvidia GeForce RTX 3090 (24GB) Quad Graphics, Nvidia support GPU acceleration, based on Cuda 11.1, Python 3.7 and PyTorch 1.9.0 on Ubuntu 20.04. The network was trained with an input batch size of 16, an iteration number (epoch) of 300 using the Adam optimizer, an initial learning rate of 0.5, a Momentum of 0.9, and a weight decay of 0.0001.

Evaluation metrics

Classification accuracy evaluation metrics were selected as Accuracy (Acc), Intersection-over-Union (IoU), Mean Intersection-over-Union (mIoU), Mean Accuracy (mAcc), and Overall Accuracy (OA)60; where Acc, IoU is used exclusively to evaluate the classification performance of each single class, and mIoU, mAcc, and OA are used to evaluate the performance of the entire test dataset. The loss function uses a linear combination of weighted cross-entropy loss and Lovász-Softmax loss75 to calculate the loss value, which can effectively improve the category imbalance problem, and each iteration outputs the validation set accuracy to ensure that the network is not overfitted.

Results and analyses

To fully validate the effectiveness of the performance of the proposed Multi-feature point Transformer model, evaluation experiments are conducted on the datasets SensatUrban and DALES to validate and analyze the network performance of the point Transformer and the Multi-feature point Transformer network for multi-feature fusion feature classification experimental analysis.

Compared with other methods on the sensaturban dataset

To evaluate the performance of the proposed PTMF network, nine influential 3D semantic segmentation networks are selected for pairwise comparison against the SensatUrban dataset: PointNet31, PointNet + + 76, TangentConv25, SPGraph 77, SparseConv78, KPConv79, RandLA-Net32, LCPFormer36, Point Transformer60. PointNet20 and PointNet + + 28, as two important models in the field of point cloud processing, use MLP to extract global and local features from disordered point clouds; TangentConv14 convolves the point cloud data on the tangent plane, which can handle point clouds with different densities and distributions; SPGraph46 converts the point cloud into a graph structure, combined with graph convolutional neural networks (GCN) to extract features; SparseConv47 and KPConv48 implement convolution operations on point clouds to extract features. RandLA-Net21 proposed a random hierarchical attention mechanism for point cloud feature extraction; LCPFormer25 proposed a local coordinate coding method based on Transformer architecture to capture the long-range dependency of point cloud features; Point Transformer37 directly applies Transformer to point cloud processing for the first time. Through the self-attention mechanism, the global context information in the point cloud is effectively captured. These methods have their own characteristics, and start with different angles and methods, and solve some key problems in point cloud processing, such as disorder, sparsity, fusion of local and global features, etc.

We adopt Point Transformer37, which is most relevant to our network, for the experimental setup for two primary reasons: Firstly, its straightforward design, superior efficiency, and outstanding performance render Point Transformer37 a promising application potential and technical significance within the ___domain of point cloud processing. Secondly, while Point Transformer has demonstrated impressive outcomes in semantic segmentation tasks for indoor scene point cloud data, it has yet to exhibit comparable effectiveness on outdoor, large-scale urban scene point cloud datasets. Specific comparative results are detailed in Table 1, where the optimal outcomes in each category are highlighted in bold black; Fig. 6 illustrates the comparative results of the SensatUrban dataset against other methodologies.

As shown in Table 1, the proposed PTMF method improves the mIoU by 5.17% and 0.12% compared to the previous methods with higher accuracy, LCPFormer and Point Transformer, respectively. The complete classification results for the SensatUrban dataset are shown in Table 1, and our proposed method also has great potential in fine-grained categories, such as the accuracy of Bridge, Parking, Street, Furniture, Car, and Footpath categories are much higher than the segmentation accuracy of other networks. Figure 4a also visualizes the Segmentation results to visualize the global accuracy of nine point cloud semantic segmentation methods on the SensatUrban dataset which demonstrates the effectiveness of our method.

Compared with other methods on the DALES dataset

To further demonstrate the performance of the proposed PTMF network, seven 3D semantic segmentation networks, namely KPConv48, PointNet + + 8, ConvPoint1, SuperPoint80, PointCNN 81, ShellNet 82, and Point Transformer37, are selected. The results are compared with the experimental results of the proposed method in the DALES dataset. The basics of KPConv48, PointNet + + 82, and Point Transformer37 networks have been described in 4.5-1). ConvPoint1, SuperPoint49, PointCNN50, and ShellNet51 all implement convolution operations on point clouds to process disordered point clouds to obtain point cloud feature information, but their implementation methods and application backgrounds are different.

The complete results of semantic segmentation of the DALES dataset are shown in Table 2, our method achieves the highest level of accuracy in most of the feature classes, such as Grounds, Buildings, trucks, Power Lines, fences, and vegetation feature classes reach the highest level of IoU; The overall accuracy mIoU and mAcc were 82.18% and 98.23%, respectively. Figure 4b shows the comparison of the segmentation results and overall accuracy results of the seven point cloud semantic segmentation methods for each feature in the DALES dataset, respectively. These results show that our method is better than the other seven networks in terms of the classification accuracy of each feature and the overall classification accuracy, which fully demonstrates that the PTMF method proposed by us is practicable and effective.

Result visualization

To evaluate the impact of the proposed PTMF Network more intuitively, we randomly select three point cloud scenes from the SensatUrban dataset and the DALES dataset, respectively, and have visualized their output results for comparison. The visualization results are shown in Figs. 5 and 6.

Figure 5 shows the comparison of our semantic segmentation results with real labels and Baseline Network Point Transformer on the SensatUrban dataset, RGB Cloud points are also shown in Fig. 5 to make the comparison clearer. Similar results can also be found in Fig. 6, which shows the results of the DALES dataset. The visualization results strongly support our motivation that the separate sampling of local regions leads to the destruction of instance information, the original Point Transformer uses local area sampling alone, which leads to the destruction of instance information, but our proposed PTMF method can effectively enhance the expression of point cloud geometric features, provide more information and discriminant representation, and help the network to further mine the fine-grained features of point cloud, to improve the classification accuracy.

Characteristic analysis

Screening of experimental protocols

According to the analysis in “Multi-feature point transformer module”, the geometric features in this paper are selected to assist the PTMF network in achieving the point cloud classification based on the four types of geometric features, namely Lλ, Pλ, Sλ, and Cλ, which are the most widely used correlation features based on eigenvectors and eigenvalues. According to the combination form of geometric features and point cloud information, the multi-feature fusion combination schemes for SensatUrban and DALES datasets are shown in Table 3, respectively. Taking the SensatUrban dataset as an example to conduct experiments according to the combination scheme shown in Table 1, the four combination schemes in feat7, the mIoU accuracy is only differentiated in the third decimal place, so we choose the X, Y, Z, R, G, B, Lλ combination which has the highest accuracy; X, Y, Z, R, G, B, Lλ, Pλ combination in feat8 has the highest accuracy; X, Y, Z, R, G, B, Lλ, Pλ, Sλ combination in feat9 has the highest accuracy; X, Y, Z, R, G, B, Lλ, Pλ, Sλ, Cλ combination has the highest accuracy; the combination of the four features in feat10 has the highest accuracy. Therefore, the best feature fusion scheme for the experimental design of this paper is (1) point cloud self-information; (2) point cloud self-information and Lλ; (3) point cloud self-information, Lλ, and Pλ; (4) point cloud self-information, Lλ, Pλ, and Sλ; and (5) point cloud self-information, Lλ, Pλ, Sλ, and Cλ. The input matrices of the multi-feature fusion model for the SensatUrban dataset are respectively.

Quantitative analysis

To comprehensively analyze the performance of the point Transformer network, experiments were carried out on the SensatUrban and DALES datasets according to the five schemes mentioned above, and the statistics of the evaluation indexes of the classification results of the five schemes are shown in Tables 4 and 5. To facilitate the longitudinal comparison of the classification results of this experiment with those of other methods, the classification accuracy of each feature in the two datasets is shown in Acc, and the overall classification indexes are shown in mIoU, mAcc, and OA; the optimal results in each column are indicated in bold black, and the visual display of the evaluation indexes of the overall accuracy of the two datasets is shown in Fig. 7.

From Table 4 SensatUrban dataset classification accuracy comparison, it can be seen that by adding four types of geometric features in turn, the classification accuracy for five feature categories Bridge, Parking, Street Furniture, Footpath, Car shows a significant improvement trend under the combined influence of the four types of geometric features, which is 26.41%, 27.18%, 14.67%, 15.8%, and 4.57% higher than that of the classification accuracy without adding four types of geometric features, respectively; We sequentially add four types of geometric features (Lλ, Pλ, Sλ, Cλ) to improve the classification accuracy of the above five types of features in turn, and the combination of the four features has the highest accuracy, which further verifies that the targeted geometric feature coding added to the network is able to achieve a significant improvement in the classification accuracy of the features that were originally extracted with low accuracy; Ground, Vegetation, Building, Water also improved the classification accuracy when adding geometric features, Wall and Traffic Road showed a decreasing trend in classification accuracy when adding four features; Rail and Bike were not detected.

From Table 5 DALES dataset classification accuracy comparison can be seen, the four geometric features are added in turn, eight kinds of ground cover features have a certain degree of enhancement, the combination of the four features use the highest accuracy, trucks increased by 12% points, it is the classification accuracy of the most improved features, and each other type of features enhancement is between 2 and 10% points, analyze the reason for its information with the dataset comes with information related to the data set, the reason for this is related to the information that comes with the dataset, which only has XYZ coordinate information, and the geometric features provide targeted geometric shape features to assist the XYZ coordinates to mine the deeper features of the features. The color information RGB is also important for fine-grained understanding of urban-scale point clouds, especially for distinguishing heterogeneous classes with consistent geometries.

From the overall evaluation metrics comparison plots of the five scenarios for the SensatUrban dataset and the DALES dataset shown in Fig. 7, it can be seen that under the multi-feature strategy, the overall metrics mIoU, mAcc, and OA show an increasing trend in the sequence of adding Lλ, Pλ, Sλ, and Cλ. Among them, the mIoU of the two datasets increased by 5.17% and 5.41%.

The comparative analysis of the classification accuracy of each category of the five scenarios in the two datasets and the overall accuracy evaluation indexes indicate that the Multi-feature Point Transformer method with multi-feature information fusion strategy proposed in this paper is reasonable and effective, which can improve the performance of the classification model of the model to a certain extent, and further improve the classification accuracy of the features. Reliable experimental validation and method improvement are provided for Point Transformer to be used on airborne large-scale outdoor urban scene datasets.

Visualization of experimental results of feature analysis

To demonstrate the effectiveness of our proposed Multi-feature point Transformer method more intuitively, we randomly sampled several point cloud scenes from the SensatUrban and DALES datasets to visualize their outputs, as shown in Fig. 8. Ground Truth represents the real feature classification labels, and each row of feat6, feat7, feat8, feat9, feat10 of SensatUrban data and feat3, feat4, feat5, feat6, feat7 of DALES data represent the overall semantic segmentation results and local zoom-in of different feature combinations, respectively. feat6 and feat3 rows represent the segmentation results of the two datasets’ point cloud self-contained data information XYZRGB and XYZ, respectively, followed by the corresponding rows each representing the segmentation results incorporating the combination of the four types of geometrical features Lλ, Pλ, Sλ, Cλ, respectively. From the different colored marked areas in Fig. 8.

Discussions

In this paper, the proposed PTMF method is applied to two aerial LiDAR point cloud datasets, SensatUrban and DALES, for experimental evaluation of the model. From the five scenarios of multi-feature combinations in Tables 4 and 5, although the overall evaluation metrics of the method on both datasets indicate that the self-contained information of the point cloud data is the best to use in combination with the four types of geometrical features, mIoU and mAcc accuracy being the highest. However, for the classification accuracy of each feature class, with the sequential addition of the four types of geometric features, the classification accuracy of the eight feature classes in the DALES dataset shows a trend of sequential enhancement, indicating that the four types of geometric features have a certain degree of enhancement effect on the features of the eight feature classes in this dataset; On the other hand, on the SensatUrban dataset, it appears that Wall and Traffic Road show a decreasing trend in classification accuracy when four features are added; Ground, Vegetation, Building, and Water also improve the classification accuracy when geometric features are added, but the highest accuracies occur in feat8, feat9, feat7, and feat7, respectively; Rail and Bike are not detected, which indicates that the four geometric features inhibit the feature expression of Wall and Traffic Road features, and do not play any role in Rail and Bike. The reasons for this were analyzed for two reasons:

One is related to the two datasets, although both datasets are outdoor large-scale urban scene datasets, the SensatUrban dataset contains 13 feature categories, which are complex and have huge differences in the scales of different categories, such as Building, Wall and Car, Bike, which will cause the model to be difficult to classify all the categories on different scales. The model can hardly classify all the categories at different scales at the same time with high accuracy; From Fig. 8, we can intuitively see that the urban scene features in the SensatUrban dataset are more complex and the features are more finely grained, which further increases the difficulty of feature classification, and there is an extreme data imbalance in this dataset, with very few Rail and Bike training samples, which account for about 0.1% and 0.001% of all the data samples, respectively, and the structure of these two types of features are very different from the other features. Even if we choose the strategy of mining point cloud morphological structure by combining the Transformer network structure, which has a strong advantage in feature extraction, and the more targeted point cloud geometric features, we still cannot detect and extract Rail and Bike information. As for the DALES dataset, the eight feature types contained in the dataset are all common features in urban scenes with a concentrated distribution, clear boundary features, and structural information, and these features have a sufficient number of samples annotated, and the four types of geometric features we have incorporated, Lλ, Pλ, Sλ, and Cλ, have a strong relevance to the morphological structure of these features, and thus can effectively promote the features’ strong expression. Therefore, the classification accuracy of each feature shows the expected effect of step-by-step improvement.

Secondly, since each of the four types of geometric features describes different geometric properties of the point cloud in the neighborhood, different geometric properties have different effects on different features. Lλ describes the degree of the linear fit of local surfaces, with low linearity estimates on planes with good ductility and high linearity estimates on linear edges such as edges of buildings or roads, whereby effective extraction of regions with good linear edges can be achieved, which can theoretically assist in the classification of features along the edges; Pλ describes the change in flatness of local point cloud fitting surfaces, and features with good planarity are highly flat; Sλ is mainly used to describe spherical features, e.g. a vegetated area will have a high degree of sphericity of the point cloud in that area due to the shape of some of the tree canopies; Cλ describes the degree of the drastic change of the surface fitted to the point cloud within a local neighborhood, with a higher degree of curvature within a densely vegetated local area, which is significantly higher than the curvature of the roofs of flatter buildings and roads. According to the descriptions of the four geometric features above, Lλ, Pλ has an enhancement effect on the feature extraction of relatively flat Ground features, while Sλ, Cλ do not help or even inhibit the feature recognition of locally flat and ductile features; for the Vegetation category which contains high and low trees, shrubs, hedges, and bushes, the joint use of Lλ, Pλ, and Sλ enhances their feature information; Lλ, Pλ has an enhancement effect on the extraction of flat roof plane features for relatively flat Buildings, while Sλ improves the recognition of some pointed or rounded roofs; Water in the SensatUrban dataset contains rivers and canals with narrow and long linear features, so Lλ is more effective for its feature enhancement, and with Pλ, Sλ, Cλ the inhibition is reduced. For the Wall feature category in the SensatUrban dataset, which contains fences, motorway barriers, walls, and other obstacles, the geometric structure of these features, the external shape of these features, and the scale of the object structure vary greatly, and the sparseness of the point cloud obtained from the data acquisition angle is not the same, resulting in the geometric features that we selected are almost not targeted at these features, and the inclusion of them inhibits the expression of the feature information, resulting in the classification accuracy being lowered. The Traffic Road category includes traffic roads, main streets, highways, and drivable areas, so it is possible to carry a large number of vehicle features in this category, so adding geometric features will misclassify the Traffic Road points into other features such as automobiles, which will reduce the recognition accuracy. This is the reason why the classification results do not increase but decrease when four geometric features are added to the Water, Wall, and Traffic Road features in the SensatUrban dataset.

In summary, the proposed PTMF method for the prevalent categories in urban scenes, such as the DALES dataset demonstrates, that the distribution of landforms is concentrated, the boundary features and structural information are clear in the urban scene data, and the four types of geometric features Lλ, Pλ, Sλ, Cλ that we incorporate are very specific to the morphological structure of these landforms, and thus can effectively promote the strong expression of the landform features so that the classification accuracy of each feature shows the expected effect of step-by-step improvement. However, in the case of the SensatUrban dataset, which is a complex urban scene with fine-grained features, and a dataset with huge differences in the scale of feature classes and extreme data imbalance, the method in this paper has some limitations in breaking through the accuracy of individual features. However, in general, the method is effective in improving the classification accuracy of point clouds for large-scale urban scenes.

Conclusions

In this work, we propose a Transformer-based Multi-feature Fusion Network (PTMF), which explicitly integrates geometric features into the Point Transformer architecture for large-scale airborne point cloud classification. In terms of feature selection optimization, theoretical analysis and extensive experimental validation demonstrate that Lλ, Pλ, Sλ, and Cλ can effectively enhance the fine-grained classification accuracy of aerial point clouds. Regarding fusion mechanism innovation, we introduce a Multi-feature Point Transformer Module that enables adaptive multi-level fusion of geometric and semantic features. This innovative mechanism not only strengthens feature representation but also provides a more flexible approach for feature interaction in complex point cloud classification scenarios. In terms of network architecture innovation, PTMF improves the point cloud modeling capability of traditional Transformer architectures by incorporating geometric information into the self-attention mechanism, allowing the network to better capture both global and local characteristics of complex point cloud morphologies. Comprehensive experimental evaluations were conducted on large-scale urban benchmark datasets, SensatUrban and DALES. The results demonstrate that the proposed method exhibits outstanding performance and robustness across diverse scenarios. In future work, we aim to further improve the classification accuracy of individual feature categories in the SensatUrban dataset by assigning learnable weights to geometric features during the encoding process. This involves analyzing the contribution of each geometric feature to enhance model performance and classification accuracy.

Data availability

All data generated or analyzed during this study are included in this published article.

References

Zhuang, X. et al. Perception-aware multi-sensor fusion for 3D lidar semantic segmentation. IEEE Trans. Geosci. Remote Sens. 59, 1234–1245 (2021).

Charles, R. Q., Su, H., Mo, K. C., Guibas, L. J. & PointNet Deep learning on point sets for 3D classification and segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 77–85 (2017).

Mekuria, R. et al. Design, implementation, and evaluation of a point cloud compression framework. ISPRS J. Photogramm. Remote Sens. 130, 123–135 (2017).

Ren, Y., Xiao, Y., Zhou, Y., Zhang, Z. & Tian, Z. CSKG4APT: a cybersecurity knowledge graph for advanced persistent threat organization attribution. IEEE Trans. Knowl. Data Eng. 1–15 (2022).

Tang, K. et al. SymAttack: symmetry-aware imperceptible adversarial attacks on 3D point clouds. In Proceedings of the 32nd ACM International Conference on Multimedia 3131–3140 (ACM, Melbourne 2024).

Liu, H. et al. PHCG: PLC honeypoint communication generator for industrial IoT. EEE Trans. Mob. Comput. 24 (1), 198–208 (2025).

Bouziani, A. et al. Comparison assessment of digital 3D models obtained from photogrammetry and LiDAR. ISPRS Arch. Photogramm. Remote Sens. Spatial Inf. Sci. Volume XLVI-4/W5-2021, (2021).

Li, W., Wang, F. D. & Xia, G. S. A geometry-attentional network for ALS point cloud classification. ISPRS J. Photogramm. Remote Sens. 164, 26–40 (2020).

Xu, Y., Jia, J. & Zhu, X. Classification of lidar point clouds using supervised learning. ISPRS J. Photogramm. Remote Sens. 167, 186–178 (2020).

Chen, L. et al. Multi-feature aggregation for semantic segmentation of airborne lidar point clouds. ISPRS J. Photogramm. Remote Sens. 192, 1–15 (2022).

Hu, H., Wang, Y. & Zhang, X. Airborne lidar point cloud classification based on deep learning features. ISPRS J. Photogramm. Remote Sens. 156, 123–135 (2020).

Huang, J. et al. GraNet: global relation-aware attentional network for lidar point cloud classification. ISPRS J. Photogramm. Remote Sens. 178, 109–124 (2021).

Zhu, X. et al. Cylindrical and asymmetrical 3D convolutional networks for lidar point cloud classification. ISPRS J. Photogramm. Remote Sens. 164, 156–170 (2020).

Vosselman, G., Coenen, M. & Rottensteiner, F. Contextual segment-based classification of airborne laser scanner data. ISPRS J. Photogramm. Remote Sens. 128, 354–371 (2017).

Chan, J. C. W. & Paelinckx, D. Evaluation of random forest and adaboost tree-based ensemble classification and spectral band selection for ecotope mapping using airborne hyperspectral imagery. Remote Sens. Environ. 112, 2999–3011 (2008).

Mallet, C., Bretar, F., Roux, M., Soergel, U. & Heipke, C. Relevance assessment of full-waveform lidar data for urban area classification. ISPRS J. Photogramm. Remote Sens. 66, S71–S84 (2011).

Ghamisi, P. & Hofle, B. LiDAR data classification using extinction profiles and a composite kernel support vector machine. IEEE Geosci. Remote Sens. Lett. 14, 659–663 (2017).

Sun, J., Lai, Z. & Long, Y. Point cloud classification features. ISPRS J. Photogramm. Remote Sens. 128, 354–371 (2017).

Weinmann, M., Jutzi, B., Hinz, S. & Mallet, C. Semantic point cloud interpretation based on optimal neighborhoods, relevant features and efficient classifiers. ISPRS J. Photogramm. Remote Sens. 105, 286–304 (2015).

Xu, Y. et al. Classification of lidar point clouds using supervoxel-based detrended feature and perception-weighted graphical model. IEEE J. Sel. Top. Appl. Earth Obs Remote Sens. 13, 72–88 (2020).

Alshehhi, R., Marpu, P. R., Woon, W. L. & Mura, M. D. Simultaneous extraction of roads and buildings in remote sensing imagery with convolutional neural networks. ISPRS J. Photogramm. Remote Sens. 130, 139–149 (2017).

Wu, B., Zhou, X., Zhao, S., Yue, X. & Keutzer, K. SqueezeSegV2: Improved Model Structure and Unsupervised Domain Adaptation for Road-Object Segmentation from a LiDAR Point Cloud. Preprint at (2018). http://arxiv.org/abs/1809.08495

Su, H., Maji, S., Kalogerakis, E. & Learned-Miller, E. Multi-view convolutional neural networks for 3D shape recognition. In IEEE International Conference on Computer Vision (ICCV) 945–953 (IEEE, Santiago 2015).

Yang, Z. & Wang, L. Learning relationships for multi-view 3D object recognition. In 2019 IEEE/CVF International Conference on Computer Vision (ICCV) 7504–7513 (IEEE, Seoul 2019).

Tatarchenko, M., Park, J., Koltun, V., Zhou, Q. Y. & Recognition, P. Tangent convolutions for dense prediction in 3D. In 2018 IEEE/CVF Conference on Computer Vision 3887–3896 (IEEE, Salt Lake City 2018).

Lin, Y. et al. FPConv: learning local flattening for point Convolution. ISPRS J. Photogramm. Remote Sens. (2025).

Kuang, H., Wang, B., An, J., Zhang, M. & Zhang, Z. Voxel-FPN: multi-scale voxel feature aggregation for 3D object detection from LIDAR point clouds. Sensors 20, 704 (2020).

Riegler, G., Ulusoy, A. O. & Geiger, A. OctNet: learning deep 3D representations at high resolutions. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 6620–6629 (IEEE, Honolulu 2017).

Brock, A., Lim, T., Ritchie, J. M. & Weston, N. Generative and discriminative voxel modeling with convolutional neural networks. Preprint at (2016). http://arxiv.org/abs/1608.04236

Zhu, Z., Wang, X., Bai, S., Yao, C. & Bai, X. Deep learning representation using autoencoder for 3D shape retrieval. Preprint at (2014). http://arxiv.org/abs/1409.7164

Charles, R. Q. et al. Deep Learning on point sets for 3D classification and segmentation. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 77–85 (IEEE 2017). https://doi.org/10.1109/CVPR.2017.16

Hu, Q. et al. RandLA-Net: efficient semantic segmentation of large-scale point clouds. In 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 11105–11114 (IEEE, Seattle 2020).

Pan, X., Xia, Z., Song, S., Li, L. E. & Huang, G. 3D object detection with pointformer. ISPRS J. Photogramm. Remote Sens. 128, 354–371 (2017).

Zhu, X. et al. Deformable DETR: deformable transformers for end-to-end object detection. Preprint at (2021). http://arxiv.org/abs/2010.04159

Xu, Y. et al. Deep learning on point sets with parameterized convolutional filters. in Computer Vision–ECCV 2018 (eds Ferrari, V., Hebert, M., Sminchisescu, C. & Weiss, Y.) vol. 11212 90–105 (Springer International Publishing, Cham, (2018).

Huang, Z., Zhao, Z., Li, B. & Han, J. LCPFormer: towards effective 3D point cloud analysis via local context propagation in transformers. IEEE Trans. Circuits Syst. Video Technol. 33, 4985–4996 (2023).

Feng, Y., Zhang, Z., Zhao, X., Ji, R. & Gao, Y. GVCNN: group-view convolutional neural networks for 3D shape recognition. In 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition 264–272 (IEEE, Salt Lake City 2018). https://doi.org/10.1109/CVPR.2018.00035

Yang, Y. & Wang, X. Learning relationships for multi-view 3D object recognition. ISPRS J. Photogramm. Remote Sens. 128, 354–371 (2019).

Wang, Y. et al. Dominant set clustering and pooling for multi-view learning. ISPRS J. Photogramm. Remote Sens. 128, 372–385 (2019).

Cao, Y. et al. 3D object classification via spherical projections. ISPRS J. Photogramm. Remote Sens. 128, 386–400 (2017).

Kalogerakis, E. et al. 3D shape segmentation with projective convolutional networks. ISPRS J. Photogramm. Remote Sens. 128, 401–415 (2017).

Maturana, D. & Scherer, S. VoxNet: a 3D convolutional neural network for real-time object recognition. ISPRS J. Photogramm. Remote Sens. 128, 416–425 (2015).

Le, T. & Duan, Y. PointGrid: a deep network for 3D shape understanding. In 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition 9204–9214 (IEEE, Salt Lake City 2018). https://doi.org/10.1109/CVPR.2018.00959

Tchapmi, L. P., Choy, C. B., Armeni, I., Gwak, J. & Savarese, S. SEGCloud: semantic segmentation of 3D point clouds. Int. J. Comput. Vis. 128, 1234–1245 (2017).

Graham, B. & Maaten, L. V. D. Submanifold sparse convolutional networks. Conf. Comput. Vis. Pattern Recognit. 1, 97–106 (2017).

Wang, P. S., Liu, Y., Guo, Y. X., Sun, C. Y. & Tong, X. O-CNN: Octree-based convolutional neural networks. ACM Trans. Graph. 36, 72:1–72:11 (2017).

Riegler, G., Ulusoy, A. O. & Geiger, A. OctNet: learning deep 3D representations at high resolutions. IEEE Conf. Comput. Vis. Pattern Recognit. 1, 3577–3586 (2017).

Wang, Y. et al. A voxel-based CNN for 3D object classification. IEEE Trans. Pattern Anal. Mach. Intell. 41, 1769–1782 (2019).

Jiang, M., Wu, Y., Zhao, T., Zhao, Z. & Lu, C. PointSIFT: a SIFT-like network module for 3D point cloud semantic segmentation. Eur. Conf. Comput. Vis. 11211, 567–583 (2018).

Wu, W., Qi, Z. & Fuxin, L. PointConv: deep convolutional networks on 3D point clouds. IEEE Conf. Comput. Vis. Pattern Recognit. 1, 9621–9630 (2019).

Thomas, H., Qi, C. R., Deschaud, J. E., Marcotegui, B. & Goulette, F. KPConv: flexible and deformable convolution for point clouds. IEEE Int. Conf. Comput. Vis. 1, 6411–6420 (2019).

Li, Y. et al. PointCNN: convolution on X-transformed points. Adv. Neural Inf. Process. Syst. 31, 820–830 (2018).

Wu, Z. et al. DGCNN: disordered graph convolutional neural network. IEEE Trans. Neural Netw. Learn. Syst. 30, 3401–3412 (2019).

Tian, Y., Zhang, Y., Zhang, H. & Xu, C. PCGCN: partition-centric processing for accelerating graph convolutional networks. IEEE Trans. Parallel Distrib. Syst. 31, 2269–2282 (2020).

Zhao, H., Jiang, L., Fu, C. W. & Jia, J. PointWeb: enhancing local neighborhood features for point cloud processing. IEEE Conf. Comput. Vis. Pattern Recognit. 1, 5565–5573 (2019).

Hu, Q. et al. RandLA-Net: efficient semantic segmentation of large-scale point clouds. IEEE Conf. Comput. Vis. Pattern Recognit. 1, 11108–11117 (2020).

Geng, Z. et al. Multi-scale attentive aggregation for lidar point cloud segmentation. IEEE Trans. Image Process. 30, 7767–7779 (2021).

Wu, F., Fan, A., Baevski, A., Dauphin, Y. N. & Auli, M. Pay less attention with lightweight and dynamic convolutions. Preprint at (2019). http://arxiv.org/abs/1901.10430

Wang, W. et al. Pyramid vision transformer: a versatile backbone for dense prediction without convolutions. In 2021 IEEE/CVF International Conference on Computer Vision (ICCV) 548–558 (IEEE, Montreal 2021). https://doi.org/10.1109/ICCV48922.2021.00061

Zhao, H., Jiang, L., Jia, J., Torr, P. H. S. & Koltun V. Point transformer.

Rigutini, L., Papini, T., Maggini, M. & Scarselli, F. SortNet: learning to rank by a neural preference function. IEEE Trans. Neural Netw. 22, 1368–1380 (2011).

Qiu, S., Anwar, S. & Barnes, N. PU-transformer: point cloud upsampling transformer. in Computer vision—ACCV 2022 (eds Wang, L., Gall, J., Chin, T. J., Sato, I. & Chellappa, R.) vol. 13841 326–343 (Springer Nature, Cham, (2023).

Dai, M., Xing, S., Xu, Q., Li, P. & Chen, K. Multifeature fusion and geometric convolution for object classification of airborne lidar point clouds. J. Image Graph. 574–585 (2022).

Lai, M., Zhao, J., Liu, C., Cui, C. & Long, H. Semantic segmentation of airborne lidar point clouds based on CAFF-PointNet. Laser Optoelectron. Prog. 58, 202–223 (2021).

Tian, S. & Long, A. A point cloud classification method based on graph Convolution and multi-level feature fusion. Laser Optoelectron. Prog. 15, 123–130 (2023).

Huang, R. et al. Deep point embedding for urban classification using ALS point clouds: a new perspective from local to global. ISPRS J. Photogramm. Remote Sens. 163, 62–81 (2020).

Huang, Q., Wang, W. & Neumann, U. Recurrent Slice Networks for 3D Segmentation of Point Clouds. In 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition 2626–2635 (IEEE, Salt Lake City 2018). https://doi.org/10.1109/CVPR.2018.00278

Huang, Q., Wang, W. & Neumann, U. Recurrent Slice Networks for 3D Segmentation of Point Clouds. in 2018IEEE/CVF Conference on Computer Vision and Pattern Recognition 2626–2635 (IEEE, Salt Lake City, UT, 2018).https://doi.org/10.1109/CVPR.2018.00278

Weinmann, M. et al. Distinctive 2D and 3D features for automated large-scale scene analysis in urban areas. ISPRS J. Photogramm. Remote Sens. 105, 286–304 (2015).

Demantké, J. et al. Streamed vertical rectangle detection in terrestrial laser scans for facade database production. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. I-3, 123–130 (2012).

Hu, Q. et al. SensatUrban: learning semantics from urban-scale photogrammetric point clouds. Preprint at (2022). http://arxiv.org/abs/2201.04494

Varney, N., Asari, V. K. & Graehling, Q. DALES: a large-scale aerial LiDAR data set for semantic segmentation. Preprint at (2020). http://arxiv.org/abs/2004.11985

Liu, X. Principles and applications of data science. In Proceedings of the International Conference on Data Science Vol. 123. 456–789 (2022).

Dao, Y. & Ly, Z. A comprehensive study on medical image segmentation. In Proceedings of the International Conference on Medical Imaging Vol. 456, 901–912 (2023).

Chen, J., Zhao, Y., Meng, C. & Liu, Y. Multi-feature aggregation for semantic segmentation of an urban scene point cloud. Remote Sens. 14, 5134 (2022).

Qi, C. R., Yi, L., Su, H. & Guibas, L. J. PointNet++: deep hierarchical feature learning on point sets in a metric space. Adv. Neural Inf. Process. Syst. (2017). arXiv preprint arXiv:1706.02143.

Lowphansirikul, C., Kim, K. S., Vinayaraj, P., Tuarob, S. & Singh, A. 3D semantic segmentation of large-scale point-clouds in urban areas using deep learning. In 2019 11th International Conference on Knowledge and Smart Technology (KST) 238–243 (IEEE, Phuket 2019).

Khakhlyuk, A., Dense captioning for 3D scenes with SparseConv. J. Comput. Vis. 15, 123–135 (2023).

Thomas, H. et al. KPConv: flexible and deformable convolution for point clouds. In 2019 IEEE/CVF International Conference on Computer Vision (ICCV) 6410–6419 (IEEE, Seoul 2019).

DeTone, D., Malisiewicz, T. & Rabinovich, A. SuperPoint: self-supervised interest point detection and description. In 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW) 337–33712 (IEEE, Salt Lake City 2018).

Li, Y., Chen, Z., Wu, B. & Xu, M. PointCNN: convolution on X-transformed points. Neural Netw. 15, 112–130 (2020).

Zhang, Z., Hua, B. S. & Yeung, S. K. ShellNet: efficient point cloud convolutional neural networks using concentric shells statistics. In 2019 IEEE/CVF International Conference on Computer Vision (ICCV) 1607–1616 (IEEE, Seoul 2019).

Acknowledgements

This work is funded by the Joint Fund of Collaborative Innovation Center of Geo-Information Technology for Smart Central Plains, Henan Province, and Key Laboratory of Spatiotemporal Perception and Intelligent Processing Ministry of Natural Resources, No.232108.This work was part of the National Natural Science Foundation of China for Surface Programs, No.41876105.This work was part of the Excellent Youth Foundation of He’nan Scientific Committee, No. 242300421043.This work was part of the Key scientific research Foundation of the university in Henan Province, No. 25A420004.This work was part of the Science and Technique Foundation of Henan Province, No. 242102321009.This work was part of the Key Scientific Research Foundation of the university in Henan Province, No. 24A420001.

Author information

Authors and Affiliations

Contributions

A. and D. led the study design and experimental planning. C. as the senior advisor, guided the research direction, manuscript structure, and content review, while E managed data acquisition. D. and F. contributed to algorithmic enhancements. G. H. provided technical support in experiments.B. conducted an in-depth analysis of the research results and played a significant role in revising the manuscript. All authors collaborated on writing, reviewing, and approving the final manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Pan, J., Cao, J., Xing, S. et al. An aerial point cloud classification using point transformer via multi-feature fusion. Sci Rep 15, 22924 (2025). https://doi.org/10.1038/s41598-025-02719-z

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-025-02719-z