Abstract

Model verification is a crucial step in the development and optimization of Earth system models (ESMs). Recently, a deep learning approach in evaluating statistical consistency for the atmosphere component of ESMs (A-ESM-DCT) has been proven effective in model verification. However, due to the longer timescales required for the ocean to adjust compared to the atmosphere, achieving an efficient method for evaluating the consistency of ocean models necessitates extending model integration time while reducing ensemble simulation size. Traditional deep learning models, such as those in A-ESM-DCT, often lack robustness and fail to guarantee convergence in small data regimes. We introduce a deep learning consistency test for the ocean component of ESMs, referred to as O-ESM-DCT. The O-ESM-DCT is based on a physics-informed convolutional autoencoder (PIConvAE) model to achieve rapid convergence with significantly fewer simulations and adopts the data-fidelity and physical-driven losses as an indicator to determine the consistency. The O-ESM-DCT is applicable for evaluating the consistency of Community Earth System Model in heterogeneous computing environments as well as modifications to the compiler, numbers of computing cores, and model parameters. Our O-ESM-DCT offers an effective and objective solution for ensuring the reliability of the development and optimization processes for the ocean component of ESMs on high-performance computing systems.

Similar content being viewed by others

Introduction

Earth system models (ESMs) are established by dynamical, physical, chemical, and biological processes through numerical methods1, such as the Community Earth System Model (CESM)2. Developed by National Center for Atmospheric Research (NCAR), the CESM provides the simulations of the past, present, and future climate states of Earth. The CESM consists of multiple component models, such as atmosphere, land, ocean, and sea ice models, which are coupled together. Model verification and validation are necessary works in the development of ESMs3. The research purposes and datasets of model verification and validation are different. Model validation evaluates the ability of the model to simulate the real world by comparing with the observational data products4,5,6,7, and model verification evaluates the correctness of the model during the computing environment changes by comparing with original and trusted simulations8,9,10,11,12,13,14,15,16,17,18.

With the development of supercomputers, changes in software and hardware, such as compiler changes and heterogeneous technologies, as well as changes in parallel strategies due to the increase in computing cores, can cause the simulation results of ESMs that are no longer bit-for-bit (BFB) identical to previous results19, which needs to ensure that the outputs still produce the same climate. Hence, we are expected to develop a tool and focus on model verification to determine whether or not changes during the development and optimization of ESMs adversely affect the simulation.

Model verification needs to consider the rationality of the datasets and methods. Firstly, computing environment changes such as compiler changes and heterogeneous technologies can inhibit bit-for-bit reproducibility of ESM simulations but are not expected to affect the scientific consistency18, evaluating the statistical consistency of ESM simulations is the currently common model verification approach. Secondly, the traditional model verification approaches need assessment and support from climate scientists, so the coarse-grained and objective approaches are widely used13,14,15,16,18. Finally, the time scales of ocean and atmospheric simulations for model verification are different. For example, the community Earth system model ensemble consistency test (CESM-ECT) for Community Atmospheric Model (CAM) evaluates statistical distinguishability at 9 time steps16, but the CESM-ECT for Parallel Ocean Program (POP) requires annual average simulation results14. That is because the ocean and atmosphere models are different in dynamics, spatial scales, and timescales. The propagation timescale for adjusting the ocean is much longer than adjusting the atmosphere14.

The ESM deep learning consistency test for the atmosphere component of ESMs (A-ESM-DCT) is a coarse-grained and objective approach and uses an unsupervised bidirectional gate recurrent unit-autoencoder (BGRU-AE) to detect the consistency in heterogeneous computing, compiling optimization option changes, and model parameter changes, for the features of simulation results on the HPC systems18. However, the A-ESM-DCT is not applicable to the simulations of ocean models. First, the A-ESM-DCT only evaluates variables from the CAM component of CESM at the 96 time steps. The consistency test approach for the ocean component of ESMs requires annual average simulation results. The cost of data acquisition of annual ocean simulations is higher than that of short-term atmospheric simulations. Reducing the ensemble size is a necessary method to save costs, but the deep convolutional or recurrent neural networks lack robustness and fail to provide the guarantees of convergence in the small data regime20. The physical-information neural networks (PINNs) can demonstrate advantages in the small data regime. The main idea of the PINNs is that physical laws or empirically validated rules are introduced into NNs to achieve convergence fast and generalize well even when only a few training datasets are available20,21. Then, the A-ESM-DCT evaluates the outputs from the atmosphere component of CESM after the data pre-processing by the global area-weighted and vertical mean. However, the global weighted-area mean for the data pre-processing can not be used for ocean model data, because the variability of ocean data is less heterogeneous than that of atmospheric data across the ESMs grid14. The outputs of ocean models with spatial attributes are suitable for analysis in convolutional neural networks (CNNs)22. In the last several years, the CNNs have been explored in the field of Earth system science, such as data-driven weather forecasting23,24,25,26,27,28 and tropical cyclone detecting29,30,31,32. Hence, our focus in this study is on an unsupervised physics-informed and CNN deep learning tool for evaluating the statistical consistency of the ocean component of ESMs.

The goal of this article is to document the development of a physics-informed deep learning consistency test approach for the ocean component of ESMs, referred to as O-ESM-DCT, which is a supplement to ESM-DCT. The tool is based on the unsupervised physics-informed convolutional autoencoder (PIConvAE)33 model to analyze spatial attributes and achieve convergence fast in the few ensembles, and is applied to evaluate whether or not a new configuration is consistent with the original trusted configuration for the ocean component of CESM. The O-ESM-DCT is a first-step ocean model consistency test tool based on deep learning, which can achieve rapid convergence with significantly fewer simulations compared with traditional machine learning methods, analyze spatial features compared with statistical methods, and provide the guidelines for building a PINN including more complex nonlinear physical processes to evaluate the consistency for the ocean component of ESMs.

The rest of the paper is organized as follows. “Methods” Section details the physics-informed deep learning consistency test approach. “Results” Section shows the results of experiments with the consistency test approach. “Conclusion” Section shows the conclusion.

Methods

In this study, we develop a physics-informed deep learning consistency test tool for the ocean component of ESMs. Our development includes: (1) designing a PIConvAE model to study the spatial features and achieve convergence fast. (2) developing an ensemble approach to take the annual statistical distributions as the datasets. (3) designing an anomaly detection indicator to determine the consistency.

A PIConvAE deep learning model

The CNNs have been used for spatial feature analysis in the field of Earth system science. The 2D data such as the images or simulation results of sea surface temperature, are the main application scenarios of the 2D CNN. For the 3D data such as the simulation results of ocean models with added depth direction based on longitude and latitude direction, the structure of CNNs needs to be upgraded. The 3D CNNs are more suitable for 3D feature learning compared to 2D CNNs34,35, hence we apply the 3D CNNs to the field of 3D data analysis to evaluate the consistency for the ocean component of ESMs. The 3D convolution is achieved by convolving a 3D kernel to the cube formed by stacking multiple contiguous frames together. The value at position (x, y, z) on the jth feature map in the ith layer is given by

where tanh is the hyperbolic tangent function, bij is the bias for this feature map, m indexes over the set of feature maps in the (i-1)th layer connected to the current feature map, \(w_{{ij{\text{m}}}}^{pqr}\) is the (p, q ,r)th value of the kernel connected to the mth feature map in the previous layer, Pi is the height of the kernel, Qi is the width of the kernel, Ri is the size of the 3D kernel along the dimension of depth in ocean model.

PINNs20 are hybrid approaches that take into account data-based neural network models and physics-informed mechanistic models36. Many mathematical models are used to build the PINNs in Earth system modeling, such as the Navier–Stokes equation, Burgers equation, wave equation, advection–diffusion equation, and discrete-time models21,36,37. However, the mathematical models in the PINNs for evaluating the consistency for the ocean models have three constraints. First, evaluating the ESMs consistency uses the simulation results at a certain moment or time average, thus making the mathematical models independent of time as far as possible. Second, relative to other components in the Earth system, the ocean model has fewer independent diagnostic variables: temperature, sea surface height, salinity, zonal velocity, and meridional velocity14. The mathematical models should be related to the diagnostic variables.

We use the seawater equation of state (EOS) derived by McDougall and Jackett38 to build a PINN and then evaluate the consistency of the ocean models. The EOS for seawater is a diagnostic equation for the density in terms of temperature, salinity, and pressure. The equation of EOS is as follows:

where E1 is a 12-term polynomial and E2 is a 13-term polynomial, \(\rho\) is the density, \(\theta\) is potential temperature, S is the salinity, p is the pressure. To avoid a nonlinear integration of the hydrostatic equation, the pressure, p, is approximately evaluated as a time-independent function of depth p0(z) by means of the equation derived in Dukowicz39. The equation of p0(z) is as follows:

where z is the depth. The equation of E1 and E2 are as follows:

and

where the coefficients are shown in Table 1.

In this study, we use the PIConvAE deep learning model to evaluate statistical consistency for the ocean component of ESMs on the HPC systems. We use the 3D CNN model in the encoder and decoder to analyze the 3D features and integrate the EOS function into the loss function to constrain the deep learning model outputs to satisfy the physics-driven laws. Following the Eq. (1), the physics-driven loss function is as follows:

where the \(\theta^{\prime }\),\(\rho^{\prime }\), S′, and p′ are the deep learning model outputs. The PIConvAE deep learning model loss function is as follows:

where \(\lambda\) is the loss coefficient to make a balance between the loss terms, and LAE is the data-fidelity loss representing the reconstruction errors between the output and input of the AE. The illustration of the PIConvAE model is shown in Fig. 1.

The illustrations of the PIConvAE model. The blue dashed box represents the encoder, and the red dashed box represents the decoder. The short code C is the output of the encoder. The Conv3D is the CNN model. The ReLU is the activation function. The input and output are the input values and predicted values of the data.

It’s worth mentioning that we are expected to develop an efficient tool based on the PINN to evaluate the consistency for the ocean component of ESMs. However, the ocean models are established by many complex nonlinear physical processes, such as mixed layer depth (MLD) feedbacks and internal waves. At present, as the first step, given the constraint of efficiency and computing resources, we simplify the PINN structure and achieve as high accuracy as possible. Here, we only use the EOS (not including complex physical processes mentioned yet) as the physical-driven term to establish our PIConvAE model, which meets the basic consistency evaluation requirements. However, in the future, the tool could be improved by adding more ocean processes with more physical balance.

In addition, in this first step ocean model consistency test tool, which addresses the fundamental balance (geostrophic in this case), we only use the primary diagnostic variables rather than all independent diagnostic variables (zonal and meridional velocities not included in this case, for instance). In the future, with the advancement of ocean models with more physical phenomena simulated in detail, the consistency test model needs to be refined to account for accurate processes such as precise thermohaline circulation40 and detailed sea ice melting41. Under the circumstance, more complete independent diagnostic variables such as meridional and zonal velocities as well as sea surface height should be included to describe the ageostrophic signals and instantaneous freshwater flux, etc.

An ensemble approach to establish the datasets

The ESM-DCT evaluates the consistency through the analysis feature of ensemble simulation data. Ensembles created by small initial perturbations are used to diagnose the influence of computing environment changes42,43,44,45 and reduce the influence of the initial condition uncertainty46. For the O-ESM-DCT, we generate the ensemble for the datasets by running simulations that differ only in a random perturbation of the initial ocean temperature.

The propagation timescale for adjusting the ocean is much slower than that in the atmosphere. Evaluating the consistency for the ocean component of ESMs requires annual average simulation results. Following previous studies8,14, we generate 3-year simulation ensembles as datasets and evaluate the distribution of the annual average. The ensemble simulation data as the training, validation, and testing datasets are generated to train the PIConvAE model, adjust the hyperparameters of the PIConvAE model, and adjust the size of the training sets. The ratio of training, validation, and testing datasets is 6:2:2.

An anomaly detection indicator to determine the consistency

The ESM-DCT uses the loss threshold of the training datasets as an indicator to quickly determine the consistency and issue an overall pass or fail result. For the O-ESM-DCT, the loss contains the physics-driven loss by the output of the PIConvAE and the data-fidelity loss by the reconstruction errors between the output and input of the PIConvAE. We use the mean squared error (MSE) of the inputs and outputs as the reconstruction errors. Firstly, we use the training and validation datasets to train the PIConvAE deep learning model. Then, we re-input the training datasets into the saved PIConvAE deep learning model and regain the maximum value of the losses. Finally, we use the saved PIConvAE and the loss threshold of training datasets to determine the consistency of the testing datasets. The losses of normal testing datasets should be lower than the loss threshold of training datasets since the normal testing datasets are close to the training datasets47. If the passing rate of the testing datasets is equal to 0%, the tool issues an overall “failure”. Although the threshold setting is relatively simple, it is feasible to use it as a fast and objective indicator for anomaly detection and evaluating the consistency. Figure 2 illustrates the workflow for the O-ESM-DCT.

Software tools to evaluate the consistency

We further discuss the software O-ESM-DCT tools for the consistency evaluation of the ocean component of ESMs on HPC systems. We add the O-ESM-DCT tools to the existing ESM-DCT suite of Python tools. The O-ESM-DCT tools include four functions: 1) pre-processing the ESM simulation results and constructing the datasets; 2) training the PIConvAE model; 3) getting the loss threshold of training datasets; 4) testing the PIConvAE model.

Firstly, the ensemble simulation data as the training, validation, and testing datasets are generated and handled as needed, as noted in “An ensemble approach to establish the datasets” Section. The inputs of the deep learning model are variables: potential temperature, salinity, pressure, and density. The potential temperature and salinity are the simulation results of the ocean component of ESMs. The pressure is approximately evaluated as a time-independent function of depth by means of the equation derived in Dukowicz39. The density is calculated using the potential temperature, salinity, and pressure by the EOS. All ensembles are standardized by min–max normalization. We create Python codes get_datasets to compute the variables and generate the training, validation, and testing datasets.

Secondly, the PIConvAE model should be trained using the training datasets and validation datasets. At each time step of training the PIConvAE model, the users can input the validation datasets into the PIConvAE model and calculate the losses. We create Python codes train + validate_PIConvAE to train and save the PIConvAE model. We use the Pytorch tool to implement the PIConvAE model. The users adjust the PIConvAE model parameters until the minimum losses of the validation datasets and save the final PIConvAE model.

Thirdly, the loss threshold of the training datasets is calculated as an indicator to issue an overall pass or fail result. We create Python codes threshold_PIConvAE where the users can re-input the training datasets into the saved PIConvAE deep learning model and regain the maximum value of the losses.

Finally, we create Python codes test_PIConvAE to test the generalization performance of the model. The users can evaluate “new” simulation data for consistency. The testing datasets can be the simulation results of the heterogeneous computing environment, compiling optimization option changes, and model parameter changes. The users input the testing datasets into the saved PIConvAE deep learning model. If the losses of testing datasets are greater than the loss threshold of the training datasets, the testing datasets are not “passing”. If the passing rate of the testing datasets is equal to 0%, the tool issues an overall “failure”.

Results

We use the CESM as an example of large-scale ESMs to evaluate the consistency using the O-ESM-DCT. In the O-ESM-DCT, we accept the new ESM configurations if the non-linear features (learned from the deep learning model) of the new simulation data can match that of the original ensemble data. The CESM version used in the present study is an earlier version tagged as CESM1.3-beta17_sehires38, which has been optimized and developed to better support CESM simulations in the heterogeneous many-core systems48,49,50,51. The CESM compset is the B compset. The CESM grid resolution is “ne30g16”, which corresponds to 1º grid containing 320 × 384 grid points and 60 vertical levels for the ocean components. The time integration step size is 30 min. Simulations were run with 96 computing cores. The default compiler for the CESM is Intel 17.0.1 with -O2 optimization. The datasets are the ensembles with the O(10−14) perturbations of initial ocean temperature. The training and validation datasets are with default configurations. The testing datasets are with configurations with expected outcomes, such as changes in computing environments, model parameters, and the number of computing cores. The datasets in this study is low resolution. For high-resolution simulation, the resolution of training set, validation, and testing datasets should be unified. Note that the EOS equation is used as the basic function to build the PINNs for evaluating the consistency because of its simplicity. For other ESMs such as Nucleus for European Modelling of the Ocean (NEMO), Modular Ocean Model version 6 (MOM6), and Massachusetts Institute of Technology General Circulation Model (MITgcm), the importance of physical variables (e.g., mixed layer depth, salinity flux) can vary across different ocean models. Then, the high-resolution models can add physical process descriptions, which makes the O-ESM-DCT add more physical loss terms to the EOS to represent more oceanic dynamic processes.

Training the PIConvAE model

The GPU computing system we used for the PIConvAE model consists of Nvidia H100. Each H100 GPU contains 132 multithreaded streaming multiprocessors (SMs). Each SM contains 128 FP32 cores, 64 FP64 cores, 64 INT32 cores, and 4 Tensor cores.

We use the grid search to select the best hyperparameters and use the training and validation datasets to train the PIConvAE model, then we select the optimal hyperparameters when the mean losses of validation datasets in the PIConvAE model is the minimum value and the accuracy of testing datasets in the PIConvAE model is the maximum value. The values of optimal hyperparameters in the PIConvAE model are shown in Table 2. The dropout52 is the probability that the neuron is set to zero during training. The learning rate is used to control the magnitude of updating weights in each iteration of the model. The epochs are the times when the training datasets have been used by the model for training. The batch-size is used to define the number of training samples used by the model in each iteration. The \(\lambda\) is the loss coefficient in the Eq. (7) to make a balance between the data-fidelity and physics-driven losses. The hyperparameters include dropout in [0.0, 0.2, 0.4, 0.6, 0.8], learning rate in [0.00001, 0.0001, 0.001, 0.01], batch-size in [4, 8, 16, 32, 64], \(\lambda\) in [0.0, 0.1, …, 1.0].

We calculate the losses after re-inputting the training datasets into the saved PIConvAE model. The probability density function (PDF) of the losses of the training datasets is shown in Fig. 3. Following Fig. 3, we take 0.08661 as the loss threshold. Therefore, if the losses of testing datasets are greater than the loss threshold, the testing datasets are not “passing”. If the passing rate of the testing datasets is equal to 0%, the tool issues an overall “failure”. It represents that the simulation results of training and testing datasets are statistically distinguishable. Then, we use the Kolmogorov–Smirnov two-sample equality of distribution test (K–S test) to.

We select the ensemble size of training sets when the accuracy of testing datasets reaches its maximum value. The ratio of training, validation, and testing datasets of O-ESM-DCT is 6:2:2. The accuracy of O-ESM-DCT with different ensemble sizes of training datasets is shown in Table 3. Following Table 3, the ensemble size of training, validation, and testing datasets is 60, 20, and 20. At this size, the accuracy of test datasets with expected outcomes in the PIConvAE model is the maximum value of 99.0%.

Heterogeneous computing environment and compiler modifications

We use the O-ESM-DCT to evaluate the consistency of simulation results in the heterogeneous computing environment and compiler modifications that inhibit bit-for-bit reproducibility but are not expected to affect the statistical consistency19.

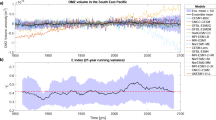

The first three experiments use the same Intel compiler version but different optimization levels: Intel 17.0.1 with -O0, -O1, and -O3. The next experiment uses the SW9 compiler on the new Sunway system only the general-purpose cores, management processing elements (MPEs) are involved in computation. The final experiment uses the SW9 compiler on the new Sunway system but the general-purpose cores, MPE, and accelerator cores, computing processing elements (CPEs), involved in computation. Note that the experiment where only the general-purpose cores are involved in computation is in the homogeneous computing but changes the compiler. The experiment where the general-purpose cores and accelerator cores are involved in computation is configured with the Zhang and McFarlane cumulus convection parameterization scheme (ZM scheme)53 of atmosphere components and K Profile parameterization scheme (KPP scheme)54 of ocean components for the heterogeneous computing. The passing rate of the testing datasets is shown in Table 4. Figure 4 shows the losses of the testing datasets with the heterogeneous computing environment and compiler modifications. The results show that the heterogeneous computing and compiler modifications can not affect the consistency of the CESM simulation results and the O-ESM-DCT can accept the uncertainties caused by the software and hardware designs on the HPC system.

The losses of the testing datasets with heterogeneous computing environment and compiler modifications. The red line is the loss threshold of the training datasets. -O0, -O1, and -O3 are the testing datasets with compiling optimization option changes. MPE and MPE + CPE are the testing datasets with general-purpose cores and general-purpose + accelerator cores involved in computation on the new Sunway system.

Modifications with the number of computing cores

Modifications with the number of computing cores inhibit bit-for-bit reproducibility but do not affect the statistical consistency. We use the O-ESM-DCT to evaluate the consistency of simulation results with different number of computing cores. By default, 96 computing cores were used for simulations. We run the simulations with 48, 144, and 192 computing cores used for the testing datasets. The passing rate of the testing datasets is shown in Table 5. Figure 5 shows the losses of the testing datasets with the different number of computing cores. The results show that the O-ESM-DCT can accept the uncertainties caused by changes in the number of computing cores on the HPC system.

Modifications with the physical parameters

Our tool must successfully detect the inconsistency of the simulation results that are known to produce statistically distinguishable outputs. We change three physical parameters in the KPP parameterization scheme: background diffusivity, equatorial diffusivity, and maximum psi-induced diffusivity. O-ESM-DCT should fail the results because modifications with the physical parameters change the vertical mixing and affect the statistical consistency. By default, the coefficients of background diffusivity (bckgrnd_vdc1), equatorial diffusivity (bckgrnd_vdc_eq), and maximum psi-induced diffusivity (bckgrnd_vdc_psim) are 0.16, 0.01, and 0.13. We change these coefficients to 0.26, 0.02, and 0.15. The passing rate of the testing datasets is shown in Table 6. Figure 6 shows the losses of the testing datasets with the modifications with the physical parameters. The results show that the tool can detect the modifications known to produce statistically distinguishable outputs.

Model comparison

We show the advantages of physical information in evaluating statistical consistency. We compare the performance between the machine learning method, local outlier factor (LOF)55, CESM-ECT, ConvAE, and the O-ESM-DCT using the PIConvAE. For LOF, each ensemble computes its distance from all other ensemble members, finds its k-nearest neighbors, and computes the LOF score. If the LOF of an ensemble member is greater than the LOF threshold, the LOF returns the member as a “failure”. In this study, the number of nearest neighbors is 5 and the LOF threshold is 1. The Euclidean distance is the distance calculation method. The ratio of training and testing datasets of LOF is 7:3. The CESM-ECT computes Z-score of temperature and salinity. The losses of ConvAE only contain the data-fidelity loss representing the reconstruction errors between the output and input of the AE.

We train and test the methods with different ensemble sizes. The ensemble size is in [15, 30, 45, 60, 75, 90, 105, 120]. We use the nonparametric Friedman test to compare the performance of these four methods. The p-value is 0.0001, which demonstrates that there are statistically significant differences between the four methods. We use the Nemenyi test for post-hoc analysis. The results are shown in Table 7. Table 7 shows that there are statistically significant differences between the PIConvAE and LOF as well as CESM-ECT methods. Table 8 shows the best evaluation metrics of the methods, where the results of PIConvAE are equal to Table 3. Following Table 8, the ensemble size of training datasets of PIConvAE is 60, that of ConvAE is 105, that of LOF is 90, and that of CESM-ECT is 105 when the accuracy and recall of testing datasets reaches its maximum value. The PIConvAE can achieve maximum accuracy with the minimum number of sets. The physical information can achieve convergence fast and generalize well.

We compare the computation time between the ConvAE, PIConvAE, LOF, and CESM-ECT with the same computing environments when the accuracy of testing datasets reaches its maximum value. For the LOF and CESM-ECT, the computation time is required for building the model and obtaining the results. For the ConvAE and PIConvAE, the computation time is required for building the model, getting the reconstruction errors, and obtaining the results. Table 9 shows the computation time of ConvAE, PIConvAE, LOF, and CESM-ECT for CESM. The PIConvAE has higher computational efficiency than the LOF and CESM-ECT. That is because the PIConvAE has a relatively simple network structure when used for CESM. Although the computation time of PIConvAE is longer than that of ConvAE, PIConvAE has advantages in accuracy and ensemble size.

Conclusion

Model verification is a critically important step in the development and optimization of ESMs, coarse-grained and objective approaches are widely used to evaluate the statistical consistency of ESM simulations. The coarse-grained and deep learning approach in evaluating statistical consistency for the atmosphere component of ESMs (A-ESM-DCT) has shown effectiveness in model verification. However, the A-ESM-DCT is not directly applicable to ocean model simulations for several reasons. Firstly, the ocean component requires annual average simulation results for consistency testing, whereas the A-ESM-DCT is designed to evaluate atmospheric variables from the CAM component of CESM using only 96 time steps. Secondly, reducing ensemble size is essential to minimize computational costs, but traditional deep learning models like A-ESM-DCT lack robustness and fail to ensure convergence when dealing with small data regimes. Lastly, the global weighted-area mean used for data preprocessing in A-ESM-DCT is unsuitable for ocean model data due to the relatively uniform variability of ocean data across ESM grids, compared to the more heterogeneous variability in atmospheric data14.

To address these challenges, we developed a physics-informed deep learning consistency test approach specifically for the ocean component of ESMs, referred to as O-ESM-DCT. This method complements ESM-DCT18 by generating 3-year simulation ensembles as datasets and employing the PIConvAE deep learning model to evaluate the distribution of annual averages, achieving rapid convergence with reduced ensemble sizes. The O-ESM-DCT leverages physics-driven and data-fidelity loss functions to assess whether new model simulations-arising from hardware, software, or human changes (e.g., heterogeneous computing environments, compiler modifications, variations in core numbers, and parameter changes)—are statistically distinguishable from the original ensembles on HPC systems. This work enhances confidence in detecting errors during the development and optimization of the ocean component of ESMs on HPC systems, integrating physical information with artificial intelligence.

Future efforts will focus on expanding test scenarios to improve model generalization and updating model structures for more precise feature extraction. Subsequent research will incorporate test datasets from other heterogeneous many-core systems, such as GPU-based HPC systems, refine attention mechanisms in deep learning architectures, and enhance model parameterization to address overfitting concerns. Then, the O-ESM-DCT will be used for model validation to ensure robustness in real-world scenarios and evaluate model consistency under diverse oceanic events, including ENSO and extreme marine phenomena such as marine storms. Finally, in the future, the O-ESM-DCT shall add more physics-informed mechanistic models to represent the complex ocean dynamics such as MLD feedbacks and internal waves, and include additional variables related to momentum and dynamics such as currents and sea surface height etc. Furthermore, the new neural networks will be used to not only evaluate consistency but also solve partial differential equations (PDEs).

Data availability

Codes, data and scripts used to run the models and produce the figures in this work are available on the Zenodo site (https://doi.org/https://doi.org/10.5281/zenodo.14575027, Yu et al., 2025) or by sending a written request to the corresponding author (Shaoqing Zhang, [email protected]).

References

Flato, G. M. Earth system models: An overview. WIREs Clim. Change. 2, 783–800. https://doi.org/10.1002/wcc.148 (2011).

Hurrell, J. W. et al. The community earth system model: A framework for collaborative research. Bull. Am. Meteorol Soc. 94, 1339–1360. https://doi.org/10.1175/BAMS-D-12-00121.1 (2013).

Carson II, J. S. Model verification and validation. in Proceedings of the 2002 Winter Simulation Conference. https://doi.org/10.1109/WSC.2002.1172868 (2002).

Collier, N. et al. The International land model benchmarking (ILAMB) system. Design, theory, and implementation. J Adv. Model Earth Sy. 10, 2731–2754. https://doi.org/10.1029/2018MS001354 (2018).

Lee, J. et al. Systematic and objective evaluation of earth system models: PCMDI metrics package (PMP) version 3. Geosci. Model Dev. 17, 3919–3948. https://doi.org/10.5194/gmd-17-3919-2024 (2024).

Eyring, V. et al. ESMValTool (v1.0): a community diagnostic and performance metrics tool for routine evaluation of Earth system models in CMIP. Geosci. Model Dev. 9, 1747–1802. https://doi.org/10.5194/gmd-9-1747-2016 (2016).

Righi, M. et al. Earth system model evaluation tool (ESMValTool) v2.0: Technical overview. Geosci. Model Dev. 13, 1179–1199. https://doi.org/10.5194/gmd-13-1179-2020 (2020).

Mahajan, S. Ensuring statistical reproducibility of ocean model simulations in the age of hybrid computing. Platform Adv. Sci. Comput. https://doi.org/10.1145/3468267.3470572 (2021).

Mahajan, S., Evans, K. J., Kennedy, J. H., Xu, M. & Norman, M. R. A multivariate approach to ensure statistical reproducibility of climate model simulations. Platf. Adv. Sci. Comput. https://doi.org/10.1145/3324989.3325724 (2019).

Mahajan, S., Gaddis, A. L., Evans, K. J. & Norman, M. R. Exploring an ensemble-based approach to atmospheric climate modeling and testing at scale. Proc. Comput. Sci. 108, 735–744. https://doi.org/10.1016/j.procs.2017.05.259 (2017).

Massonnet, F. et al. Replicability of the EC-Earth3 earth system model under a change in computing environment. Geosci. Model Dev. 13, 1165–1178. https://doi.org/10.5194/gmd-13-1165-2020 (2020).

Wan, H. et al. A new and inexpensive non-bit-for-bit solution reproducibility test based on time step convergence (TSC1.0). Geosci. Model Dev. 10, 537–552. https://doi.org/10.5194/gmd-10-537-2017 (2017).

Baker, A. H. et al. A new ensemble-based consistency test for the community earth system model (pyCECT v1.0). Geosci. Model Dev. 8, 3823–3859. https://doi.org/10.5194/gmd-8-2829-2015 (2015).

Baker, A. H. et al. Evaluating statistical consistency in the ocean model component of the community earth system model (pyCECT v2.0). Geosci. Model Dev. 9, 2391–2406. https://doi.org/10.5194/gmd-9-2391-2016 (2016).

Milroy, D. J. et al. Towards characterizing the variability of statistically consistent community earth system model simulations. Proc. Comput. Sci. 80, 1589–1600. https://doi.org/10.1016/j.procs.2016.05.489 (2016).

Milroy, D. J., Baker, A. H., Hammerling, D. M. & Jessup, E. R. Nine time steps: Ultra-fast statistical consistency testing of the community earth system model (pyCECT v30). Geosci. Model Dev. 11, 697–711. https://doi.org/10.5194/gmd-11-697-2018 (2018).

Hong, S. Y. et al. An evaluation of the software system dependency of a global atmospheric model. Mon. Weather Rev. 141, 4165–4172. https://doi.org/10.1175/MWR-D-12-00352.1 (2013).

Yu, Y. et al. A deep learning-based consistency test approach for earth system models on HPC systems. iScience 28, 111574. https://doi.org/10.1016/j.isci.2024.111574 (2025).

Yu, Y. et al. Characterizing uncertainties of earth system modeling with heterogeneous many-core architecture computing. Geosci. Model Dev. 15, 6695–6708. https://doi.org/10.5194/gmd-15-6695-2022 (2022).

Raissi, M., Perdikaris, P. & Karniadakis, G. E. Physics informed deep learning (part i): Data-driven solutions of nonlinear partial differential equations. https://doi.org/10.48550/arXiv.1711.10561 (2017).

Raissi, M., Perdikaris, P. & Karniadakis, G. E. Physics-informed neural networks: A deep learning framework for solving forward and inverse problems involving nonlinear partial differential equations. J. Comput. Phys. 378, 686–707. https://doi.org/10.1016/j.jcp.2018.10.045 (2019).

Krizhevsky, A., Sutskever, I. & Hinton, G. E. ImageNet classification with deep convolutional neural networks. Adv. Neural. Inf. Process. Syst. https://doi.org/10.1145/3065386 (2012).

Scher, S. Toward data-driven weather and climate forecasting: Approximating a simple general circulation model with deep learning. Geophys. Res. Lett. 45, 12616–12622. https://doi.org/10.1029/2018GL080704 (2018).

Scher, S. & Messori, G. Weather and climate forecasting with neural networks: Using general circulation models (GCMs) with different complexity as a study ground. Geosci. Model Dev. 12, 2797–2809. https://doi.org/10.5194/gmd-12-2797-2019 (2019).

Weyn, J. A., Durran, D. R. & Caruana, R. Can machines learn to predict weather? Using deep learning to predict gridded 500-hPa geopotential height from historical weather data. J. Adv. Model Earth. Sy. 11, 2680–2693. https://doi.org/10.1029/2019MS001705 (2019).

Weyn, J. A., Durran, D. R. & Caruana, R. Improving data-driven global weather prediction using deep convolutional neural networks on a cubed sphere. J. Adv. Model Earth Sy. 12, e2020MS002109. https://doi.org/10.1029/2020MS002109 (2020).

Rasp, S. et al. WeatherBench: A benchmark data set for data-driven weather forecasting. J. Adv. Model Earth Sy. 12, e2020MS002203. https://doi.org/10.1029/2020MS002203 (2020).

Rasp, S. et al. A benchmark for the next generation of data-driven global weather models. J. Adv. Model Earth Sy. 16, e2023MS004019. https://doi.org/10.1029/2023MS004019 (2024).

Liu, Y. et al. Application of deep convolutional neural networks for detecting extreme weather in climate datasets. https://doi.org/10.48550/arXiv.1605.01156 (2016).

Matsuoka, D., Nakano, M., Sugiyama, D. & Uchida, S. Deep learning approach for detecting tropical cyclones and their precursors in the simulation by a cloud-resolving global nonhydrostatic atmospheric model. Prog. Earth and Planet. Sc. 5, 80. https://doi.org/10.1186/s40645-018-0245-y (2018).

Shakya, S., Kumar, S. & Goswami, M. Deep learning algorithm for satellite imaging based cyclone detection. IEEE J. Select. Topics Appl. Earth Observ. Remote Sens. 13, 827–839. https://doi.org/10.1109/JSTARS.2020.2970253 (2020).

Gardoll, S. & Boucher, O. Classification of tropical cyclone containing images using a convolutional neural network: Performance and sensitivity to the learning dataset. Geosci. Model Dev. 15, 7051–7073. https://doi.org/10.5194/gmd-15-7051-2022 (2022).

Zideh, M. J. & Solanki, S. K. Multivariate physics-informed convolutional autoencoder for anomaly detection in power distribution systems with high penetration of DERs. ArXiv, https://doi.org/10.48550/arXiv.2406.02927 (2024).

Ji, S., Xu, W., Yang, M. & Yu, K. 3D convolutional neural networks for human action recognition. IEEE Trans. Patt. Anal. Mach. Intell. https://doi.org/10.1109/TPAMI.2012.59 (2013).

Tran, D., Bourdev, L., Fergus, R., Torresani, L. & Paluri, M. Learning Spatiotemporal Features with 3D Convolutional Networks. in 2015 IEEE International Conference on Computer Vision (ICCV). https://doi.org/10.1109/ICCV.2015.510 (2015).

Wolff, T. D., Carrillo, H., Martí, L. & Sánchez-Pi, N. Towards Optimally weighted physics-informed neural networks in ocean modelling. ArXiv. https://doi.org/10.48550/arXiv.2106.08747 (2021).

Wu, J., Xiao, H. & Paterson, E. Physics-informed machine learning approach for augmenting turbulence models: A comprehensive framework. Phys. Rev. Fluids. 3, 074602. https://doi.org/10.1103/PhysRevFluids.3.074602 (2018).

McDougall, T. J. & Jackett, D. R. Accurate and computationally efficient algorithms for potential temperature and density of seawater. J. Atmos. Ocean Tech. 20, 730–741. https://doi.org/10.1175/1520-0426(2003)202.0.CO;2 (2003).

Dukowicz, J. K. Reduction of Density and pressure gradient errors in ocean simulations. J. Phys. Oceanogr. 31, 1915–1921. https://doi.org/10.1175/1520-0485(2001)0312.0.CO;2 (2001).

Rahmstorf, S. Thermohaline circulation: The current climate. Nature https://doi.org/10.1038/421699a (2003).

Ohshima, K. I. et al. Relationship between the upper ocean and sea ice during the Antarctic melting season. J. Geophys. Res. 103, 7601–7615. https://doi.org/10.1029/97JC02806 (1998).

Düben, P. D., Subramanian, A., Dawson, A. & Palmer, T. N. A study of reduced numerical precision to make superparametrisation more competitive using a hardware emulator in the OpenIFS model. J. Adv. Model Earth Syst. 9, 566–584. https://doi.org/10.1002/2016MS000862 (2017).

Prims, O. T. et al. How to use mixed precision in ocean models: exploring a potential reduction of numerical precision in NEMO 4.0 and ROMS 3.6. Geosci. Model Dev. 12, 3135–3148. https://doi.org/10.5194/gmd-12-3135-2019 (2019).

Rosinski, J. M. & Williamson, D. L. The accumulation of rounding errors and port validation for global atmospheric models. SIAM J. Sci. Comput. 18, 552–564. https://doi.org/10.1137/S1064827594275534 (1997).

Arteaga, A., Fuhrer, O. & Hoefler, T. Designing bit-reproducible portable high-performance applications. in 2014 IEEE International Parallel & Distributed Processing Symposium (IPDPS). https://doi.org/10.1109/IPDPS.2014.127 (2014).

Sansom, P. G., Stephenson, D. B., Ferro, C. A. T., Zappa, G. & Shaffery, L. Simple uncertainty frameworks for selecting weighting schemes and interpreting multimodel ensemble climate change experiments. J. Clim. 26, 4017–4037. https://doi.org/10.1175/JCLI-D-12-00462.1 (2013).

Gong, D. et al. Memorizing normality to detect anomaly. Memory-augmented deep autoencoder for unsupervised anomaly detection. in 2019 IEEE/CVF International Conference on Computer Vision (ICCV). https://doi.org/10.1109/ICCV.2019.00179 (2019).

Zhang, S. et al. Optimizing high-resolution community earth system model on a heterogeneous many-core supercomputing platform (CESMHR_sw1.0). Geosci. Model Dev. 13, 4809–4829. https://doi.org/10.5194/gmd-2020-18 (2020).

Fu, H. et al. The sunway taihulight supercomputer: System and applications. Sci. China Inf. Sci. 59, 072001. https://doi.org/10.1007/s11432-016-5588-7 (2016).

Fu, H. et al. Redesigning cam-se for peta-scale climate modeling performance and ultra-high resolution on sunway taihulight. in International Conference for High Performance Computing, Networking, Storage and Analysis. https://doi.org/10.1145/3126908.3126909 (2017a).

Fu, H. et al. Refactoring and optimizing the community atmosphere model (CAM) on the sunway taihu-light supercomputer. in International Conference for High Performance Computing, Networking, Storage and Analysis. https://doi.org/10.1109/SC.2016.82 (2017b).

Srivastava, N. et al. Dropout: A simple way to prevent neural networks from overfitting. J. Mach. Learn. Res. 15, 1929–1958. https://doi.org/10.1016/j.neucom.2022.08.022 (2014).

Zhang, G. J. & Mcfarlane, N. A. Sensitivity of climate simulations to the parameterization of cumulus convection in the Canadian climate centre general circulation model. Atmos. Ocean. 33, 407–446. https://doi.org/10.1080/07055900.1995.9649539 (1995).

Large, W. G., Mcwilliams, J. C. & Doney, S. C. Oceanic vertical mixing: A review and a model with a nonlocal boundary layer parameterization. Rev. Geophys. 32, 363–403. https://doi.org/10.1029/94RG01872 (1994).

Breunig, M. M., Kriegel, H.-P., Ng, R. T. & Sander, J. LOF: Identifying density-based local outliers. in The 2000 ACM SIGMOD International Conference on Management of Data. https://doi.org/10.1145/342009.335388 (2000).

Acknowledgements

This research is supported by the National Key R&D Program of China (Grant No. 2022YFE0106400), the Science and Technology Innovation Projects of Laoshan Laboratory (Grant Nos. LSKJ202202200, LSKJ202302400), the National Natural Science Foundation of China (grant no. 42361164616), Shandong Province’s “Taishan” Scientist Program (Grant No. ts201712017), Postdoctoral Fellowship Program of CPSF (Grant No. GZC20232491), Qingdao Post-doctoral Applied Research Project (Grant No. QDBSH20240102165). All numerical experiments are performed at Laoshan Laboratory and Hainan Artificial Intelligence Computing Center.

Author information

Authors and Affiliations

Contributions

Yangyang Yu is responsible for all plots, initial analysis, and some writing; Shaoqing Zhang leads the project, organizes and refines the paper; Haohuan Fu, Dexun Chen, and Yishuai Jin provide significant discussions and inputs for the whole research; all other co-authors make equal contributions by wording discussions, comments and reading proof.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Yu, Y., Zhang, S., Fu, H. et al. Evaluating statistical consistency for the ocean component of earth system models using physics informed convolutional autoencoder. Sci Rep 15, 18864 (2025). https://doi.org/10.1038/s41598-025-03092-7

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-025-03092-7