Abstract

A key challenge in solving electrical text problems is understanding semantically diverse problem texts automatically. Existing solution methods focus on either semantic or structural analysis, and they cannot parse the problem text into a form that a machine solver can interpret more readily. A general electrical problem solver should be able to understand complex and varied problem texts and apply the relevant electrical theorems at the same time. In this paper, we propose a sequence to formula tree model, built on a deep learning network, that parses the problem text into a formula tree with numerical values and electrical theorems. An encoder-decoder pair based on the Gated Recurrent Unit establishes a formula tree graph structure for the problem text and theorem library, and the implicit electrical theorems are extracted using a Graph Convolutional Neural Network. Based on the formula tree and implicit electrical theorems, an electrical problem solver is proposed to produce the solutions to electrical problems. The experimental results demonstrate the effectiveness of the proposed model in solving electrical text problems. In addition, a dataset containing 3027 electrical text problems is collected and subjected to format normalization and information annotation.


Introduction

Artificial intelligence has long been driven by the pursuit of automatic machine solutions, with particular emphasis on mathematical problem-solving, which has witnessed rapid development and garnered significant attention within the academic community. Among the international academic landscape, China has emerged as a leading force in the realm of mathematics. In parallel, the solution of physical problems represents a crucial branch of automatic machine solutions that have experienced substantial growth in recent years, spurred by advancements in the field of mathematics. Notably, the nature of physics problems demands accurate results and a rigorous problem-solving process. Even if the solution is correct, a lack of rigor in the problem-solving approach renders it invalid. This characteristic poses a unique challenge within the realm of physics, setting it apart from mathematics. In many ways, the study of machine-solving physics problems holds the potential to advance the humanization of machines, as it emphasizes both the correctness of results and the problem-solving process itself. The present paper aims to train a deep learning network model to comprehend junior high school electrical text problems and perform formula-solving operations. As illustrated in Table 1, solving electrical text problems necessitates the computer’s understanding of the information encapsulated within the question, the construction of an accurate solution formula, the incorporation of relevant electrical theorems into the formula, and ultimately, the derivation of the final answer through a series of computations.

Seq2seq and seq2tree models have been widely adopted in mathematical problem-solving. However, both suffer from inherent limitations. Traditional physics problem-solving relies primarily on the seq2seq model, which faces a critical bottleneck: it compresses the entire source sequence into a fixed-size vector, so crucial information may be lost when the text is long. Furthermore, the seq2seq model exhibits equation repeatability: it may generate several equivalent expressions for the same problem, such as "3 + 1 + 9" and "3 + 9 + 1", or "3 + (1 + 9)" and "3 + 1 + 9". Equation repeatability adversely affects the model’s efficiency and may lead to incorrect calculation results.

To address these challenges, researchers have proposed transforming the solution equation into a binary tree structure, wherein the tree serves as a decoder. By decoding the expression tree using the seq2tree model, a unique solution equation can be obtained through tree traversal, effectively circumventing the problem of equation repeatability. However, solving physical electrical problems necessitates the incorporation of numerous fixed theorems, resulting in excessively long and complex generated binary trees that are challenging to interpret, as depicted in Fig. 1. Therefore, a deep learning model called Sequence to Formula Tree (Seq2FT) is designed, which transforms the problem text into a tree-based solution expression comprising electrical theorems. As illustrated in Fig. 2, such a tree-based solving expression aligns more closely with the characteristics of physical problem-solving, potentially offering advantages in terms of training efficiency and solution accuracy.
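The repeatability issue can be illustrated with a small Python sketch. It is not the paper's decoder: it assumes, purely for illustration, that equivalent sums are parsed into trees, flattened, and emitted in one sorted prefix order, so that every ordering or bracketing maps to the same sequence. The function names `operands` and `canonical_sum` are hypothetical.

```python
import ast

def operands(node):
    """Flatten a chain of additions into the list of its numeric operands."""
    if isinstance(node, ast.BinOp) and isinstance(node.op, ast.Add):
        return operands(node.left) + operands(node.right)
    if isinstance(node, ast.Constant):
        return [node.value]
    raise ValueError("this sketch only handles sums of numeric constants")

def canonical_sum(expr):
    """Return a single prefix-notation string for any ordering of the same sum."""
    tree = ast.parse(expr, mode="eval").body
    terms = sorted(operands(tree))
    # Build a right-nested prefix form: + a + b c
    out = str(terms[-1])
    for term in reversed(terms[:-1]):
        out = f"+ {term} {out}"
    return out

variants = ["3 + 1 + 9", "3 + 9 + 1", "3 + (1 + 9)"]
forms = {canonical_sum(v) for v in variants}  # all three collapse to one form
```

The normalization rule (sorting operands) is a design choice made for the example; any fixed ordering would serve the same purpose.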

The above example illustrates an addition operation. The Seq2FT model does not require complex configurations for arithmetic operators, as the necessary operations within the theorem have already been predefined in the theorem map. Consequently, the setup of subsequent operations, if required, remains relatively straightforward. However, this does not imply that the Seq2FT model is limited to addition operators; rather, other operators are also supported but are not explicitly depicted in Figure 2.
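As a rough illustration of a tree whose nodes are theorems rather than bare operators, the following Python sketch evaluates a two-node formula tree. The `FormulaNode` class, the theorem labels, and the numerical values are assumptions made for the example and do not come from the paper's theorem map.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Union

@dataclass
class FormulaNode:
    theorem: str                     # hypothetical label for the theorem
    apply: Callable[..., float]      # operation predefined by that theorem
    children: List[Union["FormulaNode", float]] = field(default_factory=list)

def evaluate(node):
    """Evaluate the tree bottom-up: children first, then the theorem itself."""
    if isinstance(node, FormulaNode):
        return node.apply(*(evaluate(child) for child in node.children))
    return float(node)

# Series resistance feeds into Ohm's law; the arithmetic lives inside each theorem.
series_r = FormulaNode("series resistance: R = R1 + R2", lambda r1, r2: r1 + r2, [4.0, 8.0])
ohm = FormulaNode("Ohm's law: I = U / R", lambda u, r: u / r, [6.0, series_r])

current = evaluate(ohm)  # 6 V across 12 Ω
```

Because each node carries its own operation, the tree needs no separate operator vocabulary, which is the point made in the paragraph above.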

The solving characteristics of physical problems fundamentally differ from those of mathematical issues, demanding a heightened level of rigor in solving. In response to this distinction, we propose the Seq2FT model, which introduces a departure from the conventional prediction generation approach employed in solving operations by incorporating a knowledge-driven mechanism to guide the decoding process. Our model draws inspiration and innovative insights from related works in the field. For instance, the graph-tree model1 combines graph representation with a tree structure, while the Graph-to-Tree(G2T) model employs a top-down goal-driven mechanism and pseudo-dual learning scheme2. These prior studies provide valuable inspiration and novel concepts that have greatly influenced the design of the mechanisms integrated within our proposed model.

Implicit relation extraction is a key step in physics problem-solving. Initially, rule-based methods were commonly employed for relation extraction3. However, with the advent of machine learning techniques, the focus shifted towards supervised, weakly supervised, and unsupervised approaches, depending on the availability of labeled data4. Recently, deep learning methods have gained popularity in the extraction5,6,7. While these methods have shown significant advancements, they are not well-suited for physics problems that heavily rely on formula theorems. To address this issue, a novel approach is proposed that incorporates an electrical theorem graph containing diverse electrical theorems and utilizes graph convolutional networks for information analysis. Based on the known conditions provided in the problem text, the most appropriate theorem formula is selected from the knowledge graph and embedded into the corresponding node of the formula tree. This entire process can be seen as an implicit relation extraction process, establishing connections between various physical quantities (voltage and current) based on direct relations presented in the problem text. These implicit relations correspond to specific electrical theorems in the knowledge graph.

Furthermore, obtaining suitable datasets about physical problems is difficult due to the lack of public access permission. Moreover, certain publicly available datasets fail to meet the necessary research standards and experimental considerations regarding formatting and scale. Consequently, it becomes imperative to construct a dataset that adheres to format specifications, possesses reasonable data quality, and encompasses an appropriate scale to facilitate subsequent studies and experiments. Through meticulous collection and curation, a dataset TexPE-3K is constructed that consists of over 3000 instances of physical electrical text problems. It is crucial to mention that TexPE-3K is designed to cater to the knowledge level expected of junior middle school students in China. Following the publication of this paper, we are considering the release of the TexPE-3K dataset for use in other research endeavors.

The main contributions of this paper are as follows:

- This paper constructs a sequence to formula tree model that converts the problem text into a formula tree populated with the numerical values from the problem text. This applies electrical knowledge to problem-solving and makes the results more interpretable.

- This study proposes a problem preprocessing approach that uses a pool of semantic models to systematically identify and extract the direct relations embedded in the problem text, improving both the efficiency and the accuracy of textual analysis.

- A knowledge-driven mechanism for the tree-decoding process is designed, using an electrical theorem graph and a GCN.

- A dataset, TexPE-3K, is created. It contains 3027 electrical text problems of different types, in a standardized format, that must be solved using formulas.

Related work

Math word problems solving

In recent years, the field of mathematical problem-solving has witnessed significant research interest, particularly in solving mathematical text problems8,9. The use of expression trees, as opposed to linear representations, has emerged as a promising approach for solving Math Word Problems (MWPs) because it can reduce the likelihood of predicting invalid equations. Constructing tree structures using Recurrent Neural Networks (RNNs) presents a novel avenue for solving MWPs. By converting equations into expression trees, the inherent structure of the equation is naturally captured, resulting in improved solution accuracy, particularly for complex problems. Qin suggested that multiple generated expression trees can be unified into a general expression tree using a symbol extension set10. A semantic alignment general tree solver (SA-solver) was employed, utilizing the Unified Expression Tree (UET) representation to solve various types of MWPs in a single model. This not only made the MWP solver more practical, covering multiple kinds of text problems, but also improved problem-solving efficiency. Wu emphasized the significance of numerical symbols in problem-solving and proposed NumS2T11, which incorporates a numerical property prediction mechanism to obtain numerical category and comparison information, thereby assessing the importance of each number in the overall expression. This information is explicitly merged into the numerical sequence to tree network, enhancing the solving performance on mathematical application problems.

In addition to the aforementioned methods, several other notable machine solution approaches have been summarized. The contrastive learning model12 and deep cross-modal learning network13, as proposed by Guo, provided a comprehensive framework for summarizing and integrating both text and image information within a given problem14. By leveraging these techniques, the model can process and learn from multi-modal and multi-feature data, which is an advantage in complex problem scenarios. The incorporation of text and image information enhances the model’s ability to capture diverse and complementary aspects of the problem, enabling a fuller understanding and more effective problem-solving. Xin introduced a novel hierarchical mathematical solver (HMS)15, which aims to understand problems thoroughly and apply problem-solving techniques. To enhance problem understanding, the HMS mimics human reading habits and incorporates a layered problem encoder for word and sentence encoding. The decoder uses a hierarchical attention mechanism, leveraging different contextual levels to reinforce the problem’s semantics. Zhang proposed a multi-view consistent contrastive learning method to achieve a more comprehensive semantic-to-equation mapping16. Similarly, Jie treated the task as a complex relation extraction problem and introduced interpretable deductive reasoning steps3. By constructing a target expression, this approach not only provides more interpretable steps but also enables more accurate predictions for complex reasoning problems. Li proposed a fine-to-coarse modeling method for mathematical application problems17, which aims to capture local fine-grained information and global logical structures. This iterative approach combines low-level operators to predict high-level operators, enabling the model to analyze problems from the bottom up and reason about the operators. This model exhibits greater sensitivity to local variations and can better handle unseen problem types. Additionally, a modified structure known as the New Multi-Task Multi-Decoder Model (MMTM)18 has been introduced. The MMTM model employs a multi-task, multi-decoder framework during training, creating different tasks using expression tree-based labels derived from the pre-order, in-order, and post-order traversal sequences. By incorporating task-specific decoders within a multi-task framework, the model’s reasoning ability is strengthened, leading to improved generalization.

In recent years, large language models (LLMs), spearheaded by transformer-based architectures19, have made groundbreaking contributions to mathematical problem-solving tasks. Notably, the widely recognized GPT model20 has demonstrated remarkable proficiency in this domain. Subsequently, specialized LLMs designed explicitly for mathematical reasoning have emerged, including LLaMA21, LLaVA22, and Math-PUMA23, among others. These advanced models enhance problem-solving accuracy by conducting in-depth semantic analyses of problem statements, thereby improving their ability to comprehend and process complex mathematical queries.

Physics problem solving

Other countries have made advancements in this field before China, evident in systems such as CATE, WebCT, and OASIS, which are auxiliary education systems but do not include question-answering functionalities. Recent cutting-edge research also exists, for instance, Driess proposed a deep visual reasoning technology24 that predicts discrete action sequences based on initial working scene images, addressing physical reasoning problems. Additionally, enhanced simulation learning25 has been introduced to tackle complex and real-time physical problems by generating physical actions instead of solely solving them for robots.

Chinese research in this field has a clear direction. In 2019, Jian proposed a circuit problem solution system based on a rules model and a relation reasoning algorithm26. This system uses an extraction method for explicit and implicit relations27 in the problem text, employing a syntax semantic model28, a unit theorem model, and a relation extraction algorithm to construct the rules model. This enhances the effectiveness of problem text understanding and circuit analysis. Besides the solution system, related research also includes the generation of physical circuit knowledge point tags, the ResNeXt28 network, and equation reasoning. These auxiliary studies offer crucial technical support for physical problem-solving systems and provide valuable insights for the present study.

Graph neural network

Expressing data in the form of a graph is a novel approach in data processing that has found widespread application in various scenarios, such as knowledge graphs, which serve as graph structure knowledge bases. Knowledge graphs enable the representation of the structural relations between entities using multiple nodes and edges29. The simplest way to represent the graph structure is through an adjacency matrix, which captures the correlations between nodes and edges in the graph. Kipf and Welling introduced a simplified variant of GNNs known as Graph Convolutional Neural Networks (GCNs). GCNs leverage an effective variant of convolutional neural networks specifically designed for graph data. GCNs have been successfully applied to various tasks, including interest recommendation30, human motion recognition31 and price prediction32, demonstrating promising results on several benchmark datasets.

The development of network models with tree structures coincided with the emergence of sequence-graph and graph-tree structures in neural network models. Cao introduced the Seq2DAG33 method, recognizing that equations can be represented as directed acyclic graphs (DAGs) rather than simple sequences. In this approach, a leaf node represents a quantity, while internal and root nodes correspond to arithmetic or comparative operators. The Seq2DAG method directly extracts the equation set as a DAG structure and iteratively aggregates quantities and expressions in a bottom-up manner, making it suitable for solving multivariate problems. Li pointed out that existing Seq2Seq models either treat input objects as sequences, disregarding essential structural information, or consider output objects solely as sequence output rather than structural objects during decoding. To address this limitation, Li proposed Graph2Tree34, which uses a graph structure as the encoder for input encoding and a tree structure for output decoding. The Graph2Tree model outperforms classical Seq2Seq models. Bin proposed a Graph-to-Tree (G2T) model with a pseudo-dual learning scheme2. It decomposes the target into sub-targets connected by operators using graph encoding and a top-down recursive method. This process continues until the target is simple enough to be achieved through known leaf nodes. Meanwhile, the pseudo-dual learning scheme can enhance the reasoning ability of the model and improve the accuracy of problem solving. This approach fosters a more human-like machine solution process. Shen presented a multi-encoder and multi-decoder model35 to enhance the representation capability of text descriptions and generate diverse equation expressions. This method overcomes the low text-parsing accuracy of the classical Seq2Seq model. Tsai proposed the Sequence to General Tree (S2G)36 model, which represents nodes as formulas with corresponding quantity parameters and integrates mathematical domain knowledge into problem-solving to produce more interpretable results.

Methodology

This section describes the working principle of the Seq2FT model: its overall architecture, the role of direct relations in the problem text, the methods used to extract and process implicit relations, and the step-by-step problem-solving process.

Model architecture

Regarding the selection of techniques, we acknowledge the limited availability of relevant studies on applying computational methods to physics problem-solving. To ensure the reliability and feasibility of our research, we have opted to initiate our work with fundamental machine learning and deep learning techniques. If a prototype model is successfully developed and experimental data are obtained, more advanced methodologies can be employed in subsequent research to further optimize and enhance the model’s performance.

The Seq2FT model is rooted in the traditional Seq2Seq model, employing an Encoder-Decoder framework as its fundamental structure. However, we have incorporated several notable enhancements in various aspects of the model’s design.

Firstly, we have introduced a text preprocessing module dedicated to encoding the textual information within the question stem. This module identifies and extracts the direct relations present in the question, generating a precoding sequence that facilitates subsequent encoding operations. The encoder then processes this precoding sequence and generates a corresponding sequence of hidden states, on which the decoder bases its decoding operations. After weighing multiple factors, we opted to employ a bidirectional Gated Recurrent Unit (GRU) as the encoder’s architecture.

Furthermore, we address the problem of summarizing physical electrical theorems by constructing a graph-structured theorem library. This entails developing a graph convolutional network (GCN) encoder tailored to this task. The direct relations within the problem and the graph structure of the theorem library are passed to the GCN encoder, which assesses whether an electrical theorem from the theorem library should be embedded as a formula node within the formula tree. This generates a sequence of embedded state representations for the formula nodes, facilitating subsequent decoding operations. This aspect distinguishes the Seq2FT model from both the traditional model and other variations.

The sequences generated by the two distinct encoders are jointly transmitted to the decoder. Initially, the decoder generates two corresponding context vectors based on these distinct sequences. It then proceeds to predict the output states of each head and child node within the formula tree. Employing a top-down approach, the decoder decodes the final tree-structured formula. Similar to the encoder, the decoder predominantly adopts the GRU architecture. However, it incorporates additional specialized functionalities to enhance the prediction of output states at tree junctions.

In summary, the Seq2FT model extends the traditional Seq2Seq model by introducing modifications to its architecture. These alterations include a dedicated text preprocessing module, the utilization of a bidirectional GRU as the encoder, the integration of a GCN encoder for theorem library processing, and a decoder enhanced to predict output states within the tree structure. These refinements enable the Seq2FT model to effectively solve problems involving formula generation and enhance its performance compared to traditional models.

Figure 3 provides a concise representation of the structural composition of the Seq2FT model and illustrates the interconnections between its various modules. The model operates sequentially, progressing from left to right to ensure proper functioning and coherent processing of the input data. The diagram captures the model’s key components, highlighting the flow of information and the dependencies among the different modules, thereby facilitating a better understanding of the overall architecture of the Seq2FT model.

Figure 4 provides a comprehensive depiction of each module’s detailed composition and structure, presenting the operational outcomes and the interdependencies among the modules described in Fig. 3. This detailed representation serves as a foundation for further in-depth explanation and analysis of the significant modules in the subsequent sections. By meticulously examining each module’s functionality, relations, and operational processes, we aim to provide a more comprehensive understanding of the intricate workings of the Seq2FT model.

Preprocessing of problem text

Text preprocessing constitutes a pivotal aspect of this research endeavor. In problem texts, it is common to encounter known conditions that can be transformed into direct relations. It is crucial to accurately identify and extract these direct relations during the preprocessing phase, as it forms the foundation for all subsequent operations. The initial step in processing the input problem text involves standardization, where various textual elements are transformed to ensure consistency. This includes converting written physical units to their corresponding symbols (“Amp” to "A" and “Ohm” to "Ω") and unifying different names for the same physical quantity (“voltage drop” and “potential difference” to “voltage”).
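A minimal Python sketch of this standardization step is shown below. The lookup tables are illustrative only: the "Amp", "Ohm", "voltage drop", and "potential difference" entries follow the examples in the text, while the remaining entries, the function name `standardize`, and the sample sentence are assumptions.

```python
import re

# Illustrative lookup tables; only some entries come from the text above.
UNIT_SYMBOLS = {"amp": "A", "amps": "A", "ohm": "Ω", "ohms": "Ω", "volt": "V", "volts": "V"}
QUANTITY_SYNONYMS = {"voltage drop": "voltage", "potential difference": "voltage"}

def standardize(text):
    """Unify quantity names, then replace written units that follow a number."""
    for phrase, canonical in QUANTITY_SYNONYMS.items():
        text = re.sub(re.escape(phrase), canonical, text, flags=re.IGNORECASE)

    def to_symbol(match):
        return match.group(1) + " " + UNIT_SYMBOLS[match.group(2).lower()]

    # Longest unit names first so "volts" is tried before "volt".
    units = "|".join(sorted(UNIT_SYMBOLS, key=len, reverse=True))
    pattern = r"(\d+(?:\.\d+)?)\s*(" + units + r")\b"
    return re.sub(pattern, to_symbol, text, flags=re.IGNORECASE)

result = standardize("The potential difference is 6 Volts and the resistance is 12 Ohm.")
```

Units are only rewritten when they follow a number, which avoids touching ordinary words that happen to contain a unit name.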

Once the standardization process is complete, the text undergoes edge detection, word segmentation, and annotation, resulting in a word sequence with part-of-speech annotations representing the problem text’s annotated sequence. These operations contribute to the organization and structure of the information contained in the problem text, enabling further analysis and processing.

Figure 5 illustrates the intricate process of problem text processing. By analyzing the information presented in the figure, it becomes apparent that the annotation sequence is generated through the segmentation and annotation of the preprocessed problem text. It is crucial to note that the assignment of labels to specific words during the annotation process necessitates a predefined set of labels to ensure the precision and rigor of the annotation. For a comprehensive understanding of the specific labels assigned to words during the annotation process, please refer to the detailed table provided in the subsequent sections.

Table 2 provides a comprehensive enumeration of words and their corresponding labels, which are crucial for our subsequent analyses. Particular emphasis must be placed on specific information, including physical variables, numerical data, and physical units. The subsequent stage primarily involves performing relation extraction on the annotated sequences. Subsequently, we proceed to construct a concise semantic model that facilitates the identification of relevant information within the annotated sequence. By leveraging this model, we extract all direct relations that possess physical significance, thereby generating a consolidated summary of the direct relation group.

Figure 6 depicts the comprehensive procedure for obtaining the entire set of direct relations. The construction of the semantic model assumes a pivotal role in extracting these relations. In the context of electrical problems, we consider the primary focus to be on pertinent electrical-related physical variables, variable data, and units. Additionally, aside from direct relations, the problem text also incorporates fundamental grammar, which necessitates processing by the semantic model. Consequently, we define the semantic model as a triple: \(LM = (V, M, D)\), wherein \(V\) represents the electrical variables present in the problem text, \(M\) represents the grammatical vocabulary contained within the problem text, and \(D\) represents the extracted electrical relations. It is noteworthy that, for electrical topics, the expression format of direct relations is not uniquely defined. Thus, we can construct multiple semantic models, thereby forming a pool of semantic models from which we can extract direct relations expressed in diverse forms. The structure of the model pool is delineated in Eq. (1):

In (1), \({LM}_{n}\) represents the identifier for a specific semantic model, with the subscript " \(n\) " denoting the model number. The variables \({V}_{n}\), \({M}_{n}\), and \({D}_{n}\) in the triplet represent the lists of electrical variables, syntactic speech patterns, and electrical direct relations, corresponding to the specific semantic model.
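Equation (1) itself is not reproduced in this version of the text. Based only on the triple definition and the symbol descriptions given here, one consistent reading is the following; the pool symbol \({LM}_{pool}\) and the upper index \(N\) are assumptions introduced for illustration.

\[
{LM}_{pool} = \left\{ {LM}_{n} = ({V}_{n}, {M}_{n}, {D}_{n}) \mid n = 1, 2, \dots, N \right\}
\]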

To meet the experimental requirements, we constructed a model pool comprising 10 simple semantic models. Given that the dataset used in the experiment is not excessively complex, the simplicity of the model pool enables effective extraction of direct relations from the problem text. It should be noted that the part-of-speech labels employed in the semantic model slightly differ from those in the annotated sequence. The part-of-speech labels in the semantic model align well with the focus of this research paper, but their universality is relatively limited.

Table 3 presents a detailed breakdown of the part-of-speech labels used in the semantic model. These labels are applied consistently across all semantic models in the pool, facilitating the extraction of diverse forms of electrical direct relations. The subsequent Table 4 showcases the processing results obtained from the 10 different semantic models:

In Table 4, the combination of "\(V+M\)" represents the problem text features extracted by the semantic model, while D represents the direct relation extracted by the model from these text features. The ellipsis "…" represents other characters and annotation information present in the problem text. Additionally, the semantic model updates the annotation information of the annotated sequence, thereby facilitating subsequent preprocessing operations.
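The extraction step can be sketched in Python as simple pattern matching over the annotated sequence. The labels `VAR`, `BE`, `NUM`, `UNIT`, and `O`, the two patterns, and the function name are hypothetical stand-ins, since the actual labels and the ten semantic models are defined in the paper's tables.

```python
# Each token is (word, label). All labels here are hypothetical placeholders.
annotated = [("the", "O"), ("voltage", "VAR"), ("is", "BE"), ("6", "NUM"), ("V", "UNIT"),
             (",", "O"), ("resistance", "VAR"), ("of", "BE"), ("12", "NUM"), ("Ω", "UNIT")]

# A toy pool: each entry is the label pattern (the V + M part) one model recognises.
MODEL_POOL = [
    ("VAR", "BE", "NUM", "UNIT"),   # e.g. "voltage is 6 V"
    ("NUM", "UNIT", "VAR"),         # e.g. "6 V voltage"
]

def extract_direct_relations(tokens, pool):
    """Scan the sequence and emit (variable, value, unit) for every pattern hit."""
    relations, i = [], 0
    while i < len(tokens):
        for pattern in pool:
            window = tokens[i:i + len(pattern)]
            if tuple(label for _, label in window) == pattern:
                fields = {label: word for word, label in window}
                relations.append((fields["VAR"], float(fields["NUM"]), fields["UNIT"]))
                i += len(pattern) - 1   # skip past the matched span
                break
        i += 1
    return relations

relations = extract_direct_relations(annotated, MODEL_POOL)
```

Adding a model to the pool only means adding another label pattern, which is why a pool can cover several surface forms of the same relation.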

Our approach distinguishes itself from conventional relation extraction algorithms by introducing specific enhancements to improve performance and effectiveness. The direct relation group is marked using [CLS] and [END] identifiers at the beginning and end of the annotated sequence. This annotation sequence, including the identifiers, undergoes one-hot encoding, which is a common data preprocessing technique in deep learning. The one-hot encoding process is applied to the annotated sequence, enabling precoding operations. Additionally, the mean value of the two identifiers is calculated to obtain the corresponding sequence, denoted as \(K\). The final step involves assembling the precoding annotated sequence and the direct relation sequence using an embedded matrix, resulting in the final precoding sequence, denoted as \({T}_{K}\).
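The one-hot step can be sketched as follows in Python. The vocabulary is built from the toy sequence itself, which is an assumption made for brevity; the averaging of the two identifiers and the embedding matrix described above are not covered by this sketch.

```python
def one_hot_encode(tokens):
    """Map each token to a vector with a single 1 at its vocabulary index."""
    vocab = {tok: i for i, tok in enumerate(sorted(set(tokens)))}
    vectors = []
    for tok in tokens:
        row = [0] * len(vocab)
        row[vocab[tok]] = 1
        vectors.append(row)
    return vocab, vectors

# Toy annotated sequence wrapped in the [CLS] and [END] identifiers.
sequence = ["[CLS]", "voltage", "6", "V", "[END]"]
vocab, encoded = one_hot_encode(sequence)
```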

Precoding sequence processed by GRU encoder

GRU, as a variant of LSTM, offers similar effectiveness while simplifying the structure, making it more suitable for our solution process. Furthermore, considering the limited size of our dataset, employing GRU as an encoder helps mitigate overfitting issues commonly encountered with smaller datasets. Therefore, we adopt a bidirectional GRU with reduced parameters, capable of considering both preceding and succeeding information, as the encoder in our Seq2FT model to process the precoding sequence \({T}_{K}\).

In the encoder, the sequence of word embeddings is fed into the GRU from both left to right and right to left. The GRU takes the precoding sequence \(\{x'_{1}, x'_{2}, x'_{3}, \cdots, x'_{n}\}\) of the problem text as input and generates the hidden state sequence \(\{\overrightarrow{h_{1}}, \overrightarrow{h_{2}}, \dots, \overrightarrow{h_{n}}\}\) as output. At each step, the hidden state \(\overrightarrow{h_{s}}\) at position \(s\) is calculated from the previous hidden state \(\overrightarrow{h_{s-1}}\) and the current word embedding \(x'_{s}\), as shown in (2). Here, GRU represents a function consisting of a two-layer GRU. Similarly, in the opposite direction, the hidden state \(\overleftarrow{h_{s}}\) at step \(s\) is computed from the subsequent hidden state \(\overleftarrow{h_{s+1}}\) and the current word embedding \(x'_{s}\), as described in (3). The final hidden state \(h_{s}\) incorporates contextual information around the source token \(x'_{s}\) and is calculated in (4). Ultimately, the encoder outputs the hidden state sequence \(\{h_{1}, h_{2}, \dots, h_{n}, k_{1}, k_{2}, \dots, k_{n}\}\) containing the direct relations.
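Equations (2) to (4) are not reproduced in this version of the text. Read from the description above, they plausibly take the following form. The first two follow directly from the stated inputs of each direction; how the two directions are combined in (4) is not stated, so the sum shown here is an assumption (concatenation would be the other common choice).

\[
\overrightarrow{h_{s}} = \mathrm{GRU}\left(\overrightarrow{h_{s-1}},\, x'_{s}\right) \qquad (2)
\]

\[
\overleftarrow{h_{s}} = \mathrm{GRU}\left(\overleftarrow{h_{s+1}},\, x'_{s}\right) \qquad (3)
\]

\[
h_{s} = \overrightarrow{h_{s}} + \overleftarrow{h_{s}} \qquad (4)
\]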

The preprocessing and encoding procedure is presented in Algorithm 1.

Implicit relation acquisition by GCN encoder

The GCN encoder is a feature of the Seq2FT model. After obtaining the hidden state and direct relations from the text sequence through the encoder, the model proceeds to extract the implicit relations within the problem to solve for the answer based on these relations. To extract the implicit relations from the text sequence, we compile a comprehensive collection of electrical theorems required for solving electrical problems and construct an electrical theorem graph (TG). By combining the known conditions with the electrical theorems, we can derive the implicit relations. During the solution generation process, the model leverages the intricate processing capabilities of multi-layer neural networks to establish correspondences between the known conditions in the problem and the information acquired from the theorem graph. This enables the model to uncover the implicit electrical relations between different quantities.
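The idea of combining known conditions with theorems to reach quantities that the text never states can be illustrated with a rule-based Python sketch. This is deliberately not the GCN mechanism of the model: it is a plain forward-chaining stand-in, and the three-entry theorem list and the `derive` function are hypothetical.

```python
# Hypothetical miniature theorem library: (output quantity, input quantities, function).
THEOREMS = [
    ("I", ("U", "R"), lambda U, R: U / R),   # Ohm's law solved for current
    ("U", ("I", "R"), lambda I, R: I * R),   # Ohm's law solved for voltage
    ("P", ("U", "I"), lambda U, I: U * I),   # electric power
]

def derive(known, target):
    """Apply any theorem whose inputs are known until the target is reached."""
    known = dict(known)
    steps = []
    progress = True
    while target not in known and progress:
        progress = False
        for out, inputs, fn in THEOREMS:
            if out not in known and all(q in known for q in inputs):
                known[out] = fn(*(known[q] for q in inputs))
                steps.append(out)
                progress = True
    return known.get(target), steps

# Direct relations from the text give U and R; the current I is the implicit link to P.
power, steps = derive({"U": 6.0, "R": 12.0}, "P")
```

In this toy run the current is never mentioned in the known conditions, yet it is needed to reach the power, which is the kind of implicit relation the section refers to.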

The challenge lies in transforming an electrical theorem within an electrical graph into a format that can be comprehended by the model. The constructed electrical theorem graph primarily encompasses commonly used electrical formulas and the physical quantities they relate, such as current, voltage, resistance, and electric power. To establish connections between the units of electricity in the graph and their computational relationships, we introduce the formula nodes.

To parse the formula nodes, we employ a two-layer graph convolutional neural network (GCN). The GCN encoder converts the relation nodes within the electrical theorem graph into corresponding vector representations. Starting from the precoding sequence, the model uses layer-to-layer propagation to update the feature values of the nodes, thereby improving its understanding of the information encoded in the theorem graph. From the relationships between nodes and their feature values, we derive the adjacency matrix \(\boldsymbol{A}\) and the feature matrix \(\boldsymbol{B}^{*}\), which the GCN uses to generate the embedded state sequence \(Z\) for the formula nodes, as given in (5). The model can then use \(Z\) to recognize the information contained in the theorem graph.

Sequence \(Z\) holds the embedding state of each node in the theorem graph; the decoder uses it to identify the physical quantity that each parameter of a formula stands for.
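To make the propagation step concrete, the following is a minimal sketch of a two-layer GCN that maps an adjacency matrix \(A\) and a node-feature matrix \(B^{*}\) to node embeddings \(Z\). It is not the authors' implementation: the symmetric normalization with self-loops, the ReLU between layers, the toy four-node graph (one formula node for U = I * R linked to three quantity nodes), and the weight values are all assumptions chosen for illustration.

```python
import math

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def normalize_adjacency(adj):
    """Return D^{-1/2} (A + I) D^{-1/2}, a normalization commonly used with GCNs."""
    n = len(adj)
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in a_hat]
    return [[a_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]

def relu(m):
    """Elementwise max(0, x)."""
    return [[max(0.0, v) for v in row] for row in m]

def gcn_two_layer(adj, feats, w1, w2):
    """Two propagation steps: Z = A_norm * relu(A_norm * X * W1) * W2."""
    a_norm = normalize_adjacency(adj)
    hidden = relu(matmul(matmul(a_norm, feats), w1))
    return matmul(matmul(a_norm, hidden), w2)

# Toy theorem graph (assumed): node 0 is the formula node "U = I * R",
# nodes 1-3 are the quantity nodes U, I and R connected to it.
A = [[0, 1, 1, 1],
     [1, 0, 0, 0],
     [1, 0, 0, 0],
     [1, 0, 0, 0]]
B_star = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]  # one-hot features
W1 = [[0.1, -0.2, 0.3], [0.0, 0.5, -0.1], [0.2, 0.1, 0.0], [-0.3, 0.2, 0.4]]  # illustrative
W2 = [[0.5, -0.5], [0.1, 0.2], [-0.2, 0.3]]                                    # illustrative

Z = gcn_two_layer(A, B_star, W1, W2)  # one 2-dimensional embedding per node
```

In a trained model the weight matrices would be learned jointly with the encoder and decoder rather than fixed by hand.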

The pseudo-code for the GCN parsing process is shown in Algorithm 2.

A Tree-structure decoder generates the formula tree

The decoder is a central component of this study. The tree-structured decoder avoids generating redundant equations and makes the solution process more intuitive and interpretable, because tree nodes represent only the electrical theorems relevant to the problem. The decoder produces the formula tree for a problem by combining the hidden state sequence and direct relation sequence \(H_K\) obtained from the encoder with the embedding state sequence \(Z\) derived from the theorem graph nodes by the GCN.

The decoding process unfolds as follows. Initially, the decoder is provided with the initial state \({s}_{n}\). The output state \({y}_{n}\) for the current node is predicted, and the associated child node index is determined. If the current node represents a formula, the child node is selected based on the units encompassed within the formula. Conversely, if the current node is not a formula node, the decoder decodes the operator and the corresponding child node index based on the previous three state sequences. In other words, the final solution tree consists of a formula tree and an ordinary tree. The decoder employs two context vectors to calculate the probability that the solution tree corresponds to a formula tree.

Using the embedded state \(i_{y_{n-1}}\) of the previous node and the hidden state \(h_n\) of the current node, the attention mechanism computes the first context vector \(c_n\) in (6). Similarly, using the embedded state sequence \(Z\) of the unit nodes within the electrical theorem graph and the hidden state \(h_n\) of the current node, the attention mechanism generates the second context vector \(d_n\) in (7). The prediction probability \(P\) is then computed in (8) and the final output state \(y_n^{*}\) in (9).

It is noteworthy that the Seq2FT model differs from the conventional Seq2Tree model in that the generated expression tree is not strictly binary. When a node is predicted, the number of its child nodes is not known in advance. Consequently, the entire prediction process can be viewed as the top-down generation of a multi-way tree. Given the current state \(s_n\), the embedded state \(i_{y_{n-1}}\) of a node, and the context vector \(c_n\), we predict its first child node \(s_1^n\). The remaining child nodes are then predicted from their preceding sibling node, allowing the decoder to predict all child nodes \(s_n^n\) in (10) of a node whose current state is \(s_n\).

The decoder of the Seq2FT model is built on a GRU combined with a projection layer and an activation function. The GRU first receives the embedding state \(i_{y_n}\) of the child node and the context vector \(c_n\). It then calculates the decoding state \({s'}_{n}^{n}\) in (11) of the child node from the state of its preceding sibling node. After the projection and activation steps, the final output state \({s^{*}}_{n}^{n}\) in (12) of the child node is obtained. We sequentially push the child nodes onto the stack and iterate the decoding process until all child nodes have been processed and removed from the stack.
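The control flow of this stack-based, top-down expansion can be sketched in a few lines. The sketch below only illustrates how a tree with a variable number of children per node is grown with a stack; the learned GRU, attention and sibling-state updates are replaced by a hypothetical lookup table `TOY_CHILDREN`, and the node labels are invented for the example.

```python
from dataclasses import dataclass, field

@dataclass
class Node:
    label: str
    children: list = field(default_factory=list)

def decode_tree(root_label, predict_children):
    """Grow a tree top-down; each popped node is expanded into its predicted children."""
    root = Node(root_label)
    stack = [root]
    while stack:
        node = stack.pop()
        # In the real model the children are predicted one sibling at a time;
        # here a callable simply returns the whole list of child labels.
        for child_label in predict_children(node.label):
            child = Node(child_label)
            node.children.append(child)
            stack.append(child)
    return root

# Hypothetical stand-in for the learned predictor: formula nodes expand into
# the quantities they contain, every other label is a leaf.
TOY_CHILDREN = {
    "P=U*I": ["U=I*R", "I"],
    "U=I*R": ["I", "R"],
}

tree = decode_tree("P=U*I", lambda label: TOY_CHILDREN.get(label, []))
```

Because children are attached to their parent before being pushed, the order in which the stack is emptied does not affect the shape of the resulting tree.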

The pseudo-code for the decoding process is shown in Algorithm 3.

Solution generation

The primary objective of problem solving is to traverse the decoded formula tree and derive the corresponding unique equation, which the model then uses to calculate the final answer. However, due to the specific nature of physical circuit problems, some may require the application of electrical theorem formulas, while others may not. Consequently, the formula trees we decode can be categorized into two types. The first type consists of formula trees incorporating electrical theorem nodes, whereas the second type comprises ordinary expression trees without such nodes.

For formula trees of the first type, the model converts the tree into the corresponding equation sequence and calculates the answer using the specific formula provided in the electrical theorem graph, taking the number of leaf nodes into account. For ordinary expression trees of the second type, the model extracts the corresponding equation by traversing the tree and solves it for the answer.
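A minimal sketch of this traversal is given below, under several assumptions: trees are encoded as nested tuples `(head, child, ...)` with numeric leaves, theorem nodes are looked up in a small hypothetical table `THEOREMS`, and ordinary nodes are binary arithmetic operators. The theorem names and the encoding are illustrative and are not taken from the paper.

```python
import operator

# Hypothetical theorem library: each entry computes one quantity from its children.
THEOREMS = {
    "ohm_voltage": lambda current, resistance: current * resistance,  # U = I * R
    "power": lambda voltage, current: voltage * current,              # P = U * I
}

# Ordinary binary operators used by plain expression trees.
OPERATORS = {
    "+": operator.add,
    "-": operator.sub,
    "*": operator.mul,
    "/": operator.truediv,
}

def evaluate(node):
    """Recursively evaluate a tree of theorem nodes, operator nodes and numeric leaves."""
    if isinstance(node, (int, float)):
        return float(node)
    head, *children = node
    args = [evaluate(child) for child in children]
    if head in THEOREMS:
        return THEOREMS[head](*args)
    return OPERATORS[head](*args)

# First type: a formula tree with theorem nodes (I = 0.5 A, R = 12 ohm, find P).
formula_tree = ("power", ("ohm_voltage", 0.5, 12), 0.5)
# Second type: an ordinary expression tree, 6 / (4 + 8).
expression_tree = ("/", 6, ("+", 4, 8))

power = evaluate(formula_tree)
ratio = evaluate(expression_tree)
```

Both tree types go through the same recursive function; the only difference is which table the node head is resolved against.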

Finally, the model is trained and assessed through the value of the loss function. The loss is a cross-entropy loss derived from maximum likelihood estimation, which measures the discrepancy between the generated equation and the target equation. Each dataset entry includes an ID, the problem text, the corresponding solution equation, and the answer. By comparing the generated equation sequence with the target equation provided in the dataset, the model calculates the loss value and backpropagates it to update the parameters. The loss function is given in (13).
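As an illustration of a cross-entropy loss over an equation sequence, the sketch below sums \(-\log P(y_t)\) over the target tokens. Whether the paper sums or averages over steps is not stated here, so the summed form is an assumption, and the tiny vocabulary and probabilities are invented for the example.

```python
import math

def sequence_nll(step_distributions, target_tokens):
    """Sum of -log P(target token) over decoding steps (cross-entropy with one-hot targets)."""
    return -sum(math.log(dist[token]) for dist, token in zip(step_distributions, target_tokens))

# Toy predicted distributions for a three-token target equation.
predicted = [
    {"U": 0.5, "I": 0.25, "R": 0.25},
    {"=": 1.0},
    {"I*R": 0.5, "P/I": 0.5},
]
target = ["U", "=", "I*R"]

loss = sequence_nll(predicted, target)  # equals 2 * ln(2) for these numbers
```

The loss is zero only when every target token receives probability 1, and it grows as probability mass moves to other tokens.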

Fig. 7 summarizes the complete process of solving electrical text problems with the Seq2FT model.

Dataset

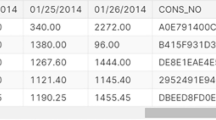

The TexPE-3K dataset comprises six distinct subsets corresponding to different types of electrical problems: current, voltage, resistance, electrical energy, electrical power, and electrical heat. Constructed in alignment with the Chinese junior high school physics curriculum, the dataset is derived from textbooks, exercise books, and test papers. It contains 3027 textual problems related to electricity, each systematically annotated with solution formulas, answers, and standardized problem statements. A comprehensive overview of the TexPE-3K dataset, including its composition and scale, is provided in Table 5.

Table 5 provides detailed statistics on various parameters within the TexPE-3K dataset. These parameters include the problem type, annotation mode, and corresponding proportions. To show how the annotation methods used in the TexPE-3K dataset differ, we present one example of each (Tables 6 and 7). The formula annotation method includes electrical theorem formulas and their corresponding parameters as summarized in the theorem graph. In contrast, the expression annotation includes only the corresponding solution formula and omits the theorem formula found in the theorem graph. To comprehensively evaluate the model’s performance, we partition the TexPE-3K dataset into five equally sized experimental datasets. In each experiment, one is designated for testing, while the remaining four are used for training. This process is repeated five times, and the final result is obtained by averaging the results across all experiments. The distribution of question types within each experimental dataset is proportional to that of the overall dataset, so that every question type is represented in a balanced manner.
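The partitioning scheme described above (five folds, each preserving the proportion of question types) can be sketched as follows. This is one simple way to obtain such a split and not necessarily the procedure the authors used; the round-robin assignment, the absence of shuffling, and the toy label list are assumptions.

```python
from collections import defaultdict

def stratified_folds(labels, k=5):
    """Assign each index to one of k folds, round-robin within each problem type."""
    by_type = defaultdict(list)
    for idx, label in enumerate(labels):
        by_type[label].append(idx)
    folds = [[] for _ in range(k)]
    for indices in by_type.values():
        for pos, idx in enumerate(indices):
            folds[pos % k].append(idx)
    return folds

def cross_validation_splits(labels, k=5):
    """Yield (train_indices, test_indices) pairs, using each fold once for testing."""
    folds = stratified_folds(labels, k)
    for test_id in range(k):
        train = [i for fold_id, fold in enumerate(folds) if fold_id != test_id for i in fold]
        yield train, folds[test_id]

# Toy label list: 10 "current" problems and 5 "voltage" problems.
labels = ["current"] * 10 + ["voltage"] * 5
splits = list(cross_validation_splits(labels, k=5))
```

With these toy labels every test fold holds two "current" problems and one "voltage" problem, mirroring the 2:1 ratio of the full list; the reported accuracy would then be the mean over the five test folds.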

Experiment

Most of the existing state-of-the-art models primarily emphasize semantic and structural analysis as the core of their research. In this study, we focus on optimizing relation extraction within the Seq2FT model. Accordingly, our experimental design consists of two key components: first, a comparative analysis of the proposed relation extraction method against existing approaches; and second, an evaluation of the Seq2FT model’s performance relative to existing models.

The first part involves conducting a comparative analysis between the proposed relation extraction method and existing methods. Accuracy comparisons are made across the entire dataset as well as individual sub-datasets. Through this analysis, we aim to identify the factors contributing to the discrepancies in results and elucidate potential avenues for optimization. The existing methods selected for comparison include the relation extraction method based on the \(S^{2}\) semantic model proposed by Jian26, the relation acquisition method based on the extended \(S^{2}\) (\(Ex\_S^{2}\)) semantic model proposed by He27, and the relation extraction method based on the fusion semantic model \(S^{2}P + L^{2}\) proposed by Yu28.

In the second part of our experimentation, we provide an overview of the key parameters of the Seq2FT model and compare its performance against existing models using the experimental dataset. Throughout the comparative analysis, we keep the training steps and verification methods consistent, allowing us to contrast the variations in the loss function trajectory and overall solution accuracy exhibited by different models. We then analyze the underlying factors contributing to the observed differences in results and outline potential directions for model optimization. The models selected for comparison include the Graph-Transformer model20, the Graph-to-tree model with pseudo-dual learning scheme (PseDual-G2T)2, the LLaMA model (Math-LLaMA)21, the GPT-3.5 model20, and the GPT-4o-mini model37. It should be noted that the two GPT models are used exclusively for the evaluation of output accuracy, with no experimental comparisons made regarding their loss function trajectories.

Experiment of relation acquisition methods

During preprocessing, we employ 10 semantic models to effectively detect and extract direct relations within the problem text, thereby generating an annotated sequence. To accommodate the various electrically significant physical quantities (such as voltage, current, resistance, electric power, electric energy, and electric heat) present in the dataset, we determine the size of the state register in the one-hot encoder to be 7, corresponding to the number of relevant quantities. This enables us to encode and represent the different categories of information in a suitable format for further analysis and processing.

Table 8 presents the accuracy results obtained from the four relation acquisition methods applied to the TexPE-3K dataset, along with the average accuracy for each subset. Our relation acquisition method shows clear advantages on every subset and a slight advantage on the complete dataset, which supports the effectiveness of our optimization efforts. The results of the existing methods remain relatively consistent across the complete dataset and the individual subsets, whereas the results of our method vary noticeably. We hypothesize that as the number of feature categories increases during the one-hot encoding process, the dimensionality of the feature space also increases, potentially leading to a decrease in accuracy.

Model experiment

During the development of the Seq2FT model, we set the word embedding dimension to 128, taking into account the size of the experimental dataset. The hidden state dimension of the GRU encoder was set to 512, with a dropout rate of 50%. For the GCN encoder, the hidden state dimension was set to 512, and the tensor dimension was fixed at 5. For training, we used a batch size of 64, a learning rate of 0.001, and a weight decay of 1e-5. To assess the accuracy of the model’s solutions, we employed fivefold cross-validation.
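For readers reproducing the setup, the reported settings can be collected in a single configuration object. The sketch below is only a convenience container; the class name `Seq2FTConfig` and all field names are hypothetical, while the values are those stated in the text (the layer counts come from the two-layer GRU and two-layer GCN described earlier).

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Seq2FTConfig:
    """Hypothetical container for the hyperparameters reported in the text."""
    embedding_dim: int = 128      # word embedding dimension
    gru_hidden_dim: int = 512     # hidden state dimension of the GRU encoder
    gru_layers: int = 2           # two-layer GRU
    dropout: float = 0.5          # 50% dropout
    gcn_hidden_dim: int = 512     # hidden state dimension of the GCN encoder
    gcn_layers: int = 2           # two-layer GCN
    gcn_tensor_dim: int = 5       # "tensor dimension" as reported
    batch_size: int = 64
    learning_rate: float = 1e-3
    weight_decay: float = 1e-5
    cv_folds: int = 5             # fivefold cross-validation

config = Seq2FTConfig()
```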

The trajectory of the loss function serves as an indicator of the model’s learning capacity. In this study, we focus on the decay trend of the loss and on signs of potential overfitting; the absolute value of the loss is not the primary concern.

As illustrated in Fig. 8, extending the training period results in more stable and well-behaved loss curves for the Seq2FT and Transformer models. In contrast, the PseDual-G2T and LLaMA models exhibit irregular loss patterns, indicating potential instability or convergence issues in their training process. This finding indicates that the Seq2FT model does not demonstrate a significant disadvantage in learning capability, despite being based on a more fundamental technique.

However, it is important to acknowledge that the learning capacity of the Seq2FT model remains inferior to that of the Graph-Transformer model, which incorporates more sophisticated architectural designs and optimization strategies. In particular, the loss curve of the Graph-Transformer model is smoother and converges more completely and consistently than that of the Seq2FT model. This observation highlights the structural advantages of the Graph-Transformer architecture, particularly its ability to model complex relational dependencies through graph-based representations. By effectively capturing both local and global contextual information, the Graph-Transformer facilitates more stable optimization and accelerates convergence during training.

In contrast, while the Seq2FT model achieves reasonable performance, its loss trajectory appears comparatively less smooth and exhibits slower convergence. This may be partially attributed to the sequential nature of its architecture, which, although effective in modeling temporal dependencies, may be less expressive in capturing complex structural relationships inherent in the data. Nevertheless, this also highlights potential opportunities for enhancing the Seq2FT model by integrating more expressive relational modeling mechanisms or hybrid architectures that combine the strengths of sequence-based and graph-based representations. These insights provide valuable directions for future work aimed at further improving the model’s learning capacity and generalization performance.

Table 9 presents the solution accuracy of the six models. The Seq2FT model achieves approximately 2% higher solving accuracy than the PseDual-G2T and Math-LLaMA models. However, the Graph-Transformer model and the two GPT models achieve solution accuracies comparable to that of the proposed Seq2FT model. This observation suggests that, despite its tailored design for the target task, the Seq2FT model does not exhibit a significant performance advantage over state-of-the-art models built upon the Transformer architecture. These results highlight the competitive effectiveness of existing Transformer-based frameworks, particularly those leveraging full self-attention mechanisms and structural inductive biases, in capturing complex patterns in the data.

In future work, we plan to enhance the Seq2FT model by incorporating advanced Transformer techniques such as graph-aware attention, relational encoding, and structural priors, which have demonstrated significant improvements in tasks involving non-Euclidean data. Additionally, the integration of multi-scale context modeling and dynamic attention mechanisms could further improve the model’s ability to capture both sequential and structural dependencies, thereby improving its generalization and overall performance. By leveraging these innovations, we aim to refine the Seq2FT architecture to better compete with top-performing models in the Transformer family and achieve superior results in the target domain.

Conclusions

This paper presents the Seq2FT model, which is specifically designed for solving junior high school electrical text problems. The model is capable of identifying both explicit and implicit relations within the problem text and learning to generate tree-based solution formulas. Unlike traditional models, the nodes in the formula tree generated by Seq2FT are not limited to ordinary binary tree nodes; they also include specific nodes that represent electrical theorem formulas. To facilitate this, we construct a graph-based theorem library and use a Graph Convolutional Network (GCN) to process and identify its contents. This approach simplifies the final tree-based solution formulas and leads to promising experimental results.

Discussion

This paper focuses on exploring the formula-solving characteristics of physical problems and their mathematical solutions, aiming to make significant advancements in this field. However, there are certain limitations to be addressed. Firstly, the scope of physical problems addressed in this paper is primarily limited to electrical text problems, and the constructed model may not effectively handle the diversity of problem types and various forms of expression. Moreover, the process of solving physical problems often involves incorporating domain-specific laws and experiences. While this paper uses large-scale dataset training to impart some rules and experiences to the model, it does not extensively explore more efficient approaches or methods for knowledge integration. These two aspects serve as important directions for future research in this area. Nevertheless, the results presented in this paper demonstrate the successful application of formula-based solutions to physical problems, with the annotation of different problem types. This contributes to enhancing the model’s capability in processing textual problems and improving the accuracy of problem-solving.

Data availability

The data that support the findings of this study are available on https://github.com/Anypp/ElectricalProblems.

References

Zhang, J. et al. Graph-to-Tree Learning for Solving Math Word Problems. in Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics 3928–3937 (Association for Computational Linguistics, Stroudsburg, PA, USA, 2020). https://doi.org/10.18653/v1/2020.acl-main.362.

Bin, Y., Shi, W., Ding, Y., Yang, Y. & Ng, S. K. Solving math word problems with re-examination (2023).

Jie, Z., Li, J. & Lu, W. Learning to Reason Deductively: Math Word Problem Solving as Complex Relation Extraction. in Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers) 5944–5955 (Association for Computational Linguistics, Stroudsburg, PA, USA, 2022). https://doi.org/10.18653/v1/2022.acl-long.410.

Najafi, S. & Fyshe, A. Weakly-Supervised Questions for Zero-Shot Relation Extraction (2023), [Online]. Available: http://arxiv.org/abs/2301.09640.

Yan, Z., Jia, Z. & Tu, K. An Empirical Study of Pipeline vs. Joint Approaches to Entity and Relation Extraction, Short Papers. [Online]. Available: https://github.com/.

Sun, Q. et al. Joint extraction of entities and overlapping relations by improved graph convolutional networks. Appl. Intell. 52(5), 5212–5224. https://doi.org/10.1007/s10489-021-02667-x (2022).

Xu, M., Pi, D., Cao, J. & Yuan, S. A novel entity joint annotation relation extraction model. Appl. Intell. 52(11), 12754–12770. https://doi.org/10.1007/s10489-021-03002-0 (2022).

Hong, Y. et al. SMART: A situation model for algebra story problems via attributed grammar. Proc. AAAI Conf. Artif. Intell. 35(14), 13009–13017. https://doi.org/10.1609/aaai.v35i14.17538 (2021).

Liu, Q. et al. RODA: Reverse operation based data augmentation for solving math word problems. IEEE/ACM Trans. Audio Speech Lang. Process 30, 1–11. https://doi.org/10.1109/TASLP.2021.3126932 (2022).

Qin, J., Lin, L., Liang, X., Zhang, R. & Lin, L. Semantically-Aligned Universal Tree-Structured Solver for Math Word Problems (2020), [Online]. Available: http://arxiv.org/abs/2010.06823.

Wu, Q., Zhang, Q., Wei, Z. & Huang, X. Math Word Problem Solving with Explicit Numerical Values. in Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing (Volume 1: Long Papers) 5859–5869 (Association for Computational Linguistics, Stroudsburg, PA, USA, 2021). https://doi.org/10.18653/v1/2021.acl-long.455.

Guo, F., Jian, P., Wang, Y. & Wang, Q. A Framework of Cross-Modal Learning for Solving Geometry Problems. in 2021 IEEE International Conference on Engineering, Technology & Education (TALE) 506–512 (IEEE, 2021). https://doi.org/10.1109/TALE52509.2021.9678945.

Jian, P., Guo, F., Wang, Y. & Li, Y. Solving geometry problems via feature learning and contrastive learning of multimodal data. Comput. Model. Eng. Sci. 136(2), 1707–1728. https://doi.org/10.32604/cmes.2023.023243 (2023).

Guo, F. & Jian, P. A Graph Convolutional Network Feature Learning Framework for Interpretable Geometry Problem Solving. in 2022 International Conference on Intelligent Education and Intelligent Research (IEIR) 59–64 (IEEE, 2022). https://doi.org/10.1109/IEIR56323.2022.10050084.

Lin, X. et al. HMS: A hierarchical solver with dependency-enhanced understanding for math word problem. Proc. AAAI Conf. Artif. Intell. 35(5), 4232–4240. https://doi.org/10.1609/aaai.v35i5.16547 (2021).

Zhang, W. et al. Multi-View Reasoning: Consistent Contrastive Learning for Math Word Problem (2022) [Online]. Available: http://arxiv.org/abs/2210.11694.

Li, A., Jiang, X., Liu, B., Liang, J. & Xiao, Y. Tackling Math Word Problems with Fine-to-Coarse Abstracting and Reasoning (2022) [Online]. Available: http://arxiv.org/abs/2205.08274.

Faldu, K., Sheth, A., Kikani, P. & Patel, D. MMTM: Multi-Tasking Multi-Decoder Transformer for Math Word Problems (2022) [Online]. Available: http://arxiv.org/abs/2206.01268.

Shin, S., Park, J. & Ryu, M. Integrating heterogeneous graphs using graph transformer encoder for solving math word problems. IEEE Access 11, 27609–27619. https://doi.org/10.1109/ACCESS.2023.3257571 (2023).

Shakarian, P., Koyyalamudi, A., Ngu, N. & Mareedu, L. An independent evaluation of ChatGPT on mathematical word problems (MWP) (2023) [Online]. Available: http://arxiv.org/abs/2302.13814

Touvron, H. et al. LLaMA: Open and Efficient Foundation Language Models (2023).

Liu, H., Li, C., Wu, Q. & Lee, Y. J. Visual instruction tuning. Adv. Neural Inf. Process. Syst. 36, 34892–34916 (2023).

Zhuang, W., Huang, X., Zhang, X. & Zeng, J. Math-PUMA: Progressive Upward Multimodal Alignment to Enhance Mathematical Reasoning (2024).

Driess, D., Ha, J. S. & Toussaint, M. Learning to solve sequential physical reasoning problems from a scene image. Int. J. Robot. Res. 40(12–14), 1435–1466. https://doi.org/10.1177/02783649211056967 (2021).

Ota, K. et al. Data-efficient learning for complex and real-time physical problem solving using augmented simulation. IEEE Robot. Autom. Lett. 6(2), 4241–4248. https://doi.org/10.1109/LRA.2021.3068887 (2021).

Jian, P., Sun, C., Yu, X., He, B. & Xia, M. An end-to-end algorithm for solving circuit problems. Int. J. Pattern Recognit. Artif. Intell. 33(07), 1940004. https://doi.org/10.1142/S0218001419400044 (2019).

He, B., Yu, X., Jian, P. & Zhang, T. A relation based algorithm for solving direct current circuit problems. Appl. Intell. 50(7), 2293–2309. https://doi.org/10.1007/s10489-020-01667-7 (2020).

Yu, X., Sun, H. & Sun, C. A relation-centric algorithm for solving text-diagram function problems. J. King Saud Univ. – Comput. Inf. Sciences 34(10), 8972–8984. https://doi.org/10.1016/j.jksuci.2022.08.023 (2022).

Ji, S., Pan, S., Cambria, E., Marttinen, P. & Yu, P. S. A survey on knowledge graphs: Representation, acquisition, and applications. IEEE Trans. Neural Netw. Learn. Syst. 33(2), 494–514. https://doi.org/10.1109/TNNLS.2021.3070843 (2022).

Yang, Y., Huang, C., Xia, L. & Li, C. Knowledge Graph Contrastive Learning for Recommendation. in Proceedings of the 45th International ACM SIGIR Conference on Research and Development in Information Retrieval 1434–1443 (ACM, New York, NY, USA, 2022). https://doi.org/10.1145/3477495.3532009.

Zhang, J. & Li, Y. SL-GCNN: A graph convolutional neural network for granular human motion recognition. IEEE Access 13, 12373–12387. https://doi.org/10.1109/ACCESS.2024.3514082 (2025).

Amiri, B., Haddadi, A. & Farajpour Mojdehi, K. A novel hybrid GCN-LSTM algorithm for energy stock price prediction: leveraging temporal dynamics and inter-stock relationships. IEEE Access 13, 24815–24832. https://doi.org/10.1109/ACCESS.2025.3536889 (2025).

Cao, Y., Hong, F., Li, H. & Luo, P. A bottom-up DAG structure extraction model for math word problems. Proc. AAAI Conf. Artif. Intell. 35(1), 39–46. https://doi.org/10.1609/aaai.v35i1.16075 (2021).

Li, S., Wu, L., Feng, S., Xu, F., Xu, F. & Zhong, S. Graph-to-Tree Neural Networks for Learning Structured Input-Output Translation with Applications to Semantic Parsing and Math Word Problem (2020) [Online]. Available: http://arxiv.org/abs/2004.13781.

Shen, Y. & Jin, C. Solving Math Word Problems with Multi-Encoders and Multi-Decoders. in Proceedings of the 28th International Conference on Computational Linguistics 2924–2934 (International Committee on Computational Linguistics, Stroudsburg, PA, USA, 2020). https://doi.org/10.18653/v1/2020.coling-main.262.

Tsai, S. H., Liang, C. C., Wang, H. M. & Su, K. Y. Sequence to General Tree: Knowledge-Guided Geometry Word Problem Solving (2021) [Online]. Available: http://arxiv.org/abs/2106.00990.

Saadat, A., Sogir, T. B., Chowdhury, M. T. A. & Aziz, S. When Not to Answer: Evaluating Prompts on GPT Models for Effective Abstention in Unanswerable Math Word Problems (2024).

Acknowledgements

The authors gratefully acknowledge the helpful comments and suggestions of the reviewers and editors, which improved the presentation of this research.

Funding

This research was supported by the National Natural Science Foundation of China (No. 62107014), the Youth Talent Support Project of Henan Province (2023HYTP046), and the Key Research Project of Higher Education Institutions in Henan Province (25A520006).

Author information

Contributions

Conceptualization and methodology, P.J. and Y.W.; software, H.L.; validation, P.J., Y.W. and H.L.; formal analysis and investigation, M.Y.; resources, H.L.; data curation, P.J.; writing—original draft preparation, H.L. and Y.W.; writing—review, edit and revise, Y.W. and H.L.; visualization, H.L.; project administration, P.J. and Y.L.; funding acquisition, Y.W. and P.J. All authors have read and agreed to the published version of the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Wang, Y., Jian, P., Liu, H. et al. A sequence to formula tree model for solving electrical text problems. Sci Rep 15, 19267 (2025). https://doi.org/10.1038/s41598-025-04392-8

DOI: https://doi.org/10.1038/s41598-025-04392-8