Abstract

Accurate and precise identification of cholelithiasis is essential for saving the lives of millions of people worldwide. Although several computer-aided cholelithiasis diagnosis approaches have been introduced in the literature, their use is limited because Convolutional Neural Network (CNN) models are black boxes by nature. Therefore, a novel approach for cholelithiasis classification using a custom CNN with post-hoc model explanation is proposed. This paper presents multiple contributions. First, a custom CNN architecture is proposed to classify and predict cholelithiasis from ultrasound images. Second, a modified deep convolutional generative adversarial network is proposed to produce synthetic ultrasound images for better model generalization. Third, a hybrid visual explanation method is proposed by combining gradient-weighted class activation mapping with local interpretable model-agnostic explanation to generate a visual explanation using a heatmap. Fourth, an exhaustive performance analysis of the proposed approach on ultrasound images collected from three different Indian hospitals is presented to showcase its efficacy for computer-aided cholelithiasis diagnosis. Fifth, a team of radiologists evaluates and validates the predictions and respective visual explanations made using the proposed approach. The results reveal that the proposed cholelithiasis classification approach outperforms state-of-the-art pre-trained CNN and Vision Transformer models. The heatmap generated through the proposed hybrid explanation method offers detailed visual explanations to enhance transparency and trustworthiness in the medical domain.

Introduction

Cholelithiasis is a widespread medical disorder that affects millions of patients worldwide1,2. It is characterized by the formation of stones, commonly known as gallstones, in the gallbladder. When gallstones block the flow of bile, they cause a range of complications such as cholelithiasis, infection of the bile ducts, and inflammation of the gallbladder and pancreas3,4,5. The clinical procedure of cholelithiasis diagnosis relies on diagnostic information collected from physical examination, blood tests, and medical imaging tests, as shown in Fig. 1. However, the clinical procedure is time-consuming and expensive, and it is sometimes inaccurate6,7. The symptoms of cholelithiasis identified in a patient can be ambiguous, as they can overlap with the symptoms of other diseases8,9. Furthermore, diagnosing cholelithiasis, particularly in the early stages, can be challenging due to overlapping features of multiple abnormalities present in the gallbladder.

Ultrasonography (USG) is a widely employed imaging test due to its safety, non-invasiveness, and effectiveness in visualizing the gallbladder and associated diseases7,10,11. Although cholelithiasis is a prevalent condition affecting millions of people globally, its diagnosis still relies heavily on radiologists’ manual interpretation of ultrasound images1,2,5. The interpretation of radiological findings remains subjective and prone to error12,13. This reliance on humans can lead to variability in diagnostic accuracy, particularly in cases where radiologists are not readily available14. As a result, computer-aided cholelithiasis diagnosis is needed to enhance diagnostic accuracy and clinical decision-making. Moreover, the integration of deep learning into clinical workflows can significantly augment radiologists’ capabilities. Unlike conventional techniques that rely on a fixed set of clinical procedures, deep learning models can continuously improve by learning from new data. This adaptability could prove invaluable in evolving clinical scenarios, where the nature of diseases and imaging techniques may change over time.

Since the advent of CNN, Computer-Aided Diagnosis (CAD) has greatly influenced medical image classification and prediction15,16. Most CAD systems utilize CNNs due to their ability to encode deep visual representations of various diseases17,18. However, inference using deep CNNs is typically slow due to millions of learnable parameters and complex dependencies between regions of ultrasound images. In addition, the opacity of CNN models due to their black box nature presents a critical hurdle in their clinical adoption19,20,21. Explainability is vital in healthcare to build the trust of healthcare professionals in Artificial Intelligence (AI) applications20. Therefore, Explainable AI (XAI) methods have become increasingly crucial for enhancing computer-aided diagnostic precision. XAI methods22,23,24,25,26 aim to interpret the decisions of CNN models to make them more transparent and interpretable. Even though many researchers have attempted CNN model explainability for generic images, developing visual explanation methods for medical images like ultrasound images is challenging.

Motivation

The insights from the literature survey show that research specific to CNNs in cholelithiasis diagnosis is limited due to the unavailability of open-source ultrasound image datasets27,28. Additionally, existing diagnosis approaches rely heavily on pre-trained CNN models, which often result in complex and large models27,29,30. Consequently, there is a compelling need to enhance diagnostic accuracy while providing explanations for the decision. Furthermore, healthcare professionals need to know the rationale behind the diagnosis provided by the CAD system. Hence, the lack of transparency in CAD systems hinders their integration into clinical practice and limits their acceptance.

Our extensive study and interaction with radiologists from three Indian hospitals, namely, the Post-graduate Institute of Medical Education and Research (PGIMER) located in Chandigarh, SIDS Hospital and Research Centre located in Surat, and Parul Sevashram Hospital located in Vadodara, have led us to conclude that the development of accurate and explainable methods for cholelithiasis diagnosis is a crucial requirement. Thus, a novel cholelithiasis classification approach is proposed by integrating a post-hoc explanation method with a CNN. This research intends to create an explainable computer-aided cholelithiasis diagnosis approach that generates detailed visual explanations for its predictions. Additionally, the proposed approach aims to increase the accuracy of cholelithiasis diagnosis to make it more trustworthy and acceptable for healthcare professionals and patients.

Contributions

The primary contributions in this paper are outlined below:

(1) Generated a new ultrasound image dataset for cholelithiasis by collecting abdominal ultrasound images from three different hospitals in India.

(2) Proposed modified Deep Convolutional Generative Adversarial Network (DCGAN) to generate ultrasound images.

(3) Proposed a novel cholelithiasis classification approach using a custom CNN.

(4) Proposed a hybrid visual explanation method for CNN to explain model prediction.

(5) Presented an exhaustive experimental analysis of the proposed approach on the generated dataset to showcase its efficacy for computer-aided cholelithiasis diagnosis. A comparative study of the custom CNN model with state-of-the-art pre-trained models is presented.

(6) Validated the visual explanation generated by the proposed hybrid visual explanation method by a team of radiologists to illustrate the enhanced trustworthiness of the prediction made by the proposed custom CNN model.

Paper structure

The paper is organized into five sections: Section “Insights from the literature survey” presents a survey of the limited work available in the literature for computer-aided cholelithiasis diagnosis using CNN. It also presents explanation methods suitable for CNN. Subsequently, Section “The proposed cholelithiasis classification using CNN with post-hoc model explanation” illustrates the proposed approach for cholelithiasis classification using custom CNN with the post-hoc model explanation. Additionally, this section introduces the proposed modified DCGAN utilized for ultrasound image generation and the proposed hybrid visual explanation method for elucidating the CNN’s decision process. Section “Experimental design and results” discusses the dataset details, evaluation metrics, experimental setup, and results using various graphs to illustrate the findings. Finally, this paper is concluded with the roadmap of future research in Section “Conclusion”.

Insights from the literature survey

CNNs have significantly impacted the CAD system through multiple medical applications, such as detecting abnormalities, classifying diseases, and organ segmentation. Extensive research effort has been made to utilize CNN for the classification and detection of various diseases by the computer vision community. However, the literature on CAD systems for diagnosing cholelithiasis and other gallbladder diseases is sparse.

Diagnosis of gallbladder diseases using CNN

Several research studies3,6,7,8,10,11,30,31,32,33,34,35,36,37,38 have explored the potential of CNNs for CAD systems to diagnose gallbladder diseases. The first CAD system to detect and classify cholelithiasis from Computed Tomography (CT) images was developed using a CNN by Pang et al.27. This system utilized the YOLO-V3 model to detect cholelithiasis and classify gallstones with an average accuracy of 86.5%. Furthermore, the same authors developed an Android application for cholelithiasis recognition on mobile devices using a lightweight CNN model29. This application can detect cholelithiasis in less than 4 s with an average accuracy of 90.8%. In another study, Jeong et al.30 classified neoplastic polyps from ultrasound images using the Inception-V3 model. Despite demonstrating potential in polyp classification in the gallbladder, this study is constrained by its reliance on manually cropped images. This limitation may hinder its application in real-time clinical situations. Jang et al.11 implemented a ResNet50 model to diagnose polypoidal lesions from endoscopic images. Similarly, Chih et al.4 utilized ResNet50 for cholelithiasis detection and MobileNet-V2 to classify cholecystitis. However, addressing the model generalization capability is essential when considering pre-trained models like ResNet50 and MobileNet-V2 for disease classification. Basu et al.10 proposed a CNN coupled with a Gaussian blurring-based curriculum to detect gallbladder cancer from ultrasound images. Additionally, a curriculum inspired by human visual acuity is proposed to reduce the texture biases of the trained CNN model. While this model surpasses human accuracy, the variations in lesion characteristics of cancer may impact the model’s accuracy.

Few studies in diagnosing gallbladder diseases focus on segmenting lesions and gallstones. For example, a study by Lian et al.6 employs a parameter-adaptive pulse-coupled neural network to segment gallbladder and gallstone regions. However, this method’s slow processing speed limits its real-time clinical applicability. To overcome this limitation, Song et al.35 introduced a CNN model, U-NeXt, with a skip connections module and the attention upsampling block for segmenting gallstones from CT images. However, this model has a limitation in the manual selection of relevant image slices from the CT scan for analysis. In another study, Chen et al.32 utilized an AdaBoost classifier with principal component analysis to classify polyps into neoplastic and non-neoplastic after segmenting the gallbladder region from an ultrasound image. Despite its promising results, the method encounters challenges due to the variability of gallbladder morphology, leading to occasional failures in achieving precise segmentation. Recently, Obaid et al.4 utilized pre-trained CNNs such as VGG16, ResNet152, Inception-V3, and MobileNet to detect nine gallbladder diseases using ultrasound images. While MobileNet yielded the best accuracy, the model’s generalization capabilities are lacking, necessitating further improvements in model robustness for diverse gallbladder conditions.

Various studies have employed CNN architectures like YOLO-V3, Inception-V3, ResNet50, MobileNet, VGG16, ResNet152, and U-NeXt to detect and classify gallbladder abnormalities from ultrasound and CT images. While these CNNs have shown promising results in disease detection, there are challenges, such as reliance on pre-trained models, limited generalization capabilities, and issues related to diagnostic accuracy. Furthermore, none of these studies explore the model’s explainability. Hence, further research is necessary to enhance model accuracy and explainability and to improve generalization capabilities for diverse gallbladder conditions.

Explainability of CNN

This section reviews the methods26,39,40,41 available for the explainability of CNNs. The explanation method suitable for a CNN, also known as a visual explanation, highlights important pixels based on their contributions to the model’s decision42. Different visual explanation methods employed for CNNs can be broadly categorized into two groups: (1) Perturbation-based explanations and (2) Activation map-based explanations. Most of these explanation methods fall into the post-hoc approach23,26,43.

Perturbation-based explanations identify the essential features of CNN models by estimating the effect of variations in image features on model output. Local Interpretable Model-agnostic Explanation (LIME)44 and SHapley Additive exPlanations (SHAP)45 are commonly used perturbation-based explanations for medical image classification. LIME generates local explanations by perturbing input features and analyzing their impact on CNN predictions, thereby elucidating the reasoning behind diagnostic decisions. For example, Magesh et al.42 introduced a method for classifying Parkinson’s disease using DaTSCAN images and LIME to generate explanations. In another study, Malhi et al.46 used LIME to explain bloody regions detected by a CNN in gastral endoscopy images. Rajaraman et al.24 utilized LIME to explain a customized VGG16 model that detects pneumonia in pediatric chest X-rays. Although LIME is effective at interpreting CNN models, its results may vary significantly with the choice of tunable parameters, such as the surrogate model, the segmentation method used to generate super-pixels, the number of samples to generate, and the classifier function used to compare original and perturbed samples. In contrast, SHAP utilizes Shapley values to attribute features in an additive manner. Shapley values assign individual contributions to each feature with respect to the prediction made by the model. Zhu and Ogino47 use SHAP for CT images to diagnose lung nodules. Velden et al.48 use SHAP to explain predictions made by the CNN model. The key limitation of Shapley values is their reliance on assessing many permutations, which makes SHAP time-consuming.

Zhou et al.49 introduced the first activation map-based explanation, Class Activation Mapping (CAM), calculated using linearly weighted activation maps at different spatial locations. Jiang et al.50 utilized CAM on an ensemble of Inception-V3, ResNet152, and Inception-ResNet-V2 for localizing diabetic retinopathy in fundus images. Xie et al.51 localized lesions in abnormal brain ultrasound images using CAM. Qi et al.52, Kim et al.53, and Zhou et al.54 utilized CAM to localize breast tumours and generate a heatmap using ultrasound images. Herrera et al.55 showed that CAM can precisely highlight interpapillary capillary loop patterns in high-resolution endoscopy images. However, a common drawback of CAM is its dependency on model architecture. Gradient-weighted CAM (Grad-CAM)25,56 is a CAM extension that leverages the gradients of the class score with respect to the convolutional layers’ feature maps to generate a visual explanation.

Hamm et al.57 and Windisch et al.58 leveraged Grad-CAM on a trained CNN model to recognize the attention region of hepatic lesions from multi-phasic MRI. Similarly, Hosch et al.59 utilized Grad-CAM to gain insight into the anatomical structures in chest X-rays that contributed to the decisions of a VGG variant and ResNet34. Sloun et al.60 and Xie et al.61 employed Grad-CAM to highlight the regions of tumours in ultrasound images for the chest and brain, respectively. Although Grad-CAM has been utilized in many CAD systems, it has several limitations: (1) it cannot show fine-grained pixel importance precisely, as it only highlights the region that contributes to a given class globally62; (2) the presence of similar objects may result in an inconsistent heatmap, as Grad-CAM can only identify a single region for a specific class. Hence, several enhancements, including Guided Grad-CAM56, Grad-CAM++63, and Score-CAM64, have been devised to address the shortcomings of Grad-CAM and improve the overall quality of explanation. Although these gradient-based visual explanation methods have proven effective in explaining predictions for natural images, their utilization in interpreting medical images is difficult.

As per insights from the literature survey, there is a need for a simple, accurate, and truly explainable method for computer-aided cholelithiasis diagnosis. The proposed work aims to address these limitations by introducing a CNN coupled with a post-hoc model explanation method, enhancing diagnostic accuracy and providing interpretable visual explanations of the model’s predictions. The Global Average Pooling (GAP) layer used in our proposed custom CNN preserves spatial information relevant to the cholelithiasis class in the feature maps, resulting in high diagnostic precision. The GAP layer also helps in generating detailed visual explanations.

The proposed cholelithiasis classification using CNN with post-hoc model explanation

This section illustrates the proposed approach for cholelithiasis classification. Table 1 represents the notations used in this paper. Figure 2 illustrates the steps of the proposed cholelithiasis classification approach and the flow between them. The proposed approach comprises seven steps: ultrasound image acquisition, pre-processing, augmentation, feature extraction, cholelithiasis classification, visual explanation, and finally, cholelithiasis diagnosis. Algorithm 1 delineates the proposed cholelithiasis classification approach. Algorithm 2 delineates the proposed modified DCGAN. Algorithm 3 delineates the hybrid visual explanation method.

Ultrasound image acquisition

During the acquisition process of ultrasound images, the radiologist adjusts various settings, such as the frequency and depth of the sound waves, to optimize the quality of the resulting images. All ultrasound images are labelled in one of two classes: normal and cholelithiasis, by a team of radiologists. Figure 3 shows a sample ultrasound image captured by expert radiologists. A set of n ultrasound images US = {u1, u2, u3,…, un} is provided as input to Algorithm 1.

Ultrasound image pre-processing

The first step of the proposed approach is to pre-process the ultrasound images. Steps 1–8 of Algorithm 1 represent the steps of pre-processing. Figure 4 shows the pre-processing steps of the ultrasound image. The pre-processing step starts with manual cropping, followed by contrast enhancement. Manual cropping reduces computational overhead by removing unimportant regions from ultrasound images, allowing the analysis to focus on areas of interest. This process is carried out with the assistance of a team of sonography operators and radiologists to ensure consistent and accurate cropping, preserving the critical features necessary for further analysis. During the validation of the cropped images, the radiologists of the respective hospitals identified and reported the issue of invisible boundaries. To address this, contrast enhancement, as given in steps 4–6 of Algorithm 1, is applied to improve the visibility of the gallbladder and gallstones in the ultrasound image. The histogram equalization technique effectively improves image contrast by redistributing the gray pixel values of the image65,66. The pixel intensity is normalized in the range of [0, 255], which corresponds to the typical 8-bit grayscale image. Normalization ensures that all pixel values can be effectively redistributed to enhance contrast. Then, the histogram of the ultrasound image is equalized to make the dark areas of the image brighter and the bright areas darker, resulting in a more visually appealing and informative image. Additionally, all intensity values are redistributed to ensure a uniform enhancement across the full intensity range of the ultrasound image. The procedure for performing histogram equalization is as follows:

i. Calculate the image histogram.

ii. Calculate the Cumulative Distribution Function (CDF) of the histogram.

iii. Normalize the CDF values to obtain the equalized histogram.

iv. Map the normalized histogram values back to the original image.
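The four steps above can be sketched with NumPy as follows. This is a minimal illustration of standard global histogram equalization for an 8-bit grayscale image, not the implementation used in Algorithm 1; the function name `equalize_histogram` and the handling of constant images are assumptions made for this example.

```python
import numpy as np

def equalize_histogram(image: np.ndarray) -> np.ndarray:
    """Equalize an 8-bit grayscale image given as a 2-D uint8 array."""
    # i. Histogram over the 256 possible gray levels.
    hist = np.bincount(image.ravel(), minlength=256)
    # ii. Cumulative distribution function (CDF) of the histogram.
    cdf = hist.cumsum()
    # iii. Normalize the CDF to [0, 255]; the darkest occupied level maps to 0.
    cdf_min = cdf[cdf > 0][0]
    denom = cdf[-1] - cdf_min
    if denom == 0:
        # Constant image: nothing to redistribute (assumed behaviour).
        return image.copy()
    lut = np.round((cdf - cdf_min) / denom * 255.0)
    lut = np.clip(lut, 0, 255).astype(np.uint8)
    # iv. Map every pixel through the normalized CDF (look-up table).
    return lut[image]

# Hypothetical low-contrast patch with gray levels between 50 and 150.
patch = np.array([[50, 50], [100, 150]], dtype=np.uint8)
equalized = equalize_histogram(patch)
```

After equalization the occupied gray levels of the hypothetical patch span the full [0, 255] range while their ordering is preserved.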

Ultrasound image augmentation

The main objective of generating synthetic ultrasound images is to supplement the existing training data with a diverse set of ultrasound images for better model generalization. After the pre-processing, a set of synthetic ultrasound images is generated using the modified DCGAN to present variation in the position of the gallstones and gallbladder to train the proposed custom CNN. The invention of DCGAN67,68,69,70 and its variants is a great breakthrough for artificial image synthesis. While DCGAN is an important development for artificial image synthesis, it also has noteworthy limitations: (1) training instability, (2) mode collapse, and (3) vanishing gradients. To overcome these limitations, a modified deep convolutional GAN is proposed. The modified DCGAN incorporates several key enhancements:

1. LeakyReLU activation: Rectified Linear Unit (ReLU) activation functions are replaced with LeakyReLU in each layer excluding the output layer, with a leak slope of 0.2, to prevent zero gradients.

2. ConvTranspose2D for up-sampling: ConvTranspose2D also known as a transposed convolution or deconvolution is used to learn parameters that are similar across ultrasound images. This enables the model to produce more detailed and realistic ultrasound images, as the up-sampling process is more closely tied to the learned representations of ultrasound.

3. Batch normalization: Batch normalization mitigates training instability by normalizing the output of each layer. It also reduces the risk of vanishing gradients, making it easier for the network to converge.
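The effect of the three enhancements can be illustrated with a small NumPy sketch. It shows that LeakyReLU with a slope of 0.2 keeps a non-zero gradient for negative inputs, how a transposed convolution enlarges a feature map, and what batch normalization does to a batch of activations. The helper names, the example kernel, stride, and padding values, and the omission of the learnable scale and shift in batch normalization are assumptions for illustration only; they are not taken from Algorithm 2.

```python
import numpy as np

def leaky_relu(x, slope=0.2):
    """LeakyReLU: identity for positive inputs, slope * x otherwise."""
    return np.where(x > 0, x, slope * x)

def leaky_relu_grad(x, slope=0.2):
    """Derivative of LeakyReLU; never exactly zero, unlike plain ReLU."""
    return np.where(x > 0, 1.0, slope)

def conv_transpose_out_size(n_in, kernel, stride, padding=0):
    """Output length of a transposed convolution (no dilation, no output padding)."""
    return (n_in - 1) * stride - 2 * padding + kernel

def batch_norm(x, eps=1e-5):
    """Normalize each feature (column) over the batch (rows).

    The learnable scale and shift parameters are omitted in this sketch.
    """
    mean = x.mean(axis=0)
    var = x.var(axis=0)
    return (x - mean) / np.sqrt(var + eps)

activations = leaky_relu(np.array([-1.0, 2.0]))
gradients = leaky_relu_grad(np.array([-1.0, 2.0]))
# Assumed example: kernel 4, stride 2, padding 1 doubles a 4-pixel side to 8.
upsampled_side = conv_transpose_out_size(4, kernel=4, stride=2, padding=1)
normalized = batch_norm(np.array([[1.0, 10.0], [3.0, 30.0], [5.0, 50.0]]))
```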

The detailed steps of the proposed modified DCGAN are given in Algorithm 2. It uses deep convolutional networks to generate images, which can capture high-level features and produce realistic images. Figure 5 shows the architecture of the modified DCGAN.

The generator network comprises a set of de-convolution layers to transform vector Z into an image of the required dimension. The discriminator network comprises a convolutional neural network that classifies generated synthetic ultrasound images into real or fake. During the forward pass of the discriminator, the loss \(\log ({\text{D}}({\text{US}}_{\text{j}}^{\text{p}}))\) is computed. Then, a batch of generated images denoted by \({\text{G}}({\text{z}}_{\text{j}})\) is passed to the discriminator to compute the loss \(\log (1 - {\text{D}}({\text{G}}({\text{z}}_{\text{j}})))\). Furthermore, the gradients are computed in the backward pass and summed up, and the learning parameters θd and θg are updated. After several iterations, the generator learns to generate ultrasound images identified as real by the discriminator. The synthetic images generated by the proposed modified DCGAN are evaluated using Peak Signal-to-Noise Ratio (PSNR) and Structural Similarity Index Measure (SSIM)71,72. PSNR quantifies the degree of matching between real and fake ultrasound images in a given pair. A greater PSNR value suggests a stronger matching of the intensity between the synthetic ultrasound image and the real ultrasound image. Similarly, SSIM quantifies the degree of structural similarity between two images. A higher SSIM value indicates a greater degree of structural similarity between the synthetic ultrasound image and the real ultrasound image. Finally, a set of synthetic ultrasound images \({\text{US}}^{\text{s}}\) is generated using the trained generator and returned as the output of Algorithm 1.
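The two loss terms and the PSNR measure described above can be written down compactly. The sketch below uses NumPy on plain arrays of discriminator outputs, so it only illustrates the arithmetic of the standard GAN objective and the standard PSNR definition; it is not the training loop of Algorithm 2. The function names, the small constant `eps` added for numerical safety, and the peak value of 255 for 8-bit images are assumptions.

```python
import numpy as np

def discriminator_loss(d_real, d_fake, eps=1e-12):
    """Negative of log D(x) + log(1 - D(G(z))), averaged over the batch.

    d_real and d_fake are discriminator outputs in (0, 1) for real and
    generated images; the discriminator minimizes this value.
    """
    return -np.mean(np.log(d_real + eps) + np.log(1.0 - d_fake + eps))

def generator_loss(d_fake, eps=1e-12):
    """Minimax generator term log(1 - D(G(z))), averaged over the batch."""
    return np.mean(np.log(1.0 - d_fake + eps))

def psnr(real, fake, max_value=255.0):
    """Peak Signal-to-Noise Ratio between two images of equal shape."""
    diff = real.astype(np.float64) - fake.astype(np.float64)
    mse = np.mean(diff ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return 10.0 * np.log10(max_value ** 2 / mse)

# A discriminator that cannot tell real from fake outputs 0.5 everywhere.
undecided = np.full(4, 0.5)
d_loss = discriminator_loss(undecided, undecided)
g_loss = generator_loss(undecided)

# Hypothetical image pair that differs by one gray level at every pixel.
real_img = np.full((8, 8), 100, dtype=np.uint8)
fake_img = np.full((8, 8), 101, dtype=np.uint8)
pair_psnr = psnr(real_img, fake_img)
```

A larger PSNR corresponds to a smaller mean squared intensity difference between the pair, which is why it is read as a measure of intensity matching.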

The proposed modified DCGAN comprises two CNNs that are trained concurrently in an adversarial manner. During the pre-processing step, the ultrasound images are only scaled to the range of −1 to 1, which is necessary for the Tanh activation function. Stochastic Gradient Descent (SGD) and Adaptive Moment Estimation (ADAM) optimizers with a learning rate of 0.001 are used to train the generator as well as the discriminator. Moreover, batch normalization is used with mini-batch sizes of 16 and 32. In addition, the LeakyReLU activation function is used by both the generator and the discriminator.
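The scaling to the range of −1 to 1 can be illustrated as below. This is a minimal sketch assuming 8-bit input images and a linear mapping; the helper names `to_tanh_range` and `from_tanh_range` are hypothetical, and the inverse mapping is included only to show how generated values could be converted back to gray levels.

```python
import numpy as np

def to_tanh_range(image_uint8):
    """Linearly map 8-bit gray levels [0, 255] to [-1, 1]."""
    return image_uint8.astype(np.float32) / 127.5 - 1.0

def from_tanh_range(x):
    """Map values in [-1, 1] back to 8-bit gray levels."""
    return np.clip(np.round((x + 1.0) * 127.5), 0, 255).astype(np.uint8)

levels = np.arange(256, dtype=np.uint8)
scaled = to_tanh_range(levels)
restored = from_tanh_range(scaled)
```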

Feature extraction using custom CNN

Next, the ultrasound images from the pre-processed set \({\text{US}}^{\text{p}}\) and synthetic image set \({\text{US}}^{\text{s}}\) are divided into three subsets, \({\text{Y}}_{\text{train}}\), \({\text{Y}}_{\text{val}}\), and \({\text{Y}}_{\text{test}}\), for training, validation, and testing, respectively, as given in steps 10–13 of Algorithm 1. The training set is utilized for training the CNN, while the validation set is utilized to find optimal hyperparameters. A custom CNN is trained to extract the useful features from the ultrasound images saved in \({\text{Y}}_{\text{train}}\). Figure 6 shows the architecture of the proposed custom CNN. Figure 7 illustrates the layered architecture of the custom CNN, composed of five convolutional layers followed by max pooling layers, a global average pooling layer, and three fully connected layers. The custom convolutional layers are designed using a variable number of filters (32 and 64) with a fixed kernel size of 3 × 3. A default stride of (1, 1) without any padding is utilized in each convolutional layer. The max-pooling layer adapts to the spatial resolution required to identify important regions in the ultrasound images for cholelithiasis classification. The ReLU activation function is utilized in all layers except the output layer, which uses sigmoid activation. Each convolutional layer generates k feature maps that represent different learned features of the input image, such as edges, textures, and patterns. The initial three convolutional layers output 32 feature maps (i.e. k = 32) each. The remaining two of the five convolutional layers of our proposed custom CNN produce 64 feature maps (i.e. k = 64) each. As the input ultrasound images pass through the different layers of the CNN, the network starts recognizing the regions of the gallbladder and its arbitrary shapes. Finally, the CNN model learns to classify the ultrasound images into two classes, normal and cholelithiasis.
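To make the layer description concrete, the following sketch traces the feature-map size and the number of convolutional parameters through five 3 × 3 convolutions with stride 1 and no padding. Several values are assumptions that the text does not state: a 224 × 224 single-channel input, and a 2 × 2 max pooling with stride 2 after every convolutional layer. The fully connected layers are left out because their sizes are not given.

```python
def conv_out(n, kernel=3, stride=1, padding=0):
    """Spatial size after a convolution along one dimension."""
    return (n + 2 * padding - kernel) // stride + 1

def pool_out(n, pool=2):
    """Spatial size after non-overlapping max pooling (assumed 2 x 2)."""
    return n // pool

def trace_shapes(input_size=224, in_channels=1, filters=(32, 32, 32, 64, 64)):
    """Return per-block (height, width, k, parameters) and total parameters."""
    size, channels = input_size, in_channels
    rows, total_params = [], 0
    for k in filters:
        # Each filter has 3*3*channels weights plus one bias.
        params = (3 * 3 * channels + 1) * k
        size = pool_out(conv_out(size))
        rows.append((size, size, k, params))
        total_params += params
        channels = k
    return rows, total_params

rows, total_params = trace_shapes()
for height, width, k, params in rows:
    print(f"{height:>3} x {width:<3} x {k:<2}  conv params: {params}")
print("total convolutional parameters:", total_params)
```

Under these assumptions the last block yields 64 feature maps, which the global average pooling layer reduces to a 64-element vector before the fully connected layers.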

Cholelithiasis classification using trained CNN

Once the CNN model is trained with acceptable performance, test ultrasound images from the \({\text{Y}}_{\text{test}}\) are used to predict the class as normal or cholelithiasis. Additionally, the model outputs the prediction score of the respective class. Steps 16–17 of Algorithm 1 represent the testing phase of CNN. Figure 8 shows a sample output for each class of ultrasound images.

Visual explanation

In this step, a visual explanation method for CNN is proposed by combining the best features of Grad-CAM and LIME, as given in Algorithm 3. Grad-CAM highlights pathological features indicative of gallstones for the cholelithiasis class and gallbladder walls for the normal ultrasound image. Meanwhile, LIME offers pixel-level explanations, pinpointing specific regions crucial for the model’s classification decision. Figure 9 illustrates the steps of the proposed visual explanation method. The inputs to this algorithm are a test ultrasound image, a trained CNN model M, and the class label of the test image. Initially, a set of feature maps is extracted from the last convolution layer of the trained CNN model using the test ultrasound image. Next, gradients of the extracted feature maps are computed. Then, the average of the gradients is calculated through global average pooling with the ReLU function to identify important pixels involved in the prediction. Based on the pixels identified, a set of perturbed images is generated. These perturbed images are generated by altering the pixel values of the test ultrasound image. Furthermore, class probabilities for each of the perturbed images are generated using the trained CNN model M. Then, the weight of each perturbed image is computed to approximate the top e explainable features using the Lasso regression model. A mask is generated using the top five features with respect to the predicted class C. Finally, a heatmap is generated by overlaying the important pixels identified as \({\text{H}}^{\text{C}}\) and the masks on the test ultrasound image. Equation (1) illustrates the overlay function.

where \(\alpha\) is the weighting factor that controls the influence of the Grad-CAM and LIME on visual explanation. Its value is set between 0 and 1. \(\cdot\) represents the element-wise multiplication of the Grad-CAM heatmap and the LIME mask.
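The Grad-CAM part of the method and the element-wise product with a LIME mask can be sketched as follows. The sketch follows the standard Grad-CAM formulation (global average pooling of the gradients, weighted sum of the feature maps, ReLU) on synthetic arrays; it does not reproduce Algorithm 3 or the overlay function of Equation (1). In particular, the way the weighting factor \(\alpha\) enters the overlay is not recoverable from the text, so it is deliberately left out. The function names and the toy inputs are assumptions, and the up-sampling of the heatmap to the image resolution is omitted.

```python
import numpy as np

def grad_cam(feature_maps, gradients):
    """Standard Grad-CAM heatmap from the last convolutional layer.

    feature_maps, gradients: arrays of shape (K, H, W), where gradients
    holds the derivative of the class score with respect to each map.
    """
    # Global average pooling of the gradients gives one weight per map.
    weights = gradients.mean(axis=(1, 2))
    # Weighted sum of the K feature maps -> (H, W).
    cam = np.tensordot(weights, feature_maps, axes=1)
    # ReLU keeps only regions that support the class.
    cam = np.maximum(cam, 0.0)
    # Scale to [0, 1] for display when the map is not all zeros.
    if cam.max() > 0:
        cam = cam / cam.max()
    return cam

def apply_mask(heatmap, mask):
    """Element-wise product of a heatmap and a binary LIME-style mask."""
    return heatmap * mask

# Toy example with K = 2 feature maps of size 2 x 2 (synthetic values).
maps = np.stack([np.array([[1.0, 0.0], [0.0, 0.0]]),
                 np.array([[0.0, 0.0], [0.0, 1.0]])])
grads = np.stack([np.ones((2, 2)), -np.ones((2, 2))])
heatmap = grad_cam(maps, grads)
# Hypothetical binary mask marking the super-pixels kept by LIME.
lime_mask = np.array([[1.0, 0.0], [1.0, 1.0]])
masked = apply_mask(heatmap, lime_mask)
```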

At the last step of our proposed approach, an interface is provided to healthcare professionals such as doctors and radiologists to verify the results and explain the diagnosis to patients.

Experimental design and results

A chain of experiments is performed using different hyperparameters to find the most appropriate experiment configuration for the proposed approach. Furthermore, the results of each experiment are presented to illustrate the findings.

Dataset

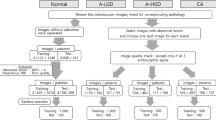

The dataset is a key requirement in model development for cholelithiasis classification and prediction. The quantity and quality of the dataset strongly influence the model’s performance. As per the literature survey, there is no publicly available ultrasound image dataset for cholelithiasis. Therefore, we collected 900 abdominal ultrasound images of 218 patients from PGIMER, Chandigarh, 273 ultrasound images of 30 patients from SIDS Hospital and Research Centre, Surat, and 37 ultrasound images of 15 patients from Parul Sevashram Hospital, Vadodara. The method adopted in the data acquisition is as per the ethical guidelines of PGIMER, Chandigarh (Approval date: 14th July 2022), SIDS Hospital and Research Centre, Surat (Approval date: 18th January 2021), and Parul Sevashram Hospital, Vadodara (Approval date: 4th February 2020), respectively. Furthermore, all experiments carried out in this research are in accordance with relevant guidelines and regulations. No personally identifiable information is retained in any of the ultrasound images used. This research uses a collection of ultrasound images anonymously without disclosing patient information. Thus, informed consent is not required from each patient individually. A total of 598 ultrasound images of normal gallbladder and 612 ultrasound images of cholelithiasis (gallbladder with gallstone) are collected. The statistics of this dataset, also referred to as the original dataset, are represented in Table 2. The distribution of ultrasound images of the normal class and cholelithiasis class in the original dataset is presented in Table 3. Out of 1210 images, 846 (70%) ultrasound images are utilized for training, 244 (20%) images for validation, and 120 (10%) images are used for testing to measure the model’s performance. Figure 10 shows sample ultrasound images from the original dataset.

Ultrasound image augmentation

The proposed modified DCGAN given in Algorithm 2 has been used for ultrasound image augmentation from the original ultrasound image dataset described in Table 2. The experimental results of DCGAN reveal that a maximum average PSNR of 56.90 and a maximum average SSIM value of 0.99 are achieved after 100 training epochs. A total of 710 synthetic ultrasound images have been generated. Figure 11 shows samples of the generated ultrasound images. After adding the synthetic ultrasound images, a new dataset with a total of 1920 (1210 + 710) ultrasound images is generated, known as the original and synthetic dataset. The distribution of the original and synthetic dataset in the training set, validation set, and test set is presented in Table 4. Out of 1920 images, 1344 (70%) ultrasound images are utilized for training, 384 (20%) images for validation, and 192 (10%) images are used for testing to measure the model’s performance.

Evaluation metrics

The results of the proposed cholelithiasis diagnosis approach are assessed using several evaluation metrics. These metrics are broadly classified into i) Quantitative metrics and ii) Qualitative metrics. The evaluation of the proposed cholelithiasis diagnosis approach is critical for determining the robustness of the classifier in diverse clinical scenarios.

Quantitative metrics

The proposed cholelithiasis classification approach has been evaluated using six quantitative metrics: accuracy, precision, recall, specificity, F1-score, and AUC score. Accuracy is the percentage of input ultrasound images that are classified correctly. Precision is the ratio of true positives to all positive predictions (true positives plus false positives). It reflects the model’s ability to identify cases of cholelithiasis without misclassifying normal cases as positive. Recall is the ratio of true positives to the sum of true positives and false negatives. It indicates the model’s sensitivity, that is, the proportion of actual cholelithiasis cases that are correctly detected; sensitivity and true positive rate are other names for the same quantity. Similarly, specificity measures the proportion of actual normal cases that are correctly identified as negative. F1-score is a balanced measure of a classifier’s performance computed as the harmonic mean of precision and recall. It assesses how well the model identifies cholelithiasis cases while keeping both false positives and false negatives low. Equations 2–6 show the respective formulas of accuracy, precision, recall, specificity, and F1-score.
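The definitions above can be sketched in a few lines of code. The following minimal example computes the five threshold-based metrics from the counts of a binary confusion matrix, with cholelithiasis treated as the positive class. The function name `classification_metrics` and the example counts are hypothetical and do not correspond to any result reported in this paper.

```python
def classification_metrics(tp, fp, tn, fn):
    """Accuracy, precision, recall, specificity and F1-score from confusion-matrix counts.

    tp/fn: cholelithiasis images predicted as cholelithiasis/normal.
    tn/fp: normal images predicted as normal/cholelithiasis.
    """
    total = tp + fp + tn + fn
    if total == 0:
        raise ValueError("confusion matrix is empty")

    accuracy = (tp + tn) / total
    # Guard each ratio against an empty denominator.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0          # sensitivity
    specificity = tn / (tn + fp) if (tn + fp) else 0.0
    f1 = (
        2 * precision * recall / (precision + recall)
        if (precision + recall)
        else 0.0
    )
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "specificity": specificity,
        "f1": f1,
    }


# Hypothetical counts, for illustration only.
example = classification_metrics(tp=8, fp=2, tn=9, fn=1)
```

With these hypothetical counts, accuracy is 17/20, precision is 8/10, recall is 8/9, specificity is 9/11, and the F1-score is 16/19.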

Additionally, the confusion matrix, the receiver operating characteristic (ROC) curve, and the area under the curve (AUC) are utilized to provide a more nuanced understanding of the proposed model’s performance. Confusion matrices provide a detailed breakdown of the predictions made by the proposed cholelithiasis classification approach. The ROC curve is a graphical representation of the trade-off between sensitivity and specificity across different classification thresholds. The AUC is the area under the ROC curve; it summarizes in a single value the overall ability of the proposed custom CNN model to discriminate between the normal and cholelithiasis classes. These metrics are frequently used to evaluate binary classification models.
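To make the AUC concrete, the sketch below computes it directly from labels and predicted scores. It relies on the standard equivalence between the area under the ROC curve and the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative case, with ties counted as one half. The function name `roc_auc` is an illustrative choice, and the pairwise loop is meant for clarity rather than efficiency.

```python
def roc_auc(labels, scores):
    """Area under the ROC curve for binary labels (1 = positive, 0 = negative).

    Computed as the fraction of (positive, negative) pairs in which the
    positive case has the higher score; ties contribute 0.5.
    """
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("need at least one positive and one negative sample")

    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))
```

An AUC of 0.5 corresponds to chance-level ranking, and an AUC of 1.0 means every positive case is scored above every negative case.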

Qualitative metrics

The proposed hybrid visual explanation method is assessed by producing a visual interpretation in the form of a heatmap. This is one of the most frequently used qualitative means of evaluating an XAI model. Furthermore, the interpretation produced using the proposed hybrid visual explanation method is assessed by a team of radiologists at Parul Sevashram Hospital, Vadodara. This is a human-centric metric that measures human trust in the model explanations.

Experimental setup

The experiments are conducted on a GPU with the Google Compute Engine backend and the NVIDIA CUDA 11.8 compiler. A total of 78.19 gigabytes of memory and 12.67 gigabytes of RAM are utilized during implementation. Python 3 is used alongside the PyTorch, Keras, and TensorFlow libraries for each experiment. A uniform image size of 224 × 224 is kept for input ultrasound images across all experiments. Binary cross-entropy loss is utilized to train the models in each experiment. The learning rate is set in the range of 1e−4 to 4e−4. Each model is trained with mini-batch optimization to reduce computational overhead and memory requirements. Other hyperparameters, such as batch size, number of epochs, and optimizer, are specific to the experiments described in the subsequent section. The best result of each set is shown in bold.
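For reference, the binary cross-entropy loss mentioned above can be sketched as follows for a single mini-batch. This is a plain-Python illustration and not the training code used in the experiments; the function name `binary_cross_entropy` and the clipping constant `eps` are assumptions of this sketch.

```python
import math


def binary_cross_entropy(y_true, y_prob, eps=1e-7):
    """Mean binary cross-entropy over one mini-batch.

    y_true: labels in {0, 1}; y_prob: predicted probabilities of class 1.
    Probabilities are clipped to [eps, 1 - eps] to avoid log(0).
    """
    if len(y_true) != len(y_prob) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")

    total = 0.0
    for y, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1.0 - eps)
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(y_true)
```

The loss approaches zero as the predicted probabilities approach the true labels, and equals ln 2 (about 0.693) when every prediction is 0.5.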

Results

This section explains the results and observations obtained from each experiment conducted in this paper. Initially, an exhaustive exploration of the optimal CNN architecture has been conducted to determine the best model for cholelithiasis classification.

Cholelithiasis classification using original and synthetic dataset

The first experiment aims to identify the best optimizer and the optimal architecture of the proposed custom CNN for cholelithiasis classification. Its main objective is to enhance model generalization through the use of the original and synthetic dataset, which comprises 1920 ultrasound images, as outlined in Table 4. Instead of flattening the feature maps, a global average pooling (GAP) layer is utilized, which results in a compact model size of 355 KB. Each model in this experiment uses a batch size of 32 and 100 epochs of training. The class weights are 1.005 and 0.994 for the cholelithiasis and normal classes, respectively. Table 5 summarizes the results of this experiment. Figure 12 shows the plot of accuracy with respect to epochs for the three custom CNN models developed in this experiment. Furthermore, Fig. 13 shows the loss curves during training and validation of the three custom CNN models. Figure 14 shows the confusion matrices. Moreover, Fig. 15 shows the ROC curves to represent the overall performance. Among the three optimizers, the proposed custom CNN with ADAM attained the highest accuracy with reasonable precision, recall, F1-score, and AUC score.

Cholelithiasis classification using original dataset

The objective of the second experiment is to showcase cholelithiasis classification using the original dataset, which consists of 1210 ultrasound images as mentioned in Table 2. This experiment is used to observe how the results differ from those of the cholelithiasis classification model trained using the original and synthetic dataset. The distribution given in Table 3 has been used to perform this experiment. Each model in this experiment uses a batch size of 32 and 100 epochs of training. The class weights are 0.988 and 1.011 for the cholelithiasis and normal classes, respectively. Table 6 summarizes the results of this experiment. Figure 16 shows the plot of accuracy and loss with respect to epochs for the three custom CNN models developed in this experiment. Figure 17 shows the confusion matrices that summarize the model performance by comparing the predicted labels with the true labels of the ultrasound images. Additionally, Fig. 18 shows the ROC curves to represent the overall performance of the proposed custom CNN. Among the three optimizers, the proposed custom CNN with ADAM attained the highest testing accuracy with reasonable precision, specificity, F1-score, and AUC score. Compared to the models trained using the original and synthetic dataset, minor declines in training accuracy, validation accuracy, and recall were observed.
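The text does not state how the class weights were derived. One common heuristic, assumed here purely for illustration, weights each class by n_samples / (n_classes × class_count), so that the less frequent class receives the larger weight. Applied to the original dataset counts (612 cholelithiasis and 598 normal images), this heuristic gives values close to the class weights reported for this experiment. The function name `balanced_class_weights` is hypothetical.

```python
def balanced_class_weights(counts):
    """Inverse-frequency class weights: n_samples / (n_classes * class_count).

    counts: mapping from class label to number of images in that class.
    """
    if not counts or any(n <= 0 for n in counts.values()):
        raise ValueError("every class needs a positive image count")
    total = sum(counts.values())
    n_classes = len(counts)
    return {label: total / (n_classes * n) for label, n in counts.items()}


# Class counts of the original dataset, as given in the dataset description.
weights = balanced_class_weights({"cholelithiasis": 612, "normal": 598})
# weights["cholelithiasis"] is about 0.9886 and weights["normal"] about 1.0117
```

Because the two classes are nearly balanced, both weights stay close to 1 and the weighting has only a mild effect on the loss.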

Additionally, a flatten layer is used instead of a GAP layer to demonstrate the efficacy of the proposed custom CNN. The results in Table 7 show that the proposed custom CNN with GAP layer and ADAM optimizer outperforms CNN using the flatten layer. Figure 19 shows the curves of accuracy versus epochs of six custom CNN models. Additionally, Fig. 20 shows loss curves of each of the six custom CNN models. Figure 21 shows the confusion matrices of each CNN model developed using a flatten layer. Figure 22 shows the ROC curves to represent the model performance of the proposed custom CNN with a flatten layer.
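The effect of a GAP layer on model size can be illustrated by counting the parameters of the dense layer that follows the last convolutional block. The sketch below compares the two options. The feature-map shape (7 × 7 × 64) and the 128-unit dense layer are hypothetical values chosen for illustration and are not the dimensions of the proposed custom CNN.

```python
def dense_params(inputs, units):
    """Weights plus biases of a fully connected layer."""
    return inputs * units + units


def head_params(height, width, channels, units, use_gap):
    """Parameters of the first dense layer after the last feature map.

    With GAP, each channel is averaged to one value, so the dense layer sees
    `channels` inputs; with flatten it sees height * width * channels inputs.
    """
    inputs = channels if use_gap else height * width * channels
    return dense_params(inputs, units)


# Hypothetical 7 x 7 x 64 feature map followed by a 128-unit dense layer.
flatten_count = head_params(7, 7, 64, 128, use_gap=False)  # 401536
gap_count = head_params(7, 7, 64, 128, use_gap=True)       # 8320
```

In this hypothetical setting, the flatten variant needs 49 times as many weights in that layer as the GAP variant, which is the kind of reduction that keeps a model compact.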

Cholelithiasis classification using pre-trained CNN models

Recent works in medical image classification have shown that applying transfer learning to a pre-trained model can yield strong classification results. In the third experiment, the custom CNN model is compared with existing state-of-the-art pre-trained CNN models, namely VGG19, DenseNet161, ResNet50, AlexNet, SqueezeNet1_0, GoogLeNet, and ResNeXt101_64X4D. These CNNs are trained using the dataset of 1210 ultrasound images given in Tables 2 and 3. The pre-trained weights of the respective CNNs are used to initialize the parameters. All pre-trained CNNs are trained using the ADAM optimizer. Each model in this experiment uses a batch size of 32 and 100 epochs of training. Table 8 presents the results of this experiment. The proposed custom CNN demonstrated superior performance, while the ResNet50 and DenseNet161 models performed almost as well as the proposed CNN. Figure 23 shows a comparative study of the observations presented in Table 8.

Cholelithiasis classification using pre-trained ViT models

In the fourth experiment, the proposed custom CNN model is compared with pre-trained ViT models. The encoder and decoder of each ViT model are trained using the AdamW optimizer. Each model in this experiment uses a batch size of 32 and 50 epochs of training. Table 9 presents the summary of results from state-of-the-art pre-trained ViT models. Among the five ViT models, the ViT Large-32 achieved the best performance. However, the proposed custom CNN outperformed the aforementioned ViT models. Figure 24 shows a comparative analysis of the results presented in Table 9.

Post-hoc explanation for the proposed cholelithiasis classification

The fifth experiment aims to explain the CNN model’s prediction for clinical acceptance and adoption of our proposed method. The proposed post-hoc explanation method given in Algorithm 3 has been used to explain the CNN. Heatmap visualization is employed to highlight the important pixels of the input ultrasound image that contribute significantly to the output of the CNN model. The examples in Fig. 25 show that the proposed explanation method provides comprehensive insights and interpretability of CNN for cholelithiasis classification and prediction. However, the proposed method induces a human bias due to the subjective interpretation of the heatmap. Therefore, the proposed model explanation method has been assessed by a team of experienced radiologists at Parul Sevashram Hospital in Vadodara. Involving radiologists in the assessment ensures that the explanations generated by the proposed method align with the needs of healthcare professionals. Their feedback and validation of the model’s interpretations play a significant role in validating the efficacy and reliability of our approach in real-world clinical settings.
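As background for the heatmaps discussed above, the following minimal sketch shows the standard Grad-CAM step on which the hybrid method builds. It does not reproduce Algorithm 3 and omits the LIME component entirely. The inputs `activations` and `gradients` are hypothetical: they stand for the feature maps of the last convolutional layer and the gradients of the class score with respect to those maps, each given as a list of K channel maps of size H × W.

```python
def grad_cam(activations, gradients):
    """Standard Grad-CAM heatmap from K channel maps (nested H x W lists).

    1. Channel weight = spatial average of that channel's gradient map.
    2. Heatmap = ReLU of the weighted sum of the activation maps.
    3. Heatmap is scaled to [0, 1] when it has a positive peak.
    """
    if len(activations) != len(gradients) or not activations:
        raise ValueError("need matching, non-empty activations and gradients")

    height, width = len(activations[0]), len(activations[0][0])
    weights = [
        sum(sum(row) for row in grad) / (height * width) for grad in gradients
    ]

    cam = [
        [
            max(0.0, sum(w * act[i][j] for w, act in zip(weights, activations)))
            for j in range(width)
        ]
        for i in range(height)
    ]

    peak = max(max(row) for row in cam)
    if peak > 0:
        cam = [[value / peak for value in row] for row in cam]
    return cam
```

In practice, the resulting low-resolution map is upsampled to the input image size and overlaid on the ultrasound image as a heatmap.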

Discussion

The proposed method using a custom CNN achieved a 4.5% gain in accuracy over the baseline cholelithiasis detection work of Pang et al.27. Additionally, the findings show that the proposed cholelithiasis classification method surpasses the performance of state-of-the-art pre-trained CNN and ViT models. Specifically, while pre-trained CNNs struggle with ___domain-specific challenges in ultrasound imaging, our custom CNN is optimized for this task by focusing on localized features. The proposed custom CNN can be adapted to handle more complex diagnostic tasks covering a wider range of gallbladder or other abdominal abnormalities. This can be done by modifying the CNN architecture to include multi-class output layers and fine-tuning the required hyperparameters. Additionally, the trained CNN model can be fine-tuned by leveraging transfer learning. The proposed CNN can handle larger datasets by adding more filters and increasing the depth of the CNN. In the case of very large datasets, distributed training across multiple machines can keep training computationally feasible.

The results reveal that the proposed cholelithiasis classification approach using a custom CNN yields a compact model of 355 KB with the least number of parameters. This compactness allows for rapid inference, which is essential for quick, real-time diagnosis in the clinical setting. Our preliminary tests indicate that the model can deliver predictions within seconds of ultrasound image acquisition, making it suitable for integration into existing clinical workflows. Hence, the proposed custom CNN has the potential to deliver predictions in real time. Future research could explore the integration of the proposed approach into clinical practice to assist radiologists in the diagnosis of cholelithiasis and other medical conditions of the gallbladder.

The ultrasound images used in this research are collected from multiple hospitals, and specific information about the types of machines used or the skill levels of the examiners is not available. As ultrasound image quality and diagnostic accuracy can vary significantly depending on these factors, our model’s performance may be influenced by such variability. This variability could affect the model’s ability to generalize effectively across images from different sources. Future work will focus on addressing this limitation by conducting analyses to assess the model’s performance across different ultrasound machines and examiners. Furthermore, the custom CNN could be extended to handle more complex and varied imaging modalities such as CT scans or MRI, which may provide additional diagnostic information. For instance, the fusion of ultrasound with CT images may lead to a more holistic diagnosis of a patient’s condition, ultimately leading to better diagnostic accuracy. This can be done by modifying input channels and introducing variability in convolutional layers.

The performance of the proposed custom CNN is enhanced by utilizing synthetic ultrasound images generated with a modified DCGAN. Moreover, the utilization of a synthetic ultrasound image dataset has a positive impact on model generalization and accuracy. The use of a modified DCGAN can mitigate the data scarcity issue for deep learning models, by generating high-quality synthetic images, thereby enhancing the model’s generalization and robustness.

The heatmap generated through the proposed hybrid explanation method offers detailed visual explanations to enhance transparency and trustworthiness in the medical ___domain. Furthermore, the mask generated from the top five features of the proposed hybrid explanation method localizes the regions that drive the prediction, fostering trust and acceptance among healthcare professionals. The validation conducted by a team of radiologists highlights its efficacy in a real clinical environment. However, the proposed hybrid visual explanation method may induce a human bias due to the subjective interpretation of the heatmap. Therefore, future research could focus on developing more sophisticated and interpretable models that leverage textual explanations to provide actionable insights to radiologists. Furthermore, future research may emphasize explanation methods that can handle complex CNN architectures.

Conclusion

This research paper presents a computer-aided cholelithiasis diagnosis approach leveraging a convolutional neural network and a post-hoc visual explanation method. Experimental evaluation demonstrates that the proposed approach can achieve high diagnostic accuracy while providing interpretable insights into the CNN’s reasoning. The proposed custom CNN model outperformed the pre-trained CNN and ViT models. In addition, the custom CNN architecture is optimized for real-time performance by employing a GAP layer instead of a flatten layer. The small model size enables quick predictions, and the high accuracy supports reliable clinical decision-making. The proposed post-hoc explanation method enhances the interpretability of the custom CNN’s decision-making process. The validation by a team of radiologists underscores its effectiveness in real-world clinical settings. Their feedback and evaluation serve as a human-centric metric, affirming the reliability and utility of our model explanations in aiding medical decision-making. Overall, this research contributes to the advancement of computer-aided diagnosis for cholelithiasis from ultrasound imaging.

Future research directions for the proposed approach include adapting the custom CNN model to tackle more complex diagnostic tasks related to a wider range of gallbladder and abdominal abnormalities. The model could also be extended to handle varied imaging modalities, such as CT scans and MRIs, to provide comprehensive diagnostic insights. Addressing the model’s performance across different ultrasound machines and examiners will further enhance its robustness. Additionally, exploring the integration of this approach into clinical practice can assist radiologists in diagnosing cholelithiasis and other gallbladder conditions. Future work may focus on developing sophisticated and interpretable models that offer textual explanations, providing actionable insights to radiologists, and emphasizing explanation methods compatible with complex CNN architectures.

Data availability

The dataset used in this research paper is collected from PGIMER, Chandigarh, India, SIDS Hospital and Research Centre, Surat, India, and Parul Sevashram Hospital, Vadodara, India. The authors can provide this dataset upon request.

References

Chen, Q. et al. Mechanisms underlying the prevention and treatment of cholelithiasis using traditional Chinese medicine. Evid.-Based Complement. Altern. Med. 2019, 1–9. https://doi.org/10.1155/2019/2536452 (2019).

Dutta, U., Bush, N., Kalsi, D., Popli, P. & Kapoor, V. K. Epidemiology of gallbladder cancer in India. Chin. Clin. Oncol. 8, 1–20. https://doi.org/10.21037/cco.2019.08.03 (2019).

Żulpo, M., Balbus, J., Kuropka, P. & Kubica, K. A model of gallbladder motility. Comput. Biol. Med. 93, 139–148. https://doi.org/10.1016/j.compbiomed.2017.12.018 (2018).

Yu, C.-J. et al. Lightweight deep neural networks for cholelithiasis and cholecystitis detection by point-of-care ultrasound. Comput. Methods Programs Biomed. 211, 106382. https://doi.org/10.1016/j.cmpb.2021.106382 (2021).

Beckingham, I. J. Gallstones. Surgery (U.K.) 47, 846–849. https://doi.org/10.1016/j.mpmed.2019.09.003 (2020).

Lian, J. et al. Automatic gallbladder and gallstone regions segmentation in ultrasound image. Int. J. Comput. Assist. Radiol. Surg. 12, 553–568. https://doi.org/10.1007/s11548-016-1515-z (2017).

Basu, S., Gupta, M., Rana, P., Gupta, P. & Arora, C. RadFormer: transformers with global-local attention for interpretable and accurate gallbladder cancer detection. Med. Image Anal. 83, 102676. https://doi.org/10.1016/j.media.2022.102676 (2022).

Di Ciaula, A. & Portincasa, P. Recent advances in understanding and managing cholesterol gallstones. F1000Research 7, 1–14 (2018).

Hassan Arif, S., Hussein, I. S. & Mohammed, A. A. Duplicated gall bladder with gall bladder polyp presenting with cholecystitis: Case report with literature review. Int. J. Surg. Case Rep. 2019, 103–105. https://doi.org/10.1016/j.ijscr.2019.06.002 (2019).

Basu, S., Gupta, M., Rana, P., Gupta, P., Arora, C. Surpassing the human accuracy: Detecting gallbladder cancer from USG images with curriculum learning. In 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Los Alamitos, CA, USA: IEEE Computer Society, 20854–20864. https://doi.org/10.1109/CVPR52688.2022.02022 (2022).

Jang, S. I. et al. Diagnostic performance of endoscopic ultrasound-artificial intelligence using deep learning analysis of gallbladder polypoid lesions. J. Gastroenterol. Hepatol. (Australia) 36, 3548–3555. https://doi.org/10.1111/jgh.15673 (2021).

Neri, E. et al. What the radiologist should know about artificial intelligence: An ESR white paper. Insights Imaging https://doi.org/10.1186/s13244-019-0738-2 (2019).

Yasaka, K. & Abe, O. Deep learning and artificial intelligence in radiology: Current applications and future directions. PLoS Med. 15, 1–4. https://doi.org/10.1371/journal.pmed.1002707 (2018).

Saba, L. et al. The present and future of deep learning in radiology. Eur. J. Radiol. 114, 14–24. https://doi.org/10.1016/j.ejrad.2019.02.038 (2019).

Lai, Z. & Deng, H. Medical image classification based on deep features extracted by deep model and statistic feature fusion with multilayer perceptron. Comput. Intell. Neurosci. 2018, 1–13. https://doi.org/10.1155/2018/2061516 (2018).

Shen, D., Wu, G. & Suk, H.-I. Deep learning in medical image analysis. Annu. Rev. Biomed. Eng. 19, 221. https://doi.org/10.1146/annurev-bioeng-071516-044442 (2017).

Yadav, S. S. & Jadhav, S. M. Deep convolutional neural network based medical image classification for disease diagnosis. J. Big Data https://doi.org/10.1186/s40537-019-0276-2 (2019).

Sudha, S., Jayanthi, K. B., Rajasekaran, C. & Sunder, T. Segmentation of RoI in medical images using CNN—A comparative study. In IEEE Region 10 Annual International Conference, Proceedings/TENCON, 767–771. https://doi.org/10.1109/TENCON.2019.8929648 (2019).

Adadi, A. & Berrada, M. Peeking inside the black-box: A survey on explainable artificial intelligence (XAI). IEEE Access 6, 52138–52160. https://doi.org/10.1109/ACCESS.2018.2870052 (2018).

Zhang, Y., Weng, Y. & Lund, J. Applications of explainable artificial intelligence in diagnosis and surgery. Diagnostics 12, 237. https://doi.org/10.3390/diagnostics12020237 (2022).

Borys, K. et al. Explainable AI in medical imaging: An overview for clinical practitioners—Saliency-based XAI approaches. Eur. J. Radiol. 162, 110787. https://doi.org/10.1016/j.ejrad.2023.110787 (2023).

Kumar, D. & Mehta, M. A. An overview of explainable AI methods, forms and frameworks. In Explainable AI: Foundations, Methodologies and Applications (eds Mehta, M. et al.) 43–59 (Springer, 2023). https://doi.org/10.1007/978-3-031-12807-3_3.

Moradi, M. & Samwald, M. Post-hoc explanation of black-box classifiers using confident itemsets. Expert Syst. Appl. 165, 113941. https://doi.org/10.1016/j.eswa.2020.113941 (2021).

Rajaraman, S., Candemir, S., Kim, I., Thoma, G. & Antani, S. Visualization and interpretation of convolutional neural network predictions in detecting pneumonia in pediatric chest radiographs. Appl. Sci. (Switz.) https://doi.org/10.3390/app8101715 (2018).

Selvaraju, R. R., Das, A., Vedantam, R., Cogswell, M., Parikh, D. & Batra, D. Grad-CAM: Why did you say that? Visual Explanations from Deep Networks via Gradient-based Localization. CoRR 2016;abs/1610.0.

Kim, I., Rajaraman, S. & Antani, S. Visual interpretation of convolutional neural network predictions in classifying medical image modalities. Diagnostics https://doi.org/10.3390/diagnostics9020038 (2019).

Pang, S. et al. A novel YOLOv3-arch model for identifying cholelithiasis and classifying gallstones on CT images. PLoS ONE 14, 1–18. https://doi.org/10.1371/journal.pone.0217647 (2019).

Zaman, A. et al. Generative approach for data augmentation for deep learning-based bone surface segmentation from ultrasound images. Int. J. Comput. Assist. Radiol. Surg. 15, 931–941. https://doi.org/10.1007/s11548-020-02192-1 (2020).

Pang, S., Wang, S., Rodríguez-Patón, A., Li, P. & Wang, X. An artificial intelligent diagnostic system on mobile android terminals for cholelithiasis by lightweight convolutional neural network. PLoS ONE 14, 1–30. https://doi.org/10.1371/journal.pone.0221720 (2019).

Jeong, Y. et al. Deep learning-based decision support system for the diagnosis of neoplastic gallbladder polyps on ultrasonography: Preliminary results. Sci. Rep. 10, 7700. https://doi.org/10.1038/s41598-020-64205-y (2020).

Andrén-Sandberg, Å. Diagnosis and management of gallbladder polyps. N. Am. J. Med. Sci. 5, 203–211. https://doi.org/10.4103/1947-2714.95897 (2012).

Chen, T. et al. Computer-aided diagnosis of gallbladder polyps based on high resolution ultrasonography. Comput. Methods Programs Biomed. 185, 105–118. https://doi.org/10.1016/j.cmpb.2019.105118 (2020).

Zhao, Y. et al. Knowledge-aided convolutional neural network for small organ segmentation. IEEE J. Biomed. Health Inform. https://doi.org/10.1109/JBHI.2019.2891526 (2019).

Gupta, D., Mitra Thakur, G. S. & Abhishek. Detection of gallbladder stone using learning vector quantization neural network. Int. J. Comput. Sci. Inf. Technol. 3, 3934 (2012).

Song, T. et al. U-next: A novel convolution neural network with an aggregation U-net architecture for gallstone segmentation in CT images. IEEE Access 7, 166823–166832. https://doi.org/10.1109/ACCESS.2019.2953934 (2019).

Liew, P. L. et al. Comparison of artificial neural networks with logistic regression in prediction of gallbladder disease among obese patients. Dig. Liver Dis. https://doi.org/10.1016/j.dld.2007.01.003 (2007).

Unisa, S. et al. Population-based study to estimate prevalence and determine risk factors of gallbladder diseases in the rural Gangetic basin of North India. HPB https://doi.org/10.1111/j.1477-2574.2010.00255.x (2011).

Muneeswaran, V. & Pallikonda Rajasekaran, M. Gallbladder shape estimation using tree-seed optimization tuned radial basis function network for assessment of acute cholecystitis. Advances in Intelligent Systems and Computing, 229–39. https://doi.org/10.1007/978-981-10-7566-7_24 (2018).

Islam, M. R., Ahmed, M. U., Barua, S. & Begum, S. A systematic review of explainable artificial intelligence in terms of different application domains and tasks. Appl. Sci. 12, 1353. https://doi.org/10.3390/app12031353 (2022).

Zhang, Qs. & Zhu, Sc. Visual interpretability for deep learning: A survey. Front. Inf. Technol. Electron. Eng. 19, 27–39. https://doi.org/10.1631/FITEE.1700808 (2018).

Alicioglu, G. & Sun, B. A survey of visual analytics for Explainable Artificial Intelligence methods. Comput. Graph. (Pergamon) 102, 502–520. https://doi.org/10.1016/j.cag.2021.09.002 (2022).

Magesh, P. R., Myloth, R. D. & Tom, R. J. An explainable machine learning model for early detection of Parkinson’s disease using LIME on DaTSCAN imagery. Comput. Biol. Med. 126, 104041. https://doi.org/10.1016/j.compbiomed.2020.104041 (2020).

Kaba Gurmessa, D. & Jimma, W. Explainable machine learning for breast cancer diagnosis from mammography and ultrasound images: A systematic review. BMJ Health Care Inform. 31, 100954. https://doi.org/10.1136/bmjhci-2023-100954 (2024).

Ribeiro, M. T., Singh, S. & Guestrin, C. “Why should I trust you?”: Explaining the predictions of any classifier. CoRR 2016;abs/1602.0.

Lundberg, S. & Lee, S.-I. A Unified approach to interpreting model predictions. In 31st Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA, 1–10 (2017).

Malhi, A. K., Kampik, T., Pannu, H. S., Madhikermi, M. & Främling, K. Explaining machine learning-based classifications of in-vivo gastral images. Dig. Image Comput.: Tech. Appl. (DICTA) 2019, 1–7 (2019).

Peifei Zhu, M. O. Guideline-based additive explanation for computer-aided diagnosis of lung nodules. In Interpretability of Machine Intelligence in Medical Image Computing and Multimodal Learning for Clinical Decision Support (eds Suzuki, K. et al.) 39–47 (Springer, 2019).

van der Velden, B. H. M., Janse, M. H. A., Ragusi, M. A. A., Loo, C. E. & Gilhuijs, K. G. A. Volumetric breast density estimation on MRI using explainable deep learning regression. Sci. Rep. 10, 18095. https://doi.org/10.1038/s41598-020-75167-6 (2020).

Zhou, B., Khosla, A., Lapedriza, A., Oliva, A. & Torralba, A. Learning deep features for discriminative localization. IEEE Conf. Comput. Vis. Pattern Recognit. (CVPR) 2016, 2921–2929. https://doi.org/10.1109/CVPR.2016.319 (2016).

Jiang, H., Yang, K., Gao, M., Zhang, D., Ma, H. & Qian, W. An interpretable ensemble deep learning model for diabetic retinopathy disease classification. In 2019 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), 2045–2048. https://doi.org/10.1109/EMBC.2019.8857160 (2019).

Xie, B. et al. Computer-aided diagnosis for fetal brain ultrasound images using deep convolutional neural networks. Int. J. Comput. Assist. Radiol. Surg. 15, 1303–1312. https://doi.org/10.1007/s11548-020-02182-3 (2020).

Qi, X. et al. Automated diagnosis of breast ultrasonography images using deep neural networks. Med. Image Anal. 52, 185–198. https://doi.org/10.1016/j.media.2018.12.006 (2019).

Kim, C., Kim, W. H., Kim, H. J. & Kim, J. Weakly-supervised US breast tumor characterization and localization with a box convolution network. In Medical Imaging (2020).

Zhou, L.-Q. et al. Lymph node metastasis prediction from primary breast cancer US images using deep learning. Radiology 294, 19–28. https://doi.org/10.1148/radiol.2019190372 (2020).

García-Peraza-Herrera, L. C. et al. Intrapapillary capillary loop classification in magnification endoscopy: open dataset and baseline methodology. Int. J. Comput. Assist. Radiol. Surg. 15, 651–659. https://doi.org/10.1007/s11548-020-02127-w (2020).

Selvaraju, R. R. et al. Grad-CAM: Visual explanations from deep networks via gradient-based localization. IEEE Int. Conf. Comput. Vis. (ICCV) 2017, 618–626. https://doi.org/10.1109/ICCV.2017.74 (2017).

Hamm, C. A. et al. Deep learning for liver tumor diagnosis part I: Development of a convolutional neural network classifier for multi-phasic MRI. Eur. Radiol. 29, 3338–3347. https://doi.org/10.1007/s00330-019-06205-9 (2019).

Windisch, P. et al. Implementation of model explainability for a basic brain tumor detection using convolutional neural networks on MRI slices. Neuroradiology 62, 1515–1518. https://doi.org/10.1007/s00234-020-02465-1 (2020).

René, H., Kroll, L., Nensa, F. & Koitka, S. Differentiation Between Anteroposterior and Posteroanterior Chest X-Ray View Position With Convolutional Neural Networks. RöFo - Fortschritte auf dem Gebiet der Röntgenstrahlen und der bildgebenden Verfahren 193(02), 168–176. https://doi.org/10.1055/a-1183-5227 (2020).

van Sloun, R. J. G. & Demi, L. Localizing B-Lines in Lung Ultrasonography by Weakly Supervised Deep Learning, In-Vivo Results. IEEE Journal of Biomedical and Health Informatics 24, 957–964. https://doi.org/10.1109/JBHI.2019.2936151 (2020).

Xie, B. et al. Computer-aided diagnosis for fetal brain ultrasound images using deep convolutional neural networks. International Journal of Computer Assisted Radiology and Surgery 15, 1303–1312. https://doi.org/10.1007/s11548-020-02182-3 (2020).

Kuo, C. C. J., Zhang, M., Li, S., Duan, J. & Chen, Y. Interpretable convolutional neural networks via feedforward design. Journal of Visual Communication and Image Representation https://doi.org/10.1016/j.jvcir.2019.03.010 (2019).

Chattopadhay, A., Sarkar, A., Howlader, P. & Balasubramanian, V. N. Grad-CAM++: Generalized gradient-based visual explanations for deep convolutional networks. In 2018 IEEE Winter Conference on Applications of Computer Vision (WACV), 839–847. https://doi.org/10.1109/WACV.2018.00097 (2018).

Wang, H. et al. Score-CAM: Score-Weighted Visual Explanations for Convolutional Neural Networks. IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW) 2019, 111–119 (2020).

Han, S. et al. A deep learning framework for supporting the classification of breast lesions in ultrasound images. Physics in Medicine and Biology https://doi.org/10.1088/1361-6560/aa82ec (2017).

Ritika, R. & Kaur, S. Contrast Enhancement Techniques for Images - A Visual Analysis. International Journal of Computer Applications 64, 20–25. https://doi.org/10.5120/10727-5679 (2013).

Yi, X., Walia, E. & Babyn, P. Generative adversarial network in medical imaging: A review. Medical Image Analysis https://doi.org/10.1016/j.media.2019.101552 (2019).

Frid-Adar, M. et al. GAN-based synthetic medical image augmentation for increased CNN performance in liver lesion classification. Neurocomputing 321, 321–331. https://doi.org/10.1016/j.neucom.2018.09.013 (2018).

Radford, A., Metz, L. & Chintala, S. Unsupervised representation learning with deep convolutional generative adversarial networks. In 4th International Conference on Learning Representations, ICLR 2016 - Conference Track Proceedings, 1–16 (2016).

Kumar, D., Mehta, M. A. & Chatterjee, I. Empirical analysis of deep convolutional generative adversarial network for ultrasound image synthesis. The Open Biomedical Engineering Journal 15, 71–77. https://doi.org/10.2174/1874120702115010071 (2021).

Huang, P., Zhao, L., Jiang, R., Wang, T. & Zhang, X. Self-filtering image dehazing with self-supporting module. Neurocomputing 432, 57–69. https://www.sciencedirect.com/science/article/pii/S0925231220318324 (2021).

Zhang, X., Wang, T., Luo, W. & Huang, P. Multi-Level Fusion and Attention-Guided CNN for Image Dehazing. IEEE Transactions on Circuits and Systems for Video Technology 31(11), 4162–4173 (2021).

Acknowledgements

This publication has been supported by the RUDN University Scientific Projects Grant System, project No. 202256-2-000. The authors sincerely thank Dr. Pankaj Gupta and Dr. Chetan Arora, Pathologists, PGIMER Chandigarh, India, Dr. Hiral Shah, Senior radiologist, SIDS Hospital and Research Centre, Surat, and Dr. Anil and the team from Parul Sevashram Hospital, Vadodara for providing valuable knowledge on cholelithiasis and the required data.

Author information

Contributions

All authors contributed to this research paper in conceptualization, methodology, data collection, and experimental evaluation. The initial draft was prepared by D. K., followed by several reviews and revisions by M. M., K. K. and A. K. The final draft was carefully reviewed and edited by all authors. All authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Ethical approval

The authors confirm that this work is original. This research was conducted in accordance with the ethical guidelines for life science and medical research involving human subjects and the rules established by the ethics committee. It involved the retrospective use of anonymized abdominal ultrasound images, with no patient interaction. Hence, the need to obtain informed consent was waived by the Parul University Institutional Ethics Committee for Human Research.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Kumar, D., Mehta, M.A., Kotecha, K. et al. Computer-aided cholelithiasis diagnosis using explainable convolutional neural network. Sci Rep 15, 4249 (2025). https://doi.org/10.1038/s41598-025-85798-2

DOI: https://doi.org/10.1038/s41598-025-85798-2