Abstract

Atrial fibrillation (AF) is a common arrhythmia disease with a higher incidence rate. The diagnosis of AF is time-consuming. Although many ECG classification models have been proposed to assist in AF detection, they are prone to misclassifying indistinguishable noise signals, and the context information of long-term signals is also ignored, which impacts the performance of AF detection. Considering all the above problems, we propose a knowledge embedded multimodal pseudo-siamese model. The proposed model comprises a temporal-spatial pseudo-siamese network (TSPS-Net) and a knowledge embedded noise filter module. Firstly, a parallel siamese network architecture is proposed in TSPS-Net to process the multimodal representations. Secondly, a spatiotemporal collaborative fusion mechanism (STCFM) is proposed to fuse multimodal features. Finally, medical knowledge is introduced to design manual features, which are used to distinguish noise and fuse with deep features of ECG to obtain the accurate final result. The model’s performance is verified on the CinC 2017 dataset and the MIT-BIH AF dataset. Experimental results showed that the average accuracy achieved 82.17\(\%\) and 99.11\(\%\). The F1 score of our proposed model on the CinC 2017 dataset and MIT-BIH dataset was 0.787 and 0.970, respectively.

Similar content being viewed by others

Introduction

Electrocardiogram (ECG) is an essential tool for doctors to diagnose heart diseases in clinical medical practice1. It records the process of the heart’s electrical activity, providing insights into its rhythm and functional status. The heart beating process of a single heartbeat in lead II of ECG waveforms can be divided into P wave, QRS complex, and T wave. In clinical practice, arrhythmias are the most common type of abnormal cardiac rhythm disorder, which are highly harmful2,3. Research shows that atrial fibrillation (AF), one type of arrhythmia, is the main factor leading to cardiogenic stroke4. During AF diagnosis, doctors tend to pay more attention to the morphological changes in the waveform. The disappearance of the P wave in the lead II of the ECG and irregularities of the RR interval usually indicate the occurrence of AF5,6,7. However, even well-experienced doctors have a high rate of disease miss diagnosis when encountering a larger amount of ECG, due to the inherent characteristics of the complexity, subtlety, and diversity of ECG8. Furthermore, more than 30% of AF patients do not receive a definitive clinical diagnosis9, which will undoubtedly increase the risk of stroke or even death10. Therefore, an accurate detection model that can assist in a doctor’s diagnosis is essential and significant.

The ECG signals are mainly used in AF detection models. Early AF detection needs to be implemented with the help of feature engineering and machine learning classifiers. However, such manual features do not perform well in model generalization. With the development of deep learning, its good generalization ability and convenience of feature processing have led to the emergence of AF detection models based on deep learning. Some researchers segment ECG into multi-beats and then classify them. Although these approaches have achieved certain results, their performance cannot be further improved due to the lack of contextual information. Furthermore, some indistinguishable noise signals can easily be misclassified. Therefore, a new framework that can combine deep learning with manual features to mine global context features needs to be proposed to better cope with ECG classification.

To solve the above problems, we propose a knowledge embedded multimodal pseudo-siamese model for AF detection, which comprises TSPS-Net and a knowledge embedded module. First, a parallel pseudo-siamese network is proposed to simultaneously learn multimodal ECG signals consisting of temporal signal features and spatial image features comprehensively. This parallel network not only explores the connections between ECG features across spatiotemporal dimensions but also preserves the integrity of temporal characteristics. Second, a multimodal feature fusion mechanism is proposed to fuse different modality features completely. Third, a ___domain knowledge embedding module is proposed to extract more robust features. The main contributions of this paper are as follows:

-

We propose a temporal-spatial pseudo-siamese network (TSPS-Net) to extract features from ECG signals and spectrograms transformed by the Gramian Angular Summation Fields (GASF) algorithm, which can effectively extract the hidden features of the temporal signal and spatial image modalities.

-

We design a spatiotemporal collaborative fusion mechanism in the TSPS-Net, which can effectively fuse signal and spectrogram characteristics extracted from different modalities of ECG through its concise structure.

-

We propose a knowledge embedding module to determine whether the input signal is noise under the guidance of medical ___domain knowledge, and then combine the manual features extracted from the module with the output of TSPS-Net to obtain accurate ECG signal classification.

The remainder of this paper includes the following sections: In the next section, we introduce the related work of ECG classification model. Then, the details of our proposed method are described. In the following section, we present the experimental datasets, the implementation settings, and the experimental results. In the next section, we summarize our work. In the final section, we present the limitations of the current study and future research work.

Related work

Automatic ECG classification is a challenging but meaningful task. In this section, we will introduce related work on ECG classification models, multimodal ECG processing, and fusion algorithms.

Automatic ECG classification model

Earlier automatic ECG classification models relied on clinical experience. Researchers used ___domain knowledge to perform morphological analysis and feature extraction of signals to obtain a series of morphological and statistical features11 and then used these features to train machine learning classifiers, such as random forest12, support vector machine13, decision tree14, KNN15, etc. Gaining from the assistance of clinical knowledge, these knowledge-driven methods can achieve good performance. However, models based on manual features have certain limitations. Researchers need to perform feature selection before training classifiers16, which is cumbersome and results in poor generalization ability.

With the development of deep learning, some research that used CNN17 or RNN18 for ECG classification has gradually emerged. Fang et al.19 applied the VGG to the atrial fibrillation detection task. They converted the ECG signal into Poincaré diagrams and then sent them to the model for classification. Cao et al.20 used a two-layer LSTM for ECG classification tasks and achieved high accuracy. In addition, researchers also attempted to combine CNN with RNN, aiming to leverage the strengths of both networks. Pokaprakarn et al.21 proposed a sequence-to-sequence arrhythmia classification network. A backbone combining CNN and LSTM was used for feature extraction, and the spectrogram modality was added to the model as an auxiliary feature. Huang et al.22 proposed a model that combines CNN and LSTM for the arrhythmia classification task, which achieved high classification accuracy. In addition, Xie et al.23 and Yao et al.24 both used CNN combined with LSTM as the backbone to extract features from ECG and achieved good performance. Ma et al.25 designed a model that combined CNN with SVM and used some manual features, which performed well in the binary classification task of atrial fibrillation. Pan et al.26 used a model combining DenseNet with SVM. Experimental results show that this method performs better than a pure deep learning model on ECG classification. However, convolutional neural networks can not process variable-length input effectively27, leading to the loss of contextual temporal information28.

The work mentioned above demonstrates the application and some shortcomings of knowledge-driven manual feature methods and data-driven deep learning methods in atrial fibrillation or other types of arrhythmia classification tasks. Therefore, researchers try to combine ___domain knowledge with deep learning models to address these shortcomings29,30,31,32. The research33 proposed the ECG-KG framework for reliable ECG classification. ECG-KG contains a knowledge graph module, which can be well utilized to classify abnormal signals using expert knowledge. Moreover, to fully extract the hidden features of the ECG, it is necessary to consider not only the local waveforms in the temporal dimension but also the contextual connections in the spatial dimension. Furthermore, most of the aforementioned work was conducted from the perspective of a single modality, or arranged temporal modality and spatial modality sequentially, rather than treating them as parallel and equivalent. Consequently, incompatibility arose between the two modalities and multimodal features cannot be properly fused34.

Multimodal ECG processing and fusion algorithms

As the amount of data increases further, so does the complexity of the data to be processed. Currently, the single modal ECG classification model cannot extract contextual features well, so researchers have begun to perform multimodal ECG classification. Researchers attempt to extract multimodal data from different perspectives of ECG so that the model can more comprehensively learn the deep feature representation of ECG signals. Some of the work relies on the time-frequency analysis method. By using the time-frequency transformation method, one-dimensional ECG signals are converted into two-dimensional spectral images, and then the images are processed. Ahmad et al.35 used Gramian Angular Field (GAF), recurrence plot, and Markov transition field to convert ECG signals into images and classify them. Mathunjwa et al.36 converted the ECG signal into a recurrence plot and then used CNN for feature extraction and classification. The above work obtained deep features from a spatial perspective, but they ignored the temporal feature. Furthermore, some of the multimodal ECG processing algorithms is guided by prior knowledge. Chen et al.37 composed the mean, variance, skew, and kurtosis of the absolute Fourier transform spectrum of one-dimensional ECG signals, and then combined them with the signal modality. However, this method does not extract the spatial features of ECG signals, and the loss of context information will reduce the model’s performance.

In addition, the feature fusion method is an essential part of the multimodal ECG classification model. An appropriate feature fusion mechanism can help the model make better use of features from different modalities and prevent incompatible fusion. The multimodal fusion mechanism includes feature-level fusion, score-level fusion, and decision-level fusion. In the multimodal fusion mechanism of ECG signals, feature-level fusion methods are often used, including concatenation38 and attention mechanism fusion methods39. Han et al.40 proposed an attention mechanism for multimodal ECG feature fusion. This method combines cross attention mechanism and a multi-head attention mechanism. The research41 proposed a hybrid CNN-GRU model for multimodal fusion. They employed a gated fusion network to fuse the GAF images, Recurrent Plot images, and Markov Transition Field images. Qiu et al.42 reported a deep learning-based multimodal fusion method for fusing the ECG features and clinical features in the prediction of AF. This study used a simple concatenation method to fuse the above two sets of features. Although these works have made some progress, they are not well compatible with both temporal and spatial modalities.

From the related studies mentioned above, we can learn that the current multimodal automatic AF detection models have the following problems. Firstly, the utilization of ___domain knowledge is insufficient, and there is a lack of effective methods to quantify this knowledge in a well-harmonized manner. Secondly, these methods performs poorly when dealing with influence of noisy signals. Lastly, they struggle to effectively handle spatial-temporal feature integration during the multimodal fusion process.

To solve the above problems, we propose KE-MPSNet, a multimodal AF detection model embedded with ___domain knowledge. Firstly, a dual-branch parallel architecture is proposed to extract features from the temporal and spatial modalities respectively to reduce the mutual interference between modalities. Secondly, a spatiotemporal feature fusion mechanism is designed to perform multimodal fusion based on the characteristics of the features. Finally, ___domain knowledge and clinical experience are integrated into the model, which are used as guidance to build a more robust ECG classification model.

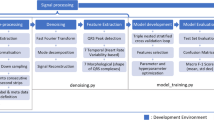

Methods

The proposed knowledge embedded multimodal pseudo-siamese model mainly consists of three parts. The first part is the temporal-spatial pseudo-siamese network (TSPS-Net), which extracts the features of the input signal and is used for classification. The second part is the noise filtering module, which is designed for noise filtering and knowledge description extracting, and uses a decision tree classifier to determine whether the input is noise signal. In the third part, the outputs of TSPS-Net and the knowledge description of the noise filter module are combined to obtain accurate ECG classification results. The framework of KE-MPSNet is shown in Fig. 1.

Temporal-spatial pseudo-siamese network

The proposed TSPS-Net consists of three parts: the first part is the temporal feature extraction branch, which is used to extract temporal features from one-dimensional ECG signals. The second part is the spatial feature extraction branch, which can extract the spatial features from the Gramian Angular Field (GAF) images. The third part is the spatiotemporal fusion branch, which adopts a spatiotemporal collaborative fusion mechanism. Spatial features are fused with temporal features under the guidance of spatiotemporal channel fusion coefficients to extract deeper spatiotemporal features. The architecture diagram of TSPS-Net is shown in Fig. 2.

The backbone of the temporal feature extraction branch adopts the feature extraction part of the Resnet_Wang43, which has been proven to achieve excellent performance in the feature extraction of time series. The temporal feature extraction branch consisted of three blocks. Each block consists of three 1D convolutional layers as well as two batch normalization layers. Batch normalization is performed after each convolution operation. In the temporal feature extraction branch, a larger receptive field can help the network to better capture long-term temporal information, so the receptive field of the residual block is set to 8, 5, and 3, respectively. Before spatial feature extraction, ECG signals are converted into GAF images. In this step, the Gramian Angular Summation Fields (GASF) algorithm is used to convert them. The example GAS images are shown in Fig. 3. The calculation procedure of GASF is shown in Eqs. (1)–(3). The signals are normalized in the first step of the GASF algorithm. Then, the one-dimensional time series are mapped to the polar coordinate system for reencoding.

where, \(\bar{X}\) represents the normalized ECG signal sequence, \(t_{i}\) represents the timestamp, \(\textbf{N}\) is the length of the signal, \(\phi _{i}\) and \(r_{i}\) represents the reconstructed angle and radius respectively. Subsequently, we perform a Gramian-like matrix operation on the transformed angle and radius to obtain the feature matrix. The mathematical form of GASF can be expressed as the cosine form of the angle sum between different sampling points, which is shown in the Eq. (3).

In the part of the spatial feature extraction branch, we use the backbone of ResNet18 as the spatial feature extractor. The spatial feature extraction branch consisted of three blocks as well. Each block consists of 2D convolutional layers, batch normalization layers, and a maximum pooling layer. An average pooling layer exists at the end of the branch. The above two networks can handle the problem of gradient disappearance in traditional CNN through the structure of residual connections. With the above-mentioned temporal and spatial feature extraction network, we can extract the features of ECG signals and put them into the subsequent fusion stage. The network structure is shown in Table 1.

Spatiotemporal collaborative fusion mechanism

The spatiotemporal collaborative fusion mechanism (STCFM) is proposed for spatiotemporal features fusion, which is a concise and efficient fusion mechanism. The structure of STCFM is shown in Fig. 4. Compared with the traditional attention fusion mechanism, it does not require high computational cost and complex calculation processes but can achieve excellent performance. The temporal features obtained from the temporal feature extraction branch are represented as \(F_{t}\in {\mathbb {R}}^{C\times H}\), and the spatial features obtained from the spatial feature extraction branch are represented as \(F_{s}\in {\mathbb {R}}^{C\times H\times W}\), where the subscripts represent the temporal and spatial modal, respectively, and C, H, and W represent the number of channels, height, and weight of features respectively.

We first compress the dimension of the spatial features with two steps respectively, as shown in Eqs. (4) and (5), then the \(F_{s}^{A}\in {\mathbb {R}}^{C\times 1\times 1}\) and \(F_{s}^{M}\in {\mathbb {R}}^{C\times 1\times 1}\) are gained. At the same time, the Eq. (4) is used to transform temporal features into temporal guidance features \(F_{t}^{A}\in {\mathbb {R}}^{C\times 1\times 1}\).

Secondly, two 2D convolutional layers are used to expand \(F_{s}^{A}\) and \(F_{s}^{M}\), and then the \(E_{s}^{A}\) and \(E_{s}^{M}\) are obtained, as shown in the Eq. (6), where \(\delta\) represents the convolution operation, \(f_{ex}\left( \cdot \right)\) represents the features expand function, and W is the weight matrix of convolution layer.

Then, the spatiotemporal channel fusion coefficients \(\lambda \in {\mathbb {R}}^{C\times 1\times 1}\) are calculated by Eq. (7). Equation (8) shows the combination process of the spatiotemporal channel fusion coefficients and spatial features to obtain fusion features FFs under the guidance of the spatiotemporal collaborative fusion mechanism. \(\odot\) represent multiplication operation in Eq. (8).

After we obtain the fused feature FFs, the fusion branch is used for deeper features extraction. Within the fusion branch, there are 2D convolutional layers, batch normalization layers, a dropout layer, and fully connected layers. The output dimensions of the fully connected layer are 2048, 512, 128, 64, and 3. More details about the TSPS-Net can be found in Table 1.

Knowledge embedded noise filter module

The medical diagnosis knowledge of heart disease is set as prior knowledge to design morphological manual features. Then, these features are used for decision tree classification, which can distinguish whether the input signal is indistinguishable noise. These manual features include five main types: QRS morphological features, entropy, signal quality index(SQI), waveform statistical features, and heart rate features. A QRS complex detection procedure is conducted before we perform manual feature extraction. The Pan-Tompkins44 algorithm is used to assist in correcting possible misidentification of R peaks and prevent the jittering T wave from being identified as R peaks. The specific manual features are shown in Table 2.

Based on prior knowledge, when AF occurs, the QRS complex becomes irregular, and the intervals between the Q-, R-, and S-waves become unstable. Based on this, we calculated median and mean values for the Q-, R-, and S-wave intervals, which are relatively stable in regular rhythms and fluctuate when AF occurs.

In the entropy feature, three representative information entropy are selected, which are fuzzy entropy, sample entropy45, and approximate entropy46. These entropy features are used as dimensionless metrics to characterize the complexity of a signal sequence, with larger entropy values representing greater signal complexity. These entropy features are used to measure the magnitude of the probability of generating a new pattern that a time series will produce a new pattern when it changes within a specified time window. The greater the probability of the sequence generating a new pattern, the greater the degree of complexity of the sequence and the greater the entropy value. Entropy preserves the time-series features in the original signal sequence and reflects the properties of the signal sequence in terms of its structural distribution. Therefore, they are used as a measure of the degree of variation within the ECG signal and are primarily used to differentiate the class atrial fibrillation from class other types of arrhythmias.

In the SQI feature part, six SQI features are used in this work, namely sSQI, kSQI, pSQI, basSQI, cSQI, and qSQI, which are very useful in measuring signal quality47. Their calculation methods are shown in Eqs. (9)–(14).

sSQI is used to measure the complexity of the QRS complex:

\(\textit{X}\) represents the ECG signal, \(\mu\) represents the average value of the signal, \(\sigma\) represents the standard deviation of the signal, and \(\textit{E}\left\{ \textit{X}-\mu \right\}\) represents the expectation of \(\left\{ \textit{X}-\mu \right\}\).

kSQI is used to measure whether the signal obeys Gaussian distribution rather than pure noise:

The meaning of each parameter is consistent with the sSQI.

pSQI is used to calculate the power spectral distribution of the QRS complex:

basSQI is used to calculate the baseline stability of the signal and determine the degree of baseline wander:

cSQI is used to measure the degree of change in the RR interval in ECG signals:

\(RR\_list\) represents the R peak point extracted by the algorithm from a signal, std represents the sample standard deviation, and mean represents the mean calculation.

qSQI is used to measure the stability of the signal. It uses two different R peak detection algorithms on the signal and calculates their matching degree to measure the quality of the signal:

R represents the R peak candidate points detected by using different algorithms. The subscripts represent the Hamilton algorithms and the discrete wavelet transform algorithms, respectively, L indicating the number of R peak candidate points extracted. Match represents the number of R candidate point matches within a certain threshold.

Finally, we fuse the knowledge description and vector description obtained above to train a classifier. We set the results obtained by the TSPS-Net as vector description and all manual features as knowledge description. Then, we combine them into 26-dimensional features and use the feature to train an SVM classifier. Consequently, the classifier can combine two different types of features to determine the specific category of the current signal. The accurate type of signal is given by the compound result of the SVM classifier and noise filter module. The signal is regarded as noise if the noise filter module divides the input signal as a noise signal. Otherwise, the signal type is given by the SVM classifier.

Results and discussion

Dataset and implementation details

The performance of our proposed model is verified on two datasets, which are the CinC 2017 (excluding hidden phase data)48 and the MIT-BIH AF dataset49. All data on the CinC 2017 dataset are collected by the portable device, which is easily affected by noise. The MIT-BIH AF dataset is one of the most common AF detection datasets. Therefore, we chose the above two datasets for experimental validation to show that KE-MPSNet can work well in noisy environments while being capable of detecting AF in normal scenarios. We divide 80% of the data into training set, 10% into the validation set, and 10% into the test set. In the noise filter module training, we use all the noise data and randomly select other types of signal in a ratio of 1:3 to form positive samples to construct a dataset, and then divide the training set and testing set in a ratio of 8:2. Furthermore, all our dataset follow the inter-patient pattern when divided. We measure experimental results with the metrics: F1-score, accuracy (Acc), precision (Pre), sensitivity (Sen), specificity (Spe), Matthew’s Correlation Coefficient (MCC), and Kappa.

CinC 2017 Challenge Dataset: The 2017 PhysioNet/ Cardiology Computing in Cardiology Challenge dataset is a short-term single-lead AF classification dataset. The publicly available part contains 8528 individual ECG signals. The length of each signal ranges from 9 seconds to 60 seconds. Its sampling frequency is 300Hz. There are four types of labels: normal rhythm (N), atrial fibrillation (A), other types of arrhythmias (O), and indistinguishable noise (\(\sim\)). Detailed data distribution is shown in Table 3.

MIT-BIH Atrial Fibrillation Dataset: MIT-BIH AF Dataset is an ECG dataset used for atrial fibrillation classification and the dataset was collected in 1983. The dataset recorded ECG signals from 23 subjects. Each signal was sampled at 250Hz. The length of a single signal lasts more than 10 hours, and it is jointly labeled by multiple medical experts. In this work, we only use three types of ECG signals: AF, AFL, and N. Its data distribution is shown in Table 3.

Chapman Dataset: The Chapman dataset was published in 2020 and was created by Chapman University and Shaoxing People’s Hospital. The signals in the Chapman dataset contain a variety of arrhythmia types, of which we only use sinus rhythm (N), atrial fibrillation, and atrial flutter(AFL). Each data entry has a sampling rate of 500 Hz, a data duration of 10 seconds, and contains 12 lead signals, of which we use only the second lead. The detailed data distribution is shown in Table 3.

The proposed model is implemented by using Python and trained on NVIDIA GeForce RTX 3090TI GPU. Our proposed model’s training process is divided into three stages. In the first stage of noise filter module training, entropy is used as the criterion. A grid search algorithm is used to find the optimal parameters combination of min_samples_leaf, max_depth, and min_samples_split. Secondly, in the TSPS-Net training stage, cross-entropy loss is selected as the loss function. An adaptive momentum estimation (Adam) optimizer is used, with the learning rate set to 0.0015, parameter \(\beta\) set to (0.9, 0.999), and decay set to \(1\times 10^{-8}\). The batch size is set to 48. The training epochs are set to 60, and a cosine annealing learning rate adjustment strategy is used for hyperparameter adjustment, which starts after 32 epochs, with the T_mult parameter set to 2 and the eta_min parameter set to 0.02. Finally, in the final stage of support vector machine training, we set the kernel function to radial basis function (RBF), and other parameters are found by the grid search algorithm to find the optimal parameter combination.

Data preprocessing

Since ECG signals are easily affected by environmental interference and human body potential during collection, much noise will be mixed into the original ECG signal, leading to baseline wander, myoelectric interference, and other interference. Therefore, a good data preprocessing method is essential for data cleansing. In this paper, we use the wavelet function to remove the noise mixed in the original signal. A 7-stage Daubechies 5 wavelet basis function is used for wavelet denoising. Subsequently, the VisuShrink method was applied. Hard thresholding is set for the wavelet transform. The result of wavelet denoising is shown in Fig. 5. Subsequently, a max-min data normalization algorithm is conducted to reduce the impact of individual differences on the final classification results. To unify the input of the model, we reconstruct the original data of the dataset to obtain the fixed-length ECG signal. The data reconstruction method proposed in50 is adopted and the length of the reconstructed data is set as 4500 sampling points.

Ablation studies

Knowledge description

Table 4 indicates the experimental results of KE-MPSNet with and without knowledge embedding on the CinC 2017 dataset. From the experimental results, it can be seen that with the assistance of knowledge description, the classification ability of the model in identifying noise is significantly improved, which shows that knowledge embedding can greatly improve the classification performance of the model. After combining with knowledge description, KE-MPSNet’s ability to distinguish between noise and other signals is significantly improved. This is because the knowledge description quantifies signals from multiple aspects. Compared to noise signals, the heart rate features, SQI, and entropy of normal signals are more distinct, which helps us better distinguish them.

Table 5 indicates the experimental results of the CNN and FCN instead of ___domain knowledge for classifying noise on the CinC 2017 dataset. As shown in the table, they did not perform better than ___domain knowledge embedding. the advantage of the knowledge embedded approach is all-encompassing, and this is superior to the fact that the ___domain knowledge features of these signals can be used to discriminate not only between noisy signals but also between normal signals and atrial fibrillation signals. Therefore, the knowledge embedded strategy was finally selected by us to solve the existing problem.

In addition, we performed Mann-Whitney U test among different features (p-value \(\le\) 0.05) in the knowledge description to test the effectiveness of the features. The significance of knowledge description between different classes is compared in Table 6. We observe that the p-values of most manual features in the knowledge description are less than 0.05, indicating that the knowledge description we used has significant statistical significance. Moreover, it can be found that the p-value of the Heart rate Feature on the CinC 2017 dataset is very small, which shows that its significance is more prominent and can well distinguish different types of ECG. Additionally, we found that the distinction between SQI features and Signal Statistical features is relatively not significant for certain signals. This is due to the good overall data quality of the signals.

Feature extraction network

The feature extraction capabilities of different temporal feature extraction branches are compared. The experimental results are shown in Table 7. The results show that the performance of the model is significantly improved by using Resnet_Wang as the temporal branch feature extraction network. We found that the feature extraction ability of Resnet_Wang is the best of them all, with its F1 score reaching 0.749 and accuracy reaching 81.69\(\%\), followed by LSTM and, finally FCN_Wang. Compared to LSTM, Resnt_Wang has better feature extraction capability, and the residual connection can effectively assist the model in extracting features at different scales. The performance of FCN_Wang is the worst because the backbone of FCN_Wang cannot extract fine-grain features completely, weakening the performance of the temporal branch.

On the spatial feature extraction branch, we compare the feature extraction capabilities of CNN and Resnet18. As shown in Table 7, the experimental results show that the residual connection is useful not only on the temporal branch but also on the spatial branch. Under the guidance of residual connection, KE-MPSNet performs better in extracting contextual information in spatial.

Fusion mechanism

In the ablation experiments of the multimodal feature fusion method, we compared the concatenation method, pooling method, traditional attention method, and our proposed STCFM. The concatenation method connects the temporal feature with the spatial feature. Traditional attention methods calculate the weights of each feature vector and integrate them by using these weights. The experimental results are shown in Table 8. Our proposed STCFM method performed best among all methods, achieving 0.749, 81.69\(\%\), 77.99\(\%\), and 73.67\(\%\) in F1-score, accuracy, precision, and sensitivity, respectively, which are increased by 3.8\(\%\), 4.33\(\%\), 3.43\(\%\), and 2.84\(\%\) compared with the best performance of other methods. Compared to other methods, STCFM uses the guidance of spatiotemporal channel fusion coefficients, which can be used to determine the importance of each heartbeat, enabling the model to combine global and local contextual information effectively. Moreover, STCFM combines the dimensional characteristics of two modalities, making the fusion smoother.

Input duration of ECG segments

We verified the effect on model performance regarding the duration of input ECG segments in the CinC 2017 dataset. The experimental results are shown in Table 9. We set the duration of the input to [5s, 10s, 15s, 20s, 25s, 30s], and the corresponding sampling points to [1500, 3000, 4500, 6000, 7500, 9000]. Since the minimum length of ECG signals in the CinC 2017 dataset is 9 seconds, the signals are padded with zero when the input is larger than the original signal length. The performance of the model improves as the input duration increases and reaches its optimization at 15 seconds as well as 20 seconds of input, which then decreases. The 15-second input duration is better than the 20-second on average F1 score and presents the best results in the detection of noise class. Therefore the inputs to the model were set to 4500 sampling points.

TSPS-Net

Table 10 shows the experiment result of our proposed TSPS-Net on the CinC 2017 dataset and MIT-BIH AF dataset. In the experiment on the CinC 2017 dataset, KE-MPSNet performs very well in both 3-classes and 4-classes classification tasks. First, with the assistance of noise filter module, the classification precision of the noise class can be achieved at 100\(\%\). Second, our classification precision in the N class can achieve 83.51\(\%\), 83.71\(\%\), and 98.96\(\%\), because the spatial branch in TSPS-Net can grasp the global state. Since the N class depends on the normal performance of all heartbeats, the model must be capable of extracting contextual features effectively. Moreover, the overall accuracy of KE-MPSNet on the MIT-BIH dataset can achieve 99.11\(\%\), indicating that our spatiotemporal fusion method can effectively distinguish AF and AFL, and has excellent spatiotemporal feature fusion capability. Furthermore, the MCC and Kappa of the experiment results are computed. As shown in the table, it can be seen that the MCC values of KE-MPSNet are greater than 0 for all classes and greater than 0.5 in most cases. In the AF class of the MIT-BIH AF dataset, the MCC value reaches 0.999, which is close to perfect prediction. From the Kappa indices of the experimental results, it can be seen that KE-MPSNet shows good classification consistency on all three tasks of the two datasets, especially on the MIT-BIH AF dataset, with a Kappa index of 0.979. All the Kappa values are higher than 0.6, which means that there is substantial consistency and the classification results are reliable. From the above metrics, our proposed model has a good advantage in discriminating the noise class as well as the AF class, and this detection is trustworthy. This is because the ___domain knowledge we introduced is applicable for noise as well as for AF rhyme, making the model more sensitive for these classes.

Knowledge embedded noise filter module

We verify the classification performance of the noise filter module and the results are demonstrated in Table 6. In this module, manual features are used to determine whether a signal is noise or a distinguishable signal. As shown in Table 11, the precision of distinguishable signals is 91.52\(\%\), indicating that the generalization ability of the module is excellent. We also find that the performance of the noise class is not as good as distinguishable signals. This is because these signals are classified as noise in data annotation, but they are not pure noise and still retain some morphological characteristics of an ECG signal.

Comparative experiment

We have set up the baseline comparative experiment and SOTA comparative experiment. In the baseline comparative experiment, KE-MPSNet is compared with FCN_Wang43, Resnet_Wang43, LSTM50, BiLSTM53, and CNN+ LSTM54. In comparison with the SOTA model, KE-MPSNet is compared with Densenet12155, MobileNet Small56, MobileNet Large56, InceptionNet57, EfficientNet58, BSK_Model59, BIT-CNN62, and the other two models60,61. We compare the performance of the above model using accuracy, F1-score, accuracy, and sensitivity.

The experimental result is shown in Table 12. KE-MPSNet performed best on all two datasets. It can be found that KE-MPSNet achieves excellent performance. Compared with EffcientNet, the F1-score is increased by 8\(\%\), the accuracy is increased by 5.47\(\%\), the precision is increased by 11.88\(\%\), and the sensitivity is increased by 5.67\(\%\) on the CinC 2017 dataset. On the MIT-BIH AF dataset, KE-MPSNet also performs very well. Compared with MobileNet-S56, which is the highest among other models, the accuracy of KE-MPSNet is improved by 0.08\(\%\) and the precision is improved by 0.46\(\%\). This shows that KE-MPSNet performs better than other SOTA models in classification tasks. InceptionTime is a SOTA model for time series prediction that integrates multiple inception modules. Each inception module has the same architecture but different random initialization weights, which can alleviate the class deviation problem in the training stage. However, KE-MPSNet’s excellent global feature extraction ability makes our performance better. EfficientNet uses NAS to uniformly scale the network’s depths, widths, and dimensions, allowing the model to achieve better performance. However, KE-MPSNet performs multimodal processing for one-dimensional ECG signals, which enriches learnable features from different perspectives. So KE-MPSNet performs even better. The sensitivity and F1-score of the MobileNet-S are slightly higher than our proposed model, which is due to the slightly lower classification success rate of KE-MPSNet in the AFL class in the MIT-BIH AF dataset. However, KE-MPSNet’s ability to classify normal ECG signals is better than MobileNet-S. This is due to the spatial branch of TSPS-Net, which makes it better at capturing global ECG information features. In comparison with BSK_Model, KE-MPSNet demonstrates a lead in accuracy as well as precision. In comparison with the model proposed by Tanaka et al., KE-MPSNet demonstrates an advantage in all metrics. Compared to the BSK_Model and Tanaka et al. proposed model, TSPS-Net performs feature extraction in both temporal and frequency domains, so its accuracy and precision will be higher. On the CinC 2017 dataset, our proposed model outperforms the model proposed by Zhang et al. as well as BIT-CNN. Compared to BIT-CNN, KE-MPSNet can discriminate noise well with the help of knowledge embedded, moreover, TSPS-Net can also utilize temporal ___domain as well as frequency ___domain information very well, which explains the superior performance of KE-MPSNet.

Exploration of potential value

We additionally validated the performance of the model using the post-covid dataset (Chapman dataset). In this experiment, we used classification accuracy, sensitivity, F1 score, and specificity to measure the detection performance for each category. MCC, Kappa index, and overall accuracy were also used to measure the overall model performance. The experiment results are shown in Table 13. The validation of the model for the post-covid dataset achieved good results in discriminating between AF and AFL signals, and our model has 100\(\%\) accuracy in detecting AFL. Meanwhile, the Kappa index shows that the detection results from KE-MPSNet are statistically consistent. From the experimental results, it can be seen that KE-MPSNet performs well in the face of both the pre-covid and post-covid datasets. This indicates that KE-MPSNet has good AF detection ability for pre-covid and post-covid ECG signals.

Conclusion

In this paper, a multimodal ECG classification model embedded with ___domain knowledge is proposed, which adopts a parallel architecture to reduce the mutual interference between the two modal features and separate the feature extraction process to reduce the potential mutual interference between different modalities. Then, we propose a spatiotemporal feature fusion mechanism combining dimensional characteristics for feature fusion tasks. At the same time, we also integrate diagnostic knowledge into the model in the form of statistical values to increase the credibility of the features. We verify the effectiveness of the proposed model through experiments conducted on both the CinC 2017 dataset and the MIT-BIH AF dataset. The experimental results and statistical analyses demonstrate that our proposed framework effectively utilizes ___domain knowledge, providing an approach for uniformly quantifying it. Moreover, the model exhibits robust performance under noise interference, underscoring the role of ___domain knowledge in enhancing its resilience to such perturbations.

Limitations of this study

In this study, we explore how ___domain knowledge can be utilized to better perform the task of atrial fibrillation detection in scenarios where noise is present. And we also investigate about how to effectively handle spatial-temporal feature integration during the multimodal fusion process. However, the interference of noise is highly stochastic and disorganized, and this study has not yet addressed the performance of the model under different noise levels. In the future, we will investigate the impact of varying noise interference levels on the performance of AF classification model and integrate the model into a portable wristband.

Data availability

The datasets used in this article are the CinC 2017 Challenge dataset48, Chapman dataset63, and MIT-BIH AF dataset49, and they can be found at https://physionet.org/content/challenge-2017/1.0.0/, https://physionet.org/content/ecg-arrhythmia/1.0.0/, and https://physionet.org/content/afdb/1.0.0/.

Code availability

Readers can find our code through the following link (DOI:https://doi.org/10.5281/zenodo.14609536). Readers are welcome to contact the corresponding author if any questions.

References

Hagiwara, Y. et al. Computer-aided diagnosis of atrial fibrillation based on ecg signals: A review. Inf. Sci. 467, 99–114 (2018).

Nogueira-Garcia, B., Alves, M., Pinto, F. J. & Caldeira, D. The association between asthma and atrial fibrillation: systematic review and meta-analysis. Sci. Rep. 14, 2241 (2024).

Elliott, A. D., Middeldorp, M. E., Van Gelder, I. C., Albert, C. M. & Sanders, P. Epidemiology and modifiable risk factors for atrial fibrillation. Nat. Rev. Cardiol. 20, 404–417 (2023).

Hart, R. G. & Halperin, J. L. Atrial fibrillation and stroke: concepts and controversies. Stroke 32, 803–808 (2001).

Almusallam, M. & Soudani, A. Embedded solution for atrial fibrillation detection using smart wireless body sensors. IEEE Sens. J. 19, 5740–5750 (2019).

Andersen, R. S., Peimankar, A. & Puthusserypady, S. A deep learning approach for real-time detection of atrial fibrillation. Expert Syst. Appl. 115, 465–473 (2019).

Naser, J. A. et al. Secondary tricuspid regurgitation: incidence, types, and outcomes in atrial fibrillation vs. sinus rhythm. Eur. Heart J. ehae346 (2024).

Xie, L., Li, Z., Zhou, Y., He, Y. & Zhu, J. Computational diagnostic techniques for electrocardiogram signal analysis. Sensors 20, 6318 (2020).

Kirchhof, P. et al. 2016 esc guidelines for the management of atrial fibrillation developed in collaboration with eacts. Polish Heart J. 74, 1359–1469 (2016).

Lip, G. Y. et al. Atrial fibrillation and stroke prevention: 25 years of research at ep europace journal. Europace25, euad226 (2023).

Shao, M. et al. Detection of atrial fibrillation from ecg recordings using decision tree ensemble with multi-level features. Physiol. Meas. 39, 094008 (2018).

Kung, B.-H. et al. An efficient ecg classification system using resource-saving architecture and random forest. IEEE J. Biomed. Health Inform. 25, 1904–1914 (2020).

Raj, S. & Ray, K. C. Ecg signal analysis using dct-based dost and pso optimized svm. IEEE Trans. Instrum. Meas. 66, 470–478 (2017).

Kumari, L. et al. Classification of ecg beats using optimized decision tree and adaptive boosted optimized decision tree. SIViP 16, 695–703 (2022).

Venkatesan, C., Karthigaikumar, P. & Varatharajan, R. A novel lms algorithm for ecg signal preprocessing and knn classifier based abnormality detection. Multimed. Tools Appl. 77, 10365–10374 (2018).

Zvuloni, E., Read, J., Ribeiro, A. H., Ribeiro, A. L. P. & Behar, J. A. On merging feature engineering and deep learning for diagnosis, risk prediction and age estimation based on the 12-lead ecg. IEEE Trans. Biomed. Eng. 70, 2227–2236 (2023).

Baloglu, U. B., Talo, M., Yildirim, O., San Tan, R. & Acharya, U. R. Classification of myocardial infarction with multi-lead ecg signals and deep cnn. Pattern Recogn. Lett. 122, 23–30 (2019).

Singh, S., Pandey, S. K., Pawar, U. & Janghel, R. R. Classification of ecg arrhythmia using recurrent neural networks. Proc. Comput. Sci. 132, 1290–1297 (2018).

Fang, B. et al. Dual-channel neural network for atrial fibrillation detection from a single lead ecg wave. IEEE J. Biomed. Health Inform. 27, 2296–2305 (2021).

Cao, P. et al. A novel data augmentation method to enhance deep neural networks for detection of atrial fibrillation. Biomed. Signal Process. Control 56, 101675 (2020).

Pokaprakarn, T. et al. Sequence to sequence ecg cardiac rhythm classification using convolutional recurrent neural networks. IEEE J. Biomed. Health Inform. 26, 572–580 (2021).

Huang, Y., Li, H. & Yu, X. A novel time representation input based on deep learning for ecg classification. Biomed. Signal Process. Control 83, 104628 (2023).

Xie, X., Liu, H., Chen, D., Shu, M. & Wang, Y. Multilabel 12-lead ecg classification based on leadwise grouping multibranch network. IEEE Trans. Instrum. Meas. 71, 1–11 (2022).

Yao, Q., Wang, R., Fan, X., Liu, J. & Li, Y. Multi-class arrhythmia detection from 12-lead varied-length ecg using attention-based time-incremental convolutional neural network. Inf. Fusion 53, 174–182 (2020).

Ma, C. et al. Integration of results from convolutional neural network in a support vector machine for the detection of atrial fibrillation. IEEE Trans. Instrum. Meas. 70, 1–10 (2020).

Pan, X. et al. Deep cross-modal feature learning applied to predict acutely decompensated heart failure using in-home collected electrocardiography and transthoracic bioimpedance. Artif. Intell. Med. 140, 102548 (2023).

Zhang, J. et al. Mlbf-net: A multi-lead-branch fusion network for multi-class arrhythmia classification using 12-lead ecg. IEEE J. Transl. Eng. Health Med. 9, 1–11 (2021).

Acharya, U. R. et al. Automated detection of arrhythmias using different intervals of tachycardia ecg segments with convolutional neural network. Inf. Sci. 405, 81–90 (2017).

Luo, J., Sarica, S. & Wood, K. L. Guiding data-driven design ideation by knowledge distance. Knowl.-Based Syst. 218, 106873 (2021).

Liu, Y., Zou, X., Ma, S., Avdeev, M. & Shi, S. Feature selection method reducing correlations among features by embedding ___domain knowledge. Acta Mater. 238, 118195 (2022).

Wu, H., Yan, Y., Ye, Y., Ng, M. K. & Wu, Q. Geometric knowledge embedding for unsupervised ___domain adaptation. Knowl.-Based Syst. 191, 105155 (2020).

Li, H. et al. Clinical knowledge-based ecg abnormalities detection using dual-view cnn-transformer and external attention mechanism. Comput. Biol. Med. 178, 108751 (2024).

Ge, Z. et al. A knowledge-driven graph convolutional network for abnormal electrocardiogram diagnosis. Knowl.-Based Syst. 296, 111906 (2024).

Gandhi, A., Adhvaryu, K., Poria, S., Cambria, E. & Hussain, A. Multimodal sentiment analysis: A systematic review of history, datasets, multimodal fusion methods, applications, challenges and future directions. Inf. Fusion 91, 424–444 (2023).

Ahmad, Z., Tabassum, A., Guan, L. & Khan, N. M. Ecg heartbeat classification using multimodal fusion. IEEE Access 9, 100615–100626 (2021).

Mathunjwa, B. M., Lin, Y.-T., Lin, C.-H., Abbod, M. F. & Shieh, J.-S. Ecg arrhythmia classification by using a recurrence plot and convolutional neural network. Biomed. Signal Process. Control 64, 102262 (2021).

Chen, L., Lian, C., Zeng, Z., Xu, B. & Su, Y. Cross-modal multiscale multi-instance learning for long-term ecg classification. Inf. Sci. 643, 119230 (2023).

Yang, S. et al. A multi-view multi-scale neural network for multi-label ecg classification. IEEE Trans. Emerg. Topics Comput. Intell. 7, 648–660 (2023).

Kim, Y. K., Lee, M., Song, H. S. & Lee, S.-W. Automatic cardiac arrhythmia classification using residual network combined with long short-term memory. IEEE Trans. Instrum. Meas. 71, 1–17 (2022).

Han, H. et al. Multimodal multi-instance learning for long-term ecg classification. Knowl.-Based Syst. 270, 110555 (2023).

Panchal, R., Tiwari, S. & Agarwal, S. Multimodal image fusion on ecg signals for congestive heart failure classification. Multimed. Tools Appl. 1–13 (2024).

Qiu, Y. et al. Deep learning-based multimodal fusion of the surface ecg and clinical features in prediction of atrial fibrillation recurrence following catheter ablation. BMC Med. Inf. Decis. Mak.24 (2024).

Wang, Z., Yan, W. & Oates, T. Time series classification from scratch with deep neural networks: A strong baseline. In 2017 International joint conference on neural networks (IJCNN), 1578–1585 (2017).

Pan, J. & Tompkins, W. J. A real-time qrs detection algorithm. IEEE Trans. Biomed. Eng. 230–236 (1985).

Zhang, D. et al. An efficient ecg denoising method based on empirical mode decomposition, sample entropy, and improved threshold function. Wirel. Commun. Mob. Comput. 2020, 1–11 (2020).

Liou, J.-W. et al. Ecg approximate entropy in the elderly during cycling exercise. Sensors 22, 5255 (2022).

Zhang, Y., Li, J., Wei, S., Zhou, F. & Li, D. Heartbeats classification using hybrid time-frequency analysis and transfer learning based on resnet. IEEE J. Biomed. Health Inform. 25, 4175–4184 (2021).

Clifford, G. D. et al. Af classification from a short single lead ecg recording: The physionet/computing in cardiology challenge 2017. In 2017 Computing in Cardiology (CinC), 1–4 (2017).

Moody, G. A new method for detecting atrial fibrillation using rr intervals. Proc. Comput. Cardiol. 10, 227–230 (1983).

Zhang, X. et al. Detection of atrial fibrillation from variable-duration ecg signal based on time-adaptive densely network and feature enhancement strategy. IEEE J. Biomed. Health Inform. 27, 944–955 (2022).

Ilse, M., Tomczak, J. & Welling, M. Attention-based deep multiple instance learning. In International conference on machine learning, 2127–2136 (2018).

Nejedly, P. et al. Classification of ecg using ensemble of residual cnns with or without attention mechanism. Physiol. Meas. 43, 044001 (2022).

Saadatnejad, S., Oveisi, M. & Hashemi, M. Lstm-based ecg classification for continuous monitoring on personal wearable devices. IEEE J. Biomed. Health Inform. 24, 515–523 (2019).

Petmezas, G. et al. Automated atrial fibrillation detection using a hybrid cnn-lstm network on imbalanced ecg datasets. Biomed. Signal Process. Control 63, 102194 (2021).

Krasteva, V., Christov, I., Naydenov, S., Stoyanov, T. & Jekova, I. Application of dense neural networks for detection of atrial fibrillation and ranking of augmented ecg feature set. Sensors 21, 6848 (2021).

Howard, A. G. et al. Mobilenets: Efficient convolutional neural networks for mobile vision applications. arXiv preprint[SPACE]arXiv:1704.04861 (2017).

Finding alexnet for time series classification. Ismail Fawaz, H. et al. Inceptiontime. Data Min. Knowl. Disc. 34, 1936–1962 (2020).

Tan, M. & Le, Q. Efficientnet: Rethinking model scaling for convolutional neural networks. In International conference on machine learning, 6105–6114 (2019).

Wan, X. et al. A novel atrial fibrillation automatic detection algorithm based on ensemble learning and multi-feature discrimination. Med. Biol. Eng. Comput. 62, 1809–1820 (2024).

Kamozawa, H., Muroga, S. & Tanaka, M. A detection method of atrial fibrillation from 24-hour holter-ecg using cnn. IEEJ Trans. Electr. Electron. Eng. 18, 577–582 (2023).

Zhang, P. et al. Automatic screening of patients with atrial fibrillation from 24-h holter recording using deep learning. Eur. Heart J. Digit. Health 4, 216–224 (2023).

Lee, H. & Shin, M. Learning explainable time-morphology patterns for automatic arrhythmia classification from short single-lead ecgs. Sensors 21, 4331 (2021).

Zheng, J. et al. A 12-lead electrocardiogram database for arrhythmia research covering more than 10,000 patients. Sci. Data 7, 48 (2020).

Acknowledgements

This research is supported by the Industrial Technology Research and Development Special Project of Jilin Provincial Development and Reform Commission in 2023 (No. 2023C042-6) and the Key Project of Science and Technology Research Plan of Jilin Provincial Department of Education in 2023 (No.JJKH20230763KJ).

Author information

Authors and Affiliations

Contributions

C.L. proposed the idea and design. C.L. wrote the original draft. H.L. reviewed and edited the draft. P.S. and C.P. conducted the experiments and analyzed the data. All authors contributed to the final draft.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Lin, C., Lu, H., Sang, P. et al. A knowledge embedded multimodal pseudo-siamese model for atrial fibrillation detection. Sci Rep 15, 3133 (2025). https://doi.org/10.1038/s41598-025-87115-3

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-025-87115-3