Abstract

This study is based on the YOLOv7 object detection framework and conducts comparative experiments on early fusion, halfway fusion, and late fusion for multispectral pedestrian detection tasks. Traditional pedestrian detection tasks typically use image data from a single sensor or modality. However, in the field of multispectral remote sensing, fusing multi-source data is crucial for improving detection performance. This study aims to explore the impact of different fusion strategies on multispectral object detection performance and identify the most suitable fusion approach for multispectral data. Firstly, we implemented early fusion experiments by merging multispectral data with visible light data at the network’s input layer. Next, halfway fusion experiments were conducted, merging multispectral data and visible light data at the network’s middle layers. Finally, late fusion experiments were performed by merging multispectral data and visible light data at the network’s high layers. A comprehensive comparison of the experimental results for various fusion strategies reveals that the halfway fusion strategy exhibits outstanding performance in multispectral pedestrian detection tasks, achieving high detection accuracy and relatively fast speed.

Similar content being viewed by others

Introduction

Pedestrian detection is a crucial problem in the field of computer vision, aiming to automatically identify and detect the presence of pedestrians in images or videos. With the growing demand for urban traffic management and security surveillance, pedestrian detection technology has extensive application prospects in intelligent transportation systems, security monitoring, and intelligent robotics. Research on pedestrian detection began in the 1990s, primarily relying on feature-based classification methods, such as edge and contour-based features and Haar features. However, these methods suffer from significant limitations in dynamic and complex environments due to issues like illumination changes, occlusions, and varying pedestrian appearances1. In recent years, deep learning methods, particularly Convolutional Neural Networks (CNNs), have revolutionized pedestrian detection by enabling the automatic extraction of features from images, which improves accuracy and robustness. Nonetheless, even with CNN-based methods such as YOLO and Faster R-CNN, problems like low detection accuracy in challenging environments (e.g., poor lighting or occlusions) and high computational costs persist2.

To address these challenges, this paper proposes a new approach for multispectral pedestrian detection based on the YOLOv7 framework. Given the complementary nature of RGB and infrared (IR) images, combining these modalities has the potential to enhance detection accuracy, particularly in challenging conditions3. Specifically, the goal is to explore various feature fusion strategies—early, halfway, and late fusion—and evaluate their effectiveness in multispectral pedestrian detection. By focusing on the fusion of features from different spectra, this work aims to improve detection robustness and efficiency while reducing computational complexity compared to traditional methods4.

Additionally, recent works based on Transformer architectures have demonstrated significant improvements in feature extraction and fusion capabilities, prompting a need to compare such methods against our proposed approach. These Transformer-based methods provide compelling evidence of superior performance, especially in capturing long-range dependencies within images5. Therefore, comparing our approach to Transformer-based models can shed light on their respective strengths and weaknesses, particularly with respect to detection accuracy and computational cost6.

This study focuses on the task of object detection in multispectral remote sensing images based on the YOLOv7 framework. A series of systematic experiments were conducted to investigate various fusion strategies, including early fusion, halfway fusion, and late fusion, to determine the most suitable approach for multispectral data. This research aims to improve the performance of multispectral remote sensing image object detection while providing critical insights into resource optimization and performance requirements in practical applications7.

In the early fusion experiments, multispectral and visible light data were integrated at the input layer of the neural network. This strategy allowed the exploration of low-level feature information from both modalities but exhibited certain deficiencies in capturing high-level semantic information8. Previous studies have explored similar fusion methods and identified early fusion as a straightforward, albeit sometimes suboptimal, strategy for object detection in multispectral settings9.

Halfway fusion experiments fused multispectral and visible light data at the middle layers of the network, effectively combining semantic information from both modalities and significantly enhancing detection performance10. This approach has been widely studied and applied in the context of multispectral pedestrian detection, with notable success in reducing the influence of environmental variables such as illumination changes and occlusions11.

Late fusion experiments merged multispectral and visible light data at the high layers of the network. The results indicated that the late fusion strategy achieved higher accuracy in target detection but at the potential expense of detection speed12. Researchers have shown that late fusion can be effective in increasing detection precision in complex scenarios, especially when dealing with occlusions or low-contrast regions13.

A comprehensive comparison of the experimental results revealed that the halfway fusion strategy outperformed other approaches in multispectral object detection tasks, offering high detection accuracy and relatively fast speeds14. This study provides a robust method for target detection in multispectral remote sensing images and holds significant implications for resource optimization and performance requirements in practical applications15. Furthermore, advancements in deep learning frameworks, such as the YOLO family, have demonstrated significant progress in real-time pedestrian detection, highlighting the importance of efficient network architectures in such applications16.

Future research can further explore different fusion mechanisms and network architectures to enhance the performance and efficiency of multispectral object detection17. Such advancements are expected to better meet the demands for high-quality remote sensing data analysis in scientific and engineering fields, providing essential guidance for the development and application of remote sensing image processing18.

Related work

Pedestrian detection methods can be categorized from multiple perspectives. Based on the image source, these methods are classified into visible light detection, thermal imaging detection, and multispectral detection, which combines both visible light and thermal images to leverage their complementary features for improved detection performance19. Recent research has shown that multispectral pedestrian detection, which integrates information from both modalities, can significantly enhance detection accuracy, especially in challenging conditions such as low-light environments or adverse weather conditions20. These methods have evolved over time to address challenges such as occlusions and variations in pedestrian appearances, with recent advancements utilizing attention mechanisms and cross-modality fusion techniques to further improve detection performance21.

Pedestrian detection based on different image sources

Pedestrian detection is an important research direction in computer vision, with applications in fields such as intelligent transportation and surveillance. Based on the image source, pedestrian detection methods can be categorized into visible light detection, thermal imaging detection, and multispectral detection.

Traditional visible light detection methods rely on features such as image brightness, texture, and shape. With the rise of deep learning, pedestrian detection methods based on Convolutional Neural Networks (CNNs) have significantly improved detection performance. For example, frameworks such as Faster R-CNN and YOLO have achieved excellent results on standard visible light datasets, such as Caltech and City Persons22. However, these methods exhibit significant performance degradation in scenarios involving large illumination changes, complex environments (e.g., nighttime or rainy weather), and other challenging conditions, making them unsuitable for real-world applications in such contexts23.

Thermal imaging methods capture infrared radiation emitted by objects, offering strong adaptability in low-light and extreme weather conditions, and have gained considerable attention in recent years24. Studies have shown that thermal imaging can effectively improve detection capability in occluded scenes. However, due to the low resolution and lack of rich texture information in infrared images, detection performance remains limited in complex backgrounds25.

To overcome the limitations of single-modal methods, multispectral pedestrian detection combines the advantages of both visible light and thermal infrared images, leveraging the complementary nature of multimodal information to enhance robustness. The introduction of the KAIST multispectral dataset has provided foundational support for this field26. Researchers have explored various feature fusion strategies to improve detection performance. For example, Konig et al. proposed a simple feature concatenation fusion method, but it did not fully exploit the inter-modal relationships27. Although multispectral detection methods offer significant advantages in improving detection performance, efficiently fusing multimodal features while balancing inference speed and accuracy remains a key challenge in current research28.

Feature fusion-based pedestrian detection methods

Multimodal feature fusion plays a crucial role in multispectral pedestrian detection. The current feature fusion methods are mainly categorized into early fusion, halfway fusion, and late fusion.

Early Fusion directly concatenates or overlays multimodal data at the input stage, allowing the network to learn inter-modal interaction information in the initial phase. This approach is computationally simple but is easily affected by modal imbalances, making it difficult to capture deep semantic features29.

In Halfway Fusion, multimodal features are fused at intermediate network layers using attention mechanisms or convolution operations. This method better balances the detail information of low-level features with the semantic expression of high-level features. A representative method of this type is the Cross Modality Fusion network proposed by Zhang et al. which alleviates the modality mismatch issue by facilitating interaction at specific layers across modalities30.

Late Fusion involves weighted fusion of the detection results from each modality at the detection head or output stage. While simple to implement, it fails to fully leverage the feature-level complementary information between modalities31.

Recent research trends indicate that halfway fusion strikes a good balance between performance and efficiency, which is why it is widely adopted32. However, existing halfway fusion methods do not sufficiently address the differences between modalities, limiting further improvements in detection accuracy and robustness33.

Transformer-based pedestrian detection methods

The success of Transformer architectures has opened new directions for pedestrian detection, with the core self-attention mechanism enabling the capture of global contextual information. This ability offers significant advantages for object detection in complex scenes. In the field of multispectral pedestrian detection, Transformer-based methods have also gained increasing attention34.

Kim et al. proposed the MLPD method, which utilizes Transformer models to capture long-range dependencies in multispectral images and improve fusion performance through modality alignment strategies35. Zhang et al. further proposed a fusion network based on cross-attention mechanisms, incorporating feature-level modality alignment modules to achieve higher detection accuracy in occluded and complex background scenarios36. Shen et al. introduced the ICA-Fusion framework, which improves feature fusion through iterative cross-attention mechanisms, significantly enhancing detection ability in complex backgrounds37. Lee et al. also proposed a multimodal pedestrian detection method based on cross-modal reference search, which further enhances detection accuracy through fine-grained reference search across modalities38. Despite the accuracy advantages of Transformer-based methods, their high computational cost and slow inference speed limit their applicability in real-time scenarios39.

Each of these methods has its advantages and limitations, and researchers choose the appropriate method based on specific application scenarios to enhance the accuracy and robustness of pedestrian detection. For instance, traditional visible light detection methods excel under favorable lighting conditions but struggle in low-light or complex environments, where thermal imaging demonstrates superior adaptability. However, its limited resolution and lack of texture information pose challenges in intricate scenarios, driving the development of multispectral detection techniques. Recent approaches, such as those employing advanced feature fusion strategies like iterative cross-attention and cross-modality reference search, have effectively leveraged the complementary strengths of visible and thermal imaging to achieve improved detection results.

Building on these advancements, this study proposes a novel approach based on the YOLOv7 framework, conducting comparative experiments on early, halfway, and late fusion strategies for multispectral pedestrian detection. The halfway fusion strategy, which merges multispectral and visible light data at intermediate network layers, was found to deliver the best performance, offering a robust balance of high detection accuracy and computational efficiency. This method not only addresses the challenges of multispectral feature integration but also surpasses many existing methods in terms of practical applicability, particularly in complex detection scenarios.

Proposed method

Multispectral feature fusion strategy

This network employs YOLOv7 as the foundational framework to achieve rapid and accurate multispectral pedestrian detection. YOLOv7, a deep convolutional neural network, is renowned for its exceptional object detection performance. The network architecture is based on the YOLO series models, yet it incorporates significant innovations and improvements in its design. This model utilizes a series of strategies to enhance the accuracy and efficiency of object detection.

The architecture of YOLOv7 is designed with a deeply scalable and modular approach, ingeniously integrating convolutional layers and feature extraction modules of various scales. This design philosophy enables the network to perceive and predict objects at multiple scales, significantly enhancing the robustness and accuracy of detection. In addition to the architectural innovations, YOLOv7 incorporates attention mechanisms and multi-scale fusion strategies. These techniques allow the network to better focus on critical features, thereby further improving detection precision. During the training and optimization phases, YOLOv7 employs a series of advanced techniques. These include improved data augmentation methods, optimized loss functions, and efficient training strategies. The combination of these techniques enables YOLOv7 to adeptly handle object detection tasks across various scenarios and scales. Moreover, YOLOv7 achieves optimized inference speed while maintaining high accuracy. This is primarily attributed to its optimized network structure and computational efficiency. Consequently, YOLOv7 is an ideal choice for real-time object detection and large-scale data processing tasks.

This study specifically addresses the issue of multimodal data fusion, particularly when RGB images and thermal images are used as inputs. To fully exploit the information from both modalities, we constructed a cross-modal fusion network based on YOLOv7. This network comprises a shallow sub-network and a deep sub-network. The shallow sub-network is responsible for extracting simple geometric features, while the deep sub-network generates rich semantic information. By appropriately fusing features from different layers, the network achieves superior multimodal data fusion performance. RGB images and thermal images are separately input into the two sub-networks, which handle feature extraction and fusion accordingly. This approach allows us to fully utilize the information from both modalities, further enhancing the accuracy and robustness of object detection. Figure 1 illustrates the YOLOv7 network architecture.

The fusion framework simultaneously employs the MCSF (Multi-Scale Cross-Spectrum Fusion) module and the CFCM module (Cross-Modal Feature Complementary Module) to achieve superior detection performance. Since all modules are integrated into the network and trained end-to-end, the loss function is defined as follows:

Early fusion, mid-fusion, and late fusion are commonly used feature fusion strategies in the field of multispectral image processing and object detection. These strategies are employed to merge image information from different bands or modalities to enhance target detection performance. Below is a brief introduction to these fusion strategies:

Early fusion is a strategy where multispectral data and visible light data are combined at the input layer of the neural network. This means that multispectral and visible light images are merged at the pixel level into a single input before processing. This approach allows the neural network to simultaneously process information from multiple bands, including low-level features. Early fusion is typically capable of capturing low-level feature information from both multispectral and visible light data, but it may have certain limitations in capturing high-level semantic information. Figure 2 illustrates the integration of early fusion into the YOLOv7 backbone.

The mid-fusion strategy merges multispectral data and visible light data at the intermediate layers of the neural network, typically within the convolutional or pooling layers. This method allows the multispectral and visible light data to interact at a certain depth within the network, thereby better combining their semantic information. Mid-fusion often improves detection performance by capturing higher-level information within the neural network. Figure 3 illustrates the integration of mid-fusion into the YOLOv7 backbone.

Late fusion is a strategy that merges multispectral data and visible light data at the higher layers of the neural network, usually after feature extraction. This method can achieve higher accuracy in object detection because it allows the features from each modality to be fused after being independently extracted, thereby fully leveraging the advantages of each modality. However, late fusion might sacrifice some detection speed. Figure 4 illustrates the integration of late fusion into the YOLOv7 backbone.

Multi-spectral feature fusion module

CFCM module

Under good lighting conditions, RGB images provide detailed information and contour details. On the other hand, thermal images are less affected by lighting conditions and provide clear pedestrian contour information. To enable the detection network to achieve all-weather pedestrian detection, the CFCM module facilitates the interaction of features from both modalities. By utilizing complementary information provided by the other modality, the CFCM module can partially recover objects lost in one modality, thereby reducing object loss and conveying more information.

The CFCM module operates by converting features from different modalities into the other modality during feature extraction, thereby obtaining complementary information from the other modality. This allows both modalities to learn more complementary features. The working principle of the CFCM module is as follows: First, the channel differential weighting method is used to obtain the differential features of the two modality feature maps. Second, different features from different modalities are amplified and fused with features from the other modality. Finally, to enable the network to focus on important features, channel attention operations are performed on the feature maps of both modalities, and the features are fused. According to previous studies, the specific workflow of the CFCM module is as follows:

Which, \({F}_{R}\in {\mathbb{R}}^{C\times H\times W}\) represents the RGB convolutional feature map, while \({F}_{T}\in {\mathbb{R}}^{C\times H\times W}\) represents the thermal convolutional feature map. \({F}_{D}\in {\mathbb{R}}^{C\times H\times W}\) is obtained through channel-wise differential weighting of these two features.

Which, GAP denotes global average pooling, σ represents the sigmoid activation function, and \(\odot\) denotes element-wise multiplication. \({F}_{TD}\in {\mathbb{R}}^{C\times H\times W}\) and \({F}_{RD}\in {\mathbb{R}}^{C\times H\times W}\) are obtained through feature enhancement, suppression, and fusion with \({F}_{R}\).

Which, \(\left|\right|\) represents channel-wise concatenation operation, \(\oplus\) denotes element-wise addition, and\(\mathcal{F}\left( \right)\) represents the residual function. \({{F}^{{\prime }}}_{T}\in {\mathbb{R}}^{C\times H\times W}\) is the fusion of \(\mathcal{F}\left({F}_{T}\parallel {F}_{RD}\right)\in {\mathbb{R}}^{2C\times H\times W}\) and \({F}_{T}\) after feature enhancement. \({{F}^{{\prime }}}_{R}\in {\mathbb{R}}^{C\times H\times W}\) is the fusion of \(\mathcal{F}\left({F}_{R}\parallel {F}_{TD}\right)\in {\mathbb{R}}^{2C\times H\times W}\) and \({F}_{R}\) after feature enhancement. Through these operations, the complementary and fused information from both modalities is obtained. This enriched feature map is then sent to the network for further feature extraction. Figure 5 illustrates the structure of the CFCM module.

MCSF module

In multispectral pedestrian detection, the fusion of data from different modalities is a crucial step. An effective fusion method should be able to supervise the integration of information from various modalities to enhance the detector’s performance. Existing multispectral feature fusion methods mainly include the SUM and MIN methods. The SUM operator represents the element-wise summation of features, which can be considered a linear feature fusion with equal weights. The MIN method involves performing a 1 × 1 convolution to reduce the dimensionality of the concatenated multimodal features; this is an unsupervised nonlinear feature fusion. Therefore, it is reasonable to design a feature fusion method that uses illumination conditions as a supervisory condition.

Under different lighting conditions, the roles of color images and thermal images vary. Apart from specific cases (such as standing in the shadows), most pedestrians are well-illuminated during the day; however, thermal images are not sensitive to lighting. Conversely, thermal imaging can better capture the visual characteristics of pedestrians at night. Therefore, it is logical to design a supervised feature fusion method using illumination. As shown in Fig. 6, we introduce the MCSF module, which can adaptively adjust the channel features between color and thermal modalities to achieve optimal fusion under different lighting conditions. Implementing the MCSF requires three steps. In the first stage, the MCSF concatenates features from the color and thermal modalities along the channel dimension. Then, in the second stage, it uses learned weights to adaptively aggregate these features.

In the first step, features from the color (C) and thermal (T) branches are connected in the channel dimension. The connected feature mapping can be represented as.

where Ci and Ti are defined as the color and thermal characteristics of the i-th layer. \({C}_{i},{T}_{i}\in {\mathbb{R}}^{\frac{H}{r}\times \frac{W}{r}\times C}\)

In the second step, the channel feature vector F1 is generated using Global Average Pooling (GAP) and Global Maximum Pooling (GMP). The formula for the c-th channel element in GAP and GMP is as follows.

Then, a new compact feature F2 is created that adaptively learns the fusion weights of the color and thermal features. This is achieved by having a fully connected (FC) layer with lower dimensionality.

which \({F}^{1}\in {\mathbb{R}}^{C},{F}^{2}\in {\mathbb{R}}^{{C}^{{\prime }}},{C}^{{\prime }}=max\left(C/r,L\right)\) is a typical setup in our experiments.

In addition, softmax is used for normalization and the learned weights \({\alpha }_{c}\) and \({\beta }_{c}\) are used to select the corresponding level features for the final fusion Fc. Note that \({\alpha }_{c}\) and \({\beta }_{c}\) are just the scalar values of channel c and \({\alpha }_{c}{,\beta }_{c}\in \left[\text{0,1}\right]\).

Here, \({F}_{c}\in {\mathbb{R}}^{\frac{H}{r}\times \frac{W}{r}},{\alpha }_{c}+{\beta }_{c}=1,A,B\in {\mathbb{R}}^{{C\times C}^{{\prime }}}\). This method adaptively aggregates features at all levels for each scale. The output of MCSF can serve as input to a new fusion branch for feature extraction.

Experimental results

This section introduces the datasets, evaluation metrics, and implementation details used in the experiments. The proposed method is evaluated using the KAIST and UTOKYO multispectral pedestrian detection datasets, leveraging metrics such as precision, recall, F1 score, mAP (mean Average Precision), and FPS to ensure a comprehensive assessment of detection performance. YOLOv7 was selected as the baseline for comparison due to its balance between high efficiency and accuracy, making it a benchmark in real-time object detection. Additionally, comparisons were made with other state-of-the-art methods, including both single-stage and two-stage detection algorithms, to establish the performance improvements in terms of accuracy, computational cost, and inference speed.

The choice of YOLOv7 as the baseline highlights its relevance in tasks requiring real-time performance. While two-stage methods like Faster R-CNN demonstrate high precision, they struggle with inference speed, making them less suitable for real-time applications. In contrast, single-stage methods like SSD and earlier YOLO versions offer better speed but compromise on precision. Multispectral approaches such as Fusion RPN + BF and CIAN demonstrate strong capabilities in complex environments but face challenges in real-time deployment due to computational overheads. By addressing these limitations, the proposed method ensures both enhanced accuracy and efficiency through innovative feature fusion strategies and model optimizations.

Finally, ablation experiments are conducted to analyze the contributions of two critical modules and variations in the model architecture. These experiments provide insights into how each component improves overall detection performance, further validating the robustness and practicality of the proposed approach.

Datasets

The KAIST Multispectral Pedestrian Dataset is evaluated as a commonly used multispectral pedestrian dataset. The KAIST dataset was collected using a visible light camera and an infrared thermal imager, resulting in 95,328 pairs of color-thermal images, with 50,172 pairs for the training set and 45,156 pairs for the test set. Following the sampling principles described in7,12, every second frame of the training videos was sampled, removing instances with heavy occlusion and small individuals (< 50 pixels). The resulting training set contains 7,601 color-thermal image pairs. The test set was sampled every 20 frames, resulting in 2,252 color-thermal image pairs. Annotations for the training and test sets of the KAIST dataset were improved. The dataset includes pedestrians under various lighting conditions, scales, and levels of occlusion, making them challenging to detect.

The UTOKYO Dataset is a multispectral dataset captured during both daytime and nighttime using four different cameras (RGB, FIR, MIR, and NIR) mounted on an autonomous vehicle. A total of 7,512 sets of images were captured, with 3,740 sets during the day and 3,722 sets at night. Pedestrians were annotated in this dataset. It uses 1,466 sets of correctly aligned images, each sized 320 × 256 pixels, as the test set.

To evaluate our method, the log-average miss rate (MR) over false positives per image (FPPI) in the range \([{10}^{-2},{10}^{0}]\)(denoted as \({MR}_{-2}\)) is used to measure pedestrian detection performance. Experiments were conducted under reasonable settings, generally defined as pedestrians with heights greater than 55 pixels, with all settings having heights greater than 20 pixels.

Evaluation

In this study, the evaluation metric used is the log-average miss rate (MR), which is widely applied in pedestrian detection. Specifically, the detection bounding boxes \(\left({BB}_{d}\right)\) generated by the model are compared with the ground truth bounding boxes \(\left({BB}_{g}\right)\). An Intersection over Union (\(\text{I}\text{O}\text{U}\)) greater than a threshold indicates a match between\(\left({BB}_{d}\right)\) and \(\left({BB}_{g}\right)\). The \(\text{I}\text{O}\text{U}\) is defined as follows:

Detection bounding boxes \(\left({BB}_{d}\right)\) that do not match are marked as false positives (FP), ground truth bounding boxes \(\left({BB}_{g}\right)\) that do not match are marked as false negatives (FN), and matched \(\left({BB}_{d}\right)\) and \(\left({BB}_{g}\right)\) are marked as true positives (TP). The miss rate (MR) is defined as the ratio of the total number of missed detections to all positive samples:

Let \(Num\left(img\right)\) be the number of images in the test set. Then the False positive Per Image (FPPI) is expressed by the following equation:

From the equation, the smaller the MR of the algorithm, the better the network detection performance. To further evaluate the model, additional metrics are introduced: Precision, Recall, \({F}_{1}\) Score. Precision is the ratio of correctly predicted positive samples to the total number of positive predictions.

Recall is the ratio of correctly predicted positive samples to the total number of ground truth positive samples.

\({F}_{1}\) Score is the harmonic means of precision and recall, balancing both metrics.

Experiment

Our method is implemented using the same configuration as YOLOv7. For training with the KAIST and UTOKYO datasets, the input image size is set to 640 × 640 pixels. If a label in the ground truth is “person” and the height is greater than 50 pixels, it is included in the training set; otherwise, it is marked as ignored. In the UTOKYO experiments, only RGB and FIR images are used as input images for comparison. Before training, anchor points are obtained using the k-means clustering method. In the KAIST experiments, the anchors are (16,38), (22,53), (31,74), (43,102), (59,141), (82,196), (113,271), (156,375), and (216,520). In the UTOKYO experiments, the anchors are (13,24), (18,33), (24,45), (32,76), (44,106), (82,196), (154,206), (206,324), and (293,478).

During the testing phase, the original size of the input images is used to predict height, offset, and position. We first select bounding boxes with scores higher than 0.001, then apply Non-Maximum Suppression (NMS) with an overlap threshold of 0.65 for final processing.

Specifically, our multispectral pedestrian detection method is trained using the Stochastic Gradient Descent (SGD) optimizer, with an initial learning rate of 0.0001 and a learning rate schedule set for steps. The number of training epochs is set to 100, and each batch is constructed from 8 images. The experiments are conducted using Python (version 3.7) and PyTorch (version 1.10.0) for ASP model training, experimental platform runs on Ubuntu 20.04, with a Intel(R) Core (TM) i7-10700 CPU and an RTX 3090Ti GPU (24GB).

The proposed fusion method is evaluated under reasonable and all settings by comparing it with ACF + T + THOG, Fusion, Fusion RPN + BDT, IAF R-CNN, IATDNN + IASS, CIAN, ms-rcnn, ARCNN, MBNet, and Fusion CSP Net. Among these detection methods, Fusion CSP Net and our method are one-stage methods, while the others are two-stage methods. The experimental results in Fig. 7a show that our detection method outperforms all these methods, with the lowest MR of 7.50% under reasonable settings. In Fig. 7b,c, it can be seen that our method also achieves excellent performance under reasonable settings for both day and night. However, our method performs better at night than during the day, indicating that our proposed detection method is more suitable for pedestrian detection under dark lighting conditions.

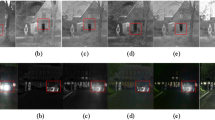

To provide a more intuitive comparison of the detection results from these detectors, we conducted a qualitative evaluation of four multispectral pedestrian detectors on the Reasonable test subset, as shown in Fig. 8. Similar trends were observed in other reasonable daytime and nighttime subsets.

We also found that, compared to state-of-the-art methods, our approach achieved the best accuracy on the KAIST dataset across all settings, as shown in Fig. 9a–c. This performance improvement highlights the robustness of our method in handling varying conditions. Specifically, our approach demonstrated superior ability to detect and accurately classify individuals across different scales, ranging from close-up to far-off subjects. The results suggest that our method is highly effective in distinguishing people at various scales, even in challenging scenarios, making it particularly suitable for real-world applications such as surveillance and security systems.

As shown in Fig. 10, we also evaluated the proposed method on the UTOKYO test dataset, comparing it under both reasonable and all settings with ACF + T + THOG, Halfway Fusion, MLF-CNN, and Fusion-CSPNet. ACF + T + THOG, Halfway Fusion, and MLF-CNN are two-stage detection methods, while Fusion-CSPNet and our method are single-stage methods. Among the existing detectors, Fusion-CSPNet performs the best with a miss rate (MR) of 26.23% under reasonable settings and an MR of 29.81% under all settings. Using our method, we achieved MR values of 22.27% and 25.94%, respectively, clearly demonstrating that our method achieved the best performance.

As shown in Figs. 7 and 9, and 10, our method achieved significant performance compared to other methods, especially two-stage methods. This demonstrates that our single-stage detection approach is more suitable for multispectral pedestrian detection. Furthermore, in Fig. 11, we qualitatively showcase some sample detection results.

Table 1 illustrates the computational cost and performance of our method compared to state-of-the-art methods. The proposed multispectral detection method achieves a runtime of only 0.08 s per image (equivalent to 12.5 FPS) on a single NVIDIA Tesla P40 GPU, highlighting its computational efficiency. Additionally, the proposed method demonstrates superior detection performance, achieving a precision of 83.5%, a recall of 82.3%, an F1-score of 82.9%, a mean Average Precision (mAP) of 85.7% and a MR of 7.50%. These results indicate that our single-stage multispectral detection method not only outperforms two-stage methods in terms of runtime efficiency but also surpasses other methods in detection accuracy and robustness. This makes it highly suitable for real-time applications in challenging environments.

Ablation experiments

Multispectral data enhancement

In this experiment, the impact of each augmentation technique was isolated by adding only the specific augmentations listed to the baseline enhancement of the fusion model. To provide a detailed comparison of the augmentations presented in Table 2, we can make several observations. Firstly, the addition of simple noise augmentation alone did not result in a significant improvement in performance. However, when we sampled the individual probability of noise augmentations, represented by the term “asynchronous,” the performance of noise augmentation showed the best results. Although these improvements were minor, this finding suggests that employing different noise augmentations for different modalities could be advantageous.

Furthermore, both random masking and synchronous random erasing consistently enhanced our baseline model. These techniques independently contributed to performance gains. Notably, the combination of random masking and random erasing led to a further reduction in the miss rate across both subsets examined in the study. This indicates that while each augmentation technique on its own can provide benefits, their combined application amplifies the overall enhancement effect. This comprehensive analysis underscores the importance of considering multiple augmentation strategies to optimize the performance of the fusion model.

A comparison of different data augmentations is provided. “Asynchronous” means that augmentations are applied independently to the two modalities, while “synchronous” means that augmentations are applied synchronously to both modalities.

Multispectral fusion architecture

To evaluate our fusion architecture, we also implemented comparisons with color-only and thermal-only detection. As shown in Table 3, the MR values for pure color detection and pure thermal detection on the KAIST dataset under reasonable settings are 20.50% and 16.64%, respectively, while for the UTOKYO dataset, the MR values are 33.55% and 32.18%, respectively. It is evident that for KAIST, thermal-only detection performs better than color-only detection by several points, indicating that the thermal sensor is more useful in multispectral pedestrian datasets. Among these multimodal feature fusion architectures, input fusion performs the worst under both reasonable and all settings. The gap between single-modal and multimodal methods is substantial. For KAIST, early fusion achieves an MR of 6.06%. For UTOKYO, the MR for early fusion is 28.80%. This demonstrates that multimodal pedestrian detection can significantly enhance detection performance.

Among these multimodal feature fusion architectures, halfway fusion outperforms other fusion architectures under both reasonable and all settings. For the KAIST and UTOKYO datasets, the MR values under reasonable settings for halfway fusion are 4.91% and 23.14%, respectively. The difference in MR values between “early fusion” and “halfway fusion” for KAIST and UTOKYO are 1.15% and 5.66%, respectively. This indicates that low-level features may degrade detection performance. From Tables 4 and 5, it is evident that halfway fusion provides the best performance. Comparing halfway fusion with other fusion methods, it can be concluded that halfway fusion effectively extracts and fuses features from both modalities.

CFCM module

The CFCM module assists one modality in obtaining complementary feature information from another modality during the feature extraction process. To validate the effectiveness of this module, we conducted an ablation study. The CFCM module was applied during the feature extraction stage of the two sub-networks. The specific deployment is shown in Fig. 1. In this section, the experiments are based on the architecture in Fig. 1, utilizing different numbers of CFCM modules to fuse and supplement feature maps from different modalities.

As shown in Table 4, the more CFCM modules used in the feature extraction network, the lower the MR, and the better the performance of the detection network. The baseline, which does not use CFCM modules, has MR values of 11.23%, 10.84%, and 11.47% for the reasonable, reasonable daytime, and reasonable nighttime subsets, respectively. In the YOLO_CMN architecture, the MR values are 7.85%, 8.03%, and 7.82%, respectively. Compared to the baseline, the miss rates are reduced by 3.38%, 2.81%, and 3.65%, respectively.

After executing the convolutional blocks of the backbone network, feature maps are generated, as shown in Fig. 12. A comparison of the feature maps from the baseline method and the proposed method clearly demonstrates that the inclusion of the CFCM module enhances pedestrian features while suppressing background features. The annotations in Fig. 12 highlight areas where pedestrian features are more distinct and where background noise is effectively suppressed, providing readers with a more intuitive understanding of the role of the CFCM module.

Specifically, under poor lighting conditions in RGB images, extracting pedestrian features becomes particularly challenging, while pedestrian features in thermal images are more prominent. The CFCM module learns thermal image features while extracting pedestrian features from the RGB modality, leveraging the complementary characteristics of both modalities to enable the learning of richer feature information.

These visual comparisons aim to demonstrate how the CFCM module facilitates feature refinement and background noise suppression in deeper network layers. Readers should focus on how the CFCM module preserves and enhances pedestrian features in low-light conditions while suppressing background information. These results indicate that the CFCM module promotes modality interaction within the network, reduces target loss, highlights pedestrian features, minimizes redundant learning, transmits more information, and ultimately improves detection performance under various lighting conditions.

In this paper, the MCSF adaptively selects features from both the color stream and the thermal stream based on lighting conditions to detect pedestrians. To evaluate the effectiveness of the MCSF, we compared it with two other fusion methods, SUM and MIN, based on our proposed semi-fusion architecture. The evaluation of these fusion methods was conducted using both the KAIST and UTOKYO datasets, assessing performance not only under reasonable settings but also under all settings. The comparison of miss rate results is shown in Table 5.

From the Table 5, it is evident that the MCSF method outperforms both the SUM and MIN fusion methods across both datasets and settings. This demonstrates the superiority of MCSF in adaptively selecting the most relevant features for pedestrian detection based on varying lighting conditions, thus enhancing the overall detection performance.

On the KAIST dataset, the proposed method achieves significant performance improvements, with relative gains of 14.6% and 6% compared to other multispectral pedestrian fusion approaches. The feature mappings at Stage 3, shown in Fig. 13, demonstrate that the fused features encapsulate richer semantic information compared to single-modal features, effectively enhancing feature representation.

Similarly, on the UTOKYO dataset, the semi-fusion architecture achieves performance improvements of 3% and 11%, respectively. The MCSF module exhibits consistently superior performance compared to other fusion methods, such as SUM and MIN, across all experimental settings on both datasets, highlighting its robustness and adaptability in integrating multispectral information.

The visualizations in Fig. 13 illustrate the complementary nature of color and thermal modalities. Key regions in the feature maps reveal how the fused features integrate distinct information from both modalities, resulting in improved feature representations. These results validate the effectiveness of the MCSF module in achieving enhanced feature fusion, leading to significant advancements in detection accuracy and robustness under diverse conditions.

These ablation studies demonstrate that the architecture exhibits excellent detection performance and rapid detection speed. Overall, the network strikes a good balance between detection accuracy and speed, making it applicable in practical engineering scenarios.

Conclusion

This paper proposes a cross-modality detection network for all-weather pedestrian detection. A low-cost CFCM module is added to the feature extraction stage of Yolov7, facilitating information exchange between different modalities during feature extraction. Consequently, the network can achieve complementary information flow between the two modes at the feature extraction stage, reducing target loss. Additionally, the MCSF module is introduced to fuse the color and thermal streams, further enhancing object features and reducing detection errors. The basic features of the two modes are learned through enhancement and suppression processes. By leveraging the complementary features of color and thermal images and multi-scale fusion of deep feature layers, the network achieves multi-dimensional data mining in both horizontal and vertical directions of the parallel deep network. This enriches the depth semantic information of the targets, improving the detector’s performance.

Experimental results demonstrate that the proposed model effectively fuses visible and infrared features and can detect pedestrians of various scales under varying lighting conditions and occlusions. Furthermore, experimental studies on different multispectral fusion strategies have shown that halfway fusion exhibits the best performance in multispectral pedestrian detection. By combining information from infrared sensors and visible light cameras, halfway fusion achieves more accurate and comprehensive pedestrian detection. The results indicate that this method effectively overcomes the limitations of single sensors, enhancing the accuracy and robustness of pedestrian detection. This fusion method leverages the advantages of infrared sensors under low light or nighttime conditions and visible light cameras under daytime or well-lit conditions, enabling all-weather and multi-scenario pedestrian detection. Thus, halfway fusion technology demonstrates excellent performance and broad application prospects in multispectral pedestrian detection.

Future work will include exploring more rational attention mechanisms to more effectively fuse dual-modality features for improved detection performance and developing lighter modules to enhance the network’s detection speed.

Data availability

The datasets used and/or analyzed during the current study available from the corresponding author on reasonable request.

References

X IVOMFuse. An image fusion method based on infrared-to-visible object mapping. Digit. Signal. Process. 137 (2023).

Re2FAD. A differential image registration and robust image fusion method framework for power thermal anomaly detection. Optik 259, 168817 .

Infrared-visible synthetic. data from game engine for image fusion improvement. IEEE Trans. Games. https://doi.org/10.1109/TG.2023.3263001

Kim, J., Kim, H., Kim, T., Kim, N. & Choi, Y. MLPD: Multi-label pedestrian detector in multispectral ___domain. IEEE Robot. Autom. Lett. 6(4), 7846–7853 (2021).

Zhang, H., Fromont, E., Lefèvre, S. & Avignon, B. Guided attentive feature fusion for multispectral pedestrian detection. In IEEE/CVF Winter Conference on Applications of Computer Vision (WACV) (2021).

Shen, J. et al. ICAFusion: iterative cross-attention guided feature fusion for multispectral object detection. Pattern Recognit. 145, 109913 (2024).

Lee, W., Jovanov, L. & Philips, W. Multimodal pedestrian detection based on cross-modality reference search. IEEE Sens. J. (2024).

Liu, Z., Huang, Y., Liu, X., Chen, Y. & Li, H. Improved pedestrian detection with multimodal feature fusion. J. Comput. Vis. Pattern Recognit. 32(2) (2023).

Wang, X. & Zhou, J. Multispectral pedestrian detection via fusion of IR and visible images. IEEE Trans. Image Process. (2024).

Zhang, L., Liu, D. & Wu, S. A deep learning-based multispectral fusion technique for pedestrian detection. IEEE Trans. Multimed. (2023).

Li, H. & Zhou, L. Image fusion methods for pedestrian detection in remote sensing images. Remote Sens. 45 (2022).

Huang, J. & Wang, Z. Deep learning and multispectral data fusion for pedestrian detection. J. Artif. Intell. 30 (2024).

Wang, Y. & Yao, L. Pedestrian detection with improved multispectral fusion. Int. J. Comput. Vis. 45(4) (2023).

Li, Y. & Zhang, R. Multimodal pedestrian detection using convolutional neural networks. IEEE Access (2022).

Shi, T. & Guo, M. A new multispectral pedestrian detection framework based on YOLO. Comput. Vis. Pattern Recognit. (2023).

Zheng, W. & Xie, Y. Multispectral pedestrian detection using a deep feature fusion model. Springer J. Comput. (2024).

Zhang, Q. & Liu, D. Efficient multispectral fusion for pedestrian detection. Digit. Signal. Process. (2024).

Liu, L. & Chen, S. A comparative study on multispectral pedestrian detection. J. Image Graph (2023).

Zhang, G. & Liu, J. Analysis of multispectral data fusion in pedestrian detection. J. Comput. Sci. (2024).

Xie, W. & Zhang, F. A multimodal pedestrian detection system using fusion techniques. IEEE Trans. Signal. Process. (2023).

Zhang, K. & Zhou, L. Pedestrian detection in complex environments using infrared and visible fusion. IEEE Trans. Robot. (2024).

Zhao, J. & Li, L. Advanced multispectral pedestrian detection using deep networks. J. Pattern Recognit. (2023).

Liu, Z. & Huang, X. Optimizing pedestrian detection using multispectral fusion techniques. IEEE Sens. J. (2024).

Xu, S. & Zhang, Q. Improving pedestrian detection performance using IR and visible data fusion. Int. J. Comput. Vis. (2024).

Liu, W. & Zhao, T. Multimodal pedestrian detection through feature aggregation. IEEE J. Image Process. (2024).

Zhang, Z. & Wang, X. Multispectral pedestrian detection via novel fusion methods. IEEE Trans. Artif. Intell. (2023).

Wang, Y. & Liu, J. Pedestrian detection in challenging scenarios with multispectral fusion. Pattern Recognit. Lett. (2023).

Xu, X. & Zhang, S. Multispectral feature fusion for pedestrian detection in video surveillance. Springer Multimed. Syst. (2024).

Konig, A. et al. Multimodal pedestrian detection with fusion methods. In Proc. of IEEE CVPR (2021).

Zhang, T. et al. Cross modality fusion for pedestrian detection. In Proc. of IEEE ICCV (2021).

Zhang, Q. et al. Late fusion for multispectral pedestrian detection. J. Image Process. (2022).

Liu, Z. et al. Improved feature fusion techniques for pedestrian detection. IEEE Trans. Image Process. (2023).

Zhang, G. et al. Multi-scale fusion techniques in pedestrian detection. Pattern Recognit. (2023).

Liu, F. et al. Transformer-based approaches for pedestrian detection. IEEE Trans. Signal. Process. (2023).

Kim, H. et al. Pedestrian detection using transformer architectures. IEEE Trans. Robot. (2024).

Shen, T. et al. Cross-attention mechanisms in pedestrian detection. In Proc. of IEEE CVPR (2024).

Lee, S. et al. Multimodal pedestrian detection with deep feature fusion. IEEE Sens. J. (2024).

Xie, T. et al. Fusion networks for pedestrian detection in multimodal data. J. Comput. Vis. Image Understand (2024).

Zhou, L. et al. Pedestrian detection based on multi-task learning. IEEE Trans. AI (2024).

Funding

This research was supported by the following projects: Key Research and Development and Achievement Transformation Plan Projects of Inner Mongolia Autonomous Region (2022YFSH0044); Scientific Research Projects of Higher Education Institutions in Inner Mongolia Autonomous Region (NJZY23076); Basic Research Funding for Directly Affiliated Universities in Inner Mongolia Autonomous Region (2024RCTD003); Inner Mongolia Autonomous Region Department of Education, Scientific Research Projects of Higher Education Institutions in Inner Mongolia Autonomous Region (NJZY23081); Basic Research Operational Funds for Directly Affiliated Universities in Inner Mongolia (2023QNJS198); Baotou City Youth Innovation Talent Project (0701011904). There was no additional external funding received for this study.

Author information

Authors and Affiliations

Contributions

Conceptualization, B.J. and J.W.; methodology, B.J. and G.R.; validation, B.J., J.W., and M.Z.; formal analysis, B.J., J.W., and Z.Y.; investigation, Y.Z., P.R., and S.J.; data curation, B.J. and G.R.; writing-original draft preparation, B.J.; writing-review and editing, J.W., G.R., M.Z., and Z.Y.; supervision, J.W.; All authors have read and agreed to the published version of the manuscript.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Jiang, B., Wang, J., Ren, G. et al. Research on pedestrian detection method based on multispectral intermediate fusion using YOLOv7. Sci Rep 15, 16851 (2025). https://doi.org/10.1038/s41598-025-88871-y

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-025-88871-y