Abstract

This study presents a robust approach for continuous food recognition essential for nutritional research, leveraging advanced computer vision techniques. The proposed method integrates Mutually Guided Image Filtering (MuGIF) to enhance dataset quality and minimize noise, followed by feature extraction using the Visual Geometry Group (VGG) architecture for intricate visual analysis. A hybrid transformer model, combining Vision Transformer and Swin Transformer variants, is introduced to capitalize on their complementary strengths. Hyperparameter optimization is performed using the Improved Discrete Bat Algorithm (IDBA), resulting in a highly accurate and efficient classification system. Experimental results highlight the superior performance of the proposed model, achieving a classification accuracy of 99.83%, significantly outperforming existing methods. This study underscores the potential of hybrid transformer architectures and advanced preprocessing techniques in advancing food recognition systems, offering enhanced accuracy and efficiency for practical applications in dietary monitoring and personalized nutrition recommendations.

Introduction

Comprehending the nutritional composition of the foods one eats is crucial for the effective management of several ailments, including metabolic disorders like obesity1. The type and quantity of food consumed must be continuously monitored to achieve this understanding. Manual recording techniques are the traditional means of tracking the type and quantity of food consumed2,3,4. To make this process easier for users, diet-related apps have recently been introduced on mobile devices. However, user error and inattention affect the accuracy of this approach, making it less effective. Several automatic food recognizers (AFRs) have been developed to partially address this issue. These AFRs monitor the types and quantities of food consumed with minimal user intervention. AFRs are classified into multiple groups based on the cues they employ. Noises produced during chewing or swallowing are used in acoustically based methods for food type identification5. Acoustic sensors, such as in-ear microphones6 and throat microphones7, have been used for this purpose. An HMM-based recognizer classified seven foods using acoustic signals captured by a throat microphone, achieving a recognition rate of 81.5% to 90.1%7. Similarly, an in-ear microphone was used in a food recognition study, with results showing that 66% to 79% of seven distinct foods were accurately identified8. However, acoustic cues face significant limitations, as distinguishing between different foods solely based on sound can be challenging.

Food types have also been categorized and portion sizes estimated using visual cues because foods have distinct shapes, textures, and colors9. From the perspective of traditional vision-based pattern recognition, the steps involved in automatically classifying food include classification, feature selection, and segmentation of food images. Artificial neural networks (ANNs) have been utilized for image recognition tasks, leading to the widespread use of neural networks for food type categorization10,11 and calorie estimation12. The following assumptions underpin calorie estimation using visual cues: (1) Each food item has a specific number of calories per size (weight). (2) The appearance of food items is the primary means of identification. (3) Visual information can be used to estimate the size of a food item. It has been found that convolutional neural networks (CNNs) are more accurate than classical pattern recognition techniques for food classification and calorie prediction for 15 distinct food items13.

Classifying a variety of foods with intricate visual features can be difficult14. One major challenge in creating a universal classification model is the variation in color, texture, and shape among various food items. Accurate classification is further complicated by variations in serving sizes and presentation styles. Food images may contain occlusions and overlapping objects, which can complicate segmentation and reduce the precision of classification algorithms15. Additionally, noise and irregularities introduced by environmental factors, such as lighting and camera angles, can affect the performance of classification models. Another significant issue is the lack of large-scale labeled datasets for training robust classification algorithms, leading to problems with scalability and generalization. To address these challenges, sophisticated image processing techniques, reliable feature extraction methods, and the development of extensive and varied datasets for training and evaluation are required16.

Deep Learning (DL) is essential for food recognition because it enables the creation of extremely accurate and effective classification models17,18. Convolutional neural networks (CNNs) are particularly adept at extracting intricate patterns and features from food images, facilitating the reliable classification of a wide variety of food types. Unlike traditional methods that require human feature engineering, DL models can automatically process raw pixel data to extract hierarchical features. Training DL algorithms with sufficient labeled data can revolutionize food recognition and analysis, allowing these algorithms to identify a wide range of food items with high accuracy. These capabilities extend to applications such as calorie estimation, dietary monitoring, and personalized nutrition recommendations, among others19,20.

Given the growing need for accurate and automated dietary assessment tools, this study proposes a novel hybrid approach. The proposed framework integrates advanced preprocessing techniques, feature extraction using the VGG architecture, and a hybrid transformer model combining Vision Transformer and Swin Transformer variants. The model parameters are further optimized using the Improved Discrete Bat Algorithm (IDBA) to enhance classification accuracy and efficiency. This comprehensive approach aims to overcome existing challenges, providing a robust solution for food recognition and dietary monitoring.

The objectives of this research are to address the challenges in food recognition by proposing an integrated approach that combines advanced preprocessing, feature extraction, classification, and optimization techniques. Specifically:

- Preprocessing enhancement: Implement Mutually Guided Image Filtering (MuGIF) to enhance image quality by reducing noise and improving visual consistency, thereby laying a robust foundation for subsequent analysis.

- Feature extraction: Leverage the VGG architecture for feature extraction to capture intricate details, such as texture, shape, and color, essential for the accurate classification of diverse food items.

- Hybrid classification model: Develop and validate a hybrid transformer model that integrates the Vision Transformer and Swin Transformer. This model aims to overcome limitations in existing methods by effectively capturing both global and local features for improved classification accuracy.

- Optimization via IDBA: Employ the Improved Discrete Bat Algorithm (IDBA) for hyperparameter tuning to achieve optimal model performance, ensuring scalability and adaptability in diverse real-world scenarios.

The manuscript is structured as follows: Section “Introduction” introduces the research topic. Section “Related work” analyzes current models and outlines the problem statement. The materials and techniques utilized in the research are described in Section “Materials and methods”. Section “Proposed methodology” describes the proposed model. Section “Results and discussion” compares the proposed design with existing models. Section “Conclusion and future work” concludes the paper and provides suggestions for further research.

Related work

Kim et al.21 proposed a deep learning (DL) method to distinguish between various food types and determine the amount of food consumed from pre- and post-meal images. An object detection network based on Mask R-CNN was employed to identify food categories and food regions. To align pre- and post-meal images, a homography transformation was applied to the post-meal image, considering the locations of the meal plates in both images. The 3D shape of the food was modeled as a cuboid, cone, or spherical cap, depending on the type of food, and the quantity of food consumed was calculated by comparing the volumes of food in the pre- and post-meal images. The simulation results demonstrated that the accuracy of food region detection and classification reached up to 93.6% and 97.57%, respectively.

Joshua et al.6, utilized the YOLOv5 algorithm to identify a total of 30,800 foods, categorized into 50 distinct food categories. They employed an IMX219-160 camera module (waveshare), a pressure sensor for the A/D module, and an HX711 weight weighing Chenbo load cell weight sensor (1 kg) to examine food identification, weight measurement, and nutritional value. The study showed that with 100% accuracy for weight and nutrition, the analysis of four distinct food types: rice (58%), spicy beef soup (62%), soy-based braised quail eggs (60%), and dried radish (31%), exhibited good identification accuracy.

Potărniche22, presented a system for automatically classifying five food additives based on their absorption of light in the ultraviolet spectrum. Distilled water was used to dissolve measured additive masses, creating solutions with varying concentrations. Two types of samples were analyzed: simple samples with two additive solutions combined, and one additive solution for the samples. The substances displayed absorbance peaks in the range of 190 and 360 nm, with each material exhibiting specific absorbance peaks at particular wavelengths (e.g., the absorbance peak of acesulfame potassium was observed at 226 nm). DL methods were employed to classify the samples, with number labels assigned and datasets separated into testing, validation, and training categories. CNN models produced the best classification results, with an average testing accuracy of 92.38% ± 1.48% and validation accuracy of 93.43% ± 2.01% for a CNN model with three convolutional layers.

Li et al.23, investigated combining DL and NIR-HSI to estimate food nutrition. They proposed OptmWave, a method inspired by reinforcement learning, which simultaneously handled modeling and wavelength selection. The dataset of scrambled eggs with tomatoes yielded the best results, with a determination coefficient of 0.9913 and a root mean square error (RMSE) of 0.3548. The spectral analysis of the selection results was interpretable, suggesting that DL-based NIR-HSI could be used to estimate food nutrition.

Agarwal et al.24, proposed a hybrid architecture utilizing DL algorithms to predict the calorie content of different foods in a bowl. The process involved classifying, segmenting, and determining the calorie content and quantity of each food item. The images underwent segmentation before being classified using the Mask R-CNN framework. After gathering features from the segmented images using the YOLO V5 framework, the food items were identified, and their respective dimensions were ascertained to determine their quantity. The volume of each food item was then used to calculate the calories, achieving an accuracy rate of 97.12% for identifying and predicting food item calories after training on the dataset’s images.

Liu et al.25, proposed developing an AI DL model for multiple-dish food identification based on the EfficientDet model. The model considered three meal types from regional Taiwanese cuisine: mixed-dish, single-dish, and multiple-dish. The results showed a high mean average precision (mAP) of 0.92 for 87 different dish types.

Mukhiddinov et al.26, offered a DL system using an enhanced YOLOv4 model to differentiate between fresh and rotten fruits and vegetables based on object type recognition in images. The system included a collection of fruit and vegetable images, with the YOLOv4 model optimized through data augmentation and performance evaluation. The Mish activation function was utilized to improve the model’s core, resulting in higher average precision than the original YOLOv3 and YOLOv4 models.

Min et al.27, introduced Food2K, the largest dataset for food recognition containing over a million photos and 2,000 categories, outperforming previous datasets in terms of categories and images. They proposed a progressive region enhancement network for food recognition, consisting of two main components enhancing regional features and learning progressive local features.

Abiyev and Adepoju28, proposed a robust food recognition model (FRCNNSAM) based on a self-attention mechanism-equipped deep convolutional neural network. Multiple FRCNNSAM structures were trained and combined to avoid model complexity and overfitting. The model achieved an accuracy of 96.40% on the Food-101 and MA Food-121 datasets.

Sheng et al.29, developed the Efficient Hybrid Food Recognition Net (EHFR–Net), which combined Vision Transformer (ViT) and Convolutional Neural Networks (CNN). They introduced Location-Preserving Vision Transformer (LP-ViT) to preserve positional information while extracting global information, resulting in a unified Hybrid Block (HBlock) that integrated global and local features.

Shams et al.30, investigated the use of the YOLOv5 algorithm for calorie estimation and food recognition in Egyptian cuisine. They provided insights into model tuning, transfer learning, and training procedures, and demonstrated the importance of choosing appropriate evaluation metrics for food detection.

Mohanty et al.31, discussed setting up a benchmark using publicly accessible food photos obtained from research cohorts using the MyFoodRepo mobile app. They published the MyFoodRepo-273 dataset, comprising over 24,000 images divided into 273 classes, and evaluated models’ performance using private test sets.

Puli et al.32, proposed a DL algorithm-based calorie measurement solution using food photos. A convolutional neural network was employed for food calorie calculation, achieving a gradual reduction in volume error estimation. They emphasized the significance of accurate food calorie calculation for promoting optimal health conditions.

Research gap

Despite significant advancements in deep learning (DL) and food recognition technology, several challenges persist. Firstly, current models struggle with variability in food presentation, such as differences in color, texture, and shape, and often fail to accurately classify foods with similar appearances but different nutritional values, critical for dietary monitoring and calorie estimation. Secondly, environmental factors like lighting and camera angles introduce noise, highlighting the need for robust feature extraction methods that perform well under diverse conditions.

Additionally, the lack of large-scale, well-annotated datasets limits the scalability and generalization of DL models. Existing datasets are often narrow in scope and fail to represent the global diversity of foods. Furthermore, there is insufficient research comparing the effectiveness of DL architectures in real-world settings, particularly in complex meal scenarios. Finally, the absence of standardized approaches for measuring food weight, volume, and nutritional content poses challenges for achieving consistent and accurate results across studies. Addressing these gaps requires developing more diverse datasets, advanced feature extraction techniques, and benchmarks to evaluate and improve food recognition systems effectively.

Materials and methods

Materials

Data acquisition

Figures 1 and 2 list the food categories used in this investigation along with their calorie counts. Common foods were selected for the experiment by considering a variety of physical characteristics and health impacts33. If Nutrition Facts were available, the calorie count of each food was calculated from the measured weight and the calorie-per-weight value. For foods lacking Nutrition Facts, the calorie values were computed from the measured weights and nutritional data published by the Korea Food and Drug Administration (KFDA), covering calorie-per-weight, food composition, cooking methods, and other topics. Calculated caloric values were used rather than values measured with food analysis equipment, because with unevenly distributed food ingredients the measured values could depend on the sample location. Furthermore, since the goal was to estimate representative caloric counts from visual cues, using values from a reputable organization was reasonable. All liquid foods were collected using the same amount of food in the same cup, to mitigate any negative effects on food classification and calorie estimation that might result from varying cup shapes and volumes. Numerous food pairs had the same appearance but different nutritional values, such as cider and water, tofu and milk pudding, milk soda and milk, and coffee and coffee with sugar. These foods were selected carefully to demonstrate the value of UV and NIR images for food categorization and calorie calculation.
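The calorie calculation described above (measured weight multiplied by a calorie-per-weight value) can be illustrated with a minimal sketch. The function name and the numeric values are hypothetical and are not taken from the study's data.

```python
def calories_from_weight(weight_g: float, kcal_per_100g: float) -> float:
    """Calories of a portion from its measured weight and a calorie-per-weight value."""
    if weight_g < 0 or kcal_per_100g < 0:
        raise ValueError("weight and calorie density must be non-negative")
    return weight_g * kcal_per_100g / 100.0


# Hypothetical portion: 250 g of a food listed at 40 kcal per 100 g.
portion_kcal = calories_from_weight(weight_g=250.0, kcal_per_100g=40.0)
```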

Preprocessing using mutually guided image filtering (MuGIF)

Image filtering falls into two main categories: frequency domain filtering and spatial filtering. Specifically, spatial filtering is a technique used to improve or alter images based on predetermined guidelines34. It is usually expressed as:

\[ \min_{T} \; \Psi \left( T,T_{0} \right) + \alpha \Phi \left( T \right) \tag{1} \]

where \(T_{0}\) and \(T\) denote the input and output signals, respectively, \(\Psi \left( {T,T_{0} } \right)\) is the fidelity term, \(\Phi \left( T \right)\) is the regularisation term on the output, and \(\alpha\) is a non-negative coefficient that balances these two terms.

To make the best use of the information in the guidance image, the relative structure of the target image T with respect to the reference image R is defined, which describes the structural relationship between the two images:

\[ \mathcal{R}(T,R) = \sum_{i} \sum_{d \in \{h,v\}} \frac{\left| \nabla_{d} T_{i} \right|}{\left| \nabla_{d} R_{i} \right|} \tag{2} \]

where \(i\) indexes a pixel at position \((x,y)\) and \({\nabla }_{d}\) denotes the first-order derivative filter in the horizontal \((h)\) or vertical \((v)\) direction. The relative structure \(\mathcal{R}(T,R)\) measures the structural discrepancy between T and R.

Using the definition of relative structure, written \(\mathcal{R}(\cdot,\cdot)\), the muGIF optimisation objective can be formulated as:

\[ \mathop{\arg\min}_{T,R} \; \alpha_{t} \mathcal{R}(T,R) + \beta_{t} \left\| T - T_{0} \right\|_{2}^{2} + \alpha_{r} \mathcal{R}(R,T) + \beta_{r} \left\| R - R_{0} \right\|_{2}^{2} \tag{3} \]

where \(\alpha_{t} ,\alpha_{r} ,\beta_{t}\), and \(\beta_{r}\) are non-negative constants that balance the corresponding terms, \(\parallel \cdot \parallel_{2}^{2}\) denotes the squared \(l_{2}\) norm, and the fidelity terms \(\left\| {T - T_{0} } \right\|_{2}^{2}\) and \(\left\| {R - R_{0} } \right\|_{2}^{2}\) prevent T and R from straying too far from \(T_{0}\) and \(R_{0}\).

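The optimisation problem above is difficult to solve directly. The relative structure is therefore first replaced by a close surrogate:

\[ \tilde{\mathcal{R}}(T,R,\epsilon_{t},\epsilon_{r}) = \sum_{i} \sum_{d \in \{h,v\}} \frac{\left( \nabla_{d} T_{i} \right)^{2}}{\max \left( \left| \nabla_{d} T_{i} \right|,\epsilon_{t} \right) \cdot \max \left( \left| \nabla_{d} R_{i} \right|,\epsilon_{r} \right)} \tag{4} \]

where \(\epsilon_{t}\) and \(\epsilon_{r}\) are small positive constants included to avoid division by zero. The optimisation objective then takes the form below:

\[ \mathop{\arg\min}_{T,R} \; \alpha_{t} \tilde{\mathcal{R}}(T,R,\epsilon_{t},\epsilon_{r}) + \beta_{t} \left\| T - T_{0} \right\|_{2}^{2} + \alpha_{r} \tilde{\mathcal{R}}(R,T,\epsilon_{r},\epsilon_{t}) + \beta_{r} \left\| R - R_{0} \right\|_{2}^{2} \tag{5} \]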

Here, \(t,t_{0} ,r\) and \(r_{0}\) are the vectorised versions of \(T,T_{0} ,R\) and \(R_{0}\), respectively. Let \(Q_{d}\) and \(P_{d}\) \(\left( {d \in \left\{ {h,v} \right\}} \right)\) denote the diagonal matrices whose ith diagonal elements are \(\frac{1}{{\max \left( {\left| {\nabla_{d} T_{i}} \right|,\epsilon_{t}} \right)}}\) and \(\frac{1}{{\max \left( {\left| {\nabla_{d} R_{i}} \right|,\epsilon_{r}} \right)}}\), respectively. Consequently, objective function (5) is transformed into

\[ \mathop{\arg\min}_{t,r} \; \alpha_{t} t^{\top} \Big( \sum_{d \in \{h,v\}} D_{d}^{\top} Q_{d} P_{d} D_{d} \Big) t + \beta_{t} \left\| t - t_{0} \right\|_{2}^{2} + \alpha_{r} r^{\top} \Big( \sum_{d \in \{h,v\}} D_{d}^{\top} P_{d} Q_{d} D_{d} \Big) r + \beta_{r} \left\| r - r_{0} \right\|_{2}^{2} \tag{6} \]

where \({D}_{d}\) is the Toeplitz matrix of the discrete gradient operator in direction d. Eq. (6) can be solved with Alternating Least Squares (ALS) to obtain the muGIF-filtered output.
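To make the surrogate relative structure concrete, the following minimal sketch evaluates it on tiny grayscale images stored as nested lists. It assumes forward differences as the first-order derivative filter and uses hypothetical values for \(\epsilon_{t}\) and \(\epsilon_{r}\); it only evaluates the measure and does not implement the ALS solver.

```python
def forward_diff(img, axis):
    """First-order forward difference along 'h' or 'v'; border entries stay 0."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            if axis == "h" and x + 1 < w:
                out[y][x] = img[y][x + 1] - img[y][x]
            elif axis == "v" and y + 1 < h:
                out[y][x] = img[y + 1][x] - img[y][x]
    return out


def relative_structure(T, R, eps_t=1e-2, eps_r=1e-2):
    """Surrogate relative structure of target T with respect to reference R."""
    total = 0.0
    for axis in ("h", "v"):
        grad_t, grad_r = forward_diff(T, axis), forward_diff(R, axis)
        for row_t, row_r in zip(grad_t, grad_r):
            for a, b in zip(row_t, row_r):
                # eps_t and eps_r guard against division by zero.
                total += a * a / (max(abs(a), eps_t) * max(abs(b), eps_r))
    return total


# A target with one vertical edge, compared with two references.
target = [[0.0, 0.0, 1.0, 1.0], [0.0, 0.0, 1.0, 1.0]]
same_edge = [row[:] for row in target]        # reference shares the edge
flat = [[0.5] * 4 for _ in range(2)]          # reference has no edge
score_same = relative_structure(target, same_edge)
score_flat = relative_structure(target, flat)
```

An edge in the target that is also present in the reference is penalised lightly, whereas an edge with no counterpart in the reference is penalised heavily, which is the behaviour the filter relies on to keep mutual structures.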

Methods

Extraction of features using VGG architecture

VGG (Visual Geometry Group) is a DL architecture and one of the many network models developed since the success of AlexNet35. The Visual Geometry Group at the University of Oxford employed a network with 13 convolutional and 3 fully connected layers to improve its accuracy in the 2014 ILSVRC competition. The network structure consists of a total of 41 layers, including max-pooling, softmax, dropout, fully connected, and ReLU layers. The classification layer is the last layer in this architecture, and the input image must be of size 224 × 224 × 3. Rather than relying on a large number of hyperparameters, VGG employs a more straightforward structure, which streamlines the network architecture. In the literature, VGG16 and VGG19 are distinguished by their number of layers.
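The layer counts and input size quoted above can be checked with a small sketch of the standard VGG16 convolutional configuration. The list encoding (`"M"` for a 2 × 2 max-pooling layer) is a common convention assumed here for illustration, and the helper name is hypothetical.

```python
# Integers are the output channels of 3x3 convolutions; "M" marks 2x2 max pooling.
VGG16_CFG = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
             512, 512, 512, "M", 512, 512, 512, "M"]


def feature_map_shape(cfg, height=224, width=224):
    """Track (height, width, channels) through the convolutional part."""
    channels = 3
    for item in cfg:
        if item == "M":
            height, width = height // 2, width // 2  # pooling halves each side
        else:
            channels = item  # 3x3 convolution with padding 1 keeps the spatial size
    return height, width, channels


n_conv = sum(1 for item in VGG16_CFG if item != "M")
final_shape = feature_map_shape(VGG16_CFG)
```

With a 224 × 224 × 3 input, the 13 convolutional layers and five pooling steps yield a 7 × 7 × 512 feature map, which is what the fully connected layers (or a downstream classifier) receive.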

Proposed methodology

Figure 3 shows the workflow of the proposed food classification model using the hybrid transformer.

Hybrid transformer classification

The vision transformer

In computer vision, Vision Transformers (ViTs) are a cutting-edge method that challenges conventional convolutional neural networks (CNNs) in image processing applications. ViTs have demonstrated remarkable success in several computer vision benchmark tests. They are an extension of the transformer architectures initially developed for natural language processing. ViTs are based on a pure transformer architecture as opposed to conventional CNNs36.

The ability of Vision Transformers to process a given patch while weighing the significance of various patches is made possible by the self-attention mechanism. This mechanism makes the model very effective for understanding images by allowing it to capture contextual information and long-range dependencies. An attention matrix is produced by the self-attention mechanism, determining the attention scores in the input sequence between every pair of positions. During the information aggregation process, the significance of each patch is then evaluated using this matrix. The capacity to focus on various areas of the image simultaneously improves Vision Transformers’ awareness of their global context. A series of embeddings, each corresponding to a position in the input sequence, serves as the input for the self-attention block. Within the input sequence, the embeddings represent different positions or tokens. Three vectors are created from the embeddings for every position: key, query, and value, through linear transformations, respectively; the Vision Transformer learns these transformations during training. The output of a Transformer’s self-attention block is a weighted sum of the embeddings it received, derived from attention scores indicating the connections between various input sequence positions. To obtain value, key, and query vectors from each input embedding, linear transformations are applied, and the dot product of the query with the key vectors is used to calculate attention scores. The softmax function is then used to normalize these scores, creating weights reflecting the significance of each position. Each position in the result attends to other positions based on their relevance, obtaining contextual information from the entire input sequence.

An important element of Vision Transformers (ViTs) is multi-head attention: several attention heads operate in parallel, allowing the model to identify various patterns and connections in the visual input. The outputs of these parallel heads are concatenated and linearly transformed to form the final multi-head attention output. Because each head can focus on specific elements of the input sequence, the model can effectively capture both fine-grained and coarse-grained features. The multi-head mechanism is a crucial component in enhancing Vision Transformers’ performance across a range of computer vision tasks, as it improves the representational capacity of the model. Self-attention and multi-head self-attention can be expressed mathematically as follows:

\[ \text{Attention}(Q,K,V) = \text{softmax}\left( \frac{QK^{\top}}{\sqrt{d_{k}}} \right)V \]

\[ \text{MultiHead}(X) = \text{Concat}\left( \text{head}_{1},\ldots,\text{head}_{h} \right)W^{O}, \quad \text{head}_{i} = \text{Attention}\left( XW_{i}^{Q},XW_{i}^{K},XW_{i}^{V} \right) \]

where \({W}^{Q},{W}^{K},{W}^{V}\) are the learned weight matrices for the transformations of query (Q), key (K), and value (V), \(d_{k}\) is the dimension of the key vectors, and \(W^{O}\) is the output projection applied after concatenation.
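The attention computation described above can be sketched in plain Python for a handful of tokens. This is an illustrative toy, not the study's implementation: the helper names are hypothetical, the weights are identity matrices rather than learned parameters, and scaling by the square root of the key dimension follows the standard transformer formulation.

```python
import math


def matmul(a, b):
    """Multiply two matrices given as nested lists."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row):
    m = max(row)  # subtract the maximum for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x, w_q, w_k, w_v):
    """Scaled dot-product self-attention; returns (output, attention weights)."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    k_t = [list(col) for col in zip(*k)]
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(q, k_t)]
    weights = [softmax(row) for row in scores]
    return matmul(weights, v), weights


def multi_head(x, heads, w_o):
    """Run each head, concatenate the outputs per token, then project with w_o."""
    outs = [self_attention(x, *head)[0] for head in heads]
    concat = [sum((o[i] for o in outs), []) for i in range(len(x))]
    return matmul(concat, w_o)


# Three tokens with two features each; identity projections for illustration.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
eye = [[1.0, 0.0], [0.0, 1.0]]
single_out, attn = self_attention(tokens, eye, eye, eye)
# Two identical heads averaged by the output projection.
w_o = [[0.5, 0.0], [0.0, 0.5], [0.5, 0.0], [0.0, 0.5]]
multi_out = multi_head(tokens, [(eye, eye, eye), (eye, eye, eye)], w_o)
```

Each row of `attn` sums to one, so every output token is a convex combination of the value vectors, weighted by how strongly its query matches each key.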

The multi-head output of the self-attention block is passed to a point-wise feed-forward network (FFN) consisting of two linear layers with a rectified linear unit (ReLU) activation between them. Let X denote the output of the preceding layer, \({W}_{a}\) and \({W}_{b}\) the weight matrices of the first and second linear layers, and \({B}_{a}\) and \({B}_{b}\) the corresponding bias vectors. The output of the point-wise feed-forward network is then:

\[ \text{FFN}(X) = \text{ReLU}\left( XW_{a} + B_{a} \right)W_{b} + B_{b} \]

The element-wise nonlinearity in the model is introduced by the common non-linear activation function, ReLU. The model is able to independently detect complex, asymmetrical patterns specific to every position thanks to this process. An enhanced and more detailed depiction of every position within the ViT is produced by the output of the point-wise FFN, making it possible for the model to recognise and focus on minute details in the input image sequence.
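A minimal sketch of the point-wise feed-forward step follows, applied independently to each token. The function name and the toy weights are hypothetical; real models use learned matrices with a much larger hidden dimension.

```python
def ffn(x, w_a, b_a, w_b, b_b):
    """Point-wise FFN: ReLU(x W_a + B_a) W_b + B_b, applied to each token row."""
    out = []
    for row in x:
        hidden = [max(0.0, sum(r * w for r, w in zip(row, col)) + b)
                  for col, b in zip(zip(*w_a), b_a)]
        out.append([sum(h * w for h, w in zip(hidden, col)) + b
                    for col, b in zip(zip(*w_b), b_b)])
    return out


# One token; the negative feature is zeroed by ReLU before the second layer.
result = ffn(
    x=[[1.0, -2.0]],
    w_a=[[1.0, 0.0], [0.0, 1.0]], b_a=[0.0, 0.0],
    w_b=[[2.0], [3.0]], b_b=[0.5],
)
```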

For ViT training and inference, the input image is divided into a set of fixed-size, non-overlapping patches. Each patch is flattened into a vector and then mapped by a trainable linear transformation (linear embedding). After positional information is added, the resulting sequence of patch embeddings is fed to the transformer encoder blocks, allowing the model to process the patches as a sequence and extract contextual and spatial information from the image.
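The patch-splitting step can be sketched as follows for a single-channel image stored as nested lists. The function name is hypothetical, and the 16 × 16 patch size used in the count at the end is the common ViT-B/16 setting, assumed here because the text does not state the patch size.

```python
def split_into_patches(image, patch):
    """Split a 2D image into non-overlapping patch x patch blocks, each flattened."""
    h, w = len(image), len(image[0])
    if h % patch or w % patch:
        raise ValueError("image size must be divisible by the patch size")
    patches = []
    for top in range(0, h, patch):
        for left in range(0, w, patch):
            patches.append([image[y][x]
                            for y in range(top, top + patch)
                            for x in range(left, left + patch)])
    return patches


# A 4x4 toy image with pixel values 0..15, split into 2x2 patches.
toy_image = [[r * 4 + c for c in range(4)] for r in range(4)]
toy_patches = split_into_patches(toy_image, 2)

# Sequence length for a 224x224 input with 16x16 patches (assumed patch size).
n_tokens = (224 // 16) ** 2
```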

The Swin transformer

Thanks to its hierarchical structure and shifted windows, the Swin Transformer captures local–global relationships and spatial hierarchies in images more easily. It works similarly to ViT: a patch splitting module divides the input image into distinct, non-overlapping patches. Every patch is regarded as a "token," and its feature is the concatenation of its raw RGB pixel values. A linear embedding layer then projects these raw-valued features to an arbitrary dimension C. The patch tokens are then passed through a set of transformer blocks with modified self-attention computation, called Swin Transformer blocks. Together with the linear embedding, these transformer blocks form “Stage 1”, which maintains the initial token count. To create a hierarchical representation, the number of tokens is reduced by patch merging layers as the network gets deeper. The first patch merging layer concatenates the features of each group of 2 × 2 neighboring patches and applies a linear layer to the 4C-dimensional concatenated features, which reduces the number of tokens by a factor of four. This first block of patch merging and feature transformation is referred to as “Stage 2”. “Stage 3” and “Stage 4” are created by repeating this process twice.
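The hierarchical token reduction can be traced with a short sketch. The 4 × 4 patch size, the embedding dimension C = 128, and the doubling of the channel dimension at each merge follow the original Swin-B design and are assumptions here, since the text above does not state them.

```python
def swin_stage_shapes(height, width, patch=4, embed_dim=128, stages=4):
    """Return (token count, channel dimension) for each stage."""
    h, w, c = height // patch, width // patch, embed_dim
    shapes = [(h * w, c)]                       # Stage 1: linear embedding only
    for _ in range(stages - 1):
        # Patch merging: each 2x2 group becomes one token, channels double.
        h, w, c = h // 2, w // 2, 2 * c
        shapes.append((h * w, c))
    return shapes


stage_shapes = swin_stage_shapes(224, 224)
```

For a 224 × 224 input this gives 3136, 784, 196, and 49 tokens across the four stages, so each merge cuts the token count by a factor of four.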

The Swin Transformer introduces a self-attention scheme based on shifted windows. In contrast to the multi-head self-attention (MSA) of the standard transformer block, this scheme seeks to capture local and global characteristics efficiently. For vision tasks, transformer architectures commonly use global self-attention, which computes the relationships between every token and every other token. Because its complexity is quadratic in the number of tokens, this global computation is unsuitable for many vision tasks that involve a very large set of tokens, such as dense prediction or the representation of high-resolution images.

The main idea of the window-based approach is to compute self-attention within local windows. The windows partition the image evenly and without overlap, and each window contains M × M patches within which self-attention is calculated. Consequently, the computational complexity drops: for a fixed M, window-based multi-head self-attention has linear complexity in the number of patches, whereas the original global MSA has quadratic complexity.
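The difference between quadratic and linear complexity can be made concrete with the cost estimates given in the original Swin Transformer paper for a feature map of h × w tokens with C channels. The function names are hypothetical, and the example sizes (a 56 × 56 token grid, C = 128, M = 7) are assumptions for illustration.

```python
def msa_flops(h, w, c):
    """Global multi-head self-attention: 4hwC^2 + 2(hw)^2 C."""
    return 4 * h * w * c ** 2 + 2 * (h * w) ** 2 * c


def wmsa_flops(h, w, c, m):
    """Window-based self-attention with M x M windows: 4hwC^2 + 2 M^2 hw C."""
    return 4 * h * w * c ** 2 + 2 * m ** 2 * h * w * c


global_cost = msa_flops(56, 56, 128)
window_cost = wmsa_flops(56, 56, 128, 7)

# Doubling each side quadruples the token count.
window_cost_4x = wmsa_flops(112, 112, 128, 7)
global_cost_4x = msa_flops(112, 112, 128)
```

With four times as many tokens, the window-based cost grows exactly fourfold, while the global cost grows by more than that because of the squared token-count term.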

To model connections between windows effectively, the Swin Transformer uses shifted window partitioning, alternating between two partitioning configurations in consecutive blocks. The first module uses a regular window configuration and computes local self-attention in evenly spaced windows starting from the top-left pixel. The next Swin Transformer block then uses a window layout that is shifted by \((M/2,M/2)\) pixels relative to that of the preceding layer. This shift improves the ability of the model to represent a variety of spatial relationships. The self-attention in the Swin Transformer blocks is computed as:

\[ \text{Attention}(Q,K,V) = \text{SoftMax}\left( \frac{QK^{\top}}{\sqrt{d}} + B \right)V \]

where Q, K, and V are the query, key, and value matrices, d is the query/key dimension, and B is the window’s relative position bias.
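The effect of shifting the window layout can be sketched by labelling each token of a small grid with the window it falls into. This sketch assumes the cyclic-shift formulation of the original Swin Transformer design; the function name and the 4 × 4 grid are hypothetical.

```python
def window_ids(size, m, shift=0):
    """Window index of every token in a size x size grid after a cyclic shift."""
    per_side = size // m
    ids = []
    for y in range(size):
        row = []
        for x in range(size):
            # Cyclically shift the grid by `shift` tokens toward the top-left.
            ys, xs = (y - shift) % size, (x - shift) % size
            row.append((ys // m) * per_side + xs // m)
        ids.append(row)
    return ids


regular = window_ids(size=4, m=2, shift=0)   # first block: regular partition
shifted = window_ids(size=4, m=2, shift=1)   # next block: shifted by M/2 = 1
```

Tokens (1, 1) and (2, 2) lie in different windows under the regular partition but share a window after the shift, which is how information crosses the earlier window boundaries. In the cyclic-shift formulation, tokens that wrap around the border are also grouped together, and an attention mask is used to keep them from attending to each other.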

Both transformer architectures, ViT and Swin, come in several variants; the base models, ViT-B and Swin-B, are used in this study.

Hyper parameter tuning using IDBA

To tune the hyperparameters of the hybrid transformer model, this paper uses IDBA37. One major improvement strategy is to map the continuous velocity and displacement formulations of the bat algorithm onto combinatorial optimization operators.

The Improved Discrete Bat Algorithm (IDBA) is designed to optimize the hyperparameters of the hybrid transformer model. The architecture of IDBA involves key components such as population initialization, fitness evaluation, velocity and position update mechanisms, and local search strategies. The main features include:

- Population initialization: A population of bats is initialized with random solutions representing hyperparameter combinations.

- Velocity and position update: Each bat updates its velocity and position using a dynamic adjustment formula inspired by bat echolocation, ensuring exploration and exploitation in the solution space.

- Fitness evaluation: The fitness of each bat (solution) is evaluated based on the classification accuracy of the model.

- Local search strategy: A local search mechanism enhances convergence by fine-tuning promising solutions.

- Selection mechanism: Bats with the best fitness values are retained for the next iteration, driving the algorithm toward the optimal solution.
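The components listed above can be sketched as a generic discrete bat-style search over a small hyperparameter grid. This is not the IDBA of the cited work: the search space, the toy fitness function (a stand-in for validation accuracy), the discrete velocity rule, and all parameter values are assumptions made purely for illustration.

```python
import random

# Hypothetical hyperparameter grid; each solution is a list of indices into it.
SEARCH_SPACE = {
    "learning_rate": [1e-4, 3e-4, 1e-3],
    "batch_size": [16, 32, 64],
    "dropout": [0.0, 0.1, 0.2],
}
KEYS = list(SEARCH_SPACE)


def toy_fitness(solution):
    """Stand-in for classification accuracy; a real run would train the model."""
    target = {"learning_rate": 3e-4, "batch_size": 32, "dropout": 0.1}
    hits = sum(SEARCH_SPACE[k][i] == target[k] for k, i in zip(KEYS, solution))
    return hits / len(KEYS)


def discrete_bat_search(fitness, n_bats=6, n_iter=30, loudness=0.9,
                        pulse_rate=0.5, seed=0):
    rng = random.Random(seed)
    sizes = [len(SEARCH_SPACE[k]) for k in KEYS]
    # Population initialization with random index vectors.
    bats = [[rng.randrange(n) for n in sizes] for _ in range(n_bats)]
    scores = [fitness(b) for b in bats]
    best_i = max(range(n_bats), key=scores.__getitem__)
    best, best_score = bats[best_i][:], scores[best_i]
    for _ in range(n_iter):
        for i, bat in enumerate(bats):
            cand = bat[:]
            # Discrete "velocity": copy a random number of coordinates from the best bat.
            differing = [j for j in range(len(cand)) if cand[j] != best[j]]
            for j in rng.sample(differing, rng.randint(0, len(differing))):
                cand[j] = best[j]
            # Local search: sometimes resample one coordinate at random.
            if rng.random() > pulse_rate:
                j = rng.randrange(len(cand))
                cand[j] = rng.randrange(sizes[j])
            cand_score = fitness(cand)
            # Selection: accept non-worse candidates with probability `loudness`.
            if cand_score >= scores[i] and rng.random() < loudness:
                bats[i], scores[i] = cand, cand_score
            if cand_score > best_score:
                best, best_score = cand[:], cand_score
    return {k: SEARCH_SPACE[k][i] for k, i in zip(KEYS, best)}, best_score


best_params, best_score = discrete_bat_search(toy_fitness)
```

In practice the fitness call dominates the cost, since each evaluation means training and validating the classifier with one hyperparameter combination, so the population size and iteration count would be chosen with that budget in mind.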

The IDBA’s primary steps are explained in the subsections that follow.

Encoding

IDBA encodes data using a vector called π. For this disassembly sequence, π stands for a workable solution. The product I disassembly task is represented by the green rectangle, with tasks 1, 3, 4, and 6 in the disassembly sequence. Similarly, the task of disassembling product II is represented by the orange rectangle, with tasks 2, 4, 3, and 5 in the disassembly order. These tasks are sequentially allocated to the respective workstations for the two products.

Generating feasible solution

The procedure takes into account two disassembly lines, one for each type of product. Solutions are produced by sequential insertion, which largely ensures the randomness and completeness of the disassembly sequence. Algorithm 1 describes the procedure for generating a feasible solution.

Decoding

As part of the decoding process, the corresponding workstations are assigned the disassembly sequence. According to the task assignment principle, no workstation’s total time can be longer than its cycle time. Following task assignment, each workstation’s assignment is used to calculate the objective function in the mathematical model.
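The task assignment principle can be sketched as a simple sequential decoder that opens a new workstation whenever the next task would exceed the cycle time. The function name, the task times, and the cycle time are hypothetical; only the task sequence 1, 3, 4, 6 is taken from the encoding example.

```python
def assign_to_workstations(sequence, task_time, cycle_time):
    """Assign tasks in order; no workstation's total time may exceed cycle_time."""
    stations, current, load = [], [], 0.0
    for task in sequence:
        t = task_time[task]
        if t > cycle_time:
            raise ValueError(f"task {task} alone exceeds the cycle time")
        if load + t > cycle_time:
            stations.append(current)        # close the current workstation
            current, load = [], 0.0
        current.append(task)
        load += t
    if current:
        stations.append(current)
    return stations


# Hypothetical task times (in seconds) and cycle time.
times = {1: 4.0, 3: 3.0, 4: 5.0, 6: 2.0}
layout = assign_to_workstations([1, 3, 4, 6], times, cycle_time=8.0)
```

The resulting workstation layout is what the objective function is then evaluated on, for example through the number of workstations opened.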

Population initialization

Every member of the population in this paper corresponds to a feasible disassembly sequence. The population size is the number of individuals in the population. Population initialization is similar to encoding. A random insertion technique is used to guarantee the population’s randomness; more precisely, product II nodes are inserted into product I’s disassembly order. When creating a feasible disassembly sequence for hyperparameter tuning, it is crucial to confirm each product’s disassembly precedence relationships on the parallel disassembly line. In the case study of a parallel disassembly line for two easy-to-assemble products, a disassembly sequence produced by the random integer technique is not always feasible. Consequently, the generated sequence must be adjusted to make it a feasible disassembly sequence.

The repair process has three stages, which apply addition, subtraction, and swap operations in turn. In the addition stage, tasks are inserted into the current infeasible sequence; in the example, task 6 is added before task 1, task 5 before task 2, and task 8 before task 3. The subtraction stage comes next, and its goal is to eliminate conflicting tasks, that is, tasks that cannot appear in the same disassembly sequence. For instance, task 4 is eliminated because it conflicts with task 7. The swap operation is the final stage. After the first two stages, the disassembly sequence is still not guaranteed to be feasible, because some of the original disassembly operations may not adhere to the precedence relationship and must be moved. In the example, tasks 3 and 9 are adjusted so that they comply with the precedence relationship.

Update of individuals

Two operators are designed to update individuals. The first is the precedence preservative crossover (PPX) operator, which concentrates on maintaining precedence relationships. The second is a mutation operator based on the direct predecessors in the task sequence. After an individual \({\pi }_{f}\) is obtained, another individual \({\pi }_{m}\) is chosen from the set of existing Pareto solutions, and the PPX operator is applied to the pair. By construction, the operator lets the offspring inherit the superior genes of its parents. Algorithm 2 presents the individual update scheme (PPX operator).
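The crossover step can be sketched as follows. This is the textbook form of precedence preservative crossover, not a transcription of Algorithm 2: at each step a parent is picked at random, its leftmost remaining task is appended to the child, and that task is deleted from both parents. The sketch assumes that task labels are unique and that both parents contain the same tasks; the function name `ppx` and the 50/50 choice between parents are assumptions.

```python
import random


def ppx(pi_f, pi_m, rng):
    """Precedence preservative crossover of two permutations.

    Any ordering shared by both parents is kept in the child
    (illustrative sketch with a uniform random parent choice).
    """
    a, b = list(pi_f), list(pi_m)
    child = []
    while a:  # a and b always hold the same remaining tasks
        donor = a if rng.random() < 0.5 else b
        task = donor[0]  # leftmost task still unused in the chosen parent
        child.append(task)
        a.remove(task)
        b.remove(task)
    return child


pi_f = [1, 2, 3, 4, 5, 6]
pi_m = [1, 3, 2, 5, 4, 6]
print(ppx(pi_f, pi_m, random.Random(1)))
```

Because a task can only be taken when it is leftmost in one parent, any task that precedes it in both parents has already been placed. Two feasible parents therefore always yield a feasible child.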

IDBA uses mutation as a local variation strategy that helps the search escape from local optima. The mutation operator is based on neighborhood mutation. A mutation point is chosen at random, and its immediate predecessor task and immediate successor task are located. Neighborhood solutions are then produced by dynamically adjusting the location of the mutation point. For instance, suppose task 6 is selected as the random mutation point. Based on the precedence relation matrix P, tasks 7 and 2 are identified as the tasks directly related to task 6. Task 6 therefore has two adjustment positions, one behind task 8 and the other behind task 5, and it is placed at random at one of them.
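A minimal sketch of such a precedence-aware mutation follows. It assumes the precedence relation is available as a mapping from each task to the set of its direct predecessors (a stand-in for matrix P) and that the input sequence is already feasible. The names `mutate` and `is_feasible` and the example precedence relation are hypothetical.

```python
import random


def is_feasible(sequence, predecessors):
    """Check that every task appears after all of its direct predecessors."""
    position = {task: i for i, task in enumerate(sequence)}
    return all(position[p] < position[t]
               for t in sequence for p in predecessors.get(t, ()))


def mutate(sequence, predecessors, rng):
    """Move one random task to a random slot between its last direct
    predecessor and its first direct successor (illustrative sketch)."""
    seq = list(sequence)
    task = rng.choice(seq)
    seq.remove(task)
    position = {t: i for i, t in enumerate(seq)}
    # Earliest slot: right after the last direct predecessor.
    lo = max((position[p] + 1 for p in predecessors.get(task, ())), default=0)
    # Latest slot: right before the first direct successor.
    hi = min((position[s] for s in seq if task in predecessors.get(s, ())),
             default=len(seq))
    seq.insert(rng.randint(lo, hi), task)
    return seq


# Hypothetical precedence relation: task -> set of direct predecessors.
preds = {2: {1}, 3: {1}, 4: {2}, 5: {3}, 6: {4, 5}}
neighbour = mutate([1, 2, 3, 4, 5, 6], preds, random.Random(3))
print(neighbour, is_feasible(neighbour, preds))
```

Restricting the new position to this window keeps the mutated sequence feasible, so no repair step is needed after mutation.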

Population update strategy

The next population is formed from the first n individuals of the current population, ordered by rank and crowding distance. In multi-objective optimisation, an individual’s fitness is determined by multiple objective functions and the dominance rule. Suppose that there are U objectives. If Eq. (11) is satisfied, then solution \({x}_{0}\) dominates solution \({x}_{1}\). The algorithm terminates when the predetermined maximum number of iterations is reached.
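The dominance rule can be sketched in a few lines. The sketch assumes the usual Pareto definition with every objective minimised: one solution dominates another if it is no worse in all objectives and strictly better in at least one. The function names and the objective vectors are hypothetical, and the crowding-distance part of the selection is not shown.

```python
def dominates(f0, f1):
    """True if objective vector f0 Pareto-dominates f1 (minimisation assumed)."""
    no_worse = all(a <= b for a, b in zip(f0, f1))
    strictly_better = any(a < b for a, b in zip(f0, f1))
    return no_worse and strictly_better


def non_dominated(points):
    """Return the points that no other point dominates (the first front)."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


# Hypothetical two-objective values for three individuals.
objectives = [(1, 3), (2, 2), (3, 3)]
print(non_dominated(objectives))  # [(1, 3), (2, 2)]
```

Here `(3, 3)` is dominated by `(2, 2)`, while `(1, 3)` and `(2, 2)` trade off against each other and both stay in the first front.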

Results and discussion

Experimental setup

PyTorch (pytorch.org, accessed on December 1, 2023) was used for code implementation, and the University of Arizona’s high-performance computing platform was used for all training.

Performance metrics

A classification system was built on the dataset, and its performance was assessed using four basic quantities: true negatives (TN), false positives (FP), true positives (TP), and false negatives (FN).

Accuracy (ACC) measures a classification model’s effectiveness as the ratio of correct predictions to all predictions made:

\[\text{ACC}=\frac{TP+TN}{TP+TN+FP+FN}\]

Precision (PR), or positive predictive value, is the number of correctly detected positive examples relative to all examples predicted as positive:

\[\text{PR}=\frac{TP}{TP+FP}\]

Recall (RC), also referred to as the true-positive rate (TPR) or sensitivity, is the proportion of actual positive instances that are classified as positive:

\[\text{RC}=\frac{TP}{TP+FN}\]

The F1 score is a single value that combines PR and RC as their harmonic mean:

\[\text{F1}=\frac{2\times \text{PR}\times \text{RC}}{\text{PR}+\text{RC}}\]
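As a worked illustration, the four metrics can be computed directly from the confusion counts. The sketch below assumes the standard confusion-matrix definitions of ACC, PR, RC, and F1; the function name and the example counts are hypothetical and are not taken from the experiments.

```python
def classification_metrics(tp, tn, fp, fn):
    """Compute ACC, PR, RC and F1 from confusion-matrix counts."""
    acc = (tp + tn) / (tp + tn + fp + fn)
    pr = tp / (tp + fp)   # share of predicted positives that are correct
    rc = tp / (tp + fn)   # share of actual positives that are found
    f1 = 2 * pr * rc / (pr + rc)
    return {"ACC": acc, "PR": pr, "RC": rc, "F1": f1}


# Hypothetical counts for one class, chosen only for illustration.
metrics = classification_metrics(tp=90, tn=85, fp=10, fn=15)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

The same F1 formula applies to percentages: with the precision of 94.19% and recall of 95.88% reported for AlexNet in Table 1, it gives about 95.03%, which matches the tabulated F1 score.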

Feature extraction validation

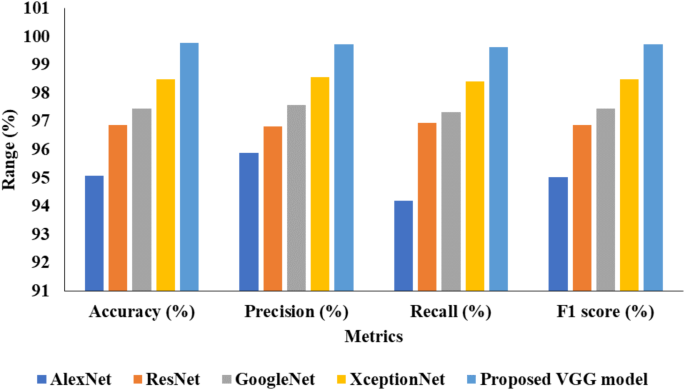

Table 1 provides the feature extraction validation analysis of the proposed VGG model with other existing models.

Table 1 presents a comprehensive feature extraction analysis comparing various DL models, including AlexNet, ResNet, GoogleNet, XceptionNet, and a proposed VGG model. Each model’s performance metrics are displayed, including F1 score, recall, accuracy, and precision. AlexNet achieved an accuracy of 95.07%, with recall, precision, and F1 score values of 95.88%, 94.19%, and 95.03%, respectively. ResNet demonstrated higher accuracy at 96.88%, along with recall, precision, and F1 score all hovering around 96.88%. GoogleNet further improved the metrics with an accuracy of 97.45% and recall, precision, and F1 score values peaking at 97.57%, 97.33%, and 97.45%, respectively. XceptionNet continued the trend of improvement, achieving an accuracy of 98.50% and exhibiting recall, precision, and F1 score values of 98.57%, 98.42%, and 98.50%. The proposed VGG model outperformed all others, achieving an exceptional accuracy of 99.77%, with recall, precision, and F1 score values of 99.72%, 99.63%, and 99.73%, respectively, indicating its superiority in feature extraction analysis. Figure 4 depicts the feature extraction analysis.

Food classification analysis

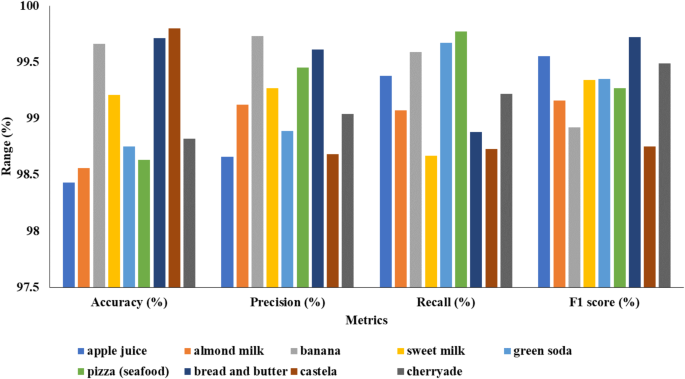

Table 2 describes the classification validation of the suggested Hybrid Transformer model using various food items.

Table 2 shows the classification validation results of the proposed Hybrid Transformer model across various classes. Each class, including apple juice, almond milk, banana, sweet milk, green soda, pizza (seafood), bread and butter, castela, and cherryade, is evaluated based on its precision, accuracy, recall, and F1 score. Apple juice achieved an accuracy of 98.43%, with precision, recall, and F1 score values of 98.66%, 99.38%, and 99.55%, respectively. Almond milk closely followed with an accuracy of 98.56%, exhibiting recall, precision, and F1 score values of 99.12%, 99.07%, and 99.16%. Banana reached an accuracy of 99.66%, with recall, precision, and F1 score values of 99.73%, 99.59%, and 98.92%. Sweet milk achieved an accuracy of 99.21%, with recall, precision, and F1 score values of 99.27%, 98.67%, and 99.34%. Green soda maintained high performance with an accuracy of 98.75%, precision of 98.89%, recall of 99.67%, and F1 score of 99.35%. Pizza (seafood) achieved an accuracy of 98.63%, exhibiting recall, precision, and F1 score values of 99.45%, 99.77%, and 99.27%. Bread and butter achieved an accuracy of 99.71%, with recall, precision, and F1 score values of 99.61%, 98.88%, and 99.72%. Castela had the highest accuracy among the listed classes at 99.80%, with precision, recall, and F1 score values of 98.68%, 98.73%, and 98.75%, respectively. Finally, cherryade demonstrated an accuracy of 98.82%, with recall, precision, and F1 score values of 99.04%, 99.22%, and 99.49%, respectively, indicating the effectiveness of the Hybrid Transformer model across diverse food classes. Figure 5 illustrates the analysis of the proposed model’s classification.

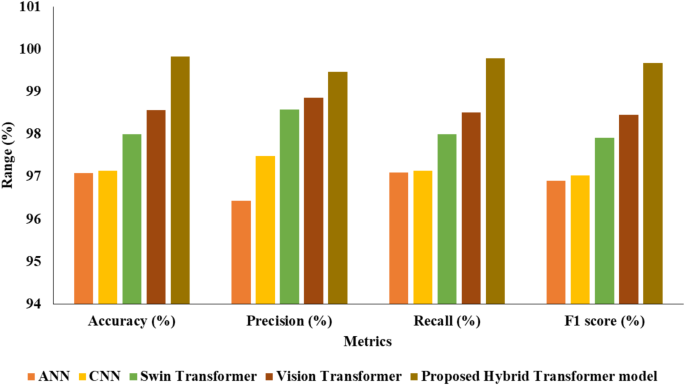

Table 3 represents the classification analysis of the suggested Hybrid Transformer model with other existing models.

Table 3 presents a comprehensive comparison of various classification models with the proposed Hybrid Transformer model. The performance metrics of each method are highlighted for comparison, including precision, accuracy, recall, and F1 score. The Artificial Neural Network (ANN) achieved an accuracy of 97.08%, with recall, precision, and F1 score values of 96.43%, 97.09%, and 96.90%, respectively. The CNN demonstrated a slightly higher accuracy of 97.14%, along with recall, precision, and F1 score values of 97.48%, 97.14%, and 97.02%, respectively. Swin Transformer improved upon these metrics, achieving an accuracy of 98.00%, with recall, precision, and F1 score values of 98.58%, 98.00%, and 97.92%, respectively. Vision Transformer further enhanced the performance, attaining an accuracy of 98.57%, with recall, precision, and F1 score values of 98.86%, 98.51%, and 98.46%, respectively. However, the proposed Hybrid Transformer model outperformed all other methods, achieving exceptional accuracy of 99.83%, with recall, precision, and F1 score values of 99.47%, 99.79%, and 99.67%, respectively, indicating its superiority in classification tasks. Figure 6 provides the comparison of classification models in graphical format.

Calorie estimation validation

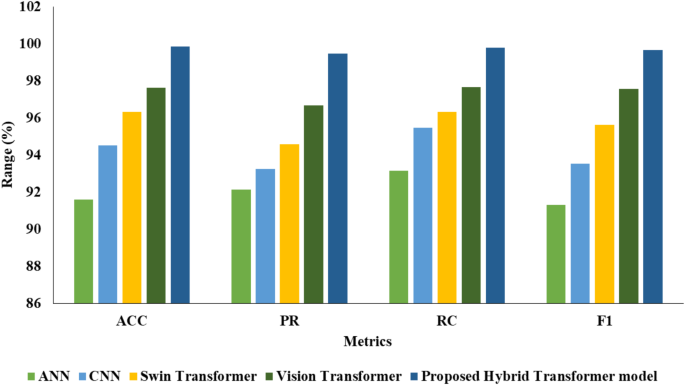

Table 4 represents the classification analysis of the proposed Hybrid Transformer model with other existing models for calorie estimation.

Table 4 provides a comparative analysis of classification models, including Artificial Neural Network (ANN), Swin Transformer, Vision Transformer, Convolutional Neural Network (CNN), and the proposed Hybrid Transformer model, focusing on their performance in calorie estimation tasks. The metrics evaluated include accuracy (ACC), precision (PR), recall (RC), and F1 score (F1). The ANN achieved an accuracy of 91.60%, with precision, recall, and F1 score values of 92.13%, 93.15%, and 91.33%, respectively. The CNN model demonstrated improved performance with an accuracy of 94.52%, along with precision, recall, and F1 score values of 93.24%, 95.47%, and 93.55%, respectively. The Swin Transformer further enhanced accuracy to 96.33%, exhibiting precision, recall, and F1 score values of 94.57%, 96.33%, and 95.62%, respectively. Vision Transformer excelled with an accuracy of 97.64%, showcasing precision, recall, and F1 score values of 96.68%, 97.65%, and 97.56%, respectively. Notably, the proposed Hybrid Transformer model outperformed all other models, achieving outstanding accuracy of 99.83%, with precision, recall, and F1 score values of 99.47%, 99.79%, and 99.67%, respectively, showcasing its effectiveness in calorie estimation tasks. Figure 7 depicts the comparison of classification models with the proposed Hybrid Transformer model for calorie estimation.

Optimization validation

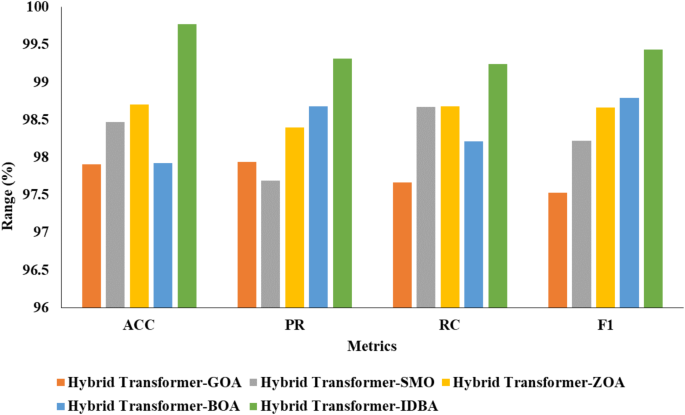

Table 5 presents the comparison of the suggested IDBA model with other existing optimization models.

Table 5 compares the suggested IDBA model with other current optimization models on the validation metrics accuracy (ACC), precision (PR), recall (RC), and F1-score (F1). The Hybrid Transformer-GOA (Grasshopper Optimization Algorithm) achieved an accuracy of 97.91%, with precision, recall, and F1 values of 97.94%, 97.67%, and 97.53%, respectively. The Hybrid Transformer-SMO (Spider Monkey Optimization) demonstrated improved performance, attaining an accuracy of 98.47%, along with PR, RC, and F1 values of 97.69%, 98.67%, and 98.22%, respectively. The Hybrid Transformer-ZOA (Zebra Optimization Algorithm) further enhanced the results, achieving an accuracy of 98.70%, with precision, recall, and F1 values of 98.40%, 98.68%, and 98.66%, respectively. The Hybrid Transformer-BOA (Bat Optimization Algorithm) exhibited competitive performance, with an accuracy of 97.92%, precision of 98.68%, recall of 98.21%, and F1 of 98.79%. Notably, the proposed Hybrid Transformer-IDBA (Improved Discrete Bat Algorithm) outperformed all other models, achieving an accuracy of 99.77%, precision of 99.31%, recall of 99.24%, and F1 of 99.43%, showcasing its effectiveness in optimization tasks. Figure 8 illustrates the optimization analysis of IDBA with other models.

Discussion

The results demonstrate the superior performance of the proposed hybrid transformer model, achieving a classification accuracy of 99.83% and outperforming state-of-the-art methods, including CNNs, Vision Transformers, and Swin Transformers. This improvement is attributed to the integration of global and local feature extraction capabilities, as supported by recent advancements in transformer-based architectures29,36. The use of Mutually Guided Image Filtering (MuGIF) significantly enhanced image quality, reducing noise and improving feature extraction consistency, consistent with findings in recent studies34,35.

Furthermore, hyperparameter optimization using the Improved Discrete Bat Algorithm (IDBA) played a critical role in fine-tuning the model parameters, yielding higher accuracy and robustness compared to other optimization techniques, as highlighted in related works37. The exceptional performance of the hybrid model across diverse food classes aligns with recent advancements in dietary monitoring systems, such as those reported in studies utilizing Vision Transformers for complex visual tasks.

These findings validate the efficacy of the proposed approach and highlight its potential for real-world applications in automated food recognition and calorie estimation. Future work will focus on expanding dataset diversity and implementing real-time capabilities to enhance model scalability and generalization.

Limitations

Despite the advancements in food recognition using deep learning (DL) techniques, several limitations persist. One major challenge is the variability in food presentation, such as differences in color, texture, and shape among various food items, which complicates the classification process. Environmental factors like lighting and camera angles can introduce noise and irregularities in food images, affecting the performance of classification models. Additionally, the scarcity of large-scale, well-annotated datasets hampers the training of robust DL models, leading to scalability and generalization issues. Another significant limitation is the difficulty in distinguishing between foods with similar appearances but different nutritional values, which is crucial for accurate dietary monitoring and calorie estimation. Furthermore, while various DL architectures have been proposed, there is insufficient research comparing their effectiveness in real-world settings, particularly in complex meal scenarios and diverse environmental conditions. To address these issues, more extensive datasets, sophisticated preprocessing techniques, and robust feature extraction methods are necessary.

Conclusion and future work

This study highlights the development of a robust hybrid transformer-based food recognition system that integrates advanced preprocessing, feature extraction, and optimization techniques. The proposed approach achieves a classification accuracy of 99.83%, demonstrating its effectiveness in addressing challenges such as variability in food presentation, environmental factors, and limited dataset quality. By leveraging Mutually Guided Image Filtering (MuGIF), the Visual Geometry Group (VGG) architecture, and a hybrid model combining Vision Transformer and Swin Transformer, this study establishes a significant advancement in automated dietary monitoring and calorie estimation. The integration of the Improved Discrete Bat Algorithm (IDBA) for hyperparameter tuning further enhances the system’s performance, making it well-suited for practical applications in nutritional research and personalized healthcare.

For future work, expanding the diversity and size of training datasets is crucial to improving model generalization across varied food types and environmental conditions. Additionally, implementing real-time processing capabilities on mobile and wearable devices could make this technology more accessible for everyday use. Exploring multimodal approaches that integrate other data sources, such as textual dietary logs or user health data, could further enhance the robustness and adaptability of food recognition systems in real-world scenarios.

Data availability

The datasets used and/or analyzed during the current study are available from the corresponding author on reasonable request.

Code availability

The code is available from the corresponding authors on request.

References

Salim, N. O., Zeebaree, S. R., Sadeeq, M. A., Radie, A. H., Shukur, H. M. & Rashid, Z. N. Study for food recognition system using deep learning. In Journal of Physics: Conference Series Vol. 1963, 012014 (IOP Publishing, 2021).

Chouhan, S. S. et al. Classification of different plant species using deep learning and machine learning algorithms. Wirel. Pers. Commun. 136, 2275–2298 (2024).

Chopra, M. & Purwar, A. Recent studies on segmentation techniques for food recognition: A survey. Arch. Comput. Methods Eng. 29(2), 865–878 (2022).

Chouhan, S. S., Singh, U. P. & Jain, S. Introduction to computer vision and drone technology. In Applications of Computer Vision and Drone Technology in Agriculture 4.0 (eds Chouhan, S. S. et al.) 200–300 (Springer, 2024).

Zhang, Q. et al. Eliminate the hardware: Mobile terminals-oriented food recognition and weight estimation system. Front. Nutr. 9, 965801 (2022).

Joshua, S. R., Shin, S., Lee, J. H. & Kim, S. K. Health to eat: A smart plate with food recognition, classification, and weight measurement for type-2 diabetic mellitus patients’ nutrition control. Sensors 23(3), 1656 (2023).

Khan, R., Kumar, S., Dhingra, N. & Bhati, N. The use of different image recognition techniques in food safety: A study. J. Food Qual. 2021, 1–10 (2021).

Sharma, P. & Sharma, A. Hybrid approach for food recognition using various filters. Int. J. Adv. Comput. Technol. 11(1), 1–5 (2022).

Nivetha, R., Yadav, R. & Hemala, R. A multi-purpose food recognition system using convolutional neural network. In 2023 International Conference on Research Methodologies in Knowledge Management, Artificial Intelligence and Telecommunication Engineering (RMKMATE) 1–5 (IEEE, 2023).

Jiang, S., Min, W., Lyu, Y. & Liu, L. Few-shot food recognition via multi-view representation learning. ACM Trans. Multimed. Comput. Commun. Appl. TOMM 16(3), 1–20 (2020).

Zhao, H., Yap, K. H., Kot, A. C. & Duan, L. Jdnet: A joint-learning distilled network for mobile visual food recognition. IEEE J. Sel. Top. Signal Process. 14(4), 665–675 (2020).

Chouhan, S. S., Singh, U. P., Sharma, U. & Jain, S. Leaf disease segmentation and classification of Jatropha curcas L. and Pongamia pinnata L. biofuel plants using computer vision based approaches. Measurement 171, 108796 (2021).

Hussain, G. Food recognition system: A new approach based on wavelet-LSTM. Sukkur IBA J. Emerg. Technol. 6(1), 35–43 (2023).

Tran, Q. L., Lam, G. H., Le, Q. N., Tran, T. H. & Do, T. H. A comparison of several approaches for image recognition used in food recommendation system. In 2021 IEEE International Conference on Communication, Networks and Satellite (COMNETSAT) 284–289 (IEEE, 2021).

Kumar, R. et al. Hybrid approach of cotton disease detection for enhanced crop health and yield. IEEE Access 12, 132495–132507. https://doi.org/10.1109/ACCESS.2024.3419906 (2024).

Khan, M. I., Acharya, B. & Chaurasiya, R. K. iHearken: Chewing sound signal analysis based food intake recognition system using Bi-LSTM softmax network. Comput. Methods Prog. Biomed. 221, 106843 (2022).

Tahir, G. A. & Loo, C. K. An open-ended continual learning for food recognition using class incremental extreme learning machines. IEEE Access 8, 82328–82346 (2020).

Rahmat, R. A. & Kutty, S. B. Malaysian food recognition using alexnet CNN and transfer learning. In 2021 IEEE 11th IEEE Symposium on Computer Applications & Industrial Electronics (ISCAIE) 59–64 (IEEE, 2021).

Sirajum Munira, S. et al. A Real-Time Junk Food Recognition System Based on Machine Learning (Springer, 2022).

Yuan, H. & Yan, M. Food object recognition and intelligent billing system based on Cascade R-CNN. In 2020 International Conference on Culture-oriented Science & Technology (ICCST) 80–84 (IEEE, 2020).

Kim, J. H., Lee, D. S. & Kwon, S. K. Food classification and meal intake amount estimation through deep learning. Appl. Sci. 13(9), 5742 (2023).

Potărniche, I. A., Saroși, C., Terebeș, R. M., Szolga, L. & Gălătuș, R. Classification of food additives using UV spectroscopy and one-dimensional convolutional neural network. Sensors 23(17), 7517 (2023).

Li, T. et al. Deep learning-based near-infrared hyperspectral imaging for food nutrition estimation. Foods 12(17), 3145 (2023).

Agarwal, R., Choudhury, T., Ahuja, N. J. & Sarkar, T. Hybrid deep learning algorithm-based food recognition and calorie estimation. J. Food Process. Preserv. (2023).

Liu, Y. C., Onthoni, D. D., Mohapatra, S., Irianti, D. & Sahoo, P. K. Deep-learning-assisted multi-dish food recognition application for dietary intake reporting. Electronics 11(10), 1626 (2022).

Mukhiddinov, M., Muminov, A. & Cho, J. Improved classification approach for fruits and vegetables freshness based on deep learning. Sensors 22(21), 8192 (2022).

Min, W., Wang, Z., Liu, Y., Luo, M., Kang, L., Wei, X., Wei, X. & Jiang, S. Large scale visual food recognition. IEEE Trans. Pattern Anal. Mach. Intell. (2023).

Abiyev, R. & Adepoju, J. Automatic food recognition using deep convolutional neural networks with self-attention mechanism. Hum. Centric Intell. Syst. 1–16 (2024).

Sheng, G. et al. A lightweight hybrid model with location-preserving ViT for efficient food recognition. Nutrients 16(2), 200 (2024).

Shams, M. Y., Hussien, A., Atiya, A., Medhat, L. & Bhatnagar, R. Food item recognition and calories estimation using YOLOv5. In International Conference on Computer & Communication Technologies 241–252 (Springer Nature Singapore, 2023).

Mohanty, S. P. et al. The food recognition benchmark: Using deep learning to recognize food in images. Front. Nutr. 9, 875143 (2022).

Puli, M. S., Surarapu, M. S. S., Prajitha, K., Shreshta, A., Reddy, G. N. & Simha Reddy, K. V. Food calorie estimation using convolutional neural network. J. Surv. Fish. Sci. 2665–2671 (2023).

Lee, K. S. Multi-spectral food classification and caloric estimation using predicted images. Foods 13(4), 551 (2024).

Zhan, Y., Hu, D., Yu, X. & Wang, Y. Hyperspectral image classification based on mutually guided image filtering. Remote Sens. 16(5), 870 (2024).

Güler, M. & Namlı, E. Brain tumor detection with deep learning methods’ classifier optimization using medical images. Appl. Sci. 14(2), 642 (2024).

Song, B. et al. Classification of mobile-based oral cancer images using the vision transformer and the Swin transformer. Cancers 16(5), 987 (2024).

Zhang, Q. et al. An improved discrete bat algorithm for multi-objective partial parallel disassembly line balancing problem. Mathematics 12(5), 703 (2024).

Funding

The authors declare that no funds, grants, or other support were received during the preparation of this manuscript.

Author information

Contributions

All authors contributed equally.

Ethics declarations

Competing interests

The authors declare no competing interests.

Ethics approval

The submitted work is original and has not been published elsewhere in any form or language.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Jagadesh, B.N., Mantena, S.V., Sathe, A.P. et al. Enhancing food recognition accuracy using hybrid transformer models and image preprocessing techniques. Sci Rep 15, 5591 (2025). https://doi.org/10.1038/s41598-025-90244-4
