Abstract

In the modern digital era, technological progress has driven cyber-attacks to unprecedented levels of diversity and intensity. Unlike legitimate network users, intruders exploit these technological developments to launch attacks that cause operational disruptions, data breaches, and financial losses. The Denial-of-Wallet (DoW) attack is an adaptation of the standard Denial-of-Service (DoS) attack. Both follow the same principle: flooding a service with requests so that it can no longer be used correctly. The goal of a DoW attack, however, is to exploit the metered computing capacity of a cloud service to cause direct financial loss. Federated Learning (FL) has emerged as a promising solution for detecting DoW attacks, as it addresses privacy concerns, reduces the risk of data breaches, and improves scalability. This manuscript presents a Cyberattack Detection Model for Denial-Of-Wallet Using Advanced Metaheuristic Optimization Algorithms in Federated Learning (CDMDoW-AMOAFL). The proposed CDMDoW-AMOAFL model aims to detect and mitigate malicious activities in a network. First, z-score normalization is applied in the data normalization stage to transform the input data into a standardized format. Next, the Harris hawk optimization (HHO) algorithm is used for feature selection to identify the most relevant features in the dataset. For cyberattack detection, an ensemble of gated recurrent unit (GRU), temporal convolutional network (TCN), and convolutional autoencoder (CAE) models is employed. Finally, the modified marine predator algorithm (MMPA) tunes the hyperparameters of the ensemble models, improving classification performance. Extensive experiments were performed on the DoW attack detection dataset to evaluate the CDMDoW-AMOAFL method.
The performance validation of the CDMDoW-AMOAFL technique showed a superior accuracy of 98.12% compared with existing models.


Introduction

The Internet of Things (IoT) uses small devices to manage and monitor various processes and has become significant to daily routines1. IoT allows connected devices to interact and communicate for a particular purpose without human intervention. These devices have a range of qualities and properties that support machine-to-machine communication, paving the way for a broad array of technologies and applications2. IoT systems face two kinds of threats: active and passive. Passive threats do not alter data but extract sensitive information without being detected. Active threats target systems and perform harmful actions that undermine the system's integrity and privacy3. Cyberattacks and cybercrimes have increased considerably in frequency over the last few years. Several cases of attackers taking control of such devices and using bots to launch DoW attacks have been reported4. Serverless computing is an application deployment model designed to offer pay-as-you-go, event-driven execution. Applications are developed as individual functions, each of which runs when an event invokes it5. Serverless function platforms provide the infrastructure to deploy code for execution in their cloud and let developers define the event-processing logic that triggers the functions to run. Because it combines pay-as-you-go billing with the ability to scale massively, serverless computing enables a possible threat, DoW: the continual, intentional mass invocation of serverless functions, which exhausts the victim financially through inflated usage bills6.

Because DoW is a financial threat, testing it on real commercial platforms is impractical. The threat is currently studied mainly in theory, as no confirmed cases of targeted DoW attacks in the wild have been reported. However, this is no guarantee that such attacks have not been carried out7. Since the only target of DoW is the application's bill payer, end users are not directly affected by the attack. As such, a business might not need to disclose that it was attacked, allowing the incident to remain secret. Artificial intelligence (AI)-based deep learning (DL) and machine learning (ML) models have attracted attention in cyber threat detection. FL is a progressive approach with substantial potential to enhance the intelligence of IoT devices while preserving their privacy and security8. It originates from traditional ML, in which model training typically relies on centralized data stores9. However, since data may be spread across a vast array of devices, this centralized approach is impractical and unsafe for IoT systems10. Furthermore, FL supports continuous learning and adaptation by allowing local model updates without persistent interaction with a centralized server.

This manuscript presents a Cyberattack Detection Model for Denial-Of-Wallet Using Advanced Metaheuristic Optimization Algorithms in Federated Learning (CDMDoW-AMOAFL). The proposed CDMDoW-AMOAFL model aims to detect and mitigate malicious activities in a network. First, z-score normalization is applied in the data normalization stage to transform the input data into a standardized format. Next, the HHO algorithm is used for feature selection to identify the most relevant features in the dataset. For cyberattack detection, an ensemble of GRU, TCN, and CAE models is employed. Finally, the MMPA tunes the hyperparameters of the ensemble models, improving classification performance. Extensive experiments were performed on the DoW attack detection dataset to evaluate the CDMDoW-AMOAFL method. The key contributions of the CDMDoW-AMOAFL method are listed below.

- The CDMDoW-AMOAFL technique employs z-score normalization for data pre-processing, ensuring that the input features are standardized. Transforming the data to a consistent scale enhances the model's performance and stability and improves the accuracy of subsequent steps, such as feature selection and model training.

- The CDMDoW-AMOAFL model uses HHO-based feature selection to identify the features most relevant to cyberattack detection. This reduces dimensionality and eliminates noise, resulting in a more efficient model with improved accuracy and generalization capability.

- The CDMDoW-AMOAFL approach integrates an ensemble of GRU, TCN, and CAE models to detect cyberattacks. This hybrid method draws on the merits of each model type, capturing both temporal dependencies and intrinsic features, which improves its ability to detect a wide range of cyberattack patterns.

- The CDMDoW-AMOAFL method uses the MMPA for hyperparameter tuning. This adaptive approach searches for the best possible configuration for cyberattack detection, improving the model's accuracy and efficiency.

- The integration of HHO-based feature selection, a GRU-TCN-CAE ensemble, and MMPA-based tuning forms a novel and effective approach to cyberattack detection. This combination improves the model's accuracy and its ability to handle diverse attack patterns, and the use of HHO for feature selection and MMPA for tuning distinguishes it from conventional methods.

Literature review

Saveetha et al.11 developed a distributed ML method, Federated ML, for detecting DDoS threats. In federated ML, however, the model itself may be compromised by malicious collaborating nodes; the paper addresses this concern by storing the model in a blockchain (BC) and introducing a novel reputation-based miner selection process. The resulting framework integrates federated ML into the BC structure to detect DDoS threats. The authors of12 propose a POA with an FL-driven Attack Detection and Classification (POAFL-DDC) model for IoT, in which a DBN is used for threat detection and the POA tunes the DBN hyperparameters. Mansouri et al.13 introduce an intelligent distributed method that uses BC and FL for intrusion detection in VANETs. Within FL, several NN techniques were applied to distribute model training among vehicles, thereby preserving confidentiality. Compared with a conventionally trained SGD model, the federated trained model achieved higher accuracy across multiple threat categories. Zainudin et al.14 developed a reliable and secure BFL-based IDS framework that uses a lightweight method to safeguard the metaverse. The federated IDS applies a hybrid client selection (HCS) model to choose higher-quality metaverse edge devices. Shirvani et al.15 developed a novel approach to strengthen IoT security systems against DDoS threats by leveraging FL, which allows various IoT devices or edge nodes to collectively train a global model while preserving data confidentiality and reducing communication costs. The proposed framework uses the collective intelligence of IoT devices for real-world threat detection without exposing sensitive data. In16, an innovative FL-based FDIA detection model is developed, in which a global detection model is created.
In the presented model, the state owners train an FL model on their own data, which avoids massive data transmission and safeguards data confidentiality. Liu et al.17 introduce an asynchronous FL arbitration framework based on BiLSTM and an attention mechanism (AsyncFL-bLAM). The AsyncFL framework supports asynchronous aggregation and uploading of the bLAM model parameters between client and leader nodes. Husnoo et al.18 introduce FedDiSC, a new communication-efficient, FL-based, privacy-preserving attack detection framework that distinguishes cyberattacks from power system disturbances. FL is used to enable the supervisory control and data acquisition sub-systems of decentralized power grid zones to collectively train a threat detection model without sharing sensitive power-related data. Finally, the proposed framework is tailored for real-time cyber threat detection in the smart grid (SG).

Saheed and Misra19 present a novel, explainable, privacy-preserving deep neural network (DNN) framework for anomaly detection in CPS-enabled IoT networks. The model uses SHapley Additive exPlanations (SHAP) to clarify the DNN's decision-making, helping users and cybersecurity experts validate system resilience. Saheed et al.20 propose a novel hybrid Autoencoder and Modified Particle Swarm Optimization (HAEMPSO) technique for feature selection, integrated with a DNN model for classification. The modified PSO with inertia weight optimization improves the DNN's accuracy and detection rate. Saheed, Omole, and Sabit21 introduced a genetic algorithm with an attention mechanism and a modified Adam-optimized LSTM (GA-mADAM-IIoT) method. Its six modules include GA for feature selection, mADAM for LSTM optimization, and CCE for cost function optimization, improving model accuracy and performance. Saheed and Chukwuere22 propose an eXplainable AI (XAI) Ensemble Transfer Learning model for detecting zero-day botnet attacks in the Internet of Vehicles (IoV). The model incorporates SHAP for transparency, bidirectional long short-term memory with autoencoders (BiLAE) for dimensionality reduction, and the Barnacle Mating Optimizer (BMO) for optimizing the hyperparameters of convolutional neural network with transfer learning (CNN-TL) architectures such as ResNet and MobileNet. Fahim-Ul-Islam et al.23 introduce the federated Kolmogorov-Arnold network (FedIoMT) method, which integrates meta-learning and advanced clustering for robust model aggregation. It also uses a novel intrusion detection model with the Kolmogorov-Arnold convolutional network (KANConvNet) as its local classifier. Fereidouni et al.24 introduce a Federated Risk-based Authentication (F-RBA) model for privacy-preserving, scalable authentication.
It also uses similarity-based feature engineering to handle heterogeneous data, enabling secure, real-time risk evaluation and swift adaptation to new users. Nguyen et al.25 propose a federated NIDS (FedNIDS), a two-stage framework combining FL and DNNs to improve attack detection, robustness, and privacy. The first stage trains a global DNN model collaboratively, while the second adapts it to detect novel attack patterns. Zou et al.26 present an edge-assisted FL (E-FPKD) approach for cyber-attack prevention in EV charging stations. The model uses feature selection, knowledge distillation, and detection correctness maximization, followed by rule-based intervention at edge servers. Loganathan and SelvakumaraSamy27 present a BC-based, smart contract-aided mechanism for secure, efficient, and anonymous privacy-preserving authentication in IoV networks. The model uses ML with HANet for feature selection and MLP/Ridge classifiers to detect malware, with parameters optimized by the Opposition Beluga Whale Optimization (OBWO) method. Khaleel et al.28 analyze adversarial attack challenges, offer recommendations, and review cybersecurity applications such as intrusion detection and ML defences, highlighting trends and gaps for improved mitigation against advanced threats.

Despite improvements in cyber-attack detection and prevention mechanisms, several limitations and research gaps remain. Many existing models struggle to handle non-IID data, which can degrade the accuracy of FL-based systems. Moreover, the reliance of some approaches on centralized data storage poses privacy and security risks. Although diverse methods have been proposed to improve model interpretability, many still struggle with the "black-box" nature of ML models. Furthermore, existing methods often lack scalability, making them unsuitable for large-scale, real-time applications. There is also a need for better optimization techniques to handle the complexity of modern IoT and vehicular networks. Future research should improve the robustness, adaptability, and transparency of these models to tackle growing threats efficiently.

The proposed methodology

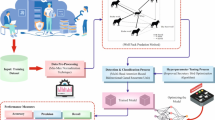

This manuscript presents a novel CDMDoW-AMOAFL model, which aims to detect and mitigate malicious activities in a network. To accomplish this, the CDMDoW-AMOAFL method performs data normalization, HHO-based feature selection, ensemble-based cyberattack detection, and MMPA-based hyperparameter selection. Figure 1 depicts the overall procedure of the CDMDoW-AMOAFL method.

Z-score normalization

First, z-score normalization is applied in the data normalization stage to transform the input data into a standardized format29. This technique was chosen for its simplicity and efficiency in standardizing data, ensuring that every feature has a mean of 0 and a standard deviation of 1. It is especially useful for datasets whose features vary in scale, as it prevents features with larger magnitudes from dominating model training. Z-score normalization can also speed up the convergence of optimization algorithms, making the learning process more stable. Compared with other normalization methods such as min-max scaling, z-score normalization is less sensitive to outliers, which matters when working with real-world data that may contain anomalies. Furthermore, it can improve generalization across diverse models and reduce the risk of overfitting. Overall, its balance of efficiency and robustness makes it a suitable choice for pre-processing in ML tasks.

Z-score normalization is a data pre-processing approach widely used in cybersecurity, including for DoW attack detection. It standardizes features by rescaling them to have a mean of 0 and a standard deviation of 1, ensuring consistent scaling across datasets. In DoW attack detection, it helps normalize transaction volume, resource-usage metrics, and request frequency, improving the anomaly detection accuracy of the models. Removing the bias introduced by differing feature scales makes it easier to recognize the subtle patterns characteristic of DoW attacks. This step is essential for improving the robustness of ML-based intrusion detection systems in cybersecurity.
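The transformation described above is \(z=(x-\mu)/\sigma\), applied per feature. The sketch below is a minimal NumPy version; the feature names in the toy data (invocation count and billed duration) are hypothetical. It fits the statistics on training data and reuses them for new samples, which is common practice so that test data is scaled consistently with training data.

```python
import numpy as np

def zscore_fit(X):
    """Compute per-feature mean and standard deviation from training data."""
    X = np.asarray(X, dtype=float)
    mu = X.mean(axis=0)
    sigma = X.std(axis=0)
    # Constant features would give sigma == 0; leave them unscaled instead of dividing by zero.
    sigma = np.where(sigma == 0.0, 1.0, sigma)
    return mu, sigma

def zscore_transform(X, mu, sigma):
    """Apply z = (x - mu) / sigma column-wise."""
    return (np.asarray(X, dtype=float) - mu) / sigma

# Hypothetical per-window features: invocation count and billed duration (ms).
X_train = np.array([[10.0, 200.0], [12.0, 220.0], [50.0, 900.0], [11.0, 210.0]])
mu, sigma = zscore_fit(X_train)
Z_train = zscore_transform(X_train, mu, sigma)
Z_new = zscore_transform([[13.0, 230.0]], mu, sigma)   # reuse the training statistics
```

In a federated setting, whether \(\mu\) and \(\sigma\) are computed per client or agreed globally is a design choice the text does not specify.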

HHO-based feature selection

Next, the proposed CDMDoW-AMOAFL technique uses the HHO algorithm for feature selection to identify the most relevant features in the dataset30. HHO was chosen for its ability to identify the most relevant features effectively while reducing dimensionality. The method mimics natural hunting strategies to explore and exploit the feature space, selecting the features that contribute most to model performance. Compared with conventional techniques such as recursive feature elimination (RFE) or filter methods, HHO-based feature selection is more robust on complex, high-dimensional datasets. By concentrating on the most influential features, it reduces the risk of overfitting and improves generalization. Moreover, the global search capability of HHO helps it escape local optima, making it effective for feature selection in challenging problems. The result is a more accurate, efficient model that requires fewer computational resources without sacrificing performance. Figure 2 illustrates the steps involved in the HHO method.

Since its introduction, HHO has been employed to solve numerous engineering problems. HHO is a metaheuristic algorithm with strong search performance. Its four exploitation strategies allow it to search the solution space both globally and locally, making it more effective than many other metaheuristics. HHO is inspired by the cooperative hunting behaviour of Harris hawks chasing their prey (a rabbit).

Initial population.

The initial population represents the initial locations of the hawks, which are generated randomly. First, the input and the total number of hawks, \(N\), are specified. Next, random hawks are created by randomly eliminating edges to generate an arbitrary graph cut. During the HHO stage, this cut is improved in every iteration, and \(T\) is the maximum number of iterations. The hawks' locations are updated in every iteration. The stages of HHO are explained below:

Stage 1: Exploration stage. This stage defines how the Harris hawks search the space for prey. Here, the hawks are the search agents, and the prey location is the best candidate solution found so far. The hawks perch according to two strategies. The first strategy (if \(q<0.5\)) locates prey according to the positions of the other hawks \(X_i,\ i=1,2,\dots,N\), where \(N\) is the total number of hawks. The second strategy (if \(q\ge 0.5\)) perches on a random tall tree, represented by a randomly selected hawk \(X_{rand}\). Both strategies are given in Eq. (1).
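Eq. (1) itself is not reproduced in this text. For reference, the exploration rule of the original HHO formulation is shown below; the paper's variant may differ in detail. Here \(X_{rabbit}\) is the best solution found so far, \(r_1,\dots,r_4\) and \(q\) are random numbers in [0, 1], \(LB\) and \(UB\) are the lower and upper bounds of the search space, and \(X_m\) is the mean hawk position:

\[
X(t+1)=\begin{cases}
X_{rand}(t)-r_1\left|X_{rand}(t)-2r_2X(t)\right|, & q\ge 0.5\\[4pt]
\left(X_{rabbit}(t)-X_m(t)\right)-r_3\left(LB+r_4\left(UB-LB\right)\right), & q<0.5
\end{cases}
\]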

\(\:{X}_{m}\) is calculated as follows.
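In the standard HHO formulation, \(X_m\) is simply the average position of the current population of \(N\) hawks:

\[
X_m(t)=\frac{1}{N}\sum_{i=1}^{N}X_i(t)
\]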

Stage 2: Transition stage. This is an intermediate phase between exploration and exploitation. It is formulated mathematically in Eq. (3).

where \(E\) denotes the escaping energy of the prey, \(E_0\) the initial energy, \(t\) the current iteration, and \(T\) the maximum number of iterations. \(E_0\) varies randomly within the interval [-1, 1].

When \(E_0\) increases from 0 to 1, the rabbit is strengthening; when it decreases from 0 to -1, the rabbit is weakening. If \(|E|\ge 1\), the algorithm performs exploration; otherwise (\(|E|<1\)), it switches to exploitation.
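To make the schedule concrete, the sketch below assumes the standard HHO energy rule \(E=2E_0(1-t/T)\) (Eq. (3) is not reproduced in this text) and maps \(|E|\) and the escape chance \(r\) to the phase or tactic that applies, using the thresholds stated in this section. The function names are hypothetical.

```python
import numpy as np

def escaping_energy(t, T, rng):
    """Standard HHO schedule E = 2 * E0 * (1 - t / T), with E0 drawn uniformly from [-1, 1)."""
    E0 = rng.uniform(-1.0, 1.0)
    return 2.0 * E0 * (1.0 - t / T)

def select_phase(E, r):
    """Return the HHO phase selected by the escaping energy E and the escape chance r."""
    if abs(E) >= 1.0:
        return "exploration"
    if r >= 0.5:
        return "soft besiege" if abs(E) >= 0.5 else "hard besiege"
    if abs(E) >= 0.5:
        return "soft besiege with progressive rapid dives"
    return "hard besiege with progressive rapid dives"

rng = np.random.default_rng(0)
T = 100
phases = [select_phase(escaping_energy(t, T, rng), rng.uniform()) for t in range(T)]
```

Because \(|E|\le 2(1-t/T)\), exploration becomes impossible once \(t>T/2\), so the search shifts gradually from exploration to exploitation.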

Stage 3: Exploitation stage. The two key elements of this stage are the hawks' chasing tactics and the prey's escaping behaviour. Four attack tactics are used, depending on the prey's remaining energy.

Tactic 1 (Soft besiege): When the rabbit still has sufficient escaping energy and tries to escape with random jumps but cannot do so effectively (\(|E|\ge 0.5\) and \(r\ge 0.5\)), the hawks softly encircle it. The calculations are expressed below:

where \(\Delta X(t)\) is the difference between the rabbit's position vector and the hawk's current position in the \(t\)-th iteration, and \(J=2(1-r_5)\) is the rabbit's random jump strength during the escape, with \(r_5\) a random number in [0, 1]. The value of \(J\) changes randomly in every iteration.
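The soft-besiege update is not reproduced in this text. In the standard HHO formulation it reads as follows, with \(X_{rabbit}(t)\) the best solution found so far:

\[
X(t+1)=\Delta X(t)-E\left|J\,X_{rabbit}(t)-X(t)\right|,\qquad \Delta X(t)=X_{rabbit}(t)-X(t)
\]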

Tactic 2 (Hard besiege): This tactic is used when the prey is exhausted and has too little energy to escape (\(|E|<0.5\) and \(r\ge 0.5\)). The current positions are updated using the following formula:
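For reference, the standard HHO hard-besiege update uses the same difference vector \(\Delta X(t)=X_{rabbit}(t)-X(t)\) as the soft besiege:

\[
X(t+1)=X_{rabbit}(t)-E\left|\Delta X(t)\right|
\]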

Tactic 3 (Soft besiege with progressive rapid dives): This tactic is used when the rabbit still has enough energy to escape (\(|E|\ge 0.5\) and \(r<0.5\)), so the hawks form a soft besiege before the surprise pounce. It is a more intelligent strategy than the plain soft besiege.

The Lévy flight concept is employed in HHO to model the prey's escape, mimicking its random jumps and zigzag movements. Its mathematical formulation is expressed below:

Here, \(D\) denotes the problem dimension, \(S\) is a random vector of size \(1\times D\), and \(LF\) is the Lévy flight function.

where \(\mu\) and \(\nu\) are random variables drawn from normal distributions, \(\mu\sim N(0,\sigma_\mu^2)\) and \(\nu\sim N(0,\sigma_\nu^2)\), and \(\beta\) is the Lévy distribution parameter, with \(1<\beta\le 2\).
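The Lévy step can be sampled with Mantegna's algorithm, which is how the original HHO description generates it. The sketch below assumes \(\sigma_\nu=1\), the closed-form \(\sigma_\mu\) used there, and the 0.01 scaling constant; the function names and the toy candidate \(Y\) are hypothetical.

```python
import math
import numpy as np

def mantegna_sigma(beta):
    """Standard deviation sigma_mu used by Mantegna's algorithm (sigma_nu is taken as 1)."""
    num = math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
    den = math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2)
    return (num / den) ** (1 / beta)

def levy_flight(D, beta=1.5, rng=None):
    """Draw a D-dimensional Levy-flight step LF(D) = 0.01 * mu / |nu|^(1/beta)."""
    if not 1.0 < beta <= 2.0:
        raise ValueError("beta must satisfy 1 < beta <= 2")
    rng = np.random.default_rng() if rng is None else rng
    mu = rng.normal(0.0, mantegna_sigma(beta), size=D)   # mu ~ N(0, sigma_mu^2)
    nu = rng.normal(0.0, 1.0, size=D)                    # nu ~ N(0, 1)
    return 0.01 * mu / np.abs(nu) ** (1 / beta)

rng = np.random.default_rng(7)
D = 4
Y = np.full(D, 0.5)                # hypothetical soft-besiege candidate
S = rng.uniform(size=D)            # random vector of size 1 x D
Z = Y + S * levy_flight(D, beta=1.5, rng=rng)   # dive candidate Z = Y + S x LF(D)
```

The heavy tail of the Lévy distribution produces mostly small steps with occasional long jumps, which is what lets the dive move escape local optima.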

The hawks then perform rapid dives to exhaust the prey and catch it. The hawks' next move is formulated as follows:

The final rule for updating the hawks' positions in this tactic is as follows:
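For reference, the standard HHO rules for this tactic are given below. The hawks first evaluate a soft-besiege move \(Y\), then a Lévy-flight dive \(Z\), and keep whichever improves the fitness \(F\) (lower is better):

\[
Y=X_{rabbit}(t)-E\left|J\,X_{rabbit}(t)-X(t)\right|
\]

\[
Z=Y+S\times LF(D)
\]

\[
X(t+1)=\begin{cases}
Y, & F(Y)<F\left(X(t)\right)\\
Z, & F(Z)<F\left(X(t)\right)
\end{cases}
\]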

Tactic 4 (Hard besiege with progressive rapid dives): This tactic applies when the escaping energy is below 0.5 (\(|E|<0.5\)) and the escape probability is below 0.5 (\(r<0.5\)). In this case, the hawks follow a specific set of rules during the hard besiege.

Here, \(X_m(t)\) is obtained using Eq. (2), and \(Z\) is obtained using Eq. (8).
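For reference, in the standard HHO formulation this tactic mirrors Tactic 3, except that the hawks move relative to their mean position \(X_m(t)\) rather than their own position:

\[
Y=X_{rabbit}(t)-E\left|J\,X_{rabbit}(t)-X_m(t)\right|
\]

\[
Z=Y+S\times LF(D)
\]

\[
X(t+1)=\begin{cases}
Y, & F(Y)<F\left(X(t)\right)\\
Z, & F(Z)<F\left(X(t)\right)
\end{cases}
\]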

The fitness function (FF) considers both the classification accuracy and the number of selected features.

where \(ErrorRate\) is the classification error rate obtained with the selected features, computed as the ratio of incorrectly classified samples to the total number of classifications and taking values in (0, 1). \(\#SF\) is the number of selected features, \(\#All\_F\) is the total number of features in the original data, and \(\alpha\) controls the relative importance of classification quality and subset length.
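The FF equation itself is not reproduced here. A weighted sum of the form \(\text{Fitness}=\alpha\cdot ErrorRate+(1-\alpha)\cdot\frac{\#SF}{\#All\_F}\) is common in wrapper feature selection and is assumed in the sketch below, as are the default \(\alpha=0.99\) and the 0.5 threshold that turns a continuous hawk position into a binary feature mask. None of these values is stated in this section.

```python
import numpy as np

def feature_mask(position, threshold=0.5):
    """Map a continuous hawk position in [0, 1]^D to a binary feature mask (assumed rule)."""
    return np.asarray(position) > threshold

def error_rate(y_true, y_pred):
    """Fraction of misclassified samples."""
    return float(np.mean(np.asarray(y_true) != np.asarray(y_pred)))

def fs_fitness(err, n_selected, n_total, alpha=0.99):
    """Weighted feature-selection fitness; lower is better."""
    if not 0.0 <= err <= 1.0:
        raise ValueError("error rate must lie in [0, 1]")
    return alpha * err + (1.0 - alpha) * n_selected / n_total

mask = feature_mask([0.9, 0.1, 0.7, 0.3])        # selects features 0 and 2
score = fs_fitness(0.05, int(mask.sum()), mask.size)
```

With \(\alpha\) close to 1, accuracy dominates and the subset-size term mainly breaks ties between equally accurate subsets.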

Ensemble of cyberattack detection

An ensemble of GRU, TCN, and CAE models is employed for cyberattack detection. These models were chosen for their complementary strengths. GRU is particularly effective at capturing temporal dependencies in sequential data, which is crucial for detecting attack behaviour that unfolds over time. TCN excels at learning long-range dependencies in time-series data, providing a deeper understanding of temporal dynamics without the vanishing gradient problem typical of traditional RNNs. CAE helps detect anomalies by learning compact representations of the data and highlighting outliers, making it effective for feature extraction and anomaly detection. By combining these three models, the ensemble draws on the strengths of each, enhancing the robustness and accuracy of cyberattack detection. This multi-model approach improves the capability to capture intrinsic patterns and adapt to diverse attacks, outperforming single-model techniques that may struggle with diverse attack behaviours.
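The section does not state how the outputs of the three models are fused. One common option, assumed here purely for illustration, is (weighted) soft voting: each member outputs class probabilities, and the ensemble averages them. The probability values and the `soft_vote` helper below are hypothetical.

```python
import numpy as np

def soft_vote(prob_list, weights=None):
    """Weighted average of (n_samples, n_classes) probability matrices from several models."""
    P = np.stack([np.asarray(p, dtype=float) for p in prob_list])   # (n_models, n, c)
    w = np.ones(len(prob_list)) if weights is None else np.asarray(weights, dtype=float)
    w = w / w.sum()
    avg = np.tensordot(w, P, axes=1)                                 # weighted mean -> (n, c)
    return avg, avg.argmax(axis=1)

# Hypothetical outputs for 2 samples and classes (benign, DoW).
p_gru = [[0.9, 0.1], [0.4, 0.6]]
p_tcn = [[0.8, 0.2], [0.3, 0.7]]
p_cae = [[0.6, 0.4], [0.7, 0.3]]
avg, labels = soft_vote([p_gru, p_tcn, p_cae])
```

Hard (majority) voting or a learned stacking layer would be equally plausible alternatives.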

GRU model

The GRU is a recurrent DL model that retains information over longer sequences than a plain RNN31. It is simpler than the LSTM: its structure contains only two gates, an update gate (\(z\)) and a reset gate (\(r\)), one fewer than the LSTM. GRU, one of the most widely used recurrent neural network (RNN) architectures today, is essentially a variant of the LSTM. It merges the LSTM's forget and input gates into a single update gate, reducing the number of trainable parameters and the training complexity. The update gate controls how much of the previous information is carried into the current state. The reset gate determines how much of the previous hidden state is combined with the current input when forming the candidate state. GRU has fewer parameters and converges faster during training. The Mean Absolute Error (MAE) loss function is used to measure the difference between the predicted and actual values. The relationships among the relevant quantities are given by the following equations.

where \(r\) and \(z\) are the reset and update gates, respectively; \(h_t\) and \(\tilde{h}_t\) are the hidden state and the candidate hidden state, respectively; \(W_z\) and \(W_r\) are trainable weight parameters; and \(b_z\) and \(b_r\) are bias vectors. These gates control the information flow, allowing the network to retain relevant information over arbitrary time periods.
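Because the GRU equations are not reproduced here, the sketch below uses the standard GRU formulation: \(z_t=\sigma(W_zx_t+U_zh_{t-1}+b_z)\), \(r_t=\sigma(W_rx_t+U_rh_{t-1}+b_r)\), \(\tilde{h}_t=\tanh(W_hx_t+U_h(r_t\odot h_{t-1})+b_h)\), and \(h_t=(1-z_t)\odot h_{t-1}+z_t\odot\tilde{h}_t\). The recurrent weights \(U_*\) and the candidate-state parameters \(W_h, b_h\) are standard but not named in the text, and some references swap the roles of \(z_t\) and \(1-z_t\). The MAE helper matches the loss named above; the dimensions are arbitrary.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def gru_step(x_t, h_prev, p):
    """One GRU time step; p holds input weights W_*, recurrent weights U_* and biases b_*."""
    z = sigmoid(p["W_z"] @ x_t + p["U_z"] @ h_prev + p["b_z"])             # update gate
    r = sigmoid(p["W_r"] @ x_t + p["U_r"] @ h_prev + p["b_r"])             # reset gate
    h_cand = np.tanh(p["W_h"] @ x_t + p["U_h"] @ (r * h_prev) + p["b_h"])  # candidate state
    return (1.0 - z) * h_prev + z * h_cand                                 # new hidden state

def mae(y_true, y_pred):
    """Mean Absolute Error between targets and predictions."""
    return float(np.mean(np.abs(np.asarray(y_true) - np.asarray(y_pred))))

rng = np.random.default_rng(0)
n_in, n_hid = 3, 4
p = {f"W_{g}": rng.normal(scale=0.1, size=(n_hid, n_in)) for g in "zrh"}
p.update({f"U_{g}": rng.normal(scale=0.1, size=(n_hid, n_hid)) for g in "zrh"})
p.update({f"b_{g}": np.zeros(n_hid) for g in "zrh"})

h = np.zeros(n_hid)
for x_t in rng.normal(size=(5, n_in)):   # a toy sequence of 5 time steps
    h = gru_step(x_t, h, p)
```

Since \(h_t\) is a convex combination of \(h_{t-1}\) and a \(\tanh\) output, the hidden state stays within (-1, 1) when it starts at zero.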

TCN classifier

The TCN is built from three basic components, namely residual connections, causal convolution, and dilated convolution, which combine the benefits of an RNN and a CNN32. It effectively avoids the exploding and vanishing gradient problems that frequently occur in RNNs. The TCN emphasizes temporal relationships in time-series prediction. Given an input sequence \(X^{\tau+1}=x_0,x_1,\dots,x_\tau\) and the corresponding target output sequence \(Y^{\tau+1}=y_0,y_1,\dots,y_\tau\), the predicted output \(\hat{y}_t\) at time step \(t\) depends only on the inputs up to and including time \(t\), i.e., \(x_0,x_1,\dots,x_t\). This ensures that every prediction is based only on the data observed up to that point, and the output prediction sequence is given by

\[
\hat{y}_0,\hat{y}_1,\dots,\hat{y}_\tau=F_\theta\left(x_0,x_1,\dots,x_\tau\right)
\]

where \(F_\theta(\cdot)\) denotes the forward propagation of the network, and \(\theta\) represents the network parameters.

This architecture ensures that no information leaks from the future into the past. The convolutional layers of the TCN skip inputs at a fixed step size (the dilation) during \(Conv\), so that a large receptive field and longer time-series dependencies are obtained for the same output size. Given a \(1D\) input sequence \(x\in\mathbb{R}^n\) and a \(Conv\) filter \(f:\{0,\dots,k-1\}\to\mathbb{R}\), the dilated \(Conv\) operation \(F\) on element \(s\) of the sequence is defined as:

\[
F(s)=\left(x*_{d}f\right)(s)=\sum_{j=0}^{k-1}f(j)\cdot x_{s-d\cdot j}
\]

where \(k\) is the \(Conv\) kernel size, and \(s-d\cdot j\) indexes past data. The dilation factor \(d\) controls the spacing between adjacent kernel taps (equivalently, \(d-1\) zeros are inserted between them). As successive convolutional layers are applied to the input sequence, \(d\) grows exponentially (for example, \(d=2^i\) at layer \(i\)).
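A minimal NumPy sketch of the dilated causal convolution \(F(s)=\sum_{j=0}^{k-1}f(j)\,x_{s-d\cdot j}\) is shown below. Positions before the start of the sequence are treated as zeros (left padding), which is the usual way to keep the output causal and the same length as the input; the function name is hypothetical.

```python
import numpy as np

def dilated_causal_conv1d(x, f, d):
    """F(s) = sum_{j=0}^{k-1} f[j] * x[s - d*j]; indices before the start are zero-padded."""
    x = np.asarray(x, dtype=float)
    f = np.asarray(f, dtype=float)
    k = f.size
    pad = d * (k - 1)
    xp = np.concatenate([np.zeros(pad), x])   # left padding only, so no future values are used
    out = np.empty_like(x)
    for s in range(x.size):
        # In padded coordinates, x[s - d*j] sits at index s + pad - d*j.
        out[s] = sum(f[j] * xp[s + pad - d * j] for j in range(k))
    return out

y = dilated_causal_conv1d([1, 2, 3, 4, 5], f=[1.0, 1.0], d=2)   # y[s] = x[s] + x[s-2]
y_changed = dilated_causal_conv1d([1, 2, 3, 4, 50], f=[1.0, 1.0], d=2)
```

Changing the last input affects only the last output, which illustrates causality. Stacking layers with \(d=1,2,4,\dots\) gives a receptive field of \(1+(k-1)\sum_i d_i\) time steps.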

However, as the number of network layers increases, problems such as gradient attenuation or vanishing gradients may occur, particularly in deeper networks handling complex time-series data. To address this, carefully designed residual blocks are incorporated into the TCN. These blocks use dilated causal convolutions to enlarge the receptive field while keeping the computational complexity manageable and preserving the connectivity needed for sequence modelling. Each residual block comprises several dilated convolution layers, \(ReLU\) activation functions, weight normalization, and dropout for improved stability and regularization. Moreover, the residual block incorporates inverse and positive residual elements, allowing the method to capture bidirectional temporal dependencies effectively. Skip connections use \(1\times 1\) convolutions to map the input features directly to the output, adjusting the dimensionality where required. In this residual block, let \(x\) be the output of the preceding layer and \(F(x)\) the result of the operations performed by the current layer. The sum of \(F(x)\) and \(x\) is then passed through the \(ReLU\) activation function to obtain the final output \(y\). This is expressed as

\[
y=\mathrm{ReLU}\left(x+F(x)\right)
\]

where \(x\) is the input from the preceding layer, \(F(x)\) is the transformed output of the current layer, and \(y\) is the final output after the \(ReLU\) activation. The residual connection ensures that the output of the current layer is the sum of the transformed features and the original input features, allowing the network to learn identity mappings easily when required.
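The residual combination \(y=\mathrm{ReLU}(x+F(x))\) can be sketched as follows for arrays shaped (channels, time). The optional `W_skip` matrix, a hypothetical name, plays the role of the \(1\times 1\) convolution mentioned above: the same linear channel map applied at every time step, used only when \(F(x)\) has a different channel count than \(x\). Computing \(F(x)\) itself (dilated convolutions, weight normalization, dropout) is left out.

```python
import numpy as np

def relu(a):
    return np.maximum(a, 0.0)

def residual_output(x, Fx, W_skip=None):
    """y = ReLU(skip(x) + F(x)) for arrays shaped (channels, time).

    W_skip (out_channels x in_channels) acts as a 1x1 convolution on the skip path.
    """
    skip = x if W_skip is None else W_skip @ x
    if skip.shape != Fx.shape:
        raise ValueError("skip path and F(x) must have the same shape")
    return relu(skip + Fx)

x = np.array([[1.0, -2.0]])                      # 1 channel, 2 time steps
y_same = residual_output(x, np.array([[0.5, 1.0]]))
W_skip = np.array([[1.0], [2.0]])                # hypothetical 1x1 projection: 1 -> 2 channels
y_proj = residual_output(x, np.zeros((2, 2)), W_skip)
```

If \(F(x)\) learns to output zeros, the block reduces to \(\mathrm{ReLU}(x)\), which is why residual blocks make near-identity mappings easy to learn.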

CAE technique

The CAE method is applied in the classification model33. This approach was chosen because it efficiently captures hierarchical patterns and spatial dependencies in the data. Unlike standard AEs, it uses \(Conv\) layers to handle intricate data structures, particularly images, where local relations among pixels are essential. This allows the CAE to learn important representations from the spatial structure of the features. CAEs can also be adapted to textual data, such as sequences of characters or words, by applying the \(Conv\) operation over these sequences to capture syntactic and semantic patterns effectively. These capabilities make CAEs suitable for tasks such as machine translation, sentiment analysis, and text generation. In addition, CAEs are more robust to noise when processing audio data: they can denoise the signal and extract relevant features despite contextual distortions. Figure 3 shows the architecture of the CAE.

An AE is a self-supervised learning model comprising an encoder and a decoder that extracts deep features. It is built from neural networks (NNs): the AE is an ANN with three layers linked in sequence, namely the input, hidden (HL), and output layers, and it is trained in an unsupervised learning (USL) manner. Given an unlabelled input dataset \(\{x_n\}\), where \(n=1,2,\dots,N\) and \(x_n\in\mathbb{R}^m\), the two stages are specified as follows:

\[
h(x)=f\left(W_1x+b_1\right),\qquad \hat{x}=g\left(W_2h(x)+b_2\right)
\]

where \(h(x)\) is the encoding vector obtained from the input vector \(x\), and \(\hat{x}\) is the decoder output (the reconstruction of \(x\)). Moreover, \(f\) and \(g\) are the encoder and decoder functions, and \(W_1, b_1\) and \(W_2, b_2\) are the weight matrices and bias vectors of the encoder and decoder, respectively. Stacking multiple AE layers is promising, as it yields useful higher-level features with properties such as abstraction and invariance. Training aims to minimize the reconstruction error; the lower this error, the better the learned representation.
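The encode/decode pair can be sketched in a few lines. This is a minimal, untrained example assuming a sigmoid encoder \(f\), an identity decoder \(g\), and mean squared error as the reconstruction error; none of these choices is specified in the text. In anomaly detection, samples whose reconstruction error is unusually high for a trained AE are flagged as suspicious.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def encode(x, W1, b1):
    return sigmoid(W1 @ x + b1)          # h(x) = f(W1 x + b1), f assumed sigmoid

def decode(h, W2, b2):
    return W2 @ h + b2                   # x_hat = g(W2 h + b2), g assumed identity

def reconstruction_error(x, x_hat):
    return float(np.mean((x - x_hat) ** 2))

rng = np.random.default_rng(1)
m, k = 6, 3                              # input size m, bottleneck size k < m
W1, b1 = rng.normal(scale=0.1, size=(k, m)), np.zeros(k)
W2, b2 = rng.normal(scale=0.1, size=(m, k)), np.zeros(m)
x = rng.normal(size=m)
h = encode(x, W1, b1)
x_hat = decode(h, W2, b2)
err = reconstruction_error(x, x_hat)
```

Training would adjust \(W_1, b_1, W_2, b_2\) (for example, by gradient descent) to minimize `err` over the training set.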

A CAE is a type of AE that incorporates convolution kernels into the NN. The 1D-CAE has a strong reconstruction capability and effectively extracts deep features from high-dimensional data.

Convolutional layer: Given an input \(\:X\in\:{R}^{L}\), the 1D \(\:Conv\) layer applies \(\:K\) \(\:Conv\) kernels \(\:{\omega\:}_{i}\in\:{R}^{w}\:(i=1,2,\dots\:,K)\) of width \(\:w\) to perform the convolution operation, as stated in Eq. (24).

Here, \(\:b\) denotes the bias, \(\:\odot\:\) signifies the convolution of the kernel with the input, and \(\:f\) is the activation function.
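The convolution in Eq. (24) can be illustrated with a small sketch. It assumes that, as in common deep learning libraries, the convolution is computed as a cross-correlation, that zero "same" padding keeps each output row at length \(L\) (so the feature map is \(K\times L\), matching the \(T\) used by the pooling layer), and that \(f\) is ReLU. These are illustrative choices, not details confirmed by the paper.

```python
def relu(z):
    return max(0.0, z)


def conv1d_same(x, kernels, biases, f=relu):
    """Apply K kernels (each of width w) to a length-L input x.

    Zero 'same' padding keeps every output row at length L, so the
    result is a K x L feature map T with
    T[i][j] = f(sum_k kernels[i][k] * x_pad[j + k] + biases[i]).
    """
    L = len(x)
    T = []
    for w_i, b_i in zip(kernels, biases):
        width = len(w_i)
        left = (width - 1) // 2
        right = width - 1 - left
        x_pad = [0.0] * left + list(x) + [0.0] * right
        T.append([f(sum(w_i[k] * x_pad[j + k] for k in range(width)) + b_i)
                  for j in range(L)])
    return T


if __name__ == "__main__":
    x = [1.0, 2.0, 3.0, 4.0]
    kernels = [[1.0, 0.0, -1.0], [0.5, 0.5, 0.5]]  # K = 2 kernels of width w = 3
    print(conv1d_same(x, kernels, [0.0, 0.0]))
```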

Pooling layer: Given \(\:T\in\:{R}^{K\times\:L}\), the widely used max-pooling operation is adopted as the pooling model, as given in Eq. (25).

Here, \(\:S\) signifies the stride, \(\:W\) stands for the pooling window width, and \(\:{T}_{i}\) represents the \(\:i\)th feature tensor.
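A minimal sketch of max pooling follows, assuming Eq. (25) takes the usual form in which output position \(j\) of row \(T_i\) is the maximum over a window of width \(W\) starting at index \(j\cdot S\) (no padding).

```python
def max_pool1d(T, window, stride):
    """Max-pool each row T_i of a K x L feature map.

    Output position j covers T_i[j*stride : j*stride + window], so each
    pooled row has floor((L - window) / stride) + 1 entries.
    """
    pooled = []
    for row in T:
        starts = range(0, len(row) - window + 1, stride)
        pooled.append([max(row[s:s + window]) for s in starts])
    return pooled


if __name__ == "__main__":
    T = [[1, 3, 2, 5, 4, 0], [0, -1, 7, 2, 2, 9]]
    print(max_pool1d(T, window=2, stride=2))
```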

Hyperparameter selection using MMPA

Finally, the MMPA optimally adjusts the hyperparameter values of the ensemble models, resulting in better classification performance [34]. This model is chosen for its efficient global search capability, which helps identify the optimal set of hyperparameters. MMPA replicates the hunting behaviour of marine predators, allowing it to explore the search space effectively while avoiding local optima, a common issue in conventional optimization methods. Unlike grid or random search, MMPA adapts to the complexity of the problem, providing a more refined search process that leads to improved performance. It also balances exploration and exploitation, making it appropriate for tuning hyperparameters in high-dimensional, nonlinear settings. Its robustness, flexibility, and capability to handle large search spaces give it a significant advantage over simpler methods such as gradient-based optimization or basic evolutionary algorithms. As a result, MMPA improves the model's accuracy, stability, and efficiency, making it a valuable tool for fine-tuning complex models. Figure 4 depicts the overall architecture of the MMPA technique.

This section first presents the standard MPA and then the MMPA, which introduces four modifications to the standard MPA. Finally, the implementation steps of the MMPA are explained.

In the standard MPA, the prey matrix \(\:P\) and the elite matrix \(\:E\) are defined as follows:

Here, \(\:N\) denotes the population size and \(\:D\) the dimension of the search space. The element \(\:{X}_{i,j}\) of matrix \(\:P\) is the \(\:j\)th dimension of the \(\:i\)th prey. \(\:{X}_{B}\) denotes the top predator vector, i.e., the one with the best fitness, which is replicated \(\:N\) times to build the elite matrix \(\:E\). The initial position \(\:{X}_{0}\) in MPA is random and is obtained from the following equation.

Here, \(\:{X}_{\text{max}}\) and \(\:{X}_{\text{min}}\) denote the upper and lower bounds of the search space, respectively, and \(\:rand\) is a uniformly distributed random number in \(\:\left[0,1\right]\).
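The random initialization \(X_0 = X_{\min} + rand\,(X_{\max}-X_{\min})\) and the construction of the elite matrix can be sketched as follows. The sketch assumes that fitness is minimized (as with an error-rate objective) and uses a toy sphere function purely for illustration.

```python
import random


def init_population(n, x_min, x_max, rng):
    """Random initialization X0 = X_min + rand * (X_max - X_min), per dimension."""
    dim = len(x_min)
    return [[x_min[j] + rng.random() * (x_max[j] - x_min[j]) for j in range(dim)]
            for _ in range(n)]


def build_elite(prey, fitness):
    """Elite matrix E: the top predator X_B (lowest fitness) replicated N times."""
    best = min(prey, key=fitness)
    return [list(best) for _ in prey]


if __name__ == "__main__":
    rng = random.Random(42)
    P = init_population(5, [0.0, -1.0], [1.0, 1.0], rng)  # N = 5 prey, D = 2
    E = build_elite(P, fitness=lambda v: sum(c * c for c in v))  # toy sphere objective
    print(E[0])
```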

Phase 1: The prey moves faster than the predator, which corresponds to the exploration phase. The predator's best strategy is to stay still, whereas the prey searches widely for the optimum.

This phase applies while \(\:Iter<Max\_Iter/3\).

Here, \(\:Iter\) denotes the current iteration and \(\:Max\_Iter\) the maximum number of iterations. \(\:Stepsiz{e}_{i}\) is the movement step of the \(\:i\)th individual, \(\:{M}_{B}\) is a vector of normally distributed random numbers representing Brownian motion, \(\:\otimes\:\) denotes the entry-wise product of two vectors, \(\:{R}_{v}\) is a vector of uniform random numbers between 0 and 1, and \(\:\alpha\:\) is a constant set to 0.5.
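One phase-1 move can be sketched as below. The sketch assumes the standard MPA form \(Stepsize_i = M_B \otimes (E_i - M_B \otimes P_i)\) followed by \(P_i \leftarrow P_i + \alpha R_v \otimes Stepsize_i\), with \(M_B\) drawn from a standard normal distribution; the function name is hypothetical.

```python
import random


def phase1_update(prey_i, elite_i, rng, alpha=0.5):
    """One exploration move of prey i (standard MPA phase-1 form, assumed)."""
    dim = len(prey_i)
    m_b = [rng.gauss(0.0, 1.0) for _ in range(dim)]  # Brownian steps ~ N(0, 1)
    r_v = [rng.random() for _ in range(dim)]         # uniform random vector R_v
    # Stepsize_i = M_B (x) (E_i - M_B (x) P_i), entry-wise products
    step = [m_b[j] * (elite_i[j] - m_b[j] * prey_i[j]) for j in range(dim)]
    # P_i <- P_i + alpha * R_v (x) Stepsize_i
    return [prey_i[j] + alpha * r_v[j] * step[j] for j in range(dim)]


if __name__ == "__main__":
    rng = random.Random(0)
    print(phase1_update([0.2, 0.8, 0.5], [0.4, 0.4, 0.4], rng))
```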

Phase 2: The predator and prey move at approximately the same speed. This is a transition phase in which the prey is responsible for exploitation, whereas the predator is responsible for exploration. The population is therefore divided into two halves, one used for exploitation and the other for exploration.

This phase applies while \(\:Max\_Iter/3<Iter<2\,Max\_Iter/3\).

\(\:{M}_{L}\) denotes a vector of random numbers drawn from the Lévy flight (LF) distribution. The formula for \(\:\omega\:\) is given as follows:

\(\:\omega\:\) acts as an adaptive factor that controls the step size of the predator's movement. Its value decreases as the iteration count increases, which strengthens the exploitation capability of the model.
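The formula for \(\omega\) is not reproduced in this text. Assuming it follows the standard MPA adaptive factor \(\omega = (1 - Iter/Max\_Iter)^{2\,Iter/Max\_Iter}\), its decay from 1 at the first iteration to 0 at the last can be checked with this short sketch.

```python
def adaptive_factor(it, max_iter):
    """omega = (1 - it/max_iter) ** (2 * it / max_iter), the standard MPA form (assumed)."""
    ratio = it / max_iter
    return (1.0 - ratio) ** (2.0 * ratio)


if __name__ == "__main__":
    for it in (0, 100, 200, 300):
        print(it, round(adaptive_factor(it, 300), 4))
```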

Phase 3: In the last phase of the optimization process, the predator moves faster than the prey. In the following equations, small step sizes are added to the elite position through LF to search for nearby positions with better fitness values.

This phase applies while \(\:Iter>2\,Max\_Iter/3\).
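Phases 2 and 3 can be sketched together, assuming the standard MPA update forms: in phase 2, the first half of the population moves as \(P_i + \alpha R_v \otimes [M_L \otimes (E_i - M_L \otimes P_i)]\) and the second half as \(E_i + \alpha\omega[M_B \otimes (M_B \otimes E_i - P_i)]\); in phase 3, every individual moves as \(E_i + \alpha\omega[M_L \otimes (M_L \otimes E_i - P_i)]\). The Lévy steps use Mantegna's algorithm with \(\beta = 1.5\), a common choice that the paper does not state, and iterations falling exactly on \(Max\_Iter/3\) or \(2\,Max\_Iter/3\) are assigned to the later phase. All function names are hypothetical.

```python
import math
import random


def levy_vector(dim, rng, beta=1.5):
    """Levy-flight steps via Mantegna's algorithm (exponent beta)."""
    sigma = (math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
             / (math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2))) ** (1 / beta)
    steps = []
    for _ in range(dim):
        u = rng.gauss(0.0, sigma)
        v = max(abs(rng.gauss(0.0, 1.0)), 1e-12)  # guard against division by zero
        steps.append(u / v ** (1 / beta))
    return steps


def phase_of(it, max_iter):
    """Phase 1: it < T/3, phase 2: T/3 <= it < 2T/3, phase 3: otherwise."""
    if it < max_iter / 3:
        return 1
    return 2 if it < 2 * max_iter / 3 else 3


def update_phase2_3(prey, elite, it, max_iter, rng, alpha=0.5):
    """Standard MPA updates for phases 2 and 3 (assumed forms)."""
    phase = phase_of(it, max_iter)
    if phase == 1:
        raise ValueError("phase 1 uses the Brownian exploration rule instead")
    n, dim = len(prey), len(prey[0])
    omega = (1 - it / max_iter) ** (2 * it / max_iter)  # adaptive factor
    new = []
    for i, (p, e) in enumerate(zip(prey, elite)):
        if phase == 2 and i < n // 2:
            # Phase 2, first half: Levy moves around the prey itself.
            m_l = levy_vector(dim, rng)
            r_v = [rng.random() for _ in range(dim)]
            step = [m_l[j] * (e[j] - m_l[j] * p[j]) for j in range(dim)]
            new.append([p[j] + alpha * r_v[j] * step[j] for j in range(dim)])
        elif phase == 2:
            # Phase 2, second half: Brownian moves around the elite, scaled by omega.
            m_b = [rng.gauss(0.0, 1.0) for _ in range(dim)]
            step = [m_b[j] * (m_b[j] * e[j] - p[j]) for j in range(dim)]
            new.append([e[j] + alpha * omega * step[j] for j in range(dim)])
        else:
            # Phase 3: small Levy steps around the elite, scaled by omega.
            m_l = levy_vector(dim, rng)
            step = [m_l[j] * (m_l[j] * e[j] - p[j]) for j in range(dim)]
            new.append([e[j] + alpha * omega * step[j] for j in range(dim)])
    return new


if __name__ == "__main__":
    rng = random.Random(0)
    prey = [[rng.random() for _ in range(3)] for _ in range(4)]
    elite = [list(prey[0]) for _ in prey]
    print(update_phase2_3(prey, elite, it=150, max_iter=300, rng=rng)[0])
```

Because \(\omega\) reaches 0 at the final iteration, the phase-3 rule collapses every individual onto the elite position, which is the intended shift toward pure exploitation.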

In addition, environmental effects such as eddy formation and fish aggregating devices (FADs) must be considered, because they can change the behaviour of marine predators. The effect of FADs is formulated mathematically as follows:

Here, \(\:U\) is a binary vector of 0s and 1s, FADs is a constant set to 0.2 that represents the probability of the FADs effect, and \(\:r\) is a uniform random value in \(\:(0,1)\).
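The FADs perturbation can be sketched as follows, assuming the standard MPA formulation: if \(r \le FADs\), then \(P_i \leftarrow P_i + \omega[X_{\min} + R \otimes (X_{\max} - X_{\min})] \otimes U\); otherwise \(P_i \leftarrow P_i + [FADs(1 - r) + r](P_{r1} - P_{r2})\), where \(P_{r1}\) and \(P_{r2}\) are randomly selected prey. The boundary check that MPA implementations typically apply afterwards is omitted here.

```python
import random


def fads_effect(prey, x_min, x_max, omega, rng, fads=0.2):
    """Apply the FADs / eddy-formation perturbation (standard MPA form, assumed)."""
    n, dim = len(prey), len(prey[0])
    r = rng.random()
    new = []
    for p in prey:
        if r <= fads:
            # Long jump: U is a binary vector with U_j = 1 with probability FADs.
            u = [1.0 if rng.random() < fads else 0.0 for _ in range(dim)]
            new.append([p[j] + omega * (x_min[j] + rng.random() * (x_max[j] - x_min[j])) * u[j]
                        for j in range(dim)])
        else:
            # Eddy-like move along the difference of two randomly chosen prey.
            a, b = rng.randrange(n), rng.randrange(n)
            scale = fads * (1 - r) + r
            new.append([p[j] + scale * (prey[a][j] - prey[b][j]) for j in range(dim)])
    return new


if __name__ == "__main__":
    rng = random.Random(3)
    prey = [[rng.random(), rng.random()] for _ in range(4)]
    print(fads_effect(prey, [0.0, 0.0], [1.0, 1.0], omega=0.5, rng=rng))
```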

Tent mapping-based initialization

In the presented MMPA model, tent mapping is incorporated into the initialization stage to improve the search capability of the initial phase. Using the tent map increases population diversity and makes the initial distribution of individuals more uniform.

Here, \(\:I\) denotes the chaotic sequence in the range \(\:\left[0,1\right]\), \(\:n\) denotes the \(\:n\)th dimension, \(\:{I}_{0}\) is a random initial value, and \(\:\epsilon\:\) is a constant set to 0.5. The tent map is formulated as follows:

The initial position \(\:{X}_{0}\) is then obtained from the mapped values as follows:
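A minimal sketch of tent-map initialization follows. It assumes the standard tent map \(I_{n+1} = I_n/\epsilon\) if \(I_n < \epsilon\), otherwise \((1 - I_n)/(1 - \epsilon)\), one chaotic value per dimension, and \(X_0 = X_{\min} + I\,(X_{\max} - X_{\min})\). One caveat: with \(\epsilon = 0.5\), binary floating-point arithmetic loses one bit per iteration, so very long sequences eventually collapse to 0; this is harmless for the small number of dimensions in a hyperparameter search.

```python
import random


def tent_sequence(length, i0, eps=0.5):
    """Chaotic tent-map sequence I_0, I_1, ..., one value per dimension."""
    seq, cur = [], i0
    for _ in range(length):
        seq.append(cur)
        cur = cur / eps if cur < eps else (1.0 - cur) / (1.0 - eps)
    return seq


def tent_init(n, x_min, x_max, rng, eps=0.5):
    """Initial population X0 = X_min + I * (X_max - X_min) from tent-map values."""
    dim = len(x_min)
    pop = []
    for _ in range(n):
        chaos = tent_sequence(dim, rng.random(), eps)  # random I_0 per individual
        pop.append([x_min[j] + chaos[j] * (x_max[j] - x_min[j]) for j in range(dim)])
    return pop


if __name__ == "__main__":
    print(tent_init(3, [0.0, 0.0, 0.0], [1.0, 10.0, 100.0], random.Random(0)))
```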

Hybrid search tactic

The complete optimization procedure of the standard MPA is divided into three phases. However, only a single motion type is applied in phases 1 and 3. To address this limitation, a hybrid search strategy, which combines random-movement exploration with exploitation of the optimal individual, is introduced into the search procedure of the proposed MMPA model. It is formulated mathematically as follows:

Here, \(\:Q\) denotes a vector of uniform random numbers in \(\:\left[0,1\right]\), \(\:A\) is a fixed \(\:D\)-dimensional vector, and \(\:C\) is the adaptive control factor, given by:

As the iteration count increases, the adaptive control factor \(\:C\) adjusts the size of the search range. This strategy is applied in phases 1 and 3 of MMPA, but under different conditions. In phase 1, the condition for Eq. (42) is \(\:i=N/2,\dots\:,N\): the first half of the population explores using Eq. (30), whereas the second half explores using Eq. (43). In phase 3, the condition is governed by the mutation probability \(\:R\), a random value in \(\:\left[0,1\right]\): if \(\:R>0.8\), Eq. (43) is applied; otherwise, Eq. (37) is used.
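The rule-selection logic described above can be sketched as follows. The rule names are hypothetical labels, and the hybrid update itself is not reproduced because its exact form is not given in this text; the sketch only shows which rule each individual uses, with 0-based indices so that the second half is \(i = N/2, \dots, N-1\).

```python
import random


def choose_rule(phase, i, n, rng, mutation_threshold=0.8):
    """Pick the update rule for individual i (0-based) in a population of size n.

    Phase 1: first half -> standard Brownian rule (Eq. 30), second half -> hybrid rule.
    Phase 3: hybrid rule if a random R exceeds 0.8, else standard Levy rule (Eq. 37).
    Phase 2: unchanged standard MPA rule.
    """
    if phase == 1:
        return "standard_phase1" if i < n // 2 else "hybrid"
    if phase == 3:
        return "hybrid" if rng.random() > mutation_threshold else "standard_phase3"
    return "standard_phase2"


if __name__ == "__main__":
    rng = random.Random(0)
    print([choose_rule(1, i, 6, rng) for i in range(6)])
    draws = [choose_rule(3, 0, 6, rng) for _ in range(10000)]
    print(draws.count("hybrid") / len(draws))  # close to 0.2
```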

Golden sine guiding mechanism

This mechanism originates from the golden sine algorithm (GSA) and is incorporated into the presented MMPA to enhance its convergence capability. The GSA introduces a golden section coefficient \(\:\tau\:\) into the sine model, which improves the convergence rate and strengthens the optimization capability. The update procedure is stated mathematically as:

\(\:{H}_{1}\) is a random value in the range \(\:(0,2\pi\:)\), whereas \(\:{H}_{2}\) is a random value in the range \(\:(0,\pi\:)\). \(\:{O}_{i}^{t}\) denotes the best position of the \(\:i\)th individual at the \(\:t\)th iteration. \(\:{G}_{1}\) and \(\:{G}_{2}\) denote the golden-sine coefficients, formulated as:

Here, \(\:{\omega\:}_{1}\) and \(\:{\omega\:}_{2}\) are the constants \(\:\pi\:\) and \(\:-\pi\:\), respectively, and the golden section coefficient \(\:\tau\:\) equals \(\:(\sqrt{5}-1)/2\approx\:0.618\).
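The golden sine step can be sketched as below. It assumes the update \(X_i^{t+1} = X_i^t|\sin H_1| - H_2\sin(H_1)\,|G_1O_i^t - G_2X_i^t|\) and the standard GSA coefficients \(G_1 = a(1-\tau) + b\tau\), \(G_2 = a\tau + b(1-\tau)\) with \(a = \omega_2 = -\pi\) and \(b = \omega_1 = \pi\), drawing one \(H_1\) and one \(H_2\) per individual. Both the coefficient mapping and the sampling granularity are assumptions, since the paper's formulas are not reproduced here.

```python
import math
import random

TAU = (math.sqrt(5) - 1) / 2  # golden section coefficient, about 0.618


def golden_coefficients(w1=math.pi, w2=-math.pi, tau=TAU):
    """G1, G2 in the standard GSA form, taking a = w2 = -pi and b = w1 = pi (assumed)."""
    g1 = w2 * (1 - tau) + w1 * tau
    g2 = w2 * tau + w1 * (1 - tau)
    return g1, g2


def golden_sine_update(x, best, rng):
    """X^{t+1} = X^t |sin H1| - H2 sin(H1) |G1 O^t - G2 X^t|, entry-wise."""
    g1, g2 = golden_coefficients()
    h1 = rng.uniform(0.0, 2 * math.pi)  # H1 in (0, 2*pi)
    h2 = rng.uniform(0.0, math.pi)      # H2 in (0, pi)
    return [xj * abs(math.sin(h1)) - h2 * math.sin(h1) * abs(g1 * oj - g2 * xj)
            for xj, oj in zip(x, best)]


if __name__ == "__main__":
    rng = random.Random(0)
    print(golden_coefficients())
    print(golden_sine_update([0.3, 0.7], [0.5, 0.5], rng))
```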

The MMPA uses a fitness function (FF) to achieve improved classification performance. The FF assigns each candidate solution a positive value that reflects its quality, where a lower value indicates a better solution. In this paper, the classification error rate is used as the FF and is minimized, as given in Eq. (48).
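Eq. (48) is not reproduced in this text. The sketch below assumes the FF is the percentage of misclassified samples, and uses a hypothetical `evaluate_candidate` in which a single decision threshold stands in for the full ensemble hyperparameter vector that MMPA would actually tune.

```python
def error_rate_fitness(y_true, y_pred):
    """Fitness = 100 * (misclassified samples / total samples); lower is better."""
    wrong = sum(1 for t, p in zip(y_true, y_pred) if t != p)
    return 100.0 * wrong / len(y_true)


def evaluate_candidate(threshold, scores, labels):
    """Hypothetical stand-in for training the ensemble with one hyperparameter vector.

    Here the "hyperparameter" is just a decision threshold on precomputed scores.
    """
    preds = [1 if s >= threshold else 0 for s in scores]
    return error_rate_fitness(labels, preds)


if __name__ == "__main__":
    scores, labels = [0.2, 0.7, 0.9, 0.4], [0, 1, 1, 1]
    candidates = [0.3, 0.5, 0.8]
    best = min(candidates, key=lambda c: evaluate_candidate(c, scores, labels))
    print(best, evaluate_candidate(best, scores, labels))
```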

Experimental validation

This section examines the performance of the CDMDoW-AMOAFL technique on the DoW attack detection dataset [35]. The dataset contains 50,000 samples in two classes, TRUE and FALSE, as depicted in Table 1. Of the 18 features in total, 14 were selected. The proposed technique is simulated in Python 3.6.5 on a PC with an i5-8600K CPU, a 250 GB SSD, a GeForce 1050 Ti 4 GB GPU, 16 GB RAM, and a 1 TB HDD. The parameter settings are: learning rate 0.01, ReLU activation, 50 epochs, dropout 0.5, and batch size 5.

Figure 5 presents the correlation matrix produced by the CDMDoW-AMOAFL technique. The outcomes indicate that the CDMDoW-AMOAFL method efficiently detects and precisely identifies the TRUE and FALSE classes.

Figure 6 presents the pairplot matrix produced by the CDMDoW-AMOAFL technique. The outcomes indicate that the CDMDoW-AMOAFL method efficiently detects and precisely identifies the TRUE and FALSE classes.

Figure 7 presents the confusion matrices produced by the CDMDoW-AMOAFL methodology under different epochs. The results indicate that the CDMDoW-AMOAFL method accurately detects and recognizes both class labels.

Table 2 and Fig. 8 present the attack detection results of the CDMDoW-AMOAFL methodology under different epochs. The outcomes show that the CDMDoW-AMOAFL methodology correctly recognized the samples. With 500 epochs, the CDMDoW-AMOAFL technique achieves an average \(\:acc{u}_{y}\), \(\:pre{c}_{n}\), \(\:rec{a}_{l}\), \(\:{F1}_{score}\), and \(\:{AUC}_{score}\) of 95.03%, 95.27%, 95.03%, 95.03%, and 95.03%, respectively. With 1500 epochs, it achieves an average \(\:acc{u}_{y}\), \(\:pre{c}_{n}\), \(\:rec{a}_{l}\), \(\:{F1}_{score}\), and \(\:{AUC}_{score}\) of 97.12%, 97.21%, 97.12%, 97.12%, and 97.12%, respectively. With 2500 epochs, the CDMDoW-AMOAFL method provides an average \(\:acc{u}_{y}\), \(\:pre{c}_{n}\), \(\:rec{a}_{l}\), \(\:{F1}_{score}\), and \(\:{AUC}_{score}\) of 97.66%, 97.71%, 97.66%, 97.66%, and 97.66%, respectively. Finally, with 3000 epochs, the CDMDoW-AMOAFL method provides an average \(\:acc{u}_{y}\), \(\:pre{c}_{n}\), \(\:rec{a}_{l}\), \(\:{F1}_{score}\), and \(\:{AUC}_{score}\) of 98.12%, 98.14%, 98.12%, 98.12%, and 98.12%, respectively.
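Averaged metrics like those above are commonly computed as macro averages of per-class precision, recall, and F1 derived from the confusion matrix. The sketch below assumes macro averaging over the two classes (TRUE and FALSE); the paper does not state its averaging scheme, so this is an illustration rather than the authors' evaluation code.

```python
def per_class_metrics(y_true, y_pred, classes):
    """Precision, recall and F1 for each class, treating that class as positive."""
    out = {}
    for c in classes:
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == c and p == c)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != c and p == c)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t == c and p != c)
        prec = tp / (tp + fp) if tp + fp else 0.0
        rec = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * prec * rec / (prec + rec) if prec + rec else 0.0
        out[c] = (prec, rec, f1)
    return out


def macro_metrics(y_true, y_pred, classes=("TRUE", "FALSE")):
    """Accuracy plus macro-averaged precision, recall and F1."""
    per = per_class_metrics(y_true, y_pred, classes)
    k = len(classes)
    return {
        "accuracy": sum(1 for t, p in zip(y_true, y_pred) if t == p) / len(y_true),
        "precision": sum(v[0] for v in per.values()) / k,
        "recall": sum(v[1] for v in per.values()) / k,
        "f1": sum(v[2] for v in per.values()) / k,
    }


if __name__ == "__main__":
    y_true = ["TRUE", "TRUE", "FALSE", "FALSE", "TRUE", "FALSE"]
    y_pred = ["TRUE", "FALSE", "FALSE", "FALSE", "TRUE", "TRUE"]
    print(macro_metrics(y_true, y_pred))
```

Note that on a perfectly class-balanced test set, macro-averaged recall equals accuracy.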

Figure 9 illustrates the training (TRA) \(\:acc{u}_{y}\) and validation (VAL) \(\:acc{u}_{y}\) of the CDMDoW-AMOAFL technique over the epochs. The \(\:acc{u}_{y}\) values are computed over 0-3000 epochs. Both the TRA and VAL \(\:acc{u}_{y}\) curves show an increasing trend, indicating that the CDMDoW-AMOAFL methodology improves over successive iterations. Moreover, the TRA and VAL \(\:acc{u}_{y}\) remain close across the epochs, which indicates limited overfitting and suggests that the model generalizes consistently to unseen samples.

Figure 10 shows the TRA loss (TRALOS) and VAL loss (VALLOS) curves of the CDMDoW-AMOAFL approach over the epochs. The loss values are computed over 0-3000 epochs. Both TRALOS and VALLOS show a decreasing trend, indicating the approach's capacity to balance data fitting and generalization. The continuous reduction in loss values reflects steadily improving performance and prediction results over time.

In Fig. 11, the precision-recall (PR) curves of the CDMDoW-AMOAFL methodology under different epochs are plotted for each class. The figure demonstrates that the CDMDoW-AMOAFL technique consistently achieves high PR values across classes, indicating that it keeps a large share of true positives among all positive predictions (precision) while also capturing a large proportion of the actual positives (recall). The consistently high PR values across classes illustrate the efficiency of the CDMDoW-AMOAFL technique in the classification procedure.

Figure 12 shows the ROC curves of the CDMDoW-AMOAFL methodology under different epochs. The results suggest that the CDMDoW-AMOAFL technique attains high ROC values for each class, indicating a strong capacity to discriminate between the classes. This consistent trend of high ROC values across classes highlights the robustness of the classification procedure.
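A compact way to see what an ROC AUC value means is its rank-based definition: the probability that a randomly chosen positive sample receives a higher score than a randomly chosen negative one, with ties counted as one half. The sketch below computes it directly; it assumes binary labels encoded as 1/0 with real-valued scores and is not the evaluation code used in the study. For 50,000 samples, a sort-based implementation or a library routine such as scikit-learn's `roc_auc_score` would be preferable to this pairwise version.

```python
def roc_auc(labels, scores):
    """ROC AUC as the probability that a random positive outscores a random negative.

    Ties count as one half. labels are 1 (positive) or 0 (negative).
    This O(P * N) pairwise version is for illustration only.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative sample")
    wins = 0.0
    for sp in pos:
        for sn in neg:
            if sp > sn:
                wins += 1.0
            elif sp == sn:
                wins += 0.5
    return wins / (len(pos) * len(neg))


if __name__ == "__main__":
    labels = [1, 1, 0, 0, 1, 0]
    scores = [0.95, 0.40, 0.35, 0.10, 0.80, 0.60]
    print(roc_auc(labels, scores))
```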

The comparative results of the CDMDoW-AMOAFL approach and existing methodologies are given in Table 3 and Fig. 13 [36–38]. The results show that the CDMDoW-AMOAFL approach achieves the best performance, although ResNet50 comes close on accuracy and precision. In terms of \(\:acc{u}_{y}\), the CDMDoW-AMOAFL method reaches 98.12%, whereas the MobileNetV2, ResNet50, VGG16, LSTM, SVC-SOM, LSTM-autoencoder, and GRU-Binary Classification methods achieve lower values of 95.66%, 98.03%, 97.48%, 95.60%, 95.45%, 97.62%, and 97.00%, respectively. In terms of \(\:pre{c}_{n}\), the CDMDoW-AMOAFL method attains 98.14%, whereas the MobileNetV2, ResNet50, VGG16, LSTM, SVC-SOM, LSTM-autoencoder, and GRU-Binary Classification methods achieve 95.85%, 98.03%, 97.62%, 96.27%, 97.51%, 97.22%, and 95.31%, respectively. In terms of \(\:{F1}_{score}\), the CDMDoW-AMOAFL method attains 98.12%, whereas the MobileNetV2, ResNet50, VGG16, LSTM, SVC-SOM, LSTM-autoencoder, and GRU-Binary Classification methods achieve 95.79%, 97.29%, 96.92%, 96.68%, 95.76%, 95.33%, and 97.57%, respectively.

Table 4 and Fig. 14 present the computational time (CT) of the CDMDoW-AMOAFL technique compared with existing models. Among the listed models, the CDMDoW-AMOAFL technique has the lowest CT at 5.13 s, well below the others. The LSTM-autoencoder (8.81 s) and LSTM (10.80 s) methods follow, while VGG16, MobileNetV2, and ResNet50 require 13.81, 18.80, and 21.40 s, respectively. The GRU-Binary classification model (21.97 s) and SVC-SOM (22.17 s) are the slowest, with SVC-SOM having the longest CT. These differences highlight the need to choose models according to task requirements, with faster models such as CDMDoW-AMOAFL being well suited to time-sensitive tasks.

Conclusion and future work

In this manuscript, a novel CDMDoW-AMOAFL model is presented. The proposed CDMDoW-AMOAFL model aims to identify and mitigate malicious activities in a network. First, z-score normalization is applied in the data normalization stage to transform the input data into a suitable format. Next, the CDMDoW-AMOAFL model employs HHO for feature selection to identify the most relevant features in the dataset. An ensemble of GRU, TCN, and CAE models is then deployed for cyberattack detection. Finally, the MMPA optimally adjusts the hyperparameter values of the ensemble models, resulting in better classification performance. Extensive experiments were performed to evaluate the CDMDoW-AMOAFL method on the DoW attack detection dataset, where it achieved a superior accuracy of 98.12% compared with existing models. The limitations of the CDMDoW-AMOAFL technique include its reliance on a single dataset, which may not fully represent the diversity of real-world scenarios. Additionally, the model's performance might degrade on larger, more complex datasets or under dynamic conditions. Another limitation is computational cost, as some components require significant resources for training and inference, which could limit scalability. Furthermore, the study does not account for adversarial attacks, which can compromise the reliability of the models. Future work should explore diverse datasets, optimize computational efficiency, and investigate the robustness of the models against adversarial threats. Moreover, incorporating real-time detection and response capabilities could further improve the practical applicability of the system.

Data availability

The data that support the findings of this study are openly available at https://data.mendeley.com/datasets/g8g9vdxyvn/1, reference number [35].

References

Le, A., Epiphaniou, G. & Maple, C. A comparative cyber risk analysis between federated and self-sovereign identity management systems. Data Policy. 5, e38 (2023).

Aljrees, T., Kumar, A., Singh, K. U. & Singh, T. Enhancing IoT security through a green and sustainable federated learning platform: leveraging efficient encryption and the Quondam Signature Algorithm. Sensors 23 (19), 8090 (2023).

Mazzocca, C., Romandini, N., Mendula, M., Montanari, R. & Bellavista, P. TruFLaaS: trustworthy federated learning as a service. IEEE Internet Things J. 10 (24), 21266–21281 (2023).

Yazdinejad, A., Dehghantanha, A., Parizi, R. M., Srivastava, G. & Karimipour, H. Secure intelligent fuzzy blockchain framework: effective threat detection in IoT networks. Comput. Ind. 144, 103801 (2023).

Driss, M., Almomani, I., Huma, Z. & Ahmad, J. A federated learning framework for cyberattack detection in vehicular sensor networks. Complex. Intell. Syst. 8 (5), 4221–4235 (2022).

Jahromi, A. N., Karimipour, H. & Dehghantanha, A. Deep federated learning-based cyber-attack detection in industrial control systems. In 2021 18th International Conference on Privacy, Security and Trust (PST), 1–6 (IEEE, 2021).

BP, S. V. Incremental research on cyber security metrics in android applications by implementing the ML algorithms in malware classification and detection. J. Cybersecur. Inform. Manage. 3 (1), 14–20 (2020).

Sánchez, P. M. S. et al. Studying the robustness of anti-adversarial federated learning models detecting cyberattacks in IoT spectrum sensors. IEEE Trans. Dependable Secur. Comput. 21 (2), 573–584 (2022).

Alazab, M. et al. Federated learning for cybersecurity: concepts, challenges, and future directions. IEEE Trans. Industr. Inf. 18 (5), 3501–3509 (2021).

Sait, A. R. W., Pustokhina, I. & Ilayaraja, M. Mitigating DDoS attacks in wireless sensor networks using heuristic feature selection with deep learning model. J. Cybersecur. Inform. Manage. https://doi.org/10.54216/JCIM.000106 (2019).

Saveetha, D., Maragatham, G., Ponnusamy, V. & Zdravković, N. An integrated federated machine learning and blockchain framework with optimal miner selection for reliable DDOS attack detection. IEEE Access. https://doi.org/10.1109/ACCESS.2024.3413076 (2024).

Al-Wesabi, F. N. et al. Pelican optimization algorithm with federated learning driven attack detection model in internet of things environment. Future Generation Comput. Syst. 148, 118–127 (2023).

Mansouri, F., Tarhouni, M., Alaya, B. & Zidi, S. A distributed intrusion detection framework for vehicular ad hoc networks via federated learning and blockchain. Ad Hoc Netw. 167, 103677 (2025).

Zainudin, A. et al. Blockchain-inspired collaborative cyber-attacks detection for securing metaverse. IEEE Internet Things J. 11 (10), 18221–18236 (2024).

Shirvani, G., Ghasemshirazi, S. & Alipour, M. A. Enhancing IoT security against DDoS attacks through federated learning. arXiv preprint arXiv:2403.10968 (2024).

Lin, W. T., Chen, G. & Zhou, X. Privacy-preserving federated learning for detecting false data injection attacks on power system. Electr. Power Syst. Res. 229, 110150 (2024).

Liu, Z., Guo, C., Liu, D. & Yin, X. An asynchronous federated learning arbitration model for low-rate DDoS attack detection. IEEE Access. 11, 18448–18460 (2023).

Husnoo, M. A. et al. FedDiSC: a computation-efficient federated learning framework for power systems disturbance and cyber attack discrimination. Energy AI 14, 100271 (2023).

Saheed, Y. K. & Misra, S. CPS-IoT-PPDNN: a new explainable privacy preserving DNN for resilient anomaly detection in cyber-physical systems-enabled IoT networks. Chaos Solitons Fractals 191, 115939 (2025).

Saheed, Y. K., Usman, A. A., Sukat, F. D. & Abdulrahman, M. A novel hybrid autoencoder and modified particle swarm optimization feature selection for intrusion detection in the internet of things network. Front. Comput. Sci. 5, 997159 (2023).

Saheed, Y. K., Omole, A. I. & Sabit, M. O. GA-mADAM-IIoT: a new lightweight threats detection in the industrial IoT via genetic algorithm with attention mechanism and LSTM on multivariate time series sensor data. Sens. Int. 6, 100297 (2025).

Saheed, Y. K. & Chukwuere, J. E. XAIEnsembleTL-IoV: a new explainable artificial intelligence ensemble transfer learning for zero-day botnet attack detection in the internet of vehicles. Results Eng. 24, 103171 (2024).

Fahim-Ul-Islam, M., Chakrabarty, A., Alam, M. G. R. & Maidin, S. S. A Resource-Efficient federated learning framework for intrusion detection in IoMT networks. IEEE Trans. Consum. Electron. https://doi.org/10.1109/TCE.2025.3544885 (2025).

Fereidouni, H., Hafid, A. S., Makrakis, D. & Baseri, Y. F-RBA: a federated learning-based framework for risk-based authentication. arXiv preprint arXiv:2412.12324 (2024).

Nguyen, Q. H., Hore, S., Shah, A., Le, T. & Bastian, N. D. FedNIDS: A federated learning framework for Packet-based network intrusion detection system. Digit. Threats: Res. Pract. 6 (1), 1–23 (2025).

Zou, L. et al. Cyber attacks prevention towards prosumer-based EV charging stations: an edge-assisted federated prototype knowledge distillation approach. IEEE Trans. Netw. Serv. Manag. (2024).

Loganathan, R. & SelvakumaraSamy, S. An efficient privacy-preserving authentication scheme for internet of vehicles based on blockchain technology with hybrid adaptive network. Peer-to-Peer Netw. Appl. 18 (3), 99 (2025).

Khaleel, Y. L. et al. Network and cybersecurity applications of defense in adversarial attacks: a state-of-the-art using machine learning and deep learning methods. J. Intell. Syst. 33 (1), 20240153 (2024).

Fei, N., Gao, Y., Lu, Z. & Xiang, T. Z-score normalization, hubness, and few-shot learning. In Proc. IEEE/CVF International Conference on Computer Vision, 142–151 (2021).

Islam, M. R., Islam, M. S., Boni, P. K., Sarker, A. S. & Anam, M. A. Solving the maximum cut problem using Harris Hawk optimization algorithm. PLOS ONE. 19 (12), e0315842 (2024).

Kanwal, A. et al. Navigating the stock market: the role of State-of-the-Art deep learning techniques. Al-Balqa J. Res. Stud. 27 (4), 79–105 (2024).

Gan, J. et al. Improving short-term photovoltaic power generation forecasting with a bidirectional temporal convolutional network enhanced by temporal bottlenecks and attention mechanisms. Electronics 14 (2), 214 (2025).

Prakash, U. M. et al. Multi-scale feature fusion of deep convolutional neural networks on cancerous tumor detection and classification using biomedical images. Sci. Rep. 15 (1), 1105 (2025).

Wang, G. & Li, X. Wireless sensor network coverage optimization using a modified marine predator algorithm. Sensors 25 (1), 69 (2024).

Kelly, D., Glavin, F. G. & Barrett, E. DoWNet—classification of Denial-of-Wallet attacks on serverless application traffic. J. Cybersecur. 10 (1), tyae004 (2024).

Mousa, A. K. & Abdullah, M. N. An improved deep learning model for DDoS detection based on hybrid stacked autoencoder and checkpoint network. Future Internet 15 (8), 278 (2023).

Ramzan, M. et al. Distributed denial of service attack detection in network traffic using deep learning algorithm. Sensors 23 (20), 8642 (2023).

Author information

Contributions

Elvir Akhmetshin: Conceptualization, methodology development, experiment, formal analysis, investigation, writing. Dilshod Hudayberganov: Formal analysis, investigation, validation, visualization, writing. Rustem Shichiyakhi: Formal analysis, review and editing. Sandeep Yellisetti: Methodology, investigation. Sumit Chandra: Review and editing. Rahul Deo Shukla: Discussion, review and editing. Lalitha Kumari Pappala: Conceptualization, methodology development, investigation, supervision, review and editing. All authors have read and agreed to the published version of the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Ethics approval

This article does not contain any studies with human participants performed by any of the authors.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Akhmetshin, E., Hudayberganov, D., Shichiyakh, R. et al. An intelligent federated learning boosted cyberattack detection system for Denial-Of-Wallet attack using advanced heuristic search with multimodal approaches. Sci Rep 15, 14265 (2025). https://doi.org/10.1038/s41598-025-96986-5

DOI: https://doi.org/10.1038/s41598-025-96986-5