Abstract

Myelin surrounds axonal membranes to increase the conduction velocity of nerve impulses and thus reduce communication delays in neural signaling. Changes in myelination alter the distribution of delays in neural circuits, but the implications for their operation are poorly understood. We present a joint computational and non-linear dynamical method to explain how myelin-induced changes in axonal conduction velocity impact the firing rate statistics and spectral response properties of recurrent neural networks. Using a network of spiking neurons with distributed conduction delays driven by a spatially homogeneous noise, we combined probabilistic and mean field approaches. These reveal that myelin implements a gain control mechanism while stabilizing neural dynamics away from oscillatory regimes. The effect of myelin-induced changes in conduction velocity on network dynamics was found to be more pronounced in presence of correlated stochastic stimuli. Further, computational and theoretical power spectral analyses reveal a paradoxical effect where the loss of myelin promotes oscillatory responses to broadband time-varying stimuli. Taken together, our findings show that myelination can play a fundamental role in neural computation and its impairment in myelin pathologies such as epilepsy and multiple sclerosis.

Similar content being viewed by others

Introduction

Nonlinear systems with delayed feedback have widespread applications across the fields of physics1, engineering2 and biology3. In biological neural networks, time delays reflect the combined effect of slow synaptic and dendritic transmission, and the finite propagation velocity of nerve impulses along axons. Moreover, delayed neural signaling has been shown to support a wide range of nonlinear phenomena in the brain, ranging from collective synchronization4,5, stochastic resonance6 and induction of brain oscillations7 to various types of noise-induced bifurcations8,9,10,11,12,13. For this reason, time delays have been implicated with a rich repertoire of physiological dynamics supporting brain function12,14,15,16.

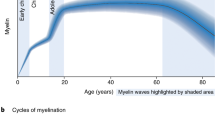

In the nervous system, axonal conduction delays are not free parameters but are strictly regulated by myelin, a fatty substance enwrapping neural membranes. Myelin enables saltatory conduction, and greatly accelerates the propagation of action potentials (APs) along axons. Produced by glia cells, myelin compensates for variability in length and caliber between different axons17,18,19, adjusting the time required for APs to propagate towards their post-synaptic targets (known as conduction delay). Conduction delays are of prime importance for the faithful transmission of correlated and temporally structured fluctuations resulting from recurrent connectivity20,21, sensory stimuli and functionally relevant afferent inputs. Such correlated stimuli challenge both the limited coding capacity of neurons22, as well as the temporal coordination of neural signaling17,19,21,23. To face this challenge, glial cells can adaptively adjust the amount of myelin along axons, speeding up or slowing down the propagation of APs by tuning conduction velocity, thereby stabilizing neuronal trafficking12,13,24,25,26,27. Indeed, myelin has recently been shown to be plastic, supporting and imparting flexibility for various neural computations, notably by opimizing conduction delay distributions amongst neuronal assemblies27,28,29,30,31 with important consequences on neural dynamics, synchrony and brain function13,21,27,32,33. Research on glial cells, especially myelin, has emerged as one of the most effervescent and rapidly evolving areas in neuroscience (see34 and references therein).

In the light of the manifest richness of delayed feedback systems3, it is no surprise that myelin-induced changes in axonal conduction velocity (and delays) influence neural signaling and transform the dynamics of neural circuits and how they respond to time-varying fluctuations. Yet, networks with time delays are amongst the most challenging to characterize mathematically, which severely limits data interpretation. We here explore this problem using a spiking neural network of excitatory neurons, with distributed delay and spatially correlated (i.e., evenly applied to all neurons) additive noise. In our analysis, we considered conduction velocities over a range aligned with experimental observations made in the central nervous system across multiple mammalian species19,35,36.

We develop a computational strategy for stochastic spiking networks, and associated non-Markovian theory, that lifts the restriction of single delays to reveal a host of new delay density-dependent dynamical phenomena of physiological and clinical relevance. Leveraging mean field theory, we develop a method to determine the statistics of the stationary probability density function associated with the collective neuron activity in this network. Building on previous results on stochastic delay equations37,38,39,40,41, this approach permits the derivation of implicit expressions for statistical moments of the mean field dynamics. Most importantly, it reveals that delay distributions may regulate the activity of cells in these networks by tuning their individual dynamic range and encoding capability for noise-like stimuli. Using this approach, we characterize changes in neural activity resulting from variation in axonal conduction velocity reflecting various levels of myelination.

While it is generally assumed that delays mainly influence synchrony and oscillations, our mathematical and numerical results show that myelin-induced changes in conduction velocity implement a gain control mechanism amplifying neural activity, independently of synaptic coupling, and irrespective of the presence of oscillations. This gain control mechanism was further found to be especially salient in the presence of dynamic stimuli by amplifying the collective response of the neurons to time-varying signals. Changes in conduction velocity were found to shape the information coding capacity of the network, suggesting that myelination may be tuned in a contextual way to optimize the faithful encoding of stimuli of a given amplitude and baseline noise into network firing properties21,42. In addition, power spectral analysis reveals that the dispersion of time delays, resulting from either variability in conduction velocities and/or axonal lengths, regularizes neural activity, suppressing oscillatory activity and promoting asynchronous dynamics. These findings echo effects seen experimentally under the influence of activity-dependent myelination17,24,26,27,29. Taken together, these results allow us to draw conclusions about how myelination may solicit nonlinear neural interactions to implement gain control and optimize the encoding capability of neural circuits. Further, they enable us to make predictions about potential dynamical consequences of myelin disruption in pathological states, such as those seen in epilepsy43 and multiple sclerosis44.

Results

Model

We analyzed a widely utilized spiking recurrent network of excitatory neurons, in which the membrane potentials ui (i = 1, …, N) evolve according to the following set of stochastic delayed equations with additive correlated noise:

where τs is the membrane time constant, L[ui] = −ui represents linear relaxation, and Jij are synaptic weights. Neurons are randomly connected with a connection probability ρ. The spike trains \({X}_{j}={\sum }_{\{{t}_{j}\}}\delta (t-{t}_{j})\) follow non-homogeneous Poisson processes of rate f[uj], i.e., Xj ~ Poisson(f [uj]). A spatially correlated stimulus \(S(t)=I+\sqrt{2D}\xi (t)\) is evenly applied to all neurons, and consists of a constant component I and Gaussian white noise ξ(t) with variance 2D. The correlation in space is maximal, i.e. we consider a spatially uniform Gaussian white noise stimulus. The system above describes the dynamics of a network in which neurons may be clustered locally (e.g., cortical microcircuits) or distributed across distant brain areas. Therefore, action potentials experience conduction delays τij = ℓij/c, where ℓij corresponds to the length of individual axons with a conduction velocity c, which depends on axonal myelination. Further details about this model and underlying assumptions can be found in the Methods section.

Our analysis starts with the observation that the collective activity of neurons in this network model is highly sensitive to myelination. Exposing all neurons to a spatially correlated stimulus, a consistent increase in the mean network firing rate could be observed whenever conduction velocity - reflecting myelination - is increased. As shown in Fig. 1, faster conduction was found to amplify neural activity and firing rates, and the network response to the applied noisy stimuli became more salient. Since the same noisy input induces larger firing activity for increased conduction velocity, these first numerical findings suggest a control of the systems susceptibility or gain. These effects on firing rate and gain result entirely from the modified conduction velocity, since all other parameters - such as synaptic weights - were kept constant. The presence of the observed response amplification could also be confirmed by computing the network mean firing rate distribution, where faster conduction promotes increased probability of high firing rates (see below).

a Diagram depicting an idealized, simplified representation of a neural network with poor myelination, in which neurons (black circles) are connected through axons (edges) expressing different levels of myelin (illustrated in shades and thickness of red). b Dynamics of a network of Poisson spiking neurons with low (c = 0.5 m/s) conduction velocity. A raster plot of the network spiking activity shown alongside the network mean firing rate above shows the response of the network to a spatially correlated (i.e., evenly applied to all neurons) stochastic stimulus S(t). The histogram corresponds to the firing rate distribution of the network (right red insert). c Diagram of a neural network with higher myelination (illustrated in shades and thickness of blue). d For the same stimulus S(t), if conduction velocity is increased (c = 50 m/s) to reflect higher myelination, the response of the network is amplified and mean firing rates increase (blue line). This also manifests as a change in the network firing rate distribution, which is now heavily skewed (right blue insert). In (a, b), axonal conduction delays τij = lij/c are Gamma distributed with shape k = 2 and scale θ = 4/c ms and axonal length distribution lij. Except from conduction velocity (i.e., (c), all other parameters are identical). The network is fully (i.e., all-to-all) connected and synaptic weights are both positive and identical. e Illustration of the mechanism of gain control. Changes in conduction velocity lead to a net change in normalized firing rate and response to stimuli. The red line corresponds to c = 0.5 m/s while the blue line corresponds to c = 50 m/s. f The membrane potential statistics for high (blue histogram; c = 50 m/s) and low (red histogram; c = 0.5 m/s) conduction velocity differ. The other parameters in the model (cf. Methods section) used for these exemplar simulations are N = 100, \({J}_{ij}=\bar{J}=0.9\), ρ = 1, I = 0 and D = 0.05. The activation function is nonlinear with parameters β = 2, h = 0.5.

Mean field statistics

To better understand this phenomenon, we examined how conduction velocity (i.e. c), reflecting the amount of myelin covering axons, shapes the dynamical properties of cells in the network. This was accomplished by computing the probability density function of the network-averaged instantaneous potential \(\bar{u}\), a quantity that fluctuates in time as determined by the network collective dynamics. This was done for two different categories of delay distributions: Gamma, and uniformly distributed. This was first computed numerically through simulations (see Subsection Mean Field Moments in the Methods section for details of the mathematical analysis), which then motivated our theoretical work. Within the range of parameters examined here, Fig. 2 indicates that the probability density function of the network mean may be well approximated by a Gaussian—a feature that unlocks a variety of properties that makes this problem amenable to a thorough analytical characterization. These simulations also reveal changes in mean field statistics: increasing the conduction velocity increases both the mean and variance of the network mean potential, or conversely, larger conduction delays tend to suppress both the mean and variance of the network mean activity.

a Mean field probability density function computed numerically for a network of spiking neurons with Gamma-distributed delays. The mean field probability density function may be well approximated by a Gaussian (solid lines), and its mean and variance changes as conduction velocity increases. The associated delay distributions are plotted alongside their analytical probability distributions. b Distribution of delays following a Gamma distribution. Here, the shape parameter is k = 2 and the scale θ = 4/c ms. c Corresponding mean field distribution for uniformly distributed delays. d Distribution of delays following a uniform distribution. Here, \({l}_{\max }=10\) mm. Numerical simulations81 of the network ensemble average \(\bar{u}\) (shaded areas) are shown alongside normal distributions with associated mean and variance (curves) for c = 5.0 m/s (blue), c = 2.5 m/s (black) and c = 1.0 m/s (red), respectively. Simulations were performed using the Euler-Maruyama scheme, with a population of N = 100 neurons over trials of duration T = 500 s with steps of 1ms and D = 0.1. Other parameters are \({J}_{ij}=\bar{J}=0.9\), ρ = 1, I = 0, and the activation function is nonlinear with parameters β = 2, h = 0.5.

As a consequence of this Gaussian property, high-order cumulants of the probability density function (i.e., \({\rho }_{S}(\bar{u})\)) can be assumed to be small, so that the mean field \(\bar{u}\) in Eq. (2) may be used to develop equations for the dynamics of the mean (i.e., μ) and variance (i.e., σ2). This allows the derivation of the stationary distribution without relying on a Fokker-Planck formalism38,40,41,45. It is important to mention that our approach and the Fokker-Planck approach are equivalent in the present case of Gaussian probability density functions. We used this strategy to compute the stationary mean and variance of the mean field and characterized their respective dependence on myelin - that is, on conduction velocity and resulting delay distribution—and on the noise intensity D which can be seen as the strength of the spatially correlated noise stimulus. As shown in Fig. 3a, b for both Gamma- and uniformly distributed delays, conduction velocity increases both the mean and variance of the mean field \(\bar{u}\), confirming the numerical observations in Figs.1 and 2. While the changes in mean and variance induced by faster conduction velocity portrayed in Fig. 3 might appear small, they in fact support significant variations in mean firing rate (see Fig. 1 and below). We could further confirm the alignment between our analytical derivations and numerical results, which were found to be in good agreement over multiple orders of magnitude of the noise amplitude. We note that deviations between our analytical and numerical results were however observed for higher values of the noise variance, as the system approaches a regime characterized by stochastic/coherence resonance21,46. However, it is interesting to note that the theoretical predictions for the variance were accurate over a broader range of noise variance compared to the mean.

a Stationary mean of the average over network nodes \(\bar{u}\) computed numerically (triangles) plotted alongside the stationary mean μS computed analytically (dotted lines, see Methods section Eqs. (18), (22)) for Gamma distributed delays, and across various conduction velocities considered (c = 5.0 m/s, blue c = 2.5 m/s, black; c = 1.0 m/s, red) and various orders of noise variances D. The gray shaded area is bounded by the limit cases where there are no delays (top, c → ∞) or alternatively if delays become infinite (bottom, c → 0). Both of these limits were computed analytically. Significant deviations between numerical and analytical results emerge for strong noise variances D (marked by asterisks). b Equivalent plot for uniformly distributed delays. c Stationary variance (i.e., σ2) of the network ensemble average \(\bar{u}\) for Gamma distributed delays, computed both numerically (triangles) and analytically (dotted lines), see Methods section for details. Here, deviations appear for large noise variances D (marked as asterisks). d Same as (c) for uniformly distributed delays. In (a, c), k = 2 and θ = 4 mm/c. In (b, d), \({l}_{\max }=10\) mm. The network is fully connected (i.e., ρ = 1) and synaptic weights are both positive and identical (i.e., \({J}_{ij}=\bar{J}\)). Simulations were performed using the Euler-Maruyama scheme, with a population of N = 100 neurons over trials of duration T = 500 secs with steps of 1 ms. Other parameters are \({J}_{ij}=\bar{J}=0.9\), ρ = 1, I = 0, and the activation function is nonlinear with parameters β = 2, h = 0.5.

The results presented in Fig. 3 were obtained using an all-to-all network in which synaptic weights are identical. To examine the validity of our analysis in presence of connectivity disorder, we computed the stationary mean and variance in sparse networks by changing the connection probability ρ21. For simplicity, synaptic weights were scaled so that the mean connectivity does not vary with connection probability i.e. \({J}_{ij}=\bar{J}/\rho\). As shown in Fig. S1, the gain amplification caused by increase in conduction velocity was preserved across a wide range of connection probabilities. The computed mean and variance were also found to be in good agreement with our theoretical predictions. To assess whether this gain modulation effect can be generalized to other forms of non-linearity (i.e., Eq. (17)), we performed additional network simulations using alternative square root and logarithmic response functions. As shown in Fig. S2, the gain modulation effect induced by changes in conduction velocity is qualitatively preserved for both the stationary mean and variance, irrespective of the response function considered.

The changes in statistics portrayed in Figs. 1–3 together with our theoretical analysis suggest that myelination impacts the recurrent interactions of the network by modulating the mean and variance of the neural activity. The augmented responses correspond to an increased saliency of the spatially correlated temporal Gaussian white noise stimulus of strength D offset by a constant I. This results directly from the expression of mean field moments. Notably, the stationary variance \({\sigma }_{s}^{2}\) increases with the stimulus intensity D.

The mechanism behind the increased mean and variance of the firing rate is rather subtle. Figure S3 shows that it is a consequence of the behavior of the two-time covariance of the mean field as the delays change. Specifically, when the delays are shorter, i.e. the conduction velocities are larger, the covariances \(\langle \bar{u}(t)\bar{u}(t-{\tau }_{k})\rangle\) for each delay τk in the distribution increase; this is intuitively expected since the two quantities being correlated are more similar when the delay is shorter. This can be seen more readily in the theory for the Linear networks, but the effect is present also in the Nonlinear networks (see Methods). The impact is felt in the variance of the mean field (Eq. (13)), which increases. These stronger fluctuations in the mean field lead to larger mean firing rates. As conduction velocities are decreased, these delay-dependent correlations are suppressed: the network behaves as if it were effectively disconnected (i.e., \({\sigma }_{S}^{2}=D\)).

Consequently, by increasing conduction velocity, myelin promotes correlated activity, i.e. temporal coordination between cells, and as a consequence, the mean and variance of the firing rate increase. It is the combination of the delays and the noise that lead to this effect; otherwise, the fixed point of the mean field would not depend on the delays, which is what is commonly expected in deterministic delayed systems.

Gain control and susceptibility depend on conduction velocity

Taken together, the above results suggest that neural networks may utilize changes in myelination and axonal conduction velocity, as well as resulting shifts in activity portrayed in Fig. 3, to amplify their response to stimuli - which in turn could be favorably read out by a downstream network. Indeed, as we now show, the variance D of the spatially correlated noise stimulus and the input strength I influence network firing statistics and sensitivity to these variables in a manner that is contingent on the distribution of delays between neurons, and specifically, on the mean conduction velocity. The aforementioned changes in stationary mean and variance of the network ensemble average \(\bar{u}\) in Fig. 3 may hence be recast as the manifestation of a gain control effect, in which the saliency of neuronal responses can be amplified through adjustments in axonal conduction velocity21.

To characterize the dynamic range and sensitivity of the network, we computed the susceptibility of the network for different conduction velocities. This susceptibility, or gain G, is given by Eq. (21) and quantifies how the mean firing rate changes with the noise variance (i.e. D) or the constant input strength (i.e. I), for different values of c. This gain corresponds to the slope of the network activation function F averaged over the probability density of the mean potential (cf. Eq. (21) in the Methods section). We remind here that as D is swept across the shown range, the values of the mean μS and variance σS have to be continually and self-consistenly recomputed in order to evaluate the gain function.

As can be seen in Fig. 4a, the mean firing rate scales monotonically with noise amplitude, as one intuitively expects. Moreover, as conduction velocity c increases, the mean firing rates of the individual neurons - and thus of the whole network - increase as well, a direct consequence of the phenomenon portrayed in Fig. 3. We note that despite seemingly small changes in mean membrane potential (i.e., Fig. 3), firing rates experience important changes, increasing by about 20% as conduction velocity is increased from 1m/s to 5m/s, and even more if conduction velocity is increased further.

a Mean network firing rate as a function of noise variance D for various axonal conduction velocities (c = 5.0 m/s, blue; c = 2.5 m/s, black; c = 1.0 m/s, red). Firing rates are computed by multiplying the network firing probability F by a factor of 100. These rates expectedly scale with D, but are also amplified by higher conduction velocity. The gray shaded area is bounded by the limit cases where there are no delays (top, c → ∞) or alternatively if delays become infinite (bottom, c → 0). Both of these limits were computed analytically. The theory (dashed line) agrees well with the simulation of the full spiking network. b Network gain G or susceptibility as a function of D for different conduction velocities. These theoretical curves represent the slope of the curves in (A), and reveal an optimal noise strength that decreases with conduction velocity. Gray lines represent the same cases as in (a). c Mean firing rate as a function of constant input strength I for different values of c. Theory (dashed line) is accurate up to ~I = 0.045, after which discrepancies grow due to the nonlinear behavior of the network and the fact that the theory does not account for increased spiking noise variance in the network simulations. d Susceptibility as a function of input strength I for different c values. The theory is accurate again over the same range as in (c). Network simulations for higher values of I reveal a susceptibility peak due to the inflection point present in (c). The network is assumed to fully connected (i.e., ρ = 1) and synaptic weights are both positive and identical (i.e., \({J}_{ij}=\bar{J}\)). The model assumes a distribution of axonal lengths and delays are Gamma-distributed with k = 2 and θ = (4 mm/c). Other parameters are \(\bar{J}=0.9\), ρ = 1, and the activation function is nonlinear with parameters β = 2, h = 0.5.

Such gain control effect must however be regarded from the perspective of the neurons’ limited dynamic range and coding capacity: stimuli that are too weak may fail to elicit a visible postsynaptic response in terms of network firing rate. If their amplitude is too strong, such stimuli may in contrast lead to saturation (i.e., postsynaptic neuron fires continuously). As direct manifestation of this, Fig. 4b shows that the gain peaks as a function of the noise strength, and this peak shifts to smaller values of D as the velocity increases. This indicates that while myelin might control the response gain, it is the stimulus amplitude D that dictates whether the susceptibility is increased or decreased over the neurons dynamic range. This suggests that myelination may be tuned in a contextual way to amplify the response to stimuli of a given amplitude and/or baseline noise into network firing properties21,42.

Figure 4c shows the corresponding results when the (constant) input bias (i.e., I) is increased. While the mean firing rate expectedly changes as I is increased, the effect of conduction velocity and delay distribution does not appear to impact the response significantly compared to the noise strength D. The same trend can be seen in Fig. 4d, where the susceptibility monotonically increase with input strength but does not vary significantly with conduction velocity. These observations suggest that conduction velocity mainly influences the response of the network to dynamic, time-varying stimuli and not constant inputs.

These observations together suggest that conduction velocity may be tuned in a contextual way to optimize the faithful encoding of stimuli into network firing properties21,42. We further note the good agreement of our theory with the numerical simulations of the spiking network, but only as long as I does not exceed the range shown. Beyond that, the susceptibility also peaks, albeit with a relative invariance to conduction velocity. We hypothesize that this is due to the system being pushed into a more nonlinear regime, in particular one in which neglecting the increase in Poisson noise leads to a substantial error. Coherence resonance may further amplify this discrepancy (see below).

Conduction delay variability stabilizes network dynamics

We concluded our study by examining the effect of delay dispersion on the response of our network to time-varying stimuli. For that purpose, we relaxed previous assumptions by which the dynamics of the network were assumed to be non-oscillatory. To this end, we computed the power spectral density S(ω) of the mean field \(\bar{u}\) for various delay distributions. We examined purely stochastic inputs (i.e. setting I=0) and deliberately considered a limit case in which the delay distribution can be approximated by a Gaussian, so that the mean and variance may be modulated independently from one another (see Methods section Power spectral density).

As can be seen in Fig. 5a–c, increasing the mean delay - i.e., decreasing the degree of myelination and thus the conduction velocity - expectedly introduces resonant oscillatory modes in the network, whose frequencies scale with conduction velocity. Such frequencies can easily be determined through linear stability analysis, corresponding to imaginary eigenmodes. These oscillatory frequencies are constrained by the membrane time constant, the network recurrent interactions, and the conduction delay(s). Increasing the variance of the delay distribution (while keeping the mean delay constant) suppresses these resonances, as the power spectral density converges towards a purely Lorentzian distribution for higher frequencies ω i.e., \(S(\omega )\approx D{\left(\pi (1+{\omega }^{2})\right)}^{-1}\). We highlight that the latter corresponds to the uncoupled case where Jij = 0, in which the mean field in Eq. (23) simplifies to a non-delayed Langevin equation. To better understand the mechanism at play, we performed linear stability analysis of our mean field system, computing explicitly the eigenvalues and how they depend on conduction velocity and delay variance (see Eq. (26)). As shown in Fig. 5d–f, increasing the dispersion of the delays while keeping their mean constant shifts complex eigenvalue away from the imaginary axis. This indicates that delay dispersion quenches the oscillatory modes of the system, making the system increasingly linear. These observations echo previous findings42,47,48,49,50 showcasing the strongly stabilizing influence of delay dispersion, by which the system becomes effectively decoupled through the suppression of oscillatory modes, thereby promoting irregular, asynchronous activity.

a–c Gaussian delay distributions (left) determine the shape of the mean field power spectral density S(ω) (right). The Gaussian mean and variance are independent from each other. Here, the mean delay is kept constant and given by m = kθ/c, while the variance is increased from s2 = 0.01 to 0.5 (lines with increased shading). To compute the power spectral density, we assumed a stimulus S(t) with noise variance D = 0.05 and zero bias i.e., I = 0. The dashed line corresponds to the Lorentzian power spectral density associated with \(\bar{J}=0\). Increasing the conduction velocities shifts the mean delay towards smaller values. Conduction velocities are given by c = 5.0 m/s (blue lines, (a)); c = 2.5 m/s (black lines, (b)); c = 1.0 m/s (red lines, (c)). d–f Eigenvalues associated with the characteristic polynomial (cf. Eq. (26) in the Methods section) whenever conduction velocity c is fixed and the delay variance s2 is varied. Increasing the delay dispersion s2 shifts the oscillatory eigenvalues away from the imaginary axis and stabilizes the dynamics. Different conduction velocities are given by c = 5.0 m/s (blue shading, (d)), c = 2.5 m/s (black shading, (e)) and c = 1.0 m/s (red shading, (f)). Other parameters are \(\bar{J}=0.9\), ρ = 1, k = 2, θ = 4 mm, I = 0, and the activation function is nonlinear with parameters β = 2, h = 0.5.

Discussion

The influence of time delays on nonlinear stochastic systems has been thoroughly studied38,40,41, especially from the perspective of synchrony47,48,51,52. However, their consequence on simple computational properties of neural networks has received comparatively less attention. Here we highlight that the delay distribution determines how the network may encode the stimulus strength into the mean and variance of the firing rate, the network’s susceptibility and the power spectrum of its activity. Action potential conduction velocity is strongly influenced by the presence (and amount) of myelin covering the axon, which impacts the temporal coordination of neural signaling with important consequences on network dynamics13,18,19,21,27,29. Glial-mediated changes in conduction velocity along axons have been shown to be titrated autonomously to promote AP coincidence17, reinforcing the probability of post-synaptic response and hence to enhance information transfer21,53 as well as the resilience of brain function13,26. We leveraged mean field theory for stochastic delay equations46,54,55,56 to investigate how conduction velocity - through its influence on conduction delays - influences the activity of recurrent networks and response to stimuli, in order to unveil the potential functional role of myelin in neural computations.

Using mean field theory, we analyzed an excitatory network of spiking neurons with distributed delay and an additive, spatially correlated stimulus evenly applied to all neurons. The shape of the delay distribution in this network was allowed to change with conduction velocity, meant to reflect the net effect of myelin on AP propagation. Building on a set of assumptions supported numerically, we derived the stationary moments for the probability density function of the network average and found that they depend on the network delays. This contrasts with previous findings in similar networks57, which did not find any delay dependence of stationary states. Considering these delay distributions, we quantified the mean response of the network and its variability to changes in stimulus bias and noise strength. This analysis revealed that conduction velocity - through its effect on delay distribution - operates as a gain control mechanism, amplifying network firing rates to shared (correlated) input. Indeed, faster conduction velocity decreases delay dispersion (i.e., renders conduction delays more similar to each other).

Figure S3 reveals that the gist of the effect lies in the covariance of the mean field potential at two times separated by the delays. When the delays are small, these two mean potentials are more similar, and their covariance increases. The same is true for all the delays in the distribution. Evaluating the effect of these covariances in the theoretical expressions we have derived for the variance of the mean field potential shows that this variance then increases, as seen in Fig. 3; in turn, this increased noisiness causes a higher mean network firing rate, as seen in Fig. 3 as well.

The network gain (i.e. susceptibility) was found to exhibit a peak as a function of noise strength revealing that conduction velocity may be tuned to optimize the response of the network to these changes. Further, large conduction delays, i.e. slow conduction, was found to promote oscillatory activity in the recurrent network. We point out that this negative correlation of conduction velocity and spectral peak power surprisingly contrasts to the positive correlation between conduction velocity and mean firing rate mentioned above. Our theory reproduces the gain properties seen in our numerical simulations of the spiking network, provided that the system is not pushed too far into its nonlinear regime.

Taken together, our computational and theoretical results suggest that myelinating glia in even simplified recurrent neural networks can play an important role in controlling firing rate statistics, along with their sensitivity to input changes beyond the induction of oscillations. Factoring in delay distributions also expands our knowledge about the determinants of firing rate distributions measured in real neural systems58,59. Over the past decades, there has been a surge of interest in understanding the role played by myelin in brain function, health and disease26,27,30,60,61,62. Oligodendrocytes have since been implicated with a plethora of biological functions across the CNS, ranging from learning, memory consolidation, stimulus discrimination to functional resilience13,28,31,32,33,60,62,63. Yet, understanding how myelin - and by extension glia - shape the computational properties of neural networks remains a formidable challenge, notably because of the complexity of non-linear delayed feedback systems.

In light of these results, both insufficient and excessive conduction velocity (i.e., myelination) represent potential disruptors of neural function. Recent experiments have causally linked excessive myelination to seizures in mice models of generalized epilepsy43. The gain control effect described here may thus be involved in supporting pathologically high firing rates and correlated neural activity, both of which accompany seizures64. Conversely, our model also predicts that decrease in conduction velocity predisposes the network to lower firing rates and gain, which may be associated with impairments observed in demyelinating diseases such as multiple sclerosis (MS)28,44,65,66,67,68.

Our results extend previous work on neural networks to study the combined effects of distributed delays and spatially correlated noise, in particular its consequences for the magnitude of delay-induced activity correlations in the presence of noise. For example, studies have analyzed oscillations in single delay rate-based models with finite-size, spatially uncorrelated noise69, in neural field models with spatially correlated noise of adjustable spatial correlation length and fixed delay70, as well as leaky integrate-and-fire stochastic networks without57,71 and with72 spatial noise correlations. In particular, it was shown theoretically and numerically in73 that distributed delays dampen the nonlinearity associated with a Hopf bifurcation from which oscillatory activity arises, and that making the delay distributed promotes asynchronous rather than synchronous activity in excitatory-inhibitory networks57,71. The key novelty in our work is that the delays influence the mean network firing rate and its variance - a subtle non-intuitive property revealed by our simulations and confirmed by our theory. Delays are generally thought to not influence the fixed points of dynamical systems. However, we have shown that this effect can occur due to changes in the autocorrelation of the mean field at lags equal to the delays. Our linear and nonlinear network theory shows that these correlations, pictured in Fig. S3, increase when the delays are smaller, i.e. when there is more myelin, and result in a larger variance of the mean field, leading to a higher mean firing rate. These paradoxical but well grounded results advance our understanding of neural network dynamics in the presence of distributed delays and noise.

We should emphasize that our theoretical approach can explain the effects reported here, and which could be experimentally confirmed. In particular, it agrees with the appearance in our numerical simulations of spectral peaks at oscillatory frequencies and their harmonics when the delay distribution has a larger mean delay (Fig. 5), and the somewhat unusual feature where this occurs in conjunction with a decreasing mean rate. Moreover, our theoretical approach provides further insight as to how the peaks become less pronounced the more distributed the delays are, as expected and seen in57,71.

Our approach suffers from limitations which should be developed in future studies. Such assumptions were made to facilitate the mathematical analysis our our model. First, the network model considered here is purely excitatory. Future work addressing the impact of inhibition on our results is of great interest. How conduction velocity along both excitatory and inhibitory axons influences the dynamics of such networks would provide important information about the functional role of myelin, especially given that excitatory and inhibitory neurons are known to display different myelination profiles74,75.

We have introduced variability in conduction delays by sampling axonal lengths from a given probability (i.e., either Gamma or uniform) distribution, while keeping conduction velocity the same for all network axons. However, myelin distribution, caliber and/or g-ratio may vary along and between axons. Including this additional source of variability would lead to axon-specific conduction velocities. We note that, however, that resulting conduction delays would remain distributed - and hence the current results would remain qualitatively unaffected.

The network under study and associated mean field (see Methods section) are known to exhibit oscillations and/or stochastic/coherence resonance21,46,51,52,54,55,56,76. The modulation of time delays in such systems is a complex problem, that may notably involve non-linear effects such as oscillation amplifications and amplitude death47,48,77. Such dynamics yield non-Gaussian probability density profiles, and are further characterized by complex correlation rates γ. As such, our approach holds for positive values of synaptic weights (\(\bar{J} > 0\)) below the multistability threshold, as well as for small values of the response gain β and noise amplitude D. We have also used a small delay expansion41 to approximate the correlation rate γ, which would otherwise be both complex and satisfy a transcendental equation, greatly complicating the analysis. Despite this approximation, we highlight that our analysis remains in good agreement with the numerical simulations.

In Fig. S4, we compare the effect of varying other parameters- such as β, h and \(\bar{J}\) - on the mean network firing rate. These results indicate that these parameters might have precedence in supporting changes in firing rates seen in experiments. One however needs to be careful by making this comparison, since the relationship between conduction velocity and time delay is non-linear: large (resp. small) changes in conduction velocity can cause small (resp. large) changes in time-delay. How changes in myelin may interfere with cellular excitability is an important problem that warrants further exploration.

In addition, our network is fully connected (i.e., all-to-all) which was done for simplicity. We orient the reader to21, where a similar network was examined with sparse connectivity. In our mean field equation (Eq. (2) in the Methods section), we have performed multiple approximations that limit the applicability of our analysis to small noise amplitudes (i.e., D). Indeed, the first order approximation of the correlation rate γ neglects any contribution of noise intensity on correlations and hence on the variance predicted by our analysis. We have also neglected fluctuations in the neurons’ potential resulting from Poisson spiking. Such random fluctuations, whose amplitude scale with firing rate, have a multiplicative effect on the dynamics of Eq. (1) and may thus have profound influence on network stability37; it likely explains the limitation of our theory at high input strength. Combined, we hypothesize that these approximations might be involved in the deviations observed for high noise intensity in Fig. 3A, B. Lastly, our model is abstract and neglects multiple relevant physiological details in order to preserve mathematical tractability. Further work is required to augment the biological accuracy of our model and its ability to mimic real brain circuits and the influence of glia on neural function.

Methods

Model

We considered a generic and widely used class of spiking recurrent neural networks in which the potential ui, i = 1. . . N, of neurons is governed by the set of stochastic delayed equations with additive correlated noise

where τs corresponds to the membrane time constant, L[ui] = −ui is a linear relaxation operator, and Jij are synaptic weights. The neurons are assumed to be randomly connected with a connection probability ρ. Spike trains \({X}_{j}={\sum }_{\{{t}_{j}\}}\delta (t-{t}_{j})\) are assumed to obey non-homogeneous Poisson processes with rate r[uj], where r is a given sigmoidal response function54 and δ( ⋅ ) is the Dirac delta function. That is, Xj ~ Poisson(r[uj]). A 1/N scaling has been included so that coupling between network nodes remains finite in the limit of large networks. An additive, spatially correlated input \(S(t)=I+\sqrt{2D}\xi (t)\) is evenly applied to all neurons, and is interpreted as being a functionally meaningful stimulus applied equally to all neurons, for instance resulting from coherent afferent input. This stimulus is composed of an input I, which is constant over the network and over time, and a spatially homogeneous Gaussian white noise term ξ(t) of variance 2D, satisfying \(\langle \xi (t)\xi ({t}^{{\prime} })\rangle =\delta (t-{t}^{{\prime} })\). Here, 〈⋅〉 denotes the ensemble average. Since it is additive, the stimulus S(t) does not depend on any of the system’s state variables, so that 〈S(t)〉 = I.

Equation (1) describes the dynamics of a spatially distributed network wherein neurons can either be clustered spatially (e.g., cortical microcircuit) or distant from each other (e.g., located in different brain areas). As such, the interactions within this network occur via axonal pathways which may extend globally through white matter, or alternatively locally between cortical layers, for instance. Due to this spatial separation, action potentials transit along axons of various lengths ℓ with axonal conduction velocity c and thus experience a conduction delay τ = ℓ/c. The axonal conduction velocity depends on the amount of myelin covering the corresponding axon. Specifically, resulting conduction delays τij > 0 between two neurons i and j in Eq. (1) are assumed to be random and collectively sampled from a probability distribution q(τ) (see section below).

Under specific assumptions21,46,54,55 and whenever \({{\mathbb{E}}}_{N,N}[{J}_{ij}]\equiv \bar{J} \, \ne \, 0\), Eq. (1) admits a well defined mean field for the network ensemble average potential \(\bar{u}\equiv {{\mathbb{E}}}_{N}[{u}_{i}]\). Here \({{\mathbb{E}}}_{N,N}[{J}_{ij}]\) and \({{\mathbb{E}}}_{N}\) are the network average across the N × N connections and N neurons of the network, respectively. Then

for \({\bar{u}}_{{\tau }_{k}}=\bar{u}(t-{\tau }_{k})\) and conduction delay times {τk}, τ1 ≤ τ2 ≤ ⋯ ≤τK. We orient the reader to the Subsection Mean field derivation and 21,54,55 for a thorough derivation. In this mean field equation (2), we have introduced the network average response function \(f[\bar{u}]\equiv {{\mathbb{E}}}_{N}[r]\)46,54,55. We also expressed the sum above over the K = N2 conduction delays for notational convenience. The delay times are linked to the delay distribution by \(q(\tau )={\sum }_{k=1}^{K}\delta (\tau -{\tau }_{k})/K\).

Equation (2) is our starting point. As defined, this mean field corresponds to a nonlinear stochastic delay differential equation (SDDE), a well-studied38,40,41,45,51,52,76, yet challenging family of dynamical systems. We are here interested in determining the first two moments of the stationary probability density function \({\rho }_{S}(\bar{u})\) for the mean activity \(\bar{u}\), and how such moments depend on the delay distribution q(τ). By doing so, our goal is to complement our understanding of the generic effect of delay distribution on the dynamics of non-linear networks, and formulate conclusions about the underlying functional role of myelin towards gain control in neural circuits.

Mean field moments

In what follows, we set the time constant to τs = 1 (which we choose to correspond to a time scale of 10ms) for simplicity and consider a purely excitatory network, i.e. \(\bar{J} \, > \, 0\), for which the dynamics of Eq. (2) possess a single equilibrium. This regime holds whenever the response gain \(df/d\bar{u}\) is small, and whenever synaptic weights \(\bar{J}\) remain below the multistability threshold. Previous studies have successfully used approximating approaches by which the Fokker-Plank equation for the probability density function \(\rho (\bar{u},t)\) may be formulated in presence of time delay, despite non-Markovian dynamics38,40,41,45. We here opt for an alternative (yet still approximate) strategy. We will assume that the a priori unknown stationary probability density function \({\rho }_{S}(\bar{u})\) is normally distributed with mean μS and variance \({\sigma }_{S}^{2}\), that is

This assumption implies that the stationary state of Eq. (2) in absence of noise is non-oscillatory, and that associated eigenvalues of the corresponding linearized system are purely real. While Eq. (2) may admit imaginary eigenvalues, resulting approximations by which eigenvalues are purely real remains valid over a wide range of parameter values, and is well supported by numerical observations (see Fig. 3c, d). By virtue of this Gaussian approximation, all high order cumulants of \({\rho }_{S}(\bar{u})\) vanish, and we may thus characterize the mean field \(\bar{u}\) in Eq. (2) by developing explicit differential equations for the first and second moment, whose fixed points correspond to the stationary statistics of \({\rho }_{S}(\bar{u})\). Note that in the following derivations, we assume that the relaxation operator L is linear.

The first moment, defined by

is the mean potential at time t. Taking this expectation over the density of the mean potential at time t on both sides of Eq. (2), the time-dependent mean potential μ(t) is found to obey the following delayed (noise-free) differential equation:

since 〈S〉 = I, and where we have used the linearity of the operator L. We here introduce the expectation of the activation function f

which also corresponds to the ensemble average firing rate of neurons in the network. One may hence determine the stationary mean μS through the equilibria of Eq. (4) above, which are implicitly defined by

Similarly, the second moment defined by

can be shown to obey the following differential equation39,41

applying the chain rule \(\frac{d}{dt}\langle {\bar{u}}^{2}\rangle =2\langle \bar{u}\frac{d\bar{u}}{dt}\rangle\). Introducing the covariance terms

one may hence consider the equilibrium of Eq. (7) to obtain an expression for the stationary second moment

where the stationary average \({\langle U\rangle }_{S}=\int\,U{\rho }_{S}(\bar{u})d\bar{u}\) is performed over the stationary probability density function \({\rho }_{S}(\bar{u})={\lim }_{t\to \infty }\rho (\bar{u},t)\). The stationary value of the third term on the right hand side of Eq. (8) may be approximated through the property of the Ornstein-Uhlenbeck process39

Since \({\sigma }_{S}^{2}={\langle {\bar{u}}^{2}\rangle }_{S}-{\mu }_{S}^{2}\) and \({\langle \bar{u}I\rangle }_{S}={\mu }_{S}I\), replacing the above in Eq. (8) and subtracting \({\mu }_{S}^{2}\) on both sides gives us an implicit expression for the stationary variance,

The covariance terms \({C}_{k}[{\mu }_{S},{\sigma }_{S}^{2}]\) correspond to the average over the stationary joint probability density function \({\rho }_{S}(\bar{u},{\bar{u}}_{{\tau }_{k}})\)40

The above integral is to be performed over two random variables \(\bar{u}\) and \({\bar{u}}_{{\tau }_{k}}\), which are assumed to be Gaussian with mean μS, variance \({\sigma }_{S}^{2}\) and covariance \(\langle \bar{u}{\bar{u}}_{{\tau }_{k}}\rangle ={\sigma }_{S}^{2}{p}_{k}\), where \({p}_{k}={e}^{-\gamma {\tau }_{k}}\) is the autocorrelation and γ is the correlation rate (see Subsection Covariances in Non-linear networks for more details). We discuss how to determine the correlation rate in further detail in the subsequent sections below. Hence, as per Eq. (3), the joint probability density function may be written explicitly as

where \(Q={((\bar{u}-{\mu }_{S})/{\sigma }_{S}-{p}_{k}({\bar{u}}_{{\tau }_{k}}-{\mu }_{S})/{\sigma }_{S})}^{2}\). Let us now examine specific examples.

Linear networks

To illustrate the approach above, let us first consider the simplistic case in which the response function is given by \(f[\bar{u}]=\bar{u}\) with the constant input I = 0. The mean field dynamics in Eq. (2) may be rewritten as

The stationary mean μS, which corresponds to the equilibrium of Eq. (12), is here given by

since \(\bar{J} \, > \, 0\). Determining the variance \({\sigma }_{S}^{2}\) of the stationary probability density function requires to compute the K covariance terms \({C}_{k}[{\mu }_{S},{\sigma }_{S}^{2}]\) which as per Eq. (10) simplify in the linear case to

As such, since μS = 0, Eq. (9) becomes

We note that whenever K ≫ 1, the above sum can be approximated by an integral to obtain our final expression for the linear case

where \({{\mathcal{L}}}[-\gamma ]={\mathbb{E}}[{e}^{-\gamma \tau }]=\int_{0}^{\infty }{e}^{-\gamma \tau }q(\tau )d\tau\) is the Laplace transform of the delay probability density function q(τ). To determine the correlation rate γ, we first observe that the dynamics of Eq. (12) may be well described for K ≫ 1 by the following Ornstein-Uhlenbeck process41

where \(\alpha =1-\bar{J}{{\mathcal{L}}}[-\gamma ]\). This result follows from the analysis of the characteristic equation of Eq. (12), associated with the trivial fixed point \({\bar{u}}_{o}=0\) in absence of noise, here given by

where \(\lambda \in {\mathbb{C}}\) is the eigenvalue. The eigenvalue λ in the equation above generically depends on both the stationary mean μS and variance \({\sigma }_{S}^{2}\). However, for τk small, one may consider the first order expansion41 \(\lambda =-1+\bar{J}+\epsilon\), ϵ ≪ 1 in Eq. (16), for which the correlation rate becomes \(\gamma =-1+\bar{J}\), which we note is purely real. Substituting into Eq. (14) yields an explicit expression for the variance in the linear case i.e.,

We note that this result corresponds to the approximation provided in previous work41.

Nonlinear networks

We now examine the case where the activation function in Eq. (2) is instead given by the sigmoid

Here, β is the nonlinear response gain and h the excitability threshold of the neurons. Note that Eq. (17) corresponds to the individual mean firing rate of the neurons in the network, i.e. rj = f [uj]. In this more involved case, the stationary mean is given by the following implicit equilibrium relationship

where the functional F at equilibrium reflecting the ensemble average firing rate in the network is given as per Eq. (5),

We note that in contrast to the linear case, now the stationary mean of \(\bar{u}\) implicitly depends on its variance. To compute \({\sigma }_{S}^{2}\), one needs to examine the covariance terms Ck[μ, σ2] at equilibrium. Following a rather laborious technical derivation (see Subsection Covariances in non-linear networks), one can obtain

with the average gain G of the network

This mean network gain is expressed as an average of the single neuron gain \({f}^{{\prime} }(\bar{u})\equiv df/d\bar{u}\) over the density of the average potential. This gain of the network quantifies how the mean activity (i.e. firing rate) varies in response to changes in the mean potential, which itself is a function of all the parameters of the system. The network gain will be used later when gain control properties are investigated. It can be written in terms of network parameters and (μS, σS) as

Replacing into Eq. (9) yields the variance of the mean potential

Using the fact that \({\mu }_{s}\bar{J}F[{\mu }_{S},{\sigma }_{S}^{2}]+{\mu }_{s}I={\mu }_{S}^{2}\) as per Eq. (18), we may consider K ≫ 1 to obtain our final expression for the stationary variance in the nonlinear case

We note that this equation together with Eq. (18) implicitly define the stationary probability density function in Eq. (3). In other words, (μS, σS) must be computed (numerically) as the solution to these two equations. If one was to scan a parameter in the model, as we do below for the noise and input strength parameters D and I, the solution set would define a curve of such solutions in the (μS, σS) space, along which the functions F and G can be evaluated.

Here, the correlation rate γ is still undefined, but can nonetheless be approximated. As in the section above, the correlation rate is also associated with the eigenvalue \(\lambda \in {\mathbb{C}}\) of Eq. (2), which in the non-linear case corresponds to

where the fixed point \({\bar{u}}_{o}\) satisfies the implicit equilibrium relationship \(\bar{{u}_{o}}=\bar{J}f[{\bar{u}}_{o}]+I\) for D = 0. We here adopt the same approach as in the linear case and consider τk small, for which the first order expansion yields the real-valued eigenvalue \(\lambda \approx -1+\bar{J}{f}^{{\prime} }[{\bar{u}}_{o}]+\epsilon\) for ϵ ≪ 1, allowing us to approximate the correlation rate as \(\gamma =-1+\bar{J}\) to first order. Substituting into Eq. (22) leads to an implicit expression for the variance

The above expressions for the statistics of the mean field agree well with numerical simulations (see Fig. 3a–d).

Myelin and conduction delay distributions

To model the effect of myelin, we examined the effect of different conduction velocities c on the propagation of action potentials along axons of given lengths ℓ. We considered two specific cases, in which resulting conduction delays τ = ℓ/c adopt different distributions:

Gamma distributed delays

Let us assume that the K = N2 axons separating neurons in Eq. (1) possess individual lengths ℓij randomly sampled from a Gamma distribution i.e. ℓij ~ Γ[k, θ], where k and θ refer to the shape and scale parameters, respectively. Further, the APs propagate along those axons with constant conduction velocity c, a value that scales with level of myelination along axons. Resulting conduction delays τij = ℓij/c, for a given conduction velocity c are hence also Gamma distributed with density q(τ) = Γ[k, θ/c]. We point out that the mean connection strength \(\bar{J}\) is independent of the connection topology given by the distribution of connection lengths ℓij. In the limit of large N, the associated Laplace transform \({{\mathcal{L}}}[-\gamma ]\) is given by

Uniformly distributed delays

Alternatively, axons separating neurons may display individual lengths ℓij randomly sampled from a uniform distribution i.e. \({\ell }_{ij} \sim U[0,{\ell }_{\max }]\). Note that in contrast to the Gamma distributed case above, the inclusion of a lower bond implies the presence of axons of zero lenghts, allowing neurons to communicate instantaneously for all conduction velocities. With this distribution, the resulting conduction delays τij = ℓij/c are also uniformly distributed with density \(q(\tau )=U[0,{\ell }_{\max }/c]\). In this case, the associated Laplace transform \({{\mathcal{L}}}[-\gamma ]\) reads

Power spectral density

To gain better insight as to the effect of distributed delays on the response of the network to time-varying stimuli, we computed the power spectral density S(ω) of the mean field \(\bar{u}\). Specifically, we linearized Eq. (2) around the deterministic equilibrium \({\bar{u}}_{o}\) to obtain the scalar SDDE,

where we assumed K ≫ 1. Taking the Fourier transform yields

where \(\hat{\bar{u}}=\hat{\bar{u}}(\omega )\) and \(\hat{\xi }(\omega )\) are the Fourier transforms of the mean field and the external driving noise, respectively. Note that we have used the linearity of the operator L. Given that \(\langle \hat{\xi }(\omega ){\hat{\xi }}^{* }({\omega }^{{\prime} })\rangle =\delta (w-{w}^{{\prime} })/2\pi\), where * denotes the complex conjugate,

with the power spectral density

Here, Re(P[a, ω]) and Im(P[a, ω]) correspond to the real and imaginary parts of the characteristic polynomial P[a, ω] associated with the eigenvalue \(\lambda =a+i\omega \in {\mathbb{C}}\), respectively. The polynomial P[a, ω] associated to Eq. (23) for D = 0 is given by

which simplifies for a = 0 to

To separate the (potentially distinct) contributions of the mean delay versus delay variance on the power spectrum in Eq. (25), we considered Gamma distributed delays i.e., q(τ) = Γ(k, θ/c) in the limiting case for large mean and variance. Indeed, whenever k ≫ 1, the delay distribution q approaches a normal distribution i.e. \(q(\tau )\approx {{\mathcal{N}}}(m,{s}^{2})\) with mean delay m = kθ and variance s2 = kθ2. This allows to express the Fourier transform \(\hat{q}\) in Eq. (27) in a separable form i.e., \(\hat{q}(\omega )={e}^{-\frac{1}{2}{\omega }^{2}{s}^{2}}{e}^{i\omega m}\). We relaxed the premises above, and assumed that the mean delay m and variance s2 may be modulated independently in a regime where m > s. While this clearly does not hold for the Gamma distributed case (since the mean and variance are not independent), this approach remains insightful for delay distributions, that adopt a Gaussian shaped profile. We note, that one may alternatively consider uniformly distributed delays within the interval [m − δτ, m + δτ], in which case the delay distribution adopts a mean m and variance s2 = 3δτ2 instead. This yields qualitatively similar results as those portrayed in Fig. 5.

Mean field derivation

The evolution equation (1) describes how the activity of single neurons evolve on short time scales. This short time scale is observed by the neurons’ spike-coupling. The equation can be written as

with

Let us consider the eigenvalue spectrum of the connectivity matrix J46 with the basis vectors \(\{{\psi }_{j}\},\,\{{\phi }_{i}\},\,{\psi }_{j},{\phi }_{j}\in {{{\mathcal{C}}}}^{N}\) and the eigenvalues \({\lambda }_{j}\in {{\mathcal{C}}}\), which obey

Here, † denotes the complex conjugate transposition and δji is the Kronecker symbol with δii = 1 and δij = 0, i ≠ j. Then

and

The terms εj denote the error of the approximation E[XY] ≈ E[X]E[Y] applied in Eq. (30) and \({\varepsilon }_{j}^{\tau }\) represents the approximation error made by replacing the finite sum by an integral in Eq. (31)46. Both error terms vanish for infinite networks, i.e. N → ∞, yielding Eq. (32). The term \({p}_{j}^{\tau }(T)\) denotes the probability density function of the conduction delays τij in connections to the node j.

Inserting Eq. (32) into Eq. (29) yields

where we assumed homogeneous conduction delay statistics with \({p}_{j}^{\tau }(x)=q(x)\) and we approximated the network mean over ψk and Xj(t − T) by the product of their respective mean values \(\bar{{\psi }_{k}}\) and \(\bar{X}(t-T)\). This approximation holds well for large networks. Multiplying Eq. (28) by \({({\psi }_{k}^{{\dagger} })}_{i}\), taking the corresponding sum and inserting Eq. (33) leads to

It can be shown46,78, that imbalanced infinite Erdoes-Renyi networks can be chosen to have an edge spectrum with a single eigenvalue λ1 and the eigenvector ψ1 = (1/N, …, 1/N)t and a vanishing bulk spectrum. Then for k = 1

with \(U(t)={\psi }_{1}^{{\dagger} }{{\bf{u}}}(t)\). If the time constant τs is large, i.e. tens of milliseconds, then the equation considers slow response activity U(t) and fast incoming δ − function-like spike trains (included in \(\bar{X}(t)\)). Now choosing the slow time scale as the primary scale of description of the network’s dynamics, we average the system activity over a short time window Δt with

yielding

Here,

where the last equation assumes asynchronous neural activity in the population in line with previous studies57,71 and Mj is the number of spikes at neuron j in the time interval [t − Δt; t].

Since the delay times τij of number K = N2 are discrete values, we can write

which leads to the final equation (2) with \(\bar{J}={\lambda }_{1}/K\).

Covariances in non-linear networks

The individual covariance terms \({C}_{k}[{\mu }_{S},{\sigma }_{S}^{2}]\) in Eq. (9) are given by the average over the stationary joint probability density function \({\rho }_{S}(\bar{u},{\bar{u}}_{{\tau }_{k}})\)40 as per Eq. (10),

where two random variables \(\bar{u}\) and \({\bar{u}}_{{\tau }_{k}}\) possess the joint probability distribution \({\rho }_{S}(\bar{u},{\bar{u}}_{{\tau }_{k}})\) given by Eq. (11). The variables \(\bar{u}\) and \({\bar{u}}_{{\tau }_{k}}\) are assumed to be Gaussian with mean μS, variance \({\sigma }_{S}^{2}\) and covariance \(\langle \bar{u}{\bar{u}}_{{\tau }_{k}}\rangle ={\sigma }_{S}^{2}{p}_{k}\), where \({p}_{k}={e}^{-\gamma {\tau }_{k}}\) is the autocorrelation and γ is the correlation rate. To facilitate the computation of the integral in Eq. (34), we can consider instead the change of variable \(\bar{u}\to {\bar{u}}^{{\prime} }+{\mu }_{S}\), \({\bar{u}}_{{\tau }_{k}}\to {\bar{u}}_{{\tau }_{k}}^{{\prime} }+{\mu }_{S}\) and write instead

where the surrogate variables \({\bar{u}}^{{\prime} }\) and \({\bar{u}}_{{\tau }_{k}}^{{\prime} }\) are also Gaussian, but with mean 0, which greatly simplifies the calculation. Equation (35) can now me expressed as the sum

with

and

Since \({\rho }_{S}({\bar{u}}^{{\prime} },{\bar{u}}_{{\tau }_{k}}^{{\prime} })={p}_{S}({\bar{u}}^{{\prime} }| {\bar{u}}_{{\tau }_{k}}^{{\prime} }){p}_{S}({\bar{u}}_{{\tau }_{k}}^{{\prime} })\) and assuming a linear correlation pk between \({\bar{u}}_{{\tau }_{k}}\) and \({\bar{u}}_{{\tau }_{k}}^{{\prime} }\), \({p}_{S}({\bar{u}}^{{\prime} }| {\bar{u}}_{{\tau }_{k}}^{{\prime} })\) is Gaussian with mean \({p}_{k}{\bar{u}}_{{\tau }_{k}}^{{\prime} }\) and variance \({\sigma }_{S}^{2}(1-{p}_{k}^{2})\). Then the first term on the right hand side can readily be computed79,

while by normalization the second term becomes,

where F is given by Eq. (19). Using the expressoin for the mean network gain (i.e., Eq. (21)), and combining Eq. (36) and (37) obtained above yields the desired expression for the covariances,

Network simulations

For the present study, network simulations rely on the use of the Euler-Maruyama scheme with a time step of dt = 0.001 sec, implemented in Python and using the Numba package to speed up integration. The duration of the simulations, as well as the specific value of the parameter used in each case are specified in the figure panels, whenever appropriate. Our code was tested for numerical stability for various values of the time step dt, and the value of dt = 0.001 sec was selected for convenience, guaranteeing numerical stability while limiting simulation time. The nonhomogeneous Poisson spike trains with rate f[u] were generated using the method described in80, that is a spike occurs within a time step dt whenever the probability \(P=1-\exp (-f[u]dt)\) exceeds the value of a uniform random deviate within the range (0, 1), independently sampled at each time step. For simplicity, the response function f used for individual neurons is given in Eq. (17). Due to the presence of delays, history functions for the potential variables uj in Eq. (1) were assumed to be trivial, and uniform across neurons i.e., uj(s) = 0 for \({\tau }_{\max }\le s\le 0\), ∀ j where \({\tau }_{\max }=\max [{\tau }_{ij}]\) corresponds to the maximal delay obtained in the network in each realization.

Data availability

The data analyzed in this study has been generated using custom algorithms, accessible as detailed in the Code availability statement below.

Code availability

The algorithms and numerical methods that support the findings of this study are available from GitHub (https://github.com/Jeremie-Lefebvre/Lefebvre-et-al-Comm-Phys-2025).

References

Buldú, J., Garcia-Ojalvo, J. & Torrent, M. Delay-induced resonances in an optical system with feedback. Phys. Rev. E 69, 046207 (2004).

Eurich, C. & Milton, J. Noise-induced transitions in human postural sway. Phys. Rev. E 54, 6681 (1996).

Erneux, T. Applied Delay Differential Equations 1st edn, Vol. 204 (Springer, Heidelberg, 2009).

Hutt, A., Bestehorn, M. & Wennekers, T. Pattern formation in intracortical neuronal fields. Netw. Comput. Neural Syst. 14, 351–368 (2003).

Hutt, A. & Rougier, N. Activity spread and breathers induced by finite transmission speeds in two-dimensional neural fields. Phys. Rev. E 82, R055701 (2010).

Liu, C. et al. The effects of time delay on the stochastic resonance in feed-forward-loop neuronal network motifs. Comm. Nonlin. Sci. Num. Sim. 19, 1088–1096 (2014).

Hashemi, M., Hutt, A., Hight, D. & Sleigh, J. Anesthetic action on the transmission delay between cortex and thalamus explains the beta buzz observed under propofol anesthesia. PLoS 12, e0179286 (2017).

Choi, M., Kim, H., Kim, D. & Hong, H. Synchronization in a system of globally coupled oscillators with time delay. Phys. Rev. E 61, 371 (2000).

Dhamala, M., Jirsa, V. & Ding, M. Enhancement of neural synchrony by time delay. Phys. Rev. Lett. 92, 074104 (2004).

Sen, M. K., Bag, B. C., Petrosyan, K. G. & Hu, C.-K. Effect of time delay on the onset of synchronization of the stochastic kuramoto model. J. Stat. Mech. https://doi.org/10.1088/1742-5468/2010/08/P08018 (2010).

Ray, S., Sen, M., Baura, A. & Bag, B. Lifetime of the incoherent state of coupled phase oscillators. Eur. Phys. J. B 85, 306 (2012).

Deco, G., Jirsa, V., McIntosh, A., Sporns, O. & Kötter, R. Key role of coupling, delay, and noise in resting brain fluctuations. Proc. Natl Acad. Sci. USA 106, 10302–10307 (2009).

Noori, R. et al. Activity-dependent myelination: a glial mechanism of oscillatory self-organization in large-scale brain networks. Proc. Natl Acad. Sci. USA 117, 13227–13237 (2020).

Roxin, A., Brunel, N. & Hansel, D. Role of delays in shaping spatiotemporal dynamics of neuronal activity in large networks. Phys. Rev. Lett. 94, 238103 (2005).

Deco, G., Jirsa, V., Robinson, P., Breakspear, M. & Friston, K. The dynamic brain: from spiking neurons to neural masses and cortical fields. PLoS Comput. Biol. 4, e1000092 (2008).

Cabral, J. et al. Metastable oscillatory modes emerge from synchronization in the brain spacetime connectome. Commun. Phys. 5, 184 (2022).

Salami, M., Itami, C., Tsumoto, T. & Kimura, F. Change of conduction velocity by regional myelination yields constant latency irrespective of distance between thalamus and cortex. Proc. Natl. Acad. Sci. 100, 6174–6179 (2003).

Swadlow, H., Rosene, D. & Waxman, S. Characteristics of interhemispheric impulse conduction between prelunate gyri of the rhesus monkey. Exp. Brain Res. 33, 455–467 (1978).

Swadlow, H. A. & Waxman, S. G. Axonal conduction delays. Scholarpedia 7, 1451 (2012).

Doiron, B., Litwin-Kumar, A., Rosenbaum, R., Ocker, G. & Josić, K. The mechanics of state-dependent neural correlations. Nat. Neurosci. 19, 383–393 (2016).

Talidou, A., Frankland, P., Mabbott, D. & Lefebvre, J. Homeostatic coordination and up-regulation of neural activity by activity-dependent myelination. Nat. Comput. Sci. 2, 665–676 (2022).

Shadlen, M. & Newsome, W. The variable discharge of cortical neurons: implications for connectivity, computation, and information coding. J. Neurosci. 18, 3870–3896 (1998).

Izhikevich, E. Polychronization: computation with spikes. Neural Comput. 18, 245–282 (2006).

Gibson, E. et al. Neuronal activity promotes oligodendrogenesis and adaptive myelination in the mammalian brain. Science 344, 1252304 (2014).

Auer, F., Vagionitis, S. & Czopka, T. Evidence for myelin sheath remodeling in the cns revealed by in vivo imaging. Curr. Biol. 28, 549–559 (2018).

Mount, C. & Monje, M. Wrapped to adapt: experience-dependent myelination. Neuron 95, 743–756 (2017).

Fields, R. A new mechanism of nervous system plasticity: activity-dependent myelination. Nature Rev. Neurosci. 16, 756–767 (2015).

Steadman, P. et al. Disruption of oligodendrogenesis impairs memory consolidation in adult mice. Neuron 105, 150–164 (2020).

Fields, R. & Bukalo, O. Myelin makes memories. Nat. Neurosci. 23, 469–470 (2020).

Xin, W. & Chan, J. Myelin plasticity: sculpting circuits in learning and memory. Nat. Rev. Neurosci. 21, 682–694 (2020).

Cheng, S.-M. & Carr, C. Functional delay of myelination of auditory delay lines in the nucleus laminaris of the barn owl. Dev. Neurobiol. 67, 1957–1974 (2007).

Bells, S. et al. Changes in white matter microstructure impact cognition by disrupting the ability of neural assemblies to synchronize. J. Neurosci. 37, 8227–8238 (2017).

Bells, S. et al. White matter plasticity and maturation in human cognition. Glia 67, 2020–2037 (2019).

Nature Neuroscience. Glia move to the foreground. Nat. Neurosci. 27, 1427–1427 (2024).

Ferraina, S., Paré, M. & Wurtz, R. H. Comparison of cortico-cortical and cortico-collicular signals for the generation of saccadic eye movements. J. Neurophysiol. 87, 845–858 (2002).

Swadlow, H. A. Efferent neurons and suspected interneurons in s-1 forelimb representation of the awake rabbit: receptive fields and axonal properties. J. Neurophysiol. 63, 1477–1498 (1990).

Horsthemke, W. & Lefever, R. Noise-Induced Transitions: Theory and Applications in Physics, Chemistry, and Biology (Springer, Heidelberg, 1984).

Frank, T. & Beek, P. Stationary solutions of linear stochastic delay differential equations: applications to biological systems. Phys. Rev. E 64, 021917 (2001).

Frank, T., Beek, P. & Friedrich, R. Fokker-Planck perspective on stochastic delay systems: exact solutions and data analysis of biological systems. Phys. Rev. E 68, 021912 (2003).

Frank, T. Delay Fokker-Planck equations, perturbation theory, and data analysis for nonlinear stochastic systems with time delays. Phys. Rev. E 71, 031106 (2005).

Frank, T. Kramers–moyal expansion for stochastic differential equations with single and multiple delays: Applications to financial physics and neurophysics. Phys. Lett. A 360, 552–562 (2007).

Pajevic, S., Plenz, D., Basser, P. & Fields, R. Oligodendrocyte-mediated myelin plasticity and its role in neural synchronization. eLife 12, e81982 (2023).

Knowles, J. et al. Maladaptive myelination promotes generalized epilepsy progression. Nat. Neurosci. 25, 596–606 (2022).

Rahmanzadeh, R. et al. Myelin and axon pathology in multiple sclerosis assessed by myelin water and multi-shell diffusion imaging. Brain 144, 1684–1696 (2021).

Guillouzic, S., L’Heureux, I. & Longtin, A. Small delay approximation of stochastic delay differential equations. Phys. Rev. E 59, 3970 (1999).

Lefebvre, J. & Hutt, A. Induced synchronization by endogenous noise modulation in finite-size random neural networks: a stochastic mean-field study. Chaos 33, 123110 (2023).

Reddy, D. V. R., Sen, A. & Johnston, G. L. Time delay induced death in coupled limit cycle oscillators. Phys. Rev. Lett. 80, 5109–5112 (1998).

Atay, F. Distributed delays facilitate amplitude death of coupled oscillators. Physical Rev. Lett. 91, 094101 (2003).

Meyer, U. et al. Distributed delays stabilize neural feedback systems. Biol. Cybern. 99, 79–87 (2008).

Tavakoli, S. & Longtin, A. Multi-delay complexity collapse. Phys. Rev. Res. 2, 033485 (2020).

Hutt, A., Lefebvre, J. & Longtin, A. Delay stabilizes stochastic systems near a non-oscillatory instability. Europhys. Lett. 98, 20004 (2012).

Lefebvre, J. & Hutt, A. Additive noise quenches delay-induced oscillations. Europhys. Lett. 102, 60003 (2013).

Naud, R. & Longtin, A. Spike-diffuse-spike model of axonal propagation links demyelination to compound action potential dispersion. J. Math. Neurosci. 9, 3 (2019).

Hutt, A., Mierau, A. & Lefebvre, J. Dynamic control of synchronous activity in networks of spiking neurons. PloS ONE 11, e0161488 (2016).

Lefebvre, J., Hutt, A., Knebel, J.-F., Whittingstall, K. & Murray, M. Stimulus statistics shape oscillations in nonlinear recurrent neural networks. J. Neurosci. 35, 2895–2903 (2015).

Hutt, A., Lefebvre, J., Hight, D. & Kaiser, H. Phase coherence induced by additive Gaussian and non-Gaussian noise in excitable networks with application to burst suppression-like brain signals. Front. Appl. Math. Stat. 5, 69 (2020).

Brunel, N. Dynamics of sparsely connected networks of excitatory and inhibitory spiking neurons. J. Comput. Neurosci. 8, 183–208 (2000).

Lim, S. et al. Inferring learning rules from distributions of firing rates in cortical neurons. Nat. Neurosci. 18, 1804–1810 (2015).

Ly, C. & Marsat, G. Variable synaptic strengths controls the firing rate distribution in feedforward neural networks. J. Comput. Neurosci. 44, 75–95 (2018).

Williamson, J. & Lyons, D. Myelin dynamics throughout life: an ever-changing landscape ? Front. Cell. Neurosci. 12, 424 (2018).

Allen, N. & Lyons, D. Glia as architects of central nervous system formation and function. Science 362, 181–185 (2018).

Suminaite, D., Lyons, D. & Livesey, M. Myelinated axon physiology and regulation of neural circuit function. Glia 67, 2050–2062 (2019).

Seidl, A. Regulation of conduction time along axons. Neuroscience 276, 126–134 (2014).

Jasper, H. Jasper’s Basic Mechanisms of the Epilepsies 4th edn, vol. 80 (OUP USA, Bethesda (MD), 2012).

Bacmeister, C. et al. Motor learning drives dynamic patterns of intermittent myelination on learning-activated axons. Nat. Neurosci. 25, 1300–1313 (2022).

Pan, S., Mayoral, S., Choi, H., Chan, J. & Kheirbek, M. Preservation of a remote fear memory requires new myelin formation. Nat. Neurosci. 23, 487–499 (2020).

Kohama, S., Rosene, D. & Sherman, L. Age-related changes in human and non-human primate white matter: from myelination disturbances to cognitive decline. Age 34, 1093–1110 (2012).

Wang, F. et al. Myelin degeneration and diminished myelin renewal contribute to age-related deficits in memory. Nat. Neurosci. 23, 481–486 (2020).

Dumont, G., Northoff, G. & Longtin, A. Linear noise approximation for oscillations in a stochastic inhibitory network with delay. Phys. Rev. E 90, 012702 (2014).

Hutt, A., Sutherland, C. & Longtin, A. Driving neural oscillations with correlated spatial input and topographic feedback. Phys. Rev. E 78, 021911 (2008).

Brunel, N. & Hakim, V. Fast global oscillations in networks of integrate-and-fire neurons with low firing rates. Neural Comput. 11, 1621–1671 (1999).

Doiron, B., Lindner, B., Longtin, A., Maler, L. & Bastian, J. Oscillatory activity in electrosensory neurons increases with the spatial correlation of the stochastic input stimulus. Phys. Rev. Lett. 93, 048101 (2004).

Longtin, A. Oscillation onset in neural delayed feedback. In Proc. Third Neural Information Processing Systems Conf. (eds. Lippmann, R. P., Moody, J. E. & Touretzky, D. S.) 130–136 (NIPS, 1993).

Yang, S. M., Michel, K., Jokhi, V., Nedivi, E. & Arlotta, P. Neuron class–specific responses govern adaptive myelin remodeling in the neocortex. Science 370, eabd2109 (2020).

Call, C. L. & Bergles, D. E. Cortical neurons exhibit diverse myelination patterns that scale between mouse brain regions and regenerate after demyelination. Nat. Commun. 12, 1–15 (2021).

René, A. & Longtin, A. Mean, covariance, and effective dimension of stochastic distributed delay dynamics. Chaos 27, 114322 (2017).

Pajevic, S., Basser, P. & Fields, R. Role of myelin plasticity in oscillations and synchrony of neuronal activity. Neurosci. 276, 135–147 (2014).

Hutt, A., Wahl, T., Voges, N., Hausmann, J. & Lefebvre, J. Coherence resonance in random Erdos-Renyi neural networks : mean-field theory. Front. Appl. Math. Stat. 7, 697904 (2021).

Ng, E. W. & Geller, M. A table of integrals of the error functions. J. Res. 73B, 1–44 (1969).

Gerstner, W. & Kistler, W. Spiking Neuron Models: Single Neurons, Populations, Plasticity (Cambridge University Press, 2002).

Lam, S. K., Pitrou, A. & Seibert, S. Numba: a llvm-based python jit compiler. In Proc. Second Workshop on the LLVM Compiler Infrastructure in HPC, LLVM ’15, 1–6 (Association for Computing Machinery, New York, NY, USA, 2015).

Acknowledgements

We thank the National Sciences and Engineering Research Council of Canada (NSERC Grants RGPIN-2017-06662) for support of this research.

Author information

Authors and Affiliations

Contributions