Abstract

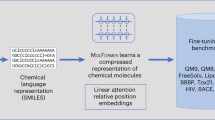

Optimizing a candidate molecule’s physicochemical and functional properties has been a critical task in drug and material design. Although the non-trivial task of balancing multiple (potentially conflicting) optimization objectives is considered ideal for artificial intelligence, several technical challenges such as the scarcity of multiproperty-labelled training data have hindered the development of a satisfactory AI solution for a long time. Prompt-MolOpt is a tool for molecular optimization; it makes use of prompt-based embeddings, as used in large language models, to improve the transformer’s ability to optimize molecules for specific property adjustments. Notably, Prompt-MolOpt excels in working with limited multiproperty data (even under the zero-shot setting) by effectively generalizing causal relationships learned from single-property datasets. In comparative evaluations against established models such as JTNN, hierG2G and Modof, Prompt-MolOpt achieves over a 15% relative improvement in multiproperty optimization success rates compared with the leading Modof model. Furthermore, a variant of Prompt-MolOpt, named Prompt-MolOptP, can preserve the pharmacophores or any user-specified fragments under the structural transformation, further broadening its application scope. By constructing tailored optimization datasets, with the protocol introduced in this work, Prompt-MolOpt steers molecular optimization towards domain-relevant chemical spaces, enhancing the quality of the optimized molecules. Real-world tests, such as those involving blood–brain barrier permeability optimization, underscore its practical relevance. Prompt-MolOpt offers a versatile approach for multiproperty and multi-site molecular optimizations, suggesting its potential utility in chemistry research and drug and material discovery.
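The abstract reports the gain over Modof as a relative improvement in success rate, which is easy to confuse with an absolute gain in percentage points. The short sketch below shows the arithmetic only. The function name and the two success rates are hypothetical placeholders chosen for illustration; they are not values reported in the paper.

```python
def relative_improvement(baseline_rate, new_rate):
    """Relative change of new_rate with respect to baseline_rate.

    Both arguments are success rates expressed as fractions in (0, 1].
    """
    if baseline_rate <= 0:
        raise ValueError("baseline_rate must be positive")
    return (new_rate - baseline_rate) / baseline_rate


# Placeholder success rates for illustration only (not taken from the paper).
baseline = 0.40
improved = 0.46

# The absolute gain is measured in percentage points of success rate.
print(f"absolute gain: {improved - baseline:.2f}")
# The relative improvement is the gain divided by the baseline rate.
print(f"relative improvement: {relative_improvement(baseline, improved):.1%}")
```

With these placeholder numbers, a gain of 6 percentage points over a 40% baseline corresponds to a 15% relative improvement.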

Data availability

The datasets used in this study and the data generated in this study are available at https://github.com/wzxxxx/Prompt-MolOpt and https://doi.org/10.5281/zenodo.11080951 (ref. 47). Source data are provided with this paper.

Code availability

The models were implemented using Python (v.3.6.13) with DGL (v.0.7.1) and PyTorch (v.1.6.0). The data processing and metrics calculation were implemented using Python (v.3.6.13) with scikit-learn (v.0.21.3), NumPy (v.1.19.2) and Pandas (v.1.1.5). The code for Prompt-MolOpt, Prompt-MolOptm and Prompt-MolOptP is publicly available at https://github.com/wzxxxx/Prompt-MolOpt and https://doi.org/10.5281/zenodo.11080951 (ref. 47).
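For readers who want to recreate a comparable environment, the reported versions can be written out as pip-style pins. The following is a minimal sketch rather than the authors' own setup: the distribution names (for example `torch` for PyTorch) are assumed PyPI names, the helper `render_requirements` is hypothetical, and any environment file shipped in the repository should take precedence over it.

```python
# Library versions as reported in the Code availability statement.
# Keys are ASSUMED PyPI distribution names, not names confirmed by the paper.
REPORTED_VERSIONS = {
    "dgl": "0.7.1",
    "torch": "1.6.0",
    "scikit-learn": "0.21.3",
    "numpy": "1.19.2",
    "pandas": "1.1.5",
}


def render_requirements(versions=REPORTED_VERSIONS):
    """Return the pins as requirements-file text, one 'name==version' per line."""
    return "\n".join(f"{name}=={ver}" for name, ver in versions.items())


# Print the pins; redirect the output to a file to use it with pip.
print(render_requirements())
```

The Python interpreter version (v.3.6.13) is not expressed by these pins and would need to be set separately, for example when creating the virtual environment.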

References

Fromer, J. C. & Coley, C. W. Computer-aided multi-objective optimization in small molecule discovery. Patterns 4, 100678 (2023).

Nicolaou, C. A. & Brown, N. Multi-objective optimization methods in drug design. Drug Discov. Today Technol. 10, e427–e435 (2013).

Jorgensen, W. L. Efficient drug lead discovery and optimization. Acc. Chem. Res. 42, 724–733 (2009).

Leelananda, S. P. & Lindert, S. Computational methods in drug discovery. Beilstein J. Org. Chem. 12, 2694–2718 (2016).

Zhang, X. et al. Efficient and accurate large library ligand docking with KarmaDock. Nat. Comput. Sci. 3, 789–804 (2023).

Shen, C. et al. Boosting protein–ligand binding pose prediction and virtual screening based on residue–atom distance likelihood potential and graph transformer. J. Med. Chem. 65, 10691–10706 (2022).

Maia, E. H. B., Assis, L. C., De Oliveira, T. A., Da Silva, A. M. & Taranto, A. G. Structure-based virtual screening: from classical to artificial intelligence. Front. Chem. 8, 343 (2020).

Gentile, F. et al. Artificial intelligence-enabled virtual screening of ultra-large chemical libraries with deep docking. Nat. Protoc. 17, 672–697 (2022).

Choung, O.-H., Vianello, R., Segler, M., Stiefl, N. & Jiménez-Luna, J. Extracting medicinal chemistry intuition via preference machine learning. Nat. Commun. 14, 6651 (2023).

Cheshire, D. R. How well do medicinal chemists learn from experience? Drug Discov. Today 16, 817–821 (2011).

Shan, J. & Ji, C. MolOpt: a web server for drug design using bioisosteric transformation. Curr. Comput. Aided Drug Des. 16, 460–466 (2020).

Yang, H. et al. ADMETopt: a web server for ADMET optimization in drug design via scaffold hopping. J. Chem. Inf. Model. 58, 2051–2056 (2018).

Dossetter, A. G., Griffen, E. J. & Leach, A. G. Matched molecular pair analysis in drug discovery. Drug Discov. Today 18, 724–731 (2013).

Tu, Z. & Coley, C. W. Permutation invariant graph-to-sequence model for template-free retrosynthesis and reaction prediction. J. Chem. Inf. Model. 62, 3503–3513 (2022).

Jin, W., Barzilay, R. & Jaakkola, T. Multi-objective molecule generation using interpretable substructures. In Proc. 37th International Conference on Machine Learning 4849–4859 (PMLR, 2020).

Kong, D. et al. Dual-space optimization: improved molecule sequence design by latent prompt transformer. Preprint at https://arxiv.org/abs/2402.17179 (2024).

Shi, C. et al. GraphAF: a flow-based autoregressive model for molecular graph generation. Preprint at https://arxiv.org/abs/2001.09382 (2020).

Zang, C. & Wang, F. Moflow: an invertible flow model for generating molecular graphs. In Proc. 26th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining 617–626 (ACM, 2020).

Jin, W., Barzilay, R. & Jaakkola, T. Junction tree variational autoencoder for molecular graph generation. In Proc. 35th International Conference on Machine Learning 2323–2332 (PMLR, 2018).

Podda, M., Bacciu, D. & Micheli, A. A deep generative model for fragment-based molecule generation. In Proc. 23rd International Conference on Artificial Intelligence and Statistics 2240–2250 (PMLR, 2020).

Chen, Z., Min, M. R., Parthasarathy, S. & Ning, X. A deep generative model for molecule optimization via one fragment modification. Nat. Mach. Intell. 3, 1040–1049 (2021).

Floridi, L. & Chiriatti, M. GPT-3: its nature, scope, limits, and consequences. Minds Mach. 30, 681–694 (2020).

Castro Nascimento, C. M. & Pimentel, A. S. Do large language models understand chemistry? A conversation with ChatGPT. J. Chem. Inf. Model. 63, 1649–1655 (2023).

Guo, H., Zhao, S., Wang, H., Du, Y. & Qin, B. Moltailor: tailoring chemical molecular representation to specific tasks via text prompts. Preprint at https://arxiv.org/abs/2401.11403 (2024).

Ye, G. et al. DrugAssist: a large language model for molecule optimization. Preprint at https://arxiv.org/abs/2401.10334 (2023).

Zhou, K., Yang, J., Loy, C. C. & Liu, Z. Conditional prompt learning for vision-language models. In Proc. IEEE/CVF Conference on Computer Vision and Pattern Recognition 16816–16825 (IEEE, 2022).

He, Y. et al. HyperPrompt: prompt-based task-conditioning of transformers. Preprint at https://arxiv.org/abs/2203.00759 (2022).

Liu, P. et al. Pre-train, prompt, and predict: a systematic survey of prompting methods in natural language processing. ACM Comput. Surv. 55, 1–35 (2023).

Zhang, X. et al. Clamp: prompt-based contrastive learning for connecting language and animal pose. In Proc. IEEE/CVF Conference on Computer Vision and Pattern Recognition 23272–23281 (IEEE, 2023).

Teterwak, P., Sun, X., Plummer, B. A., Saenko, K. & Lim, S.-N. CLAMP: contrastive language model prompt-tuning. Preprint at https://arxiv.org/abs/2312.01629 (2023).

Born, J. & Manica, M. Regression transformer enables concurrent sequence regression and generation for molecular language modelling. Nat. Mach. Intell. 5, 432–444 (2023).

Seidl, P., Vall, A., Hochreiter, S. & Klambauer, G. Enhancing activity prediction models in drug discovery with the ability to understand human language. In Proc. 40th International Conference on Machine Learning 30458–30490 (PMLR, 2023).

Wu, Z. et al. Chemistry-intuitive explanation of graph neural networks for molecular property prediction with substructure masking. Nat. Commun. 14, 2585 (2023).

Wu, Z. et al. Mining toxicity information from large amounts of toxicity data. J. Med. Chem. 64, 6924–6936 (2021).

Jin, W., Yang, K., Barzilay, R. & Jaakkola, T. Learning multimodal graph-to-graph translation for molecule optimization. In Proc. International Conference on Learning Representations 856 (ICLR, 2019).

Jin, W., Barzilay, R. & Jaakkola, T. Hierarchical generation of molecular graphs using structural motifs. In Proc. 37th International Conference on Machine Learning 4839–4848 (PMLR, 2020).

Bickerton, G. R., Paolini, G. V., Besnard, J., Muresan, S. & Hopkins, A. L. Quantifying the chemical beauty of drugs. Nat. Chem. 4, 90–98 (2012).

Delaney, J. S. ESOL: estimating aqueous solubility directly from molecular structure. J. Chem. Inf. Comput. Sci. 44, 1000–1005 (2004).

Xu, C. et al. In silico prediction of chemical Ames mutagenicity. J. Chem. Inf. Model. 52, 2840–2847 (2012).

Xiong, G. et al. ADMETlab 2.0: an integrated online platform for accurate and comprehensive predictions of ADMET properties. Nucleic Acids Res. 49, W5–W14 (2021).

Wu, Z. et al. MoleculeNet: a benchmark for molecular machine learning. Chem. Sci. 9, 513–530 (2018).

Cid, J. M. et al. Discovery of 3-cyclopropylmethyl-7-(4-phenylpiperidin-1-yl)-8-trifluoromethyl[1,2,4]triazolo[4,3-a]pyridine (JNJ-42153605): a positive allosteric modulator of the metabotropic glutamate 2 receptor. J. Med. Chem. 55, 8770–8789 (2012).

Wildman, S. A. & Crippen, G. M. Prediction of physicochemical parameters by atomic contributions. J. Chem. Inf. Comput. Sci. 39, 868–873 (1999).

Wishart, D. S. et al. DrugBank 5.0: a major update to the DrugBank database for 2018. Nucleic Acids Res. 46, D1074–D1082 (2018).

Olivecrona, M., Blaschke, T., Engkvist, O. & Chen, H. Molecular de-novo design through deep reinforcement learning. J. Cheminform. 9, 48 (2017).

Schlichtkrull, M. et al. Modeling relational data with graph convolutional networks. In Proc. 15th International Conference, ESWC 593–607 (Springer, 2018).

Zhenxing, W. et al. Leveraging language model for advanced multi-property molecular optimization via prompt engineering. Zenodo https://doi.org/10.5281/zenodo.11080951 (2023).

Acknowledgements

This study was financially supported by the National Key R&D Program of China (grant number 2021YFF1201400 to T.H.), the National Natural Science Foundation of China (grant numbers 22220102001 to T.H., 82404512 to Z.W. and 22303083 to J.W.), the Natural Science Foundation of Zhejiang Province of China (grant number LD22H300001 to T.H.), the China Postdoctoral Foundation (grant number 2023M742993 to Z.W.) and the Postdoctoral Fellowship Program of CPSF (grant number GZB20240671 to Z.W.).

Author information

Contributions

T.H., C.Y.H., D.C. and Z.W. designed the research study. Z.W. developed the method and wrote the code. Z.W., O.Z., X.W., L.F., H.Z., J.W., H.D., D.J. and Y.D. performed the analysis. Z.W., T.H., C.Y.H. and D.C. wrote the paper. All authors read and approved the paper.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Machine Intelligence thanks Arvind Ramanathan and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Supplementary Information

Supplementary Figs. 1–5, Discussion 1–5 and Tables 1–5.

Source data

Source Data Fig. 1

The structural optimization of compound 1. The table provides the Source Data for Fig. 1 and also includes the SlogP and BBBP prediction values of the original and optimized molecules. The table also presents information on the top-10 optimized molecules by Prompt-MolOptP on the basis of prompt tokens ‘BBBP’ and ‘BBBP lipop’, including the SMILES, SlogP and BBBP prediction values.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Wu, Z., Zhang, O., Wang, X. et al. Leveraging language model for advanced multiproperty molecular optimization via prompt engineering. Nat Mach Intell 6, 1359–1369 (2024). https://doi.org/10.1038/s42256-024-00916-5

DOI: https://doi.org/10.1038/s42256-024-00916-5