Abstract

Digital technology has become crucial for the protection of cultural relics, enabling their acquisition, preservation, exhibition, and dissemination in a non-contact manner. At present, research on three-dimensional (3D) visualization of cultural relics focuses on non-true 3D visualization, which cannot provide continuous viewing angles and takes a long time to render. This paper focuses on the acquisition and reproduction of digital information about cultural relics, and proposes a true 3D display of cultural relics with continuous viewing angles based on integral imaging (InIm). Building on traditional InIm, an elemental image array (EIA) generation algorithm based on local depth template matching is designed using spatial geometric relationships. Experimental results show that the algorithm not only enables naked-eye 3D visualization of cultural relics, but also generates the 3D display data source more than twice as fast as the compared generation algorithm.

Introduction

Cultural relics record the cultural, economic and political development of a country and a nation in a specific period, and are authentic, non-replicable and irreplaceable. However, with the passage of time, changes in the natural environment and human activities have led to the ongoing destruction of ancient cultural relics. How to use new technologies to let people appreciate cultural relics while protecting them from damage, so as to maximize their preservation, has become a pressing global issue. To address this problem, a great deal of research has been carried out domestically and internationally. For example, the United States and Italy cooperated on “The Digital Michelangelo Project”, and the European Community jointly carried out the “Virtual Heritage” program [1]. In recent years, the Dunhuang Academy has also been committed to using digital technology to preserve and restore the Dunhuang murals and other endangered cultural relics. In addition, 3D digital reproduction plays a crucial role, especially for historical artifacts that have never been publicly displayed. For example, the Palace Museum houses over 1.5 million cultural relics, yet less than 2% are exhibited to the public [2]. By employing 3D digital technologies, previously unseen cultural relics can be presented to the public, which benefits both the popularization of history and culture and the cultivation of artistic taste. For this reason, more and more online museums have been launched in recent years to visualize 3D data of cultural relics, so that the audience can view objects of interest entirely online. However, current online museums mostly show a single cultural relic at a time and require users to adjust the perspective manually.

This limitation impedes simultaneous viewing from multiple angles, and the method can only achieve 2D display. Some researchers also use 3D scanning or 3D printing to restore and virtually display cultural relics, but these technologies require specific equipment to be placed in specific locations, which limits their wider adoption [3]. In addition, the accuracy of 3D scanning depends on the quality of the equipment, which greatly increases the cost, and the equipment is not easy to carry or deploy widely.

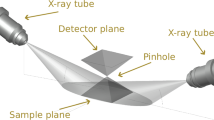

This paper presents a technology for true 3D visualization of cultural relics with naked-eye viewing, based on InIm. The schematic diagram of this technology is shown in Fig. 1. The micro-lens array used in the acquisition process is simulated by computer to assist the “one-search-one” optical path mapping from the EIA to the original scene (OS) image, so that the OS color information and depth (spatial) information are recorded in a 2D EIA. The EIA is a 3D light field display data source seamlessly spliced from multiple elemental images (EIs). Each EI is a local image formed by the rays of the original light field that pass through one micro-lens, and has the same physical size as the micro-lens. Finally, through the display micro-lens array, the light rays intersect in space, restoring the 3D light field information of the scene and realizing the 3D visualization of cultural relics. For the EIA generation process, we propose an EIA generation algorithm based on local depth template matching that exploits the spatial geometric relationships in InIm and greatly shortens the generation time of the 3D display data source.

Our main contributions can be summarized as follows:

1. We propose a naked-eye 3D visualization technology for cultural relics that eliminates the need for auxiliary equipment and allows unrestricted viewing within the effective viewing angle. This technology achieves a natural, true 3D visual effect of cultural relics with full parallax and continuous viewing angles.

2. We introduce a low-cost and efficient digital acquisition technology for cultural relics. It enables the simultaneous acquisition of color and spatial data information for a group of related cultural relics in a single shot.

3. We present an algorithm for generating EIA based on local depth template matching. This algorithm reduces time costs without compromising the quality of EIA generation, thus advancing research into real-time naked-eye 3D reproduction of cultural relics.

The paper is structured as follows: a brief overview of recent digital 3D techniques for cultural artifacts is given in “The related work”. The research methodology is described in “The proposed method”. Experimental validation and discussion of the proposed method are presented in “Experiment and discussion”. Conclusions and future research directions are given in “Conclusion”.

The related work

3D visualization of cultural relics

After 3D scanning and data processing of the target cultural relic, the corresponding digital model is obtained, on the basis of which 3D visualization of the relic can be realized. At present there are two main forms. One uses 3D printing to build a physical visualization of the model. The other reconstructs and renders the 3D data into a 3D model, then uses cloud computing to build a display system for cultural relics in which the 3D models are organized and scheduled in a unified way. Physical visualization shows the shape, structure and proportions of objects more intuitively, which aids understanding and communication. For example, Kantaros et al. used 3D scanning and 3D printing to study late Bronze Age pottery and artifacts in the Piraeus Archaeological Museum [4, 5]. However, the printing accuracy of this technology is limited, and it does not allow viewing anytime and anywhere.

Digital 3D visualization, by contrast, is lightweight, easy to disseminate, and better suited to the reading and browsing habits of the public in fast-paced modern life. It has therefore attracted the attention of the industry and become an effective means of digital communication of cultural relics. In the twenty-first century, cultural relic digitization has evolved along two distinct paths: the first emphasizes high-fidelity reproduction of physical artifacts, exemplified by Google’s “Google Arts & Culture” project [6], aiming for lifelike representations; the second is completely detached from the setting of physical museums and pursues immersive "virtual" interaction. As expectations for the viewing experience rise, highly faithful 3D representation of physical cultural relics has become the key research topic in digital 3D visualization of cultural relics.

The 3D visualization technology used in this study is a true 3D light field display technology that provides correct 3D perception and occlusion in both the vertical and horizontal directions, with multiple views and full color.

3D digital acquisition of cultural relics

The 3D digitization of precious historical artifacts has strict requirements and must be non-destructive, so non-contact 3D information acquisition is currently the main approach. Non-contact acquisition draws on physics and mathematics, and obtains the 3D spatial digital information of an object by means such as lidar, structured light, sound waves or electromagnetic waves. It can be divided into active and passive types. Active acquisition equipment emits a signal toward the object and converts the physical feedback signal into 3D digital information of the object; it has a faster conversion speed and is currently the main digital acquisition method. For example, Bernardini used 3D laser scanning based on the time-of-flight method to digitize Michelangelo's sculpture [7]. Ren of Zhejiang University used projected coded structured light to complete the 3D model reconstruction of the Jinsha jade chisel and the Liangzhu black pottery tripod [8]. Although these techniques reconstruct well, generating high-quality 3D models remains very time-consuming even when a single object, rather than a whole scene, is modeled. For example, according to the 3D Conform Project [9], it takes up to 20 h to acquire a 50 × 50 × 50 cm object using structured light. To reduce this time cost, some researchers have proposed automatic processing of 3D scanning data of cultural relics to realize accurate and autonomous digital cultural relic design [10]. In actual viewing, the naked eye can only see the surface features of an object and the space around it, whereas the 3D reconstruction processes above also demand a complete model of the object's inner cavity, which makes them even more time-consuming and costly.

Passive acquisition is based on the principle of stereo vision measurement and can be categorized into monocular, binocular and multi-view vision acquisition according to the number of images from different angles used to generate the digital information. Depth information is obtained by computing the relationship between multiple view images, or a set of consecutive images taken from different viewpoints, to generate point cloud data. Stereo vision acquisition is relatively flexible and convenient, and relies mainly on image processing algorithms. Increasing the number of cameras can improve measurement and reconstruction accuracy, but it also makes the hardware system more complex and more costly.

This paper adopts a digital acquisition method based on the principle of monocular stereo vision. Research teams at Kookmin University [11] and Dalian University of Technology [12], among others, have studied this approach. With the development of deep learning, David Eigen [13] was the first to apply deep learning to monocular image depth estimation, which made 3D light field information acquisition based on monocular vision simpler and suitable for large-scale and complex scenes. Therefore, in this paper, monocular stereo vision is used as the InIm scene information acquisition method: color images and their registered depth images are used, and local depth matching templates are constructed in the mapping process to achieve rapid generation of the EIA.

The proposed method

InIm was proposed by Lippmann in 1908 [14], inspired by the bionic structure of the insect compound eye, an advanced and complex imaging system consisting of many closely spaced, hemispherically distributed small eyes (ommatidia), with the advantages of small size, distortion-free imaging, a wide field of view, and sensitive motion tracking. InIm imitates this structure with a micro-lens array, in which each micro-lens acts like an insect's small eye and can map multi-angle, large-field spatial information of the target.

As shown in Fig. 2, InIm can be divided into an OS acquisition process and a light field reconstruction (reproduction) process. In the acquisition process, the color information of the OS is mapped through the simulated micro-lens array to a position determined by the spatial information of the corresponding point, and recorded in the 2D EIA. In the reconstruction process, by the reversibility of the light path, the 2D EIA is passed through a micro-lens array with the same specification as in the acquisition process, and the light rays intersect in space to form a 3D reproduction of the OS. This study focuses on the EIA generation stage of the scene information acquisition process.

The EIA is generated from the color image and depth image of the OS by building a mapping model from the original light field to the EIA that simulates the geometry of the 3D spatial light paths. The model mainly comprises two parts: establishing the mapping position relationship and writing the color information. This approach has received extensive attention because the digital acquisition process is convenient and the generated images have high resolution, but it suffers from long computation times and a complicated mapping process. In 2012, Li et al. [15] studied this method. Its principle is shown in Fig. 3: a point \(A\) in the OS is imaged through the optical centers of different micro-lenses to form a series of homologous points. Because of the limited micro-lens size, when the micro-lenses are closely arranged, the size of an elemental image cannot exceed the micro-lens pitch \(P\). The red circle in Fig. 3 shows that \(A_{3}\) falls outside the imaging range of its corresponding micro-lens and is therefore an invalid mapping. In addition, as shown by the dotted line in Fig. 3, multiple pixels of the OS can be mapped to the same display pixel, which is redundant mapping. These two situations are the main reason for the inefficiency of this method. Furthermore, when mapping points at a depth step parallel to the \(Z\)-axis, holes appear in the EIA. Since the EIA represents spatial positions with discrete point coordinates, the mapping requires rounding to integers; after rounding, some pixels of the EIA receive no mapping point, which also causes holes. These holes seriously degrade the image quality and the subsequent 3D reproduction.
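The hole and redundant-mapping effects described above can be illustrated with a toy one-dimensional model. The sketch below is not the mapping of reference [15]; it assumes a hypothetical uniform scale factor between source and destination pixel indices and simply counts how many source pixels land on each destination pixel after rounding down. The function name `forward_map` and its parameters are illustrative.

```python
import math


def forward_map(num_src, scale, num_dst):
    """Toy forward mapping: source pixel i lands on floor(i * scale).

    Returns (holes, redundant): destination indices that receive no
    source pixel, and indices that receive more than one.
    """
    hits = [0] * num_dst
    for i in range(num_src):
        j = math.floor(i * scale)  # integer rounding of the mapped position
        if 0 <= j < num_dst:       # positions outside the image are invalid
            hits[j] += 1
    holes = [j for j, count in enumerate(hits) if count == 0]
    redundant = [j for j, count in enumerate(hits) if count > 1]
    return holes, redundant


# Stretching (scale > 1) leaves holes; shrinking (scale < 1) causes overlaps.
print(forward_map(5, 2.0, 10))
print(forward_map(10, 0.5, 5))
```

In this toy model a reverse mapping, which starts from each destination pixel, visits every destination index exactly once and so avoids both effects by construction.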

In 2020, Gu et al. [16] proposed generating the EIA by reverse mapping from the display light field to the OS: as shown by the orange rays in Fig. 3, a ray is traced from each display pixel to find its unique mapping point in the original light field. This algorithm removes the redundant mapping problem, effectively improves the computation speed, and eliminates the holes caused by unmatched mapping points. However, finding the unique mapping point requires repeated iterative comparisons as the depth changes, and the search must start from each pixel of the EIA individually, so the algorithm is still time-consuming and its generation speed is not yet ideal. To solve these problems, this study proposes an EIA generation method based on local depth matching to complete the digital acquisition of 3D information such as the surface and color characteristics of cultural relics. First, a "one-search-one" local depth matching template from the EIA to the OS image is built, and a one-time match of two depth range matrices replaces the repeated iterative comparison over depth changes used in reference [16], reducing the computation needed to find the unique mapping point of a single display pixel. Second, the local depth matching template is applied globally: using the spatial relationships of InIm, the template of a display pixel in one EI is applied to its co-located pixels across the whole EIA, reducing the computation needed to find the mapping points of all display pixels. In this paper, the display pixels at the same location in different EIs of the same EIA are defined as "co-located points".

Constructing a "one-search-one" local depth matching template

This study is based on the principle of ray tracing and takes a single square micro-lens as an example to illustrate the EIA generation process, as shown in Fig. 4.

From the display pixel \(A(x, y)\) in row \(i\) and column \(j\) of the EI, a light ray is emitted through the optical center \(O(x_{0}, y_{0}, z)\) of the micro-lens and intersects the OS image at \(A(x_{A}, y_{A}, z_{A})\), where \(x_{A}\) and \(y_{A}\) can be calculated by Eq. (1):

\(g\) is the distance from the EIA plane to the micro-lens array.
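The ray-tracing relation referred to as Eq. (1) can be sketched with similar triangles through the optical center. The sketch assumes that \(z_{A}\) is measured from the micro-lens plane, that the EIA plane lies at distance \(g\) on the opposite side, and that all quantities are in the same physical unit. The function name `trace_to_depth_plane` and the sign convention are illustrative assumptions, not necessarily the paper's exact formula.

```python
def trace_to_depth_plane(x, y, x0, y0, g, z_a):
    """Intersect the ray from display pixel (x, y) through the lens
    center (x0, y0) with the plane at depth z_a (assumed convention)."""
    scale = z_a / g  # similar triangles on either side of the lens
    x_a = x0 + (x0 - x) * scale
    y_a = y0 + (y0 - y) * scale
    return x_a, y_a


# Hypothetical values: pixel 0.1 to the left of the lens center, g = 2, z_A = 10.
print(trace_to_depth_plane(0.4, 0.5, 0.5, 0.5, 2.0, 10.0))
```

Under this convention a display pixel on the optical axis maps to the lens center at every depth, and the lateral displacement grows linearly with \(z_{A}\).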

In the actual mapping process, the spatial path of a light ray is expressed in physical coordinates, whereas positions in the images are expressed in pixel coordinates. The calculation must therefore account for the unit conversion between the two. The coordinate transformation used in this research is as follows. Let \(i\), \(j\) be the pixel coordinates in the EI and \(i_{A}\), \(j_{A}\) the pixel position in the OS image. \(P_{D}\) is the pixel size in the EIA, that is, the pixel size of the display device used in the reproduction process, and \(P_{I}\) is the pixel size in the OS image. Then the following relationship holds:

\(D\) is the depth of the central depth plane (CDP) of the light field, given by \(D = \frac{f \times g}{g - f}\), where \(f\) is the focal length of the micro-lens.

In summary, when calculating \(x_{A}\) and \(y_{A}\), only the depth \(z_{A}\) is unknown. Once the unique depth plane at which the ray intersects the original light field is found, \(z_{A}\) is known, \(x_{A}\) and \(y_{A}\) follow from Eq. (1), and the "one-search-one" mapping from \(A\) to the OS image pixel is complete.

The core of the “one-search-one” mapping is as follows. The ray emitted from a display pixel in the EI intersects the minimum depth plane (\(z_{\min}\)) and the maximum depth plane (\(z_{\max}\)) of the OS, and a depth change matrix running from \(z_{\min}\) to \(z_{\max}\) is established over the range between the two intersection points. At the same time, a depth matrix is built from the pixels of the OS depth image that lie between the two intersection points. In the OS depth image, one pixel position corresponds to only one depth value, so the position at which the two matrices hold equal depth values is the mapping point in the scene image that matches the display pixel. The specific process is described as follows:

First, since integral imaging places strict requirements on the depth range of the reconstructed light field, the depth data \(h\) of the original light field must be adjusted and inverted following the method in reference [18], compressing it into the depth range of the reconstructed light field as the depth \(z\). Taking a single data value as an example, the conversion formula is as follows:

\(h_{\min}\) and \(h_{\max}\) are the known minimum and maximum depth values in the depth image.
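One plausible form of this conversion is a linear rescaling with inversion. The sketch below assumes that the depth range of the reconstructed light field is written \([z_{\min}, z_{\max}]\) and that the largest raw depth value \(h_{\max}\) maps to \(z_{\min}\); both the linear form and the inversion direction are assumptions, since the method of reference [18] is not reproduced here. The function name `compress_depth` is hypothetical.

```python
def compress_depth(h, h_min, h_max, z_min, z_max):
    """Linearly map h in [h_min, h_max] onto [z_min, z_max], inverted so
    that h_max lands on z_min (the inversion direction is an assumption)."""
    if h_max == h_min:
        return 0.5 * (z_min + z_max)  # flat depth map: use the mid-plane
    t = (h - h_min) / (h_max - h_min)  # normalized depth in [0, 1]
    return z_max - t * (z_max - z_min)


# Hypothetical 8-bit depth image compressed into the range [8, 12].
print(compress_depth(0, 0, 255, 8.0, 12.0))
print(compress_depth(255, 0, 255, 8.0, 12.0))
```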

Next, the “one-search-one” mapping of a display pixel \(A\) is performed. As shown in Fig. 4, once the hardware parameters are fixed, the light field has a fixed displayable depth range, \(\Delta d = \frac{2 \times D \times P_{I}}{P}\), where \(P\) is the pitch of the micro-lens. From this, \(z_{\min}\) and \(z_{\max}\) of the reproduced light field are \(z_{\min} = D - \frac{\Delta d}{2}\) and \(z_{\max} = D + \frac{\Delta d}{2}\).
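The quantities \(D\), \(\Delta d\), \(z_{\min}\) and \(z_{\max}\) follow directly from the formulas stated in the text. A minimal sketch is given below; the function name `display_depth_range` and the numeric values in the usage line are hypothetical, and the check on \(g > f\) is added only because the CDP formula is positive in that case.

```python
def display_depth_range(f, g, pitch, p_i):
    """Return (D, delta_d, z_min, z_max) from the formulas in the text."""
    if g <= f:
        raise ValueError("D = f*g/(g-f) is positive only for g > f")
    d = f * g / (g - f)              # central depth plane (CDP)
    delta_d = 2.0 * d * p_i / pitch  # displayable depth range
    return d, delta_d, d - delta_d / 2.0, d + delta_d / 2.0


# Hypothetical parameters: f = 3, g = 4, P = 1, P_I = 0.1 (same length unit).
print(display_depth_range(3.0, 4.0, 1.0, 0.1))
```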

The ray emitted from \(A\) intersects the \(z_{\min}\) and \(z_{\max}\) planes at points \(A_{\min}\) and \(A_{\max}\), respectively. \(A_{\min}\) and \(A_{\max}\) can be calculated from Eq. (1).

For simplicity, the \(Y\)-axis is omitted and the coordinate system shown in Fig. 5 is established, with the \(X\)-axis coordinate as the reference. Assuming that the mapping point moves along the line segment \(A_{\min}A_{\max}\) in steps of \(\Delta x = P_{I}\), as shown by the short red solid line in Fig. 5, the corresponding depth step can be expressed as follows:

The matrix length \(z_{len}\) corresponding to the range of values of \(z_{A}\) for \(A(x_{A}, y_{A})\) can then be expressed as Eq. (6).

The above equation shows that, once the device parameters are fixed, \(z_{len}\) depends on \(abs(x_{0} - x)\), that is, on the position of the display pixel within the EI.
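The dependence of the depth step and the matrix length on \(abs(x_{0} - x)\) can be sketched as follows. The sketch assumes the similar-triangle geometry of Eq. (1), under which a lateral move of \(\Delta x = P_{I}\) corresponds to a depth change of \(g \times P_{I} / abs(x_{0} - x)\), and it assumes that \(z_{len}\) is the displayable depth range divided by this step and rounded up. These forms are reconstructions, not the paper's Eqs. (5) and (6) verbatim; the function names are hypothetical.

```python
import math


def depth_step(x, x0, g, p_i):
    """Depth change that moves the intersection by one OS pixel (p_i)."""
    offset = abs(x0 - x)
    if offset == 0:
        return math.inf  # a ray along the optical axis never shifts laterally
    return g * p_i / offset


def template_length(x, x0, g, p_i, delta_d):
    """Number of depth samples between z_min and z_max (at least 1)."""
    step = depth_step(x, x0, g, p_i)
    return max(1, math.ceil(delta_d / step))


# Hypothetical values: pixel at the lens edge (offset P/2 with P = 1), g = 4, P_I = 0.1.
print(depth_step(0.0, 0.5, 4.0, 0.1))
print(template_length(0.0, 0.5, 4.0, 0.1, 2.0))
```

With the offset equal to \(P/2\), the assumed step reduces to \(\frac{2 \times g}{P} \times P_{I}\), which matches the lower bound on \(Step_{z}\) quoted in the speed analysis of the paper.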

For each step of the pixel coordinate along the \(X\)-axis, the \(Y\)-axis pixel coordinate takes each value within the \(A_{\min}A_{\max}\) range in turn, so a pixel coordinate matrix \(\left( i_{A}, j_{A} \right)_{Arr}\) for \(\left( x_{A}, y_{A} \right)\), of size \(z_{len} \times z_{len}\), can be established according to Eq. (1) and Eq. (2), as shown in Eq. (7). The matrix \(Z_{Arr}\), which holds the depth \(z_{A}\) associated with each pixel position in \(\left( i_{A}, j_{A} \right)_{Arr}\), can then be established; it also has size \(z_{len} \times z_{len}\), as shown in Eq. (8).

Using the coordinate pair matrix \(\left( i_{A}, j_{A} \right)_{Arr}\), the OS depth range matrix \(H_{Arr}\), with the same size as \(Z_{Arr}\), can be read from the 2D depth image of the original light field, with the depth data converted according to Eq. (4), as shown in Eq. (9).

Finally, the values of matrix \(Z_{Arr}\) and matrix \(H_{Arr}\) at the same positions are compared to find the position with the smallest absolute difference. Assuming that this position is in row \(m\) and column \(n\) of the matrices, the relationship is as follows:
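The one-time comparison of the two depth matrices amounts to locating the entry with the smallest absolute difference. A minimal sketch in plain Python follows, with \(Z_{Arr}\) and \(H_{Arr}\) represented as equally sized lists of lists; the function name `match_template` and the sample values are hypothetical.

```python
def match_template(z_arr, h_arr):
    """Return (m, n) where |z_arr[m][n] - h_arr[m][n]| is smallest."""
    best_diff, best_pos = None, None
    for m, (z_row, h_row) in enumerate(zip(z_arr, h_arr)):
        for n, (z, h) in enumerate(zip(z_row, h_row)):
            diff = abs(z - h)
            if best_diff is None or diff < best_diff:
                best_diff, best_pos = diff, (m, n)
    return best_pos


# Candidate ray depths versus scene depths sampled at the same pixel positions.
z_arr = [[10.0, 10.5], [11.0, 11.5]]
h_arr = [[11.2, 11.2], [11.1, 10.0]]
print(match_template(z_arr, h_arr))
```

Ties are resolved in favor of the first position in row-major order, which is a design choice of this sketch rather than something stated in the paper.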

This gives the unique mapping location of \(A\) in the OS, \(\left( i_{A}, j_{A} \right)\), obtained according to Eq. (1) and Eq. (2). The corresponding color information is extracted from the OS color image and assigned to \(A\). This completes the construction of the “one-search-one” local depth matching template for display pixel \(A\).

In this process, the effective step size \(Step_{z}\) is calculated from the change \(\Delta x\). Compared with the method in reference [16], the search step is enlarged while the search accuracy is maintained. In addition, a one-time comparison of two depth range matrices replaces the repeated depth transformation and comparison from \(z_{\max}\) to \(z_{\min}\) used in reference [16]. Together these greatly reduce the computation time of the “one-search-one” mapping of a single display pixel.

Local depth matching template application

As shown in Fig. 6, the display pixels \(A\), \(B\), and \(C\) at the same position in different EIs of the same EIA are “co-located points” of one another. The rays emitted from co-located points through the optical centers of their corresponding micro-lenses are parallel to each other, and their intersections with the same depth plane in the OS are related by the same parallel geometry. Assume that the two depth matrices \(Z_{Arr}\) and \(H_{Arr}\) of display pixel \(A\), given by Eq. (8) and Eq. (9), have been obtained. Taking them as a template, the intersection information of display pixel \(B\) or \(C\) with \(z_{\min}\) and \(z_{\max}\) is substituted into \(Z_{Arr}\) and \(H_{Arr}\) to obtain the two depth matrices for \(B\) or \(C\). The "one-search-one" mapping of the corresponding display pixel is then completed according to the previous steps. In the same way, the mapping information of all co-located points of display pixel \(A\) can be obtained.

Likewise, the two scene depth matching templates for each of the other display pixels in the EI containing \(A\) are calculated in turn and applied to their co-located points, which completes the generation of the EIA.

In this process, one EI is taken as a master: a local depth matching template is built for each display pixel of the master EI in turn and applied to its co-located pixels to complete the global mapping. This reduces the computation of the global mapping compared with calculating the mapping of every display pixel of the EIA separately, as in reference [16].
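The reuse of one template across co-located pixels can be illustrated in one dimension. Because co-located pixels share the same offset \(x_{0} - x\) from their lens centers, their rays are parallel, and the lateral offsets of the intersections at each sampled depth need to be computed only once and then translated by each lens center. The sketch below is a simplified illustration under the similar-triangle assumption for Eq. (1), not the paper's matrix-based procedure; the function names and values are hypothetical.

```python
def build_offsets(pixel_offset, g, depths):
    """Lateral offsets from the lens center, one per sampled depth.

    pixel_offset = x0 - x is identical for all co-located pixels.
    """
    return [pixel_offset * z / g for z in depths]


def apply_to_lenses(offsets, lens_centers):
    """Reuse one pixel's offsets for every lens by translating them."""
    return [[x0 + dx for dx in offsets] for x0 in lens_centers]


# Offsets are computed once for the master EI, then shifted to two lenses.
offsets = build_offsets(0.25, 2.0, [8.0, 10.0])
print(offsets)
print(apply_to_lenses(offsets, [0.5, 1.5]))
```

The per-depth multiplication and division are done once per position in the master EI, and each additional lens costs only one addition per sampled depth.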

Experiment and discussion

Analysis of 3D visual effect of cultural relics with naked eyes

To verify the 3D visualization effect of the proposed method, the InIm optical display platform shown in Fig. 7 was built. A Redmi Note 11 5G cell phone with a resolution of 2400 × 1080 pixels is used as the display device, and the micro-lens array consists of 100 × 100 closely arranged square lenses with a side length of 1 mm. The specific experimental parameters are shown in Table 1.

In the experiment, we used the color image shown in Fig. 8 and a depth image generated by the monocular depth estimation algorithm in reference [17], aligned with the color image and with a resolution of 200 × 200 pixels, to generate the EIA shown in Fig. 9 using the proposed method. The EIA was displayed on the optical display platform of Fig. 7, and the actual 3D reproduction images seen through the display micro-lens array are shown in Fig. 10. The relative positions of the "wine glass" and the "copper coin", as well as the position of the overall target relative to the image boundary, shift visibly between views. This shows that the EIA generated by the proposed method yields an InIm 3D light field display with continuous viewing angles and realizes naked-eye 3D visualization of cultural relics. In this experiment, because of the limitations of the display device, only an EIA of 1080 × 1080 pixels was generated. In practical applications, an EIA of any size that matches the optical display platform and suits human viewing can be generated for 3D reproduction, according to the specifications of the display device and micro-lens array.

To verify that the algorithm generalizes to other scenes, other images from the dataset [Footnote 1] were selected and the corresponding EIAs generated. The OS images, the EIAs and their reconstructed 3D light field multi-view images are shown in Fig. 11. In both the first set of images, “toy dog”, and the second set, "geometry", objects show obvious parallax changes relative to the overall scene across viewpoints, which demonstrates that the algorithm generalizes to different scenes.

Analysis of digital acquisition methods of cultural relics

As this research is aimed at the appreciation of cultural relics, it focuses on reproducing the color and spatial features of the relic surface, which reduces the complexity of digital acquisition. In addition, acquisition requires only a single camera capturing one color image of the target relic, or a depth camera capturing one color image and one depth image. Compared with existing digital acquisition technologies for cultural relics, the constraint on the scale of the target relic is relaxed, so even large cultural relics can be digitized with existing equipment for 3D visualization, with no additional acquisition time.

Analysis of cultural relics 3D display data source generation speed

Assume that the OS image has \(V\) pixels in total, each EI has \(u\) pixels, and \(M \times N\) micro-lenses are used, so the EIA has \(M \times N \times u\) display pixels. The algorithm in reference [15] maps "one-to-many" from each pixel of the OS image to all pixel positions in the EIA, then stores and compares the candidates to select the best-matching mapping position, so one EIA requires \(V \times M \times N \times u\) mapping operations. In contrast, the algorithm in reference [16] and the proposed algorithm trace a ray from each display pixel of the EIA to the OS image to carry out "one-search-one" mapping. The mapping complexity of the whole EIA then depends only on \(M\), \(N\), and \(u\), not on \(V\), and the generation time is greatly reduced compared with the algorithm in reference [15]. When searching for the mapping point from the EIA to the original light field, this paper derives from the spatial relationship the effective depth step \(Step_{z}\) within the search range, as shown in Eq. (5). The step varies with the position of the display pixel from which the ray is emitted, such that \(\frac{2 \times g}{P} \times P_{I} \le Step_{z} \le g \times P_{I}\), but the pixel position on the OS image always changes within a range of \(P_{I}\) per step, which preserves the search accuracy. When the focal length is constant, the image quality of light field reconstruction decreases as the micro-lens pitch \(P\) increases. Therefore, micro-lenses with small apertures are usually selected in InIm [19, 20], that is, \(\frac{P}{f} < 1\). To observe a real image of the OS, \(g > f\) is taken, so even the minimum value of \(Step_{z}\) satisfies \(\frac{2 \times g}{P} \times P_{I} > P_{I}\). Compared with the fixed depth search step \(P_{I}\) in reference [16], the search step in this algorithm is therefore larger and the number of searches smaller, which saves search time. On this basis, two depth matrices are constructed, and the location of the mapping point is determined by a one-time match of the two matrices, which saves matching time.
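The difference in mapping counts can be made concrete with a short calculation. The sketch below uses sizes that appear in the experiments (a 200 × 200 pixel OS image, a 10 × 10 micro-lens array, and 17 × 17 pixel EIs); the function name `mapping_counts` is hypothetical, and the reverse count is only the number of display pixels, not the per-pixel search cost.

```python
def mapping_counts(v, m, n, u):
    """Number of mapping operations for forward and reverse mapping."""
    forward = v * m * n * u  # "one-to-many" mapping of reference [15]
    reverse = m * n * u      # one search per display pixel
    return forward, reverse


forward, reverse = mapping_counts(200 * 200, 10, 10, 17 * 17)
print(forward, reverse, forward // reverse)
```

The ratio of the two counts equals \(V\), so the gap widens as the OS image resolution increases, in line with the trend the paper reports for Fig. 12.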

To verify the above analysis, EIA generation times were measured in Python 3.9 in single-threaded mode, using buffered screen writing, on a PC with an AMD Ryzen 5 2600 six-core processor at 3.40 GHz. The time needed to generate the EIA was compared with the methods in references [15] and [16] for different numbers of OS image pixels, EI pixels, and micro-lenses. Fig. 12 shows the time taken by the three algorithms for OS images with different pixel counts when each EI is 17 × 17 pixels and the micro-lens array is 10 × 10. The algorithm in reference [15] is not only much slower than the algorithm in reference [16] and the proposed algorithm, but its time also rises significantly as the number of OS image pixels increases. In contrast, the time used by the algorithm in reference [16] and by our algorithm does not change significantly as the number of OS pixels increases, which shows that their running time is independent of the number of OS image pixels.

Fig. 13 shows the time taken by the algorithm in reference [16] and the algorithm in this paper when the same OS image is used to generate a single EI of different sizes. The proposed algorithm remains faster when generating a single EI. This confirms that "expanding the effective search step \(Step_{z}\)" and "using two depth matrices to match mapping points at one time" effectively improve the speed of display content generation during mapping point matching.
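The effect of a larger depth step on the number of searches can be estimated directly. The sketch below assumes a uniform scan of a depth interval and uses only the lower bound \(\frac{2 \times g}{P} \times P_{I}\) on \(Step_{z}\) quoted in the analysis, so it is a conservative estimate; the function names and the numeric values (\(g = 4\), \(P = 2\), \(P_{I} = 0.5\), a search range of 0 to 100, all in the same arbitrary unit) are hypothetical.

```python
import math


def min_step_z(g, P, P_I):
    """Lower bound on Step_z stated in the text: (2 * g / P) * P_I."""
    return 2.0 * g / P * P_I


def depth_samples(z_near, z_far, step):
    """Number of depth samples visited when scanning [z_near, z_far] with a fixed step."""
    if step <= 0 or z_far < z_near:
        raise ValueError("need step > 0 and z_far >= z_near")
    return math.floor((z_far - z_near) / step) + 1


# Assumed values satisfying P / f < 1 and g > f (for example f = 3).
g, P, P_I = 4.0, 2.0, 0.5

fixed = depth_samples(0.0, 100.0, P_I)                    # fixed step P_I, as in reference [16]
enlarged = depth_samples(0.0, 100.0, min_step_z(g, P, P_I))  # smallest enlarged step
print(fixed, enlarged)
```

With these assumed values the enlarged step is four times the fixed step, so roughly a quarter of the depth samples are visited per ray; the actual saving depends on the system parameters and on how \(Step_{z}\) varies across display pixels.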

To further verify the inference that the proposed algorithm has a more pronounced speed advantage for larger lens arrays, Fig. 14 compares the time taken by the proposed algorithm and the algorithm in reference [16] for different micro-lens array sizes, using the same OS image and the same EI size of 17 × 17 pixels. Although the time taken by both algorithms increases with the number of micro-lenses, the bar chart shows that, for a constant EI size, the time used by the algorithm in reference [16] grows increasingly faster than that of our algorithm, whose time maintains a slow growth rate throughout. This demonstrates the effectiveness of the proposed idea of applying local depth matching templates at co-located points to improve EIA generation speed.

In addition, we evaluated the quality of the 3D multi-view images of the light field reproduced by the proposed algorithm and the comparison algorithms. Taking the middle view of "toy dog" as an example, the SSIM of the image reconstructed by the proposed algorithm was 0.78, while the SSIM values for the algorithms in references [15] and [16] were 0.67 and 0.74, respectively. This indicates that the proposed algorithm improves the generation speed without sacrificing generation quality.
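For readers unfamiliar with the metric, the structure of an SSIM computation can be sketched as follows. This is a simplified single-window variant evaluated over the whole image, written in plain Python; it is an assumption for illustration only, since the text does not state which SSIM implementation or window settings were used, and the standard metric averages the same expression over local windows. The function name and the constants (0.01 and 0.03 with an 8-bit data range) follow the commonly used defaults.

```python
def global_ssim(x, y, data_range=255.0):
    """Single-window SSIM between two equally sized grayscale images given as flat lists."""
    if len(x) != len(y) or not x:
        raise ValueError("images must be non-empty and the same size")
    n = len(x)
    mu_x = sum(x) / n
    mu_y = sum(y) / n
    var_x = sum((a - mu_x) ** 2 for a in x) / n
    var_y = sum((b - mu_y) ** 2 for b in y) / n
    cov = sum((a - mu_x) * (b - mu_y) for a, b in zip(x, y)) / n
    # Stabilising constants for the luminance and contrast/structure terms.
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return numerator / denominator
```

Identical images give a value of 1, and the value decreases as luminance, contrast, or structure diverge, which is the sense in which 0.78 indicates a closer reconstruction than 0.74 or 0.67.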

Conclusion

This paper proposes a naked-eye 3D visualization method for cultural relics based on InIm, which achieves true 3D reproduction of a single cultural relic or a group of cultural relics, together with an EIA generation algorithm based on local depth template matching, which achieves rapid generation of InIm display content.

This work focuses on the digital acquisition phase of cultural relics. Taking the monocular color information and the registered depth information of the target cultural relic as input, and using the spatial structure and ray tracing principle of the InIm system, the algorithm generates the EIA by successively establishing a local depth matching template during the "one-search-one" mapping of all display pixels in one EI and then applying it to co-located points to complete the mapping of the other EIs. The experimental results show that this technique not only realizes naked-eye 3D reconstruction of cultural relics, but also has a significant advantage in generation speed over the faster of the comparison algorithms.

Because it achieves true naked-eye 3D display through non-contact acquisition, this technology is particularly important for protecting cultural relics that are threatened by environmental changes or human factors. It can become an effective means of promoting the 3D digital dissemination of cultural relics and the application of light field display technology in the digital cultural relics field. In addition, the technology can be applied to entertainment, medical, military and other fields, and can advance research on 3D light field technology. In future work, we will study true 3D visualization of cultural relics with a large viewing angle, a large depth of field, and real-time performance.

Availability of data and materials

Not applicable.

References

1. Levoy M, et al. The digital Michelangelo project: 3D scanning of large statues. Proceedings of the 27th annual conference on Computer graphics and interactive techniques. 2000.

2. Zhang J, Guo L, Wei K. Shallow of antiquities three-dimensional art digital display design. Value Eng. 2012;31(21):279–280.

3. Kantaros A, Ganetsos T, Petrescu FIT. Three-dimensional printing and 3D scanning: emerging technologies exhibiting high potential in the field of cultural heritage. Appl Sci. 2023;13(8):4777.

4. Kantaros A, et al. The use of 3D scanning and 3D color printing technologies for the study and documentation of late bronze age pottery from east-central Greece. J Mechatron Robot. 2024;8:11–9.

5. Kantaros A, Soulis E, Alysandratou E. Digitization of ancient artefacts and fabrication of sustainable 3D-printed replicas for intended use by visitors with disabilities: the case of Piraeus archaeological museum. Sustainability. 2023;15(17):12689.

6. Verde A, José MV. Virtual museums and google arts & culture: alternatives to the face-to-face visit to experience art. Int J Edu Res. 2021;9(2):43–54.

7. Bernardini F, et al. Building a digital model of Michelangelo’s Florentine Pieta. IEEE Comput Graph Appl. 2002;22(1):59–67.

8. Qing R, Diao Changyu L, Dongming, et al. Three-dimensional reconstruction of cultural relics based on structured light. Dunhuang Stud. 2005;05:107–11.

9. Sansoni G, Trebeschi M, Docchio F. State-of-the-art and applications of 3D imaging sensors in industry, cultural heritage, medicine, and criminal investigation. Sensors. 2009;9(1):568–601.

10. Sitnik R, Maciej K. Automated processing of data from 3D scanning of cultural heritage objects. Digital Heritage: Third International Conference, EuroMed 2010, Lemessos, Cyprus, November 8-13, 2010. Proceedings 3. Springer Berlin Heidelberg, 2010.

11. Jung G, Yong-Yuk W, Sang Min Y. Computational large field-of-view RGB-D integral imaging system. Sensors. 2021;21(21):7407.

12. Yan X, Qionghua W. Integrated imaging 3D information acquisition technology. Infrared Laser Eng. 2020;49(03):66–77.

13. Eigen D, Puhrsch C, Fergus R. Depth map prediction from a single image using a multi-scale deep network. Adv Neural Inf Process Syst. 2014;27.

14. Lippmann G. La photographie integrale. Comptes-Rendus. 1908;146:446–51.

15. Li G, et al. Simplified integral imaging pickup method for real objects using a depth camera. J Optical Soc Korea. 2012;16(4):381–5.

16. Yue-Jia-Nan G, Pia-Yan, Li-Jin D. Element image generation algorithm based on reverse mapping. Acta Optica Sinica. 2020;40(19):98–106.

17. Wang Qi, Piao Y. Depth estimation of supervised monocular images based on semantic segmentation. J Vis Commun Image Represent. 2023;90:103753.

18. Pham D-Q, et al. Depth enhancement of integral imaging by using polymer-dispersed liquid-crystal films and a dual-depth configuration. Optics Lett. 2010;35(18):3135–7.

19. Kuo P. Research on design and application of optical imaging system based on microlens array. PhD dissertation. Tianjin University; 2017.

20. Wang Qi, et al. Visual angle enlargement method based on effective reconstruction area. Jpn J Appl Phys. 2023;62(10):102002.

Funding

This work was supported by the Project of the Jilin Provincial Department of Science and Technology under Grant 20220201062GX.

Author information

Contributions

L.W. and Y.W.: contributed substantially to the conception and design of the work, and to the acquisition, analysis, or interpretation of data; Q.L. and Q.W.: visualization of the experimental analysis; Y.W.: final approval of the version to be published.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Competing interests

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Wang, L., Wang, Y., Liu, Q. et al. Naked-eye 3D visualization of cultural relics based on integrated imaging. Herit Sci 12, 374 (2024). https://doi.org/10.1186/s40494-024-01462-4
