Abstract

Advances in Artificial Intelligence envision a promising future, where the personalized Internet of Things can be revolutionized with the ability to continuously improve system efficiency and service quality. However, with the introduction of laws and regulations about data security and privacy protection, centralized solutions, which require data to be collected and processed directly on a central server, become impractical for personalized Internet of Things to train Artificial Intelligence models for a variety of domain-specific scenarios. Motivated by this, this paper introduces Cedar, a secure, cost-efficient and domain-adaptive framework to train personalized models in a crowdsourcing-based and privacy-preserving manner. In essence, Cedar integrates federated learning and meta-learning to enable a safeguarded knowledge transfer within personalized Internet of Things for models with high generalizability that can be rapidly adapted by individuals. Through evaluation using standard datasets from various domains, Cedar is seen to achieve significant improvements in saving, elevating, accelerating and enhancing the learning cost, efficiency, speed, and security, respectively. These results reveal the feasibility and robustness of federated meta-learning in orchestrating heterogeneous resources in the cloud-edge-device continuum and defending against malicious attacks that are common on the Internet, thereby unlocking the potential of Artificial Intelligence in reforming personalized Internet of Things.

Introduction

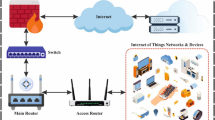

Personalized Internet of Things (PIoT) systems are expected to significantly enhance the way people live and work by connecting distributed devices to sense multimodal data, support ubiquitous computing, and provide on-demand services1,2,3. To bridge the physical and virtual worlds, PIoT can drive the transformation to an advanced digital era by widely applying Artificial Intelligence (AI) models across various domains to not only improve overall system efficiency but also enhance individual service quality, e.g., driver distraction detection to improve driving safety for Smart Mobility4, proactive product management to increase user satisfaction for Smart Economy5, and AI-assisted self-diagnosis to improve health for Smart Healthcare6.

As the intelligent and autonomous core of PIoT, AI models are generally envisioned as being trained at central servers by gathering and processing high-quality samples, and then, fine-tuned according to individual preferences to handle personalized requests7,8,9. Although similar approaches have been widely used in other applications, to realize the full potential of the extensive resources within the cloud-edge-device continuum of PIoT, they face the following issues:

1) Centralized training at the server becomes insufficient: Data volume and quality as well as knowledge transfer across domains are decisive factors for training capable models. However, with the introduction of laws and regulations about data security and privacy protection, it becomes infeasible and burdensome for centralized model training solutions to access and process private data sensed and preserved on user sides, as well as to foster knowledge sharing and fusion.

2) Customized deployment at PIoT clients becomes increasingly important: Model customization relies on private data that may reveal user status, behaviors, and preferences. Centrally trained models are typically encoded with general knowledge incapable of giving best-fitting responses for each individual. Due to the data silos caused by privacy protection, it becomes mandatory and critical to have models customized and fine-tuned in each PIoT client, in turn, gaining the ability to continuously update personalized models and also elevate individual service quality.

Hence, there is a need for a different approach that can support data security and privacy protection as well as model training and adaptation for smart PIoT services across various domains. Accordingly, a crowdsourcing-based, collaboration-oriented, and privacy-enhancing approach known as Federated Learning (FL) has been proposed, which can train a global model by aggregating local models uploaded from learning participants instead of collecting and processing their private data directly in the server10,11. In general, FL can ensure that the distributed private data is processed locally at each PIoT client to collaboratively train a global model with performance equivalent to that of models trained centrally by the server. However, it still encounters three key challenges, namely:

1) Privacy protection exacerbates data heterogeneity to learn generalizable models. While PIoT services are in use, private data sensed from the user is, in general, non-independent and identically distributed (Non-IID), reflecting the behavioral difference among users12. Without violating the premise of privacy protection, FL cannot directly manipulate the data samples processed at each client to train local models, and in turn, the global model that is updated by aggregating collected local models may over-learn on major samples, inhibiting the transfer of minor but important knowledge. This may not only cause the model to fail to converge or produce sub-optimal results but also hinder the model from being personalized.

2) The cost-effectiveness tends to decrease in iterative and collaborative learning. Even though raw data is not collected and transmitted to the server, the communication cost to exchange model parameters between the server and PIoT clients still exists and accumulates as the number of learning rounds increases13. When the bandwidth of PIoT is limited, which is common in real-world applications, performance bottlenecks can easily arise, especially when frequent interactions between the server and clients are required to train the global model.

3) Learning over the open network is vulnerable to security attacks. During learning, model parameters are shared bi-directionally between the server and clients. Privacy protection announced by FL becomes fragile when an open network like the Internet is used, as attacks such as data inversion14 and model poisoning15 can be launched by malicious users. In turn, private user data used in learning is at risk of being leaked, and the global model may fail to be trained or contain injected knowledge showing abnormal behaviors.

To address these challenges, various solutions have been proposed. Specifically, data heterogeneity can be mitigated by either performing local data filtering or imputation to prepare high-quality training samples16,17,18,19, or adapting knowledge transfer techniques, such as meta-learning20, knowledge distillation21, and prototype learning22, to train a global model with key knowledge extracted from private data. Moreover, to improve the cost-efficiency, besides the direct reduction of model size and learning rounds23,24,25, the optimization of the collaboration topology and mode of the cloud-edge-device continuum26,27,28 has also been discussed. Finally, security and privacy protection algorithms, such as homomorphic encryption29,30, differential privacy31,32, and secure multi-party computation33,34, can be applied to defend against malicious attacks. Although current solutions can be deployed to resolve a specific challenge with suitable performance, a clear flaw remains: the direct combination of these technologies for PIoT is infeasible, leading to uneven gains, e.g., with an increase of the security level, the learning cost may rise; or with a decrease of communication costs, the model performance may decline.

In this work, to address these issues and make FL more compatible with PIoT, a secure, cost-efficient and domain-adaptive framework named Cedar is introduced to enable meta-knowledge transfer, layer-wise aggregation, and high-performance personalization. Cedar is open-sourced and encapsulated as a library of GOLF, which is a project to support decentralized and secured deep learning for Internet of Things (IoT). It is available at https://github.com/IntelligentSystemsLab/generic_and_open_learning_federator/. In general, Cedar first employs and enhances meta-learning in FL, forming federated meta-learning, to enable the transfer of inter-knowledge extracted from heterogeneous private data. Second, it designs and implements an asymmetric model uploading process to not only improve the cost-effectiveness of collaborative learning but also protect private data and models from being sniffed and poisoned, respectively. Finally, it enables the customization and personalization of globally shareable meta-models to better assist users in completing tasks from multiple domains.

Results

Framework overview

As illustrated in Fig. 1a, Cedar works in three phases, namely learning preparation, meta-model training, and model deployment. Specifically, (1) during the first phase, upon receiving an incoming model training or updating request, the learning coordinator (i.e., the authorized server in PIoT systems) will parse it to configure a learning task. First, the coordinator defines the task specification about data requirements and model structure as well as initial hyperparameters. Then, the coordinator creates a learning consortium by selecting and activating suitable clients (i.e., PIoT devices that are available with the required training data). (2) After that, the meta-model will be trained iteratively and securely through the interaction between the coordinator and activated clients. First, the coordinator dispatches the task specification to the activated clients. Then, each client trains its local model. Next, the local model is measured layer by layer, and only the layers containing significant contributions are uploaded to the coordinator. Finally, the server aggregates the received local models to update the global meta-model. Note that the above client-server interaction continues until the global meta-model converges or a predefined maximum number of learning rounds is reached. (3) Once the global meta-model is trained, it can be deployed for personalization. Clients within PIoT systems can download the meta-model and fine-tune it based on their local data to get personalized models.

a Particularly, (1) before the training, Cedar parses the request to define the learning task, and then creates a learning consortium with selected clients. (2) During the training, Cedar trains the global meta-model through the iterative interactions between the coordinator and activated clients. (3) After the training, PIoT clients can download the latest global meta-model for model deployment. In addition, Cedar consists of four core functions, including (1) meta-learning to handle data heterogeneity (b), (2) adaptive model update to improve cost-efficiency (c), (3) security protection to prevent malicious attacks (d), and (4) model personalization for optimal performance in various application contexts (e).

To make the entire process secure, cost-efficient and domain-adaptive, Cedar designs and implements four core functions. First, resources within the cloud-edge-device continuum vary significantly, as reflected by data heterogeneity. Instead of extracting localized knowledge from the clients and aggregating it in the coordinator for the global model (as is common in conventional solutions), Cedar, as shown in Fig. 1b, allows clients to rapidly adapt to varying data distributions across different environments via meta-learning, extracting structural knowledge from private data. Knowledge from different clients, encoded in their local models, can be uploaded to the coordinator to collaboratively form a global meta-model with high generalizability and transferability. By fine-tuning the global meta-model, cross-device knowledge can be localized to support diverse personal contexts. Second, as shown in Fig. 1c, compared to common methods that upload all the model parameters without any changes, Cedar can adaptively identify and prioritize the updates of model layers with more important information to not only reduce learning cost but also accelerate model convergence for a cost-efficient collaboration with resource-constrained PIoT devices. Third, as shown in Fig. 1d, protecting the data security and user privacy may make PIoT systems vulnerable to malicious attackers, who can easily disguise themselves as legitimate users during the learning to launch attacks such as data inference and model poisoning. To address that, Cedar safeguards the entire learning process by preparing asymmetric models in the model uploading phase to prevent the reconstruction of sensitive data, and by detecting abnormal local updates in the model aggregation phase to ensure the quality and security of PIoT device updates. Accordingly, Cedar can optimize the convergence direction of the global model and prevent it from being misled by malicious information.
Finally, as shown in Fig. 1e, Cedar enables PIoT users from diverse domains, who exhibit substantial diversity in resources and operational requirements, to download the latest global meta-model and perform personalized fine-tuning based on their private data before the actual usage, thereby achieving optimal local deployment performance. More details about Cedar can be found in “Methods” and the Supplementary Information (SI Section 2).
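The layer-wise upload and aggregation steps of one training round can be illustrated with a minimal sketch. It is not Cedar's implementation: the contribution score (L2 norm of the layer change), the `keep_ratio` parameter, and all function names are assumptions chosen for illustration, and local training and the security checks are omitted.

```python
import math

def layer_contribution(old_layer, new_layer):
    """L2 norm of the change in one layer (an assumed contribution score)."""
    return math.sqrt(sum((n - o) ** 2 for o, n in zip(old_layer, new_layer)))

def select_layers(global_model, local_model, keep_ratio=0.5):
    """Keep only the layers whose change ranks in the top keep_ratio share."""
    scores = {name: layer_contribution(global_model[name], local_model[name])
              for name in global_model}
    k = max(1, math.ceil(keep_ratio * len(scores)))
    top = sorted(scores, key=scores.get, reverse=True)[:k]
    return {name: local_model[name] for name in top}

def aggregate(global_model, uploads):
    """Average each layer over the clients that uploaded it; keep the rest."""
    new_model = {}
    for name, old in global_model.items():
        received = [u[name] for u in uploads if name in u]
        if received:
            new_model[name] = [sum(vals) / len(received) for vals in zip(*received)]
        else:
            new_model[name] = list(old)
    return new_model

# Toy models: each layer is a flat list of weights (hypothetical values).
global_model = {"conv": [0.0, 0.0], "fc": [1.0, 1.0]}
client_a = {"conv": [0.4, 0.0], "fc": [1.0, 1.01]}   # "conv" changed most
client_b = {"conv": [0.0, 0.01], "fc": [1.6, 1.0]}   # "fc" changed most
uploads = [select_layers(global_model, m) for m in (client_a, client_b)]
updated = aggregate(global_model, uploads)
```

In this toy run each client uploads one of its two layers, so the upload size per client is halved while the coordinator still receives an update for every layer.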

Performance across multiple domains

To reveal the merit of Cedar in supporting multiple domains, twelve standard datasets representing three typical tasks (i.e., structured data regression, text classification, and image classification) in PIoT systems are utilized. For different tasks, models covering small, medium and large sizes are trained based on Cedar and five baselines, namely FedAvg10, FedFomaml35, FedReptile20, FedMeta-MAML36, and FedMeta-SGD36. Finally, to make a fair comparison, the model accuracy during the training and after the localization is calculated. The detailed setting of each task and the complete evaluation results are given in the Supplementary Information (SI Sections 5.1, 4.4, and 5.3–5.14, respectively).

As shown in Fig. 2, Cedar can significantly improve the model training performance as well as model adaptation speed to support tasks under different complexities for multiple domains. It is worth noting that to support the three types of tasks, the models employed are Cnn1D, MBiLSTM, and ResNet18, respectively. For readability, the mean values of the baseline methods are plotted to avoid overcrowding the figure with excessive curves. Additionally, the range of metric values achieved by the baselines is illustrated for comparison with Cedar, clearly demonstrating that the proposed method consistently outperforms all baseline methods. Detailed plots without simplification, showing individual curves for each method, are available in the Supplementary Information (SI Section 4.4).

Each panel in a–f includes (1) the data distribution per client on the top, (2) the model training performance on the bottom-left, and (3) the model adaptation performance on the bottom-right. Particularly, panels a, b, panels c, d, and panels e, f correspond to the results for BSD, SST2, and FER, respectively. Note that α = 0.1 (a, c, e on the bottom-left) and α = 10.0 (b, d, f on the bottom-right) represent cases with high and low heterogeneity, respectively. Since SFDDD uses one-to-one mapping to assign the private data of drivers to clients, g shows its model training and adaptation performance with its original data distribution. Overall, a, b in yellow background represent structured data regression tasks, c, d in blue background represent text classification tasks, and e–g in green background represent image classification tasks.

First, as illustrated by the heatmap in each learning scenario, the task complexity is represented by the data heterogeneity among clients; accordingly, tasks with α = 0.1 are more complex than those with α = 10, as the local data of their clients tends to be more Non-IID. Analyzing the results for different tasks in Fig. 2a–f reveals a common and consistent pattern across all tasks: during the model training phase, the learning curve of Cedar stays ahead with fewer fluctuations compared to the baselines, and in the model adaptation phase, after a few rounds of local training (about 10 rounds on average), the model from Cedar can be rapidly tailored with better personalized performance to support domain-related and user-oriented local contexts.

Second, during the training phase, Cedar consistently outperforms the five baselines across various task types and complexities. For structured data regression tasks, which are relatively simpler (as reflected by the overall performance achieved by the baselines), Cedar demonstrates robust results compared to the baselines with an improvement of 17.77% and 20.01% in high and low heterogeneity scenarios (illustrated in the bottom-left sub-figures of Fig. 2a and b), respectively. For text classification tasks, which are generally more challenging (as shown by the fluctuating learning curves of the baselines in the bottom-left sub-figures of Fig. 2c, d), Cedar exhibits stable and superior performance, achieving a 22.75% improvement over the baselines. Similarly, for image classification tasks, Cedar enables rapid convergence within fewer training rounds and consistently maintains higher performance throughout the training process, achieving an average performance boost of 60.39%.

Third, the performance difference is enlarged during the model adaptation phase. Cedar demonstrates significantly better stability compared to baselines across various tasks and heterogeneity levels. Specifically, in simpler regression tasks as illustrated in Fig. 2a, b, Cedar maintains long-term and stable performance with minimal fluctuation, achieving an overall improvement of 17.91%, which is applicable to the relatively simple data distribution and similar task demands in real-world PIoT applications. In contrast, for classification tasks shown in Fig. 2c–g, the model performance during the initial adaptation phase is suboptimal, reflecting the limitations of directly applying a pre-trained generalized model, probably because pre-trained models usually fail to adequately capture the specific data features of individual users. However, after only a few rounds of adaptation, the model’s performance increases rapidly, demonstrating strong potential for personalization on PIoT devices. In particular, Cedar shows an average improvement of 19.48%, providing strong evidence of its advantages in dynamic adaptation and customized optimization.

Finally, as shown in Fig. 2g, the clients for SFDDD do not exhibit significant data heterogeneity, primarily because each client is created to mimic a real driver with associated private data and no data manipulation is performed. In addressing the inherent feature heterogeneity of the dataset, Cedar achieves 65.67% and 58.53% improvements over the baselines in model training and adaptation, respectively. This result indicates that the application of Cedar can dramatically increase model performance in not only training but also adaptation to assist real-world scenarios.

Performance against data heterogeneity

The data heterogeneity can be measured by two factors, namely (1) the heterogeneity level α, which guides the Dirichlet distribution37 to generate heterogeneous data for each client, and (2) the client participation proportion ς, which indicates how many clients will be activated to join the learning. In general, the first factor affects the individual heterogeneity in terms of data sparsity, and the second factor presents the overall heterogeneity in terms of data diversity. It is worth noting that this analysis is made based on image classification tasks, and since the data of SFDDD reflects original data heterogeneity of drivers, missing the control on the first factor, it is not considered in this analysis. The data distribution of the datasets (i.e., FMNIST, FER and ISIC) used in this analysis at different α values is given in the Supplementary Information (SI Figures 12–14). Meanwhile, to eliminate the influence of model architecture, three models (i.e., MobileNetV2, ResNet18, and DenseNet121) are also employed here for the analysis.
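The role of α can be illustrated with a minimal sketch of label-based Dirichlet partitioning. This is a common way to simulate Non-IID clients and is only assumed to resemble the procedure used here; the function names, the seed, and the symmetric Dirichlet are illustrative choices. The Dirichlet draw is built from Gamma variates so that only the Python standard library is needed.

```python
import random
from collections import defaultdict

def dirichlet_sample(alpha, n, rng):
    """Draw one point from a symmetric Dirichlet(alpha) over n categories."""
    draws = [rng.gammavariate(alpha, 1.0) for _ in range(n)]
    total = sum(draws)
    if total == 0.0:               # guard against numerical underflow
        return [1.0 / n] * n
    return [d / total for d in draws]

def partition_by_label(labels, num_clients, alpha, seed=0):
    """Split sample indices across clients, class by class, with Dirichlet shares."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, y in enumerate(labels):
        by_class[y].append(idx)
    clients = [[] for _ in range(num_clients)]
    for y in sorted(by_class):
        indices = by_class[y]
        rng.shuffle(indices)
        shares = dirichlet_sample(alpha, num_clients, rng)
        start, cumulative = 0, 0.0
        for c, share in enumerate(shares):
            cumulative += share
            # The last client takes whatever remains, so no sample is lost.
            end = len(indices) if c == num_clients - 1 else round(cumulative * len(indices))
            clients[c].extend(indices[start:end])
            start = end
    return clients

# Hypothetical toy dataset: 1000 samples spread evenly over 10 classes.
labels = [i % 10 for i in range(1000)]
skewed = partition_by_label(labels, num_clients=5, alpha=0.1)
balanced = partition_by_label(labels, num_clients=5, alpha=10.0)
```

A small α concentrates each class on few clients (high heterogeneity), whereas a large α gives every client a similar share of each class.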

Accordingly, by manipulating α = [0.1, 0.5, 1.0, 5.0, 10.0] to generate heterogeneous data for each client, 5 scenarios to test the influence of individual heterogeneity are created for each learning task (i.e., FMNIST, FER and ISIC). As shown in Fig. 3a, the changes on training performance measured by model accuracy are plotted for each task. When analyzing the median value of each box, it can be seen that the model accuracy of Cedar can stay on the top across all testing scenarios. Moreover, shorter boxes and fewer outliers can also be obtained by Cedar in most cases, indicating its merit in handling data with various heterogeneity levels. In general, such results show that Cedar can maintain a higher and more stable performance across three tasks to mitigate the impact introduced by individual heterogeneity.

Each sub-figure in a–c illustrates the accuracy distribution of a model trained by the compared methods, namely FedAvg, FedMeta, FedFomaml, FedReptile and Cedar, in 5 individual heterogeneity levels manipulated by α = [0.1, 0.5, 1.0, 5.0, 10.0]. In each box, the blue line represents the median, the red triangle represents the mean, the upper and lower edges of the box represent the upper and lower quartiles, the whiskers indicate 1.5 times the interquartile range, and the circles represent outliers. Horizontally, each set of three sub-figures represents the results for FMNIST (a), FER (b), and ISIC (c), respectively. Vertically, the three sub-figures with yellow, blue, and green backgrounds correspond to the results for MobileNetV2, ResNet18, and DenseNet121, respectively. Finally, d shows the average accuracy achieved by the three models over the above three datasets with α = 0.1 in 5 overall heterogeneity levels controlled by ς = [0.2, 0.4, 0.6, 0.8, 1], i.e., MobileNetV2 in yellow background, ResNet18 in blue background, and DenseNet121 in green background. Specific results for each dataset are presented in the Supplementary Information (SI Section 4.5).

Similarly, by adjusting ς = [0.2, 0.4, 0.6, 0.8, 1.0], 5 scenarios to test the performance in addressing the overall heterogeneity for each learning task are also prepared. As shown in Fig. 3b, the average model accuracy achieved by the compared methods with the participating clients is summarized for each model. Specifically, with the increase of ς, the model accuracy of the baselines grows, as more diverse information can be incorporated to reduce the chance of providing random results for unseen or minor samples, and over-fitting can be eased because more data is used to train less biased models. Notably, models trained by Cedar can consistently achieve a relatively high performance, even when few clients (i.e., ς = 0.2) are involved. This shows the advantage of Cedar in handling overall heterogeneity, and also its capability in extracting and aggregating key information from the minority to train more balanced models. This ability can also be observed in each analyzed dataset under different ς, whose results are summarized in the Supplementary Information (SI Section 4.5).
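How the participation proportion ς translates into a set of activated clients can be sketched as follows. Uniform random sampling without replacement is an assumption, since the selection rule is not specified in this section; `activate_clients` and the pool of 50 clients are hypothetical.

```python
import random

def activate_clients(client_ids, proportion, rng):
    """Pick round(proportion * N) clients uniformly at random, at least one."""
    if not 0.0 < proportion <= 1.0:
        raise ValueError("proportion must be in (0, 1]")
    k = max(1, round(proportion * len(client_ids)))
    return rng.sample(client_ids, k)

rng = random.Random(0)
client_ids = list(range(50))   # hypothetical pool of 50 clients
consortium_sizes = {p: len(activate_clients(client_ids, p, rng))
                    for p in (0.2, 0.4, 0.6, 0.8, 1.0)}
```

With ς = 0.2 only a fifth of the pool contributes updates, so fewer distinct data distributions are seen during training.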

Performance on communication efficiency

Since FL only allows the private data to be processed locally, there is no transmission cost for raw data. However, the frequent interactions between the clients and the server during the meta-model training to exchange related parameters may become burdensome and intrusive for PIoT clients, making them less willing to use the service. Therefore, it is critical to measure the cost-efficiency of the meta-model training process, i.e., the ability to train a relatively high-performance model with lower communication costs and fewer learning rounds. To conduct a fair comparison, the achievable metric values under specified communication costs and the first round reaching predefined targets are used as the indicators. In the following discussion, the results for BSD, SST5, and FER are used, and the results for the remaining datasets are given in the Supplementary Information (SI Section 4.6).

First, as shown in Fig. 4a, d, g, by applying a layer-wise model uploading method, which can select and upload layers with critical information to the server for global model update, Cedar achieves a significant reduction in the size of parameters to be uploaded from a client to the server. In each learning round, the average cost reduction per client to train the three models is 8.67%, 23.36%, and 13.06%, respectively. Moreover, the mean cost distribution per client shares a similar pattern with a clear gap to the method without filtering, indicating that Cedar saves communication cost for each client regardless of the model being trained.

Results for a dataset in each task type are illustrated: BSD for structured data regression (a–c), SST5 for text classification (d–f), and FER for image classification (g–i). Specifically, a, d, g show the distribution of parameters to be uploaded by each client per learning round. b, e, h present the achievable metric values under specified communication cost budgets. c, f, i illustrate the first round reaching predefined targets with the optimal achievable metric value marked at the top when the target cannot be reached by certain methods. The bar charts represent the mean values within 10% of the budget or target, with error bars indicating the variance. The budget or target values are labeled below each sub-figure. The results for the remaining datasets are provided in the Supplementary Information (SI Section 4.6).

Second, one may question whether the cost reduction per client affects the overall model performance. To address that, an additional evaluation was conducted to determine the achievable metric values (i.e., RMSE for BSD, and Accuracy for SST5 and FER) under given communication cost budgets, and to ensure a comprehensive and fair comparison, the best results within 10% of the given budget (i.e., 0.4 GB for BSD, 1.2 GB for SST5 and 19.0 GB for FER) are used. Note that the budget costs vary due to the complexity of the tasks and the models to be trained (i.e., Cnn1D for BSD, MBiLSTM for SST5 and ResNet18 for FER). As illustrated in Fig. 4b, e, and h, Cedar can significantly outperform the baselines, achieving average performance improvements of approximately 19.50%, 24.27%, and 70.20% across the three cases, respectively. These results demonstrate that the meta-model training process implemented by Cedar is more cost-efficient, delivering superior performance under identical conditions.
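The budget-based indicator can be sketched as below. The exact window is not defined in this section, so the sketch assumes that "within 10% of the given budget" means a cumulative cost between 0.9 and 1.1 times the budget; the function names and the training log are hypothetical.

```python
def metrics_near_budget(history, budget, tolerance=0.10):
    """Metric values of rounds whose cumulative cost lies within the window."""
    low, high = budget * (1 - tolerance), budget * (1 + tolerance)
    return [metric for cost, metric in history if low <= cost <= high]

def best_near_budget(history, budget, higher_is_better=True, tolerance=0.10):
    """Best metric inside the window, or None if no round falls inside it."""
    window = metrics_near_budget(history, budget, tolerance)
    if not window:
        return None
    return max(window) if higher_is_better else min(window)

# Hypothetical log: (cumulative upload cost in GB, accuracy) after each round.
history = [(0.3, 0.20), (0.6, 0.31), (0.9, 0.35),
           (1.1, 0.40), (1.3, 0.42), (1.5, 0.44)]
best_accuracy = best_near_budget(history, budget=1.2)
```

For an error metric such as RMSE, `higher_is_better=False` selects the smallest value in the window instead.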

Finally, to verify that the cost reduction will not affect the overall training speed, the rounds required to achieve the specified metric value are recorded with results illustrated in Fig. 4c, f, and i, which also present the results within 10% variations of the given target (i.e., RMSE for BSD reaches 1.60, Accuracy for SST5 reaches 0.46, and Accuracy for FER reaches 0.36). It can be seen that compared to the best result achieved by the baselines, Cedar can significantly optimize the training by about 49.76%, 98.02%, and 95.96%, respectively. Hence, the above analyses prove that Cedar is cost-efficient in shortening the overall learning duration as well as reducing the communication cost per client to train a desired model without any performance degradation.
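The round-based indicator can be sketched in the same spirit. The formula behind the reported percentages is not given in this section, so the relative saving in rounds below is an assumed definition; the 10% tolerance around the target is not modelled, and the accuracy curve is hypothetical.

```python
def first_round_reaching(metrics, target, higher_is_better=True):
    """1-based index of the first round whose metric meets the target, else None."""
    for round_no, value in enumerate(metrics, start=1):
        reached = value >= target if higher_is_better else value <= target
        if reached:
            return round_no
    return None

def round_reduction(baseline_rounds, method_rounds):
    """Relative saving in rounds as a percentage of the baseline (assumed formula)."""
    return 100.0 * (baseline_rounds - method_rounds) / baseline_rounds

# Hypothetical accuracy curve with the FER target of 0.36 from the text.
accuracy_curve = [0.20, 0.30, 0.37, 0.40]
reached_at = first_round_reaching(accuracy_curve, target=0.36)
```

For RMSE on BSD, the comparison direction is reversed by passing `higher_is_better=False`.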

Performance against malicious attacks

The ability of Cedar to defend against malicious attacks, namely gradient inversion for data sniffing and label flipping for model poisoning, is evaluated, as presented in Fig. 5. For a fair comparison, besides FedAvg10, 6 variants of DP (Differential Privacy) (i.e., 3 variants with Gaussian distribution, noted as DP-N, and 3 other variants with Laplacian distribution, noted as DP-La), MQ (Mixed Quantization)38 and 2 pFGD (Pruned Frequency-based Gradient Defence) methods39 are used as baselines against the gradient inversion attack; and CMA (Coordinate-wise Median Aggregation)40, LFRPPFL (Label-Flipping-Robust and Privacy-Preserving FL)41 and PEFL (Privacy-Enhanced FL)42 are used as baselines against the label flipping attack. The detailed description and configuration of these methods are presented in the Supplementary Information (SI Section 1.2).
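As an illustration of one of the robust-aggregation baselines, coordinate-wise median aggregation replaces the per-coordinate mean with the median. The sketch below shows the general idea only, not the configuration used in the evaluation; updates are assumed to be flattened into equally long lists, and the values are hypothetical.

```python
from statistics import median

def coordinate_wise_median(updates):
    """Aggregate equally shaped flat updates by the median of each coordinate."""
    if not updates:
        raise ValueError("need at least one update")
    return [median(coords) for coords in zip(*updates)]

honest = [[0.10, -0.20], [0.12, -0.18], [0.11, -0.22]]
poisoned = [[5.0, 9.0], [6.0, 8.0]]   # 2 of 5 clients send outlying updates
aggregated = coordinate_wise_median(honest + poisoned)
```

With a minority of outlying clients, the aggregate stays inside the range of the honest updates, whereas a plain mean would be pulled towards the poisoned values.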

Panel a visualizes the ground truth (GT) and the reconstructed images generated by the compared methods. Under each reconstructed image, the values of PSNR and SSIM are highlighted. Panel b illustrates the loss of the gradient inversion attack (GIA) process over 2500 rounds, comparing the loss range for the baselines on the bottom with the actual loss for Cedar on the top. Panel c presents the ASR of label flipping (with flipped labels and images) for three multi-class classification tasks (i.e., FMNIST, AGNews, and SST5), in which Cedar is compared with four state-of-the-art baselines. Each sub-figure in c represents the results of a specific dataset, which include bar charts representing the result for the attack indicated below, circles indicating the mean value of all simulated attacks, and error bars showing coverage intervals ranging from the 5th percentile to the 95th percentile, covering the middle 90% of the result distribution. It is worth noting that the above evaluations are done using ResNet18 for image classification tasks and MBiLSTM for text classification tasks. Moreover, images presented are sourced from public datasets or reconstructed according to the experiment results. The complete evaluation results are provided in the Supplementary Information (SI Section 4.7).

In general, the gradient inversion aims to infer the private data of each client by intercepting its local model uploaded to the coordinator. To better analyze the result, the reconstructed data together with the related PSNR (Peak Signal-to-Noise Ratio) and SSIM (Structural Similarity Index Measure) is visualized, as presented in Fig. 5a. Intuitively, most of the reconstructed images have vaguely discernible details, among which pFGD exhibits the best protection results among the baselines with completely distorted reconstructions. However, Cedar is superior to pFGD not only objectively when quantifying PSNR and SSIM values but also subjectively when visualizing rebuilt images (similar to a television screen with noise). This shows that no valuable information can be observed or perceived from the images reconstructed under Cedar, which can therefore efficiently and effectively prevent the leakage of local private data. Furthermore, the loss curve of the reconstruction algorithm is also recorded and presented in Fig. 5b. It explains why Cedar can obtain the best performance, as a relatively high loss is maintained, preventing the algorithm from successfully inferring the original image.
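For reference, PSNR follows the standard definition 10·log10(MAX² / MSE), where MSE is the mean squared error between the original and the reconstructed image. The sketch below assumes images flattened to lists of pixel values in [0, 1]; the function name and the toy pixels are illustrative.

```python
import math

def psnr(original, reconstructed, max_value=1.0):
    """Peak Signal-to-Noise Ratio in dB between two equally sized images."""
    if len(original) != len(reconstructed) or not original:
        raise ValueError("images must be non-empty and equally sized")
    mse = sum((a - b) ** 2 for a, b in zip(original, reconstructed)) / len(original)
    if mse == 0:
        return math.inf            # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)

ground_truth = [0.0, 0.0, 0.0, 0.0]
close_guess = [0.1, 0.1, 0.1, 0.1]   # small error, roughly 20 dB
poor_guess = [1.0, 0.0, 1.0, 0.0]    # large error, roughly 3 dB
```

From the defender's perspective a lower PSNR is better, since it means the attacker's reconstruction is further from the private image.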

Besides, an extreme case is adopted to test the capability of Cedar in defending against model poisoning, such as the label flipping attack. In the test, 40% of the clients participating in the training are configured to be malicious, and these malicious clients employ the same attack strategy by flipping the data from the first class to the second, the second to the third, and so on, until the final class is flipped back to the first. As illustrated in Fig. 5c, which presents the attack success rate (ASR) of each method, Cedar can maintain the lowest ASR across all testing scenarios in the given examples from different application domains. In terms of the overall result distribution, the mean values of Cedar consistently outperform the baselines, where the minimum average improvement is observed in SST5 at 49.11%, while the maximum average improvement reaches 62.26% in FMNIST. The additional results of flipping each class are presented in the Supplementary Information (SI Sections 3.1 and 4.7).
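The cyclic flipping strategy and a simple ASR estimate can be sketched as follows. Classes are assumed to be indexed from 0, and ASR is assumed to be the share of test samples that the trained model assigns to their flipped label; the precise definition used in the evaluation is given in the Supplementary Information and may differ.

```python
def flip_label(label, num_classes):
    """Cyclic flip: class i becomes i + 1, and the last class wraps to 0."""
    return (label + 1) % num_classes

def attack_success_rate(true_labels, predictions, num_classes):
    """Share of samples predicted as their flipped label (assumed ASR definition)."""
    if not true_labels:
        raise ValueError("need at least one sample")
    hits = sum(1 for y, p in zip(true_labels, predictions)
               if p == flip_label(y, num_classes))
    return hits / len(true_labels)

# Hypothetical 4-class example: two of four predictions match the flipped label.
true_labels = [0, 1, 2, 3]
predictions = [1, 1, 3, 3]
asr = attack_success_rate(true_labels, predictions, num_classes=4)
```

A malicious client would apply `flip_label` to every local training label before training, while the ASR is measured on clean test data after aggregation.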

Ablation study

To assess the effectiveness of each module in Cedar, four variants are defined and compared, as shown in Table 1. Note that all the experiments are conducted based on the common settings outlined in the Supplementary Information (SI Section 5.1). First, in scenarios without attacks, the layer-wise uploading mechanism (column M2 of Table 1, where only this mechanism is applied) demonstrates significant performance improvements. This is largely due to its ability to effectively capture critical information within key model layers. In contrast, as indicated by M3 (using only adaptive model aggregation), the performance improvements are less consistent. This instability may stem from the exclusion of specific updates, which could lead to the loss of essential information. However, by combining these two mechanisms, M4 can maintain high performance, as key information can be effectively and efficiently extracted and aggregated.

Moreover, to evaluate the performance of different variants under attack scenarios, classification tasks with 40% of clients launching label-flipping attacks are tested. Unsurprisingly, the performance of the basic method (M1) deteriorates dramatically, particularly in more complex image classification tasks. While the layer-wise uploading mechanism (M2) enhances overall training efficiency, its performance improvement remains limited, as it cannot mitigate the impact of malicious information. In contrast, incorporating update detection and adaptive weighting (M3) can significantly alleviate this impact, with performance improvements often exceeding twice that of M2. Overall, the synergistic integration of these two mechanisms (M4) can achieve performance comparable to scenarios without attacks, which shows the efficiency and effectiveness of Cedar in safeguarding and stabilizing collaborative learning within PIoT.

Discussion

To make distributed computing and collaborative learning more compatible with PIoT, Cedar is designed and implemented by integrating federated learning and meta-learning into a general framework, through which significant improvements can be achieved in saving, elevating, accelerating and enhancing the learning cost, efficiency, speed, and security, respectively, across various application domains.

Specifically, first, the heterogeneity within local data can be efficiently and effectively mitigated by Cedar, as it can extract and aggregate key local knowledge for a global meta-model with optimal parameters to be personalized. The evaluation shows that the improvements achieved by Cedar are significant and that the computational complexity is acceptable and controllable, being directly related to the chosen model and the size of the stimuli, as analyzed in the Supplementary Information (SI Section 2.4). Nevertheless, the ability of Cedar to support the heterogeneity of physical devices, including variations in communication and computation specifications, requires further investigation. Limited resources on some PIoT devices may hinder model training, leading to increased training times or even failures. To address these challenges, techniques such as model quantization and knowledge distillation can be employed to reduce computational overhead. Additionally, implementing a hierarchical training strategy could enable devices with varying computational capacities to participate in training at different frequencies. These optimizations would enhance Cedar’s adaptability to the dynamic nature of ubiquitous PIoT, whose availability may fluctuate across time and space.

Second, cost-effectiveness is crucial for PIoT systems and services: the user acceptance rate may suffer if the learning process is tedious and troublesome, e.g., causing network delays, user interface (UI) lags, and battery drain. To address that, Cedar enhances the model learning procedure with not only reduced learning costs and system overhead but also improved model performance and training speed. Since the current enhancement is achieved by filtering model layers to extract key local information, which requires prior knowledge for optimal performance, it would be worth exploring dedicated methods that can make the filtering more adaptive. Meanwhile, PIoT devices often experience unstable network connections, which can substantially hinder real-time interactions with the coordinator during model training. To address this challenge, Cedar can be enhanced with techniques such as gradient compression and asynchronous update strategies. These methods enable Cedar to reduce communication overhead and accommodate varying upload frequencies, allowing devices with high network latency or limited bandwidth to participate more flexibly and, consequently, support a broader range of application scenarios.

Third, as revealed by the evaluation results, Cedar can safeguard the learning process against malicious attacks that commonly exist in PIoT and aim either to sniff users' private data or to poison the model. In contrast to conventional computation-intensive algorithms, e.g., differential privacy, Cedar is not only lightweight in computing but also superior in security protection, making it more suitable for PIoT. To further enhance the robustness of Cedar, novel mechanisms for protecting against other kinds of adversarial attacks, e.g., Byzantine attacks, need to be studied. Moreover, the current security mechanisms implemented by Cedar primarily focus on data and model protection, with limited attention to hardware-level security, particularly against physical attacks such as channel eavesdropping and device tampering. To address this gap, integrating blockchain technology could enhance transparency and traceability in the training process, effectively preventing tampering and forgery of exchanged content. Additionally, exploring hardware-level security measures, such as designing and deploying trusted execution environments, could provide more robust protection, significantly enhancing the resilience of PIoT systems against attacks while strengthening overall security.

Finally, the model adaptation of Cedar enables knowledge transfer and model fine-tuning at each PIoT client to support personal requests. This capability is vital for PIoT systems across various domains, e.g., transportation, healthcare, finance, and economy. In general, Cedar can dramatically improve the performance of personalized models through a few rounds of local training. However, to support more complex tasks, it is essential to integrate multi-modal knowledge and accommodate diverse system configurations. On the one hand, the potential of leveraging large language models (LLMs) as personal assistants to address multi-modal data for individual PIoT clients can be explored. On the other hand, containerization technologies, such as Docker, can be employed to ensure system compatibility across various devices. Collectively, these measures can significantly enhance the scalability and flexibility of Cedar to support PIoT across various domains.

Methods

Multi-domain dataset preprocessing

In total, twelve standard datasets from various domains are utilized, encompassing three types of tasks, i.e., BSD, CAH, RWQ, and OLSRC for structured data regression; SST5, AGNews, IMDB, and SST2 for text classification; and FMNIST, FER, ISIC, and SFDDD for image classification. Most datasets consist of predefined training and test sets. Datasets without such a division are randomly divided into training and test sets according to a ratio of 8:2. Moreover, to mitigate the issue of sample imbalance between different classes in classification datasets, their training sets are augmented using techniques such as random duplication, image cropping, image rotation, and other oversampling methods. The aim of these enhancements is to regulate the generation of heterogeneous data, as outlined in Subsection 6, thereby enabling the performance analysis across different levels of data heterogeneity. Additionally, datasets with inherent individual heterogeneity (e.g., SFDDD) are not rebalanced, as they naturally reflect the data heterogeneity observed in real-world scenarios. The summary of the preprocessed data is presented in the Supplementary Information (SI Section 4.2). Notably, the facial images and associated data used in this paper are solely derived from publicly available datasets that explicitly permit their use for research purposes. We strictly adhere to the terms and conditions set by the dataset providers, ensuring full compliance with all relevant regulations and avoiding any potential violations.
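As a rough illustration of two of the preprocessing steps mentioned above, the sketch below performs a random 8:2 split and rebalances classes by random duplication. The function names and the choice to oversample every class up to the size of the largest class are assumptions made for this example; the paper also uses image-specific augmentations (cropping, rotation) that are not shown here.

```python
import numpy as np

def split_train_test(num_samples, train_ratio=0.8, seed=0):
    """Randomly split sample indices into training and test parts (8:2 by default)."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(num_samples)
    cut = int(round(train_ratio * num_samples))
    return order[:cut], order[cut:]

def oversample_by_duplication(labels, seed=0):
    """Return indices in which minority classes are duplicated at random
    until every class has as many samples as the largest class (assumed target)."""
    rng = np.random.default_rng(seed)
    labels = np.asarray(labels)
    classes, counts = np.unique(labels, return_counts=True)
    target = int(counts.max())
    chosen = []
    for cls, count in zip(classes, counts):
        members = np.flatnonzero(labels == cls)
        extra = rng.choice(members, size=target - int(count), replace=True)
        chosen.append(np.concatenate([members, extra]))
    return np.concatenate(chosen)

train_idx, test_idx = split_train_test(10)
imbalanced_labels = np.array([0] * 6 + [1] * 2 + [2] * 1)
balanced_idx = oversample_by_duplication(imbalanced_labels)
balanced_counts = np.bincount(imbalanced_labels[balanced_idx])
```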

Heterogeneous data generation

To simulate the data sensed by PIoT clients, heterogeneous subsets of the standard datasets are created according to the Dirichlet distribution37. Assume that there are N subsets and K classes within the dataset. The class distributions of all subsets can be formulated as \(\vec{q}\in {{\mathbb{R}}}^{N\times K}\), where all elements in \(\vec{q}\) are non-negative and \({\parallel \vec{q}_{n}\parallel }_{1}=1\) for each subset n. To generate heterogeneous datasets, \(\vec{q}\sim {\rm{Dirichlet}}(\alpha,{G}_{0})\) is used, where \({G}_{0}\) is the original distribution of the data, and α is the concentration parameter corresponding to the heterogeneity level. As α increases, the obtained distribution approaches the original distribution, and vice versa. It is worth noting that for regression datasets, Sturges’ rule is applied to partition continuous values into multiple discrete bins, followed by sampling based on the Dirichlet distribution.
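A minimal sketch of this sampling procedure is given below. It assumes that Dirichlet(α, G0) denotes a Dirichlet distribution with parameter vector α·G0, that labels are integers in 0..K-1 with every class present, and that samples are drawn with replacement; all function names are hypothetical and the released preprocessing code may differ. For regression targets, Sturges' rule (⌈log2 n⌉ + 1 bins) is used to obtain discrete pseudo-classes first.

```python
import numpy as np

def sturges_bins(values):
    """Discretize continuous targets into ceil(log2(n)) + 1 equal-width bins."""
    values = np.asarray(values, dtype=np.float64)
    num_bins = int(np.ceil(np.log2(values.size))) + 1
    edges = np.linspace(values.min(), values.max(), num_bins + 1)
    # Only interior edges are used, so bin ids fall in [0, num_bins - 1].
    return np.digitize(values, edges[1:-1]), num_bins

def dirichlet_class_proportions(original_dist, num_subsets, alpha, rng):
    """Draw one class distribution q_n per subset from Dirichlet(alpha * G0)."""
    g0 = np.asarray(original_dist, dtype=np.float64)
    return rng.dirichlet(alpha * g0, size=num_subsets)

def sample_subset(labels, proportions, subset_size, rng):
    """Sample indices for one subset whose class mix follows `proportions`."""
    labels = np.asarray(labels)
    counts = rng.multinomial(subset_size, proportions)
    picked = [rng.choice(np.flatnonzero(labels == cls), size=n, replace=True)
              for cls, n in enumerate(counts) if n > 0]
    return np.concatenate(picked)

rng = np.random.default_rng(0)
labels = rng.integers(0, 4, size=2000)               # hypothetical 4-class dataset
g0 = np.bincount(labels, minlength=4) / labels.size  # original class distribution

q_hetero = dirichlet_class_proportions(g0, num_subsets=20, alpha=0.5, rng=rng)
q_homog = dirichlet_class_proportions(g0, num_subsets=20, alpha=1000.0, rng=rng)
subset_indices = sample_subset(labels, q_hetero[0], subset_size=200, rng=rng)

# Regression case: bin continuous targets before the Dirichlet sampling.
bin_ids, num_bins = sturges_bins(rng.normal(size=1000))
```

With a small α each row of `q_hetero` tends to concentrate on a few classes, whereas a large α keeps the rows of `q_homog` close to `g0`, which mirrors the role of the concentration parameter described in the text.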

PIoT client creation

PIoT clients are considered the owners of data subsets and can be divided into two kinds, namely training clients and test clients. For SFDDD, since its data is organized according to driver IDs, 26 clients in total (equal to the number of drivers) are created, among which 21 and 5 clients are used for training and test, respectively. For the other eleven datasets, 50 training clients and 10 test clients are created and assigned the heterogeneous datasets generated above. Moreover, during the evaluation, training clients are randomly selected and activated according to the client participation rate ς. Thus, a group of clients prepared for an evaluation, \({C}_{G}\), can be defined by a quintuple, namely \({C}_{G}=\{\tilde{D},\tilde{D},\tilde{M},\alpha,\varsigma \}\), where \(\tilde{D}\), \(\tilde{D}\), and \(\tilde{M}\) stand for the dataset, model structure, and FL approach employed for each task, respectively. In total, 784 client groups are created and used to test the efficiency and effectiveness of the proposed framework. The experiments were conducted on two workstations: one equipped with an Intel Xeon Gold 5218R CPU and four NVIDIA RTX 3090 GPUs, and the other with an Intel Core i9-13900KS CPU and a single NVIDIA RTX 4090 GPU. For more details, please check the Supplementary Information (SI Section 5.2).
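The random activation of training clients according to the participation rate ς can be sketched as below. The function name, the rounding rule, and the lower bound of one active client are assumptions made for illustration, not details confirmed by the paper.

```python
import numpy as np

def select_active_clients(num_training_clients, participation_rate, rng):
    """Randomly activate a share of the training clients for one round."""
    # Assumption: round to the nearest integer and keep at least one client active.
    num_active = max(1, int(round(participation_rate * num_training_clients)))
    chosen = rng.choice(num_training_clients, size=num_active, replace=False)
    return np.sort(chosen)

rng = np.random.default_rng(0)
active_clients = select_active_clients(50, 0.2, rng)   # 50 training clients, rate 0.2
```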

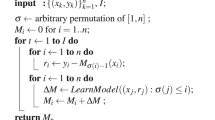

Global meta-model training

Three models, i.e., MobileNetV2, ResNet18, and DenseNet121, are used. Their linear layers are tuned to be consistent with the output classes. Adam and cross-entropy are used as the optimizer and the loss function, respectively. During the global meta-model training process, each PIoT client runs independently and only utilizes its local data to perform local training. Meanwhile, the central server collects and aggregates the local models uploaded by PIoT clients to update the global meta-model. To test the robustness of Cedar in defending against malicious attacks, the client-server interaction is supported by an open network, i.e., the Internet. Through the iterative exchange of training parameters between the clients and the server, the global meta-model is trained.

To improve the cost-efficiency in learning high-performance meta-models and also ensure the ability to prevent private data leakage and model poisoning, Cedar implements an asymmetric and layer-wise model uploading and updating mechanism. Within each training round, based on representational similarity analysis, first, each client detects the model layers carrying the most important information and uploads these layers instead of the complete local model to the server; and second, once the local updates are received, the server records their responses to given stimuli, analyzes their similarity to detect benign updates, and aggregates these benign updates to update the global meta-model. It is worth noting that during the model aggregation, adaptive weights are applied, which are calculated according to the response similarities. In general, based on this strategy, Cedar can ensure the safety of private data and the stability of global meta-model training against data inference and model poisoning attacks. The detailed workflow of meta-model training is described in the Supplementary Information (SI Section 2.1).
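The server-side idea of comparing responses to stimuli and weighting benign updates can be illustrated with the simplified sketch below. It is not the authors' algorithm, which is specified in SI Section 2.1: here each update is assumed to be a single linear layer, responses are compared through pairwise-distance matrices (a basic form of representational similarity analysis), each client is scored by the median Pearson correlation with the other clients, and the fixed threshold of 0.5 is a hypothetical choice.

```python
import numpy as np

def response_dissimilarity(weights, stimuli):
    """Upper triangle of the pairwise-distance matrix of a linear layer's responses."""
    responses = stimuli @ weights                        # (num_stimuli, num_outputs)
    diffs = responses[:, None, :] - responses[None, :, :]
    distances = np.linalg.norm(diffs, axis=-1)
    return distances[np.triu_indices(len(stimuli), k=1)]

def aggregate_benign_updates(client_weights, stimuli, threshold=0.5):
    """Detect benign updates by response similarity and average them adaptively."""
    rdms = np.stack([response_dissimilarity(w, stimuli) for w in client_weights])
    similarity = np.corrcoef(rdms)                       # client-by-client correlation
    np.fill_diagonal(similarity, np.nan)                 # ignore self-similarity
    scores = np.nanmedian(similarity, axis=1)            # agreement with other clients
    benign = scores >= threshold
    if not benign.any():
        raise ValueError("no update passed the similarity threshold")
    adaptive = np.where(benign, scores, 0.0)
    adaptive = adaptive / adaptive.sum()                 # adaptive aggregation weights
    aggregated = np.tensordot(adaptive, np.stack(client_weights), axes=1)
    return aggregated, benign, adaptive

rng = np.random.default_rng(0)
stimuli = rng.normal(size=(30, 32))                      # hypothetical probe inputs
base = rng.normal(size=(32, 4))                          # hypothetical honest layer
benign_updates = [base + 0.01 * rng.normal(size=base.shape) for _ in range(6)]
poisoned_updates = [rng.normal(size=base.shape) for _ in range(4)]   # 40% malicious
aggregated, benign_mask, adaptive_weights = aggregate_benign_updates(
    benign_updates + poisoned_updates, stimuli)
```

In this toy setting the honest updates respond to the stimuli almost identically, so they score close to 1 and dominate the aggregation, while the unrelated poisoned updates receive zero weight.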

Meta-model personalized deployment

Before the actual usage of the global meta-model, it needs to be fine-tuned and then deployed as personalized models to better support various in-context services4,43,44. To support the model deployment, within Cedar, a PIoT client can directly download the global meta-model from the coordinator and run a few rounds of local training to generate the personalized model. It is worth noting that since only the global meta-model is transmitted from the server to the client, there is no risk of private data leakage and model tampering in this phase. The specific deployment modes and their performance analysis are described in the Supplementary Information (SI Sections 2.2, 3.2, and 5.15).
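The personalization step can be illustrated with a toy example in which a downloaded meta-model is fine-tuned for a few rounds on local data. The sketch assumes a linear regression model and plain gradient descent on the mean squared error, standing in for the deep models and the Adam optimizer used in the paper; the function names and hyperparameters are hypothetical.

```python
import numpy as np

def mse(weights, features, targets):
    """Mean squared error of a linear model on local data."""
    return float(np.mean((features @ weights - targets) ** 2))

def personalize(meta_weights, features, targets, rounds=5, learning_rate=0.1):
    """Fine-tune a copy of the downloaded meta-model with a few local gradient steps."""
    weights = np.array(meta_weights, dtype=np.float64, copy=True)
    for _ in range(rounds):
        residual = features @ weights - targets
        gradient = 2.0 * features.T @ residual / len(targets)
        weights -= learning_rate * gradient
    return weights

rng = np.random.default_rng(0)
meta_weights = np.array([1.0, -2.0, 0.5])                 # hypothetical global meta-model
local_truth = meta_weights + np.array([0.5, 0.5, -0.5])   # this client's own pattern
local_features = rng.normal(size=(100, 3))
local_targets = local_features @ local_truth

personalized = personalize(meta_weights, local_features, local_targets)
loss_before = mse(meta_weights, local_features, local_targets)
loss_after = mse(personalized, local_features, local_targets)
```

Only the meta-model travels from the server to the client in this step; the local features and targets never leave the device, which is the property the paragraph above relies on.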

System attack simulation

Even though conventional FL methods only allow the private data to be processed by the data owners, there are still security concerns in PIoT systems, especially those deployed on open networks, e.g., the Internet. To test the robustness of Cedar in security protection, two kinds of malicious clients are simulated, namely 1) the ones to infer private data by applying gradient inversion14,45,46 on intercepted local models, and 2) the ones to prevent the model convergence or mislead the model with abnormal behaviors by launching label flipping attacks15,41,47. After the configuration of the two kinds of attackers, they are deployed in the learning process. Accordingly, the efficiency and effectiveness of Cedar in security protection can be evaluated and compared with other baselines. The detailed configuration of these two kinds of malicious clients is given in the Supplementary Information (SI Section 2.6).

Data availability

BSD (Bike Sharing Dataset) is available at https://archive.ics.uci.edu/dataset/275/bike+sharing+dataset. CAH (California Housing Dataset) is available at https://www.dcc.fc.up.pt/~ltorgo/Regression/cal_housing.html. RWQ (Red Wine Quality) is available at https://archive.ics.uci.edu/ml/datasets/wine+quality. OLSRC (OLS Regression Challenge to Predict Cancer Mortality Rates) is available at https://data.world/nrippner/ols-regression-challenge. SST5 (Stanford Sentiment Treebank with 5 labels) is available at https://nlp.stanford.edu/sentiment/. AGNews (AG’s corpus of news articles) is available at http://groups.di.unipi.it/~gulli/AG_corpus_of_news_articles.html. IMDB (50K Movie Reviews from Internet Movie Database) is available at https://www.kaggle.com/datasets/lakshmi25npathi/imdb-dataset-of-50k-movie-reviews. SST2 (Stanford Sentiment Treebank with 2 labels) is available at https://huggingface.co/datasets/nyu-mll/glue. FMNIST (Fashion Modified National Institute of Standards and Technology)48 is available at https://github.com/zalandoresearch/fashion-mnist. FER (Facial Expression Recognition) is available at https://www.kaggle.com/c/challenges-in-representation-learning-facial-expression-recognition-challenge. ISIC (The International Skin Imaging Collaboration) is available at https://challenge.isic-archive.com/data/#2019. SFDDD (State Farm Distracted Driver Detection) is available at https://www.kaggle.com/c/state-FARM-distracted-driver-detection. The data preprocessed based on the above twelve standard datasets and used in this paper is publicly available at https://github.com/IntelligentSystemsLab/generic_and_open_learning_federator/tree/main/example/data/non_iid_data. Source Data for each figure/table are provided with this paper in a single Excel file.

Code availability

The latest version of Cedar is included in GOLF under the terms of an open source license. The source code is publicly accessible under the MIT License with a citation file (DOI: 10.5281/zenodo.14968819) at: https://github.com/IntelligentSystemsLab/generic_and_open_learning_federator/.

References

Khan, A. A., Laghari, A. A., Li, P., Dootio, M. A. & Karim, S. The collaborative role of blockchain, artificial intelligence, and industrial internet of things in digitalization of small and medium-size enterprises. Sci. Rep. 13, 1656 (2023).

Aminizadeh, S. et al. The applications of machine learning techniques in medical data processing based on distributed computing and the internet of things. Comput. Methods Prog. Biomed. 241, 107745 (2023).

Shao, S., Zheng, J., Guo, S., Qi, F. & Qiu, I. X. Decentralized ai-enabled trusted wireless network: a new collaborative computing paradigm for internet of things. IEEE Netw. 37, 54–61 (2023).

Liu, S. et al. Afm3d: an asynchronous federated meta-learning framework for driver distraction detection. IEEE Trans. Intell. Transp. Syst. 25, 9659–9674 (2024).

Pallathadka, H. et al. Applications of artificial intelligence in business management, e-commerce and finance. Mater. Today. Proc. 80, 2610–2613 (2023).

Lai, J. et al. Practical intelligent diagnostic algorithm for wearable 12-lead ECG via self-supervised learning on large-scale dataset. Nat. Commun. 14, 3741 (2023).

Kirk, H. R., Vidgen, B., Röttger, P. & Hale, S. A. The benefits, risks and bounds of personalizing the alignment of large language models to individuals. Nat. Mach. Intell. 6, 383–392 (2024).

You, L. et al. Aifed: An adaptive and integrated mechanism for asynchronous federated data mining. In IEEE Transactions on Knowledge and Data Engineering 1–17 (IEEE, 2023).

Soltoggio, A. et al. A collective ai via lifelong learning and sharing at the edge. Nat. Mach. Intell. 6, 251–264 (2024).

McMahan, B., Moore, E., Ramage, D., Hampson, S. & Arcas, B. A. y. Communication-efficient learning of deep networks from decentralized data. In Proc 20th International Conference on Artificial Intelligence and Statistics, 1273–1282 (PMLR, 2017).

Karargyris, A. et al. Federated benchmarking of medical artificial intelligence with MedPerf. Nat. Mach. Intell. 5, 799–810 (2023).

Jiang, S., Li, Y., Firouzi, F. & Chakrabarty, K. Federated clustered multi-domain learning for health monitoring. Sci. Rep. 14, 903 (2024).

Almanifi, O. R. A., Chow, C.-O., Tham, M.-L., Chuah, J. H. & Kanesan, J. Communication and computation efficiency in federated learning: a survey. Internet Things 22, 100742 (2023).

Zhang, C. et al. Generative gradient inversion via over-parameterized networks in federated learning. In Proc IEEE/CVF International Conference on Computer Vision (ICCV), 5126–5135 (IEEE, 2023).

Chen, G., Li, K., Abdelmoniem, A. M. & You, L. Exploring representational similarity analysis to protect federated learning from data poisoning. In Companion Proceedings of the ACM on Web Conference 2024, 525–528 (ACM, 2024).

Vahidian, S., Morafah, M., Chen, C., Shah, M. & Lin, B. Rethinking data heterogeneity in federated learning: Introducing a new notion and standard benchmarks. IEEE Trans. Artif. Intell. 5, 1386–1397 (2024).

Wehbi, O. et al. Fedmint: Intelligent bilateral client selection in federated learning with newcomer iot devices. IEEE Internet Things J. 10, 20884–20898 (2023).

Li, P. et al. Filling the missing: Exploring generative AI for enhanced federated learning over heterogeneous mobile edge devices. In IEEE Transactions on Mobile Computing 1–14 (IEEE, 2024).

Wang, X., Zhu, T. & Zhou, W. Supplement data in federated learning with a generator transparent to clients. Inf. Sci. 666, 120437 (2024).

Ren, H., Anicic, D. & Runkler, T. A. Tinyreptile: Tinyml with federated meta-learning. In Proc 2023 International Joint Conference on Neural Networks (IJCNN), 1–9 (IEEE, 2023).

Shao, J., Wu, F. & Zhang, J. Selective knowledge sharing for privacy-preserving federated distillation without a good teacher. Nat. Commun. 15, 349 (2024).

Dai, Y. et al. Tackling data heterogeneity in federated learning with class prototypes. In Proceedings of the AAAI Conference on Artificial Intelligence, 7314–7322 (AAAI Press, 2023).

Chen, R. et al. Service delay minimization for federated learning over mobile devices. IEEE J. Sel. Areas Commun. 41, 990–1006 (2023).

Khan, F. M. A., Abou-Zeid, H. & Hassan, S. A. Deep compression for efficient and accelerated over-the-air federated learning. IEEE Internet of Things Journal 1–1 (IEEE, 2024).

Jiang, Z. et al. Computation and communication efficient federated learning with adaptive model pruning. IEEE Trans. Mob. Comput. 23, 2003–2021 (2024).

Chen, X. et al. Multicenter hierarchical federated learning with fault-tolerance mechanisms for resilient edge computing networks. IEEE Transactions on Neural Networks and Learning Systems 1–15 (IEEE, 2024).

Deng, Y. et al. A communication-efficient hierarchical federated learning framework via shaping data distribution at edge. IEEE/ACM Transactions on Networking 1–16 (IEEE, 2024).

You, L. et al. Federated and asynchronized learning for autonomous and intelligent things. IEEE Netw. 38, 286–293 (2024).

Hijazi, N. M., Aloqaily, M., Guizani, M., Ouni, B. & Karray, F. Secure federated learning with fully homomorphic encryption for iot communications. IEEE Internet Things J. 11, 4289–4300 (2024).

Wang, B., Li, H., Guo, Y. & Wang, J. Ppflhe: A privacy-preserving federated learning scheme with homomorphic encryption for healthcare data. Appl. Soft Comput. 146, 110677 (2023).

Yang, X., Huang, W. & Ye, M. Dynamic personalized federated learning with adaptive differential privacy. Adv. Neural Inf. Process. Syst. 36, 72181–72192 (2023).

Wei, K. et al. Personalized federated learning with differential privacy and convergence guarantee. IEEE Trans. Inf. Forensics Security 18, 4488–4503 (2023).

Chen, L., Xiao, D., Yu, Z. & Zhang, M. Secure and efficient federated learning via novel multi-party computation and compressed sensing. Inf. Sci. 667, 120481 (2024).

Muazu, T. et al. A federated learning system with data fusion for healthcare using multi-party computation and additive secret sharing. Comput. Commun. 216, 168–182 (2024).

Agarwal, M., Yurochkin, M. & Sun, Y. Personalization in federated learning. In Federated Learning: A Comprehensive Overview of Methods and Applications, 71–98 (Springer, 2022).

Chen, F., Luo, M., Dong, Z., Li, Z. & He, X. Federated meta-learning with fast convergence and efficient communication. preprint at https://arxiv.org/abs/1802.07876 (2018).

Yan, R. et al. Label-efficient self-supervised federated learning for tackling data heterogeneity in medical imaging. IEEE Trans. Med. Imaging 42, 1932–1943 (2023).

Ovi, P. R., Dey, E., Roy, N. & Gangopadhyay, A. Mixed quantization enabled federated learning to tackle gradient inversion attacks. In Proc IEEE/CVF Conference on Computer Vision and Pattern Recognition, 5045–5053 (IEEE, 2023).

Palihawadana, C., Wiratunga, N., Kalutarage, H. & Wijekoon, A. Mitigating gradient inversion attacks in federated learning with frequency transformation. In European Symposium on Research in Computer Security, 750–760 (Springer, 2023).

Yin, D., Chen, Y., Kannan, R. & Bartlett, P. Byzantine-robust distributed learning: Towards optimal statistical rates. In Proceedings of the 35th International Conference on Machine Learning, 5650–5659 (2018).

Shen, X., Liu, Y., Li, F. & Li, C. Privacy-preserving federated learning against label-flipping attacks on non-iid data. IEEE Internet Things J. 11, 1241–1255 (2024).

Liu, X. et al. Privacy-enhanced federated learning against poisoning adversaries. IEEE Trans. Inf. Forensics Security 16, 4574–4588 (2021).

Yang, L., Huang, J., Lin, W. & Cao, J. Personalized federated learning on non-iid data via group-based meta-learning. ACM Trans. Knowl. Discov. Data 17, 1–20 (2023).

Yu, F. et al. Communication-efficient personalized federated meta-learning in edge networks. IEEE Trans. Netw. Serv. Manag. 20, 1558–1571 (2023).

Geiping, J., Bauermeister, H., Dröge, H. & Moeller, M. Inverting gradients-how easy is it to break privacy in federated learning? Adv. Neural Inf. Process. Syst. 33, 16937–16947 (2020).

Hatamizadeh, A. et al. Do gradient inversion attacks make federated learning unsafe? IEEE Trans. Med. Imaging 42, 2044–2056 (2023).

Rodríguez-Barroso, N., Jiménez-López, D., Luzón, M. V., Herrera, F. & Martínez-Cámara, E. Survey on federated learning threats: Concepts, taxonomy on attacks and defences, experimental study and challenges. Inf. Fusion 90, 148–173 (2023).

Xiao, H., Rasul, K. & Vollgraf, R. Fashion-mnist: a novel image dataset for benchmarking machine learning algorithms. Preprint at https://arxiv.org/abs/1708.07747 (2017).

Acknowledgements

This work was supported by the National Key Research and Development Program of China (2023YFB4301900), the Guangdong Basic and Applied Basic Research Foundation (2023A1515012895), the Department of Science and Technology of Guangdong Province (2021QN02S161), and the National Research Foundation Singapore under the AI Singapore Programme (AISG Award No: AISG2-TC-2023-008-SGKR). The work of H. V. Poor was supported in part by a grant from Princeton Language and Intelligence. Figure 1 was created with graphic elements provided by Freepik (https://www.freepik.com) and Iconfont (https://www.iconfont.cn). Figure 2, Fig. 5, SI Fig. 1, and SI Figs. 15–17 were created with graphic elements provided by Iconfont (https://www.iconfont.cn).

Author information

Contributions

L.Y., Z.G. and C.Y. designed research. L.Y. and Z.G. performed the experiments. C.Y.-C.C., Y.Z., and H.V.P. worked together to analyze and discuss the experimental results. All authors contributed to the writing and revising of the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Communications thanks Yang Liu and the other, anonymous, reviewer(s) for their contribution to the peer review of this work. A peer review file is available.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

You, L., Guo, Z., Yuen, C. et al. A framework reforming personalized Internet of Things by federated meta-learning. Nat Commun 16, 3739 (2025). https://doi.org/10.1038/s41467-025-59217-z

DOI: https://doi.org/10.1038/s41467-025-59217-z