Abstract

Beliefs that the US 2020 Presidential election was fraudulent are prevalent despite substantial contradictory evidence. Why are such beliefs often resistant to counter-evidence? Is this resistance rational, and thus subject to evidence-based arguments, or fundamentally irrational? Here we surveyed 1,642 Americans during the 2020 vote count, testing how their fraud beliefs updated in response to hypothetical election outcomes. Participants’ fraud beliefs increased when their preferred candidate lost and decreased when he won, and both effects scaled with partisan preferences, demonstrating partisan asymmetry (desirability effects). A Bayesian model of rational updating of a system of beliefs (beliefs in the true vote winner, fraud prevalence and beneficiary of fraud) accurately accounted for this partisan asymmetry, outperforming alternative models of irrational, motivated updating and models lacking the full belief system. Partisan asymmetries may not reflect motivated reasoning but rather rational attributions over multiple potential causes of evidence. Changing such beliefs may require targeting multiple key beliefs simultaneously rather than relying on direct debunking.
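To make the central idea concrete, the following is a minimal Python sketch of exact Bayesian updating over a three-part belief system: whether the preferred candidate won the true votes (v), whether outcome-changing fraud occurred (f), and whether fraud, if present, favored the preferred candidate (c). The likelihood used here (no fraud means the reported winner is the true-vote winner; fraud means the reported winner is whoever the fraud favored), the independence of the priors, the names `Priors` and `update`, and the numbers are all assumptions for illustration, not the authors' published model.

```python
from dataclasses import dataclass


@dataclass
class Priors:
    """Hypothetical prior beliefs of one voter (all probabilities)."""
    v: float  # P(preferred candidate won the true votes)
    f: float  # P(outcome-changing fraud occurred)
    c: float  # P(fraud, if present, favored the preferred candidate)


def update(p: Priors, preferred_reported_winner: bool) -> dict:
    """Exact Bayesian posterior after observing the reported winner.

    Simplifying likelihood (an assumption, not the published model): with no
    fraud the reported winner is the true-vote winner; with fraud the reported
    winner is whichever candidate the fraud favored.
    """
    joint = {}
    for fraud in (True, False):
        for v_pref in (True, False):
            for c_pref in (True, False):
                # Independent priors over the three beliefs.
                prior = ((p.f if fraud else 1 - p.f)
                         * (p.v if v_pref else 1 - p.v)
                         * (p.c if c_pref else 1 - p.c))
                # Deterministic likelihood: who would be reported as winner?
                reported_pref = c_pref if fraud else v_pref
                likelihood = 1.0 if reported_pref == preferred_reported_winner else 0.0
                joint[(fraud, v_pref, c_pref)] = prior * likelihood
    z = sum(joint.values())  # marginal probability of the observed outcome
    return {
        "fraud": sum(w for (fr, _, _), w in joint.items() if fr) / z,
        "true_win": sum(w for (_, vp, _), w in joint.items() if vp) / z,
        "fraud_favors_preferred": sum(w for (_, _, cp), w in joint.items() if cp) / z,
    }


supporter = Priors(v=0.8, f=0.3, c=0.2)  # illustrative numbers only
after_loss = update(supporter, preferred_reported_winner=False)
after_win = update(supporter, preferred_reported_winner=True)
print(round(after_loss["fraud"], 3), round(after_win["fraud"], 3))  # 0.632 0.097
```

With these made-up priors (a voter who expects to win and believes any fraud would favor the opponent), fraud belief rises from 0.30 to about 0.63 after a reported loss and falls to about 0.10 after a reported win. The asymmetry arises here from the belief system alone, with no desirability term in the update.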


Data availability

Data are publicly shared at https://doi.org/10.5281/zenodo.5730630, release version 5.0.0.

Code availability

Code is publicly shared (along with the data) at https://doi.org/10.5281/zenodo.5730630, release version 5.0.0. All analyses were performed with R version 3.6.3 (https://www.r-project.org). For reproducibility, we used the checkpoint package, which installs the versions of all required R packages as they were on a specified date; we set this date to June 30, 2021.


Acknowledgements

We thank J. M. Carey, R. Muirhead and B. J. Nyhan for providing comments on a previous version of this manuscript. R.B.-N. is an Awardee of the Weizmann Institute of Science—Israel National Postdoctoral Award Program for Advancing Women in Science. M.J. was supported by a National Science Foundation (NSF) grant (2020906). The funders had no role in study design, data collection and analysis, decision to publish or preparation of the manuscript.

Author information


Contributions

Study design: R.B.-N. and T.D.W. Data collection: R.B.-N. Data analysis: R.B.-N. Supervision, expertise and feedback: M.J. and T.D.W. Bayesian model formulation: M.J. Conceptual framework development: R.B.-N., M.J. and T.D.W. Writing: R.B.-N., M.J. and T.D.W.


Ethics declarations

Competing interests

This research was conducted in part while M.J. was a Visiting Faculty Researcher at Google Research, Brain Team (Mountain View, CA, USA). This work was not part of a commercial project. The other authors declare no competing interests.

Peer review


Nature Human Behaviour thanks Eric Groenendyk and the other, anonymous, reviewer(s) for their contribution to the peer review of this work. Peer reviewer reports are available.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Extended data

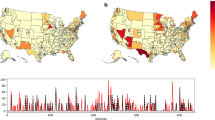

Extended Data Fig. 1 Demographic and partisan affiliation of participants.

(a) Number of participants for each combination of preferred candidate (x axis) and political affiliation (color). (b) Number of participants from each state who preferred each candidate (color). (c) Kernel density plot of participants’ age as a function of the preferred candidate (color). Preferred candidate: Dem = Biden; Rep = Trump; Affiliation: Dem = Democrat; Rep = Republican; Ind = Independent; Other.

Extended Data Fig. 2 Preference and prior belief data.

Kernel density plots of: (a) preference strength; (b) prior subjective probability of win by the preferred candidate; and (c) prior fraud belief. (d) Scatterplot for prior fraud belief as a function of prior subjective probability of win by the preferred candidate. Gray area represents 95% confidence interval; each point represents a single participant (with 30% opacity). Color represents the preferred candidate: Rep = Trump (red); Dem = Biden (blue). (e) Fraud belief update as a function of the scenario (loss or win according to the hypothetical map) and the preference strength (categorized into three ordered categories). Points represent single participants, and error bars represent standard error of the mean across participants.

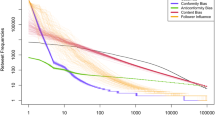

Extended Data Fig. 3 Simulations of predicted fraud update across the prior belief space for all models.

Predictions are shown for each of the four models: (a) The original Bayesian model (note that the plot for the Bayesian model is also the plot for the Hypothesis Desirability model, because fitting the latter to the data yields \(\alpha = 0\)); (b) the Outcome Desirability model, with predictions based on the mean preference strength in the sample (u = .95) and the strength of bias that best fits the data (α = .3); (c) the Fraud-Only model; and (d) the Random Beneficiary model.

Extended Data Fig. 4 Update of true vote belief.

Simulations of predicted true vote belief update across the prior belief space for the Bayesian model and the Outcome Desirability model. Outcome Desirability model predictions assume the strength of bias that best fits the data (α = .3) and are shown for the mean preference strength in the sample (u = .95) as well as a lower value (u = .55) for comparison.

Extended Data Fig. 5 Empirical and predicted fraud belief updates across models.

Empirical fraud belief updates as a function of the predicted fraud belief updates from each model (based on empirically measured priors). Each point represents a single participant. The 95% confidence regions are for the linear approximation of the regression of empirical upon predicted fraud belief updates (the lines have slopes less than unity in part because of regression effects). Note that the plot for the Bayesian model is also the plot for the Hypothesis Desirability model, because fitting the latter to the data yields \(\alpha = 0\).
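One mechanism that can produce slopes below unity is regression dilution: when the predictor (here, a model prediction computed from noisily measured priors) contains measurement error, ordinary least squares attenuates the slope toward zero by roughly var(true) / (var(true) + var(error)). The simulation below is a generic illustration with made-up noise levels and sample size, not a reanalysis of the study data.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 5000  # hypothetical number of simulated participants

# Latent "true" update for each simulated participant.
true_update = rng.normal(0.0, 1.0, n)
# Model prediction built from noisily measured priors (noise SD is an assumption).
predicted = true_update + rng.normal(0.0, 0.5, n)
# Observed update, also measured with noise.
empirical = true_update + rng.normal(0.0, 0.5, n)

# Regress empirical on predicted updates, as in the figure.
slope, intercept = np.polyfit(predicted, empirical, 1)
# Theoretical attenuation factor: var(true) / (var(true) + var(prediction noise)).
expected = 1.0 / (1.0 + 0.5 ** 2)
print(round(slope, 2), expected)
```

The fitted slope lands near the theoretical 0.8 even though the prediction is unbiased for the true update, which is why a slope below one does not by itself indicate a poor model.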

Extended Data Fig. 6 Predicted posterior fraud belief for each scenario across models.

Paralleling the empirical results from Fig. 1c, the model-based predictions of the posterior fraud belief for each participant (N = 828) are presented as a function of the map scenario (hypothetical winner), for each of the four models: (a) The original Bayesian model (note that the plot for the Bayesian model is also the plot for the Hypothesis Desirability model, because fitting the latter to the data yields \(\alpha = 0\)); (b) the Outcome Desirability model; (c) the Fraud-Only model; and (d) the Random Beneficiary model. In all panels, the priors are the empirically measured prior fraud beliefs from the original survey, restricted to participants who completed the follow-up survey. Points represent single participants, and error bars represent standard error of the mean across participants.

Extended Data Fig. 7 Empirically measured priors from the follow-up survey.

(a-b) Heat maps of the proportion of participants with each combination of v (probability of the preferred candidate winning the true votes) and c (probability of fraud, if present, favoring the preferred candidate), based on the follow-up sample, for (a) Democratic participants and (b) Republican participants. (c-d) Heat maps of the mean preference strength of participants for each combination of v and c, for (c) Democratic participants and (d) Republican participants. For panels (c) and (d), mean preference is shown only for cells with >1% of participants.
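The tables behind such heat maps can be sketched as follows: bin each participant's v and c, compute the share of participants per cell, and compute mean preference strength per cell, masking cells that hold 1% of participants or fewer. The bin count (10 equal-width bins on [0, 1]), the function name `heatmap_tables`, and the toy data are hypothetical choices for illustration; the published analysis was done in R and may bin the responses differently.

```python
import numpy as np


def heatmap_tables(v, c, pref, n_bins=10, min_share=0.01):
    """Cell proportions and masked mean preference over a v-by-c grid.

    v, c: probabilities in [0, 1]; pref: preference strength per participant.
    The bin count is a hypothetical choice, not taken from the paper.
    """
    v = np.asarray(v, dtype=float)
    c = np.asarray(c, dtype=float)
    pref = np.asarray(pref, dtype=float)
    # Map each probability to a bin index 0..n_bins-1 (1.0 goes in the last bin).
    vi = np.minimum((v * n_bins).astype(int), n_bins - 1)
    ci = np.minimum((c * n_bins).astype(int), n_bins - 1)
    counts = np.zeros((n_bins, n_bins))
    pref_sum = np.zeros((n_bins, n_bins))
    # Unbuffered accumulation so repeated (vi, ci) pairs are all counted.
    np.add.at(counts, (vi, ci), 1)
    np.add.at(pref_sum, (vi, ci), pref)
    share = counts / counts.sum()
    # Mean preference per cell; empty cells give NaN (0 / 0).
    with np.errstate(invalid="ignore", divide="ignore"):
        mean_pref = pref_sum / counts
    # Show mean preference only where the cell holds more than min_share of participants.
    mean_pref[share <= min_share] = np.nan
    return share, mean_pref


# Toy data: 99 participants in one cell, 1 participant (exactly 1%) in another.
v = [0.95] * 99 + [0.15]
c = [0.05] * 99 + [0.85]
pref = [0.9] * 99 + [0.6]
share, mean_pref = heatmap_tables(v, c, pref)
```

Masking by share rather than by raw count keeps the threshold comparable between the Democratic and Republican subsamples, which differ in size.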

Extended Data Fig. 8 Predicted fraud belief update as a function of prior win belief across models.

Paralleling the empirical results from Fig. 1e, the model-based predictions of the fraud belief update for each participant are presented as a function of the empirically measured prior probability of the preferred candidate’s win. Dashed lines show linear fits to models’ predictions, with 95% confidence regions. For comparison, solid lines show the linear fits to the observed empirical patterns (Fig. 1e). Note that the plot for the Bayesian model is also the plot for the Hypothesis Desirability model, because fitting the latter to the data yields \(\alpha = 0\).

Supplementary information


Supplementary Text, Figs. 1–4 and Tables 1–16.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Botvinik-Nezer, R., Jones, M. & Wager, T.D. A belief systems analysis of fraud beliefs following the 2020 US election. Nat Hum Behav 7, 1106–1119 (2023). https://doi.org/10.1038/s41562-023-01570-4


DOI: https://doi.org/10.1038/s41562-023-01570-4