Abstract

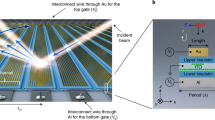

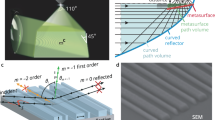

Structured light has proven instrumental in three-dimensional imaging, LiDAR and holographic light projection. Metasurfaces, comprising subwavelength-sized nanostructures, facilitate 180°-field-of-view structured light, circumventing the restricted field of view inherent in traditional optics such as diffractive optical elements. However, existing metasurface-based structured light performs sub-optimally in downstream tasks because it relies on heuristic design patterns, such as periodic dots, that do not consider the objectives of the end application. Here we present 360° structured light, driven by learned metasurfaces. We propose a differentiable framework that encompasses a computationally efficient 180° wave propagation model and a task-specific reconstructor, and exploits both the transmission and reflection channels of the metasurface. Using a first-order optimizer within this differentiable framework, we optimize the metasurface design, thereby realizing 360° structured light. We apply 360° structured light to holographic light projection and three-dimensional imaging. Specifically, we demonstrate the first 360° light projection of complex patterns, enabled by our propagation model, which can be evaluated 50,000× faster than Rayleigh–Sommerfeld propagation. For three-dimensional imaging, we improve depth-estimation accuracy by 5.09× in root-mean-square error compared with heuristically designed structured light. Such 360° structured light promises robust 360° imaging and display for robotics, extended-reality systems and human–computer interactions.
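As a rough illustration of optimizing a phase map with a first-order method, the sketch below runs gradient descent on a phase-only aperture so that its far-field intensity approaches a target pattern. It is a minimal sketch under stated assumptions, not the authors' method: a plain unitary FFT (Fraunhofer-style) model stands in for the 180° propagation model, a simple intensity loss stands in for the task-specific reconstructor, and the ring target, the function names and the step-halving rule are all hypothetical choices made for this example.

```python
import numpy as np

def loss_and_grad(phase, target):
    """Squared intensity error and its gradient with respect to the phase map.

    Assumption: a unitary 2D FFT stands in for wave propagation; this is NOT
    the 180-degree propagation model described in the abstract.
    """
    field = np.exp(1j * phase)                  # unit-amplitude, phase-only aperture
    far = np.fft.fft2(field, norm="ortho")      # stand-in far field
    residual = np.abs(far) ** 2 - target
    loss = 0.5 * np.sum(residual ** 2)
    # The adjoint of the unitary FFT is the unitary inverse FFT.
    back = np.fft.ifft2(residual * far, norm="ortho")
    grad = 2.0 * np.imag(np.conj(field) * back)
    return loss, grad

def make_ring_target(n):
    """Hypothetical ring-shaped target, scaled to carry the aperture's total energy."""
    fy = np.fft.fftfreq(n)[:, None]
    fx = np.fft.fftfreq(n)[None, :]
    radius = np.hypot(fx, fy)
    ring = ((radius > 0.15) & (radius < 0.25)).astype(float)
    return ring * (n * n / ring.sum())

def optimize_phase(target, steps=200, step_size=0.05, seed=0):
    """Gradient descent that halves the step whenever a trial step does not reduce the loss."""
    rng = np.random.default_rng(seed)
    phase = rng.uniform(0.0, 2.0 * np.pi, size=target.shape)
    loss, grad = loss_and_grad(phase, target)
    history = [loss]
    for _ in range(steps):
        candidate = phase - step_size * grad
        cand_loss, cand_grad = loss_and_grad(candidate, target)
        if cand_loss < loss:
            phase, loss, grad = candidate, cand_loss, cand_grad
        else:
            step_size *= 0.5
        history.append(loss)
    return phase, history

target = make_ring_target(32)
phase_map, history = optimize_phase(target)
print(f"loss: {history[0]:.1f} -> {history[-1]:.1f}")
```

Because a trial step is accepted only when it lowers the loss, the recorded loss never increases. In an end-to-end setting, the intensity loss would be replaced by the error of a downstream reconstructor, with gradients flowing through both the reconstructor and the propagation model.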

Data availability

Our 360° synthetic dataset and the learned metasurface phase map are available via GitHub at https://github.com/ches00/360-SL-Metasurface.

Code availability

The code used to generate the findings of this study is available via GitHub at https://github.com/ches00/360-SL-Metasurface.

Acknowledgements

S.-H.B. acknowledges the National Research Foundation (NRF) grants (RS-2023-00211658, NRF-2022R1A6A1A03052954) funded by the Ministry of Science and ICT (MSIT) and the Ministry of Education of the Korean government, and the Samsung Research Funding & Incubation Center for Future Technology grant (SRFC-IT1801-52) funded by Samsung Electronics. J.R. acknowledges the Samsung Research Funding & Incubation Center for Future Technology grant (SRFC-IT1901-52) funded by Samsung Electronics, the POSCO-POSTECH-RIST Convergence Research Center program funded by POSCO, the NRF grants (RS-2024-00356928, RS-2024-00337012, RS-2024-00416272, NRF-2022M3C1A3081312) funded by the MSIT of the Korean government, and the Korea Evaluation Institute of Industrial Technology (KEIT) grant (No. 1415185027/20019169, Alchemist project) funded by the Ministry of Trade, Industry and Energy (MOTIE) of the Korean government. G.K. acknowledges the NRF PhD fellowship (RS-2023-00275565) funded by the Ministry of Education (MOE) of the Korean government.

Author information

Contributions

S.-H.B., J.R., E.C. and G.K. conceived the idea and initiated the project. E.C. designed the propagation model. E.C., G.K. and J.Y. verified the propagation model. E.C. and Y.J. performed the end-to-end training and synthetic experiments. E.C., G.K. and Y.J. implemented the experimental prototype. G.K. fabricated the devices. J.R. guided the material characterization and device fabrication. All authors participated in discussions and contributed to writing the paper. All authors confirmed the final paper. S.-H.B. and J.R. guided all aspects of the work.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Photonics thanks Haoran Ren and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Extended data

Extended Data Fig. 1 Additional experimental demonstration of 360° light projection.

a–c, 360° projection onto a hemispherical screen. d–f, Bird's-eye view of 360° projection in an enclosed room.

Extended Data Fig. 2 Qualitative comparison of depth estimation.

We captured an indoor scene with various objects for a qualitative comparison of 3D imaging. We compared our 360° structured light against 360° multi-dot structured light and passive stereo. The red boxes in the image mark a flat and smooth floor. Our 360° structured light successfully reconstructs the depth map of the scene, whereas the multi-dot structured light and the passive method show noticeable holes and lack smoothness in their results. Compared with the heuristically designed multi-dot structured light, our 360° structured light with a learned metasurface yields more robust 3D imaging performance by exploiting its non-uniform intensity distribution and its distinct features at the potential locations of corresponding points. Passive stereo struggles with texture-less scenes and inevitably requires sufficient ambient illumination, whereas our 360° structured light enables robust 3D imaging under low ambient light conditions. Please refer to Supplementary Note 13 for the method used to ensure a fair comparison and for additional evaluation.

Extended Data Fig. 3 Additional experiment results of 3D imaging with 360° structured light.

Additional qualitative results on various real-world scenes. The 360° structured light enables accurate reconstruction of four additional scenes containing various objects, including furniture, dolls, umbrellas, balls and human subjects.

Extended Data Fig. 4 Additional qualitative results on synthetic scenes.

Rendered images and estimated depth maps are shown for each compared method. In this qualitative evaluation, our 360° structured light outperforms the other methods, and the performance gap is more pronounced in texture-less scenes.

Supplementary information

Supplementary Information

Supplementary Notes 1–15, Figs. 1–15 and Tables 1–9.

Supplementary Video 1

Our end-to-end learning process. The video consists of two parts. First, it shows the learned metasurface phase map and the corresponding structured-light pattern. As discussed in the main text, the initial training iterations establish the overall structure of the pattern, whereas subsequent iterations refine the finer details. This progression is visualized in the video. Next, the video shows the simulated captured image, the estimated depth map and the ground truth over the training iterations. In the first stage of training, the captured image evolves as the metasurface phase map is learned jointly with the depth-estimation network. The video shows this dynamic process, highlighting the learned structured light. In the second stage of training, the metasurface phase map is fixed and only the depth-estimation network is optimized. Consequently, the captured image remains unchanged throughout this stage, whereas the quality of the estimated depth map progressively improves as the depth-estimation network is refined.
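The two-stage schedule described above can be sketched with a deliberately tiny toy problem. Everything in this sketch is a hypothetical stand-in rather than the authors' training code: a single scalar `gain` plays the role of the metasurface phase map, a single scalar `weight` plays the role of the depth-estimation network, and the data and noise are synthetic. The point is only the schedule: both parameters are updated in stage one, and the illumination parameter is frozen in stage two.

```python
import numpy as np

rng = np.random.default_rng(0)
depth = rng.uniform(0.5, 1.5, size=256)      # toy ground-truth depths (assumption)
noise = 0.1 * rng.standard_normal(256)       # fixed toy sensor noise (assumption)

def loss_and_grads(gain, weight):
    """Mean squared depth error and its gradients for the toy model."""
    captured = gain * depth + noise           # toy "captured image"
    error = weight * captured - depth         # toy "depth estimate" minus truth
    loss = np.mean(error ** 2)
    grad_gain = 2.0 * np.mean(error * weight * depth)
    grad_weight = 2.0 * np.mean(error * captured)
    return loss, grad_gain, grad_weight

def train(gain, weight, steps, lr, update_gain):
    """Plain gradient descent; `gain` is updated only when update_gain is True."""
    for _ in range(steps):
        _, grad_gain, grad_weight = loss_and_grads(gain, weight)
        if update_gain:
            gain -= lr * grad_gain
        weight -= lr * grad_weight
    return gain, weight

gain0, weight0 = 1.0, 0.5
loss0 = loss_and_grads(gain0, weight0)[0]

# Stage 1: learn the illumination parameter jointly with the reconstructor.
gain1, weight1 = train(gain0, weight0, steps=200, lr=0.05, update_gain=True)
loss1 = loss_and_grads(gain1, weight1)[0]

# Stage 2: freeze the illumination parameter and refine only the reconstructor.
gain2, weight2 = train(gain1, weight1, steps=200, lr=0.05, update_gain=False)
loss2 = loss_and_grads(gain2, weight2)[0]

print(f"stage 1 loss: {loss1:.5f}, stage 2 loss: {loss2:.5f}")
```

Since `gain` does not change in stage two, the toy captured signal is identical at every stage-two iteration, mirroring the constant captured image described for the second part of the video.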

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Choi, E., Kim, G., Yun, J. et al. 360° structured light with learned metasurfaces. Nat. Photon. 18, 848–855 (2024). https://doi.org/10.1038/s41566-024-01450-x

DOI: https://doi.org/10.1038/s41566-024-01450-x
