Abstract

Gastric cancer (GC) is the third leading cause of cancer death worldwide. Its clinical course varies considerably owing to its highly heterogeneous tumour microenvironment (TME). Decomposing the complex TME in histological images into its constituent parts is crucial for evaluating TME patterns and improving GC therapies. Although various deep learning methods have been developed in the medical field, their application to this task is hindered by the lack of well-annotated histological images of GC. In this work, we provide a large database of histological images of GC fully annotated for eight tissue classes in the TME. The dataset consists of nearly 31K histological image patches from 300 whole slide images. Additionally, we describe two deep learning models trained on this dataset as validation examples.


Background & Summary

The subjective evaluation of histological slides remains the gold standard for GC diagnosis and guides clinical treatment. The corresponding histological slide images are routinely available and can offer a wealth of information about the TME, which plays a critical role in disease progression and is a key determinant of treatment response in GC patients1,2,3. Thus, there is a strong clinical need to comprehensively evaluate TME patterns in the slide images of individual GC patients4,5,6,7.

Extensive research into TME patterns has provided new insights into the diagnosis and prognosis of various cancers8,9,10,11,12,13,14,15,16. Based on the quantification of various tissue components in the TME, several histological image biomarkers have been developed. For instance, Kather et al. quantified different tissue components within the TME of colorectal cancer (CRC) slides, developing a deep stroma score that significantly predicts CRC patient survival17. Liang et al. employed a deep learning-guided framework to analyze TME patterns in liver cancer histological images, discovering a novel tissue spatial biomarker strongly linked to patient prognosis18. Similarly, Wang et al. introduced the DeepGrade model, which uses TME features from whole-slide histopathology images of breast cancer to generate a risk score that serves as a prognostic biomarker and provides clinically relevant information beyond traditional histological grading19. Rong et al. developed a deep learning method for TME quantification in breast cancer histological images, identifying TME features as independent biomarkers for survival prediction20. Additionally, Wang et al. used a graph convolutional network to study the cell spatial organization in the TME derived from lung and oral cancer histological images, obtaining a spatial feature that excelled in prognostic and predictive tasks21. Another study by Wang et al. created a deep learning tool to explore the TME in lung cancer histological images, finding a cell spatial image biomarker that predicts patient survival and is associated with gene expression22. Fremond et al. analyzed TME patterns in endometrial cancer histological images, identifying morpho-molecular correlates that could serve as a prognostic factor for risk stratification23.

Given these findings, it is evident that TME patterns in histological images play a crucial role in the diagnosis and prognosis of various cancers. Specifically, the TME patterns of GC have been shown to significantly affect its progression and therapeutic response24. For example, Liu et al. found that different components of the GC TME each contribute to inducing immune tolerance that promotes GC progression25. However, the extraction and analysis of TME features from GC histological images are hindered by a scarcity of images with detailed TME annotations. To this end, we built, to our knowledge, the largest histological image dataset of GC with detailed annotation of TME components, aiming to provide a platform for exploring TME patterns in histological images, potentially discovering new biomarkers and guiding treatment strategies for gastric cancer. In addition, this dataset can serve as a foundation for developing a pre-trained feature extractor, valuable for transfer learning in the diagnosis and prognosis of other cancer types. Furthermore, compared with the existing large dataset GasHisSDB, our dataset offers two key advantages. First, whereas GasHisSDB provides only binary classification (normal/abnormal), our dataset features eight distinct tissue classes within the TME, enabling a more comprehensive analysis for gastric cancer diagnosis. Second, our dataset integrates comprehensive clinical information alongside the histological images, whereas GasHisSDB is limited to image data.

Methods

Slides preparation and digitization

Formalin-fixed, paraffin-embedded tissue slides from 2013 to 2015 were provided by the Cancer Hospital of Harbin Medical University. A total of 300 H&E-stained slides of gastric cancer patients were collected from the hospital archives according to the pathology reports. The study was approved by the Research Ethics Committee of Harbin Medical University (ID: KY2024-16). The ethics committee issued a waiver of consent and approved the open publication of the dataset. All tissue and clinical information was collected from patients who had given written informed consent for research purposes. All histological slides were scanned with an Aperio AT2 scanner (Leica Biosystems, Germany) equipped with a 20× objective lens, and the resulting histological images were saved in .svs format.

Annotation process

The slides were evaluated by two junior pathologists (Huiying Li, Kexin Chen), each with more than 5 years of experience in the field, and a senior pathologist (Yang Jiang) with more than 10 years of experience, in order to confirm the diagnosis of each case and to annotate each slide image. For quality control, a three-step annotation process was performed: initial labeling, verification, and a final check. We refer to these pathologists as A (senior pathologist), B (junior pathologist), and C (junior pathologist). Each image was first randomly assigned to reader B or C for labeling. Once labeling was finished, the image and its annotation were passed to the other junior reader for review. Finally, the annotations were checked by reader A. Specifically, eight tissue classes related to the TME were annotated in each slide image: adipose tissue (ADI), debris (DEB), mucus (MUC), muscle (MUS), lymphocyte aggregates (LYM), stroma (STR), normal mucosa (NOR), and tumor epithelium (TUM) (see Fig. 1). The annotated .svs slide images were then tiled into 224 × 224 pixel patches, which were saved in .png format. Each patch was labeled with the tissue class of the region it was taken from. In total, nearly 31K patches were extracted from the 300 slide images. The detailed data distribution of these slide images and patches is illustrated in Fig. 2.
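The tiling and labeling step can be sketched as follows. This is a minimal illustration, not the authors' pipeline: it assumes the region annotations have already been rasterized into an integer mask at the scanning resolution (0 = unannotated, 1..8 = the eight classes in a hypothetical order), and it only keeps a tile when one class covers at least `min_fraction` of it (an assumed rule; the text only says each patch inherits its region's label). In practice the RGB pixels for each returned coordinate could be read with OpenSlide's `OpenSlide.read_region((x, y), 0, (224, 224))`, which returns an RGBA PIL image.

```python
import numpy as np

# Class abbreviations come from the text; the integer codes 1..8
# (0 = unannotated background) are a hypothetical encoding.
CLASSES = ["ADI", "DEB", "MUC", "MUS", "LYM", "STR", "NOR", "TUM"]


def grid_coordinates(width, height, patch=224):
    """Top-left (x, y) corners of non-overlapping tiles that fit fully inside the image."""
    return [(x, y)
            for y in range(0, height - patch + 1, patch)
            for x in range(0, width - patch + 1, patch)]


def label_tiles(mask, patch=224, min_fraction=1.0):
    """Label each tile with the class covering at least `min_fraction` of its pixels.

    `mask` is a 2-D integer array (height x width). Tiles that are mostly
    unannotated or have no sufficiently dominant class are skipped.
    """
    height, width = mask.shape
    labelled = []
    for x, y in grid_coordinates(width, height, patch):
        tile = mask[y:y + patch, x:x + patch]
        codes, counts = np.unique(tile, return_counts=True)
        best = int(np.argmax(counts))  # most frequent code in this tile
        code = int(codes[best])
        if code != 0 and counts[best] >= min_fraction * tile.size:
            labelled.append((x, y, CLASSES[code - 1]))
    return labelled
```

For example, a 448 × 672 mask whose left third is TUM (code 8) and whose upper-middle block is STR (code 6) yields three labeled tiles; the remaining unannotated tiles are dropped.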

Clinical data acquisition

The clinical data of these GC patients were obtained from the records in the information system of the Cancer Hospital of Harbin Medical University. The corresponding variables of the data are displayed in Table 1. Personal identifiers were removed to prevent the identification of individual patients.

Data Records

The complete dataset, named HMU-GC-HE-30K, is publicly available on Figshare (https://doi.org/10.6084/m9.figshare.25954813)26. It consists of two components: a file containing the annotated image patches from the corresponding histological slide images, and a spreadsheet named “HMU-GC-Clinical.csv”, which includes the clinical data tabulated according to Table 1. The dataset file structure is shown in Fig. 3. Histological slide images in .svs format, patch images in .png format, and clinical information data are provided. The labeled patch images are stored in folders named after the corresponding TME tissue components, such as ADI, DEB, and MUC. These images and the related clinical information can be used to extract histological TME features for various downstream tasks, such as prediction and prognosis.
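Because each patch's label is encoded by its parent folder name, a patch index can be built by walking the class folders. The sketch below is a minimal stdlib-only example; the root directory and the assumption that every patch sits directly under `root/<CLASS>/` as a `.png` file are hypothetical and should be checked against the downloaded archive (Fig. 3). It is not the custom dataloader shipped in the authors' repository.

```python
from collections import Counter
from pathlib import Path

# Folder names follow the eight TME classes described in the text;
# the flat root/<CLASS>/*.png layout is an assumption.
CLASSES = ["ADI", "DEB", "MUC", "MUS", "LYM", "STR", "NOR", "TUM"]


def index_patches(root):
    """Return (path, class_name) pairs for every PNG patch under root/<CLASS>/."""
    root = Path(root)
    samples = []
    for name in CLASSES:
        folder = root / name
        if not folder.is_dir():
            continue  # tolerate a missing class folder instead of failing
        samples.extend((p, name) for p in sorted(folder.glob("*.png")))
    return samples


def class_counts(samples):
    """Count patches per class, e.g. to check the per-class totals."""
    return Counter(label for _, label in samples)
```

The resulting list of `(path, label)` pairs can be fed to any image-loading dataset class; if the layout matches, `class_counts` on the full dataset should report roughly equal totals for the eight classes.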

Technical Validation

The slide evaluation and annotation process involved quality control by three pathologists: two junior pathologists, each with over 5 years of experience, and one senior pathologist with over 10 years of experience. Each image was first randomly assigned to one of the junior pathologists for initial labeling. The labeled images were then cross-reviewed by the other junior pathologist, and finally, all annotations underwent a final check by the senior pathologist to ensure accurate diagnosis and annotation of each slide image.
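The reader-assignment rule of this three-step workflow can be expressed compactly. The following is a hypothetical sketch for illustration only: reader codes A, B, and C follow the Methods section, while the seeded random generator and the function name are assumptions, not part of the authors' procedure.

```python
import random

# Reader codes from the Methods: A = senior, B and C = junior pathologists.
JUNIORS = ("B", "C")
SENIOR = "A"


def assign_readers(image_ids, seed=0):
    """Return {image_id: (labeler, reviewer, final_checker)}.

    Step 1: a randomly chosen junior reader labels the image.
    Step 2: the other junior reader reviews that annotation.
    Step 3: the senior reader performs the final check.
    """
    rng = random.Random(seed)  # hypothetical seed for reproducibility
    plan = {}
    for image_id in image_ids:
        labeler = rng.choice(JUNIORS)
        reviewer = JUNIORS[1] if labeler == JUNIORS[0] else JUNIORS[0]
        plan[image_id] = (labeler, reviewer, SENIOR)
    return plan
```

The design guarantees that no junior reader reviews their own labels and that every annotation passes through the senior pathologist.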

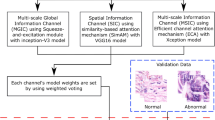

The CHD dataset comprises 3,887 images for each of the eight TME components (8 × 3,887 = 31,096 patches). To validate the CHD dataset proposed in this study, we used two previously published models, the Vision Transformer (ViT)27 and the CNN-based EfficientNet28, to classify the eight TME tissue components described above (see Fig. 4). First, 20% of the dataset was held out as an independent test set; the remaining data were used to train the models with 10-fold cross-validation. Stratified sampling was used so that each component was proportionally represented in both the training and test sets.
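The split described above (a stratified 20% hold-out test set, then stratified 10-fold cross-validation on the remainder) can be sketched with the standard library alone. This mirrors what scikit-learn's `train_test_split(..., stratify=y)` and `StratifiedKFold` provide; the paper does not state which implementation was used, and the seed and round-robin fold assignment here are assumptions.

```python
import random
from collections import defaultdict


def stratified_holdout(labels, test_fraction=0.2, seed=0):
    """Split indices into (train_val, test), keeping ~test_fraction of every class in test."""
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    rng = random.Random(seed)
    train_val, test = [], []
    for label in sorted(by_class):
        idxs = by_class[label][:]
        rng.shuffle(idxs)
        n_test = round(len(idxs) * test_fraction)
        test.extend(idxs[:n_test])
        train_val.extend(idxs[n_test:])
    return sorted(train_val), sorted(test)


def stratified_kfold(indices, labels, k=10, seed=0):
    """Yield (train, val) index lists; each class is dealt round-robin across k folds."""
    by_class = defaultdict(list)
    for idx in indices:
        by_class[labels[idx]].append(idx)
    rng = random.Random(seed)
    folds = [[] for _ in range(k)]
    for label in sorted(by_class):
        idxs = by_class[label][:]
        rng.shuffle(idxs)
        for pos, idx in enumerate(idxs):
            folds[pos % k].append(idx)
    for i in range(k):
        val = sorted(folds[i])
        train = sorted(j for f, fold in enumerate(folds) if f != i for j in fold)
        yield train, val
```

With 3,887 patches per class, this puts 777 patches per class in the test set and 3,110 per class in the cross-validation pool, i.e. exactly 311 per class in each validation fold.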

Workflow of data preprocessing and model architecture. (A) The histological slide image, i.e., the whole slide image (WSI), is digitized, segmented, and tessellated into 224 × 224 patches. (B) ViT model pipeline: each patch image is divided into smaller sub-patches, which are flattened and linearly projected, followed by feature extraction via transformer layers with multi-head attention. Predictions for the tissue classes are made by a multi-layer perceptron (MLP). (C) EfficientNet model pipeline: the input image undergoes initial feature extraction via a convolution layer, followed by deep feature extraction using MBConv blocks. The extracted features are then processed through global average pooling, a flatten layer, a dropout layer, and a fully connected (FC) layer to predict the tissue classes.
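To make panel (B) concrete, the NumPy sketch below shows how a ViT turns one 224 × 224 RGB patch into a sequence of tokens. It assumes a 16 × 16 sub-patch size (as in ViT-B/16-style checkpoints); the paper does not name the Hugging Face checkpoint used, and the class token and position embeddings are omitted for brevity.

```python
import numpy as np


def patchify(image, patch=16):
    """Split an (H, W, C) image into a (num_patches, patch*patch*C) matrix.

    Sub-patches are ordered row by row, left to right, each flattened in
    (row, column, channel) order before the learned linear projection.
    """
    h, w, c = image.shape
    if h % patch or w % patch:
        raise ValueError("image height and width must be multiples of the patch size")
    grid = image.reshape(h // patch, patch, w // patch, patch, c)
    grid = grid.transpose(0, 2, 1, 3, 4)  # -> (rows, cols, patch, patch, C)
    return grid.reshape(-1, patch * patch * c)


def linear_projection(patches, weight, bias):
    """Embed flattened patches: tokens = patches @ weight + bias."""
    return patches @ weight + bias
```

For a 224 × 224 × 3 input and 16 × 16 sub-patches, this gives (224 / 16)² = 196 tokens, each a vector of 16 × 16 × 3 = 768 values before projection.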

For the classification task using ViT, we used pretrained parameters downloaded from Hugging Face (https://huggingface.co/). The ViT model included a dropout layer with a rate of 0.3. We trained the model using the Adam optimizer with a learning rate of 0.001 and a weight decay of 1e-4. Early stopping was applied with a patience of 5 epochs, based on improvements in validation loss. As shown in Fig. 5, the model trained with 10-fold cross-validation achieved an AUC of 0.94 on the independent test set. Similarly, for the classification task using EfficientNet, we developed a custom model based on the EfficientNet architecture available in the efficientnet_pytorch library, initialized with the pretrained weights of EfficientNet-B0 to speed up training. The optimizer, learning rate, and early stopping strategy were the same as those used for the ViT model. This model achieved an AUC of 0.96 on the independent test set.
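Two pieces of this training and evaluation setup can be sketched in framework-agnostic Python. The early-stopping rule uses the stated patience of 5 epochs on validation loss; the `min_delta` threshold is an assumption. The paper reports a single AUC for an eight-class problem without stating how it was averaged, so the macro-averaged one-vs-rest AUC below is an assumed interpretation. Its pairwise Mann-Whitney computation is quadratic in the number of samples; for the full test set, scikit-learn's `roc_auc_score(y, probs, multi_class="ovr", average="macro")` is a faster equivalent.

```python
import math


class EarlyStopping:
    """Stop when validation loss has not improved for `patience` consecutive epochs."""

    def __init__(self, patience=5, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta  # assumed: any strict decrease counts as improvement
        self.best = math.inf
        self.bad_epochs = 0

    def step(self, val_loss):
        """Record one epoch's validation loss; return True when training should stop."""
        if val_loss < self.best - self.min_delta:
            self.best = val_loss
            self.bad_epochs = 0  # improvement: reset counter (save a checkpoint here)
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


def binary_auc(scores, positives):
    """ROC AUC via the Mann-Whitney statistic; ties count as half a correct ordering."""
    pos = [s for s, p in zip(scores, positives) if p]
    neg = [s for s, p in zip(scores, positives) if not p]
    wins = sum(1.0 if a > b else 0.5 if a == b else 0.0 for a in pos for b in neg)
    return wins / (len(pos) * len(neg))


def macro_ovr_auc(probabilities, labels, n_classes):
    """Average the one-vs-rest AUC over classes; probabilities[i][c] = P(class c | sample i)."""
    aucs = []
    for c in range(n_classes):
        scores = [row[c] for row in probabilities]
        aucs.append(binary_auc(scores, [y == c for y in labels]))
    return sum(aucs) / n_classes
```

In a training loop, `EarlyStopping.step` would be called once per epoch after computing the validation loss, and the checkpoint with the lowest validation loss would then be evaluated on the held-out test set.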

Code availability

The code used in this study was written in Python 3 and is available on GitHub (https://github.com/TomHardy1997/GCTC). It is based on PyTorch (version 1.13.1) and OpenSlide (version 1.2) and provides custom dataloaders for the CHD dataset.

References

Kanavati, F. & Tsuneki, M. A deep learning model for gastric diffuse-type adenocarcinoma classification in whole slide images. Scientific Reports 11, 20486, https://doi.org/10.1038/s41598-021-99940-3 (2021).

Kuntz, S. et al. Gastrointestinal cancer classification and prognostication from histology using deep learning: Systematic review. European Journal of Cancer 155, 200–215, https://doi.org/10.1016/j.ejca.2021.07.012 (2021).

Wang, X. et al. Predicting gastric cancer outcome from resected lymph node histopathology images using deep learning. Nature Communications 12, 1637, https://doi.org/10.1038/s41467-021-21674-7 (2021).

Deng, Y. et al. Artificial intelligence applications in pathological diagnosis of gastric cancer. Heliyon 8 (2022).

Jiang, Y. et al. Non-invasive tumor microenvironment evaluation and treatment response prediction in gastric cancer using deep learning radiomics. Cell Reports Medicine 4 (2023).

Song, Z. et al. Clinically applicable histopathological diagnosis system for gastric cancer detection using deep learning. Nature Communications 11, 4294 (2020).

Wang, Y. et al. Cell graph neural networks enable the precise prediction of patient survival in gastric cancer. npj Precision Oncology 6, 45 (2022).

Baghban, R. et al. Tumor microenvironment complexity and therapeutic implications at a glance. Cell Communication and Signaling 18, 1–19 (2020).

Bilotta, M. T., Antignani, A. & Fitzgerald, D. J. Managing the TME to improve the efficacy of cancer therapy. Frontiers in Immunology 13, 954992 (2022).

Cao, R., Yuan, L., Ma, B., Wang, G. & Tian, Y. Tumour microenvironment (TME) characterization identified prognosis and immunotherapy response in muscle-invasive bladder cancer (MIBC). Cancer Immunology, Immunotherapy 70, 1–18 (2021).

Chen, R. J. et al. Pan-cancer integrative histology-genomic analysis via multimodal deep learning. Cancer Cell 40, 865–878.e6 (2022).

Gao, R. et al. Harnessing TME depicted by histological images to improve cancer prognosis through a deep learning system. Cell Reports Medicine (2024).

Giraldo, N. A. et al. The clinical role of the TME in solid cancer. British Journal of Cancer 120, 45–53, https://doi.org/10.1038/s41416-018-0327-z (2019).

Jin, M.-Z. & Jin, W.-L. The updated landscape of tumor microenvironment and drug repurposing. Signal Transduction and Targeted Therapy 5, 166, https://doi.org/10.1038/s41392-020-00280-x (2020).

Sheng, W., Li, X., Li, J., Mi, Y. & Li, F. Evaluating prognostic value and relevant gene signatures of tumor microenvironment characterization in esophageal carcinoma. Journal of Gastrointestinal Oncology 12, 1228 (2021).

Tiwari, A., Trivedi, R. & Lin, S.-Y. Tumor microenvironment: barrier or opportunity towards effective cancer therapy. Journal of Biomedical Science 29, 83, https://doi.org/10.1186/s12929-022-00866-3 (2022).

Kather, J. N. et al. Predicting survival from colorectal cancer histology slides using deep learning: A retrospective multicenter study. PLoS Medicine 16, e1002730 (2019).

Liang, J. et al. Deep learning supported discovery of biomarkers for clinical prognosis of liver cancer. Nature Machine Intelligence 5, 408–420, https://doi.org/10.1038/s42256-023-00635-3 (2023).

Wang, Y. et al. Improved breast cancer histological grading using deep learning. Annals of Oncology 33, 89–98 (2022).

Rong, R. et al. A Deep Learning Approach for Histology-Based Nucleus Segmentation and Tumor Microenvironment Characterization. Modern Pathology 36, 100196, https://doi.org/10.1016/j.modpat.2023.100196 (2023).

Wang, S. et al. Deep learning of cell spatial organizations identifies clinically relevant insights in tissue images. Nature Communications 14, 7872, https://doi.org/10.1038/s41467-023-43172-8 (2023).

Wang, Y. et al. Transcriptional intra-tumour heterogeneity predicted by deep learning in routine breast histopathology slides provides independent prognostic information. European Journal of Cancer 191, 112953 (2023).

Fremond, S. et al. Interpretable deep learning model to predict the molecular classification of endometrial cancer from haematoxylin and eosin-stained whole-slide images: a combined analysis of the PORTEC randomised trials and clinical cohorts. The Lancet Digital Health 5, e71–e82 (2023).

Zeng, D. et al. Tumor microenvironment characterization in gastric cancer identifies prognostic and immunotherapeutically relevant gene signatures. Cancer Immunology Research 7, 737–750 (2019).

Liu, Y., Li, C., Lu, Y., Liu, C. & Yang, W. Tumor microenvironment-mediated immune tolerance in development and treatment of gastric cancer. Frontiers in Immunology 13, 1016817 (2022).

Lou, S. et al. Gastric Cancer Histopathology Tissue Image Dataset (GCHTID). figshare. Dataset. https://doi.org/10.6084/m9.figshare.25954813.v1 (2024).

Dosovitskiy, A. et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv preprint arXiv:2010.11929 (2020).

Tan, M. & Le, Q. EfficientNet: Rethinking model scaling for convolutional neural networks. In International Conference on Machine Learning 6105–6114 (PMLR, 2019).

Acknowledgements

The study was supported by the National Natural Science Foundation of China (NSFC) (82304250, 82273734), the National Natural Science Foundation of China’s General Program (82072640), the Outstanding Youth Project of Heilongjiang Natural Science Foundation (YQ2021H023), the Collaborative Innovation Projects of “Double First-class” Disciplines in Heilongjiang Province (LJGXCG2023-087), the Climbing Program of Harbin Medical University Cancer Hospital (PDYS-2024-14), the Heilongjiang Provincial Natural Science Foundation of China (LH2023H096), the Postdoctoral Research Project in Heilongjiang Province (LBH-Z22210), and the China Postdoctoral Science Foundation (2023MD744213).

Author information

Contributions

S.L., J.J., L.W., L.C. and P.H. conceived the project; S.L. and J.J. designed the detection model and developed the algorithms; H.L., Y.J., K.C. and P.H. provided and annotated the slide images; J.J., M.H., X.Z., K.G., L.W. and Q.Z. participated in deploying the workstation environment; J.J. and X.Z. contributed to data preprocessing. S.L. and L.C. wrote the manuscript. All authors revised the paper.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Lou, S., Ji, J., Li, H. et al. A large histological images dataset of gastric cancer with tumour microenvironment annotation for AI. Sci Data 12, 138 (2025). https://doi.org/10.1038/s41597-025-04489-9


DOI: https://doi.org/10.1038/s41597-025-04489-9