Abstract

Although prognosis and risk of progression are crucial to developing a precise therapeutic strategy for neovascular age-related macular degeneration (nAMD), few predictive tools are available. We propose a novel deep convolutional neural network that extracts features from integrated image and non-image data to capture essential information and achieve highly accurate outcome prediction. The Heterogeneous Data Fusion Net (HDF-Net) was designed to predict visual acuity (VA) outcome (improvement of ≥ 2 lines or not) at 12 months after anti-VEGF treatment. Pre-treatment optical coherence tomography (OCT) images and non-image demographic features were used as input data, and the corresponding 12-month post-treatment VA as the target, to train, validate, and test the HDF-Net. The HDF-Net achieved an AUC of 0.989 (95% confidence interval [CI] 0.970–0.999), accuracy of 0.936 (95% CI 0.889–0.964), sensitivity of 0.933 (95% CI 0.841–0.974), and specificity of 0.938 (95% CI 0.877–0.969). By simulating the clinical decision process with mixed pre-treatment information from raw OCT images and numeric data, HDF-Net demonstrated promising performance in predicting individualized treatment outcome. The results highlight the potential of deep learning to process a broad range of clinical data simultaneously and to weigh and leverage the complete information of the patient. This approach is an important step toward real-world personalized therapeutic strategies for typical nAMD.

Introduction

Age-related macular degeneration (AMD) is a leading cause of irreversible blindness worldwide, and the number of people affected is expected to reach 288 million by 2040 [1]. It is a debilitating, chronic and progressive disease in which independence and overall quality of life decline in parallel with visual impairment [2]. Over the past several years, anti-vascular endothelial growth factor (anti-VEGF) injection has become the mainstay of treatment for neovascular AMD (nAMD), and has significantly improved visual outcomes and prevented vision loss in most patients [3]. However, high cost and the need for frequent injections place a substantial burden on both patients and physicians [4,5]. In addition, questions persist as to whether intensive anti-VEGF therapy may have negative effects on nonvascular tissues and on the integrity of the retinal pigment epithelium (RPE) and choriocapillaris [6,7,8]. Moreover, each injection poses a small yet significant risk of sight-threatening complications, including endophthalmitis, retinal tear or detachment, and vitreous hemorrhage [9]. This disease burden and these potential risks have prompted the search for a more precise approach that minimizes dosage.

Artificial intelligence (AI) has played an increasingly prominent role in every aspect of ophthalmology in recent years [10,11]. With its potential to imitate the neural structure of the brain, deep learning has risen to the forefront in healthcare. Inspired by the structure of the primary visual cortex, the convolutional neural network (CNN) is a deep learning architecture that can analyze medical images effectively [12]. Besides automated detection of features, AI has been applied successfully to predicting individualized treatment requirements and outcomes. Bogunovic et al. evaluated 317 subjects with OCT images from baseline, month 1, and month 2 to predict anti-VEGF injection requirements [13]. Schmidt-Erfurth et al. introduced a prognostic model using random forest machine learning to predict visual outcomes after 12 months of anti-VEGF injection in the setting of a randomized controlled trial [14]. Rohm et al. proposed a model for visual acuity (VA) prediction based on clinical data from electronic medical records (EMR) and measurement features from OCT [15]. Although prior studies have presented proof-of-principle evidence, they often require longitudinal datasets and serial OCT images to make predictions. Furthermore, previous deep learning models were often built on pre-defined, extracted features associated with nAMD that had been confirmed as clinically relevant in the literature. CNNs have proven very effective for image classification, so medical image analysis was one of their early applications in healthcare. Nonetheless, even in imaging-based medical specialties, clinical data are crucial to guide image interpretation and clinical practice. Multimodal deep learning models that can ingest both image and clinical data therefore have a growing role in healthcare.

The aim of this study is to introduce a novel CNN architecture that automatically and simultaneously processes real-world image and non-image data to predict VA outcome after 12 months of anti-VEGF treatment. Because it is of great interest to predict treatment outcomes for each patient at the very beginning of the therapeutic course, we use only basic patient demographics, the baseline OCT image and baseline best-corrected visual acuity (BCVA). An additional aim is to compare its accuracy with that of traditional CNN algorithms, with a view to facilitating resource management, therapeutic decision making, and patient counseling.

Materials and methods

Data sources and study population

We retrospectively reviewed the medical records of patients with nAMD who received intravitreal injections (IVI) of anti-VEGF drugs, given as three consecutive monthly injections followed by pro re nata (PRN) injections, at the Taipei Veterans General Hospital from November 2013 to July 2018. The inclusion criteria were as follows: (1) age ≥ 55 years; (2) a diagnosis of typical nAMD confirmed with fluorescein angiography (FA), indocyanine green angiography (ICGA), or OCT angiography; (3) no documented IVI of anti-VEGF within 6 months prior to study entry; (4) availability of one-year follow-up data. The exclusion criteria were: (1) other intraocular vascular, inflammatory, infective, or ischemic diseases, such as polypoidal choroidal vasculopathy, uveitis, or retinal vein occlusion; (2) any intraocular operation other than IVI during the 12-month treatment period, including cataract surgery and vitreoretinal surgery. This study followed the tenets of the Declaration of Helsinki and was approved by the Institutional Review Board of the Taipei Veterans General Hospital.

Data and OCT image pre-processing

The pretherapeutic spectral-domain (SD) OCT images were acquired at baseline with the AngioVue Imaging System (RTVue-SD OCT; Optovue Inc, Fremont, CA, USA). The OCT images consisted of the 10-mm horizontal and vertical B-scans from the cross-line report of the macular scan, which were cropped and resized to 620 × 620 pixels centered at the fovea using a region of interest (ROI) cropping technique. The two images of the same eye were allocated to the same dataset.

For patient demographics and clinical data, baseline best-corrected visual acuity (BCVA), gender, and age were extracted from the electronic medical records. SD-OCT scans were preprocessed with motion artifact removal to reduce image artifacts caused by involuntary eye motion during acquisition. OCT images were randomly separated into training, validation and testing datasets, and no patient had images assigned to more than one dataset. Visual outcome was evaluated at baseline and at 12 months after treatment. We defined an “improved case” as a patient whose visual acuity increased by ≥ 2 lines on the Snellen chart at 12 months after treatment. Patients whose visual acuity increased by < 2 lines were defined as “unimproved cases” (Fig. 1A).
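As an illustration of the patient-level allocation described above, the following minimal sketch assigns whole patients, rather than individual images, to the training, validation and testing sets, so that both B-scans of an eye stay together. The function name `split_by_patient`, the record layout, the split fractions and the seed are assumptions made for this example; they are not taken from the study.

```python
import random
from collections import defaultdict


def split_by_patient(image_records, fractions=(0.6, 0.2, 0.2), seed=0):
    """Split (patient_id, image_name) records so no patient spans two sets.

    The fractions are placeholders, not the ratios used in the study.
    """
    images_by_patient = defaultdict(list)
    for patient_id, image_name in image_records:
        images_by_patient[patient_id].append(image_name)

    # Shuffle patients (not images) with a fixed seed for reproducibility.
    patient_ids = sorted(images_by_patient)
    random.Random(seed).shuffle(patient_ids)

    n_train = round(fractions[0] * len(patient_ids))
    n_val = round(fractions[1] * len(patient_ids))
    groups = {
        "train": patient_ids[:n_train],
        "val": patient_ids[n_train:n_train + n_val],
        "test": patient_ids[n_train + n_val:],
    }
    # Expand each group of patients back into its image records.
    return {
        name: [(pid, img) for pid in ids for img in images_by_patient[pid]]
        for name, ids in groups.items()
    }


# Hypothetical records: ten patients, one horizontal and one vertical B-scan each.
records = [
    (f"P{i:03d}", f"P{i:03d}_{axis}.png")
    for i in range(10)
    for axis in ("horizontal", "vertical")
]
splits = split_by_patient(records)
```

Shuffling patient identifiers instead of image files is what prevents images of one patient from leaking between datasets.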

Overview of the AI model

HDF-Net was designed by the Industrial Technology Research Institute of Taiwan as a deep learning model that predicts treatment outcome from baseline clinical data and OCT images (Fig. 1B). The HDF-Net described herein was granted provisional patent 63/091,280 on Oct. 13, 2020.

In contrast to conventional classification approaches, in which extracted features are fed into an algorithm, HDF-Net automatically learns representative complex features directly from the image and numeric data. The overall structure of the HDF-Net is shown in Fig. 2. The baseline OCT image and clinical data form the input to the HDF-Net. The first stage of HDF-Net is the image feature extraction network, which consists of five convolutional layers (Conv1 to Conv5) and three max pooling layers. The output of the final pooling layer, which is the feature map extracted from the OCT image, is flattened into a vector that serves as the input to the next stage. The classification network consists of two fully connected layers (FC6 and FC7) with a dropout probability of 0.5 and a final 1 × 2 softmax layer (FC8, the output layer) that serves as a two-class classifier. An additional input layer is provided for the corresponding numeric data and is concatenated with layer FC6. Layer FC7 then combines the image features extracted in the first stage with the numeric features from the baseline patient data. The rectified linear unit (ReLU), the most commonly used activation function, is applied after each hidden layer. To normalize layer inputs, a batch normalization (BN) layer is added after layer FC6.
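The fusion step described above can be sketched in a few lines of plain Python. This is a toy forward pass under stated assumptions, not the authors' implementation: the layer sizes, the random weights, the helper names (`linear`, `init_layer`, `fusion_head`) and the scaled numeric values are all hypothetical, and batch normalization and dropout are omitted for brevity (dropout is inactive at inference time in any case).

```python
import math
import random


def linear(x, weights, bias):
    """Fully connected layer: one output per weight row."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]


def relu(x):
    return [max(0.0, v) for v in x]


def softmax(x):
    shift = max(x)  # subtract the maximum for numerical stability
    exps = [math.exp(v - shift) for v in x]
    total = sum(exps)
    return [e / total for e in exps]


def init_layer(n_out, n_in, rng):
    """Small random weights and zero biases (illustrative initialization)."""
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def fusion_head(image_features, numeric_features, params):
    """FC6 on image features, concatenate numeric data, then FC7 and FC8."""
    h6 = relu(linear(image_features, *params["fc6"]))
    fused = h6 + list(numeric_features)         # late fusion by concatenation
    h7 = relu(linear(fused, *params["fc7"]))
    return softmax(linear(h7, *params["fc8"]))  # two-class probabilities


rng = random.Random(0)
N_IMAGE, N_FC6, N_NUMERIC, N_FC7 = 16, 8, 3, 8  # toy sizes, not the real ones
params = {
    "fc6": init_layer(N_FC6, N_IMAGE, rng),
    "fc7": init_layer(N_FC7, N_FC6 + N_NUMERIC, rng),
    "fc8": init_layer(2, N_FC7, rng),
}
image_features = [rng.random() for _ in range(N_IMAGE)]  # stand-in for the flattened feature map
numeric_features = [0.32, 1.0, 0.78]                     # hypothetical scaled BCVA, gender, age
probabilities = fusion_head(image_features, numeric_features, params)
```

The point of the sketch is the shape of FC7's input: it is the FC6 output extended by the three numeric values, so the classifier weighs image-derived and numeric features in the same layer.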

The architecture of HDF-Net. The feature extraction network consists of five convolutional layers. The first convolutional layer has 96 kernels of size 11 × 11, shown as "Conv1, 11 × 11 conv, 96"; the second convolutional layer has 256 kernels of size 5 × 5; the third and fourth convolutional layers each have 384 kernels of size 3 × 3; and the fifth convolutional layer has 256 kernels of size 3 × 3.

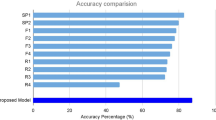
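The kernel counts and sizes in the caption can be used for a quick check of feature-map sizes. The sketch below is only a shape calculation under assumptions: the strides, paddings and pooling positions are borrowed from the standard AlexNet layout because the text does not state them, and a 620 × 620 network input is assumed from the ROI crop size. The names `conv_out`, `LAYERS` and `trace_shapes` are hypothetical.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output side length of a square convolution or pooling window."""
    return (size + 2 * padding - kernel) // stride + 1


# (name, kernel, stride, padding, output channels or None for pooling).
# Kernel sizes and channel counts follow the caption; strides, paddings and
# pooling positions are ASSUMED to match AlexNet and are not from the paper.
LAYERS = [
    ("Conv1", 11, 4, 0, 96),
    ("Pool1", 3, 2, 0, None),
    ("Conv2", 5, 1, 2, 256),
    ("Pool2", 3, 2, 0, None),
    ("Conv3", 3, 1, 1, 384),
    ("Conv4", 3, 1, 1, 384),
    ("Conv5", 3, 1, 1, 256),
    ("Pool5", 3, 2, 0, None),
]


def trace_shapes(input_size, in_channels=1):
    """Return [(layer name, channels, side length)] through the stack."""
    size, channels, shapes = input_size, in_channels, []
    for name, kernel, stride, padding, out_channels in LAYERS:
        size = conv_out(size, kernel, stride, padding)
        if out_channels is not None:  # pooling keeps the channel count
            channels = out_channels
        shapes.append((name, channels, size))
    return shapes


shapes = trace_shapes(620)  # assumed input size (the ROI crop)
_, final_channels, final_size = shapes[-1]
flattened_length = final_channels * final_size * final_size
```

Under these assumptions the final feature map is 256 × 18 × 18, which flattens to a vector of 82,944 values; with the 227 × 227 input of the original AlexNet the same stack gives the familiar 256 × 6 × 6.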

Performance comparison between HDF-Net, ResNet50 and AlexNet

The performance of the three models (HDF-Net, ResNet50 [16] and AlexNet [17]) was evaluated using cross-validation, with the dataset split randomly into 80% for training and 20% for testing. The AlexNet classifier was trained on the baseline OCT image dataset using the Caffe deep learning framework. Transfer learning was applied by initializing the five convolutional layers of AlexNet with weights pre-trained on ImageNet, and the whole AlexNet model was then re-trained by stochastic gradient descent (SGD) with a base learning rate of 0.001 and a batch size of 100. In comparison, we trained HDF-Net on a heterogeneous dataset that included not only baseline OCT images but also the corresponding numeric clinical data (pretherapeutic BCVA, gender, and age). The batch size and base learning rate were the same as those used to train ResNet50 and AlexNet.

Evaluation of model and statistical analysis

To evaluate performance and to assess how the results would generalize to an independent dataset, we used holdout cross-validation. Data points were randomly assigned to the training and testing datasets at a training/validation split ratio of 0.75/0.25. The quantitative performance of the predictive models across all validated predictions is summarized as the area under the receiver operating characteristic (ROC) curve (AUC) and as sensitivity and specificity at an operating point.
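For readers who want to reproduce this kind of summary, the sketch below computes the AUC through its rank interpretation (the probability that a randomly chosen improved case scores higher than a randomly chosen unimproved case) and the sensitivity and specificity at one operating point. The function names, the 0.5 threshold and the example labels and scores are illustrative assumptions, not values from the study.

```python
def roc_auc(labels, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly.

    Ties count as half a correct pair. Requires at least one case per class.
    """
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    correct = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p in positives
        for n in negatives
    )
    return correct / (len(positives) * len(negatives))


def sensitivity_specificity(labels, scores, threshold=0.5):
    """Sensitivity and specificity when scores >= threshold are called positive."""
    tp = sum(1 for y, s in zip(labels, scores) if y == 1 and s >= threshold)
    fn = sum(1 for y, s in zip(labels, scores) if y == 1 and s < threshold)
    tn = sum(1 for y, s in zip(labels, scores) if y == 0 and s < threshold)
    fp = sum(1 for y, s in zip(labels, scores) if y == 0 and s >= threshold)
    return tp / (tp + fn), tn / (tn + fp)


# Hypothetical ground truth (1 = improved) and predicted probabilities.
labels = [1, 1, 0, 0, 1, 0]
scores = [0.9, 0.8, 0.7, 0.2, 0.4, 0.1]
auc = roc_auc(labels, scores)
sensitivity, specificity = sensitivity_specificity(labels, scores)
```

The pairwise form is quadratic in the number of cases, which is acceptable for test sets of a few hundred eyes; a sort-based version would be preferable for much larger sets.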

Ethical statement

The authors are accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved.

Informed consent statement

Patient consent was waived due to the privacy rule, as deemed by the Institutional Review Board.

Results

Patient characteristics

The demographics of all patients/eyes included are summarized in Table 1. Of the 698 evaluable patients, 232 were male (33.24%) and 466 were female (66.76%). The mean (± SD) age was 78.47 (± 9.88) years. A total of 165 patients had at least 2-line VA improvement, and 533 failed to achieve VA improvement of ≥ 2 lines. The mean baseline BCVA of the “improved cases” and “unimproved cases” was 0.32 ± 0.15 and 0.19 ± 0.15, respectively; the mean 12-month BCVA after treatment was 0.62 ± 0.16 and 0.16 ± 0.16, respectively.

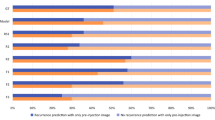

Prediction of visual outcomes

We evaluated the performance of the model in an internal validation set. A total of 1396 image series representing 698 individuals were successfully exported from the image archive, and 75% were used for model training. The HDF-Net predicted the one-year VA outcome with an AUC of 0.989 (95% confidence interval [CI] 0.970–0.999), accuracy of 0.936 (95% CI 0.889–0.964), sensitivity of 0.933 (95% CI 0.841–0.974), and specificity of 0.938 (95% CI 0.877–0.969). ResNet50 achieved an AUC of 0.924 (95% CI 0.870–0.934), accuracy of 0.852 (95% CI 0.841–0.933), sensitivity of 0.795 (95% CI 0.716–0.896), and specificity of 0.895 (95% CI 0.822–0.952); AlexNet achieved an AUC of 0.936 (95% CI 0.894–0.978), accuracy of 0.895 (95% CI 0.841–0.933), sensitivity of 0.824 (95% CI 0.716–0.896), and specificity of 0.942 (95% CI 0.880–0.973). Figure 3 shows the receiver operating characteristic (ROC) curves with the corresponding AUC values and the learning curves of internal validation.
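The text does not state how the 95% confidence intervals were computed. One common choice for a proportion such as sensitivity is the Wilson score interval, sketched below. The function name `wilson_interval` and the counts (56 correct out of 60) are hypothetical; the counts were chosen only because 56/60 rounds to the reported sensitivity of 0.933, and the actual numbers of test cases are not given here.

```python
import math
from statistics import NormalDist


def wilson_interval(successes, total, confidence=0.95):
    """Wilson score interval for a binomial proportion."""
    if total <= 0:
        raise ValueError("total must be positive")
    # Two-sided critical value, about 1.96 for 95% confidence.
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    p = successes / total
    denominator = 1 + z ** 2 / total
    center = (p + z ** 2 / (2 * total)) / denominator
    half_width = (
        z * math.sqrt(p * (1 - p) / total + z ** 2 / (4 * total ** 2)) / denominator
    )
    return center - half_width, center + half_width


# Hypothetical counts: 56 of 60 improved cases predicted correctly.
lower, upper = wilson_interval(56, 60)
```

With these hypothetical counts the interval rounds to 0.841–0.974. Unlike the simple normal approximation, the Wilson interval stays inside [0, 1] even when the proportion is close to 1, which matters for metrics above 0.9.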

Receiver operating characteristic (ROC) curves and learning curves of the HDF-Net, ResNet50 and AlexNet for VA outcome prediction. (A) For the binary classification task of VA improved versus unimproved, the presented HDF-Net (red line) performs as well as or better than the traditional AlexNet (orange line) and ResNet50 (purple line). (B) Loss versus iteration graph of HDF-Net, showing a loss of 0.351 at 16,000 iterations. (C) Accuracy versus iteration graph of HDF-Net, showing a final validation accuracy of 93.6% at 16,000 iterations.

Attention maps generated on OCT images by HDF-Net

After the OCT images were evaluated with the HDF-Net, attention maps were generated and overlaid on the images to represent quantitatively the relative contribution of each image area to the classification decision. The heatmaps showed that the areas contributing most to the visual outcome predicted by HDF-Net are the foveal contour, the ellipsoid zone (EZ), and other pathological features associated with nAMD, in agreement with what clinicians deem relevant to prognosis. Examples of attention maps for correct and incorrect outcome predictions are shown in Fig. 4. This verification suggests that the HDF-Net has a degree of validity and bases its classifications on anatomical integrity and pathological features rather than on unexplained systematic errors. A common classification error was seen on OCT images with subretinal hyperreflective material (SHRM), which may be a collection of neovascular tissue, fibrosis, exudate or hemorrhage.

Representative horizontal SD-OCT scans and corresponding superimposed heatmaps. (A) An OCT image that the HDF-Net correctly predicted as an improved case (85 M; baseline VA: 0.2; 12-month VA: 0.5). (B) The network focused attention on the margin of the subretinal fluid (SRF) and on retinal pigment epithelial mottling. Some attention also corresponded to islands of preserved ellipsoid zone (EZ), reflecting preserved visual potential. (C) An OCT image that the HDF-Net correctly predicted as an unimproved case (94 M; baseline VA: 0.05; 12-month VA: 0.1). (D) The attention is located at the subfoveal disciform scar and the disruption of the EZ. There is also some attention on the chorioretinal atrophy with adjacent loss of outer retinal layers. (E) An OCT image that the HDF-Net predicted to improve, although the patient failed to achieve VA improvement of ≥ 2 lines (96 M; baseline VA: 0.4; 12-month VA: 0.4). (F) The attention is located primarily on the preserved EZ and on the subretinal hyperreflective material (SHRM) with fibrovascular components. A relatively normal foveal contour was also identified. (G) An OCT image that the HDF-Net predicted not to improve, although the patient showed VA improvement of ≥ 2 lines (82 M; baseline VA: 0.4; 12-month VA: 0.6). (H) The attention is located on the SHRM with fibrovascular/hemorrhagic components. There is also some attention on the disruption of the EZ and on the hyperreflective zone of the inner retina.

Discussion

AI is making precision medicine a reality, supported by the digital healthcare revolution, including integrated electronic health records and advances in computational power. Prediction itself is not new to ophthalmology: a variety of risk scores have been investigated to determine individual risk for different ocular diseases in search of a personalized medicine approach. However, it was not until the rise of deep learning algorithms that forecasting precise treatment outcomes became feasible. Our study demonstrates that a deep learning neural network was effective at predicting one-year treatment outcome from baseline OCT images (AlexNet accuracy: 0.895, sensitivity: 0.824, specificity: 0.942, AUC: 0.936), and that accuracy was even higher when clinical data were combined with the images in a novel CNN model (HDF-Net accuracy: 0.936, sensitivity: 0.933, specificity: 0.938, AUC: 0.989).

The prediction of nAMD visual outcome from OCT features has been studied previously by other groups [13,18]. For instance, Schmidt-Erfurth et al. introduced a model to predict visual outcomes in the setting of a randomized controlled trial and reported R² = 0.34 when only the baseline data were considered [14]; Rohm and colleagues developed and validated a model in 456 patients and showed successful VA prediction within an error margin of 8 letters after one year of real-world anti-VEGF therapy [15]. All of these studies were comprehensive in including “pre-defined” OCT measurement data, such as central retinal thickness, subretinal fluid (SRF) and intraretinal fluid (IRF), in the machine learning model. However, some important anatomic aspects were missed because they are difficult to capture with currently available automated segmentation methods. Moreover, conventional machine learning approaches such as random forest require the input to be a feature vector rather than the OCT image itself, which makes feature labelling highly time-consuming and the whole process highly dependent on the feature extraction technique [19].

Prior evidence has established baseline BCVA as an important prognostic factor for final visual outcomes [20]. To date, however, automated analysis of “raw” retinal OCT images in combination with baseline BCVA in a single CNN model has not been explored for predicting treatment outcome in nAMD. To address these limitations, we developed the HDF-Net to predict visual outcome using only the baseline OCT image and three covariates (age, gender, and baseline BCVA). In contrast to traditional machine learning approaches, a CNN model accepts a sample as an image and performs feature extraction and classification through its hidden layers. The challenge lies in how to feed hybrid, heterogeneous image and non-image data into a single CNN architecture. Unlike conventional CNN approaches, which accept only a single type of input data, we used a data fusion approach that enables simultaneous processing of multiple types of data with heterogeneous features extracted from different sources. In this way, the power of the CNN can be applied to image and non-image data at the same time.

The difference between HDF-Net and other published data fusion CNN approaches, such as HDF-CNN, lies in how the numeric data are input. The HDF-CNN uses a matrix format to perform heterogeneous data fusion [21]: all heterogeneous data become a single input instance, and the heterogeneous features are extracted through the CNN. However, some information in the numeric data may be lost after convolution and pooling, and pertinent features among the numeric data extracted by the convolutional layers may turn out to be insignificant. In contrast, the HDF-Net proposed here merges image features and numeric data into a single feature vector after the feature extraction layers. The classification layers of HDF-Net then automatically determine the feature weights among the various input data. In this work, we showed that the HDF-Net approach outperforms models such as ResNet50 and AlexNet at predicting VA outcome in a real-life population. HDF-Net offers several advantages, including automatic feature extraction from non-labeled samples, discovery of hidden structure in sparse and high-dimensional data, and hybridization of non-image data. Because genetic factors are known to be involved in determining nAMD prognosis [22,23], this capacity to integrate non-image data gives it the potential to offer robust decision support.
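The information-loss argument can be made concrete with a deliberately simplified toy example. It is not a reproduction of HDF-CNN or HDF-Net: the one-dimensional max pooling, the helper name `max_pool_1d` and all values are assumptions chosen only to show how pooling over numeric inputs can discard a value, whereas concatenation after feature extraction keeps every value.

```python
def max_pool_1d(values, window=2, stride=2):
    """Non-overlapping 1-D max pooling over a list of numbers."""
    return [
        max(values[i:i + window])
        for i in range(0, len(values) - window + 1, stride)
    ]


# Hypothetical scaled numeric inputs: age, gender, baseline BCVA, zero padding.
numeric = [0.78, 1.0, 0.32, 0.0]

# Early fusion (toy version): numeric values pass through pooling with the
# rest of the input, so only the largest value in each window survives.
pooled_numeric = max_pool_1d(numeric)

# Late fusion (toy version): numeric values are appended unchanged to
# features that were already extracted from the image.
image_features = [0.4, 0.1]  # stand-in for extracted image features
late_fused = image_features + numeric
```

In this toy case the scaled age (0.78) disappears after pooling because the neighbouring value is larger, while the late-fused vector still carries all four numeric entries for the classifier to weigh.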

A strength of this study is that the model was validated in a real-world population that was not restricted to treatment-naive individuals. Previous studies of AI-based prediction of treatment outcome frequently used trial datasets, such as that of the HARBOR study, because these offer standardized imaging data and a well-designed treatment protocol in a large sample. Nonetheless, real-world nAMD studies differ from randomized controlled trials (RCTs) in several respects. An analysis of 49,485 eyes assessing anti-VEGF intensity and VA change found that real-world nAMD patients receive fewer injections and experience worse visual outcomes than patients receiving fixed, frequent therapy in RCTs. Furthermore, older patients and those with poor baseline VA may be particularly prone to undertreatment [24]. This suggests potential bias in the results of studies that validate AI algorithms only in trial settings. Although this study was retrospective, all included patients were approved for anti-VEGF injections reimbursed by the Taiwan National Health Insurance (NHI) after a rigorous cross-check of the clinical diagnosis and OCT images by the Bureau of NHI. This further supports the accuracy of the diagnosis and the standardization of the treatment protocol under the reimbursement scheme.

Another major strength of this study is that it goes beyond identifying “pre-defined OCT features” and generates prediction rules from “raw OCT images”. In a study comparing the performance of retinal specialists with that of an AI algorithm, retinal specialists were found to have imperfect accuracy and low sensitivity in detecting retinal fluid, whereas the AI achieved a higher level of accuracy [25]. This supports our hypothesis that feeding AI only “pre-defined OCT features” could limit the scope of its application, given that AI may be able to outperform human readers. Prior studies have demonstrated favorable accuracy of deep learning approaches in detecting “pre-defined OCT features” such as retinal fluid on OCT scans [25,26], yet quantifying retinal fluid cannot directly guide clinical practice, because controversy remains over SRF tolerance after initiation of treatment [27,28,29]. Treating nAMD with the ultimate goal of completely drying the retina could also increase the risk of macular atrophy and so worsen long-term visual outcomes [8,30].

This study has some limitations, and several factors could be improved to optimize the results. A major limitation of most deep learning models is the “black-box” problem: their predictions can be hard to interpret. In this study, we applied heatmaps to localize the image regions that influenced the classification. Although a heatmap is a useful clue to which part of the image guided the CNN model to its decision, it does not explain the reason behind that decision. By processing both image and non-image data in a single CNN architecture, we found that adding numeric clinical data from baseline might enhance the performance of the model. However, the optimal feature weighting between image and non-image data remains an open question of major interest. Another limitation is the relatively small sample size, which may have limited the reliability of our statistical analysis. However, each participant was comprehensively assessed with horizontal and vertical OCT scans, providing a rich set of reliable data.

The therapeutic response of nAMD varies widely in real-world settings and has been difficult to predict. In this study, we presented and validated a novel deep learning-based approach that uses baseline OCT and clinical information, including baseline BCVA, to predict the 12-month visual outcome after standard anti-VEGF therapy for active nAMD. The combination of heterogeneous clinical and image data in HDF-Net has the potential to serve as a solid decision support tool for clinicians delivering evidence-based personalized treatment. This approach is a step toward AI-guided treatment decisions and patient expectations. Future studies are warranted to evaluate both the economic impact of outcome predictions and patient perceptions of them.

Data availability

The data presented in this study are available on request from the corresponding author. The data are not publicly available owing to the patient privacy requirements of the IRB.

References

1. Wong, W. L. et al. Global prevalence of age-related macular degeneration and disease burden projection for 2020 and 2040: A systematic review and meta-analysis. Lancet Glob. Health 2, e106–e116 (2014).

2. Schippert, A. C., Jelin, E., Moe, M. C., Heiberg, T. & Grov, E. K. The impact of age-related macular degeneration on quality of life and its association with demographic data: Results from the NEI VFQ-25 questionnaire in a Norwegian population. Gerontol. Geriatric Med. 4, 2333721418801601 (2018).

3. Schmidt-Erfurth, U. et al. Guidelines for the management of neovascular age-related macular degeneration by the European Society of Retina Specialists (EURETINA). Br. J. Ophthalmol. 98, 1144–1167 (2014).

4. Jaffe, D. H., Chan, W., Bezlyak, V. & Skelly, A. The economic and humanistic burden of patients in receipt of current available therapies for nAMD. J. Comp. Eff. Res. 7, 1125–1132 (2018).

5. Wykoff, C. C. et al. Optimizing anti-VEGF treatment outcomes for patients with neovascular age-related macular degeneration. JMCP 24, S3–S15 (2018).

6. Gemenetzi, M., Lotery, A. J. & Patel, P. J. Risk of geographic atrophy in age-related macular degeneration patients treated with intravitreal anti-VEGF agents. Eye 31, 1–9 (2017).

7. Ford, K. M., Saint-Geniez, M., Walshe, T., Zahr, A. & D’Amore, P. A. Expression and role of VEGF in the adult retinal pigment epithelium. Invest. Ophthalmol. Vis. Sci. 52, 9478–9487 (2011).

8. Abdelfattah, N. S., Zhang, H., Boyer, D. S. & Sadda, S. R. Progression of macular atrophy in patients with neovascular age-related macular degeneration undergoing antivascular endothelial growth factor therapy. Retina 36, 1843–1850 (2016).

9. Day, S. et al. Ocular complications after anti-vascular endothelial growth factor therapy in Medicare patients with age-related macular degeneration. Am. J. Ophthalmol. 152, 266–272 (2011).

10. Yang, J. et al. Artificial intelligence in ophthalmopathy and ultra-wide field image: A survey. Exp. Syst. Appl. 182, 115068 (2021).

11. Li, T. et al. Applications of deep learning in fundus images: A review. Med. Image Anal. 69, 101971 (2021).

12. Shen, D., Wu, G. & Suk, H.-I. Deep learning in medical image analysis. Annu. Rev. Biomed. Eng. 19, 221–248 (2017).

13. Bogunovic, H. et al. Prediction of anti-VEGF treatment requirements in neovascular AMD using a machine learning approach. Invest. Ophthalmol. Vis. Sci. 58, 3240–3248 (2017).

14. Schmidt-Erfurth, U. et al. Machine learning to analyze the prognostic value of current imaging biomarkers in neovascular age-related macular degeneration. Ophthalmol. Retina 2, 24–30 (2018).

15. Rohm, M. et al. Predicting visual acuity by using machine learning in patients treated for neovascular age-related macular degeneration. Ophthalmology 125, 1028–1036 (2018).

16. He, K., Zhang, X., Ren, S. & Sun, J. Deep Residual Learning for Image Recognition. https://arxiv.org/abs/1512.03385 [cs] (2015).

17. Krizhevsky, A., Sutskever, I. & Hinton, G. E. ImageNet Classification with Deep Convolutional Neural Networks. in Advances in Neural Information Processing Systems vol. 25 (Curran Associates, Inc., 2012).

18. Liu, Y. et al. Prediction of OCT images of short-term response to anti-VEGF treatment for neovascular age-related macular degeneration using generative adversarial network. Br. J. Ophthalmol. https://doi.org/10.1136/bjophthalmol-2019-315338 (2020).

19. Boulesteix, A.-L., Janitza, S., Kruppa, J. & König, I. R. Overview of random forest methodology and practical guidance with emphasis on computational biology and bioinformatics. WIREs Data Min. Knowl. Discov. 2, 493–507 (2012).

20. Schmidt-Erfurth, U. & Waldstein, S. M. A paradigm shift in imaging biomarkers in neovascular age-related macular degeneration. Prog. Retin. Eye Res. 50, 1–24 (2016).

21. Lv, F. et al. Detecting fraudulent bank account based on convolutional neural network with heterogeneous data. Math. Probl. Eng. 2019, 1–11 (2019).

22. DeAngelis, M. M. et al. Genetics of age-related macular degeneration (AMD). Hum. Mol. Genet. 26, R45–R50 (2017).

23. Gorin, M. B. & daSilva, M. J. Predictive genetics for AMD: Hype and hopes for genetics-based strategies for treatment and prevention. Exp. Eye Res. 191, 107894 (2020).

24. Ciulla, T. A., Hussain, R. M., Pollack, J. S. & Williams, D. F. Visual acuity outcomes and anti-vascular endothelial growth factor therapy intensity in neovascular age-related macular degeneration patients: A real-world analysis of 49 485 eyes. Ophthalmol. Retina 4, 19–30 (2020).

25. Keenan, T. D. L. et al. Retinal specialist versus artificial intelligence detection of retinal fluid from OCT. Ophthalmology https://doi.org/10.1016/j.ophtha.2020.06.038 (2020).

26. Schlegl, T. et al. Fully automated detection and quantification of macular fluid in OCT using deep learning. Ophthalmology 125, 549–558 (2018).

27. Guymer, R. H. et al. Tolerating subretinal fluid in neovascular age-related macular degeneration treated with Ranibizumab using a treat-and-extend regimen: FLUID study 24-month results. Ophthalmology 126, 723–734 (2019).

28. Jaffe, G. J. et al. Macular morphology and visual acuity in the comparison of age-related macular degeneration treatments trials. Ophthalmology 120, 1860–1870 (2013).

29. Schmidt-Erfurth, U., Waldstein, S. M., Deak, G.-G., Kundi, M. & Simader, C. Pigment epithelial detachment followed by retinal cystoid degeneration leads to vision loss in treatment of neovascular age-related macular degeneration. Ophthalmology 122, 822–832 (2015).

30. Grunwald, J. E. et al. Growth of geographic atrophy in the comparison of age-related macular degeneration treatments trials. Ophthalmology 122, 809–816 (2015).

Author information

Contributions

(I) Conception and design: T.-C.Y., L.A.-C., D.Y.-S., L.Y.-H., S.-J.C., C.P.-H., L.C.-J., M.-C.T., Y.-B.C. (II) Administrative support: C.P.-H., L.C.-J., M.-C.T. (III) Provision of study materials or patients: T.-C.Y., S.-J.C., Y.-B.C. (IV) Collection and assembly of data: T.-C.Y., L.A.-C., D.Y.-S., Y.-B.C. (V) Data analysis and interpretation: T.-C.Y., L.A.-C., D.Y.-S. (VI) Manuscript writing: All authors. (VII) Final approval of manuscript: All authors.

Ethics declarations

Competing interests

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Yeh, TC., Luo, AC., Deng, YS. et al. Prediction of treatment outcome in neovascular age-related macular degeneration using a novel convolutional neural network. Sci Rep 12, 5871 (2022). https://doi.org/10.1038/s41598-022-09642-7
