Abstract

A main function of network intrusion detection systems (NIDSs) is to monitor network traffic and match it against rules. Oblivious NIDSs (O-NIDS) perform the same tasks of NIDSs but they use encrypted rules and produce encrypted results without being able to decrypt the rules or the results. Current implementations of O-NIDS suffer from slow searching speeds and/or lack of generality. In this paper, we present a generic approach to implement a privacy-preserving O-NIDS based on hybrid binary gates. We also present two resource-flexible algorithm bundles built upon the hybrid binary gates to perform the NIDS’s essential tasks of direct matching and range matching as a proof of concept. Our approach utilizes a Homomorphic Encryption (HE) layer in an abstract fashion, which makes it implementable by many HE schemes compared to the state-of-the-art where the underlying HE scheme is a core part of the approach. This feature allowed the use of already-existing HE libraries that utilize parallelization techniques in GPUs for faster performance. We achieved a rule encryption time as low as 0.012% of the state of the art with only 0.047% of its encrypted rule size. Also, we achieved a rule-matching speed that is almost 20,000 times faster than the state of the art.

Similar content being viewed by others

Introduction

Network intrusion detection system (NIDS) is a critical component of an enterprise targeted at protecting the internal network and information systems from malicious intruders 1. Typically, a NIDS is composed of three components: a network sensor, an analysis server, and a management console. The database of all the known threats is represented as a list of rules or a ruleset. The analysis server of NIDS will scan the network traffic in/out of the network sensor while applying conditions stipulated in the ruleset to find any packet that has a high chance of being part of a malicious activity, raising an alert in the management console. In this research, we aim to design and build an efficient and scalable Oblivious NIDS (O-NIDS), where the ruleset is created and encrypted by trusted personnel, while the network sensor and analysis server operate at a remote, insecure environment hosted and administrated by an adversary who is untrusted, honest-but-curious observer (HCB). The analysis server operates on the encrypted ruleset to generate encrypted alerts, which are communicated back in a secure way to the trusted personnel on a trusted management console. Such technology allows providing NIDS as a cloud service and enables cybersecurity protection at remote hostile locations without revealing the secret ruleset which can be classified according to some government regulations or the trade secret of cybersecurity research companies. In these applications context, the main idea is to separate between the rules creator and evaluator. The rules creator is a trusted authority, e.g. governmental agency, which creates and obfuscates the rules, while the rules evaluator is a remote party, e.g. a cloud service, responsible for performing the matching process between the obfuscated rules and the plaintext data under inspection without being able to understand the matching results. This is different from the currently used cloud IDS technologies like Google Cloud IDS2 or Azure Cloud firewall3, where the matching/detection results exist in plain and a role-based access model is used to prevent unauthorized access to these results. In our proposed model, the results themselves are encrypted on the data level so the rules-evaluator side has essentially no capability to decrypt or understand the results.

To achieve the principle idea of keeping the rules and the results incomprehensible to the rules-evaluator, Homomorphic Encryption (HE) schemes can be utilized. HE is a cryptographic technology that allows performing computations on encrypted data without accessing the secret key 4 and, consequently, without being able to decrypt the encrypted input or output data of such computations. While the available HE technology can provide a working prototype for the core problem, there is no efficient or scalable solution that can support a modern NIDS design with thousands of rules against multiple Gbits/s of network traffic.

Main contributions

This journal manuscript is an extended version of a WIP paper in HOST2022, entitled: Mahmoud Abdelhafeez Sayed and Mostafa Taha. Oblivious Intrusion Detection System. In 2022 IEEE International Symposium on Hardware Oriented Security and Trust (HOST). IEEE, 2022. In this paper, we present a privacy-preserving NIDS by implementing hybrid matching operations on the very fundamental binary level. Developing the matching at a bit level allows natural extension to any binary circuit or Finite State Machine (FSM) and eventually to a complete oblivious NIDS. Moreover, we propose two algorithms built on top of the proposed hybrid operations that implement two fundamental NIDS tasks; direct matching and range matching. The proposed algorithms introduce wide resource-performance trade-offs to fit with the resources and performance requirements of different environments. In addition, the proposed hybrid gates approach is library-independent, i.e., they can be implemented with any homomorphic encryption library that supports binary or radix-2 operations. To demonstrate the advantage of library independence, we propose an implementation that utilizes a GPU-accelerated HE library to enhance the performance. The proposed solutions are tested using TFHE5, Microsoft SEAL6, and PALISADE7 on CPU and cuFHE8 on GPU. In a nutshell, compared to the state of the art, the proposed solution provides:

-

1.

HE library independency.

-

2.

Higher Security Level.

-

3.

Faster rule encryption time.

-

4.

Smaller encrypted rule size.

-

5.

Lower memory requirements in most cases.

-

6.

Faster matching time.

-

7.

Flexible time \(\times\) memory trade.

-

8.

Faster decryption time

-

9.

Modular structure that allows partial modifications and updates to the rules and also allows combining results of different modules/algorithms in a straightforward way.

These features will be discussed in detail in section “Discussion”.

Organization

The rest of this paper is organized as follows. Section “System model and requirements” shows the proposed system model along with the system requirements. Section “Related work” summarizes the related work. Section “HE hybrid plain-cipher binary gates” describes the idea and implementation of hybrid gates. Section “Hybrid rules matching algorithms” demonstrates the utilization of hybrid gates in implementing two sample oblivious NIDS fundamental machines; The Hybrid Direct Matching (HDM) and Hybrid Range Matching (HRM) machines with different versions. Section “Security analysis” discusses the adversary model and its goals along with the security propositions of the proposed system and their fulfillment proofs. Section “Performance acceleration with parallel processing” demonstrates a proposed parallel model implementation of HDM machine on GPU. The experimental setup is presented in section “Experimental setup and benchmarking results” along with performance measurements for the different versions of the hybrid machines implemented with different libraries on different platforms. The section also compares our approaches with the state of the art, illustrating the strength points of each and where each one is most suitable. A discussion of the proposed system capabilities and limitations is presented in section “Discussion”. Finally, the paper is concluded in section “Conclusion”.

System model and requirements

In this section, we present an overview of the oblivious NIDS model with a hybrid matching layer and its essential requirements.

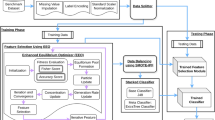

To achieve the idea of encrypted NIDS rules and results, we use the system overview depicted in Fig. 1. There are two involved parties; namely, the Trusted Client which represents the rules creator and the Cloud Side which represents the rules evaluator. The rules are created in plain at the Trusted Client and then encrypted using a private key. The encrypted rules are sent, over a public unsecured channel, to the Cloud Side for matching against the plaintext network traffic. The matching process results in an encrypted result, which is sent back to the Trusted Client, over a public unsecured channel, for decryption and taking necessary actions. Hence, the core research problem of this solution is to match plaintext network traffic against encrypted rules to generate encrypted matching results. For this model, the fundamental requirements are:

- MR1:

-

Data Access (A) The rules and the matching results are comprehensible only to the Trusted Client and shall be encrypted and incomprehensible to the Cloud Side. (B) Trusted Client has no access to the plain network stream There are two parties participating in the process; the Trusted Client and the Cloud Side. The Trusted Client creates and encrypts the rules and has no access to the plaintext data on the Cloud Side. On the other hand, the Cloud Side has access to the plain network stream and matches it against the encrypted rules producing encrypted results that can be decrypted only by the Trusted Client.

- MR2:

-

No Intermediate Communications Once the encrypted rules are transmitted from the Trusted Client to the Cloud Side, no further communications shall be required except for sending back the results. Any intermediate communications and bandwidth consumption would degrade the system's practicability or even render the system completely useless, as sending the plain network stream itself to the Trusted Client may require less traffic in this case.

- MR3:

-

Small Result The size of the encrypted result sent back to the Trusted Client shall be smaller than the plain network stream size. The encrypted matching result should be as small as possible, at least smaller than the plaintext size, for the same reasons as in MR2.

- MR4:

-

Reusability An encrypted rule shall be reusable. Reusing a rule against different plain network stream blocks must not leak any information about the rule, the matching results, or the relationship between the rules to the Cloud Side.

- MR5:

-

Modularity The scheme shall provide a computationally efficient method to combine different independent and reusable sub-rules into complex rules. Since each rule is typically composed of many small conditions, denoted here as sub-rules, e.g. source and destination IP and port numbers, protocol, and conditions on the payload, the scheme shall provide a computationally efficient method for the cloud side to reuse the matching result of each condition/sub-rule across many rules. I.e., matching against a specific source IP address shall happen once in the system, regardless of how many rules are using this condition. In other words, the matching machines shall be designed as independent and reusable modules. This provides the flexibility required by modern NIDSs to implement complex rules.

Related work

One important field of research that is in close proximity to our research problem is Secure Multiparty Computations (MPC/SMPC)9. In SMPC environment, there are multiple parties who want to jointly calculate a shared function over their secret inputs without revealing their inputs to each other. After performing an SMPC protocol, only the result is revealed to all participating parties. However, none of the currently used SMPC protocols satisfy our model requirements stated in section “System model and requirements” as shown next.

Yao’s garbled circuit technique (GC)10,11,12 and its improvements13,14,15 are a generic SMPC protocol that is designed to evaluate any logic function between multiple parties. This protocol adopts an encrypted look-up tables approach to represent logic gates. One party, called the garbler, is responsible for creating and encrypting the circuit by creating suitable tags for all wires and providing encrypted look-up tables for the circuit gates according to these tags. The garbler then sends the encrypted look-up tables, without the wire tags, and its input tag to the other party; the evaluator. The evaluator receives the encrypted circuit and asks the garbler for their input tag using an Oblivious Transfer (OT)16,17 protocol so that the garbler does not know what the evaluator’s input is. Then, the evaluator evaluates the circuit gate by gate, without being able to comprehend any intermediate result, till they get the final result, which is shared with the garbler. In the problem of O-NIDS the look-up tables defy MR4 (Reusability) since the output space depends on the size of the look-up table of the output gate. An adversary may try different input combinations on the same circuit to construct a relationship between the inputs and outputs of the circuit, which compromises the rule secrecy. One other problem when trying to use this approach with our model is that it does not provide MR5 (Modularity). The evaluator has no way to combine the results of the sub-rules matching machines.

Goldreich, Micali, and Wigderson provided another famous generic SMPC protocol (GMW protocol)18,19 for evaluating logic functions securely between multiple parties. The core idea of GMW protocol is that each of the participating parties has an additive share of the value of every wire in the circuit under execution. At any time, when the parties decide to reveal the plain value of any wire, usually the output wire, they simply broadcast their secret shares of this wire and add them. In GMW protocol, evaluating a logic gate means calculating an additive secret share of the output wire for each of the participating parties. For XOR and NOT gates, the parties can evaluate the gates and calculate their output’s secret share locally without communicating with other parties. However, in the case of AND, OR, NAND, or NOR gates, the parties must communicate and apply Oblivious Transfer to evaluate the gate. In the problem of O-NIDS, these communications contradict MR2 (No Intermediate Communications), as the Trusted Client and the Cloud Side would have to communicate for all gates except XOR and NOT gates, which nullifies the usefulness of the system. A similar SMPC protocol for arithmetic circuits was developed by Ben-Or, Goldwasser, and Wigderson (BGW protocol)20. The key difference here is that the BGW protocol utilizes the concept of Shamir’s secret shares21 instead of additive shares. Similar to GMW, the secret shares of the addition gates outputs can be calculated locally by the participating parties. However, communication using Oblivious Transfer is required for evaluating multiplication gates which fails MR2. In addition, since both GMW and BGW use deterministic encryption schemes, reusing the circuit, i.e. rule, with different plain network stream inputs would allow the operator to gain information about the relationship between the circuit inputs and outputs, which essentially compromises the rule’s secrecy and fails MR4.

Kolesnikov22,23 presented a Gate Evaluation Secret Sharing (GESS) scheme which can be thought of as the information-theoretic analog of Yao’s garbled circuit. GESS allows two parties to secretly evaluate a Boolean Formula. For a boolean gate G, the output wire is assigned two secret values to represent the two possible outcomes. Each wire of the two input wires is then given two labels (total of 4 labels) such that the valid combination between any two labels from the two input wires can be used to reconstruct the label of the output wire. Thus, GESS exhibits similarities to Yao’s GC but distinguishes itself by eliminating the need for garbled tables. This characteristic positions GESS as a generalization of Yao’s GC. The problem with GESS is that its label size increases with circuit depth. Also, like Yao’s GC, it contradicts MR4 due to the usage of deterministic encryption schemes. It also doesn’t satisfy MR5 (Modularity), as there is no way to combine the results of the sub-rule matching machines. It should be noted here that both Yao’s GC and GESS require Oblivious Transfer at the input gate to allow the evaluator to get its input label secretly from the circuit creator. In our application, this Oblivious Transfer can be eliminated since the evaluator’s input, i.e. the plain network stream at the Cloud Side, is not a secret so no need for Oblivious Transfer, and the creator can send all possible input labels for the evaluator along with the circuit/rule.

The protocols of Private Set Intersection (PSI)24,25 represent important custom SMPC protocols that share a close idea to our model. PSI allows the participating parties to evaluate the intersection between their secret datasets without revealing anything else. In the O-NIDS problem, PSI does not satisfy MR1 (Result secrecy) because they were designed to reveal the intersection to all parties. PSI also contradicts MR3 (Small Result) and MR5 (Modularity), since it is conceptually designed to find and send an answer for all matched elements (intersection) so there is no clear way to aggregate the sub-rule matching results into single final result.

Another possible solution to the O-NIDS problem that could be considered is to use program obfuscation. Program obfuscation is an already-existing research problem that mainly targets protecting trade secrets and intellectual property and obstructing the attempts to reverse engineering privately owned software artifacts. Program obfuscation exists for different evasive functions and has numerous schemes, as in26,27,28 for obfuscating Boolean conjunctions,29 for obfuscating simple pattern matching, and30 for obfuscating Deterministic Finite Automatas (DFAs). The target of program obfuscation is to protect the evaluating circuit, but the default is to have the result readily available in plaintext at the evaluating system. This violates MR1 in the O-NIDS application, where the result needs to be kept encrypted and can only be evaluated by a remote trusted entity with access to the decryption key.

There are some preliminary attempts proposed in the literature for implementing a privacy-preserving NIDS but they did not reach practical performance metrics. Niksefat et al.31 used SMPC to securely evaluate an Oblivious Deterministic Finite Automata (ODFA), where a server (i.e. Trusted Client) creates the ODFA and a client (i.e. Cloud Side) evaluates it on plain network stream. However, this model protects only the OFDA but not the matching result which contradicts MR1. A survey of privacy issues in NIDS 32 studied over 27 IDS designs with some privacy properties and concluded that privacy-preserving signature-based NIDS is still an open problem that requires some advances in HE libraries. Sgaglione et al. 33 introduced a complete IDS capable of protecting the signature rules using homomorphic encryption, but they proposed to encrypt the plain network stream before comparison against the encrypted rules. The proposed solution required around 12 seconds to process a plain network stream of only 8 bytes.

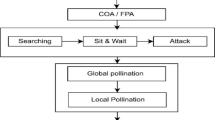

Genise, Gentry, Halevi, Li, and Miccianciot [GGHLM]34 presented a promising modified somewhat homomorphic encryption scheme that enabled executing encrypted non-deterministic finite automata (NFA) over plaintext, which can be used into a privacy-preserving NIDS. Their application takes a regular expression (or regex) as input and then creates an NFA that implements this regex by assigning every letter in the regex alphabet an encrypted transition matrix with predefined dimensions according to the used parameters and definining the NFA’s state as an encrypted vector of a relevant length. The Cloud Side has to pick the corresponding encrypted transition matrix for each input letter in the plain network stream, do matrix \(\times\) vector multiplication process, and send back the final state vector to the Trusted Side for verification. While this approach presents a high cloud searching speed, the length of the network traffic is limited and must be pre-defined before deployment. Also, the rule encryption time is very long preventing this solution from being used in adaptive NIDSs35,36,37. Additionally, the solution is not modular (MR5) and there is no way to combine results of sub-rules matching machines which is a typical feature of modern NIDSs. Other performance metrics, advantages, and drawbacks of this scheme are discussed in section “Experimental setup and benchmarking results”.

It should be noted that the secrecy principle of our proposed solution lies in hiding the data, i.e. the signature or the rule for which the NIDS is searching, not the evaluation circuit. This is different from the GGHLM approach that aims to hide the circuit and the data it processes.A summary of the compatibility of the major schemes from the literature with our model requirements is shown in Table 1.

HE hybrid plain-cipher binary gates

The term “hybrid-gate” is introduced here to refer to any binary gate that performs a logical operation between plaintext bit(s) and ciphertext bit(s) and produces an encrypted output. Hybrid gates are not supported in any HE library that targets binary encoding like TFHE 5 and FHEW 38 because, at first glance, the output will be Transparent. For example, if a plaintext input to an AND gate is 0 the output must be the encryption of 0 regardless of the value of the encrypted input. The ciphertext output would be transparent to the adversary if any non-linear gate (AND, NAND, OR, NOR) is used. Here we introduce a secure multiplexer implementation for both linear and non-linear hybrid gates as presented in the following subsection.

Hybrid logic gates

XOR and XNOR gates are linear gates, i.e. if the plaintext input is fixed to 0 or 1, the output would be the same as the encrypted input or its complement, respectively. This can be used in constructing a multiplexer implementation of a hybrid XOR gate. The multiplexer is controlled by 1-bit of plaintext (\(I_p\)), while the multiplexer inputs are the 1 homomorphically-encrypted bit (\(I_c\)) and its complement (\(\overline{I_c}\)). The output of the gate, \(Q_{XOR}\), represents the encrypted result. The input arrangement follows from the truth table, Table 2, and the proposed gate is illustrated in Fig. 2a and can be expressed as in (1). Since the value of the encrypted input is unknown with an equal probability of being 0 or 1, the value of the output will be equally unknown with the same probabilities. This means that the adversary cannot deduce any information about the output by controlling the value of one input and the output won’t be transparent. Hybrid XNOR gates can be implemented similarly as shown in Fig. 2b.

The multiplexer implementation for the linear gates can also be extended to implement non-transparent non-linear gates as shown in Fig. 2. We assume that the Cloud Side is not provided with any known data, i.e., it cannot distinguish between the data pieces provided by the Trusted Client. Also, we assume that \(\overline{I_c}\) is not computed by the cloud, so that \(I_c\), \(\overline{I_c}\), the encryption of 0 (Enc(0)), and the encryption of 1 (Enc(1)) are all oblivious inputs. The multiplexer inputs for the implementation of the hybrid gates are summarized in Table 3 which can be used to provide similar expressions like (1).

Hybrid generic multi-inputs logic gates

The hybrid multiplexer concept can be extended to implement hybrid gates with more than 2 inputs, multi-input compound gates, and logic functions that have hybrid inputs. In general, a hybrid logic function with n plain input bits (\(I_{p_i}\)) and m HE ciphertext bits(\(I_{c_j}\)) will be in the form of \(f(I_{p_0},I_{p_1},\ldots ,I_{p_{n-1}},I_{c_0},I_{c_1},\ldots ,I_{c_{m-1}})\), with \(2^n\) combinations for plaintext bits. For any plaintext bits combination k, the logic function can be reduced to a function \(f_k(I_{c_0},I_{c_1},\ldots I_{c_{m-1}})\) with pure HE inputs only. This suggests the multiplexer implementation shown in Fig. 2g for the function f, where the multiplexer has n selectors, representing the plaintext inputs, and \(2^n\) inputs where each input \(I_k\) corresponds to a function \(f_k\) of pure HE arguments. The functions \(f_k\) can be realized with pure HE gates available in the HE library.

Hybrid rules matching algorithms

In this section, we present two application algorithms that utilize the hybrid gates:

-

1.

Hybrid Direct Matching (HDM): Matching of plaintext stream against a homomorphically encrypted ciphertext rule. The algorithm describes how an oblivious NIDS at the Cloud Side can use the hybrid gates to search within the payload for an exact match against an encrypted signature rule.

-

2.

Hybrid Range Matching (HRM): Matching of a plaintext number against homomorphically encrypted range rule e.g. greater, less, within, and/or equal encrypted rule number(s). The algorithm shows how an oblivious NIDS at the Cloud Side can utilize the hybrid gates to implement range comparison rules, e.g. IP or port ranges, between an encrypted range rule and packet headers within the network stream.

Each of the two presented algorithms contains multiple variants that represent the flexible trade-off between speed and memory utilization. The resource requirements of these algorithms in terms of computation and communication complexity are also presented in this section and is quantified in section “Experimental setup and benchmarking results”. We also describe how to use these algorithms to implement a full practical rule and how to estimate its resource requirements. Finally, we present an approach to extend the application of the hybrid gates to generic hybrid logic circuits and hybrid Finite State Machines to implement regular expressions.

The proposed algorithms are comprised essentially of three parts:

-

EncRules: This code runs on the Trusted-Client side to perform the following tasks:

-

Set the parameters of the underlying HE library by the trusted personnel.

-

Set the signature rules by the trusted personnel and encrypt them using the HE library to generate the encrypted rules.

-

Send the encrypted rules to the Oblivious-NIDS.

-

-

Search/Compare: This code runs on the Cloud-Side to search through and/or compare the traffic against the encrypted rules to generate encrypted result bits. Here, all the hybrid gates of section “HE hybrid plain-cipher binary gates” can be used to perform direct matching, ranges, and wildcards as required by modern NIDSs.

-

DecResult: This code runs on the Trusted-Client side to decrypt the result packet and generate an alert if necessary.

The first and last parts that include encrypting the rules and decrypting the result are quite similar for both algorithms; however, this is not the case with the second part as it depends on the required matching task. In all of the proposed algorithms, the O-NIDS does not need, nor it is able, to decrypt the rule, encrypt the plaintext stream, or understand the result.

For the algorithms demonstrated in this paper, it should be noted that SK and CK indicate Secret Key and Cloud Key, respectively. Also, a gate x denoted by \(HHE _x\) means the hybrid gate x, which can be implemented in a way similar to (1) and Table 3. A gate y denoted by \(HE _y\) is the homomorphic gate y, and its implementation depends on the homomorphic encryption library used. While the proposed implementations can be modified to accommodate when the plaintext word size is different from the encrypted rule word size, it is assumed here that they have the same size to reduce implementation-related complexity and give more focus on the concept.

Hybrid direct matching (HDM) algorithms

The main aim of this algorithm is to search for an exact match of an encrypted word in the payload of the network stream. For this task, two variations that trade off speed for memory are presented.

Hybrid direct matching V1 (HDMv1)

In this variation, only the rule word is encrypted and sent to the cloud, which does all the required processing by utilizing the hybrid and fully homomorphic gates to match the encrypted word against all plain stream words, producing one encrypted sub-result bit for each plain word, and then aggregating the sub-result bits into a final result bit using fully homomorphic OR gates.

Hybrid direct matching V2 (HDMv2)

In this variation, the rule word itself is not encrypted; however, all the possible \(2^{|word|}\) sub-result bits associated with matching the rule word against all possible \(2^{|word|}\) plain words are encrypted and sent to the cloud. Hence, the Cloud Side does not have to calculate the sub-result bits for each plain word as it just needs to select them from the available sub-result bits array, and then aggregate the sub-result bits into a final result bit similar to HDMv1. Although this table lookup of the appropriate answer can work with any method that employs probabilistic encryption, only HE can support further processing on the sub-result bits to calculate a required final result and/or to aggregate them with results from other rules or algorithms. With this design, HDMv2 should have faster processing speed in the cloud at the cost of higher memory usage compared to HDMv1.

Implementation of HDM

Algorithm 1 describes the rule encryption at the Trusted Client side for the HDM. Depending on the HDM variation, either the rule word or the possible answers are encrypted. As an example use case, the rule can be assigned to be the 8-bit ASCII representation of the letter “A”. The HDMv1 will generate 8 HE-encrypted bits, each representing the encryption of 1 bit from the rule. However, HDMv2 will generate an array of \(2^8\) HE-encrypted bits with the Enc(0) everywhere except at the index of the ASCII representation of “A” which gets the Enc(1). Algorithm 2 shows the hybrid searching process on the Cloud Side. In the case of HDMv1, the Cloud Side has to process each word from the plaintext stream against the encrypted rule word. As illustrated in algorithm 3, This is done by using the bit-by-bit hybrid XNOR between each bit in the plain stream word and the encrypted rule word and doing fully homomorphic AND between the XNOR result bits. On the other hand, HDMv2 skips the previous step as it has all the possible answers already pre-calculated so the Cloud Side has only to select the result bit for each plaintext stream word as demonstrated in step 6 of Algorithm 2. Following the previous use case, the Corpus is a sequence of plaintext network stream of 8-bit characters. \(|\text {Corpus}|\) is the number of characters in the network stream that will be matched against the rule. The result of matching individual characters is reported in ResultCorpus, and the overall result bit is reported in EncResult. Regardless of the used variation, the overall result bit is calculated by aggregating the result bits of the hybrid words matching using fully homomorphic OR stage.

HDMv2 as generalized hybrid gate—It should be noted here that the proposed implementation of HDMv2 resembles a generalized hybrid gate as described in section “Hybrid generic multi-inputs logic gates” and represented in Fig. 2g. HDMv2 utilizes a 256 \(\times\) 1 hybrid multiplexer with an 8-bit selector to implement the per-character matching circuit portion of HDMv1 (realized in software by the function IsEqualWord in Algorithm 3. The hybrid multiplexer inputs are the \(2^8\) encrypted answer bits, while the 8-bit selector is the plaintext word that is being matched against the rule. This hybrid multiplexer with a fan-in of 256 is realized using a look-up table, where the table has 256 entries that resemble the multiplexer inputs, and an entry’s index represents the 8-bit value fed to the multiplexer selector lines. The circuit realization of IsEqualWord in HDMv1 consists of eight 2-bit hybrid XNOR gates + seven fully homomorphic AND gates, and the whole circuit is realized in HDMv2 by the 256\(\times\)1 hybrid multiplexer with 8-bit selector at the expense of greater rule size.

Hybrid range matching (HRM) algorithms

The main aim of these algorithms is to perform Greater than (G), Less than (L), Range (R), and Equal (E) operations on a plaintext number against an encrypted rule described by a single confidential encrypted number in case of operations G, L, and E while using two confidential encrypted numbers in case of the R operation. In this context, the plaintext number can represent the port number in a packet header, while the encrypted number can represent the rule monitored by the NIDS. For this task, we present three possible variations that trade-off speed for memory.

Hybrid range matching V1 (HRMv1)

Similar to HDMv1, HRMv1 variation encrypts only the rule number in the case of G, L, or E operations or the two rule numbers in case of R operation at the trusted side and sends it to the Cloud Side which does all the required processing using combination of hybrid and fully homomorphic gates. The final result is produced as a single encrypted bit and sent back to the secure side for verification.

Hybrid range matching V2 (HRMv2)

HRMv2 variation follows a similar procedure as HDMv2, by pre-calculating and encrypting all the possible answers. For any specific rule number(s) and operation combination, there are \(2^{|rule|}\) possible results to be calculated, encrypted, and sent to the Cloud Side. In this case, the Cloud Side will not have to do any processing as it just picks the encrypted answer from the possible results pool based on the plaintext number value under inspection and sends it back to the trusted side for verification or further aggregates it with other results if required.

Hybrid range matching V3 (HRMv3)

HRMv3 variation holds a middle ground between HRMv1 and HRMv2. If the rule number length is n bits, instead of encrypting all of the \(2^{n}\) possibilities, HRMv3 divides the rule length into 2 parts of n/2 bits each: high part and low part. It then calculates and encrypts all possible answers for each part individually based on the rule’s value and required operation, stores them in two \(2^{n/2}\) encrypted-bit arrays for E operation, or 3 arrays for G,L operations (additional auxiliary array is required in this case), and sends them to the cloud. The Cloud Side has to do a few processing steps to aggregate the selected sub-result bits from the \(2^{n/2}\) encrypted-bit arrays into a final answer. Needless to say, HRMv3 can utilize any number of divisions \(\ge\)2; however, we selected 2 divisions as a sample of flexibility. Using this design methodology, HRMv1 should have the best memory requirements compared to the other variations, and HRMv2 should be the fastest while HRMv3 stands a middle ground between them in both memory and speed metrics.

Implementation of HRM

The rule encryption step for HRMv1 and HRMv2 is quite similar to HDMv1 and HDMv2 by encrypting the rule number/word itself or encrypting all possible answers, respectively, as previously shown in algorithm 1. The difference for HRMv2 is that the condition in step 8 of Algorithm 1 for a possible answer to be encrypted as 1 or 0 depends on the required operation being G, L, E, or R. HRMv3 has a slightly different rule encryption procedure, as the rule bits are divided into high and low parts with equal number of bits before encrypting the possible answers for each part individually. Algorithm 4 describes the rule encryption process for HRMv3 for the G operation. Following the same use case of section “Hybrid direct matching (HDM) algorithms”, we assume looking for an 8-bit input number from the network stream that is greater than 65 (0100 0001). Hence, the rule is “0100 0001”, RuleH will be “0100” and RuleL will be “0001”. EncRules will be a \(3\times 2^4=3\times 16\) matrix. The rows of the EncRules matrix, EncRules[0][x], EncRules[1][x], and EncRules[2][x], represent the true condition for Index > RuleH, Index = RuleH, and Index > RuleL, respectively. Hybrid comparison at the cloud for HRMv1 approach utilizes gate-level minimized versions of the standard binary logic comparison circuits similar to the ones found in39. HRMv2 cloud task is similar to HDMv2 in algorithm 2 i.e. the cloud’s role is to pick the answer from the available answers pool. The hybrid comparison for HRMv3 with G operation is demonstrated in algorithm 5. Based on the plain number value, the cloud selects two partial results bit from the high and low possible answers pools along with one other bit from an auxiliary pool and combine these bits using HE gates into a final answer bit. Essentially, we are looking for a true condition at EncRule[0][PlainH] which represents PlainH > RuleH, or the conjunction (AND) of a true condition at EncRule[1][PlainH] which represents PlainH = RuleH and a true condition at EncRule[2][PlainL] which represents PlainL > RuleL.

The verification step is the same in all HDM and HRM algorithms; the encrypted result bit is sent back to the Trusted Client for decryption and verification, as described by Algorithm 6. It should be noted that in all HRM versions, the implementations for operations other than G follow similar procedures and can be deduced from the described HRM algorithms.

HRMv2 and HRMv3 as generalized hybrid gates—Similar to HDMv2, HRMv2 is actually a generalized hybrid gate that utilizes a \(2^{16}\times 1\) hybrid MUX with 16-bit plaintext number as a selector. On the other hand, HRMv3 uses three \(2^8\times 1\) hybrid MUXs to implement the hybrid range matching functions, with the plaintext 16-bit number divided into two 8-bit slices used as selectors to these multiplexers (as described in Algorithm 5). Both HRMv2 and HRMv3 simplify the circuit in HRMv1 into the bigger hybrid multiplexers but at the cost of increased memory requirements for the same rule. As with the case of HDMv2, the massive fan-in circuits of HRMv2 and HRMv3 are realized with look-up tables.

Computational and communicational complexity of HDM, HRM algorithms

To calculate the computational and communicational complexity for HDM and HRM algorithms, we assume the number of bits per plain rule word to be n which is the same number of bits per plaintext network stream word. The number of plaintext network stream words is assumed to be N. For the computation complexity, we should note that the calculation time of the hybrid gates is negligible compared to the time consumed by the fully HE gates. This is because the execution time of the hybrid multiplexer that implements the hybrid gate is negligible (The output is a selection from the input) compared to the time-consuming bootstrapping operation that follows the fully HE gates (except for HE_NOT gate as it does not add noise and does not require bootstrapping) Thus, the calculation complexity is directly related to the HE gates while the other HHE gates and/or hybrid multiplexers are neglected in the calculations. On the other hand, the communicational complexity is proportional to the encrypted rule size, as the encrypted rule is transmitted from the trusted client to the cloud side to be used in the matching machine. The result bits that are sent back from the cloud side to the trusted client also contribute to the communication complexity; however, their effect depends on how frequently the trusted client needs the results to be communicated back. The computational and communicational analysis of GGHLM is also presented in section “Analysis of GGHLM”.

Analysis of HDM complexity

Execution of HDMv1 requires executing n HHE_XOR gates and \(n-1\) HE_AND gates per word, and it requires \(N-1\) HE_OR gates to calculate the final results for N words stream. On the other hand, HDMv2 skips the per-word HHE_XOR and HE_AND gates by pre-calculating their final results using 1 generic hybrid multiplexer per word (negligible execution time) and requires only \(N-1\) HE_OR gates similar to HDMv1 to aggregate the per-word results into a final result. Thus, the speed gain of HDMv2 over HDMv1 can be described by (2) which indicates that HDMv2 is n times faster than HDMv1.

For the communicational requirements, HDMv1 requires n encrypted bits per rule word, while HDMv2 requires \(2^n\) bits which is significantly larger then HDMv1. The computational requirements, i.e. # HE gates, and communication requirements, i.e. rule size as # of encrypted bits per rule, and the corresponding complexities of HDMv1 and HDMv2 are summarized in Table 4.

Analysis of HRM complexity

For Greater Than (G) or Less Than (L) operations, the execution of optimally minimized HRMv1 requires executing \((n-1)\) HE_AND gates, \((n-1)\) HE_OR gates, and n HHE_AND gates per plaintext number. HRMv2 executes only 1 big hybrid multiplexer per plaintext number. HRMv3 which divides the rule and plaintext word into two sections requires 3 big hybrid multiplexers, 1 HE_AND, and 1 HE_OR per plaintext number. For the Equal (E) operation, HRMv1 reduces to HDMv1 and requires the same number of gates. HRMv3 executes two hybrid multiplexers and 1 HE_AND per plaintext number, while HRMv2 remains the same as in the G or L operations. For the Range (R) operation, HRMv1 and HRMv3 require twice the number of gates if compared to G or L operations per plaintext number plus an additional HE_AND gate to combine the sub-results of G and L operations into R. However, HRMv2 still uses the same 1 hybrid multiplexer for the range operation.

All HRM machines, like HDM machines, require \((N-1)\) HE_OR gate to combine the per-number sub-results of N numbers into one final result. It should be noted that the execution of the hybrid multiplexers consumes negligible time.

Regarding the communicational complexity, HRMv1 requires n encrypted rule bits for G, L, and E operations, while it requires 2n encrypted rule bits for range operation. HRMv3 requires \(3\times 2^{n/2}\) encrypted rule bits for G, L, and E operations, and requires twice this number for the R operation. Like HDMv2, HRMv2 requires \(2^n\) encrypted rule bits for all operations. The computational requirements, i.e. # HE gates, and communication requirements, i.e. rule size as # of encrypted bits per rule, and the corresponding complexities of HRMv1, HRMv2, and HRMv3 are summarized in Table 5.

As an example to demonstrate the data in Table 5, HRMv1-R operation is achieved by combining the results of G operation on the lower range limit and L operation on the higher range limit using HE_AND gate. For matching a plaintext word with length n bits against a rule of the same length, the L operation requires \(n-1\) HE_OR gates and the same number of HE_AND gates. G operation requires the same number of gates as L operation. An additional HE_AND gate is required to combine the results from both L and G operations. Thus, the total number of gates required for matching one plaintext word is \(4(n-1)+1\). Combining the results of N words requires \(N-1\) HE_OR gates and hence the total number of gates required for HRMv1-R operation on N words is \((4(n-1)+1)N + N-1)=2(2n-1)N-1 \rightarrow {\mathcal {O}}(nN)\). HRMv1-R requires the encryption of 2 n-bit rule words, this its memory requirements is \(2n \rightarrow {\mathcal {O}}(n)\).

Analysis of GGHLM

GGHLM processes the input plaintext units from the plain network stream by performing a Matrix \(\times\) Vector operation for each unit. We use the term “unit” to describe the plaintext input chunk to the algorithm, as it may be the same or different from the term “word” we used for HDM and HRM earlier. Let r be the base of the number/character system used by GGHLM, this means it has r possible different symbols from the plaintext network stream. For example, for the binary base \(r=2\) only 0 and 1 are expected as symbols while if the base is the English language alphabet capital letters then the expected symbols are A, B, C,..., and Z with \(r=26\). This number corresponds directly to the number of transition matrices that should be encrypted at the trusted client and sent to the cloud side. Thus, the communication complexity of GGHLM becomes \({\mathcal {O}}(r)\) which is independent of the rule size.

The computation complexity of GGHLM is related to the number of Matrix \(\times\) Vector operations. If we use the same terminology of N words and n bits per word as with HDM and HRM, then GGHLM would need to execute approximately \(Nn/(log_2r)\) Matrix \(\times\) Vector operations to process N words. This means the computation complexity of GGHLM is \({\mathcal {O}}(\frac{Nn}{log_2r})\). This indicates the trade-off in GGHLM, where increasing the base r increases the communicational complexity and reduces the computation complexity.

Extension to complete rules and batching

As the proposed matching machines are built upon the elementary hybrid logic gates concept, this results in two important features:

-

Customizability: Any of the HDM or HRM machines can be fine-tuned for a specific matching objective by changing the underlying HE logic circuit. In fact, a fully customized Finite State Machine (FSM) can be implemented using the hybrid gates to achieve a certain target.

-

Modularity: The outputs of the matching machines can be easily combined using HE_AND or HE_OR as required.

The above-mentioned features allow the construction of complex rules easily by implementing a matching machine for each part of the complex rule and then combining the results using the appropriate HE gates.

For illustration, we consider a SURICATA rule40 that takes the form of (alert protocol source_IP source_Port -> destination_IP/destination_subnet destination_Port ). In this case, the source IP can be an exact match or a range, which can be achieved by HDM and HRM machines respectively. It should be noted that the provided implementation of HDM machines in Algorithms 2 and 3 is designed to search for an encrypted rule word in plaintext corpus; however, it can be easily modified to direct match a certain number of rule words against the same number of plaintext words as may be required in an exact source IP matching and the same applies to the source port. On the destination side, the rule specifies a destination network in the above example. In this case, the Trusted Client may decide to take advantage of this to reduce the rule size, and hence the matching time, by matching only against the network address bits, or they can decide to provide more obfuscation by performing dummy matching against the host bits. Dummy matching can be achieved by implementing dummy hybrid gates that have all of their inputs as the encrypted value of the same plain value. The destination port can be treated as the source port, and the matching sub-results of each part of the rule can be then combined using HE_AND gates to get a final result. A numeric example to the requirements of a complete SURICATA rule is demonstrated in section “Extension to complete rule sets”.

One other advantage of the modularity feature is the ability to batch the results. A typical application of an oblivious NIDS may not require too frequent matching results for every network traffic element to be sent back to the Trusted Client. Rather, it may require the Cloud Side to keep watching for some certain rule in the incoming network traffic and send back a single result bit after a defined period of time to the Trusted Client to tell if there is a hit or not. If a hit was scored, the Trusted Client may decide to instruct the Cloud Side to drill down for the network traffic element that caused the hit by sending back the sub-result bits of a specific time period to the Trusted Client. This batching process can be achieved by collecting the matching result of the rule against the network traffic elements using HE_OR gates, and it is useful in lowering the required network traffic between the Trusted Client and the cloud server.

Generalization to arbitrary circuits and regular expressions

Pattern-matching intrusion detection systems rely extensively on regular expressions, a fundamental concept in computer science and formal language theory. Regular expressions, as succinct representations of patterns in strings, can precisely define attack signatures and serve as the basis for identifying malicious activities within network traffic and system logs, making them essential for effective intrusion detection systems. Numerous attempts and approaches can be found in the literature to optimize the regular expression matching algorithms for intrusion detection, as surveyed in41. Implementing regular expressions in intrusion detection often involves utilizing finite state machines (FSM), also known as finite automata, which offer a systematic approach to processing and recognizing patterns. Finite state machines provide the necessary computational framework for interpreting regular expressions, enabling efficient pattern matching in large datasets42.

The multiplexer implementation of the hybrid gates provides a complete set of standard and generalized hybrid logic gates that take both plaintext and ciphertext inputs and produce ciphertext outputs. On the other hand, HE algorithms and libraries provide a complete set of standard fully HE gates that take ciphertext input and produce ciphertext output without decrypting the input or output at any stage. Combining hybrid gates, fully HE gates, and standard plain logic gates allows the construction of any hybrid logic circuit that can accept both plain and encrypted inputs and produce an encrypted output. Since an FSM is essentially a logic circuit and can be constructed using logic gates, a hybrid FSM can be built using a combination of hybrid and fully HE gates. Once the hybrid FSM is constructed, it can be used in hybrid-matching the regular expression against the plaintext data. The steps to implement a hybrid matching machine for a regular expression are as follows:

-

1.

Define the rule: The trusted client defines the rule based on the intrusion detection requirements.

-

2.

Create the regular expression: The trusted client converts the rule into a regular expression.

-

3.

Create the FSM: The trusted client converts the regular expression into an FSM.

-

4.

Create the logic circuit: The trusted client implements the FSM using hybrid and fully HE gates, defining the hybrid parts and fully HE parts.

-

5.

Create an encoding table: The trusted client creates a look-up encoding table to encode plaintext input into ciphertext bits which will be used as input to the FSM created before.

-

6.

Send the FSM and the encoding table to the cloud side.

-

7.

Encode the network stream: The cloud side encodes the plaintext stream into ciphertext bits using the encoding table provided by the trusted client.

-

8.

Match the encoded stream: The cloud side feeds the FSM with the encoded input, evaluates the circuit, and updates the FSM’s current state.

-

9.

Send back matching result: The cloud side sends the encrypted FSM’s state back to the trusted client for decryption and decision-making as required.

Figure 3 describes a generic block diagram for a hybrid logic circuit that implements a regular expression as an FSM. It should be noted here that the encoder results, the internal bits of the circuit, and the current status of the FSM are all ciphertext bits that cannot be decrypted or comprehended by the cloud side. However, the circuit elements and connections of the FSM can be reverse-engineered to reveal the structure of the regular expression even though the literals themselves are still encrypted. Thus, a dedicated security analysis and improvising technique to encrypt the regular expression structure are required to achieve the security goals of an ONIDS.

Security analysis

In this section, we describe the adversary model and goals along with the expected attack surfaces. Then we discuss the security propositions against the adversary goals and demonstrate their applicability under the hybrid binary gates and the matching machines. Finally, we present a summary of the security of the adopted HE scheme (TFHE).

As mentioned in section “Introduction”, the two main entities in the system model are the Trusted Client and the Cloud Side. We focus mainly on the security at the Cloud Side.

Adversary model

The potential adversary is the Cloud Side operator, which is modeled as an honest-but-curios operator43. The Cloud Side operator shall honestly follow all the instructions of the protocol, provide back the results, and has no intention of deceiving the Trusted Client or falsifying the results. In this context, the adversary’s main goals are:

- G1:

-

Decrypt the encrypted secret rules.

- G2:

-

Decrypt the encrypted matching results.

The main attack surfaces are:

- S1:

-

The underlying HE scheme.

- S2:

-

Related encrypted rules segments.

- S3:

-

Related encrypted results.

- S4:

-

The matching circuit.

- S5:

-

Side-channel analysis of the matching process.

The adversary is assumed to have access to all information being processed within the cloud server including physical side-channel leaks. For example, it may try to achieve the above-mentioned goals through techniques like:

-

Intermediate Values Access: The adversary has access to all information being processed within the cloud along with step-by-step code execution values and results.

-

Side Channel Analysis: The adversary is able to do any kind of passive side-channel analysis including power measurements, time measurements, and accessing memory locations.

-

Code Reusing: While the honest adversary follows the code and provides the result back to the Trusted Client, it can rerun the code on its own with different inputs to try to deduct a relationship between the inputs and the outputs of a circuit/program.

Security propositions

In order to defend against the adversary goals, the proposed system must preserve the secrecy of the rules and the matching results on the Cloud Side along with protection against any possible information leak that might happen during the process execution. In the following, we list the security requirements of the proposed system model with their fulfillment proofs.

Adversary goal G1: decrypting secret rules

Proposition 1

(Rules Secrecy) The encrypted rules processed with Hybrid Gates shown in Fig. 2 are as secure against decryption as the underlying HE scheme.

Proof

Every data bit in the rule is encrypted independently using HE at the Trusted Client side. From the perspective of the adversary, all rule bits have a 50% probability of being 0 or 1, and their encrypted values look different even if the plaintext bits share the same value. From the perspective of surface S1, the attacker has to directly break the underlying HE scheme to decrypt the rules. Also, the attacker gains no advantage through the circuit (S4) in this case, because processing the encrypted bits through Hybrid Gates does not change their probability of being 0 or 1. Hence, Hybrid Gates do not increase the advantage of an adversary in decrypting secret data bits in the rule and the encrypted rule is as secure against decryption as the HE scheme. The complexity of decrypting HE bits depends on the scheme and the chosen parameters. \(\square\)

The secret rule format depends on the matching machine version as shown in algorithms 1 and 4 where either the rule bytes are directly encrypted, as in the case of HDMv1 and HRMv1, or look-up tables that represents the rules, as the case in HDMv2, HRMV2, and HRMV3, are encrypted and sent to the Cloud Side. In either case, the Cloud Side receives the homomorphically encrypted rule bits as inputs to hybrid gates or as entries of look-up tables with equal probability of being 1 or 0 and with no visible similarity between any of them.

Proposition 2

(Secure Rule Reusability) Reusing an encrypted rule with related network traffic does not leak additional information to the Cloud Side operator.

Proof

The proposed implementation depends on the semantic security and probabilistic nature of the underlying HE scheme. Thus, even if the plain value \(Decrypt(f(I_{p1},I_c))\) may be equal to \(Decrypt(f(I_{p2},I_c))\), the operator can see only \(f(I_{p1},I_c)\) and \(f(I_{p2},I_c)\) which look completely different. This results from providing different encrypted values to all rule bits even if they have the same plain value as stated in the proof of Proposition 1. The only case where the results may look similar is when using the same plaintext against the same rule, which is a trivial case. \(\square\)

Proposition 3

(Secure Usage of Related Rules) Matching against related rules does not leak additional information to the Cloud Side operator.

Proof

As every rule bit is encrypted independently using an HE scheme, the Cloud Side operator would not be able to determine any similarity between the encrypted rules. Consequently, the Cloud Side operator would not be able to perform related rules attacks. \(\square\)

In the case of complex or complete rules as shown in section “Extension to complete rules and batching”, different rules may reuse similar sub-rules, e.g. same source IP address so the adversary may make use of the S2 surface. In this case, the adversary can understand that the same component is used in different rules, but it is still not able to decrypt the rule or that specific component. This is a direct trade-off for modularity of the proposed system and rule reusability. If absolute no leakage is required, the trusted client can encrypt every rule independently even if there are common components. This can be done by preventing re-using sub-rules in multiple rules i.e. each rule has its components/sub-rules encrypted independently even if this leads to encrypting the same plain sub-rule multiple times. In this case, the common components would look different in the homomorphic ___domain and the adversary would not be able to construct a relationship between these rules. However, this will come at the price of increased memory usage of encrypting the same component multiple times.

Adversary goal G2

Proposition 4

(Secret Result) The output of any Hybrid Gate is as secure against decryption as the underlying HE scheme.

Proof

The multiplexer implementation of the hybrid gates essentially selects one of the input bits to be the output bit. So, the output bit would have a 50% probability of being 1 or 0 because each of the input bits has the same probability of being 1 or 0 as shown in the proof of proposition 1. Hence, the adversary would be able to decrypt the result if and only if s/he is able to decrypt the underlying HE bits. \(\square\)

The proposed algorithms in section “Hybrid rules matching algorithms” have either a pure hybrid binary gate/function structure, as the case with the look-up approach in HDMv2 and HRMv2, or a mixed structure with hybrid gates and fully HE gates, like in HDMv1, HRMv1, and HRMv3. The secret result of a pure hybrid gate/function flows directly from the secrecy of its inputs, as the multiplexer implementation selects one of the input cipher bits that are already covered by homomorphic encryption to be the output. Thus, utilizing S4 provides no advantage against the secret result here. On the other hand, the secrecy of the result of a fully HE gate flows from the underlying HE scheme. A mixed structure has its input layer comprised of hybrid gates, which does not change the secrecy of the input bits, followed by a fully HE gates layer. S3 also does not give an advantage to the attacker, because the encrypted results would look different even if their plaintext values are the same, following the same argument in proposition 2. Thus, the only attack surface that may help the adversary is S1, which has the complexity and security of the underlying HE scheme.

Proposition 5

(Side-Channel Leakage Resistance) The secret rules and encrypted results are secure against side-channel attacks as the underlying HE scheme during the matching process.

Proof

Applying side-channel analysis against any of the matching machines might reveal only the cloud key, which is already public, not the private key as the latter does not exist at the Cloud Side. Thus, both the rules and the encrypted result are secure against side-channel analysis as the underlying HE scheme. \(\square\)

This proposition applies to both G1 and G2, where the adversary here tries to utilize S5 to gain an advantage in decrypting the secret rules or results using side-channel analysis. There are some recent successful attempts in the literature to recover the private key of popular HE schemes like TFHE at the Cloud Side using side-channel attacks. In44, the authors demonstrated the idea of introducing curated perturbations in the ciphertext bits that are returned to the Trusted Client. Depending on the perturbation value, the Trusted Client may or may not be able to decrypt the encrypted bit. The attack depends on utilizing the feedback sent from the Trusted Client to the Cloud Side in case of decryption failure to recover the error or noise value around ciphertext bits and, consequently, the secret key. However, this attack considers essentially a malicious Cloud Side operator and a Trusted Client that sends feedback in case of decryption failure, which is not the case in our system and adversary models.

Security summary of TFHE

As shown in the previous sections, The security of the proposed hybrid matching machines depends on the security of the underlying hybrid matching gates which, in turn, depends on the security of the underlying Homomorphic Encryption Scheme. In our case, the security of the TFHE scheme flows from the hardness of the Learning With Errors (LWE) problem45. Security of LWE scheme is considered an independent and more general topic from the design of TFHE. Hence, estimating the security of TFHE, similar to any other LWE scheme, relies on the active cryptographic community at large. One of the most popular and regularly updated tools to estimate the security of LWE schemes is the Security Estimates for Lattice Problems project, denoted here as the LWE Security Estimator46, started by the research work in47. The tool combines algorithms from different resources to attack a given LWE scheme. The algorithms include:

-

1.

bkw: A variant of the Blum-Kalai-Wasserman algorithm48 that uses coding techniques to reduce the memory requirement and improve the running time.

-

2.

usvp: A primal attack that uses lattice reduction to find a short vector in the secret key lattice and recover the secret key.

-

3.

bdd: A primal attack that uses lattice reduction and Babai’s nearest plane algorithm49 to find a close vector in the secret key lattice and recover the secret key

-

4.

bdd hybrid: A hybrid attack that combines bdd with a meet-in-the-middle technique to reduce the dimension of the problem and increase the success probability.

-

5.

bdd mitm hybrid: A hybrid attack that combines bdd with a meet-in-the-middle technique and a birthday paradox technique to reduce the dimension of the problem and increase the success probability.

-

6.

dual: A dual attack that uses lattice reduction to find a short vector in the error distribution lattice and decrypt ciphertexts.

-

7.

dual hybrid: A hybrid attack that combines dual with a meet-in-the-middle technique to reduce the dimension of the problem and increase the success probability.

For each of these algorithms, the tool reports various metrics including:

-

1.

rop: The number of ring operations required to execute the attack algorithm, expressed in bits of security. This is the main measure of the hardness of breaking the scheme.

-

2.

red: The number of ring operations required to perform lattice reduction, expressed in bits of security.

-

3.

svp: The number of ring operations required to solve the Shortest Vector Problem, expressed in bits of security.

-

4.

mem: The memory requirement of the algorithm, expressed in bits.

The tool can also be used to scan a range of parameters using the class ParameterSweep. LWE Security Estimator is still evolving and new algorithms and/or metrics are being added as they become available. In the context of TFHE, we work primarily with different types of ciphertexts: Learning With Error on the Torus (TLWE) and Ring Learning With Error on the Torus (TRLWE) (RLWE sample has a similar construction to the LWE sample but it is defined over polynomials instead of real numbers). Both are required to perform bootstrapping. In order to estimate the security of these parameters, we evaluate the LWE Security Estimator on the TLWE parameters as well as the TRLWE parameters and opt for the smallest rop of the two schemes from all the studied attack algorithms.

The parameters of TLWE and TRLWE in the default configuration of50, which we used, are defined in the following list and their values are presented in Table 6 along with the security estimates evaluated by46.

-

q: Modulus of the space \({\mathbb {Z}}/q{\mathbb {Z}}\) of integers for the LWE samples. It represents how many bits are used to represent each coefficient in the TLWE and TRLWE samples. The higher q, the higher the security and computation time.

-

n: Dimension of the LWE sample vector. n represents the length of the secret key in bits. The higher n, the higher the security and computation time.

-

\(e_{tlwe}\): Standard deviation of the Gaussian distribution function for the TLWE error term. A very low value of \(e_{tlwe}\) is not secure and a very high value will force a decryption failure. LWE Security Estimator must be used to find a good value.

-

k: The number of polynomials in the TRLWE sample.

-

N: The order of each polynomial. N Efficient bootstrapping relies on N being a power of 2. \(N \times k\) represents the length of TRGSW key that is used to encrypt the secret key for bootstrapping.

-

\(e_{trlwe}\): Standard deviation of the Gaussian distribution function for the TRLWE encryption.

-

pbs_base_log: Specifies the base of the decomposition for the Polynomial Bootstrapping (PBS) operation, i.e., the Blind Rotation.

-

pbs_level: Specifies the number of levels in the decomposition for the Polynomial Bootstrapping (PBS) operation.

-

ks_base_log: Specifies the base of the decomposition for the Key-Switching (KS) operation.

-

ks_level: Specifies the number of levels in the decomposition for the Key-Switching (KS) operation.

The security estimator results presented in Table 6 show a minimum security estimate of 124 bits for TFHE, which comes from the number of ring operations required by the attack on TWLE and for the lattice reduction.

Performance acceleration with parallel processing

The abstracted dependence of the hybrid gates approach on the underlying HE scheme allowed us to utilize and test GPU-accelerated HE libraries to enhance the performance of our algorithms. In this section, we present the implementation details of HDMv1 on top of the GPU accelerated library cuFHE as a sample demonstration of the implementation flexibility of the hybrid gates approach and to show the achievable performance gain.

cuFHE8 is a bootstrappable FHE library that implements the TFHE scheme on Compute Unified Device Architecture - CUDA enabled GPUs utilizing the improved CUDA implementation of the numbertheoretic transform (NTT) in cuHE library51. cuFHE is a binary-based FHE library with an API that provides simple & high-level basic functions for basic HE operations. Basic operations like key generation, encryption, and decryption are performed on CPU, while CUDA implementations are provided for the actual Boolean operations functions like AND, OR, ... etc to allow executing these operations on GPU. Compared to TFHE, cuFHE does not provide a time-accelerated implementation for any single gate on its own; however, it allows the execution of multiple gates in a parallel fashion on the GPU which reduces the average required time by each gate as a result. This means the speedup gain from cuFHE depends on the HE circuit design itself; a circuit that can be executed in parallel paths would achieve a speedup gain while a circuit that adopts serial flow of the data bits will not benefit much if implemented using cuFHE.

The parallelization model depends on executing the maximum possible number of independent Boolean operations (gates) in parallel. In such implementations, the circuit must be viewed from a gate-level perspective. The circuit is divided into levels, where each level contains a number of independent gates that should be executed in parallel. The execution of each level should be finished before descending to a lower level to achieve data availability and synchronization between the levels. An optimum design should contain the smallest number of levels to limit the sequential execution and waiting time between levels. On the other hand, each HE gate execution in cuFHE is assigned an independent kernel launch, and the maximum possible number of parallel kernel launches depends essentially on the available hardware, i.e. the number of Streaming Multiprocessors (SMs), which directly limits the maximum number of gates to be executed in parallel. Therefore, the designer must be careful in tuning the number of levels and number of gates per level to achieve the best performance. Figure 4 illustrates a section of the adopted parallel model of HDMv1 for matching a single 8-bit plain word against an encrypted rule word with the same size. The first level, L1, is the hybrid XNOR (HHE_XOR) between the plain character bits and the cipher rule bits which utilizes the hybrid MUX model described in section “HE hybrid plain-cipher binary gates”. These HHE_XNOR operations can be executed in parallel in a single level as they are independent. The next step is to perform HE_AND operations between all the result bits from the previous level, if all the bits are 1s then there is a match, if any bit is 0 then this is a miss. As can be seen in Fig. 4, the HE_AND operations are executed in tree fashion to maximize the number of operations per level and minimize the number of levels. The final result is a cipher bit that indicates whether there is a match or not.

This matching process can be executed for multiple plain words (string) at the same time to maximize parallelization and efficiency. As the matching process produces a cipher bit result for each word, an HE_OR operation can be executed on all result bits to get a single final result bit that indicates if there is a match in the string under processing. To demonstrate the process, Fig. 5 shows the circuit section that implements the HE_OR operation between 6 result bits, as an example, in a tree fashion similar to the previous HE_AND stage to maximize parallelization and efficiency. The circuit can be extended to accommodate for any number of bits. The required number of gates in each level of the matching process of N 8-bit plain words against one encrypted rule word with the same size is presented in Table 7 as a reference.

Experimental setup and benchmarking results

In this section, the experimental setup, configuration settings, and the parameters used to test the algorithms in section “Hybrid rules matching algorithms” and the parallel model in section “Performance acceleration with parallel processing” are presented. Benchmarking results for the proposed algorithms variations, GGHLM approach variations, and the parallel model are also demonstrated. The benchmarking methodology targeted four objectives:

-

1.

Implementing one of the proposed algorithms using different HE libraries on CPU to compare their performance and to demonstrate the library-independency of the hybrid gates approach.

-

2.

Performing a detailed benchmarking comparison on CPU between our approaches built on top of TFHE library and the current state-of-art, illustrating their pros and cons and highlighting the suitable operation applications for each.

-

3.

Testing the applicability and resultant gain of implementing a sample of our algorithms in a parallel form on top of a GPU-accelerated HE library.

-

4.

Demonstrating the practical resources requirements for matching against a complex rule as pointed in section “Extension to complete rules and batching”.

The code snippets were written using C++ since it is common between all the tested HE libraries. Also, the experiments were performed on a PC with a Core I9-10900 processor and 32 GB of memory with an Nvidia RTX 3090. Elementary calculations and measurements were performed on TFHE and GGHLM to get the time and memory requirements for the fundamental operations in both libraries. The results allowed theoretical extrapolation to the time and memory requirements of more complex operations at any scale. To confirm the extrapolated expectations, all HDM, HRM, and GGHLM implementations were executed on a single thread, and the maximum resident memory and total execution time were measured using “/usr/bin/time -v” Linux command.

Performance evaluation under different HE libraries

To achieve the first target, HDMv1 variation is implemented using 3 different libraries: TFHE50, Microsoft SEAL (BFV scheme)6, and PALISADE (BFV, BGV, and TFHE schemes)7. It should be noted that BFV and BGV are integer-based schemes and were configured with modulo-2 arithmetic to fit with our implementations. Also, they are leveled schemes which means they support operations on ciphertext to a limited depth, that is configured by their parameters, compared to the bootstrabable schemes like TFHE that supports operations or circuits on ciphertext to any arbitrary depth. This is why the SEAL implementation of BGV scheme is not reported in this paper as it did not provide a sufficient multiplication depth suitable for our application within a reasonable choice of parameters. While CKKS scheme52 is implemented by SEAL and PALISADE, it is used for approximate computations on integers and complex numbers, which does not fit with our target application. FHEW scheme53 is also implemented in PALISADE; however, it is not tested because TFHE is considered an improved and faster version of FHEW 50. The HE libraries parameters in all of these implementations are chosen to ensure a minimum of 128 bits of security.

As mentioned in section “Hybrid rules matching algorithms”, the plaintext word size and the ciphertext rule size are chosen to be the same to not overcomplicate the implementation. The word size here is selected to be 8 bits, the rule is one encrypted word, and the plaintext stream consists of 10 words so the task would simulate the searching for 1 HE encrypted ASCII character in a stream of 10 plain characters. The results of this experiment are presented in Table 8 which shows a wide variety of search speeds. Even for the same HE scheme, e.g. BFV or TFHE, the searching speed is different based on the used library. It should be noted that for integer-based schemes, the searching algorithm requires a multiplication depth of at least 7 otherwise the decryption will fail. PALISADE-BFV with a depth of 7 shows the minimum searching time while PALISADE-BGV with a depth of 50 is the slowest. The multiplication depth has a significant impact on the performance. Increasing the multiplication depth (i.e. searching circuit depth) increases the searching time and the size of the encrypted bit. PALISADE-BFV scheme shows better performance than SEAL-BFV especially at high depths. It should be noted that TFHE library has infinite multiplication depth since it is a bootstrapable scheme, and it will eventually achieve better results for deeper circuits (e.g. longer plaintext searching strings) in addition to the independent encrypted bit size.

Performance comparison with state-of-art

To achieve the second objective, we compared the performance of our proposed algorithm bundles against the encrypted NFA approach of GGHLM targeting the two tasks presented earlier in section “Hybrid rules matching algorithms”, namely the Hybrid Direct Matching and the Hybrid Range Matching. For this purpose, all HDM and HDR variations are implemented using TFHE with configuration parameters that ensure a minimum of 128 bits of security. The elementary measurements for GGHLM showed that the encryption time for a transition matrix of a member in the regex alphabet is ~5 s, and its size is ~33 MB. A homomorphic matrix \(\times\) vector operation takes ~5 ms. For TFHE on the other hand for our approaches, The encryption time of 1 bit is ~21 usec and its size is ~2.4 KB. A homomorphic binary gate execution takes ~13 ms. These numbers can be used to predict the time and memory costs of more complex operations of any scale, and they proved good accuracy compared to the actual measurements presented next.

Performance of the hybrid direct matching algorithm

For the HDM machine, the task is to search for an encrypted character rule in a stream of 10 plaintext characters, similar to the task of the first objective, so the rule/word size is selected as 8 bits. To perform the same task with GGHLM, a regular expression in the form of “.*c.*” is inputted to their implementation, where “.” represents a wildcard (The currently available implementation of GGHLM does not support the wild card “.”. So the wildcard was replaced by a logical OR between all 256 possibilities of an 8-bit word), “*” is the Kleene star, and “c” is the rule character. A random 10-byte string is fed to the NFA as input to represent the plaintext stream. The configuration parameters were chosen as suggested in GGHLM to achieve a minimum of 51 bits of security. While it is possible, theoretically, to change the parameters to achieve 103 security bits, the provided implementation54 did not allow that practically.