Abstract

Internal employees have always been at the core of organizational security management challenges. Once an employee exhibits behaviors that threaten the organization, the resulting damage can be profound. Therefore, analyzing reasonably stored behavioral data can equip managers with effective threat monitoring and warning solutions. Through data-mining, a knowledge graph for internal threat data is deduced, and models for detecting anomalous behaviors and predicting resignations are developed. Initially, data-mining is employed to model the knowledge ontology of internal threats and construct the knowledge graph; subsequently, using the behavioral characteristics, the BP neural network is optimized with the Sparrow Search Algorithm (SSA), establishing a detection model for anomalous behaviors (SBP); additionally, behavioral sequences are processed through data feature vectorization. Utilizing SBP, the LSTM network is further optimized, creating a predictive model for employee behaviors (SLSTM); ultimately, SBP detects anomalous behaviors, while SLSTM predicts resignation intentions, thus enhancing detection strategies for at-risk employees. The integration of these models forms a comprehensive threat detection technology within the organization. The efficacy and practicality of detecting anomalous behaviors and predicting resignations using SBP and SLSTM are demonstrated, comparing them with other algorithms and analyzing potential causes of misjudgment. This work has enhanced the detection efficiency and update speed of abnormal employee behaviors, lowered the misjudgment rate, and significantly mitigated the impact of internal threats on the organization.

Similar content being viewed by others

Introduction

Internal employees can bypass firewalls and other security measures, posing a covert threat that often inflicts greater damage on an organization than external cyberattacks1,2. Following the "Prism Gate" revelations, public concern about internal security and the impact of internal threats deepened. According to the 2020 internal Threat Report by Securonix, the rise in internal threat incidents has led to disruptions in organizational operations, loss of critical data, brand damage, and revenue decline. Annually, the damage caused by malicious internal attacks or inadvertent negligence continues to increase, impacting organizations across all industry sectors worldwide3. The Global Data Breach Report of 2021 and 2020 shows that internal threats were responsible for 8% and 7.4% of data breaches, respectively, resulting in losses of $461 million and $432 million.

Traditional detection methods for internal threats involve matching employee behaviors with anomalous patterns stored in a behavioral database, if a match is found, the behavior is flagged as anomalous, triggering a response to the threat4,5. However, the effectiveness of this approach depends heavily on the currency of the behavioral database and the precision of the matches, which often fail to detect new types of internal attacks or control false positives. Moreover, organizations with a large workforce face higher risks of systematic issues, such as high false positive rates, scalability challenges, and poor review processes6,7. Over time, these traditional methods, which involve continuous discovery of new threats and modeling, have become outdated and increasingly costly8.

Internal employees, who control core assets and internal equipment, mainly cause internal threats unintentionally or intentionally by accessing organizational systems and leaking confidential information9,10,11. Compared to external attacks, internal threats are marked by information transparency, concealment, and higher risk. Thus, preventing internal attacks, enhancing system security, and minimizing the likelihood and impact of internal risks are critical concerns for organizations12. As organizational members engage in numerous internal activities daily, generating vast amounts of behavioral data, integrating this data with methods to detect anomalous behaviors could significantly enhance the efficiency and speed of internal threat detection, reduce false positive rates, and substantially decrease the damage caused by internal threats13.

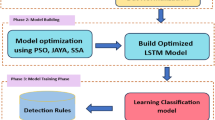

To enhance the efficiency and accuracy of analyzing employee behaviors, the knowledge graph for internal threat data is reasoned, and a model for detecting anomalous behavior and predicting the resignation tendencies of employees is developed. Initially, using data-mining, the knowledge ontology of internal threat is modeled, and the corresponding knowledge graph is constructed. Subsequently, based on behavioral characteristics, the BP neural network is optimized with the Sparrow Search Algorithm (SSA), establishing a detection model for anomalous behaviors (SBP). Furthermore, behavioral sequences are processed through data feature vectorization. Utilizing SBP, the LSTM network is optimized, creating a prediction model for employee behaviors (SLSTM). Finally, SBP detects abnormal behaviors, while SLSTM predicts resignation intentions, enhancing detection strategies for at-risk employees. This combination forms a threat detection technology within the enterprise. In practical applications, employee threat behaviors are often highly correlated, and organizational managers do not classify an employee as a threat based solely on one anomalous behavior, instead, they integrate and analyze various behavioral domains of the employees, thus employing a detection strategy based on the detection-prediction model.

Data-mining reasoning knowledge graph

Ontology modeling

The CERT-IT (r6.2) internal threat dataset from the Software Engineering Institute at Carnegie Mellon University is utilized. This dataset provides a stream of behavioral logs from multiple domains, recording employees' operational behaviors at specific times. CERT-IT (r6.2) comprises both structured and unstructured data, including the Employee Position table, which outlines the organizational structure, and the Psychological Test table, which reflects personal states of employees; the subsequent tables, namely the Logon, File, Device, Email, and Http tables, contain time-sequenced employee behavior logs14,15. These logs are predominantly structured data, detailing the behaviors recorded. The methodology for ontology modeling based on dataset characteristics is depicted in Fig. 1.

The ontology hierarchy is shown in Fig. 2. In the process of entity extraction, since each table is structured data, different rules can be used for different tables to extract entities. According to the employee ontology attributes, the corresponding information design rules of the employee information table are entity extracted16. According to the date ontology attribute, the design rules are entity extracted. According to the behavior ontology attribute, the design rules are entity extracted. Based on the relationship between the entities, it is possible to determine by which employee the behavior occurred on which specific date17.

Data preprocessing

By counting the number of times each employee logged on to each computer in the Logon data, the tables of employee personal computers were obtained. By counting the monthly employee personnel list, the employee's resignation date is recorded. Obtaining these two basic features, two data features can be added to the study for each behavior: whether the operation is performed through the person's computer and whether the resignation is imminent. These two data features can be a good fit for the behavioral characteristics in internal threat behaviors and the reasons for the behaviors.

Logon data contains behavior code, time, employee ID, computer ID, and logon (logout), as shown in Table A1. File data contains behavior code, time, employee ID, computer ID, file operated, specific behaviors (open, copy, write, delete), whether to move to removable devices and file information, as shown in Table A2. Device data contains behavior code, time, employee ID, computer ID, files operated, specific behaviors (Connect, Disconnect), as shown in Table A3. Email data contains behavior code, time, employee ID, computer ID, sender, receiver, carbon copyist, behaviors (Send, View), size, content, attachment, as shown in Table A4 and Table A5. Http data contains behavior code, time, employee ID, computer ID, access URL, behaviors (Visit, Upload, Download), content, as shown in Tables A6 and A7.

Detection and prediction of anomalous behavior based on sparrow search algorithm

Sparrow search algorithm

The SSA is a novel intelligent optimization algorithm inspired by the foraging and anti-predator behaviors of sparrows18. The sparrow population is represented as follows:

where d represents the dimension; n is the number of sparrows. The fitness matrix for all sparrow populations is expressed in the following form:

where n indicates the total number of sparrows.

In the SSA, explorers with relatively high fitness are prioritized for food during each search round. Moreover, as explorers are tasked with seeking areas of higher fitness, they influence the movement patterns of the entire sparrow population, covering a broader area19. The position of explorers is updated in each iteration as follows:

where t is the iteration number; itermax represents the maximum number of iterations; Xi,j indicates the position of the i-th sparrow in the j-th dimension; α ∈ (0,1) is a random number. R2 ∈ [0, 1] and ST ∈ [0.5, 1] represent the warning and safety values, respectively; Q is a normally distributed random number; and L is a 1 × d matrix (all elements are 1). When R2 < ST, it signals the absence of predators, allowing the explorer to continue searching20. When R2 ≥ ST, it indicates that a scout has detected a predator and alerted the sparrows, necessitating a move to a safer area to engage in anti-predator behavior21.

The predator's position is updated each iteration are as follows:

where \(X_{p}^{t + 1}\) is the current ___location with the highest food abundance (highest fitness) among the discoverers; \(X_{worst \, }^{t}\) is the ___location globally with the least food (lowest fitness) globally; \(A^{ + } = A^{T} \left( {AA^{T} } \right)^{ - 1}\), A represents a column vector at the same latitude as the individual sparrow, filled with random values of 1 and −1. When \(i > \frac{n}{2}\), predators seek to move away from less favorable positions, and conversely, predators move towards the discoverer's position for better opportunities.

The scout's position is updated each iteration as follows:

where \(X_{best \, }^{t}\) is the current global optimal position; β controls the step size parameters; K ∈ (−1, 1) is a random number; fi is the current scout's position; fg and fw are the current global optimal and worst fitness values, respectively; and ε is a constant to prevent the denominator from becoming zero22.

The specific execution flow of the SSA algorithm is depicted in Fig. 3:

Detection model of anomalous behavior

Five scenarios comprise the internal threat, each associated with specific anomalous behaviors. The BP neural network efficiently handles large-scale data without requiring detailed insight into the internal operation mechanism, making it ideal for detecting internal threats. Consequently, the SSA is employed to optimize the BP neural network, creating an enhanced algorithm (SBP) for detecting anomalous behavior, as illustrated in Fig. 4:

Input: the lengths of the behavior vectors for detecting Logon, File, Device, Email, and Http are 5, 9, 5, 8, and 8, respectively. FC layer: the output of the F1 layer is presented in Eq. (6); the output of the F2 layer is detailed in Eq. (7). Deactivation layer: Dropout is utilized to address the overfitting issue. Output: A Softmax function outputs the probabilities of anomalous and normal behaviors as detailed in Eq. (8). To assess the effectiveness of neural network training, the cross-entropy loss function is employed as shown in Eq. (9).

where \(w_{ij}^{(1)}\) represents the weight of the j-th neuron of the input linked to the i-th neuron of the F1 layer; xj denotes the value of the j-th neuron of the input; n0 denotes the number of neurons in the input; n1 denotes the number of neurons in F1; \(b_{i}^{(1)}\) denotes the bias value of the i-th neuron in F1; and F(x) denotes the activation function. Where \(w_{ij}^{(2)}\) represents the weight of the j-th neuron of Dropout linked to the ith neuron of F2; xj denotes the value of the jth neuron of the input; n2 denotes the number of neurons present in Dropout; n3 denotes the number of neurons in F2; \(b_{i}^{(2)}\) represents the bias of the i-th neuron in F2; and F(x) denotes the activation function.

where yi represents the output of the ith neuron in the output.

The SBP is utilized to identify suitable hyperparameters, as demonstrated in Fig. 5. The first step defines the movement space. The second step sets the initial position of the sparrows randomly. The third step achieves the sparrow population adaptation by constructing the initial BP neural network and calculating the cross-entropy loss function after 10 cycles. In the fourth step, the position of each sparrow is updated based on the sparrow population fitness and the position of each sparrow, updating the optimal sparrow position if the fitness exceeds the optimal fitness. The fifth step outputs the best sparrow position if the maximum number of iterations is reached, otherwise, the iteration continues. Based on the optimal sparrow position, the optimal hyperparameters for the neural network are determined, as shown in Table 1.

Prediction model of anomalous behavior

The LSTM network enables the carrying of information across multiple time steps, addressing issues such as early signal failure and gradient disappearance, as illustrated in Fig. 6. The LSTM network consists of ct to save long-term memory and \(c_{t}^{\prime }\) to save short-term memory, regulated by an input gate (it), forgetting gate (ft), and output gate (ot).

The input gate (it) determines the proportion of new input information to be added to the long-term memory ct, i.e., specifically how much of the current input xt and the previous output ht are incorporated into the new neuron, as shown in Eq. (10):

The forgetting gate (ft) controls the retention of information in the long-term memory ct, as specified in Eq. (11):

The short-term memory (\(c_{t}^{\prime }\)) is refreshed, as described in Eq. (12):

Long-term memory (ct) involves the combination of previous state and input through gating mechanisms to compute the state for the next time step, as indicated in Eq. (13):

The output gating (ot) manages the utilization of the combined long and short-term memories as output information, as outlined in Eq. (14):

The final output appears in Eq. (15):

Variables Wxi, Whi, bi represent the weight coefficients and bias terms for the input gate; Wxf, Whf, bf for the forgetting gate; Wxo, Who, bo for the output gate; Wxc, Whc, bc for the current input to short-term memory \(c_{t}^{\prime }\). "°" denotes that the matrices are multiplied according to the same positional elements; σ and tanh are activation functions.

The LSTM network is applied to predict employee behaviors. Drawing on the prediction models of language, each behavior is viewed as a word or phrase to facilitate predictions of subsequent text. This approach extracts hidden features of user behaviors to forecast the following sequence of actions, including behaviors such as Logon, Logoff, File, Device, Email, Http. The SSA optimizes the LSTM network to develop an enhanced algorithm (SLSTM) for predicting employee behaviors, as depicted in Fig. 7:

Input: behaviors are converted into 34-dimensional vectors, and a fixed length time_step is selected for predicting subsequent steps, with each sample's input size being (34 × time_step). LSTM1 layer: outputs of the i-th neuron from LSTM1, LSTM2, and LSTM3 layers are presented in Eq. (15). Deactivation layer: Dropout addresses the overfitting issue. Output: the tailored LSTM network predicts six types of employee behaviors. The cross-entropy loss function is presented in Eq. (16). The process for optimizing the SLSTM using SSA is illustrated in Fig. 8 and summarized Table 2.

Results

Detection results of anomalous behavior

To assess the effectiveness of the SSA in optimizing the hyperparameters of the network structure, SBP and BP with manually set original hyperparameters were compared, as depicted in Fig. 9. The experimental environment included a Windows 10 system, an Intel (R) Core (TM) i9-10900 K CPU @ 3.70 GHz, an NVIDIA Ge Force RTX 3070, 64 GB memory, a 1TSSD hard drive, the Keras framework, and the Python language. Comparative experiments were conducted between the SBP detection model and BP23, PSO-BP24, and RF25, as illustrated in Fig. 10. Analysis of Fig. 9 reveals that the SBP detection model outperforms the BP network in all indicators. Regarding the loss function value, SBP achieves significantly better performance, manifesting a faster stabilization in decreasing trends, with a consistently lower loss function value. In terms of accuracy, SBP attains the highest accuracy more rapidly, and its final accuracy exceeds that of BP. The superiority of SBP over manually set BP models is evident. Figure 10 presents a comprehensive comparison across five behavioral domains, with SBP showing the best overall performance under all four indicators, highlighting the efficacy of the designed SBP detection model for identifying internal threats.

Prediction results of anomalous behavior

To verify the effectiveness of the SSA to optimize the hyperparameters of the LSTM network, the SLSTM and the LSTM network with the original hyperparameters set manually are compared in the experiment, as shown in Fig. 11. Analyzing Fig. 11, compared with the LSTM model, the SLSTM model has advantages in all four indicators. From the perspective of the training set, the loss function of SLSTM is more stable and lower loss compared to LSTM, and the accuracy of SLSTM is higher and stable. From the point of view of testing set, SLSTM loss value is much lower and more stable compared to LSTM and this feature is also found in accuracy.

The proposed SLSTM is compared with LSTM26, PSO-LSTM27, PSO-BP24, and BP23 and the results are shown in Fig. 12. With LSTM, PSO-LSTM, PSO-BP, and BP, the SLSTM model can predict the employee behavior very well with the prediction accuracy of 90.35%.

According to the statistical analysis of the prediction results, the prediction errors of the SLSTM model designed in this paper are mainly focused on predicting Logon and Logoff behaviors, as shown in Table 3. The main reason is the occurrence of one person logging on to multiple computers at the same time, i.e., the employee logs on to another computer without logging out of the last logon computer. Analyzing Table 1 shows that CBE3219 logged into PC-2264 and then logged into PC-3168 and logged out quickly. The analysis reveals that CBE3219's job title is Chief Engineer, which has the authority to log in to multiple computer devices, and the detection strategy designed in this paper does not consider the influence of job title on behavior prediction, which ultimately leads to incorrect detection results.

Strategy validation and optimization

In practical applications, the threat behaviors of employees are often highly correlated, and organizational managers will not judge the employee performing the behavior as an internal threat just because of an anomalous behavior, but rather, they will integrate and analyze the various behavioral domains of the employees. Therefore, a detection strategy of anomalous behaviors is given based on the detection-prediction model.

A baseline for the frequency of anomalous behaviors is established: all behaviors are detected, and the statistical results show that the average daily number of anomalous behaviors for each employee is two times, so the baseline for the normal daily frequency of anomalous behaviors is set to two times. Therefore, the design of detection strategy: the employee's behaviors in the last month, if more than 5 days of anomalous behaviors more than 4 times, it is included in the scope of the examination of anomalous employees, the specific strategy implementation process shown in Fig. 13.

Inspection using the designed policy revealed 66 anomalous employees. These include four established anomalous employees, KSX2874, WOD1366, VUE3294, and OPO2632. After checking, it was found that the remaining 62 anomalous employees' positions were all IT Admin, and the number of their anomalous behaviors regarding Logon was found to be high. The reason is that IT Admin employees need to frequently log into other people's computers to do their work, so they are misjudged by the model.

Taking the misjudged employee (CYW2322) as an example, the total number of anomalous behaviors is 2359, and the average daily number of anomalous behaviors is more than 5. Among them, there are 1938 anomalous behaviors of Logon, which occupy 82.15% of the total anomalous behaviors. Other anomalous behaviors (File, Device, Email, and Http) accounted for 2.20% (52), 2.89% (68), 7.50% (177), and 5.26% (124) of the total anomalous behaviors, respectively, as shown in Fig. 14.

For the above reasons, the strategy is adjusted. If the employee whose position is Non-IT Admin has more than 4 instances of anomalous behaviors more than 5 days in a month, he/she will be included in the anomalous employee examination. If the employee with the position of IT Admin, excluding the behavior of the Logon, shows anomalous behaviors more than 4 times in more than 5 days in a month, he/she will be included in the anomalous employee examination. The optimized detection-prediction strategy of anomalous behaviors is shown in Fig. 15.

The optimized detection-prediction strategy detected four anomalous employees, KSX2874, WOD1366, VUE3294, and OPO2632. Of the established anomalous employees, only SLE3655 was not detected and there were no normal employees that were misclassified. Analysis of employee SLE3655 revealed only four established anomalous behaviors: on September 06, 2022, SLE3655 uploaded his resume to the dropbox website four times in a row. The employee's threat behaviors are characterized by low frequency and small scope, and are difficult to be detected in practice. Therefore, in practice, organizational managers can strengthen the monitoring of internals' job search behavior.

Conclusion

A knowledge graph is developed through data-driven methods, and a detection and prediction model of anomalous behaviors is also established using SSA based on behavioral characteristics, forming an internal threat monitoring technology. The main conclusions are:

-

(1)

A knowledge graph for internal threats has been constructed, significantly enhancing both storage and query efficiency.

-

(2)

Five features of anomalous behavior data are identified, and the BP neural network is optimized through SSA. A detection model for anomalous behaviors is then developed. Three prevalent algorithms—BP, PSO-BP, and RF—are used for comparative analysis with SBP, demonstrating the effectiveness of the SBP model in detecting anomalous behaviors.

-

(3)

Behavioral sequences are utilized as data features, and the LSTM network is optimized via SSA to develop the prediction model of anomalous behaviors. Four widely used algorithms—LSTM, PSO-LSTM, BP, and PSO-BP—are compared, and the prediction accuracy of SLSTM is found to be 90.35%, underscoring the utility of the detection-prediction model in identifying anomalous behaviors.

-

(4)

In practical applications, employee threat behaviors are often interconnected, and organizational managers do not consider a single anomalous behavior as indicative of an internal threat. Instead, they assess various behavioral aspects of employees collectively. Thus, a strategy for detecting anomalous behaviors among internal employees is proposed and refined, and its effectiveness is confirmed through experimental validation.

As research progresses, it has been recognized that improvements are still necessary. Certain data features in the dataset remain underutilized, such as the temporal characteristics of Http behavior and the lack of correspondence between the interpersonal relationships evidenced in Email behavior and the appointment data. Additionally, the construction of knowledge graphs fails to adequately distinguish individuals, involving personal computers, emails, and files. Future efforts should aim to enhance the precision of data searches, offering further potential for performance enhancement.

Data availability

The data is unrestricted and can be obtained by contacting corresponding author.

References

Beekman, J. A., Woodaman, R. F. A. & Buede, D. M. A review of probabilistic opinion pooling algorithms with application to insider threat detection. Decis. Anal. 17(1), 39–55 (2020).

Prabhu, S. & Thompson, N. A primer on insider threats in cybersecurity. Inf. Secur. J. Glob. Perspect. 31(5), 602–611 (2022).

Al-Mhiqani, M. N. et al. A new intelligent multilayer framework for insider threat detection. Comput. Electr. Eng. 97, 107597 (2022).

Georgiadou, A., Mouzakitis, S. & Askounis, D. Detecting insider threat via a cyber-security culture framework. J. Comput. Inf. Syst. 62(4), 706–716 (2022).

Wei, Y., Chow, K. P. & Yiu, S. M. Insider threat prediction based on unsupervised anomaly detection scheme for proactive forensic investigation. For. Sci. Int. Digit. Invest. 38, 301126 (2021).

Ye, X. & Han, M. M. An improved feature extraction algorithm for insider threat using hidden Markov model on user behavior detection. Inf. Comput. Secur. 30(1), 19–36 (2022).

Erola, A. et al. Insider-threat detection: Lessons from deploying the CITD tool in three multinational organisations. J. Inf. Secur. Appl. 67, 103167 (2022).

Alabdulkreem, E. et al. Optimal weighted fusion based insider data leakage detection and classification model for ubiquitous computing systems. Sustain. Energy Technol. Assess. 54, 102815 (2022).

Deep, G., Sidhu, J. & Mohana, R. Insider threat prevention in distributed database as a service cloud environment. Comput. Ind. Eng. 169, 108278 (2022).

Roy, P., Sengupta, A. & Mazumdar, C. A structured control selection methodology for insider threat mitigation. Proc. Comput. Sci. 181, 1187–1195 (2021).

Zhang, C. et al. Detecting insider threat from behavioral logs based on ensemble and self-supervised learning. Secur. Commun. Netw. 2021, 1–11 (2021).

Sav, U. & Magar, G. Generating data for insider threat detection for cybersecurity. Think India J. 22(39), 65–69 (2019).

Alsowail, R. A. & Al-Shehari, T. A multi-tiered framework for insider threat prevention. Electronics 10(9), 1005 (2021).

Le, T., Le, N. & Le, B. Knowledge graph embedding by relational rotation and complex convolution for link prediction. Exp. Syst. Appl. 214, 119122 (2023).

Yu, C. et al. A knowledge graph completion model integrating entity description and network structure. Aslib J. Inf. Manag. 75(3), 500–522 (2023).

Boughareb, R., Seridi, H. & Beldjoudi, S. Explainable recommendation based on weighted knowledge graphs and graph convolutional networks. J. Inf. Knowl. Manag. 3, 2250098 (2023).

Du, W. et al. Sequential patent trading recommendation using knowledge-aware attentional bidirectional long short-term memory network (KBiLSTM). J. Inf. Sci. 49(3), 814–830 (2023).

Wu, R. et al. An improved sparrow search algorithm based on quantum computations and multi-strategy enhancement. Exp. Syst. Appl. 215, 119421 (2023).

Zhang, Z. & Han, Y. Discrete sparrow search algorithm for symmetric traveling salesman problem. Appl. Soft Comput. 118, 108469 (2022).

Feng, B. et al. Hydrological time series prediction by extreme learning machine and sparrow search algorithm. Water Supply 22(3), 3143–3157 (2022).

An, G. et al. Ultra short-term wind power forecasting based on sparrow search algorithm optimization deep extreme learning machine. Sustainability 13(18), 10453 (2021).

Wen, J. & Wang, Z. Short-term load forecasting with bidirectional LSTM-attention based on the sparrow search optimisation algorithm. Int. J. Comput. Sci. Eng. 26(1), 20–27 (2023).

Zhang, J., Wang, R. & He, Y. Application of employee performance assessment based on improved non-linear back propagation learning BP neural algorithm. J. Converg. Inf. Technol. 7(23), 186–194 (2012).

Li, N. & Li, M. Forecast of chemical export trade based on PSO-BP neural network model. J. Math. 2022, 1487746 (2022).

Weinblat, J. Forecasting European high-growth firms—A random forest approach. J. Ind. Competit. Trade 18(3), 253–294 (2018).

Ma, Q. & Rastogi, N. DANTE: Predicting insider threat using LSTM on system logs. In 2020 IEEE 19th International Conference on Trust, Security and Privacy in Computing and Communications (TrustCom). 1151–1156 (IEEE, 2020).

Mao, Y. et al. Analysis of road traffic speed in Kunming plateau mountains: A fusion PSO-LSTM algorithm. Int. J. Urban Sci. 26(1), 87–107 (2022).

Funding

This work is supported by the project of Jilin Higher Education Association (JGJX2023D662).

Author information

Authors and Affiliations

Contributions

Conceptualization, Yutong Meng; methodology, Xiao Zhang; software, Xiao Zhang; validation, Xiao Zhang and Yutong Meng; formal analysis, Xiao Zhang and Yutong Meng; data curation, Xiao Zhang and Yutong Meng; writing—review and editing, Xiao Zhang and Yutong Meng. All authors reviewed the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Zhang, X., Meng, Y. Detection and prediction of anomalous behaviors of enterprise’s employees based on data-mining and optimization algorithm. Sci Rep 14, 17716 (2024). https://doi.org/10.1038/s41598-024-68315-9

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-024-68315-9