Abstract

Causal discovery with prior knowledge is important for improving performance. We consider the incorporation of marginal causal relations, which correspond to the presence or absence of directed paths in a causal model. We propose the Marginal Prior Causal Knowledge PC (MPPC) algorithm to incorporate marginal causal relations into a constraint-based structure learning algorithm. We provide the theorems of conditional independence properties by combining observational data and marginal causal relations. We compare the MPPC algorithm with other structure learning methods in both simulation studies and real-world networks. The results indicate that, compare with other constraint-based structure learning methods, MPPC algorithm can incorporate marginal causal relations and is more effective and more efficient.

Similar content being viewed by others

Introduction

Causality discovery has been used in many fields, such as medicine1,2, sociology3 and bioinformatics4. In practice, causal structures are usually represented by Directed Acyclic Graphs (DAGs)5,6,7,8,9. In a DAG, a directed edge between two vertices represents a direct causal relation; a marginal causal relation between two vertices is denoted by a directed path, which is a sequence of consecutively directed edges5. However, using constraint-based structure learning methods, it is possible to acquire solely a completely partially directed acyclic graph (CPDAG), which represents a group of Markov equivalent DAGs10.

In order to improve these learning algorithms, incorporating background knowledge such as expert knowledge has drawn increasing amounts of attention11,12,13. In practical scenarios, researchers often possess a preexisting understanding of the causal system. For instance, in the field of medicine, previous studies may indicate that drinking causes liver cancer14. In the field of biology, it is reasonable to assume that genetic variables, such as single nucleotide polymorphisms (SNPs), are not affected by phenotypes15. Several methods that address prior knowledge for causal discovery have appeared in the literature. These methods can incorporate knowledge on the presence or absence of direct causal relations16,17, on a total ordering of the variables18, or the complete structure of the network19. However, such prior knowledge may not be direct causal relations in large causal systems containing hundreds of variables12. A direct causal relation can be treated as a marginal causal relation between two adjacent variables. Thus, marginal relations are more accessible than direct causal relations in practical terms13.

However, incorporating marginal causal knowledge into causal discovery is challenging. Most causal structures cannot be directly inferred from observational data. An intuitive method is to learn a CPDAG, then check the marginal causal relations in all possible graphs represented by the CPDAG. This approach is inefficient, however, when the learned Markov equivalence class contains a large number of DAGs20. Previous study of Borboudakis and Tsamardinos extended the causal model to address cases with prior marginal causal relations12. PC-PDAG method proposed by Borboudakis and Tsamardinos consists of two steps. The first step uses the PC algorithm to compute the PDAG, and the second step incorporates causal prior knowledge to obtain the maximal partially directed acyclic graph (MPDAG)12. The main drawback of this method is that if the PDAG obtained in the first step is incorrect due to statistical errors, it will lead to the second step failing to correctly incorporate the marginal causality or producing an incorrect MPDAG. Researchers have also proposed several score-based structure learning algorithms to address the problem of marginal causal relations. Chen et al.21 tackled marginal causal relations using an equivalence class tree (EC-Tree). Wang et al.22 proposed an exact search algorithm (ACOG) based on order graph to handle structure learning in the presence of incorrect marginal causal relations. However, these methods are computationally demanding and are therefore unable to manage networks with more than 35 nodes22,23. Li and van Beek24 proposed a constraint-based hill-climbing method, MINOBSx, to integrate direct and marginal causal relations relatively quickly. However, it still requires significant computation time compared to algorithms that do not consider marginal causal relations, especially when dealing with networks with more than 40 nodes.

In this paper, we examine incorporating marginal causal knowledge into a constraint-based structure learning algorithm. We characterize the conditional independence properties of marginal causal relations. Based on the proposed theorems, we propose an effective algorithm, the Marginal Prior Causal Knowledge PC (MPPC) algorithm, to learn the MPDAG given observational data and a set of causal prior knowledge. We then compare the performances of the MPPC algorithm with those of PC-stable algorithm, MMHC algorithm, PC-PDAG method proposed by Borboudakis and Tsamardinos and MINOBSx method proposed by Li using simulated networks and real networks.

The remainder of the paper is structured as follows: section “Methods” introduces the notation and definitions. Then, we propose theorems for indirect marginal causal prior knowledge and the MPPC algorithm. Next, in section “Results” we evaluate the proposed MPPC algorithm on both simulations and real networks. We discuss the advantages and limitations of this study in section “Discussion”.

Methods

Causal DAG models

Let \({\mathcal{G}}\left( {V,E} \right)\) be a DAG with a vertex set V and an edge set E. The undirected graph obtained from replacing all directed edges of \({\mathcal{G}}\) with undirected edges is the skeleton of \({\mathcal{G}}\). When there is an edge (undirected or directed) between two variables A and B in a graph \({\mathcal{G}}\), A and B are adjacent. When there is a directed edge \(A \to B\) in a graph \({\mathcal{G}}\), A is a parent of B; equivalently, B is a child of A. The parents, children, and adjacent vertices of variable A in a DAG \({\mathcal{G}}\) are denoted by \(pa\left( {A,{\mathcal{G}}} \right)\),\({ }ch\left( {A,{\mathcal{G}}} \right)\) and \(adj\left( {A,{\mathcal{G}}} \right)\), respectively. If there is a directed (undirected) edge between any two consecutive vertices \(\left( {X_{i} ,X_{i + 1} ,X_{i + 2} , \ldots ,X_{j} } \right)\), then it is a directed (undirected) path. A is a cause of B if there is a directed path from A to B. For simplicity, we use \({ }X_{i} \to X_{j} \) and \(X_{i} \dashrightarrow X_{j}\) to denote a directed edge and a directed path, respectively. We use  to denote there is no directed path from \(X_{i}\) to \(X_{j}\). If there is a directed path from \(X_{i}\) to \(X_{j}\), \(X_{i}\) is an ancestor (indirect cause) of \(X_{j}\) and \(X_{j}\) is a descendant of \(X_{i}\), denoted by \(X_{i} \in an\left( {X_{j} ,{\mathcal{G}}} \right)\) and \(X_{j} \in de\left( {X_{i} ,{\mathcal{G}}} \right)\). A v-structure is a structure of \(A \to B \leftarrow C\) with constraint that \(A\) is not adjacent to \(C\), and the vertex \(B\) is called a collider.

to denote there is no directed path from \(X_{i}\) to \(X_{j}\). If there is a directed path from \(X_{i}\) to \(X_{j}\), \(X_{i}\) is an ancestor (indirect cause) of \(X_{j}\) and \(X_{j}\) is a descendant of \(X_{i}\), denoted by \(X_{i} \in an\left( {X_{j} ,{\mathcal{G}}} \right)\) and \(X_{j} \in de\left( {X_{i} ,{\mathcal{G}}} \right)\). A v-structure is a structure of \(A \to B \leftarrow C\) with constraint that \(A\) is not adjacent to \(C\), and the vertex \(B\) is called a collider.

A DAG encodes the conditional independence (CI) relations induced by d-separation25. When two DAGs are Markov equivalent, their conditional independence relations are the same26,27. According to Pearl et al.28, if two DAGs have the same skeleton and colliders, then they are equivalent. An equivalence class can be represented by a CPDAG \({\mathcal{G}}^{*}\). Given a consistent background knowledge set K with respect to \({\mathcal{G}}^{*}\), it is possible that some undirected edges in \({\mathcal{G}}^{*}\) can be oriented, resulting in a partially directed graph \({ \mathcal{H}}\). A maximal PDAG (MPDAG)13 can be constructed by orienting some undirected edges in \({\mathcal{H}}\) using Meek’s criteria16.

Let \(P_{{\mathcal{G}}}\) be the joint distribution of a given DAG \({\mathcal{G}}\). The causal Markov assumption indicates that \(P_{{\mathcal{G}}}\) can be decomposed as \(P\left( {x_{1} , \ldots ,x_{n} } \right) = \mathop \prod \limits_{i = 1}^{n} P\left( {x_{1} |pa\left( {x_{1} ,{\mathcal{G}}} \right)} \right)\). If the conditional independencies in \(P_{{\mathcal{G}}}\) and in DAG \({\mathcal{G}}\) are the same, we say that \(P_{{\mathcal{G}}}\) is faithful to \({\mathcal{G}}\). In this paper, we also assume that there is no hidden variable or selection bias.

PC algorithm

PC (Peter and Clark) algorithm can find the global structure of high-dimensional CPDAG10. The PC algorithm utilizes CI tests to determine the skeleton and then employs Meek rules to establish the direction of edges. The PC-stable algorithm improved the oracle PC algorithm by removing part of its order-dependence9. The PC-stable algorithm, outlined in Algorithm 1, includes three main steps. Step 1 uses CI tests to ascertain the skeleton of DAG and separation sets between vertices. Then, Step 2 and 3 orient the remaining undirected arcs. The PC-stable algorithm is able to take prior knowledge into consideration. The PC algorithm is computationally efficient and is capable to learn large CPDAG of hundreds of variables29,30,31.

Algorithm 1. The PC PC-stable algorithm

Conditional independence conditions for marginal causal relation

Prior knowledge constitutes a set of constraints during structure learning. In this study, we take both positive marginal causal knowledge and negative marginal causal knowledge into consideration. A positive marginal causal relation between two vertices A and B indicates that there exists a directed path from A to B (i.e., \(A \in an\left( B \right)\)), denoted by \(A \dashrightarrow B\). Similarly, a negative marginal causal relation indicates no directed path from A to B (i.e., \(A \notin an\left( B \right)\)), denoted by  . In structure learning algorithms, we use whitelist marginal prior causal knowledge and blacklist marginal prior causal knowledge to denote a positive marginal causal relation and a negative marginal causal relation, respectively. In this section, we will show several properties of marginal prior causal knowledge. First, the concept of the minimal separating set is introduced.

. In structure learning algorithms, we use whitelist marginal prior causal knowledge and blacklist marginal prior causal knowledge to denote a positive marginal causal relation and a negative marginal causal relation, respectively. In this section, we will show several properties of marginal prior causal knowledge. First, the concept of the minimal separating set is introduced.

Definition 1

(minimal separating set). Given a directed graph \({ \mathcal{G}}\left( {{\varvec{V}},{\varvec{E}}} \right)\), a pair of vertices \(X,Y \in {\varvec{V}}\), and a set \(S \subset {\varvec{V}}\) such that \(X,Y \notin S\) and \(X \bot Y|S\). We say that S is a minimal separating set of X and Y if there is no subset of it \(S^{\prime} \subset S\) such that \(X \bot Y|S^{\prime}\), represented by \(msep\left( {X,Y} \right)\).

Definition 1 means that a separating set is minimal if and only if none of its subsets separates the target node pair. Note that, the separation sets outputted from PC-based algorithms are the minimal separating sets of node pairs. PC-based algorithms subsequently increase the number of vertices in a separating set during the adjacency phase and output the minimal separating set. Minimal separating sets are important for linking marginal prior causal knowledge with statistical conditional independence tests, as stated in the following lemma by Pearl32.

Lemma 1

Given a directed graph \({\mathcal{G}}\left( {{\varvec{V}},{\varvec{E}}} \right)\), a pair of nodes \(X,Y \in {\varvec{V}}\), and a set S is a minimal separating set of X and Y if \(S \cap \left( {an\left( X \right) \cup an\left( Y \right)} \right) = S\).

With Lemma 1 and the pairwise independencies provided by Koller and Friedman33, we can efficiently find a minimal separating set of node pair X and Y if we have the marginal causal relation between X and Y, and obtain the following theorems.

Theorem 1

Let \({\mathcal{G}}\) be a DAG. For any two distinct non-adjacent vertices X and Y in \({\mathcal{G}}\). X is an ancestor of Y (marginal causal relation \(X \dashrightarrow Y\)) in \({\mathcal{G}}\) if every variable in the minimal separating set of X and Y is an ancestor of Y. Specifically, when the number of nodes in the minimal separation set S is 1, then node is an ancestor of Y and a descendant of X.

Theorem 2.

Let \({\mathcal{G}}\) be a DAG. For any two distinct non-adjacent vertices X and Y in \({\mathcal{G}}\). X is not an ancestor of Y (marginal causal relation  ) in \({\mathcal{G}}\) if every variable in the minimal separating set of X and Y is not a descendant of X.

) in \({\mathcal{G}}\) if every variable in the minimal separating set of X and Y is not a descendant of X.

Theorems 1 and 2 indicates that the marginal causal relations can be transformed into a necessary causal relation set based on the minimal separating sets, and minimal separating sets can be found using the conditional independence tests of observational data. Thus, we can incorporate marginal causal relations with observational data before learning the whole PDAG. With Theorems 1 and 2, we can iteratively decompose the marginal causal relations into necessary causal relations.

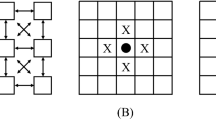

Example 1

Figure 1 is an example of Theorem 1. Figure 1a is the underlying true DAG \({\mathcal{G}}\). Given the complete undirected graph \({\mathcal{C}}\) in Fig. 1b and prior knowledge that A is an ancestor of E (\(A \dashrightarrow E\)), we would like to find the corresponding necessary causal relations. Note that, the true DAG is unknown. Suppose that we also have conditional independence, which states that A and E are independent with respect to separating set \(\left\{ {C,D} \right\}\) and that A and E are dependent with respect to only C or D or \(\emptyset\), i.e., \(\left\{ {C,D} \right\} \in msep\left( {A,E} \right)\). As shown in Fig. 1c, based on Theorem 1, the necessary causal relation of prior knowledge \(A \dashrightarrow E\) is that E is a descendant of C and D (\(C \dashrightarrow E\) and \(D \dashrightarrow E\)).

An example to illustrate Theorem 1.

Example 1 shows that we can transform marginal prior causal knowledge into necessary causal relations. In addition to leveraging the conditional independence property of marginal causal relations, we also utilize the topological order property33 inherent in marginal causal relations. By applying Causal Relation Scanning Algorithm (Algorithm 2) to the positive and negative marginal causal relations obtained through the conditional independence property, we derive a new topological order relation among the nodes.

Algorithm 2. Causal Relation Scanning Algorithm

Marginal Prior Causal Knowledge PC (MPPC) algorithm

We now propose the Marginal Prior Causal Knowledge PC (MPPC) algorithm. MPPC algorithm can be treated as a modification of the PC-stable algorithm with marginal prior causal knowledge. The main modification of the MPPC algorithm is in the adjacency phase, which is encoded in the pseudo-code of Algorithm 3, lines 1–24.

Originally, the adjacency phase consisted of creating a complete undirected graph and testing conditional independence of every pair of vertices based on a threshold \(\alpha\). The skeleton graph is estimated after the adjacency search phase. We modified the adjacency phase by adding the testing procedure of marginal causal relations based on the prior knowledge set \({\mathbf{K}} = \left\{ K \right\}_{i = 1}^{M}\) and Theorem 1 and 2, where each \(K_{i}\) is of the form \(X_{i} \dashrightarrow X_{j}\) or  , \(X_{i} ,X_{j} \in V\). This means that for every variable-pair in K, we record the minimal separating set according to the conditional independence test and add new marginal causal relations to K based on Theorems 1 and 2. After the adjacency phase, we orient the edges in skeleton to the direction of causal relation set K according to the acyclic assumption, that is, for any \(X_{i} \dashrightarrow X_{j}\) in K, if \(X_{i} - X_{j}\) in the skeleton, then we orient \(X_{i} - X_{j}\) as \(X_{i} \to X_{j}\).

, \(X_{i} ,X_{j} \in V\). This means that for every variable-pair in K, we record the minimal separating set according to the conditional independence test and add new marginal causal relations to K based on Theorems 1 and 2. After the adjacency phase, we orient the edges in skeleton to the direction of causal relation set K according to the acyclic assumption, that is, for any \(X_{i} \dashrightarrow X_{j}\) in K, if \(X_{i} - X_{j}\) in the skeleton, then we orient \(X_{i} - X_{j}\) as \(X_{i} \to X_{j}\).

It is worth noting that Algorithm 3 applies not only to marginal prior causal knowledge, but also to a mixture of marginal prior causal knowledge and direct prior knowledge, since direct prior knowledge can be treated as marginal prior causal knowledge between adjacent vertices. If prior knowledge set K includes a subset of direct prior knowledge, there will be directed arcs reflecting this direct prior knowledge in the final graph.

For the v-structure phase, we find every unshielded triple \(X_{i} - X_{j} - X_{k}\) in the skeleton and test whether \(X_{j}\) is in the separating set of \(X_{i}\) and \(X_{k}\). If \(X_{j}\) does not exist in every separating set of \(X_{i}\) and \(X_{k}\) we obtained from adjacency phase, then \(X_{i} - X_{j} - X_{k}\) is marked as a v-structure \(X_{i} \to X_{j} \leftarrow X_{k}\). Finally, we apply Meek rules in the orientation phase, which is similar to the oracle PC-stable algorithm. These rules are applied repeatedly until no additional edges can be oriented, resulting in a MPDAG16,27.

Example 2

Figure 2 is an example of how MPPC works. Figure 2a shows the true DAG \({\mathcal{G}}\). Suppose that we have background knowledge that states that A is an ancestor of E (\(A \dashrightarrow E\)). The background knowledge and complete undirected graph \({\mathcal{C}}\) are shown in Fig. 2b. Figure 2c–e shows the adjacency step of MPPC algorithm, where l is the number of vertices in the separating sets. From Fig. 2c to d, three edges are removed, and two causal relations (\(A \dashrightarrow B\) and \(B \dashrightarrow E\)) are added based on prior knowledge \(A \dashrightarrow E\) and conditional independence \(A \bot E|B\). From Fig. 2d to e, two additional causal relations are added similarly based on Theorem 1. Figure 2f shows the maximal partial directed graph resulting from orienting the skeleton in Fig. 2e. In addition to \(\left\{ {A \to B,C \to E,D \to E} \right\}\), \(B - C\) and \(B - D\) are oriented as \(B \to C\) and \(B \to D\), respectively, since \(A \to B - C\) and \(A \to B - D\) are not v-structure based on separating sets. Thus, Fig. 2f and the causal relations in Fig. 2e are the outputs of the MPPC algorithm.

Algorithm 3. MPPC algorithm

Simulations

In the experimental simulations, we use the random.graph function in R package bnlearn to randomly generate the structure of DAGs and randomly choosing \(p \in \left\{ {10,{ }20,{ }30} \right\}\) percent of marginal causal relations according to the generated DAGs. For real-world networks, we use ECOLI70, MAGIC-IRRI and ARTH150 in the Bayesian Network Repository34. Then, we use linear structural equation models to generate synthetic data sets based on the structures. We compare the MPPC method with PC-stable9 (with only directed prior knowledge, denoted by PC(d_exp)) algorithm, PC-stable (with marginal and directed prior knowledge, denoted by PC(all_exp)), the PC-PDAG algorithm proposed by Borboudakis and Tsamardinos12 (denoted by PC-PDAG), and Max–Min Hill-Climbing algorithm35 (denoted by MMHC). We also use the MINOBSx24 method as a comparative approach in the instance analysis based on the ALARM network. Note that, PC-PDAG algorithm needs PDAG as input, thus we use the PDAG learned from PC-stable algorithm without prior knowledge as suggested by Borboudakis and Tsamardinos12. In all experiments, PC-stable algorithm and MMHC algorithm is called from R-package bnlearn and PC-PDAG algorithm is called from MATLAB function provided by Borboudakis and Tsamardinos12.

We generate random DAGs \({\mathcal{G}}\) with n nodes and mean in-degree d, where n is chosen from \(\left\{ {50,{ }100,{ }150,200,250} \right\}\) and d is chosen from \(\left\{ {1,{ }2,{ }3,{ }4} \right\}\). For a sampled \({\text{G}}\left( {n,d} \right)\) graph, we draw an edge weight \(\beta_{ij}\) from a Uniform distribution, \({\text{U}}\left( {0.8,1.6} \right)\), for each directed edge \(X_{i} \to X_{j}\) in \({\mathcal{G}}\). We use the following linear structured equation model to simulate data,

where \(\in_{1} , \ldots , \in_{n}\) are independent \({\mathcal{N}}\left( {0,1} \right)\) noises36. We then generate \( N = 2000,10000\) samples for every simulated DAG. The marginal prior causal knowledge set K with respect to \({\mathcal{G}}\) was generated by randomly choosing \(p \in \left\{ {10,{ }20,{ }30} \right\}\) percent of marginal causal relations according to \({\mathcal{G}}\), i.e., variable pairs such as \(\left( {X,Y} \right)\), where X is an ancestor of Y (\(X \dashrightarrow Y\)) or X is not an ancestor of Y ( ). For each situation, we generated 100 (\({\mathcal{G}},{\mathbf{K}}\)) pairs.

). For each situation, we generated 100 (\({\mathcal{G}},{\mathbf{K}}\)) pairs.

We use precision, recall, F1 score, Structural Hamming Distance (SHD) and Constraint Satisfaction Rate22 (CSR) to evaluate and compare the performances of different methods. For each (\({\mathcal{G}},{\mathbf{K}}\)) pair in the simulation and experimental studies, we computed the corresponding MPDAG and used the MPDAG as the gold standard for comparison with the estimated network. Given that the outputs of MMHC algorithm and MINOBSx algorithm are DAGs, we also reformulated the criterion for True Positive: for an undirected edge in an MPDAG, an edge in the estimated network with any direction is considered a True Positive. Precision is defined as \({\text{Precision}} = \frac{{\text{Ture Positives}}}{{\left( {{\text{Ture Positives}} + {\text{False Positives}}} \right)}}\) and recall is defined as \({\text{Recall}} = \frac{{\text{Ture Positives}}}{{\left( {{\text{Ture Positives}} + {\text{False Negatives}}} \right)}}\). F1 score is defined as \({\text{ F}}1 = 2 \times \frac{precision \times recall}{{precision + recall}}\). The SHD between two PDAGs, as implemented in bnlearn35, measures the distance between the underlying true graph and the learned graph. The CPU time and the total number of statistical tests (number of calls to test conditional independence) are used to evaluate the algorithm efficiency.

Evaluation with real-world networks and dataset

In addition to the randomized networks, three large real-world Gaussian BN taken from the bnlearn repository were also utilized: ECOLI7037, MAGIC-IRRI38 and ARTH15039. ECOLI70 is a network with 46 nodes and 70 arcs, representing the protein-coding genes of E. coli. MAGIC-IRRI is a large network with 64 nodes and 102 arcs, modeling multiple traits in plant genetics. The third network is a large network, ARTH150, with 107 nodes and 150 arcs, capturing gene expression and proteomics data of Arabidopsis thaliana. The BN was used to generate data, with the sample size set to 2000. The marginal prior causal knowledge set K with respect to the networks was generated by randomly choosing \(p \in \left\{ {10,{ }20,{ }30} \right\}\) percent of the marginal causal relations. We generated 100 (observational data, knowledge) pairs from each of the three networks.

We utilize the datasets provided by Beinlich40 to compare the effectiveness and efficacy of various structure learning methodologies including MINOBSx algorithm. ALARM is a medium network with 37 nodes and 46 arcs. The marginal prior causal knowledge set K with respect to the networks was generated by randomly choosing \(p \in \left\{ {5,{ }10,{ }20} \right\}\) percent of the marginal causal relations. To evaluate the performance of different methods, we calculate the CSR, SHD and running time.

Ethics approval and patient consent statement

Ethical approval was not sought, because this study only involved public networks and data.

Results

Evaluation with random DAGs

Figures 3 and 4 show the experimental results for randomly generated causal models, with 10,000 samples, \(p = 10,{ }20,{ }30\) and \({\text{n}} = 50,{ }100,{ }150,200,250\) (Fig. 3), and \(p = 10,{ }20,{ }30\) and \({\text{d}} = 1,{ }2,{ }3,{ }4\) (Fig. 4). The average F1 score (Figs. 3A and 4A), SHD (Figs. 3B and 4B) and CSR (Figs. 3C and 4C) of five methods (PC-stable with only directed prior knowledge, PC-stable with all knowledge, MMHC, PC-PDAG and MPPC) are reported. The results of precision and recall, and results on randomly generated graphs with 2000 samples can be found in Supplement S.2.

We first compare the effectiveness of MPPC algorithm with other methods. Clearly, the F1 score of the MPPC algorithm is statistical significantly higher than that of the other methods (Supplement Table S2), and the SHD of the MPPC algorithm is statistical significantly lower than that of the other methods (Supplement Table S1), especially when the number of vertices is large. The CSR results indicate that the MPPC algorithm consistently achieves a high CSR, close to 1, in all cases. This demonstrates that the MPPC algorithm is more effective at incorporating prior knowledge compared to other structure learning methods. While PCPDAG method has poorer CSR and SHD than MPPC. This is because PC-PDAG needs to learn the PDAG using PC without knowledge and then incorporate marginal prior causal knowledge with the learned PDAG. If the PDAG is wrong, PC-PDAG algorithm can hardly incorporate marginal prior causal knowledge since the knowledge may conflict with the learned wrong graph. When the number of vertices or edge degree is large, the probability of an incorrect PDAG being learned from PC is larger. The performance of MPPC is usually better than other methods since it directly incorporates marginal prior causal knowledge with observational data and statistical tests during structure learning.

We next compare the efficiency of MPPC algorithm with other methods. The average number of statistical tests (Fig. 5A) and CPU time (Fig. 5B) are reported in Fig. 5. The CPU time of PC-PDAG method is the total time needed to learn the PDAG and incorporate the prior knowledge. The results show that it takes significantly longer to estimate the maximal PDAG when the number of vertices is large. The figure suggests that the MPPC method is faster than other constraint-based methods and is similar to MMHC method. The number of CI tests of MPPC is smaller than that of other methods. Besides, the running time of PC-PDAG significantly increases but that of MPPC is stable when the percentage of prior knowledge increases. This is because PC-PDAG needs to learn a PDAG and then recurrently incorporate each prior knowledge, while MPPC can decrease the number of statistical tests using prior knowledge.

Evaluation with real networks

The results of the real-world networks are given in Fig. 6. Similar to the simulation results of randomly generated graphs. The F1 score (the first row of Fig. 6) of MPPC algorithm is higher than that of other methods and the SHD (the second row of Fig. 6) of MPPC is lower. The CSR results indicate that the MPPC algorithm consistently achieves a high CSR. The F1 score of MPPC increases significantly when prior knowledge increases. On the other hand, the CPU time and the number of statistical tests of the MPPC algorithm are lower than those of the other methods in large networks (Supplement S.3).

Evaluation with real dataset

We apply the methods to ALARM dataset of Beinlich40. The results of MPPC, PC-PDAG and MINOBSx method are presented in Table 1, which provides a summary of the CSR, SHD and CPU time. The results indicate that the MPPC method exhibits better performance than PC-PDAG, but a slight inferiority to the MINOBSx method in ALARM network. However, MPPC algorithm has significantly faster computational speed compared to MINOBSx algorithm.

Discussion

Causal discovery from observational data has been used in numerous fields. In certain scenarios, researchers may possess prior knowledge in the form of marginal causal relations. We propose a constraint-based algorithm to estimate the maximal PDAG from observational data with marginal prior causal knowledge. We provide conditional independence properties to utilize marginal causal relations. Based on these properties, we propose a constraint-based structure learning algorithm with marginal prior causal knowledge, the MPPC algorithm, to estimate the maximal PDAG. The experiment results show that the network structure estimated by the MPPC algorithm in large networks is closer to the real structure, with a lower SHD compared to other structure learning algorithms. The MPPC algorithm reduces the number of conditional independence tests compared to the PC-PDAG algorithm, thereby lowering the computational burden. Additionally, the MPPC algorithm effectively incorporates marginal causal relations, resulting in estimated networks that satisfy a higher proportion of prior knowledge. For small and medium networks, the MINOBSx algorithm achieves higher accuracy in the estimated network structure but requires significantly more computational time, which can be up to tens of times more than that required by the MPPC algorithm. The proposed MPPC algorithm offers a trade-off between accuracy and efficiency. In the context of practical studies, researchers can select more applicable structure learning algorithms according to their specific requirements.

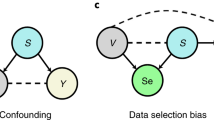

Our study has limitations. Firstly, we considered only directed marginal causal relationships in this study, including positive marginal causal relations (\(X \dashrightarrow Y\)) and negative marginal causal relationships ( ). However, undirected marginal relations without clear direction (\(X - - Y\)) may exist in practice. These marginal undirected relations might arise from directed causal relations or confounding paths between nodes. Additionally, in the presence of unmeasured variables, such associations could result from selection bias. Integrating marginal undirected relations with structure learning algorithms remains a direction for future research. Furthermore, we have only considered continuous or discrete variables in our study and have not addressed the case of mixed-type data. Adapting the algorithm for mixed-type data requires modifying the conditional independence test. In addition, our proposed MPPC algorithm dose not account for latent variables or selection bias. A possible future work is to examine statistical properties of marginal causal relations under the framework of Maximal Ancestral Graphs41 (MAGs) and incorporating marginal prior causal knowledge with the FCI41,42 algorithm to deal with hidden variables and selection bias. In our experiments, we randomly select the marginal prior causal knowledge from all possible variable pairs, which may cause a waste of knowledge. It is worth considering the amount of information of different causal relations to improve the efficiency of algorithm43. Another possible extension is to consider the degree of belief in each causal prior knowledge44.

). However, undirected marginal relations without clear direction (\(X - - Y\)) may exist in practice. These marginal undirected relations might arise from directed causal relations or confounding paths between nodes. Additionally, in the presence of unmeasured variables, such associations could result from selection bias. Integrating marginal undirected relations with structure learning algorithms remains a direction for future research. Furthermore, we have only considered continuous or discrete variables in our study and have not addressed the case of mixed-type data. Adapting the algorithm for mixed-type data requires modifying the conditional independence test. In addition, our proposed MPPC algorithm dose not account for latent variables or selection bias. A possible future work is to examine statistical properties of marginal causal relations under the framework of Maximal Ancestral Graphs41 (MAGs) and incorporating marginal prior causal knowledge with the FCI41,42 algorithm to deal with hidden variables and selection bias. In our experiments, we randomly select the marginal prior causal knowledge from all possible variable pairs, which may cause a waste of knowledge. It is worth considering the amount of information of different causal relations to improve the efficiency of algorithm43. Another possible extension is to consider the degree of belief in each causal prior knowledge44.

Data availability

All the data are publicly available at https://github.com/YuYF97/MPPC and https://doi.org/https://doi.org/10.1126/science.1105809. All the code is availability at https://github.com/YuYF97/MPPC.

References

Flores, M. J., Nicholson, A. E., Brunskill, A., Korb, K. B. & Mascaro, S. Incorporating expert knowledge when learning Bayesian network structure: A medical case study. Artif. Intell. Med. 53, 181–204 (2011).

Asvatourian, V., Leray, P., Michiels, S. & Lanoy, E. Integrating expert’s knowledge constraint of time dependent exposures in structure learning for Bayesian networks. Artif. Intell. Med. 107, 101874 (2020).

Rasmussen, Z. A. et al. Examining the relationships between early childhood experiences and adolescent and young adult health status in a resource-limited population: A cohort study. PLOS Med. 18, e1003745 (2021).

Friedman, N., Linial, M., Nachman, I. & Peer, D. Using Bayesian networks to analyze expression data. In Proceedings of The Fourth Annual International Conference on Computational Molecular Biology 127–135 (2000).

Pearl, J. Causality (Cambridge University Press, 2009).

Spirtes, P. & Glymour, C. An algorithm for fast recovery of sparse causal graphs. Soc. Sci. Comput. Rev. 9, 62–72 (1991).

Margaritis, D. & Thrun, S. Bayesian network induction via local neighborhoods. Adv. Neural Inf. Process. Syst. 12, 145 (1999).

Tsamardinos, I., Aliferis, C. F. & Statnikov, A. Time and sample efficient discovery of Markov blankets and direct causal relations. In Proceedings of the Ninth ACM SIGKDD International Conference on Knowledge Discovery and Data Mining 673–678 (2003).

Colombo, D. & Maathuis, M. H. Order-independent constraint-based causal structure learning. J. Mach. Learn. Res. 15, 3921–3962 (2014).

Spirtes, P., Glymour, C. & Scheines, R. Causation, Prediction, and Search (Springer, Uk, 2001).

Borboudakis, G., Triantafilou, S., Lagani, V. & Tsamardinos, I. A constraint-based approach to incorporate prior knowledge in causal models. In ESANN (2011).

Borboudakis, G. & Tsamardinos, I. Incorporating causal prior knowledge as path-constraints in bayesian networks and maximal ancestral graphs. In Proceedings of the 29th International Coference on International Conference on Machine Learning 427–434 (2012).

Fang, Z. & He, Y. Ida with background knowledge. In Conference on Uncertainty in Artificial Intelligence 270–279 (PMLR, 2020).

Global Burden of Disease Liver Cancer Collaboration et al. The burden of primary liver cancer and underlying etiologies from 1990 to 2015 at the global, regional, and national level: Results from the global burden of disease study 2015. JAMA Oncol. 3, 1683 (2017).

Amar, D., Sinnott-Armstrong, N., Ashley, E. A. & Rivas, M. A. Graphical analysis for phenome-wide causal discovery in genotyped population-scale biobanks. Nat. Commun. 12, 350 (2021).

Meek, C. Causal inference and causal explanation with background knowledge. In Proceedings of the Eleventh conference on Uncertainty in artificial intelligence 403–410 (1995).

Angelopoulos, N. & Cussens, J. Bayesian learning of Bayesian networks with informative priors. Ann. Math. Artif. Intell. 54, 53–98 (2008).

Cooper, G. F. & Herskovits, E. A Bayesian method for the induction of probabilistic networks from data. Mach. Learn. 9, 309–347 (1992).

Heckerman, D., Geiger, D. & Chickering, D. M. Learning Bayesian networks: The combination of knowledge and statistical data. Mach. Learn. 20, 197–243 (1995).

He, Y., Jia, J. & Yu, B. Counting and exploring sizes of Markov equivalence classes of directed acyclic graphs. J. Mach. Learn. Res. 16, 2589–2609 (2015).

Chen, E. Y.-J., Shen, Y., Choi, A. & Darwiche, A. Learning Bayesian networks with ancestral constraints. In Advances in Neural Information Processing Systems, vol. 29 (eds. Lee, D. et al.) (Curran Associates, Inc., 2016).

Wang, Z., Gao, X., Yang, Y., Tan, X. & Chen, D. Learning Bayesian networks based on order graph with ancestral constraints. Knowl. Based Syst. 211, 106515 (2021).

Wang, Z., Gao, X., Tan, X. & Liu, X. Learning Bayesian networks using A* search with ancestral constraints. Neurocomputing 451, 107–124 (2021).

Li, A. & van Beek, P. Bayesian network structure learning with side constraints. In Proceedings of the Ninth International Conference on Probabilistic Graphical Models, vol 72 (eds. Kratochvíl, V. & Studený, M.) 225–236 (PMLR, 2018).

Pearl, J. Probabilistic Reasoning in Intelligent Systems: Networks of Plausible Inference (Morgan kaufmann, 1988).

Verma, T. S. & Pearl, J. Equivalence and synthesis of causal models. In Probabilistic and Causal Inference: The Works of Judea Pearl 221–236 (2022).

Andersson, S. A., Madigan, D. & Perlman, M. D. A characterization of Markov equivalence classes for acyclic digraphs. Ann. Stat. 25, 505–541 (1997).

Pearl, J., Geiger, D. & Verma, T. Conditional independence and its representations. Kybernetika 25, 33–44 (1989).

Kalisch, M. et al. Understanding human functioning using graphical models. BMC Med. Res. Methodol. 10, 14 (2010).

Stekhoven, D. J. et al. Causal stability ranking. Bioinformatics 28, 2819–2823 (2012).

Zhang, X. et al. Inferring gene regulatory networks from gene expression data by path consistency algorithm based on conditional mutual information. Bioinformatics 28, 98–104 (2012).

Tian, J., Paz, A. & Pearl, J. Finding Minimal D-Separators (Citeseer, 1998).

Koller, D. & Friedman, N. Probabilistic Graphical Models: Principles and Techniques (MIT press, 2009).

Scutari, M. Learning Bayesian networks with the bnlearn R package. J. Stat. Softw. 35, 456 (2010).

Tsamardinos, I., Brown, L. E. & Aliferis, C. F. The max-min hill-climbing Bayesian network structure learning algorithm. Mach. Learn. 65, 31–78 (2006).

Fang, Z., Liu, Y., Geng, Z., Zhu, S. & He, Y. A local method for identifying causal relations under Markov equivalence. Artif. Intell. 305, 103669 (2022).

Schäfer, J. & Strimmer, K. A shrinkage approach to large-scale covariance matrix estimation and implications for functional genomics. Stat. Appl. Genet. Mol. Biol. 4, 456 (2005).

Scutari, M., Howell, P., Balding, D. J. & Mackay, I. Multiple quantitative trait analysis using Bayesian networks. Genetics 198, 129–137 (2014).

Opgen-Rhein, R. & Strimmer, K. From correlation to causation networks: A simple approximate learning algorithm and its application to high-dimensional plant gene expression data. BMC Syst. Biol. 1, 37 (2007).

Beinlich, I. A., Suermondt, H. J., Chavez, R. M. & Cooper, G. F. The ALARM monitoring system: A case study with two probabilistic inference techniques for belief networks. In AIME 89, vol. 38 (eds. Hunter, J. et al.) 247–256 (Springer, 1989).

Richardson, T. & Spirtes, P. Ancestral graph Markov models. Ann. Stat. 30, 962–1030 (2002).

Zhang, J. On the completeness of orientation rules for causal discovery in the presence of latent confounders and selection bias. Artif. Intell. 172, 1873–1896 (2008).

He, Y.-B. & Geng, Z. Active learning of causal networks with intervention experiments and optimal designs. J. Mach. Learn. Res. 9, 2523–2547 (2008).

Tan, M., AlShalalfa, M., Alhajj, R. & Polat, F. Combining multiple types of biological data in constraint-based learning of gene regulatory networks. In 2008 IEEE Symposium on Computational Intelligence in Bioinformatics and Computational Biology 90–97 (IEEE, 2008).

Acknowledgements

We want to acknowledge the investigators of the bnlearn repository.

Funding

FX was supported by the National Key Research and Development Program of China under Grant 2020YFC2003500.

Author information

Authors and Affiliations

Contributions

FX and HL conceived the study. YY, LH and XL contributed to the simulation analysis. SW a contributed to figures. YY, HL and FX wrote and modified the manuscript. All authors reviewed and approved the final manuscript.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Yu, Y., Hou, L., Liu, X. et al. A novel constraint-based structure learning algorithm using marginal causal prior knowledge. Sci Rep 14, 19279 (2024). https://doi.org/10.1038/s41598-024-68379-7

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-024-68379-7