Abstract

Net radiation (Rn), a critical component in land surface energy cycling, is calculated as the difference between net shortwave radiation and longwave radiation at the Earth’s surface and holds significant importance in crop models for precision agriculture management. In this study, we examined the performance of four machine learning models, including extreme learning machine (ELM), hybrid artificial neural networks with genetic algorithm models (GANN), generalized regression neural networks (GRNN), and random forests (RF), in estimating daily Rn at four representative sites across different climatic zones of China. The input variables included common meteorological factors such as minimum and maximum temperature, relative humidity, sunshine duration, and shortwave solar radiation. Model performance was assessed and compared using statistical parameters such as the correlation coefficient (R2), root mean square errors (RMSE), mean absolute errors (MAE), and Nash–Sutcliffe coefficient (NS). The results indicated that all models slightly underestimated actual Rn, with linear regression slopes ranging from 0.810 to 0.870 across different zones. The estimated Rn was found to be comparable to observed values in terms of data distribution characteristics. Among the models, the ELM and GANN demonstrated higher consistency with observed values, exhibiting R2 values ranging from 0.838 to 0.963 and 0.836 to 0.963, respectively, across varying climatic zones. These values surpassed those of the RF (0.809–0.959) and GRNN (0.812–0.949) models. Additionally, the ELM and GANN models showed smaller simulation errors in terms of RMSE, MAE, and NS across the four climatic zones compared to the RF and GRNN models. Overall, the ELM and GANN models outperformed the RF and GRNN models. Notably, the ELM model's faster computational speed makes it a strong recommendation for Rn estimates across different climatic zones of China.

Similar content being viewed by others

Introduction

Solar radiation is the essential energy source driving biological and physical processes on the Earth's surface1,2. Accurate assessment and prediction of solar radiation form the foundation for precision agriculture management, including quantifying crop water requirements and evaluating crop productivity3. However, instrumentation for measuring solar radiation is installed only at selected sites within specific regions, constrained by cost and technological limitations4,5,6,7,8,9. For instance, China has 752 national weather stations, but fewer than one-fifth are equipped to observe solar radiation10. Similarly, in America, the ratio of stations observing solar radiation to those observing air temperature is less than 1:10011. This scarcity underscores the importance of estimating solar radiation using climatic variables12.

Numerous models and techniques have been proposed for estimating solar radiation, encompassing the Angström–Prescott linear regression model, the Annandale method, and various machine learning (ML) models9,13. Among them, ML models, particularly artificial neural networks (ANN), have emerged as promising methods for solar radiation estimation14,15,16,17,18. Wang18 compared the accuracy of multilayer perceptron (MLP) models, GRNN models and radial basis neural network (RBNN) models in estimating daily solar radiation; results showed that MLP and RBNN models performed better than GRNN and the improved Bristow Campbell model. These studies highlighted the good potential for solar energy applications across various regions.

New ML methods have also been introduced for solar radiation simulation, such as the wavelet-coupled support vector machine (W-SVM) approach19, the Wavelet Transform (WT) algorithm incorporated into the SVM model20, and the extreme learning machine (ELM)21,22. These novel approaches have demonstrated superiority over traditional models in terms of efficiency, viability, and accuracy.

Nevertheless, while ML models have proven effective in estimating or predicting solar radiation, their performance may differ across stations and climatic regions. Moreover, existing studies primarily focus on solar radiation estimation using ML models, leaving the applicability of ML models to estimate all-wave net surface radiation (Rn) unexplored. Rn, representing the net flux density of radiation at the Earth's surface, plays a vital role in biological reactions such as photosynthesis and physical processes like water heat flux cycling23,24,25, thus Rn is generally considered as a most important input variable in calculating crop evapotranspiration and crop growth model. Although approximately 120 stations observe global solar radiation within China's meteorological network, even fewer are equipped with Rn monitoring instruments. Therefore, the development of applicable models for Rn estimation can furnish essential tools for investigating Earth's surface energy distribution, with implications for eco-hydrological processes, crop growth modeling, and solar energy development26,27.

An integration study focusing on Rn estimation using an ML model based on meteorological variables across diverse climatic zones has not been extensively explored, which motivates the present research. This study aims to estimate Rn through four ML models (the ELM, backpropagation neural networks optimized by a genetic algorithm (GANN), random forests (RF), and GRNN, and subsequently compare the performances of these models in Rn estimation across different climatic zones in China.

Materials and methods

Study sites and data sets

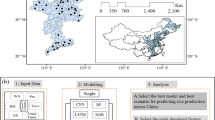

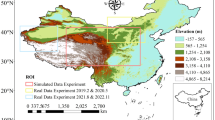

In the present study, four meteorological stations were strategically selected to represent different climatic zones within China. These include the Urumqi station, situated in the temperate continental zone; the Lhasa station, located in the mountain plateau zone; Beijing, representing the temperate monsoon zone; and the Kunming station, indicative of the subtropical monsoon zone. The geographical distribution of these four stations is depicted in Fig. 1.

The temperate continental zone where Urumqi station is typically an arid region, characterized by low annual precipitation (approximately 300 mm yr−1, as detailed in Table 1). Conversely, the mountain plateau, temperate monsoon, and subtropical monsoon zones represented by Lhasa, Beijing, and Kunming stations are generally categorized as semi-arid, semi-humid, and humid regions. A summary of the meteorological variables in each station is available in Table 1.

The daily meteorological dataset used for this study was obtained from the National Meteorological Information Center of China (http://data.cma.cn/). This comprehensive dataset encompasses daily maximum air temperature (Tmax), minimum air temperature (Tmin), sunshine duration (S), relative humidity (RH), shortwave solar radiation (Rs), and net radiation (Rn) for the period 2000–2014. It is important to note that these datasets were subject to rigorous quality control by the National Meteorological Administration. This was carried out with standard data processing methods. Any gaps in the data, caused by issues such as instrument maintenance or power failures, were addressed using linear interpolation. This method ensures continuity and reliability in the data, facilitating more robust analyses and conclusions.

The Random Forest analysis was conducted to identify the environmental factors (Tmax, Tmin, S, RH and Rs) significantly affecting the response variable Rn using the“rfPermute”package28. As shown in Fig. 2. The importance of the climatic variables to Rn prediction followed the order of RH, Rs, S, Tmin and Tmax in all stations, with the RH as the most important variable. The importance of the same climatic variables to Rn also varied across the sites, as quantified by the Mean Decrease in Accuracy (%IncMSE).

Artificial intelligence models for solar radiation estimation

Extreme learning machine

The Extreme Learning Machine (ELM) represents a specific form of the Feedforward Neural Network (FFNN) model, as illustrated in Fig. 3. Structurally, an FFNN model typically encompasses three principal layers: the input layer (containing the input variables), the hidden layer (comprising neurons), and the output layer (yielding the predicted Rn in this study).

Distinctively, the ELM model permits random assignment of the input weights and analytical determination of the output weight. This leads to advantages such as superior learning efficiency and enhanced generalization capability as compared to traditional FFNN models. Within the ELM framework, various input parameters can be systematically adjusted, ensuring the integrity of mapping between different layers. Conversely, in traditional approaches, the parameters of the hidden layers are assigned randomly, without subsequent modification.

The net result of this architectural distinction is that the learning or training efficiency of the ELM model is typically far greater than that of conventional feed-forward network learning algorithms29,30. For an in-depth exploration of the theoretical foundations and applications of ELM, readers are directed to several seminal works in the field20,29,30,31,32,33,34.

In an FFNN model, multiple neurons facilitate the connections between the distinct layers of the network. Assume that the number of input variables is denoted as n and the number of output variables is represented as m. Correspondingly, the input layer of the FFNN model will consist of n neurons, while the output layer will comprise m neurons. Interposed between these input and output layers, the hidden layer will incorporate l neurons. This structure establishes a hierarchical framework for information processing, with each layer transforming the input data towards the desired output through the application of weights, biases, and activation functions. The connection weight w was assumed as follows:

where wij is the weight between the ith neuron in the input layer and the jth neuron in the hidden layer.Similarly, the connection weights β between the neurons in the hidden layer and output layer is described as:

Assuming b for hidden layer neuron is:

The input matrix X is estimated as:

The output matrix Y is estimated as:

Therefore, T is constructed as follows:

where \({\varvec{w}}_{i} = \, [w_{i1} ,w_{i2} , \cdots ,w_{in} ]\); \({\varvec{x}}_{j} = \, [x_{1j} ,x_{2j} , \cdots ,x_{nj} ]^{T}\). g(x) is the activation function.

Equation (6) is rewritten as:

where Tʹ represents the transposition of T; H represents the hidden layer output matrix, and it is presented as:

ELM can study l different samples with tiny errors32,35, since it assigns the random variables to the hidden nodes and computes the output weights by matrix H, even though the number of hidden neurons is less than that of distinct samples21. There are two principles in the ELM model:

Theorem 1

Assuming that a given FFNN l additive nodes, and g(x) is differentiable in any intervals, the H is invertible and \(\left\| {\user2{H\beta - T}^{\prime } } \right\| = 0\)32,35.

Theorem 2

Given any small error ε > 0, and g(x) is infinitely differentiable in any intervals, there exists l ≤ n such that any different input vectors are generated according to any continuous probability distribution \(\left\| {{\varvec{H}}_{N \times M} {\varvec{\beta}}_{M \times m} - \user2{T^{\prime}}} \right\| < \varepsilon\) with a probability of 132,35.

Based on Theorem 1, we can derive:

where yi = [y1j, y2j, ···, ymj]T (j = 1, 2, ···, Q).

In many cases, the number of hidden neurons is smaller than the training data Q. Thus, according to Theorem 2:

ELM randomly determines w and b, and remains unchanged during training. The output weight β is determined by solving the least squares solution:

and the solution is:

where H + represents the generalized inverse of the matrix H33.

Artificial neural networks

The process of using an ANN model is mainly divided into two phases: training and testing. The training phase includes variable selection, data segmentation, and normalization. Within the network, independent neurons are connected by transfer functions, forming a pathway for information flow. The ANN begins by receiving data and learning through a process of fitness adjustment. The output results are compared to the target values, and if the error exceeds the expected threshold, forward propagation is replaced by backpropagation. This enables the network to change the weights and thresholds of neurons in each layer, reducing the error in signal transmission. The ANN can iteratively adjust the model's parameters by employing backpropagation to minimize the error between prediction and ground truth, resulting in the best possible prediction14. Many studies utilize the ANN model with the backpropagation learning algorithm (BPNN)36,37. Still, the BPNN's iterative nature to find the optimal parameters might result in local optima instead of global optima. Genetic algorithms can mitigate these issues with their capacity for extensive global search38. As a type of evolutionary algorithm, the genetic algorithm simulates natural selection and genetic mechanisms to find all solutions within the solution space rapidly. Unlike other methods, it avoids local optima and can leverage inherent parallelism for distributed computing, thus enhancing the solving speed. Therefore, the backpropagation ANN model was further optimized by incorporating the genetic algorithm (GANN). The flowchart detailing the GANN model can be found in Fig. 3.

Random forests

The RF algorithm, utilized in this study, is a supervised ensemble learning method that integrates multiple classification or regression trees. Building upon the bagging model, RF adds an extra layer of randomness by selecting samples for training at random to create independent decision trees, as depicted in Fig. 3. Each of these trees independently produces predictions through regression. The final output of the RF model is derived from the average value of these predictions. By employing many classifiers in an ensemble and utilizing regression techniques, the RF model exhibits a more robust potential for improving model performance compared to any single classifier or a typical ensemble of classifiers39. This robustness allows RF to handle high-dimensional regression tasks and complex nonlinear problems. Moreover, the risk of overfitting can be reduced through regression within the model. For comprehensive insights into the RF model and its applications, references such as Breiman, Karimi et al., Matin and Chelgani can be consulted39,40,41.

For an ensemble of classifiers \(h_{1} ({\varvec{X}})\),\(h_{2} ({\varvec{X}})\), …,\(h_{k} ({\varvec{X}})\), and with the training set drawn at random from the distribution of the random vector Y, X, the margin function between the two can be represented by:

where \(I( \cdot )\) denotes the indicator function. The margin assesses the extent of the average number of votes at X, Y for the right class surpasses the average vote for any other class. The generalization predicted value error of RF model (PE*) is estimated as:

If the decision trees number is large, two theorems are suitable for such conditions:

Theorem 3

As the number of decision trees increases, the sequences \({\varvec{\theta}}_{k}\), \(PE^{*}\) converges to:

The theorem shows that RF will not overfit as more trees are added, but will produce a limit of generalization error.

Theorem 4

The maximum generalization error is estimated as follows:

where \(\overline{\rho }\) represents the correlation expected value of individual classifier; \(s^{2}\) indicates the strength of the classifier. Theorem 2 reveals that the maximum limit of generalization error was reduced by increasing the strength and decreasing the correlation.

Generalized regression neural network

GRNN were first introduced by Specht42 and have been distinguished for their ability to approximate nonlinear relational mapping functions using training data. A GRNN typically consists of four layers, including the input layer, pattern layer, summation layer, and output layer, as depicted in Fig. 343. The pattern and summation layers are unique to GRNN, with the radial basis function serving as an activation function within the network. This structure underpins the fundamental principle of GRNN, which is nonlinear regression. Although GRNN shares some similarities with the common nonlinear multilayer feedforward network model (BPNN), the two models diverge in their underlying operations. A significant difference lies in GRNN's utilization of a global approximation algorithm, in contrast to the iterative training procedure of BPNN. Consequently, GRNN avoids the issues associated with local minima problems, a common challenge in BPNN33,42,44. Overall, the flexibility and non-iterative nature of GRNN position it as an effective tool for complex nonlinear modeling, with particular strengths in overcoming certain limitations of traditional neural network techniques. The basic formula involved in the GRNN model is given in Eq. (17).

where x and y represent the input and output variable, respectively,\(f(x,y)\) is the joint probability density function of x and y. \(E({y \mathord{\left/ {\vphantom {y X}} \right. \kern-0pt} X})\) is the mean value of y. The function value is calculated based on the following formula43 (Tabari et al., 2012):

where wij is the target output for input xi and output j, \(h_{i} = \exp ( - \frac{{D_{i}^{2} }}{{2\sigma^{2} }})\), represents the output of a hidden layer neuron;\(D_{i}^{2} = (x - u_{i} )^{{\hbox{T}}} (x - u_{i} )\) represents the squared distance between the input vector x and the training vector ui, σ is the width coefficient of the Gaussian function.

Model training and testing

To estimate Rn, the model incorporated daily Tmax, Tmin, RH, S, and Rs as its key input variables. We allocated the data spanning from 2000 to 2009, representing two-thirds of the complete dataset, for training purposes. The subsequent data from 2010 to 2014, making up the remaining third, served as the test set. For enhanced model performance, all variables underwent normalization to mitigate disparities arising from their inherent scales. The normalized algorithm is given in the following equation34:

where xnorm, x0, xmin, and xmax are the normalized, original, minimum, and maximum values of the variable x, respectively.

Performance evaluation

We utilized various statistical accuracy indicators to evaluate the model's accuracy based on the testing data. These included the root mean square error (RMSE), mean absolute error (MAE), Nash–Sutcliffe coefficient (NS), and relative error. The definitions for each of these metrics were provided in accordance with the following equations45:

where Xi and Yi are the ith observed and predicted values, respectively, \(\overline{{X_{i} }}\) and \(\overline{{Y_{i} }}\) represent the mean values of Xi and Yi, respectively, and m is the size of the test sample.

Results and discussions

The four ML models, namely ELM, GANN, RF, and GRNN, have been employed to estimate Rn values across four distinct climatic zones within China. Initially, a comparative analysis was conducted to evaluate the computational time required by each model. Utilizing MATLAB R2015a software on a system equipped with an Intel Xeon 2 E3-1230 v2 processor and 12-GB RAM, the ELM model demonstrated a running time of approximately 1 s across the different climatic zones. In contrast, the running times for the remaining models were notably higher, registering at 296 s, 161 s, and 21 s for GANN, RF, and GRNN, respectively. ELM's efficiency can be attributed to its relatively simple learning algorithm for single-hidden layer FFNN structures. It was anticipated that the learning speed of ELM would surpass that of traditional learning algorithms, as previously evidenced in the works of Huang et al.29,30. Consequently, ELM exhibits superiority over other models in the speed of estimating Rn. Supporting evidence of the ELM model's faster learning speed, facilitated by the ease of random assignment to hidden node parameters, has also been documented by Huang et al.29 and Feng et al.33.

The relationships between the measured Rn values and the estimates produced by the four ML models (ELM, GANN, RF, and GRNN) for each distinct climatic zone within China are delineated in Figs. 4, 5, 6, 7. Statistical analysis reveals that Rn estimates provided by each model exhibit significant correlations with the corresponding measured values across the various climatic zones (P < 0.001). Specifically, linear regression slopes were observed to be 0.815–0.868 for ELM, 0.815–0.870 for GANN, 0.810–0.857 for RF, and 0.812–0.845 for GRNN. Additionally, the coefficient of determination (R2) ranged from 0.838 to 0.963 for ELM, 0.836 to 0.963 for GANN, 0.809 to 0.959 for RF, and 0.812 to 0.949 for GRNN model. The substantial agreement between the measured and estimated Rn values attests to the potential applicability of these four ML models in Rn estimation within varying climatic contexts. Nevertheless, all four models exhibited a slight tendency to underestimate Rn across different climatic zone. Moreover, a consistent pattern emerged wherein the models demonstrated optimal performance within the temperate monsoon zone (specifically at the Beijing station), as compared to other regions. This observed regional performance disparity may be attributable to variations in the climatic conditions characterizing each individual station.

Scatter plot of the measured versus the estimated daily net radiation (Rn) by extreme learning machine (ELM), backpropagation neural networks optimized by genetic algorithm (GANN), random forests (RF) and generalized regression neural networks (GRNN) model in temperate monsoon zone (Beijing station).

Scatter plot of the measured versus the estimated daily net radiation (Rn) by extreme learning machine (ELM), backpropagation neural networks optimized by genetic algorithm (GANN), random forests (RF) and generalized regression neural networks (GRNN) model in subtropical monsoon zone (Kunming station).

Scatter plot of the measured versus the estimated daily net radiation (Rn) by extreme learning machine (ELM), backpropagation neural networks optimized by genetic algorithm (GANN), random forests (RF) and generalized regression neural networks (GRNN) model in temperate continental zone (Urumuqi station).

Box plots have been employed to elucidate the distribution of both observed and estimated daily Rn values generated by the four ML models, as depicted in Fig. 8. Analysis indicates that the distributional characteristics of the estimated Rn values by the various models closely mirror those of the observed Rn. However, the four models collectively demonstrate lower distribution disparities across each climatic zone, indicating a consistent underestimation of Rn. Moreover, a marked contrast is evident in the distribution of measured Rn across the different climatic zones. This variation can be ascribed not solely to the heterogeneous climatic conditions inherent to each station but also to the underlying surface condition. The influence of surface albedo on Rn is substantial, as documented by Campbell and Norman46, and the uncertainty associated with surface albedo across different underlying surfaces poses challenges to the precise determination of Rn. Such complexities may contribute to the observed error in each model's estimation of Rn within the context of the present study.

Box plots of measured compared with the estimated daily net radiation (Rn) by extreme learning machine (ELM), backpropagation neural networks optimized by genetic algorithm (GANN), random forests (RF) and generalized regression neural networks (GRNN) model in different climatic zone. (a) Mountain plateau zone. (b) Temperate monsoon zone. (c) Subtropical monsoon zone. (d) Temperate continental zone.

Figure 9 illustrates the monthly variations of both measured and estimated Rn, along with the absolute error between these values, across different climatic zones. The estimated monthly average Rn values derived from the four ML models are in substantial agreement with the measured quantities, with both sets of data exhibiting a bell-shaped pattern. Specifically, lower Rn are apparent in winter (December to February), while higher values are observed in summer (June to August). The disparities between the underestimation and overestimation of Rn by the different models fluctuate across the climatic zones. Within the mountain plateau zone, all ML models generally underestimate Rn annually, except for RF and GRNN in May, with absolute errors ranging from – 2.01 to 0.13 MJ m2 d⁻1. For the temperate monsoon zone, the four models consistently underestimate Rn throughout the year, with absolute errors spanning from – 2.02 to – 0.22 MJ m2 d⁻1. In the subtropical monsoon zone, the models overestimate Rn in summer while underestimating it in other seasons, with absolute errors varying between – 1.00 and 0.72 MJ m2 d⁻1. Conversely, within the temperate continental zone, all models overestimate Rn in winter while underestimating it in other seasons, with absolute errors fluctuating between 1.44 and 0.22 MJ m2 d⁻1. These findings shed light on the intricate relationship between the models' estimations and actual measurements of Rn, and emphasize the need to consider regional climatic variations in the interpretation of the results.

Comparison between measured and estimated monthly average net radiation (Rn) by extreme learning machine (ELM), backpropagation neural networks optimized by genetic algorithm (GANN), random forests (RF) and generalized regression neural networks (GRNN) model in different climatic zone. (a) Mountain plateau zone. (b) Temperate monsoon zone. (c) Subtropical monsoon zone. (d) Temperate continental zone.

Table 2 presents the statistical parameters that detail the performance of the four ML models in estimating Rn for each climatic zone within the study area. Overall, the performance of the models is satisfactory, with Root Mean Square Error (RMSE) values ranging from 1.386–2.025 MJ m2 d⁻1 for the ELM model, 1.360–2.122 MJ m2 d⁻1 for the GANN model, 1.457–2.186 MJ m2 d⁻1 for the RF model, and 1.487–2.126 MJ m2 d⁻1 for the GRNN model. The ELM and GANN models slightly outperformed the RF and GRNN models across the four climatic zones, according to RMSE statistics. Notably, the lowest RMSE values for the ELM and GANN models were recorded in the subtropical monsoon zone, at 1.386 and 1.360 MJ m2 d⁻1, respectively, while the highest values were observed in the mountain plateau zone. Mean Absolute Error (MAE) values ranged from 1.010 to 1.601 MJ m2 d⁻1 for the ELM model, 1.010 to 1.627 MJ m2 d⁻1 for the GANN model, 1.068 to 1.693 MJ m2 d⁻1 for the RF model, and 1.148 to 1.684 MJ m2 d⁻1 for the GRNN model. The accuracy of the ELM and GANN models was found to be slightly superior to the RF and GRNN models, as indicated by MAE statistics. The Nash–Sutcliffe (NS) efficiency values for the ELM and GANN models were also marginally higher than those for the RF and GRNN models, with values ranging from 0.768 to 0.926 for the ELM model, 0.760 to 0.925 for the GANN model, 0.755 to 0.917 for the RF model, and 0.756 to 0.894 for the GRNN model. The NS values for the ELM and GANN models were lowest in the mountain plateau zone, while the highest values were detected in the temperate monsoon zone. These statistical insights underscore the nuanced differences in the performance of the models across various climatic conditions and contribute to a comprehensive understanding of their applicability in Rn estimation.

In a comparative analysis, the performances of the ELM and GANN models in predicting Rn are demonstrably superior to those of the RF and GRNN models. The ELM model, characterized by its approach of randomly selecting hidden nodes and determining output weight through specific analytical methods, diverges from traditional ANN models that require human intervention for optimal parameter determination14. This characteristic may contribute to its enhanced performance compared to conventional artificial neural networks. Prior research has corroborated that ELM can yield higher prediction accuracy for various hydrological and meteorological variables such as streamflow47, evapotranspiration48, solar radiation21, and soil temperature14. Although traditional neural network models offer flexibility in handling nonlinear relationships, their reliance on the backpropagation method to obtain network weights introduces a susceptibility to local minimum issues during the learning process49,50,51. To address the shortcomings associated with backpropagation in traditional ANN models, this study employed a genetic algorithm to enhance the neural networks. As a result, the GANN model's performance in estimating Rn was found to be superior to that of the RF and GRNN models. In summary, the ELM and GANN models exhibit higher accuracy in Rn estimates compared to the RF and GRNN models, with the ELM model particularly recommended for assessing Rn across various climatic zones in China, owing to its remarkable speed and performance. The inferior stability in the RF model's simulations may be attributable to its lack of hyper-parameter tuning and its method of randomly selecting training data to construct classifiers40. Furthermore, a study by Yamaç52 emphasized that RF models might not perform as effectively as other artificial intelligence algorithms in specific applications, such as sugar beet evapotranspiration estimation.

A salient limitation of the four ML models is the lack of transparency in their underlying physical principles. In contrast to physically-based models that articulate explicit mathematical expressions, these ML models function as data-driven black-box systems. Consequently, their adaptability can become constrained in scenarios where the requisite training data are unavailable43,53. Despite this limitation, the inherent compatibility of ML models allows them to be hybridized with other algorithms, offering the potential to enhance the precision of target variable estimation. A notable example can be found in the work of Nahvi et al.54, who applied a self-adaptive evolutionary approach to optimize the hidden module of the ELM. This improvement led to an increase in the daily soil temperature estimation accuracy.

Furthermore, in the context of Rn estimation, ML models often consider a multitude of meteorological variables as input. The selection of these variables requires careful consideration and analysis of their various combinations to determine the optimal set for each specific study area. Such a targeted approach is not only vital for Rn estimation but is also of substantial importance for data-scarce regions where variable selection becomes critical21.

Accurate estimation of daily net radiation plays a pivotal role in optimising water management and crop productivity across China's diverse agricultural landscapes. Our study reveals the availability and effectiveness of four machine learning models in predicting daily net radiation in different climate zones in China, and recommends ELM as the most recommended model due to its accuracy and computational speed. However, all models in our study were found to slightly underestimate Rn, future foucs on integration of real-time data into our model architecture and optimisation of model parameters using heuristic optimisation methods may increase model adaptability and accuracy in changing environments55,56.

Conclusions

In the investigation of estimating daily Rn across four distinct climatic zones in China, four ML models, namely ELM, GANN, RF, and GRNN, were employed. Utilizing conventional meteorological variables alongside net radiation data, the models were trained and tested to assess their efficacy. The statistical analysis revealed satisfactory estimations for daily Rn across the models. The RMSE ranged from 1.392 to 2.025 MJ m−2 d−1 for ELM, 1.360 to 2.122 MJ m−2 d−1 for GANN, 1.457 to 2.186 MJ m−2 d−1 for RF, and 1.487 to 2.126 MJ m−2 d−1 for GRNN. The MAE spanned from 1.010 to 1.601 MJ m−2 d−1, 1.010 to 1.627 MJ m−2 d−1, 1.068 to 1.603 MJ m−2 d−1, and 1.148 to 1.684 MJ m−2 d−1, respectively, while the NS ranged from 0.768 to 0.926, 0.760 to 0.925, 0.755 to 0.917, and 0.756 to 0.894 for the four models.

Comparatively, the distribution of estimated Rn closely aligned with the observed values, with minimal distributional discrepancies identified across the climatic zones. Upon an overall examination, the ELM and GANN models demonstrated superiority over the RF and GRNN models in the precision of Rn estimations. Specifically, the ELM model emerged as highly recommended for Rn estimation across different climatic zones in China, attributed to its combination of remarkable computational efficiency and performance accuracy.

Data availability

All data, models, and code generated or used during the study appear in the submitted article.

References

Feng, Y., Zhang, X., Jia, Y., Cui, N., Hao, W., Li, H. & Gong, D. High-resolution assessment of solar radiation and energy potential in China. Energy Convers. Manag. 240 (2021).

Zhang, Y. X., Cui, N. B., Feng, Y., Gong, D. Z., & Hu, X. T. Comparison of BP, PSO-BP and statistical models for predicting daily global solar radiation in arid Northwest China. Comput. Electron. Agric. 164 (2019).

Feng, Y., Cui, N. B., Gong, D. Z., Zhang, Q. W. & Zhao, L. Evaluation of random forests and generalized regression neural networks for daily reference evapotranspiration modelling. Agric. Water Manag. 193, 163–173 (2017).

Besharat, F., Dehghan, A. A. & Faghih, A. R. Empirical models for estimating global solar radiation: A review and case study. Renew. Sustain. Energy Rev. 21, 798–821 (2013).

Dorvlo, A. S. S., Jervase, J. A. & Al-Lawati, A. Solar radiation estimation using artificial neural networks. Appl. Energy 71, 307–319 (2002).

El-Sebaii, A. A., Al-Hazmi, F. S., Al-Ghamdi, A. A. & Yaghmour, S. J. Global, direct and diffuse solar radiation on horizontal and tilted surfaces in Jeddah, Saudi Arabia. Appl. Energy 87, 568–576 (2010).

Hassan, G. E., Youssef, M. E., Mohamed, Z. E., Ali, M. A. & Hanafy, A. A. New temperature-based models for predicting global solar radiation. Appl. Energy 179, 437–450 (2016).

Khatib, T., Mohamed, A. & Sopian, K. A review of solar energy modeling techniques. Renew. Sustain. Energy Rev. 16, 2864–2869 (2012).

Teke, A., Yildirim, H. B. & Celik, O. Evaluation and performance comparison of different models for the estimation of solar radiation. Renew. Sustain. Energy Rev. 50, 1097–1107 (2015).

Pan, T., Wu, S., Dai, E. & Liu, Y. Estimating the daily global solar radiation spatial distribution from diurnal temperature ranges over the Tibetan Plateau in China. Appl. Energy 107, 384–393 (2013).

Zarzalejo, L. F., Polo, J., Martin, L., Ramirez, L. & Espinar, B. A new statistical approach for deriving global solar radiation from satellite images. Sol. Energy 83, 480–484 (2009).

Yadav, A. K. & Chandel, S. S. Solar radiation prediction using artificial neural network techniques: A review. Renew. Sustain. Energy Rev. 33, 772–781 (2014).

Feng, Y., Gong, D. Z., Jiang, S. Z., Zhao, L. & Cui, N. B. National-scale development and calibration of empirical models for predicting daily global solar radiation in China. Energy Convers. Manag. 203, 112236 (2020).

Feng, Y., Cui, N. B., Hao, W. P., Gao, L. L. & Gong, D. Z. Estimation of soil temperature from meteorological data using different machine learning models. Geoderma 338, 67–77 (2019).

Gairaa, K., Khellaf, A., Messlem, Y. & Chellali, F. Estimation of the daily global solar radiation based on Box-Jenkins and ANN models: A combined approach. Renew. Sustain. Energy Rev. 57, 238–249 (2016).

Jiang, Y. Computation of monthly mean daily global solar radiation in China using artificial neural networks and comparison with other empirical models. Energy 34, 1276–1283 (2009).

Kashyap, Y., Bansal, A. & Sao, A. K. Solar radiation forecasting with multiple parameters neural networks. Renew. Sustain. Energy Rev. 49, 825–835 (2015).

Wang, L. et al. Solar radiation prediction using different techniques: Model evaluation and comparison. Renew. Sustain. Energy Rev. 61, 384–397 (2016).

Deo, R. C., Wen, X. & Qi, F. A wavelet-coupled support vector machine model for forecasting global incident solar radiation using limited meteorological dataset. Appl. Energy 168, 568–593 (2016).

Mohammadi, K. et al. Predicting the wind power density based upon extreme learning machine. Energy 86, 232–239 (2015).

Shamshirband, S., Mohammadi, K., Yee, P. L., Petkovic, D. & Mostafaeipour, A. A comparative evaluation for identifying the suitability of extreme learning machine to predict horizontal global solar radiation. Renew. Sustain. Energy Rev. 52, 1031–1042 (2015).

Feng, Y. et al. Machine learning models to quantify and map daily global solar radiation and photovoltaic power. Renew. Sustain. Energ Rev. 118, 109393 (2020).

Jiang, B. et al. Empirical estimation of daytime net radiation from shortwave radiation and ancillary information. Agric. For. Meteorol. 211, 23–36 (2015).

Kaicun, W. & Shunlin, L. An improved method for estimating global evapotranspiration based on satellite determination of surface net radiation, vegetation index, temperature, and soil moisture (2008).

Lu, J., Tang, R., Tang, H. & Li, Z.-L. Derivation of daily evaporative fraction based on temporal variations in surface temperature, air temperature, and net radiation. Remote Sens. 5, 5369–5396 (2013).

Bisht, G. & Bras, R. L. Estimation of net radiation from the moderate resolution imaging spectroradiometer over the continental United States. IEEE Trans. Geosci. Remote Sens. 49, 2448–2462 (2011).

Hwang, K., Choi, M., Lee, S. O. & Seo, J.-W. Estimation of instantaneous and daily net radiation from MODIS data under clear sky conditions: A case study in East Asia. Irrig. Sci. 31, 1173–1184 (2013).

Shi, X. et al. Tree species richness and functional composition drive soil nitrification through ammonia-oxidizing archaea in subtropical forests. Soil Biol. Biochem. 187, 109211 (2023).

Huang, G.-B., Chen, L. & Siew, C.-K. Universal approximation using incremental constructive feedforward networks with random hidden nodes. IEEE Trans. Neural Netw. 17, 879–892 (2006).

Huang, G.-B., Zhu, Q.-Y. & Siew, C.-K. Extreme learning machine: theory and applications. Neurocomputing 70, 489–501 (2006).

Kariminia, S., Shamshirband, S., Motamedi, S., Hashim, R. & Roy, C. A systematic extreme learning machine approach to analyze visitors’ thermal comfort at a public urban space. Renew. Sustain. Energy Rev. 58, 751–760 (2016).

Mohammadi, K. et al. Extreme learning machine based prediction of daily dew point temperature. Comput. Electron. Agric. 117, 214–225 (2015).

Feng, Y., Gong, D., Mei, X. & Cui, N. Estimation of maize evapotranspiration using extreme learning machine and generalized regression neural network on the China Loess Plateau. Hydrol. Res. 48, 1156–1168 (2017).

Feng, Y., Cui, N., Zhao, L., Hu, X. & Gong, D. Comparison of ELM, GANN, WNN and empirical models for estimating reference evapotranspiration in humid region of Southwest China. J. Hydrol. 536, 376–383 (2016).

Huang, G. B., Zhu, Q. Y., & Siew, C. K., IEEE. Extreme learning machine: A new learning scheme of feedforward neural networks. IEEE International Joint Conference on Neural Networks (IJCNN), Budapest, Hungary, pp. 985–990 (2004).

Kumar, M., Raghuwanshi, N. S. & Singh, R. Artificial neural networks approach in evapotranspiration modeling: A review. Irrig. Sci. 29, 11–25 (2011).

Shiri, J. et al. Comparison of heuristic and empirical approaches for estimating reference evapotranspiration from limited inputs in Iran. Comput. Electron. Agric. 108, 230–241 (2014).

Gao, L., Gong, D., Cui, N., Lv, M. & Feng, Y. Evaluation of bio-inspired optimization algorithms hybrid with artificial neural network for reference crop evapotranspiration estimation. Comput. Electron. Agric. 190, 106466 (2021).

Matin, S. S. & Chelgani, S. C. Estimation of coal gross calorific value based on various analyses by random forest method. Fuel 177, 274–278 (2016).

Breiman, L. Random forests. Mach. Learn. 45, 5–32 (2001).

Karimi, S., Shiri, J. & Marti, P. Supplanting missing climatic inputs in classical and random forest models for estimating reference evapotranspiration in humid coastal areas of Iran. Comput. Electron. Agric. 176, 105633 (2020).

Specht, D. F. A general regression neural network. IEEE Trans. Neural Netw. 2, 568–576 (1991).

Tabari, H., Kisi, O., Ezani, A. & Talaee, P. H. SVM, ANFIS, regression and climate based models for reference evapotranspiration modeling using limited climatic data in a semi-arid highland environment. J. Hydrol. 444, 78–89 (2012).

Kisi, O. The potential of different ANN techniques in evapotranspiration modelling. Hydrol. Process. 22, 2449–2460 (2008).

Gong, D., Hao, W., Gao, L., Feng, Y. & Cui, N. Extreme learning machine for reference crop evapotranspiration estimation: Model optimization and spatiotemporal assessment across different climates in China. Comput. Electron. Agric. 187, 106294 (2021).

Campbell, G. S. & Norman, J. M. An Introduction to Environment Biophysics. Spring-Verlag (1998).

Taormina, R. & Chau, K.-W. ANN-based interval forecasting of streamflow discharges using the LUBE method and MOFIPS. Eng. Appl. Artif. Intell. 45, 429–440 (2015).

Jia, Y. et al. Optimization of an extreme learning machine model with the sparrow search algorithm to estimate spring maize evapotranspiration with film mulching in the semiarid regions of China. Comput. Electron. Agric. 201, 107298 (2022).

Kisi, O. Modeling solar radiation of Mediterranean region in Turkey by using fuzzy genetic approach. Energy 64, 429–436 (2014).

Kumar, M., Raghuwanshi, N. S., Singh, R., Wallender, W. W. & Pruitt, W. O. Estimating evapotranspiration using artificial neural network. J. Irrig. Drain. Eng. 128, 224–233 (2002).

Sudheer, K. P., Gosain, A. K. & Ramasastri, K. S. Estimating actual evapotranspiration from limited climatic data using neural computing technique. J. Irrig. Drain. Eng. 129, 214–218 (2003).

Yamac, S. S. Artificial intelligence methods reliably predict crop evapotranspiration with different combinations of meteorological data for sugar beet in a semiarid area. Agric. Water Manag. 254 (2021).

Kisi, O. & Cimen, M. Evapotranspiration modelling using support vector machines. Hydrol. Sci. J. J. Des Sci. Hydrol. 54, 918–928 (2009).

Nahvi, B., Habibi, J., Mohammadi, K., Shamshirband, S. & Al Razgan, O. S. Using self-adaptive evolutionary algorithm to improve the performance of an extreme learning machine for estimating soil temperature. Comput. Electron. Agric. 124, 150–160 (2016).

Li, Y. & Yang, Z. Application of EOS-ELM with binary Jaya-based feature selection to real-time transient stability assessment using PMU data. IEEE Access 5, 23092–23101 (2017).

Zhang, Y., Li, T., Na, G., Li, G. & Li, Y. Optimized extreme learning machine for power system transient stability prediction using synchrophasors. Math. Probl. Eng. 2015, 529724 (2015).

Acknowledgements

During the process of conducting this study, we deeply felt the support and assistance of many people. Firstly, we would like to express our gratitude to the members of our mentor's research team for providing valuable guidance and advice. In particular, Daozhi Gong made significant contributions to the conceptualization of this project in the early stages of research. Although he was not listed in the author list due to his participation in the overall amount of work that did not meet the author criteria, his contribution was crucial to the successful implementation of this study. At the same time, thank you to all colleagues who participated in data collection and analysis, it’s your efforts that have enabled the smooth completion of this study. We would like to thank the National Climatic Centre of the China Meteorological Administration for providing the climate database used in this study. This work was also financially funded by the Science and Technology Program of Sichuan (2023YFN0024) and National Key Research and Development Program of China (2021YFD1600803-1).

Author information

Authors and Affiliations

Contributions

H.Y.: Supervision, Resources; S. J.:methodology, simulation analysis, investigation and writing of original manuscript; R.S. and S.L.: Format Editing; J.W. and X.K.: Grammar review; H.Z: Review & Editing; M.W.: Software, Validation; D.G. and M.C.: Conceptualization, Methodology; C.Z.: Project administration. All authors have read and agreed to the published version of the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Yu, H., Jiang, S., Chen, M. et al. Machine learning models for daily net radiation prediction across different climatic zones of China. Sci Rep 14, 20454 (2024). https://doi.org/10.1038/s41598-024-71550-9

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-024-71550-9