Abstract

To solve the issue of diagnosis accuracy of diabetic retinopathy (DR) and reduce the workload of ophthalmologists, in this paper we propose a prior-guided attention fusion Transformer for multi-lesion segmentation of DR. An attention fusion module is proposed to improve the key generator to integrate self-attention and cross-attention and reduce the introduction of noise. The self-attention focuses on lesions themselves, capturing the correlation of lesions at a global scale, while the cross-attention, using pre-trained vessel masks as prior knowledge, utilizes the correlation between lesions and vessels to reduce the ambiguity of lesion detection caused by complex fundus structures. A shift block is introduced to expand association areas between lesions and vessels further and to enhance the sensitivity of the model to small-scale structures. To dynamically adjust the model’s perception of features at different scales, we propose the scale-adaptive attention to adaptively learn fusion weights of feature maps at different scales in the decoder, capturing features and details more effectively. The experimental results on two public datasets (DDR and IDRiD) demonstrate that our model outperforms other state-of-the-art models for multi-lesion segmentation.

Similar content being viewed by others

Introduction

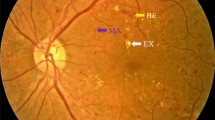

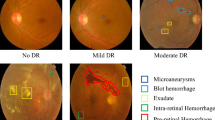

Among the complications caused by diabetes, diabetic retinopathy (DR) has a high incidence, which is an important cause of visual defects and permanent blindness1,2,3. Until now, the most recognized treatment is the early diagnosis and intervention, which can avoid 90\(\%\) of vision loss4. Thus, DR screening on a regular basis is effective in detecting the disease earlier and reducing the rate of blindness caused by DR. The main pathological features of DR lesions in color fundus images often present as microaneurysms (MAs), hemorrhages (HEs), soft exudates (SEs), and hard exudates (EXs). Especially MAs are the earliest symptom of DR lesions. These four-category lesions are the basis of physicians’ diagnosis, so accurate segmentation is essential for the correct detection and diagnosis of DR lesions. However, as shown in Fig. 1a, the shape and the size of each lesion can vary widely, e.g., MAs are generally very small, ranging from one pixel to a few pixels, while some HEs can reach a maximum of thousands of pixels5. Different lesions may share similar characteristics, e.g., MAs and HEs are both red lesions, while SEs and EXs are both bright lesions. Therefore, in clinical practice, it is very cumbersome and time-consuming for ophthalmologists to manually identify these lesions from a large number of fundus images. The identifying results are all dependent on ophthalmologists’ experience, which may lead to missed and misdiagnosed cases. Above all, in order to reduce the workload of ophthalmologists and improve the accuracy of diagnosing DR, automatic segmentation technology for DR lesions has become a trend.

The distribution relationship of four categories of lesions, where segmented EX, HE, MA and SE are marked in red, green, blue and yellow. (a) is an example of DR lesions in fundus images from the DDR dataset. (b) shows the distribution relationships between different lesions that SEs usually surround HEs and EXs usually surround MAs. (c) shows the distribution relationships between vessles and different lesions that EXs are mostly located around the main trunks of vessels, whereas MAs are mostly located on both sides of the capillaries.

Compared with single-lesion segmentation, multi-lesion segmentation, which primarily involves segmenting MAs, HEs, SEs, and EXs, holds greater significance for the diagnosis of DR. However, multi-lesion segmentation faces more challenges due to significant differences in lesion size, shape, and intensity. Several effective methods have been proposed in this field, yet two issues remain inadequately addressed in previous research. Firstly, there is a lack of effective interaction between lesions and vessels, leading to the less sensitivity of the model to the areas around vessels and the loss of interdependence between lesions and vessels. Secondly, a unified structure makes it difficult to execute appropriate feature extraction strategies for different types of lesions with large-scale differences.

As shown in Fig. 1b, most EXs typically appear in a circular pattern around one or several MAs, while most SEs commonly appear at the periphery of HEs. This information, emphasized by the self-attention head, can be better used to differentiate multiple lesions. As shown in Fig. 1c, MAs are generally distributed at the margins of the capillaries, while SEs are near the trunk of upper and lower arteries. The cross-attention head effectively utilizes the vessel branch and lesion branches.

Much of the research has only achieved the segmentation of just one or two lesions, neglecting the dependencies between blood vessels and lesions, and the dependencies among multiple lesions. Huang et al.6 first utilized vascular information for four-category lesion segmentation simultaneously, and proposed the pathological connections between lesions and vessels and confirmed that these connection contributes to multi-lesion segmentation. However, first, the cross-attention head in the relation transformer block (RTB) they proposed introduced some noise in vessel features, and vessel features were only fused in single-scale features after up-sampling without effectively using multi-scale information of vessels. Therefore, although helpful in lesion segmentation, vascular information was not fully and effectively utilized, which caused negative impacts on the performance of multi-lesion segmentation. Second, they didn’t take full advantage of multi-scale lesion information, resulting in the difficulty that scale inconsistency between lesions poses to the multi-lesion segmentation: large lesions need to utilize long-range contexts in deep features, while small lesions need details in shallow features.

Motivated by the above observations, we propose in this work a novel attention fusion approach that more efficiently utilizes vascular information as prior knowledge without introducing new noise. Our proposed model uses a dual-branch UNet structure consisting of two encoder branches, which extract lesion and vessel features, respectively, and an attention fusion transformer encoder (AFTE), which is integrated between two branches. Specifically, both branches extract features by convolving input images, but since lesion features are more complex, a shift block is used in the lesion branch to extract local features further and enhance deep feature extraction.

An attention fusion block (AFB) is designed, which is a combination of a self-attention head and a cross-attention head in AFTE. Distinguishing from the traditional combination approach, i.e., a simple parallel, series, or summation of the self-attention head and the cross-attention head, vascular information is injected into the key in the attention mechanism with the aim of simultaneously capturing strong similarity relationships between lesions and vessels, and relationships among multiple lesions.

The self-attention head of AFB is employed to find the certain spatial arrangement for the majority of lesions in fundus images, which is consistent with the pathological causes of DR lesions. The cross-attention head, achieved by adding vascular information, i.e., the distribution of blood vessels in fundus images, to the key of the attention mechanisms, aids the localization of lesions. Furthermore, the selective introduction of vascular information only in the key can reduce the introduction of noise in vessel features. At the same time, vascular information at two different scales of features is fused in the encoder and the decoder to improve the integration of lesion features and vessel features.

Another improvement proposed in this paper is the optimization of the multi-scale feature fusion in the decoder. Existing works only convolve simply concatenated multi-scale features equally without the distinction between different scales. A scale-adaptive attention (SAA) is proposed to perform dynamic selection of different scales during the fusion to make multi-scale features fusion more flexible and improve the consistency of the fused multi-scale features.

To evaluate the efficiency of the proposed model, it is trained and tested on DDR and IDRiD datasets. Experimental results show that, compared with other state-of-the-art models, our model achieves outstanding results in the task of fundus lesion segmentation including segmenting EX, MAs, and SEs on two datasets. Furthermore, the ablation studies are conducted on the DDR dataset and the effectiveness of our improvements is validated in DR lesion segmentation.

In summary, our contributions are as follows:

-

1.

We propose a prior-guided attention fusion Transformer including a dual-branch encoder to extract lesion features and vessel features. In the proposed attention fusion transformer encoder (AFTE), vessel features extracted from the vessel branch as prior knowledge guide lesion segmentation. In the lesion branch, a shift block is added to expand the receptive field.

-

2.

We propose an attention fusion block (AFB) that integrates two encoder branches using a new mechanism of attention at two different scales to more fully and effectively utilize vascular information while exploring the internal relationships between lesions.

-

3.

In the decoder, we propose a scale-adaptive attention (SAA) to dynamically adjust the fusion of multi-scale features and solve the issue of conflicts in different scale features.

The rest of this paper is organized as follows. Section Related works briefly reviews some closely related works, including the studies of DR lesion segmentation and the shift mechanism. Section Methodology explains the details of our proposed model. Section Experiments includes the experimental settings and evaluation approaches, and illustrates the testing performance of each model by conducting different ablation studies. Section Conclusion discusses and summarises the experimental results.

Related works

Lesion segmentation in fundus images

Due to the development of DL models, various DL-based methods have been used for lesion segmentation.

For single lesion segmentation, Guo et al.7 proposed a multi-channel bin loss function to deal with the class imbalance and loss imbalance in EX segmentation, which focus on pixels that are difficult to classify. Mo et al.8 designed a fully convolutional residual network(FCRN) incorporating multi-level hierarchical information to segment the exudates. However, such models are only applicable to single lesion segmentation and are unable to be extended to other lesion segmentation.

For the same type of lesion segmentation, DR lesions are divided into red lesions (MAs and HEs) and bright lesions (SEs and EXs) according to the characteristics of lesions in fundus images. Playout et al.9 proposed a fully convolutional architecture that combines pixel-level training and image-level weakly supervised training with labels. They used two identical decoders, each of which specializes in segmenting red and bright lesion categories, and introduced an exchange layer that shares parameters softly between two decoders. However, their network lacks a sufficiently large receptive field to consider a wider context, resulting in segmenting some larger lesions into multiple smaller lesions rather than predicting complete boundaries.

For simultaneous segmentation of multiple lesions, Kumar et al.10 used iterative morphological approaches and watershed segmentation methods to detect and remove blood vessels and optic discs and obtain the structures of MAs and HEs used to extract seven features to train a classification model for automated detection of DR. Guo et al.11 developed L-Seg on the top of HED12 by adding a side extraction layer to each convolutional layer group of VGG-net13 to extract and fuse the features of different scales, and handling the class imbalance issue and segmenting DR lesions simultaneously. Liu et al.5 noticed the impact of different lesion scales and proposed a novel Many-to-Many Reassembly of Features(M2MRF) that maintains discriminative information about small lesions as much as possible and captures long-term spatial dependencies.

Transformer in fundus images

Transformer14, a convolution-free structure, has become one of the most widely used models in the field of natural language processing (NLP)15,16 since its introduction in 2017, for the capability of modeling long-range dependencies for data. It has an encoder-decoder structure, both of which are tandem by several similar blocks. Each block comprises components such as masked multi-head attention, layer normalization(LayerNorm), position-wise feed-forward network, etc. Additionally, there are cleverly inserted residual connections between these components.

Before introducing Transformer to the field of computer vision (CV) , there have been numerous attempts to combine convolution neural network (CNN) and attention17,18, but none of them yielded better results than CNN. Until 2020, Dosovitskiy et al.19, inspired by the successful experiences in NLP, proposed a pioneering model with the fewest modifications to the standard transformer structure: Vision Transformer (ViT), which lays a solid foundation for the adoption of transformer-based techniques in the field of CV. Swin Transformer20 improves on ViT and expands the applicability of Transformer, making it more suitable as a generic backbone for CV tasks. Nowadays, ViT variants have rapidly been applied to many CV tasks, such as image classification, object detection, semantic segmentation, etc., as a new research trend.

With the rapid development of Transformer in the field of CV, Transformer-based methods have also been widely used in the field of medical image analysis. Whether using Transformer alone21,22 or combining it with CNN, both local and global information of the image can be better captured. He et al.23 noticed the impact of different lesion scales. They progressively integrated multi-scale features from two or three adjacent encoding layers by a progressive feature fusion (PFF) block and then dynamically selected features at different scales by a dynamic attention block (DAB). Huang et al.6 developed a novel network for better interaction between lesions and vessels by introducing self-attention and cross-attention blocks after UNet. They proposed a Global Transformer Block(GTB) inspired by GCNet24 to emphasize more useful channel information in each position and a relation Transformer block (RTB) to explore the dependency among lesions and other retinal tissues.

Blood vessel and lesion segmentation

In an early task of segmenting DR lesions by morphological methods, blood vessels are easily mistaken for red lesions, so morphological structures such as vessels are detected and removed before the actual lesion segmentation. Even when applying deep neural networks(DNN) to DR lesion segmentation, some works still remove blood vessels in the stage of image preprocessing. Jaskirat Kaur et al.25 developed a generalized EX segmentation method consisting of three phases, namely retinal image enhancement, segmentation and elimination of anatomical structures, and EX segmentation, which is robust in the sense that it uses dynamic decision thresholds without considering large variations in retinal fundus images from different datasets. Al-hazaimeh et al.26 detected and removed optic disc and vessels respectively by introducing the Circular Hough Transform (HCT) and the Bias Corrected Separated Possibilistic Neighborhood FCM (BCSPNFCM) algorithm, which adds spatial information to segment vessels to distinguish blood vessels from DR lesions and selects appropriate features for EX, MA, and HE segmentation by feeding the processed feature maps into the Deep Convolutional Neural Network27.

Vascular structure has always been closely associated with DR lesions, and both MAs and EXs around capillaries are caused by vascular atresia. As the disease worsens, vessels may rupture, leading to the formation of HEs and SEs near the arteries from the leaked blood and lipoproteins, respectively. These are consistent with the presentation in fundus images. Huang et al.6 utilized the prior. Due to the lack of labeled fundus datasets for blood vessels and lesions, they used pseudo vascular masks provided by semi-supervised learning and adopted cross-attention mechanisms to assist in detecting lesion areas. However, they failed to fully utilize multi-scale features and only learned at one scale.

Shift block

Shift operation was proposed as an effective alternative to spatial convolution operation in 2017. Specifically, it employs a three-layer structure consisting of two Conv \(1\times 1\) and shift operation. In the follow-up work, shift operation is extended into various variants , including active shift28, sparse shift29, and partial shift30.

Wang et al.31 integrated the partial shift with Swin Transformer, introduced a shift block. The block comprises shift operation, LayerNorm, and MLP network. The combined model outperforms the Swin Transformer on tasks such as image classification, object detection, and segmentation, providing evidence for the substitutability of self-attention mechanisms.

In this work, we introduce the shift block into the feature extraction of fundus lesions. Without increasing computational cost, it further expands the receptive field by incorporating spatial neighborhood information to establish better contextual relationships.

Methodology

In this section, we first briefly overview the proposed network and then detail each proposed module, including the shift block, the attention fusion block (AFB), and the scale-adaptive attention (SAA).

Overall architecture

The overall architecture of the proposed network. (a) is the overall architecture consisting of three components: a dual-branch encoder, a decoder and a classification head, where \(C_{1}=32\), \(C_{2}=64\), \(C_{3}=128\), \(C_{4}=256\) and \(C_{5}=512\) denote the number of feature channels at five scales, respectively, \(H=512\), \(W=512\) denote the scale of pre-processed images. (b) and (c) are the structures of Conv1 and Conv2, where h and w denote the height and width of features at the current scale. In our experiment, \(c'=2c\) when downsample is True.

As shown in Fig. 2a, the proposed network adopts a two-branch encoder-decoder architecture. The encoder consists of three main components: a lesion branch including a shift block for processing three-channel color fundus images, a vessel branch for handling single-channel grayscale vessel masks, and an attention fusion transformer encoder (AFTE) that integrates features from both branches. The decoder includes an upsampling structure and a SAA mechanism. Finally, a classification head is employed to map the outputs of the decoder, generating segmentation probabilities for four-category lesions.

The input sizes of the vessel and lesion branches of the encoder are \(1\times H\times W\) and \(3\times H\times W\), respectively. Each branch undergoes convolution operation to obtain features \(F_{ves}\) for vessels and \(F_{lesion}\) for lesions, as illustrated in Fig. 2b and c for Conv1 and Conv2 structures. For each set of convolution operation, the downsample flag is set to “True” and the input images are downsampled to 1/4 of the original spatial dimensions only in the first convolution block. Before feeding lesion features into the AFTE, a shift block is used to enhance contextual information through shift and linear operation. Finally, the AFTE injects results from the vessel branch into the lesion branch, leveraging the vascular information extracted by the AFB to improve the accuracy of lesion feature learning.

The upsampling in the decoder follows the standard ViT structure, utilizing self-attention head from AFB. The structure of Up Conv2 is similar to Conv2, with the distinction of using Upsample instead of Downsample. Subsequently, the SAA calculates activation weights for each scale, facilitating a weighted fusion of features based on their inherent information to assign attention automatically. Finally, the classification head, a three-layer structure comprising two Conv \(1\times 1\) and an Upsample, predicts the final multi-lesion segmentation results based on features incorporating multi-scale information.

Shift block

The structure of the shift block consists of shift operation, LayerNorm, and a Multi-Layer Perceptron (MLP) connected in series, as shown in Fig. 3a. Specifically, shift operation involves shifting a portion of channels of the inputs along four spatial directions (up, down, left, right) by one pixel, as shown in Fig. 3b. Pixels exceeding the boundary are discarded, while blank pixels are filled with zeros. The remaining channels remain unchanged. Take the input \(F_{sh}\in R^{c\times h\times w}\) as an example, shift operation can be formulated as follows:

where \(\gamma\) is a scaling factor controlling the number of channels to be shifted. In our experiments, \(\gamma =\frac{1}{12}\), which results in a total of 1/3 channels being shifted. Shifted features are then passed through a LayerNorm and an MLP. The MLP, consisting of two Linear and a GELU activation function, sequentially increases channels dimension from c to 4c, applies a non-linear activation, and projects channels back to c, yielding the output \(F_{sh\_out}\in R^{c\times h\times w}\) for the shift block. It is worth noting that shift operation incurs low computational costs and requires no explicit calculations, which reduces a significant number of FLOPs during training.

The shift block is introduced before lesion features entering the AFTE. By repeatedly shifting lesion features in four spatial directions, the spatial receptive field is further expanded to enhance the correlation area between lesions and vessels, which contributes to a better understanding of global information in images. Therefore, shift operation aids the model in being more robust to variations in images, thereby improving the generalization performance of our model.

Attention fusion block

The AFTE follows a standard Transformer encoder structure, comprising three parts: multi-head attention mechanism, LayerNorm, and MLP, as shown in Fig. 3c. It takes two same-sized features, lesion features \(F_{lesion}\) from the previous layer and vessel features \(F_{ves}\) as input, producing new lesion features as output.

AFB, the core of AFTE as shown in Fig. 3d, introduces a novel fusion of self-attention and cross-attention, effectively capturing inherent dependencies between different lesions and between lesions and vessels simultaneously. In AFB, four heads are utilized, each of which is composed of a Conv \(1\times 1\) and a reshape. One head serves as the key generator for \(F_{ves}\), denoted as \(K_v\), while the other three are used as query, key, and value generators for \(F_{lesion}\), denoted as \(Q_l\), \(K_l\), \(V_l\), respectively. Given the generated \(K_v\left( F_{ves}\right) , Q_l\left( F_{lesion}\right) , K_l\left( F_{lesion}\right) , V_l\left( F_{lesion}\right) \in R^{hw\times c}\), where h, w, and c represent spatial height, width, and channel number, respectively, the similarity matrix is computed by the dot product of \(Q_l\left( F_{lesion}\right)\) and \(K_l\left( F_{lesion}\right)\) in the standard self-attention mechanism. While in AFB, spatial relationships between lesions and vessels is introduced by adding vascular information to the key generator. Specifically, \(K_l\left( F_{lesion}\right)\) and \(K_v\left( F_{ves}\right)\) are added to obtain a new key with vascular information, denoted as \(K_{fusion}\), and then compute the similarity matrix based on the fusion key. The calculation is described as follows:

where \(F_{sim}\in R^{hw\times hw}\) is the similarity matrix, and \(\lambda\) denotes the weight of vessel features. The larger \(\lambda\) is, the more the model focuses on the area around blood vessels, and the greater the role of vascular information in attention is. Subsequently, based on the integrated similarity matrix that incorporates vascular information, the new attention features is calculated as follows:

where Linear represents a linear embedding using a Conv \(1\times 1\). The subsequent LayerNorm and MLP are the same as those in the shift block.

During the formation of different lesion features, mutual influences manifest in the images as certain spatial relationships between lesions. Additionally, some lesions have a more widespread distribution. The self-attention head can leverage distant contextual information in lesion features, capturing both long and short-distance dependencies between different lesions, which enables the model to learn these semantic relationships better. Furthermore, since the image has similar local regions, the self-attention head can establish global correlations for these regions, aiding the model in understanding the relationships between different parts of the feature map.

Since there are inherent pathological connections between vessels and lesions, vessel features are introduced in the cross-attention head to provide crucial prior knowledge for lesion segmentation. The similarity between lesion features and vessel features is computed by injecting vascular information into the key in the attention mechanism. This utilization of the dependencies between lesions and vessels allows the model to focus sensitively on potential lesion areas around vessels, enhancing the local correlations for better localization of MA and SE. Moreover, AFTE is employed at two scales to exploit the vascular information fully.

Scale-adaptive attention

Different scale information helps enhance the robustness of our model. As shown in Fig. 3e, the proposed SAA is composed of attention calculation and feature fusion.

In the attention calculation, \(F_{sc}\) is input features at four scales, and \(F_{sc}^i\) is the feature at each scale. Initially, Upsample and Conv \(1\times 1\) are sequentially performed to standardize the scale to \(F_{same_sc}^i \in R^{C_{4}\times \frac{H}{2}\times \frac{W}{2}}\), \(i\in \left\{ 1,2,3,4\right\}\), where \(C_{4}\), \(\frac{H}{2}\),and \(\frac{W}{2}\) represent the channel number, height, and width of the standardized feature, respectively. This operation can be expressed as:

Then, the AdaptivePooling operation is utilized to perform spatial averaging for each channel of each scale, obtaining global features \(F_{spa\_avg}^i\in R^{C_{4}\times 1\times 1}\), \(i\in \left\{ 1,2,3,4\right\}\).

Then, the mean value along the channel is further calculated:

where \(F_{spa\_avg}^{i,j}\) represents the j-th node in \(F_{spa\_avg}^{i}\). Finally, the Sigmoid activation function is applied to activate the obtained mean value, yielding importance scores for each scale. These scores are then multiplied with their corresponding \(F_{sc}^i\), resulting in the activated features for the i-th scale:

In the feature fusion, the activated features for each scale are first concatenated along the channel dimension. Subsequently, the concatenated features are passed through a Conv_Norm layer, comprising a Conv \(1\times 1\) and a BatchNorm, resulting in the output \(F_{sc\_out}\in R^{C_{4}\times \frac{H}{2}\times \frac{W}{2}}\). This operation can be expressed as:

Among images at each scale, distinct feature information is leveraged to adjust the weights for each scale. Adaptive adjustment of fusion weights is more effective in integrating coarse-grained structure from large-scale features and rich details from small-scale features than treating features from different scales equally.

Experiments

Datasets

Our model is evaluated on two publicly available datasets with pixel-level annotations. DDR dataset32 is a general high-quality dataset of 757 color fundus images suitable for segmentation tasks, where 383 images are used for training, 149 for validation, and 225 for testing. The dataset includes 486 images with EX, 601 with HE, 570 with MA, and 239 with SE. The dataset has some low-quality images with degradation factors such as uneven lighting, underexposure, and overexposure. Therefore, it is the largest and most challenging dataset.

IDRiD dataset33 was released at the International Symposium on Biomedical Imaging (ISBI) in Challenge 2018 for the segmentation and grading of DR lesions. This dataset consists of 81 images with a resolution of \(4288 \times 2848\), with EX and MA in each image, 80 with HE, and 40 with SE. There is an official split between training and test sets: 54 for training and 27 for testing.

Implementation details

To obtain the vascular structure in the images, a pre-trained model is employed on the CHASE34 and DRIVE35 datasets to extract vascular masks from the raw images. Subsequently, due to limited available video memory, both the larger color fundus images and vascular masks are uniformly resized to \(512\times 512\). Finally, considering the scarcity of training data, data augmentation, including random vertical flipping, random horizontal flipping, random scaling and random rotation, is applied to the resized fundus images before training to mitigate overfitting and enhance the model’s generalization.

In this work, all experiments are implemented using PyTorch as the backend and performed on a workstation equipped with two 24GB memory NVIDIA GeForce RTX 4090 GPU cards. The model is trained with a batch size of 4 for 1400 epochs. The model is optimized using the Adam optimizer with a momentum of 0.9 and weight decay of 0.001. The initial learning rate is set to \(2\times 10^{-4}\) and adjusted using the ReduceLROnPlateau method. To address the challenge of the generally small foreground proportions in fundus images, binary cross-entropy loss and soft dice loss are employed to train the model for each lesion.

Evaluation metrics

Since fundus images suffer from category imbalance, and the Precision-Recall (PR) curve is more sensitive to imbalanced data due to its focusing on true positive precision and recall, the area under the PR curve (AUPR) is used to measure the performance of the proposed model, which is consistent with the IDRiD challenge. The class-wise IoU score and class-wise F1 score are also calculate, which are common evaluation metrics in the field of medical image analysis. The former indicates the overlap between the predicted and ground true segmented regions, and the latter is the harmonic mean of Precision and Recall. The evaluation metrics are defined as follows:

In addition, similar to the definition of mAP in the object detection, the mean AUPR (mAUPR), mean IoU (mIoU), and mean F1 (mF1) are also computed to evaluate the overall performance of the proposed model for the multi-category lesion segmentation.

Performance comparison

Visualized segmentation results of our model on (a) the DDR dataset and (b) the IDRiD dataset compared with other state-of-the-art models including UNet, DeepLabV3+, UNet++, CE-Net, PSPNet, where EXs, HEs, MAs and SEs are marked in red, green, blue and yellow, respectively. The white arrows indicate the differences between the predictions of all the models and the ground truth (GT).

Our model is compared with 11 state-of-the-art models on two datasets, including UNet36, UNet++37, DeepLabV3+38, CE-Net39, PSPNet40, EAD-Net41, L-Seg11, RILBP-YNet42, RTNet6, PMCNet23, and SAA43. The first five are general semantic segmentation models, and the latter six are lesion-specific segmentation models. The same experimental setup as the proposed model is used for a fair comparison. All models are applied to segment all four-category lesions using a unified structure.

Visualized segmentation results using different scale combinations and weighting strategies. (a) is the positive effect of complete identification of the arterial trunk on the nearby HE and SE segmentation, whereas (b) is the negative effect of incomplete identification of the capillaries on the EX and MA segmentation. EXs, HEs, MAs and SEs are marked in red, green, blue and yellow, respectively. The white arrows indicate the differences between the ground truth (GT) and the predictions of all the models.

The comparative results of all models on the two datasets are presented in Table 1, and visualization results are presented in Fig. 4a and b respectively. Our model exhibits excellent performance on the DDR dataset in terms of AUPR, IoU, and F1. Particularly in AUPR, the segmentation performance for all four-category lesions reaches optimal, indicating that our model partially overcomes the class imbalance issue. Even for the relatively fewer MAs, our segmentation performance shows a noticeable improvement. For the IDRiD dataset, our model achieves the best performance for HEs and SEs, with AUPR increased by 2.4 and 2.1\(\%\), respectively, compared with the suboptimal model (SAA). This improvement may stem from the generally effective segmentation of upper and lower arterial trunks in the vascular masks, allowing the model to focus on SEs closer to the main arteries, as illustrated in Fig. 5b. However, AUPR for EXs and MAs are slightly lower than SAA, with reductions of 2.2\(\%\) and 1.7\(\%\), respectively. This discrepancy may arise from poor segmentation of capillaries in some images, where MAs are distributed around capillaries, and most EXs surround MAs. The inaccurate identification of capillary regions hinders the model from focusing on potential MAs and adjacent EXs in these areas, as shown in Fig. 5a. Furthermore, our model consistently ranks first in mAUPR, mIoU, and mF1 for all four-category lesions on both datasets. Particularly on the DDR dataset, compared with the suboptimal model, our model demonstrates a significant improvement of at least 2.6\(\%\), 4.2\(\%\) and 5.5\(\%\) in these metrics, indicating superior overall performance in multi-lesion segmentation and highlighting its robust competitiveness.

Ablation studies on the DDR dataset

Ablation experiments are conducted on the DDR dataset to better understand the impact of each component of our model. First, we verify the effectiveness of three important components: shift block, AFTE, and SAA, then analyze their results. Then, to verify the effectiveness of vascular information as prior knowledge for lesion segmentation, we discuss its contribution in terms of attention. Due to the main role of AFTE in achieving the fusion of lesion information and vascular information through AFB, in order to further select the appropriate fusion method in key, we investigate the specific implementation of AFB. Finally, we conduct a comparative study of the scales at which the fused vascular information is available. In these experiments, the remaining model structures remain the same as the proposed model.

Ablation studies of components

Visualized segmentation results for different combinations of three modules on DDR dataset. (a) demonstrate the segmentation results of different methods on EXs and MAs, showing that our model is better than other combinations, while (b) demonstrate the results on HEs and SEs, showing that the combination (base+AFTE+Shift Block) has the best segmentation. The pink arrows indicate the differences between GT and the results of different combinations.

Figure 6 visualizes the segmentation results of our model with different components, and Table 2 shows the specific comparison results of the AUPR, IoU, F1-score, mean AUPR (mAUPR), mean IoU (mIoU) and mean F1-score (mF1) of four lesions for different components of our model. In Table 2, Row 1 is the results of the baseline, where the encoder includes the lesion branch without shift block and AFTE, and the decoder includes an upsampling structure without SAA. Compared with the baseline, Row 2 demonstrates that the AFTE allows more flexible attention generation, adapting to the context of multi-lesion and vessel features to achieve adaptive coordination of lesion and vascular information. This enhances the model’s ability to model dependencies among multiple lesions and improves the recognition of each lesion, which meets our expectations.

Row 3 confirms that shift block can help capture spatial relationships of small-scale structures by using minor pixel shifts, improving sensitivity to EXs and MAs. Additionally, as the shapes and sizes of the four-category lesions vary, shift block introduces non-linear transformations, diversifying feature representations and aiding the model in identifying the complex texture features of HEs and SEs so that the best segmentation effect is achieved for HEs and SEs, as shown in Fig. 6b. Moreover, the combination of AFTE and shift block (Row 4) outperforms the use of AFTE alone, which indicates that shift block enhances the correlation in the regions between lesions and vessels by shifting features within the channels to take advantage of spatial neighbors, enabling AFTE to learn the dependencies between lesions and vessels better.

It is observed from Row 5 that SAA can effectively integrate the features of EXs, HEs and MAs at different scales. Since irregularly shaped SEs may have large differences at different scales, the SAA is unable to integrate these inconsistent feature representation adequately and efficiently, which results in a degradation of segmentation performance.

Although our components do not consistently yield significant improvements for each lesion individually, their combined use brings about greater overall improvement. Compared with the baseline, our model achieves a 4.2\(\%\) increase in mAUPR, a 3.6\(\%\) increase in mIoU, and a 4.4\(\%\) increase in mF1, demonstrating the best overall segmentation performance.

Ablation studies of AFB

The impact of the key on segmentation performance is investigated, as shown in Table 3, where \(k_l\) and \(k_v\) denotes generated attention weights based on multi-lesion features alone and vessel features alone, respectively. Row 2, 3 and 4 list the AUPR, indicating the impact of only lesion features, fused vessel features and lesion features by concatenation and by summation on multi-lesion segmentation.

Row 4 shows a significant improvement in AUPR for SEs, EXs and MAs, which conforms to our previous analysis that different vascular structures are strongly associated with MAs and SEs. At the same time, EXs have a certain distribution relationship with MAs. However, the segmentation of capillary limits the attention to MAs, resulting in less pronounced improvement in AUPR for MAs compared with Row 2.

Additionally, it is found that introducing vascular information influences HE segmentation. This may be because HEs, compared with other lesions, share more similar features, specifically color, with vessels. When the model attempts to learn lesions around vessels, the less distinctive features of HEs can be learnt, making cross-learning more challenging. Nevertheless, incorporating vessel features as prior knowledge is beneficial for enhancing the overall performance of multi-lesion segmentation.

Having established that vascular information contributes to multi-lesion segmentation, the specific method for fusing vascular information is further discussed. The results for two fusion methods are presented in Rows 3 and 4 of Table 3. Here, the symbol “+” represents the direct addition of the two keys generated based on lesion features and vessel features, while “concat” represents the concatenation of the two keys along the channel dimension. It can be observed that introducing vascular information through concatenation leads to a decline in segmentation for three lesions and the overall model due to information conflicts. This indicates that the channel-wise concatenation cannot effectively leverage vascular information. In contrast, the spatial summation allows better fusion between the two types of information and improves the ability to model complex features.

Additionally, the AFB is compared with the RTB in RTNet, which injects vessel features using a standard cross-attention mechanism and then connects it with multi-lesion features processed through a common self-attention mechanism, denoted as [Self, Cross]. Different from the RTB, which incorporates vascular information in both the key and value generators, the AFB incorporates vascular information in the key generator only. From Table 3, it can be seen that our model can focus on the region around vessels more precisely without being disturbed by unnecessary noise because the reduction of incorporating vascular information effectively reduces noise from vessel features.

Analysis on \(\lambda\)

The hyperparameter \(\lambda\) in AFB denotes the percentage of vascular information in attention. Table 4 show the performance comparison of segmentation by setting \(\lambda\) to 0.4, 0.5, and 0.6, respectively. The results indicates that the segmentation is more accurate in the case of 0.5. Compared with the optimal setting(\(\lambda =0.5\)), increasing or decreasing the vascular information by 10\(\%\) leads to a loss of about 1.7\(\%\).

Analysis on fusion scale

Experiments on two combinations of three scales:\(\frac{H}{4}\times \frac{W}{4}\) and \(\frac{H}{16}\times \frac{W}{16}\), \(\frac{H}{8}\times \frac{W}{8}\) and \(\frac{H}{16}\times \frac{W}{16}\) are conducted. Deeper features corresponding to larger scales contain more contextual relationships, which facilitates capturing the global structures. Introducing vessel features into deep features allows the combination of global structural information with local details around vessels, thereby improving the segmentation of lesions, as shown in Table 5.

Conclusion

In this study, we propose a prior-guided attention fusion Transformer for multi-lesion segmentation of diabetic retinopathy. In this model, we propose a new AFB which combines self-attention and cross-attention mechanisms to incorporate both lesion information and vascular information in the key generator. The self-attention is used to find long-distance dependency relationships between lesions, and the cross-attention is used to effectively integrate valuable vascular information which guides the model more accurately locating different lesions in complex fundus images as prior knowledge. To our best knowledge, this is the first work to employ such prior-guided attention fusion method in fundus lesion segmentation. In order to further extract complex lesion features and improve the sensitivity of the model to structural features at small scales, the shift block is introduced to our model to improve the robustness of the model. The SAA is proposed to calculate the multi-scale attention of features generated during the upsampling process to address the issue of inconsistent lesion scales in fundus images. The segmentation results on two public datasets indicate that our model performs well overall in various multi-lesion segmentation tasks.

However, due to the lack of datasets providing both pixel-level multi-lesion labels and vascular annotations, vascular masks obtained from pretrained models are inevitably coarse-grained, which causes incomplete utilization of vascular information and introduction of noise, resulting in a degradation of the HE segmentation.

In the future, we will explore an unsupervised learning strategy to extract vascular information from fundus images, and continue improving the multi-scale fusion method to accurately focus on details or semantic information in lesion features at different scales where vascular information is incorporated.

Data availability

The datasets used and/or analysed during the current study are available from the corresponding author on reasonable request.

References

Thomas, R., Halim, S., Gurudas, S., Sivaprasad, S. & Owens, D. Idf diabetes atlas: A review of studies utilising retinal photography on the global prevalence of diabetes related retinopathy between 2015 and 2018. Diabetes Res. Clin. Pract. 157, 107840 (2019).

Ciulla, T. A., Amador, A. G. & Zinman, B. Diabetic retinopathy and diabetic macular edema: Pathophysiology, screening, and novel therapies. Diabetes Care 26, 2653–2664 (2003).

Raman, R., Gella, L., Srinivasan, S. & Sharma, T. Diabetic retinopathy: An epidemic at home and around the world. Indian J. Ophthalmol. 64, 69 (2016).

Wong, T. Y. et al. Guidelines on diabetic eye care: the international council of ophthalmology recommendations for screening, follow-up, referral, and treatment based on resource settings. Ophthalmology 125, 1608–1622 (2018).

Liu, Q., Liu, H., Ke, W. & Liang, Y. Automated lesion segmentation in fundus images with many-to-many reassembly of features. Pattern Recogn. 136, 109191 (2023).

Huang, S., Li, J., Xiao, Y., Shen, N. & Xu, T. Rtnet: relation transformer network for diabetic retinopathy multi-lesion segmentation. IEEE Trans. Med. Imaging 41, 1596–1607 (2022).

Guo, S. et al. Bin loss for hard exudates segmentation in fundus images. Neurocomputing 392, 314–324. https://doi.org/10.1016/j.neucom.2018.10.103 (2020).

Mo, J., Zhang, L. & Feng, Y. Exudate-based diabetic macular edema recognition in retinal images using cascaded deep residual networks. Neurocomputing 290, 161–171. https://doi.org/10.1016/j.neucom.2018.02.035 (2018).

Playout, C., Duval, R. & Cheriet, F. A novel weakly supervised multitask architecture for retinal lesions segmentation on fundus images. IEEE Trans. Med. Imaging 38, 2434–2444. https://doi.org/10.1109/TMI.2019.2906319 (2019).

Kumar, S., Adarsh, A., Kumar, B. & Singh, A. K. An automated early diabetic retinopathy detection through improved blood vessel and optic disc segmentation. Optics Laser Technol. 121, 105815 (2020).

Guo, S. et al. L-seg: An end-to-end unified framework for multi-lesion segmentation of fundus images. Neurocomputing 349, 52–63. https://doi.org/10.1016/j.neucom.2019.04.019 (2019).

Xie, S. & Tu, Z. Holistically-nested edge detection. In 2015 IEEE International Conference on Computer Vision (ICCV), 1395–1403, https://doi.org/10.1109/ICCV.2015.164 (2015).

Simonyan, K. & Zisserman, A. Very deep convolutional networks for large-scale image recognition. In International Conference on Learning Representations (2015).

Vaswani, A. et al. Attention is all you need. Adv. Neural Inf. Process. Syst. 30, 1 (2017).

Egonmwan, E. & Chali, Y. Transformer and seq2seq model for paraphrase generation. In Proceedings of the 3rd Workshop on Neural Generation and Translation, 249–255 (2019).

Shi, Y. et al. Emformer: Efficient memory transformer based acoustic model for low latency streaming speech recognition. In ICASSP 2021-2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 6783–6787 (IEEE, 2021).

Wang, X., Girshick, R., Gupta, A. & He, K. Non-local neural networks. In Proceedings of the IEEE conference on computer vision and pattern recognition, 7794–7803 (2018).

Carion, N. et al. End-to-end object detection with transformers. In European conference on computer vision, 213–229 (Springer, 2020).

Dosovitskiy, A. et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv preprint arXiv:2010.11929 (2020).

Liu, Z. et al. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF international conference on computer vision, 10012–10022 (2021).

Le Dinh, T., Lee, S.-H., Kwon, S.-G. & Kwon, K.-R. Covid-19 chest x-ray classification and severity assessment using convolutional and transformer neural networks. Appl. Sci. 12, 4861 (2022).

Krishnan, K. S. & Krishnan, K. S. Vision transformer based covid-19 detection using chest x-rays. In 2021 6th International Conference on Signal Processing, Computing and Control (ISPCC), 644–648 (IEEE, 2021).

He, A. et al. Progressive multiscale consistent network for multiclass fundus lesion segmentation. IEEE Trans. Med. Imaging 41, 3146–3157 (2022).

Ni, J., Wu, J., Tong, J., Chen, Z. & Zhao, J. Gc-net: Global context network for medical image segmentation. Comput. Methods Programs Biomed. 190, 105121 (2020).

Kaur, J. & Mittal, D. A generalized method for the segmentation of exudates from pathological retinal fundus images. Biocybernetics Biomed. Eng. 38, 27–53. https://doi.org/10.1016/j.bbe.2017.10.003 (2018).

Al-hazaimeh, O. M., Abu-Ein, A. A., Tahat, N. M., Al-Smadi, M. A. & Al-Nawashi, M. M. Combining artificial intelligence and image processing for diagnosing diabetic retinopathy in retinal fundus images. International Journal of Online & Biomedical Engineering18 (2022).

Sun, Y., Xue, B., Zhang, M., Yen, G. G. & Lv, J. Automatically designing cnn architectures using the genetic algorithm for image classification. IEEE Trans. Cybernetics 50, 3840–3854. https://doi.org/10.1109/TCYB.2020.2983860 (2020).

Jeon, Y. & Kim, J. Constructing fast network through deconstruction of convolution. Advances in neural information processing systems31 (2018).

Chen, W., Xie, D., Zhang, Y. & Pu, S. All you need is a few shifts: Designing efficient convolutional neural networks for image classification. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 7241–7250 (2019).

Lin, J., Gan, C. & Han, S. Tsm: Temporal shift module for efficient video understanding. In Proceedings of the IEEE/CVF international conference on computer vision, 7083–7093 (2019).

Wang, G., Zhao, Y., Tang, C., Luo, C. & Zeng, W. When shift operation meets vision transformer: An extremely simple alternative to attention mechanism. In Proceedings of the AAAI Conference on Artificial Intelligence 36, 2423–2430 (2022).

Li, T. et al. Diagnostic assessment of deep learning algorithms for diabetic retinopathy screening. Inf. Sci. 501, 511–522 (2019).

Porwal, P. et al. Idrid: Diabetic retinopathy-segmentation and grading challenge. Med. Image Anal. 59, 101561 (2020).

Owen, C. G. et al. Retinal arteriolar tortuosity and cardiovascular risk factors in a multi-ethnic population study of 10-year-old children; the child heart and health study in england (chase). Arterioscler. Thromb. Vasc. Biol. 31, 1933–1938 (2011).

Staal, J., Abràmoff, M. D., Niemeijer, M., Viergever, M. A. & Van Ginneken, B. Ridge-based vessel segmentation in color images of the retina. IEEE Trans. Med. Imaging 23, 501–509 (2004).

Ronneberger, O., Fischer, P. & Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015: 18th International Conference, Munich, Germany, October 5-9, 2015, Proceedings, Part III 18, 234–241 (Springer, 2015).

Zhou, Z., Rahman Siddiquee, M. M., Tajbakhsh, N. & Liang, J. Unet++: A nested u-net architecture for medical image segmentation. In Deep Learning in Medical Image Analysis and Multimodal Learning for Clinical Decision Support: 4th International Workshop, DLMIA 2018, and 8th International Workshop, ML-CDS 2018, Held in Conjunction with MICCAI 2018, Granada, Spain, September 20, 2018, Proceedings 4, 3–11 (Springer, 2018).

Chen, Y., Meng, Q. & Zhang, J. Effects of the notch angle, notch length and injection rate on hydraulic fracturing under true triaxial stress: An experimental study. Water 10, 801 (2018).

Gu, Z. et al. Ce-net: Context encoder network for 2d medical image segmentation. IEEE Trans. Med. Imaging 38, 2281–2292. https://doi.org/10.1109/TMI.2019.2903562 (2019).

Zhao, H., Shi, J., Qi, X., Wang, X. & Jia, J. Pyramid scene parsing network. In Proceedings of the IEEE conference on computer vision and pattern recognition, 2881–2890 (2017).

Wan, C. et al. Ead-net: A novel lesion segmentation method in diabetic retinopathy using neural networks. Disease Markers 2021, 6482665 (2021).

Pavani, P. G., Biswal, B. & Gandhi, T. K. Simultaneous multiclass retinal lesion segmentation using fully automated rilbp-ynet in diabetic retinopathy. Biomed. Signal Process. Control 86, 105205 (2023).

Bo, W., Li, T., Liu, X. & Wang, K. Saa: scale-aware attention block for multi-lesion segmentation of fundus images. In 2022 IEEE 19th International Symposium on Biomedical Imaging (ISBI), 1–5 (IEEE, 2022).

Acknowledgements

This work was supported by national natural science foundation of China under Grant 82071995, and key research and development program of Jilin Province, China under Grant 20220201141GX.

Author information

Authors and Affiliations

Contributions

Chenfangqian Xu and Xiaoxin Guo conceived and conducted the experiments, and wrote the main manuscript text, Guangqi Yang and Yihao Cui prepared and preprocessed the experimental data, Longchen Su and Hongliang Dong provided the software support, and Xiaoying Hu and Songtian Che analyzed and discussed the experimental results. All authors reviewed the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Xu, C., Guo, X., Yang, G. et al. Prior-guided attention fusion transformer for multi-lesion segmentation of diabetic retinopathy. Sci Rep 14, 20892 (2024). https://doi.org/10.1038/s41598-024-71650-6

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-024-71650-6

Keywords

This article is cited by

-

Convolutional block attention gate-based Unet framework for microaneurysm segmentation using retinal fundus images

BMC Medical Imaging (2025)

-

Advancing Visual Perception Through VCANet-Crossover Osprey Algorithm: Integrating Visual Technologies

Journal of Imaging Informatics in Medicine (2025)