Abstract

The rotary motor plays a pivotal role in many motion execution mechanisms. However, an inherent problem arises during the initial installation of the encoder grating: eccentricity between the centers of the encoder grating and the motor shaft. This eccentricity substantially degrades the accuracy of motor angle measurements. To address this challenge, we propose a precision encoder grating mounting system that automates the encoder grating mounting process. The proposed system mainly comprises a near-sensor detector and a push rod. Using a near-sensor approach, the detector captures images of the rotating encoder grating and computes the eccentricity in real time. This approach substantially reduces the time delays in image data transmission, thereby improving the speed and accuracy of the eccentricity calculation. The major contribution of this article is a method for real-time eccentricity calculation that leverages an edge processor within the detector and an edge-vision baseline detection algorithm. This method enables real-time determination of the eccentricity and eccentricity angle of the encoder grating. Using the obtained eccentricity and eccentricity angle, the push rod automatically adjusts the position of the encoder grating. In the experiments, the detector obtained the eccentricity and eccentricity angle of the encoder grating within 2.8 s. The system completed an encoder grating mounting task in 25.1 s on average, and the average eccentricity after mounting was 3.8 µm.


Introduction

An optical rotary encoder is a crucial device for measuring the rotation angle of a motor shaft in equipment such as machine tools, robots, and measuring machines1. Its accuracy directly affects the precision of motion control in industrial equipment2,3. During the initial installation of an optical rotary encoder grating, an eccentricity error typically occurs between the centers of the encoder grating and the motor shaft (Fig. 1). This eccentricity error substantially degrades the accuracy of motor angle measurements in high-precision machines4, precision turntables5, and metrology instruments6. Various methods, such as encoder sensor output signal analysis7,8,9,10,11,12, multichannel photoelectric sensors13,14,15, and innovative encoder grating patterns16,17, have been proposed to address eccentricity-induced rotation angle measurement errors. However, these methods often complicate the rotation angle acquisition process and increase product costs. An eccentricity adjustment system using a charge-coupled device (CCD) camera and a motion control card has also been proposed to mitigate the eccentricity error during encoder grating assembly for rotary motors18. Nevertheless, time delays in image data transfer between the CCD camera and the image processing computer reduce the efficiency and precision of the encoder grating mounting process. In this article, we propose an encoder grating mounting system that achieves efficient, high-precision encoder grating mounting.

Researchers have explored software-based compensation methods for motor angle measurement errors, such as an adaptive differential evolution-Fourier neural network model7, error models built from sensor data8,9, a digital bandpass filtering method10, variational mode decomposition with a coarse-to-fine selection scheme11, and a method based on the phase feature of the moiré signal12. These methods numerically analyze the digital converter output of optical rotary encoders to decompose the factors contributing to motor angle measurement errors and to compensate for errors induced by installation eccentricity. However, such numerical analysis requires extensive data processing and long processing times, which do not meet the requirements of real-time compensation.

Hardware-based detection and compensation methods for eccentricity-induced motor angle measurement errors have also been proposed. For instance, Gurauskis et al.13 and Yu et al.14 proposed encoders with multiple photoelectric sensors to mitigate mechanical installation errors. Wang et al.15 proposed a photodiode area-compensation method and designed a four-channel photodiode array chip for optical encoders to address angle measurement errors. In addition, Li et al.16 developed a spider-web patterned scale encoder grating monitored by two orthogonally arranged dual photoelectric sensors, enabling real-time eccentricity detection and compensation. Dalboni et al.17 used a half-Vernier patterned scale encoder grating and two photoelectric sensors to implement an absolute rotary encoder. However, using multichannel sensors for eccentricity detection and compensation introduces potential errors from sensor placement precision and complicates the rotation angle acquisition process.

If the eccentricity error is addressed during the initial installation of an encoder grating, the induced motor angle measurement error can be reduced directly. Wang et al.18 proposed an eccentricity adjustment system based on a CCD camera and a motion control card. The system uses a CCD camera and a computer to detect eccentricity by capturing and processing encoder grating images. Then, a microscale two-dimensional translation table is controlled to adjust the encoder grating position. However, the system must transmit large volumes of image data from the CCD camera to a computer, and the resulting transmission delay reduces the precision and efficiency of encoder grating mounting. In addition, this system does not account for the random variation in the position of different motor shafts or in the shape of different encoder grating edges19. This randomness prevents batch adjustment of encoder grating eccentricity.

In this article, we propose a precision encoder grating mounting system that automates the encoder grating mounting process. The proposed system mainly comprises a near-sensor detector and a push rod. The encoder grating has an alignment ring concentric with the grating, and the eccentricity of the grating can be determined based on the position of the alignment ring. With the use of a near-sensor approach, the detector captures rotating encoder grating images, and the eccentricity is computed in real-time. This approach substantially reduces the time delays in image data transmission, thereby enhancing the speed and accuracy of eccentricity calculation.

The major contribution of this article is a method for real-time eccentricity calculation that leverages an edge processor within the detector and an edge-vision baseline detection algorithm. This method enables real-time determination of the eccentricity and eccentricity angle of the encoder grating. Leveraging the obtained eccentricity and eccentricity angle data, the push rod automatically adjusts the position of the encoder grating.

The key novelty is a pipelined baseline feature-extraction module in the near-sensor detector, which obtains the baseline features of the captured image without buffering the entire image. Because this module pipelines the image processing, the baseline features are available in real time as soon as sensor readout completes.

In the experiments, the detector obtained the eccentricity and eccentricity angle of the encoder grating within 2.8 s. The system completed an encoder grating mounting task in 25.1 s on average, and the average eccentricity after mounting was 3.8 µm.

The remainder of this article is structured as follows. Section 2 describes the structure of the proposed encoder grating mounting system. Section 3 introduces the proposed encoder grating eccentricity detection method. Section 4 presents the proposed detector based on a near-sensor computing architecture. Section 5 presents the system implementation and experimental results. Finally, Section 6 concludes the article.

Encoder grating mounting system

We propose an efficient, high-precision encoder grating mounting system (Fig. 2). The system comprises a detector, a controller, a focus motor, a high-precision servo motor, and a push-rod motor. A rotary motor, connected shaft-to-shaft to the servo motor through a coupling, supports the encoder grating at its top. The detector uses near-sensor computing to capture encoder grating images and calculate the encoder grating eccentricity. The focus motor adjusts the distance between the detector and the encoder grating for optimal imaging. The high-precision servo motor rotates both the rotary motor and the encoder grating. The push-rod motor corrects the mounting eccentricity by precisely driving the push rod against the edge of the encoder grating. The controller coordinates the overall encoder grating mounting operation.

The encoder grating is first placed on the rotary motor shaft within the system. The encoder grating has an alignment ring concentric with the grating. A light-curing adhesive is applied between the rotary motor shaft and the encoder grating. After the mounting process, ultraviolet light is used to cure the adhesive and fix the encoder grating to the rotary motor shaft. The system detects the initial mounting eccentricity and mounts the encoder grating accurately through the following steps.

First, the detector captures an encoder grating image and calculates the average gradient of the image. The controller drives the focus motor to autofocus using the maximum average gradient method20, ensuring a sharp encoder grating image.
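The autofocus criterion can be sketched as follows. This is a minimal illustration, not the detector's implementation: it assumes one common definition of the average gradient (the mean of \(\sqrt{(\Delta x^2+\Delta y^2)/2}\) over forward differences) and replaces the controller's motor search with an exhaustive scan over candidate focus positions. The callback `capture_at` is hypothetical and stands in for moving the focus motor and grabbing a frame.

```python
import math


def average_gradient(img):
    """Mean of sqrt((dx^2 + dy^2) / 2) over forward differences (assumed definition)."""
    h, w = len(img), len(img[0])
    total = 0.0
    for r in range(h - 1):
        for c in range(w - 1):
            dx = img[r][c + 1] - img[r][c]
            dy = img[r + 1][c] - img[r][c]
            total += math.sqrt((dx * dx + dy * dy) / 2.0)
    return total / ((h - 1) * (w - 1))


def autofocus(capture_at, positions):
    """Return the focus position whose image has the largest average gradient.

    capture_at(z) is a hypothetical callback that moves the focus motor to z
    and returns a 2-D image as a list of rows.
    """
    return max(positions, key=lambda z: average_gradient(capture_at(z)))


# Usage with a simulated lens: checkerboard contrast peaks at z = 3.
def fake_capture(z):
    contrast = 100.0 / (1 + abs(z - 3))
    return [[contrast * ((r + c) % 2) for c in range(8)] for r in range(8)]


print(autofocus(fake_capture, range(7)))  # 3
```

A real controller would more likely hill-climb toward the peak than scan every position; the scoring function is the part that carries over.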

Second, the controller commands the servo motor to rotate the rotary motor shaft steadily through \(360^{\circ }\). Meanwhile, the detector captures images of the alignment ring and code track of the encoder grating at high speed and processes them in real time. Using the method described in Section 3, the detector relates the alignment ring position in each image to the servo motor rotation angle to determine the encoder grating eccentricity e and the corresponding servo motor rotation angle (eccentricity angle \(\theta _{\max }\)).

Third, the controller aligns the radial line of the rotary motor at the eccentricity angle \(\theta _{\max }\) with the push rod by driving the servo motor. Subsequently, the push-rod motor guides the push rod to gradually approach the edge of the encoder grating, which the detector monitors in real time. Upon reaching the edge, the push rod swiftly pushes the encoder grating across a distance equal to the eccentricity, adjusting its position.

Finally, the system again verifies the mounting eccentricity through the detector. If the eccentricity is below the set threshold, the system completes the encoder grating mounting process. Otherwise, the system repeats the above process from the third step.
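The four steps above form a measure-push-verify loop. The sketch below simulates that loop under simplifying assumptions: the eccentricity is modeled as a 2-D offset vector in µm, the hypothetical `measure` function stands in for the detector, the hypothetical `push` function stands in for the push rod with an assumed gain below 1 to mimic an imperfect push, and the threshold value is illustrative because no threshold is given here.

```python
import math


def measure(offset):
    """Simulated detector: eccentricity e (um) and eccentricity angle (deg)."""
    x, y = offset
    return math.hypot(x, y), math.degrees(math.atan2(y, x)) % 360.0


def push(offset, e, theta_deg, gain):
    """Simulated push rod: move the grating centre by gain * e back toward the shaft."""
    x, y = offset
    t = math.radians(theta_deg)
    return (x - gain * e * math.cos(t), y - gain * e * math.sin(t))


def mount(offset, threshold_um, gain=0.9, max_iter=10):
    """Measure, push, and re-verify until e < threshold_um (steps 2 to 4)."""
    history = []
    for _ in range(max_iter):
        e, theta_max = measure(offset)
        history.append(e)
        if e < threshold_um:
            return offset, history
        offset = push(offset, e, theta_max, gain)
    raise RuntimeError("eccentricity did not converge")


final, history = mount((300.0, 400.0), threshold_um=1.0)
print([round(e, 3) for e in history])  # [500.0, 50.0, 5.0, 0.5]
```

With a 10% under-push, each iteration cuts the eccentricity tenfold, which is why the verification step (repeating from the third step) matters in practice.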

The mounting system incorporates the proposed detector based on a near-sensor computing architecture. This detector enables high-speed image capture and real-time image processing during the autofocusing, eccentricity detection, and adjustment steps. It substantially reduces the time delays during image data capture and transmission, thereby improving the responsiveness of the system. In addition, it detects the eccentricity efficiently and accommodates the random variation in motor shaft position and encoder grating edge shape. This rapid response enables the controller to drive the motors precisely. Therefore, the mounting system can efficiently and precisely accomplish encoder grating mounting tasks.

Eccentricity calculation method

Design of the method

We propose an eccentricity calculation method to determine the eccentricity between the centers of the encoder grating and the motor shaft (Fig. 3). The detector rapidly captures and processes encoder grating images while the servo motor rotates. We treat the alignment ring as the baseline in the encoder grating image and calculate the eccentricity via the following steps.

First, the servo motor continuously rotates the encoder grating and simultaneously transmits its rotation angle to the edge processor through the controller. The initial servo motor rotation angle is \(\theta _0\). The image sensor captures encoder grating images throughout the rotation. The edge processor acquires each encoder grating image from the image sensor and records the rotation angle \(\theta\) at the moment the image sensor completes exposure.

Next, the edge processor detects the baseline in each encoder grating image. It extracts the column coordinate c of the baseline center point and retrieves the stored servo motor rotation angle \(\theta\). Each pair forms a point \((\theta ,c)\) on the \(\theta -c\) curve. The edge processor stores this point and proceeds to the next image frame. After the servo motor completes a \(360^{\circ }\) rotation, the edge processor uses the stored points to fit two functions, namely, \(c = f_{\min }(\theta )\) and \(c = f_{\max }(\theta )\).

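Finally, we use (1) to determine the two extreme points \(P_{\min }(\theta _{\min },c_{\min })\) and \(P_{\max }(\theta _{\max },c_{\max })\) of \(f_{\min }\) and \(f_{\max }\), respectively:

\[\theta _{\min } = \arg \min _{\theta } f_{\min }(\theta ),\quad c_{\min } = f_{\min }(\theta _{\min }),\qquad \theta _{\max } = \arg \max _{\theta } f_{\max }(\theta ),\quad c_{\max } = f_{\max }(\theta _{\max }) \qquad (1)\]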

The servo motor angle \(\theta _{\max }\) is the rotation angle associated with the eccentricity (the eccentricity angle \(\theta _{\max }\)). Based on the extreme values, the edge processor calculates the encoder grating eccentricity e using (2):

\[e = \frac{c_{\max } - c_{\min }}{2} \qquad (2)\]

Error analysis of the method

The alignment ring on the encoder grating is nominally circular, but inherent imperfections of the etching process cause deviations from the ideal circle. We treat the roundness error of the alignment ring as a systematic error. This roundness error is kept small during encoder grating production, and the fitting step of the eccentricity calculation further reduces its impact.

The eccentricity calculation method requires multiple frames of encoder grating images to obtain baseline coordinates and servo motor rotation angles for eccentricity determination via fitting. The eccentricity calculation accuracy depends on the acquisition of the servo motor rotation angles and on the sensor frame interval. The detector records the rotation angle every 1 ms and caches it during sensor exposure. Thus, the error in the recorded rotation angle can be neglected, and we analyze only the eccentricity calculation error induced by the sensor frame interval.

Figure 4 shows the error \(\varepsilon\) in the eccentricity calculation induced by the sensor frame interval. The alignment ring circle O, with eccentricity e, rotates by \(\gamma\) within one sensor frame interval. The error \(\varepsilon\) can be calculated as follows:

\[\varepsilon = e\left( 1-\cos \gamma \right) \qquad (3)\]

where \(\gamma\) denotes the encoder grating rotation angle within the frame interval, and e denotes the eccentricity. Moreover, \(\gamma\) can be calculated as follows:

\[\gamma = \frac{v}{\mathrm {FPS}} \qquad (4)\]

where v denotes the servo motor rotation speed, and FPS is the sensor frame rate. Combining (3) and (4), we obtain:

\[\varepsilon = e\left[ 1-\cos \left( \frac{v}{\mathrm {FPS}}\right) \right] \qquad (5)\]

With increasing FPS value, \(\varepsilon\) decreases. The near-sensor detector enables high-speed image capture and real-time processing capabilities, thereby reducing eccentricity calculation errors and achieving greater accuracy in encoder grating mounting.

Near-sensor computing architecture-based detector

Detector architecture

The detector architecture is shown in Fig. 5. It comprises a lens, a high-speed global shutter image sensor, an edge processor, and interfaces. A prime microscope lens is employed to capture encoder grating images. The image sensor efficiently captures a clear encoder grating image at high speed during encoder grating rotation. The edge processor processes the obtained encoder grating image in real time to calculate the eccentricity. The image sensor, edge processor, and interfaces are located on three printed circuit boards, which are stacked and connected through board-to-board connectors.

The detector performs image acquisition and processing in the near-sensor edge processor. Near-sensor computing shortens the image transmission path, thereby reducing the time from image acquisition to result output. Outputting results in real time reduces the data transmission delay and simplifies the connection between the detector and the controller. This substantially reduces the closed-loop control delay of the mounting system, making the encoder grating mounting process more efficient. Moreover, the tightly stacked boards reduce the detector's size and improve integration.

Design of the eccentricity calculation in the edge processor

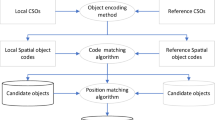

The image processing modules for the eccentricity calculation in the edge processor are shown in Fig. 6. The eccentricity calculation comprises real-time edge-vision baseline detection and eccentricity fitting. After the sensor captures an encoder grating image, the edge processor applies real-time edge-vision baseline detection to identify the alignment ring as the baseline. This process yields the baseline center point coordinates and the average gradient of the image. Then, the eccentricity-fitting module relates the alignment ring position in the image to the servo motor rotation angle to calculate the encoder grating eccentricity and eccentricity angle.

Real-time edge-vision baseline detection comprises the morphology-filtering (Fig. 6a), baseline-segmentation (Fig. 6b), and baseline feature-extraction (Fig. 6c) modules. The morphology-filtering module removes interference from the code tracks. The baseline-segmentation module uses the image gradient to filter the baseline edges, and the baseline feature-extraction module calculates the baseline features from the connected components of the labeled image. In real-time edge-vision baseline detection, sensor readout and processing proceed concurrently, which minimizes processing latency. After baseline detection, the eccentricity-fitting module (Fig. 6d) relates the alignment ring position in the image to the servo motor rotation angle.

The design of each module is as follows.

Morphology filtering

The morphology-filtering module comprises dilation-erosion, subtraction, erosion-dilation, and Gaussian filter components, as shown in Fig. 6a. The dilation-erosion structuring element is a disk with a diameter of 2d, and the erosion-dilation structuring element is a disk with a diameter of d/2, where d denotes the image baseline width. This width is determined by the sensor pixel size, lens magnification, and alignment ring width.

First, the encoder grating image undergoes dilation-erosion processing to eliminate the baseline from the image. In the dilation step, each pixel takes the maximum value within the circular structuring element centered on it; the subsequent erosion takes the minimum. Next, the encoder grating image is subtracted from the result, which eliminates the code track and retains only the baseline. The subtracted image then undergoes erosion-dilation processing to remove residual corner pixels and dirt on the encoder grating. Finally, a Gaussian filter is applied to smooth the edges.

The morphology-filtering module effectively eliminates lines wider than d and removes effects due to dust from the image, resulting in a clear baseline image.
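The morphology-filtering chain can be sketched with NumPy as follows. This is a hypothetical floating-point reference, not the HLS pipeline: it assumes the alignment ring appears darker than its surroundings (so that dilation followed by erosion removes it and subtracting the original image recovers it), uses disk structuring elements of diameter 2d and d/2 as described above, and uses edge padding and a Gaussian with sigma = 1 px, both of which are assumed choices.

```python
import numpy as np


def disk_offsets(radius):
    """(dy, dx) offsets of a disk-shaped structuring element."""
    r = int(radius)
    return [(dy, dx) for dy in range(-r, r + 1) for dx in range(-r, r + 1)
            if dy * dy + dx * dx <= radius * radius]


def _rank_filter(img, radius, op):
    """Grey-level dilation (op=np.maximum) or erosion (op=np.minimum) with a disk."""
    r = int(radius)
    h, w = img.shape
    padded = np.pad(img, r, mode="edge")
    out = None
    for dy, dx in disk_offsets(radius):
        window = padded[r + dy:r + dy + h, r + dx:r + dx + w]
        out = window.copy() if out is None else op(out, window)
    return out


def dilate(img, radius):
    return _rank_filter(img, radius, np.maximum)


def erode(img, radius):
    return _rank_filter(img, radius, np.minimum)


def gaussian_blur(img, sigma=1.0):
    """Separable Gaussian blur with edge padding."""
    r = max(1, int(3 * sigma))
    x = np.arange(-r, r + 1)
    k = np.exp(-x ** 2 / (2 * sigma ** 2))
    k /= k.sum()
    padded = np.pad(img, r, mode="edge")
    rows = np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 0, rows)


def morphology_filter(img, d, sigma=1.0):
    """Extract dark lines narrower than about 2d; suppress wide features and small specks."""
    img = np.asarray(img, dtype=float)
    closed = erode(dilate(img, d), d)                 # disk of diameter 2d fills the thin dark baseline
    line_only = closed - img                          # wide code-track features cancel out here
    opened = dilate(erode(line_only, d / 4), d / 4)   # disk of diameter d/2 removes specks
    return gaussian_blur(opened, sigma)


# Usage: a 4 px dark line (baseline-like) and a 30 px dark band (code-track-like).
img = np.full((20, 100), 200.0)
img[:, 10:14] = 50.0
img[:, 40:70] = 50.0
out = morphology_filter(img, d=4)
```

In the synthetic example the thin line survives with a value of about 140 while the wide band and the background both come out at zero.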

Baseline segmentation

The baseline-segmentation module, shown in Fig. 6b, comprises gradient calculation, non-maximum suppression (NMS), dual-threshold segmentation, and baseline filtering. After morphology filtering, the image retains the alignment ring and the connecting lines of the code track. Locating the center of the alignment ring directly is difficult because the ring has a finite width in the image and its pixel values are close to the maximum. The module therefore first uses the image gradient to identify the edges of the alignment ring, then applies NMS and dual-threshold segmentation to generate a binary edge image, and finally applies gradient-based filtering to remove the lines connecting the code track. The operation of each step is outlined below.

-

Gradient calculation

-

After morphology filtering, the Sobel operator is used to calculate the x- and y-direction gradients of the image, \(G_x\) and \(G_y\), respectively. The gradient magnitude |G| and the gradient direction angle \(\varphi\) are then determined from \(G_x\) and \(G_y\).

-

Non-maximum suppression

-

After gradient calculation, the image undergoes NMS based on the gradient magnitude and direction. For each center pixel, the gradient direction is quantized to one of four directions (0, 45, 90, and 135 degrees), and the center pixel and its two neighbors along that direction are selected from the \(3\times 3\) neighborhood. If the gradient magnitude of the center pixel is the maximum of these three pixels, it is retained; otherwise, it is set to zero to suppress this non-maximum pixel.

-

Dual-threshold segmentation

-

Dual-threshold segmentation binarizes the edge image. This step helps remove spots caused by dirt on the encoder grating. Compared with single-threshold segmentation, it also reduces breaks in the binary edges of the alignment ring. Pixels with values greater than the high threshold are classified as strong edges, whereas those between the low and high thresholds are classified as weak edges.

-

Baseline filter

-

The baseline filter uses the gradient direction to retain the edges of the alignment ring and eliminate other edges from the binary edge image. The relative positions of the sensor and the encoder grating constrain the gradient direction of the alignment ring edges to a known range. If the gradient direction of an edge pixel falls outside this range, the baseline filter removes the pixel from the binary edge image.
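A NumPy sketch of the baseline-segmentation steps follows. It is an illustration under stated assumptions rather than the detector's code: rows increase downward, directions come from `arctan2(Gy, Gx)` folded into [0°, 180°), the thresholds and the accepted direction window (`center_deg` ± `tol_deg`) are hypothetical parameters, and dual-threshold segmentation only labels pixels as strong (2) or weak (1), without hysteresis linking, since none is described.

```python
import numpy as np


def sobel(img):
    """Sobel gradients (rows grow downward); returns |G| and direction in degrees."""
    p = np.pad(np.asarray(img, dtype=float), 1, mode="edge")
    gx = (p[:-2, 2:] + 2 * p[1:-1, 2:] + p[2:, 2:]) - (p[:-2, :-2] + 2 * p[1:-1, :-2] + p[2:, :-2])
    gy = (p[2:, :-2] + 2 * p[2:, 1:-1] + p[2:, 2:]) - (p[:-2, :-2] + 2 * p[:-2, 1:-1] + p[:-2, 2:])
    return np.hypot(gx, gy), np.degrees(np.arctan2(gy, gx))


# (row, col) step for the quantised directions 0, 45, 90 and 135 degrees.
_STEPS = {0: (0, 1), 1: (1, 1), 2: (1, 0), 3: (1, -1)}


def non_max_suppression(mag, ang):
    """Keep a pixel only if it beats its two neighbours along the gradient direction."""
    h, w = mag.shape
    q = np.round(np.mod(ang, 180.0) / 45.0).astype(int) % 4
    p = np.pad(mag, 1)
    out = np.zeros_like(mag)
    for k, (dy, dx) in _STEPS.items():
        fwd = p[1 + dy:1 + dy + h, 1 + dx:1 + dx + w]
        bwd = p[1 - dy:1 - dy + h, 1 - dx:1 - dx + w]
        keep = (q == k) & (mag > fwd) & (mag >= bwd)  # ">" on one side thins plateaus
        out[keep] = mag[keep]
    return out


def dual_threshold(mag, low, high):
    """0 = no edge, 1 = weak edge (low < |G| <= high), 2 = strong edge (|G| > high)."""
    labels = np.zeros(mag.shape, dtype=np.uint8)
    labels[(mag > low) & (mag <= high)] = 1
    labels[mag > high] = 2
    return labels


def baseline_filter(labels, ang, center_deg, tol_deg):
    """Drop edge pixels whose direction (mod 180) lies outside center_deg +/- tol_deg."""
    a = np.mod(ang, 180.0)
    diff = np.abs(a - center_deg)
    diff = np.minimum(diff, 180.0 - diff)
    out = labels.copy()
    out[diff > tol_deg] = 0
    return out


def segment_baseline(img, low, high, center_deg, tol_deg):
    mag, ang = sobel(img)
    labels = dual_threshold(non_max_suppression(mag, ang), low, high)
    return baseline_filter(labels, ang, center_deg, tol_deg), ang


# Usage: a vertical bright band (baseline-like) and a horizontal bar (code-track-like).
img = np.zeros((40, 60))
img[:, 20:30] = 100.0
img[30:33, 35:] = 100.0
edges, ang = segment_baseline(img, low=50, high=200, center_deg=0.0, tol_deg=20.0)
```

In this example the two vertical edges of the band (columns 20 and 30) survive as one-pixel strong edges, while the horizontal top edge of the bar is detected by NMS but removed by the direction filter.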

Baseline feature-extraction

The baseline feature-extraction module is shown in Fig. 6c, and its detailed design is shown in Fig. 7. This module comprises edge filling, connected component labeling, connected component feature extraction, and baseline-feature computation. After baseline segmentation, the binary edge image contains both strong and weak edges, together with the gradient direction of each binary edge pixel. The baseline feature-extraction module derives features from these data to obtain the coordinate points \((\theta ,c)\). The operation of each step is outlined below.

-

Edge filling

-

The pixel values in the binary edge image after baseline segmentation are not continuous because the gradient direction is approximated by four directions during NMS. Small spots caused by dirt on the encoder grating also break the continuity of the binary edge image. Edge filling uses Algorithm 1 to fill the center pixel of each \(3\times 3\) neighborhood. When the center pixel is zero, the edge-filling algorithm examines the pixel values and gradient directions of its eight neighbors. If the difference in gradient directions is less than \(45^{\circ }\), the center position is filled in both the binary edge image and the gradient direction image.

-

Connected component labeling

-

Connected component labeling establishes the connectivity of the edge pixels. After the binary edge image is filled, it assigns the same label to connected edge pixels, generating a labeled image. Algorithm 2 performs this labeling. Using a \(3\times 3\) neighborhood, it identifies the start, middle, and end states of each connected component and assigns the corresponding label numbers. Based on the connectivity between the center pixel and the pixels in the next row, it generates a label prediction buffer for the next row; this buffer supplies the label number of the current pixel during label assignment in the subsequent row.

-

Connected component feature extraction

-

After connected component labeling, each connected edge in the binary edge image has a distinct label number. Connected component feature extraction retrieves the features of each connected component from the labeled image and its gradient directions. For each connected component, the feature buffer stores the sums of the pixel row and column coordinates, the sum of the pixel gradient directions, and the numbers of strong- and weak-edge pixels.

-

Baseline-feature computation

-

Baseline-feature computation selects the features that match the characteristics of the encoder grating alignment ring and outputs the baseline features. It first sorts the connected components by their numbers of strong- and weak-edge pixels to obtain the two largest. Next, it divides the sums of the pixel row and column coordinates by the total number of strong- and weak-edge pixels to obtain the line centers, and divides the sum of the pixel gradient directions by the same total to obtain the line directions. It then checks the validity of the line centers and directions. Finally, it averages the two line centers to obtain the baseline center column coordinate c. Combined with the servo motor rotation angle \(\theta\), this yields the coordinate point \((\theta ,c)\) needed for eccentricity fitting.
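The labeling and feature-accumulation steps can be sketched in a single raster pass. This is a hypothetical stand-in for Algorithms 1 and 2, which are not reproduced here: it omits edge filling, uses a union-find structure instead of the label prediction buffer, keeps only the previous row of labels (in the spirit of avoiding a frame buffer), and uses an assumed `max_gap` check as a placeholder for the unspecified validity test.

```python
def baseline_column(edge, ang, max_gap=None):
    """Label 8-connected edge pixels in one raster pass, accumulate per-component
    features, and return (c, centres, directions) for the two largest components."""
    h, w = len(edge), len(edge[0])
    parent, feats = {}, {}          # feats[root] = [sum_row, sum_col, sum_dir, count]

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]   # path halving
            x = parent[x]
        return x

    def union(a, b):
        ra, rb = find(a), find(b)
        if ra != rb:                        # merge b's feature sums into a's root
            parent[rb] = ra
            fa, fb = feats[ra], feats.pop(rb)
            for i in range(4):
                fa[i] += fb[i]
        return ra

    next_label = 1
    prev = [0] * w                  # labels of the previous row only
    for r in range(h):
        cur = [0] * w
        for c in range(w):
            if not edge[r][c]:
                continue
            neigh = [cur[c - 1] if c > 0 else 0,
                     prev[c - 1] if c > 0 else 0,
                     prev[c],
                     prev[c + 1] if c + 1 < w else 0]
            neigh = [n for n in neigh if n]
            if neigh:
                lab = neigh[0]
                for n in neigh[1:]:
                    lab = union(lab, n)
            else:
                lab = next_label
                parent[lab] = lab
                feats[lab] = [0.0, 0.0, 0.0, 0]
                next_label += 1
            cur[c] = lab
            f = feats[find(lab)]
            f[0] += r
            f[1] += c
            f[2] += ang[r][c]
            f[3] += 1
        prev = cur

    comps = sorted(feats.values(), key=lambda f: f[3], reverse=True)[:2]
    if len(comps) < 2:
        return None
    centres = [(f[0] / f[3], f[1] / f[3]) for f in comps]
    directions = [f[2] / f[3] for f in comps]
    if max_gap is not None and abs(centres[0][1] - centres[1][1]) > max_gap:
        return None                 # hypothetical validity check
    return (centres[0][1] + centres[1][1]) / 2.0, centres, directions
```

For two vertical edge lines at columns 20 and 30 plus a one-pixel dirt spot, the spot is discarded as the smallest component and the baseline column is 25.0. Averaging raw directions works per component here because each edge has a consistent direction.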

Eccentricity fitting

The eccentricity-fitting module is shown in Fig. 6d. It uses multiple column coordinates c and servo motor rotation angles \(\theta\) to fit two functions and calculate the encoder grating eccentricity e and the corresponding servo motor rotation angle \(\theta _{\max }\). The calculation proceeds as follows. First, the module stores the baseline detection outputs c and \(\theta\) of each frame and, after the encoder grating has rotated by \(360^{\circ }\), identifies the two extreme column coordinates. Two cubic spline functions, \(c = f_{\min }(\theta )\) and \(c = f_{\max }(\theta )\), are then fitted to the coordinate points around the two extreme values, and the extreme points of these functions, \(P_{\min }(\theta _{\min },c_{\min })\) and \(P_{\max }(\theta _{\max },c_{\max })\), are obtained. Finally, the module calculates the eccentricity \(e=(c_{\max }-c_{\min })/2\) and the eccentricity angle \(\theta _{\max }\).
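A NumPy sketch of the fitting step follows, under stated assumptions. It swaps the cubic splines for a local least-squares cubic polynomial (`numpy.polyfit`) over a window of samples around each extreme, unwraps angles across 0°/360°, locates each extreme on a dense grid, and converts e from pixels to µm with an assumed scale factor `um_per_px` (0.75 µm/px for the optics described in the hardware implementation). The synthetic sinusoidal \(\theta - c\) curve in the usage example is only an approximation of real data.

```python
import numpy as np


def _local_extreme(theta, c, idx, half_window, want_max):
    """Fit a cubic around sample idx and return (angle_deg, value) of its extreme."""
    n = len(theta)
    sel = [(idx + k) % n for k in range(-half_window, half_window + 1)]
    # Angle offsets relative to theta[idx], unwrapped across the 0/360 boundary.
    u = (theta[sel] - theta[idx] + 180.0) % 360.0 - 180.0
    coeffs = np.polyfit(u, c[sel], 3)
    grid = np.linspace(u.min(), u.max(), 2001)
    vals = np.polyval(coeffs, grid)
    k = int(np.argmax(vals)) if want_max else int(np.argmin(vals))
    return (theta[idx] + grid[k]) % 360.0, float(vals[k])


def eccentricity(theta_deg, c_px, um_per_px, half_window=10):
    """Return (e in um, eccentricity angle theta_max in degrees) from one sweep."""
    theta = np.asarray(theta_deg, dtype=float)
    c = np.asarray(c_px, dtype=float)
    _, c_min = _local_extreme(theta, c, int(np.argmin(c)), half_window, False)
    theta_max, c_max = _local_extreme(theta, c, int(np.argmax(c)), half_window, True)
    e_um = (c_max - c_min) / 2.0 * um_per_px   # e = (c_max - c_min) / 2, in um
    return e_um, float(theta_max)


# Usage on a synthetic sweep: 200 samples, 1.8 deg apart, e = 500 um at 100 deg.
theta = np.arange(200) * 1.8
c = 720.0 + (500.0 / 0.75) * np.cos(np.radians(theta - 100.0))
print(eccentricity(theta, c, um_per_px=0.75))  # close to (500.0, 100.0)
```

Fitting only around the extremes keeps the polynomial low-order while still interpolating between frames, which is the point of the fitting step.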

The edge processor performs near-sensor computing on the data stream read out from the sensor. It obtains the coordinates of each frame before the next frame arrives, which makes the eccentricity calculation efficient. Real-time edge-vision baseline detection uses pipeline processing to determine the baseline center point and the image average gradient. This approach eliminates frame buffering during image processing, which reduces the latency associated with transferring large amounts of image data and allows more coordinate points to be acquired for fitting. More coordinate points improve the accuracy of the eccentricity calculation. Moreover, the controller uses the real-time output of the detector for autofocusing and for closed-loop control of encoder grating positioning. This allows the system to accommodate variations in rotary motor shaft position and encoder grating edge position across mountings.
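The following sketch illustrates why pipelined processing needs no frame buffer: a 3×3 operator only needs the three most recent rows. It is a hypothetical Python analogue of a hardware line buffer, shown for the Sobel x-gradient; `stream_sobel_gx` is an illustrative name, and border columns are simply left at zero.

```python
from collections import deque


def stream_sobel_gx(rows):
    """Yield Sobel x-gradient rows from a row iterator using a 3-row line buffer.

    Output row k corresponds to input row k + 1; border columns are left at 0.
    """
    buf = deque(maxlen=3)          # the only image storage: three rows
    for row in rows:
        buf.append(row)
        if len(buf) < 3:
            continue               # wait until a full 3x3 window is available
        top, mid, bot = buf
        w = len(mid)
        out = [0] * w
        for c in range(1, w - 1):
            right = top[c + 1] + 2 * mid[c + 1] + bot[c + 1]
            left = top[c - 1] + 2 * mid[c - 1] + bot[c - 1]
            out[c] = right - left
        yield out


# Usage: rows arrive one at a time, as during sensor readout.
readout = ([0] * 5 + [10] * 5 for _ in range(6))
for grad_row in stream_sobel_gx(readout):
    print(grad_row)  # [0, 0, 0, 0, 40, 40, 0, 0, 0, 0] for each of the 4 interior rows
```

Each output row is ready one input row after the window fills, so results trail readout by a fixed small delay rather than a whole frame.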

Implementation and experimental results

Near-sensor detector

Baseline detection method comparison

We collected encoder grating images captured by the sensor to evaluate the effectiveness of the proposed baseline detection method and compared it with traditional line detection methods, namely, the standard Hough transform21 and the Line Segment Detector (LSD)22.

Figure 8a shows the detection result of the proposed baseline detection method, which accurately detects and marks the position of the alignment ring in the image. Figure 8b shows the result of the traditional Hough transform, which fails to detect the position of the alignment ring. The LSD method detects the position of the alignment ring but cannot effectively remove the boundary lines of the code track, as shown in Fig. 8c. Thus, the proposed method accurately detects the baseline position while eliminating interference from other line segments.

Hardware implementation

The sensor in the detector is a high-speed global shutter image sensor with an input resolution of \(1920\times 1080\) px and a frame rate of 100 fps. Image processing is performed on a \(1440\times 540\) px region at the sensor center. The width of the alignment ring is \(8\,\upmu \hbox {m}\), the sensor pixel size is \(4.5\,\upmu \hbox {m}\), and the magnification of the microscope lens is \(6\times\). Consequently, the image baseline width in the focused state is \(8/4.5\times 6\approx 11\) px. Moreover, the horizontal field of view of the image is \(1080\,\upmu \hbox {m}\), so the alignment ring of the encoder grating remains within the field of view after the initial installation.
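The optical quantities quoted above follow from simple arithmetic. The helper below is an illustrative sketch (the function name is hypothetical) that derives the object-side sampling, the baseline width in pixels, and the horizontal field of view of the 1440 px processing window.

```python
def optics(ring_width_um, pixel_um, magnification, roi_width_px):
    """Object-side sampling, baseline width in pixels, and horizontal field of view."""
    um_per_px = pixel_um / magnification          # 4.5 / 6 = 0.75 um per pixel
    baseline_px = ring_width_um / um_per_px       # ring width imaged onto the sensor
    fov_um = roi_width_px * um_per_px             # width of the processing window
    return um_per_px, baseline_px, fov_um


print(tuple(round(v, 2) for v in optics(8.0, 4.5, 6.0, 1440)))  # (0.75, 10.67, 1080.0)
```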

According to (5), when the servo motor rotation speed is \(180^{\circ }\)/s, the frame rate is 100 fps, and the eccentricity is \(500\,\upmu \hbox {m}\), the eccentricity calculation error \(\varepsilon\) is approximately \(0.25\,\upmu \hbox {m}\). This error is smaller than the sensor resolution of \(0.75\, \upmu \hbox {m}\)/px and therefore meets the accuracy requirements of the eccentricity calculation.
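The error figure can be checked numerically. This sketch assumes that (5) has the form \(\varepsilon = e[1-\cos (v/\mathrm {FPS})]\), with v/FPS converted to radians; under that assumption the quoted operating point gives about 0.247 µm, consistent with the stated 0.25 µm.

```python
import math


def frame_interval_error(e_um, speed_deg_s, fps):
    """Eccentricity error from the frame interval, assuming eps = e * (1 - cos(v / FPS))."""
    gamma = math.radians(speed_deg_s / fps)   # rotation between frames, in radians
    return e_um * (1.0 - math.cos(gamma))


print(round(frame_interval_error(500.0, 180.0, 100.0), 3))  # 0.247
```

Doubling the frame rate roughly quarters the error, since \(1-\cos \gamma \approx \gamma ^2/2\) for small \(\gamma\).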

The edge processor in the detector is a Xilinx Kintex-7 xc7k325tffg676-2 field-programmable gate array (FPGA) with 1 GB of external DDR3 800M memory, which serves as the HDMI display buffer for images and processing information. The edge processor acquires the sensor images and performs the eccentricity calculations. Real-time edge-vision baseline detection is implemented as a pipelined design using high-level synthesis (HLS). The eccentricity-fitting and interface-control modules run on a MicroBlaze soft processor, which allows FPGA resources to be reused.

The detector uses EtherCAT to exchange messages with the controller and HDMI to display the real-time image and processing results.

The timing sequence of the eccentricity calculation in the detector is shown in Fig. 9. The detector records the servo motor rotation angle with a fixed period of 1 ms. The sensor captures an encoder grating image every 10 ms with an exposure time of 0.5 ms. The initial servo motor rotation angle is \(\theta _0\). During the eccentricity calculation, the motor rotates by \(360^{\circ }\) at \(180^{\circ }\)/s. Consequently, the detector acquires 200 coordinate points within 2 s for the eccentricity calculation. When the image sensor completes exposure, the edge processor first caches the servo motor rotation angle \(\theta\). It then performs real-time edge-vision baseline detection while the image is read out from the sensor to obtain the baseline center point column coordinate c, and stores the coordinate point \((\theta ,c)\). Baseline detection is performed on every frame and completes before the next frame; it takes 8.4 ms per frame. After the servo motor completes a \(360^{\circ }\) rotation, the detector fits the stored data to obtain the eccentricity and the corresponding rotation angle. The entire eccentricity calculation takes 2.8 s.
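The sample count and per-frame timing budget quoted above can be reproduced with a few lines of arithmetic. The function and parameter names are illustrative.

```python
def acquisition_budget(speed_deg_s, frame_period_ms, per_frame_ms, sweep_deg=360.0):
    """Sweep time, number of (theta, c) points, angular spacing, and per-frame slack."""
    sweep_s = sweep_deg / speed_deg_s                        # 360 / 180 = 2 s
    n_points = round(sweep_s * 1000.0 / frame_period_ms)     # one point per frame
    spacing_deg = speed_deg_s * frame_period_ms / 1000.0     # rotation between frames
    slack_ms = frame_period_ms - per_frame_ms                # margin before the next frame
    return sweep_s, n_points, spacing_deg, slack_ms


sweep_s, n_points, spacing_deg, slack_ms = acquisition_budget(180.0, 10.0, 8.4)
print(sweep_s, n_points, round(spacing_deg, 2), round(slack_ms, 2))  # 2.0 200 1.8 1.6
```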

FPGA resource usage and processing speed evaluation

To the best of our knowledge, this article presents the first real-time baseline detection method for encoder grating mounting.

Table 1 compares our resource usage and processing speed with those reported in previous studies23,24,25,26. Zhou et al.23 implemented a gradient-based Hough transform for edge extraction. Lam et al.24 proposed a road lane line detection algorithm based on convolutional neural networks. Li et al.25 developed a real-time crop row detection method that counts the number of pixels in specific image rows. Zhang et al.26 accelerated the LSD line detection algorithm on an FPGA for conveyor belt detection. Our on-chip memory usage is 4,356 kbit, more than 30% lower than those of Zhou et al.23 (6,624 kbit) and Lam et al.24 (19,692 kbit). Our processing time is 8.41 ms per frame, more than 8% shorter than those of Li et al.25 (210.80 ms/frame) and Zhang et al.26 (9.22 ms/frame).
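The percentages in the comparison can be reproduced from the Table 1 figures quoted in the paragraph. The short Python check below uses only those quoted numbers; the variable names are illustrative. It also shows that the 8% claim against Zhang et al.26 holds whether it is read as a reduction in per-frame time (about 8.8%) or as an increase in throughput (about 9.6%).

```python
# Figures quoted in the text for Table 1 (on-chip memory in kbit, time in ms/frame)
OURS_MEMORY_KBIT = 4356
OURS_TIME_MS = 8.41
MEMORY_KBIT = {"Zhou et al.": 6624, "Lam et al.": 19692}
TIME_MS = {"Li et al.": 210.80, "Zhang et al.": 9.22}


def relative_reduction(ours: float, other: float) -> float:
    """Fraction by which `ours` is smaller than `other`."""
    return (other - ours) / other


memory_savings = {k: relative_reduction(OURS_MEMORY_KBIT, v) for k, v in MEMORY_KBIT.items()}
time_savings = {k: relative_reduction(OURS_TIME_MS, v) for k, v in TIME_MS.items()}
# Throughput (frames per second) gain is the ratio of per-frame times minus one
throughput_gain = {k: v / OURS_TIME_MS - 1.0 for k, v in TIME_MS.items()}

for name, s in memory_savings.items():
    print(f"memory vs {name}: {100 * s:.1f}% lower")
for name, s in time_savings.items():
    print(f"time vs {name}: {100 * s:.1f}% shorter "
          f"({100 * throughput_gain[name]:.1f}% higher throughput)")
```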

Although reducing the processing time in a hardware implementation tends to increase on-chip memory usage, our approach strikes a balance between resource usage and processing speed. In addition, our baseline detection method filters out the code track features of the encoder grating so that the baseline ___location can be extracted effectively.

Encoder grating mounting system

System implementation

The encoder grating mounting system is shown in Fig. 10a, and details of the encoder grating pushing part are shown in Fig. 10b. The position of the alignment ring and the eccentricity between the encoder grating and the motor shaft are shown in Fig. 10c. The controller module in the system is an INOVANCE PAC800, programmed in the InoProShop 1.6.2 development environment, with a Qt5-based backend debugging interface. The encoder grating is first placed on the rotary motor shaft within the system, and a light-curing adhesive is applied between the rotary motor shaft and the encoder grating. The automatic encoder grating mounting process is then initiated. After mounting, ultraviolet light is used to cure the adhesive and fix the encoder grating to the rotary motor shaft. Once the mounting process is complete, the rotary motor is replaced with a new one, and the encoder grating for the next rotary motor is mounted.

Figure 11 shows the time required for each step of the encoder grating mounting system. Focusing takes 2 s, eccentricity calculation takes 2.8 s, and adjustment takes 6.5 s. The total mounting time depends on the number of times the encoder grating is pushed: each additional push increases the mounting time by approximately 10 s. With a single push, the system can mount an encoder grating in approximately 15 s.
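The timing figures above suggest a simple linear model of mounting time versus number of pushes, \(T(n) \approx T(1) + \Delta T\,(n-1)\) with \(T(1) \approx 15\) s and \(\Delta T \approx 10\) s. The Python sketch below encodes that approximation; the function name is hypothetical, and the model is our reading of the quoted figures, not a formula given by the authors. The itemized steps account for 11.3 s of the single-push total; the remainder is not broken down in the text (presumably verification and motion overheads). At the mean of 2.1 pushes reported in the experimental results, the model gives about 26 s, close to the measured 25.1 s average.

```python
# Step durations quoted in the text (seconds)
FOCUS_S = 2.0
ECCENTRICITY_CALC_S = 2.8
ADJUST_S = 6.5
# Approximate figures quoted in the text
SINGLE_PUSH_TOTAL_S = 15.0   # mounting time with a single push
PER_EXTRA_PUSH_S = 10.0      # increment for each additional push


def estimated_mounting_time(pushes: float) -> float:
    """Linear approximation T(n) = T(1) + ΔT·(n − 1), assumed valid for n ≥ 1."""
    if pushes < 1:
        raise ValueError("at least one push is assumed")
    return SINGLE_PUSH_TOTAL_S + PER_EXTRA_PUSH_S * (pushes - 1)


# Itemized steps versus the single-push total; the remainder is not itemized in the text
itemized_s = FOCUS_S + ECCENTRICITY_CALC_S + ADJUST_S
unitemized_s = SINGLE_PUSH_TOTAL_S - itemized_s

print(f"itemized steps: {itemized_s:.1f} s, not itemized: {unitemized_s:.1f} s")
print(f"estimate at a mean of 2.1 pushes: {estimated_mounting_time(2.1):.1f} s")
```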

Experimental results

We exported from the controller all real-time baseline detection results recorded during the entire mounting process. Figure 12 shows the baseline center point column coordinate c during this process, in which the encoder grating was pushed twice. The eccentricity corresponds to the peak value of the fitted curve in both the calculation and verification stages. In the adjustment step, because the position of the encoder grating edge is random, the correction must be completed by feedback control based on the baseline position. The duration of the adjustment step therefore varies from run to run; it can be shortened by increasing the speed of the push rod. However, if the push rod moves too fast, it overshoots when it touches the edge of the encoder grating, and when the eccentricity is small, this overshoot can make the push distance greater than the eccentricity. In addition, based on the characteristic initial positions of encoder gratings in a batch, the push rod can first be moved quickly to a position near the encoder grating before feedback control begins.
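The adjustment strategy described above (a fast approach to a point near the grating, followed by small feedback-controlled pushes) can be illustrated with a toy simulation. The Python sketch below is an assumption-laden illustration, not the authors' controller: `SimulatedGrating`, `two_phase_push`, the one-dimensional contact model, and all margins and step sizes are hypothetical, and the grating displacement stands in for what the detector would infer from the baseline position. Provided the fast approach stops short of the actual edge, choosing a fine step no larger than the tolerance keeps the final displacement at or below the requested correction.

```python
from dataclasses import dataclass


@dataclass
class SimulatedGrating:
    """Hypothetical 1-D model: the rod meets the grating edge at `edge_um`;
    beyond that point the grating moves together with the rod."""
    edge_um: float

    def displacement(self, rod_um: float) -> float:
        # Grating displacement, standing in for the baseline-based measurement
        return max(0.0, rod_um - self.edge_um)


def two_phase_push(grating, expected_edge_um, correction_um,
                   approach_margin_um=50.0, fine_step_um=0.5, tol_um=0.5):
    """Return (rod position, achieved displacement, number of fine steps)."""
    if fine_step_um <= 0 or tol_um <= 0:
        raise ValueError("step and tolerance must be positive")
    # Phase 1: fast open-loop move to a point short of the expected edge
    # (the expected edge would come from the batch's typical initial position)
    rod = expected_edge_um - approach_margin_um
    steps = 0
    # Phase 2: small closed-loop steps until the remaining correction is within tolerance
    while correction_um - grating.displacement(rod) > tol_um:
        rod += fine_step_um
        steps += 1
    return rod, grating.displacement(rod), steps


# Nominal case: the actual edge is 10 µm closer than expected, still within the margin
grating = SimulatedGrating(edge_um=1000.0)
rod, moved, steps = two_phase_push(grating, expected_edge_um=1010.0, correction_um=12.0)
print(f"rod at {rod} µm, grating moved {moved} µm after {steps} fine steps")

# Failure mode: the fast approach already overruns the edge, so the push overshoots
bad = SimulatedGrating(edge_um=900.0)
_, overshoot, _ = two_phase_push(bad, expected_edge_um=1000.0, correction_um=12.0)
print(f"grating moved {overshoot} µm for a requested 12 µm correction")
```

The second call reproduces the overshoot discussed above: once the open-loop approach passes the real edge, the feedback stage can no longer undo the excess displacement.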

We used an OLYMPUS BXFM optical microscope to observe the mounted encoder gratings and check the consistency of the eccentricity detection results. The test setup for eccentricity measurement is shown in Fig. 13. We randomly selected a mounted motor, fixed it under the optical microscope, and aligned the lens with the alignment ring on the encoder grating. We then slowly rotated the motor shaft by hand, identified the eccentricity position, and calculated the eccentricity as the difference between the alignment ring coordinates at the two extreme positions. Three different samples were tested. As detailed in Table 2, the eccentricities measured with the microscope agreed with those of the proposed detector to within \(1\,\upmu \hbox {m}\). This error is related to the resolution of the detector (\(0.75\,\upmu \hbox {m}\)/px) and the eccentricity calculation error (\(0.25\,\upmu \hbox {m}\)), which sum to \(1\,\upmu \hbox {m}\). The roundness of the alignment ring also contributes error, which we regard as systematic. This circularity error is also present in the microscope observations and differs from one encoder grating to another, so it cannot be eliminated through calibration.

The experiment involved 50 encoder gratings: 25 were mounted manually and 25 using the proposed encoder grating mounting system. Table 3 lists the encoder grating eccentricity after mounting, together with the eccentricities reported in previous studies. The proposed system achieved an average mounting time of 25.1 s and an average eccentricity of \(3.8\,\upmu \hbox {m}\) after mounting. On average, the system pushed each encoder grating 2.1 times; the maximum was five pushes, which occurred twice among the 25 encoder gratings. Because worker proficiency varied, the manual mounting time ranged from 30 to 40 s, and the most skilled workers achieved an average eccentricity of \(13.7\,\upmu \hbox {m}\). Compared with manual mounting, the proposed system reduced the eccentricity by 72.3%. The encoder grating mounting system proposed by Wang et al.18 achieved an eccentricity error of \(6.0\,\upmu \hbox {m}\) after mounting, with an average mounting time of 32.4 s. Our system thus provides automatic encoder grating mounting with both high efficiency and high precision.
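The 72.3% figure follows directly from the two average eccentricities. Applying the same relative-reduction arithmetic to the other quoted averages, which the text does not express as percentages, gives roughly 16% to 37% shorter mounting time than manual mounting, and about 36.7% lower eccentricity and 22.5% shorter mounting time than Wang et al.18. The Python check below uses only numbers quoted in the paragraph; the names are illustrative.

```python
def percent_reduction(baseline: float, ours: float) -> float:
    """Percentage by which `ours` is lower than `baseline`."""
    return 100.0 * (baseline - ours) / baseline


# Averages quoted in the text
SYSTEM_ECC_UM, SYSTEM_TIME_S = 3.8, 25.1
MANUAL_ECC_UM = 13.7                   # most skilled manual workers
MANUAL_TIME_RANGE_S = (30.0, 40.0)     # manual mounting time range
WANG_ECC_UM, WANG_TIME_S = 6.0, 32.4   # Wang et al. (ref. 18)

ecc_vs_manual = percent_reduction(MANUAL_ECC_UM, SYSTEM_ECC_UM)
time_vs_manual = tuple(percent_reduction(t, SYSTEM_TIME_S) for t in MANUAL_TIME_RANGE_S)
ecc_vs_wang = percent_reduction(WANG_ECC_UM, SYSTEM_ECC_UM)
time_vs_wang = percent_reduction(WANG_TIME_S, SYSTEM_TIME_S)

print(f"eccentricity vs manual: {ecc_vs_manual:.1f}% lower")
print(f"time vs manual: {time_vs_manual[0]:.1f}% to {time_vs_manual[1]:.1f}% shorter")
print(f"eccentricity vs Wang et al.: {ecc_vs_wang:.1f}% lower")
print(f"time vs Wang et al.: {time_vs_wang:.1f}% shorter")
```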

To put the mounting eccentricity in context, we also compared it with the encoder grating eccentricities reported in encoder eccentricity compensation studies, such as Hou et al.10 and Gurauskis et al.13. By substantially reducing encoder grating eccentricity at the mounting stage, our system lessens the need for complex eccentricity compensation algorithms and directly improves the angle measurement accuracy of the rotary motor.

Conclusions

We proposed an encoder grating mounting system for efficient, high-precision automatic encoder grating installation. The system mainly comprises a near-sensor computing eccentricity detector and a push rod. The detector rapidly captures images of the rotating encoder grating and calculates the eccentricity in real time near the sensor, which minimizes image data transmission delays and improves both the speed and accuracy of eccentricity detection. We also proposed an eccentricity calculation method that combines an edge processor within the detector with an edge-vision baseline detection algorithm. This method enables real-time determination of the eccentricity and eccentricity angle of the encoder grating, based on which the push rod automatically adjusts the encoder grating position. Experimental results show that the detector obtains the eccentricity and eccentricity angle of an encoder grating within 2.8 s, and that the system completes encoder grating mounting in an average of 25.1 s, with an average resulting eccentricity of \(3.8\,\upmu \hbox {m}\). These results confirm that the proposed system achieves rapid and accurate encoder grating installation.

In future work, we will consider additional types of alignment rings for encoder gratings so that more types of encoders can be mounted. The proposed grating mounting system is programmable and can perform eccentricity detection for different types of gratings by changing the real-time detection algorithm. We aim to mount encoder gratings with other alignment ring types, such as rings combined with the code track, transparent rings, or half-rings.

Data availability

The datasets generated and analyzed during the current study are available from the corresponding author on reasonable request.

References

Ellin, A. & Dolsak, G. The design and application of rotary encoders. Sens. Rev. 28, 150–158. https://doi.org/10.1108/02602280810856723 (2008).

Hsieh, T.-H., Watanabe, T. & Hsu, P.-E. Calibration of rotary encoders using a shift-angle method. Appl. Sci.-Basel 12, 5008. https://doi.org/10.3390/app12105008 (2022).

Zhang, W. Y. & Zhu, H. R. Analysis of impact mechanism of axial errors on circular grating corner sensor. In Seventh Asia Pacific Conference on Optics Manufacture and 2021 International Forum of Young Scientists on Advanced Optical Manufacturing (APCOM and YSAOM 2021), 1051–1056. https://doi.org/10.1117/12.2617193 (2022).

Barakauskas, A., Barauskas, R., Kasparaitis, A., Kausinis, S. & Jakštas, A. Error modelling of optical encoders based on moiré effect. J. Vibroeng. 19, 38–48. https://doi.org/10.21595/jve.2016.17132 (2017).

Chen, G. Improving the angle measurement accuracy of circular grating. Rev. Sci. Instrum. 91, 065108. https://doi.org/10.1063/5.0001660 (2020).

Zhao, G. B. et al. On-line angle self-correction strategy based on a cobweb-structured grating scale. Meas. Sci. Technol. 32, 055001. https://doi.org/10.1088/1361-6501/abdd72 (2021).

Deng, F., Chen, J., Wang, Y. Y. & Gong, K. Measurement and calibration method for an optical encoder based on adaptive differential evolution-Fourier neural networks. Meas. Sci. Technol. 24, 055007. https://doi.org/10.1088/0957-0233/24/5/055007 (2013).

Jia, H. K., Yu, L. D., Zhao, H. N. & Jiang, Y. Z. A new method of angle measurement error analysis of rotary encoders. Appl. Sci.-Basel 9, 3415. https://doi.org/10.3390/app9163415 (2019).

Behrani, G., Mony, A. & Gireesh Sharma, N. Modeling and validation of eccentricity effects in fine angle signals of high precision angular sensors. Sensor Actuat. A-Phys. 345, 113774. https://doi.org/10.1016/j.sna.2022.113774 (2022).

Hou, H., Cao, G. H., Ding, H. C., Li, K. & Zhao, C. F. Detection and compensation of installation eccentricity of photoelectric encoder based on double highpass filter. Opt. Eng. 61, 064107. https://doi.org/10.1117/1.OE.61.6.064107 (2022).

Zhou, N., Cai, N., Zhao, J., Li, W. J. & Wang, H. Error compensation for optical encoder based on variational mode decomposition with a coarse-to-fine selection scheme. IEEE T Instrum. Meas. 72, 1–10. https://doi.org/10.1109/TIM.2023.3235460 (2023).

Huang, Y. et al. An eccentricity error separation method for rotary table based on phase feature of moiré signal of single reading head. Photonics-Basel 10, 1267. https://doi.org/10.3390/photonics10111267 (2023).

Gurauskis, D., Kilikevičius, A. & Mažeika, D. Development research of miniature high precision modular rotary encoder kit based on dual optical sensors. In 2023 IEEE Open Conference of Electrical, Electronic and Information Sciences (eStream), 1–5. https://doi.org/10.1109/eStream59056.2023.10134846 (2023).

Yu, H., Wan, Q. H., Liang, L. H. & Zhao, C. H. Analysis and elimination of grating disk inclination error in photoelectric displacement measurement. IEEE T Ind. Electron. 71, 1–8. https://doi.org/10.1109/TIE.2023.3294641 (2023).

Wang, X., Liang, Y., Zhang, W., Yang, X. & Hao, D. N. An optical encoder chip with area compensation. Electronics-Switz 11, 3997. https://doi.org/10.3390/electronics11233997 (2022).

Li, X. et al. A novel optical rotary encoder with eccentricity self-detection ability. Rev. Sci. Instrum. 88, 115005. https://doi.org/10.1063/1.4991058 (2017).

Dalboni, M. & Soldati, A. Absolute two-tracked optical rotary encoders based on Vernier method. IEEE T Instrum. Meas. 72, 1–12. https://doi.org/10.1109/TIM.2022.3225052 (2023).

Wang, Y., Pei, Z., Liu, X., Fu, P. & Wang, F. The design of control system of grating eccentricity adjustment based on motion control card. In 2015 International Conference on Computational Intelligence and Communication Networks (CICN), 1575–1578. https://doi.org/10.1109/CICN.2015.299 (2015).

Albrecht, C., Klöck, J., Martens, O. & Schumacher, W. Online estimation and correction of systematic encoder line errors. Machines 5, 1. https://doi.org/10.3390/machines5010001 (2017).

Fu, B. Y. et al. Shape from focus using gradient of focus measure curve. Opt. Laser Eng. 160, 107320. https://doi.org/10.1016/j.optlaseng.2022.107320 (2023).

Gonzalez, R. C., Woods, R. E. & Eddins, S. L. Digital Image Processing Using MATLAB 2nd edn, 549–557 (Prentice Hall, Hoboken, 2003).

Grompone von Gioi, R., Jakubowicz, J., Morel, J.-M. & Randall, G. LSD: A line segment detector. Image Process. On Line 2, 35–55. https://doi.org/10.5201/ipol.2012.gjmr-lsd (2012).

Zhou, X., Ito, Y. & Nakano, K. An efficient implementation of the gradient-based Hough transform using DSP slices and block RAMs on the FPGA. In 2014 IEEE International Parallel & Distributed Processing Symposium Workshops, 762–770. https://doi.org/10.1109/IPDPSW.2014.88 (2014).

Lam, D. K., Du, C. V. & Pham, H. L. QuantLaneNet: A 640-FPS and 34-GOPS/W FPGA-based CNN accelerator for lane detection. Sensors-Basel 23, 6661. https://doi.org/10.3390/s23156661 (2023).

Li, S., Zhang, Z., Du, F. & He, Y. A new automatic real-time crop row recognition based on SoC-FPGA. IEEE Access 8, 37440–37452. https://doi.org/10.1109/ACCESS.2020.2973756 (2020).

Zhang, C., Chen, S., Zhao, L., Li, X. & Ma, X. FPGA-based linear detection algorithm of an underground inspection robot. Algorithms 14, 284. https://doi.org/10.3390/a14100284 (2021).

Funding

This work is supported by the National Science and Technology Major Project (2021ZD0109800) and the National Natural Science Foundation of China (62334008 and 62274154).

Author information

Contributions

J.Y.: Data curation, Formal analysis, Investigation, Methodology, Software, Validation and Writing - original draft. R.D.: Conceptualization, Funding acquisition, Methodology and Writing - review & editing. W.Z.: Resources, Validation. X.W.: Writing - review & editing. J.X.: Writing - review & editing. J.L.: Writing - review & editing. N.W.: Visualization, Writing - review & editing. L.L.: Supervision, Writing - review & editing.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Yu, J., Dou, R., Zhang, W. et al. Precision encoder grating mounting: a near-sensor computing approach. Sci Rep 14, 22249 (2024). https://doi.org/10.1038/s41598-024-72452-6
