Abstract

Insider threats pose a significant challenge in cybersecurity, demanding advanced detection methods for effective risk mitigation. This paper presents a comparative evaluation of data imbalance addressing techniques for CNN-based insider threat detection. Specifically, we integrate Convolutional Neural Networks (CNN) with three popular data imbalance addressing techniques: Synthetic Minority Over-sampling Technique (SMOTE), Borderline-SMOTE, and Adaptive Synthetic Sampling (ADASYN). The objective is to improve the accuracy and robustness of insider threat detection on the imbalanced datasets common in cybersecurity. Our study addresses the lack of consensus in the literature regarding which data imbalance addressing technique performs best in this field. We analyze a human behavior-based dataset (i.e., CERT) that records users’ Information Technology (IT) activities and contains a substantial number of samples, which allows a clear conclusion on the effectiveness of these balancing techniques when coupled with CNN. Experimental results demonstrate that ADASYN, in conjunction with CNN, achieves an area under the ROC curve (AUC) of 96%, surpassing SMOTE and Borderline-SMOTE in improving detection accuracy on imbalanced data. We compare the results of these three hybrid models (CNN + imbalance addressing technique) with selected state-of-the-art studies in terms of ROC, recall, and accuracy. Our findings contribute to the advancement of insider threat detection methodologies.


Introduction

Insider threat detection is a critical area of cybersecurity that focuses on identifying and mitigating threats from within an organization. An insider threat refers to the potential danger posed by individuals within an organization, including current employees, former employees, vendors, or business partners, who possess privileged knowledge about the firm’s security protocols, data, and computer systems. These individuals might misuse this knowledge to exploit the organization’s security vulnerabilities, leading to data breaches or other malicious activities. A recent rise in insider attacks has prompted specialists to give them greater attention. A 2023 survey by Gurucul1 found that 74% of businesses report an increase in the frequency of insider attacks. The survey also revealed how prevalent insider threats are: over half of businesses were affected in the past year, and 8% experienced a significant number (20 or more) of insider incidents. Despite these statistics, many businesses continue to prioritize perimeter security while neglecting the insider threat, which accounts for an estimated 75% of all attacks2.

Insider risks can be classified into various categories, including malicious insiders, negligent insiders, and infiltrators. Malicious insiders deliberately inflict harm on the organization, whether for personal benefit, retribution, or other underlying motives (e.g., employees stealing sensitive data to sell to competitors or disgruntled employees sabotaging IT systems). Negligent insiders are individuals who don’t have malicious intent but inadvertently cause harm due to carelessness (e.g., employees who fall for phishing scams or those who mishandle sensitive data). Infiltrators are external attackers who gain access to the organization’s network and act as insiders. They might compromise an employee’s account or pose as a contractor to gain access.

Insider threat detection is crucial for individuals and organizations for several reasons. Insiders inherently have more access and trust than external actors, which can allow them to bypass traditional security measures. With the growth of digital information and the ease of data transfer, insider incidents have become more frequent. Insider threats can be devastating, leading to significant financial losses, damage to an organization’s reputation, and legal repercussions. Detecting insider threats is also more challenging than detecting external threats because insiders possess authorized access to an organization’s resources. Several techniques help protect against insider threats:

1. User Behavior Analytics (UBA) systems monitor users’ activities in real time and compare them against historical baselines to identify anomalies. If a user suddenly downloads large amounts of data or accesses sensitive information they do not usually access, it could be a sign of an insider threat.

2. Data Loss Prevention (DLP) tools monitor and regulate the movement of data. DLP technology can restrict unauthorized data transfers, helping ensure that confidential information remains within the firm and is not disclosed to external entities.

3. Access controls restrict employees’ access to only the information necessary for their job responsibilities.

4. Training and awareness programs that educate employees about the risks and signs of insider threats can turn them into an active defense layer: trained employees can report suspicious activities, reducing the risk of insider incidents.

5. Endpoint monitoring tools track activity on employee devices to ensure that no malicious actions are taken and that company policies are followed.

6. Forensic analysis tools help trace actions, recover lost data, and provide evidence in the event of a suspected insider incident.
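As a rough illustration of the baseline comparison performed by UBA systems (item 1 above), the following Python sketch flags a user’s daily metrics whose z-score against that user’s own history exceeds a threshold. The function name `baseline_anomalies`, the two metrics, and the threshold of 3 are illustrative assumptions; production UBA products use considerably richer models than a per-metric z-score.

```python
import numpy as np

def baseline_anomalies(history, today, z_threshold=3.0):
    """Flag metrics whose value today deviates strongly from a user's own history.

    history: array of shape (days, metrics) with the user's past daily values.
    today:   array of shape (metrics,) with the current day's values.
    Returns a boolean mask marking metrics whose z-score exceeds z_threshold.
    """
    history = np.asarray(history, dtype=float)
    today = np.asarray(today, dtype=float)
    mean = history.mean(axis=0)
    std = history.std(axis=0)
    std[std == 0] = 1.0  # constant metrics: fall back to a unit scale to avoid division by zero
    z = (today - mean) / std
    return z > z_threshold

# Hypothetical daily metrics for one user: [MB downloaded, sensitive files opened]
history = [[10, 5], [12, 6], [11, 4], [9, 5]]
flags = baseline_anomalies(history, today=[200, 5])  # unusually large download, usual file access
```

Only the download volume is flagged here; a per-user baseline of this kind is what lets the same absolute value be normal for one user and suspicious for another.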

Deep Learning (DL), a subset of machine learning, has shown great promise in various domains, including cybersecurity. The defining feature of DL is its use of neural networks, a family of representation-based AI approaches inspired by brain function. DL algorithms learn feature representations automatically from data. Deep Neural Networks (DNNs) consist of numerous (deep) layers of nodes3. For insider threat detection, DL techniques can be particularly effective because of their ability to learn complex patterns from large datasets.

Various DL strategies can be used to mitigate the risk of insider threats. Recurrent Neural Networks (RNNs)4 are designed to recognize patterns in data sequences, making them suitable for time-series data such as user activity logs. RNNs can analyze sequences of user actions over time, identifying anomalies or patterns consistent with malicious activities. Long Short-Term Memory (LSTM) networks5 are a specific type of RNN that can learn long-term dependencies between data points. LSTMs are well suited to classifying, processing, and forecasting time series with time lags of unknown length, so they can analyze longer sequences of user activities and capture patterns that traditional methods might miss. Autoencoders6 are unsupervised neural networks that learn to encode data in a form from which the original data can be reconstructed. They can be used for anomaly detection: an autoencoder is trained on “normal” user behavior, and anomalies (potential insider threats) are detected as inputs that the network struggles to encode and decode accurately, that is, inputs with a high reconstruction error.
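The reconstruction-error idea can be illustrated without training a deep network. A linear autoencoder trained with squared error learns the same subspace as principal component analysis (PCA), so the sketch below computes its encoder and decoder in closed form with an SVD. This is a deliberate simplification, not one of the autoencoders cited above; the toy data, the function names, and the 99th-percentile threshold are hypothetical.

```python
import numpy as np

def fit_linear_autoencoder(X_normal, n_components):
    """Closed-form linear autoencoder: encoder/decoder are the top principal directions."""
    mean = X_normal.mean(axis=0)
    # Rows of Vt are the principal directions of the centred "normal" data
    _, _, Vt = np.linalg.svd(X_normal - mean, full_matrices=False)
    W = Vt[:n_components].T  # shape (n_features, n_components)
    return mean, W

def reconstruction_error(X, mean, W):
    """Squared error between each row and its encode-decode reconstruction."""
    Z = (X - mean) @ W        # encode into the low-dimensional code
    X_hat = Z @ W.T + mean    # decode back to the input space
    return np.sum((X - X_hat) ** 2, axis=1)

rng = np.random.default_rng(0)
t = rng.normal(size=(500, 1))
# Hypothetical "normal" behaviour: two features that move together (second is about 2x the first)
X_normal = np.hstack([t, 2 * t]) + 0.01 * rng.normal(size=(500, 2))
mean, W = fit_linear_autoencoder(X_normal, n_components=1)

# Threshold: 99th percentile of the reconstruction error on normal data
threshold = np.percentile(reconstruction_error(X_normal, mean, W), 99)
X_new = np.array([[1.0, 2.0],    # follows the usual relationship
                  [1.0, -2.0]])  # breaks it
flags = reconstruction_error(X_new, mean, W) > threshold
```

The second sample is flagged because the model, having seen only correlated behavior, cannot reconstruct it; a nonlinear autoencoder applies the same test with a learned, more flexible encoder.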

Variational Autoencoders (VAEs) are autoencoders with probabilistic encoders and decoders, which allows them to generate new data similar to the input data. Like standard autoencoders, VAEs can be trained on normal behavior and used to detect anomalies. Generative Adversarial Networks (GANs)7 comprise two networks, a generator and a discriminator, that are trained simultaneously. The generator’s objective is to generate data, whereas the discriminator’s objective is to distinguish genuine from generated data. GANs can generate synthetic data for the minority class (malicious activities) to reduce the class imbalance in the dataset, and the augmented dataset can then be used to train more balanced and accurate models. DL-based User Behavior Analytics8 combines DL models with user behavior analytics to create a system that learns typical user behavior patterns and flags deviations as potential threats. Such systems can continuously adapt to evolving user behaviors, helping them remain effective over time. Embedding layers in DL models9 can convert categorical data, such as user IDs or application names, into dense vectors (embeddings) that capture the relationships between items. These embeddings provide richer representations of user activities, improving the models’ ability to detect insider threats.

Insider threat analysis remains underexplored because of insufficient empirical data and highly unequal class distributions. This study proposes an insider threat detection model that integrates an imbalance addressing technique with a CNN. Although CNNs are mainly applied in image processing, they can also analyze any data with a grid-like structure, such as time series. Transforming logs or sequences of user activity into a format suitable for CNNs (e.g., a 2D grid) allows the network to detect local patterns or anomalies in the data.
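One possible way to arrange a user’s daily activity as a 2D grid is sketched below: each row is an hour of the day, each column an action type, and each cell counts events. The layout, the `ACTIONS` vocabulary, and the helper name `user_day_grid` are hypothetical; this paper does not prescribe this particular representation.

```python
import numpy as np

# Hypothetical action vocabulary; one grid column per action type
ACTIONS = ["Logon", "Logoff", "Connect", "Disconnect", "http"]

def user_day_grid(events, actions=ACTIONS):
    """Turn one user's events for one day into a (24 x n_actions) count grid.

    events: iterable of (hour_of_day, action) pairs.
    """
    index = {a: j for j, a in enumerate(actions)}
    grid = np.zeros((24, len(actions)), dtype=np.float32)
    for hour, action in events:
        grid[hour, index[action]] += 1  # count events per (hour, action) cell
    return grid

events = [(9, "Logon"), (9, "http"), (9, "http"), (23, "Connect"), (23, "Disconnect")]
grid = user_day_grid(events)
# Add a channel axis so the grid matches the (height, width, channels) input of a 2D CNN
cnn_input = grid[..., np.newaxis]
```

With this layout, a convolution kernel spanning neighboring rows can pick up local patterns such as late-night device connections clustered in consecutive hours.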

In this paper, we combine CNNs with imbalance addressing techniques and assess the resulting improvement in insider threat detection performance. The paper’s key contribution is a comprehensive comparative evaluation of three popular data imbalance addressing techniques (SMOTE, Borderline-SMOTE, and ADASYN) integrated with Convolutional Neural Networks (CNN) for insider threat detection. Moreover, we compare the results of these three hybrid models with those of selected studies from the literature. Training and validation use a human behavior-based dataset with a substantial number of augmented samples. The study thus provides clear insights into the effectiveness of imbalance addressing techniques, advancing the application of DL to insider threat detection in cybersecurity.

The rest of this paper is organized as follows. Section “Related work” reviews and analyzes DL-based insider threat detection methods from related works. Section “Comparative evaluation methodology” presents the comparative evaluation methodology. Section “Experimental results” presents and discusses the outcomes of the DL models employed to address the insider threat problem. Section “Conclusion” concludes the study and presents future work.

Related work

Insider threats have been a crucial point of discussion because of their reputation as one of the most challenging types of cyberattack to counter. Consequently, anomaly-based approaches dominate the field of insider threat detection. A common technique for identifying anomalies is to use neural networks for classification10, and there has been a recent uptick in the use of DL techniques to assess insider threats.

Because user behavior data are high-dimensional, complex, heterogeneous, and sparse, traditional shallow ML models cannot use them effectively for insider threat detection11. DL, by contrast, is a representation-learning method that can learn numerous layers of hidden representations from complicated data thanks to its deep structure11, which makes it useful for monitoring employee actions inside a company to identify potential threats from within. Recent research has proposed several strategies for identifying insider threats, including Deep Feedforward Neural Networks (FNNs), CNNs, and Recurrent Neural Networks (RNNs). Some cutting-edge DL techniques for detecting insider threats are discussed here. Tian et al.12 proposed an attention LSTM that predicts typical user behavior and flags deviations as harmful activity. A multi-head attention LSTM (multi-head attention has shown strong performance in natural language processing) performed parallel feature extraction to distinguish malicious from benign data. The Dempster-Shafer theory was then applied to the input data to establish whether or not it represented a threat.

Several researchers have used LSTM as an unsupervised anomaly detection approach13,14,15. LSTM attempts to fix a known weakness of standard recurrent neural networks (RNNs), namely vanishing temporal gradients over lengthy data sequences. The authors of16 also pointed out that RNNs are not very efficient and that the approach is not very stable, mainly when applied to long runs of skewed data. Because most practical anomaly detection applications involve large training datasets with uneven class distributions, RNNs are a less desirable option. Meng et al.17 recommended analyzing insider risks using LSTM-RNNs and Kernel PCA. The model was constructed and evaluated on the CMU CERT Insider Threat dataset v6.2. Rather than being compared with other deep learning models, the method was evaluated against more conventional ML techniques such as support vector machines and isolation forests. It achieved a TPR of 92.46%, an FPR of 6.8%, a precision of 95.12%, and an accuracy of 93.85%. This level of precision was made possible by meticulous pre-processing of the original log data: to train and evaluate the model, features were extracted from standardized, aggregated events based on the characteristics and actions of the persons involved.

Rastogi et al.18 present an alternative method, DANTE, which uses system logs to build a sequence of events for each user over a particular period. Their model uses LSTM to analyze these sequences and distinguish typical from abnormal behaviors. Threats in the CERT insider threat dataset are further categorized into one of five groups. The model’s accuracy is reasonable, but its false positive (FP) rate is high. CNNs have been used in several studies and proposals in recent years19,20,21,22. A novel method for identifying insider threats, offering a viable solution, is presented in23, where insiders are categorized through image classification. The authors applied under-sampling before extracting 20 features, from which a grayscale image was created for each user for each day. The grayscale image was then passed to a deep, pre-trained convolutional neural network. The article focused primarily on transfer learning with the pre-trained MobileNetV2, VGG19, and ResNet50 models, and the findings were encouraging. In24, a CNN trained from scratch was used to distinguish between malicious and benign color images. Although each image represented activity over an extensive time range of weeks or months, the findings were encouraging.

User behavior profiling, as described in25, is an alternative method for detecting insider threats; it involves observing and investigating sequences of user behavior actions. The researchers proposed a hybrid model composed of a CNN and a multi-state long short-term memory (MSLSTM) network to single out anomalies in the observed behavior patterns. First, the LSTM is used to sample temporal action sequences from user behavior, extract temporal properties, and encode each user behavior action sequence. LSTMs can process inputs and outputs of arbitrary length, and here they transform the features into a fixed-size, low-dimensional real-valued matrix. These fixed-size feature matrices are then fed to the CNN, which classifies each user action sequence as typical or anomalous to identify suspicious insider activity. The multi-state LSTM achieved an accuracy of 0.9042 on the training data and 0.9047 on the testing data. According to the experimental findings, multi-state LSTMs outperform single-state ones.

A user behavior-based detection technique employing LSTM and CNN models was proposed in26. An LSTM trained on user activities extracts abstracted temporal characteristics, which are transformed into fixed-size matrices and fed into a CNN classifier. On a freely available dataset, this approach achieved an AUC of 0.9449, suggesting that CNNs can effectively classify user action sequences. Lambion et al.26 propose an ensemble classifier that further enhances accuracy by combining the output of a CNN model, trained with the query name as input, with a Random Forest (RF) base classifier. The RF classifier used a 12-dimensional feature space that included the average CNN probability as one of its features. On the validation set, the model attained an Area Under the ROC Curve (AUC) of 99.84%, a True Positive Rate (TPR) of 99.49%, and a False Positive Rate (FPR) of 1%. This high AUC and TPR at 1% FPR in detecting tunneled DNS may be attributed to the ensemble being built from several different base classifiers.

In addition, Yuan et al.11 demonstrated that fusing CNN and LSTM yields a hybrid, high-performance DNN-based anomaly detection model. They developed a feature design method for identifying insider risks. An LSTM is first used to learn the language of user behavior from network operations by extracting and abstracting users’ temporal features. CNN algorithms are then applied to fixed-size matrices constructed from the LSTM outputs to spot outliers and potential insider threats. Although insider threats and anomalies were successfully identified, accuracy and false positive rates were not reported. Moreover, the delays incurred when converting features to fixed-size matrices hinder the method’s overall performance. Table 1 presents a brief overview of the most relevant studies in the literature. These approaches used different methodologies and different versions of the dataset, and they achieved diverse outcomes in insider threat detection.

Despite extensive study of insider threat analysis over the years, the development of novel methodologies has been constrained because the severe class imbalance of insider threat datasets has not been adequately addressed. Existing research therefore lacks an investigation of practical approaches for overcoming this imbalance in deep learning-based insider threat detection. This work aims to fill this gap by evaluating suitable combinations of a deep learning model and an imbalance addressing technique.

Comparative evaluation methodology

Traditionally, data theft is detected by monitoring user activity against a set of rules defined by the organization’s security officers27. However, if no rule covers a behavior, the user will not be reported. Another method is active threat hunting by security officers. Both methods require human expertise to detect attacks. A newer approach uses user and entity behavior analytics tools, which detect deviations from a user’s normal behavior with machine learning. However, predictive ML models trained on imbalanced datasets have been reported to produce low accuracy and high bias28. The lack of real-world data and the issue of data imbalance mean that insider threat analysis remains an understudied research area. We therefore evaluate three hybrid deep learning models, each coupling a CNN with an imbalance addressing technique, to tackle the problem. An overview of such a hybrid model is presented in Fig. 1.

Users’ IT activities profiling

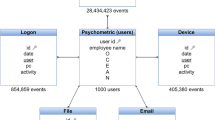

The efficacy of the suggested approach is assessed via experimentation using the widely used CERT4.2 insider threat dataset29. The CERT team at Carnegie Mellon University’s Software Engineering Institute generated the dataset to facilitate research and development in insider threat identification. More details of the dataset generation are presented in28.

The dataset comprises 32,770,227 activity records produced by 1000 users. Among them, 70 users engage in malicious activities that were intentionally included in the dataset by ___domain experts. The dataset encompasses several threat scenarios; we use the subset that specifically pertains to the data leakage insider threat. The data leakage attack scenario is outlined as “A user who did not previously utilize removable drives. Then, he starts logging into an organization system after normal working hours, uses removable devices, uploads data to wikileaks.org, and leaves the organization shortly thereafter”30. The dataset originally comprises several log files (logon/logoff, file operations, HTTP, email, and removable devices), so we combined the files and selected the observations that represent the data leakage scenario specified above. A brief description of the utilized observations is presented in Table 2.
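A minimal pandas sketch of the merging step follows. The frames are tiny in-memory stand-ins that assume the `date`, `user`, `pc`, and `activity` columns of the CERT r4.2 logon and device logs and a `url` column in the HTTP log; the month/day/year date format and the choice to label every HTTP request as `http` are assumptions, not the exact procedure used in this study.

```python
import pandas as pd

# Hypothetical rows imitating the CERT r4.2 logon, device, and http log files
logon = pd.DataFrame({"date": ["01/04/2010 07:12:00", "01/04/2010 22:45:00"],
                      "user": ["AAA0001", "BBB0002"], "pc": ["PC-1", "PC-2"],
                      "activity": ["Logon", "Logon"]})
device = pd.DataFrame({"date": ["01/04/2010 22:50:00"], "user": ["BBB0002"],
                       "pc": ["PC-2"], "activity": ["Connect"]})
http = pd.DataFrame({"date": ["01/04/2010 23:05:00"], "user": ["BBB0002"],
                     "pc": ["PC-2"], "url": ["http://wikileaks.org/upload"]})

# Give every source the same 'activity' column, keep the shared columns, and stack them
http = http.assign(activity="http")
columns = ["date", "user", "pc", "activity"]
events = pd.concat([f[columns] for f in (logon, device, http)], ignore_index=True)
events = events.rename(columns={"activity": "action"})

# Parse timestamps (month/day/year assumed) and order each user's events chronologically
events["date"] = pd.to_datetime(events["date"], format="%m/%d/%Y %H:%M:%S")
events = events.sort_values(["user", "date"]).reset_index(drop=True)
```

Sorting by user and time turns the merged table into per-user event sequences, which makes after-hours logons followed by device connections and HTTP uploads easy to spot.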

When analyzing the CERT dataset for insider threat detection, we focus on patterns that deviate from the norm. For instance, unusual login times, frequent switching between different PCs, or a mismatch between a user’s behavior and their typical profile could indicate a threat. Machine learning models, especially those for anomaly detection, can be trained on this data: they learn users’ normal behavior patterns and then identify deviations that might indicate malicious activities. Analyzing the dataset poses several challenges, including data quality, bias, scalability, and privacy. Ensuring that the data are accurate and well formatted is crucial, as incorrect timestamps or user IDs can lead to faulty analyses. Care must be taken to avoid biases, such as wrongly flagging night-shift workers as suspicious because of their unusual working hours. The large dataset (over 2 million observations) requires efficient data processing and analysis methods. Handling the data responsibly and respecting privacy laws and regulations is vital, especially since it involves user activity. For our purposes as insider threat detection researchers, working with the CERT dataset involves data preprocessing, exploratory data analysis to understand patterns, feature engineering to create meaningful variables, and model development for prediction. The ultimate goal is to provide actionable insights that help strengthen protection against insider threats.

Feature selection

In this section, we present the feature selection process. From the combined dataset, we extracted the features for the machine learning model. Each feature represents a variable that the model will use to make predictions. Choosing the right features is crucial in machine learning. It impacts the model’s ability to learn and make accurate predictions. In this case, features like ‘hour_of_day’, ‘day_of_week’, etc., are chosen based on their potential relevance to predicting malicious activities. The selected features are listed in Table 3.

Converting categorical labels to a binary format (0 or 1) is a common preprocessing step, especially for binary classification tasks. Most machine learning algorithms require numerical input, so this conversion is essential. The feature selection process is a crucial part of preparing data for modeling. It involves understanding the dataset, selecting relevant features, and appropriately encoding labels. The selection of features (e.g., hour_of_day, day_of_week, etc.) suggests a focus on temporal patterns and user behavior, which are key aspects in insider threat detection. The binary encoding of the label aligns with typical practices in classification tasks, where we are looking to create a predictive model that can classify actions as either malicious or non-malicious based on the selected features. In summary, the feature selection approach emphasized understanding the dataset, identifying variables with high predictive potential, and ensuring proper encoding of labels to build an effective ML model for insider threat detection.
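The temporal features and the binary label can be derived from event timestamps roughly as follows. The feature names (hour_of_day, day_of_week, user, pc, action) and the label name follow the text; the raw `insider` column and its `yes`/`no` values are hypothetical placeholders for however the ground-truth labels are stored.

```python
import pandas as pd

# Hypothetical merged events; 'insider' stands in for however ground truth is stored
events = pd.DataFrame({
    "date": pd.to_datetime(["2010-01-04 07:12", "2010-01-09 23:05"]),
    "user": ["AAA0001", "BBB0002"],
    "pc": ["PC-1", "PC-2"],
    "action": ["Logon", "Connect"],
    "insider": ["no", "yes"],
})

features = pd.DataFrame({
    "hour_of_day": events["date"].dt.hour,       # 0..23
    "day_of_week": events["date"].dt.dayofweek,  # Monday = 0 ... Sunday = 6
    "user": events["user"],
    "pc": events["pc"],
    "action": events["action"],
})
# Binary target: 1 = malicious, 0 = benign
malicious = (events["insider"] == "yes").astype(int)
```

The second event falls on a Saturday at 23:00, the kind of after-hours pattern that the data leakage scenario describes.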

Encoding

Label Encoding (LE) is a process used in machine learning to convert categorical text data into a numerical format. This transformation is crucial because most machine learning algorithms work better or exclusively with numerical data. Applying the LE to the features (hour_of_day, day_of_week, user, pc, action, and malicious) in the dataset requires careful consideration of the nature of each feature to determine if the LE process is appropriate. In Table 4, we show the type of feature and encoding process.

While LE is a straightforward and efficient way to convert categorical features into a machine-readable format, it is important to consider the nature of each feature and the type of machine learning model to be used, because LE assigns integer codes whose order carries no meaning for nominal features such as user or pc. In this study, we select LE; as part of the feature engineering phase, this choice can significantly affect the performance of our proposed model.
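A short illustration of label encoding with scikit-learn’s `LabelEncoder` follows, fitting one encoder per categorical column so that each mapping can be inverted or reused on test data. The toy values are hypothetical. Note that scikit-learn documents `LabelEncoder` for target labels and recommends `OrdinalEncoder` for input features; applied column by column, as here, both produce the same alphabetically ordered integer codes.

```python
import pandas as pd
from sklearn.preprocessing import LabelEncoder

df = pd.DataFrame({
    "user": ["BBB0002", "AAA0001", "BBB0002", "CCC0003"],
    "pc": ["PC-2", "PC-1", "PC-3", "PC-1"],
    "action": ["Logon", "Connect", "http", "Logon"],
})

encoders = {}
for column in ["user", "pc", "action"]:
    encoders[column] = LabelEncoder()
    # Classes are sorted, so e.g. AAA0001 -> 0, BBB0002 -> 1, CCC0003 -> 2
    df[column] = encoders[column].fit_transform(df[column])

# The fitted encoders map the integer codes back to the original strings
original_users = encoders["user"].inverse_transform(df["user"])
```

Keeping the fitted encoders matters because `transform` raises an error for categories unseen during fitting, so test data must be encoded with encoders fitted on a vocabulary that covers it.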

Dataset splitting

In insider threat detection, the train-test split is a fundamental step in DL modeling. It allows us to train the DL model on a large portion of the dataset while keeping a separate subset for an unbiased evaluation of model performance. This shows how well the proposed model generalizes to unseen data, which is critical in insider threat detection, where the model must flag malicious activity it has never seen before. We used the train_test_split function from scikit-learn, a popular machine learning library31, to split the insider threat dataset into training and testing sets, as shown in Table 5.

As shown in Table 5, the dataset contains 2,308,813 records. The 80/20 split assigns 20% of the data to the test set and the remaining 80% to the training set, so the test set contains 461,763 records and the training set contains 1,847,050 records. Because the dataset is large, both subsets are substantial, which is beneficial for training robust models; however, it also means that the computational resources and time required for training and testing the models are significant. The 80/20 split is a common heuristic in machine learning that balances the need for sufficient training data against the need for enough test data to validate the model’s performance.
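The split sizes in Table 5 can be reproduced with scikit-learn. The sketch below uses a one-column stand-in feature matrix with the same number of records and 346 positives; passing `stratify=y` and `random_state=42` are our assumptions (the text does not state them), although stratifying is advisable so that the few malicious records appear in both subsets in roughly the original proportion. With `test_size=0.2`, scikit-learn rounds the test size up: ⌈0.2 × 2,308,813⌉ = ⌈461,762.6⌉ = 461,763, leaving 1,847,050 records for training.

```python
import numpy as np
from sklearn.model_selection import train_test_split

n_records = 2_308_813
X = np.arange(n_records).reshape(-1, 1)  # stand-in feature matrix (one column of row ids)
y = np.zeros(n_records, dtype=int)
y[:346] = 1                              # 346 malicious records, as in the dataset

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42, stratify=y)
```

With stratification, roughly 69 of the 346 malicious records land in the test set; without it, their number would vary from run to run.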

Scaling

Scaling is a crucial preprocessing step in many machine learning pipelines, especially when features have varying scales and ranges. Standardization centers each feature by removing its mean and scales it to unit variance, which is particularly useful for algorithms that assume features are centered around zero and have variances of the same order32. We use the StandardScaler from the scikit-learn library to scale the features of our insider threat dataset. The StandardScaler standardizes each feature using the following equation:

\[ z = \frac{x - \mu}{\sigma} \]

where x is the original feature value, µ is the mean of the feature, σ is its standard deviation, and z is the standardized (scaled) value. For example, the “hour_of_day” feature ranges from 0 to 23 (assuming a 24-hour format); its values are centered on their mean and scaled by their standard deviation. If the average “hour_of_day” is 12 and the standard deviation is 6, a value of 6 is transformed to (6 − 12) / 6 = −1.

For neural networks, including the CNN model used in this study, standard scaling helps training converge faster by putting all inputs on a similar scale. It is also crucial to use the same scaling parameters (the mean and standard deviation computed on the training set) for both the training and testing sets, which keeps the transformation consistent and prevents information from the test set leaking into training.
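The following sketch reproduces the worked example above with scikit-learn’s `StandardScaler`: the scaler is fitted on hypothetical training values only, and the same mean and standard deviation are then reused to transform test values. `StandardScaler` uses the population standard deviation (ddof = 0), so the training values 6 and 18 give µ = 12 and σ = 6.

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

# Hypothetical hour_of_day values; the training set has mean 12 and std 6
X_train = np.array([[6.0], [18.0]])
X_test = np.array([[6.0], [12.0], [21.0]])

scaler = StandardScaler()
X_train_scaled = scaler.fit_transform(X_train)  # learns mu and sigma from the training set only
X_test_scaled = scaler.transform(X_test)        # reuses the training mu and sigma
```

The test values map to −1, 0, and 1.5; refitting the scaler on the test set instead would silently change these values and the meaning of each input to the model.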

Imbalance addressing

Synthetic minority over-sampling technique (SMOTE)

Applying SMOTE33 is essential in our insider threat detection scenario because the CERT dataset we use is extremely imbalanced. SMOTE addresses class imbalance, which occurs when the instances of one class significantly outnumber those of the other, biasing the model toward the majority class. The classes in the utilized dataset are highly imbalanced, as shown in Fig. 2.

We apply SMOTE to the highly imbalanced classes of the dataset to prepare the data for the CNN model. In our insider threat detection scenario, malicious activities (346) are far fewer than normal activities (2,308,467), an imbalance that can degrade DL model performance, especially at detecting malicious activities. SMOTE is an oversampling technique that generates synthetic samples for the minority class; it was designed to avoid the overfitting caused by simple random oversampling, which merely duplicates minority samples. The SMOTE algorithm34 typically works as follows: a point is randomly selected from the minority class, its k nearest minority class neighbors are computed, and synthetic points are created along the line segments between the chosen point and its neighbors. This balances the class distribution while reducing the risk of overfitting.
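The interpolation described above can be sketched in a few lines of NumPy. This is a minimal illustration of the SMOTE idea, not the implementation used in this study; the function name `smote`, the brute-force neighbor search, and the toy data are our own assumptions. In practice, library implementations such as the `SMOTE` class of the imbalanced-learn package are more convenient.

```python
import numpy as np

def smote(X_min, n_synthetic, k=5, rng=None):
    """Minimal SMOTE sketch: interpolate between minority samples and minority neighbours."""
    rng = np.random.default_rng(rng)
    X_min = np.asarray(X_min, dtype=float)
    # Brute-force squared distances between all minority samples
    d2 = ((X_min[:, None, :] - X_min[None, :, :]) ** 2).sum(axis=-1)
    np.fill_diagonal(d2, np.inf)                # a sample is not its own neighbour
    k = min(k, len(X_min) - 1)
    neighbours = np.argsort(d2, axis=1)[:, :k]  # k nearest minority neighbours of each sample
    base = rng.integers(0, len(X_min), size=n_synthetic)         # randomly chosen seed samples
    nn = neighbours[base, rng.integers(0, k, size=n_synthetic)]  # one random neighbour per seed
    lam = rng.random((n_synthetic, 1))                           # interpolation factor in [0, 1)
    return X_min[base] + lam * (X_min[nn] - X_min[base])

# Toy minority class: the corners of the unit square; 20 majority samples assumed
X_min = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
n_majority = 20
X_new = smote(X_min, n_synthetic=n_majority - len(X_min), k=2, rng=0)
```

With k = 2, each corner’s neighbors are the two adjacent corners, so every synthetic point lies on an edge of the square; adding the 16 synthetic points to the 4 originals matches the assumed 20 majority samples.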

SMOTE works in the feature space of the minority class and assumes that this feature space is continuous. The choice of k (the number of nearest neighbors) is critical: a small k might lead to overfitting, as the synthetic samples might be too close to the original minority samples, whereas a large k might make the synthetic samples too generalized. Applying SMOTE to the training set yields the balanced dataset presented in Table 6.

The randomness in choosing the nearest neighbors and the interpolation factor ensures diversity in the synthetic samples. However, it also means that results can vary slightly each time SMOTE is applied unless a fixed random seed is used; in our implementation, we left this setting at its default. SMOTE also assumes that the minority class instances are of good quality: if the minority class contains outliers or noise, the synthetic samples might not be representative. Several variations of the algorithm, such as Borderline-SMOTE and SVM-SMOTE, are designed to handle specific scenarios or data types more effectively. Having described how the dataset’s imbalance is addressed and why this matters when preparing data for the CNN model, we describe the implementation of the CNN model in a later section.

Borderline-SMOTE

Borderline-SMOTE is an extension of SMOTE that creates synthetic samples near the decision boundary, where the classes overlap or are close. Focusing on these borderline examples gives the classifier more information about the decision boundary, potentially improving its ability to discriminate between classes, and is particularly beneficial when the classes are not well separated. The algorithm35 identifies the borderline examples of the minority class as those for which at least half, but not all, of their nearest neighbors belong to the majority class, i.e., examples that a k-nearest neighbor classifier would tend to misclassify (examples whose neighbors all belong to the majority class are treated as noise). It then generates new synthetic samples along the lines joining these borderline minority samples to their nearest neighbors in the minority class. This strengthens the decision boundary for the minority class, and Borderline-SMOTE is often more effective than the original SMOTE when classes are imbalanced and not clearly separated.

The effectiveness of Borderline-SMOTE depends on correctly identifying the borderline “malicious insider” instances. This identification is crucial because adding synthetic samples in the wrong regions can introduce noise and make the decision boundary less distinct. The choice of k (the number of nearest neighbors) is also significant: a small k might make the algorithm too sensitive to noise, while a large k might fail to capture the complexity of the decision boundary. The proportion of borderline “malicious insider” instances to oversample is another adjustable parameter, since it is not always necessary to oversample all of them to the same degree.
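The borderline selection step can be made concrete with a minimal NumPy sketch of the Borderline-SMOTE1 variant: a minority sample is borderline (“danger”) when at least half, but not all, of its m nearest neighbors in the whole training set are majority samples; if all of them are majority samples, it is treated as noise and skipped. Here m is the neighborhood used for this test and k is the number of minority neighbors used for interpolation. The function name and toy data are hypothetical, and this is not the implementation used in this study; the imbalanced-learn package provides a `BorderlineSMOTE` class for practical use.

```python
import numpy as np

def borderline_smote(X, y, n_synthetic, m=5, k=5, rng=None):
    """Minimal Borderline-SMOTE1 sketch; the minority class is labelled 1."""
    rng = np.random.default_rng(rng)
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    min_idx = np.flatnonzero(y == 1)
    X_min = X[min_idx]

    # m nearest neighbours of each minority sample within the whole training set
    d_all = ((X_min[:, None, :] - X[None, :, :]) ** 2).sum(axis=-1)
    d_all[np.arange(len(min_idx)), min_idx] = np.inf  # exclude the sample itself
    nn_all = np.argsort(d_all, axis=1)[:, :m]
    m_prime = (y[nn_all] != 1).sum(axis=1)            # majority samples among the m neighbours

    # Borderline ("danger") samples: m/2 <= m' < m; m' == m is treated as noise
    danger = np.flatnonzero((m_prime >= m / 2) & (m_prime < m))
    if danger.size == 0:
        return np.empty((0, X.shape[1])), danger

    # k nearest minority neighbours, used only for interpolation
    d_min = ((X_min[:, None, :] - X_min[None, :, :]) ** 2).sum(axis=-1)
    np.fill_diagonal(d_min, np.inf)
    k = min(k, len(X_min) - 1)
    nn_min = np.argsort(d_min, axis=1)[:, :k]

    # SMOTE-style interpolation seeded only from danger samples
    base = rng.choice(danger, size=n_synthetic)
    nn = nn_min[base, rng.integers(0, k, size=n_synthetic)]
    lam = rng.random((n_synthetic, 1))
    return X_min[base] + lam * (X_min[nn] - X_min[base]), danger

# Toy 1-D data: minority samples first (label 1), then majority samples (label 0)
X = np.array([0.0, 0.5, 1.0, 4.0, 4.5, 6.25, 5.0, 5.5, 6.0, 6.5, 7.0]).reshape(-1, 1)
y = np.array([1] * 6 + [0] * 5)
X_new, danger = borderline_smote(X, y, n_synthetic=5, m=3, k=1, rng=0)
```

In this toy example, the samples at 4.0 and 4.5 are flagged as danger (positions 3 and 4 of the minority set), the sample at 6.25 is surrounded by majority samples and treated as noise, and every synthetic sample falls between 4.0 and 4.5, right at the class boundary.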

Adaptive synthetic sampling (ADASYN)

The ADASYN sampling approach is an algorithm used to create synthetic samples for imbalanced datasets, particularly aimed at improving classifier performance for minority class examples. ADASYN is based on the idea of adaptively generating minority data samples according to their distributions: more synthetic data is generated for minority class samples that are harder to learn compared to those that are easier. The algorithm36 can be outlined as follows.

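Let d be the dimension of the input feature space, X the minority class sample set, and Y the majority class sample set. The total number of synthetic samples to generate is G = (|Y| − |X|) × β, where β ∈ [0, 1] specifies the desired balance level (β = 1 yields a fully balanced dataset). For each xi ∈ X:

1. Compute ri, the ratio of majority class samples among the k-nearest neighbors of xi.

2. Normalize ri to obtain the density distribution \(\tilde{r}_i = r_i / \sum_j r_j\), which weights each minority sample by how difficult it is to learn.

3. Calculate gi, the number of synthetic samples to generate for xi, as \(g_i = G \cdot \tilde{r}_i\).

4. Generate each of the gi synthetic samples s using the interpolation equation above, with the neighbor drawn from the k-nearest minority class neighbors of xi.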
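Steps 1 to 3 above determine how many synthetic samples each minority instance receives. The following minimal pure-Python sketch computes that allocation, assuming Euclidean distance and G = (|Y| − |X|) × β as in He et al.36; rounding gi to the nearest integer is an implementation choice, and the function name and toy data are hypothetical.

```python
import math


def adasyn_allocation(minority, majority, k=5, beta=1.0):
    """Number of synthetic samples g_i that ADASYN assigns to each minority sample x_i.

    G   = (|majority| - |minority|) * beta   total samples to generate
    r_i = share of majority points among the k nearest neighbours of x_i
    g_i = G * r_i / sum_j r_j                 (rounded to an integer here)
    """
    points = list(minority) + list(majority)
    is_majority = [False] * len(minority) + [True] * len(majority)
    G = (len(majority) - len(minority)) * beta
    ratios = []
    for i, x in enumerate(minority):  # minority samples occupy indices 0..len(minority)-1
        nearest = sorted((j for j in range(len(points)) if j != i),
                         key=lambda j: math.dist(x, points[j]))[:k]
        ratios.append(sum(is_majority[j] for j in nearest) / k)
    total = sum(ratios)
    if total == 0:  # no minority sample has majority neighbours: nothing is "hard"
        return [0] * len(minority)
    return [round(G * r / total) for r in ratios]


# Hypothetical 2D data: two easy minority points far from the majority class and
# one hard minority point whose nearest neighbours are all majority samples.
minority = [(0.0, 0.0), (0.0, 1.0), (5.0, 5.0)]
majority = [(5.0, 6.0), (6.0, 5.0), (10.0, 10.0), (10.0, 11.0), (11.0, 10.0), (11.0, 11.0)]
print(adasyn_allocation(minority, majority, k=2))  # [0, 0, 3]
```

All G = 3 synthetic samples go to the hard point at (5, 5), while the two easy points receive none; this is the adaptive behavior that distinguishes ADASYN from SMOTE, which treats every minority sample alike.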

There are several advantages to applying ADASYN for insider threat detection. By focusing on the samples that are difficult to learn, ADASYN can help classifiers form a decision boundary that generalizes better for the minority class. Table 7 compares the ADASYN-balanced training set with the original set.

Unlike other oversampling techniques that treat all minority class samples equally, ADASYN adapts to the inherent data structure. It reduces the classifier’s bias towards the majority class by making the decision boundary more sensitive to the minority class. However, ADASYN also has limitations. Like SMOTE, it is sensitive to noise and outliers in the minority class, which can result in poor-quality synthetic samples. The k-nearest neighbor search can be computationally expensive, especially for large datasets with many features, and ADASYN may not perform well in very high-dimensional spaces due to the curse of dimensionality.

Convolutional neural networks (CNNs)

A CNN37 is a category of deep neural network that is particularly effective for tasks requiring the recognition of patterns in input data, such as images. CNNs have been revolutionary in fields such as computer vision because they learn hierarchies of features through backpropagation. For insider threat detection, using a CNN requires the tabular activity data to be arranged in a format suitable for convolution operations, such as a sequence for 1D convolution or a structured grid for 2D convolution. Because CNNs learn local patterns, in this context they can identify specific patterns of user behavior that are indicative of malicious activities. The fundamental components of a CNN are as follows:

- Convolutional layers: Convolutional layers apply a convolution operation to the input and pass the result to the next layer. This operation involves a filter, or kernel, that slides over the input data and computes dot products. For a 2D input, the convolution operation is:

$$(f * g)(i, j) = \sum_{m}\sum_{n} f(m, n)\, g(i - m, j - n) \tag{4}$$

where f is the input image and g is the kernel. The convolution (f ∗ g)(i, j) is thus the sum of element-wise products of f and a flipped version of g as g is shifted over f.

- Activation functions: After the convolution operation, an activation function is applied to introduce non-linearity into the network. The most common activation function is the Rectified Linear Unit (ReLU), defined as:

$$\text{ReLU}(x) = \max(0, x) \tag{5}$$

This function is applied element-wise and sets all negative values in the output of the convolution operation to zero.

- Pooling layers: Pooling layers reduce the dimensionality of the data by combining the outputs of neuron clusters at one layer into a single neuron in the next layer. Max pooling is a common pooling function. For max pooling with a 2 × 2 filter, the operation can be defined as:

$$\text{MaxPooling}(f)(i, j) = \max\{f(i, j),\, f(i+1, j),\, f(i, j+1),\, f(i+1, j+1)\} \tag{6}$$

This operation takes the maximum value of a 2 × 2 area.

- Fully connected layers: After several convolutional and pooling layers, the high-level reasoning in the neural network is done via fully connected layers. Neurons in a fully connected layer have full connections to all activations in the previous layer. The operation in a fully connected layer can be expressed as:

$$y = Wx + b \tag{7}$$

where W is the weight matrix, x is the input vector, and b is the bias vector.

- Output layer: The final layer is typically a softmax function for multi-class classification tasks, which gives a probability distribution over the classes. The softmax function is defined as:

$$\text{Softmax}(x_i) = \frac{e^{x_i}}{\sum_{k} e^{x_k}} \tag{8}$$

where x is the vector of input scores to the softmax function, \(x_i\) is its i-th component, and k runs over all input scores.
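The components above can be chained into a toy forward pass. The sketch below is a minimal, single-channel, pure-Python illustration using the 1D analogues of Eqs. (4)–(8), which is how these operations would apply to a sequence of user-activity features. The input values, kernel, and weights are hypothetical; a real model learns the kernel and weights from data. Most deep-learning libraries implement the convolutional layer as cross-correlation (without flipping the kernel); because the kernel is learned, the two choices are equally expressive.

```python
import math


def conv1d(f, g):
    """'Valid' 1D convolution, the 1D analogue of Eq. (4): out[i] = sum_m f[m] * g[i - m].

    The kernel g is flipped as it slides over f, and only positions where g
    overlaps f completely are kept.
    """
    K = len(g)
    return [sum(f[i + K - 1 - k] * g[k] for k in range(K))
            for i in range(len(f) - K + 1)]


def relu(v):
    """Eq. (5), applied element-wise."""
    return [max(0.0, x) for x in v]


def max_pool(v, size=2):
    """1D analogue of Eq. (6): maximum over non-overlapping windows (stride = size)."""
    return [max(v[i:i + size]) for i in range(0, len(v) - size + 1, size)]


def dense(x, W, b):
    """Eq. (7): y = Wx + b, with W given as a list of rows."""
    return [sum(w * xi for w, xi in zip(row, x)) + bj for row, bj in zip(W, b)]


def softmax(x):
    """Eq. (8), shifted by max(x) for numerical stability (the result is unchanged)."""
    exps = [math.exp(xi - max(x)) for xi in x]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical input: six daily activity counts for one user, and a fixed kernel.
x = [0.0, 1.0, 3.0, 2.0, 5.0, 1.0]
kernel = [1.0, 0.0, -1.0]                       # after flipping: x[t + 2] - x[t]
features = max_pool(relu(conv1d(x, kernel)))    # [3.0, 2.0]
W, b = [[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0]     # toy fully connected layer
probs = softmax(dense(features, W, b))
print(features, probs)
```

Here the flipped kernel turns each output into the difference x[t + 2] − x[t], so a sudden rise in activity produces a large positive response that survives ReLU and max pooling.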

The CNN is trained using backpropagation and an optimization algorithm, typically stochastic gradient descent (SGD) or its variants. The process involves:

1. Forward pass: Compute the output of the network by applying the convolution operations, activation functions, pooling, and fully connected layer operations sequentially.

2. Loss calculation: Calculate the loss using a loss function. For classification, the cross-entropy loss is common:

$$\text{CrossEntropy}(y, \hat{y}) = -\sum_{i} y_i \log(\hat{y}_i) \tag{9}$$

where y is the true label in one-hot encoded form and \(\hat{y}\) is the predicted probability distribution from the softmax output.

3. Backward pass: Compute the gradient of the loss with respect to the network weights by applying the chain rule (backpropagation), then update the weights in the direction of the negative gradient, in which the loss decreases most steeply (gradient descent).

4. Iteration: Repeat the forward pass, loss calculation, and backward pass for many epochs or until the loss converges.
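The four training steps can be illustrated on the simplest trainable case: a single fully connected layer followed by softmax (Eqs. (7)–(9)), trained by full-batch gradient descent. This is a simplified, hypothetical sketch. It omits the convolutional and pooling layers (whose gradients follow from the same chain rule), uses plain gradient descent rather than mini-batch SGD, and relies on the standard result that, for softmax with cross-entropy, the gradient of the loss with respect to the pre-softmax scores is \(\hat{y} - y\).

```python
import math


def softmax(z):
    """Eq. (8), shifted by max(z) for numerical stability."""
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(y, y_hat):
    """Eq. (9) for a one-hot y; terms with y_i = 0 contribute nothing and are skipped."""
    return -sum(yi * math.log(pi) for yi, pi in zip(y, y_hat) if yi > 0)


def forward(W, b, x):
    """Step 1: y_hat = softmax(Wx + b)."""
    return softmax([sum(w * xi for w, xi in zip(row, x)) + bj for row, bj in zip(W, b)])


def train_softmax_layer(X, Y, lr=0.5, epochs=100):
    """Full-batch gradient descent on the mean cross-entropy; returns (W, b, losses)."""
    n_in, n_out = len(X[0]), len(Y[0])
    W = [[0.0] * n_in for _ in range(n_out)]
    b = [0.0] * n_out
    losses = []
    for _ in range(epochs):                          # step 4: iterate over epochs
        grad_W = [[0.0] * n_in for _ in range(n_out)]
        grad_b = [0.0] * n_out
        total_loss = 0.0
        for x, y in zip(X, Y):
            y_hat = forward(W, b, x)                 # step 1: forward pass
            total_loss += cross_entropy(y, y_hat)    # step 2: loss
            for j in range(n_out):                   # step 3: backward pass (chain rule)
                delta = y_hat[j] - y[j]              # dLoss/dz_j for softmax + cross-entropy
                grad_b[j] += delta
                for i in range(n_in):
                    grad_W[j][i] += delta * x[i]
        for j in range(n_out):                       # step 3: move against the mean gradient
            b[j] -= lr * grad_b[j] / len(X)
            for i in range(n_in):
                W[j][i] -= lr * grad_W[j][i] / len(X)
        losses.append(total_loss / len(X))           # mean loss before this epoch's update
    return W, b, losses


# Hypothetical two-feature, two-class toy data (one-hot labels).
X = [[0.0, 1.0], [1.0, 0.0], [0.9, 0.1], [0.1, 0.9]]
Y = [[1.0, 0.0], [0.0, 1.0], [0.0, 1.0], [1.0, 0.0]]
W, b, losses = train_softmax_layer(X, Y)
print(losses[0], losses[-1])
```

The first recorded loss equals log 2 because the zero-initialized layer assigns probability 0.5 to each class; the loss then falls as gradient descent separates the two classes.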

CNNs automatically and adaptively learn spatial hierarchies of features from input data. Their design and efficiency come from their ability to exploit correlations in spatially structured data through shared weights (in the convolutional filters) and pooling operations. In practice, a CNN may consist of numerous stacked convolutional, activation, and pooling layers, leading to a deep architecture capable of high-level feature extraction; this depth underlies the high performance of CNNs in classification tasks38,39. The exact architecture of a CNN depends on the specific task and the nature of the input data, and for tasks such as insider threat detection, CNNs can be adapted to work with sequential data.
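Because a CNN expects sequentially or spatially arranged input, each user’s tabular feature vector has to be laid out accordingly. The sketch below shows two simple, hypothetical layouts: a single-channel sequence for 1D convolution and a zero-padded square grid for 2D convolution. The function names, padding scheme, and example features are assumptions for illustration, not the exact transformation used in this study.

```python
import math


def to_sequence(features):
    """Single-channel sequence of shape (1, n), the layout a 1D convolution slides over."""
    return [list(features)]


def to_grid(features, pad_value=0.0):
    """Pad a flat feature vector to the next perfect square and fill a
    side x side single-channel grid row by row, for a 2D convolution."""
    n = len(features)
    side = math.isqrt(n - 1) + 1 if n > 0 else 0
    padded = list(features) + [pad_value] * (side * side - n)
    return [padded[r * side:(r + 1) * side] for r in range(side)]


# Hypothetical per-user-day vector, e.g. counts of logon, device, file, e-mail and HTTP events.
day = [3.0, 1.0, 12.0, 40.0, 250.0]
print(to_grid(day))  # [[3.0, 1.0, 12.0], [40.0, 250.0, 0.0], [0.0, 0.0, 0.0]]
```

Either layout makes features that happen to be adjacent in the vector into neighbors for the convolution kernel, so the feature ordering becomes a modeling choice; grouping related activities next to each other is one way to make local patterns meaningful.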

Experimental results

We tested the considered hybrid models for detecting insider threats on the CERT r4.2 insider threat dataset29, after applying the preparation processes outlined in Sections “Users’ IT activities profiling”–“Scaling”. These sections describe the preprocessing phases applied to the dataset, such as consolidation, missing data handling, refinement, feature extraction, and encoding. To visualize each model’s performance, we used the ROC curve, a graphical tool that plots the true positive rate (TPR) against the false positive rate (FPR) as the decision threshold varies. ROC curves offer a more nuanced evaluation of a model’s performance than a single-threshold metric, especially with imbalanced classes, varying decision thresholds, and critical decision-making contexts such as insider threat detection.
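To make the construction of an ROC curve concrete, the following minimal sketch computes the (FPR, TPR) points from classifier scores by sweeping the threshold from the highest score downwards. The labels and scores are hypothetical toy values, not results from our experiments.

```python
def roc_points(labels, scores):
    """(FPR, TPR) points traced as the decision threshold sweeps from high to low.

    labels: 1 = malicious insider (positive), 0 = benign (negative).
    Samples with tied scores are admitted together, so each point is one threshold.
    """
    P = sum(labels)
    N = len(labels) - P
    if P == 0 or N == 0:
        raise ValueError("an ROC curve needs both positive and negative samples")
    pairs = sorted(zip(scores, labels), reverse=True)  # highest score first
    points = [(0.0, 0.0)]  # threshold above every score: nothing is flagged
    tp = fp = 0
    i = 0
    while i < len(pairs):
        threshold = pairs[i][0]
        while i < len(pairs) and pairs[i][0] == threshold:
            if pairs[i][1] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        points.append((fp / N, tp / P))
    return points


# Hypothetical scores for six user-days (three malicious, three benign).
labels = [1, 1, 0, 1, 0, 0]
scores = [0.9, 0.8, 0.7, 0.6, 0.3, 0.1]
for fpr, tpr in roc_points(labels, scores):
    print(f"FPR={fpr:.2f}  TPR={tpr:.2f}")
```

Each printed point corresponds to one candidate threshold; connecting them from (0, 0) to (1, 1) gives the ROC curve.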

SMOTE + CNN

SMOTE helps balance the class distribution by generating synthetic examples of the minority class. This can improve the detection of insider threats by creating a dataset in which rare threat instances are better represented, allowing the CNN to learn features relevant to both classes. A CNN captures complex patterns through its layered structure, which includes convolutional layers for feature extraction and fully connected layers for classification. Here, the CNN is trained to detect patterns indicative of insider threats in the dataset enriched by SMOTE. The results of applying SMOTE and CNN are presented in Fig. 3: the resulting Receiver Operating Characteristic (ROC) curve has an Area Under the Curve (AUC) of 0.94, which we interpret below from several perspectives.

The ROC curve is a graphical plot that illustrates the diagnostic ability of a binary classifier as its discrimination threshold is varied, and the AUC summarizes the classifier’s overall performance across all thresholds in a single scalar value. The AUC of 0.94 indicates that the model distinguishes well between the positive class (malicious insiders) and the negative class (benign insiders): there is a 94% probability that the classifier ranks a randomly chosen positive instance higher than a randomly chosen negative one, independent of any particular threshold. A curve that climbs quickly towards the top-left corner of the plot indicates a high true positive rate (sensitivity) at a low false positive rate (1 − specificity), which is ideal for reliable threat detection.
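The probabilistic reading of the AUC can be checked directly. The sketch below computes the AUC as the fraction of (positive, negative) pairs in which the positive instance receives the higher score, counting ties as one half (the Mann–Whitney formulation). The scores are hypothetical toy values.

```python
from itertools import product


def auc_by_ranking(labels, scores):
    """AUC as the probability that a random positive outscores a random negative.

    Ties count as one half; this pairwise count equals the Mann-Whitney U
    statistic divided by (number of positives * number of negatives).
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p, n in product(pos, neg))
    return wins / (len(pos) * len(neg))


labels = [1, 1, 0, 1, 0, 0]   # hypothetical: 1 = malicious insider, 0 = benign
scores = [0.9, 0.8, 0.7, 0.6, 0.3, 0.1]
print(auc_by_ranking(labels, scores))
```

Eight of the nine positive/negative pairs are ranked correctly here, giving an AUC of 8/9. The pairwise form costs one comparison per pair, so a sort-based computation is preferable for datasets as large as CERT.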

A high AUC indicates that the classifier can differentiate between normal behavior and potential insider threats; for an insider threat detection system, this suggests that the model has learned meaningful, discriminative features of malicious activity from the data. The steepness of the initial part of the curve indicates that a significant proportion of threats can be detected at a low false positive rate, which is critical for minimizing disruptions to normal operations and reducing the workload of security analysts. SMOTE addresses class imbalance by generating synthetic examples of the underrepresented class, here the insider threats. By oversampling the minority class, SMOTE yields a more balanced dataset, which helps prevent the classifier from being biased towards the majority class and overlooking rare threat instances. The high AUC suggests that SMOTE has enabled the CNN to learn from a more representative sample of the minority class and thus to better capture the complex patterns associated with threats.

CNNs excel at hierarchical feature learning because their deep, structured stack of convolutional layers is well suited to detecting spatial and temporal patterns in data. In insider threat detection, the CNN may process features such as login times, file access patterns, network usage, and other user behaviors to detect anomalies. The ROC curve suggests that the CNN can effectively extract and use these features to classify behavior as normal or threatening. Overall, the AUC of 0.94 indicates that the combination of SMOTE and CNN is likely a potent approach for insider threat detection.

Borderline-SMOTE + CNN

Insider threat datasets typically suffer from class imbalance because malicious activities are rare compared to normal activities, and such imbalance can bias a classifier towards over-predicting the majority class. Borderline-SMOTE specifically generates synthetic examples near the decision boundary. This helps the classifier better model the complex region where the classes overlap and improves its decisions on borderline cases. By focusing on the samples that are difficult to classify, Borderline-SMOTE enhances the representation of the minority class in the training data, which may improve the detection rate of actual insider threats. CNNs are known for their powerful feature extraction capabilities; in this scenario, the CNN learns to detect complex patterns and anomalies in user behavior that indicate insider threats. Its multiple layers capture different levels of abstraction: lower layers may detect simple patterns, while deeper layers can recognize more complex sequences of user actions that could be malicious. The results of combining Borderline-SMOTE and CNN are depicted in Fig. 4.

Figure 4 displays the performance of the CNN model for insider threat detection trained on data augmented by the Borderline-SMOTE algorithm. The ROC curve plots the TPR (y-axis) against the FPR (x-axis) at various threshold settings, and its AUC is 0.89. An AUC of 0.89 is relatively high, suggesting that the model can distinguish between positive (malicious insider) and negative (benign insider) cases. In insider threat detection, a high AUC is desirable because it means that there are thresholds at which both false positives and false negatives remain relatively few. The combination of Borderline-SMOTE and CNN has likely contributed to a model that is sensitive to the nuances and complexities of insider threat detection, and the AUC value suggests that, at a suitable threshold, the model classifies both classes correctly at a high rate. For practical implementation, it is essential to review the confusion matrix, investigate specific cases of false positives and false negatives, and adjust the decision threshold to align with the organization’s security needs and risk tolerance. Finally, while the ROC curve is an important tool for evaluating classifier performance, the context of the application and the cost of misclassifications must also be considered when deploying the model in a real-world scenario.
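Reviewing the confusion matrix at candidate thresholds, as recommended above, amounts to counting the four outcome types. A minimal sketch, with hypothetical scores and an assumed rule that scores at or above the threshold are flagged as malicious:

```python
def confusion_at(labels, scores, threshold):
    """Confusion-matrix counts when samples scoring >= threshold are flagged as malicious."""
    counts = {"TP": 0, "FP": 0, "TN": 0, "FN": 0}
    for y, s in zip(labels, scores):
        if s >= threshold:
            counts["TP" if y == 1 else "FP"] += 1
        else:
            counts["FN" if y == 1 else "TN"] += 1
    return counts


labels = [1, 1, 0, 1, 0, 0]   # hypothetical: 1 = malicious insider, 0 = benign
scores = [0.9, 0.8, 0.7, 0.6, 0.3, 0.1]
for t in (0.65, 0.5):
    print(t, confusion_at(labels, scores, t))
```

In this toy example, lowering the threshold from 0.65 to 0.5 turns the one missed threat (FN) into a detection without adding a false alarm, which illustrates why the threshold should be tuned rather than fixed at a default.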

While the AUC is a valuable metric, it is equally important to consider precision (the fraction of flagged items that are actually relevant) and recall (the fraction of relevant items that are flagged). In insider threat detection, a balance between precision and recall is crucial: false positives lead to unnecessary investigations, and false negatives lead to missed threats. The decision threshold for classifying an activity as a threat determines the TPR and FPR, so it should be chosen carefully to align with the organization’s risk appetite and the operational cost of false alarms. Because insider threat behavior may evolve, the CNN should be part of a system that allows for retraining and continuous learning from new data. The ROC curve and the AUC of 0.89 indicate that the combination of Borderline-SMOTE and a CNN is effective for insider threat detection. However, the model should be assessed further with other metrics, considered in its operational context, and checked for robustness to changes in threat patterns over time. The results must be interpreted with the understanding that synthetic data generation techniques such as Borderline-SMOTE can improve a model’s performance on the minority class, but the true test of the model is its deployment in a real-world environment and its ability to generalize to unseen data.
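The interplay between the threshold, precision, recall, and operational cost can be sketched as follows. The cost-weighted selection rule and its default costs are hypothetical assumptions standing in for an organization’s risk appetite; in practice, the threshold would be chosen on validation data rather than on the test set.

```python
def precision_recall(labels, scores, threshold):
    """Precision and recall when samples scoring >= threshold are flagged as threats."""
    tp = sum(1 for y, s in zip(labels, scores) if s >= threshold and y == 1)
    fp = sum(1 for y, s in zip(labels, scores) if s >= threshold and y == 0)
    fn = sum(1 for y, s in zip(labels, scores) if s < threshold and y == 1)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def cheapest_threshold(labels, scores, cost_fp=1.0, cost_fn=10.0):
    """Observed score that, used as the threshold, minimises cost_fp*FP + cost_fn*FN.

    The default costs (a missed threat is 10x as costly as a false alarm) are
    illustrative assumptions only. Ties go to the lowest such threshold.
    """
    def total_cost(t):
        fp = sum(1 for y, s in zip(labels, scores) if s >= t and y == 0)
        fn = sum(1 for y, s in zip(labels, scores) if s < t and y == 1)
        return cost_fp * fp + cost_fn * fn

    return min(sorted(set(scores)), key=total_cost)


labels = [1, 1, 0, 1, 0, 0]   # hypothetical: 1 = malicious insider, 0 = benign
scores = [0.9, 0.8, 0.7, 0.6, 0.3, 0.1]
t = cheapest_threshold(labels, scores)
print(t, precision_recall(labels, scores, t))
```

With missed threats weighted heavily, the rule selects 0.6 (precision 0.75, recall 1.0); reversing the costs so that false alarms dominate selects 0.8 (precision 1.0, recall 2/3), showing how the same model serves different risk appetites.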

ADASYN + CNN

ADASYN generates synthetic minority samples adaptively, focusing on the minority instances that are harder to learn. Figure 5 presents the recreated ROC curve, with an AUC of 0.96; the curve shows the model’s ability to distinguish between positive (insider threat) and negative (benign activity) classes at various threshold settings. The AUC of 0.96 suggests that combining ADASYN with CNN produced a model with excellent performance, which we attribute to the adaptive sampling technique. Insider threats are often rare and complex, making them difficult for models to detect; by concentrating on these challenging minority instances, ADASYN improves the model’s ability to identify subtle and atypical patterns of malicious insider activity. Such a high AUC indicates that a high true positive rate can be achieved at a low false positive rate, which is essential in insider threat detection to avoid false alarms that could lead to unnecessary investigations.

We have recreated an ROC curve with a simulated AUC of 0.96, which is considered excellent performance. An AUC of 0.96 indicates a high level of separability: there is a 96% probability that the classifier ranks a randomly chosen positive (insider threat) case above a randomly chosen negative (normal behavior) case. The results suggest that ADASYN, by focusing on difficult-to-learn examples, and the CNN, by effectively learning features, work well together for this task. ADASYN may have addressed the class imbalance effectively by creating synthetic samples that helped the CNN learn the characteristics of the minority class; by generating more synthetic data for “difficult” samples, ADASYN can help form a more robust decision boundary.

Therefore, ADASYN is a powerful algorithm for dealing with imbalanced datasets, as it generates synthetic samples adaptively according to the learning difficulty posed by the original minority class instances. When used appropriately, it can significantly improve the performance of classifiers on minority class predictions. The ROC curve and the AUC value indicate that the combination of the ADASYN algorithm and a CNN is highly effective for insider threat detection. However, it is important to examine more granular performance metrics and to consider the practical implications of deploying such a model in a real-world environment. The ultimate goal is a practical balance between detecting threats and minimizing disruptions to legitimate activities.

Discussion

The experimental results demonstrate that the proposed model, ADASYN + CNN, which combines ADASYN oversampling with a convolutional neural network, significantly enhances the performance of insider threat detection. There are two primary reasons why ADASYN + CNN excels. First, ADASYN effectively addresses class imbalance by generating synthetic samples that help the CNN learn the characteristics of the minority class. By concentrating synthetic data generation on “difficult” samples, ADASYN outperforms SMOTE, which treats all minority instances equally when generating synthetic samples, and Borderline-SMOTE, which focuses on creating samples near the decision boundary where the classes overlap or are close. In the insider threat scenario studied here, ADASYN demonstrated greater effectiveness and more stable performance. Second, because ADASYN is designed to generate synthetic observations for the instances that are more challenging to learn, it may produce synthetic observations for outlier minority instances that the learning model would otherwise have ‘ignored’. This capability can enhance the model’s ability to detect nuanced insider threat behaviors.

To further assess the performance of insider threat detection based on ADASYN and CNN, we compare this model with other advanced existing models. Table 8 compares the proposed methods with the most closely related state-of-the-art insider threat detection methods evaluated on the CERT r4.2 dataset. The methods compared with ADASYN-CNN include AD-DNN7, DCNN22, and several other techniques that we implemented on the same dataset. Notably, the ROC AUC achieved by ADASYN-CNN is remarkably high at 96%, surpassing the performance of the other algorithms.

Table 8 summarizes the performance of previous studies and methods for insider threat detection. AD-DNN demonstrates high performance with an ROC score of 95%, signifying its effectiveness in detecting insider threats; however, the number of samples used in that study was too small. DCNN achieves a good balance between precision (83%) and recall (76%), making it reliable for accurate detection. A standard CNN performs moderately, with a 70% ROC score. SMOTE + CNN achieves a high ROC score of 94%, enhancing sensitivity and specificity, and Borderline-SMOTE + CNN achieves an ROC score of 89%, while ADASYN + CNN exhibits the best performance, with an ROC score of 96%. By combining the ADASYN algorithm with CNN, this method shows high discriminative power and accurate detection of insider threats: ADASYN adaptively generates synthetic samples to balance the class distribution, which, combined with CNN, results in a more robust and accurate model.

Conclusion

In conclusion, our research presents a novel hybrid model that integrates CNNs with three data imbalance addressing techniques (SMOTE, Borderline-SMOTE, and ADASYN) for insider threat detection in cybersecurity settings. The experimental results demonstrate the effectiveness of the proposed model in significantly enhancing detection accuracy and robustness, with promising performance across all three data imbalance addressing techniques combined with CNN. Specifically, the hybrid model achieved an ROC AUC of 94% when integrated with SMOTE, indicating a high level of discrimination between normal behaviors and the anomalous behaviors indicative of insider threats. Similarly, combining Borderline-SMOTE with CNN resulted in an ROC AUC of 89%, showing the model’s capability to identify potential insider threats with notable accuracy. Most notably, the integration of ADASYN with CNN yielded the highest ROC AUC of 96%, surpassing SMOTE and Borderline-SMOTE and underscoring the effectiveness of ADASYN in enhancing detection accuracy on imbalanced datasets. These results highlight the significance of addressing data imbalance in insider threat detection and of leveraging deep learning techniques for improved cybersecurity resilience. By combining CNN with data imbalance addressing techniques, our hybrid model offers a practical and effective solution for identifying insider threats within the imbalanced datasets commonly encountered in real-world cybersecurity environments. Several avenues for future research emerge. First, exploring additional data imbalance addressing techniques and their combinations with CNN could further enhance the model’s performance.

Additionally, investigating the impact of different CNN architectures and hyperparameter configurations on detection accuracy could provide insights into optimizing the model for specific cybersecurity contexts. Furthermore, extending the evaluation to diverse datasets representing various industries and threat landscapes would validate the generalizability and applicability of the proposed approach in different scenarios. Moreover, integrating advanced anomaly detection algorithms and ensemble learning techniques with CNN could improve detection capabilities and resilience against evolving insider threats. Lastly, exploring interpretability and explainability techniques to enhance the transparency and trustworthiness of the model’s decisions would facilitate its adoption in practical cybersecurity applications. Our research lays the groundwork for future investigations aimed at advancing insider threat detection methodologies and strengthening cybersecurity defenses against insider threats in organizational settings.

Data availability

The dataset generated during the current study is available from the corresponding author upon reasonable request.

References

GURUCUL. 2023 insider threat report (2023). https://gurucul.com/2023-insider-threat-report (accessed 18 September 2023).

Erney, T. & Chowdhury, M. M. A survey of intrusion detection and prevention systems. In 2022 IEEE World AI IoT Congress, AIIoT 2022 (2022). https://doi.org/10.1109/AIIoT54504.2022.9817348.

Shrestha, A. & Mahmood, A. Review of deep learning algorithms and architectures. IEEE Access https://doi.org/10.1109/ACCESS.2019.2912200 (2019).

Dixit, P. & Silakari, S. Analysis of state-of-art attack detection methods using recurrent neural network. In Proceedings of the International Conference on Paradigms of Communication, Computing and Data Sciences: PCCDS 2021 795–804 (Springer, 2022).

Sivakrishna, A. M., Mohan, R. & Randive, K. AUBIT: An adaptive user behaviour based insider threat detection technique using LSTM-autoencoder. In Recent Trends in Computational Intelligence and Its Application: Proceedings of the 1st International Conference on Recent Trends in Information Technology and its Application (ICRTITA, 22) 267 (CRC Press, 2023).

Willems, D., Kohls, K., van der Kamp, B. & Vranken, H. Data exfiltration detection on network metadata with autoencoders. Electronics 12(12), 2584 (2023).

Li, C., Li, F., Yu, M., Guo, Y., Wen, Y. & Li, Z. Insider threat detection using generative adversarial graph attention networks. In GLOBECOM 2022–2022 IEEE Global Communications Conference 2680–2685 (IEEE, 2022).

Khan, M. Z. A., Khan, M. M. & Arshad, J. Anomaly detection and enterprise security using user and entity behavior analytics (UEBA). In 2022 3rd International Conference on Innovations in Computer Science & Software Engineering (ICONICS) 1–9 (IEEE, 2022).

Haq, M. A., Khan, M. A. R. & Alshehri, M. Insider threat detection based on NLP word embedding and machine learning. Intell. Autom. Soft Comput. 33, 619–635 (2022).

Chandola, V., Banerjee, A. & Kumar, V. Anomaly detection: A survey. ACM Comput. Surv. https://doi.org/10.1145/1541880.1541882 (2009).

Yuan, S. & Wu, X. Deep learning for insider threat detection: Review, challenges and opportunities. Comput. Secur. 104, 102221. https://doi.org/10.1016/j.cose.2021.102221 (2021).

Tian, Z. et al. Deep learning and dempster-shafer theory based insider threat detection. Mob. Netw. Appl. https://doi.org/10.1007/s11036-020-01656-7 (2020).

Tuor, A., Kaplan, S., Hutchinson, B., Nichols, N. & Robinson, S. Deep learning for unsupervised insider threat detection in structured cybersecurity data streams. In Proceedings of Artificial Intelligence for Cyber Security Workshop (AAAI-2017), San Francisco, CA, USA, 4–5 February (2017).

Sharma, B., Pokharel, P. & Joshi, B. User behavior analytics for anomaly detection using LSTM autoencoder-insider threat detection. In Proceedings of the 11th International Conference on Advances in Information Technology 1–9 (2020).

Yazdinejad, A., Kazemi, M., Parizi, R. M., Dehghantanha, A. & Karimipour, H. An ensemble deep learning model for cyber threat hunting in industrial internet of things. Digit. Commun. Netw. 9(1), 101–110. https://doi.org/10.1016/j.dcan.2022.09.008 (2023).

Wang, G., Hao, J., Ma, J. & Huang, L. A new approach to intrusion detection using artificial neural networks and fuzzy clustering. Expert Syst. Appl. 37(9). https://doi.org/10.1016/j.eswa.2010.02.102 (2010).

Meng, F., Lou, F., Fu, Y. & Tian, Z. Deep learning based attribute classification insider threat detection for data security. In Proceedings: 2018 IEEE 3rd International Conference on Data Science in Cyberspace, DSC 2018 (2018). https://doi.org/10.1109/DSC.2018.00092.

Ma, Q. & Rastogi, N. DANTE: Predicting insider threat using LSTM on system logs. In Proceedings: 2020 IEEE 19th International Conference on Trust, Security and Privacy in Computing and Communications, TrustCom 2020 (2020). https://doi.org/10.1109/TrustCom50675.2020.00153.

Kim, T. Y. & Cho, S. B. Web traffic anomaly detection using C-LSTM neural networks. Expert Syst. Appl. https://doi.org/10.1016/j.eswa.2018.04.004 (2018).

Malhotra, P., Vig, L., Shroff, G. & Agarwal, P. Long short term memory networks for anomaly detection in time series. In 23rd European Symposium on Artificial Neural Networks, Computational Intelligence and Machine Learning, ESANN 2015: Proceedings (2015).

Ren, Y. & Wu, Y. Convolutional deep belief networks for feature extraction of EEG signal. In Proceedings of the International Joint Conference on Neural Networks (2014). https://doi.org/10.1109/IJCNN.2014.6889383.

Gayathri, R. G., Sajjanhar, A., Xiang, Y. & Ma, X. Multi-class classification based anomaly detection of insider activities. arXiv Prepr. arXiv:2102.07277 (2021).

Gayathri, R. G., Sajjanhar, A. & Xiang, Y. Image-based feature representation for insider threat classification. Appl. Sci. https://doi.org/10.3390/app10144945 (2020).

Koutsouvelis, V., Shiaeles, S., Ghita, B. & Bendiab, G. Detection of insider threats using artificial intelligence and visualization. In Proceedings of the 2020 IEEE Conference on Network Softwarization: Bridging the Gap Between AI and Network Softwarization, NetSoft 2020 (2020). https://doi.org/10.1109/NetSoft48620.2020.9165337.

Singh, M., Mehtre, B. M. & Sangeetha, S. User behavior profiling using ensemble approach for insider threat detection. In ISBA 2019: 5th IEEE International Conference on Identity, Security and Behavior Analysis (2019). https://doi.org/10.1109/ISBA.2019.8778466.

Yuan, F., Cao, Y., Shang, Y., Liu, Y., Tan, J. & Fang, B. Insider threat detection with deep neural network. In Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics) (2018). https://doi.org/10.1007/978-3-319-93698-7_4.

Cheng, L., Liu, F. & Yao, D. D. Enterprise data breach: Causes, challenges, prevention, and future directions. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 7(5), e1211. https://doi.org/10.1002/widm.1211 (2017).

Glasser, J. & Lindauer, B. Bridging the gap: A pragmatic approach to generating insider threat data. In Proceedings: IEEE CS Security and Privacy Workshops, SPW 2013 98–104 (IEEE, 2013). https://doi.org/10.1109/SPW.2013.37.

CERT and ExactData LLC. Insider threat test dataset. Software Engineering Institute, Carnegie Mellon University (2020). https://resources.sei.cmu.edu/library/asset-view.cfm?assetid=508099 (accessed 14 September 2021).

Al-Shehari, T. & Alsowail, R. A. An insider data leakage detection using one-hot encoding, synthetic minority oversampling and machine learning techniques. Entropy 23(10), 1258. https://doi.org/10.3390/e23101258 (2021).

Nelli, F. Machine learning with scikit-learn. In Python Data Analytics: With Pandas, NumPy, and Matplotlib 259–287 (Springer, 2023).

Ben Naceur, M., Akil, M., Saouli, R. & Kachouri, R. Deep convolutional neural networks for brain tumor segmentation: Boosting performance using deep transfer learning: preliminary results. In Brainlesion: Glioma, Multiple Sclerosis, Stroke and Traumatic Brain Injuries: 5th International Workshop, BrainLes 2019, Held in Conjunction with MICCAI 2019, Shenzhen, China, October 17, 2019, Revised Selected Papers, Part II 5 303–315 (Springer, 2020).

Elreedy, D. & Atiya, A. F. A comprehensive analysis of synthetic minority oversampling technique (SMOTE) for handling class imbalance. Inf. Sci. (NY) 505. https://doi.org/10.1016/j.ins.2019.07.070 (2019).

Chawla, N. V., Bowyer, K. W., Hall, L. O. & Kegelmeyer, W. P. SMOTE: Synthetic minority over-sampling technique. J. Artif. Intell. Res. 16, 321–357. https://doi.org/10.1613/jair.953 (2002).

Han, H., Wang, W.-Y. & Mao, B.-H. Borderline-SMOTE: A new over-sampling method in imbalanced data sets learning. In International Conference on Intelligent Computing 878–887 (Springer, 2005).

He, H., Bai, Y., Garcia, E. A. & Li, S. ADASYN: Adaptive synthetic sampling approach for imbalanced learning. In 2008 IEEE International Joint Conference on Neural Networks (IEEE World Congress on Computational Intelligence) 1322–1328 (IEEE, 2008). https://doi.org/10.1109/IJCNN.2008.4633969.

Beysolow II, T. Convolutional neural networks (CNNs). In Introduction to Deep Learning Using R: A Step-by-Step Guide to Learning and Implementing Deep Learning Models Using R 101–112 (Apress, 2017).

Krizhevsky, A., Sutskever, I. & Hinton, G. E. ImageNet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 25 (2012).

He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 770–778 (2016).

Al-Mhiqani, M. N., Ahmed, R., Zainal, Z. & Isnin, S. An integrated imbalanced learning and deep neural network model for insider threat detection. Int. J. Adv. Comput. Sci. Appl. https://doi.org/10.14569/IJACSA.2021.0120166 (2021).

Acknowledgements

This research was supported by the Researchers Supporting Project Number (RSP2024R244), King Saud University, Riyadh, Saudi Arabia.

Funding

Funding was provided by the Researchers Supporting Project Number (RSP2024R244), King Saud University, Riyadh, Saudi Arabia.

Author information

Contributions

Conceptualization, T.A. and M.K.; methodology, T.A. and M.N.A.; software, M.U.; validation, T.A., M.U. and T.A.; formal analysis, M.K.; investigation, M.U.; resources, M.N.A. and T.A.; data curation, M.U.; writing—original draft preparation, T.A.; writing—review and editing, M.K.; visualization, M.U.; supervision, T.A.; funding acquisition, H.A., S.S.U. and A.D. All authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Al-Shehari, T., Kadrie, M., Al-Mhiqani, M.N. et al. Comparative evaluation of data imbalance addressing techniques for CNN-based insider threat detection. Sci Rep 14, 24715 (2024). https://doi.org/10.1038/s41598-024-73510-9

DOI: https://doi.org/10.1038/s41598-024-73510-9