Abstract

Predicting major adverse cardiovascular events (MACE) is crucial due to its high readmission rate and severe sequelae. Current risk scoring model of MACE are based on a few features of a patient status at a single time point. We developed a self-attention-based model to predict MACE within 3 years from time series data utilizing numerous features in electronic medical records (EMRs). In addition, we demonstrated transfer learning for hospitals with insufficient data through code mapping and feature selection by the calculated importance using Xgboost. We established operational definitions and categories for diagnoses, medications, and laboratory tests to streamline scattered codes, enhancing clinical interpretability across hospitals. This resulted in reduced feature size and improved data quality for transfer learning. The pre-trained model demonstrated an increase in AUROC after transfer learning, from 0.564 to 0.821. Furthermore, to validate the effectiveness of the predicted scores, we analyzed the data using traditional survival analysis, which confirmed an elevated hazard ratio for a group with high scores.

Similar content being viewed by others

Introduction

Background

Due to the worldwide aging phenomenon, cardiovascular disease has a sustained high prevalence and mortality rate, resulting in significant medical expenses1,2. Acute cardiovascular disease often arises in emergencies and is associated with a high readmission rate, necessitating the prediction and preparation for potential future risks. Moreover, cardiovascular diseases often lead to high rates of severe sequelae, increasing the financial burden on individuals and the healthcare system. Therefore, assessing and predicting cardiovascular events is crucial3.

In this regard, scoring models for predicting cardiovascular disease have been developed based on risk factors including age, sex, race, blood pressure, cholesterol levels, diabetes status, history of aspirin therapy, statin, or hypertension treatment, and smoking history4. While this scoring models offer simplicity, the comprehensive nature of electronic medical records (EMRs) represents a considerable portion of patient information and are considered big data with substantial potential. Moreover, existing scoring models are regression-based models and are limited by the number of covariates they can calculate. Consequently, the development of a scoring model utilizing numerous variables in EMR is currently being studied in deep learning5.

In addition, existing prediction models have limitations in considering patient status progression and scalability of multicenter uses. Current scoring models used in medical practice make cross-sectional predictions based solely on a patient’s status at a single time point, overlooking the critical aspect of patient status progression6. In addition, the deep learning-based prediction models, which rely on the assumption of consistent temporal intervals, face significant challenges in their development due to the irregularity of patient visits. Secondly, regional imbalances in medical service and resource concentration necessitate multicenter studies, particularly for medical centers facing data insufficiency. Furthermore, because the resource availability, such as device used in investigation, varies across hospitals, data obtained from different sources varies in scale and depth. The variations in the availability of tests, treatments, and medications across hospitals introduce performance uncertainties due to missing columns. Leveraging a well-pretrained model to transfer knowledge can enhance the performance of models with insufficient data, and through transfer learning, the model can learn to adjust to shifts between datasets.

In this study, we develop a deep learning model to predict cardiovascular disease from time-series medical records and demonstrate transfer learning with selecting features from two hospitals. The main contribution of the study lies in development of a prediction model that considers patient progression, alongside the demonstration of method for transfer learning. In addition, we established operational definitions and categories of codes to improve data quality and selected features employing computerized importance to enhance model performance. Furthermore, this method employing transfer learning can resolve data insufficiency across hospitals.

Objective

We have defined the major adverse cardiovascular events (MACE) as a study outcome, considering its need for early prediction. To improve the performance of treatment during hospitalization and reduce medical costs, we developed a model that identifies risk factors and predicts the risk of MACE. We utilized the extreme gradient boosting (Xgboost) model7 to select key factors among 1101 features in the source data, Asan Medical Center (AMC) EMR. Additionally, to ensure the scalability of the model, we utilized the target data from Chungnam National University Hospital (CNUH). The model is evaluated using Area under the Receiver Operating Characteristics (AUROC) and accuracy before and after transfer learning and further validated the prediction score via Kaplan–Meier model, a traditional survival analysis.

Related works

The prediction model regarding MACE starts with a scoring model regarding few statistically proven features at a single time point4. The validated risk scores are based on very few definite risk factors with the score range study to indicate severe risks, such as Thrombolysis In Myocardial Infraction (TIMI) score18, global registry of acute coronary events (GRACE) score19and HEART score20,21.

The traditional prediction models were based on logistic regression models and machine learning methods were applied to better predict MACE22. Studies highlights the non-linearity of the model with no requirement of pre-defined features. For example, traditional prognostic methods were compared with machine learning model, boosted ensemble algorithm (LogitBoost) and scored the highest AUROC of 0.79 among others23. In this study, features were automatedly selected by information gain ranking. Likely, Xgboost model achieved higher AUROC of 0.86 (accuracy 0.75) compared to traditional regression model in predicting myocardial infarction (MI). The importance of features in predicting MI is presented using SHAPley value, which is a difference of output value when each feature is replaced with a baseline value24.

Later, deep learning models outperformed machine learning models in many studies27,28. For example, Deep neural networks (DNN) outperformed regression-based GRACE model and machine learning models including gradient boosting machine (GBM), and generalized linear model (GLM)25. Prediction involved 51 variables in Korea Acute Myocardial Infarction Registry (KAMIR) and scored AUROC higher than 95% in all evaluation predicting MACE within 1,6, and 12 months.

With the recent emergence of sequential models, MACE was predicted from records of a patient utilizing models such as Recurrent Neural Network (RNN) and Long Short-Term Memory (LSTM). For example, AtheroEdge-MCDLAI (AE3.0DL)26 utilize medical examination data by the stipulated time frame to classify patient groups according to risk level. This model includes over sampling-based method and principal component analysis (PCA)-based pooling to address data imbalance between classes and to select features. In addition, transformer-based models were studied to learn temporal information bidirectionally from EMR29. BERT and XLNet was trained to predict mortality among patient with cardiovascular disease and XLNet showed slightly better performance.

In this study, we take records of a HRCVD cohort as an input and have selected features by Xgboost. We have developed a model, SA-RNN, consisting of convolution blocks, Bi-LSTM and attention modules. We have evaluated the model compared baselines models including Logistic regression, Xgboost and MLP classifier and Transformer. The analysis made by SA-RNN was validated with traditional statics model, Kaplan–Meier model.

Method

Study dataset

Study population and outcomes

Data was previously extracted from the anonymized EMR system of AMC. The criteria of inclusion were defined as patients who had visited AMC and are suspected of having cardiac disease between January 1st, 2000, and December 31st, 2017. For the target dataset for transfer learning, patient records of individuals who visited the CNUH regarding cardiac disease between January 1st, 2012, and December 31st, 2018, were extracted. The following information was extracted from both centers: patient encounter, demographics, diagnosis, medication, treatment, laboratory test regarding human-derived materials, physical information, vital signs, digital tests regarding coronary angiography (CAG), echocardiography/Computed Tomography(CT), myocardial Single Photon Emission CT(SPECT), cardiopulmonary function test, procedure, surgery, blood transfusion, smoking history.

In this study, a high-risk cardiovascular disease (HRCVD) cohort is defined as patients diagnosed with critical conditions, including MI, chronic ischemic heart disease, stroke, transient ischemic attack (TIA), angina, and heart failure. The primary objective is to predict MACE within three years during study period, with MACE defined as MI, stroke, TIA, heart failure, and death of all causes. The operational definition of each MACE was decided to be identifiable in the EMR system, considering diagnosis, treatment and patient encounter types including inpatient, outpatient, and emergency room (ER) visits. The details of operational definition are provided in Supplementary Table S1. Online. The model is primarily designed to learn from time series data based on tables in EMR, as its architecture does not incorporate multimodal inputs such as medical images or signals.

Data preprocessing for individual hospitals

We have manually categorized the codes of diagnosis, medication, and laboratory tests into each category of disease, drug ingredients, and lab test, respectively. Specific coding systems for diagnosis, medication, and laboratory tests were utilized following the common data model (CDM): International Statistical Classification of Diseases and Related Health Problems (ICD) codes for diagnoses, RxNorm for medications, and Logical Observation Identifiers Names and Codes(LOINC) for laboratory tests.

For the AMC dataset collected for 18 years, the coding system was institutionally maintained including the version changes of coding systems, such as a version upgrade of ICD codes from 9 to 10. Mapping between codes utilized guidelines or vocabularies provided by the systems or the guidelines for mapping insurance claim codes provided by the National Health Insurance Sharing Service (NHISS) and HIRA Bigdata Open Portal.

The data of a patient is structured into a table where a row represents each visit with columns of variables. To account for the irregularity in patient records, instead of deciding on a fixed interval, we organized the data by each visit and formed it into a row consisting of status variables. The variables for the initial dataset were manually selected from extracted information mentioned in the previous section. Categorical variables, such as records of treatment, procedure, or laboratory tests holding codes, we used codes within top 50 frequency to be encoded utilizing a one-hot encoding method. Continuous variables’ mean value for each visit was calculated with outliers removed. The binary label was assigned to each row, indicating 1 when reported with MACE within three years and 0 for without, as described in Fig. 1. A report with a minimum 28-day difference from the last visit date was considered for labeling purposes. The constructed dataset of source data has 1,101 features with a maximum of 100 visits per patient. A maximum number of 100 visits includes an entire visit of a patient with 99.9 confidence for the cohort. For patients with less than 100 visiting dates, data was padded from the beginning with values of the first record and label −1. For patients with over 100 visiting dates, only the record of recent 100 visits was used.

Data processing for multi-source study

Because the recording system differs per medical center, the schema of the EMR system is written with a detailed description to maximize the coverage of variables in AMC data. We have considered the availability difference of features and codes between centers and EMR systemic differences in recording time during medical procedures. The variables are chosen from the schema written by the categorized information. The unrecorded variables were filled with null values, and missing values were replaced with calculated averages within the same inpatient period. In addition, the time gaps between inpatient admissions, receptions, tests, and treatments were ignored, consolidating all related records under a single inpatient number. For instance, records of inpatient admissions within 2 days of an ER visit were combined.

In addition, to ensure two datasets have matching codes, institutional codes of CNUH were mapped into CDM codes that datasets share, assuring corresponding data inputs after one-hot encoding. Codes in diagnosis, surgery, digital/laboratory tests, and procedure/treatments tables were mapped into SNOMED-CT codes, except for some numerical data in laboratory tests, which are mapped based on LOINC. Codes of medication are mapped into corresponding medications of RxNorm or RxNorm extension codes. Categorized codes above are mapped into applicable categories. The manual mapping process was based on CDM, and for gaps between the codes used in each center, codes were mapped based on computerized text-similarity and subsequent manual curation.

Experimental ethics

This study protocol was approved and conducted under the supervision of the Institutional Review Board (IRB) following the Declaration of Helsinki in medical research. All patient-identifiable information was removed following a policy of the Health Insurance Portability and Accountability Act (HIPAA), and the data access was limited to authorized researchers. In addition, the protocol regarding multicenter data was approved by the IRB board of CNUH. Consent from individual subjects was waived by the Institutional Review Board (IRB) due to the retrospective nature of the study protocol. Access to the anonymized data was limited to authorized researchers.

Design of prediction model

Development of sequential model

Unlike basic Multi-Layer Perceptron (MLP), also known as feed-forward DNN, RNN was developed to predict succeeding sequences referring to previous information. The key point of RNN in learning sequential data is based on a recursive update of the model weights adapting the gradient descent algorithm. However, this structure made RNN vulnerable to the vanishing gradient problem as differences of gradients grow smaller over learning process. It also results in the limitation of maintaining long-term dependency when prediction needs to be inferred from far ahead data.

Consequently, LSTM32 was developed to learn sequential data without vanishing gradients or information loss caused by long sequences. A memory cell and gate units are designed to learn a sequence at time step \(\:t\) with the passed information from the cell state at \(\:t-1\). Each memory cell has input \(\:{x}_{t}\) and output \(\:{h}_{t}\) and learns cell state \(\:{C}_{t}\) to maintain or discard information learned from the entire sequence while learning information from new data. Each gate unit consists of several types of layers and holds weights and biases for each layer.

The learning process starts with a forget gate \(\:{f}_{t}\) that controls the information passed from the previous cell \(\:{h}_{t-1}\) with a sigmoid layer. An input gate decides information learned from the present sequence at \(\:t\) with sigmoid and tanh layer, denoted as \(\:{i}_{t}\) and \(\:{\stackrel{-}{C}}_{t}\) respectively. The new cell state is updated following \(\:{C}_{t}=\:{f}_{t}*{C}_{t-1}+{i}_{t}*\:{\stackrel{-}{C}}_{t}\). The output \(\:{h}_{t}\) of the cell is decided from the input \(\:{x}_{t}\) and previous output \(\:{h}_{t-1}\) by the sigmoid layer and multiplied by \(\:\text{tanh}{(C}_{t})\).

Since the emerging of LSTM, further variation was developed such as Bi-directional LSTM (Bi-LSTM)33. It was introduced to learn from information both previous and subsequent data. This model trains forward first as vanilla LSTM explained above, denoted as \(\:\overrightarrow{{h}_{t}}\). The backward states are \(\:\overleftarrow{{h}_{t}}\) learning from the cell state at \(\:t+1\). At the end, outputs of each direction are concatenated and returned.

Later as the state-of-the-art model, the transformer34 was introduced based only on multiple self-attention layers in an encoder-decoder structure. The attention mechanism was known to be effective in modeling sequences, where sequences are modeled based on dependencies between tokens instead of sequential distance between them. Scaled dot-product attention was used to calculate attention score for Query \(\:Q\), Key \(\:K\), and Value \(\:V\) as below.

The dependency of tokens \(\:K\) to input \(\:Q\) are attention distribution and attention value regarding \(\:V\) is calculated based on them. Attention distribution is calculated with applying Softmax on dot-product between \(\:Q\) and \(\:K\), scaled with \(\:{d}_{k}\), dimension of \(\:K\). This attention value is the weighted sum of this distribution and \(\:V\).

Transfer learning

Transfer learning involves adapting a model, pretrained on abundant data, to perform the same task on a target source with limited data8. Due to the lack of access to healthcare datasets, transfer learning in EMR is studied to compensate for the lack of data9,10,11. For transfer learning for different sources, the differences in data distribution between sources are adapted in the training stage12,13and before training via calculation of deviation14or feature importances15. In this study, adapting model was pretrained by AMC dataset and transfer learning was performed for the target dataset of CNUH. We have added the feature selection stage to adjust for the change in importance of a feature.

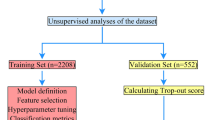

Feature selection

The features of EMR were selected by SHAPley value35 which calculates importance by excluding any bias from the current method through recursive feature elimination and by evaluating the feature’s importance of ML model. The importance of the feature was obtained from the Xgboost model. A tree model consists of classification models, each assigned with trainable weights to supplement the errors from the previous stage. In this study, we utilized the Xgboost as a classifier. An Xgboost is an ensemble method that combines the results of multiple classifiers to achieve better performance. The model is trained with a gradient with parameters λ and γ to avoid overfitting, and the evaluation of performance is estimated with the root mean square error (RMSE). The Xgboost model, yielding the lowest RMSE, was chosen to predict outcomes for 20% of the randomly selected patients via grid search. The feature selection results are shown in Sect. 4.2. Features ranking in the top 50 for feature importance were selected to discard those with lower feature importance and frequency. Furthermore, we have validated the model by comparing its predicted score with survival analysis from the traditional statistics model.

Model architecture

The prediction model uses previous time-series data of selected features of a patient to predict MACE within three years. We have suggested a Self-attention recurrent neural network (SA-RNN) to learn serial data with an attention mechanism. Convolution layers are often used as a feature extractor with other modules suited for the task31, as a convolutional model from previous work, EEGNet30, is combined with the attention module. Likewise, the SA-RNN model consists of convolution blocks as a feature extractor combined with LSTM and the attention module.

Figure 1 illustrates a schematic example of constructing time-series data and the overall architecture of SA-RNN, segmented into three principal sections between an input layer and an output layer. Details of architecture and data flow of SA-RNN are depicted in Fig. 2. The initial section is a feature extraction section composed of three 1-dimensional convolutional (1D-CONV) blocks, represented in green. Each 1D-CONV block consists of two 1-dimensional convolutions with added latencies, dropout, and a LeackyReLu as an activation function. With constructed time-series data as an input, 1-dimensional convolution is applied on a row of features to learn feature-level representation for each row in sequence.

Succeeding the convolutional blocks are two layers of Bi-LSTM units. These blocks are designed to capture progression in time-series data from both forward and reverse directions16. Concluding the architecture is an attention layer, which is employed to weigh the significance of the hidden states learned from the previous sections. Finally, the output layer is trained to classify the occurrence of the MACE learned from patient-level information.

Experimental setting

For internal validation, 20% of patient data was randomly selected, and predictions were made using the chosen feature set. The data distribution of train and test datasets from each source is provided in Supplementary Fig. S1 online. The model was trained with grid search to determine the most suitable parameters. (For details see Supplementary Table S2 online) The three 1D-convolutional blocks used a kernel size of 5 with filter sizes of 64, 128, and 256, respectively. The LeakyReLu is used as an activation function throughout the model with a dropout rate of 0.2. For the two Bi-LSTM layers, the dimensionality of the output space was each set to 50 dimensions. The Adam optimizer was used with a learning rate of le-04.

For transfer learning the small target dataset, the model went through 10 epochs with a small portion of weights set trainable. The 1D-Conv feature extraction was set trainable for it is where the difference between two hospital needs to be learned. In addition, the Attention module and output layers were set trainable to adjust the weights for classification. All weights set trainable except for the two Bi-LSTM layers to freeze where the weights learning sequential data is stored. The learning rate started at le-04 and was adjusted when the performance did not improve per epoch.

Result

Patient characteristics

For the inclusion criteria explained in the method, 49,117 patients satisfied the criteria in the AMC data with a maximum of 100 visits per patient, and 23,889 patients were extracted from CNUH for the target dataset for transfer learning. The baseline characteristics of each dataset are provided in Table 1with p values to indicate the difference between datasets evaluated using the log-rank test. For categorical and continuous variables, statistical analyses such as frequency for categorical and mean with standard deviation for continuous variables are presented. The characteristic variables are selected based on the baseline characteristics of a high-risk group for cardiovascular disease in a recent paper17 with categorized disease of high-risk commodity in data processing method. All analyses were conducted using the R package, version 4.2.

The results indicate that CNUH has a higher frequency of high-risk diseases such as hypertension, arrhythmia, atrial fibrillation, dyslipidemia, and heart failure, whereas AMC exhibits a higher frequency of severe and chronic diseases, such as diabetes mellitus, cancer, renal and liver disease. The difference between hospitals is observed in most of the variables with low p value except for pulmonary embolism. Especially for dyslipidemia, the prevalence was 7-fold higher in the CNUH dataset, likewise for the use of statins (10-fold) and calcium blocker (9.6-fold).

Due to the unavailability and varying distribution of the features across hospitals, the importance of features varied. Consequently, we re-evaluated the importance of features for predicting MACE within three years, and unavailable features were deleted. The feature importance results for the top 30 feature importance scores are shown in Fig. 3. The most important feature of pretrained model is the lab test result of the platelet, followed by the estimated glomerular filtration rate (eGFR). When trained with CNUH data, the feature importance changed to respiratory rate per time (RRPT) scoring the highest, followed by cardiovascular diseases such as Angina or Heart failure.

Feature importance of the top 30 features of AMC (left) and CNUH (right) dataset. Abbreviation: eGFR, estimated glomerular filtration rate; PT, Prothrombin Time test; HbA1c, Hemoglobin A1C test; hsCRP, High-Sensitivity C-reactive protein test; CRP, C-reactive protein test; PTT, partial thromboplastin time; BNP, B-Type Natriuretic Peptide test; LDL, low-density lipoproteins; HDL, high-density lipoproteins; BUN, Blood urea nitrogen test; ESR, Erythrocyte Sedimentation Rate; CKD-EPI, Chronic Kidney Disease Epidemiology Collaboration equation; CK, Creatine Kinase; CCB, Calcium channel blocker; DHP, dihydropyridine; WGHT, Weight; Lp(a), Lipoprotein(a); RR Respiratory rate; ALT, alanine aminotransferase; SGPT, serum glutamic-pyruvic transaminase; MDRD, Modification of Diet in Renal Disease equation; ALP, Alkaline phosphatase isoenzymes level test; SBP, Systolic blood pressure; HGHT, hight; PI11, Proteins Induced by Vitamin K Absence or Antagonis II (PIVKA II).

The platelet ranked highest with AMC dataset in relation to the consumption of anticoagulants in preventing stroke, however it shows importance drop in CNUH datasets. However, the importance of anticoagulants remained high, for example of statin, the importance elevated ranking in 4th when the ___domain shifted. This may show the characteristics of patients with cardiovascular disease using anticoagulants to prevent stroke17. In addition, this result provides clinical feedback that accentuates the importance of the features related to renal disease, such as eGFR. Additionally, RRPT which was initially positioned as the 44th variable of importance in the source dataset, gained prominence in the target dataset. This shift may reflect a bias result from local resource disparities or data insufficiency. A notable rise in the significance attributed to cardiovascular disease correlates with the prevalence figures presented in Table 1, indicating a substantial disparity in the occurrence of heart failure in comparison to the AMC dataset.

Performance of pretrained models

The performance of prediction models is measured via test AUROC and accuracy before and after fine-tuning. The result of prediction models pretrained with AMC dataset was compared between Xgboost, MLP, Logistic regression, Transformer, and SA-RNN model, as shown in Table 2. The SA-RNN model scored the highest with AUROC of 98.0% and accuracy of 0.99, followed by transformer model by a narrow margin scoring 97.0% and 0.98.

Performance change after transfer learning

The transfer learning result was compared with the transformer, the most competitive model with an attention mechanism, as shown in Table 3 and Fig. 4. The performance of the model pre-trained on the source dataset is represented in black with an AUROC of 98.3% with accuracy of 0.99. The initial performance of the model on the CNUH data is an AUROC of 56.4% (blue in Fig. 4) although the accuracy is 0.95, which suggests a performance drop when applied to a new dataset without fine-tuning. The performance on the CNUH dataset significantly improved after fine-tuning, with an AUROC of 82.1%, represented as a red line in Fig. 4. Although the accuracy maintained the same after fine-tuned, we considered the performance improved because the AUROC considers the model performance regarding model trained with imbalanced labels.

However, performance was less improved than SA-RNN after transfer learning, despite the same transfer learning step being adopted. The transformer model shows competitive result in both AUROC and accuracy, 97.0% and 48.9 respectively when trained with the AMC source dataset. However, performance regarding the CNUH dataset could not reach reasonable scores before and after fine-tuned, scoring AUROC of 48.9% then 57.6%.

Statistical validation

The use of prediction score of SA-RNN model was validated by comparing its scores with those derived from traditional hazard analysis. A survival analysis was performed utilizing the Kaplan–Meier model to validate the predicted score of the SA-RNN. The prediction score was divided into three patient categories, namely high, intermediate, and low scores, and each group was analyzed for survival rates. Figure 5 shows an increase in hazard ratio among individuals with low scores. The patient group with high prediction scores, colored in red, has shown significantly lower survival rates in the AMC dataset. Although the survival rates may not differ significantly in the CNUH dataset, the tendency is persistent.

Discussion

In this study, we suggested prediction of MACE a feature selection model based on machine-learned feature importance and trained on the SA-RNN model with selected features to gain scalability for multicentral studies. We cleaned the datasets to minimize systemic and resource-related discrepancies in recording by preprocessing the data. This involved organizing EMR tables for each center and simplifying categories to align institutional codes with a common data model.

Figure 3 illustrates the importance of the feature and confirms that platelet and eGFR were key variables in predicting MACE within three years after MI. This result indicates that the absence of some top 50 features in the AMC dataset across centers impacted their importance. Furthermore, the importance of features was adapted to the CNUH context during the transfer learning of the SA-RNN using recursive feature elimination.

In addition, we have evaluated SA-RNN model to baselines including traditional logistic regression, MLP classifier, and Xgboost and to a more advanced algorithm, transformer in Table 2. The most competitive model transformer was compared for the performance change after transfer learning in Table 3. Lastly, Fig. 4 indicates improvement of model performance when fine-tuned with selected features. The model achieved a higher AUROC when first applied with the CNUH dataset, indicating that transfer learning was utilized in feature selection and fine-tuning of the pretrained model.

Finally, in Fig. 5, we have validated the prediction score by demonstrating the difference in survival rate considering the severity of the score. The tendency for a low survival rate for a high prediction score continued when fine-tuned for the CNUH dataset.

Conclusion and future work

Current models for predicting MACE are based on the regression model with multi-covariates or logistic regression models. The limitation remains in the number of covariates considered, inevitably discarding redundant information with the current models. Thus, we have proven that utilizing the EMR is inevitably effective. In addition, using feature selection methods that employ Xgboost, the effort required to select features related to a clinical study manually was reduced. Furthermore, the predicted score was validated by comparing it with the survival analysis used in medical practice.

By developing the SA-RNN model to predict risk score, the features traditionally selected by the manual selection confirmed the possibility of atomization. Additionally, the feature selection method allows for control over the number of features, suggesting the potential to overcome traditional regression model limitations related to feature quantity.

Furthermore, by predicting MACE utilizing various information gained from EMR, the deep learning models are expected to outperform the current models used in clinical practice. Thus, it would be possible to provide selective treatment and prepare responses at an earlier stage for high-risk patients. Prospectively, the major risk factors discovered by SA-RNN can provide new approaches in setting goals when prescribing clinical treatment. In addition, beyond developing an alternative to the traditional scoring model, future studies can proceed with ___domain adaptation using selected features and integrating feature importance for fine-tuning.

Data availability

The raw clinical data is not publicly available due to reasons of sensitivity involving health information and all access requires the supervision of the Institutional Review Board (IRB) in each hospital. The mapping and generated data of this study are available from the corresponding author upon reasonable request.

References

Timmis, A. et al. European Society of Cardiology: cardiovascular disease statistics 2021. Eur. Heart J. 43(8), 716–799 (2022).

Lee, H. H. et al. Korea heart disease fact sheet 2020: analysis of nationwide data. Korean Circulation J. 51(6), 495 (2021).

Goldsborough, I. I. I., Tasdighi, E., Blaha, M. J. & E., & Assessment of cardiovascular disease risk: a 2023 update. Curr. Opin. Lipidol. 34(4), 162–173 (2023).

Bennett, G. et al. (2013). 2013 ACC/AHA guideline on the assessment of cardiovascular risk.

Li, K. Variable selection for nonlinear cox regression model via deep learning. arXiv Preprint arXiv:221109287. (2022).

Arunachalam, S. Cardiovascular disease prediction model using machine learning algorithms. Int. J. Res. Appl. Sci. Eng. Technol. 8, 1006–1019 (2020).

Chen, T. & Guestrin, C. Xgboost: A scalable tree boosting system. In Proceedings of the 22nd acm sigkdd international conference on knowledge discovery and data mining (pp. 785–794). (2016), August.

Maurer, A. & Jaakkola, T. Algorithmic stability and meta-learning. J. Mach. Learn. Res., 6(6). (2005).

Wiens, J., Guttag, J. & Horvitz, E. A study in transfer learning: leveraging data from multiple hospitals to enhance hospital-specific predictions. J. Am. Med. Inform. Assoc. 21(4), 699–706 (2014).

Bica, I. & van der Schaar, M. Transfer learning on heterogeneous feature spaces for treatment effects estimation. Adv. Neural. Inf. Process. Syst. 35, 37184–37198 (2022).

Lee, G., Rubinfeld, I. & Syed, Z. Adapting surgical models to individual hospitals using transfer learning. In 2012 IEEE 12th international conference on data mining workshops (pp. 57–63). IEEE. (2012), December.

Long, M., Cao, Y., Wang, J. & Jordan, M. Learning transferable features with deep adaptation networks. In International conference on machine learning (pp. 97–105). PMLR. (2015), June.

Ye, R. & Dai, Q. Implementing transfer learning across different datasets for time series forecasting. Pattern Recogn. 109, 107617 (2021).

Ye, R. & Dai, Q. A novel transfer learning framework for time series forecasting. Knowl. Based Syst. 156, 74–99 (2018).

Pudjihartono, N., Fadason, T., Kempa-Liehr, A. W. & O’Sullivan, J. M. A review of feature selection methods for machine learning-based disease risk prediction. Front. Bioinf. 2, 927312 (2022).

Yang, Z. et al. Hierarchical attention networks for document classification. In Proceedings of the 2016 conference of the North American chapter of the association for computational linguistics: human language technologies (pp. 1480–1489). (2016), June.

Cho, M. S. et al.Outcomes after use of standard-and low-dose non–vitamin K oral anticoagulants in Asian patients with atrial fibrillation. Stroke, 50(1), 110–118 (2019).

Grace Investigators. Rationale and design of the GRACE (Global Registry of Acute coronary events) project: a multinational registry of patients hospitalized with acute coronary syndromes. Am. Heart J. 141(2), 190–199 (2001).

Than, M. et al. 2-Hour accelerated diagnostic protocol to assess patients with chest pain symptoms using contemporary troponins as the only biomarker: the ADAPT trial. J. American Coll. Cardiol. 59(23), 2091–2098 (2012).

Eggers, K. M. et al. GRACE 2.0 score for risk prediction in myocardial infarction with nonobstructive coronary arteries. J. Am. Heart Association, 10(17), e021374 (2021).

Brady, W. & de Souza, K. The HEART score: a guide to its application in the emergency department. Turkish J. Emerg. Med. 18(2), 47–51 (2018).

Poldervaart, J. M. et al. Comparison of the GRACE, HEART and TIMI score to predict major adverse cardiac events in chest pain patients at the emergency department. Int. J. cardiol. 227, 656–661 (2017).

Ambale-Venkatesh, B. et al. Cardiovascular event prediction by machine learning: the multi-ethnic study of atherosclerosis. Circulation res. 121(9), 1092–1101 (2017).

Motwani, M. et al. Machine learning for prediction of all-cause mortality in patients with suspected coronary artery disease: a 5-year multicentre prospective registry analysis. Europ. heart J. 38(7), 500–507 (2017).

Wang, M. et al. Predictive value of machine learning algorithm of coronary artery calcium score and clinical factors for obstructive coronary artery disease in hypertensive patients. BMC Med. Inf. Decis. Mak. 23(1), 244 (2023).

Kim, Y. J., Saqlian, M. & Lee, J. Y. Deep learning–based prediction model of occurrences of major adverse cardiac events during 1-year follow-up after hospital discharge in patients with AMI using knowledge mining. Personal. Uniquit. Comput., 1–9. (2022).

Johri, A. M. et al. Deep learning artificial intelligence framework for multiclass coronary artery disease prediction using combination of conventional risk factors,carotid ultrasound, and intraplaque neovascularization. Comput. Biol. Med. 150, 106018 (2022).

Piros, P. et al. Comparing machine learning and regression models for mortality prediction based on the Hungarian myocardial infarction Registry. Knowl. Based Syst. 179, 1–7 (2019).

Antikainen, E. et al. Transformers for cardiac patient mortality risk prediction from heterogeneous electronic health records. Sci. Rep. 13(1), 3517 (2023).

Lawhern, V. J. et al. EEGNet: a compact convolutional neural network for EEG-based brain–computer interfaces. J. Neural Eng. 15(5), 056013 (2018).

Zhu, Y. & Wang, M. D. Automated Seizure Detection using Transformer Models on Multi-Channel EEGs. In 2023 IEEE EMBS International Conference on Biomedical and Health Informatics (BHI) (pp. 1–6). IEEE. (2023), October.

Graves, A. & Graves, A. Long short-term memory. Supervised Seq. Label. Recurr. Neural Networks, 37–45. (2012).

Bahdanau, D., Cho, K. & Bengio, Y. Neural machine translation by jointly learning to align and translate. (2014). arXiv preprint arXiv:1409.0473.

Vaswani, A. Attention is all you need. arXiv preprint arXiv:1706.03762. (2017).

Lundberg, S. M., Erion, G. G. & Lee, S. I. Consistent individualized feature attribution for tree ensembles. arXiv Preprint arXiv:180203888. (2018).

Acknowledgements

This work was supported by the Korea Medical Device Development Fund grant funded by the Korea government (the Ministry of Science and ICT, the Ministry of Trade, Industry and Energy, the Ministry of Health & Welfare, the Ministry of Food and Drug Safety) (Project Number: 1711195603, RS-2020-KD000097) and by a grant of the Korea Health Technology R&D Project through the Korea Health Industry Development Institute (KHIDI), funded by the Ministry of Health & Welfare, Republic of Korea (grant number: HR20C0026).

Author information

Authors and Affiliations

Contributions

Y.K. contributed to model development, study design, and drafting of the manuscript. H.K. contributed in statistical analysis, and interpretation of the results. H.S., H.C., M.K., J.H., G.K., S.P., S.K., H.J., B.K., and S.K. contributed in data collection, data analysis, and interpretation of the results. J.R provided and mapped the data for external validation. T.J. contributed to model development, study design, and supervised the overall project. Y.K. provided study conception and supervised the overall project. All authors have reviewed and approved the final manuscript.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Electronic supplementary material

Below is the link to the electronic supplementary material.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Kim, Y., Kang, H., Seo, H. et al. Development and transfer learning of self-attention model for major adverse cardiovascular events prediction across hospitals. Sci Rep 14, 23443 (2024). https://doi.org/10.1038/s41598-024-74366-9

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-024-74366-9

Keywords

This article is cited by

-

Towards a prescribing monitoring system for medication safety evaluation within electronic health records: a scoping review

BMC Medical Informatics and Decision Making (2025)