Abstract

In practical work scenarios, it is common for multiple vessels to be present in close proximity within the same water area. In such cases, the acoustic signals emitted by these vessels often overlap, causing interference and reducing the accuracy of vessel identification. This paper proposes an improved GALR end-to-end underwater acoustic source separation algorithm, with ECA-DE (Efficient Channel Attention-Deep Encoder) and Bi-GRU (Bidirectional Gated Recurrent Unit), which consists of an encoder, separation blocks, and a decoder (EDBG-GALR). Addressing the issue of GALR encoder’s limited expressiveness due to its single-layer one-dimensional convolutional layer, we introduce a new deep encoder, ECA-DE, to enhance the encoder’s capability to process temporal signals and improve the efficiency of the separator’s input. Additionally, we integrate Bi-GRU into the GALR separation block’s local modeling module to enhance the local modeling ability of sequence features, thereby reducing the model’s computational and parameter requirements. Experimental results demonstrate that the proposed EDBG-GALR method can effectively separate mixed multi-target signals into multiple single-target signals, achieving a maximum scale-invariant signal-to-noise ratio (SI-SNR) improvement of 3.32 dB and a signal distortion ratio (SDR) improvement of 3.38 dB over baseline methods. These results highlight the practical applicability of EDBG-GALR in complex underwater environments.

Similar content being viewed by others

Introduction

Underwater acoustic target recognition technology is a significant research focus in the field of underwater acoustics. Early techniques for underwater acoustic target recognition relied on manual signal analysis, where the accuracy of recognition was heavily influenced by the subjective experience of the researchers, resulting in considerable uncertainty. In the 1990s, researchers began integrating machine learning techniques with underwater acoustic signal feature analysis. They utilized methods such as wavelet transform1 and Hilbert-Huang transform2 to extract target features and employed machine learning models like support vector machines3, BP neural networks, and decision trees for target recognition, yielding positive results. However, while traditional machine learning methods can perform well in certain scenarios, they often lack generalizability. In contrast, deep learning technology, with its powerful feature extraction capabilities, has shown improved results in the field of underwater acoustic target recognition4,5,6,7,8.

Existing research on underwater acoustic target recognition has primarily focused on single-target identification, with fewer studies addressing multi-target recognition. In practical scenarios, it is common for multiple vessels to be present in adjacent waters simultaneously, making it necessary to solve the problem of target recognition under such conditions. In most cases, multi-target localization and tracking are based on spatial information obtained from multi-channel signals9. For example, Tesei A et al.10 achieved the detection of multiple surface vessels using a towed hydrophone array. Urazghildiiev and Hannay11 detected simultaneously present marine mammals and estimated their numbers using a hydrophone array. When a single channel is used and a single hydrophone receives signals from multiple targets, the acoustic signals are superimposed at each time point, which limits the ability to obtain spatial information. This study focuses on single-channel underwater acoustic signals and employs source separation techniques to address the issue of overlapping multi-target vessel acoustic signals. This situation is analogous to the problem of recognizing different speakers in the field of speech recognition. For instance, Silveira et al.12 used independent component analysis to separate different speakers’ voices before performing speaker recognition.

In recent years, deep learning technology has been widely applied to the field of speech separation, significantly advancing its development. Since the introduction of the TasNet13 end-to-end source separation algorithm, processing mixed audio waveforms directly using an encoder-decoder framework has become an efficient method for source separation. TasNet encodes the audio signal and segments it into chunks, which are then processed using Recurrent Neural Networks (RNNs) on the encoded sequences. The separated audio sequences are finally decoded using a decoder. Subsequently, to enhance model performance, researchers have conducted extensive studies on the separator. DPRNN14 utilizes a dual-path RNN to model intra-chunk and inter-chunk sequences of the encoded audio. In contrast to traditional RNNs, DPTNet15 employs a dual-path transformer approach, enabling the model to perform direct context-aware modeling of audio sequences more effectively. Sandglasset16 introduces a self-attentive network with a novel sandglass-shaped structure to process the encoded sequences, thereby improving separation performance. Sepformer17 replaces traditional RNNs with a multi-scale transformer approach to learn both short-term and long-term dependencies, achieving competitive performance. Max W. Y. Lam et al.18 designed a global attention local recurrent (GALR) network model, which employs Bi-LSTM and multi-head attention mechanisms to handle intra-segment and inter-segment sequences, showing solid separation performance on the WSJ0-2mix dataset. GALR, with its lower computational cost and higher operational efficiency, has become a practical model for industrial deployment.

The primary contributions of this paper are as follows: First, we introduce deep learning-based source separation techniques into the underwater acoustic field and propose an enhanced method, EDBG-GALR, for separating underwater acoustic signals, offering a novel solution to the challenge of underwater acoustic multi-target recognition. Second, unlike previous model improvements that primarily focus on the separator while often overlooking the encoder’s performance, we designed a deep encoding module (ECA-DE) to replace the conventional encoder, which typically consists of a single-layer one-dimensional convolution and a ReLU activation function. Lastly, we incorporated bidirectional gated recurrent units (Bi-GRU) for local sequence modeling, significantly improving intra-segment sequence modeling capabilities while simultaneously reducing the model’s computational complexity.

Methods

In real-world scenarios, the underwater acoustic signals of multiple ships often become superimposed, negatively affecting underwater acoustic target recognition. To address this issue, we introduce the deep learning-based GALR model to separate these mixed multi-target signals. However, the single-layer 1D convolutional encoder used in this model has limited encoding capability for time-___domain signals. To enhance its suitability for underwater acoustic signal processing, we propose a novel deep encoder. Considering the increased model parameters introduced by the new encoder, we have implemented lightweight optimizations to the sequential separation module of the GALR model to offset this limitation.

End-to-end underwater acoustic source separation model based on EDBG-GALR

This study employs the GALR model as a baseline and optimizes it to enhance its performance in underwater acoustic source separation tasks. The overall framework of the GALR source separation model comprises three components: an encoder, a separation module, and a decoder. Initially, the encoder transforms the raw mixed audio into a representation of mixed features. Subsequently, the multi-layer stacked separation module models the local and global information of the encoded features to isolate the characteristics of different targets. Finally, the decoder reconstructs the audio signals of the individual targets. However, existing improvement strategies for end-to-end source separation models typically focus on the intermediate separator while neglecting the encoder’s performance. The GALR source separation model utilizes a conventional encoder structure, composed of a single-layer one-dimensional convolutional layer and a ReLU non-linear activation function, which limits the encoder’s ability to represent audio signals. Therefore, this study designs a deep encoder based on an efficient attention mechanism (ECA-DE) to enhance the input efficiency of the separation module. Additionally, the GALR model employs a Bi-LSTM network in the separation module for local modeling. Due to its complex gating mechanism, Bi-LSTM has more parameters than Bi-GRU, which implies that Bi-LSTM may require more data for training and is computationally more expensive. This makes it less suitable for underwater acoustic signal separation tasks with smaller datasets. To improve the modeling capability of intra-segment sequences while reducing computational complexity, this study introduces bidirectional gated recurrent units (Bi-GRU) for local sequence modeling.

The proposed EDBG-GALR underwater acoustic source separation algorithm framework is illustrated in Fig. 1. First, the deep encoder ECA-DE processes the mixed vessel audio to obtain encoded feature sequences. Then, segmentation is performed, dividing the encoded feature sequences into segments, which are concatenated into a three-dimensional tensor to serve as the input for the separation layers. This tensor is processed through a series of N separation layers, each containing local and global modeling modules. This study introduces Bi-GRU blocks for local modeling of intra-segment sequences and employs a multi-head attention mechanism (MHA) for global modeling of inter-segment sequences to predict the feature masks of different sources. Finally, the different masks are multiplied with the input encoded feature sequences to obtain the estimated features of different sources, which are then decoded to reconstruct the target signals of different sources.

Deep encoder based on ECA-DE

Kadıoğlu B et al.19 found that simultaneously increasing the number of convolutional layers in both the encoder and decoder can improve the source separation quality of separation models. In contrast, preliminary experiments in this study revealed that merely adjusting the encoder’s structure can enhance the model’s separation performance. The GALR encoder’s structure comprises a single-layer one-dimensional convolutional layer and a ReLU non-linear activation function. Based on this, we optimized and proposed a new encoder, ECA-DE. This encoder adds extra non-downsampling one-dimensional convolutional layers and an Efficient Channel Attention (ECA) mechanism to enhance the feature aggregation capability of input audio signals. Relevant studies have shown that attention mechanisms have had a profound impact on image processing fields such as Light Field Image Quality Assessment (LFIQA)20 and road semantic segmentation21, and they are also widely applied in the field of sound source separation16. The ECA-DE encoder achieves the feature sampling effect of stacking small filters through multiple convolutional layers and applies an efficient attention mechanism at the end of the encoder. This allows it to dynamically adjust attention according to different parts of the input sequence, thereby better handling long sequence data.

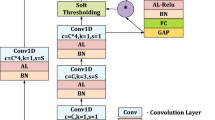

The improved encoder structure is illustrated in Fig. 2. ECA-DE consists of four consecutive one-dimensional convolutional layers and an ECA attention mechanism. The first convolutional layer aligns with the structure in GALR18, determining the output shape of the encoder after processing the input sequence, with the number of channels being K and the filter size being M. This is followed by three additional non-downsampling convolutional layers, each with a kernel size of 3 and a stride of 1, which increase the network depth without altering the sequence shape. Combined with the first convolutional layer, these layers act as stacked small filters. Previous research22, 23 have shown that this method can effectively organize short-term context representations, enhancing the network’s learning capacity. The output of the different convolutional layers is shown in Eq. (1).

where \(\:x\) denotes the input time series, \(\:{e}_{i}\) represents the output of the \(\:i\)-th layer, and \(\:Conv1d\) stands for the one-dimensional convolutional layer.

After sufficient information has been extracted through deep convolution operations, it is necessary to select and weight this information. To this end, the ECA mechanism is introduced to assign weights to the key features of the encoder output. As illustrated in Fig. 3, ECA is a non-dimensionality reduction local cross-channel interaction strategy that effectively avoids the negative impact of dimensionality reduction on channel attention learning. The ECA attention mechanism captures local cross-channel interaction features by considering each channel and its k adjacent frames. First, global average pooling is applied to all channels of the input to obtain a one-dimensional feature sequence. Then, a one-dimensional convolutional layer with a Sigmoid activation function is used to process the feature sequence (where k is the kernel size), resulting in the weights www for each channel. Finally, these weights are multiplied with the corresponding elements of the original input feature map to obtain the output features. The kernel size k is adaptive and proportional to the channel dimension, representing the coverage of local cross-channel interactions. This module efficiently utilizes the information extracted by the deep convolutional layers by weighting the channels of the extracted features. The Sigmoid function controls the weights of different channels between 0 and 1, with less important channels having weights close to 0 and more important channels having weights close to 1. The computational formula for this module is shown in Eq. (2).

where \(\:x\) represents the input information, \(\:F\left(x\right)\) denotes the output information, \(\:Sigmoid\) represents the Sigmoid function, \(\:Conv\) represents the one-dimensional convolution, and \(\:Glopool\) represents the global average pooling.

Through training, ECA enables the model to compute deep correlations between audio features. The shape and dimensions of the tensor remain unchanged before and after applying ECA.

Local time series modeling based on Bi-GRU

To enhance the modeling capability of intra-segment sequences while reducing the computational complexity of the model, we introduced bidirectional gated recurrent units (Bi-GRU) for local sequence modeling. Bi-GRU adopts the mechanism of gated recurrent units (GRU), including reset gates and update gates, and can capture long-term dependencies in time series data from both forward and backward directions simultaneously. The improved local time series modeling module is shown in Fig. 4.

The dimensions and shape of the input tensor are reshaped before and after the local modeling module to meet the input requirements of Bi-GRU and ensure that the output data’s dimensions and shape remain consistent with the input. As shown in Fig. 4, the specific structure consists of Bi-GRU, a linear fully connected layer, and residual connections. Within Bi-GRU, the forward GRU processes the input sequence from left to right, while the backward GRU processes it in the opposite direction, enabling Bi-GRU to consider both past and future context at each time step. By concatenating the outputs of the forward and backward GRUs, a final bidirectional output is formed, allowing the model to capture a more comprehensive understanding of the input sequence. The output data dimensions double after Bi-GRU processing because each step’s output is a concatenation of the forward and backward GRU hidden states. Therefore, after using Bi-GRU, a fully connected layer reduces the dimensions to match the input dimensions for subsequent calculations. Additionally, residual connections are added between the output of the fully connected layer and the input of Bi-GRU, enabling the model to effectively utilize past information and integrate it into the final output, improving performance and mitigating the vanishing gradient problem. Finally, the output with residual connections is normalized, facilitating easier network training.

The gated recurrent unit (GRU) is a variant of the recurrent neural network that effectively captures contextual dependencies in sequential data and mitigates the issues of vanishing and exploding gradients better than most recurrent neural networks24, 25. As shown in Fig. 5, compared to the LSTM structure, the GRU structure improves the design of the gating units by no longer estimating the state information \(\:{S}_{t}\) transmitted in LSTM, significantly reducing the network’s computational complexity.

Compared to the LSTM structure, the GRU structure has fewer gating units and consequently fewer activation functions in the forward propagation process, ultimately reducing the gradient computation complexity during training. The GRU structure significantly reduces the computational load and the number of parameters in the model while maintaining the recurrent neural network’s capability to model time series information. Therefore, for real-time underwater acoustic signal separation scenarios, using GRU instead of LSTM is reasonable, balancing the increased computational load and parameter count introduced by the deep encoder (ECA-DE).

Experiments and results

To validate the effectiveness of the EDBG-GALR underwater acoustic source separation model for separating ship radiated noise and the proposed improvements, this section conducts comparative experiments based on the ShipsEar dataset. The experiments verify the separation performance of ship radiated noise. First, the dataset used in the experiments is described. Next, comparative experiments are conducted to validate the effectiveness of the proposed improvements, ECA-DE and Bi-GRU. Subsequently, network parameters are analyzed. Finally, the separation performance of different end-to-end separation networks is compared.

Dataset

This study uses the publicly available ShipsEar dataset26, recorded at a port on the coast of Spain. The port is located in the Vigo estuary and is a significant port in the region. The recording equipment used for signal collection is the Hyd SR-1 recorder from Mar Sensing, which has a built-in hydrophone with adequate sensitivity and stable frequency response. Figure 5 shows the position for placing the collection device. Whenever possible, 3 hydrophones at different depths and with different gains were used to maximize the dynamic range of the recording. Each hydrophone may capture a different sound, incorporating the recording selection with the highest sound level into the dataset. The ShipsEar database contains 90 audio recordings in WAV format, each ranging from 15 s to 600 s in length, covering 11 different types of vessels and some ocean background noise. This study selects three types of vessels with larger data volumes: passenger ferries, motorboats, and ro-ro vessels. The signals from these three types of vessels are mixed to create a multi-target signal sample set, with each sample containing signals from two vessels. During the audio mixing process, the signals from different vessels are adaptively linearly superimposed at the same sampling rate. If any distortion occurs, audio is normalized to prevent amplitude clipping. Additionally, for the training process, the mixed signals (training samples) are normalized, and the corresponding pure signals are subjected to the same normalization to maintain consistency during loss calculation. The evaluation is performed separately, and the evaluation data does not participate in the training process.

All mixed audio samples are simulated by randomly combining different segments of ship radiated noise, with a sampling rate of 22,050 Hz and a signal-to-noise ratio (SNR) ranging from − 2 dB to 2 dB. The generated dataset includes three combinations of ship radiated noise: passenger ferry and motorboat, passenger ferry and ro-ro vessels, and motorboat and ro-ro vessels. To balance the number of samples in each class, some training and validation samples were randomly discarded, ensuring a balanced distribution for model training and evaluation. The final dataset consists of 5,037 training samples, 531 validation samples, and 522 test samples.

Experimental model training settings

In the experiments, all models were trained using PyTorch on an NVIDIA RTX3060 GPU. This study follows the training protocol from DPRNN, using permutation invariant training on mixed audio of two types of vessels to minimize pairwise SI-SNR loss. For optimization, the Adam optimizer was employed with an initial learning rate of 10−3 and a weight decay rate of 10−6. The learning rate decayed exponentially, with a decay factor of 0.96 every two epochs. An early stopping strategy was implemented, terminating training if the validation loss did not decrease for 10 consecutive epochs. For evaluation metrics, the maximum scale-invariant signal-to-noise ratio (SI-SNR) and signal distortion ratio (SDR) were used to assess all models. Since the experiment produces multiple output signals, the metrics were calculated for each separated signal, and the results were averaged. Table 1 presents the hyperparameters of the models.

Ablation study

Comparative experiments on improved encoder and local modeling

To validate the effectiveness of the improved encoder and local modeling modules, this section conducts an ablation study on these components. For comparison, GALR represents the baseline model. GALR (Bi-GRU) represents the model with an improved local modeling module, and GALR (ECA-DE) represents the model using the improved encoder. The final improved model is named EDBG-GALR. The experimental results validating the effectiveness of the improved encoder and local modeling are presented in Table 2.

The experimental results show that the proposed ECA-DE + Bi-GRU method effectively enhances the model’s separation performance. The GALR (Bi-GRU) model achieved an SI-SNR of 10.10 dB and reduced the parameter count by 0.39 MB, indicating that Bi-GRU improves separation performance while significantly reducing computational cost. The GALR (ECA-DE) model achieved an SI-SNR of 11.69 dB, demonstrating that the ECA-DE module significantly enhances the model’s separation performance by providing deep encoding information for the separation block, compared to the traditional one-dimensional convolutional encoder. The combination of ECA-DE and Bi-GRU leverages the advantages of both, improving audio separation performance and reducing the model’s parameter count.

Demonstration of model separation performance

The two-dimensional time-frequency spectrogram of audio signals can visually demonstrate the separation performance of the improved model. Figure 6 shows the spectrogram of a mixed sample with a signal-to-noise ratio of 0 from the test set and the separated audio signals.

As shown in Fig. 6, (a) is the time-frequency spectrogram of the mixed audio signal of a passenger ferry and a motorboat, (b) and (c) are the spectrograms of the pure motorboat and passenger ferry audio signals, respectively, and (d) and (e) are the spectrograms of the estimated motorboat and passenger ferry signals separated by the EDBG-GALR model. It is evident that the model can successfully separate the audio signals of the passenger ferry and motorboat. The spectrograms of the separated signals have clear energy distribution contours and significantly reduce the original background ocean noise. Based on human auditory perception and model evaluation metrics, the proposed EDBG-GALR model demonstrates superior separation performance.

Comparative experiments on encoder structures

The encoder maps input data into high-level feature representations, providing more efficient input features for subsequent tasks. Its structure significantly impacts the performance of the subsequent model separation layers. To determine the optimal encoder structure for this study, balancing model parameter count and separation capability, this section conducts comparative experiments with different numbers of additional convolutional layers and efficient channel attention mechanisms. To validate the effectiveness of ECA-DE, two sets of experiments were conducted.

The first set of experiments analyzed the impact of different encoders on the EDBG-GALR underwater acoustic source separation model. Based on the EDBG-GALR source separation model, four types of encoders were tested: the traditional one-dimensional convolutional encoder (hereafter referred to as 1D), the one-dimensional convolutional encoder with efficient channel attention (hereafter referred to as ECA-1D), the deep convolutional encoder with additional convolutional layers (hereafter referred to as DE), and the proposed encoder ECA-DE.

The comparative experimental results of the four different encoder structures are presented in Table 3. First, it was observed that the traditional single-layer one-dimensional convolutional encoder (1D) performed poorly compared to other structures, with an SI-SNR of 10.10 dB and an SDR of 12.25 dB. This suggests that a single-layer one-dimensional convolution may not sufficiently extract deep features from the input data. To enhance performance, an efficient channel attention mechanism (ECA) was introduced and combined with the one-dimensional convolutional encoder, forming ECA-1D. Although ECA-1D successfully selected key features from different channels in the encoding layer output, the improvement in evaluation metrics was not significant. This limitation may stem from the limited ability of the single-layer one-dimensional convolution to extract deep information. To address this issue, a deep encoder (DE) was introduced by adding additional non-downsampling convolutional layers after the first convolutional encoding layer. The stacked non-downsampling convolutional layers retained the spatial resolution of the first convolutional layer’s output data, better capturing deep features and improving the modeling capability for complex audio. Compared to using only the efficient channel attention mechanism (ECA), DE showed a notable performance improvement in SI-SNR and SDR by 0.97 dB and 1.02 dB, respectively. Finally, combining the deep encoder (DE) with the efficient channel attention mechanism (ECA-1D) resulted in ECA-DE. This encoder achieved the best performance, with SI-SNR and SDR of 12.83 dB and 14.36 dB, respectively, enhancing the performance of the ship radiated noise source separation model. This demonstrates that combining deep feature extraction with an efficient channel attention mechanism is an effective strategy for audio signal encoding tasks, providing a significant improvement in the quality of separated signals.

In deep learning tasks, balancing model complexity and performance improvement is a crucial consideration, necessitating further optimization of the model structure to achieve optimal performance. The second set of experiments explored the impact of the number of additional convolutional layers in ECA-DE, combined with the efficient channel attention mechanism, on the separation performance. The baseline for comparison in these experiments was set as ECA-1D, with evaluation metrics of SI-SNR at 10.10 dB and SDR at 12.25 dB.

In the experiments, the number of additional one-dimensional convolutional layers was varied to observe the model’s performance changes under different layer counts. The experimental results are shown in Table 4. When one convolutional layer was added, the evaluation metric SI-SNR increased from the baseline of 10.10 dB to 10.71 dB, and SDR improved from 12.25 dB to 13.03 dB. Adding two convolutional layers further enhanced the metrics, with SI-SNR rising to 12.27 dB and SDR increasing to 13.74 dB. When the third convolutional layer was added, SI-SNR significantly increased to 12.83 dB and SDR to 14.36 dB. These results suggest that the efficient channel attention mechanism better captures the correlations and complexities between signals when handling features extracted by multiple convolutional layers, resulting in improved separation performance. However, it is noteworthy that adding a fourth convolutional layer resulted in less significant performance improvements, and adding a fifth layer even led to a slight decline, indicating that excessive convolutional layers may introduce redundant information or overfitting. Therefore, adding three additional convolutional layers to the ECA-1D structure strikes a balance between performance and model complexity.

Comparative experiments on model parameters

To further investigate the structural parameters of the EDBG-GALR model for achieving optimal separation performance, this section compares various combinations of different encoder-decoder channel counts (D), filter sizes (M), and intra-segment sequence lengths (K) during the segmentation process. The goal is to identify the optimal structural parameters for the EDBG-GALR model. Additionally, the separation performance of the EDBG-GALR model is compared with the baseline GALR model. Table 5 presents the comparative experimental results of different model parameters.

As shown in Tables 4 and 5, the model performance for combinations (1) through (9) was compared under different parameter settings. The separation performance of the three combinations with 64 channels was significantly lower than that of the three combinations with 128 channels, while the combinations with 258 channels slightly outperformed those with 128 channels overall. As the number of channels increased, the evaluation metrics generally showed an upward trend, indicating that a higher number of channels enables the model to have more complex feature representation capabilities, thus improving separation performance. However, this also has a notable drawback: the increase in the number of channels leads to a corresponding exponential growth in model parameters.

Similar to the DPRNN model14, using shorter filter sizes (M) resulted in better separation performance, but the associated computational burden also increased. For very long input sequences, a larger intra-segment sequence length (K) is required to improve the model’s computational performance when the window length is small18. Therefore, the impact of different parameter combinations on various evaluation metrics was compared. In addition to SI-SNR and SDR, the computational cost of processing 1 s of mixed input for each model was analyzed, and the floating-point operations required to separate a single sample (GFLOPs) were calculated. It was observed that, compared to the baseline GALR, the proposed method reduced computational operations by 16.2%, reduced runtime memory by 23.6%, and required less training time while maintaining fewer parameters.

Overall, the combination of encoder-decoder channel count of 128, filter size of 4, and segment sequence length of 200 achieved the best separation performance. This parameter combination improved SI-SNR and SDR while reducing the model’s parameter count and computational load.

Comparison with other end-to-end source separation models

To demonstrate the effectiveness of the proposed EDBG-GALR model, this section conducts comparative experiments with current mainstream end-to-end source separation models, including Conv-TasNet, DPRNN, DPTNet, and the proposed EDBG-GALR. All models were trained using the same methods as described in this paper. The separation performance on the test set is shown in Table 6.

As shown in Table 6, by comparing four different methods for the audio separation task, EDBG-GALR achieved superior performance with a smaller parameter count (2.06 MB), yielding higher SI-SNR (12.83 dB) and SDR (14.36 dB). This indicates that the EDBG-GALR method can more accurately model time series while maintaining relatively low computational resource consumption, resulting in excellent performance in the task of separating ship radiated noise. This has practical implications for audio processing in resource-constrained environments.

Conclusions

To address the problem of overlapping multi-target ship underwater acoustic signals in real-world scenarios, this paper proposes an improved GALR underwater acoustic source separation algorithm, EDBG-GALR, to separate mixed ship radiated noise signals. First, an ECA-DE deep encoder was designed to provide more efficient encoded feature representations for the separation module of the GALR source separation model. Additionally, a Bi-GRU module was introduced to enhance the efficiency and performance of the separation blocks. Ablation experiments quantitatively validated the contributions of the ECA-DE deep encoder and Bi-GRU local modeling to the improvement in separation performance. The EDBG-GALR model can successfully separate each ship signal from the mixed signals, achieving a maximum scale-invariant signal-to-noise ratio (SI-SNR) improvement of 3.32 dB and a signal distortion ratio (SDR) improvement of 3.38 dB over baseline methods. Combined with underwater acoustic target recognition algorithms, this method provides a new and effective solution for the challenge of multi-target recognition in underwater acoustics, demonstrating its potential for practical applications in complex marine environments. Additionally, although our current work focuses on separating two mixed signals, the model is theoretically capable of separating more sources. For future research, we plan to investigate the impact of decoder modifications on overall model performance and explore the separation of more than two mixed signals.

Data availability

The data used to support the findings of this study are available from the corresponding author upon request.

References

Li, Y. et al. Research on gear signal fault diagnosis based on wavelet transform denoising. J. Phys.: Conf. Ser. 1971, 012074 (2021).

Yao, Q., Wang, Y. & Yang, Y. Underwater acoustic target recognition based on Hilbert–Huang transform and data augmentation. IEEE Trans. Aerosp. Electron. Syst. 60, 7336–7353 (2024).

Zeng, X., Wang, Y. & Li, Z. Bark-wavelet analysis and Hilbert–Huang transform for underwater target recognition. Def. Technol. 9, 115–120 (2012).

Sabara, R. & Jesus, S. Underwater acoustic target recognition using graph convolutional neural networks. J. Acoust. Soc. Am. 144, 1744 (2018).

Tian, S. et al. Deep convolution stack for waveform in underwater acoustic target recognition. Sci. Rep. 11, 9614 (2021).

Li, C. et al. A feature optimization approach based on inter-class and intra-class distance for ship type classification. Sensors. 20, 5429 (2020).

Wang, W., Zhao, X. & Liu, D. Design and optimization of 1d-cnn for spectrum recognition of underwater targets. Integr. Ferroelectr. 218, 164–179 (2021).

Kim, K. I. et al. A method for underwater acoustic signal classification using convolutional neural network combined with discrete wavelet transform. Int. J. Wavelets Multiresolut. Inf. Process. 19, 2050092 (2021).

Yang, H. et al. Underwater acoustic research trends with machine learning: Passive SONAR applications. J. Ocean. Eng. Technol. 34, 227–236 (2020).

Tesei, A., Meyer, F. & Been, R. Tracking of multiple surface vessels based on passive acoustic underwater arrays. J. Acoust. Soc. Am. 147, EL87–EL92 (2020).

Urazghildiiev, I. R. & Hannay, D. E. Passive acoustic detection and estimation of the number of sources using compact arrays. J. Acoust. Soc. Am. 143, 2825–2833 (2018).

Silveira, M. A. et al. Convolutive ICA-based forensic speaker identification using mel frequency cepstral coefficients and gaussian mixture models. Int. J. Forensic Comput. Sci. 8, 27–34 (2013).

Luo, Y. & Mesgarani, N. TaSNet: Time-___domain audio separation network for real-time, single-channel speech separation. 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 696–700 (2018).

Luo, Y., Chen, Z. & Yoshioka, T. Dual-path rnn: efficient long sequence modeling for time-___domain single-channel speech separation. ICASSP 2020–2020 IEEE Int. Conf. Acoust. Speech Signal. Process. (ICASSP). IEEE, 46–50 (2020).

Chen, J., Mao, Q. & Liu, D. Dual-path transformer network: direct context-aware modeling for end-to-end monaural speech separation. arXiv Preprint (2020). arXiv:2007.13975.

Lam, M. W. Y., Wang, J., Su, D. & Yu, D. Sandglasset: a light multi-granularity self-attentive network for time-___domain speech separation. 2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 5759–5763 (2021).

Subakan, C., Ravanelli, M., Cornell, S., Bronzi, M. & Zhong, J. Attention is all you need in speech separation. 2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 21–25 (2021).

Lam, M. W. Y. et al. Effective low-cost time-___domain audio separation using globally attentive locally recurrent networks. 2021 IEEE Spoken Language Technology Workshop (SLT). IEEE, 801–808 (2021).

Kadıoğlu, B. et al. An empirical study of Conv-TasNet. ICASSP 2020–2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). IEEE, 7264–7268 (2020).

Zhang, Z., Tian, S., Zhang, Y., Zou, W., Morin, L. & Zhang, L. Blind perceptual quality assessment of LFI based on angular-spatial effect modeling. IEEE Trans. Broadcast. 70, 290–304 (2024).

Zhou, Z., Zhang, Y., Hua, G., Long, R., Tian, S. & Zou, W. SPNet: An RGB-D sequence progressive network for road semantic segmentation. 2023 IEEE 25th International Workshop on Multimedia Signal Processing (MMSP), 1–6 (2023).

Hershey J. R. et al. Deep clustering: discriminative embeddings for segmentation and separation. 2016 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). IEEE, 31–35 (2016).

Liu Y. & Wang D. L. Divide and conquer: a deep CASA approach to talker-independent monaural speaker separation. IEEE/ACM Trans. Audio Speech Lang. Process. 27, 2092–2102 (2019).

Chung, Junyoung, et al. "Empirical evaluation of gated recurrent neural networks on sequence modeling." arXiv preprint arXiv:1412.3555 (2014).

Yang S., Yu X. & Zhou Y. LSTM and GRU neural network performance comparison study: taking Yelp review dataset as an example. 2020 International Workshop on Electronic Communication and Artificial Intelligence (IWECAI). IEEE, 98–101 (2020).

Santos-Domínguez D. et al. ShipsEar: an underwater vessel noise database. Appl. Acoust. 113, 64–69 (2016).

Luo Y. & Mesgarani N. Conv-tasnet: surpassing ideal time–frequency magnitude masking for speech separation. IEEE/ACM Trans. Audio Speech Lang. Process. 27, 1256–1266 (2019).

Acknowledgements

The work was financially supported by Hubei Key Research and Development Program of China (2022BAA099).

Author information

Authors and Affiliations

Contributions

All authors contributed to the study’s conception and design. The first draft of the manuscript was written by YY and JF, and all authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Yu, Y., Fan, J. & Cai, Z. End-to-end underwater acoustic source separation model based on EDBG-GALR. Sci Rep 14, 24748 (2024). https://doi.org/10.1038/s41598-024-76602-8

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-024-76602-8