Abstract

In recent years, weakly supervised methods for video anomaly detection have proliferated. Despite significant progress, existing work has primarily addressed the problem in Euclidean space. This imposes a fundamental limitation: the dimensionality of a Euclidean embedding space constrains its ability to model complex patterns and long-term contextual information, and the resulting insufficient video representations can lead to misjudged anomalous events. Hyperbolic space, by contrast, has shown significant potential for modeling complex data. In this paper, we rethink weakly supervised video anomaly detection from a new perspective: transforming video features from Euclidean space into hyperbolic space may enable the network to learn implicit relationships between normal and anomalous videos, thereby improving its ability to distinguish between them. To validate our approach, we conduct extensive experiments on the UCF-Crime and XD-Violence datasets. Experimental results show that our method has the fewest parameters among the compared methods and achieves state-of-the-art performance on the XD-Violence dataset using only RGB information.


Introduction

With the increasing prevalence of surveillance applications in daily life, the volume of surveillance video keeps growing. This surge makes manual monitoring for anomalous events such as fights, abuse, accidents, and theft increasingly impractical. Video anomaly detection has been studied in computer vision for several years, and its methods can be categorized into three types: fully supervised methods, weakly supervised methods, and unsupervised methods1. Fully supervised methods require frame-level annotations for both normal and anomalous instances in the training data, whereas weakly supervised methods need only video-level annotations, and unsupervised methods do not rely on any annotated training data. Because surveillance applications require large amounts of training data, labeling every frame is labor-intensive and time-consuming, which makes fully supervised methods impractical. Weakly supervised methods have therefore become widely popular in video anomaly detection, since video-level labels avoid the cost of fine-grained manual annotation.

Previous works2,3,4,5,6,7 have predominantly employed deep neural networks to learn video feature representations in Euclidean space. Although these Euclidean methods achieve satisfactory performance, they struggle to distinguish positive from negative instances that are very similar. Recently, hyperbolic neural networks have shown great potential for modeling intricate data structures across various domains, including word embeddings8, biological sequences9, social networks10, and recommender systems11,12. In these works, low-dimensional hyperbolic feature spaces achieve performance comparable to, or even better than, high-dimensional Euclidean neural networks. Unlike in Euclidean space, the volume of hyperbolic space grows exponentially with distance from the origin, which leaves more room to separate positive and negative instances. Hyperbolic space is also better suited than Euclidean space to embedding hierarchical and tree-like data structures, which can further help the network differentiate positive from negative instances. We therefore hypothesize that normal and anomalous videos share implicit relationships, and that mapping video features into hyperbolic space can help the network learn richer feature representations and thus better distinguish anomalies. Our contributions are summarized as follows:

- We design a lightweight video anomaly detection network based on the Poincaré ball model and the Lorentz model. This is a preliminary attempt to apply hyperbolic space to the field of video anomaly detection.
- We design the Feature Similarity Graph (FSG) branch and the Temporal Relation Graph (TRG) branch, which exploit the feature similarity and temporal correlation between video segments and embed these intrinsic relationships into hyperbolic space to learn video representations.
- We conduct extensive experiments on two large-scale datasets, XD-Violence and UCF-Crime. Our method achieves the best performance on XD-Violence, demonstrating the effectiveness of the proposed approach.

Related work

Hyperbolic neural networks

Hyperbolic space has gained significant traction in machine learning because of its high capacity and tree-like properties. Nickel et al.13 introduced Poincaré embeddings for learning representations of symbolic data and showed experimentally that they outperform Euclidean embeddings on complex data. Ganea et al.14 derived hyperbolic versions of important deep learning tools, such as multinomial logistic regression, feed-forward networks, and recurrent neural networks, defining hyperbolic neural networks that bridge the gap between hyperbolic space and deep learning. Chami et al.15 extended graph convolutional neural networks (GCNs) to hyperbolic geometry by deriving hyperbolic feature transformation and aggregation operations, introducing hyperbolic graph convolutional networks. These advances led to the rapid development of hyperbolic neural networks, with Euclidean architectures adapted to hyperbolic space for diverse scenarios and tasks. For instance, Tifrea et al.8 achieved excellent results on word similarity, analogy, and hypernymy detection tasks by embedding words into hyperbolic space based on the notions of hyperbolicity and treeness. Yu et al.16 exploited properties of the hyperbolic Lorentz model for drug discovery based on chemical structures and relationships; the learned hyperbolic embeddings can be used to discover new drug uses and side effects. Wang et al.11 proposed a hyperbolic graph convolutional network for recommender systems that better models user-item interactions. These developments show that hyperbolic neural networks can match or even exceed the learning capability of complex Euclidean deep neural networks. We therefore apply hyperbolic neural networks to video anomaly detection to mitigate the misclassification of anomalous events caused by the limited representational capacity of Euclidean space.

Weakly supervised video anomaly detection

Sultani et al.2 proposed a deep multiple instance learning (MIL) framework that uses C3D as a feature extractor. They treated normal and abnormal videos as bags and video segments as instances. To separate abnormal from normal instances, they introduced a deep MIL ranking loss on the instances' anomaly scores, and they released UCF-Crime, a large-scale video anomaly detection dataset. Wan et al.3 formulated weakly supervised video anomaly detection as a regression problem that predicts anomaly scores for video segments; to learn discriminative features, they designed AR-Net with a dynamic multiple instance learning loss and a center loss. Tian et al.4 hypothesized that, within the same or similar video sequences, abnormal segments have larger feature magnitudes than normal ones, and introduced the robust temporal feature magnitude (RTFM) loss, which increases the feature magnitudes of abnormal segments while decreasing those of normal segments. Chen et al.5 argued that forcing abnormal magnitudes up and normal magnitudes down through the RTFM loss may be unreasonable, because it contradicts the inherent magnitude distribution of videos and can hinder training. To address this, they proposed MGFN, which includes a feature amplification mechanism and a magnitude contrastive loss to enhance feature discrimination. Zhou et al.7 introduced the uncertainty regulated dual memory units (UR-DMU) model, which learns representations of normal data and discriminative features of abnormal data. To strengthen the network's ability to capture video correlations, they integrated global and local multi-head self-attention modules into a transformer network, yielding more expressive embeddings.

Zhong et al.17 offered a different perspective by casting the task as supervised learning under noisy labels, using graph convolutional networks (GCNs) to correct the label noise. Wu et al.18 released the XD-Violence dataset, a large multi-scene dataset spanning 217 hours and comprising 4754 untrimmed videos with audio signals and weak labels. Their neural network with three parallel branches, which captures different relationships among video segments and integrates their features, notably advanced multimodal approaches to violence detection. Like Zhong et al.17 and Wu et al.18, our method builds the relation matrices of graph convolutional networks from feature similarity and temporal relevance. However, our approach differs significantly: we map the relation matrices into hyperbolic space, where they can be embedded with lower distortion, and employ hyperbolic graph convolutional networks suited to that space. In addition, our framework is simpler and achieves better results using only the RGB modality.

Preliminaries

Hyperbolic geometry is a non-Euclidean geometry with constant negative curvature K. Several models of hyperbolic geometry have been used in previous studies: the Poincaré ball model14, the Poincaré half-plane model19, the Klein model20, and the Lorentz model21. These models are isometric to one another: any point in one model can be mapped to a point in another by an appropriate transformation that preserves distances. Because their exponential and logarithmic maps have closed forms and their distance functions are numerically stable and simple to compute14, we adopt the Poincaré ball model and the Lorentz model as the foundation of our framework.

Poincaré ball model

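The Poincaré ball model \({\mathbb {P}}^n=(\beta ^n,g_x )\) of hyperbolic space is defined by the manifold \(\beta ^n= \left\{ x \in {\mathbb {R}}^n : \sum _{i=1}^{n} x_i^2< -\frac{1}{K} \right\}\) equipped with the Riemannian metric \(g_x = \lambda _x^2\, g^E\), with conformal factor \(\lambda _x = \frac{2}{1 + K\Vert x\Vert ^2}\),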

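where \(g^E\) is the Euclidean scalar product. Furthermore, the distance between any two points \(p,q \in \beta ^n\) is:

\[ d_{\mathbb {P}}(p,q) = \frac{1}{\sqrt{-K}} \operatorname{arcosh}\left( 1 - \frac{2K\Vert p-q\Vert ^2}{\left( 1+K\Vert p\Vert ^2\right) \left( 1+K\Vert q\Vert ^2\right) }\right) \]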
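To make the Poincaré distance concrete, here is a minimal pure-Python sketch of the standard closed form for curvature K < 0. It is an illustration under our own assumptions (points given as plain lists, a hypothetical helper name `poincare_distance`), not the implementation used in our experiments.

```python
import math


def _sq_norm(v):
    return sum(t * t for t in v)


def poincare_distance(p, q, K=-1.0):
    """Geodesic distance between p and q on the Poincaré ball of curvature K < 0.

    Implements d(p, q) = arcosh(1 - 2K|p-q|^2 / ((1 + K|p|^2)(1 + K|q|^2))) / sqrt(-K).
    """
    diff = _sq_norm([a - b for a, b in zip(p, q)])
    denom = (1.0 + K * _sq_norm(p)) * (1.0 + K * _sq_norm(q))
    if denom <= 0.0:
        raise ValueError("points must lie inside the ball of radius 1/sqrt(-K)")
    return math.acosh(1.0 - 2.0 * K * diff / denom) / math.sqrt(-K)
```

For example, with K = -1 the distance from the origin to (0.5, 0) is ln 3, about 1.0986, and it grows without bound as either point approaches the boundary of the ball.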

Lorentz model

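\({\mathbb {L}}^n_K=({\mathbb {L}}^n,g^K_x )\) denotes an n-dimensional Lorentz model with constant negative curvature K, where \(g^K_x\) is the Riemannian metric tensor. Every point \(x \in {\mathbb {L}}^n_K \subset {\mathbb {R}}^{n+1}\) satisfies:

\[ \langle x, x\rangle _{\mathbb {L}} = \frac{1}{K}, \qquad x_0 > 0 \]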

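where \(\langle \cdot , \cdot \rangle _{\mathbb {L}}\) denotes the Lorentz inner product, defined for \(x, y \in {\mathbb {R}}^{n+1}\) as:

\[ \langle x, y\rangle _{\mathbb {L}} = -x_0 y_0 + \sum _{i=1}^{n} x_i y_i \]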

Exponential and logarithmic maps

In both the Lorentz and Poincaré ball models, the tangent space at a point x is denoted \({\mathbb {T}}_{x}\). Points in hyperbolic space and vectors in the tangent space are converted into each other by the exponential and logarithmic maps, whose formulas differ between the two models. Taking the Lorentz model as an example, we present these two maps below. For a point \(x \in {\mathbb {L}}^n_K\), the tangent space \({\mathbb {T}}_{x}{\mathbb {L}}_{K}^{n}\) is a Euclidean subspace of \({\mathbb {R}} ^{n+1}\) consisting of all vectors orthogonal to x under the Lorentz inner product:

\[ {\mathbb {T}}_x{\mathbb {L}}^n_K = \left\{ z \in {\mathbb {R}}^{n+1} : \langle x, z\rangle _{\mathbb {L}} = 0 \right\} \]

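A tangent vector \(z \in {\mathbb {T}}_x{\mathbb {L}}^n_K\) is mapped onto the Lorentz hyperbolic space \({\mathbb {L}}^n_K\) by the exponential map:

\[ \exp ^K_x(z) = \cosh \left( \sqrt{-K}\Vert z\Vert _{\mathbb {L}}\right) x + \sinh \left( \sqrt{-K}\Vert z\Vert _{\mathbb {L}}\right) \frac{z}{\sqrt{-K}\Vert z\Vert _{\mathbb {L}}}, \qquad \Vert z\Vert _{\mathbb {L}} = \sqrt{\langle z, z\rangle _{\mathbb {L}}} \]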

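Conversely, the logarithmic map sends a point \(y \in {\mathbb {L}}^n_K\) back to the tangent space \({\mathbb {T}}_x{\mathbb {L}}^n_K\):

\[ \log ^K_x(y) = d^K_{\mathbb {L}}(x,y)\, \frac{y - K\langle x, y\rangle _{\mathbb {L}}\, x}{\Vert y - K\langle x, y\rangle _{\mathbb {L}}\, x\Vert _{\mathbb {L}}} \]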

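Here, \(d^K_{\mathbb {L}}(x,y)\) denotes the intrinsic (geodesic) distance between two points \(x,y \in {\mathbb {L}} ^n_K\) in the Lorentz model, given by:

\[ d^K_{\mathbb {L}}(x,y) = \frac{1}{\sqrt{-K}} \operatorname{arcosh}\left( K\langle x, y\rangle _{\mathbb {L}}\right) \]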
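The Lorentz-model maps can likewise be sketched in a few lines. This is a minimal pure-Python illustration, not the authors' implementation; it assumes curvature K < 0, the origin \(o=(1/\sqrt{-K},0,\dots ,0)\), and the standard closed forms (exponential map via cosh and sinh, logarithmic map via projection onto the tangent space at x, distance via arcosh). All function names are hypothetical.

```python
import math


def lorentz_inner(x, y):
    """Lorentz inner product <x, y>_L = -x0*y0 + sum_i xi*yi."""
    return -x[0] * y[0] + sum(a * b for a, b in zip(x[1:], y[1:]))


def lorentz_distance(x, y, K=-1.0):
    """d(x, y) = arcosh(K <x, y>_L) / sqrt(-K)."""
    arg = max(K * lorentz_inner(x, y), 1.0)  # guard against rounding just below 1
    return math.acosh(arg) / math.sqrt(-K)


def exp_map(x, z, K=-1.0):
    """Map a tangent vector z at x (with <x, z>_L = 0) onto the hyperboloid."""
    norm = math.sqrt(max(lorentz_inner(z, z), 0.0))
    if norm < 1e-15:
        return list(x)
    theta = math.sqrt(-K) * norm
    return [math.cosh(theta) * a + math.sinh(theta) * b / theta for a, b in zip(x, z)]


def log_map(x, y, K=-1.0):
    """Map a point y on the hyperboloid to the tangent space at x."""
    xy = lorentz_inner(x, y)
    u = [b - K * xy * a for a, b in zip(x, y)]  # component of y orthogonal to x
    norm = math.sqrt(max(lorentz_inner(u, u), 0.0))
    if norm < 1e-15:
        return [0.0] * len(x)
    d = lorentz_distance(x, y, K)
    return [d * t / norm for t in u]


def origin(n, K=-1.0):
    """Origin (1/sqrt(-K), 0, ..., 0) of the n-dimensional Lorentz model."""
    return [1.0 / math.sqrt(-K)] + [0.0] * n
```

Mapping a tangent vector at the origin onto the hyperboloid and back recovers it, and the geodesic distance from the origin to its image equals the vector's Lorentz norm; this round trip is a useful sanity check for any implementation of these maps.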

Graph convolutional neural networks

In Euclidean space, feature transformation and neighborhood aggregation are the two core operations of graph convolutional neural networks, which can be written in simplified form as:

where N(i) denotes the neighbors of node i, \(W^l\) and \(b^l\) are weights and bias parameters for layer l, \(\sigma\) is a non-linear activation function.
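One common way to write these two operations, as in Chami et al.15, is

\[ h_i^l = W^l x_i^{l-1} + b^l, \qquad x_i^l = \sigma \Bigl( h_i^l + \sum _{j \in N(i)} w_{ij}\, h_j^l \Bigr), \]

where \(w_{ij}\) are aggregation weights (an assumption here; this section does not fix the weighting scheme). The pure-Python sketch below implements such a Euclidean layer with ReLU as \(\sigma\); the helper names are hypothetical.

```python
def relu(v):
    return [max(0.0, t) for t in v]


def gcn_layer(X, A, W, b, act=relu):
    """One Euclidean GCN layer: h_i = W x_i + b, x_i' = act(h_i + sum_j A[i][j] h_j).

    X: node feature vectors; A: aggregation weights (A[i][j] = 0 for non-neighbours);
    W: weight matrix as a list of rows; b: bias vector.
    """
    # Feature transformation: h_i = W x_i + b
    H = [[sum(w * x for w, x in zip(row, xi)) + bk for row, bk in zip(W, b)] for xi in X]
    out = []
    for i, hi in enumerate(H):
        # Neighbourhood aggregation: start from the node itself, add weighted neighbours
        agg = list(hi)
        for j, hj in enumerate(H):
            if j != i and A[i][j] != 0:
                agg = [a + A[i][j] * t for a, t in zip(agg, hj)]
        out.append(act(agg))
    return out
```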

The proposed method

Overview

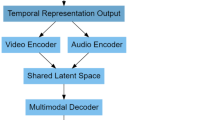

In this section, we describe the proposed model architecture, whose overall structure is shown in Fig. 1. First, an I3D network extracts features from the raw video, and these features are uniformly divided into T segments. The model consists of two main branches, the Feature Similarity Graph (FSG) branch and the Temporal Relation Graph (TRG) branch, which capture the feature similarity and the temporal relationships between graph nodes, respectively. These relationships are used to construct adjacency matrices, which are then embedded into the Lorentz and Poincaré hyperbolic spaces to learn more expressive video features. Finally, the learned hyperbolic video features are passed through a fully connected (FC) layer to predict an anomaly score for each video segment, and the average of the top k segment scores is taken as the anomaly score of the whole video.

Formulation and problem statement

The goal of video anomaly detection is to determine whether a video contains abnormal events and, if so, to localize them within the video sequence. In the weakly supervised setting, the training set provides video sequences \({X}=\left\{ x_i \right\} ^n_{i=1}\) and video-level labels \(Y=\left\{ y_i \right\} ^n_{i=1},y_i \in \left\{ 0,1 \right\}\), where \(y_i\) indicates whether the video contains an anomalous event. Following previous works2,3,4,5,6,7, each video in \({X}=\left\{ x_i \right\} ^n_{i=1}\) is divided into N non-overlapping segments of 16 consecutive frames, and an I3D model pre-trained on the Kinetics dataset22 is used as a feature extractor for each segment, yielding a segment-level representation \(F^{N\times D}\). Because video lengths vary widely, from a few seconds to several hours, and GPU memory is limited, it is impractical to batch the raw segment sequences directly. Therefore, during training we uniformly re-segment \(F^{N\times D}\) into T segments; during testing, the batch size is set to 1, so no re-segmentation into T segments is needed.
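The uniform re-segmentation of \(F^{N\times D}\) into T segments can be sketched as follows. The pooling within each new segment is not specified here; averaging contiguous, roughly equal-sized chunks (and repeating features when N < T) is our assumption, and `resample_segments` is a hypothetical name.

```python
def resample_segments(features, T):
    """Uniformly re-segment N feature vectors into T segments (hypothetical helper).

    features: list of N D-dimensional feature vectors (one per 16-frame segment).
    Each output averages a contiguous chunk of inputs; if N < T, inputs are repeated.
    """
    N = len(features)
    if N == 0 or T <= 0:
        raise ValueError("need at least one feature vector and T > 0")
    bounds = [(i * N) // T for i in range(T + 1)]  # 0 = bounds[0] <= ... <= bounds[T] = N
    out = []
    for start, end in zip(bounds[:-1], bounds[1:]):
        if end > start:
            chunk = features[start:end]
            out.append([sum(col) / len(chunk) for col in zip(*chunk)])
        else:
            # Empty chunk (only possible when N < T): reuse the feature at this boundary.
            out.append(list(features[start]))
    return out
```

Integer boundaries keep every chunk contiguous and make the output length exactly T regardless of N, which is what allows videos of different lengths to be stacked into one training batch.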

Feature similarity graph branch

We draw inspiration from previous studies on GCNs17,18,23 and leverage the FSG branch to capture global dependencies by assessing the similarity between any two segments. Anomalous events often span continuous time intervals, and both normal and anomalous segments exhibit a certain degree of internal similarity. For example, different explosion scenes are typically characterized by common features like flames and smoke. These shared features help the network better identify and classify events of the same type. The overall relationship matrix is thus defined based on feature similarity as follows:

The threshold operation is used to eliminate weak relationships in hyperbolic space by setting similarity values below the threshold \(\tau\) to 0. This reduces the interference of dissimilar events and strengthens the correlation of strongly similar ones. The threshold operation can be defined as:
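A minimal sketch of the FSG adjacency construction follows. The exact similarity function is not reproduced in this section; we assume cosine similarity between segment features, and the helper names are hypothetical. The threshold step keeps values greater than or equal to \(\tau\) (0.8 in our implementation details) and sets the rest to 0.

```python
import math


def cosine_similarity_matrix(X):
    """Pairwise cosine similarity between segment features (list of T vectors)."""
    # A zero vector gets norm 1.0 so that its similarities are 0 instead of a division error.
    norms = [math.sqrt(sum(t * t for t in x)) or 1.0 for x in X]
    return [[sum(a * b for a, b in zip(xi, xj)) / (ni * nj) for xj, nj in zip(X, norms)]
            for xi, ni in zip(X, norms)]


def threshold(A, tau=0.8):
    """Set relations weaker than tau to 0 and keep the others unchanged."""
    return [[a if a >= tau else 0.0 for a in row] for row in A]
```

Segments pointing in the same feature direction (for example, two explosion segments sharing flame and smoke features) keep a strong edge, while weakly related pairs are disconnected.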

Temporal relation graph branch

Video sequences exhibit contextual dependencies and temporal evolution between segments, so capturing temporal correlations is essential for many video-based tasks. While the FSG branch captures global dependencies through the similarity between segments, it ignores their temporal relationships. The TRG branch is therefore designed to model the temporal dependencies between video segments. Because anomalous events tend to occur in close temporal proximity, we use the order and timing of segments to construct a temporal relation graph that captures these temporal structures. For the i-th and j-th segments of a video sequence, the temporal relationship is defined as:

where \(\gamma\) and \(\sigma\) are hyperparameters that control the temporal range, influencing the rate at which the values in the distance matrix decrease as the distance increases. Specifically, the larger the value of \(\gamma\) and the smaller the value of \(\sigma\), the weaker the temporal correlation between two segments.
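For illustration, one functional form consistent with this description is \(\exp \bigl(-|i-j|^{\gamma }/\sigma \bigr)\), which equals 1 on the diagonal and decays with temporal distance; a larger \(\gamma\) or a smaller \(\sigma\) makes it decay faster. This form, similar to the distance-decay relation of Wu et al.18, is an assumption here, as is the helper name.

```python
import math


def temporal_relation_matrix(T, gamma=1.0, sigma=math.e):
    """A[i][j] = exp(-|i - j|**gamma / sigma): 1 on the diagonal, decaying with distance."""
    return [[math.exp(-abs(i - j) ** gamma / sigma) for j in range(T)] for i in range(T)]
```

With the defaults \(\gamma =1\) and \(\sigma =e\) from our implementation details, adjacent segments get a weight of \(e^{-1/e}\), and the weight shrinks geometrically with each additional step apart.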

Hyperbolic embedding

Compared with Euclidean space, the volume of hyperbolic space grows exponentially with distance from the center. Hyperbolic space can therefore represent the distances and relative positions within complex data with lower distortion, particularly for hierarchical or tree-structured data. We divide each video into T segments and build inter-segment relationships with the FSG and TRG branches, treating each segment as a graph node in the GCN. Because we expect this graph to exhibit a hierarchy-like structure, learning video representations in hyperbolic space should be advantageous over Euclidean space. Our method therefore maps the spatio-temporal information of video features into hyperbolic space for training and learns video representations there. The rest of this section details the graph convolution operations based on the Poincaré ball model and the Lorentz model.
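The exponential growth can be made concrete with a standard fact that is not specific to our method: in a two-dimensional space of constant curvature K < 0, a circle of radius r has circumference \(2\pi \sinh (\sqrt{-K}\,r)/\sqrt{-K}\), versus \(2\pi r\) in the Euclidean plane. The number of nodes at depth r of a tree with branching factor b grows like \(b^r\), which is why trees embed naturally in the former but not the latter. The helper below is a small illustrative sketch.

```python
import math


def circumference(r, K=0.0):
    """Circumference of a circle of radius r in a 2-D space of constant curvature K <= 0."""
    if K == 0.0:
        return 2 * math.pi * r
    if K < 0.0:
        s = math.sqrt(-K)
        return 2 * math.pi * math.sinh(s * r) / s
    raise ValueError("this sketch only covers K <= 0")


for r in (1, 3, 5):
    ratio = circumference(r, -1.0) / circumference(r)
    print(f"r={r}: hyperbolic/Euclidean circumference ratio = {ratio:.2f}")
```

For K = -1 the ratio is about 1.18, 3.34, and 14.84 at r = 1, 3, and 5, so the room available for spreading apart nodes far from the center quickly outgrows its Euclidean counterpart.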

Poincaré ball embedding

HGCN15 implements graph convolution operations in hyperbolic space. For linear transformation, neighborhood aggregation, and nonlinear activation, it first maps the input vectors to a tangent space, which is a Euclidean space, performs the necessary computations there, and then maps the results back to hyperbolic space. In this way, the convolution operations themselves are carried out in Euclidean tangent spaces while the node embeddings remain in hyperbolic space, so the geometric properties of hyperbolic space are preserved throughout the computation.

Linear transformation

A linear transformation multiplies the embedding by a weight matrix and then adds a bias. Because matrix multiplication is not defined directly in hyperbolic space, the embedding is first mapped by the logarithmic map to the tangent space at the origin o, where the weight matrix is applied with standard Euclidean operations, and the result is mapped back to hyperbolic space by the exponential map. The bias, defined in the tangent space at the origin, is then transported to the tangent space at the transformed point and added through the exponential map there:

where o is the origin of the Poincaré ball and \(P_{o\rightarrow x^{{\mathbb {P}}} }^{K}\) denotes parallel transport from the tangent space at o to the tangent space at \(x^{{\mathbb {P}}}\).

Neighborhood aggregation

Similarly, HGCN performs neighborhood aggregation in the tangent space: neighbor embeddings are mapped to the tangent space with the logarithmic map, aggregated there, and the result is mapped back to hyperbolic space with the exponential map:

where N(i) denotes the neighbors of node i and \(A_{ij}\) is the corresponding entry of the relation matrix.

Non-linear activation

To apply nonlinear activation functions in hyperbolic space, the activation function is first applied in the tangent space, and then the result is mapped back to hyperbolic space. The process of nonlinear activation is as follows:

To summarize the above three operations, the computational steps from layer \(l-1\) to layer l in the Poincaré ball hyperbolic space can be described as follows:

Lorentz embedding

Chen et al.24 formalized the fundamental operations of neural networks by adapting Lorentz transformations (including boosts and rotations), proposing a fully hyperbolic neural network based on the Lorentz model, without the need to switch between hyperbolic and Euclidean spaces. They provided a general formula for feature transformations in hyperbolic linear layers, incorporating activation, dropout, bias, and normalization:

where v represents the velocity in the Lorentz transformation (relative to the speed of light), and \(\phi\) can denote dropout, activation functions, or normalization. Further, their proposed neighborhood aggregation in Lorentz can be defined as:

where m is the number of points. Therefore, in the Lorentz hyperbolic convolution layer, the computational steps from layer \(l-1\) to layer l can be formalized as follows:
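Following the general form described by Chen et al.24, the sketch below implements a Lorentz linear layer that applies \(\phi\) to \(Wx\) and recomputes the time coordinate as \(\sqrt{\Vert \phi (Wx)\Vert ^2 - 1/K}\), together with the weighted Lorentzian centroid \(\mu = s / \bigl(\sqrt{-K}\,\sqrt{|\langle s,s\rangle _{\mathbb {L}}|}\bigr)\) with \(s = \sum _{j=1}^{m} \nu _j x_j\). It is a pure-Python illustration under our own assumptions: the velocity v and any dropout, activation, or normalization are folded into a single callable `phi`, the bias is omitted, and all names are hypothetical.

```python
import math


def lorentz_inner(x, y):
    """Lorentz inner product <x, y>_L = -x0*y0 + sum_i xi*yi."""
    return -x[0] * y[0] + sum(a * b for a, b in zip(x[1:], y[1:]))


def lorentz_linear(x, W, phi=lambda v: v, K=-1.0):
    """Lorentz linear layer in the form [sqrt(|phi(Wx)|^2 - 1/K), phi(Wx)] (hypothetical helper).

    x: point on the hyperboloid (length n+1); W: list of rows, each of length n+1;
    phi: callable standing in for activation/dropout/normalization (identity by default).
    The time coordinate is recomputed so that <y, y>_L = 1/K holds exactly.
    """
    space = phi([sum(w * t for w, t in zip(row, x)) for row in W])
    time = math.sqrt(sum(t * t for t in space) - 1.0 / K)
    return [time] + list(space)


def lorentz_centroid(points, weights, K=-1.0):
    """Weighted Lorentzian centroid of m points (weights assumed non-negative, not all zero)."""
    s = [sum(w * p[k] for w, p in zip(weights, points)) for k in range(len(points[0]))]
    scale = math.sqrt(-K) * math.sqrt(abs(lorentz_inner(s, s)))
    return [t / scale for t in s]
```

Because the time coordinate is recomputed rather than transformed, the output stays on the hyperboloid without any exponential or logarithmic map, which is the point of the fully hyperbolic design.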

Loss function

After dividing the video into T segments at equal intervals, each segment is embedded into two hyperbolic spaces to learn the video representations. The features learned in the hyperbolic spaces, \(X^{{\mathbb {P}}}\) (from the Poincaré ball space) and \(X^{{\mathbb {L}}}\) (from the Lorentz space), are then used as inputs to a fully connected layer (FC) to predict the anomaly score S for each segment:

As mentioned earlier, in a weakly supervised setting, each video is assigned only a video-level label. We use the k-max loss function to enlarge the inter-class distance between anomalous and normal segments. Specifically, the average of the top k segments with the highest scores is selected as the anomaly score for the video, where \(k = \left\lfloor \frac{T}{16} + 1 \right\rfloor\). Therefore, the final classification loss is formulated as follows:

where \({\overline{S}}_{topk}\) represents the average of the top k anomaly scores among the video segments, and \(y_i\) is the binary video-level label.
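The video-level scoring and classification loss can be sketched as follows. We assume that segment scores lie in (0, 1), for example after a sigmoid, and that the loss is binary cross-entropy between \({\overline{S}}_{topk}\) and \(y_i\); these assumptions and all helper names are ours.

```python
import math


def num_topk(T):
    """k = floor(T / 16 + 1)."""
    return math.floor(T / 16 + 1)


def topk_video_score(segment_scores):
    """Video-level score: mean of the k largest segment scores, with T = number of segments."""
    k = num_topk(len(segment_scores))
    top = sorted(segment_scores, reverse=True)[:k]
    return sum(top) / len(top)


def bce(p, y, eps=1e-7):
    """Binary cross-entropy between a score p in (0, 1) and a binary label y."""
    p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
    return -(y * math.log(p) + (1 - y) * math.log(1.0 - p))


def classification_loss(batch_scores, labels):
    """Mean BCE over a batch; batch_scores[i] holds the T segment scores of video i."""
    losses = [bce(topk_video_score(s), y) for s, y in zip(batch_scores, labels)]
    return sum(losses) / len(losses)
```

With our settings, T = 200 on UCF-Crime gives k = 13 and T = 150 on XD-Violence gives k = 10, so only a small fraction of segments drives each video's score, which suits anomalies that occupy a short part of a long video.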

Experiments

In this section, we evaluate our approach using the XD-Violence and UCF-Crime datasets, and compare the results with state-of-the-art methods. Additionally, we perform a series of ablation experiments to assess the effectiveness of each component within our framework.

Dataset

To validate the effectiveness of the proposed approach, we conduct extensive experiments on two anomaly-detection datasets, namely UCF-Crime and XD-Violence. They include a variety of scene distributions and have different dataset sizes.

UCF-Crime comprises untrimmed surveillance videos covering 13 classes of real-world anomalies: abuse, arrest, arson, assault, accident, burglary, explosion, fighting, robbery, gunshot, theft, shoplifting, and vandalism. The training set contains 800 normal and 810 anomalous videos, and the test set contains 150 normal and 140 anomalous videos.

XD-Violence is a recently proposed large-scale, multi-scene anomaly detection dataset compiled from real-life videos, online sources, sports streams, surveillance cameras, and central television stations. It totals 217 hours and contains 4754 untrimmed videos with audio signals and weak labels. The training set has video-level labels, and the test set has frame-level labels. Note that our method uses only RGB information and does not use the audio.

Evaluation metrics. Following previous papers2,3,4,5,6,7, we evaluate weakly supervised video anomaly detection (WS-VAD) performance on UCF-Crime with the area under the curve (AUC) of the frame-level receiver operating characteristic (ROC), and on XD-Violence with the area under the frame-level precision-recall curve (AP). Higher AUC and AP indicate better performance.
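For readers unfamiliar with the two metrics, the sketch below computes them from frame-level labels (1 = anomalous) and predicted scores. It is a small illustrative implementation with hypothetical names: the ROC AUC is computed as the probability that a random anomalous frame outscores a random normal one (ties count as one half), and AP as the mean precision at the rank of each anomalous frame, assuming no tied scores.

```python
def roc_auc(labels, scores):
    """Frame-level ROC AUC via pairwise comparison of anomalous and normal frames."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need both anomalous and normal frames")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def average_precision(labels, scores):
    """Frame-level AP: mean precision at the rank of each anomalous frame (no tied scores)."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    num_pos = sum(labels)
    if num_pos == 0:
        raise ValueError("need at least one anomalous frame")
    hits, ap = 0, 0.0
    for rank, i in enumerate(order, start=1):
        if labels[i] == 1:
            hits += 1
            ap += hits / rank
    return ap / num_pos
```

The pairwise AUC is quadratic in the number of frames and is meant only to show the definition; real frame-level evaluation over long videos would use an efficient library routine such as scikit-learn's `roc_auc_score` and `average_precision_score`.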

Implementation details

Our method is implemented in PyTorch and trained on an NVIDIA RTX 3060 Ti GPU. The hyperparameters are set to \(\tau =0.8\), \(\gamma =1\), and \(\sigma =e\). We set T to 200 for UCF-Crime and 150 for XD-Violence, and use two hyperbolic convolutional layers. The training batch size is 128, with each batch consisting of 64 randomly selected normal videos and 64 abnormal videos. We use RADAM25 with a learning rate of 0.0005 for XD-Violence and RSGD25 with a learning rate of 0.1 for UCF-Crime. These optimizers are Riemannian counterparts of ADAM26 and SGD27 that account for the geometry of hyperbolic space, which makes them more suitable for training our network.
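To show what accounting for hyperbolic geometry means for RSGD, the sketch below performs one retraction-based Riemannian SGD step on the unit Poincaré ball (curvature -1), in the style of Nickel et al.13: the Euclidean gradient is rescaled by the inverse metric factor \((1-\Vert x\Vert ^2)^2/4\), and the updated point is projected back into the ball if needed. This is an illustrative assumption; the optimizers used in our experiments25 may use the exponential map instead of this retraction, and `rsgd_step` is a hypothetical name.

```python
import math


def rsgd_step(x, euclid_grad, lr=0.1, eps=1e-5):
    """One retraction-based Riemannian SGD step on the unit Poincaré ball (hypothetical helper)."""
    sq = sum(t * t for t in x)
    # Riemannian gradient = Euclidean gradient scaled by the inverse metric factor.
    scale = (1.0 - sq) ** 2 / 4.0
    y = [a - lr * scale * g for a, g in zip(x, euclid_grad)]
    # Retraction: if the step left the ball, pull the point back just inside the boundary.
    n = math.sqrt(sum(t * t for t in y))
    if n >= 1.0:
        y = [t / n * (1.0 - eps) for t in y]
    return y
```

The rescaling shrinks steps near the boundary, where hyperbolic distances are large, so a fixed learning rate does not throw points out of the ball.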

Results on XD-Violence

Table 1 compares our method with current state-of-the-art anomaly detection methods on the XD-Violence dataset. Because few studies apply unsupervised methods to this dataset, the comparison focuses mainly on weakly supervised methods. Among all compared methods, our approach achieves the highest AP of 82.67% using only I3D RGB features. Our model also has only 0.61M parameters, the fewest among all compared methods; by contrast, MGFN5 has 28.65M parameters yet reaches only 79.19%, which highlights the parameter efficiency of our model. Notably, although our method relies solely on unimodal features, it outperforms multimodal fusion methods, including HL-NET18, CU-NET31, and UR-DMU7. These results support the effectiveness of learning video instance representations in hyperbolic space and indicate the potential of our method for efficient video anomaly detection in practical applications.

Results on UCF-Crime

Because it benefits from video-level labels, our method outperforms all compared unsupervised methods on UCF-Crime. As shown in Table 2, its AUC is 1.77% lower than that of the current best weakly supervised method, MGFN5; this is a modest gap, and our method surpasses most other existing works, such as GCN17, MIST6, RTFM4, and Cao et al.38. Notably, GCN17 and Cao et al.38 rely on conventional Euclidean graph convolutional networks, whereas, to our knowledge, we are the first to apply hyperbolic space to video anomaly detection, and we surpass their performance. This result demonstrates the feasibility of learning video representations in hyperbolic space and suggests that its non-Euclidean geometry can help distinguish anomalous from normal videos, offering new perspectives for future research.

Ablation experiments and analysis

Ablation experiments

Ablation experiments on the value of T

Because videos in UCF-Crime and XD-Violence vary in length, the video features must be standardized during training by uniformly dividing them into T segments. To determine the optimal number of segments T, we conducted ablation experiments on both datasets. As shown in Table 3, performance on both datasets improves as T increases. On XD-Violence, performance peaks at T = 150; increasing T further disrupts the temporal continuity of the videos and lowers performance. A larger T may help capture local details but can weaken the model's grasp of the global context of the video; conversely, a smaller T packs more content into each segment, which helps extract global features but may overlook important details. In addition, the performance of the GCN depends on the construction of the adjacency matrix, and too many segments not only increase memory consumption but may also hurt the network's learning efficiency. Based on these results, we set T = 200 for UCF-Crime and T = 150 for XD-Violence by default.

Effectiveness of the hyperbolic spaces and optimizers

To validate the role of hyperbolic space in anomaly detection, we trained models on UCF-Crime and XD-Violence using only the Poincaré ball embedding and only the Lorentz embedding, respectively. As shown in Table 4, using only the Poincaré ball embedding lowers performance by 1.54% (UCF-Crime) and 0.81% (XD-Violence) relative to the best results, and using only the Lorentz embedding lowers it by 3.22% (UCF-Crime) and 3.18% (XD-Violence). Because the two hyperbolic spaces differ geometrically, embedding videos into both is akin to viewing the problem from different angles; these complementary views can reduce "blind spots", which improves performance when both spaces are used together. We also ablated the hyperbolic optimizers RSGD and RADAM (Table 5): on the two datasets, the traditional SGD optimizer yields results 3.29% and 0.42% lower, respectively, than the hyperbolic optimizers. These results indicate that our method can effectively learn video representations in hyperbolic space. We attribute this to the temporal relationships in videos aligning well with the hierarchical structure of hyperbolic space; combining multiple hyperbolic spaces in particular helps the network learn the complex feature relationships needed to distinguish abnormal events.

Effectiveness of FSG and TRG branches

To evaluate the contribution of each branch, we trained the model with each branch individually while keeping all other components unchanged. As shown in Table 6, the network performs best on both datasets when the two branches work together. Anomalous events in videos usually unfold over a continuous period, and segments of the same kind, whether anomalous or normal, tend to resemble one another. The TRG branch models the temporal continuity of events and captures how they develop over time, while the FSG branch captures the global similarity between segments and uncovers what anomalous segments have in common. Their combination improves the accuracy of the network in detecting anomalous events.

Qualitative results

To further evaluate our method, Fig. 2 presents qualitative results on both datasets: red regions mark the ground-truth anomalous intervals, and the blue curve shows the anomaly scores predicted by our model. Key frames illustrate what happens in each video, with green rectangles marking normal frames and red rectangles marking anomalous frames. Fig. 2d and h show two failure cases. In Fig. 2d, a car crash, our method detects the anomaly when the car flips over, but it also labels the subsequent period of heavy dust as abnormal, whereas the ground truth labels this post-rollover scene as normal; arguably, however, the dust-filled aftermath of the rollover is itself abnormal. In Fig. 2h, a home robbery, the suspect first watches the door, waiting for an opportunity to pick the lock, and then breaks in. Our model incorrectly labels both periods as anomalous, because these behaviors share certain similarities that make them hard to distinguish. Overall, our method produces accurate detection regions for anomalous videos and near-zero anomaly scores for normal videos, indicating that its predictions are stable.

Complexity analysis

In Fig. 3, we compare our method with HL-Net21, Sultani et al.4, GCN24, UR-DMU28, RTFM6, MGFN7, and others in terms of both parameter count and detection accuracy. The horizontal axis represents the number of parameters, and the vertical axis represents AP (XD-Violence) or AUC (UCF-Crime). On the XD-Violence dataset, our method lies closest to the top-left corner of Fig. 3, indicating that it achieves the highest accuracy with the fewest parameters (0.61M). On the UCF-Crime dataset, our method is slightly less accurate than UR-DMU28 and MGFN7, but it remains more parameter-efficient. Furthermore, our network has a simple structure and uses no loss function beyond the basic classification loss, yet it performs on par with many more complex Euclidean-space networks. These results show that a compact network design can achieve competitive performance, and they highlight the potential of hyperbolic-space methods for video anomaly detection.
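The network is trained with only a basic classification loss, but this section does not spell that loss out. The sketch below assumes the top-k multiple-instance formulation common in weakly supervised video anomaly detection, similar to the one used in HL-Net21. Snippet scores are pooled by averaging the k largest, with k = ⌊T/q⌋ + 1 for T snippets, and the pooled score is compared with the video-level label using binary cross-entropy. The function names and q = 16 are illustrative assumptions, not the authors' code.

```python
import math
from typing import List


def topk_video_score(snippet_scores: List[float], k: int) -> float:
    """Average the k highest snippet scores into one video-level score."""
    k = max(1, min(k, len(snippet_scores)))
    top = sorted(snippet_scores, reverse=True)[:k]
    return sum(top) / k


def bce(prob: float, label: int, eps: float = 1e-7) -> float:
    """Binary cross-entropy for one predicted probability and a 0/1 label."""
    p = min(max(prob, eps), 1.0 - eps)  # clamp to keep log() finite
    return -(label * math.log(p) + (1 - label) * math.log(1.0 - p))


def video_classification_loss(batch_scores: List[List[float]], labels: List[int], q: int = 16) -> float:
    """Mean BCE over a batch of videos supervised only by video-level labels
    (1 = anomalous, 0 = normal). k = T // q + 1 is a hypothetical choice."""
    losses = []
    for scores, y in zip(batch_scores, labels):
        k = len(scores) // q + 1
        losses.append(bce(topk_video_score(scores, k), y))
    return sum(losses) / len(losses)
```

Only video-level labels enter the loss, so no frame annotations are needed. In a PyTorch implementation, the same pooling and loss would typically be written with `torch.topk` and `torch.nn.functional.binary_cross_entropy`.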

Conclusion

This paper proposes a novel weakly supervised video anomaly detection method based on hyperbolic space. The proposed FSG and TRG branches construct feature relation matrices from the feature similarity and temporal correlation of video segments, and embed them into the Poincaré ball model and the Lorentz model to learn video feature representations. Extensive experiments on two challenging datasets demonstrate the effectiveness of the proposed approach and suggest that hyperbolic geometry offers greater flexibility than Euclidean geometry for modeling complex data structures. Euclidean-space methods for video anomaly detection are well established, but the application of hyperbolic space in this field is still at an early stage. This study is a preliminary exploration of hyperbolic space for anomaly detection, and we are optimistic about its further development in video anomaly detection.

Data availability

The UCF-Crime dataset is available at https://www.crcv.ucf.edu/projects/real-world. The XD-Violence dataset is available at https://roc-ng.github.io/XD-Violence.

References

Zaheer, M. Z. et al. Generative cooperative learning for unsupervised video anomaly detection. In Proc. of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 14744–14754 (2022).

Sultani, W., Chen, C. & Shah, M. Real-world anomaly detection in surveillance videos. In Proc. of the IEEE Conference on Computer Vision and Pattern Recognition, 6479–6488 (2018).

Wan, B., Fang, Y., Xia, X. & Mei, J. Weakly supervised video anomaly detection via center-guided discriminative learning. In 2020 IEEE International Conference on Multimedia and Expo (ICME), 1–6 (IEEE, 2020).

Tian, Y. et al. Weakly-supervised video anomaly detection with robust temporal feature magnitude learning. In Proc. of the IEEE/CVF International Conference on Computer Vision, 4975–4986 (2021).

Chen, Y. et al. MGFN: Magnitude-contrastive glance-and-focus network for weakly-supervised video anomaly detection. In Proc. of the AAAI Conference on Artificial Intelligence 37, 387–395 (2023).

Feng, J.-C., Hong, F.-T. & Zheng, W.-S. MIST: Multiple instance self-training framework for video anomaly detection. In Proc. of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 14009–14018 (2021).

Zhou, H., Yu, J. & Yang, W. Dual memory units with uncertainty regulation for weakly supervised video anomaly detection. In Proc. of the AAAI Conference on Artificial Intelligence 37, 3769–3777 (2023).

Tifrea, A., Bécigneul, G. & Ganea, O.-E. Poincaré GloVe: Hyperbolic word embeddings. In International Conference on Learning Representations.

Corso, G. et al. Neural distance embeddings for biological sequences. Adv. Neural Inf. Process. Syst. 34, 18539–18551 (2021).

Gerald, T., Zaatiti, H., Hajri, H., Baskiotis, N. & Schwander, O. A hyperbolic approach for learning communities on graphs. Data Min. Knowl. Disc. 37, 1090–1124 (2023).

Wang, L., Hu, F., Wu, S. & Wang, L. Fully hyperbolic graph convolution network for recommendation. In Proc. of the 30th ACM International Conference on Information & Knowledge Management, 3483–3487 (2021).

Chen, Y. et al. Modeling scale-free graphs with hyperbolic geometry for knowledge-aware recommendation. In Proc. of the Fifteenth ACM International Conference on Web Search and Data Mining, 94–102 (2022).

Nickel, M. & Kiela, D. Poincaré embeddings for learning hierarchical representations. Adv. Neural Inf. Process. Syst. 30 (2017).

Ganea, O., Bécigneul, G. & Hofmann, T. Hyperbolic neural networks. Adv. Neural Inf. Process. Syst. 31 (2018).

Chami, I., Ying, Z., Ré, C. & Leskovec, J. Hyperbolic graph convolutional neural networks. Adv. Neural Inf. Process. Syst. 32 (2019).

Yu, K., Visweswaran, S. & Batmanghelich, K. Semi-supervised hierarchical drug embedding in hyperbolic space. J. Chem. Inf. Model. 60, 5647–5657 (2020).

Li, N., Zhong, J.-X., Shu, X. & Guo, H. Weakly-supervised anomaly detection in video surveillance via graph convolutional label noise cleaning. Neurocomputing 481, 154–167 (2022).

Wu, P. et al. Not only look, but also listen: Learning multimodal violence detection under weak supervision. In Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part XXX 16, 322–339 (Springer, 2020).

Sala, F., De Sa, C., Gu, A. & Ré, C. Representation tradeoffs for hyperbolic embeddings. In International Conference on Machine Learning, 4460–4469 (PMLR, 2018).

Gulcehre, C. et al. Hyperbolic attention networks. In International Conference on Learning Representations.

Nickel, M. & Kiela, D. Learning continuous hierarchies in the Lorentz model of hyperbolic geometry. In International Conference on Machine Learning, 3779–3788 (PMLR, 2018).

Carreira, J. & Zisserman, A. Quo vadis, action recognition? A new model and the Kinetics dataset. In Proc. of the IEEE Conference on Computer Vision and Pattern Recognition, 6299–6308 (2017).

Zeng, R. et al. Graph convolutional networks for temporal action localization. In Proc. of the IEEE/CVF International Conference on Computer Vision, 7094–7103 (2019).

Chen, W. et al. Fully hyperbolic neural networks. In Proc. of the 60th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), 5672–5686 (2022).

Bécigneul, G. & Ganea, O.-E. Riemannian adaptive optimization methods. In International Conference on Learning Representations.

Kingma, D. P. & Ba, J. Adam: A method for stochastic optimization. arXiv preprint http://arxiv.org/abs/1412.6980 (2014).

Thakare, K. V., Raghuwanshi, Y., Dogra, D. P., Choi, H. & Kim, I.-J. DyAnNet: A scene dynamicity guided self-trained video anomaly detection network. In Proc. of the IEEE/CVF Winter Conference on Applications of Computer Vision, 5541–5550 (2023).

Hasan, M., Choi, J., Neumann, J., Roy-Chowdhury, A. K. & Davis, L. S. Learning temporal regularity in video sequences. In Proc. of the IEEE Conference on Computer Vision and Pattern Recognition, 733–742 (2016).

Schölkopf, B., Williamson, R. C., Smola, A., Shawe-Taylor, J. & Platt, J. Support vector method for novelty detection. Adv. Neural Inf. Process. Syst. 12 (1999).

Li, S., Liu, F. & Jiao, L. Self-training multi-sequence learning with transformer for weakly supervised video anomaly detection. In Proc. of the AAAI Conference on Artificial Intelligence 36, 1395–1403 (2022).

Zhang, C. et al. Exploiting completeness and uncertainty of pseudo labels for weakly supervised video anomaly detection. In Proc. of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 16271–16280 (2023).

Wu, J.-C., Hsieh, H.-Y., Chen, D.-J., Fuh, C.-S. & Liu, T.-L. Self-supervised sparse representation for video anomaly detection. In European Conference on Computer Vision, 729–745 (Springer, 2022).

Pang, W.-F., He, Q.-H., Hu, Y.-j. & Li, Y.-X. Violence detection in videos based on fusing visual and audio information. In ICASSP 2021-2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 2260–2264 (IEEE, 2021).

Wang, J. & Cherian, A. GODS: Generalized one-class discriminative subspaces for anomaly detection. In Proc. of the IEEE/CVF International Conference on Computer Vision, 8201–8211 (2019).

Al-Lahham, A., Tastan, N., Zaheer, M. Z. & Nandakumar, K. A coarse-to-fine pseudo-labeling (C2FPL) framework for unsupervised video anomaly detection. In Proc. of the IEEE/CVF Winter Conference on Applications of Computer Vision, 6793–6802 (2024).

Al-Lahham, A., Zaheer, M. Z., Tastan, N. & Nandakumar, K. Collaborative learning of anomalies with privacy (CLAP) for unsupervised video anomaly detection: A new baseline. In Proc. of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 12416–12425 (2024).

Zaheer, M. Z., Mahmood, A., Astrid, M. & Lee, S.-I. CLAWS: Clustering assisted weakly supervised learning with normalcy suppression for anomalous event detection. In Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part XXII 16, 358–376 (Springer, 2020).

Cao, C., Zhang, X., Zhang, S., Wang, P. & Zhang, Y. Adaptive graph convolutional networks for weakly supervised anomaly detection in videos. IEEE Signal Process. Lett. 29, 2497–2501 (2022).

Acknowledgements

This work was supported in part by the National Natural Science Foundation of China (No. 61701049) and the Chengdu University of Technology 2023 Young and Middle-aged Backbone Teachers Development Funding Program (No. 10912-JXGG2023-06470).

Author information

Contributions

Meilin Qi: Conceptualization, Methodology, Resources, Software, Writing - original draft, Writing - review & editing. Yuanyuan Wu: Supervision, Validation, Writing - review & editing.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Qi, M., Wu, Y. Weakly supervised video anomaly detection based on hyperbolic space. Sci Rep 14, 26348 (2024). https://doi.org/10.1038/s41598-024-77505-4

DOI: https://doi.org/10.1038/s41598-024-77505-4