Abstract

Contrast-free ultrasound quantitative microvasculature imaging shows promise in several applications, including the assessment of benign and malignant lesions. However, motion represents one of the major challenges in imaging tumor microvessels in organs that are prone to physiological motions. This study aims at addressing potential microvessel image degradation in in vivo human thyroid due to its proximity to carotid artery. The pulsation of the carotid artery induces inter-frame motion that significantly degrades microvasculature images, resulting in diagnostic errors. The main objective of this study is to reduce inter-frame motion artifacts in high-frame-rate ultrasound imaging to achieve a more accurate visualization of tumor microvessel features. We propose a low-complex deep learning network comprising depth-wise separable convolutional layers and hybrid adaptive and squeeze-and-excite attention mechanisms to correct inter-frame motion in high-frame-rate images. Rigorous validation using phantom and in-vivo data with simulated inter-frame motion indicates average improvements of 35% in Pearson correlation coefficients (PCCs) between motion corrected and reference data with respect to that of motion corrupted data. Further, reconstruction of microvasculature images using motion-corrected frames demonstrates PCC improvement from 31 to 35%. Another thorough validation using in-vivo thyroid data with physiological inter-frame motion demonstrates average improvement of 20% in PCC and 40% in mean inter-frame correlation. Finally, comparison with the conventional image registration method indicates the suitability of proposed network for real-time inter-frame motion correction with 5000 times reduction in motion corrected frame prediction latency.

Similar content being viewed by others

Introduction

Ultrasound (US) is one of the majorly used imaging modality for different diagnostic applications due to its non-invasiveness, high resolution, low cost, and portability1,2. Recent advances in Doppler imaging using high frame rate contrast-free US microvasculature imaging help in visualizing and analyzing tumor vascular network for better characterization of lesions toward more accurate diagnosis3,4,5. Further, studies reported that meticulous analysis of tumor microvessel morphology can help early diagnosis of malignancy and reduce related mortality6,7,8. Additionally, in case of thyroid gland, the malignant nodules are quite low in number as compared to benign cases, therefore, to reduce unnecessary benign biopsies, it is crucial to accurately distinguish them using non-invasive contrast free quantitative US microvessel imaging9. However, there is unavoidable physiological and non-physiological motion between the successive frames, i.e., inter-frame motion, due to its closeness to the pulsating carotid artery which significantly contaminates the acquired frames and causes errors in the subsequent analysis of microvessel morphological features resulting in wrong diagnosis10.

The inter-frame motion can vary with respect to different types, such as, probe motion, respiratory motion, sonographer’s hand motion, rigid and non-rigid motion, affine, in-plane, and out-of-plane motion, etc10. The rigid motion is a combination of translation and rotation such as breathing motion, and affine motion includes translation, rotation, clipping, and resizing11,12. The nonrigid motion includes clipping, reshaping, uniform/non-uniform scaling12. These types of motion introduce different types of translations between the consecutive frames which reduces the inter-frame correlations and structural similarities, and causes fluctuations in the time intensity curves13. This leads to degradation of microvasculature image quality, inaccurate quantification and wrong diagnosis10,14. More prominently, in quantitative US microvessel imaging of thyroid nodules, considerable physiological inter-frame motion degrades the vessel visualization and adversely affects the results of quantification10. Therefore, correction of motion between the acquired successive frames is essential towards adequate analysis of microvessel morphological features for these applications.

In view of aforementioned aspects, literary research works employ inter-frame motion correction techniques prior to the subsequent analysis of the image data, majority of which are based on contrast enhanced US (CEUS) imaging. These include mainly the conventional method of motion-containing frames rejection and recent methods of image registration12,13. Casey et al. proposed a two-tier respiratory inter-frame motion correction for B-mode and contrast images of focal liver lesions using image registration with respect to an automatically selected reference frame13. Initially, normalized correlation coefficient (NCC) was estimated between the temporal mean frame and all other frames in the sequence13. Subsequently, the frame with the highest matching score based on the NCC was selected as the reference frame. The first tier performed the registration of the sub-reference frames chosen from each motion cycle with respect to the main reference frame. The second tier performed the registration of all other frames with respect to the closest sub-reference frame13. This work achieved an overall improvement of \(17\%\) in the NCC after motion correction13. However, there are no details about the overall computational analysis of the presented work. Another method in15 proposed reference-free registration of frames containing breathing, shifting position-based, and random motions in dynamic contrast enhanced US imaging (DCE-US), using the flow information. A significant improvement was reported with respect to reference-based registration methods applied on a simulated data with specified motion. However, this method relied on the DCE-US model, which limits its usage for other types of US images15.

A phase correlation algorithm was proposed in16 towards displacement estimation in each frame and between frames to correct the motion for ultrafast US localization microscopy (uULM). However, the algorithm was limited to correction of only rigid motion in small amounts16. Another work in17 implemented non-rigid breathing and heart beating motion estimation and correction of liver images acquired using uULM with contrast micro-bubbles. A 2D NCC-based speckle tracking was employed to measure liver displacements for consequent motion correction using dynamic reference frame selection17. The authors reported several hours of processing time for non-rigid motion correction using this method. This method was also applied to correct rigid inter-frame motion due to the carotid artery for non-contrast imaging of small vessels in human thyroid10,18. The authors demonstrated a significant increase in the contrast-to-noise ratio of motion-corrected Doppler images, and better visualization of the small vessel blood flow without employing any contrast agents. Speckle tracking was also shown to be effective towards estimating motion due to heartbeat, respiration, and residue, for improved quality of super-resolution imaging of rat kidneys in19. In20, non-rigid registration of CEUS frames of carotid artery was exclusively proposed for vascular imaging with a significant improvement in image intensities.

To overcome the issues with motion estimation and correction, Harput et al. proposed the use of two-stage motion correction for rigid, non-rigid and affine registration of frames with handheld probe motion in super-resolution and CEUS, which was previously used for magnetic resonance (MR) images11,12. It is a combination of affine registration, which approximates the global motion, and non-rigid registration, which approximates the local tissue deformity12. A cost function corresponding to the transformation precision and image resemblance is minimized for non-rigid registration12. The transformation matrix is saved after the cost function optimization and algorithm convergence for motion correction12. This method was also adapted in21 for high frame-rate CEUS imaging. It performs registration of all acquired frames with respect to a reference frame. This implies that the performance of motion correction is highly dependent on the quality of reference frame. Therefore, the appropriate selection of the reference frame is critical towards efficient frame registration and motion correction. Most of the research works utilize the first frame as the reference frame in the acquired sequence. However, the first frame may not always be the optimal choice for reference since it may also contain motion. Further, this method achieves high correlation between the corrected image frames and the reference frame at the expense of substantial computational time and memory used in optimization and multiple iterations12. Zhang et al. presented a quantitative selection approach for obtaining an optimal reference frame from a sequence of liver CEUS images22, to correct the respiratory motion. The selection was based on the highest near-peak contrast intensity image. The motion-corrected frames were obtained as the frames with the closest phase to the reference frame in every respiratory cycle, based on the correlation coefficient estimations22. This work reported an overall high performance after the motion correction.

The aforementioned motion correction studies are based mostly on CEUS imaging, and use speckle tracking and two-stage estimation, which incur high computational time and are sensitive to the selection of the reference frame. Further, the two-stage approach estimates the displacement matrix corresponding to the motion, and applies that transformation to obtain the motion corrected frame. This post-processing further contributes to the computational complexity. These challenges can be addressed by using easily accessible and cost-effective contrast-free US micovessel imaging, and appropriate reference frame selection and deep learning techniques for fast inter-frame motion correction. More specifically, deep learning can significantly reduce the computational time for optimization while correcting motion in the successive frames without any post-processing14,23. A few research studies have explored deep learning-based automated motion correction for reducing the time complexity14,24, however, they also utilize CEUS. For example, researchers in14 proposed the use of an encoder-decoder-based deep learning network to estimate the motion matrix and apply it to transform the input B-mode US images to obtain the motion corrected images. The network was trained using the transformation matrices for affine and non-rigid motion obtained from conventional two-stage motion correction method. An overall correlation of 0.8 was obtained with an average latency of 0.20s in prediction of a single patch of motion corrected image. These results indicated an overall improvement of \(20\%\) in the correlation and the computation time was reduced by 18 times with respect to the two-stage method14. However, the deep learning network could be used to directly predict the motion corrected frames to further save the computation time of post-processing. Further, there were no details of the network architecture and the validation on 200 frames of 36 patients lacked sufficiency. More recently, Zheng et al. proposed a deep learning-based VGG16 generative adversarial network and a spatial transformer network for cardiac motion correction in intracoronary imaging using intravascular US24. They reported an average inter-frame dissimilarity of 0.0075 with a latency of 0.412 s in correcting an image. However, the deep learning network incurred a significant complexity, and the validation was performed on a simulated dataset. There is a lack of clinical validation which could have further demonstrated the effectiveness of this method. Apart from the studies for ultrasound, there is a recent research which proposed deep learning-based automatic inter-frame motion correction in low frame rate PET imaging, however, the evaluation depicted the correction of only rigid/translational motion25.

The aforementioned limitations of the literary works can be addressed by using a simplistic and low complex deep learning network to correct inter-frame motion in contrast-free US quantitative microvessel imaging. The network needs to be validated on clinical data with a significant number of frames and subjects for demonstrating its efficiency. Further, there is a lack of ground truth data in real-time US imaging, which leads to difficulties in assessing the validation performance. In view of these aspects, we propose a novel inter-frame motion correction network, i.e., IFMoCoNet, which is a low complex deep learning network for fast motion correction in non-contrast-enhanced US imaging using convolutional and depth-wise separable convolutional layers with hybrid adaptive and squeeze-and-excite attention mechanisms. The hybrid adaptive attention mechanisms proposed by Chen et al. in26 for segmentation in ultrasound images are adapted in this work for the first time to correct inter-frame motion. To the best of our knowledge, this is the first work that uses a deep learning network for fast inter-frame motion correction in contrast-free US quantitative microvessel imaging. The proposed network is evaluated initially using a phantom data and an in-vivo dataset consisting of motion-free breast lesion US images artificially contaminated with random inter-frame motion artifacts for rigid and non-rigid transformations. After motion correction of the successive frames, we perform post-processing to extract the microvasculature Doppler images using the motion-corrected data, to demonstrate the effectiveness of the proposed method. Further rigorous validation is performed using another in-vivo dataset consisting of thyroid gland images with real physiological motion in the consecutive frames due to the pulsation of the carotid artery in close proximity to the imaging area. For the first time in this context, this work proposes a ground truth selection scheme in the thyroid dataset unlike the existing deep learning-based studies for CEUS. The scheme uses Spatio-temporal correlation matrix27 for the selection of reference frame based on the maximum correlation, which is further used as ground truth to train the proposed network for this dataset. The approach is applicable in real-time settings and can efficiently correct the motion between successive frames in US imaging, with significantly low computational time. Major contributions of this paper are the following:

-

Proposed a low complex deep learning network including depth-wise separable convolutional layers with hybrid adaptive and squeeze-and-excite attention mechanisms for inter-frame motion correction in contrast-free US quantitative microvessel imaging.

-

Network training using reference frame as the ground truth obtained from spatio-temporal correlation matrix, as well as the ground truth obtained from a conventional method.

-

Prediction of the motion corrected frame with excessively low latency, as compared to the existing inter-frame motion correction studies.

-

Validation of the proposed network on a phantom data and two clinical datasets consisting of large number of US frames with simulated as well as realistic inter-frame motion artifacts.

-

Extraction of microvasculature Doppler using the motion-corrected frames.

Methods

Experimental datasets

Phantom data

This work utilizes the first experimental dataset for the purpose of solely validating the proposed network by thorough examination of the motion corrected frames with respect to the actual ground truth, i.e., motion artifact-free frames. This dataset consists of in-phase quadrature-phase (IQ) data acquired from custom-made microflow Plastisol phantom in plane wave scanning mode using the Verasonics Vantage 128 equipped with an L11-4v transducer (Verasonics, Inc., Kirkland, WA, USA) with a center frequency of 6.25 MHz28. A frame rate of 550 Hz was used with an excitation voltage of 30 V. A total of 1000 IQ frames, each with dimension of 76 × 140 pixels were acquired in each acquisition. The probe was fixed by a retort stand, and not held by a sonographer. Therefore, there was no motion or any artifact in the acquisitions28. More details of this dataset can be found in28. In this work, we used a single acquisition of the phantom data which originally consisted of 1000 motion-free frames. This set of 1000 frames was contaminated artificially, by introducing a specific type of inter-frame motion, which was a particular level of rigid translation and/or rotation varied with respect to the frame index. That is, each first frame in the set of 1000 frames was translated or rotated with a particular shift and/or rotation level respectively, each second frame in the set of 1000 frames was translated or rotated with two times of the corresponding particular shift and/or rotation level respectively, and so on. This process was repeated nine times with different types pf motions including varying combinations of translations and/or rotations for each set of 1000 frames, resulting in total 9000 frames with inter-frame motion. Therefore, a complete set of 10,000 frames was achieved, including initial 1000 motion-free frames used as the ground truth, and the remaining 9000 frames used as the inter-frame motion-containing inputs to train, test, and validate the proposed IFMoCoNet. The original 1000 frames were used as ground truth labels by replicating each 1000 frame set nine times for feeding these resulting 9000 labels with the corresponding 9000 motion contaminated inputs to the IFMoCoNet. The corresponding nine different types and/or levels of motion are described as:

where \(m_h(f_{i})\) denotes the horizontal motion/shift of 0.005 pixel applied to the ith frame in the right direction. Similarly, \(m_v(f_{i})\) denotes the corresponding vertical shift, \(m_{hv}(f_i)\) denotes the combination of both horizontal and vertical shifts, \(m_r(f_{i})\) denotes rotation in clockwise direction by \(0.005^{\circ }\), \(m_{hr}(f_i)\) denotes combination of horizontal and rotational motion, \(m_{vr}(f_i)\) denotes combination of vertical and rotational motion, \(m_{hvr}(f_i)\) denotes combination of horizontal, vertical and rotational motion,\(m_{hvr_{10}}(f_i)\) denotes combination of horizontal, vertical and ten times of original rotational shift, and \(m_{h_{10}v_{10}r_{10}}(f_i)\) denotes combination of ten times of original horizontal and vertical shifts along with ten times of original rotational shift. For all motion types or each set of 1000 frames, the motion level increases with the frame index, as observed from the multiplication factor i, since it is inter-frame motion.

In-vivo breast dataset: DT1

This work utilizes another experimental dataset which is an in-vivo dataset for thorough validation of the proposed network. It consists of US IQ data acquired using Alpinion E-Cube 12R US scanner (Alpinion Medical Systems Co., Seoul, South Korea), with a L12–3H linear array transducer. Multiple plane wave IQ frames ranging from 1500–2500 were collected from the breast lesions of 750 participants, for examination of suspicious breast masses. The study received institutional review board approval (IRB: 12-003329 and IRB: 19-003028) and was Health Insurance Portability and Accountability Act (HIPAA) compliant. All procedures performed in this study were in accordance with the ethical standards of the institutional and/or national research committee and with the 1964 Helsinki declaration and its later amendments or comparable ethical standards. Signed IRB approved informed consent with permission for publication was obtained from all individual participants included in the study. They were instructed to restrict movements, and the probe motion was also kept minimal. They were also asked to stop breathing for 3 s duration to reduce any motion due to breathing. Therefore, this dataset can be assumed to be free of any significant motion artifacts and the frames can be directly used as the ground truth. However, for more effective analysis, this work utilizes the data of 20 selected participants with the best correlated frames based on the spatio-temporal correlation matrix. The corresponding selected data is thereby used as the ground truth and is artificially contaminated with random translational and rotational motion between the frames to obtain the input inter-frame motion containing data for network training. In this dataset, the dimension of acquired IQ data varied across patients depending upon the lesion size and depth. However, mostly, the dimensions were 616 × 192, 717 × 192, and 941 × 192.

In-vivo thyroid dataset: DT2

The third experimental dataset is utilized for validating the proposed network with respect to realistic motion artifacts. It consists of US in-phase quadrature-phase (IQ) data acquired using the same machine as described above. Multiple plane wave IQ frames ranging from 1500 to 2500 were collected from thyroid gland of 356 subjects, for examination of suspicious thyroid nodules. This study was performed under the guidelines and regulations of an approved institutional review board (IRB) protocol (IRB: 08-008778) and was in compliance with the Health Insurance Portability and Accountability Act (HIPAA). A written IRB-approved informed consent with permission for publication was obtained from each patient participant prior to the imaging study. The subjects were instructed to stop breathing for 3s duration to reduce any motion due to breathing. However, there is unavoidable physiological motion due to the pulsating carotid artery in close proximity to the thyroid imaging area, as mentioned previously. Therefore, the ground truth data, i.e., the artifact-free data needs to be extracted for training the proposed network. The corresponding proposed schemes for the ground truth preparation are described in the later sections. The dimensions of IQ frames were the same as used for the breast dataset. Further details about the dataset can be found in9,10.

In this work, the IQ data is resized to the highest dimension, i.e., 941 × 192 for all subjects and in-vivo datasets to maintain uniformity. Further, since the data is in complex IQ format, we extract the ‘I’ and ‘Q’ parts, i.e., the ‘real’ and ‘imaginary’ parts respectively and concatenate them to form an IQ matrix, for each frame. These matrices are used as input for the proposed network. This helps to preserve the magnitude and phase of the data.

Proposed deep learning network

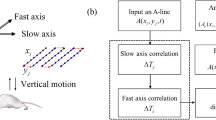

The proposed deep learning network, i.e., IFMoCoNet is presented in Fig. 1. It is built using a sequence of two-dimensional (conv2D) and depth-wise separable convolutional (DSConv2D) layers with hybrid adaptive attention block (HAAB) and squeeze-and-excite attention mechanism arranged in an exhaustively selected sequence. This work proposes the use of hybrid adaptive and sqeeze-and-excite attention mechanisms for the first time to correct inter-frame motion in ultrasound images. The network receives the input IQ image frames containing motion, along with the ground truth IQ frames derived from either the original motion-free data or the selected reference frames using the spatio-temporal correlation matrix. For representation, we have shown the absolute values of IQ data, i.e., the B-mode images in Fig. 1. After high-level feature extraction by the layers, the motion corrected frames are obtained at the output layer, as shown in the figure. The subsequent sub-sections describe the components of proposed deep learning network and the schemes for selection of ground truth frame/reference frame.

It can be observed from Fig. 1 that the network consists of two blocks of conv2D and DSConv2D layers, followed by a hybrid adaptive attention block, two DSConv2D layers, a squeeze-and-excite attention mechanism, and a final conv2D layer for prediction. Each conv2D and DSConv2D layer is followed by batch normalization (B.N.) except the final layer. These layers are sequentially arranged after rigorous experimental assessment of the network for inter-frame motion correction. The motion containing IQ data obtained from each acquisition are fed along with the selected reference frames as inputs to the first convolutional layer which consists of 64 kernels/filters each with a size of 3 × 3. This layer performs convolution operation between each IQ frame matrix B and kernel matrix K, to obtain the output C, as shown below29.

where p and q denote the indexes of rows and columns of the output C, and m and n denote the indexes of rows and columns of the input IQ matrix B. The kernel is shifted throughout the input matrix to obtain the output map, and its weights are learned during training process. This convolution operation for a multi-channel data is illustrated in30. Each input channel is convolved with a particular kernel, and the final output is obtained as the sum of the convolved outcomes of all channels30. In this work, He uniform initialization is used for the kernel weights, and Gaussian error linear unit (GELU) activation is applied to the convolved output for randomly dropping some neurons and capturing more complex patterns31, with zero bias to obtain the output feature map of this layer. In general, the output feature map \(\mathbf {C_o}\) of layer l is obtained as29,32:

where \(\sigma\) denotes the activation function and \(\textbf{bs}\) denotes the bias vector.

The 64 output feature maps obtained from the conv2D layer are given as inputs to the second layer, i.e., depthwise separable conv2D (DSConv2D). This layer factorizes the convolution operation into independent channel-wise (depth-wise) and spatial (pointwise) operations33. In this work, the 64 output feature maps obtained from the conv2D layer are fed as different channels to the DSConv2D layer. Firstly, each input channel is convolved with the kernel for depth-wise operation, and the corresponding outputs of all channels are stacked together to obtain the overall output34. Secondly, standard convolution is performed with a 1 × 1 kernel for point-wise operation. This layer significantly reduces the number of mathematical operations and parameters required in the conv2D layer, making it more efficient30,35. This layer also uses GELU activation and no bias vector.

In a similar manner, the subsequent conv2D and DSConv2D layers perform feature extraction sequentially and the output maps are then fed to a hybrid adaptive attention block. HAAB was recently proposed by Chen et al. in26 for segmentation of ultrasound images. This work adapts HAAB for inter-frame motion correction in ultrasound images for the first time. It consists of conv2D layers with varying filter sizes, channel and spatial attention mechanisms for extracting robust features with different receptive fields and scales from the channel-level and spatial-level aspects26. The description of HAAB is presented as follows26.

-

The input feature map \(F_i\) is convolved with 3 × 3, 5 × 5, and 3-rate dilated 3 × 3 conv2D layers in parallel to achieve \(H_3\), \(H_5\), and \(H_{D5}\) feature maps respectively, as shown in Fig. 1. Different receptive fields allow robust feature extractions and adaptations to a variety of images, i.e., varying levels of inter-frame motion related displacements in this work.

-

To capture the different receptive fields as mentioned above, the feature maps \(H_5\), and \(H_{D5}\) are combined and downsized to 1 × 1 to obtain \(F_1\) as:

$$\begin{aligned} F_1= G A P\left( H_5 \oplus H_{D5}\right) \end{aligned}$$(3)where GAP denotes global average pooling and \(\oplus\) denotes the element-wise addition operation.

-

The corresponding feature map \(F_1\) is given as input to a dense layer which performs a dot product between its weights and the input. The dense layer output undergoes batch normalization and GELU activation to obtain \(F_2\), as:

$$\begin{aligned} F_2=\sigma _g\left( B_n\left( W_{D} \cdot F_1\right) \right) \end{aligned}$$(4)where \(\sigma _g(.)\) and \(B_n(.)\) denote GELU and batch normalization operations respectively, and \(W_{D}\) denotes the weight matrix of dense layer.

-

The preceding feature map \(F_2\) is again fed to a dense layer and sigmoid activation to generate the channel attention weights a and \(1-a\) for \(H_5\) and \(H_{D5}\) respectively, where a is obtained as:

$$\begin{aligned} a=\sigma _s\left( W_{D} \cdot F_2\right) \end{aligned}$$(5)where \(\sigma _s(.)\) denotes sigmoid operation.

-

Finally, these two channel attention weights a and \(1-a\) are used to scale the feature maps \(H_5\) and \(H_{D5}\) respectively, to select the features from different receptive fields automatically, as:

$$\begin{aligned} \begin{aligned} F_{HD3}&=a \otimes H_{D3} \\ F_{H5}&=(1-a) \otimes H_5 \end{aligned} \end{aligned}$$(6)This channel-level attention mechanism helps to extract more robust features which can adapt to the variations in the displacements due to inter-frame motion.

-

The channel attention features are finally combined to form \(H_{1C}\) which is used with the 1 × 1 convolution output of \(H_3\), i.e., \(H_1\), as input to the next stage of HAAB, i.e., spatial-level attention mechanism, as shown in the figure.

-

The preceding inputs undergo GELU operation and 1 × 1 convolution to obtain \(H_{1S}\) as:

$$\begin{aligned} H_{1S}=conv2D_{1 \times 1}(\sigma _g(H_1 \oplus H_{1C})) \end{aligned}$$(7) -

The preceding feature map \(H_{1S}\) is fed to a sigmoid layer to obtain the spatial-level attention weights b and \(1-b\) for \(H_3\) and \(H_{1C}\) respectively. These weights need to be resampled to the same number of channels as \(H_3\) and \(H_{1C}\), and then multiplied by these features to obtain the spatial-attention feature maps as:

$$\begin{aligned} \begin{aligned} F_{H3}&=(1-b) \otimes H_{3} \\ F_{H1C}&=b \otimes H_{1C} \end{aligned} \end{aligned}$$(8) -

Finally, the overall output of HAAB, i.e., \(H_{1H}\) is obtained as:

$$\begin{aligned} H_{1H}=conv2D_{1 \times 1}((F_{H3} \oplus F_{H1C})) \end{aligned}$$(9)The output of HAAB reflects the combined channel and spatial-level attention feature map, which can effectively capture the variable shifts due to different levels of inter-frame motion.

The output of HAAB is again fed to two DSConv2D layers with batch normalization as shown in the Fig. 1. The corresponding output is further refined to obtain channel-level features using a squeeze-and-excite-attention block as shown in the figure. This block adds an attention mechanism to the output feature maps for considering the inter-dependencies between them to improve the overall network performance36. The mechanism is explained as follows.

-

Squeeze: Each output feature map is squeezed, i.e., converted to a single numeric value by using GAP as shown in the figure. This operation returns the spatial average \(\mu\) of the feature matrix. Therefore, it returns a vector with the size as the number of output feature maps, which is 64 in this case.

-

Excite: The squeezed output vector is fed into a dense layer with GELU activation function. The corresponding output is fed to another dense layer with sigmoid activation, which captures complex inter-dependencies and correlations among the feature maps effectively. The corresponding output is the same size as the vector input, containing the learned relevant weights for each feature map.

-

Multiply (Scale): The outputs of excite mechanism are multiplied with the corresponding original convolutional feature maps to provide weighted adaptations for enhancing the relevant maps and suppressing the less-relevant maps. This adds an effective attention mechanism to further enhance the performance of the proposed network.

Finally, the weighted output of the squeeze-and-excite attention mechanism is given as input to the final layer of the network, i.e., conv2D with a single filter for predicting the motion corrected frames, as shown in Fig. 1.

Reference/ground truth

Ground truth is essential to train a supervised deep learning network for learning the feature representations and validating the network performance. However, as mentioned previously, the ground truth is not available in real-time US imaging, such as, for the dataset DT2 in this work. Therefore, we propose a scheme for the selection of reference frame to be used as the ground truth in training the proposed network as shown in Fig. 2. The reference frame is selected based on the correlation of each acquired frame with all other frames in the sequence. It can be observed from the figure that initially, the acquired \(d_z \times d_x\) IQ frames ranging from \(1-d_t\) are restructured into spatio-temporal form to obtain the matrix \(C_m\). The columns of the matrix contain the spatial information, i.e., the frame data and the rows contain the time series data, as shown in the figure. The covariance of the spatio-temporal matrix is computed to obtain a matrix CC with dimension \(d_t \times d_t\), which contains the correlation values of each frame with respect to all other frames in the sequence. Each diagonal value consists of the correlation of each frame with itself, resulting in the maximum value, as observed from the colormap of CC shown in the figure. Finally, the frame with the maximum correlation with respect to all other frames is obtained by computing row-wise/column-wise mean \(\textbf{M}\) of CC and extracting the index I of maximum value of \(\textbf{M}\), i.e., \(\textbf{M}_I\). This is the reference frame. The mathematical interpretation of the same is shown in the figure.

Results

This section presents the results of the proposed IFMoCoNet for inter-frame motion correction in contrast-free US quantitative microvessel imaging in terms of different evaluation metrics. Initially, the training procedure and hyper-parameters are presented, and subsequently, the evaluation metrics used in this work are described and presented for IFMoCoNet. We also demonstrate the microvasculature Doppler images extracted using the motion-corrected frames along with the corresponding evaluation metrics and illustrations. Further, an experimentation with ground truth and a comparative evaluation study is presented.

Learning IFMoCoNet

The proposed network is trained using the inter-frame motion-containing input IQ frames acquired from each subject, along with the selected reference IQ frame(s) as the ground truth. For each dataset, the frames of \(80\%\) of the subjects are used for training, \(10\%\) for validating, and the remaining \(10\%\) for testing the proposed network. The proposed network employs a set of learning parameters which are selected after optimal hyper-parameter tuning using Keras tuner and rigorous experimental assessment. Based on that, we use the number of filters/kernels as 1, 64, 128, 256 for the convolutional layers, each with a size of 3 × 3 and a stride of 1, along with batch sizes between 2 and 8 and epochs between 40 and 100. These parameters assist the network to learn the mapping between inter-frame motion-containing frames and the reference frame(s). Further, the proposed network uses Adam optimizer with a learning rate of 0.0005 for minimizing the loss function, i.e., mean square error, and maximizing the similarity metric, i.e., cosine similarity in this work.

Evaluation metrics

The efficiency of the proposed network is demonstrated in terms of different evaluation metrics, such as, Pearson correlation coefficient (PCC), structural similarity index (SSI), and mean square error (MSE). PCC is one of the most commonly used metric for matching two images/frames in image registration problems and disparity analysis37. It is mathematically computed for the predicted test output image frame with respect to the ground truth, i.e., reference frame as described in37. SSI has been extensively used for assessing the magnetic resonance (MR) image quality38. For the first time, this work utilizes this metric to assess the inter-frame motion corrected US image frames. It estimates the structural resemblance between two images in terms of the brightness and contrast of local frame pixel intensity patterns39. It is estimated between the local windows of test image frame and reference frame as described in39. SSI between the full images is estimated as the mean of all such local SSIM values40. MSE is another widely adopted quantitative measure used to analyze two images for the extent of fidelity or the amount of distortion41. It is calculated as the mean of the sum of squared difference between the pixel intensities of the test and the reference images39. To further demonstrate the capability of the proposed network, we estimate the mean correlation among frames, i.e., mean inter-frame correlation (MIFC) before and after using IFMoCoNet. It is computed as the mean of row/column-wise mean of the spatio-temporal matrix, and denotes the overall mean of PCC among the acquired frames.

IFMoCoNet evaluation metrics

An analysis is performed for the phantom data and 20 subjects in database DT1 to assess IFMoCoNet for correcting the simulated inter-frame motion. Further exhaustive analysis is performed for all subjects in database DT2 to demonstrate the capability of IFMoCoNet in correcting the real motion. The metrics are estimated for the motion-corrected frames of \(10\%\) test subjects with respect to the corresponding reference frames, for each subject in both the in-vivo databases. Further, to diminish the statistical ambiguity of the proposed network, 10 fold cross-validation is used for each dataset. Table 1 shows the metrics with respect to the reference frame(s) before and after inter-frame motion correction for all datasets. It is observable from the table that the proposed network achieves improved values of all metrics, demonstrating its effectiveness in registering the frames with respect to reference frame, for all the databases. It can be observed that there is a significant improvement in the metrics for both the simulated motion-containing datasets as compared to that for DT2. However, this is reasonable since the dataset DT2 contains real motion, which is challenging to suppress completely. Finally, the results indicate the capability of the proposed network in correcting real motion, and adaptability to the variations in the datasets.

All computation is performed on a workstation with 26 core CPU, 64 GB RAM, and Nvidia RTX A5500 GPU. The overall computational time involved in predicting a motion-corrected frame by the trained IFMoCoNet is around 15 ms and its calculation complexity is estimated in terms of the number of floating point operations, which is 56 × 109.

Frame-wise evaluation metrics

This sub-section presents the evaluation metrics estimated for each IQ frame in a selected subject in database DT2 containing substantial amount of motion. As an illustration, Fig. 3 displays the metric values estimated with respect to reference frame before (original) and after (IFMoCoNet) motion correction of all frames of subject number 5. It is evident from the black arrow in Fig. 3a that for the frames containing more motion as represented by the lower original PCC values, there is a considerable increase in the PCC after motion correction. However, the scenario is opposite for the frames with originally high values of PCC depicted by a black circle in the figure, which is apparent since there is a limited scope for improvement. Further, the black rectangular symbol in the figure represents the reference frame zone, thereby, the PCC is 1 for those frames. The similar variations can be observed for the other set of frames in the figure. Furthermore, the same interpretation can be drawn for the other two metrics illustrated in the figure.

Performance assessment of IFMoCoNet-predicted frames for microvasculature doppler image extraction

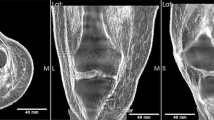

As mentioned previously, the motion-contaminated and motion-corrected frames estimated by the proposed IFMoCoNet are arranged into spatio-temporal correlation matrices and further utilized to extract the microvasculature Doppler images, for the simulated datasets. The matrices and Doppler images are compared with the corresponding original/ground truth counterparts in terms of MIFC and SSI respectively. Figure 4 shows these along with the B-mode frames extracted using the absolute value of corresponding IQ frames for the phantom ground truth data, data artificially contaminated with translational motion which increases by 0.005 pixels per frame and rotational motion which increases by \(0.015^{\circ }\) per frame, and the corresponding motion-corrected data in (a)–(c) respectively. The last B-mode frame in (b)-(i) can be observed to be shifted and rotated with respect to the first frame. Similar interpretation can be drawn for the intermediate frames. The corresponding spatio-temporal matrix displays the reduced MICF due to the induced motion as compared to that for the ground truth data in (a)-(ii). This further results in the degradation of microvasculature Doppler image which is structurally different with respect to that for the ground truth by \(36\%\). Finally, it can be observed from the figure in (c) that the IFMoCoNet-based motion corrected frames are recovered, and the MICF as well as the SSI of the Doppler image are considerably improved with respect to that using the motion corrupted data, i.e., the microvasculature is recovered with \(91\%\) similarity with respect to that using the ground truth data. This accounts for \(336\%\) and \(42\%\) increase in MICF ans SSI respectively with respect to that for the motion-contaminated data. Finally, this demonstrates the effectiveness of the proposed network for inter-frame motion correction in the phantom data.

Illustration of (i) spatio-temporal correlation matrices, (ii) microvasculature Doppler images, (iii) zoomed in portion of the microvasculature with arrows pointing to the alterations in the vessel structures, and (iv) Intensity profile along the green line marked in (iii) for a subject in the in-vivo breast dataset with (a) motion-free ground truth data, (b) artificial motion-contaminated data, and (c) motion-corrected data.

Figure 5 presents the spatio-temporal correlation matrices and the corresponding microvasculature Doppler images reconstructed using the ground truth frames, motion-contaminated frames, and the motion corrected frames of a subject taken from the in-vivo breast dataset in (a), (b), and (c) respectively. It can be observed from the figure that the spatio-temporal matrix in (a)-(i) that the subject has initially minimal inter-frame motion, with majority of the frames well correlated as indicated by the mean MIFC of 0.82. However, after introducing artificial motion, which is a combination of translational and rotational motion that increases by 0.005 pixels and \(45^{\circ }\) per frame respectively, it is reduced to 0.58, as indicated in (b)-(i). As a result, the corresponding microvasculature Doppler image in (b)-(ii) can be observed to be degraded with respect to that in (a)-(ii), which is also indicated by the sharp decline in SSI of the image. However, (c)-(i),(ii) shows that the proposed method can effectively correct the inter-frame motion and thereby recover the microvasculature Doppler with almost the same MIFC and SSI as that for the ground truth. For better clarity, we have shown zoomed in portions of the microvasculature Doppler images in (iii). The changes can be observed in the structure of many vessels after motion, and it even leads to the loss of some vessels as indicated by the arrows. However, the proposed IFMoCoNet-based motion correction helps to recover these missing vessels as observed from (iii)-(c). Finally, we estimate the intensity profiles along the line marked in the zoomed images, to demonstrate the effectiveness of IFMoCoNet in retaining the original intensities along that path, which were decreased and significantly changed due to the inter-frame motion, as observed in (iv).

Figure 6 shows the spatio-temporal correlation matrices and the corresponding microvasculature Doppler images obtained from the original motion-containing frames and the IFMoCoNet-corrected frames in (a) and (b) respectively, for a subject in the in-vivo thyroid dataset. It can be observed from (i) that the MICF after using IFMoCoNet is considerably improved, accounting for an overall increase of \(86\%\) with respect to that for the original data. This implies that the motion-corrected frames are well correlated, which further indicates the effectiveness of the proposed network. However, since the ground truth microvasculature Doppler images are not available for this dataset with realistic motion, it is difficult to evaluate the image in (b)-(ii). In comparison to the image in (c)-(ii), it is still observable that microvasculature for motion-corrected data is improved, as the vessels are less blurred.

Experimentation with ground truth

An experimental analysis is performed in this subsection to demonstrate the superiority of the proposed reference frame-based ground truth selection in database DT2 for IFMoCoNet. For this purpose, the existing two-stage image registration method for motion correction is utilized to extract the ground truth data for IFMoCoNet as follows. Initially, the two-stage method proposed by Harput et al. in12 is implemented in this work to obtain the motion corrected US frames for all subjects. The corresponding motion corrected frames are then fed as the ground truth data inputs for training IFMoCoNet. An average PCC of 0.80, MSE of 0.0004, SSI of 0.76, and MICF of 0.85 is achieved with respect to reference frame for the same \(10\%\) test data used previously. It can be observed that the proposed IFMoCoNet is able to correct motion irrespective of the ground truth. However, IFMoCoNet trained with the proposed reference frame-based ground truth is marginally superior to that with the two stage-based ground truth, as observed previously from Table 1. Further, the computational cost and time involved in implementing the two stage motion correction for frame registration and ground truth extraction is remarkably high as compared to that in selecting the reference frame based on spatio-temporal correlation for ground truth. Therefore, the proposed reference frame-based ground truth extraction outperforms the conventional motion correction method-based ground truth extraction for training IFMoCoNet.

Model interpretability

This section provides an insight of the internal workings of IFMoCoNet layers in terms of the feature activation maps. For this purpose, we extract the intermediate layer output maps of a test IQ input in the phantom dataset with horizontal, vertical, and rotational motion. Supplementary Fig. S1 displays the activation maps of the network layers, starting from first layer, i.e., convolutional layer 1 (conv_1) to the final layer which is the seventh convolutional layer (conv_7). Since the input is IQ data, it is challenging to interpret these maps as also observed from the ground truth data itself, however, we have marked the areas which show the shifts. The two small blue horizontal bars show the vertical downwards shifts for the phantom data, and the red filled gap shows the horizontal right shift. It can be clearly observed that as the feature maps pass through the layers, the amount of shifts decreases, i.e., the data is almost shifted back in the reverse directions. Further, the outputs of HAAB and squeeze and excite (SE) blocks demonstrate these major changes in the shifts, and focus on the actual data, rather than the background. This helps to visualize the main portion of the phantom data, while diminishing the background part as seen from lower intensities of background. Finally, it can be observed that the output is shifted back. Although it is difficult to clearly visualize the maps because of IQ data, they still give an insight of the model internal workings and its capability to extract valuable features. The corresponding feature maps of the final dsconv_7 layer are extracted for all frames are further post-processed by using singular value decomposition and top-hat filtering techniques to extract the Doppler image.

Comparative evaluation study

This sub-section demonstrates the performance comparison of the proposed IFMoCoNet with the existing two-stage method for motion correction. The two stage motion estimation is a combination of affine/global and non-rigid registration as mentioned in the introduction, which helps in increased range for non-rigid motion correction with a boost in speed and convergence12. Further, its implementation code is publicly available for download12. Therefore, the two-stage method is implemented for comparison of IFMoCoNet for inter-frame motion correction using all the datasets in this work. The results indicate that the method is able to register the acquired frames with the reference frame with PCCs of 0.95, 0.91, 0.80; MSEs of 0.0002, 0.0003, 0.0004; SSIs of 0.88, 0.86, 0.72; and MICFs of 0.95, 0.90, 0.84, for phantom dataset, DT1, and DT2 respectively. Further, the computational time for estimating a motion-corrected frame is approximately 75 s. It can be observed from the metric values that the proposed method corrects motion with close proximity to the existing two-stage method, however, there is an enormous difference in the computational time taken by both methods. The proposed method is able to achieve slightly superior performance for both simulated as well as real motion with a significantly low latency, i.e., 15ms. This results in an overall reduction by a factor of 5000 in the computational time taken by IFMoCoNet, making it more feasible in real-time scenario, as compared with the existing method. Finally, this demonstrates the remarkably low time complexity of IFMoCoNet without any tradeoff in performance with respect to existing method.

We further investigate the proposed IFMoCoNet with respect to some other cutting-edge deep learning architectures to demonstrate its reliability for inter-frame motion correction. For this purpose, we implement generative adversarial network (GAN), self-attention mechanism-based CNN, and U-Net, which are commonly used models in image analysis. The training and testing procedure is same as that for IFMoCoNet, and the estimated MIFC values obtained by these models with respect to proposed network are presented in Fig. 7. It can be observed from the figure that IFMoCoNet surpasses GAN and self-attention-CNN and performs marginally superior to U-Net for the phantom data and DT1. For the thyroid data DT2, it achieves marginally higher MIFC as compared to that achieved by GAN, and outperforms the other two models. Further, we calculate the number of floating-point operations (FLOPs) to analyze the computational complexities of these models. The number of FLOPs for IFMoCoNet is 56 G, while that for GAN, self-attention CNN, and U-Net are 116 G, 59 G, and 72 G respectively. This further indicates that the proposed network is able to achieve improved MIFC with less number of FLOPs, demonstrating its resource-efficiency. Finally, it is demonstrable that IFMoCoNet outperforms these models for inter-frame motion correction throughout the datasets used in this work, indicating its reliability with respect to these models.

Discussion

This work presents a comprehensive analysis of the proposed IFMoCoNet for inter-frame motion correction in high frame rate US imaging without contrast agents, along with the selection of ground truth. The simplistic architecture of IFMoCoNet highlights its low time and computational complexity. The evaluation metrics presented in Table 1 demonstrate that the proposed network is able to capture the variations in inter-frame motion with respect to different subject data and effectively correct artificially induced as well as real motion. The network is able correct different levels of translational and rotational motion, indicating its robustness. Further, it is capable of recognizing frames with significant real motion by automatically learning their feature representations and effectively correcting them. The frame-specific analysis for DT2 in Fig. 3 provides extraneous insight of the performance for each frame in the acquisition. It further demonstrates that the proposed network is more efficient in correcting motion of the frames containing considerable motion, which makes it suitable for real time scenarios where motion is unavoidable.

The spatio-temporal correlation matrices and microvasculature Doppler images extracted for the phantom and in-vivo breast data before and after motion correction in Figs. 4 and 5 demonstrates that the motion corrected frames retain their correlation and can reconstruct the microvasculature with similar structure as the ground truth. Moreover, they can recover the the microvessels which which were lost in the case of motion. Further analysis of the in-vivo thyroid dataset in Fig. 6 demonstrates the scenario for real motion. Although, the ground truth is absent which makes it difficult to compare the microvasculature, the spatiotemporal correlation matrix validates the capability of IFMoCoNet to correct real motion. The experimental analysis of modifying the ground truth data for DT2 to train IFMoCoNet demonstrates that a significant amount of computation and time can be saved by directly utilizing the reference frame for the ground truth. Finally, the comparative evaluation study investigates the overall performance of the proposed network with respect to traditional two-stage method proposed in12. It is apparent that the proposed network can achieve comparable performance for both simulated and real motion in a few milliseconds, whereas the two-stage method takes about 5000 times more time to predict the motion corrected frame. Further, the direct estimation of motion corrected frames rather than the displacement matrices as used in an existing work14 saves the post-processing time. The exhaustive comparison with some of the cutting edge deep learning methods further demonstrates the superiority of IFMoCoNet in terms of performance as well as low computational resource requirements. Finally, the meticulous evaluation using two different databases with simulated inter-frame motion and another sufficiently large clinical data with real motion demonstrates the robustness and adeptness of the proposed IFMoCoNet. The performance for database DT1 with respect to actual ground truth can also validate the analysis of proposed IFMoCoNet for the database DT2 with real motion, along with the performance of DT2 with respect to the selected reference frames.

The proposed IFMoCoNet may be further validated on a variety of datasets, as a part of future studies. Additionally, the competency of the proposed network may be assessed by extracting the microvasculature from the corresponding motion corrected data of more subjects. A few experiments may also be conducted to further examine the proposed network, using slight variations in the layers.

Conclusion

In this work, a deep learning-based simplistic network, i.e., IFMoCoNet is proposed for fast inter-frame motion correction in contrast-free US quantitative microvessel imaging. The validation studies on artificial inter-frame motion contaminated frames in phantom data and an in-vivo breast data DT1 indicate that overall PCCS of 0.97, 0.91, MSEs of 0.0001, 0.0002, SSI of 0.90, 0.88, and MIFCs of 0.98, 0.93 are achieved for test cases. Further extensive evaluation of the proposed network on a sufficiently large number of clinical images with real motion in database DT2 demonstrate that it achieves an PCC of 0.81, MSE of 0.0004, SSI of 0.78, and MIFC of 0.86. Further analysis of the frame-wise evaluation metrics for database DT2 indicate that the network is able to capture variations in motion among different frames and effectively correct them. The motion corrected data-based microvasculature Doppler images are significantly improved with respect to that based on the motion-containing data, for simulated datasets. These simulated motion-nased results can validate the proposed method for the real motion in thyroid dataset, along with the spatio-temporal matrix which demonstrates the improvement in the inter-frame correlations after motion correction. The superiority of the proposed scheme for spatio-temporal correlation-based reference frame selection is demonstrated by the experimental analysis using the traditional two-stage method for ground truth extraction. Further comparative evaluation with respect to the two-stage method indicates that the proposed network can predict motion corrected frame with approximately same performance in 15ms. Thereby, a substantial amount of time and computation can be saved by using the straightforward/low complex architecture of IFMoCoNet with minuscule tradeoff in performance for motion correction, making it suitable for real time applications. Finally, the proposed network is feasible for correcting realistic motion in contrast-free US quantitative microvessel imaging.

Data availability

The data that support the findings of this study are available from the corresponding author upon reasonable request. The requested data may include figures that have associated raw data. Because the study was conducted on human volunteers, the release of patient data may be restricted by Mayo policy and needs special request. The request can be sent to: Karen A. Hartman, MSN, CHRC | Administrator - Research Compliance| Integrity and Compliance Office | Assistant Professor of Health Care Administration, Mayo Clinic College of Medicine & Science | 507-538-5238 | Administrative Assistant: 507-266-6286 | [email protected] Mayo Clinic | 200 First Street SW | Rochester, MN 55905 | mayoclinic.org.m We do not have publicly available Accession codes, unique identifiers, or web links.

Change history

18 December 2024

A Correction to this paper has been published: https://doi.org/10.1038/s41598-024-82620-3

References

Zhao, S. X. et al. A local and global feature disentangled network: Toward classification of benign-malignant thyroid nodules from US image. IEEE Trans. Med. Imaging 41(6), 1497–1509 (2022).

Evain, E. et al. Motion estimation by deep learning in 2D echocardiography: Synthetic dataset and validation. IEEE Trans. Med. Imaging 41(8), 1911–1924 (2022).

Ternifi, R. et al. Quantitative biomarkers for cancer detection using contrast-free US high-definition microvessel imaging: Fractal dimension, Murray’s deviation, bifurcation angle and spatial vascularity pattern. IEEE Trans. Med. Imaging 40(12), 3891–3900 (2021).

Bayat, M. et al. Background removal and vessel filtering of non-contrast US images of microvasculature. IEEE Trans. Biomed. Eng. 66(3), 831–842 (2019).

Ghavami, S., Bayat, M., Fatemi, M. & Alizad, A. Quantification of morphological features in non-contrast-enhanced US microvasculature imaging. IEEE Access. 8, 18925–18937 (2020).

Ternifi, R. et al. Ultrasound high-definition microvasculature imaging with novel quantitative biomarkers improves breast cancer detection accuracy. Eur. Radiol. 32, 7448–7462 (2022).

Sabeti, S. et al. Morphometric analysis of tumor microvessels for detection of hepatocellular carcinoma using contrast-free US imaging: A feasibility study. Front. Oncol. 13, 1121664 (2023).

Gu, J. et al. Volumetric imaging and morphometric analysis of breast tumor angiogenesis using a new contrast-free US technique: a feasibility study. Breast Cancer Res. 24(1), 1–5 (2022).

Kurti, M. et al. Quantitative biomarkers derived from a novel contrast-free US high-definition microvessel imaging for distinguishing thyroid nodules. Cancers 15(6), 1888 (2023).

Nayak, R. et al. Non-invasive small vessel imaging of human thyroid using motion-corrected spatiotemporal clutter filtering. Ultrasound Med. Biol. 45(4), 1010–1018 (2019).

Harput, S. et al. Two stage sub-wavelength motion correction in human microvasculature for CEUS imaging. in 2017 IEEE International Ultrasonics Symposium (IUS) IEEE. 1–4 (2017).

Harput, S. et al. Two-stage motion correction for super-resolution US imaging in human lower limb. IEEE Trans. Ultrason. Ferroelectr. Freq. Control 65(5), 803–814 (2018).

Ta, C. N. et al. 2-tier in-plane motion correction and out-of-plane motion filtering for contrast-enhanced US. Invest. Radiol. 49(11), 707–719 (2014).

Oezdemir, I. et al. Faster motion correction of clinical contrast-enhanced US imaging using deep learning. in 2020 IEEE International Ultrasonics Symposium (IUS) (2020).

Barrois, G. et al. New reference-free, simultaneous motion-correction and quantification in dynamic contrast-enhanced US. in2014 IEEE International Ultrasonics Symposium (2014).

Hingot, V. et al. Subwavelength motion-correction for ultrafast US localization microscopy. Ultrasonics 77, 17–21 (2017).

Hao, Y. et al. Non-rigid motion correction for US localization microscopy of the liver in vivo. in2019 IEEE International Ultrasonics Symposium (IUS), 2263–2266 (2019).

Nayak, R. et al. Non-contrast agent based small vessel imaging of human thyroid using motion corrected power Doppler imaging. Sci. Rep. 8(1), 15318 (2018).

Taghavi, I. et al. In vivo motion correction in super-resolution imaging of rat kidneys. IEEE Trans. Ultrason. Ferroelectr. Freq. Control 68(10), 3082–3093 (2021).

Stanziola, A. et al. Motion correction in contrast-enhanced US scans of carotid atherosclerotic plaques. in 2015 IEEE 12th International Symposium on Biomedical Imaging (ISBI) (2015).

Stanziola, A. et al. Motion artifacts and correction in multipulse high-frame rate contrast-enhanced US. IEEE Trans. Ultrason. Ferroelectr. Freq. Control 66(2), 417–420 (2018).

Zhang, J. et al. Respiratory motion correction for liver contrast enhanced US by automatic selection of a reference image. Med. Phys. 46(11), 4992–5001 (2019).

Brown, K. G. et al. Deep learning of spatiotemporal filtering for fast super-resolution US imaging. IEEE Trans. Ultrason. Ferroelectr. Freq. Control 67(9), 1820–1829 (2020).

Zheng, S. et al. A deep learning method for motion artifact correction in intravascular photoacoustic image sequence. IEEE Trans. Med. Imaging 42(1), 66–78 (2022).

Shi, L. et al. Automatic inter-frame patient motion correction for dynamic cardiac PET using deep learning. IEEE Trans. Med. Imaging 40(12), 3293–3304 (2021).

Chen, G. et al. AAU-Net: An adaptive attention U-Net for breast lesions segmentation in ultrasound images. IEEE Trans. Med. Imaging 42(5), 1289–1300 (2023).

Nayak, R. et al. Quantitative assessment of ensemble coherency in contrast-free US microvasculature imaging. Med. Phys. 48(7), 3540–3558 (2021).

Adusei, S. et al. Custom-made flow phantoms for quantitative US microvessel imaging. Ultrasonics 134, 107092 (2023).

Goodfellow, I. et al. Deep Learning (MIT Press, 2016).

Bai, L., Zhao, Y. & Huang, X. A CNN accelerator on FPGA using depthwise separable convolution. IEEE Trans. Circuits Syst. II Express Briefs 65(10), 1415–1419 (2018).

Hendrycks, D. & Gimpel, K. Gaussian error linear units (gelus). arXiv preprint arXiv:1606.08415 (2016).

Saini, M., Satija, U. & Upadhayay, M. D. DSCNN-CAU: Deep-learning-based mental activity classification for IoT implementation toward portable BCI. IEEE Internet Things J. 10(10), 8944–8957 (2023).

Chollet, F. Xception: Deep learning with depthwise separable convolutions. in In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 1251–1258 (2017).

Howard, A. G. et al. Mobilenets: Efficient convolutional neural networks for mobile vision applications. arXiv preprint arXiv:1704.04861. (2017).

Saini, M. & Satija, U. On-Device implementation for deep-learning-based cognitive activity prediction. IEEE Sens. Lett. 6(4), 1–4 (2022).

Hu, J., Shen, L. & Sun, G. Squeeze-and-excitation networks. in 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition 7132–7141 (2018).

Neto, A. M. et al. Image processing using Pearson’s correlation coefficient: Applications on autonomous robotics. in 2013 13th IEEE International Conference on Autonomous Robot Systems (2013).

Mason, A. et al. Comparison of objective image quality metrics to expert radiologists’ scoring of diagnostic quality of MR images. IEEE Trans. Med. Imaging 39(4), 1064–1072 (2020).

Akasaka, T. et al. Optimization of regularization parameters in compressed sensing of magnetic resonance angiography: Can statistical image metrics mimic radiologists’ perception?. PLoS ONE 11(1), e0146548 (2016).

Mudeng, V. et al. Prospects of structural similarity index for medical image analysis. Appl. Sci. 12(8), 3754 (2022).

Wang, Z. & Bovik, A. C. Mean squared error: Love it or leave it? A new look at signal fidelity measures. IEEE Sign. Process. Mag. 26(1), 98–117 (2009).

Acknowledgements

The authors would like to thank all the past and present members, sonographers, and study coordinators who helped for a period of time during the years of this study. The authors also thank Dr. Redouane Ternifi and Shaheeda Adusei for flow phantom data acquisition. This work was supported in part by grants from the National Cancer Institute, R01CA239548 (A. Alizad and M. Fatemi), and the National Institute of Biomedical Imaging and Bioengineering, R01EB017213 (A. Alizad and M. Fatemi), both from the National Institutes of Health (NIH). The content is solely the responsibility of the authors and does not necessarily represent the official views of the NIH. The NIH did not have any additional role in the study design, data collection and analysis, decision to publish, or preparation of the manuscript.

Author information

Authors and Affiliations

Contributions

A.A. contributed to conceptualization, methodology, investigation, visualization, resources, funding acquisition, supervision, project administration, reviewing and editing the manuscript; M.F. contributed to conceptualization, methodology, visualization, resources, funding acquisition, supervision, and project administration, reviewing and editing the manuscript; M.S. wrote the original draft of manuscript, contributed to data curation, methodology, visualization, formal analysis, software, development of algorithms, reviewing and editing the manuscript; All authors reviewed the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

The original online version of this Article was revised: The original version of this Article omitted an Affiliation for Azra Alizad. Full information regarding the correction made can be found in the correction for this Article.

Supplementary Information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Saini, M., Fatemi, M. & Alizad, A. Fast inter-frame motion correction in contrast-free ultrasound quantitative microvasculature imaging using deep learning. Sci Rep 14, 26161 (2024). https://doi.org/10.1038/s41598-024-77610-4

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-024-77610-4