Abstract

For light-element materials, X-ray phase contrast imaging provides better contrast than absorption imaging. The Fourier transform method requires only a single exposure but typically yields lower image quality, whereas the phase-stepping method offers higher image quality at the cost of longer acquisition times and a higher radiation dose. To rapidly reconstruct low-dose X-ray phase contrast images, this study developed a model based on Generative Adversarial Networks (GANs) that incorporates custom layers and a self-attention mechanism to recover high-quality phase contrast images. We generated a simulated dataset from Kaggle’s X-ray data to train the GAN. In simulated experiments, the GAN achieved substantially higher Peak Signal-to-Noise Ratio (PSNR) and Structural Similarity Index (SSIM) than the Fourier transform method. To further validate our method, we applied it to fringe images acquired from three phase contrast systems: a single-grating phase contrast system, a Talbot-Lau system, and a cascaded grating system. The results show that our method restored high-quality phase contrast images from experimentally acquired fringe images, although these results were obtained with relatively simple samples.

Introduction

Since Röntgen’s discovery of X-rays, X-ray imaging has attracted sustained interest worldwide and has shown great promise in areas such as medical diagnosis and industrial inspection1,2,3. However, conventional X-ray absorption imaging is less effective for materials composed of light elements such as carbon, hydrogen, oxygen, and nitrogen, because these elements absorb X-rays only weakly. Their phase shift cross-section (phase shift coefficient), by contrast, is about three orders of magnitude larger than their absorption cross-section (absorption coefficient)4. Measuring the phase shift instead of the attenuation therefore gives far higher sensitivity. X-ray phase contrast imaging has accordingly been shown to produce high-quality images of weakly absorbing materials. It measures the refraction angle of X-rays after they pass through an object and uses this angle to determine the phase changes of the transmitted X-rays.

Over the past few decades, several X-ray phase-contrast imaging methods have been developed. They fall mainly into four categories: crystal interferometry, diffraction-enhanced imaging, in-line (propagation-based) phase contrast, and grating-based phase-contrast imaging5,6,7,8. Among these, grating-based phase-contrast imaging has become a particularly active area of research.

In 2003, Momose et al. conducted X-ray phase-contrast imaging experiments at a synchrotron facility using a Talbot interferometer composed of a phase grating and an absorption grating9. At that time, grating-based X-ray phase-contrast imaging still relied on synchrotron sources. In 2006, Pfeiffer et al. proposed a method based on the Talbot-Lau principle. By placing an absorption (source) grating in front of a conventional X-ray source, they created an array of individually coherent line sources, resulting in the Talbot-Lau interferometer10. This development extended X-ray phase-contrast imaging beyond synchrotron facilities to standard laboratory and clinical environments. In 2018, Li Ji’s team introduced a dual-phase grating imaging system that relaxed the fabrication requirements for absorption gratings and enabled large-field X-ray phase-contrast imaging11. This line of work was further extended by the cascaded Talbot-Lau interferometer (CTLI), composed of a TLI and an inverse TLI, in which the self-image of the TLI serves as the source for the inverse TLI. This configuration eliminates the need for small-period, high-aspect-ratio absorption gratings and offers greater versatility and tunability for large-field biomedical imaging27. Furthermore, recent studies have shown that cascaded TLIs with large-period absorption gratings can provide multicontrast imaging (absorption, differential phase contrast, and normalized visibility contrast) from a single exposure when combined with Fourier analysis of moiré fringe patterns. These experiments resolved fine structures in polytetrafluoroethylene (PTFE) samples, supporting the potential of this approach for medical imaging28.

Traditional phase-contrast signal extraction algorithms include the phase-stepping method and the Fourier method. The phase-stepping method extracts phase information from multiple exposures acquired at successive grating positions, but it has several drawbacks:

- long imaging times;
- phase retrieval errors caused by inconsistent step sizes;
- additional phase errors caused by grating non-uniformity;
- image blurring due to the finite X-ray source focal spot size9,12,13,14.

The Fourier method requires only a single exposure and therefore shorter imaging times. However, its performance depends on the window function used to isolate the first-order spectrum. If the zero-order and first-order spectra overlap, imaging quality degrades significantly. A narrow window blurs image edges, whereas a wide window admits overlapping spectral content that produces artifacts15,16,17.
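The window-function trade-off in the Fourier method can be illustrated with a minimal NumPy sketch. The sketch makes several assumptions:

- The fringes are vertical, with a known period along x.
- The first-order peak is isolated with a rectangular window, and the carrier is then removed.
- A sample-free reference is subtracted.

The function names `fourier_phase` and `differential_phase`, and the default window half-width (half the carrier frequency), are illustrative choices, not the implementation used in this work.

```python
import numpy as np


def fourier_phase(img, period_px, half_width=None):
    """Wrapped fringe phase from a single fringe image (Fourier method).

    Assumes fringes vary along x (axis 1) with a known period in pixels.
    A rectangular window of +/- half_width (cycles/pixel) around the
    positive first-order peak isolates that order.
    """
    ny, nx = img.shape
    spec = np.fft.fft2(img)
    fx = np.fft.fftfreq(nx)                  # cycles/pixel along x
    fy = np.fft.fftfreq(ny)                  # cycles/pixel along y
    f0 = 1.0 / period_px                     # first-order carrier frequency
    if half_width is None:
        half_width = f0 / 2                  # stop halfway to the zero order
    FX, FY = np.meshgrid(fx, fy)             # shape (ny, nx), matching spec
    window = (np.abs(FX - f0) < half_width) & (np.abs(FY) < half_width)
    first_order = np.fft.ifft2(spec * window)
    x = np.arange(nx)
    # Multiply by the conjugate carrier so only the object-induced phase remains.
    demod = first_order * np.exp(-2j * np.pi * f0 * x)[None, :]
    return np.angle(demod)


def differential_phase(sample_img, ref_img, period_px, half_width=None):
    """Sample-minus-reference phase, wrapped to (-pi, pi]."""
    dphi = (fourier_phase(sample_img, period_px, half_width)
            - fourier_phase(ref_img, period_px, half_width))
    return np.angle(np.exp(1j * dphi))
```

Shrinking `half_width` suppresses leakage from the zero order but also discards high spatial frequencies of the object signal. This is the edge-blurring effect described above.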

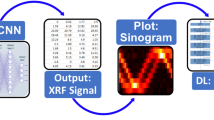

Because of its powerful learning capabilities, deep learning is a promising tool for image reconstruction in X-ray phase contrast imaging. Generative Adversarial Networks (GANs) are widely used for image-to-image translation tasks such as style transfer, and they can transform images from one domain to another18,19. In this study, we leverage this cross-domain transformation capability of GANs to extract phase-contrast signals and restore high-quality phase-contrast images.

Principles and methods

In this study, we developed an X-ray phase-contrast image restoration model using a GAN framework, specifically targeting the extraction and restoration of X-ray phase-contrast signals. In our model design, the source domain images are defined as stripe signal images containing object information, while the target domain images are the corresponding object phase-contrast images. This setup enables the model to learn the mapping relationship from stripe signals to phase-contrast images, thereby achieving the restoration of high-quality phase-contrast images from stripe images containing object information.

The GAN consists of two primary components: the generator and the discriminator. The generator aims to produce synthetic images that closely resemble real images, thereby deceiving the discriminator. Conversely, the discriminator’s task is to accurately distinguish between real images from the dataset and those generated by the generator. The training mechanism of the GAN is illustrated in Fig. 1.

In grating-based phase-contrast imaging systems, the gratings play a central role: they modulate the X-ray wavefront passing through the sample to produce stripe images that carry the sample’s information. This modulation makes it possible to obtain phase-contrast and dark-field images, revealing microscopic structures and tissue differences that are difficult to capture with conventional absorption imaging. Phase gratings introduce periodic phase shifts into the X-ray wavefront, and the Talbot effect converts this phase modulation into intensity modulation at the detector plane. Because the sample distorts this intensity pattern, detailed information about its internal structure can be extracted from the recorded image.

In our study, we used three types of X-ray grating-based phase-contrast systems for both simulations and real experiments. These three systems are shown in Fig. 2a–c, respectively: the single-grating projection system, the Talbot-Lau system, and the cascaded grating system.

The single-grating projection system uses only an absorption grating. Here the grating does not modulate the phase directly. Instead, it spatially modulates the intensity of the X-ray beam that passes through the object, so that the object’s effect appears as a change in the projected stripe pattern. The grating is placed between the X-ray source and the detector. Under micro-focus X-ray source irradiation, the X-rays passing through the absorption grating form grating projection stripe images. The period of these stripes is determined by the absorption grating period and the geometric magnification. Placing the sample behind the absorption grating produces grating projection stripe images that carry sample information.

The Talbot-Lau system is an X-ray phase-contrast imaging configuration suited to conventional X-ray sources. It combines three gratings (the source grating, the phase grating, and the analyzer grating) to overcome the low spatial coherence of conventional X-ray sources. The source grating, placed near the X-ray source, divides the incoherent beam into an array of line sources, each sufficiently coherent for the subsequent phase modulation. The phase grating applies a periodic phase modulation to these beams, forming self-images. Placing the sample behind the phase grating produces self-image stripe patterns that carry sample information. Finally, the analyzer grating converts these self-image stripes into moiré fringe images. This allows the X-ray detector to capture the stripe variations caused by the sample and thus to obtain the sample’s absorption, phase-contrast, and scattering (dark-field) images.

The cascaded grating system offers practical advantages for X-ray phase-contrast imaging. It can be viewed as a combination of a conventional Talbot-Lau interferometer (TLI) and an inverse Talbot-Lau interferometer (ITLI). The TLI part includes a conventional X-ray source, the source grating G0, and the phase grating G1. The ITLI part includes a virtual source formed by the TLI self-imaging effect, the phase grating G2, and the absorption grating G3. Owing to the Talbot effect, G1 produces self-image stripes at a specific distance, and these stripes act as a small-period array source that illuminates the ITLI. This virtual source removes the need to fabricate small-period, high-aspect-ratio absorption gratings. It also allows the ITLI to generate stripes with periods large enough to be resolved. The TLI and ITLI are therefore tightly coupled: the self-image stripes of the TLI are not only an output of the TLI but also the illumination source required by the ITLI.

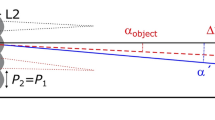

The following formulas reveal how X-rays interact with objects to produce images in three phase-contrast imaging systems: the single-grating projection system, the Talbot-Lau system, and the cascaded grating system:
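Assuming the standard grating-interferometry formulation of Pfeiffer et al.10, Eqs. (1)–(3) can be written as shown below. The symbols are as defined in the paragraphs that follow, and \(\Phi(m,n)\) (introduced here) denotes the object’s projected phase profile.

\[
I(m,n,x_g) = \sum_{i} a_i(m,n)\cos\left(\frac{2\pi i}{p}x_g + \phi_i(m,n)\right) \approx a_0(m,n) + a_1(m,n)\cos\left(\frac{2\pi}{p}x_g + \phi_1(m,n)\right) \tag{1}
\]

\[
\phi_1(m,n) = \frac{\lambda d}{p}\,\frac{\partial \Phi(m,n)}{\partial x} \tag{2}
\]

\[
\phi(m,n) = \phi_1^{s}(m,n) - \phi_1^{r}(m,n) \tag{3}
\]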

Equation (1) describes the intensity recorded at each detector pixel \((m,n)\) as a function of the lateral grating displacement \(x_{g}\). Here \(a_{i} \left( {m,n} \right)\) are the amplitude coefficients, \(\phi_{i} \left( {m,n} \right)\) are the phase coefficients, and \(p\) is the grating period. This periodic variation in intensity directly reflects the modulation of the X-ray wavefront by the sample and the grating system.

Equation (2) shows that the differential phase-contrast signal is proportional to the gradient of the object’s projected wavefront phase profile. It is given by the phase \(\phi_{1} \left( {m,n} \right)\) of the intensity oscillation at each pixel, where \(\lambda\) is the X-ray wavelength and \(d\) is the distance from the grating to the detector. Imperfections of the imaging system or the incident X-ray wavefront also contribute to this phase. To remove them, the differential phase-contrast image of the object alone is obtained as the difference between measurements with and without the sample, as shown in Eq. (3):

Here, the superscripts \(s\) and \(r\) represent the measurement states with and without the sample, respectively.
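A minimal NumPy sketch shows how Eqs. (1)–(3) are typically evaluated from a phase-stepping scan. A discrete Fourier transform along the stepping axis gives \(a_0\), \(a_1\), and \(\phi_1\) at each pixel, and the phase of the reference scan is then subtracted as in Eq. (3).

The sketch assumes N ≥ 3 equally spaced steps over one grating period (N = 6 for the six-step method used later). `phase_gradient` inverts Eq. (2) under the assumed standard form \(\phi_1 = (\lambda d / p)\,\partial\Phi/\partial x\). All function names are hypothetical.

```python
import numpy as np


def phase_stepping(stack):
    """Per-pixel first-harmonic analysis of an N-step phase-stepping scan.

    stack has shape (N, H, W); frame k is assumed to be recorded at grating
    position x_g = k * p / N, i.e. N >= 3 equal steps over one period.
    Returns a0, a1 and phi1 of Eq. (1), each of shape (H, W).
    """
    n = stack.shape[0]
    spec = np.fft.fft(stack, axis=0)      # DFT along the stepping axis
    a0 = np.abs(spec[0]) / n              # mean intensity
    a1 = 2.0 * np.abs(spec[1]) / n        # amplitude of the first harmonic
    phi1 = np.angle(spec[1])              # phase of the first harmonic
    return a0, a1, phi1


def differential_phase_contrast(sample_stack, ref_stack):
    """Eq. (3): sample-minus-reference phase, wrapped to (-pi, pi]."""
    phi_s = phase_stepping(sample_stack)[2]
    phi_r = phase_stepping(ref_stack)[2]
    return np.angle(np.exp(1j * (phi_s - phi_r)))


def phase_gradient(phi, wavelength, d, p):
    """Invert the assumed form of Eq. (2): dPhi/dx = p * phi / (wavelength * d)."""
    return p * phi / (wavelength * d)
```

The ratio `a1 / a0` from the same analysis gives the fringe visibility, which is the quantity used for dark-field contrast.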

Design of the phase-contrast image restoration generative adversarial network

We adopted the Generative Adversarial Network (GAN) model shown in Fig. 3. In this model, the generator uses an encoder-decoder structure based on U-Net20, while the discriminator is built on a deep convolutional neural network architecture21. The primary task of the generator is to learn the mapping from stripe images containing object information \(x\) to high-quality phase-contrast images \(y\), denoted as \(\left\{ {G:x \to y} \right\}\).

The function of the discriminator is to distinguish between the images produced by the generator \(G_{x}\) and the high-quality phase-contrast images \(y\), outputting a probability map containing “real” or “fake” information \(z\), denoted as \(\left\{ {D:\left\{ {G_{x} ,y} \right\} \to z} \right\}\).

As shown in the design diagram in Fig. 3, the generator begins with a series of eight convolutional layers for downsampling. These layers progressively reduce the spatial dimensions of the image while increasing the number of channels. Each convolutional layer is followed by batch normalization and a LeakyReLU activation function, which add non-linearity and help stabilize training. The number of filters increases from 64 to 512, enabling the network to extract features at multiple levels, from simple to complex.

After deep feature extraction, the model applies a self-attention layer. Self-attention was first developed for natural language processing and has since been adapted successfully to vision tasks. The layer flattens the spatial dimensions and applies the SoftMax function to compute attention weights, so that each spatial position can draw on features from all other positions. This captures global dependencies between key image features and is intended to improve the detail and overall quality of the generated images.
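The attention computation described above can be sketched in NumPy as follows. Several details are assumptions that follow the self-attention GAN (SAGAN) design, since the text does not specify them:

- the query, key, and value projections (`wq`, `wk`, `wv`, equivalent to 1 × 1 convolutions);
- the residual connection;
- the learnable scale `gamma`.

```python
import numpy as np


def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)   # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def self_attention(x, wq, wk, wv, gamma=0.0):
    """SAGAN-style self-attention over an (H, W, C) feature map.

    wq, wk: (C, C') query/key projections; wv: (C, C) value projection.
    gamma scales the attention output before the residual addition
    (SAGAN initializes it to 0 and learns it).
    """
    h, w, c = x.shape
    flat = x.reshape(h * w, c)                # flatten the spatial dimensions
    q, k, v = flat @ wq, flat @ wk, flat @ wv
    attn = softmax(q @ k.T, axis=-1)          # (HW, HW): each position attends to all others
    out = attn @ v                            # attention-weighted sum of value vectors
    return (gamma * out + flat).reshape(h, w, c), attn
```

Each row of `attn` sums to 1, so every output position is a convex combination of value vectors from the whole feature map. This is what gives the layer its global receptive field.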

After self-attention, a series of upsampling layers progressively restores the spatial resolution of the image while reducing the number of channels. Each upsampling layer includes batch normalization to keep the activations well-scaled, and some upsampling layers also apply dropout to improve the model’s generalization to diverse data. In the final stage of the generator, a transposed convolutional layer reduces the number of channels to 1, producing a grayscale image with the same spatial dimensions as the input image.
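To make the encoder-decoder geometry concrete, the sketch below traces feature-map shapes through a generator of this kind. The text gives only the number of downsampling layers and the 64-to-512 filter range. The following are therefore borrowed from the pix2pix generator and are assumptions:

- the stride-2 layers with "same" padding;
- the decoder filter counts;
- the U-Net skip concatenations.

```python
def trace_unet(size=256, enc_filters=(64, 128, 256, 512, 512, 512, 512, 512)):
    """Trace (stage, height, width, channels) through a pix2pix-style U-Net.

    Assumptions: each encoder layer is a stride-2 convolution with 'same'
    padding (output = ceil(input / 2)); each decoder layer is a stride-2
    transposed convolution whose output is concatenated with the encoder
    feature map of the same size (U-Net skip connection); a final stride-2
    transposed convolution maps to one output channel.
    """
    shapes, skips, s = [], [], size
    for f in enc_filters:                              # downsampling path
        s = (s + 1) // 2
        skips.append((s, f))
        shapes.append(("down", s, s, f))
    dec_filters = list(reversed(enc_filters[:-1]))     # mirror the encoder
    for f, (skip_s, skip_f) in zip(dec_filters, reversed(skips[:-1])):
        s *= 2                                         # upsample by 2
        assert s == skip_s, "skip connection size mismatch"
        shapes.append(("up", s, s, f + skip_f))        # channels after concatenation
    s *= 2
    shapes.append(("out", s, s, 1))                    # grayscale output
    return shapes


for stage in trace_unet():
    print(stage)
```

Under these assumptions, a 256 × 256 input is reduced to a 1 × 1 × 512 bottleneck before being expanded back to 256 × 256 × 1.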

The discriminator model in this paper is designed to evaluate the differences between generated images and target real images, guiding the generator to produce higher quality images. The discriminator employs a deep convolutional network structure, with inputs being a generated image and a corresponding real image, both of size 256 × 256 × 1. These two images are first input in parallel to a shared convolutional layer to extract and compare their features.

The discriminator begins with a plain convolutional layer without batch normalization, which keeps the processing applied to the raw input to a minimum. This is followed by three downsampling convolutional layers with increasing numbers of filters, each followed by a LeakyReLU activation function to add non-linearity and stabilize training. The later layers of the discriminator use zero padding and larger convolutional kernels to further extract local features, with batch normalization layers to keep feature propagation stable. Finally, a convolutional layer with a small kernel outputs a single-channel map whose values represent the probability that the image pair is real. This feedback drives the generator toward results that are visually indistinguishable from real images.
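Two quantities follow from the layer settings: the size of the discriminator’s output map and the size of the image patch that each output value sees. The sketch below computes both for a hypothetical pix2pix-style PatchGAN layout. The layout uses 4 × 4 kernels throughout: three stride-2 layers, the first without batch normalization, followed by two zero-padded stride-1 layers. These settings are assumptions, not the paper’s exact configuration.

```python
def conv_out(size, kernel, stride, pad):
    """Spatial output size of a convolution with symmetric zero padding."""
    return (size + 2 * pad - kernel) // stride + 1


# Hypothetical pix2pix-style PatchGAN layout: (kernel, stride, padding).
PATCHGAN_LAYERS = [
    (4, 2, 1),  # conv, 64 filters, no batch normalization
    (4, 2, 1),  # downsampling conv, 128 filters
    (4, 2, 1),  # downsampling conv, 256 filters
    (4, 1, 1),  # zero padding + conv, 512 filters, batch normalization
    (4, 1, 1),  # zero padding + conv, 1 output channel
]


def patchgan_geometry(size=256, layers=PATCHGAN_LAYERS):
    """Return (output map size, receptive field in input pixels)."""
    rf, jump = 1, 1
    for kernel, stride, pad in layers:
        size = conv_out(size, kernel, stride, pad)
        rf += (kernel - 1) * jump   # each layer widens the field by (k - 1) input steps
        jump *= stride              # input-pixel distance between adjacent outputs
    return size, rf


print(patchgan_geometry())
```

For a 256 × 256 input, this layout yields a 30 × 30 output map in which each value judges a 70 × 70 pixel patch of the input.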

The generator loss combines several components to evaluate the quality of the generated images comprehensively. The adversarial loss is the binary cross-entropy between the discriminator’s output for the generated images and the “real” label, which pushes the generated images toward the distribution of real images. In addition, the generator loss includes terms based on PSNR and the Structural Similarity Index (SSIM), two important metrics of detail fidelity and visual quality.

Furthermore, the L1 loss is employed to directly quantify the absolute error between the generated images and the target images, enhancing detail restoration. The overall loss function is a weighted sum of these components, ensuring that each loss term contributes appropriately to the model training.

The loss function is defined by the following equation:
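One form consistent with the definitions in the next paragraph is shown below. It assumes that the PSNR and SSIM terms enter so that better image quality lowers the loss, that is, as a negative PSNR term and as \(1 - SSIM\). The exact form of these terms is not stated in the text.

\[
\mathcal{L}_{adv} = -\log D\left(G\left(x\right)\right), \qquad PSNR = 10\log_{10}\frac{MAX_{I}^{2}}{MSE} \tag{4}
\]

\[
\mathcal{L}_{SSIM} = 1 - SSIM\left(G\left(x\right), y\right), \qquad \mathcal{L}_{L1} = \frac{1}{N}\sum_{j=1}^{N}\left|y_{j} - G\left(x\right)_{j}\right| \tag{5}
\]

\[
\mathcal{L}_{G} = \alpha\,\mathcal{L}_{adv} - \beta\,PSNR + \gamma\,\mathcal{L}_{SSIM} + \lambda\,\mathcal{L}_{L1} \tag{6}
\]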

In Eq. (4), \(D\left( {G\left( x \right)} \right)\) represents the discriminator’s predicted probability for the generated image, \(MSE\) denotes the mean squared error between the target image and the generated image, and \(MAX_{I}^{2}\) is the square of the maximum possible pixel value of the image. In Eq. (5), \(SSIM\) is the Structural Similarity Index, used to compare the visual similarity between the generated image \(G\left( x \right)\) and the target image \(y\), and \(N\) is the number of pixels in the image. In Eq. (6), \(\alpha\), \(\beta\), \(\gamma\), and \(\lambda\) are the weights of the individual loss terms, used to balance their contributions to model training.
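A NumPy sketch of the composite generator loss follows. It makes several assumptions, because the text does not state the exact forms:

- The adversarial term is binary cross-entropy against the “real” label.
- PSNR enters with a negative sign, and SSIM enters as \(1 - SSIM\).
- For brevity, SSIM is computed over a single global window rather than the usual sliding Gaussian window.
- The weights `alpha`, `beta`, `gamma`, and `lam` (standing in for \(\lambda\)) are placeholder values, not those used in this work.

```python
import numpy as np

EPS = 1e-7


def bce(pred, label):
    """Binary cross-entropy averaged over all elements."""
    pred = np.clip(pred, EPS, 1 - EPS)
    return float(np.mean(-(label * np.log(pred) + (1 - label) * np.log(1 - pred))))


def psnr(y, g, max_i=1.0):
    mse = np.mean((y - g) ** 2)
    return float(10 * np.log10(max_i ** 2 / max(mse, 1e-12)))


def global_ssim(y, g, max_i=1.0):
    """Single-window SSIM over the whole image (simplified)."""
    c1, c2 = (0.01 * max_i) ** 2, (0.03 * max_i) ** 2
    mu_y, mu_g = y.mean(), g.mean()
    cov = np.mean((y - mu_y) * (g - mu_g))
    return float(((2 * mu_y * mu_g + c1) * (2 * cov + c2))
                 / ((mu_y ** 2 + mu_g ** 2 + c1) * (y.var() + g.var() + c2)))


def generator_loss(d_fake, y, g, alpha=1.0, beta=0.01, gamma=1.0, lam=100.0):
    """Weighted sum in the spirit of Eq. (6); weights and PSNR sign are assumptions."""
    adv = bce(d_fake, np.ones_like(d_fake))   # generator wants D(G(x)) -> 1
    l_psnr = -psnr(y, g)                       # higher PSNR lowers the loss
    l_ssim = 1.0 - global_ssim(y, g)
    l1 = float(np.mean(np.abs(y - g)))
    return alpha * adv + beta * l_psnr + gamma * l_ssim + lam * l1
```

In practice the same terms would be written with framework tensors so that gradients flow to the generator. The NumPy version only shows how the terms combine.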

The discriminator’s loss function also employs binary cross-entropy loss, divided into two parts: real loss and generated loss. The real loss calculates the error between the discriminator’s prediction for the real image pair and the true label (1), while the generated loss assesses the error between the discriminator’s prediction for the generated image pair and the false label (0). The objective of the discriminator is to accurately differentiate between real and generated images; hence, these two parts of the loss work in conjunction to encourage the discriminator to learn the ability to distinguish between generated and real images. This loss expression is illustrated in Eqs. (7) to (9):
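Let \(z_{real}\) and \(z_{gen}\) denote the discriminator outputs on the real and generated image pairs. The standard binary cross-entropy form of these terms is given below. It is assumed here that each term is averaged over the output map and that the two are summed without weighting.

\[
\mathcal{L}_{real} = -\log z_{real} \tag{7}
\]

\[
\mathcal{L}_{gen} = -\log\left(1 - z_{gen}\right) \tag{8}
\]

\[
\mathcal{L}_{D} = \mathcal{L}_{real} + \mathcal{L}_{gen} \tag{9}
\]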

Equations (7) and (8) define the methods for calculating the loss associated with real and generated images, where the label is set to 1 for real images and 0 for generated images. This design of the loss function helps ensure that the discriminator can effectively distinguish between real and generated images, thereby enhancing the overall performance of the generative adversarial network.

Simulation

During the simulation phase, we used a publicly available dataset from the Kaggle platform22, and Eqs. (1) to (3) were used to generate the dataset for phase-contrast image restoration. The training set consisted of 5000 image pairs and the test set of 600 image pairs, all with a bit depth of 16 bits. In the simulation:

- the average gray value of the stripe images was set to 5400;
- the stripe contrast was drawn at random between 0.4 and 0.5;
- the stripe period was drawn at random between 5 and 6 pixels.

The image size was 256 × 256 pixels, with a pixel size of 74.8 × 74.8 µm². To simulate real-world noise conditions, Gaussian noise was added with a signal-to-noise ratio (SNR) chosen at random between 50 and 100.
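The dataset recipe above can be sketched as follows. The parameter ranges come from the text: mean gray value 5400, contrast 0.4–0.5, period 5–6 pixels, SNR 50–100, and 16-bit output. The following are assumptions made for illustration:

- how the Kaggle image is converted to a phase map;
- the scale factor `phase_scale`;
- the definition of SNR as mean intensity divided by the noise standard deviation;
- the function name `simulate_pair`.

```python
import numpy as np


def simulate_pair(obj, rng, mean_gray=5400.0, phase_scale=10.0):
    """Create one hypothetical (stripe image, label) training pair.

    obj: 2-D object image (e.g. a Kaggle X-ray image resized to 256 x 256).
    Assumption: the normalized object image is treated as the projected
    phase profile, and its x-gradient scaled by `phase_scale` (standing in
    for lambda * d / p in Eq. (2)) is both the fringe phase shift and the
    label image.
    """
    obj = obj.astype(np.float64)
    obj = (obj - obj.min()) / (obj.max() - obj.min() + 1e-12)
    label = phase_scale * np.gradient(obj, axis=1)    # differential phase
    x = np.arange(obj.shape[1])[None, :]
    period = rng.uniform(5.0, 6.0)                    # fringe period in pixels
    contrast = rng.uniform(0.4, 0.5)                  # a1 / a0 in Eq. (1)
    stripes = mean_gray * (1.0 + contrast * np.cos(2 * np.pi * x / period + label))
    snr = rng.uniform(50.0, 100.0)                    # assumed: mean / noise std
    stripes = stripes + rng.normal(0.0, mean_gray / snr, size=stripes.shape)
    stripes = np.clip(np.round(stripes), 0, 65535).astype(np.uint16)  # 16-bit
    return stripes, label.astype(np.float32)
```

Calling `simulate_pair` once per source image with a shared `np.random.default_rng` generator produces independent random fringe parameters for each pair.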

The GAN was trained on a server equipped with an Intel Xeon Gold 6133 CPU (40 cores, 80 threads), an NVIDIA RTX 3090 GPU (24 GB), and 32 GB of RAM. We used TensorFlow as the computational framework, with GPU acceleration to speed up training. The network weights were initialized from a standard Gaussian distribution, and the Adam optimizer was used for parameter updates. Training ran for 7000 iterations.

As shown in Fig. 4, the images restored by the Fourier algorithm show poorer detail recovery than those restored by the GAN and contain noise and artifacts, which are especially noticeable in the red-boxed areas. To quantify the difference between the two methods, we evaluated image quality using PSNR, SSIM, and Fourier ring correlation (FRC).

Comparison of phase-contrast images generated by different methods: (a) Stripe image: shows the stripe image containing object information. (b) Label image: serves as the target output of the GAN, guiding the model’s training. (c) Phase-contrast image generated by the Fourier algorithm: phase-contrast image generated after processing with the Fourier algorithm. (d) Phase-contrast image generated by the GAN: phase-contrast image generated after processing with the GAN.

As illustrated in Fig. 5a,b, the images generated by the Fourier algorithm have an average PSNR of 28.0985 dB and an average SSIM of 0.9014. The images generated by the GAN have an average PSNR of 41.9023 dB and an average SSIM of 0.9846. The GAN results are also more tightly distributed: the standard deviation of PSNR is 1.5647 for the GAN versus 4.2839 for the Fourier algorithm, and the standard deviation of SSIM is 0.0011 versus 0.0181.

Individual test images show the same pattern:

- On the test image where the Fourier algorithm performed best (PSNR 34.2808 dB, SSIM 0.9261), the GAN reconstruction of the same stripe image reached a PSNR of 44.6723 dB and an SSIM of 0.9914.
- On the test image where the Fourier algorithm performed worst (PSNR 22.3649 dB, SSIM 0.6543), the GAN reconstruction reached a PSNR of 36.1984 dB and an SSIM of 0.8976.

The GAN therefore substantially improves image quality in both easy and difficult cases, with higher average PSNR and SSIM and greater stability than the Fourier algorithm.

To compare restoration quality in the frequency domain, we used Fourier ring correlation (FRC). We computed the FRC between the label images and the phase-contrast images restored by each method (Fourier algorithm and GAN), which shows how well each method preserves information at different spatial frequencies. FRC measures the similarity of two images as a function of spatial frequency, evaluated over concentric rings (frequency bins) in the two-dimensional Fourier domain. Each bin is identified by its ring radius k in discrete frequency units of the 256 × 256 image, corresponding to k/256 cycles/pixel. Bins 0–10 carry low-frequency information, bins 10–40 mid-frequency information, and bins 40 and above high-frequency information.

As shown in Fig. 5c,d, the Fourier algorithm performs well at low frequencies, with FRC values close to 1 in bins 0–10 (1.000 for bin 0 and 0.997 for bin 10). As the frequency increases, however, its FRC values gradually decrease. In the high-frequency range they drop markedly (0.497 for bin 50 and 0.420 for bin 60), indicating a clear deficiency in high-frequency detail recovery.

The GAN performs comparably at low frequencies and better at mid and high frequencies:

- In bins 0–10, its FRC values are also close to 1 (0.999 for bin 0 and 0.998 for bin 10).
- In the mid-frequency range (bins 10–40), its FRC values remain above 0.9 (0.975 for bin 20 and 0.965 for bin 30).
- Even in the high-frequency range, its FRC values decrease less sharply, remaining above 0.6 in bins 50 and 60 (0.650 and 0.620, respectively).

In summary, the Fourier algorithm recovers low-frequency information well but falls short at mid and high frequencies, where its FRC values drop significantly. The GAN matches it at low frequencies and retains higher, more stable FRC values at mid and high frequencies. In bins 20 and 40, for example, the GAN’s FRC values are 0.07 and 0.15 higher than those of the Fourier algorithm, respectively. These results indicate that the GAN preserves and restores image detail better than the Fourier algorithm. Using GANs for X-ray phase-contrast image restoration can therefore yield higher-quality images with better-preserved fine detail.
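For reference, a minimal NumPy implementation of FRC between two square images is sketched below. Rings are one frequency unit wide, so ring k corresponds to k/N cycles/pixel for an N × N image. The ring width and the function name are illustrative choices.

```python
import numpy as np


def fourier_ring_correlation(img1, img2):
    """FRC between two equally sized square images.

    Returns one value per integer ring radius k = 0 .. N//2 - 1;
    ring k corresponds to a spatial frequency of k / N cycles/pixel.
    """
    n = img1.shape[0]
    f1 = np.fft.fftshift(np.fft.fft2(img1))   # move zero frequency to the centre
    f2 = np.fft.fftshift(np.fft.fft2(img2))
    yy, xx = np.indices(img1.shape)
    r = np.round(np.hypot(xx - n // 2, yy - n // 2)).astype(int).ravel()
    # Per-ring sums of the cross-spectrum and of each power spectrum.
    num = np.bincount(r, weights=np.real(f1 * np.conj(f2)).ravel())
    p1 = np.bincount(r, weights=(np.abs(f1) ** 2).ravel())
    p2 = np.bincount(r, weights=(np.abs(f2) ** 2).ravel())
    frc = num / np.sqrt(p1 * p2 + 1e-30)
    return frc[: n // 2]
```

FRC is insensitive to a global intensity scale, because the scale factor cancels between the numerator and the normalization. Differences in FRC therefore reflect structural agreement at each frequency rather than overall brightness.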

To examine how the GAN’s restoration performance evolves during training, we evaluated it at checkpoints from 0 to 7000 training iterations. The results are shown in Fig. 6. As the number of training iterations increases, the average PSNR and SSIM values rise markedly, indicating a gradual improvement in image quality and structural similarity.

At the initial stage (0 iterations), the average PSNR and SSIM values are only 7.52 dB and 0.19, with standard deviations of 0.97 dB and 0.02, respectively. These values reflect the untrained state of the network. At 100 iterations, the average PSNR and SSIM increase sharply to 31.21 dB and 0.85. Although image quality improves, the variation across images grows, with standard deviations of 3.04 dB and 0.03, respectively, reflecting the instability of the early learning phase.

Between 300 and 900 iterations, the average PSNR increases from 33.69 dB to 34.24 dB, with standard deviations between 3.84 dB and 4.56 dB. Over the same range, the average SSIM increases from 0.94 to 0.95, with a standard deviation of approximately 0.01. During this phase the network gradually stabilizes, although some fluctuations remain, possibly because of ongoing adjustments to parameters such as the learning rate.

After 1000 iterations, the average PSNR and SSIM values level off. The average PSNR stays between 34.86 dB and 38.71 dB, with a standard deviation of about 4.64 dB to 5.74 dB. The average SSIM stays between 0.93 and 0.98, with a standard deviation of about 0.006 to 0.014. In this phase, the model’s image quality and structural similarity are high and relatively stable across checkpoints.

Overall, PSNR and SSIM values improve substantially as training proceeds, indicating that the GAN learns to restore stripe images as high-quality phase-contrast images. The large changes early in training mainly reflect the instability of initial learning and parameter adjustment. As training continues, the average values plateau and the spread of SSIM across test images narrows, indicating more consistent structural fidelity. The spread of PSNR across test images, however, remains between about 4.6 dB and 5.7 dB.

In summary, GANs have shown promising potential in grating-based X-ray phase contrast imaging and may serve as a viable approach for reconstructing phase contrast images.

Real experiments

Single-grating projection system

The experimental platform is based on the principle of X-ray grating projection imaging. Its main components are a micro-focus X-ray source, an absorption grating, a flat-panel X-ray detector, and a motorized nano-positioning stage. The main components are specified as follows:

- Detector: a Dexela CMOS flat-panel X-ray detector (model 2923, PerkinElmer) with a pixel size of 74.8 × 74.8 μm².
- X-ray source: a micro-focus X-ray source unit (model L9181-02, Hamamatsu Photonics K.K.) with a focal spot size of 5 μm.
- Absorption grating: a commercially procured grating with a period of 96 μm, a nominal Au thickness of 120 μm, and a nominal duty cycle of 1/3 (Au fraction of 2/3).

The imaging system is designed with a total length of 0.8 m. The nominal source-to-grating and grating-to-detector distances are 0.15 m and 0.65 m, respectively. In the experiment, the tube voltage was set to 40 kV, the tube current to 160 μA, and the exposure time per grating projection step to 4 s. The experimental setup is shown in Fig. 7. Information on the X-ray source spectra can be found in our previous studies24,25.
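For a point-like source, the projected fringe period equals the grating period multiplied by the geometric magnification. The magnification is the source-to-detector distance divided by the source-to-grating distance. A short calculation with the nominal values above follows; the function name is illustrative.

```python
def projected_fringe_period(grating_period_um, source_to_grating_m, source_to_detector_m):
    """Fringe period at the detector for a point-like source (geometric projection)."""
    magnification = source_to_detector_m / source_to_grating_m
    return grating_period_um * magnification


period_um = projected_fringe_period(96.0, 0.15, 0.80)   # nominal distances from the text
period_px = period_um / 74.8                            # detector pixel size in um
print(f"{period_um:.0f} um, {period_px:.2f} px")
```

The predicted period of about 512 µm (about 6.8 detector pixels) is close to the approximately 523 µm measured in the experiment. The small difference may reflect the use of nominal rather than measured distances.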

To validate the GAN’s performance in restoring X-ray phase-contrast images, we tested it on experimental data acquired from a plastic hose. We restored phase-contrast images with the Fourier algorithm and compared them with those restored by the GAN. This comparison tests whether the GAN, trained only on simulated data, has learned the mapping of the phase-contrast system well enough to restore phase-contrast images in a real experimental setting. As the benchmark, we used high-precision reference images restored by the six-step phase-stepping method. Our goal is to show that phase-contrast images restored by our GAN model from a single exposure not only outperform those restored by the Fourier algorithm but also approach the quality of the six-step phase-stepping method.

The experimental results are shown in Fig. 8. Figure 8a shows the local stripe image obtained in the experiment after placing a plastic hose, with a stripe period of approximately 523 μm, an average gray value of about 6000 (16-bit), and a contrast of 49.2%.

Comparison of image restoration effects in single-grating projection experiments: (a) Stripe image of the object, (b) Phase-contrast image restored by the six-step phase-stepping method, (c) Phase-contrast image restored by the Fourier transform algorithm, (d) Phase-contrast image restored by the Generative Adversarial Network.

Ideal labeled phase-contrast images could not be obtained in our experiment. We therefore used the phase-contrast images obtained by the six-step phase-stepping method as the reference standard and compared the images restored by the Fourier algorithm and by the GAN against this reference using PSNR and SSIM. The PSNR of the image restored by the Fourier algorithm is 19.74 dB, whereas that of the GAN is 20.19 dB, a modest reduction in the average pixel error. The SSIM, by contrast, rises from 0.487 for the Fourier algorithm to 0.771 for the GAN. This indicates that the GAN is considerably better at preserving image structure, contrast, and brightness.

The phase-contrast images restored by the six-step phase-stepping method serve as the high-quality benchmark, representing the best image quality available in this experiment. Measured against this benchmark, the GAN’s single-exposure restoration is clearly closer to the reference than that of the Fourier algorithm, particularly in SSIM. This indicates that, for single-exposure imaging, the GAN can reduce noise, improve overall image quality, and largely preserve the structure and details of the image, approaching the restoration quality of the six-step phase-stepping method.

In summary, although single-exposure conditions place higher demands on the restoration algorithm, the GAN shows clear potential to approach the benchmark of the six-step phase-stepping method. Because it needs one exposure instead of six, this approach can reduce the X-ray dose to roughly one-sixth (assuming equal exposure per phase step) while largely preserving image accuracy.

Talbot-Lau system

In the Talbot-Lau system, we used an HPX-1606-11 X-ray source (Varian Medical Systems) in fine-focus mode. The source has a maximum tube voltage of 160 kV, a power of 800 W, and a focal spot size of 0.4 mm; in the experiment, the tube voltage was set to 50 kV. The distance between the object and the phase grating was 4.9 cm, and the distance between the analyzer grating and the detector was 6 cm. The grating periods were 14.82 µm for the source grating, 7.25 µm for the phase grating, and 4.8 µm for the analyzer grating. The source grating was 10.6 cm from the X-ray focal spot, the phase grating 65.03 cm from the source grating, and the analyzer grating 21.06 cm from the phase grating. The sample was a PMMA object 3 mm in diameter. The experimental setup is shown in Fig. 9; for the focal-spot distribution, see reference 26.
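The grating parameters above can be cross-checked against the standard Talbot-Lau design relations. With l = 65.03 cm (source grating to phase grating) and d = 21.06 cm (phase grating to analyzer grating), the Lau condition requires p0/p2 = l/d. Assuming a π-shifting phase grating (the phase shift is not stated in the text), the analyzer period should also equal half the magnified phase-grating period, p2 = (p1/2)(l + d)/l. The symbols p0, p1, p2, l, d and the function name are ours. For the values given, both relative mismatches are below 0.1%.

```python
def talbot_lau_mismatch(p0: float, p1: float, p2: float, l: float, d: float):
    """Relative mismatch of two Talbot-Lau design relations.

    p0, p1, p2: source, phase and analyzer grating periods (same unit).
    l: source grating -> phase grating distance; d: phase -> analyzer distance.
    """
    # Lau condition: adjacent source slits shift the fringe pattern at the
    # analyzer by p0 * d / l, which must equal p2, i.e. p0 / p2 = l / d
    lau = abs(p0 / p2 - l / d) / (l / d)
    # Assumption: pi-shifting phase grating, so the fringe period at the
    # analyzer is half the phase-grating period magnified by (l + d) / l
    p2_expected = (p1 / 2.0) * (l + d) / l
    period = abs(p2 - p2_expected) / p2
    return lau, period


# Values from the text: periods in micrometres, distances in centimetres
lau_err, period_err = talbot_lau_mismatch(p0=14.82, p1=7.25, p2=4.8, l=65.03, d=21.06)
print(f"Lau condition mismatch: {lau_err:.2e}, analyzer period mismatch: {period_err:.2e}")
```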

In the single-grating projection system, the phase-contrast images restored by the GAN outperformed those restored by the traditional Fourier algorithm both visually and in the objective metrics. In the Talbot-Lau system, we therefore focused on comparing the GAN-restored images with those obtained by the six-step phase-stepping method.
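The Fourier transform method used as the single-exposure baseline retrieves the phase from one fringe image by isolating the first-order carrier peak in its spectrum and demodulating it. The NumPy sketch below assumes fringes modulated along x with an integer number of periods across the image (carrier_cycles) and a square filter window of half-width half_width around the +1 order; both parameter names are ours, and the filter used in this study may differ. The finite window limits the spatial resolution of the method, which is one reason for its lower image quality compared with phase stepping.

```python
import numpy as np


def fourier_phase_retrieval(fringe: np.ndarray, carrier_cycles: int, half_width: int) -> np.ndarray:
    """Wrapped phase of a fringe image I = a + b*cos(2*pi*f0*x/W + phi).

    carrier_cycles: number of fringe periods across the image width (f0).
    half_width: half-width of the square window kept around the +1 order.
    """
    ny, nx = fringe.shape
    spectrum = np.fft.fft2(fringe)
    ky = np.fft.fftfreq(ny) * ny  # integer frequency indices along y
    kx = np.fft.fftfreq(nx) * nx  # integer frequency indices along x
    KY, KX = np.meshgrid(ky, kx, indexing="ij")
    # Keep only the +1 order: this rejects the DC term and the -1 order
    window = (np.abs(KX - carrier_cycles) <= half_width) & (np.abs(KY) <= half_width)
    first_order = np.fft.ifft2(spectrum * window)
    # Remove the linear carrier phase, leaving (b/2) * exp(i*phi)
    x = np.arange(nx)
    return np.angle(first_order * np.exp(-2j * np.pi * carrier_cycles * x / nx))


def fourier_differential_phase(sample, reference, carrier_cycles, half_width):
    """Sample phase minus sample-free reference phase, wrapped to (-pi, pi]."""
    phi_s = fourier_phase_retrieval(sample, carrier_cycles, half_width)
    phi_r = fourier_phase_retrieval(reference, carrier_cycles, half_width)
    return np.angle(np.exp(1j * (phi_s - phi_r)))
```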

As shown in Fig. 10, the GAN-restored phase-contrast images are smoother and markedly less noisy. However, compared with the multi-exposure phase-stepping result, the object contours restored by the GAN are more blurred and fine details are less distinct. Relative to the six-step reference, the GAN image reaches a PSNR of 33.26 dB and an SSIM of 0.8681, indicating that our network can also restore, from a single exposure, images close to those of the six-step phase-stepping method in the Talbot-Lau system.

Cascaded grating system

We used a symmetrical cascaded Talbot-Lau interferometer (TLI) setup with geometric distances \(R_{1} = R_{1}^{\prime} = 71\) cm and \(R_{2} = R_{2}^{\prime} = 2.6\) cm. The experiment employed a tungsten-target X-ray tube with a focal spot size of 0.4 × 0.4 mm², operated at 40 kV and 4 mA with an exposure time of 15 s and a 0.8 mm beryllium pre-filter. The cascaded grating system uses four gratings, two phase gratings and two absorption gratings, all developed in-house by our team23. The phase gratings were fabricated from silicon wafers by electrochemical etching, with a period of 3 μm and a height of 36 μm, suitable for 28 keV X-rays. The source and analyzer gratings were fabricated from silicon wafers filled with tungsten nanoparticles, with a period of 42 μm and a height of 150 μm. The Moiré fringe images were recorded with a DEXELA 2923NDT flat-panel X-ray detector (3072 × 3888 pixels, pixel size 75 × 75 μm²). The setup is shown in Figs. 2c and 11.

In the cascaded grating system, we used a rubber tube as the sample. As Fig. 12 shows, neither the six-step phase-stepping method nor the GAN achieved satisfactory restoration. We attribute this to the greater experimental complexity of the cascaded grating system compared with the single-grating projection and Talbot-Lau systems.

As a result, the phase-contrast images obtained in this system generally have poor quality, with dense noise and pronounced blurring of the object.

Surprisingly, however, the GAN result reached a PSNR of 38.33 dB and an SSIM of 0.88 against the phase-stepping reference, higher than the values obtained in the Talbot-Lau and single-grating projection systems.

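We speculate that this is because the phase-stepping reference itself was of poor quality. PSNR and SSIM measure agreement with the reference rather than absolute image quality, and a heavily blurred reference contains little fine structure for the GAN output to reproduce, so a high score here need not indicate a better phase image. Because each system is evaluated against a different reference, the metric values should not be compared directly across the three systems.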
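The speculation above rests on the fact that PSNR and SSIM are full-reference metrics. The toy NumPy experiment below (synthetic data, not from this study) illustrates one consequence: the same noisy estimate scores roughly 14 dB higher in PSNR when it and its reference have both passed through the same 5 × 5 box blur, although no additional information has been recovered. The blur and noise levels are arbitrary assumptions chosen only to make the effect visible.

```python
import numpy as np


def psnr(reference: np.ndarray, test: np.ndarray, data_range: float = 1.0) -> float:
    """PSNR in dB relative to the given reference image."""
    mse = np.mean((reference - test) ** 2)
    return float(10.0 * np.log10(data_range ** 2 / mse))


def box_blur(img: np.ndarray, k: int = 5) -> np.ndarray:
    """k x k moving average with periodic boundaries (a stand-in for system blur)."""
    out = np.zeros_like(img, dtype=np.float64)
    r = k // 2
    for dy in range(-r, r + 1):
        for dx in range(-r, r + 1):
            out += np.roll(img, (dy, dx), axis=(0, 1))
    return out / k ** 2


rng = np.random.default_rng(0)
truth = rng.random((128, 128))                        # synthetic "ideal" image
estimate = truth + rng.normal(0.0, 0.1, truth.shape)  # synthetic noisy restoration

# Score the estimate against the sharp reference ...
sharp_score = psnr(truth, estimate)
# ... and against a blurred reference, after applying the identical blur
blurred_score = psnr(box_blur(truth), box_blur(estimate))
print(f"sharp reference: {sharp_score:.1f} dB, blurred reference: {blurred_score:.1f} dB")
```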

Conclusion

This study proposes a GAN-based model for X-ray phase-contrast image reconstruction that recovers phase-contrast images from fringe images. By incorporating custom layers and self-attention layers, the model improves restoration performance and extracts high-quality phase-contrast information from fringe images containing object information. In the single-grating projection system, these fringe images are the projection fringes formed as X-rays pass through the absorption grating; in the Talbot-Lau and cascaded grating systems, they are Moiré fringes.
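The self-attention layers are not detailed in this section. For orientation only, the sketch below shows a generic self-attention block in the style of the Self-Attention GAN (SAGAN): each spatial position of a feature map is updated with a weighted sum over all positions, so the generator can relate fringe distortions that lie far apart, beyond the receptive field of a few convolutions. The projection matrices w_query, w_key, w_value and the scalar gamma are hypothetical parameters, and this NumPy forward pass is not the authors' layer.

```python
import numpy as np


def softmax(z: np.ndarray, axis: int = -1) -> np.ndarray:
    z = z - z.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def self_attention(x, w_query, w_key, w_value, gamma):
    """SAGAN-style self-attention over spatial positions (forward pass only).

    x: feature map of shape (C, H, W).
    w_query, w_key: (C_k, C) projections (equivalent to 1x1 convolutions).
    w_value: (C, C) projection.
    gamma: learnable scalar; with gamma = 0 the block is an identity map.
    """
    c, h, w = x.shape
    flat = x.reshape(c, h * w)       # (C, N) with N = H*W positions
    q = w_query @ flat               # (C_k, N)
    k = w_key @ flat                 # (C_k, N)
    v = w_value @ flat               # (C, N)
    attn = softmax(q.T @ k, axis=-1) # (N, N); row j holds weights of position j over all i
    out = v @ attn.T                 # column j = sum_i attn[j, i] * v[:, i]
    return gamma * out.reshape(c, h, w) + x
```

Initializing gamma at zero, as in SAGAN, makes the block start as an identity mapping, so the network can learn to rely on global context gradually.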

On a simulated dataset generated from X-ray data downloaded from Kaggle, the GAN model achieved substantially higher Peak Signal-to-Noise Ratio (PSNR) and Structural Similarity Index (SSIM) than the traditional Fourier algorithm. To further validate the method, we applied it to fringe images collected from single-grating projection, Talbot-Lau, and cascaded grating systems. The experimental results show that our method restored phase-contrast images from experimentally acquired fringe images, supporting the robustness and effectiveness of the GAN model for the relatively simple samples tested.

In the simulations, images restored by the GAN model had significantly higher PSNR and SSIM than those restored by the Fourier algorithm, indicating better image quality and structural fidelity. Fourier Ring Correlation (FRC) analysis showed that the GAN performed particularly well in recovering mid- to high-frequency detail. In addition, the model's stable and consistent performance across training iterations further supports its ability to improve image quality.
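FRC compares two images ring by ring in Fourier space: for each spatial-frequency ring r, FRC(r) = Re Σ F1·F2* / sqrt(Σ|F1|² · Σ|F2|²), summed over the pixels of that ring, so values near 1 mean the images agree at that frequency. The NumPy sketch below uses integer-pixel rings and no apodization window; it is a minimal illustration rather than the analysis code used in this study, and practical implementations often add a window and report FRC against normalized spatial frequency.

```python
import numpy as np


def fourier_ring_correlation(img1: np.ndarray, img2: np.ndarray) -> np.ndarray:
    """FRC for integer-radius rings, from the zero-frequency ring outward."""
    if img1.ndim != 2 or img1.shape != img2.shape:
        raise ValueError("inputs must be 2-D arrays of the same shape")
    f1 = np.fft.fftshift(np.fft.fft2(img1))
    f2 = np.fft.fftshift(np.fft.fft2(img2))
    ny, nx = img1.shape
    yy, xx = np.indices((ny, nx))
    # Ring index = distance from the zero-frequency pixel, rounded to an integer
    rings = np.rint(np.hypot(yy - ny // 2, xx - nx // 2)).astype(int).ravel()
    cross = np.bincount(rings, weights=np.real(f1 * np.conj(f2)).ravel())
    power1 = np.bincount(rings, weights=(np.abs(f1) ** 2).ravel())
    power2 = np.bincount(rings, weights=(np.abs(f2) ** 2).ravel())
    n_rings = min(ny, nx) // 2  # keep only rings that fit fully inside the image
    return cross[:n_rings] / np.sqrt(power1[:n_rings] * power2[:n_rings])
```

Computing this curve for the GAN and Fourier reconstructions against the same ground-truth image shows at which frequencies each method's agreement falls off.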

In the real experiments, the GAN model outperformed the traditional Fourier method under single-exposure conditions in the single-grating projection system, and in all three systems its restored images agreed closely with the six-step phase-stepping reference in terms of PSNR and SSIM, with reduced noise and artifacts in the single-grating projection and Talbot-Lau systems. This supports the model's effectiveness in practical applications. Because only a single exposure is needed, the model can produce images of comparable quality at a lower X-ray dose, reducing radiation exposure risk and supporting the wider application of X-ray phase-contrast imaging technology. However, these experiments mainly validate the network on simple samples. Further research is needed to evaluate the approach on complex biological specimens, particularly because the network's performance may degrade for low-contrast or structurally complex samples.

Limitations of the study

Despite the significant advantages demonstrated by our GAN-based X-ray phase-contrast image restoration model in both simulated and real experiments, there are several limitations to this study.

First, our model performs well on 16-bit images but poorly on 8-bit images, indicating that its ability to capture fine detail is limited when the image bit depth is reduced. Second, although the GAN-restored images in the real experiments approach those restored by the multi-step phase-stepping method, the results are still not ideal: the GAN output still exhibits noise and blurred details in some cases. Third, the model requires paired images for training, and even the high-precision multi-exposure label images still contain noise and blurred details, so they are imperfect targets. Finally, this study primarily validated the network on relatively simple, high-contrast physical samples and has not yet fully assessed its effectiveness on complex biological specimens. For low-contrast or structurally complex samples, the network's performance may degrade, so further research on more challenging biological specimens is needed to comprehensively evaluate the method's applicability and robustness.
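One plausible contributor to the bit-depth sensitivity (our illustration, not an analysis performed in this study) is quantization. A weak phase signal may occupy only a small fraction of the detector's intensity range; in the hypothetical example below, a smooth feature spanning 1% of the range is represented by only three grey levels at 8 bits but by about 650 levels at 16 bits, so most of its fine structure is lost before it reaches the network. The function name and the 1% ramp are assumptions for illustration.

```python
import numpy as np


def quantize(img: np.ndarray, bits: int) -> np.ndarray:
    """Quantize intensities in [0, 1] to 2**bits grey levels, mapped back to [0, 1]."""
    levels = 2 ** bits - 1
    return np.round(np.clip(img, 0.0, 1.0) * levels) / levels


# Hypothetical weak feature: a smooth ramp spanning 1% of the intensity range
profile = np.linspace(0.50, 0.51, 10_000)
levels_8 = np.unique(quantize(profile, 8)).size    # distinct grey levels at 8 bits
levels_16 = np.unique(quantize(profile, 16)).size  # distinct grey levels at 16 bits
print(levels_8, levels_16)
```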

To address these limitations, we propose the following improvements. First, combining two exposure images into a single training input could give the model more information while keeping the X-ray dose low, thereby improving restoration. Second, training on higher-resolution images could allow the model to learn finer detail and further improve image quality. Third, we will explore ways to train the model on single images, removing the reliance on paired multi-exposure images as labels. Finally, to handle complex biological specimens, we plan to explore combining the GAN framework with a preceding convolutional neural network (CNN) for richer and more efficient feature extraction. We will also attempt to enhance the contrast of such specimens through data augmentation and related techniques, making them better suited to model training and improving the overall performance of the model.

These improvements could be realized by developing new algorithms or refining the existing model structure, which would also make the model more flexible and applicable under different exposure conditions. By addressing these limitations, we expect to further improve the performance, applicability, and reliability of the GAN-based X-ray phase-contrast image restoration model and to support the wider application of X-ray phase-contrast imaging technology.

Data availability

All datasets generated and/or analyzed during this study are comprehensively presented in this article. To ensure the transparency and reproducibility of the research, the datasets used are available from the corresponding author upon reasonable request. For data access or any related inquiries, please contact the corresponding author.

References

Donath, T. et al. Phase-contrast imaging and tomography at 60 keV using a conventional x-ray tube source. Rev. Sci. Instrum. 80(5), 053701 (2009).

Herzen, J. et al. Quantitative phase-contrast tomography of a liquid phantom using a conventional x-ray tube source. Opt. Express 17(12), 10010–10018 (2009).

Stampanoni, M. et al. The first analysis and clinical evaluation of native breast tissue using differential phase-contrast mammography. Invest. Radiol. 46(12), 801–806 (2011).

Henke, B. L., Gullikson, E. M. & Davis, J. C. X-ray interactions: photoabsorption, scattering, transmission, and reflection at E = 50–30,000 eV, Z = 1–92. At. Data Nucl. Data Tables 54(2), 181–342 (1993).

Bonse, U. & Hart, M. An X-ray interferometer. Appl. Phys. Lett. 6(8), 155–156 (1965).

Davis, T. J. et al. Phase-contrast imaging of weakly absorbing materials using hard X-rays. Nature 373(6515), 595–598 (1995).

Wilkins, S. W. et al. Phase-contrast imaging using polychromatic hard X-rays. Nature 384(6607), 335–338 (1996).

David, C. et al. Differential x-ray phase contrast imaging using a shearing interferometer. Appl. Phys. Lett. 81(17), 3287–3289 (2002).

Momose, A. et al. Demonstration of X-ray Talbot interferometry. Jpn. J. Appl. Phys. 42(7B), L866 (2003).

Pfeiffer, F. et al. Phase retrieval and differential phase-contrast imaging with low-brilliance X-ray sources. Nat. Phys. 2(4), 258–261 (2006).

Lei, Y. et al. Cascade Talbot-Lau interferometers for x-ray differential phase-contrast imaging. J. Phys. D 51(38), 385302 (2018).

Wali, F. et al. Low-dose and fast grating-based x-ray phase-contrast imaging. Opt. Eng. 56(9), 094110 (2017).

Marschner, M. et al. Helical X-ray phase-contrast computed tomography without phase stepping. Sci. Rep. 6(1), 23953 (2016).

De Marco, F. et al. Analysis and correction of bias induced by phase stepping jitter in grating-based X-ray phase-contrast imaging. Opt. Express 26(10), 12707–12722 (2018).

Wen, H. et al. Spatial harmonic imaging of x-ray scattering—initial results. IEEE Trans. Med. Imaging 27(8), 997–1002 (2008).

Bevins, N. et al. Multicontrast x-ray computed tomography imaging using Talbot-Lau interferometry without phase stepping. Med. Phys. 39(1), 424–428 (2012).

Seifert, M. et al. Improved reconstruction technique for Moiré imaging using an X-ray phase-contrast Talbot-Lau interferometer. J. Imaging 4(5), 62 (2018).

Isola, P. et al. Image-to-image translation with conditional adversarial networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 1125–1134 (2017).

Goodfellow, I. et al. Generative adversarial networks. Commun. ACM 63(11), 139–144 (2020).

Ronneberger, O., Fischer, P. & Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015: 18th International Conference, Munich, Germany, October 5–9, 2015, Proceedings, Part III 234–241 (Springer International Publishing, 2015).

Radford, A., Metz, L. & Chintala, S. Unsupervised representation learning with deep convolutional generative adversarial networks. arXiv preprint arXiv:1511.06434 (2015).

Rodrigo, B. Madushani. Fracture Multi-Region X-ray Data. https://www.kaggle.com/datasets/bmadushanirodrigo/fracture-multi-region-x-ray-data. Accessed May 10, 2024.

Lei, Y. et al. Tungsten nanoparticles-based x-ray absorption gratings for cascaded Talbot-Lau interferometers. J. Micromech. Microeng. 29(11), 115008 (2019).

Huang, J. et al. Detection performance of X-ray cascaded Talbot-Lau interferometers using W-absorption gratings. J. Imaging Sci. Technol. 68(1), 1–6 (2024).

Huang, J. et al. Quantitative analysis of fringe visibility in grating-based x-ray phase-contrast imaging. J. Opt. Soc. Am. A 33(1), 69–73 (2015).

Liu, X., Chen, R., Lei, Y., Huang, J. & Liu, X. Accurate PSF determination in x ray image restoration. Opt. Lett. 47(23), 6269–6272 (2022).

Li, J., Huang, J., Lei, Y., Liu, X. & Zhao, Z. X-ray phase-contrast imaging using cascade Talbot-Lau interferometers. In Tenth International Conference on Information Optics and Photonics Vol. 10964, 1287–1292 (SPIE, 2018).

Huang, J., Wali, F., Lei, Y., Liu, X. & Li, J. Fourier transform phase retrieval for x-ray phase-contrast imaging based on cascaded Talbot-Lau interferometers. Opt. Eng. 59(3), 033101 (2020).

Acknowledgements

This work was supported in part by the National Natural Science Foundation of China (42327802, 62075141, 12375299), the Shenzhen Science and Technology Program (JCYJ20220530140805013), and the Guangdong Basic and Applied Basic Research Foundation (2024A1515011993).

Author information

Contributions

Jiacheng Zeng conceptualized the research idea and designed the entire research framework. Jianheng Huang provided research supervision and funding support. Jiancheng Zeng offered technical support for enhancing the Generative Adversarial Networks. Jiaqi Li, as an experimental assistant, assisted the first author in conducting experiments and collected data. Yaohu Lei and Xin Liu provided suggestions for improvement and technical support in X-ray research. Huacong Ye was responsible for the creation of figures and visual presentations. Yang Du and Chenggong Zhang provided funding support.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Zeng, J., Huang, J., Zeng, J. et al. Restoration of X-ray phase-contrast imaging based on generative adversarial networks. Sci Rep 14, 26198 (2024). https://doi.org/10.1038/s41598-024-77937-y

DOI: https://doi.org/10.1038/s41598-024-77937-y