Abstract

This study evaluates the effectiveness of an Artificial Intelligence (AI)-based smartphone application designed for decay detection on intraoral photographs, comparing its performance to that of junior dentists. Conducted at The Aga Khan University Hospital, Karachi, Pakistan, this study utilized a dataset comprising 7,465 intraoral images, including both primary and secondary dentitions. These images were meticulously annotated by two experienced dentists and further verified by senior dentists. A YOLOv5s model was trained on this dataset and integrated into a smartphone application, while a Detection Transformer was also fine-tuned for comparative purposes. Explainable AI techniques were employed to assess the AI’s decision-making processes. A sample of 70 photographs was used to directly compare the application’s performance with that of junior dentists. Results showed that the YOLOv5s-based smartphone application achieved a precision of 90.7%, sensitivity of 85.6%, and an F1 score of 88.0% in detecting dental decay. In contrast, junior dentists achieved 83.3% precision, 64.1% sensitivity, and an F1 score of 72.4%. The study concludes that the YOLOv5s algorithm effectively detects dental decay on intraoral photographs and performs comparably to junior dentists. This application holds potential for aiding in the evaluation of the caries index within populations, thus contributing to efforts aimed at reducing the disease burden at the community level.

Introduction

Oral diseases are among the most common and preventable non-communicable diseases worldwide1. According to the World Health Organization’s (WHO) global oral health status report (2022), 75% of the population is affected by oral diseases, including dental decay and periodontal disease2. Tooth decay presents a significant global health concern, particularly among children and adolescents who face increased susceptibility due to limited resources and awareness regarding dental care, notably in Low- and Middle-Income Countries (LMICs)3,4. Dental decay affects 34.1% of adolescents aged 12–19 years, globally3,4. Timely and accurate diagnosis is imperative for decay prevention, as it significantly influences prognosis5. However, there is limited data on the prevalence of dental decay in LMICs. Despite WHO acknowledging dental decay as a significant health burden affecting 60–90% of children, it continues to be overlooked in these regions6,7. The prevalence data is essential for dental practitioners and policy makers to comprehend the vulnerability of the disease and implement preventive measures8,9.

Although dentists possess the ability to detect decay, socio-economic obstacles leading to decreased access to healthcare as well as the lack of resources in low-income settings render Artificial Intelligence (AI)-based models a more viable solution, empowering machines to generate results comparable to those of humans4,10,11,12. Convolutional Neural Networks (CNNs) and other Deep Learning (DL) techniques employed in computer vision have demonstrated effectiveness in detecting, segmenting, and categorizing anatomical structures or pathologies across diverse image datasets13,14. Leveraging CNNs for diagnosing dental decay from intraoral photographs presents a potentially cost-effective and accessible means to enhance oral healthcare15. Multiple studies have showcased the efficacy of DL models in detecting and categorizing decay in intraoral images16,17,18,19,20. Researchers have utilized various DL models, including YOLO, R-CNN, SSD-MobileNetV2, ResNet, and RetinaNet algorithms for the detection of dental decay18,21,22,23,24. Furthermore, transformer-based models have also been employed for similar purposes; however, their extensive computational requirements have limited their widespread exploration in dental imaging25.

These studies also lack the integration of Explainable AI (XAI) methods. XAI elucidates the decision-making process of CNNs, which may not always be transparent. Methods such as GradCAM and EigenCAM aid in comprehending the rationale behind AI decisions, thereby enhancing the validation of its results26.

Additionally, despite considerable efforts by researchers to develop algorithms for decay detection, these advancements are seldom translated into deployable solutions4,27. Hence, this study aimed to assess the effectiveness of a CNN-based model in identifying dental decay using clinical photographs, subsequently adapting it into a smartphone-based application28. This open-sourced app has the potential for implementation by users such as community health workers, to assess decay prevalence in populations28. Additionally, a direct comparison between the performance of AI and dentists is needed to determine the real-life applicability of this application.

Materials and methods

Study design and sample size

This validation study was conducted at the Aga Khan University Hospital (AKUH), Karachi, Pakistan following the STARD-AI guidelines (ERC#: 2023-9434-27025). All experimental methods were performed in accordance with the mentioned checklist and approved by the Ethical Review Committee (ERC) of AKUH prior to commencement of the study. The dataset utilized in this study was previously collected during another study, for which informed consent and assent were obtained from the participants (ERC#: 2021-5943-16892). Around 6% of the images that formed the dataset were obtained prospectively from the adolescent population in Karachi. Intraoral pictures of both primary and secondary dentitions were captured on mobile phones to incorporate images with varying resolutions. This was done to increase the diversity of the dataset, which comprised a total of 7,465 intra-oral images. The methodology is summarized in Fig. 1.

Inclusion criteria

Intra-oral images of adolescents and young adults of both genders, between 8 and 24 years of age were included.

Exclusion criteria

Intra-oral images of patients with developmental dental anomalies, tetracycline staining, cleft lip/palate and oral pathologies were excluded.

Dataset preparation

The intra-oral images were transferred and visually analyzed by two dentists with more than two years of experience on an HP Desktop Pro (G3 Intel(R) Core(TM) i5-9400, built-in Intel(R) UHD Graphics 630 with HP P19b G4 WXGA Monitor Display (1366 × 768)). The annotators were calibrated on the annotation task prior to commencement of the study. They were trained to localize and annotate/label all carious teeth, if present, in the intraoral images. This annotation process was carried out on an open-sourced annotation tool, ‘LabelMe V5.4.1’ (Massachusetts Institute of Technology-MIT, Massachusetts, Cambridge, United States)29. The annotators utilized bounding boxes to record the height, width, and coordinates of corners of the box around the tooth with visible decay (labelled ‘D’)2. Subsequently, these annotations were verified by two senior dentists with at least four years of postgraduate experience. Any conflicts were resolved through discussion. Cohen’s kappa statistics revealed inter- and intra-examiner reliability of 0.80. These annotations were employed for training of the models.
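Cohen’s kappa corrects the raw agreement between two raters for the agreement expected by chance. The sketch below shows the calculation on a hypothetical toy example; the label lists `rater_a` and `rater_b` are invented for illustration and are not the study data, and the function name `cohens_kappa` is an assumption.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two raters labelling the same items."""
    n = len(labels_a)
    # Observed agreement: fraction of items with identical labels.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement from each rater's marginal label frequencies.
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    categories = set(counts_a) | set(counts_b)
    expected = sum(counts_a[c] * counts_b[c] for c in categories) / (n * n)
    if expected == 1:
        return 1.0
    return (observed - expected) / (1 - expected)


# Hypothetical toy labels (1 = decay present, 0 = no decay); NOT study data.
rater_a = [1, 1, 0, 0, 1, 0, 1, 0, 0, 0]
rater_b = [1, 1, 0, 0, 0, 0, 1, 0, 0, 1]
kappa = cohens_kappa(rater_a, rater_b)
```

In this toy case the raters agree on 8 of 10 items, but because about half of that agreement is expected by chance, kappa is noticeably lower than the raw agreement.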

The annotated dataset comprised images and their corresponding JavaScript Object Notation (JSON) files. The JSON files contained important details about the location of decay in each intraoral picture. The JSON files were then converted into the standardized YOLO format using Python code for training the AI model30. A 70:20:10 ratio was used to provide sizable training, validation and testing datasets.
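The conversion from LabelMe JSON to YOLO labels amounts to turning each rectangle's corner coordinates into a normalized centre, width and height. The following is a minimal sketch of that idea and not the conversion tool used in the study; the function name `labelme_to_yolo`, the class mapping `{"D": 0}` and the example annotation are assumptions made for illustration.

```python
import json


def labelme_to_yolo(annotation, class_ids):
    """Convert LabelMe rectangle shapes to YOLO label lines.

    Each output line is: class x_center y_center width height,
    with all coordinates normalized by the image size.
    """
    img_w = annotation["imageWidth"]
    img_h = annotation["imageHeight"]
    lines = []
    for shape in annotation["shapes"]:
        if shape.get("shape_type") != "rectangle":
            continue  # this sketch handles bounding boxes only
        (x1, y1), (x2, y2) = shape["points"]
        x_min, x_max = sorted((x1, x2))
        y_min, y_max = sorted((y1, y2))
        x_center = (x_min + x_max) / 2 / img_w
        y_center = (y_min + y_max) / 2 / img_h
        box_w = (x_max - x_min) / img_w
        box_h = (y_max - y_min) / img_h
        cls = class_ids[shape["label"]]
        lines.append(f"{cls} {x_center:.6f} {y_center:.6f} {box_w:.6f} {box_h:.6f}")
    return lines


# Hypothetical annotation with one decayed tooth labelled 'D'.
example = json.loads(
    '{"imageWidth": 1000, "imageHeight": 500, "shapes": '
    '[{"label": "D", "shape_type": "rectangle", "points": [[100, 50], [300, 150]]}]}'
)
yolo_lines = labelme_to_yolo(example, {"D": 0})
```

An image without decay yields an empty list, which corresponds to an empty label file in the YOLO convention.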

Deep learning models and training

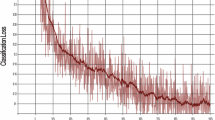

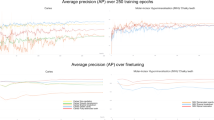

The YOLOv5s model was trained for 200 epochs with a batch size of 60, starting from the pretrained ‘coco.pt’ model acquired from GitHub31. The dataset consisted of 7,465 images in total, of which 1,799 showed disease and the rest did not. These images were a mix of five different views, namely frontal, right buccal, left buccal, maxillary and mandibular. This dataset was split into 5,226, 1,493, and 746 images for training, validation and testing respectively, keeping a fixed ratio of 70:20:10. The overall process was performed on a T4 (15 GB) GPU from Google Colab32. The training time of the algorithm was estimated as 413 min at a rate of 124 s/epoch, with a total of 0.27 kg eq. carbon footprint. The performance of the algorithm was evaluated using the YOLO validation script.
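A 70:20:10 split of 7,465 images can be reproduced with integer arithmetic, as sketched below. The shuffling step, the seed and the function name `split_dataset` are assumptions; the source does not state how images were assigned to each subset.

```python
import random


def split_dataset(items, val_pct=20, test_pct=10, seed=0):
    """Shuffle items and split them into train/validation/test subsets.

    Validation and test sizes are rounded down; training takes the rest.
    """
    items = list(items)
    random.Random(seed).shuffle(items)  # assumed: random assignment
    n = len(items)
    n_val = n * val_pct // 100
    n_test = n * test_pct // 100
    n_train = n - n_val - n_test
    train = items[:n_train]
    val = items[n_train:n_train + n_val]
    test = items[n_train + n_val:]
    return train, val, test


# Image indices stand in for file names in this sketch.
train, val, test = split_dataset(range(7465))
```

With 7,465 items this yields subsets of 5,226, 1,493 and 746 items, matching the counts reported above.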

Additionally, a Detection Transformer (DeTR) was fine-tuned for 100 epochs with a batch size of 4, starting from the pretrained ‘facebook/detr-resnet-50’ model acquired from HuggingFace28. The dataset for this model contained 1,799 labeled images in total, split into 1,275, 361, and 163 images for training, validation and testing respectively, keeping a fixed ratio of 70:20:10. The overall process was performed on an L4 (22.5 GB) GPU from Google Colab32. The training time of the model was estimated as 385 min at a rate of 232 s/epoch, with a total of 0.26 kg eq. carbon footprint. The performance of the algorithm was evaluated using the COCO evaluator.
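The reported training times follow from the number of epochs and the per-epoch rate. The short calculation below is only an arithmetic check under the assumption that every epoch took the stated average time; the function name `training_minutes` is illustrative.

```python
def training_minutes(epochs, seconds_per_epoch):
    """Total training time in minutes for a constant per-epoch duration."""
    return epochs * seconds_per_epoch / 60


yolo_minutes = training_minutes(200, 124)  # YOLOv5s: 200 epochs at 124 s/epoch
detr_minutes = training_minutes(100, 232)  # DeTR: 100 epochs at 232 s/epoch
```

The YOLOv5s figure comes to roughly 413 min, in line with the reported estimate, while the DeTR figure comes to roughly 387 min, close to the reported 385 min.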

XAI implementation

After training the model, EigenCAM was used to identify image areas relevant to the decision-making of YOLOv5s by generating heat maps that highlight significant regions. This can be seen in Fig. 2 where the EigenCAM employed in this study adequately visualizes the decay in intra-oral photographs as evidenced by the position and size of the heatmaps.
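The core idea behind EigenCAM, projecting a layer's activation maps onto their first principal component, can be sketched in NumPy. This is an illustrative sketch and not the authors' implementation: the random `activations` array stands in for the feature maps of a network layer, and the function name `eigen_cam` as well as the centering, clipping and normalization steps are assumptions.

```python
import numpy as np


def eigen_cam(activations):
    """Heat map from the first principal component of activation maps.

    activations: array of shape (channels, height, width).
    Returns an array of shape (height, width) scaled to [0, 1].
    """
    channels, height, width = activations.shape
    # One row per spatial position, one column per channel.
    flat = activations.reshape(channels, height * width).T
    flat = flat - flat.mean(axis=0)
    # Rows of vt are the principal directions in channel space.
    _, _, vt = np.linalg.svd(flat, full_matrices=False)
    cam = (flat @ vt[0]).reshape(height, width)
    # Keep positive evidence only and normalize for display.
    cam = np.maximum(cam, 0.0)
    peak = cam.max()
    return cam / peak if peak > 0 else cam


# Placeholder feature maps (16 channels, 8 x 8 grid); NOT real model output.
rng = np.random.default_rng(0)
activations = rng.random((16, 8, 8))
heatmap = eigen_cam(activations)
```

In practice the low-resolution map is upsampled to the photograph's size and overlaid on it. Note that the sign of a singular vector is arbitrary, so practical implementations need a convention for choosing which direction counts as positive.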

Sample size calculation for direct comparison of AI with junior dentists

Sample size for a direct comparison between AI and dentists for the decay detection task was calculated using a paired sample t-test to compare the accuracy of AI and dentists, according to a study by Mertens et al.33 A sample size of 251 teeth was required to detect at least a 10% absolute difference in accuracy, with a standard deviation of 0.4, 80% power, and a 5% alpha level. This sample size was then adjusted by a design effect of 2.8, considering a cluster size of 10 and an intraclass correlation coefficient (ICC) of 0.2 to account for the clustered design with multiple teeth per photograph. Hence, the overall number of teeth needed to be assessed was 703, corresponding to 70 photographs (each containing 10 teeth). Sample size was calculated using STATA version 18.
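The adjustment for clustering can be checked with the standard design-effect formula, 1 + (m − 1) × ICC, where m is the cluster size. The sketch below starts from the unadjusted figure of 251 teeth reported above and does not re-derive it; the function names are illustrative assumptions.

```python
import math


def design_effect(cluster_size, icc):
    """Design effect for equally sized clusters."""
    return 1 + (cluster_size - 1) * icc


def adjusted_sample_size(n_unadjusted, cluster_size, icc):
    """Inflate an unadjusted sample size by the design effect, rounding up."""
    return math.ceil(n_unadjusted * design_effect(cluster_size, icc))


deff = design_effect(cluster_size=10, icc=0.2)
n_teeth = adjusted_sample_size(251, cluster_size=10, icc=0.2)
n_photographs = n_teeth // 10  # ten teeth per photograph
```

This gives a design effect of 2.8, 703 teeth and 70 photographs, matching the values stated above.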

To assess the performance of AI and junior dentists on decay detection on intra-oral photographs, the ground truth had to be determined first. Therefore, to establish the gold standard, two subject experts with over four years of postgraduate clinical experience labeled a total of 70 unseen images for decay. The performance of the AI model and of junior dentists with at least two years of clinical experience was gauged against these labels.

Results

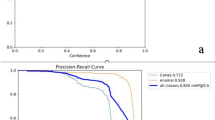

Comparison between DeTR and YOLOv5s

The performance comparison between the transformer-based DeTR and the CNN-based YOLOv5s is detailed in Table 1. DeTR exhibited suboptimal performance, with a sensitivity of 34.4% and a precision of 26.9%, resulting in an F1 score of 30.1%. Conversely, the CNN-based YOLOv5s outperformed DeTR, achieving a precision of 90.7%, a sensitivity of 85.6%, and an F1 score of 88.0%.
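The F1 score is the harmonic mean of precision and sensitivity, so the reported values can be checked directly from the other two metrics. The sketch below uses the rounded percentages quoted above as inputs; the function name `f1_score` is an illustrative assumption.

```python
def f1_score(precision, sensitivity):
    """Harmonic mean of precision and sensitivity (recall)."""
    if precision + sensitivity == 0:
        return 0.0
    return 2 * precision * sensitivity / (precision + sensitivity)


yolo_f1 = f1_score(precision=0.907, sensitivity=0.856)
detr_f1 = f1_score(precision=0.269, sensitivity=0.344)
```

Because the inputs are already rounded, the recomputed scores (about 0.881 and 0.302) agree with the reported 88.0% and 30.1% to within roughly 0.1 percentage point.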

Direct comparison of YOLOv5s and junior dentists

Table 2 compares the performance of junior dentists in decay detection with that of the trained YOLOv5s algorithm. Junior dentists achieved a sensitivity of 64.1%, a precision of 83.3%, and an F1 score of 72.4%. The YOLOv5s algorithm scored higher on all three metrics, with a sensitivity of 67.5%, a precision of 84.3% and an F1 score of 75.0%. The significance level was maintained at p ≤ 0.05 for all statistical analyses. A chi-squared test showed no significant difference in the performance metrics of the two groups (p = 0.157), indicating that AI and humans performed comparably in this task. Receiver Operating Characteristic (ROC) analysis gave values of 0.79 (CI 0.69–0.86) for AI and 0.76 (CI 0.68–0.86) for junior dentists, as shown in Fig. 3.

Deployable application

The YOLOv5s algorithm was then translated into a smartphone-based application because its results were superior to those of the junior dentists. Further details of the app development are provided in the linked GitHub repository28. This application can be used by healthcare workers to determine disease prevalence in populations.

Discussion

This study assessed the effectiveness of an object detection model (YOLOv5s) in identifying decay on clinical photographs and its deployment into a smartphone application. The significance of this study lies in the potential of this open-sourced application that can be widely implemented to assess decay prevalence in populations, making dental health surveillance more accessible. Moreover, the comparison between AI and human performance in detecting dental decay also provides valuable insights into the capabilities and limitations of AI in deployment.

To develop a deployable algorithm for decay detection on intra-oral photographs, the authors of this study employed the YOLOv5s algorithm. This algorithm specializes in object detection and has the advantage of allowing simultaneous classification and localization of multiple objects in image datasets34. Such algorithms are also capable of operating in real time on high-resolution images, contributing to their widespread use across many fields, including dentistry34. In our study this algorithm showed superior performance, with a precision of 90.7%, sensitivity of 85.6% and F1 score of 88.0%. This indicates that the model detects decay with higher accuracy than other reported studies performing object detection for decay identification18,35,36. A study by Thanh et al. employed the YOLOv3 algorithm, achieving a sensitivity of 74% for decay detection in intra-oral images35. This improvement could be attributed to the larger dataset of 7,465 images used in the current study, compared with the 2,652 intra-oral images used in theirs, which makes the current model more robust. Kim et al. also developed a decay detection smartphone application18. In their study the YOLOv3 algorithm had a mean Average Precision (mAP) of 94.46%, which is comparable to the current study18.

Another notable distinction of this study is the direct comparison of the performance of the trained YOLOv5s algorithm with that of dentists. The trained YOLOv5s identified decay slightly better than junior dentists: the sensitivity of decay detection by the algorithm was 67.5% compared to 64.1% for junior dentists, although the difference between the two groups was not statistically significant (p = 0.157). A study by Alam et al. found that the sensitivity of decay detection on clinical examination by humans was 86% compared to 92% for the trained AI algorithm, with no significant difference between the two groups37. The authors can conclude that the trained AI model in the current study performs at least as well as a junior dentist with at least two years of clinical experience. This validates its potential use as a decay detection application in community settings where access to trained dentists is scarce.

An additional key strength of this study is the implementation of XAI techniques for rationalizing the performance of the YOLOv5s algorithm, which distinguishes it from other reported studies18,35,36. The authors employed EigenCAM to generate heatmaps localizing the area of interest that led the algorithm to identify decay in the intra-oral photographs. This transparency allows researchers and practitioners to understand the AI’s rationale, supporting trustworthy and validated decisions38. XAI techniques like EigenCAM reduce the black-box nature of AI, making it a more transparent tool38. This fosters trust and provides valuable insights for refining AI models. By clearly identifying the features considered by the AI, researchers can assess accuracy, identify biases, and improve performance. Only one study previously utilized XAI (GradCAM) in a similar problem39. However, the literature indicates that EigenCAM has faster processing speeds and produces more precise visual explanations than GradCAM40.

The authors of this study also experimented with the recently introduced DeTR algorithm for the object detection task, aiming to achieve comparable if not better results, but encountered several challenges. These transformer-based algorithms are resource-intensive and perform poorly when the objects to be detected occupy a small portion of the image, as a decay lesion does in an intra-oral photograph41. Additionally, this algorithm requires a higher number of training images than the YOLOv5s algorithm42. A study by Jiang et al. utilized a transformer in their model for decay detection, opting to incorporate only the transformer backbone rather than the entire DeTR framework to mitigate computational costs43. In contrast, this study used the full DeTR framework and observed poor performance. This suggests that using a transformer backbone may not only reduce computational costs but also improve the model’s performance in detecting decay. Another hindrance encountered by the authors during training of the DeTR algorithm was its inability to process images with no disease. Since the images with no decay lesions had empty corresponding annotation files, this algorithm excluded those images with ‘healthy’ teeth entirely, so the dataset used to train it consisted solely of examples of ‘diseased’ teeth. This led to class imbalance and therefore an inaccurate prevalence of disease in the training dataset. Another disadvantage of DeTR was its incompatibility with XAI techniques, so the rationale for its results could not be determined. Finally, since the goal was deployment in the form of an open-source application, it is relevant that this algorithm does not allow translation to edge devices due to its higher computational needs42. For this reason, even had the algorithm performed better, the authors would still not have recommended its deployment for determining the prevalence of disease/decay.

This study has limitations: the authors could only successfully execute the decay detection task, and identifying fillings and missing teeth was not possible due to the limited number of such examples in the dataset. This may be due to the sample population, as the images were predominantly collected from rural areas of Pakistan where dental care is limited. Therefore, there were very few examples of filled teeth, and the number of images of missing teeth was also low, presumably due to the inclusion of adolescents who are still undergoing dental eruption phases.

In the future, the authors plan to utilize this application to assess disease prevalence across various populations, particularly in low-resource settings. This approach will enable the identification of disease prevalence in rural areas, potentially guiding healthcare resources to these regions for treatment. Moreover, this app will be used to capture images from urban populations, and the algorithm will be further trained on these images to include the detection of filled and missing teeth. The authors aim to improve the application’s generalizability, enabling it to be used for DMFT scoring by community dentists across different populations. The authors also acknowledge that diagnosing decay involves clinical, tactile, and radiographic examinations. Therefore, while the application can identify areas of concern, a dentist, albeit remote, may need to corroborate the findings to confirm the presence of decay.

Conclusion

The trained YOLOv5s algorithm in this study demonstrates optimal performance in detecting dental decay on intra-oral photographs, as evidenced by its comparable results to junior dentists. When deployed, this application will assist in determining the caries index of populations, enabling the assessment of disease prevalence. Subsequently, necessary measures can be implemented to reduce the disease burden at the community level.

Data availability

The datasets used during the current study are unavailable since they are owned by AKUH. The deployable AI application, however, can be accessed using the following link: https://github.com/MeDenTec/Tooth-decay-detection-App.

References

World Health Organization. Noncommunicable diseases. https://www.who.int/news-room/fact-sheets/detail/noncommunicable-diseases (2023).

World Health Organization. Oral Health. https://www.who.int/news-room/fact-sheets/detail/oral-health (2023).

Frencken, J. E. et al. Global epidemiology of dental caries and severe periodontitis—a comprehensive review. J. Clin. Periodontol. 44, S94–S105. https://doi.org/10.1111/jcpe.12677 (2017).

Adnan, S., Lal, A., Naved, N. & Umer, F. A bibliometric analysis of scientific literature in digital dentistry from low- and lower-middle income countries. BDJ Open 10, 38. https://doi.org/10.1038/s41405-024-00225-4 (2024).

Cheng, L. et al. Expert consensus on dental caries management. Int. J. Oral Sci. 14, 17. https://doi.org/10.1038/s41368-022-00206-7 (2022).

Kathmandu, R. Y. The burden of restorative dental treatment for children in third world countries. Int. Dent. J. 52, 1–9. https://doi.org/10.1111/j.1875-595X.2002.tb00685.x (2002).

Petersen, P. E., Bourgeois, D., Ogawa, H., Estupinan-Day, S. & Ndiaye, C. The global burden of oral diseases and risks to oral health. Bull. World Health Organ. 83, 661–669. https://doi.org/10.2471/BLT.05.018067 (2005).

Seiffert, A. et al. Dental caries prevention in children and adolescents: A systematic quality assessment of clinical practice guidelines. Clin. Oral Investig. 22, 3129–3141. https://doi.org/10.1007/s00784-018-2527-4 (2018).

Peres, M. A. et al. Oral diseases: A global public health challenge. Lancet 394, 249–260. https://doi.org/10.1016/S0140-6736(19)31146-8 (2019).

Schwendicke, F., Samek, W. & Krois, J. Artificial intelligence in dentistry: Chances and challenges. J. Dent. Res. 99, 769–774. https://doi.org/10.1177/0022034520910464 (2020).

Shan, T., Tay, F. & Gu, L. Application of artificial intelligence in dentistry. J. Dent. Res. 100, 232–244. https://doi.org/10.1177/00220345211005763 (2021).

Lal, A. & Umer, F. Navigating challenges and opportunities: AI’s contribution to Pakistan’s sustainable development goals agenda—a narrative review. JPMA J. Pak Med. Assoc. 74, S49–S56. https://doi.org/10.47391/jpma.Aku-9s-08 (2024).

Chollet, F. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 1251–1258 (IEEE, 2020).

Morid, M. A., Borjali, A. & Del Fiol, G. A scoping review of transfer learning research on medical image analysis using ImageNet. Comput. Biol. Med. 128, 104115. https://doi.org/10.1016/j.compbiomed.2020.104115 (2021).

Patil, S. et al. Artificial intelligence in the diagnosis of oral diseases: Applications and pitfalls. Diagnostics 12, 1029. https://doi.org/10.3390/diagnostics12041029 (2022).

Hung, M. et al. Application of machine learning for diagnostic prediction of root caries. Gerodontology 36, 395–404. https://doi.org/10.1111/ger.12358 (2019).

Javid, A., Rashid, U. & Khattak, A. S. In 2020 IEEE 23rd International Multitopic Conference (INMIC) 1–5 (IEEE, 2020).

Kim, D., Choi, J., Ahn, S. & Park, E. A smart home dental care system: Integration of deep learning, image sensors, and mobile controller. J. Ambient Intell. Humaniz. Comput. 14, 1123–1131. https://doi.org/10.1007/s12652-021-03366-8 (2023).

Yoon, K. et al. AI-based dental caries and tooth number detection in intraoral photos: Model development and performance evaluation. J. Dent. 141, 104821. https://doi.org/10.1016/j.jdent.2024.104821 (2024).

Yu, H. et al. A new technique for diagnosis of dental caries on the children’s first permanent molar. IEEE Access 8, 185776–185785. https://doi.org/10.1109/ACCESS.2020.3035987 (2020).

Moutselos, K., Berdouses, E., Oulis, C. & Maglogiannis, I. In 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC) 1617–1620 (IEEE, 2019).

Duong, D. L. et al. Proof-of-concept study on an automatic computational system in detecting and classifying occlusal caries lesions from smartphone color images of unrestored extracted teeth. Diagnostics 11, 1136. https://doi.org/10.3390/diagnostics11071136 (2021).

de Pérez, J. et al. AI-Dentify: Deep learning for proximal caries detection on bitewing x-ray—HUNT4 oral health study. BMC Oral Health 24, 344. https://doi.org/10.1186/s12903-024-03992-6 (2024).

Zhang, Y. et al. In Medical Ultrasound, and Preterm, Perinatal and Paediatric Image Analysis: First International Workshop, ASMUS 2020, and 5th International Workshop, PIPPI 2020, Held in Conjunction with MICCAI 2020, Lima, Peru, October 4–8, 2020, Proceedings 1 233–242 (2020).

Luo, D., Zeng, W., Chen, J. & Tang, W. Deep learning for automatic image segmentation in stomatology and its clinical application. Front. Med. Technol. 3, 767836. https://doi.org/10.3389/fmedt.2021.767836 (2021).

Giavina-Bianchi, M. et al. Explainability agreement between dermatologists and five visual explanations techniques in deep neural networks for melanoma AI classification. Front. Med. 10, 1241484. https://doi.org/10.3389/fmed.2023.1241484 (2023).

Umer, F., Adnan, S. & Lal, A. Research and application of artificial intelligence in dentistry from lower-middle income countries—a scoping review. BMC Oral Health. 24, 220. https://doi.org/10.1186/s12903-024-03970-y (2024).

Available at. https://github.com/MeDenTec/Tooth-decay-detection-App.

Wkentaro, L. Image polygonal annotation with Python (polygon, rectangle, circle, line, point and image-level flag annotation). https://github.com/wkentaro/labelme (2022).

GreatV. labelme2yolo: Labelme2YOLO is a powerful tool for converting LabelMe’s JSON format to YOLOv5 dataset format. https://github.com/greatv/labelme2yolo.

Jocher, G. YoloV5. GitHub. https://github.com/ultralytics/yolov5 (2024).

Google. Google Colab. https://colab.research.google.com/ (2017).

Mertens, S., Krois, J., Cantu, A. G., Arsiwala, L. T. & Schwendicke, F. Artificial intelligence for caries detection: Randomized trial. J. Dent. 115, 103849. https://doi.org/10.1016/j.jdent.2021.103849 (2021).

Yang, R. & Yu, Y. Artificial Convolutional neural network in object detection and semantic segmentation for medical imaging analysis. Front. Oncol. 11, 638182. https://doi.org/10.3389/fonc.2021.638182 (2021).

Thanh, M. T. G. et al. Deep learning application in dental caries detection using intraoral photos taken by smartphones. J. Dent. 12, 5504. https://doi.org/10.1016/j.jdent.2022.5504 (2022).

Ding, B. et al. Detection of dental caries in oral photographs taken by mobile phones based on the YOLOv3 algorithm. Ann. Transl Med. 9, 1622. https://doi.org/10.21037/atm-21-4805 (2021).

Alam, M. K., Alanazi, N. H., Alazmi, M. S. & Nagarajappa, A. K. AI-based detection of dental caries: Comparative analysis with clinical examination. J. Pharm. Bioallied Sci. 16, S580–S582. https://doi.org/10.4103/jpbs.jpbs_872_23 (2024).

Dragoni, M., Donadello, I. & Eccher, C. Explainable AI meets persuasiveness: Translating reasoning results into behavioral change advice. Artif. Intell. Med. 105, 102431. https://doi.org/10.1016/j.artmed.2023.102431 (2023).

Askar, H. et al. Detecting white spot lesions on dental photography using deep learning: A pilot study. J. Dent. 107, 103615. https://doi.org/10.1016/j.jdent.2021.103615 (2021).

Rahman, A. N., Andriana, D. & Machbub, C. In 2022 International Symposium on Electronics and Smart Devices (ISESD) 1–5 (IEEE, 2022).

Zhang, H. et al. DINO: DETR with improved denoising anchor boxes for end-to-end object detection. arXiv. https://arxiv.org/abs/2207.09068 (2022).

Chen, Q. et al. LW-DETR: A Transformer replacement to YOLO for real-time detection. arXiv. https://arxiv.org/abs/2401.02891.

Jiang, H., Zhang, P., Che, C. & Jin, B. RDFNet: A fast caries detection method incorporating Transformer mechanism. Comput. Math. Methods Med. 9773917 (2021).

Author information

Contributions

N.A., S.M.F.A. and F.U. wrote the main manuscript text and prepared figures and tables; S.M.F.A. and S.A. carried out data collection; J.K.D., R.H.S. and Z.H. critically appraised the manuscript. All authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Adnan, N., Faizan Ahmed, S.M., Das, J.K. et al. Developing an AI-based application for caries index detection on intraoral photographs. Sci Rep 14, 26752 (2024). https://doi.org/10.1038/s41598-024-78184-x

DOI: https://doi.org/10.1038/s41598-024-78184-x
