Abstract

The main advantage of DDIM is that it guarantees the quality of the generated images while increasing the efficiency of the generation by modifying the sampling strategy in the diffusion process. DiffusionRig, which addresses the problem of maintaining identity consistency by learning the person-specific facial prior in a tiny personalized dataset, is a successful representation of the DDIM strategy. Based on DiffusionRig, in this article, we propose an improved face attributes editing method based on DDIM to improve naturalness and accuracy of editing results in complex face attribute editing tasks and the generalization ability. Our method combines DDIM and DECA together and use two-stage training strategy. To reduce DiffusionRig’s limitations in handling face attribute editing tasks that require nonlinear understanding and fine-tuning, our method also introduces a channel attention mechanism and a depth-separable convolution technique in the training model. In the first stage we trained our model on the open FFHQ dataset, which consists of 30,000 high-resolution face image. In the second stage, the model was refined by using a tiny personalized dataset. Face attribute editing experiments,comparative experiment with DiffusionRig, and a series of ablation experiments have been performed. Then, the validity of our improved method is verified by qualitative and quantitative analysis.

Similar content being viewed by others

Introduction

Face attributes editing refers to modifying one or more attributes of a face image, such as facial expression, pose, lighting, etc., to generate the expected face. It has a wide range of applications in several fields, including entertainment, art creation, film production, social media and game development1,2. Traditional face editing methods are often based on mathematical calculations, color technology, image transformations, gradient constraints, or physical models. Traditional face attribute editing methods are difficult to meet the high requirements of accuracy, adaptability and naturalness. These methods often don’t work well when dealing with complex editing tasks such as adjusting facial features, changing facial expressions or age. Deep learning-based technologies automatically learn facial features through deep neural networks3, which can provide higher quality editing results4. Specifically, the most commonly used strategy to implement face editing is based on generative models. Among them, the most used generative models are GAN and Diffusion.

Generative Adversarial Network (GAN)5, as a powerful model in the field of deep learning, has not only played a great role in many fields such as image generation6,7,8, video synthesis9,10 and natural language processing11,12 through its unique adversarial training approach, but also promotes the development of the field of image editing. GAN is able to generate high-quality images while effectively maintaining the structural and semantic information of the image, which is particularly important for face images with high-dimensional attributes and complex textures13. Despite the great potential of GANs for face attribute editing tasks, there are some stability challenges associated with their training process. Pattern collapse14 and pattern oscillations are common problems that lead to a lack of diversity in the generated samples. In addition, GAN-generated face images can sometimes suffer from instability, artifacts, or distortion.

Compared with GAN, the Denoising Diffusion Probabilistic Model (DDPM)15 has certain advantages in many aspects. First, DDPM is able to generate diversity as well as higher quality samples, which means that the images generated are closer to real data and less prone to artifacts or distortions. Second, the training process of DDPM is usually more stable and less susceptible to problems such as pattern collapse or pattern oscillations. This stability makes DDPM a reliable choice for processing with complex image data. In addition, DDPM uses a progressive generation method, which makes it better able to maintain the structural consistency of the image.

The Denoising Diffusion Implicit Model (DDIM)16, as a variant of DDPM, further optimizes the generation speed. DDIM is able to achieve the same or better image quality in fewer steps by modifying the sampling strategy in the diffusion process. This accelerated generation process not only improves efficiency, but also reduces the consumption of computing resources, making the model more practical for practical applications. These improvements make DDIM especially valuable in scenarios that require fast generation of high-quality images. Therefore, the application of DDIM in the field of face attribute editing has great potential and prospects.

There are two main challenges in the field of face attribute editing: attribute decoupling and identity consistency. A human face consists of a variety of complex attributes, such as age, gender, expression, hairstyle and so on. These attributes often have natural correlations between them, e.g., certain expressions may be more common for specific age groups. This intrinsic connection between attributes may lead to entanglement between features, i.e., changing one attribute may accidentally affect other attributes. In order to maintain the naturalness and consistency of the image, effective attribute decoupling requires that the model be able to modify one or several attributes independently without disturbing other features of the face. On the other hand, keeping the identity of the edited face image unchanged is also a key challenge in face attribute editing. Some models may overemphasize the editing of a particular attribute and neglect the importance of identity consistency. Therefore, a good face attribute editing model should focus on both attribute decoupling and maintaining identity consistency.

In this context, DiffusionRig2 employs a signal-supervised training module that mainly relies on addition and multiplication operations of matrix. This design simplifies the model structure and reduces parameter calculations, which leads to training stability. However it also places great constraints on the model’s modeling capabilities. Specifically, this approach can only capture linear relationships between features and lacks the ability to dynamically adjust feature weights, which can be a limiting factor when dealing with face attribute editing tasks that require nonlinear understanding and fine tuning.

We propose an improved DDIM-based face attribute editing method aimed at achieving high-quality face attribute editing. Our method combines the generative ability of DDIM, the advanced expression capturing capability of DECA (Detailed Expression Capture and Animatio) model17, and cross-attention and depth-separable convolution techniques to optimize the face image editing process. Through two-stage training, our method not only achieves accurate editing of face attributes, such as expression, lighting, and pose changes, but also maintains the consistency of individual identity during editing, thus effectively generating realistic and personalized face images. It is worth noting that the DiffusionRig model improves the training stability by simplifying the model structure and reducing the parameter calculation, but its reliance on matrix operation limits the model’s ability to capture nonlinear relationships among features, which may lead to limited naturalness and accuracy of editing results in complex face attribute editing tasks. To address this issue, this paper introduces a channel attention mechanism and a depth-separable convolution technique to enhance the model’s focus on key features and maintain performance while reducing parameters.

The main contributions of our paper can be summarized as follows:

-

(1)

We propose a face image editing method that combines the generative capabilities of DDIM and the expression capture capabilities of DECA;

-

(2)

We verified that face image editing can be optimised through cross-attention mechanism and depth-separated convolution technique;

-

(3)

We verified that through a two-stage training method, not only the accurate editing of face attributes can be achieved, but also the consistency of individual identity can be maintained in face image editing.

In this paper, in Section “Introduction”, we present the research background of our proposed method and the contributions of this work. In Section “Related works”, we introduce the related works of the paper including DDIM, 3DMM, DECA, DiffusionRig and Cross-attention. Section “Method” introduces our method, introduces the main improvements of the model and the composition of the loss function. Section “Experiment” introduces the details of the experiment, the comparison of our method with other methods and our ablation experiments, and makes qualitative and quantitative analysis. Section “Discussion” compares the advantages and disadvantages of our method. Section “Conclusion” is the summary and conclusion. We experimented on a dataset of three celebrity and one non-celebrity face and compared our method to DiffusionRig. Through qualitative and quantitative analysis, we compared the FID (Fréchet Inception Distance)18 and LPIPS (Learned Perceptual Image Patch Similarity)19 metrics for face attribute editing. The results show that our method performs better in terms of generalization ability and the quality of the edited images. Our code with the datasets generated and analysed during the current study are available in the github repository, https://github.com/qzplucky/diffusionatt.git.

Related works

Face attribute editing is a key area in digital image processing. Before the emergence of deep learning technology, the main methods included Poisson fusion20,21, the 3DMM(3D Morphable Model)22,23 and so on. With the development of deep learning, the Deep Feature Interpolation (DFI) method updates the facial attribute by adjusting the deep features of a specific attribute24. The introduction of the diffusion model (DDPM) improves the stability of the training and increases the diversity of samples, which improves the quality of the generated image15. DDIM, as a variant of DDPM, further optimizes the generation speed, increases the efficiency and improves the quality of the generated images16.

DDIM

The main shortcoming of DDPM is that it needs to set a long diffusion step to get good results, which leads to slower generation of samples. For example, if the number of diffusion steps is 1000, then generating a sample requires the model to reason 1000 times. Compared to DDPM, DDIM has the same training objective but no longer restricts the diffusion process to be a Markov chain, which allows DDIM to speed up the generation process with fewer sampling steps.

The principle of DDIM is that the process of data generation is regarded as a reverse diffusion process. First, the DDIM model starts with a simple distribution, then gradually introduces structured information through a sequence of deterministic transformations, finally, generates training samples similar to the training data. This inverse process is accomplished by training a neural network that learns how to gradually remove the noise added to the data. The forward process is a process of gradually adding noise to the data. At time point t, the generation of data can be represented by the following equation:

where \(\varepsilon\) represents the noise randomly selected from standard normal distributions, \(a_{t}\) is a preset variance reduction coefficient that determines the amount of noise added to the data in each step. The forward process starts from the original state \(x_{0}\) and gradually adds noise until it reaches the full noise state.

The core of DDIM lies in its reversal process, which is a deterministic process of recovery from a completely noisy state to the original data state. Given the value of \(x_{t}\) at time point t, the value \(x_{t-1}\) at time point \(t-1\) can be calculated by the following equation:

where \(\varepsilon _{\theta }(x_{t},t)\) represents the output of the neural network, that is the predicted noise of \(x_{t}\) at time point t. This equation use the predicted noise of the neural network to recover \(x_{t}\) to \(x_{t-1}\) without going through all the intermediate states, reflects the non-Markov nature of its process.

The training goal of DDIM is to optimize the neural network so that it can accurately predict the noise added during the diffusion process, which can be achieved by minimizing the following loss function:

where \(x_{0}\) is the original data, \(\varepsilon\) is the added noise, t is a randomly chosen time point, \({\bar{a}_{t} }\) is the cumulative product of all a coefficients up to time point t. By minimizing this loss function, the neural network learns how to predict the noise to be added during diffusion based on the noise data and the time step.

The above approach makes the DDIM process more efficient, and the samples generated are usually more stable and consistent due to the reduction of random sampling.

3DMM and DECA

3DMM (3D Morphable Model)22 proposed by Blanz et al. in 1999. It is a generalized 3D face model and its core idea is to decompose the face into two parts: shape and texture. Later, many researches extended the data and studied 3DMM in depth, for example, FacewareHouse25 further decomposed the shape of the 3D face model into identity and expression. The advantage of 3DMM method is that it can decouple various attributes and reduce the confusion between features. 3DMM has achieved some success in face modeling and editing, but there are still have some problems due to the fact that high-precision face 3D models are difficult to obtain and the commonly used parameters have low dimensions, making it difficult to accurately represent the detailed features of the face.

The Deep Exemplar-based Colorization Algorithm (DECA)17 is a technique for face modeling and analysis. Unlike 3DMM, DECA mainly learns a high-dimensional feature representation of the face through a deep convolutional auto-encoder and maps it to a low-dimensional space. By doing so, key features of the face, such as expressions and postures, can be extracted, and the face analysis and processing can be automated. The effect images of the DECA is shown in Fig. 1. The first column shows the input raw RGB image, the second column displays the surface normal map, composed of vectors perpendicular to the surface at each point, used to describe the orientation of that point. This is primarily used for rendering lighting models and capturing the 3D appearance of a face. The third column presents the albedo map, which records the ability of various points on an object’s surface to reflect light, showcasing facial features under no lighting conditions. The fourth column features Lambertian rendering, which employs a lighting model based on Lambert’s law for illuminating the face.

DECA used the FLAME(Faces Learned with an Articulated Model and Expression) model26 of generalized 3DMM, which combines a independent linear identity shape space, expression space, a linear blend of skinned (LBS) and pose-dependent modified blend shapes to achieve dynamic representations of areas such as the neck, chin, eyeballs and so on. In face attribute editing, expression is adjusted by modifying the expression coefficient and mandibular rotation parameter; head pose is controlled by adjusting the head rotation parameter; and face illumination is edited by adjusting the Spherical Harmonics (SH) parameter.

Compared with 3DMM, DECA not only has stronger nonlinear modeling capability and more comprehensive feature representation, but also adopts an end-to-end learning approach, which effectively simplifies the modeling process. Its versatility is also a major advantage of DECA, which is suitable for face modeling tasks in multiple fields and has a wider application prospect. Therefore, DECA has greater potential and advantages in the field of face modeling and analysis, and can effectively solve the problem of feature entanglement in face attribute editing tasks.

However, the DECA approach performs not good in terms of image rendering performance, and it does not model parts such as hair and teeth, which makes it requires additional image completion work to generate these parts in real editing.

With the advancement of DDIM, the quality of face image generation has improved significantly. By combining the advantages of DECA in attribute decoupling with the capabilities of DDPM in image generation during face attribute editing, more detailed and realistic editing results can be realized.

DiffusionRig

DiffusionRig2 addresses the problem of maintaining identity consistency by learning the person-specific facial prior in a tiny personalized dataset of 20 images of the same person to achieve identity consistency in the process of editing the facial attributes of a specific person as well as maintaining high-frequency facial details.

Specifically, DiffusionRig is trained in two stages: DiffusionRig first learns a generalized facial prior from a large-scale face dataset, and then learns a person-specific facial prior from a small collection of portraits of specific people. It employs a signal-supervised training module that relies primarily on addition and multiplication operations of matrices. This design simplifies the model structure and reduces parameter computation, which leads to training stability, but it also has constraints on the model’s modeling ability . This approach can only capture the linear relationship between features and lacks the ability to dynamically adjust feature weights, which can be a limiting factor when dealing with face attribute editing tasks that require nonlinear understanding and fine tuning.

Cross-attention

In deep learning, the attention mechanism27 mimics the allocation mechanism of human attention while processing tasks to help neural networks process data more efficiently. It allows the model to focus on the most relevant information to the task and ignore the unimportant. This approach improves the performance of tasks such as image classification, target detection and scene understanding in computer vision. This model achieves the efficient use of computing resources by focusing on key areas of the image.

In face attributes editing, the attention mechanism significantly improves the quality and efficiency of editing by focusing on key parts and details of the face image. This enables the model to fine-tune expressions, hairstyles, or skin textures while maintaining the natural feel and personal characteristics of the face image.

The cross-attention mechanism28 is a technique in deep learning that is particularly useful for complex tasks such as face attribute editing. This mechanism adjusts the attention level of another sequence by analyzing one input sequence, allowing the model to process and associate different forms of data, such as text and images, more efficiently. This helps the model to better capture and utilize the relationships between these data. In the field of face image editing, the model can better understand the complex relationship between face features and editing tasks by using the cross-attention mechanism. For example, when performing editing of facial expressions or simulation of age changes, the model can more accurately capture detailed features associated with a particular expression or age state, such as subtle changes in facial expressions or details of skin texture.

To enhance the accuracy and efficiency in face attribute editing tasks, especially when dealing with complex and high-dimensional face data, our method is based on DiffusionRig2 and inspired by SENet29, and introduces a cross-attention mechanism28 to improve the model based on DiffusionRig. This improvement aims to generate higher fidelity edited images of faces by precisely controlling the channel weights.

Method

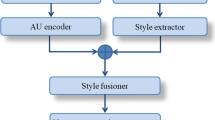

In this paper, we propose an improved face attribute editing method based on DiffusionRig. We enhance the model to establish dynamic dependencies by introducing a cross-attention mechanism and a deep separable convolution technique, which enables the model to focus more effectively on the most relevant information to the current editing task and maintain performance while reducing parameters. Our approach which combines DECA with DDIM is shown in Fig. 2. Specifically, this approach aims to achieve accurate editing of face attributes while preserving individual identity features through a two-stage training process. Figure 3 illustrates the process of face expression editing in the network of this paper. For the first and second stages, the models, losses, and training methods used are exactly the same, except that the large-scale dataset is switched to the personal dataset. The algorithm flow is as follows:

In the first stage of our method, a ResNet-1830 based architecture is used to extract the DECA coefficients of the face image, which are then used to perform the required 3D facial edits. After these edits are completed, our method adopts DDIM to process the edited “physical buffer” (including surface normals, albedo, and Lambertian rendering) to produce a near-realistic image.

To ensure that edited face images retain their original identity information, our method prefers to train the network on the Flickr-Faces-HQ (FFHQ) Dataset31 to learn generalized prior knowledge of facial features. This allows the network to effectively restore facial images with a sense of realism from the edited physical buffer. Relying only on the first-stage model does not satisfy the need of high-precision editing, as the information in the physical buffer is coarse and lacks sufficient identity features.

Therefore, in the second phase of our method, the model was adjusted by using a tiny personalized dataset containing 20 photos of relevant people. This step aims to further refine the performance of the model, especially in dealing with attributes that DECA fails to model directly, such as hairstyles and eyeglasses. For dealing with attributes that are not directly modeled by DECA, our method introduces an additional encoder branch, which is responsible for encoding the input image into a global code that captures facial features not covered by the DECA framework. To improve the effectiveness and efficiency of face attribute editing, we introduce a cross-attention mechanism as well as depthwise separable convolution in the DDIM generation model. The cross-attention mechanism enables the model to focus on the most relevant information for the current editing task by establishing dynamic dependencies in the model, thus making the editing process more accurate and targeted. Deeply separable convolution aims to optimize the computational efficiency of the model by breaking down traditional convolution operations into lighter-weight steps to reduce the number of parameters in the model while maintaining or improving performance. These improvements not only improve the quality of the editing task, but also greatly speed up the editing process, making the model more efficient in handling complex face editing tasks.

This two-stage training allows our model to not only perform accurate face attribute editing, but also maintain the identity consistency of individuals in the editing process, which provides an effective technical approach for generating realistic and personalized face images.

Learning generalized prior knowledge of facial features

The first stage of our method dedicated to acquiring a priori knowledge of facial features with the aim of generating highly realistic images while following various physical condition constraints. the DECA framework is first employed to extract key physical parameters from the input images, which include the parameters of the FLAME model (e.g., facial shape \(\beta\), expression \(\phi\), pose \(\theta\)), albedo \(\alpha\), and illumination I. Next, using Lambertian reflections, these parameters are rendered into the physical buffer, which mainly covers the surface normals, albedo, and Lambertian rendering effects.

Although the information provided by the physical buffer is rather basic and may not be sufficient to accurately represent the identity of an individual, by adjusting the DECA coefficients, we are able to perform fine-grained editing of the face attributes. A significant advantage of this approach is that it achieves decoupling between individual attributes, effectively reducing mutual interference between features. In order to overcome the limitations of DECA in rendering image capability, our method adopts DDIM to further improve the quality of face image generation. By combining the decoupling ability of DECA with the efficient generation ability of DDIM, our method can achieve better quality editing results. In order to consider the randomness in the DDIM generation process, random noise images are also introduced our method. In addition, our method utilizes the ResNet-18 based encoder to encode the global appearance information to capture the facial features that may be missed by the DECA, such as hair, hats, glasses, and so on. Thus, in our model, the physical buffer and the learned global encoding together serve as the conditions for image synthesis, providing a strong support for generating richer and more personalized facial images. Our model can be represented by the following equation:

where \(x_{t}\) is the noisy image at moment t, z represents physical buffer, \(x_{0}\) is input image, \({\hat{\varepsilon }}_{t}\) is the predicted noise, \(f_{0}\) and \(\phi _{\theta }\) represent the DDIM and global latent encoder, respectively.

Learning personalized prior knowledge of facial features

The first stage which mentioned in Section “Learning generalized prior knowledge of facial features” successfully have learned generalized facial prior knowledge from the FFHQ dataset, enabling the model to synthesize face images within the physical buffer. The two core challenges in achieving high-quality face attribute editing are attribute decoupling and identity consistency.

The first phase of our approach exploits the capability of DECA to achieve attribute decoupling. However, keeping the identity of the edited face unchanged during the editing process is another major challenge for face attribute editing. As shown in Fig. 4, relying only on the generalized face prior learned in the first stage is not sufficient to ensure that the generated image matches the original identity exactly. This is because although the physical buffer is able to reproduce facial structure to a certain extent, it lacks the detail required to maintain high-frequency facial features (e.g., fine skin texture, unique facial markings, etc.), which are critical for face image recognition and identity preservation.

What can be identified is the importance of personalized prior in maintaining identity consistency and refining high-frequency facial features. By deeply analyzing and refining the model to more accurately capture and reproduce the unique facial features of the individual, Our approach improve the quality of generated face image through allowing free editing of face attributes while maintaining the identity of the individual unchanged. The exact steps of our methodology are as follows:

First, we use ResNet-18 for each image in this personalized small dataset to extract its DECA parameters, including facial shape, expression, and pose. Since the DECA is designed to extract DECA coefficients for a single image, theoretically the DECA coefficients of the same person in different images should be consistent. Based on this assumption, this study calculates the average value of DECA coefficients for all images of the same person in the personalized dataset and adopts this average value as the basis for representing the uniform facial shape and other attributes of the person.

Then, in the process of fine-tuning, our approach focuses on learning these uniform and averaged DECA coefficients through the DDIM model as a way to better understand and preserve an individual’s identity information. In this way, the model is able to more accurately retain an individual’s unique facial features and identity information when generating new images.

In order to quantify the model performance and guide the training process, our mehod employs P2 weight loss32 as shown in Eq. (5). This loss function is designed to calculate the difference between the model prediction noise and the true noise, aiming to optimize the model’s noise prediction ability for more accurate image generation and editing. With this well-designed loss function and fine-tuning strategy, our method aims to address the key challenges in face editing and achieve face image editing that is both personalized and maintains a high degree of realism.

where \(\lambda _{t}\) is the hyperparameter to control the weights for different time steps.

Improved model of cross attention and depthwise separable convolution

The signal-supervised training module used by DiffusionRig relies on matrix addition and multiplication operations. This design simplifies the structure of model and reduces the computation of parameters, which leads to training stability. However, this approach can only capture the linear relationship between features and lacks the ability to dynamically adjust the weights of features, which may become a limiting factor when dealing with the task of editing face attributes that require nonlinear understanding and fine tuning.

Therefore, we introduced the cross attention module (ATT module) into the U-Net network structure in the DDIM model, as shown cross attention module in Fig. 2 (the middle position on the right side), and carried out experiments on face attribute editing based on this. In our method, for the input external supervision features which include the coded features of the externally supervised DECA and the coded features of the noise-adding moments, they are sequentially added and incorporated into the coded features of the image that is noise-added during the denoising process through the ATT module. Specifically, we process the externally supervised features in two parts: one part adopts a linear modeling approach, and the other part achieves nonlinear modeling through the cross-attention mechanism. The specific ATT module is shown in Fig. 2 (bottom right corner).

In the linear modeling stage, the number of feature channels is first increased to twice the original number, then divided these channels into two parts. These two parts of the features are combined with the image features by matrix multiplication and matrix addition, respectively. This stage is designed to maximize the learning and utilization of externally supervised detailed features while speeding up the computational speed of the model.

For the nonlinear modeling stage, we employ a cross-attention module for feature fusion. In this process, supervised features are treated as queries (Q), and image features are used as keys (K) and values (V). This stage allows the model to achieve deeper supervised learning of features while maintaining high efficiency.

To further enhance the flexibility of the model and avoid the additional computational burden caused by adding parameters, we summed the results of linear and nonlinear modeling. In addition, to prevent possible degradation problems during model training, we introduce jump connections to stabilize the training of the model.

By the design of this method, the Encoded_Timestep (t in DDIM) and face style embedding can be more effectively integrated into the diffusion process, making the generated face editing images more realistic. This strategy of integrating linear and nonlinear modeling (the linear modeling stage and the nonlinear modeling stage above) not only improves the model’s ability to handle externally supervised features, but also optimizes the overall image editing results.

To further improve the efficiency of the model and reduce the number of parameters, we adopts the design concept of MobileNet33, especially its innovative approach in reducing parameters and computational cost. Specifically, we employ Depthwise Separable Convolution34 in the jump connections of each ResNet block.

Depthwise Separable Convolution reduces the number of parameters and computational complexity of the model by decomposing the standard convolution operation into two lightweight steps: first, channel-by-channel (Depthwise) spatial filtering, and then cross-channel (pointwise) convolution. This decomposition approach leads to a significant reduction in the number of parameters and computational operations without significantly sacrificing the performance of the model. By applying Depthwise Separable Convolution, the number of parameters of our model is further reduced to 784.51MB from 794.77MB, which demonstrates the effectiveness of the Depthwise Separable Convolution in reducing the number of parameters of the model and the computational cost.

Experiment

We first conducted experiments on facial attribute editing on three famous persons in the FFHQ dataset (Obama, Biden, and Taylor) and one person not in FFHQ. Then we conducted a comparative experiment with DiffusionRig to analyze the advantages of our proposed approach from a qualitative and quantitative point of view. Finally we also conducted a series of ablation experiments to investigate the importance of each module in our model.

Implementation details and evaluation metrics

In the first phase of our experiments, we trained the network using the FFHQ dataset, which consists of 70,000 high-resolution face images. The pre-processing and partitioning of the dataset follows the work of DiffusionRig. We chose Adam as optimizer and set the learning rate as \(10^{-4}\) and set the batch size as 8. The resolution of all images was uniformly adjusted to \(256*256\) pixels, and the whole network was trained after 50,000 iterations in order to achieve a good training result, the partial loss graph of the model training is shown in the Fig. 5.

In the second phase, we take a more refined approach to fine-tuning the model using a tiny personalized dataset which contains 20 photos. In this phase, the learning rate is further reduced to \(10^{-5}\) and the batch size is adjusted to 4, the image resolution is kept at \(256*256\) pixels and the number of iterations is reduced to 5,000. Utilizing an NVIDIA A6000 graphics card, this training phase took approximately 30 minutes to complete.

All our qualitative and quantitative analyze are based on images with a resolution of \(256*256\). All experiments was implemented in the PyTorch framework. This two-phase training approach not only improves the generality and adaptability of the model, but also improves the quality and realism of the edited image.

We use FID(Fréchet Inception Distance)18 and LPIPS(Learned Perceptual Image Patch Similarity)19 to evaluate our method. FID combines deep learning and statistical methods to evaluate the quality of the generated images by comparing the distance between the feature distribution of the generated images and that of the real images. LPIPS uses a pre-trained deep neural network model to extract features from the images and calculates the similarity between the images based on the difference of these features. A lower value of both metrics indicates that the feature distribution between the generated image and the real image is more similar and the quality of the generated image is higher.

Obama face attribute editing experiment results. For the given three original images of Obama (first row) and one reference image (second row), extract the DECA coefficients of the face (including Surface Normals, Albedo, and Lambertian Render), edit the DECA coefficients based on the application targets (Expression, Lightingh, Pose, etc.) and the reference image, and finally generate the edited face images(seventh row) based on the DDIM method using the edited DECA coefficients(third, fourth, and fifth rows). The sixth row is the editing results of the DiffuseRig method.

Face attribute editing based on physical buffer modification

We used the personalized model trained in the second stage to conduct experiments on face attribute editing by manipulating the physical buffer, which includes the three types of editing: light editing, expression editing and pose editing. We changed the Lambertian rendering effect by adjusting the spherical harmonics(SH) parameter for the lighting editing. we modified the expression parameter and jaw rotation parameter of the FLAME model to realize the expression editing. We adjusted the head rotation parameter for the pose editing. When performing these edits, 64-dimensional global variables are generated by encoding the input image and remain unchanged throughout the editing process. The editing results include three celebrities and one non-celebrity and all the edited images have a resolution of \(256*256\), which are shown in Fig. 6. Taking the Obama’s face attribute editing as an example, the first row represents the input original Obama face image, the second row from left to right represents the expression editing, lighting editing, and pose editing, respectively. The rest (Biden, Tyler, and non-celebrities) are the same order as the Obama face attribute editing.

Comparison experiment

In this section, we focus on editing the face attributes of three celebrities (Obama, Biden, and Taylor) using our model and comparing these edited results with DiffusionRig from the perspective of qualitative and quantitative analyses.

Qualitative and quantitative comparison demonstrates the advantages of our model over DiffusionRig in terms of lighting editing and expression modification, which not only performs well in maintaining the identity characteristics of the characters, but also has significant advantages in ensuring the natural and realistic of the editing results.

Qualitative analysis

As shown in Fig. 7 , when editing Obama’s tiny personalised dataset, our method does a better job in maintaining the original skin tone compared to DiffusionRig and the editing results are more natural and close to the original image. As shown in Fig. 8, when processing expression editing in Biden’s dataset , editing results processed by DiffusionRig were significantly different from the original image in skin tone and have a bluish background colour when compared to the original image. In comparison with DiffusionRig’s results, the results of our method are more natural. As shown in Fig. 9, in the editing of Taylor’s dataset, a certain amount of noise appeared in the results processed by DiffusionRig. Compared to the DiffusionRig method, our method is visually superior and the edited image is much clearer and almost noiseless, thus providing better quality editing results. These comparisons demonstrate the significant advantages of our method in terms of detail processing, colour preservation and noise control. The above comparisons further demonstrate the ability of our method to generate more realistic and natural images compared to DiffusionRig method in face attribute editing tasks.

Quantitative analysis

The results of the quantitative analysis are shown in Table 1. These data are collected from 1,000 randomly generated samples of different personal data sets. According to the data in the table, it can be seen that the FID , RMSE and LPIPS metric values of our method are better than those of DiffusionRig in terms of lighting and expression attribute editing, which means that our method generates images with higher quality and greater similarity to the real images in these two aspects. However, the FID and LPIPS metric values of our method are lower than those of DiffusionRig for pose attribute editing, which means images generated by DiffusionRig may be visually closer to the real images or maintain better perceptual consistency when dealing with pose changes. We calculated the DECA Re-Inference Error (RMSE) and Face Re-Identification Error (face re-ID error) on 1000 images in each ___domain to compare the DiffusionRig and Mystyle methods. The Re-Identification Error are shown in Table 2 and RMSE are shown in Table 3. From the results, we can see that our method achieves better results in RMSE and face re-ID error than the original DiffusionRig method, and the face consistency is stronger.

These observations and conclusions highlight the advantage of our approach in light and expression editing, but also point out the shortage of our method in the pose editing task. For future work, this means that we can explore new approaches or improve existing techniques to improve the accuracy and image quality of pose editing so that all face attributes editing can be exceed the level of existing techniques.

Ablation experiments

In this section, we conduct a series of ablation experiments to validate the importance of the personalized prior for improving the performance of our model in the face attribute editing task, the effect of choosing the ATT module and the number of images in the tiny personalized dataset in adjusting the model. Through these ablation experiments, we are able to gain insight into the contribution of each component to the overall performance of the model.

Experiments without personalized prior

Experiments were conducted on the performance of the model without a personalized prior. the model in these experiments relied only on the first stage of training on the FFHQ large dataset without any personalized fine-tuning. In the experiments, editing was performed by using physical buffers as the only input for pose, expression and lighting. The results of experiments are shown in Fig. 4, we can see this strategy doesn’t perform well in identity consistency. These experiments validated the importance of personalized prior in ensuring identity consistency during the editing process.

Experiments without cross attention module

Experiments were conducted to explore the performance of our model without the attention module(ATT) by removing ATT module in experiments. The results of Obama’s, Biden’s, and Taylor’s qualitative experiments are displayed in Figs. 10, 11 and 12. The visual impact of the absence of the Attention Module on the editing results can be seen in these figures. In addition, we also quantitatively evaluated the impact of the attention module through FID and LPIPS values. The specific results are shown in Table 4. We output 1000 samples of random latent vectors for each person’s photo to calculate the score. It can be seen that the introduction of the ATT module can significantly improve the performance of the model. So, these experimental results confirm the importance of the attention module for improving the accuracy and efficiency of the model in face attribute editing tasks.

Experiments of adjust the number of images in the tiny personalized dataset

These experiments focused on investigating the effect of the number of images used during the second phase on the ability to learn personalized priors. Specifically, a celebrity (Taylor) is selected as a case study and four different models are trained using different numbers of images (1, 5, 10, and 20) in the tiny personalized dataset. These four different trained models are evaluated in terms of light editing, expression editing, and pose editing.

The results are presented in Fig. 13, (a) represents the original image, (b) to (e) show the the editing results by using the trained model with 1,5,10 and 20 images, (f) is a reference image that define the target appearance. As shown in Fig. 13, when training with only a small number of images (e.g., 1, 5, or 10), the quality of the results is significantly worse compared to a model using 20 images. As the number of training images increases, the model’s ability to capture details and apply personalized priori improves significantly, especially in more natural and precise expressions, lighting and pose adjustments. This phenomenon is not surprising, as more images provide richer information that helps the model learn and capture individual high-frequency facial features and personalized priors more accurately.

The results of these experiments highlight the importance of using sufficient number of images in the personalized fine-tuning phase so that the model can effectively learn and apply personalized priors to achieve higher accuracy and naturalness in face attribute editing tasks.

Discussion

By studying DDIM-based face attribute editing method, we have made a new contribution in the field of image editing. By introducing personalized prior, cross-attention, and deep separable convolution into DDIM, we improve the quality of edited images. Experiments demonstrate that our method performs well in face attribute editing.

However, there are some limitations of our study. First, in the second phase of the experiment, the method is extremely dependent on a smaller personalized dataset for fine-tuning. This dependency limits the scalability of the method. In addition, our method in this study relies on DECA to obtain the required physical buffer information. Although DECA is a powerful tool for providing detailed facial information, it is limited in its ability to handle extreme expressions and predict lighting conditions.

Future research directions should explore the use of more diverse as well as larger datasets for training of the second phase and integrate more advanced facial parsing techniques to improve the ability to handle extreme situations and the accuracy of illumination estimation.

Conclusion

We propose an improved face attributes editing method based on DDIM. This method incorporates the generative capabilities of DDIM, the advanced expression capturing capabilities of DECA models, as well as cross-attention and depth-separable convolution techniques to optimize the face image editing process. This approach not only achieves accurate face attribute editing, such as expression, lighting and pose changes, but also maintains the identity consistency of individuals during editing through two-stage training. Our method can correct faces in different lighting poses. Users only need to provide one photo to generate customized face poses and photos with different lighting. The editing experiments on celebrity and non-celebrity face images show that our method significantly improves the editing quality compared to the original DiffusionRig model, which proves its advantages in the field of face attribute editing. In the future, we will further investigate how to reduce reliance on small personalized datasets and how to use facial parsing technology to improve the accuracy of face editing.

Data availability

Our code with the datasets generated and analysed during the current study are available in the github repository, https://github.com/qzplucky/diffusionatt.git.

References

He, Z., Zuo, W., Kan, M., Shan, S. & Chen, X. Attgan: Facial attribute editing by only changing what you want. IEEE Trans. Image Process. 28, 5464–5478. https://doi.org/10.1109/TIP.2019.2916751 (2019).

Ding, Z. et al. Diffusionrig: Learning personalized priors for facial appearance editing. In 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 12736–12746, https://doi.org/10.1109/CVPR52729.2023.01225 (2023).

Umirzakova, S. & Whangbo, T. K. Detailed feature extraction network-based fine-grained face segmentation. Knowl.-Based Syst. 250, 109036. https://doi.org/10.1016/j.knosys.2022.109036 (2022).

Chen, Q. et al. Rdfinet:reference-guided directional diverse face inpainting network. Complex Intell. Syst.[SPACE]https://doi.org/10.1007/s40747-024-01543-8 (2024).

Goodfellow, I. et al. Generative adversarial networks. Commun. ACM 63, 139–144. https://doi.org/10.1145/3422622 (2020).

Qiao, K. et al. Biggan-based bayesian reconstruction of natural images from human brain activity. Neuroscience 444, 92–105. https://doi.org/10.1016/j.neuroscience.2020.07.040 (2020).

Su, S. et al. Face image completion method based on parsing features maps. IEEE J. Sel. Top. Signal Process. 17, 624–636. https://doi.org/10.1109/JSTSP.2023.3262357 (2023).

Deng, F. et al. Dg2gan: Improving defect recognition performance with generated defect image sample. Sci. Rep. 14, 14787. https://doi.org/10.1038/s41598-024-64716-y (2024).

Liu, M.-Y., Huang, X., Yu, J., Wang, T.-C. & Mallya, A. Generative adversarial networks for image and video synthesis: Algorithms and applications. Proc. IEEE 109, 839–862. https://doi.org/10.1109/JPROC.2021.3049196 (2021).

Wang, Y., Bilinski, P., Bremond, F. & Dantcheva, A. Imaginator: Conditional spatio-temporal gan for video generation. In 2020 IEEE Winter Conference on Applications of Computer Vision (WACV), 1149–1158, https://doi.org/10.1109/WACV45572.2020.9093492 (2020).

Fedus, W., Goodfellow, I. J. & Dai, A. M. Maskgan: Better text generation via filling in the ______. ArXiv[SPACE]arXiv:abs/1801.07736 (2018).

Haidar, M. A. & Rezagholizadeh, M. Textkd-gan: Text generation using knowledge distillation and generative adversarial networks. Adv. Artif. Intell.[SPACE]https://doi.org/10.1007/978-3-030-18305-9_9 (2019).

Zhu, B., Zhang, C., Sui, L. & Yanan, A. Facemotionpreserve: a generative approach for facial de-identification and medical information preservation. Sci. Rep. 14, 17275. https://doi.org/10.1038/s41598-024-67989-5 (2024).

Durall, R., Chatzimichailidis, A., Labus, P. & Keuper, J. Combating mode collapse in gan training: An empirical analysis using hessian eigenvalues. ArXiv[SPACE]arXiv:abs/2012.09673 (2020).

Ho, J., Jain, A. & Abbeel, P. Denoising diffusion probabilistic models. In Proceedings of the 34th International Conference on Neural Information Processing Systems, NIPS’20 (Curran Associates Inc., Red Hook, NY, USA, 2020).

Song, J., Meng, C. & Ermon, S. Denoising diffusion implicit models. ArXiv[SPACE]arXiv:abs/2010.02502 (2020).

He, M., Chen, D., Liao, J., Sander, P. V. & Yuan, L. Deep exemplar-based colorization. ACM Trans. Graph.[SPACE]https://doi.org/10.1145/3197517.3201365 (2018).

Heusel, M., Ramsauer, H., Unterthiner, T., Nessler, B. & Hochreiter, S. Gans trained by a two time-scale update rule converge to a local nash equilibrium. In Proceedings of the 31st International Conference on Neural Information Processing Systems, NIPS’17, 6629-6640, https://doi.org/10.5555/3295222.3295408 (Curran Associates Inc., Red Hook, NY, USA, 2017).

Johnson, J., Alahi, A. & Fei-Fei, L. Perceptual losses for real-time style transfer and super-resolution. In Leibe, B., Matas, J., Sebe, N. & Welling, M. (eds.) Computer Vision – ECCV 2016, 694–711, https://doi.org/10.1007/978-3-319-46475-6_43 (Springer International Publishing, Cham, 2016).

Pérez, P., Gangnet, M. & Blake, A. Poisson image editing. ACM Trans. Graph. 22, 313–318. https://doi.org/10.1145/882262.882269 (2003).

Qiang, Z., He, L., Zhang, Q. & Li, J. Face inpainting with deep generative models. Int. J. Comput. Intell. Syst. 12, 1232–1244. https://doi.org/10.2991/ijcis.d.191016.003 (2019).

Blanz, V. & Vetter, T. A morphable model for the synthesis of 3d faces. In Proceedings of the 26th Annual Conference on Computer Graphics and Interactive Techniques, SIGGRAPH ’99, 187-194, https://doi.org/10.1145/311535.311556 (ACM Press/Addison-Wesley Publishing Co., USA, 1999).

Nirkin, Y., Masi, I., Tran Tuan, A., Hassner, T. & Medioni, G. On face segmentation, face swapping, and face perception. In 2018 13th IEEE International Conference on Automatic Face and Gesture Recognition (FG 2018), 98–105, https://doi.org/10.1109/FG.2018.00024 (2018).

Upchurch, P. et al. Deep feature interpolation for image content changes. In 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 6090–6099, https://doi.org/10.1109/CVPR.2017.645 (2017).

Cao, C., Weng, Y., Zhou, S., Tong, Y. & Zhou, K. Facewarehouse: A 3d facial expression database for visual computing. IEEE Trans. Visual Comput. Graphics 20, 413–425. https://doi.org/10.1109/TVCG.2013.249 (2014).

Li, T., Bolkart, T., Black, M. J., Li, H. & Romero, J. Learning a model of facial shape and expression from 4d scans. ACM Trans. Graph.[SPACE]https://doi.org/10.1145/3130800.3130813 (2017).

Bahdanau, D., Cho, K. & Bengio, Y. Neural machine translation by jointly learning to align and translate. In Bengio, Y. & LeCun, Y. (eds.) 3rd International Conference on Learning Representations, ICLR 2015, San Diego, CA, USA, May 7-9, 2015, Conference Track Proceedings (2015).

Huang, Z. et al. Ccnet: Criss-cross attention for semantic segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 45, 6896–6908. https://doi.org/10.1109/TPAMI.2020.3007032 (2023).

Hu, J., Shen, L. & Sun, G. Squeeze-and-excitation networks. In 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, 7132–7141, https://doi.org/10.1109/CVPR.2018.00745 (2018).

He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition. In 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 770–778, https://doi.org/10.1109/CVPR.2016.90 (2016).

Karras, T., Laine, S. & Aila, T. A style-based generator architecture for generative adversarial networks. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 4401–4410 (2019).

Poirier-Ginter, Y. & Lalonde, J.-F. Robust unsupervised stylegan image restoration. In 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 22292–22301, https://doi.org/10.1109/CVPR52729.2023.02135 (2023).

Howard, A. G. et al. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. ArXiv (2017). arXiv:1704.04861.

Chollet, F. Xception: Deep learning with depthwise separable convolutions. In 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 1800–1807, https://doi.org/10.1109/CVPR.2017.195 (2017).

Acknowledgements

This research was supported by the project of the Natural Science Foundation of China (Grant No. 12163004); the National Key Research and Development Program of China(Grant No. 2022YFC3320802); the Yunnan Fundamental Research Projects(CN) (Grant Nos. 202301BD070001-008, 202401AT070151); the key scientific research project of Yunnan Police College (19A010); Yunnan International Joint Laboratory of Natural Rubber Intelligent Monitor and Digital Applications (202403AP140001); Xingdian Talent Support Program (Grant No. YNWR-QNBJ-2019-286).

Author information

Authors and Affiliations

Contributions

L.H., Q.C., Y.P., M.W. and Z.Q conceptualized the research, L.H., Q.C., Y.P., M.W. and Y.W developed the methods, M.W., Y.W. and L.L. conducted the investigation, L.H., Y.P. and L.L. visualized the results, Z.Q. supervised the research. L.H. wrote the original draft. All authors reviewed and edited the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

He, L., Chen, Q., Pang, Y. et al. An improved face attributes editing method based on DDIM. Sci Rep 14, 27154 (2024). https://doi.org/10.1038/s41598-024-78378-3

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-024-78378-3