Abstract

Motion provides a powerful sensory cue for segmenting a visual scene into objects and inferring the causal relationships between objects. Fundamental mechanisms involved in this process are the integration and segmentation of local motion signals. However, the computations that govern whether local motion signals are perceptually integrated or segmented remain unclear. Hierarchical Bayesian causal inference has recently been proposed as a model for these computations, yet a hallmark prediction of the model – its dependency on sensory uncertainty – has remained untested. We used a recently developed hierarchical stimulus configuration to measure how human subjects integrate or segment local motion signals while manipulating motion coherence to control sensory uncertainty. We found that (a) the perceptual transition from motion integration to segmentation shifts with sensory uncertainty, and (b) that perceptual variability is maximal around this transition point. Both findings were predicted by the model and challenge conventional interpretations of motion repulsion effects.

Similar content being viewed by others

Introduction

Visual motion is a rich source of information, not only for determining the movement of objects in the world but also for segmenting the visual scene and grouping together objects based on shared components of motion. This elementary set of operations, known as motion integration/segmentation, has been extensively studied since the time of Gestalt psychologists1. Integration and segmentation are often conflicting computations2 that depend on the similarity of noisy local motion signals. To enhance signal-to-noise ratio and form coherent global motion percepts, the visual system typically integrates local motion signals that have similar velocity vectors3,4. However, this conflicts with the visual system’s need to highlight the relative motions of disparate local velocity signals, in order to segment object boundaries in a scene5. Balancing the competing demands of integration vs. segmentation is a challenging problem that is not unique to motion perception2,6,7,8. Despite the longstanding recognition of these issues, the computations that govern the brain’s transition from integrating local motion signals to segmenting them remain unclear.

An important example demonstrating the conflicting demands of motion integration and segmentation involves motion transparency. In a typical motion transparency display, two spatially overlapping sets of random dots move with different velocities9,10,11,12,13. When the direction difference is moderate (e.g., 10–30 deg), observers tend to overestimate the difference in direction between the two dot patterns, a phenomenon known as motion repulsion9,10,13,14. This repulsion is not restricted to spatially overlapping stimuli, but also occurs for center-surround stimuli15,16. Previous psychophysical experiments have demonstrated how direction repulsion depends on stimulus factors such as contrast, speed, eccentricity, stimulus duration, surrounding motion direction, center-surround gap width, and motion noise4,5,13,15,17,18,19,20,21,22. In contrast, when the direction difference between two moving stimuli becomes very small, they may be perceptually integrated into a single moving percept, thus producing an attractive bias between stimuli23,24. Importantly, a few studies18,20,22,23,24 have observed a transition from motion integration (attractive bias) to motion segmentation (repulsive bias) as the difference in direction between two stimuli varies. However, none of these studies have quantified the effect of sensory uncertainty on the transition point from motion integration to segmentation, nor have they provided a computational account of this transition. Thus, the computational processes that govern the transition from integration to segmentation remain largely unknown.

Computationally, various models have been proposed to explain the direction repulsion effect associated with segmentation25,26,27. For instance, Dakin & Mareschal27 suggested a functional model of motion repulsion based on Johansson’s hypothesis that the perception of motion is relative to reference frames that are dynamically constructed by the brain28. However, none of these models offer a normative framework that predicts the transition from integration to segmentation.

More recently, Bayesian causal inference (CI) has been proposed as a computation by which the visual system infers the most likely hierarchical motion structures observed in the environment29,30,31, or samples from a posterior distribution over these structures32. Each of these models assumes that the brain tries to compute motion of an object (e.g., one’s hand) relative to the motion of other parts (e.g., the arm, the body), such that motion is represented hierarchically. Performing inference on the motion inputs in these models consists of two key steps: first, decide whether to integrate or segment different visual elements based on their motion; second, infer the causal relationships between the segmented elements. Yang et al.29 psychophysically tested the second step. Shivkumar et al.32 tested both the first and the second step, however, without varying the sensory uncertainty of the motion cues. This leaves a key signature of Bayesian computations untested: their dependence on stimulus uncertainty.

The goal of this study was to fill this important gap by deriving, and testing, the predictions of Bayesian causal inference as a function of stimulus uncertainty. We first show that Shivkumar et al.’s32 model predicts a shift in the perceptual transition point from integration to segmentation as sensory uncertainty increases, together with a corresponding shift in the peak of perceptual variability. We then report new psychophysical data collected by leveraging a recently proposed 3-patch stimulus configuration32 that facilitates measurement of the transition between motion integration and segmentation, while manipulating motion coherence to control sensory uncertainty. Our findings agree with the predictions of the Bayesian model, supporting the hypothesis that causal inference computations govern whether two motion stimuli are integrated or segmented by the visual system.

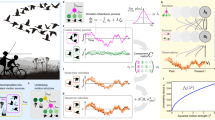

Stimulus, task, and previous behavioral results. (A) Stimulus schematic. The relative motion direction between the center and inner ring is represented by \(\:\varDelta\:\phi\:\). (B) Task design: observers view the stimulus for a period of 2s and then use a dial to adjust an arrow to match their perceived direction of the center (green) patch. (C) Reference frames. The dashed orange line corresponds to the relative motion of the (green) center computed with respect to the (blue) outer ring (left), and with respect to the (red) inner ring (right). (D) Behavioral results from Shivkumar et al.32 show a clear transition from integration to segmentation as \(\:\varDelta\:\phi\:\) increases.

Results

Our paper will proceed as follows. First, we describe the task and stimulus design that allow for a precise measurement of the transition from integration to segmentation. Second, we derive new Bayesian causal inference predictions for the data from this task. Finally, we present new empirical data from this task and compare them to model predictions.

Task and prior empirical results

Distinguishing between motion integration and motion segmentation using a stimulus that consists of only two moving elements suffers from a fundamental problem: for small differences in motion direction, when integration is most likely to occur, the integration predictions are almost indistinguishable from the case when the perceptual report is based on local retinal motion alone. We therefore performed our measurements using a recently developed stimulus consisting of three moving parts in which integration, segmentation, and absence of any interaction all predict substantially different percepts32.

The stimulus was composed of a central patch of green moving dots (the target) encircled by two concentric rings of red and blue moving dots, as depicted in Fig. 1A. The dots within the center patch and each of the surrounding rings moved coherently but motion velocity varied across the three components (see Methods for details of stimulus design). The relative motion direction between the center and the inner ring (\(\:\varDelta\:\phi\:\) in Fig. 1A) was varied across trials. Participants were instructed to maintain fixation on a dot at the center of the display and then report their perceived direction of the center patch by using a dial to match an arrow cue with their percept (Fig. 1B). Importantly, the horizontal component of all three velocities was matched, and the center velocity was intermediate to that of the surrounding rings. As a result, the relative motion of the center with respect to the inner ring was always in the direction of + 90° (upward, Fig. 1C), and the relative motion of both center and inner ring with respect to the outer ring was − 90° (downward, Fig. 1C). The experimental findings of Shivkumar et al.32 (Fig. 1D) show a clear negative bias (towards − 90°) for small \(\:\varDelta\:\phi\:\) as expected by an integration of center and inner ring, and referencing the motion of both to the outer ring. For large \(\:\varDelta\:\phi\:,\) there is a clear positive bias (towards 90°) as predicted by a segmentation of the center from the inner ring, and referencing the motion of the center to the inner ring.

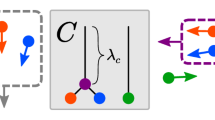

Causal structures giving rise to the three primary potential percepts (A) Upper part: velocities of moving stimulus elements. Lower part: implied relative velocities between different stimulus elements. Arrow colors are matched to the colors of the variables in the generative models in panels B-D. (B-D) Example generative models that imply no (B), positive (C), and negative (D) perceptual biases. \(\:\varvec{v}\) denotes inferred (latent) velocities while o represents velocities actually observed by the retina. Variables in black are inferred to be zero (stationary), while other colors represent non-zero velocities. More details about the model can be found in Shivkumar et al.32.

This stimulus design, which yields a clear perceptual transition from integration to segmentation, is ideally suited to test one of the hallmarks of Bayesian computations—the dependence of this transition on stimulus uncertainty. We extended this stimulus design by manipulating motion coherence to control sensory uncertainty. Specifically, we employed a Brownian motion algorithm33 to generate random-dot motion stimuli. This method enabled us to manipulate motion uncertainty exclusively based on the distribution of dot directions while keeping dot speeds constant (for details, see Methods). Participants indicated their perceived motion direction of the center stimulus under three motion coherence levels: 100%, 50%, and 33%. The motion coherence of both the center patch and the surrounding rings of dots was manipulated simultaneously.

Model predictions for uncertainty dependence

While it is intuitive that increasing stimulus uncertainty should expand the range of motion directions over which the center stimulus is integrated with the surround, thereby shifting the transition point from integration to segmentation to larger \(\:\varDelta\:\phi\:\), exactly how that will manifest itself in perception is unclear in the absence of a quantitative model. We used the causal inference model detailed in Shivkumar et al.32 to make quantitative predictions for our stimulus involving three moving groups of dots (Fig. 1A). Since making exact predictions using all possible motion structures is computationally very expensive for our stimulus, we used the 44 principal structures identified by Shivkumar et al.32 to make predictions for different levels of uncertainty. The reference frame velocity inferred in these principal structures coincided with the velocity of either the center patch, inner ring, or outer ring (see three examples in Fig. 2). We next grouped these structures into five groups based on the behavioral responses they imply, and any observed responses correspond to linear combinations between them. Finally, we generate model predictions by sampling from a wide range of plausible parameters (see Methods for details).

Effects of sensory uncertainty on model predictions. (A) Three percepts implied by the principle causal structures potentially explaining the stimulus. (B) Total posterior probability over all causal structures that are part of Group 1 (predicting a percept of 90°). The probability increases with \(\:\varDelta\:\phi\:\), before peaking and slightly decreasing at larger values. The curve shifts to the right with an increase in uncertainty. (C) Total posterior probability over all causal structures that are part of Group 3 (predicting a percept of -90°). The probability decreases with increasing \(\:\varDelta\:\phi\:\) and the curve also shifts to the right with an increase in uncertainty. (D) Change in transition point from integration to segmentation as a function of uncertainty predicted by the model with the same parameters used in (B,C) (thick black line). Thin lines show predictions made with parameters widely sampled around those used in (B,C). While the transition point decreases with decrease in uncertainty (greater motion coherence) for the majority of parameter samples (65%), some parameters also result in a non-monotonic trend (characterized in legend). (E) Same as (D) but plotting the change in point of maximum variability as a function of uncertainty.

Figure 3A shows the perceived motion directions predicted by each of the three main groups of causal structures. Group 1 (orange) consists of structures that all predict a perceptual bias of + 90°. In these structures the inner ring forms the reference frame in which the center velocity is perceived (Fig. 2C). Using plausible parameters32 (Table 1, supplementary materials), we computed the probability of inferring a structure belonging to this group (Fig. 3B). The probability increases with \(\:\varDelta\:\phi\:\) as expected for groups in which the center is perceived moving relative to the inner ring. The probability then peaks and then decreases slightly for larger \(\:\varDelta\:\phi\:\) because, at larger disparities, the slow speed component of the mixture prior over velocity favors the structures belonging to Group 2 (Figure S1D). Group 2 (green in Fig. 3A, predicted percept of 0°) includes structures in which each group is perceived independently, or the center forms the reference frame in which other elements are perceived (Fig. 2B and S1D). Structures in Group 3 include those in which the outer group forms the reference frame; they predict a perceptual bias of -90° (Fig. 2A, D). Figure 3C shows that the probability of structures in Group 3 (red in Fig. 3A) decreases as \(\:\varDelta\:\phi\:\) increases. The outer ring forms the reference frame primarily when center and inner ring are perceived to move together, since otherwise the center is more likely to be perceived relative to the inner ring. This is most likely when the difference between the center and inner ring velocities is small. In the supplementary material (Figure S1) and in Shivkumar et al. (2023)32, we provide the total posterior probabilities of additional principal causal structures potentially explaining the stimulus. Importantly, consistently across all groups of structures, posterior probability as a function of difference between center and inner ring shifts rightward with increase in uncertainty.

The predictions in Fig. 3B-C and Figure S1 are computed using the previously described distributions over parameters32. To capture the actual pattern seen in the observer responses, we would need to fit the model to data, which is outside the scope of this paper. However, to gain an understanding of the percepts predicted by the model, we generated data for 1000 synthetic observers by sampling from a wide range of plausible parameter values (see Methods). Figure 3D and E show the results from 200 such “synthetic observers” (randomly chosen from the 1000 simulated values). These synthetic observers were constrained to have a transition point less than 45 degrees for the highest uncertainty, to produce responses that are broadly similar to those of human observers. Since the average predicted perceptual reports can be approximated as a linear combination of the predicted reports for each structure, weighted by the posterior mass of each structure, we can predict that the transition from integration to segmentation in the data will shift to the right with increasing sensory uncertainty. We quantify this shift by defining a transition point parameter that corresponds to the \(\:\varDelta\:\phi\:\) value at which average reports change from negative (integration) to positive (segmentation) values (Fig. 1D). Across 200 randomly sampled synthetic observers, we find that most, but not all (189 out of 200), synthetic observers behave as expected from intuition (Fig. 3D).

Furthermore, in a simpler experiment involving only center and inner ring, but no outer ring, Shivkumar et al.32 found that the variability of measured behavioral responses generally peaked around the transition point from integration to segmentation. The reason for this is the fact that perceptual variability is primarily driven by uncertainty about the correct causal structure, and at the transition from integration to segmentation the uncertainty about the corresponding two causal structures is largest. Making a similarly simple qualitative prediction in our three-part stimulus with five potential percepts (Figure S1) is not possible, but our model simulations using plausible parameters also predict an inverted U-shape for the dependence of response variability on relative direction, with the ___location of peak variability shifting with motion coherence like the transition point for 139 out of 200 synthetic observers, Fig. 3E).

Experimental results

Having established a range of quantitative predictions from the Bayesian causal inference model, we now examine how sensory uncertainty modulates human perception. The behavioral reports of two representative observers are depicted in Fig. 4A and B, respectively. Several key points emerge from these plots. First, the data clearly replicate the main empirical findings of Shivkumar et al.32: a transition from integration-dominated responses for small \(\:\varDelta\:\phi\:\) to segmentation-dominated responses at large \(\:\varDelta\:\phi\:\), as well as an increase in response variability at intermediate values of \(\:\varDelta\:\phi\:\). Critically, when comparing responses for 100% motion coherence (low uncertainty) with those for 50% and 33% coherence (high uncertainty), we find that the transition from integration to segmentation shifts towards larger relative directions, as predicted by the model (Fig. 3D). This effect can be seen for both example observers in Fig. 4 by examining the relative direction at which the psychometric fit crosses the horizontal dashed lines. Second, the distributions of responses are widest around the transition point from motion integration to segmentation, and this point of maximum variability shifts with coherence. This finding aligns with the second prediction of the model (Fig. 3E). Third, the overall variability in responses increases with motion uncertainty, as indicated by the generally broader distributions of data at lower motion coherence levels. Finally, note that the responses of the observer in Fig. 4A exhibit a bimodal distribution near the transition point, whereas the responses of the observer in Fig. 4B show a more unimodal distribution. This observation suggests that observers may employ different decision strategies during the experiment32.

Behavioral responses of two representative observers. The x-axis represents the relative direction between the center and inner ring, while the y-axis indicates the reported center direction minus the retinal center direction. Each dot represents the report from a single trial. The response distribution obtained at each relative direction is visualized using a violin plot, and the fitted psychometric curve is displayed in each panel. Each column (and color) corresponds to a motion coherence level (100%, 50%, 33%) (A) Example of an observer showing bimodality in the distribution of responses near the transition point from integration to segmentation. (B) Representative observer showing unimodal distributions of responses across coherence levels, but increased variance of reports for intermediate relative directions.

Figure 5A summarizes the shifts of the psychometric function with coherence across our sample of eleven participants. Thick lines represent the mean responses across observers for each of the three levels of motion coherence. The plot reveals a rightward shift in the transition point from integration to segmentation with increasing motion uncertainty, as predicted by our model. We fit a sigmoidal function to the responses from each observer (Methods) in order to compute an observer-specific transition point. As predicted, these transition points decrease with increasing coherence (Fig. 5B), an effect that is highly significant at the population level (33% vs. 100%, \(\:t\left(10\right)=8.9,\:p=2.4\times\:{10}^{-6}\), one-tailed t-test; 33% vs. 50%, \(\:t\left(10\right)=4.5,\:p=5.8\times\:{10}^{-4},\:\)one-tailed; 50% vs. 100%, \(\:t\left(10\right)=5.6,\:p=9.1\times\:{10}^{-5},\:\)one-tailed). Furthermore, it is individually significant (p < 0.05) for all observers for the 33% vs. 100% condition and 10 out of 11 observers for the 50% vs. 100% and 33% vs. 50% conditions, respectively.

Transition points shift with motion uncertainty. (A) Large data points and thick curves represent the average response of observers (N = 11) as a function of relative direction between center patch and inner ring, for each of the three motion coherence levels. The individual participants’ performances are displayed in faded colors in the background. Error bars represent SEM. (B) The black symbols and line depict the average transition point across observers as a function of motion coherence, with error bars representing SEM. The lighter colored lines show the median transition point derived from bootstrapped samples (n = 1000) of individual participants, accompanied by 68% confidence intervals. Statistical significance was evaluated using a one-tailed paired t-test.

We next test the second prediction of our model, the dependence of response variability on the relative direction between center and inner ring, Δφ. Since reported direction values reflect a circular variable, we quantify their variability using the concentration parameter of a Von Mises distribution, \(\:\kappa\:\) (fit jointly with their mean, see Methods). Under the assumption of equal motor variability across directions (the orientation of our entire stimulus display is randomized between zero and 360°), 1/\(\:\kappa\:\) is directly related to perceptual variability as represented in our model.

The model predicts an inverted U-shape for the dependence of perceptual variability on motion coherence. Figure 6A shows this relationship for the empirical data, averaged across the population of participants. The curves exhibit the expected inverted U-shape for each coherence value, indicating that variability peaks at intermediate relative directions. Additionally, the peak in perceptual variability shifts rightwards toward larger relative directions with increases in motion uncertainty, as demonstrated by a statistically significant difference in the peak locations between the results at 100% vs. 33% coherence (\(\:t\left(10\right)=2.6,\:p=0.013,\:\)one-tailed t-test) and 50% vs. 33% coherence (\(\:t\left(10\right)=2.2,\:p=0.024,\)one-tailed).

Given that both the transition point from integration to segmentation and the peak response variability change with motion coherence, we examined whether these two measures are correlated, as expected from the model. We performed this analysis in three different ways: across all data, across all observers after accounting for coherence level, and across coherence levels after accounting for inter-subject differences. Each data point in Fig. 6B represents the median values of transition point and peak variability for one subject, as obtained from bootstrapping observer reports. We performed a robust regression analysis to examine the correlation between locations of transition point and peak variability, pooling across observers and coherence levels, while accounting for potential outliers in the data. This yielded a statistically significant correlation between the ___location of maximum variability and the transition point (\(\:F\left(\text{1,31}\right)=42,\:p=2.9\times\:{10}^{-7},\:r=0.76\)). To examine whether this relationship held while accounting for variability across observers, we performed an analysis of covariance (ANCOVA) using subject ID as an ordinal covariate. We again found a significant correlation between transition point and ___location of peak variability (ANCOVA, \(\:F\left(\text{1,11}\right)=9.0,\:p=0.012\)), indicating that the effect holds while accounting for variation across observers. While most individual observers show a positive correlation between these variables, a couple of observers were clear outliers (Fig. 6C). To examine whether this relationship was also found within coherence levels, we again performed ANCOVA but with coherence as an ordinal covariate instead of subject ID. In this case, we did not find a significant correlation between transition point and ___location of peak variability (ANCOVA, \(\:F\left(\text{1,27}\right)=2.4,\:p=0.13\)), indicating that the overall correlation is driven mainly by covariation across coherence levels, as predicted by the model, not by differences between observers.

The substantial heterogeneity in the data (Fig. 6C) initially surprised us given the model predictions of Fig. 3D, E. We therefore analyzed the relationship between transition point and ___location of peak response variability in data predicted by the model for our synthetic observers. Interestingly, we also found substantial variability in the model predictions that qualitatively matched the heterogeneity among human observers (Fig. 6D). While most synthetic observers showed a simple positive correlation between transition point and ___location of peak variability, a substantial minority of synthetic observers (~ 31%) showed more complex relationships (Fig. 6D). Furthermore, when the transition point in the synthetic data deviated from the peak of variability, the empirical data agreed with the model predictions in that the transition point occurred at smaller Δφ than the peak in variability for most observers (most points lie below the diagonal in Fig. 6C + D).

Although a quantitative model fit was not feasible due to the combinatorial complexity of potential causal structures (see Shivkumar et al., 202332), we can still offer some insights into the observed heterogeneity in the empirical data. Inter-subject differences in sensory noise likely explain variations in the transition from integration to segmentation, while individual biases in causal inference—specifically, the probability of perceiving the center dots as being part of the same causal structure as surrounding dots—likely account for variability in bias magnitude across relative directions (Δφ). For instance, the bias at the smallest relative directions will be large if the prior to infer center (and inner ring) as part of the same causal structure as the outer ring is large, while the bias at the largest relative directions will be greatest when the probability to infer the center as part of the same causal structure as the inner ring is largest. Together, our findings provide strong support for the hierarchical Bayesian CI model while underscoring the role of individual variations in driving perceptual differences.

Perceptual variability is greatest at intermediate relative directions and shifts with coherence. (A) The relationship between perceptual variability and relative direction exhibits an inverted U-shape as predicted by the model. Shading represents SEM. (B) Scatter plot showing the relationship between the transition point (from integration to segmentation) and the ___location of maximum perceptual variability. Each dot represents the median value obtained from bootstrapped samples for an individual observer at the three coherence levels (denoted by different colors). Error bars correspond to 68% confidence intervals. (C) Scatter plot showing the same measurements as in panel B, but connecting the data of each observer across coherence levels. Each color represents an individual observer, and the symbol shapes represent different coherence values. (D) Relationship between the transition point and the ___location of maximum perceptual variability, as predicted by the model. Each synthetic observer is colored based on how the ___location of maximum perceptual variability changes with increase in uncertainty: (i) increasing (black; ~68% of observers), (ii) convex (pink, ~ 11% of observers), (iii) concave (orange, ~ 20% of observers), (iv) non-increasing (purple, ~ 0.2% of observers). The thick lines show the average across predictions for each type.

Discussion

Our results provide critical new evidence that Bayesian Causal Inference describes the brain’s computations underlying motion integration and segmentation. Making use of a recently-developed stimulus32 that allows us to clearly differentiate between integration and segmentation processes, we found strong evidence for key predictions of a recently proposed hierarchical Bayesian causal inference model32: (1) the transition point from motion integration to segmentation shifts as a function of sensory uncertainty, and (2) the point of maximum perceptual variability shifts correspondingly with uncertainty. Interestingly, the data revealed complexities in the relationship between transition point and peak variability across observers; while counter to our original intuitions, these complexities could be explained by specific parameter regimes of the model, further supporting our quantitative framework. Traditional explanations of motion integration and segmentation suggest that these phenomena result from competitive interactions between the neural representations of local motion signals in the visual system2. The present work reframes these traditional interpretations and provides a normative framework for predicting how the brain switches from integrating to segmenting nearby motion stimuli depending on its inferences about the causal structures underlying the sensory inputs.

Our study offers an alternative interpretation for motion repulsion effects. Conventionally, motion repulsion has been interpreted as a perceptual ‘error’ arising from inhibitory interactions between direction-tuned neurons with overlapping25,26,27 or non-overlapping receptive fields15,16. Another interpretation proposes that motion repulsion arises from the computation of relative motion with respect to a heuristically inferred reference frame27. Our result goes beyond these explanations by providing evidence for the hypothesis that motion attraction (integration) and repulsion (segmentation) effects result from inferences that observers make about how moving elements in a scene are causally linked. Thus, the observed attraction or repulsion effects are not perceptual errors but reflect the effects of an underlying computation that first infers the hierarchical reference frame structure in a scene and then computes the motion of elements relative to those frames. In our model, if motion elements in the display are not inferred by the subject to have any causal linkages, we should only observe veridical reports in a retinal reference frame (no repulsion or attraction effects). However, if the visual system computes the motion of objects relative to the reference frames (hierarchical structure) that it dynamically infers, then we should expect to observe perceptual biases (attraction or repulsion) in the data.

There are several advantages of our model over existing mechanistic explanations16,25,26 of motion repulsion. Our model is not limited to modeling the interactions between only two moving stimuli—it can predict motion integration-segmentation effects using more complex motion stimuli. Importantly, and contrary to previous models, ours provides a normative framework for making quantitative predictions about how the brain functions under conditions of complex motion perception. This theoretical framework can inform the design of richer motion perception tasks and provide a framework for interpreting complex patterns of neurophysiological activity32. Our framework is not incompatible with mechanistic models aimed at explaining the neural mechanisms underlying motion perception tasks; it is a computational model (in Marr’s terms) and can serve to guide construction of models at the algorithmic (or neural) implementation level. In particular, it points to a key missing ingredient in existing mechanistic models like that by Dakin & Mareschal27: inferring the causal structure of the scene and making the computations of relative motion depend on that inference.

The present study addresses two key aspects regarding the transition from integration to segmentation in motion perception tasks that are largely missing in the literature: (1) how the transition point changes under conditions of sensory uncertainty, and (2) the absence of a model to explain this transition. For instance, Nawrot & Sekuler18 demonstrated spatial interactions between stripes of dots moving in one direction (biasing stripes) and stripes of dots moving in the opposite direction at different coherence levels (ambiguous stripes). They found that perception changes from integration (also known as assimilation) to segmentation (also known as contrast) as a function of stripe width. Similarly, Murakami & Shimojo20 used a center-surround configuration of same/opposite moving dots to show a shift in perceptual bias from integration to segmentation with increasing size of the center stimulus. Additionally, Braddick et al.23, using a transparent motion display composed of two sets of random-dot patches moving in different directions, observed a perceptual transition from integration at small angular differences between the moving components to segmentation at larger differences. Despite these observations, none of the aforementioned studies investigated how the perceptual transition depends on uncertainty or could predict when the transition should occur. Bridging this gap in the literature is important because understanding the dynamics of the transition from motion integration to segmentation is crucial for elucidating the underlying neural mechanisms mediating these processes and for informing the design and constraints of future models at the neural circuit level.

Gaudio and Huang22 reported that motion noise alters the directional interaction between transparently moving stimuli, shifting from repulsion to attraction. Some aspects of their findings are quite similar to ours. Specifically, they observed that small angular differences yielded attractive biases (integration) for low motion coherence and repulsive biases (segmentation) at high coherence. However, whereas we observed a transition from integration to segmentation with increasing angular difference even at the lowest motion coherence, Gaudio and Huang22 consistently found attractive biases at low coherence for a broad range of angular differences. Our work substantially extends that of Gaudio and Huang22 by providing quantitative model predictions for how perceptual reports should depend on both angular difference (\(\:\varDelta\:\phi\:\)) and sensory uncertainty, as well as examining the variance of perceptual reports.

A novel finding and prediction of our study concerns the correlation between the transition point and the ___location of peak response variability. In the literature, measurements of response variability in motion integration vs. segmentation tasks have generally not been reported. The only exception, to our knowledge, is the study of Braddick et al.23, who reported participants’ response variability as a function of angular difference (see their Fig. 3a). Their data may show a maximum in response variability for intermediate angular differences, but they did not provide any quantitative analysis to establish such a maximum. Our findings extend previous work by showing that the well-defined peak in response variability shifts with sensory uncertainty, roughly following the shift of the transition point from integration to segmentation. In our modeling framework, this peak in variability reflects the observer’s uncertainty about whether to integrate or segment the motion signals, a clear signature of causal inference.

An important question is how our model’s predictions relate to neural representations. Although concrete neural predictions require specific assumptions about the relationship between the model’s posterior distributions and neural responses, one would expect neural responses to reflect perceptual biases if neural populations represent posterior distributions over perceived velocities or latents in our generative model. For example, in the context of optic flow-parsing34, a mechanism proposed for computing object motion during self-motion, perceptual biases arise as a result of the flow-parsing computations. A potential neural basis of this process has recently been described in macaque area MT at both the individual unit and population levels35. Moreover, we predict that under stimulus conditions for which perceptual variability is high, neural variability will also be higher-than-average36.

To summarize, our work sheds light on the longstanding problem of how the brain decides to integrate or segment motion signals. Our results support the hypothesis that Bayesian causal inference governs the computations by which the brain solves this problem. This study contributes to a growing body of work suggesting that Bayesian Causal Inference may be a “universal computation” in the brain37.

Methods

Participants

A total of fourteen participants took part in this study, consisting of nine males and six females. Eleven observers (5 males, 6 females) were naive to the purpose of the study. Three participants were excluded from further analysis due to not meeting the study’s exclusion criteria, as described below. All participants had normal or corrected-to-normal vision. Prior to the experiment, each participant provided written consent, and compensation was provided for their participation. We confirm that all experimental procedures and methods adhered to the guidelines and regulations approved by the Office of Human Subject Protection (OHSP) at the University of Rochester. The OHSP grounds its ethical principles on the Declaration of Helsinki and its subsequent revisions, especially the Belmont Report.

Apparatus

Observers viewed visual stimuli presented on an AORUS FO48U monitor. The stimulus was displayed at a resolution of 2048 × 1152 with a refresh rate of 60 Hz. At the viewing distance of 1.04 m, the display subtended 54 by 32 deg of visual angle. Subjects’ heads were stabilized using a head/chin rest and eye movements were tracked using the Eyelink 1000 system. Stimuli were generated using MATLAB (MathWorks) and the Psychophysics toolbox38. The background luminance was set to 0.3 cd/m2 as measured with a Minolta (LS-110) luminance meter, and the room was darkened during experiments.

Experimental design

Stimuli

The visual stimulus consisted of a central patch of green moving dots surrounded by two concentric rings of red and blue moving dots (Fig. 1A). Since the stimuli were viewed eccentrically, different colors were used to help observers attend to the central target patch and report its direction. Each dot had a diameter of 0.1 degrees. A white fixation target with a diameter of 0.5 degrees was placed in the center of the screen. The stimulus was presented at an eccentricity of 5 degrees, measured from the center of the display to the center of the central patch. The number of dots in the center patch, inner ring, and outer ring were 10, 50, and 250, respectively. The center patch had a radius of 1 degree, while the inner and outer rings had an annular width of 1 degree with a 0.5-degree gap between them. Hence, the entire 3-ring stimulus had a radius of 4 deg. The relative direction of motion between the central target and the inner ring was chosen from the set [-3.75, -7.5, -15, -30, -45, -60], where negative angles indicate clockwise rotation of the center patch relative to the inner ring. The outer ring always moved in a direction that was 60 degrees counterclockwise relative to the center patch. The center patch moved with a speed of 0.5 deg/s, which was selected to enhance the segmentation effect39. The center patch direction was randomly chosen from a uniform distribution that spanned 360 deg, to discourage any systematic reporting biases. The horizontal component of the outer ring, inner ring, and center patch velocities was set to be equal resulting in a -90 deg (downward) relative direction between the center and inner ring with respect to the outer ring, and a + 90 deg (upward) relative direction between the center patch and the inner ring.

Motion uncertainty

In this study, we varied motion coherence to manipulate sensory uncertainty and assess its effect on motion integration and segmentation. Specifically, we utilized a Brownian motion algorithm as the basis for generating the random-dot motion stimuli33. Random dot stimuli are commonly employed for investigating motion perception due to their flexibility in manipulating the strength of motion energy in specific directions and speeds. Additionally, random dot motion stimuli are often used to study the dorsal visual pathway, or “where” stream, as they lack coherent form cues. Unlike other random dot motion algorithms (e.g., white noise40), in which noise dots are assigned random directions and speeds in each frame, Brownian motion moves all dots at the same speed33. Therefore, in Brownian motion, only the direction of the noise dots changes randomly from frame to frame. This allows us to manipulate motion uncertainty based solely on direction, without altering the speed of the dots. In each trial of the experiment, we simultaneously manipulated the motion coherence of the center patch and the surrounding rings using three levels of coherence: 100%, 50%, and 33%. We initially explored the use of stimulus contrast as an uncertainty variable, but the observed effects were weak. Given that reducing contrast introduces other forms of uncertainty (e.g., stimulus presence, ___location), we focused on the coherence manipulation as a means to selectively manipulate uncertainty about direction of motion.

Task procedure

The task schematic for this study is depicted in Fig. 1B. At the onset of each trial, a fixation dot appeared for 0.5 s at the center of the display. Participants (N = 11) were instructed to maintain their gaze on the fixation target and direct their attention towards the green center patch (target stimulus, \(\:{\stackrel{-}{v}}^{center}\)). After a two-second presentation of the random-dot stimulus, participants performed a motion estimation task by indicating their perceived direction of motion for \(\:{\stackrel{-}{v}}^{target}\). Participants were given a maximum of 3.5 s to make their report before the next trial began. They used a rotating dial to adjust the direction of an arrow cue (Fig. 1B) to match their perceived direction of the central green patch; they subsequently pressed a key on a keypad to finalize their response.

Within each experimental session, all combinations of relative motion directions and coherence levels were randomly interleaved across trials. Additionally, we included a control condition in which the surrounding rings’ dots moved randomly (0% coherence), and only the center patch moved at one of the selected motion coherence levels (33%, 50%, or 100%). Each observer took part in five experimental sessions, totaling 1260 trials per participant. Each session took approximately 1 h to complete. All trials were recorded, and any trials for which eye position fell outside of a 2.5-degree radius from the fixation point were discarded (4.88–10.26% of trials across all observers). Furthermore, participants whose performance at the lowest motion uncertainty level (100% coherence) exhibited a circular variance greater than 0.5 across the experimental conditions were not included in subsequent analyses (3 out of 14; see Figure S4).

Model description

The causal inference model detailed in Shivkumar et al.32 was applied to the stimulus in Fig. 1A, which had 3 groups of moving dots. The causal inference model consisted of a repeated motif that groups the velocities of different elements moving on the screen. The groups formed the reference frame in which the velocity of each element is inferred. The motif was a generative model in which the retinal velocity of each element was the sum of the group velocity that the element belongs to and the relative velocity to the group velocity. The prior over relative velocity was a mixture of a delta function centered at zero and a zero mean Gaussian distribution. Such a prior naturally transitions between inferring every element as either being stationary relative to the group, i.e. forming the group, or moving in the group’s reference frame. The motif was applied to all possible groupings of the three elements forming different possible structures. The full causal inference model inferred the posterior probability over structures, which was then used to weight the probability over the velocities inferred under each structure. Figure 2 showcases three example generative models from three types of causal structures.

While having 3 different groups of dots results in 512 possible structures32, for tractability and ease of interpretation we restricted the structures examined to those in which one of the relative velocities was zero32. These principal structures predict 3 possible percepts that are shown in Fig. 3A.

This simplified model has 13 parameters (see32 for more details) which are: (a) the three sensory uncertainties associated with each velocity (3 parameters), (b) the computational noise at each level of the hierarchy in the model (1 parameter), (c) the mixture prior parameters (2 parameters), (d) the probability of grouping center & inner ring, inner & outer ring, and center & outer ring (3 parameters), (e) the probability of grouping the groups in (d) with the remaining element (3 parameters), and (f) the probability of grouping center, inner and outer ring together into a single group (1 parameter).

To generate synthetic observers, we started with plausible parameters by choosing the inner and outer ring uncertainties and the computational noise to be much smaller, and the prior to be much larger, than the center ring uncertainty (chosen as be 5 deg/s standard deviation) in accordance with model assumptions. We also used prior knowledge of the stimulus arrangement to choose a higher probability of the center being grouped with the inner ring (compared to the other combinations) and then with the outer ring. However, to explore the space of model predictions, we chose plausible parameters by drawing them from the appropriate distributions around the representative parameters (detailed in supplementary Table 1, supplementary materials). We ensured that the relationship between the uncertainties (inner being less than center and more than outer ring) was maintained and the prior probabilities for all structures added to 1. We also increased each sensory uncertainty variance by a factor of 2 for simulating medium uncertainty and by a factor of 4 for simulating high uncertainty.

To only consider parameters that could be plausible for the participants, we removed any parameter that resulted in a transition point (defined as the point where the mean response shift crosses zero) greater than 45 degrees, consistent with what we observe in the data. Further, for ease of interpretability, in Fig. 6D, we also excluded parameters which resulted in non-decreasing transition points with decrease in reliability, leaving 529 out of the 1000 observers, which were used to study the relation between the transition points and the point of maximum variability.

Finally, as noted in Shivkumar et al.32, inferring the model parameters from our empirical data is challenging due to the large number of possible causal structures (264 for our three-part stimulus). While many of these structures will have low posterior probabilities, we expect strong dependencies in the resulting posterior over model parameters given our essentially one-dimensional data (only varying the relative direction between center and inner ring). Uniquely identifying the parameters for individual subjects would likely require a more diverse range of stimuli and large numbers of trials.

Statistical analysis

For each observer, the circular mean and variability of their responses was plotted as a function of the relative direction between center patch in inner ring (\(\:\varDelta\:\phi\:\)), and the data were fitted by maximizing the likelihood of individual responses, R, using the following noise model:

where \(\:\lambda\:\) is the lapse rate and \(\:{\kappa\:}_{\varDelta\:\phi\:}\) is the inverse variability (circular). \(\:{\kappa\:}_{\varDelta\:\phi\:}\) represents a combination of perceptual and motor variability. Fitting it separately for each \(\:\varDelta\:\phi\:\) accounts for the fact that perceptual variability is predicted to vary as a function of \(\:\varDelta\:\phi\:\) by the model and is empirically known to do so.

For the mean responses, we assumed a sigmoidal dependency on \(\:\varDelta\:\phi\:\) as given by:

where \(\:a\) represents the lower asymptote, \(\:(a+b)\) the upper asymptote, \(\:\mu\:\) the midpoint of the sigmoid, and \(\:\tau\:\) the slope parameter. We bootstrapped trials (n=1000) to obtain confidence intervals and assess the statistical significance of parameter estimates. The model’s fit to data from an example observer is illustrated in Figure S2. In addition, for the summary statistics across observers, we conducted one-tailed paired t-tests to assess statistical significance. We chose a one-tailed test because our model predicts a rightward shift with increases in motion uncertainty.

Perceptual variability calculation.

The model fits captured the variability in the responses for each relative direction, Δφ, using \(\:{\kappa\:}_{\varDelta\:\phi\:}\) in Eq. (1). In order to combine the variabilities \(\:1/{\kappa\:}_{\varDelta\:\phi\:}\) across observers in Fig. 5A, we first scaled the maximum of \(\:1/{\kappa\:}_{\varDelta\:\phi\:}\) to be 1 for each observer. For each observer, we then determined the point at which \(\:1/{\kappa\:}_{\varDelta\:\phi\:}\) took its maximum by fitting to it a quadratic function. Finally, we aligned \(\:1/{\kappa\:}_{\varDelta\:\phi\:}\) for each observer to the same average peak ___location and averaged across all observers.

Data availability

The datasets and code for this work is located at https://figshare.com/s/4b8e56cb9e660266c8f2.

References

Wertheimer, M. Experimentelle Studien über das Sehen Von Bewegung. Z. Psychol. 61, 161–265 (1912).

Braddick, O. Segmentation versus integration in visual motion processing. Trends Neurosci. 16, 263–268. https://doi.org/10.1016/0166-2236(93)90179-p (1993).

Britten, K. H., Shadlen, M. N., Newsome, W. T. & Movshon, J. A. Responses of neurons in macaque MT to stochastic motion signals. Vis. Neurosci. 10, 1157–1169. https://doi.org/10.1017/s0952523800010269 (1993).

Tadin, D., Lappin, J. S., Gilroy, L. A. & Blake, R. Perceptual consequences of centre–surround antagonism in visual motion processing. Nature. 424, 312–315. https://doi.org/10.1038/nature01812 (2003).

Tadin, D. et al. Spatial suppression promotes rapid figure-ground segmentation of moving objects. Nat. Commun. 10, 2732–2732. https://doi.org/10.1038/s41467-019-10653-8 (2019).

Sims, C. R. Efficient coding explains the universal law of generalization in human perception. Science. 360, 652–656. https://doi.org/10.1126/science.aaq1118 (2018).

Chu, M. W., Li, W. L. & Komiyama, T. Balancing the robustness and efficiency of odor representations during learning. Neuron. 92, 174–186. https://doi.org/10.1016/j.neuron.2016.09.004 (2016).

Grossberg, S. Adaptive resonance theory: How a brain learns to consciously attend, learn, and recognize a changing world. Neural Netw. 37, 1–47. https://doi.org/10.1016/j.neunet.2012.09.017 (2013).

Mather, G. & Moulden, B. A simultaneous shift in apparent direction: Further evidence for a distribution-shift model of direction coding. Q. J. Exp. Psychol. 32, 325–333. https://doi.org/10.1080/14640748008401168 (1980).

Marshak, W. & Sekuler, R. Mutual repulsion between moving visual targets. Science. 205, 1399–1401. https://doi.org/10.1126/science.472756 (1979).

Burr, D. & Thompson, P. Motion 1985–2010. Vis. Res. 51, 1431–1456. https://doi.org/10.1016/j.visres.2011.02.008 (2011).

Qian, N., Andersen, R. A. & Adelson, E. H. Transparent motion perception as detection of unbalanced motion signals. I. Psychophysics. J. Neurosci. 14, 7357–7366. https://doi.org/10.1523/jneurosci.14-12-07357.1994 (1994).

Kim, J. & Wilson, H. R. Direction repulsion between components in motion transparency. Vis. Res. 36, 1177–1187. https://doi.org/10.1016/0042-6989(95)00153-0 (1996).

Hiris, E. & Blake, R. Direction repulsion in motion transparency. Vis. Neurosci. 13, 187–197. https://doi.org/10.1017/s0952523800007227 (1996).

Kim, J. & Wilson, H. R. Motion integration over space: Interaction of the center and surround motion. Vision. Res. 37, 991–1005. https://doi.org/10.1016/S0042-6989(96)00254-4 (1997).

Wang, H., Wang, Z., Zhou, Y. & Tzvetanov, T. Near- and far-surround suppression in human motion discrimination. Front. NeuroSci. 12, 206–206. https://doi.org/10.3389/fnins.2018.00206 (2018).

Rauber, H. J. & Treue, S. Revisiting motion repulsion: Evidence for a general phenomenon? Vision. Res. 39, 3187–3196. https://doi.org/10.1016/S0042-6989(99)00025-5 (1999).

Nawrot, M. & Sekuler, R. Assimilation and contrast in motion perception: Explorations in cooperativity. Vision. Res. 30, 1439–1451. https://doi.org/10.1016/0042-6989(90)90025-G (1990).

van Doorn, A. J. & Koenderink, J. J. Temporal properties of the visual detectability of moving spatial white noise. Exp. Brain Res. 45, 179–188. https://doi.org/10.1007/bf00235777 (1982).

Murakami, I. & Shimojo, S. Assimilation-type and contrast-type bias of motion induced by the surround in a random-dot display: Evidence for center-surround antagonism. Vision. Res. 36, 3629–3639. https://doi.org/10.1016/0042-6989(96)00094-6 (1996).

Alais, D., Van Der Smagt, M. J., Van Den Berg, A. V. & Van De Grind, W. A. Local and global factors affecting the coherent motion of gratings presented in multiple apertures. Vision. Res. 38, 1581–1591. https://doi.org/10.1016/S0042-6989(97)00331-3 (1998).

Gaudio, J. L. & Huang, X. Motion noise changes directional interaction between transparently moving stimuli from repulsion to attraction. PLOS ONE. 7, e48649–e48649. https://doi.org/10.1371/JOURNAL.PONE.0048649 (2012).

Braddick, O. J. & Curran, W. Directional performance in motion transparency. Vision. Res. 42, 1237–1248. https://doi.org/10.1016/S0042-6989(02)00018-4 (2002).

Farrell-Whelan, M., Wenderoth, P. & Brooks, K. R. Challenging the distribution shift: Statically-induced direction illusion implicates differential processing of object-relative and non-object-relative motion. Vis. Res. 58, 10–18. https://doi.org/10.1016/j.visres.2012.01.018 (2012).

Wilson, H. R. & Kim, J. A model for motion coherence and transparency. Vis. Neurosci. 11, 1205–1220. https://doi.org/10.1017/S0952523800007008 (1994).

Qian, N., Andersen, R. A. & Adelson, E. H. Transparent motion perception as detection of unbalanced motion signals. III. Modeling. J. Neurosci. 14, 7381–7392. https://doi.org/10.1523/jneurosci.14-12-07381.1994 (1994).

Dakin, S. C. & Mareschal, I. The role of relative motion computation in ‘direction repulsion’. Vision. Res. 40, 833–841. https://doi.org/10.1016/S0042-6989(99)00226-6 (2000).

Johansson, G. Configurations in the perception of velocity. Acta. Psychol. 7, 25–79. https://doi.org/10.1016/0001-6918(50)90003-5 (1950).

Yang, S., Bill, J., Drugowitsch, J. & Gershman, S. J. Human visual motion perception shows hallmarks of bayesian structural inference. Sci. Rep. 11, 3714–3714. https://doi.org/10.1038/s41598-021-82175-7 (2021).

Gershman, S. J., Tenenbaum, J. B. & Jäkel, F. Discovering hierarchical motion structure. Vision. Res. 126, 232–241. https://doi.org/10.1016/j.visres.2015.03.004 (2016).

Bill, J., Pailian, H., Gershman, S. J. & Drugowitsch, J. Hierarchical structure is employed by humans during visual motion perception. Proceedings of the National Academy of Sciences 117, 24581–24589 (2020). https://doi.org/10.1073/PNAS.2008961117

Shivkumar, S., DeAngelis, G. C. & Haefner, R. M. Hierarchical motion perception as causal inference. bioRxiv (2023). https://doi.org/10.1101/2023.11.18.567582

Pilly, P. K. & Seitz, A. R. What a difference a parameter makes: A psychophysical comparison of random dot motion algorithms. Vision. Res. 49, 1599–1612. https://doi.org/10.1016/j.visres.2009.03.019 (2009).

Warren, P. A. & Rushton, S. K. Optic l. Curr. Biol. 19, 1555–1560. https://doi.org/10.1016/J.CUB.2009.07.057 (2009).

Peltier, N. E., Anzai, A., Moreno-Bote, R. & DeAngelis, G. C. A neural mechanism for optic flow parsing in macaque visual cortex. Curr. Biol. https://doi.org/10.1016/j.cub.2024.09.030 (2024).

Lange, R. D. & Haefner, R. M. Task-induced neural covariability as a signature of approximate bayesian learning and inference. PLoS Comput. Biol. 18, e1009557. https://doi.org/10.1371/journal.pcbi.1009557 (2022).

Shams, L. & Beierholm, U. Bayesian causal inference: A unifying neuroscience theory. Neurosci. Biobehav. Rev. 137 https://doi.org/10.1016/J.NEUBIOREV.2022.104619 (2022).

Brainard, D. H. The Psychophysics Toolbox. Spat. Vis. 10, 433–436 (1997).

Chen, T. T., Maloney, R. T. & Clifford, C. W. G. Determinants of the direction illusion: motion speed and dichoptic presentation interact to reveal systematic individual differences in sign. J. Vis. 14 https://doi.org/10.1167/14.8.14 (2014).

Britten, K. H., Shadlen, M. N., Newsome, W. T. & Movshon, J. A. The analysis of visual motion: A comparison of neuronal and psychophysical performance. J. Neurosci. 12, 4745–4765. https://doi.org/10.1523/jneurosci.12-12-04745.1992 (1992).

Acknowledgements

We thank Tadin Duje for helpful comments and discussions.

Author information

Authors and Affiliations

Contributions

Conceptualization: BP, SS, GCD, RMH; Methodology: BP, SS, GL, GCD, RMH; Investigation: BP; Data collection: BP; Analysis: BP, SS, GL, GCD, RMH; Visualization: BP, SS, GCD, RMH; Writing—original draft: BP, SS; Writing—review & editing: BP, SS, GL, GCD, RMH; Funding acquisition: GCD, RMH.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Electronic supplementary material

Below is the link to the electronic supplementary material.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Penaloza, B., Shivkumar, S., Lengyel, G. et al. Causal inference predicts the transition from integration to segmentation in motion perception. Sci Rep 14, 27704 (2024). https://doi.org/10.1038/s41598-024-78820-6

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-024-78820-6