Abstract

Embroidery images carry rich historical information and are an important form of embroidery art. In the field of combination query image retrieval, how to efficiently retrieve the embroidery image information required by users has become a current research challenge. In recent years, convolutional neural networks (CNNs) have achieved significant success in image feature extraction, but they tend to focus on local information, making it easy to ignore global context information when processing such textured embroidery images. Therefore, we propose a combination query retrieval method for embroidery images. First, we propose Blend-Transformer, which introduces Group External Attention (GEA). GEA can integrate feature information from three different dimensions, effectively capturing the local and global context information of embroidery images. Second, we propose Enhanced CNN, which introduces Shuffle Attention (SA), regrouping the reference image features extracted by CNN and reaggregating them by channel to enhance the richness of embroidery image feature information. Through experiments on the TCE-S and ICR2020 standard datasets, we verify the excellent performance of the proposed algorithm in embroidery image retrieval. Our method fills the gap in embroidery image retrieval research and provides a new perspective for the protection of embroidery art.

Similar content being viewed by others

Introduction

Embroidery images, as a brilliant cultural heritage of the Chinese nation, not only bear profound historical information, but also serve as an important form of embroidery art. With the rapid development of digital technology, the protection, inheritance, and innovation of embroidery images are facing unprecedented challenges and opportunities. In reality, there are many types of embroidery images, including different patterns, colors, textures, and stitching techniques, with high levels of detail, which makes it difficult to extract uniform and effective features. Examples of embroidery images are shown in Fig. 1. In the field of image retrieval, how to efficiently retrieve the embroidery image information desired by users has become the focus and difficulty of current research.

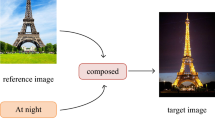

In the field of image retrieval, especially for embroidery images with highly complex texture and vivid color features, traditional Content-Based Image Retrieval (CBIR)1 technology faces challenges. With the rapid development of information technology, users’ expectations for image retrieval are no longer limited to querying a single image. In this context, query-based image retrieval technology2,3 was born, which combines image and text query modes and greatly enhances the precision of retrieval. In terms of image feature extraction, Convolutional Neural Network (CNN)4 has strong learning ability and feature representation ability, and has been successfully applied to combination query image retrieval methods5,6 as shown in Fig. 2(a). However, its limitations cannot be ignored7, especially for embroidery images with complex backgrounds and interactive patterns, where CNN often pays too much attention to local image details and ignores the integration of global context information.

In recent years, Vision Transformer (ViT)8 has achieved great success in many fields9,10, mainly due to its ability to capture rich feature information and especially excel at modeling global information. Given these advantages of ViT, many studies have been applied to the combination query image retrieval task11,12, as shown in Fig. 2(b). However, during the application process, we noticed that the Multi Head Self-Attention (MHSA) mechanism in ViT13 can pay attention to the correlation between internal features of samples, but to some extent, it ignores the correlation between different samples. This limitation makes it difficult for the model to fully grasp the global conditions of the entire dataset, which limits the ability of the ViT network to capture complex image structural information.

To address this issue, we propose an innovative combination query embroidery image retrieval method, as shown in Fig. 2(c). First, we introduce the Grouped External Attention (GEA) module and integrated it into the Blend-Transformer architecture to replace the traditional MHSA mechanism. GEA can extract feature information from the reference image in three different dimensions, enabling Blend-Transformer to better understand and process complex visual inputs while retaining local details. Second, we propose Enhanced CNN, introducing the Shuffle Attention (SA) module. The SA module achieves information flow between different feature groups by exchanging information between channels, optimizing the CNN’s problem of insufficient utilization of image feature relevance.

Finally, we combine the enhanced features of CNN and the contextual features of Blend-Transformer to achieve a comprehensive representation of the embroidery image features for subsequent combination query retrieval. We conduct extensive experiments on the TCE-S30 and ICR202031 publicly available datasets, and the experimental results confirmed the superior performance of our algorithm. Our method not only can quickly and accurately retrieve the embroidery images required by users, but also can effectively handle the complex texture and color features of embroidery images.

Related work

Combined query image retrieval algorithms

Although traditional image retrieval14 has achieved certain results, it is difficult to meet the modern retrieval needs of the information explosion era. The development of deep learning technology15 has promoted the emergence of combination query image retrieval technology. The ComposeAE2 method combines CNN and BERT16 to obtain features of different lengths for images and text, and uses autoencoder learning to learn feature combination. Chen et al. proposed the VAL framework17 by learning visual and linguistic attention through multi-level composite Transformer, effectively combining the two. Xu et al.18 proposed ComqueryFormer, which combines global, entity, and structured visual information, and combines reference images and text from coarse to fine granularity to further improve retrieval effect. Yang et al.19 proposed CRN, which can handle various types of query text, including modified text, auxiliary query text, or their combinations.

Vision transformer

In the field of computer vision, the successful application of Transformer models20 not only revolutionized natural language processing, but also inspired the exploration of ViT8 in image recognition tasks. In recent years, researchers have been exploring improved architectures for ViT21,22,23,24. CPVT22 innovatively uses convolutional layers to replace the traditional learnable position embedding, to better capture the local structural information of images. Touvron et al.23 further improved the model capacity and generalization ability of ViT by constructing deeper Transformer structures and combining specialized training optimization strategies. TNT24 proposed a novel Transformer structure that models the pixel-level interactions within each patch, retaining more rich local features. However, the MHSA module in ViT only focuses on the internal correlation of samples, ignores the inter-sample relationship. Therefore, we proposed Blend-Transformer, whose core lies in introducing the GEA module to replace the MHSA in ViT.

Attention mechanism

Within the framework of deep learning, attention mechanisms possess unique local and global modeling capabilities. The local attention module25 mimics the aggregation strategy of deep convolutional networks, integrates content-aware characteristics into self-attention mechanisms. At the same time, by combining global and local attention mechanisms26, a full-kernel module is built by constructing a branch of global, large-scale, and local features, achieving a comprehensive feature representation learning. In recent years, various attention mechanism variants27,28 have flourished. Among them, Cui et al.27 proposed the ChaIR model, exploring the potential value of channel interactions in image restoration tasks without explicitly introducing spatial attention mechanisms. Xue et al.28 trained an attention-aware MLP and used attention-scoring ranking features to prune the feature set through a two-stage heuristic strategy, ensuring model accuracy while reducing computational cost.

Method

Research motivation

In the context of embroidery image retrieval, the local receptive field of CNN limits the acquisition of global feature information. Therefore, we introduce ViT to adapt to the characteristics of embroidery images. However, the MHSA mechanism in ViT mainly focuses on intra-sample correlation within a frame and ignores global context. To overcome this limitation, we propose the GEA module and replace MHSA with GEA to construct the Blend-Transformer in ViT. Then, we propose Enhanced CNN, introducing the Shuffle Attention (SA) module to reconstruct the CNN features through channel shuffling, further enhancing the richness and relevance of the feature information. Finally, we combine the Enhanced CNN and Blend-Transformer features to achieve a comprehensive representation of embroidery image features for subsequent combination query retrieval.

Network architecture

As shown in Fig. 3, in order to capture more rich feature information in the reference image, we designed a network that combines Enhanced CNN and Blend-Transformer. The network is designed to extract both local and global feature information from the reference image at the same time. Meanwhile, we use the BERT model to extract relevant text features.

Specifically, the reference image \(x\) is encoded through two different branches. In the upper branch, we first use CNN to encode the reference image to capture its local features. Then, through the introduction of SA module, we group and shuffle the local features extracted by CNN to further enrich and enhance these features. After SA module processing, we get a two-dimensional spatial feature vector \({f}_{img1}\left(x\right)\), which can accurately describe the local feature distribution of the reference image, as shown in Eq. (1):

where \(B\) is batch, \(C\) is the number of channels, \({\phi}_{l}\) is the reference image local feature.

In the lower branch of the architecture, the reference image \(x\) is processed by a component called Blend-Transformer, and then encoded into a set containing 2d spatial feature vectors, which is expressed as \({f}_{img2}\left(x\right)\). This process can be described by mathematical Eq. (2):

where \({\phi}_{g}\) is the reference image global feature.

Then \({\phi}_{l}\) is fused with \({\phi}_{g}\) to get \({\phi}_{gl}\), which can be described by Eq. (3):

where \({\phi}_{gl}\) is fusion feature of the reference image.

Simultaneously, we use BERT to extract the text feature \({f}_{text}\left(t\right)\), which can be described by Eq. (4):

where \({\phi}_{t}\) is text feature.

On the basis of following the method described in the baseline1, we fuse feature \({\phi}_{t}\) and \({\phi}_{gl}\) vectors build query features \({\phi}_{q}\) in two-dimensional space. Then, we will use CNN to extract the key features of the target image and form feature vectors \({\phi}_{tar}\).

Blend-transformer

In order to overcome the limitations of MHSA mechanism in ViT, we proposes Blend-Transformer. Specifically, as shown in Fig. 3, Blend-Transformer consists of the LN layer, GEA, and MLP. The following is a detailed description of GEA.

Our GEA module design is inspired by the external attention module to further enrich the feature information of the reference image. As shown in Fig. 4, the principle of the GEA module is that it can extract the feature information of the reference image from three different dimensions. This design aims to maximize the feature representation of the reference image, so as to improve the performance of the model in visual tasks. By integrating feature information of different dimensions, the GEA module enables Blend-Transformer to better understand and process complex visual inputs.

Specifically, on the one hand, we extract the features \({\phi}_{w}\) of dimension \(W\) and \({\phi}_{h}\) of dimension \(H\) respectively, which are described as Eqs. (5) and (6):

where \({X}_{1}\in\:{\mathbb{R}}^{B\times\:H\text{*}W\times\:C}\), \({\phi}_{w}\in\:{\mathbb{R}}^{B\times\:C\times\:H\times\:W}\), \({\phi}_{h}\in\:{\mathbb{R}}^{B\times\:C\times\:W\times\:H}\), \(R\) represents Reshape operation, \(F{C}_{-}w\) represents fully connected operation on \(W\) dimension, \(F{C}_{-}h\) represents fully connected operation on \(H\) dimension, \(Per\) represents \(Permute\) operation.

Next, we combine \({\phi}_{w}\) with \({\phi}_{h}\) to obtain fusion feature \({\phi}_{fuse1}\). As described in Eq. (7):

where \(\varphi _{{fuse1}} \in {\mathbb{R}}^{{B \times H{\text{*}}W \times 2C}}\), \(Fla\) represents Flatten operation, \(Tr\) represents Transpose operation.

In the GEA module, firstly, the feature information between channels will be interacted and fused through a 1 × 1 convolution operation. Then, in order to calculate the feature attention between samples, we use two externally shared matrices \(\left({M}_{k},{M}_{v}\right)\) for operation to obtain the fused feature representation \({\phi}_{fuse2}\). The specific implementation of this process is shown in Eq. (8):

where \({\phi}_{fuse2}\in\:{\mathbb{R}}^{B\times\:H\text{*}W\times\:C}\), * represents ⊗ (element-wise product), \(Conv\) represents 1 × 1 convolution, \({M}_{k}\in\:{\mathbb{R}}^{d\times\:s}\), \({M}_{v}\in\:{\mathbb{R}}^{s\times\:d}\), in our experiment, \(d\) is 768 and \(s\) is 64.

On the other hand, we introduce two external shared matrices \(\left({M}_{k},{M}_{v}\right)\)29 into the dimension \(H\times\:\text{W}\) to obtain the features \({\phi}_{hw}\). As described in Eq. (9):

In the final step, we first fuse \({\phi}_{hw}\) and \({\phi}_{fuse2}\), and then readjust and exchange feature information between different channels through a 1 × 1 convolution layer to generate a comprehensive feature representation \({X}_{1}^{{\prime}}\). As described in Eq. (10):

Compared with the MHSA mechanism in ViT, the GEA module introduced in Blend-Transformer not only considers the two spatial dimensions of height \(H\) and width \(W\), but also considers the joint spatial dimension of \(H\times\:\text{W}\), so that it can capture more comprehensive and rich reference image feature information. In addition, the GEA module calculates the correlation between different samples through two external shared matrices, which effectively enhances the model’s ability to perceive the global conditions of the dataset. Therefore, compared with ViT, Blend-Transformer shows better performance in capturing global information of reference images with its GEA module.

Enhanced CNN

To enhance CNN’s ability to extract feature information from reference images, we propose the Enhanced CNN method, which incorporates the Shuffle Attention (SA) module29. As depicted in Fig. 5, the SA module first divides the feature map into multiple independent sub features according to the channel dimension, and then the correlation of these sub features at the spatial and channel levels is carefully modeled. After completing the correlation modeling, multiple sub features are aggregated through an innovative strategy, in which a “channel shuffling” operation is specially introduced, which allows the information between different sub features to be efficiently exchanged. Through the SA module, we reorganize the reference image features extracted by CNN groups at the channel level, and realize the flow of information between different feature groups. This strategy greatly enriches the diversity and relevance of the reference image feature information.

Loss

We adopt a deep metric learning (DML) approach to train model. Our training objective is to learn a similarity metric, \(\kappa \left( {\cdot,\cdot} \right):{\mathbb{R}}^{d} \times {\mathbb{R}}^{d} \mapsto {\mathbb{R}}\), between composed image-text query features \(\vartheta\:\) and extracted target image features \(\psi\:\left(y\right)\). The composition function \(f(z,q)\) should learn to map semantically similar points from the data manifold in \({\mathbb{R}}^{d}\times\:{\mathbb{R}}^{h}\) onto metrically close points in \({\mathbb{R}}^{d}\). Analogously, \(f\left( {\cdot,\cdot} \right)\)should push the composed representation away from non-similar images in \({\mathbb{R}}^{d}\).

For sample \(i\) from the training mini-batch of size \(N\), \({\vartheta}_{i}\) denote the composition feature, \(\psi\:\left({y}_{i}\right)\) denote the target image features and \(\psi\:\left({\stackrel{\sim}{y}}_{i}\right)\) denote the randomly selected negative image from the mini-batch. We follow baseline1 in choosing the base loss for the datasets.

We employ triplet loss with soft margin as a base loss. As shown in Eq. (11):

where \(M\) denotes the number of triplets for each training sample \(i\). In our experiments, we choose the same value as mentioned in the baseline code, i.e. 3.

In addition to the base loss, we also incorporate two reconstruction losses in our training objective. They act as regularizes on the learning of the composed representation. The image reconstruction loss as shown in Eq. (12):

where \({\widehat{z}}_{i}={d}_{img}\left({\vartheta}_{i}\right)\).

Similarly, the text reconstruction loss as shown in Eq. (13):

where \({\widehat{q}}_{i}={d}_{txt}\left({\vartheta}_{i}\right)\).

We can include rotational symmetry loss in the training objective. Specifically, we require that the combination of the target image features and the complex conjugate of the text features should be similar to the query image features. On the concrete side, first we obtain the complex conjugate of the textual features projected into the complex space. As shown in Eq. (14):

Let \(\stackrel{\sim}{\varphi}\) denote the composition of \({\delta}^{*}\) with the target image features \(\psi\:\left(y\right)\) in the complex space. As shown in Eq. (15):

Finally, we compute the composed representation, denoted by \({\vartheta}^{*}\), as shown in Eq. (16):

The rotational symmetry constraint translates to maximizing this similarity kernel: \(\kappa\:\left({\vartheta}^{*},z\right)\). We incorporate this constraint in our training objective by employing softmax loss or soft-triplet loss depending on the dataset.

Since for datasets, the base loss is \({L}_{BASE}\), we calculate the rotational symmetry loss, \({L}_{SYM}^{BASE}\), as shown in Eq. (17):

The total loss is computed by the weighted sum of above-mentioned losses. As shown in Eq. (18):

where \(\alpha\:\), \(\beta\:\) and \(\gamma\:\) are weights coefficients. We set \(\alpha\:\text{=1}\),\(\:\beta\:\text{=0.1}\) and \(\gamma\:\text{=0.1}\). Empirically, we find our model are not sensitive to coefficients when \(\alpha\:,\beta\:,\gamma\:\in\:\left[\text{0.1,1}\right]\).

Experiment

Experimental setups

To build our model, we used the PyTorch framework and Python 3.7 as the development environment. We chose ResNet18 and Blend-Transformer to encode images, and used the BERT model to encode text. We referred to EA29 and used two small, learnable, shared memory blocks initialized randomly as the external shared matrix \(\left({M}_{k},{M}_{v}\right)\), which can be conveniently inserted into any attention module. We used the SGD optimizer for training. The learning rate is 0.01, the momentum was 0.9, the weight decay is \(1\times\:1{0}^{-6}\), and the batch size is 32. The training is conducted for 160k iterations.

Dataset

This experiment aims to evaluate the performance of image-text matching, and we refer to two publicly available datasets: TCE-S30 and ICR202031. The TCE-S dataset is a collection of traditional Chinese embroidery images collected online, consisting of eight categories of traditional Chinese embroidery. A total of 763 embroidery images were collected in this study, as the original embroidery image dataset was too small, we introduced 4000 natural scene distractor images from the ImageNet dataset to the TCE-S dataset. The training set contains 4286 images for model training, and the test set contains 477 images for model evaluation. The ICR2020 dataset is from the collection of the Qinghai Provincial Intangible Cultural Heritage Research Base. The dataset contains 2020 embroidery images, divided into 5 categories according to the type of embroidery, each representing a unique artistic style of different ethnic groups and regions in Qinghai. We added 10,000 distractor images from the ImageNet dataset to the ICR2020 dataset, and the training set contains 10,818 images, and the test set contains 1,202 images.

Evaluation metrics

In the experiment, we adopted an evaluation index that is consistent with the previous baseline study2, namely, the Recall (R @ K). This indicator is used to quantify the proportion of images predicted by the model as positive (or interested) samples in all real positive sample images under a given query. At the same time, we use the evaluation metric mean Average Precision(mAP), which is widely used in image retrieval, to fully reflect the performance of the model under different categories and different recall. In order to calculate this indicator more accurately, we have adopted specific mathematical formulas, namely Eq. (12) and Eq. (13), which define how to calculate the number of true positives from the previous K prediction results and compare it with the total number of all true positive samples.

when evaluating the performance of the model, we pay special attention to the distinction between true positive samples (denoted as \(TP\)) and false negative samples (denoted as \(FN\)). The total number of images returned is represented by K, while the total number of images of all positive samples is marked by n. By calculating the number of images with a \(score\) of 1 in the first two prediction results, we get the total number of images predicted as positive samples, which is expressed as \({\sum}_{i=1}^{K}\left(score\right)\), and its range is \([0,K]\).

Captioning performance

In this paper, we investigate various combination query image retrieval methods on the TCE-S and ICR2020 datasets. To ensure fairness, the technical scales of the methods are kept equal and the training strategies are consistent. The research focuses on three types of image features: CNN features (C), ViT features (V), and CNN and ViT features (C + T). The performance of three advanced CNN models, LBF32, HFFCA33, and IRTF6, and two ViT models, VAL34 and TNT24, are compared. Furthermore, the performance of three models that combine CNN and ViT, namely ComposeAE2, TECMH12, and EAR-FS28, is deeply compared. Table 1 shows that models that rely solely on CNN features generally score relatively lower in most evaluation metrics, which to some extent indicates that they have limitations in capturing image context information. In contrast, models that turn to ViT features, such as TNT, show significant improvements on the TCE-S and ICR2020 datasets. This indicates that compared with CNN, using ViT as a feature extractor has stronger feature extraction capabilities and can capture more global and contextual information.

Next, we observed the performance of the model that combined CNN and ViT features, which showed significant improvements in evaluation metrics compared to models that only used single visual features. This indicates that combining different types of visual features can bring additional performance gains for image retrieval. However, embroidery images contain rich textures and complex structures that are distributed across different parts of the image. These methods may not perform well when applied to embroidery images in natural scene images. Our method is specifically designed for complex structures and rich textures in embroidery images, and the Blend-Transformer can extract image features from different angles and utilize the global context information of embroidery images. Meanwhile, the Enhanced CNN enhances the information flow between different feature groups, thereby improving retrieval accuracy. These comparisons prove the effectiveness of the model in embroidery image retrieval.

To comprehensively evaluate the computational efficiency of our proposed method, we specifically compared the model parameter sizes of each model, as shown in Table 1. In real-world application scenarios, the size of model parameters directly affects the loading and inference speed, and consequently affects the overall performance. It is worth noting that the ComposeAE has the largest number of parameters due to its multi-layer stacked components. In contrast, the model proposed in this paper has a parameter size comparable to the excellent performance of TECMH, but achieves higher scores in all evaluation indicators. This achievement is due to the Enhanced CNN and Blend-Transformer designed by us, which effectively balance the computational complexity and feature extraction ability through efficient matrix operations and image block multi-dimensional processing strategies, thereby optimizing the embroidery image retrieval performance while maintaining a lightweight parameter size.

In addition, precision-recall curve is added to observe the retrieval behavior as the number of retrieved images increases. The results are shown in Fig. 6. We can find that the proposed method achieves positive and stable performance for different number of hash bits. These encouraging results illustrate the effectiveness of our proposed method on the TCE-S dataset.

Qualitative results

Figure 7 shows that the model is able to successfully modify the state of the query image by querying the text and retrieve the correct target image on the TCE-S dataset. On the left of figure, the query image is shown. In the middle of the figure, the query text is provided, which describes the desired state modification for the query image. The system modifies the state of the query image based on the query text and retrieves the matching image from the dataset with the modified state. On the right of figure, the correct target image is marked with a red frame. This shows that after the state modification, the system is able to accurately retrieve the image that corresponds to the description in the query text. It is clearly shown from Fig. 7 that the model is able to successfully apply the state modification from the query text to the query image and accurately retrieve the desired target image on the TCE-S dataset, which proves the effectiveness of the model in the task of image state modification and retrieval.

The core of this paper is the ability of the model to learn visual features related to embroidery images. To further prove the effectiveness of our method, we explore the output distribution of these embroidery images. Specifically, we extract the feature vectors from the TCE-S test set and map them to a two-dimensional space using t-SNE35, visualizing them as scatter plots, as shown in Fig. 8. From Fig. 8, we can see that embroidery images with similar context are well clustered together. For example, the green dashed box represents embroidery images of roses, and the closest distance is the orange dashed box, which represents embroidery images of fresh flower patterns, because they have highly similar context content. This result proves that the model can dig deeper into image similarity, capture subtle differences and common features between images, even for structurally complex and colorful embroidery images, and effectively classify them.

Ablation experiments

Comparison of different components

We conducted experiments by setting different variants to evaluate the impact of key components in the model on its performance on the TCE-S and ICR2020 datasets. For each experiment, we deleted one different component and retrained the model from scratch, as shown in Table 2. The comparative variant implementations of MMFN are as follows: (1) w/o H. The GEA module is deleted to extract reference image features from the H dimension. (2) w/o W. The GEA module is deleted to extract reference image features from the W dimension. (3) w/o H×W. The GEA module is deleted to extract reference image features from the H×W dimension. (4) w/o ESM. The two external shared matrices introduced by the GEA module are deleted. represents deleting the entire module, and ✓ represents retaining the entire module.

As shown in Table 2, we found that w/o H and w/o H×W had varying degrees of performance degradation, and w/o W degraded the most, verifying that GEA can extract reference image features from multiple dimensions and capture more rich reference image feature information, thereby improving retrieval performance. Then, we found that w/o ESM also lowered the model’s performance score, verifying that the two external shared matrices play an important role in GEA. Next, we removed GEA entirely and only retained SA, finding that the combination of SA and CNN allows information to flow between different groups, effectively enhancing the relevance of reference image feature information. Finally, achieving the best performance by combining GEA and SA verifies the effectiveness of our method.

Comparison of different encoders

Table 3 shows the performance versus parameter count of several representative feature extractors, covering image encoders (such as ResNet and its variants, ConvNeXt36, ViT, Swin-T37) and text encoders (BERT, RoBERTa38). We observe: (1) CNN Feature Extractor: The first four experiments show that as the complexity of CNN models (such as ResNet-18 and its more powerful variants) increases, the number of parameters rises, but the performance gain is not significant. This shows that for embroidery images, ResNet-18 has been able to effectively capture key texture and content details, and more importantly, how to efficiently fuse these features to optimize fine-grained retrieval. (2) Text Encoder Comparison: Compare BERT (first line) with RoBERTa (fifth line). Although the latter is good at processing complex and long texts with more parameters, its performance improvement is limited on the shorter texts covered in this paper, indicating that BERT is more efficient for short texts. (3) ViT vs. Swin-T: The last two rows show that its performance drops significantly compared to ViT despite having a smaller number of Swin-T parameters. ViT, with its powerful global information modeling ability, performs better on the recognition of details such as color and texture of embroidery images, which is more suitable for such image tasks. In summary, after experimental validation, we selected ResNet-18, ViT, and BERT as the optimal feature extractor combination to balance model complexity, parameter amount, and performance.

Impact of local and global information

In Fig. 9, we further analyze the local and global interactions and separability using t-SNE visualization on the TCE-S dataset. The CNN representation represents the local information, the ViT representation represents the global information, and the latest EAR-FS28 uses the CNN + ViT representation to represent the interaction of local and global information. The points of the same color represent instances of the same label. The CNN representation learns a visual representation that overlaps with the visual representation of different labels, indicating that local information alone is not sufficient for TCE-S classification.

Compared with the CNN representation, the CNN + ViT representation is more separable, and the separability with the ViT representation is slightly better. This shows that CNN + ViT combines local and global information to provide complementary information, improving the separability of the representation. However, due to the complex eight-class embroidery images in the TCE-S dataset, it is still unable to achieve good separation effects. Finally, our method representation shows the best separability among all variations, exceeding the separability of the CNN and ViT representations. These results not only prove the importance of combining CNN and ViT for image retrieval, but also prove the effectiveness of the proposed method in embroidery image representation.

Conclusion

This paper presents Blend-Transformer, where the core lies in the GEA module, which replaces the MHSA in ViT and extracts features from the reference embroidery image from different dimensions, greatly enriching the feature information. The GEA module also utilizes two external shared matrices to calculate the correlation between samples to obtain global conditions. To further improve performance, this paper proposes Enhanced CNN, introducing the SA module, which extracts features through channel reorganization of the CNN group, achieving information flow between different groups, thereby enriching the reference embroidery image feature information. The experiments on the TCE-S and ICR2020 datasets show that our method significantly improves the capture of reference image information.

Currently, there is still a preliminary research stage for complex and diverse image categories such as embroidery, and how to better lightweight the model and reasonably utilize external knowledge to assist image retrieval has become an urgent problem to be solved. In future research, we will try to explore the method of distilling knowledge from large models to small models and utilizing the rich knowledge of multi-modal large models to enhance the model’s ability to retrieve complex images.

Data availability

Publicly available data supporting the conclusions of this study can be found at: https://doi.org/10.3974/geodb.2021.07.03.V1.

References

Li, X., Yang, J. & Ma, J. Recent developments of content-based image retrieval (CBIR). Neurocomputing 452, 675–689 (2021).

Anwaar, M. U., Labintcev, E. & Kleinsteuber, M. Compositional learning of image-text query for image retrieval. In Proceedings of the IEEE/CVF Winter conference on Applications of Computer Vision 1140–1149 (2021).

Zhang, F., Xu, M. & Xu, C. Tell, imagine, and search: End-to-end learning for composing text and image to image retrieval. ACM Transactions on Multimedia Computing, Communications, and Applications (TOMM) 18(2), 1–23 (2022).

He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 770–778 (2016).

Baldrati, A. et al. Conditioned and composed image retrieval combining and partially fine-tuning clip-based features. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 4959–4968 (2022).

Nguyen, P. Enhancing image retrieval efficiency through text feedback to improve search performance. J. Inf. Hiding Multim Signal. Process. 15(1), 21–35 (2024).

Ahad, M. T. et al. Comparison of CNN-based deep learning architectures for rice diseases classification. Artif. Intell. Agric. 9, 22–35 (2023).

Dosovitskiy, A. et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv Preprint arXiv:2010.11929 (2020).

Dong, H., Zhang, L. & Zou, B. Exploring vision transformers for polarimetric SAR image classification. IEEE Trans. Geosci. Remote Sens. 60, 1–15 (2021).

Lin, A. et al. Ds-transunet: Dual swin transformer u-net for medical image segmentation. IEEE Trans. Instrum. Meas. 71, 1–15 (2022).

Zhang, Z., Wang, L. & Cheng, S. Composed query image retrieval based on triangle area triple loss function and combining CNN with transformer. Sci. Rep. 12(1), 20800 (2022).

Li, Q. et al. TECMH: Transformer-based cross-modal hashing for fine-grained image-text retrieval. Computers Mater. Continua 75(2): 3713–3728 (2023).

Xue, L., Li, X. & Zhang, N. L. Not all attention is needed: Gated attention network for sequence data. In Proceedings of the AAAI Conference on Artificial Intelligence, Vol. 34, no. 04 6550–6557 (2020).

Alsmadi, M. K. Content-based image retrieval using color, shape and texture descriptors and features. Arab. J. Sci. Eng. 45(4), 3317–3330 (2020).

Li, Y., Ma, J. & Zhang, Y. Image retrieval from remote sensing big data: A survey. Inform. Fusion 67, 94–115 (2021).

Kenton, J. & Bert Pre-training of deep bidirectional transformers for language understanding. In Proceedings of naacL-HLT, Vol. 1 2 (2019).

Chen, Y., Gong, S. & Bazzani, L. Image search with text feedback by visiolinguistic attention learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 3001–3011 (2020).

Xu, Y. et al. Multi-modal transformer with global-local alignment for composed query image retrieval. IEEE Trans. Multimedia 25, 8346–8357 (2023).

Yang, Q. et al. Composed Image Retrieval via Cross Relation Network with Hierarchical Aggregation transformer (IEEE Transactions on Image Processing, 2023).

Vaswani, A. Attention is all you need (Advances in Neural Information Processing Systems, 2017).

Li, S. et al. Moganet: Multi-order gated aggregation network. In The Twelfth International Conference on Learning Representations (2023).

Pepino, L., Riera, P. & Ferrer, L. Study of positional encoding approaches for audio spectrogram transformers. In ICASSP 2022–2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) 3713–3717 (IEEE, 2022).

Touvron, H. et al. Going deeper with image transformers. In Proceedings of the IEEE/CVF International Conference on Computer Vision 32–42 (2021).

Han, K. et al. Transformer in transformer. Adv. Neural. Inf. Process. Syst. 34, 15908–15919 (2021).

Cui, Y. et al. Irnext: Rethinking convolutional network design for image restoration. In International Conference on Machine Learning (2023).

Cui, Y., Ren, W. & Knoll, A. Omni-kernel network for image restoration. In Proceedings of the AAAI Conference on Artificial Intelligence, Vol. 38, no. 2 1426–1434 (2024).

Cui, Y. & Knoll, A. Exploring the potential of channel interactions for image restoration. Knowl. Based Syst. 282, 111156 (2023).

Xue, Y. et al. An external attention-based feature ranker for large-scale feature selection. Knowl. Based Syst. 281, 111084 (2023).

Guo, M. H. et al. Beyond self-attention: External attention using two linear layers for visual tasks. IEEE Trans. Pattern Anal. Mach. Intell. 45(5), 5436–5447 (2022).

Xu, S. et al. Individuality in commonality: A comparative study of Su embroidery and Gu embroidery based on online retrieval of museum collections. Asian Social Sci. 19(4), 12 (2023).

Wei, Z. & Ko, Y. C. Segmentation and synthesis of embroidery art images based on deep learning convolutional neural networks. Int. J. Pattern Recognit. Artif. Intell. 36(11), 2252018 (2022).

Hosseinzadeh, M. & Wang, Y. Composed query image retrieval using locally bounded features. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 3596–3605 (2020).

Zhang, G., Wei, S., Pang, H. & Zhao, Y. Heterogeneous feature fusion and cross-modal alignment for composed image retrieval. In Proceedings of the 29th ACM International Conference on Multimedia 5353–5362 (2021).

Chen, Y., Zheng, Z., Ji, W., Qu, L. & Chua, T. S. Composed image retrieval with text feedback via multi-grained uncertainty regularization. arXiv Preprint arXiv arXiv:2211.07394 (2022).

Chatzimparmpas, A., Martins, R. M. & Kerren, A. t-visne: Interactive assessment and interpretation of t-sne projections. IEEE Trans. Vis. Comput. Graph. 26(8), 2696–2714 (2020).

Liu, Z. et al. A convnet for the 2020s. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 11976–11986 (2022).

Liu, Z. et al. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision 10012–10022 (2021).

Delobelle, P., Winters, T. & Berendt, B. Robbert: A Dutch roberta-based language model. arXiv preprint arXiv:2001.06286 (2020).

Author information

Authors and Affiliations

Contributions

Xinzhen Zhuo. conceptualized and designed the algorithm, implemented the initial codebase, and prepared the original manuscript draft.Donghai Huang. contributed to the development and fine-tuning of the algorithm, performed substantial debugging and code optimization, and assisted with manuscript writing and revisions.Yang Lin. designed and executed the performance tests, analyzed the computational results, and contributed to the interpretation of these results for the manuscript.Ziyang Huang. provided essential theoretical insights, contributed to algorithm improvements, and critically revised the manuscript for important intellectual content.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Zhuo, X., Huang, D., Lin, Y. et al. Combined query embroidery image retrieval based on enhanced CNN and blend transformer. Sci Rep 14, 27518 (2024). https://doi.org/10.1038/s41598-024-79012-y

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-024-79012-y