Abstract

Sebaceous carcinoma is difficult to distinguish from chalazion because of its rarity and clinicians’ limited experience with it. This study investigated whether AI-generated image training could improve diagnostic skills for these eyelid tumors compared with traditional video lecture-based education. Students from Orthoptics, Optometry, and Vision Research (n = 55) were randomly assigned to either an AI-generated image training group or a traditional video lecture group. Diagnostic performance was assessed using a 50-image quiz before and after the intervention. Both groups showed significant improvement in overall diagnostic accuracy (p < 0.001), with no significant difference between groups (p = 0.124). In the AI group, all 25 chalazion images showed improvement, while only 6 out of 25 sebaceous carcinoma images improved. The video lecture group showed improvement in 19 out of 25 chalazion images and 24 out of 25 sebaceous carcinoma images. The proportion of images with improved accuracy was significantly higher in the AI group for chalazion (P = 0.022) and in the video group for sebaceous carcinoma (P < 0.001). These findings suggest that AI-generated image training can enhance diagnostic skills for rare conditions, but its effectiveness depends on the quality and quantity of patient images used for optimization. Combining AI-generated image training with traditional video lectures may lead to more effective educational programs. Further research is needed to explore AI’s potential in medical education and to improve diagnostic skills for rare diseases.


Introduction

Improving diagnostic ability through medical education is a critical challenge, because diagnostic ability can influence patient prognosis1. When distinguishing common from rare diseases, healthcare professionals often struggle because the small number of rare-disease cases limits opportunities to develop diagnostic skills2. Differentiating sebaceous carcinoma from chalazion is a typical example of this problem. In one report, pathological examination of 1060 cases clinically diagnosed as chalazion revealed sebaceous carcinoma in 68 cases (6.4%)3.

Sebaceous carcinoma presents with diverse findings and often resembles common diseases such as chalazion, hordeolum, blepharitis, and conjunctivitis. This resemblance can delay diagnosis; the mean interval from symptom onset to diagnosis can be as long as one year4. Timely diagnosis is essential: a previous study of 100 patients with sebaceous carcinoma5 found that six died of metastasis and that larger tumor size was associated with a higher risk of metastasis. Therefore, enhancing diagnostic skills for eyelid tumors is likely to improve prognosis.

To address this issue, AI image-generation techniques, which began with generative adversarial networks (GANs)6 and have since evolved into more user-friendly methods such as Stable Diffusion7, offer the potential to generate large numbers of images for diagnostic teaching. We recently reported that studying a large number of AI-generated medical images for only 53 min significantly improved the accuracy of identifying six types of retinal disease from 43.6% to 74.1%8.

However, the extent to which AI-generated images actually improve diagnostic ability, and their potential educational applications, have not been sufficiently investigated. In particular, research on diseases with few distinctive image features or a highly variable clinical appearance remains limited. Sebaceous carcinoma and chalazion are prime examples of conditions that have both of these characteristics.

The purpose of this study was to explore whether novel educational interventions can improve diagnostic skills for diseases that are difficult to distinguish, by comparing the effectiveness of image training using AI technology with that of traditional video-based education. Such work may contribute to the theoretical development of medical education and has clinical implications, because better diagnostic skills can support earlier diagnosis and improved patient prognosis.

We conducted an experiment to investigate the potential application of AI-generated images in medical education by comparing the effectiveness of diagnostic training using AI-generated eyelid tumor images with traditional video lecture-style education among students in orthoptic and optometry training programs.

Method

Ethics

This study was approved by the ethics committees of Tsukazaki Hospital (Himeji, Japan) (application number: 231016, June 20, 2023), Glasgow Caledonian University (Scotland, UK) (application number: HLS/LS/A23/034, April 23, 2024), Kawasaki University of Medical Welfare (Kurashiki, Japan) (application number: 24-006, May 26, 2024), and Cancer Institute Hospital (Tokyo, Japan) (application number: 2023-GB-163, April 9, 2024). Comprehensive written informed consent for the anonymous use of clinical data was obtained from patients at their first hospital visit. The study was conducted in accordance with the ethical principles of the Declaration of Helsinki. Informed consent was obtained from all participants.

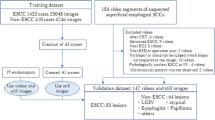

Study design

Participants were randomly assigned to either the AI-generated image group or the traditional video lecture group based on their student ID numbers or the order in which they took part in the experiment. To assess learning effects, a diagnostic quiz consisting of 50 anterior segment photographs (25 chalazion and 25 sebaceous carcinoma images, each set from 25 different patients) was administered before and after the intervention.

The change in participants’ accuracy rates before and after the intervention was measured as the learning effect. Additionally, the learning effect for each of the 50 evaluation images was assessed based on whether the accuracy rate for that image improved after the intervention.

Participants

The participants were 16 students from Glasgow Caledonian University (1 from the orthoptist training program, 8 from the optometrist training program, and 7 from the Master’s program in Vision Sciences) and 39 students from the orthoptist training program at Kawasaki University of Medical Welfare, for a total of 55 participants.

Patient image collection

Chalazion images were collected from anterior segment photographs of patients with a documented physician’s diagnosis in their medical records and confirmed clinical resolution. For sebaceous carcinoma, anterior segment photographs were collected from patients with a pathologically confirmed diagnosis. Twenty-five images from 25 patients were used for the evaluation quiz for both chalazion and sebaceous carcinoma. These patients’ photographs were excluded from the optimization process for AI-generated images. For AI-generated image optimization, 118 images from 39 sebaceous carcinoma patients and 1,046 images from 475 chalazion patients were used. The patient characteristics of the collected images are shown in Table 1.
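The exclusion rule above, under which no evaluation-quiz patient contributes images to generator optimization, is a patient-level split. The following is a minimal sketch that assumes a hypothetical record layout of `(patient_id, image_path)` pairs and a hypothetical helper named `optimization_pool`. Because the evaluation patients in this study were chosen by a physician rather than at random, the held-out IDs are passed in instead of being sampled.

```python
from typing import Iterable, List, Set, Tuple

Record = Tuple[str, str]  # (patient_id, image_path); hypothetical layout


def optimization_pool(records: Iterable[Record],
                      eval_patient_ids: Set[str]) -> Tuple[List[Record], Tuple[int, int]]:
    """Drop every image from patients whose photographs appear in the quiz.

    Filtering by patient rather than by image means that a patient with
    several photographs cannot leak into the optimization set through an
    image other than the one used in the quiz.
    Returns the remaining records and (number of images, number of patients).
    """
    pool = [(pid, path) for pid, path in records if pid not in eval_patient_ids]
    n_patients = len({pid for pid, _ in pool})
    return pool, (len(pool), n_patients)


# Toy example with illustrative IDs only (not study data).
records = [("p1", "p1_a.jpg"), ("p1", "p1_b.jpg"), ("p2", "p2_a.jpg"),
           ("p3", "p3_a.jpg"), ("p3", "p3_b.jpg")]
pool, (n_images, n_patients) = optimization_pool(records, {"p2"})
print(n_images, n_patients)  # 4 2
```

Reporting both image and patient counts mirrors how the optimization sets are described above, for example 118 images from 39 sebaceous carcinoma patients.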

AI-generated image synthesis

In this study, we created LoRA (Low-Rank Adaptation)9 modules to fine-tune Stable Diffusion XL10 (Stability AI, London, UK), an image-generation AI model. LoRA fine-tunes large pre-trained models efficiently by adding trainable low-rank matrices to the frozen weight matrices of the original model. This enables optimization with relatively few images and at low computational cost, without directly modifying the original model’s parameters. We created separate LoRAs for chalazion and sebaceous carcinoma and generated AI-synthesized images using the prompts “chalazion” and “sebaceous carcinoma.”

The Stable Diffusion XL model was fine-tuned with the following settings. Input images were resized to 1024 × 1024 pixels and normalized to the [0, 1] range. Optimization was performed on two NVIDIA RTX A6000 48 GB GPUs using Python v3.1.7, PyTorch 2.1, and the Diffusers 0.28.0 library. We used the AdamW optimizer with a learning rate of 0.0004 and a batch size of 2. The model was saved after one epoch, and during image generation, 10 epochs were performed sequentially for output. The standard diffusion-model objective function was used as the loss function. These specifications are reported to support reproducibility of the image-generation process.

Optimization and image generation were performed separately for chalazion and sebaceous carcinoma using the images described above. A total of 1,000 synthetic images were generated for each condition. From these, two physicians (HTa and TM) selected 100 images per condition that both agreed were suitable for training purposes. Figure 1 shows representative examples of the synthesized images.
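The low-rank update that LoRA adds to a frozen weight matrix can be sketched in a few lines. This is a NumPy illustration of the idea described by Hu et al.9. It is not the Diffusers code used in the study. The rank (8), the scaling `alpha`, the layer width, and the class name `LoRALinear` are all assumptions, because the LoRA rank and the adapted layers are not reported above.

```python
from typing import Optional

import numpy as np


class LoRALinear:
    """Linear layer y = W x + (alpha / r) * B (A x) with W frozen.

    Only A (r x d_in) and B (d_out x r) would be updated during fine-tuning,
    so the pre-trained weight W is never modified. B starts at zero, so the
    adapted layer initially reproduces the base layer exactly.
    """

    def __init__(self, W: np.ndarray, rank: int,
                 alpha: Optional[float] = None, seed: int = 0):
        d_out, d_in = W.shape
        rng = np.random.default_rng(seed)
        self.W = W                                           # frozen pre-trained weight
        self.A = rng.normal(0.0, 0.01, size=(rank, d_in))    # trainable, random init
        self.B = np.zeros((d_out, rank))                     # trainable, zero init
        self.scale = (alpha if alpha is not None else rank) / rank

    def __call__(self, x: np.ndarray) -> np.ndarray:
        # The low-rank path costs O(r * (d_in + d_out)) rather than O(d_in * d_out).
        return self.W @ x + self.scale * (self.B @ (self.A @ x))

    def merged_weight(self) -> np.ndarray:
        # After training, the update can be folded into a single matrix.
        return self.W + self.scale * (self.B @ self.A)

    def n_trainable(self) -> int:
        return self.A.size + self.B.size


d = 1024                                    # hypothetical layer width
W = np.random.default_rng(1).normal(size=(d, d))
layer = LoRALinear(W, rank=8)
x = np.ones(d)
print(np.allclose(layer(x), W @ x))         # True: B == 0 at initialization
print(layer.n_trainable(), W.size)          # 16384 1048576
```

In this toy layer, the adapter trains about 1.6% as many parameters as the full matrix. This reflects why separate condition-specific LoRAs are inexpensive to train and swap.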

Web quiz creation

The Learning BOX web-based quiz system (Learning Box Corp., Tatsuno, Japan) was used to create the evaluation quizzes (pre- and post-intervention) and the AI-generated image learning quiz. All quizzes followed a common format in which an image was presented and participants chose from three options (chalazion, sebaceous carcinoma, or “I don’t know”). Figure 2 shows an example question from the learning quiz.

Example question and result display for the learning quiz. (A) A synthetic image of chalazion is presented, and three choice buttons are displayed below. (B) Result display for the answer. The light green bar indicates that chalazion was selected. On the “Synthetic image” below, a green circle and checkmark are shown, indicating that the answer was correct.

The evaluation quizzes consisted of 25 images from 25 patients for both chalazion and sebaceous carcinoma, with the same images used for pre- and post-intervention quizzes. The selection of images for the evaluation quizzes was made by HTa, prioritizing images with clearly visible lesions. None of the images used in the evaluation quizzes had unclear lesions. The 50 images were presented in a random order by the web system during each quiz. While the evaluation quizzes did not provide answers after each question, the learning quiz provided immediate feedback on whether the answer was correct or incorrect to facilitate learning. The learning quiz was set to conclude after 10 min. This 10-minute duration was specifically chosen to match the length of the educational video, ensuring that both groups received equal learning time exposure. All quizzes were translated into English for participants from the United Kingdom.

YouTube lecture video creation

Y.N., a specialist in eyelid tumors, created a lecture video lasting exactly 10 min to match the AI image learning time. The images and explanations used in the video were based on the “Atlas of Eyelid and Conjunctival Tumors” by Hiroshi Goto, MD, PhD, published by Igaku-Shoin in October 2017. An English version of the video was narrated by a 32-year-old native English speaker. Both videos are available on YouTube (Japanese: https://youtu.be/M10UO7PnmIg; English: https://youtu.be/QcWPPfKwv3w).

Experimental procedure

The AI image learning group took the pre-intervention evaluation quiz, followed by the AI-generated image learning quiz, and then immediately took the post-intervention evaluation quiz. The traditional video lecture group took the pre-intervention evaluation quiz, watched the YouTube lecture video, and then immediately took the post-intervention evaluation quiz.

Analysis

The accuracy rates

The accuracy rate (number of correct answers / 50 questions) on the evaluation quizzes was compared before and after the intervention within each participant and between the two groups. Improvement was calculated by subtracting the pre-intervention accuracy rate from the post-intervention accuracy rate and was compared between the two groups. In addition, changes in accuracy before and after the intervention were analyzed for each of the 50 images (25 chalazion and 25 sebaceous carcinoma) used in the evaluation quizzes. For each image, the change in accuracy rate was defined as the proportion of respondents whose answer changed from incorrect to correct minus the proportion whose answer changed from correct to incorrect. This equals the post-intervention proportion of correct respondents minus the pre-intervention proportion. The value is therefore 1 if all respondents improved from incorrect to correct and −1 if all respondents deteriorated from correct to incorrect. For example, if 25 of 50 participants improved from incorrect to correct and the remaining 25 deteriorated from correct to incorrect, the change would be zero. If 20 participants (40%) improved from incorrect to correct and the remaining 30 did not change their answers, the change would be +0.4. Likewise, an unchanged proportion of correct respondents gives zero, a 10-percentage-point increase gives +0.1, and a 10-point decrease gives −0.1.
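The per-image measure defined above reduces to a simple count. The sketch below assumes that answers are stored as one boolean per participant per image. This is a hypothetical layout, because the web system’s export format is not described, and the sketch also treats “I don’t know” as incorrect, which is an assumption. It reproduces the two worked examples given in the text.

```python
from typing import Sequence


def per_image_change(pre_correct: Sequence[bool], post_correct: Sequence[bool]) -> float:
    """Change in accuracy rate for one evaluation image.

    pre_correct[i] / post_correct[i]: whether participant i answered this
    image correctly before / after the intervention ("I don't know" is
    assumed to count as incorrect).
    Returns (improved - worsened) / n, which equals
    post-intervention accuracy minus pre-intervention accuracy.
    """
    if len(pre_correct) != len(post_correct) or not pre_correct:
        raise ValueError("need one pre and one post answer per participant")
    n = len(pre_correct)
    improved = sum(1 for a, b in zip(pre_correct, post_correct) if not a and b)
    worsened = sum(1 for a, b in zip(pre_correct, post_correct) if a and not b)
    return (improved - worsened) / n


# The two worked examples from the text, each with 50 participants.
balanced = per_image_change([False] * 25 + [True] * 25, [True] * 25 + [False] * 25)
forty_pct = per_image_change([False] * 20 + [True] * 30, [True] * 20 + [True] * 30)
print(balanced, forty_pct)  # 0.0 0.4
```

Counting transitions explicitly, rather than only differencing the two accuracy rates, keeps the improved and worsened counts available for the per-image classification used later.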

Improvement in response time

Response times (in seconds, recorded by the web quiz system) on the evaluation quizzes were compared before and after the intervention within each participant and between the two groups. Improvement in response time (pre-intervention response time minus post-intervention response time, in seconds) was also compared between the two groups. In addition, the 2750 units (50 evaluation images × 55 participants) were divided into three groups according to the learning effect (Diagnosis Accuracy Change), based on how each participant’s answer to each image changed from before to after training. These groups were the Worsened group (a correct answer changed to an incorrect one), the Unchanged group (no change in the answer, whether correct or incorrect), and the Improved group (an incorrect answer changed to a correct one). The relationship between Diagnosis Accuracy Change and the change in response time was then analyzed, with disease (chalazion vs. sebaceous carcinoma) as an additional factor.
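The unit-level grouping described above can be sketched as follows. The tuple layout and function names are hypothetical. Response-time change is taken as pre minus post, the sign convention stated in the Figure 6 caption, so a positive value means a faster answer after training. The sketch only builds the disease-by-accuracy-change cells; the two-factor ANOVA and Steel-Dwass tests were run in JMP and are not reproduced here.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, Tuple

# One unit = one participant's answers to one evaluation image (hypothetical layout):
# (disease, correct_before, correct_after, seconds_before, seconds_after)
Unit = Tuple[str, bool, bool, float, float]


def accuracy_change(pre_ok: bool, post_ok: bool) -> str:
    """Label a unit as Worsened, Improved, or Unchanged."""
    if pre_ok and not post_ok:
        return "Worsened"
    if not pre_ok and post_ok:
        return "Improved"
    return "Unchanged"  # correct both times or incorrect both times


def mean_time_change(units: Iterable[Unit]) -> Dict[Tuple[str, str], float]:
    """Mean response-time change (pre minus post; positive = faster) per cell."""
    cells = defaultdict(list)
    for disease, pre_ok, post_ok, t_pre, t_post in units:
        cells[(disease, accuracy_change(pre_ok, post_ok))].append(t_pre - t_post)
    return {cell: mean(values) for cell, values in cells.items()}


# Illustrative units only; these are not study data.
units = [
    ("Chalazion", False, True, 10.0, 6.0),
    ("Chalazion", False, True, 8.0, 4.0),
    ("Chalazion", True, True, 6.0, 6.0),
    ("Sebaceous carcinoma", True, False, 5.0, 7.0),
]
print(mean_time_change(units))
```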

Statistical analysis

The first set of statistical analyses was performed using IBM SPSS Statistics version 28 (IBM, UK). A repeated-measures analysis of variance (ANOVA) was conducted for both quiz scores and response times. Intervention type (AI-generated image group vs. traditional video lecture group) and institution (Glasgow vs. Kawasaki) were the between-subjects factors, and time (pre- vs. post-training) was the repeated-measures factor. Bonferroni adjustments were made for multiple comparisons, and post hoc testing included pairwise comparisons between all variables.

The second set of statistical analyses was performed using JMP Pro 16.2.0 (SAS Institute, NC, USA). Paired t-tests were used to compare all participants’ evaluation quiz scores before and after the intervention. The Mann-Whitney U test was also employed. Fisher’s exact test was used to compare, between the two groups, the proportion of evaluation images whose accuracy rate improved. The relationship between changes in answers and changes in response time before and after training for each image was analyzed using a two-factor ANOVA to test for an interaction. The comparisons were selected according to whether a significant interaction was present, and pairwise comparisons were conducted using the Steel-Dwass test.

Results

In the AI-generated image learning group, the mean (SD) accuracy rate improved significantly within participants, from 56.1% (18.9%) before the intervention to 69.8% (10.5%) after the intervention (ANOVA, F(1, 51) = 13.117, p < 0.001). In the traditional video group, the rate also improved significantly, from 58.6% (21.5%) to 79.5% (9.3%) (ANOVA, F(1, 51) = 13.117, p < 0.001) (Fig. 3).

The details for each group are shown in Table 2. Pre-intervention scores did not differ significantly between the AI and video intervention groups (ANOVA, F(1, 51) = 0.196, p = 0.660), but the video group had significantly higher post-intervention scores than the AI intervention group (ANOVA, F(1, 51) = 10.093, p = 0.003).

Pre-intervention evaluation test scores did not differ significantly between the two institutions (ANOVA, F(1, 51) = 0.085, p = 0.772), but post-intervention scores were significantly higher at Glasgow than at Kawasaki (ANOVA, F(1, 51) = 5.267, p = 0.026). Further pairwise comparisons showed that this difference in post-intervention scores was significant in the AI group (p = 0.011) but not in the video group (p = 0.557).

The results of the learning effect analysis for each evaluation image are shown in Fig. 4 for each group.

The change in the ratio of correct respondents from before to after the intervention is illustrated for each evaluation image. The left half (to the left of the central black line) shows the 25 chalazion evaluation images, and the right half (to the right of the central black line) shows the 25 sebaceous carcinoma evaluation images. If the ratio of correct respondents is unchanged before and after the intervention, the value is zero. If it increases by 10 percentage points, the value is +0.1 (green), and if it decreases by 10 points, the value is −0.1 (red). A: AI-generated image learning group, B: Traditional video learning group.

In the AI-generated image learning group, all 25 chalazion images showed a positive change in accuracy rate. For sebaceous carcinoma, however, 18 images (72%) showed a negative change in accuracy rate, indicating no learning effect. In the traditional video learning group, 6 chalazion images (24%) and 1 sebaceous carcinoma image (4%) showed a negative change, totaling 7 images (14%), whereas a learning effect was observed in the remaining 43 images (86%). In terms of the proportion of images with improved accuracy rates, the learning effect for chalazion images was significantly higher in the AI-generated image group (Fisher’s exact test, P = 0.022). For sebaceous carcinoma images, it was significantly higher in the traditional video learning group (Fisher’s exact test, P < 0.001).
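The two Fisher’s exact test results can be checked against the image counts given in the Abstract. For chalazion, 25/25 images improved with AI training vs. 19/25 with video; for sebaceous carcinoma, the counts were 6/25 vs. 24/25. The sketch below assumes that each test compared improved vs. not-improved images in a 2 × 2 table. It uses a pure-Python two-sided test, which sums the probabilities of all tables with the same margins that are no more probable than the observed one, so it needs no third-party packages. `scipy.stats.fisher_exact` is the usual library alternative.

```python
from math import comb


def fisher_exact_two_sided(table):
    """Two-sided Fisher's exact test for a 2x2 table [[a, b], [c, d]]."""
    (a, b), (c, d) = table
    row1, n = a + b, a + b + c + d
    col1 = a + c
    total = comb(n, row1)

    def p_table(x):
        # Hypergeometric probability that the top-left cell equals x, margins fixed.
        return comb(col1, x) * comb(n - col1, row1 - x) / total

    p_obs = p_table(a)
    lo, hi = max(0, row1 - (n - col1)), min(row1, col1)
    # A small relative tolerance keeps equally likely tables from being dropped by rounding.
    return sum(p for p in map(p_table, range(lo, hi + 1)) if p <= p_obs * (1 + 1e-7))


# Rows: AI group, video group; columns: images improved, images not improved.
chalazion = [[25, 0], [19, 6]]
sebaceous = [[6, 19], [24, 1]]
print(round(fisher_exact_two_sided(chalazion), 3))  # 0.022
print(fisher_exact_two_sided(sebaceous) < 0.001)    # True
```

Under this assumed table layout, the chalazion comparison gives p ≈ 0.0223, which is consistent with the reported P = 0.022.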

Regarding response times (Fig. 5), the AI-generated image learning group showed a statistically significant difference between pre- and post-intervention times (ANOVA, F(1, 51) = 26.942, p < 0.001), with a mean improvement (reduction) in response time of 78 s. The traditional video lecture group showed an improvement of 5.5 s, but the difference between pre- and post-intervention times was not statistically significant (ANOVA, F(1, 51) = 0.516, p = 0.476).

Response times for the evaluation test before and after the intervention for the two groups. AI: AI-generated image learning group, Video: Traditional video lecture group, gray bars: pre-intervention response time, white bars: post-intervention response time, **: p < 0.001 by ANOVA, N.S.: not significant (ANOVA, p = 0.476).

To further investigate the relationship between changes in answers and response time, we conducted a two-factor ANOVA for each image, with “Disease Type” (chalazion vs. sebaceous carcinoma) and “Diagnosis Accuracy Change” (Improved, Unchanged, Worsened) as factors. There was a significant interaction between disease type and diagnosis accuracy change in the AI-generated image learning group (F(2, 1394) = 20.45, p < 0.001), but not in the traditional video lecture group (F(2, 1423) = 0.43, p = 0.65). For the AI-generated image learning group, where an interaction was present, the Steel-Dwass test was used to compare changes in response time across all combinations of disease type and diagnosis accuracy change. Response-time changes differed significantly between the chalazion Improved group and the sebaceous carcinoma Improved group (p < 0.001), and between the chalazion Improved group and the sebaceous carcinoma Unchanged group (p < 0.001). They also differed between the chalazion Improved group and the sebaceous carcinoma Worsened group (p = 0.001). For the traditional video lecture group, where no interaction was observed, changes in response time were compared separately by disease type and by diagnosis accuracy change. There was no significant difference between chalazion and sebaceous carcinoma (Steel-Dwass test, p = 0.21). For diagnosis accuracy change, the difference between the Improved and Unchanged groups was not significant (p = 0.13). In contrast, the Improved and Worsened groups (p = 0.001) and the Unchanged and Worsened groups (p = 0.001) differed significantly. Figure 6 shows the changes in response time before and after learning by disease and learning method.

Changes in response times before and after learning by disease type and learning method. Changes in response time are shown for 2,750 units (55 participants × 50 evaluation images), each representing one participant’s answers to one evaluation image, grouped by change in answer and by learning method. The change in response time is the difference in seconds between pre- and post-learning assessment test times (pre minus post). Error bars represent standard deviation. AI: AI-generated image training group, Video: Traditional video learning group. ‘Worsened’ denotes units whose answers changed from correct to incorrect after learning, ‘Unchanged’ denotes units with no change in answer, and ‘Improved’ denotes units whose answers changed from incorrect to correct after learning.

Discussion

The results of this study demonstrate that training with AI-generated images can significantly improve participants’ diagnostic ability. The analysis of individual evaluation images showed that, for chalazion images, AI-generated image training had a significantly greater effect than traditional video lecture-based education. Furthermore, response time decreased significantly in the AI-generated image training group.

The contrasting effectiveness of these two educational approaches appears to reflect their distinct pedagogical characteristics. The AI image training’s success with chalazion diagnosis likely stems from enhanced pattern recognition through repeated exposure to varied visual presentations, facilitating rapid visual processing as evidenced by reduced response times. Conversely, the video lecture’s effectiveness in teaching sebaceous carcinoma diagnosis can be attributed to its systematic presentation of specific diagnostic criteria, particularly beneficial for complex conditions with variable presentations. The instructor’s ability to explicitly highlight and explain the four key diagnostic features provided a structured framework that proved especially valuable for this more challenging condition. These findings suggest that optimal educational strategies should be tailored to both the condition being taught and its specific learning requirements.

Further analysis of response-time changes for each image revealed a significant interaction between disease type and diagnosis accuracy change in the AI-generated image learning group. This suggests that the effect of learning on response time depended jointly on the type of image (chalazion vs. sebaceous carcinoma) and on whether the answer improved, remained unchanged, or worsened. In the traditional video lecture group, no significant interaction was found, indicating that disease type and diagnosis accuracy change were associated with response time independently of each other. The AI-generated image learning group, in contrast, showed a more complex relationship, which suggests that AI training may have had a more nuanced effect on response time than traditional video lectures. The AI-generated image learning group reached diagnoses in less time than the video lecture group, particularly for chalazion images, which is consistent with dual coding theory and the picture superiority effect. Dual coding theory, which holds that visual and verbal information are processed through separate channels, was proposed long ago11. The picture superiority effect, whereby visual information is memorized more quickly and easily than textual information, has also been reported12. By providing a large number of visually rich images, AI training may have facilitated faster and more efficient processing of visual information, leading to quicker diagnostic decisions. However, AI-generated image training did not consistently reduce response time for sebaceous carcinoma images. This may be attributable to the insufficient number of patient images used to optimize the generative AI, because incomplete representation of sebaceous carcinoma characteristics in the training set could have hindered the development of efficient visual processing skills for this disease.
It is important to note that the lack of correlation between score improvement and time improvement in both groups suggests that a reduction in response time is not necessarily proportional to an improvement in accuracy. Further investigation is needed to understand the factors contributing to the observed response-time differences and the interplay between cognitive processing, image characteristics, and learning outcomes.

In addition, the educational effect of AI-generated images could not be demonstrated for sebaceous carcinoma images. This, too, may result from the insufficient number of patient images used to optimize the generative AI. In the traditional video learning method, which did show a learning effect for sebaceous carcinoma images, the instructor emphasized four features of sebaceous carcinoma: loss of eyelashes, irregular shape, vascular dilation, and minimal inflammation. The AI-generated image set used for sebaceous carcinoma training in this experiment may not have adequately represented these characteristics. This indicates that, when AI-generated images are used for diagnostic training, it is necessary to check not only individual images but also whether the training set as a whole comprehensively represents the characteristic features of the target disease.

Compared with chalazion, sebaceous carcinoma has a lower prevalence and greater variation in its clinical presentation13. Thus, although patient images are difficult to collect, more images may be needed than for chalazion to represent the full range of possible presentations. This consideration may also apply to other rare diseases14,15,16. Developing techniques that create synthetic training image sets incorporating all relevant diagnostic features from a limited number of patient images remains a future challenge, and achieving it may advance medical education methods.

The traditional video learning method was particularly effective for identifying sebaceous carcinoma, a rare disease, which reaffirms the value of the conventional educational methods we have been using. Notably, these differences in learning outcomes were observed despite identical learning exposure times (10 min) for both methods. This suggests that the effectiveness of each approach is related to the educational methodology rather than to the duration of exposure. The instructional approach adopted in this study, which focused on comparison with chalazion, is likely to be immediately useful in clinical settings. Offered as e-learning, it could be used worldwide, and a 10-minute course that improves knowledge and identification of sebaceous carcinoma would likely attract many learners.

Furthermore, if we aim to further enhance the ability to differentiate between chalazion and sebaceous carcinoma, it is necessary to consider a combined approach of traditional video learning and AI-generated image learning. For example, after acquiring basic knowledge of the diseases through lecture-based education, practical diagnostic skills may be further developed by training with AI-generated images. The next stage of research should address this challenge once it becomes possible to provide appropriate variations in the synthetic image set for sebaceous carcinoma training.

Conclusion

The results of this study suggest that training with AI-generated images may be useful for improving diagnostic skills for eyelid tumors, although its effectiveness depends on the quality and quantity of patient images used for optimization. Training with a large number of images reduced the time required for diagnosis, which suggests that trainees may memorize and process visually presented information more readily. Combining AI-generated image training with traditional video-based education may also lead to the development of more effective educational programs. Further research is expected to contribute to the advancement of AI-driven medical education and the improvement of diagnostic skills for rare diseases.

Data availability

The datasets used and/or analyzed during the current study are available from the corresponding author on reasonable request.

References

Asch, D. A., Nicholson, S., Srinivas, S., Herrin, J. & Epstein, A. J. Evaluating obstetrical residency programs using patient outcomes. JAMA 302(12), 1277–1283. https://doi.org/10.1001/jama.2009.1356 (2009).

Dasgupta, T., Wilson, L. D. & Yu, J. B. A retrospective review of 1349 cases of sebaceous carcinoma. Cancer 115(1), 158–165. https://doi.org/10.1002/cncr.24030 (2009).

Ozdal, P. C., Codère, F., Callejo, S., Caissie, A. L. & Burnier, M. N. Accuracy of the clinical diagnosis of chalazion. Eye 18(2), 135–138. https://doi.org/10.1038/sj.eye.6700603 (2004).

Shields, J. A., Demirci, H., Marr, B. P., Eagle, R. C. Jr. & Shields, C. L. Sebaceous carcinoma of the eyelids: Personal experience with 60 cases. Ophthalmology 111(12), 2151–2157. https://doi.org/10.1016/j.ophtha.2004.06.031 (2004).

Sa, H. S. et al. Prognostic factors for local recurrence, metastasis and survival for sebaceous carcinoma of the eyelid: Observations in 100 patients. Br. J. Ophthalmol. 103(7), 980–984. https://doi.org/10.1136/bjophthalmol-2018-312635 (2019).

Goodfellow, I. J. et al. Generative adversarial nets. In Proceedings of the 27th International Conference on Neural Information Processing Systems 2672–2680 (2014).

Rombach, R., Blattmann, A., Lorenz, D., Esser, P. & Ommer, B. High-resolution image synthesis with latent diffusion models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 10684–10695 (2022).

Tabuchi, H. et al. Using artificial intelligence to improve human performance: Efficient retinal disease detection training with synthetic images. Br. J. Ophthalmol. https://doi.org/10.1136/bjo-2023-324923 (2024).

Hu, E. J. et al. LoRA: Low-Rank Adaptation of Large Language Models. https://arxiv.org/abs/2106.09685 (2021).

Podell, D. et al. SDXL: Improving Latent Diffusion Models for High-Resolution Image Synthesis. https://arxiv.org/abs/2307.01952 (2023).

Clark, J. M. & Paivio, A. Dual coding theory and education. Educ. Psychol. Rev. 3(3), 149–210 (1991).

Defeyter, M. A., Russo, R. & McPartlin, P. L. The picture superiority effect in recognition memory: A developmental study using the response signal procedure. Cogn. Dev. 24(3), 265–273. https://doi.org/10.1016/j.cogdev.2009.05.002 (2009).

Watanabe, A. et al. Sebaceous carcinoma in Japanese patients: Clinical presentation, staging and outcomes. Br. J. Ophthalmol. 97(11), 1459–1463. https://doi.org/10.1136/bjophthalmol-2013-303776 (2013).

Evans, W. R. & Rafi, I. Rare diseases in general practice: Recognising the zebras among the horses. Br. J. Gen. Pract. 66(652), 550–551. https://doi.org/10.3399/bjgp16X687625 (2016).

Stiebitz, S., von Streng, T. & Strickler, M. Delayed diagnosis of rheumatoid arthritis in an elderly patient presenting with weakness and desolation. BMJ Case Rep. 14(2), e237251. https://doi.org/10.1136/bcr-2020-237251 (2021).

Benito-Lozano, J. et al. Diagnostic process in rare diseases: Determinants associated with diagnostic delay. Int. J. Environ. Res. Public Health. 19(11), 6456. https://doi.org/10.3390/ijerph19116456 (2022).

Acknowledgements

We thank Mr. Nobuto Ochiai for software engineering support. Dr. Hidetesu Mito and Dr. Hideo Umezu contributed to data curation for the preliminary research, and Ryo Nishikawa, CO, contributed to creating the web quizzes.

Author information


Contributions

HTa contributed to Conceptualization, Funding acquisition, Methodology, Project administration, Resources, Writing – Original Draft Preparation, and Writing – Review & Editing. IN, TY and MD contributed to Data curation and Methodology. MD and MT contributed to Formal analysis. MD, NS, MA, JE and HTs contributed to Writing – Review & Editing and Supervision. HD and YN contributed to Data curation. TM contributed to Software.


Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Tabuchi, H., Nakajima, I., Day, M. et al. Comparative educational effectiveness of AI generated images and traditional lectures for diagnosing chalazion and sebaceous carcinoma. Sci Rep 14, 29200 (2024). https://doi.org/10.1038/s41598-024-80732-4


DOI: https://doi.org/10.1038/s41598-024-80732-4