Abstract

This study presents a novel privacy-preserving self-supervised (SSL) framework for COVID-19 classification from lung CT scans, utilizing federated learning (FL) enhanced with Paillier homomorphic encryption (PHE) to prevent third-party attacks during training. The FL-SSL based framework employs two publicly available lung CT scan datasets which are considered as labeled and an unlabeled dataset. The unlabeled dataset is split into three subsets which are assumed to be collected from three hospitals. Training is done using the Bootstrap Your Own Latent (BYOL) contrastive learning SSL framework with a VGG19 encoder followed by attention CNN blocks (VGG19 + attention CNN). The input datasets are processed by selecting the largest lung portion of each lung CT scan using an automated selection approach and a 64 × 64 input size is utilized to reduce computational complexity. Healthcare privacy issues are addressed by collaborative training across decentralized datasets and secure aggregation with PHE, underscoring the effectiveness of this approach. Three subsets of the dataset are used to train the local BYOL model, which together optimizes the central encoder. The labeled dataset is employed to train the central encoder (updated VGG19 + attention CNN), resulting in an accuracy of 97.19%, a precision of 97.43%, and a recall of 98.18%. The reliability of the framework’s performance is demonstrated through statistical analysis and five-fold cross-validation. The efficacy of the proposed framework is further showcased by showing its performance on three distinct modality datasets: skin cancer, breast cancer, and chest X-rays. In conclusion, this study offers a promising solution for accurate diagnosis of chest X-rays, preserving privacy and overcoming the challenges of dataset scarcity and computational complexity.

Similar content being viewed by others

Introduction

The global impact of the coronavirus (COVID-19), caused by the SARS-CoV-2 virus, has been profound and unprecedented. It is highly contagious and spreads rapidly through respiratory droplets caused by coughing, sneezing, or through saliva1. The COVID-19 initial outbreak occurred in December 2019 in Wuhan, China which swiftly spread throughout the world and became a pandemic. On January 30, 2020, the World Health Organization (WHO) declared the epidemic a global public health emergency, which persisted until May 5, 20232,3. Most COVID-19 patients recover without specific treatment. However, severe cases are more common among individuals aged 55 and older or those with pre-existing medical conditions like hypertension, diabetes, cancer, cardiac abnormalities, chronic respiratory disease, high cholesterol, or kidney disease4,5. As of February 16, 2024, the pandemic has resulted in 774.493 million reported cases and 7.03 million confirmed fatalities, placing it as the fifth deadliest pandemic6.

COVID-19 can be diagnosed with time-consuming techniques like reverse transcription-polymerase chain reaction (RT-PCR) or faster techniques such as rapid antigen tests (RAT). However, these tests have potential drawbacks such as lack of equipment, low sensitivity (60-70%), and higher false-positive cases7,8,9,10. Therefore, studies indicate that chest CT, combined with clinical symptoms and epidemiological data, offers a more effective early diagnosis support with greater sensitivity (approximate 98%)11,12,13,14. Computer-aided diagnostic (CAD) systems can significantly support radiologists in correctly diagnosing COVID-19 related respiratory conditions. The convergence of healthcare and innovative technologies, particularly deep learning, shows promise for identifying diseases. However, the scarcity of annotated datasets makes it challenging to adequately diagnose with deep-learning models15. While increasing collaboration to obtain well-annotated datasets is an ongoing endeavor. It is a laborious and time-consuming process for the radiologists involved. Consequently, adopting a self-supervised learning (SSL) method for the categorization task, which can potentially improve the diagnostic system with a smaller annotated dataset, may offer healthcare professionals a less stressful and more effective alternative.

Privacy concerns within a healthcare organization generally restrict the sharing of private datasets as cyber-attacks against medical models and datasets have become more common in medical data analysis16. The need for publicly available datasets from several sources is mainly caused by the privacy and confidentiality of medical data. Federated learning (FL) could be a reliable solution to address this issue, enabling collaborative model training across decentralized datasets without sharing raw data. This technique maintains privacy by preserving sensitive information within the local environments of medical centers while allowing for collaborative model training17,18. By keeping sensitive data local, the method protects privacy while potentially facilitating access to a broader range of datasets and enhancing the performance of deep learning models. This study proposes a FL-SSL based framework to classify lung CT scan images with privacy preservation. To secure the communication of model updates between clients and the central server, Paillier Homomorphic Encryption (PHE) is applied, ensuring that data remains encrypted during transmission, thus preventing potential security threats. Two publicly available lung CT scan datasets have been utilized as the labeled (MosMed dataset)19 and unlabeled (Curated COVID-19 lung CT scan dataset)20 for the classification task. The Curated COVID-19 lung CT scan dataset serves as the unlabeled dataset, where the classes are removed through combining the data files. The bootstrap your own latent (BYOL) framework, a contrastive learning framework is employed for the training of unlabeled dataset at the local hospital server. The unlabeled dataset is divided into three subsets which are assumed to have been sourced from three different hospitals. These are utilized for training local BYOL models in a collaborative manner. A VGG19 encoder followed by four convolutional neural network (CNN) blocks and one attention block (VGG19 + attention CNN) is used as the FL-SSL framework’s encoder. For each local BYOL model, the central encoder (VGG19 + attention CNN) is identical. Local BYOL model updates assist in the central encoder’s optimization throughout training cycles, enabling collective knowledge accumulation. The updated weight parameters from each local BYOL model are encrypted with PHE before being shared with the central server, where they are aggregated using federated averaging. The updated central encoder is then trained and assessed on a labeled dataset to ensure the learned representations are well-suited for downstream classification tasks. The input dataset of the local and central model is chosen by automatically selecting the CT slice having the largest lung portion. In order to reduce the computational complexity of the proposed FL-SSL framework, the input size is taken as 64\(\:\times\:\)64. Statistical analysis is used to assess the FL-SSL framework’s performance and the performance consistency is evaluated with five-fold cross validation. In addition, the proposed FL-SSL framework’s performance is compared with ten transfer learning (TL) models, keeping the same hyperparameter settings. Furthermore, the efficiency of the SSL framework is evaluated by comparing its performance with the Momentum Contrast (MoCo)21 and Simple Framework for Contrastive Learning of Visual Representations (SimCLR)22, both of which utilize the proposed encoder (VGG19 + attention CNN). Finally, the robustness of the proposed FL-SSL framework is validated by demonstrating the framework’s performance on three different datasets.

The major contributions of this study are outlined as follows:

-

An efficient privacy-preserving classification framework is presented that demonstrates excellent performance by incorporating a PHE mechanism to secure model updates and prevent data breaches.

-

The largest lung portion is selected based on an automatic process and the utilization of an input size of 64 × 64 leads to a significant decrease in the overall computational complexity.

-

The effectiveness of the VGG19 + attention CNN encoder is assessed by comparing its performance with ten TL models on the labeled dataset, all trained with identical hyper-parameter configurations. Additionally, the comparative analysis is extended to evaluate the performance of TL models when employed as encoders within the BYOL model.

Literature review

Advancements in AI and ML have greatly improved disease diagnosis systems through medical image analysis, proving especially valuable where timely and accurate imaging-based diagnoses are essential. Yet, challenges like data privacy and the scarcity of labeled datasets have driven interest toward privacy-preserving FL and SSL approaches. This section discusses developments in privacy-preserving systems, self-supervised frameworks, and deep-learning techniques for COVID-19 diagnosis using CT images.

Deep learning for COVID-19 classification

Deep learning models have become essential in automating disease segmentation and classification in COVID-19 diagnosis using CT imaging. Salama et al.23 proposed an innovative method to segment and classify CT images into positive and negative classes for COVID-19, integrating VGG16 and ResNet50 with U-Net. The proposed classification model performed well with a 98.98% accuracy, a 98.87% AUC, and a computing time of 1.8974 s. Similarly, both Gupta et al.5, and Ravi et al.24 proposed a deep learning-based COVID-19 CT-scan classification approach. Gupta et al.5 developed automated screening of COVID-19, using MobileNetV2 and DarkNet19, a newly designed lightweight DLM. The highest classification accuracy 98.91%, is achieved using transfer learned DarkNet19. Using DarkNet19, Ravi et al.24 proposed a deep learning-based feature fusion approach and stacked ensemble meta-classifier for large-scale learning for COVID-19 classification. Ultimately, classification was accomplished using a two-phase stacked ensemble meta-classifier-based method. Support vector machines (SVM) and random forests were used in the first step to make predictions, which were then combined and fed into the second stage. They obtained a 99% accuracy.

Self-supervised learning for COVID-19 classification

The acquisition of labeled data, especially in medical contexts, can be a challenging and resource-intensive process. Labeling medical data often requires the expertise of trained professionals, such as radiologists, who can accurately annotate images and provide diagnostic labels. This task is not only time-consuming but also costly. Self-supervised learning is a superior solution to this problem where the model is trained with labeled data and able to work for unlabeled dataset. Fung et al.25, Ewen et al.26, Gazda et al.27, and Park et al.28 provided self-supervised classification and segmentation models. Fung et al.25 proposed a two-stage deep learning model that is self-supervised and could potentially be used for COVID-19 by segmenting the lesions (ground-glass opacity and consolidation) from chest CT scans. Compared to the two related baseline models (0.55, 0.49), the proposed self-supervised two-stage segmentation model had the best overall F1 score (0.63). Ewen et al.26 proposed a deep learning model to investigate the viability of focused self-supervision, the proposed self-supervision technique, for a COVID-19 image dataset. They obtained a gain in accuracy of nearly 6% when using self-supervision compared to not using it. Gazda et al.27 employed an unlabeled chest X-ray dataset to pretrain a self-supervised deep neural network and Park et al.28 developed a model for the diagnosis of COVID-19 by utilizing a convolution attention module and a self-supervised learning strategy to solve the classification problem. In the COVID-19 class, the AUC is 99.4%, indicating that their model performs quite well in terms of classification. While these deep learning advancements provide a foundation for COVID-19 diagnosis from CT images, they also highlight challenges in data sharing and privacy in real-world implications. Addressing these challenges has led to the exploration of privacy-preserving frameworks such as FL.

Federated learning in medical imaging

To address privacy concerns, Yan et al.29 presented a reliable, label-efficient self-supervised FL framework for analyzing medical images. This strategy presented a new paradigm for self-supervised pre-training based on transformers. The method improved test accuracy for dermatological and retinal images, and chest X-rays, increasing classification accuracy by 5.06%, 1.53%, and 4.58% respectively, without the need for any additional pre-training data. Yang et al.30 proposed a FL-SSL framework using multi-national data for COVID-19 affected region segmentation in chest CT scans. The dice score was 65.0% in this method. Another FL based semi-supervised multi-tasking approach was proposed by Alam et al.31 for lung segmentation and detection of COVID-19. The average dice score of the segmentation task with this framework was 88.4%. Chetoui et al.32, Zhang et al.33, and Florescu et al.34 presented a classification algorithm with a FL approach for CT scans and histopathological images. Chetoui et al.32 proposed a vision transformer model with a peer-to-peer FL framework, resulting in an AUC of 92.00% for hospital 1 and an AUC of 99.00% for hospital 2. Zhang et al.33 proposed a pseudo-data-based FL framework, achieving a 96.66% accuracy and Florescu et al.34 developed COVID-19 detection models with pre-trained deep learning using a FL framework which achieved an accuracy of 83.82%. These studies illustrate FL’s effectiveness in preserving privacy while optimizing model performance in sensitive applications.

Recent advancements in FL have highlighted the critical importance of addressing privacy concerns, particularly when dealing with sensitive image data. A variety of innovative approaches have emerged to ensure both security and privacy in this ___domain. For instance, Kumar et al.35 proposed deep learning models for COVID-19 with a blockchain FL framework and obtained a precision of 83%. Similarly, Heidari et al.36 developed a FL framework integrated with blockchain technology to securely detect and classify malignant lung nodules in CT scans. By authenticating data with blockchain, Heidari’s model achieved an accuracy 99.69% across diverse datasets, including real-world cases. In another approach, Gupta et al.37 presented a blockchain-enhanced FL model targeting lung disease classification through DenseNet201 and the FedAvg algorithm for parameter aggregation. By leveraging the decentralized nature of blockchain with IPFS, the study addressed privacy risks, such as denial-of-service and reverse engineering attacks. This secure model achieved an F1 score of 90%. In the realm of image retrieval, Li et al.38 proposed a Privacy-Preserving Medical Image Retrieval (PMIR) system. This framework allows medical institutions to store and retrieve encrypted images securely on cloud servers, demonstrating a practical application of encryption techniques to protect sensitive medical data. Furthermore, Mahajan et al.39 explored a cloud-based biomedical image processing system that employs elliptic curve cryptography (ECC) to ensure secure transmission of medical images. This multi-layered architecture, which incorporates edge, fog, and cloud computing, significantly enhances privacy for multimedia medical records.

In addition to blockchain technologies, recent literature has also focused on the integration of advanced encryption and machine learning techniques to bolster security in image data handling. Qamar et al.40 proposed a federated model that combines adversarial learning with blockchain, along with advanced encryption techniques like MRLLSM, to securely classify medical images in IoT-connected devices. Hijazi et al.41 highlighted the advantages of FL with pointing out the challenges related to transmitting model parameters over insecure channels. To address these concerns, he proposed a FL framework integrating fully homomorphic encryption (FHE), allowing computations on encrypted data, and enhancing both privacy and security. This approach has shown improvements in accuracy, recall, precision, and F-scores, achieving performance metrics between 80.15% and 89.98% for communication overhead reduction and a latency reduction of 70.38%. Rieyan et al.42 further explored the use of partially homomorphic encryption within a distributed data fabric architecture for medical image analysis. This method enables secure collaboration among healthcare entities, ensuring compliance with regulations such as HIPAA and GDPR. The study focused on pituitary tumor classification, achieved an accuracy of 83.31%, demonstrating the practical effectiveness of these techniques in maintaining privacy without sacrificing performance. Additionally, Kumar et al.43 introduced a privacy-preserving data mining (PPDM) framework that combines statistical transformation methods with homomorphic encryption. This framework preserved data utility while safeguarding privacy, addressing concerns that sensitive information could be compromised during analysis. Truhn et al.44 addressed the vulnerabilities of traditional FL frameworks by proposing the somewhat-homomorphically-encrypted federated learning (SHEFL) model, which allows encrypted model updates and prevents the exposure of sensitive data. Walskaar et al.45 enhanced FL algorithms through multi-key homomorphic encryption, enabling secure collaboration across institutions. These studies demonstrate a wide range of security and privacy-preserving techniques that can enhance the applicability of FL frameworks.

Despite these advancements described above, existing studies face limitations, particularly regarding access to labeled data for model training. Although unlabeled hospital data can be utilized, comprehensive access is often restricted due to privacy concerns. To address these challenges, our proposed SSL-FL-based framework leverages large unlabeled datasets from client servers alongside a small labeled dataset, enabling hospitals to maximize their available data for model training while ensuring privacy preservation. This study distinguishes itself by employing a privacy-preserving self-supervised learning approach enhanced with PHE, thereby ensuring efficient and secure training.

Dataset

This study utilizes a 3D chest computed tomography (CT) scan dataset derived from the open-source MosMed database, which is maintained by the Research and Practical Clinical Center for Diagnostics and Telemedicine Technologies of the Moscow Health Care Department19. The inclusion criterion for participants in this dataset authorized that individual be 18 years or older. In contrast, the exclusion criterion eliminated those with a history of surgical intervention or radiation therapy for the management of thoracic diseases. The median age of the subjects was noted to be 47 years. Data collection occurred between 1 March 2020 and 25 April 2020 amidst the COVID-19 pandemic to assess the extent of pulmonary parenchymal damage associated with the virus. A total of 1110 distinct chest computed tomographic (CT) images, characterized by a slice thickness ranging from 1.0 to 1.5 mm, were incorporated into the dataset. In accordance with Russian national guidelines, the images were archived in NifTI format and categorized into five distinct groups based on the extent of pulmonary involvement. The dataset contains 2049 voxel images, each labeled as either showing no lung pathology or exhibiting COVID-19 lung pathology. 254 scans show no pathology (normal). In comparison the majority (684 scans) represent COVID-19-related pathology stage one, 125 scans display stage two, 45 scans show stage three, and 2 scans stage four disease patterns, indicating various degrees of disease progression. This dataset (see Fig. 1) is utilized as a labeled dataset where the CT-0 is considered the Normal class and the CT-1, CT-2, CT-2, and CT-4 classes are merged and considered the COVID-19 class.

For the unlabeled dataset (see Fig. 2), the Curated COVID-19 lung CT scan dataset, available in Kaggle, is utilized20. The dataset comprises 7,593 CT scan images from 466 patients with COVID-19, 6,893 normal (non-COVID) images from 604 patients and 2,618 Community-acquired pneumonia (CAP) images from 60 patients. The average ages for cases categorized as COVID-19, Normal, and CAP are recorded as 51.2 years, 52.8 years, and 64.3 years, respectively. The CAP class is excluded from the classification task, as this study solely focused on COVID-19. Within the two classes, the dataset includes 14,486 images. COVID-19 and Normal images are collected from multiple sources: namely, Iran (6294 slices), Italy (100 slices), Russia (5865 slices), China, and Japan (349 slices), and the rest of the slices are from multiple demographic areas. The extracted CT slices of 1070 CT scans were converted into PNG files. The unlabeled dataset is created by merging all the images of the two classes.

The dataset description presented in Table 1 outlines the total number of images along with the distribution of samples across the Normal and COVID-19 classes for both the labeled and unlabeled datasets.

Methodology

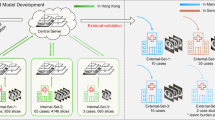

The methodology proposed in this study is illustrated in Fig. 3. This figure outlines the key steps and processes involved in the research approach.

The proposed methodology utilizes a FL framework with a global server and three local hospital servers to develop an FL-SSL-based classification framework while keeping the lung CT scan data decentralized. The global server hosts a VGG19 + attention CNN encoder-based model that is shared with all client servers. Each client’s local server initially identifies the COVID-19 CT slice with the largest lung portion in the volume of unlabeled CT scans of each patient. This is achieved through a sequential process where first the lung region of interest (ROI) is segmented. Then the area of all CT slices within a CT volume is calculated, based on the segmented ROIs. Finally, the largest lung CT scan slice for a patient is selected by identifying the CT image containing the largest ROI area. Only these CT slices are utilized to train the BYOL model. The client site processing allows hospitals to improve feature learning from the localized data samples without exposing original patient CT data externally. With such a process, the FL-SSL framework can read both 3D CT and 2D CT scans as input, as it automatically processes the data according to its appropriate format. The updated weight parameters from the local BYOL model are encrypted with PHE before being shared with the global server to mitigate potential transmission risks. These encrypted weights are then aggregated using federated averaging. The central encoder, representing the global model updated in a decentralized manner, is then trained, and tested with the labeled dataset using similar processing to focus on CT slices containing the largest lung area. The model performance is quantified through statistical analysis, validation loss monitoring, and k-fold cross validation. Additionally, the framework is compared with ten TL models. These ten models are also utilized as the encoder of BYOL model to demonstrate the proposed encoder’s effectiveness. The efficiency of the SSL framework is further evaluated by comparing its performance with the MoCo and SimCLR frameworks, all using the proposed encoder. Finally, the robustness of the classification framework is evaluated by classifying three different datasets. The FL-SSL collaborative framework yields a robust COVID-19 detection model that improves privacy compliance by preserving patient data within hospitals.

Security analysis and attack resistance

The proposed scheme employs PHE to enhance both privacy and security within the FL framework, effectively resisting a range of cyber threats. Specifically, it addresses vulnerabilities to eavesdropping, replay attacks, man-in-the-middle (MitM) attacks, message falsification, and model reconstruction attacks while preserving computational efficiency45. Eavesdropping refers to unauthorized interception of data during transmission, while MitM attacks involve an attacker secretly relaying and potentially altering the communication between two parties46. The probabilistic encryption approach of PHE ensures that each encryption process generates unique ciphertexts for every data transmission, significantly increasing resilience against potential adversaries. Even if data is intercepted, it remains unreadable without access to the decryption key, thereby protecting against eavesdropping and MitM attacks. Furthermore, the continuous encryption of data during transmission ensures that intercepted messages cannot be altered or used maliciously. The FL model’s reliance on PHE encryption also guards against replay attacks, wherein an attacker resends previously intercepted data to alter the model’s output47. Replay attacks exploit previously captured data to manipulate the system’s behavior. Because each model update results in a unique ciphertext, an attacker’s ability to replay messages is severely hampered, as the server can easily identify and reject outdated or invalid updates. Additionally, the encryption scheme ensures that only authenticated messages can be decrypted and processed, thereby maintaining the integrity of the transmitted data, and mitigating the risks of message falsification, which involves the unauthorized modification of messages48. Moreover, PHE encryption provides strong protection against inference and model reconstruction attacks49. Inference attacks attempt to deduce sensitive information about individual clients based on the model outputs, while model reconstruction attacks aim to recreate the underlying model or data from the information available. By enabling aggregate computations on encrypted data without exposing individual client updates to the server or any potential attacker, the scheme prevents the direct exposure of sensitive information. This significantly lowers the risk of data leakage, as attackers cannot access individual contributions. Thus, even if they attempt to infer or reconstruct model details, the lack of access to individual updates disrupts such efforts. Overall, this comprehensive security analysis highlights the robustness of our proposed framework against various attack vectors, ensuring the confidentiality and integrity of the data processed within the federated learning environment.

FL-SSL framework

The proposed framework integrates FL with the SSL-based BYOL method for the classification tasks. The BYOL framework leverages the VGG19 + attention CNN encoder within this framework. This section presents an overview of the framework’s architectural design and operational process.

VGG19 + attention CNN encoder

The encoder proposed in this study comprises the VGG-19 model50 followed by a self-attention block and two convolutional blocks. The encoder begins with the VGG-19 architecture, which is a CNN of 19 layers, comprising 16 convolutional layers and 3 fully connected layers. These layers extract features from the input image through convolution operations. In each convolutional layer, 3 × 3 compact filters with a stride of 1 and padding of 1 pixel are employed. The number of filters utilized in these layers increases progressively, starting with 64 filters in the initial layers and 512 filters in the 16th layer. This hierarchical design enables the network to learn intricate feature patterns. The feature extraction process is structured into 5 groups, each followed by a max-pooling layer with a 2 × 2 kernel size and a stride of 2 (See Fig. 4). These pooling layers effectively downsample the feature maps by retaining the maximum value within each 2 × 2 region, which reduces the dimensionality of the data from 224 × 224 to 7 × 7. The following equation represents each convolutional layer’s output51.

Here, within the layers of (L-1)th and Lth, the connection between mth and nth features is determined by the convolution function ×. The activation function and bias value are represented by \(\:\phi\:\) and \(\:{b}_{n}\) respectively.

Following the final output feature map of VGG-19’s last convolutional layer, a self-attention block is applied to focus on relevant regions of the input features only, enhancing its ability to capture long-range dependencies. The self-attention block is designed with a convolution operation with a kernel size and stride of 1 × 1 and 1 respectively. This convolutional operation is applied to the feature map, resulting in three feature maps (See Fig. 4), each with a resolution of C × H × W. The first feature map is reshaped and transposed, generating a matrix with dimensions of (H × W) × C to perform matrix multiplication with the second feature map with dimensions of C × H × W, resulting in a matrix of (H × W) × (H × W). To derive the attention map, a SoftMax operation is applied. This attention map is further combined with the third feature map C through matrix multiplication, yielding the final self-attention feature map with dimensions of C × (H × W). Thus, the reshaped self-attention feature map and the original input feature map are fused resulting in dimensions of C × H × W. This fusion is done based on a scale parameter which can be calculated as follows52,

Here, Y denotes the final feature map after the fusion. The original feature map is represented by \(\:{Y}_{0}\). \(\:\gamma\:\) and \(\:{Y}_{att}\) are the scale parameter and self-attention feature maps respectively.

After the self-attention block, two convolutional blocks are incorporated which consist of a convolutional layer with 32 filters followed by a MaxPooling2D layer. The convolutional block utilizes a rectified linear unit (ReLU) activation function which efficiently manages the non-linearities within the network by setting negative input values to zero, while allowing positive values to pass unchanged. This can be represented as53,

Here \(\:x\) is the input value. This function effectively introduces sparsity within the network and mitigates the vanishing gradient problem, enhancing the network’s ability to learn complex patterns54. Following each convolutional layer, a MaxPooling2D layer is applied, which serves to reduce the spatial dimensions of the feature maps while retaining the most prominent features. Max pooling operates by selecting the maximum value within a defined spatial window, such as a 2 × 2 region, which optimizes the feature map size and provides translation invariance to the network. The operation can be calculated as55,

Here, \(\:{p}_{i}\) represents the values within the pooling region \(\:P\). These blocks further enhance the spatial data patterns and high-level abstractions extracted by the attention mechanism. Thus, the VGG19 + attention CNN encoder leverages the strengths of the VGG-19 model in conjunction with self-attention mechanisms and convolutional blocks to improve the feature extraction capabilities.

Hyperparameter tuning

An ablation study was conducted on the core VGG19 + attention CNN encoder to determine the optimal hyperparameters for effective feature extraction. This study tested different activation functions, optimizers, learning rates, and dropout rates to find the best configuration. The resulting optimal configuration for the FL-SSL framework was an ELU activation function, Adam optimizer, learning rate of 0.01, and dropout rate of 0.3. We used a batch size of 128, trained for 100 epochs, and reduced computational complexity by resizing input images to 64 × 64. Detailed analysis is available in the “Ablation study" section under the “Results” section.

BYOL

BYOL (Bootstrap Your Own Latent) is a self-supervised learning framework which efficiently pre-trains a deep neural network using large-scale, unlabeled datasets. BYOL contains a twin neural network structure, consisting of an online and a target network27 (See Fig. 5).

The process begins with the online network receiving an input image of \(\:x\). The online network includes an encoder \(\:{e}_{\theta\:}\), a projector \(\:{p}_{\theta\:}\), and a predictor \(\:{q}_{\theta\:}\). The weights of the online network are defined as \(\:\theta\:\). the target network mirrors the online network’s architecture, excluding the predictor, while operating with a separate set of weights \(\:{\theta\:}^{{\prime\:}}\). In each iteration, from the weakly augmented view \(\:{v}_{1}\) of an unlabeled image, the online network results are \(\:{e}_{\theta\:}\), \(\:{p}_{\theta\:}\), and \(\:{q}_{\theta\:}\), the representation, prediction, and projection. The target network generates \(\:{e}_{{\theta\:}^{{\prime\:}}}\) and \(\:{p}_{{\theta\:}^{{\prime\:}}}\) from the strongly augmented view \(\:{v}_{1}\) of the unlabeled image. The online network receives regression targets for its training purpose from the target network \(\:{\theta\:}^{{\prime\:}}\) which is updated exponentially based on the moving average (\(\:\theta\::{\theta\:}^{{\prime\:}}⟵\tau\:{\theta\:}^{{\prime\:}}+\left(1-\tau\:\right)\theta\:\:\), where \(\:\:\tau\:\) represent the target decay rate of the parameters of the online network \(\:\theta\:\). The stop-gradient keeps the target network parameters updated separately during the exponential moving average operation, ensuring a consistent target for training without directly influencing the target network weights through the loss function. This process results in the loss minimization of the BYOL.

To generate the prediction \(\:{q}_{\theta\:}\) of the target projection, the projection \(\:{p}_{\theta\:}\) is normalized followed by \(\:{L}_{2}\) normalization. Then the loss between \(\:{q}_{\theta\:}\) and \(\:{p}_{{\theta\:}^{{\prime\:}}}\) is calculated as follows56,57,

Here, the loss \(\:{L}_{\theta\:,\:\:{\theta\:}^{{\prime\:}}}\) is calculated between the \(\:{v}_{1}\) and \(\:{v}_{2}\) of the online and target networks. For \(\:{v}_{1}\) and \(\:{v}_{2}\), the \(\:{L}_{\theta\:,\:\:{\theta\:}^{{\prime\:}}}\) and \(\:{{L}^{{\prime\:}}}_{\theta\:,\:\:{\theta\:}^{{\prime\:}}}\:\)is calculated within the online network and target network. The overall loss of the BYOL is calculated as shown in Eq. (6).

In the BYOL framework of this study, representation learning of the \(\:{v}_{1}\) and \(\:{v}_{2}\) is accomplished using the VGG19 + attention CNN encoder. Additionally, for the projection, two fully connected layers are employed, consisting of a dense layer followed by a ReLU activation function, followed by another dense layer.

Paillier homomorphic encryption

The PHE algorithm is a well-established cryptosystem known for its additive homomorphic properties, making it highly suitable for privacy-preserving computations, such as those employed in federated learning models. In federated systems, the PHE algorithm ensures secure aggregation of local model weights, enabling meaningful computation without exposing sensitive information to external threats58,59. The algorithm achieves this by allowing operations to be performed directly on ciphertexts, with the results corresponding to operations as if they had been carried out on the plaintexts.

Key generation

At the core of the PHE cryptosystem is the generation of public and private keys. To begin, two large prime numbers, \(\:p\) and \(\:q\), are selected, ensuring that the greatest common divisor (gcd) between \(\:p\times\:q\) and \(\:(p-1)\times\:(q-1)\) is 1. These primes are then used to compute the modulus \(\:n=\:p\times\:q\), and and the Carmichael function \(\:\lambda\:=lcm\left(p-1,\:q-1\right),\) where lcm stands for least common multiple. A generator g is chosen from the set of integers modulo \(\:{n}^{2}\left(g\epsilon{\mathbb{Z}}_{{n}^{2}}^{*}\right)\), such that \(\:g\) satisfies specific conditions, including the divisibility of \(\:n\) by the order of \(\:g\). The public key consists of the pair \(\:(n,g)\), while the private key represented by \(\:(\lambda\:,\:\mu\:)\). Here, \(\:\mu\:\) is s derived from the given formula60,

Encryption

The encryption process begins by converting a plaintext message \(\:m\:\epsilon\:{\mathbb{Z}}_{n}\) into a ciphertext \(\:c\). This is done by selecting a random integer \(\:r\:\epsilon\:{\mathbb{Z}}_{n}^{*}\) and computing, \(\:c=\:{g}^{m}\:\times\:\:{r}^{n}\:mod\:{n}^{2}\). This method ensures that the encryption is probabilistic, adding an extra layer of security by randomizing the ciphertext for each encryption, even if the same plaintext is encrypted multiple times.

Additive homomorphism

A crucial feature of PHE is its additive homomorphic property. This property allows the sum of two plaintexts, \(\:{t}_{1}\) and \(\:{t}_{2}\), to be computed by performing modular multiplication on their corresponding ciphertexts \(\:{c}_{1}\) and \(\:{c}_{2}\) as,

This additive homomorphism is particularly useful in federated learning, where model parameters are aggregated across multiple devices without needing to decrypt the individual data contributions61,62. After this homomorphic operation is completed and the aggregated ciphertext is obtained, the decryption is proceeded to recover the plaintext of the aggregated data.

Decryption

Decryption relies on the private key and involves the calculation of the plaintext from the ciphertext. Given the ciphertext \(\:c\), the message\(\:\:m\) is recovered using the private key parameters \(\:\lambda\:\) and \(\:\mu\:\) through calculating, \(\:m=\left(L\:\left({c}^{\lambda\:}\:mod\:{n}^{2}\:\right)\times\:\mu\:\right)\:mod\:n\). This decryption process ensures that the original plaintext is retrieved correctly and is then used for global model training.

FL framework

FL is a distributed machine learning approach which enables model training on decentralized data, located on user devices like phones or hospitals. This method addresses privacy issues by preserving the data on the client’s server and allowing for decentralized client management63. In a basic FL framework, the central server distributes the initial model to the clients. The clients then train the model on their local data and send only the model updates (not the raw data) back to the server64. To maintain privacy and protect data during exchanges, this study applies PHE for securing client-server communications. PHE allows the server to perform computations on encrypted data without needing to decrypt it, preserving privacy even during operations involving sensitive information. Each client encrypts its model updates using PHE before sending them to the server, protecting these updates from potential cyber threats. After receiving the encrypted model updates from the clients, the server aggregates them without needing to decrypt each update individually, using federated averaging techniques to refine the global model’s encoder. This step completes a communication round, which facilitates secure data exchanges and model updates without exposing raw data to the server. In each communication round, the aggregated model parameters are calculated as follows,

In Eq. 565, the aggregation update w is the average of the individual updates \(\:\text{w}\text{i}\) obtained from N clients. The average aggregation is then calculated by summing the \(\:\text{w}\text{i}\) updates and dividing by the total number of clients N.

Additionally, the performance of this global model is evaluated using a loss function that aggregates the client specific losses. The loss is calculated by evaluating the model weights across all clients, defined as,

Here, \(\:\text{x}\) denotes the total number of clients, \(\:{\text{n}}_{\text{x}}\) is the number of data points from each client, \(\:\text{M}\) represents the model weight vector, and \(\:{\text{f}}_{\text{i}}\left(\text{M}\right)\) is the individual client’s loss function. The \(\:{\text{F}}_{\text{x}}\left(\text{M}\right)\) corresponds to the client-specific loss function aggregated over each client’s predictions with the global model weight vector \(\:\text{M}\). This comprehensive loss, \(\:\text{f}\left(\text{M}\right)\), collectively represents the average model error across clients, reflecting overall performance.

This updated global model encoder is then made available to clients to start the next round of localized training. This process is repeated until the model converges to a satisfactory performance level66. For this study, the round number is set to 10. Figure 6 shows the process of the FL framework.

The foundation of the FL approach is training an encoder on the unlabeled CT scan data, collecting results from the client sites (hospitals) to update the global server for effective classification with a limited number of labeled data. This updates the classification encoder to accurately classify CT scans, as the VGG19 + attention CNN encoder’s pretraining step. In the client server the SSL framework BYOL is used, applying unlabeled input data from the clients.

Largest CT slice selection

The CT scans available at each hospital are generally composed of many 2D image slices stacked to form a 3D representation of a patient’s lungs. Evaluating the entire 3D scan is too computationally intensive for quick model updates in FL. Therefore, an efficient slice selection algorithm is employed to extract a single representative 2D slice for each patient, containing the largest lung region. The algorithm first goes through a lung segmentation process to identify lung regions in the CT volume. Before the segmentation process, the algorithm first detects whether the data is in JPG/PNG or 3D Neuroimaging Informatics Technology Initiative (NIfTI) format. If the data is in NIfTI format, the algorithm first converts the CT slices into JPG images and then processes the images for segmentation. Here the segmentation is done based on several processes. First, a binary threshold is applied with a threshold value of 60. Then, the largest contour is detected and masked with white color. Lastly, the ROI is detected by subtracting the threshold image from the mask. For each image of a lung CT scan, the algorithm calculates the total lung ROI area. The image slice with the maximum lung area is selected. The raw image version of the selected largest ROI image is sent to the model for training. By choosing the slice with the largest visible lung area, we maximize the view of important diagnostic features within the lungs for COVID-19 positive cases. This representative 2D slice is then used for computationally cheaper model training and prediction in the FL-SSL based framework applied across three hospitals. Consequently, through this largest CT slice selection algorithm, the unlabeled data comprises of 1070 CT images overall for the BYOL model training. The slice selection process is illustrated in Fig. 7.

Proposed FL-SSL framework

Utilizing the labeled and unlabeled datasets which are considered as public and private datasets respectively for the FL framework, the SSL-based classification of COVID-19 is conducted. As the labeled dataset is a public dataset which will be externally providing for the training and testing process of the updated VGG-19 + attention CNN encoder, the dataset is processed outside the FL framework by selecting the CT images containing largest lung, following the algorithm described in the “Largest CT Slice Selection” section. A visualization of the overall FL-SSL based classification is shown in Fig. 8.

Figure 6 depicts how three hospitals are contributing to the SSL framework’s training and encoder update process with unlabeled datasets. Figure 8 represents the operational mechanism of the overall FL-SSL framework in executing the classification task. Initially, the unlabeled datasets from the client servers are processed with the CT image selection algorithm. The encoder of the client server in the FL-SSL framework is trained with the processed images. During the iterative training process, the weights of each client’s local encoder are sent to the global server, after encrypting by the PHE algorithm. The global server aggregates these weights to update the global encoder, contributing to the improvement of the global encoder’s classification. For the aggregation process, the federated averaging algorithm is employed, where the classifier weights from all participating clients are updated in each round. The enhanced global encoder is then used for labeled data classification, eliminating the need for large annotated datasets. Labeled data is provided to the global server externally (outside from the client servers). Only a limited amount of labeled data is required, making it suitable for public accessibility. By utilizing this process, the classification task becomes more efficient, as radiologists only need to annotate a small quantity of data, and the use of processed or balanced data is optional.

Computing environment

All experiments were conducted on a system with an Intel Core i5-8400 processor, 16GB RAM, and an NVIDIA GeForce GTX 1660 GPU, providing the necessary computational power for deep learning workloads. A 256GB DDR4 SSD supported fast data storage and retrieval.

Results

This study focuses on the classification of unlabeled CT scans for the accurate classification of COVID and normal lung CT images using an FL-SSL privacy-preserving framework. An ablation study is carried out to determine a stable model configuration that optimizes classification accuracy Analyzing diverse performance metrics, the adaptability and effectiveness of the proposed framework are evaluated. These experiments provide an understanding of how well the proposed FL-SSL framework works for accurate identification in medical imaging.

Ablation study

To get the best possible model performance, an ablation study is carried out to determine the ideal hyperparameter configuration for the proposed VGG19 + attention CNN encoder. To provide insight into the crucial hyperparameter settings influencing the framework’s effectiveness in the classification task, this analysis aimed at identifying factors that have a major impact on the FL-SSL framework’s performance. The results of the ablation study are described below, highlighting how the hyperparameters affect the overall performance of the classification framework. The results of the ablation study are shown in Table 2.

In Table 2, the ablation study investigates how different hyperparameter combinations affect the proposed framework’s performance. Here, the activation function elu exhibited superior performance compared to relu (89.41%), tanh (85.34%), and SoftMax (81.50%) with a test accuracy of 91.64%. Moving on to optimizers, Adam achieved the highest test accuracy of 93.48%, surpassing SGD (87.41%), Nadam (92.91%), and Adamax (91.64%). A learning rate of 0.01 resulted in the highest test accuracy of 95.48% while learning rates of 0.001 (93.48%) and 0.0001 (92.76%) led to slightly lower accuracies. The average time per epoch remained consistent across each investigation of activation function, optimizer and learning rate. Finally, the effect of dropout rates on average time per epoch and test accuracy is investigated. The highest test accuracy of 97.52% is obtained with a dropout rate of 0.3. It is observed that greater dropout rates result in a decrease in accuracy, 0.4 (95.48%) and 0.5 (94.82%) and an increase in average time per epoch. Throughout the optimization process, the FL-SSL framework achieves its highest performance with activation function elu, optimizer adam, a learning rate of 0.01, and a dropout rate of 0.3. Input images of 64 × 64 are used to reduce the computational complexity.

Performance analysis of the proposed FL-SSL framework

The FL-SSL framework is evaluated using performance metrics which are derived from the confusion matrix’s True Positives (TP), True Negatives (TN), False Positives (FP), and False Negatives (FN). These include the False Positive Rate (FPR), False Discovery Rate (FDR), False Negative Rate (FNR), F1 score, Precision, Recall, Test and Training Accuracy, and Matthew’s Correlation Coefficient (MCC). These metrics provide information about how well the FL-SSL framework distinguishes between COVID and normal CT scans. The confusion matrix of the proposed framework is shown in Fig. 9.

The overall accuracy of the framework in differentiating between COVID and normal CT scans, is reflected in the training and test accuracy. Sensitivity, accuracy, and specificity provide information on how well the FL-SSL framework detects positive instances, how accurately positive predictions are made, and how well negative cases are identified. The evaluation metrics are listed in Table 3.

The ability of the FL-SSL framework to accurately classify instances within the training dataset is demonstrated by the training accuracy, which reaches an accuracy of 98.76%. The test accuracy of 97.52%, demonstrates the effectiveness of the framework in categorizing images. The sensitivity score of 98.53% indicates how well it can identify positive cases. Both recall and sensitivity are 98.53%. The precision is 95.71%, suggesting a low incidence of false positives. The specificity of 96.77% demonstrates how well the framework detects negative instances. The F1 Score of 97.10% indicates a strong balance between the framework’s precision and recall. The scores of MCC and NPV are 94.96% and 98.90% respectively indicating the framework’s effectiveness in dealing with imbalanced datasets and predicting true negatives. The FPR, FDR, and FNR values of 3.23%, 4.29%, and 1.47% respectively, further support the general effectiveness of the framework and its reliability in COVID-19 classification.

The accuracy and loss curves provide more information on the behavior of the proposed FL-SSL framework throughout the training and validation phase. The training and validation’s accuracy and loss curves are shown in Fig. 10, providing an illustration of the FL-SSL framework’s learning history, tendency to convergence, and generalization abilities over epochs.

K-fold cross-validation

The k-fold cross-validation method has been employed to evaluate the proposed FL-SSL framework’s robustness and validate its generalization across unseen subsets of the labeled dataset. The labeled dataset has been split into k subsets, and the framework is trained and evaluated k times, using a single instance of each subset as the validation set. A more accurate assessment of the FL-SSL framework’s capabilities can be obtained by examining the average performance across all folds. Figure 11 displays the cross-validation using a k value of 5.

The proposed framework’s k-fold cross-validation results across five folds demonstrate its generalization and performance consistency, as shown in Fig. 11. With a training accuracy of 94.84% and a test accuracy of 93.26% in the first fold. The FL-SSL framework’s robustness is further supported by the second fold, which produced a test accuracy of 94.55% and a training accuracy of 95.37%. The third, fourth, and fifth folds all have test accuracies of 96.25%, 92.87%, and 96.21% and training accuracies of 98.09%, 94.14%, and 97.97%.

Performance comparison between the proposed encoder and TL models with labeled dataset

A comparative analysis has been conducted using well-known transfer learning models, including EfficientNetB5, MobileNet, DenseNet201, VGG16, InceptionV3, VGG19, MobileNetV2, InceptionResNetV2, ResNet50V2, and ResNet50, all of which are trained and tested on labeled datasets, in order to contextualize the performance of the proposed encoder. For this experiment, the proposed encoder is utilized as a classification model. The labeled dataset is split, with 70% allocated for training, 15% for testing and 15% for validation purpose. The accuracy, precision, sensitivity, and F1 score are the main emphasis of the comparative analysis. The proposed encoder’s model consistently outperforms the TL models. The results are shown in Fig. 12.

It is notable that, the proposed encoder’s model has the highest performance for all evaluation metrics with 97.91% training and 95.65% for test accuracy, 92.86% for precision, 97.01% for sensitivity, and 94.89% for F1 score.

Performance comparison between TL models and proposed encoder for the FL-SSL framework

This study introduces a VGG19 + attention CNN-based encoder for the FL-SSL-based classification of lung CT images. A comparative analysis is conducted in this section to assess the proposed encoder’s effectiveness for the FL-SSL-based classification. The ten TL models: VGG19, DenseNet201, VGG16, MobileNet, EfficientNetB5, InceptionV3, MobileNetV2, InceptionResNetV2, ResNet50V2, and ResNet50 are employed as the FL-SSL framework’s encoder to understand how other encoder performs compared to the proposed encoder. While utilizing the TL encoders, the key hyperparameters of activation function, optimizers, learning rate, and dropout rate are kept the same as for the proposed encoder. The comparative analysis evaluates accuracy, precision, sensitivity, and F1 score. The results are presented in Fig. 13.

The Fig. 13 compares the proposed encoder with other encoders across various performance indicators. As illustrated, the proposed encoder consistently surpasses the other TL encoders in terms of train, test, precision, sensitivity, and F1 score, revealing its superior capacity to classify the lung CT scan data.

Comparative analysis of BYOL against two other SSL frameworks

To demonstrate the effectiveness of BYOL, we compared its SSL-based classification performance with MoCo and SimCLR, using our proposed VGG19 + attention CNN as the encoder for each framework. This experiment was conducted using the same dataset and splitting ratio applied in our SSL framework to ensure consistent evaluation. Figure 14 illustrates the performance of the proposed encoder across three SSL frameworks, evaluating train accuracy, test accuracy, precision, sensitivity, and F1 score.

The comparative results in Fig. 14 demonstrate that BYOL consistently achieves the highest performance across all metrics, outperforming both SimCLR and MoCo frameworks. Here, SimCLR exhibits moderate performance, whereas MoCo shows comparatively lower results. These findings highlight the robustness of our proposed SSL framework in classification tasks, further validating its effectiveness.

Evaluating the proposed framework’s adaptability across various modality datasets

The proposed FL-SSL framework demonstrates an impressive performance in classifying CT scan images as COVID-19 or normal. To assess the robustness of the framework across various imaging modalities, an additional evaluation is conducted using three distinct datasets, each representing a different modality. The dataset descriptions are summarized in Table 4.

Table 4 presents the datasets that are utilized for the proposed framework’s evaluation where 75% of the data is designated as the unlabeled dataset, while the remaining 25% is assigned to the labeled dataset. Table 5 shows the performance of the FL-SSL framework’s performance for the different datasets.

Table 5 demonstrates the proposed framework’s classification accuracy for several datasets with different modalities which consist of two classes. Although the proposed SSL-based classification framework was initially developed for COVID-19 CT scan image classification, its effectiveness is not limited to chest X-rays demonstrating its effectiveness in medical image classification. Therefore, this framework holds potential promise for application in multimodal datasets sourced from diverse medical facilities.

Discussion

The integration of FL and PHE in our SSL framework for COVID-19 classification from lung CT scans has profound clinical implications. As healthcare increasingly relies on data-driven solutions, safeguarding patient privacy and ensuring data security are essential. Our framework effectively addresses the privacy risks inherent in FL through PHE, alleviating hospital’s concerns about data leakage during collaboration.

A key advantage of our FL-SSL-based framework is its requirement for only a small labeled dataset, significantly reducing the workload on radiologists who often face pressure to annotate large volumes of data. allows healthcare professionals to focus more on patient care rather than the time-consuming task of data labeling. Additionally, by enabling institutions to train models without sharing raw data, our approach facilitates collaboration across diverse datasets while preserving patient confidentiality. Moreover, as a FL-based framework, our global model benefits from data collected from multiple hospitals, enhancing its reliability and robustness. This collective learning from various sources allows the model to train better across different patient populations and imaging conditions captured from different devices, ultimately leading to improved diagnostic precision. The enhanced ability to learn from diverse data sets ensures that the model remains effective in real-world scenarios, where variations in data are common.

In summary, our privacy-preserving FL-SSL framework not only tackles critical technical challenges in maintaining privacy during medical image classification but also addresses urgent clinical needs by providing a reliable system that does not require extensive annotated data. Future applications may include extending this framework to other diseases and imaging modalities, further maximizing its impact on healthcare, and potentially transforming clinical practice.

Conclusion

This research is conducted to reduce the workload of radiologists with a privacy-preserving automated system that classifies COVID-19 and normal CT images with the FL-SSL framework, incorporating PHE for secure aggregation within the FL system. This framework ensures that medical data are secure throughout the model processing and during data transmission from client to server. Two different datasets are used, one is considered as a labeled dataset, and the other is used as an unlabeled dataset. As this study utilizes the lung CT scans for the classification, the CT slice containing largest lung portion is selected through an automatic process in order to achieve a simpler framework with less computational complexity. The unlabeled dataset is trained with the BYOL constructive learning framework with a VGG19 + attention CNN-based encoder. The updated encoder of the SSL framework is utilized as the global server’s encoder through which the labeled dataset is classified. While the proposed framework demonstrates effectiveness in distinguishing between COVID-19 and normal lung conditions with privacy preservation, it is important to explore real-word datasets which will include a broader range of lung conditions. Furthermore, the opportunities of FL with diverse medical imaging datasets collected from various healthcare centers should be further explored. This would serve as a foundation for improving and enhancing the framework’s performance in more varied medical circumstances and offering a more nuanced comprehension of its adaptability.

Data availability

The datasets are publicly available and free. The links are attached.https://mosmed.ai/datasets/covid191110/ and https://www.kaggle.com/datasets/maedemaftouni/large-covid19-ct-slice-dataset.

References

Bhosale, Y. H. & Patnaik, K. S. PulDi-COVID: Chronic obstructive pulmonary (lung) diseases with COVID-19 classification using ensemble deep convolutional neural network from chest X-ray images to minimize severity and mortality rates, Biomed Signal Process Control https://doi.org/10.1016/j.bspc.2022.104445 (2023).

Team, E. E. Note from the editors: World Health Organization declares novel coronavirus (2019-nCoV) sixth public health emergency of international concern. Euro. Surveill. 25 (5). https://doi.org/10.2807/1560-7917.ES.2020.25.5.200131e (2020).

Cheng, K. et al. WHO declares the end of the COVID-19 global health emergency: Lessons and recommendations from the perspective of ChatGPT/GPT-4, Int. J. Surg., 109 (9), 2859–2862. https://doi.org/10.1097/JS9.0000000000000521 (2023).

Zaki, N., Alashwal, H. & Ibrahim, S. Association of hypertension, diabetes, stroke, cancer, kidney disease, and high-cholesterol with COVID-19 disease severity and fatality: A systematic review, Diabetes and Metabolic Syndrome: Clinical Research and Reviews, 14 (5), 1133–1142. https://doi.org/10.1016/j.dsx.2020.07.005 (2020)

Akter, S., Shamrat, F. M. J. M., Chakraborty, S., Karim, A. & Azam, S. COVID-19 Detection Using Deep Learning Algorithm on Chest X-ray Images, Biology, 10 (11). https://doi.org/10.3390/biology10111174 (2021).

Edouard, M. et al. Coronavirus Pandemic (COVID-19), Our World in Data. Accessed 30 Mar 2024. [Online]. Available: https://ourworldindata.org/coronavirus

Islam, N. et al. Thoracic imaging tests for the diagnosis of COVID-19, Mar. 16, John Wiley and Sons Ltd. https://doi.org/10.1002/14651858.CD013639.pub4 (2021).

Hassan, H. et al. Review and classification of AI-enabled COVID-19 CT imaging models based on computer vision tasks, Elsevier Ltd. https://doi.org/10.1016/j.compbiomed.2021.105123 (2022)

Bhuiyan, M. R. I. et al. Deep Learning-based Analysis of COVID-19 X-ray Images: Incorporating clinical significance and assessing misinterpretation, Digital Health, 9, 1–21. https://doi.org/10.1177/20552076231215915 (2023).

Hussain, E. et al. CoroDet: A deep learning based classification for COVID-19 detection using chest X-ray images. Chaos Solitons Fractals. 142 https://doi.org/10.1016/j.chaos.2020.110495 (2021).

Li, M. et al. Coronavirus Disease (COVID-19): Spectrum of CT findings and temporal progression of the disease, Elsevier USA. https://doi.org/10.1016/j.acra.2020.03.003 (2020).

Agnihotri, A. & Kohli, N. Challenges, opportunities, and advances related to COVID-19 classification based on deep learning. KeAi Commun. Co. https://doi.org/10.1016/j.dsm.2023.03.005 (2023).

Kovács, A. et al. The sensitivity and specificity of chest CT in the diagnosis of COVID-19. https://doi.org/10.1007/s00330-020-07347-x/Published

Pu, J. et al. Automated quantification of COVID-19 severity and progression using chest CT images. Eur. Radiol. 31 (1), 436–446. https://doi.org/10.1007/s00330-020-07156-2 (2021).

Adnan, M., Kalra, S., Cresswell, J. C., Taylor, G. W. & Tizhoosh, H. R. Federated learning and differential privacy for medical image analysis. Sci. Rep. 12 (1). https://doi.org/10.1038/s41598-022-05539-7 (2022).

Liu, W. K., Zhang, Y., Yang, H. & Meng, Q. A Survey on differential privacy for medical data analysis. Springer Sci. Bus. Media Deutschland GmbH https://doi.org/10.1007/s40745-023-00475-3 (2023).

Rakhmiddin, R. & Lee, K. Y. Federated learning for clinical event classification using vital signs data. Multimodal Technol. Interact. 7 (7). https://doi.org/10.3390/mti7070067 (2023).

Sheller, M. J. et al. Federated learning in medicine: Facilitating multi-institutional collaborations without sharing patient data. Sci. Rep. 10 (1). https://doi.org/10.1038/s41598-020-69250-1 (2020).

Morozov, S. P. et al. MOSMEDDATA: Chest CT scans with COVID-19 related findings dataset, (2020). [Online]. Available: www.mosmed.ai.

Maftouni, M. et al. A Robust ensemble-deep learning model for COVID-19 diagnosis based on an integrated CT scan images database, [Online]. Available: https://www.researchgate.net/publication/352296409 (2021).

Kaku, A., Upadhya, S. & Razavian, N. Intermediate Layers matter in momentum contrastive self supervised learning.

Chen, T., Kornblith, S., Norouzi, M. & Hinton, G. A simple framework for contrastive learning of visual representations, [Online]. Available: https://github.com/google-research/simclr (2020).

Salama, W. M. & Aly, M. H. Framework for COVID-19 segmentation and classification based on deep learning of computed tomography lung images. J. Electron. Sci. Technol. 20 (3), 246–256. https://doi.org/10.1016/j.jnlest.2022.100161 (2022).

Ravi, V., Narasimhan, H., Chakraborty, C. & Pham, T. D. Deep learning-based meta-classifier approach for COVID-19 classification using CT scan and chest X-ray images, In Multimedia Systems, Springer Science and Business Media Deutschland GmbH, 1401–1415. https://doi.org/10.1007/s00530-021-00826-1. (2022).

Fung, D. L. X., Liu, Q., Zammit, J., Leung, C. K. S. & Hu, P. Self-supervised deep learning model for COVID-19 lung CT image segmentation highlighting putative causal relationship among age, underlying disease and COVID-19. J. Transl Med. 19 (1). https://doi.org/10.1186/s12967-021-02992-2 (2021).

Ewen, N. & Khan, N. Targeted self supervision for classification on a small covid-19 CT scan dataset. In Proceedings - International Symposium on Biomedical Imaging, IEEE Computer Society, pp. 1481–1485. (2021). https://doi.org/10.1109/ISBI48211.2021.9434047

Gazda, M., Plavka, J., Gazda, J. & Drotar, P. Self-supervised deep convolutional neural network for chest X-Ray classification. IEEE Access. 9, 151972–151982. https://doi.org/10.1109/ACCESS.2021.3125324 (2021).

Park, J., Kwak, I. Y. & Lim, C. A deep learning model with self-supervised learning and attention mechanism for covid-19 diagnosis using chest x-ray images. Electron. (Switzerland). 10 (16). https://doi.org/10.3390/electronics10161996 (2021).

Yan, R. et al. Label-efficient self-supervised federated learning for tackling data heterogeneity in medical imaging. IEEE Trans. Med. Imaging. 42 (7), 1932–1943. https://doi.org/10.1109/TMI.2022.3233574 (2023).

Yang, D. et al. Federated semi-supervised learning for COVID region segmentation in chest CT using multi-national data from China, Italy, Japan. Med. Image Anal. 70 https://doi.org/10.1016/j.media.2021.101992 (2021).

Alam, M. U. & Rahmani, R. Federated semi-supervised multi-task learning to detect covid-19 and lungs segmentation marking using chest radiography images and raspberry pi devices: An internet of medical things application. Sensors 21 (15). https://doi.org/10.3390/s21155025 (2021).

Chetoui, M. & Akhloufi, M. A. Peer-to-peer federated learning for COVID-19 detection using transformers. Computers 12 (5). https://doi.org/10.3390/computers12050106 (May 2023).

Zhang, Y. et al. Mar., Pseudo-data based self-supervised federated learning for classification of histopathological images, IEEE Trans. Med. Imaging, 43 (3), 902–915. https://doi.org/10.1109/TMI.2023.3323540 (2024).

Florescu, L. M. et al. Federated Learning Approach with Pre-trained Deep Learning models for COVID-19 detection from unsegmented CT images. Life 12 (7). https://doi.org/10.3390/life12070958 (2022).

Kumar, R. et al. Jul., Blockchain-federated-learning and deep learning models for COVID-19 detection using CT imaging, IEEE Sens. J., 21 (14), 16301–16314. https://doi.org/10.1109/JSEN.2021.3076767 (2021).

Heidari, A. et al. A new lung cancer detection method based on the chest CT images using Federated Learning and blockchain systems, Artif .Intell. Med.. 141. https://doi.org/10.1016/j.artmed.2023.102572 (2023)

Gupta, M., Kumar, M. & Gupta, Y. A blockchain-empowered federated learning-based framework for data privacy in lung disease detection system. Comput. Hum. Behav. 158 https://doi.org/10.1016/j.chb.2024.108302 (2024).

Li, D. et al. Pmir: an efficient privacy-preserving medical images search in cloud-assisted scenario. Neural Comput. Appl. 36 (3), 1477–1493. https://doi.org/10.1007/s00521-023-09118-3 (2024).

Mahajan, H. B. & Junnarkar, A. A. Smart healthcare system using integrated and lightweight ECC with private blockchain for multimedia medical data processing, Multimed. Tools Appl., 82 (28), 44335–44358 (2023). https://doi.org/10.1007/s11042-023-15204-4

Qamar, S. Federated convolutional model with cyber blockchain in medical image encryption using multiple Rossler lightweight logistic sine mapping. Comput. Electr. Eng. 110 https://doi.org/10.1016/j.compeleceng.2023.108883 (2023).

Hijazi, N. M., Aloqaily, M., Guizani, M., Ouni, B. & Karray, F. Secure federated learning with fully homomorphic encryption for IoT communications, IEEE Internet Things J.. 11 (3), 4289–4300. https://doi.org/10.1109/JIOT.2023.3302065 (2024).

Rieyan, S. A. et al. An advanced data fabric architecture leveraging homomorphic encryption and federated learning. Inform. Fusion. 102 https://doi.org/10.1016/j.inffus.2023.102004 (2024).

Sathish Kumar, G., Premalatha, K., Uma Maheshwari, G. & Kanna, P. R. No more privacy Concern: A privacy-chain based homomorphic encryption scheme and statistical method for privacy preservation of user’s private and sensitive data, Expert Syst Appl 234. https://doi.org/10.1016/j.eswa.2023.121071 (2023).

Truhn, D. et al., “Encrypted federated learning for secure decentralized collaboration in cancer image analysis,” Medical Image Analysis, vol. 92, https://doi.org/10.1016/j.media.2023.103059 (2024).

Walskaar, I., Tran, M. C. & Catak, F. O. A Practical implementation of medical privacy-preserving federated learning using multi-key homomorphic encryption and flower framework, Cryptography, 7 (4). https://doi.org/10.3390/cryptography7040048 (2023).

Khan, H. M., Khan, A., Jabeen, F., Anjum, A. & Jeon, G. Fog-enabled secure multiparty computation based aggregation scheme in smart grid. Comput. Electr. Eng. 94 https://doi.org/10.1016/j.compeleceng.2021.107358 (2021).

Tun, N. W. & Mambo, M. Secure PUF-based authentication systems. Sensors 24 (16). https://doi.org/10.3390/s24165295 (2024).

Shi, Y. & Nekouei, E. Secure adaptive control of linear networked systems using paillier encryption. IEEE Trans. Circuits Syst. I Regul. Pap. https://doi.org/10.1109/TCSI.2024.3401223 (2024).

Yazdinejad, A., Dehghantanha, A., Karimipour, H., Srivastava, G. & Parizi, R. M. A robust privacy-preserving federated learning model against model poisoning attacks. IEEE Trans. Inf. Forensics Secur. 19, 6693–6708. https://doi.org/10.1109/TIFS.2024.3420126 (2024).

Namani, S., Akkapeddi, L. S., & Bantu, S. Performance analysis of VGG-19 deep learning model for COVID-19 detection. 2022 9th International Conference on Computing for Sustainable Global Development (INDIACom), 781–787 (2022). https://doi.org/10.23919/indiacom54597.2022.9763177

Nguyen, T. H., Nguyen, T. N. & Ngo, B. V. A VGG-19 Model with transfer learning and image segmentation for classification of tomato leaf disease, AgriEngineering, 4 (4), 871–887. https://doi.org/10.3390/agriengineering4040056 (2022).

Sun, C., Ai, Y., Wang, S. & Zhang, W. A two-branch network for weakly supervised object localization, Electronics (Switzerland), 9 (6), 1–15. https://doi.org/10.3390/electronics9060955 (2020).

Agarap, A. F. Deep learning using rectified linear units (ReLU). [Online]. Available: http://arxiv.org/abs/1803.08375 (2018).

Wang, X., Qin, Y., Wang, Y., Xiang, S. & Chen, H. ReLTanh: An activation function with vanishing gradient resistance for SAE-based DNNs and its application to rotating machinery fault diagnosis, Neurocomputing, 363, 88–98. https://doi.org/10.1016/j.neucom.2019.07.017 (2019).

Zafar, A. et al. A Comparison of pooling methods for convolutional neural networks, MDPI. https://doi.org/10.3390/app12178643 (2022).

Hu, X., Li, T., Zhou, T., Liu, Y. & Peng, Y. Contrastive learning based on transformer for hyperspectral image classification, Appl. Sci. (Switzerland), 11 (18) (2021). https://doi.org/10.3390/app11188670

Grill, J. B. et al. Bootstrap your own latent a new approach to self-supervised learning. [Online]. Available: https://github.com/deepmind/deepmind-research/tree/master/byol

Fang, H. & Qian, Q. Privacy preserving machine learning with homomorphic encryption and federated learning. Future Internet. 13 (4). https://doi.org/10.3390/fi13040094 (2021).

Zhang, J. et al. VPFL: A verifiable privacy-preserving federated learning scheme for edge computing systems, Digital Communications and Networks. 9 (4), 981–989. https://doi.org/10.1016/j.dcan.2022.05.010 (2023).

Zhang, F., Huang, H., Chen, Z. & Huang, Z. Robust and privacy-preserving federated learning with distributed additive encryption against poisoning attacks. Comput. Netw. 245 https://doi.org/10.1016/j.comnet.2024.110383 (2024).

Xie, Q. et al. Efficiency optimization techniques in privacy-preserving federated learning with homomorphic encryption: A brief survey. IEEE Internet Things J. 11, 24569–24580. https://doi.org/10.1109/JIOT.2024.3382875 (2024).

Wang, L. et al. PriVeriFL: Privacy-preserving and aggregation-verifiable federated learning. IEEE Trans. Serv. Comput. https://doi.org/10.1109/TSC.2024.3451183 (2024).

Bernal Bernabe, J. et al. Privacy-preserving solutions for blockchain: Review and challenges. Inst. Electr. Electron. Eng. Inc. https://doi.org/10.1109/ACCESS.2019.2950872 (2019).

Brik, B., Ksentini, A. & Bouaziz, M. Federated learning for UAVs-enabled wireless networks: Use cases, challenges, and open problems. IEEE Access. 8, 53841–53849. https://doi.org/10.1109/ACCESS.2020.2981430 (2020).

Moshawrab, M., Adda, M., Bouzouane, A., Ibrahim, H. & Raad, A. Reviewing federated learning aggregation algorithms; Strategies, contributions, limitations and future perspectives. MDPI. (2023). https://doi.org/10.3390/electronics12102287

Liu, J. et al. FedPA: An adaptively partial model aggregation strategy in federated learning.

Kermany, D. Z. K. G. M. Labeled optical coherence tomography (OCT) and chest x-ray images for classification. Mendeley Data. (2018).

Melanoma Skin. Cancer Dataset of 10000 Images. [Online]. Available: https://www.kaggle.com/datasets/hasnainjaved/melanoma-skin-cancer-dataset-of-10000-images

M. J. G.-C. W. C. de A. P. Wilfrido Gómez-Flores, BUS-BRA: A breast ultrasound dataset with BI-RADS categories, Zenodo. (2023).

Funding

No funds, grants or other support was received.

Author information

Authors and Affiliations

Contributions

Sadia Sultana Chowa, Sami Azam: Conceptualization, Methodology, Software. Md Rahad Islam Bhuiyan and Asif Karim: Data curation, Writing- Original draft preparation, interim review and editing. Mst. Sazia Tahosin and Sidratul Montaha: Visualization, Investigation. Sadia Sultana Chowa, Mehedi Hassan: Validation. Mohod Asif Shah, Sami Azam: Writing- Reviewing and Finalization.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Consent for publication

All authors provide the consent for publication.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Chowa, S.S., Bhuiyan, M.R.I., Tahosin, M.S. et al. An automated privacy-preserving self-supervised classification of COVID-19 from lung CT scan images minimizing the requirements of large data annotation. Sci Rep 15, 226 (2025). https://doi.org/10.1038/s41598-024-83972-6

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-024-83972-6