Abstract

To address the high labor intensity and low harvesting efficiency of shiitake mushroom production and management, this article presents a novel detection and classification method based on mamba-YOLO. The method follows the picking standards and grade specifications for shiitake mushrooms, enabling automatic detection and quality grading. Experiments on a self-constructed shiitake mushroom dataset show that mamba-YOLO achieves a precision (P) of 98.89%, a recall (R) of 98.79%, a mean average precision at an IoU threshold of 50% (mAP@0.5) of 97.86%, and a mean average precision averaged over IoU thresholds from 50% to 95% in increments of 5% (mAP@0.5–0.95) of 89.97%. The classification accuracies for the seven categories (mushroom stick, plane-surface immature, plane-surface mature, cracked-surface immature, cracked-surface mature, deformed mature, and deformed immature shiitake mushrooms) are 98.1%, 98.3%, 98.2%, 98.8%, 98.5%, 96.2%, and 96.9%, respectively. These results indicate that the proposed detection and grading method effectively determines the maturity of shiitake mushrooms and categorizes them by cap texture. The detection time of 8.3 ms falls within the acceptable range for real-time applications, and the model is compact at 6.1 M parameters, which eases deployment and scaling. Overall, the lightweight design, high detection accuracy, and efficient detection speed of mamba-YOLO provide robust technical support for shiitake mushroom harvesting robots.

Introduction

Shiitake mushrooms have become an important part of the global edible mushroom industry owing to their nutritional value and broad market1. As one of the most widely cultivated edible mushrooms, shiitake had a global market size of approximately 68 billion USD in 20242. In China, shiitake production reached about 13.152 million tons in 2024, accounting for over 90% of global production3. The sector plays a crucial role in rural economic development and agricultural modernization. With the widespread adoption of factory production and the integration of intelligent management technologies, the industry is moving toward efficient and sustainable development4. Substitute cultivation is currently the predominant production technique; it is favored for its high yields, ease of management, and short production cycle5,6,7, and it has become the mainstream approach for large-scale production, particularly in factory settings. However, harvesting still relies mainly on manual picking, which requires substantial manpower, material, and financial resources. Moreover, missing the optimal harvest time can reduce the quality and market competitiveness of shiitake mushrooms. Mechanized harvesting is therefore the preferred route to rapid and accurate harvesting. Among its many technical challenges, precise detection and classification of shiitake mushrooms is a crucial component. It draws on multiple disciplines, including machine vision, deep learning, agricultural materials science, and decision modeling, and it remains one of the most critical and challenging aspects of developing automated shiitake mushroom harvesting systems.

Traditional detection methods primarily rely on features such as color, shape, and texture to identify target objects8,9,10,11. Many researchers have explored mushroom detection using conventional image processing techniques. Wang et al.12 developed an automatic detection and sorting system for fresh white button mushrooms. They proposed a method that uses the watershed algorithm and Canny edge detection for feature recognition and extraction. Their approach effectively recognizes and classifies fresh white mushrooms, and it mitigates the impact of shadows and petioles on the images through algorithmic adjustments. Yang et al.13 proposed a segmentation and recognition method based on edge gradient features to address the challenge of overlapping Agaricus bisporus in complex factory environments. Using global gradient threshold calculation, Canny edge detection, and Harris corner detection, the method achieved an identification rate exceeding 96% with an average coordinate deviation rate below 1.59%. However, traditional image processing methods struggle to maintain recognition accuracy under variable lighting and complex backgrounds because of the limitations of the algorithms themselves. Overcoming these limitations requires new technological tools.

With advancements in machine vision and deep learning technologies, their applications in agriculture have become increasingly prevalent14,15,16,17,18,19. This approach enhances the recognition accuracy of crops and accelerates the recognition speed. It significantly improves the adaptability and generalization capabilities of the models. In the realm of mushroom recognition, Subramani et al.20 conducted a comparative analysis of various algorithms, including SVM, ResNet50, YOLOv5, and AlexNet, to effectively classify non-toxic mushrooms. Ketwongsa et al.21 proposed a CNN and R-CNN-based method to classify edible and poisonous mushrooms in Thailand, achieving accuracies of 98.50% and 95.50%, respectively, with reduced testing time. Maurya et al.22 utilized machine-learning techniques to extract texture features from mushrooms and employed an SVM classifier to identify poisonous varieties. Research on the detection of shiitake mushrooms is relatively limited. Ozbay et al.23 employed Grad-CAM, LIME, and Heatmap methods within a CNN architecture to visualize images of shiitake mushrooms and extract deep features from various structural images. They utilized the ASO algorithm for feature selection in the CNN model, successfully identifying and classifying nine types of mushroom data, achieving a classification accuracy of 95.45% across different species. Liu et al.24 proposed an efficient channel pruning mechanism based on the YOLOX framework for the quality detection and grading of shiitake mushrooms. The algorithm significantly reduces the number of model parameters compared to the original network, while maintaining detection accuracy. However, the model’s recognition efficiency is affected when facing shiitake mushrooms with different growth stages and morphologies, which poses a potential problem for mechanized individual harvesting. 

The growth cycle of shiitake mushrooms usually lasts several months, from mycelium growth and germination to final maturity. Over this period the fruiting body passes through different shapes, such as oval and round. The maturity of shiitake mushrooms can therefore be classified by combining machine vision with the shape characteristics of each period, which further improves the efficiency and precision of picking individual shiitake mushrooms.

The above studies provided valuable insights for this work. However, there is still room for improvement in the practical application of mechanized shiitake mushroom harvesting. To address these limitations, this study focuses on facility-cultivated shiitake mushrooms and proposes an identification and grading method based on mamba-YOLO. To handle recognition difficulties such as small targets, dense growth, and complex backgrounds, we combine a multi-scale detection mechanism that enhances the perception of small targets and strengthens the model's ability to separate and recognize targets in complex backgrounds. To handle the different growth stages of shiitake mushrooms, maturity grading is realized from the different shapes of the fruiting bodies combined with decision analysis. In addition, because effective quality grading can enhance the market competitiveness of shiitake mushrooms after harvesting, the texture of the cap is used as the grading standard. Combining this standard with a deep learning model yields accurate classification of shiitake mushrooms and improves the accuracy of quality grading. This study provides reliable technical support for the intelligent harvesting and quality assessment of shiitake mushrooms. With shiitake mushrooms serving as a representative example, it also offers reference value for the intelligent identification and accurate classification of other small-target harvested crops.

Materials and methods

Data set construction

The growth characteristics of Lentinula edodes

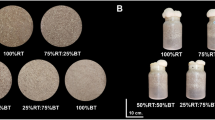

The cultivation methods for shiitake mushrooms primarily consist of cut-log cultivation and substitute cultivation, as shown in Fig. 1. In cut-log cultivation, sections of broadleaf trees measuring 10–15 cm in diameter are selected, treated, and drilled for inoculation with mycelium. This method typically involves a lengthy growth cycle and a relatively low yield. Conversely, substitute cultivation entails packing a sterilized cultivation medium into bags, followed by inoculation with shiitake mushroom strains. This approach is characterized by a shorter growth cycle, higher yields, and easier management, making it well suited to large-scale factory production and subsequent mechanized management.

The growth process of shiitake mushrooms encompasses several stages: mycelium growth, germination, fruiting body development, and maturation. Following inoculation and cultivation, the mycelium growth stage transitions into the fruiting body growth phase after germination. The morphology of shiitake mushroom exhibits diversity as it progresses through these growth stages, as shown in Fig. 2. Harvesting is guided by the principle of picking the ripe first and the unripe later; specifically, shiitake mushroom with a cap diameter exceeding 40 mm is considered pickable. Additionally, quality evaluation criteria involve observing the surface texture of the fruiting body cap, as illustrated in Fig. 3. Key indices such as smoothness and texture clarity are analyzed for grading, facilitating subsequent harvesting and marketing operations.
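The picking rule described above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the 40 mm threshold comes from the text, while the function names, the `(mushroom_id, cap_diameter_mm)` input format, and the assumption that cap diameters are already available in millimetres are hypothetical.

```python
from typing import List, Tuple

# Threshold taken from the text: caps wider than 40 mm are considered pickable.
PICK_DIAMETER_MM = 40.0


def is_pickable(cap_diameter_mm: float) -> bool:
    """Return True when the cap diameter exceeds the picking threshold."""
    return cap_diameter_mm > PICK_DIAMETER_MM


def harvest_plan(caps: List[Tuple[str, float]]) -> Tuple[List[str], List[str]]:
    """Split (mushroom_id, cap_diameter_mm) pairs into pick-now and leave-for-later lists.

    The input format is a hypothetical stand-in for whatever the vision
    system reports; only the threshold itself comes from the text.
    """
    pick_now = [mushroom_id for mushroom_id, d in caps if is_pickable(d)]
    later = [mushroom_id for mushroom_id, d in caps if not is_pickable(d)]
    return pick_now, later


if __name__ == "__main__":
    example = [("m1", 52.0), ("m2", 31.5), ("m3", 40.0), ("m4", 44.2)]
    print(harvest_plan(example))
```

A cap of exactly 40 mm is left for later here, because the text specifies a diameter "exceeding" 40 mm.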

Collection and production of data sets

Experimental samples were collected from the Qihe Biological Intelligent Factory in Zichuan District, Zibo, Shandong Province. The cultivation method was substitute cultivation, using the Qihe 9 variety. Samples were collected from July to August 2024 with a Huawei Mate 30 Pro smartphone as the imaging equipment; its parameters are listed in Table 1. As illustrated in Fig. 4, the shooting device was fixed 40 cm away from the shiitake mushrooms and kept parallel to the target. Images were acquired by adjusting the position of the tripod, giving a total of 2,320 images. The dataset exhibited a variety of growth characteristics, as shown in Fig. 5, including single distribution, occlusion, and overlap. These complex growth features significantly increase the difficulty of detecting and classifying shiitake mushrooms.

Photoshop was used to resize the collected images to a resolution of 640 × 480 pixels. The dataset was then partitioned into training (1,624 images), validation (465 images), and test (231 images) subsets in a ratio of 7:2:1, following common practice in similar studies17,25,26. LabelImg was used to annotate all images within the training set. During labeling, the smallest enclosing rectangle was used to mark the outline of each shiitake mushroom, ensuring that each rectangular label contained only one mushroom while minimizing background pixels. Based on the texture and diameter of the cap, shiitake mushrooms were classified into six categories: cracked-surface mature (c-mature), cracked-surface immature (c-immature), plane-surface mature (p-mature), plane-surface immature (p-immature), deformed mature (d-mature), and deformed immature (d-immature). In addition, the mushroom stick was labeled as “mushroom stick” to facilitate subsequent picking. To improve the model’s generalization and robustness, the dataset was augmented using Python scripts27; the augmentation operations are listed in Table 2. The labeled data were augmented with random brightness adjustments and Gaussian noise, giving a total of 6,960 augmented images. Some examples are shown in Fig. 6.
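The split and the two augmentation operations named above can be sketched as follows. This is an illustrative sketch using NumPy rather than the authors' scripts: the subset counts come from the text, but the function names, the random seed, the brightness range (0.7 to 1.3), and the noise standard deviation (10 grey levels) are assumptions, since the actual settings are given only in Table 2. The total of 6,960 equals three times the 2,320 collected images, which would be consistent with keeping each original alongside two augmented copies; that reading is also an assumption.

```python
import random

import numpy as np


def split_dataset(items, counts=(1624, 465, 231), seed=0):
    """Shuffle items and cut them into train/validation/test subsets of the given sizes."""
    if sum(counts) != len(items):
        raise ValueError("subset sizes must add up to the number of items")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_train, n_val, _ = counts
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]
    return train, val, test


def random_brightness(image, rng, low=0.7, high=1.3):
    """Scale all pixel values by one random factor (range is an assumed setting)."""
    factor = rng.uniform(low, high)
    scaled = image.astype(np.float32) * factor
    return np.clip(scaled, 0, 255).astype(np.uint8)


def add_gaussian_noise(image, rng, sigma=10.0):
    """Add zero-mean Gaussian noise per pixel (sigma is an assumed setting)."""
    noise = rng.normal(0.0, sigma, size=image.shape)
    noisy = image.astype(np.float32) + noise
    return np.clip(noisy, 0, 255).astype(np.uint8)


def augment(image, rng):
    """Return one brightness-adjusted copy and one noisy copy of the image."""
    return [random_brightness(image, rng), add_gaussian_noise(image, rng)]


if __name__ == "__main__":
    train, val, test = split_dataset(list(range(2320)))
    print(len(train), len(val), len(test))

    rng = np.random.default_rng(0)
    image = np.full((480, 640, 3), 128, dtype=np.uint8)  # placeholder 640 x 480 image
    copies = augment(image, rng)
    print([c.shape for c in copies])
```

Both operations change only pixel intensities, so bounding-box annotations made with LabelImg can be reused unchanged for the augmented copies.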

Lentinula edodes detection and classification method based on mamba-YOLO

Deep learning has gained significant traction in the field of computer vision, with YOLO emerging as a milestone in object detection. This framework continuously integrates new technologies to enhance model performance. The introduction of a transformer structure improves the model’s global modeling capabilities; however, it also increases computational complexity. To address this, the recent mamba-YOLO incorporates the state space model (SSM), which alleviates the computational burden through its linear time complexity. Innovations in the mamba-YOLO network include28:

1) Introducing the SSM to handle long-distance dependence;

2) Optimizing the SSM foundation and adapting it specifically to object detection tasks;

3) Designing the LS Block and RG Block modules to accurately capture the local dependence of images and enhance robustness.

The network structure is shown in Fig. 7.

The network structure of mamba-YOLO primarily consists of the ODMamba Backbone, the PAFPN (Path Aggregation Feature Pyramid Network), and the Head. The ODMamba Backbone is the backbone network of the model and includes components such as the Simple Stem, ODSSBlock, and the “Vision Clue Merge” module. This backbone processes the input images of shiitake mushrooms, gradually extracting and merging features. The PAFPN provides the configuration for the feature pyramid, incorporating the ODSSBlock module along with upsample and concat operations and convolution layers to fuse and refine features across different scales. The Head defines the structure of the detection head, with distinct layers for detecting small, medium, and large targets.

(i) ODMamba Backbone.

ODMamba Backbone is the backbone network of the model, which is responsible for processing input images and extracting preliminary features to generate enough information for further processing by subsequent modules. First, the inputted shiitake mushroom image is preprocessed by the Simple Stem module. The structure diagram of the Simple Stem module is shown in Fig. 8. Segmentation blocks are used as its initial module to divide the image into non-overlapping fragments and initially extract the image features of the shiitake mushroom.

Features are then extracted from the processed feature map by a series of ODSSBlocks, whose structure is shown in Fig. 9. The module uses state space model technology to capture features at different levels effectively. It primarily consists of three components: LSBlock (Fig. 9d), RGBlock (Fig. 9b), and SS2D (Fig. 9c).

Through the operations in formulas (1)–(3), the network learns deeper and richer feature representations, while batch normalization keeps training and inference efficient and stable.

In these formulas, Ac is the activation function, BN is the batch normalization operation, LN is the layer normalization operation, and RG is the RGBlock module.
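As a hedged sketch, formulas (1)–(3) can be written with the symbols defined above, assuming the ODSSBlock follows the formulation in the Mamba-YOLO reference28. The feature-map symbol $Z^{l}$, the operators $\mathrm{LS}$ and $\mathrm{SS2D}$ (standing for the LSBlock and SS2D modules), and $\mathrm{Conv}_{1\times1}$ are notational assumptions:

```latex
\begin{align}
Z^{l-2} &= \mathrm{Ac}\left(\mathrm{BN}\left(\mathrm{Conv}_{1\times 1}\left(Z^{l-3}\right)\right)\right) \tag{1} \\
Z^{l-1} &= \mathrm{SS2D}\left(\mathrm{LN}\left(\mathrm{LS}\left(Z^{l-2}\right)\right)\right) + Z^{l-2} \tag{2} \\
Z^{l} &= \mathrm{RG}\left(\mathrm{LN}\left(Z^{l-1}\right)\right) + Z^{l-1} \tag{3}
\end{align}
```

Read this way, (1) is the input projection, while (2) and (3) are two residual stages: the first applies LSBlock and SS2D, and the second applies RGBlock.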

As shown in Fig. 9b, RGBlock improves model performance at low computational cost by creating two branches from the input and implementing a fully connected layer as a convolution on each branch. One branch uses a depthwise separable convolution as a position-encoding module, and residual connections let gradients propagate more effectively during training; this keeps the computational cost low while retaining and exploiting the spatial structure of the image. The RGBlock module uses the nonlinear GELU activation function to control the information flow at each level, merges the result with the other branch by element-wise multiplication, refines channel information and global features by convolution, and finally performs feature summation. RGBlock can thus capture more global features with only a slight increase in computing cost.
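The gating pattern described for RGBlock can be illustrated with a small NumPy sketch. It is a simplified reading of the paragraph above, not the reference implementation: the tensor layout `(C, H, W)`, the hidden width, the 3 × 3 depthwise kernel size, the tanh approximation of GELU, and all function and parameter names are assumptions.

```python
import numpy as np


def pointwise_conv(x, weight):
    """1x1 convolution: weight of shape (C_out, C_in) mixes channels at every pixel."""
    return np.einsum("oc,chw->ohw", weight, x)


def depthwise_conv3x3(x, kernels):
    """3x3 depthwise convolution with zero padding; kernels has shape (C, 3, 3)."""
    _, h, w = x.shape
    padded = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    out = np.zeros_like(x)
    for dy in range(3):
        for dx in range(3):
            out += kernels[:, dy, dx, None, None] * padded[:, dy:dy + h, dx:dx + w]
    return out


def gelu(x):
    """Tanh approximation of the GELU activation."""
    return 0.5 * x * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * x ** 3)))


def rg_block(x, w_in, dw_kernels, w_out):
    """Gated two-branch block with a final residual sum.

    x: (C, H, W); w_in: (2 * hidden, C); dw_kernels: (hidden, 3, 3); w_out: (C, hidden).
    """
    both = pointwise_conv(x, w_in)            # one 1x1 projection feeding both branches
    gate, value = np.split(both, 2, axis=0)   # split channels into gate and value branches
    gate = gelu(depthwise_conv3x3(gate, dw_kernels) + gate)  # position encoding + residual
    mixed = pointwise_conv(gate * value, w_out)              # element-wise gating, then 1x1 conv
    return x + mixed                          # feature summation with the input


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = rng.standard_normal((4, 6, 6))
    y = rg_block(
        x,
        rng.standard_normal((16, 4)),
        rng.standard_normal((8, 3, 3)),
        rng.standard_normal((4, 8)),
    )
    print(y.shape)
```

The output keeps the input shape, so the block can be dropped into a residual stack without further reshaping.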

As shown in Fig. 9d, the LSBlock module enhances the extraction of local features. First, a depthwise convolution is applied to the input features, so that each input channel is processed separately without mixing channel information. This step extracts local spatial information from the input feature map, improving accuracy while keeping the computational cost and the number of parameters low. Batch normalization follows, providing a regularization effect that helps mitigate overfitting. The module then uses a convolution to mix channel information, followed by an activation function that helps preserve the distribution of information. This allows the model to learn more complex feature representations and to extract rich multi-scale contextual information from the input feature map. Finally, the processed features are fused with the original input through a residual connection. This fusion preserves the original information while enriching it with learned features, so the model can capture complex patterns without losing important information, which improves its performance.
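The sequence of steps described for LSBlock (depthwise convolution, normalization, channel mixing, activation, residual fusion) can be sketched in NumPy as follows. This is an illustrative reading of the paragraph, not the reference implementation: the `(C, H, W)` layout, the 3 × 3 kernel size, the SiLU activation, the per-channel standardization used in place of trained batch normalization, and all names are assumptions.

```python
import numpy as np


def depthwise_conv3x3(x, kernels):
    """Filter each channel with its own 3x3 kernel (zero padding); kernels: (C, 3, 3)."""
    _, h, w = x.shape
    padded = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    out = np.zeros_like(x)
    for dy in range(3):
        for dx in range(3):
            out += kernels[:, dy, dx, None, None] * padded[:, dy:dy + h, dx:dx + w]
    return out


def channel_norm(x, eps=1e-5):
    """Standardize each channel; a stand-in for batch normalization without learned scale."""
    mean = x.mean(axis=(1, 2), keepdims=True)
    var = x.var(axis=(1, 2), keepdims=True)
    return (x - mean) / np.sqrt(var + eps)


def pointwise_conv(x, weight):
    """1x1 convolution: weight of shape (C_out, C_in) mixes channel information."""
    return np.einsum("oc,chw->ohw", weight, x)


def silu(x):
    """SiLU activation, x * sigmoid(x) (an assumed choice of activation)."""
    return x / (1.0 + np.exp(-x))


def ls_block(x, dw_kernels, pw_weight):
    """Local-feature block: depthwise conv -> norm -> 1x1 conv -> activation -> residual."""
    y = depthwise_conv3x3(x, dw_kernels)   # local spatial features, channels kept separate
    y = channel_norm(y)                    # normalization step
    y = silu(pointwise_conv(y, pw_weight)) # mix channels, then apply the activation
    return x + y                           # fuse with the original input


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = rng.standard_normal((4, 8, 8))
    out = ls_block(x, rng.standard_normal((4, 3, 3)), rng.standard_normal((4, 4)))
    print(out.shape)
```

Because the depthwise step uses one kernel per channel, its weight count grows with the number of channels rather than with the product of input and output channels, which is the parameter saving the paragraph refers to.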

The Vision Clue Merge module further integrates and optimizes these features; its structure is shown in Fig. 10. It ensures that features extracted at different levels are combined effectively, enhancing the detection of both small targets (immature shiitake mushrooms) and large targets (mature shiitake mushrooms).

(ii) PAFPN (Path Aggregation Feature Pyramid Network).

The PAFPN uses the features provided by the ODSSBlock modules for multi-scale processing. In this stage, the spatial resolution of the feature maps is adjusted and refined by combining upsample (U) and concat (C) operations with convolution. With this configuration, the model processes high-resolution and low-resolution features at the same time, so it can more accurately identify and classify shiitake mushrooms of different sizes and cap textures that appear in the same image.
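The upsample and concat operations can be illustrated with a short NumPy sketch. It shows only the generic top-down fusion step, under assumptions not stated in the text: nearest-neighbour upsampling by a factor of 2, a `(C, H, W)` layout, feature maps at strides 16 and 32 of a 640 × 480 input, and hypothetical channel counts.

```python
import numpy as np


def upsample2x(feature):
    """Nearest-neighbour 2x upsampling of a (C, H, W) feature map."""
    return feature.repeat(2, axis=1).repeat(2, axis=2)


def fuse(deep, shallow):
    """Upsample the deeper (coarser) map and concatenate it with the shallower one."""
    up = upsample2x(deep)
    if up.shape[1:] != shallow.shape[1:]:
        raise ValueError("spatial sizes must match after upsampling")
    return np.concatenate([up, shallow], axis=0)  # stack along the channel axis


if __name__ == "__main__":
    # Assumed sizes: a 640 x 480 image gives 20 x 15 cells at stride 32 and 40 x 30 at stride 16.
    deep = np.zeros((256, 15, 20))
    shallow = np.zeros((128, 30, 40))
    print(fuse(deep, shallow).shape)
```

In a full network a convolution would follow the concatenation to refine the fused channels; that step is omitted here.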

(iii) Head.

The Head is the last part of the model and is responsible for the final object detection task. It utilizes specialized convolutional layers to predict the bounding box positions, sizes, and categories of each detected object. Typically, the Head incorporates detection layers of varying scales to accommodate a wide range of objects, from small to large. This design ensures that the model can be effectively adapted to different sizes and scenarios of shiitake mushrooms, enhancing its versatility in real-world applications.

Experimental environment

The mamba-YOLO recognition model was trained on a computer with the following configuration: a 12th Gen Intel(R) Core(TM) i9-12900K processor with a base frequency of 3.20 GHz, an NVIDIA GeForce RTX 3080 Ti graphics card, and the Ubuntu operating system.

The algorithm in this study is implemented primarily in Python 3.10. Model training used PyTorch, a deep learning framework developed by Facebook; further details can be found in the table-linked resources. The Mamba-YOLO model used in this study is described in Ref. 28. The YOLOv8 model, as implemented in the Ultralytics package, is detailed in Ref. 29. Similarly, the implementations of Faster R-CNN, YOLOv6s, and YOLOv7 can be found in Refs. 30, 31, and 32, respectively. A comprehensive list of the software tools used in this study is provided in Table 3.

The specific parameters for model training are detailed in Table 4. The initial learning rate is set to 0.01, following common deep learning practices, to facilitate rapid convergence while maintaining stability. A weight decay of 0.0005 is applied to mitigate overfitting and enhance generalization. Stochastic Gradient Descent (SGD) is chosen as the optimization algorithm for its suitability in large-scale data training and its effectiveness in improving global convergence.
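As a worked illustration of these settings, a single SGD update with L2 weight decay can be written in plain Python. This is a minimal sketch of the textbook update rule using the learning rate and weight decay quoted above; momentum and any learning-rate schedule are omitted because they are not stated in the text, and the function name is hypothetical.

```python
def sgd_step(weights, grads, lr=0.01, weight_decay=0.0005):
    """One plain SGD update: w <- w - lr * (g + weight_decay * w)."""
    return [w - lr * (g + weight_decay * w) for w, g in zip(weights, grads)]


if __name__ == "__main__":
    weights = [1.0, -2.0]
    grads = [0.5, 0.1]
    print(sgd_step(weights, grads))
```

The weight-decay term pulls every weight slightly toward zero at each step, which is the regularizing effect referred to above.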

Results and discussion

Results and discussion of Shiitake mushroom detection

Evaluation index

To verify the performance of the mamba-YOLO recognition model, precision (P), recall (R), mean average precision (mAP), and detection speed were selected as evaluation indexes33,34.

Here, TP is the number of harvestable shiitake mushrooms correctly identified by the model, FP is the number of non-harvestable shiitake mushrooms incorrectly classified as harvestable, FN is the number of harvestable shiitake mushrooms that the model fails to detect, P(r) is the precision at recall level r, dr is the change in recall from the previous level, and C is the number of categories; in this work, C = 7.
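Written with the symbols defined above, these metrics take their standard form. The per-category average precision $AP_i$ is an added symbol, and the original equation numbering is not assumed:

```latex
\begin{align*}
P &= \frac{TP}{TP + FP} \times 100\% \\
R &= \frac{TP}{TP + FN} \times 100\% \\
AP &= \int_{0}^{1} P(r)\,\mathrm{d}r \\
mAP &= \frac{1}{C} \sum_{i=1}^{C} AP_i
\end{align*}
```

For mAP@0.5, a detection counts as a true positive when its IoU with a ground-truth box is at least 0.5; mAP@0.5–0.95 averages the same quantity over IoU thresholds from 0.5 to 0.95 in steps of 0.05.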

Network training results

Fig. 11a shows the loss curve throughout training: as the number of iterations increases, the loss gradually stabilizes. The curves for precision (P), recall (R), mAP@0.5, and mAP@0.5–0.95 on the validation set are presented in Fig. 11b. During the initial 100 iterations, the performance metrics rise sharply and fluctuate significantly. As training progresses, they gradually stabilize within their respective ranges.

Results of Shiitake mushroom detection

The trained mamba-YOLO recognition model was then tested, with the recognition accuracy and results for each category presented in Table 5 and Fig. 12. The table shows that the recognition precision for each category is 96% or higher, with a mean average precision of 97.86%. This indicates that shiitake mushrooms at various quality levels and growth stages can be classified effectively. The figure shows that different types of shiitake mushrooms are accurately identified and classified even in complex environments characterized by small targets, dense growth, and overlapping occlusion. The confidence levels exceed 0.9, indicating that the mamba-YOLO recognition method performs strongly on the shiitake mushroom recognition task.

Discussion of Shiitake mushroom detection

During recognition and classification, some shiitake mushrooms are missed or misdetected. The possible reasons are as follows: 1) influenced by light intensity and the external environment, the network mistakenly identifies small light and shadow patterns in the background as immature shiitake mushrooms, as indicated by the arrow at the lower left corner of Fig. 13a; 2) because the color of fresh shiitake mushrooms is similar to that of the mushroom sticks, some small shiitake mushrooms are missed, as indicated by the right arrow in Fig. 13a and the up arrow in Fig. 13b; 3) owing to severe occlusion between shiitake mushrooms, a small number of them are not detected, as shown in Fig. 13b and c.

While there are still a few instances of missed detection during the network recognition process, these cases primarily involve small shiitake mushrooms that have just emerged and immature ones that are heavily shielded. Since these do not meet picking standards, their impact on actual harvesting operations is negligible. This indicates that the mamba-YOLO model maintains high accuracy and confidence for ripe shiitake mushrooms at the picking stage, effectively supporting picking decisions and ensuring production efficiency and operational quality. Future optimizations could involve adjusting the model’s recognition threshold and enhancing its detection capabilities for small targets, further improving feature extraction in complex environments.

Comparison of detection algorithms

To further verify the effectiveness of mamba-YOLO in the classification and recognition of shiitake mushrooms, it was compared under the same conditions with other mainstream object detection algorithms, including Faster R-CNN (Ref. 30), YOLOv6s (Ref. 31), YOLOv7 (Ref. 33), YOLOv8 (Ref. 34), and YOLOv5s. The test results are presented in Table 6.

Table 6 shows that when mamba-YOLO processes shiitake mushroom images, the P, R, mAP@0.5, and mAP@0.5–0.95 are 98.89%, 98.79%, 97.93%, and 89.97%, respectively, and the detection speed is 8.3 ms. The network also holds certain advantages over current mainstream models. Its P is 3.99, 1.09, 5.49, 1.44, and 1.22 percentage points higher than that of Faster R-CNN, YOLOv5s, YOLOv6s, YOLOv7, and YOLOv8, respectively. Its R is higher by 9.49, 2.69, 3.59, 0.6, and 1.16 percentage points, and its mAP@0.5 is higher by 7.76, 0.56, 2.88, 0.27, and 0.33 percentage points. These results show that mamba-YOLO delivers excellent overall performance in shiitake mushroom detection and classification, ensuring high precision and supporting accurate picking, thereby enhancing automation and intelligence in harvesting.

The detection results of the various algorithms are illustrated in Fig. 14. The figure shows that mamba-YOLO outperforms Faster R-CNN, YOLOv5s, YOLOv6s, YOLOv7, and YOLOv8 in recognition and classification. Notably, mamba-YOLO excels at identifying small targets and subtle features. This capability supports accurate detection even in complex environments, highlighting the robustness of the model.

Meanwhile, to better evaluate the application of our proposed model in mushroom recognition and grading, in Table 7, we summarize the relevant results of previous mushroom recognition studies, including object detection and quality grading. We compare key metrics such as mean average precision, number of parameters, and detection speed. Experimental results demonstrate that our proposed method is competitive in terms of accuracy and detection speed, while also achieving a favorable balance between computational complexity and model size.

Performance evaluation under different lighting conditions

To assess the adaptability of the proposed detection algorithm to varying lighting, we evaluated the model’s performance in different illumination environments: low illumination (using shading material to reduce ambient light), natural light, high illumination (using an LED light for additional lighting), and backlighting. For each scenario, 100 images were collected and used for testing. The experimental results are presented in Table 8 and Fig. 15.

Mamba-YOLO achieved its highest detection performance under natural light, with an mAP of 97.43%, demonstrating strong adaptability to standard illumination. Under low and high illumination, the detection accuracy declined slightly. This may be attributed to insufficient feature visibility in weak lighting and to overexposure causing loss of detail under intense lighting. The lowest mAP (94.28%) was observed in the backlighting scenario, primarily because of reduced target contrast and shadow interference.

Overall, mamba-YOLO demonstrated strong robustness across different lighting conditions. However, its performance still degrades under extreme illumination variations. Future work will focus on improving its generalization capability in complex agricultural environments through adaptive illumination enhancement techniques and model optimization strategies.

Feasibility analysis of picking robot application

As shown in Table 6, mamba-YOLO achieves a detection speed of 8.3 ms, which is slightly slower than other YOLO versions. However, this speed remains acceptable for real-time applications, allowing robots to recognize and respond effectively in the complex environments where shiitake mushrooms grow. For the picking robot, overall reaction time encompasses various factors, including mechanical action, path planning, and obstacle avoidance. The detection speed of mamba-YOLO fits well within these operational parameters, ensuring the robot can maintain an efficient picking rhythm.
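To put the 8.3 ms figure in context, the per-image detection time can be converted to a throughput and compared with a camera frame interval. This is a small worked calculation, not a measurement: the 30 frames-per-second camera rate is a hypothetical assumption, and the 8.3 ms value applies to the training hardware described earlier rather than to an embedded device.

```python
def frames_per_second(latency_ms: float) -> float:
    """Convert a per-image latency in milliseconds to images per second."""
    return 1000.0 / latency_ms


def remaining_budget_ms(latency_ms: float, camera_fps: float) -> float:
    """Time left in each camera frame interval after detection has finished."""
    return 1000.0 / camera_fps - latency_ms


if __name__ == "__main__":
    detection_ms = 8.3          # detection time reported in the text
    assumed_camera_fps = 30.0   # hypothetical camera frame rate
    print(round(frames_per_second(detection_ms), 1))
    print(round(remaining_budget_ms(detection_ms, assumed_camera_fps), 1))
```

Under these assumptions, detection alone would run at roughly 120 images per second and leave about 25 ms of each frame interval for path planning and motion control.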

The model has 6.1 M parameters, fewer than Faster R-CNN (22.7 M), YOLOv6s (11.1 M), and YOLOv7 (30.4 M), although more than the figures listed for YOLOv5s (1.12 M) and YOLOv8 (5.1 M). This compact size makes it suitable for deployment on resource-limited embedded devices or low-power platforms. For picking robots, using smaller processing units helps reduce system power consumption, extend battery life, and enhance portability and operational flexibility. The small model size also eases deployment and updates, enabling rapid migration and scaling of applications across devices.

Mamba-YOLO’s high-precision detection, combined with its relatively lightweight computing requirements, makes it highly feasible for use in picking robots. For agricultural tasks that demand precision and real-time performance, such as shiitake mushroom harvesting, mamba-YOLO not only ensures efficient target detection but also optimizes the robot’s operational efficiency and energy consumption while maintaining high recognition accuracy. This advancement further promotes the development of intelligent agricultural picking technology.

Conclusion and future work

To address the high labor intensity and low efficiency of manual shiitake mushroom harvesting, this paper proposed and verified the use of the mamba-YOLO model for accurate detection and classification of shiitake mushrooms, covering both the detection of different growth stages and quality classification. Experimental results on the self-made shiitake mushroom detection dataset showed that the P, R, mAP@0.5, and mAP@0.5–0.95 of mamba-YOLO were 98.89%, 98.79%, 97.86%, and 89.97%, respectively, with a detection speed of 8.3 ms. The detection images showed that mamba-YOLO achieves high recognition accuracy and classification ability for small targets, complex occlusion, and densely distributed shiitake mushrooms. Combined with its lightweight design and high detection speed, the model provides effective technical support for shiitake mushroom picking robots.

Despite its advantages, the proposed mamba-YOLO detection and classification method has several limitations that should be acknowledged. First, the dataset split in this study did not incorporate cross-validation, which could make the model evaluation more robust. Second, the model’s detection accuracy is influenced by variations in lighting, which may affect its performance in diverse real-world scenarios. Third, the algorithm has not yet been tested in real time on an edge device, leaving its practical deployment performance unverified. Future work will therefore focus on expanding image collection under diverse real-world conditions. This will involve increasing the dataset size, incorporating samples from varied scenarios, lighting conditions, and growth stages, and employing data augmentation to improve model generalization and robustness. Alternative image acquisition methods, such as multi-angle imaging and hyperspectral imaging, will be explored to improve detection accuracy in highly occluded and visually complex environments. Further optimization of the model’s computational efficiency will also be considered to ease deployment on resource-constrained robotic platforms and to ensure real-time performance and scalability in practice. Finally, we will consider applying cross-validation when splitting the training, validation, and test sets, which should give more reliable and consistent results across different data splits.

Data availability

The data that support the findings of this study are available from the corresponding author upon reasonable request.

References

Wang, Z., Tao, K., Yuan, J. & Liu, X. Design and experiment on mechanized batch harvesting of Shiitake mushrooms. Comput. Electron. Agric. 217, 108593 (2024).

Market Research (2024). https://www.polarismarketresearch.com/industry-analysis/mushroom-market

Xun, M. Z. Production analysis of Chinese mushroom industry and top ten provinces and cities in terms of production from 2010 to 2024. (2025). https://www.dongfangqb.com/article/11206

Velliangiri, S., Sekar, R. & Anbhazhagan, P. Using MLPA for smart mushroom farm monitoring system based on IoT. Int. J. Netw. Virtual Organ. 22 (4), 334–346 (2020).

Kobayashi, T. et al. Mushroom yield of cultivated Shiitake (Lentinula edodes) and fungal communities in logs. J. Res. 25 (4), 269–275 (2020).

Atila, F. Compositional changes in lignocellulosic content of some agro-wastes during the production cycle of Shiitake mushroom. Sci. Hortic. 245, 263–268 (2019).

Gu, X. et al. Sustainability assessment of a Qingyuan mushroom culture system based on emergy. Sustainability 11 (18), 4863 (2019).

Tian, Y., Yang, G., Wang, Z., Li, E. & Liang, Z. Instance segmentation of apple flowers using the improved Mask R-CNN model. Biosyst. Eng. 193, 264–278 (2020).

Liu, Q., Chen, S. L., Chen, Y. X. & Yin, X. C. Improving license plate recognition via diverse stylistic plate generation. Pattern Recognit. Lett. 183, 117–124 (2024).

Greer, R., Gopalkrishnan, A., Keskar, M. & Trivedi, M. M. Patterns of vehicle lights: addressing complexities of camera-based vehicle light datasets and metrics. Pattern Recognit. Lett. 178, 209–215 (2024).

Kurtulmus, F., Lee, W. S. & Vardar, A. Green citrus detection using ‘eigenfruit’, color and circular Gabor texture features under natural outdoor conditions. Comput. Electron. Agric. 78 (2), 140–149 (2011).

Wang, F. et al. An automatic sorting system for fresh white button mushrooms based on image processing. Comput. Electron. Agric. 151, 416–425 (2018).

Yang, S., Ni, B., Du, W. & Yu, T. Research on an improved segmentation recognition algorithm of overlapping agaricus bisporus. Sensors 22 (10), 3946 (2022).

Romero-Bautista, V., Altamirano-Robles, L., Díaz-Hernández, R., Zapotecas-Martínez, S. & Sanchez-Medel, N. Evaluation of visual SLAM algorithms in unstructured planetary-like and agricultural environments. Pattern Recognit. Lett. 186, 106–112 (2024).

Amziane, A., Losson, O., Mathon, B. & Macaire, L. MSFA-Net: A convolutional neural network based on multispectral filter arrays for texture feature extraction. Pattern Recognit. Lett. 168, 93–99 (2023).

Zheng, T., Jiang, M., Li, Y. & Feng, M. Research on tomato detection in natural environment based on RC-YOLOv4. Comput. Electron. Agric. 198, 107029 (2022).

Fu, L. et al. Fast detection of banana bunches and stalks in the natural environment based on deep learning. Comput. Electron. Agric. 194, 106800 (2022).

Lu, S., Chen, W., Zhang, X. & Karkee, M. Canopy-attention-YOLOv4-based immature/mature apple fruit detection on dense-foliage tree architectures for early crop load estimation. Comput. Electron. Agric. 193, 106696 (2022).

Zhang, Y. et al. Real-time strawberry detection using deep neural networks on embedded system (rtsd-net): an edge AI application. Comput. Electron. Agric. 192, 106586 (2022).

Subramani, S., Imran, A. F., Abhishek, T. T. M. & Yaswanth, J. Deep learning based detection of toxic mushrooms in Karnataka. Procedia Comput. Sci. 235, 91–101 (2024).

Ketwongsa, W., Boonlue, S. & Kokaew, U. A new deep learning model for the classification of poisonous and edible mushrooms based on improved AlexNet convolutional neural network. Appl. Sci.-Basel 12 (7), 3409 (2022).

Maurya, P. & Singh, N. P. Mushroom classification using feature-based machine learning approach. In Proceedings of 3rd International Conference on Computer Vision and Image Processing: CVIP 2018 1, 197–206 (2020).

Özbay, E., Özbay, F. A. & Gharehchopogh, F. S. Visualization and classification of mushroom species with multi-feature fusion of metaheuristics-based convolutional neural network model. Appl. Soft Comput. 164, 111936 (2024).

Liu, Q., Fang, M., Li, Y. & Gao, M. Deep learning based research on quality classification of Shiitake mushrooms. LWT-Food Sci. Technol. 168, 113902 (2022).

Rizvi, S. Z., Jamil, M. & Huang, W. Enhanced defect detection on wind turbine blades using binary segmentation masks and YOLO. Comput. Electron. Agric. 120, 109615 (2024).

Li, Y., Gao, J., Chen, Y. & He, Y. Objective-oriented efficient robotic manipulation: A novel algorithm for real-time grasping in cluttered scenes. Comput. Electron. Agric. 123, 110190 (2025).

Paul, A., Machavaram, R., Kumar, D. & Nagar, H. Smart solutions for capsicum harvesting: unleashing the power of YOLO for detection, segmentation, growth stage classification, counting, and real-time mobile identification. Comput. Electron. Agric. 219, 108832 (2024).

Wang, Z., Li, C., Xu, H. & Zhu, X. Mamba YOLO: SSMs-based YOLO for object detection. ArXiv Preprint (2024). arXiv:2406.05835.

Varghese, R. & Sambath, M. Yolov8: A novel object detection algorithm with enhanced performance and robustness. In 2024 International Conference on Advances in Data Engineering and Intelligent Computing Systems (ADICS) 1–6 (2024).

Ren, S., He, K., Girshick, R. & Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 39 (6), 1137–1149 (2016).

Li, C. et al. YOLOv6: A single-stage object detection framework for industrial applications. ArXiv Preprint (2022). arXiv:2209.02976.

Wang, C. Y., Bochkovskiy, A. & Liao, H. Y. M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition 7464–7475 (2023).

Redmon, J., Divvala, S., Girshick, R. & Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE conference on computer vision and pattern recognition 779–788 (2016).

Jiang, B., Luo, R., Mao, J., Xiao, T. & Jiang, Y. Acquisition of localization confidence for accurate object detection. In Proceedings of the European conference on computer vision (ECCV) 784–799 (2018).

Cong, P., Feng, H., Lv, K., Zhou, J. & Li, S. MYOLO: a lightweight fresh Shiitake mushroom detection model based on YOLOv3. Agriculture-Basel 13 (2), 392 (2023).

Liu, H., Hu, Q. & Huang, D. Research on the wild mushroom recognition method based on transformer and the multi-scale feature fusion compact bilinear neural network. Agriculture-Basel 14 (9), 1618 (2024).

Cengil, E. & Çınar, A. Poisonous mushroom detection using YOLOV5. Turk. J. Sci. Technol. 16 (1), 119–127 (2021).

Zhu, L., Pan, X., Wang, X. & Haito, F. A small sample recognition model for poisonous and edible mushrooms based on graph convolutional neural network. Comput. Intell. Neurosci. 2022 (1), 2276318 (2022).

Acknowledgements

This work was supported by the Shandong Natural Science Foundation Program under Grant ZR2022MC067 and by the Agricultural Scientific and Technological Innovation Project of Shandong Academy of Agricultural Sciences under Grants CXGC2023G24, CXGC2024A08, and CXGC2024F07.

Author information

Contributions

KangKang Qi: Writing - original draft, Methodology, Funding acquisition. Zhen Yang: Writing - review & editing, Methodology, Visualization. Yangyang Fan: Visualization, Methodology. Hualu Song: Validation. Zhichao Liang: Visualization, Validation. Shuai Wang: Supervision, Writing - review & editing. Fengyun Wang: Funding acquisition, Writing - review & editing.

Ethics declarations

Competing interests

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Qi, K., Yang, Z., Fan, Y. et al. Detection and classification of Shiitake mushroom fruiting bodies based on Mamba YOLO. Sci Rep 15, 15214 (2025). https://doi.org/10.1038/s41598-025-00133-z
