Abstract

High-quality whole-slide scanning is expensive, complex, and time-consuming, thus limiting the acquisition and utilization of high-resolution histopathology images in daily clinical work. Deep learning-based single-image super-resolution (SISR) techniques provide an effective way to solve this problem. However, the existing SISR models applied in histopathology images can only work in fixed integer scaling factors, decreasing their applicability. Though methods based on implicit neural representation (INR) have shown promising results in arbitrary-scale super-resolution (SR) of natural images, applying them directly to histopathology images is inadequate because they have unique fine-grained image textures different from natural images. Thus, we propose an Implicit Self-Texture Enhancement-based dual-branch framework (ISTE) for arbitrary-scale SR of histopathology images to address this challenge. The proposed ISTE contains a feature aggregation branch and a texture learning branch. We employ the feature aggregation branch to enhance the learning of the local details for SR images while utilizing the texture learning branch to enhance the learning of high-frequency texture details. Then, we design a two-stage texture enhancement strategy to fuse the features from the two branches to obtain the SR images. Experiments on publicly available datasets, including TMA, HistoSR, and the TCGA lung cancer datasets, demonstrate that ISTE outperforms existing fixed-scale and arbitrary-scale SR algorithms across various scaling factors. Additionally, extensive experiments have shown that the histopathology images reconstructed by the proposed ISTE are applicable to downstream pathology image analysis tasks.

Introduction

High-resolution (HR) whole slide images (WSIs) contain rich cellular morphology and pathological patterns, and they are the gold standard for clinical diagnosis and the basis for automated histopathology image analysis tasks, including segmentation and classification1,2,3,4. However, the acquisition and utilization of digital WSIs remain limited in the daily clinical workflow4,5. On the one hand, HR digital WSIs are typically obtained through sophisticated and costly whole-slide scanning equipment, which is often difficult to access in remote and underserved regions. On the other hand, acquiring HR digital WSIs involves using dedicated micro-cameras within the whole slide scanner to capture image fragments from different local regions of the specimen, which are then stitched together to form a complete image depicting the entire specimen6. Such a digital process is highly time-consuming4,5. Furthermore, HR digital WSIs are very large, often reaching gigapixels, which places additional demands on clinical funding support, professional training, ample data storage, and efficient data management2,7. Therefore, if it is possible to scan low-resolution (LR) histopathology images with cheaper devices while designing algorithms that can produce WSIs maintaining high quality, the digitization process could be accelerated, and the clinical application of automated techniques to analyze histopathology images could be promoted4,5,8.

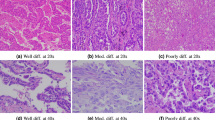

Motivation of our ISTE. (a) Existing SR methods for histopathology images6,9,10,11,12,13,14 can only achieve fixed integer-scale SR and need to retrain the model to achieve different scaling factors; (b) Existing SR algorithms based on implicit neural networks for natural images (exemplified by LIIF15) perform SR directly in the spatial ___domain, and lack attention and enhancement of image texture information; (c) ISTE is an efficient dual-branch framework based on implicit self-texture enhancement for arbitrary-scale histopathology image SR. ISTE further enhances its performance through feature-based and spatial ___domain-based texture enhancement; (d) We use the Canny operator16 to extract texture from both natural and histopathology images. It is evident that, in contrast to natural images, histopathology images contain a large amount of fine-grained cell morphology and arrangement information, and they tend to have richer texture information.

Super-resolution (SR) algorithms based on deep learning can accurately map a single LR image to an HR image10,14,17,18,19,20,21,22,23,24,25. Recently, deep learning-based methods have been widely applied in histopathology image SR. Most approaches construct a large dataset of LR-HR image pairs to train neural networks in an end-to-end manner. The trained neural networks can generate HR images with input LR images. For example, Mukherjee et al.10 utilized a convolutional neural network with an upsampling layer to produce SR images. Chen et al.12 proposed a spatial wavelet dual-stream network to perform the SR image generation. As shown in Fig. 1a, although these previous methods demonstrate promising performance, they can only be trained and tested at fixed integer scales as they rely on up-sampling modules such as learnable deconvolution or pixel shuffle10,12. If different scaling factors are required, the network would need to be retrained for each specific scale. However, in clinical pathological diagnosis, doctors usually need to continuously zoom in and out of sections at different scaling factors, so the applicability of these models is greatly limited. This highlights the importance of arbitrary-scale SR models for histopathology imaging. Once trained, such a model could perform SR at multiple scales without the need for retraining. Furthermore, it enables scaling at any magnification, including non-integer scaling factors. This capability not only assists doctors in observing and analyzing histopathology images at various scales, leading to more accurate diagnoses, but also better meets clinical needs for images at different magnifications. Unfortunately, to our knowledge, no existing arbitrary-scale SR model is specifically designed for histopathology images.

Recently, inspired by implicit neural representation (INR)26,27,28, some studies have pioneered arbitrary-scale SR for natural images15,29. For example, Chen et al.15 proposed the local implicit image function (LIIF), which represents 2D images as latent code through an encoder and maps the input coordinates and corresponding latent variables to RGB values through the decoding function based on the multilayer perceptron (MLP), enabling image SR at arbitrary scales. As shown in Fig. 1b, although these methods can be directly applied to histopathology images, they do not account for the unique texture characteristics of histopathology images, resulting in sub-optimal performance. As shown in Fig. 1d, histopathology images contain a large amount of fine-grained cell morphology and repetition, unlike natural images. Better reconstructing the unique texture characteristics at arbitrary scales is essential for histopathology image SR.

Motivated by the observation above, we propose an efficient dual-branch framework based on implicit self-texture enhancement (ISTE) for arbitrary-scale SR of histopathology images to better handle their distinctive textures. Figure 1c briefly illustrates the overall framework of ISTE. Specifically, ISTE consists of a feature aggregation branch and a texture learning branch. In the feature aggregation branch, we introduce the Local Feature Interaction (LFI) module, which is designed to enhance feature interaction within local regions and to focus the framework’s attention on discriminative local details such as the morphology and structure of cell nuclei. In the texture learning branch, we propose the Texture Learner (TL), aiming to enhance the learning of high-frequency texture information, including details like intercellular gaps and tissue texture fragments. After that, we design a two-stage texture enhancement strategy for these two branches, where the first stage is feature-based texture enhancement, and the second stage is spatial ___domain-based texture enhancement. Considering that histopathology images contain many similar cell morphologies and periodic texture patterns, we assume that these similar regions can assist each other in reconstruction in the feature space, so we design the self-texture fusion (STF) module to accomplish feature-based texture enhancement. The main idea is to retrieve the texture information from the texture learning branch and transfer it to the feature aggregation branch for information fusion and enhancement. For spatial ___domain-based texture enhancement, we decode the features of the two branches into RGB values in the spatial ___domain using the local pixel decoder (LPD) and the local texture decoder (LTD), respectively, and perform information fusion in the spatial ___domain. These two decoders are based on implicit neural networks15, thus enabling image SR at arbitrary scales.
Extensive experiments on three public datasets have shown that ISTE performs better than existing fixed-scale and arbitrary-scale SR algorithms at multiple scales and helps to improve downstream task performance. Overall, the contributions of this paper are as follows:

- We introduce ISTE, an efficient dual-branch framework based on implicit self-texture enhancement for arbitrary-scale SR of histopathology images. ISTE recovers the texture details from the low-resolution image through feature-based texture enhancement and spatial ___domain-based texture enhancement.

- The proposed ISTE achieves state-of-the-art performance at various scaling factors on three public datasets, and we demonstrate the effectiveness of the proposed texture enhancement strategy through a series of ablation experiments.

- The histopathology images reconstructed by ISTE are shown to be effective for two downstream tasks in pathology image analysis: gland segmentation and cancer detection. The performance of these tasks can be improved by using the reconstructed images.

Related works

Deep learning-based super-resolution methods for natural images

Single-image super-resolution (SISR) refers to recovering an HR image from an LR image or an LR image sequence, which is a classical low-level computer vision task with a wide range of applications19,20,21,22,23,24,25. Deep neural networks can achieve accurate mapping from LR images to HR images due to their powerful fitting ability. Thus, they have become the mainstream approach in current SR studies. Numerous methods based on convolutional neural networks (CNNs) have been proposed for natural image SR, including SRCNN30, EDSR17, and RDN31. To further improve the performance of SR, some methods utilized residual modules32,33, densely connected modules34,35, and other blocks36,37 for the design of the CNNs. Subsequently, a series of SR methods based on attention mechanism have emerged, such as channel attention38,39, self-attention (IPT40, SwinIR41), and non-local attention42,43. However, these methods can only be trained and tested at a fixed integer scale, and need to be retrained for new scaling factors.

In recent years, implicit neural representation (INR) has been proposed as a continuous data representation for various tasks in computer vision26,27,28. INR uses a neural network (usually a coordinate-based MLP) to establish a mapping between coordinates and their signal values, which allows continuous and efficient modeling of 2D image signals. This approach has been widely used in research on arbitrary-scale SR15,29,44,45,46. For example, Chen et al.15 first applied INR to the SR algorithm and proposed the local implicit image function (LIIF) for arbitrary-scale SR. Lee et al.29 proposed the local texture estimator (LTE), which transforms coordinates into Fourier ___domain information to enhance the representation of the local implicit function. Chen et al.44 proposed the local implicit transformer (LIT) to enhance the local implicit function’s focus on the context of the target reconstruction region. Fu et al.45 introduced the local mixed implicit network (LMI), which considers multiple independent point coordinates and features to learn the spatial texture information of real-world images in a mixed manner. Although these methods can be directly applied to histopathology images for continuous-scale super-resolution, they fail to recover the special textures of histopathology images effectively.

Deep learning-based super-resolution methods for pathological images

In recent years, deep learning-based SR algorithms have been widely used in pathological images to improve imaging resolution6,9,10,11,12,13,14,47,48. Upadhyay et al.9 developed a generative adversarial network that integrated the tasks of pathological image SR and surgical smoke removal into a single framework. Mukherjee et al.10 implemented SR image generation using a CNN with an up-sampling layer and augmented the outputs using the K-nearest neighbor algorithm. Chen et al.12 accomplished the SR task through a spatial wavelet dual-stream network incorporating a refined context fusion module. Xie et al.47 proposed the multi-features extraction module and the multi-scale selective fusion method to better extract and fuse multi-scale features for super-resolution. Li et al.14 employed a multi-scale CNN-based generative adversarial network for SR image generation and introduced a curriculum learning training strategy. Wu et al.6 incorporated a magnification classification branch into the SR network, improving SR performance through multi-task learning. These studies demonstrate the promise of using SR to enhance pathological image resolution in resource-limited settings. However, they still have some limitations. For instance, they restrict training and testing to specific scaling factors, and the resultant SR outputs still leave room for refinement. We attribute this primarily to a lack of adequate consideration for the unique textural characteristics of pathological images. In this paper, we introduce ISTE as a solution to address these challenges, aiming to achieve high-quality arbitrary-scale SR of pathological images.

Workflow of our ISTE. The LR image \(X_{LR}\) is first input into the encoder to get the pre-extracted feature map \(F_{LR}\). In the feature aggregation branch, we input the feature \(F_{LR}\) into the local feature interactor and a convolutional layer to obtain \(F_{LFIC}\). In the texture learning branch, we input the feature \(F_{LR}\) into the texture learner to obtain the texture feature \(F_{TL}\). Then the feature maps from the two branches are input to the self-texture fusion module to accomplish feature-based enhancement. Finally, the enhanced feature \(F_{STF}\) output from the STF module and the texture feature \(F_{TL}\) output from the texture learner are decoded into RGB values respectively, and added up to accomplish spatial ___domain-based texture enhancement.

Methods

Problem formulation and framework overview

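Given a set of N pairs of corresponding LR images and HR images \(\left\{ X_{LR}^i, Y_{HR}^i\right\} _{i=1}^N\), the objective is to find the optimal parameters \(\hat{\theta }\) of the SR model \(F_\theta\):

\[\hat{\theta }=\arg \min _\theta \sum _{i=1}^N L\left( F_\theta \left( X_{LR}^i\right) , Y_{HR}^i\right) \tag{1}\]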

where \(X_{LR}^i\) is an LR image and \(Y_{HR}^i\) is its corresponding ground truth, and L is the L1 loss function that measures the difference between the ground truth and the generated SR images. Figure 2 shows the overall framework of the proposed ISTE. We first utilize the backbone of SwinIR41 as the encoder to perform feature pre-extraction on the input LR image \(X_{LR}\) and then input the pre-extracted feature \(F_{LR}\) into the upper feature aggregation branch and lower texture learning branch of ISTE, respectively. In the feature aggregation branch, we input the feature \(F_{LR}\) into the local feature interactor (LFI) to enhance the interaction of features in the local region and obtain feature \(F_{LFI}\), which helps to improve the model’s ability to focus on local details in the image. In the texture learning branch, we input the feature \(F_{LR}\) into the texture learner (TL) to enhance the learning of high-frequency information and extract the feature \(F_{TL}\). Then we design a two-stage texture enhancement strategy for these two branches, where the first stage is feature-based texture enhancement, and the second stage is spatial ___domain-based texture enhancement. In the first stage, we design the self-texture fusion (STF) module to leverage the interaction of similar regions of the pathological images in the feature space, thereby accomplishing feature-based texture enhancement to assist in reconstruction. In the second stage, we decode the \(F_{STF}\) from the STF module to obtain the image \(I_{LPD}\) through the local pixel decoder (LPD). Simultaneously, we decode the \(F_{TL}\) from the TL module to obtain the image \(I_{LTD}\) through the local texture decoder (LTD). Subsequently, we perform spatial summation of \(I_{LTD}\) and \(I_{LPD}\), obtaining the final reconstructed HR image \(I_{Pred}\). The purpose of the second stage is to fully utilize the features \(F_{TL}\) learned by the texture learner and decode them into the spatial ___domain for texture enhancement.

Local feature interactor

We propose the LFI module to enhance the interaction of features within local regions, thereby capturing the correlation of features within local regions to improve the model’s focus on local details such as the morphology and structure of cells in the histopathology image. As shown in Fig. 3, the size of the feature map \(F_{LR}\) is \(h \times w \times 64\), and we denote each vector of \(F_{LR}\) as \(F_{LR}^j(j=1,2, \ldots , h \times w)\). The LFI first assigns a window of size \(3 \times 3\) to each vector of \(F_{LR}\), and the eight neighboring vectors in the window around \(F_{LR}^j\) form a set \(F_N^j=\left\{ F_{N_i}^j \mid i=3,4, \ldots , 10\right\}\). The average pooling result of the vectors within a window is denoted as \(F_{P}^j\). The feature map \(F_{LFI}\) output by the LFI is calculated through self-attention so that each point on the feature map incorporates local features while paying more attention to itself. We denote each vector of \(F_{LFI}\) as \(F_{LFI}^j(j=1,2, \ldots , h \times w)\), and it is calculated as follows:

\[F_{LFI}^j=\textrm{softmax}\left( \frac{Q_{LR}^j\left[ K_1^j, K_2^j, \ldots , K_{10}^j\right] ^T}{\sqrt{d}}\right) \left[ V_1^j, V_2^j, \ldots , V_{10}^j\right] \tag{2}\]

where \(Q_{LR}^j\) is the query mapped linearly from \(F_{LR}^j\), \(K_1^j\) is the key mapped linearly from \(F_{LR}^j\), \(V_1^j\) is the value mapped linearly from \(F_{LR}^j\), \(K_2^j\) is the key mapped linearly from \(F_{P}^j\), \(V_2^j\) is the value mapped linearly from \(F_{P}^j\), \(\left\{ K_i^j \mid i=3,4, \ldots , 10\right\}\) is the key mapped linearly from \(F_{N}^j\), \(\left\{ V_i^j \mid i=3,4, \ldots , 10\right\}\) is the value mapped linearly from \(F_{N}^j\), and d is the dimension of these vectors. The parameters used by each window are shared in the self-attention calculation.
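As an illustration of the attention pattern described above, the following minimal sketch computes the output for a single position from its own vector, the window average, and its eight neighbors. It makes several assumptions that are not stated in the text: the linear projections for queries, keys, and values are replaced by the identity, the average pool is taken over all nine vectors of the window, and the function name `local_window_attention` is hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def local_window_attention(center, neighbors):
    """Attend from one feature vector to 10 tokens of its 3x3 window.

    Tokens are ordered as: the vector itself, the window average, then the
    eight neighbors. Identity projections are an assumption of this sketch.
    """
    d = len(center)
    window = [center] + neighbors
    # Average pooling over the window (assumed to include the center).
    pooled = [sum(v[c] for v in window) / len(window) for c in range(d)]
    tokens = [center, pooled] + neighbors
    # Scaled dot-product scores between the query and each key.
    scores = [sum(q * k for q, k in zip(center, token)) / math.sqrt(d)
              for token in tokens]
    weights = softmax(scores)
    # Weighted sum of the values.
    output = [sum(w * token[c] for w, token in zip(weights, tokens))
              for c in range(d)]
    return output, weights
```

With a center vector that differs from its neighbors, the largest attention weight falls on the center itself, which matches the stated intent that each point incorporates local features while paying more attention to itself.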

Illustration of coordinate normalization. The red dots represent the pixels of the HR image, with coordinates denoted as \((X', Y')\). The blue dots represent the pixels of the LR image, with coordinates denoted as (X, Y). After coordinate normalization, each pixel of the HR image has a corresponding nearest neighbor pixel in the LR image.
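The coordinate normalization can be sketched in one dimension as follows. This is a minimal illustration, assuming the pixel-center convention used by LIIF-style implementations (n pixels of width 2/n tiling the interval from −1 to 1); the helper names `pixel_centers`, `nearest_lr_index`, and `local_grid_1d` are hypothetical.

```python
def pixel_centers(n):
    """Centers of n equally sized pixels tiling the interval [-1, 1]."""
    return [-1 + (2 * i + 1) / n for i in range(n)]


def nearest_lr_index(x_hr, n_lr):
    """Index of the LR pixel whose cell contains the HR coordinate x_hr."""
    idx = int((x_hr + 1) / 2 * n_lr)
    return min(max(idx, 0), n_lr - 1)


def local_grid_1d(n_lr, scale):
    """Return (hr_coord, nearest_lr_coord, offset) for every HR pixel.

    The scale may be a non-integer; the HR size is rounded to whole pixels.
    """
    n_hr = round(n_lr * scale)
    lr = pixel_centers(n_lr)
    grid = []
    for x_hr in pixel_centers(n_hr):
        j = nearest_lr_index(x_hr, n_lr)
        grid.append((x_hr, lr[j], x_hr - lr[j]))
    return grid
```

For example, `local_grid_1d(2, 2)` pairs the four HR centers −0.75, −0.25, 0.25, 0.75 with the LR centers −0.5 and 0.5, giving offsets of ±0.25. Applying the same routine along both axes yields the 2D local grid.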

Texture learner

Inspired by LTE29, we propose the TL module for learning high-frequency texture information in histopathology images. We employ sine activation to effectively enhance implicit neural representations for learning high-frequency texture details in the images, thereby mitigating spectral bias issues stemming from the ReLU activation functions26. As shown in Fig. 4, we normalize each pixel’s 2D coordinate \(\left( X^{\prime }, Y^{\prime }\right) =\left\{ \left( \textrm{x}_i^{\prime }, \textrm{y}_j^{\prime }\right) \mid i=1,2, \ldots , mw, j=1,2, \ldots , mh\right\}\) in the continuous HR image ___domain and the 2D coordinate \((X, Y)=\left\{ \left( \textrm{x}_i, \textrm{y}_j\right) \mid i=1,2, \ldots , mw, j=1,2, \ldots , mh\right\}\) nearest to \(\left( X^{\prime }, Y^{\prime }\right)\) in the continuous LR image ___domain between −1 and 1, where m represents the scaling factor. The local grid is defined as \(\left( X^{\prime }-X, Y^{\prime }-Y\right)\). Each HR image pixel has a corresponding closest pixel in the LR image. As shown in Fig. 5a, the TL module first outputs three feature maps \(F_{Amp}\in h\times w\times 256\), \(F_{FreqX}\in h\times w\times 256\) and \(F_{FreqY}\in h\times w\times 256\) through three convolutional layers respectively, and predicts the feature maps \(Amp\in mh\times mw\times 256\), \(FreqX\in mh\times mw\times 256\) and \(FreqY\in mh\times mw\times 256\) corresponding to each pixel coordinate of the HR image through nearest-neighbor interpolation. Then we use linear projection based on an MLP and Sigmoid activation function to map \((2 / \textrm{mw}, 2 / \textrm{mh})\) to a 256-dimensional feature vector Phase to simulate the effect of texture fragment offset when the image scaling factor changes. The output of the TL module is calculated as follows:

where \(\otimes\) represents element-wise multiplication and \(\odot\) represents inner product operation.
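To make the roles of Amp, FreqX, FreqY, Phase, and the local grid concrete, the sketch below evaluates an LTE-style sinusoidal texture feature at a single HR pixel. It is an assumption-laden illustration rather than the module itself: the inner product of the frequencies with the local grid, the phase offset inside the sinusoid, and the concatenation of cosine and sine parts follow the LTE formulation, and the exact channel arrangement used in ISTE is not specified in this text. The function name `texture_feature` is hypothetical.

```python
import math


def texture_feature(amp, freq_x, freq_y, phase, dx, dy):
    """Sinusoidal texture feature at one HR pixel (LTE-style sketch).

    amp, freq_x, freq_y and phase are per-channel lists; (dx, dy) is the
    local grid offset between the HR pixel and its nearest LR pixel.
    """
    cos_part, sin_part = [], []
    for a, fx, fy, p in zip(amp, freq_x, freq_y, phase):
        # Inner product of the frequency pair with the offset, plus phase.
        angle = math.pi * (fx * dx + fy * dy + p)
        # Element-wise scaling of the sinusoids by the amplitude.
        cos_part.append(a * math.cos(angle))
        sin_part.append(a * math.sin(angle))
    return cos_part + sin_part
```

When the offset and phase are zero, the feature reduces to the amplitude in the cosine channels and zero in the sine channels; larger predicted frequencies make the feature vary faster across the local grid, which is how high-frequency texture is expressed.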

Self-texture fusion module for feature-based enhancement

Inspired by SRNTT49 and T2 Net50, we propose the STF module based on cross-attention, which aims to globally retrieve texture features from \(F_{TL}\) that are most similar to \(F_{LFIC}\) and to fuse these retrieved features with \(F_{LFIC}\), thus completing the feature-based texture enhancement. As shown in Fig. 5b, we use the features sampled from \(F_{LFIC}\) by nearest-neighbor interpolation as the query (Q) and use \(F_{TL}\) as the key (K) and value (V) of the cross-attention module. To retrieve the texture features that are most relevant to the feature \(F_{LFIC}\), we first compute the similarity matrix R of Q and K, where each element \(r_{i,j}\) of R is computed according to Eq. (4):

\[r_{i, j}=\left\langle \frac{q_i}{\left\| q_i\right\| }, \frac{k_j}{\left\| k_j\right\| }\right\rangle \tag{4}\]

where \(q_{i}\) represents an element of Q, and \(k_{j}\) represents an element of K. Then we obtain the coordinate index matrix T with the highest similarity to \(q_{i}\) in K. An element in T is \(t_i=\arg \max _j\left( r_{i, j}\right)\), and \(t_{i}\) represents the position coordinates of the texture feature \(k_{j}\) with the highest similarity to \(q_{i}\) in \(F_{TL}\). We select the feature vector \(a_{i}\) with the highest similarity to each element in Q from V according to the coordinate index matrix T to obtain the retrieved texture feature A, which can be represented by \(a_i=v_{t_i}\), where \(a_i\) is an element in A and \(v_{t_i}\) represents the element at the \(t_i\)-th position in V. To fuse the retrieved texture feature A with the feature \(F_{LFIC}\), we first concatenate \(F_{LFIC}\) with A and obtain the aggregated feature Z through an MLP, where \(Z=MLP(Concat(F_{LFIC}, A))\). Finally, we calculate the soft attention map S, where an element \(s_i\) in S represents the confidence of each element \(a_i\) in the retrieved texture feature A, and \(s_i=\max _j\left( r_{i, j}\right)\). \(F_{STF}\) is calculated as Eq. (5):

\[F_{STF}=F_{LFIC} \oplus \left( Z \otimes S\right) \tag{5}\]

where \(\langle \cdot \rangle\) represents the inner product operation, \(\Vert \cdot \Vert\) represents the L2 norm (the square root of the sum of squared elements), and \(\oplus\) represents element-wise summation.
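The retrieval and fusion steps can be sketched with plain lists of feature vectors. This is an illustrative sketch under stated assumptions: the similarity is taken to be the normalized inner product, the MLP that produces the aggregated feature Z is not modeled (Z is passed in directly), and the function names `retrieve_texture` and `fuse` are hypothetical.

```python
import math


def normalize(v):
    """Scale a vector to unit L2 norm (zero vectors are left unchanged)."""
    norm = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / norm for x in v]


def retrieve_texture(queries, keys, values):
    """For each query, pick the value whose key is most similar.

    Returns the retrieved features A and the soft attention map S, where
    each confidence is the highest similarity found for that query.
    """
    keys_n = [normalize(k) for k in keys]
    retrieved, confidence = [], []
    for q in queries:
        q_n = normalize(q)
        sims = [sum(a * b for a, b in zip(q_n, k_n)) for k_n in keys_n]
        t = max(range(len(sims)), key=sims.__getitem__)  # hard attention index
        retrieved.append(values[t])
        confidence.append(sims[t])
    return retrieved, confidence


def fuse(features, aggregated, confidence):
    """Add the aggregated feature, scaled per position by its confidence."""
    return [[f + z * s for f, z in zip(f_vec, z_vec)]
            for f_vec, z_vec, s in zip(features, aggregated, confidence)]
```

Scaling by the confidence means that a position whose best match is weak receives little transferred texture, while a position with a near-identical match elsewhere in the image receives almost all of it.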

Spatial ___domain-based enhancement

In spatial ___domain-based texture enhancement, we decode the texture feature \(F_{TL}\) directly into the spatial ___domain as \(I_{LTD}\) and add it to \(I_{LPD}\), which is reconstructed from \(F_{STF}\) using the LPD, to obtain the final output \(I_{Pred}\). First, we utilize the LPD to decode the feature \(F_{STF}\) into the RGB value \(I_{LPD}\). We parameterize the LPD as an MLP \(f_\theta\). As shown in Fig. 5c, \({u_t}\) denotes the coordinates of \({F_{LR}}\), while \({x_{q}}\) denotes the coordinates of both \({F_{STF}}\) and \(F_{TL}\). We denote the upper-left, upper-right, lower-left, and lower-right coordinates of an arbitrary point \(x_{q}\) as \(u_t\ (t \in \{00,01,10,11\})\). The RGB value at coordinate \({x_{q}}\) in the HR image decoded by the LPD can be represented as Eq. (6), where c consists of two elements, 2/mh and 2/mw, which represent the sizes of each pixel in \(I_{LPD}\), and \({\theta }\) is the parameter of the MLP \(f_\theta\). Similarly, we calculate the RGB values of the texture information \(I_{LTD}\) at coordinate \(x_{q}\) via Eq. (7), where the LTD is parameterized as an MLP \(g_{\varphi }\). We use the LTD to decode the texture features into the spatial ___domain texture information \(I_{LTD}\) and add it to the \(I_{LPD}\) via Eq. (8) for spatial ___domain texture enhancement to obtain the final prediction \(I_{Pred}\), where \({\varphi }\) is the parameter of the MLP \(g_{\varphi }\). \(S_t\ (t \in \{00,01,10,11\})\) is the area of the rectangular region between \(x_q\) and \(u_t\), and the weights are normalized by \(S=\sum _{t \in \{00,01,10,11\}} S_t\).
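The area-weighted decoding can be sketched as below. The sketch follows the local ensemble convention of LIIF, in which the prediction made from each corner is weighted by the area of the rectangle spanned by the query point and the diagonally opposite corner, so that nearer corners contribute more. That pairing, the small `eps` term, the decoder signature `decode(z, offset, cell)`, and the function names are assumptions of this illustration; the decoder stands in for either MLP.

```python
def local_ensemble(x_q, corners, decode, cell, eps=1e-9):
    """Blend the decoder outputs of the four corners around query point x_q.

    corners maps t in {"00", "01", "10", "11"} to (u_t, z_t): the corner
    coordinate and the feature vector stored there.
    """
    diagonal = {"00": "11", "01": "10", "10": "01", "11": "00"}
    # Area of the rectangle spanned by x_q and each corner.
    areas = {
        t: abs(x_q[0] - u[0]) * abs(x_q[1] - u[1]) + eps
        for t, (u, _) in corners.items()
    }
    total = sum(areas.values())
    blended = None
    for t, (u, z) in corners.items():
        offset = (x_q[0] - u[0], x_q[1] - u[1])
        rgb = decode(z, offset, cell)
        # Weight by the area opposite to this corner, normalized to sum to 1.
        weight = areas[diagonal[t]] / total
        if blended is None:
            blended = [0.0] * len(rgb)
        blended = [b + weight * v for b, v in zip(blended, rgb)]
    return blended


def add_texture(pixel_rgb, texture_rgb):
    """Final prediction: pixel-branch output plus texture-branch output."""
    return [p + t for p, t in zip(pixel_rgb, texture_rgb)]
```

If the decoder simply returns the stored feature, this weighting reduces to bilinear interpolation, which is a convenient sanity check. Because the query coordinate is continuous, the same routine serves any scaling factor, including non-integer ones.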

Experiments

In this section, we introduce the datasets, implementation details, and compare our ISTE with other SR methods. Finally, we conduct a series of ablation studies to validate the effectiveness of each component in the proposed ISTE.

Datasets

This paper utilizes three publicly available datasets: (1) Tissue Microarray (TMA) dataset: Following Li et al.14, we experimented on the TMA dataset to validate our method. The TMA dataset, a widely used public dataset in pancreatic cancer research51,52, was scanned by an Aperio AT digital pathology scanner (Leica Biosystems, Wetzlar, Germany) at a resolution of 0.504 \(\upmu\)m/pixel and contains 573 WSIs (average 3850 \(\times\) 3850 pixels each). We randomly selected 460 WSIs as the training set, 57 WSIs as the validation set, and 56 WSIs as the test set. (2) Histopathology Super-Resolution (HistoSR) dataset: Following Chen et al.12, we conducted experiments on the HistoSR dataset, which is built on the high-quality H&E stained WSIs of the Camelyon16 dataset53. The HistoSR dataset contains HR images with a patch size of 192 \(\times\) 192 obtained through random cropping. The training set comprises 30,000 HR patches, while the test set consists of 5000 HR patches. (3) TCGA Lung Cancer dataset: The TCGA lung cancer dataset54 comprises 1054 WSIs (average 100,000 \(\times\) 100,000 pixels each) from The Cancer Genome Atlas (TCGA) data center55. We selected five slides from this dataset and cut them into 400 sub-images with a size of 3072 \(\times\) 3072. We randomly selected 320 sub-images as the training set, 40 as the validation set, and 40 as the test set.

Implementation details and evaluation metrics

Following previous SR methods based on implicit neural representation15,29, we used patches with a size of \({48 \times 48}\) as the input for training. We first randomly sampled the scaling factor m from a uniform distribution U(1, 4) and cropped patches with a size of \(48m \times 48m\) from the ground truth HR images in a batch. Following Li et al.14, we resized the patches to \({48 \times 48}\) via bicubic downsampling and applied a Gaussian blur to simulate degradation, since it is difficult to acquire authentically downsampled images at arbitrary scales through scanners. The size of the Gaussian kernel was set to 1/2 of the scaling factor m. We sampled \(48^2\) pixels from the corresponding cropped patches to form RGB-coordinate pairs. We implemented ISTE with the deep learning toolbox PyTorch and used Adam as the optimizer, setting the initial learning rate to 0.0001 and the number of epochs to 1000. We employed the structural similarity index measure (SSIM) and peak signal-to-noise ratio (PSNR) to evaluate the quality of reconstructed images. The PSNR and SSIM are given by:

\[PSNR=10 \log _{10}\left( \frac{MAX^2}{\frac{1}{N} \sum _{i=1}^N\left( I_{Pred}(i)-Y_{HR}(i)\right) ^2}\right)\]

\[SSIM(x, y)=\frac{\left( 2 \mu _x \mu _y+C_1\right) \left( 2 \sigma _{x y}+C_2\right) }{\left( \mu _x^2+\mu _y^2+C_1\right) \left( \sigma _x^2+\sigma _y^2+C_2\right) }\]

where \(I_{Pred}\) and \(Y_{HR}\) are the generated image and the ground truth image, respectively, i represents the index of the i-th pixel of the image, N is the total number of pixels in the image, and MAX is the maximum possible pixel value. x and y denote the two images being compared; \(\mu _x\), \(\sigma _x\), and \(\sigma _{x y}\) are the mean, standard deviation, and covariance, respectively, and \(C_1\) and \(C_2\) are small constants that stabilize the division.
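As a concrete reading of the PSNR definition, the following minimal sketch computes it for two images given as flat sequences of pixel values. The default peak value of 255 assumes 8-bit images, which is an assumption of this example rather than a setting stated in the text; the function name `psnr` is illustrative.

```python
import math


def psnr(pred, target, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized images.

    pred and target are flat sequences of pixel values; max_value is the
    largest value a pixel can take (255 for 8-bit images).
    """
    if len(pred) != len(target):
        raise ValueError("images must contain the same number of pixels")
    # Mean squared error over all N pixels.
    mse = sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)
    if mse == 0:
        return math.inf  # identical images
    return 10 * math.log10(max_value ** 2 / mse)
```

Because the scale is logarithmic, halving the root-mean-square error raises PSNR by about 6 dB, which helps when reading the differences between methods in the result tables.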

Comparison with previous methods

We compared the performance of ISTE with state-of-the-art SR methods in both the pathological image ___domain (SWD-Net12, SRMFENet47, and Li et al.14) and the natural image ___domain (Bicubic, EDSR17, RDN31, SwinIR41, LIIF15, LTE29, LMI45, ITSRN46, and LIT44), where the latter five are arbitrary-scale SR methods. For a fair comparison, the encoder used for arbitrary-scale SR methods is SwinIR41 without the last upsampling layer.

Quantitative results

We compared our ISTE with previous SR methods at five scaling factors of \(\times 2\), \(\times 3\), \(\times 4\), \(\times 6\), and \(\times 8\). As shown in Table 1, our ISTE achieved the highest performance in terms of PSNR and SSIM metrics at each scaling factor on the HistoSR and TCGA datasets. Although the SSIM metric for our method at \(\times\)8 scale is slightly lower than that of LTE29 by 0.0009 on the TMA dataset, it outperforms the comparison methods in PSNR metrics at all scaling factors and in SSIM metrics at the other scaling factors. To substantiate our results, we evaluate the significance of the differences between our ISTE and other methods using paired Student’s t-tests. Our ISTE method shows statistically significant differences compared to the comparison methods in almost all cases, with a p-value smaller than 0.001. The only exception is in the HistoSR dataset at the \(\times\)2 scale, where the significance test with EDSR on the SSIM metric yields a p-value slightly greater than 0.001 but still smaller than 0.05, so the improvement over EDSR remains statistically significant at the 0.05 level. To further assess the advantages of our method over other arbitrary-scale SR methods, we present comparative results in Table 2 for ISTE, LIIF15, LTE29, LMI45, ITSRN46 and LIT44 at non-integer scaling factors. Our method demonstrates superior performance in terms of both PSNR and SSIM metrics. We also provide the Fréchet Inception Distance (FID) score to evaluate the perceptual quality of images generated by different methods, as shown in Table 3. It can be observed that our method outperforms the comparative methods in terms of FID. The results indicate that the textures of images generated by our method are more realistic, yielding perceptual effects superior to those of other arbitrary-scale SR methods.
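For readers unfamiliar with the paired test used here, the sketch below computes the paired Student's t statistic from per-image scores of two methods evaluated on the same test images. It is a generic illustration using only the Python standard library, not the authors' evaluation script; the function name is hypothetical, and converting the statistic to a p-value additionally requires the t distribution with n − 1 degrees of freedom, which is not included.

```python
import math
import statistics


def paired_t_statistic(scores_a, scores_b):
    """Paired Student's t statistic and degrees of freedom.

    scores_a and scores_b hold one score per test image, in the same order,
    for the two methods being compared.
    """
    if len(scores_a) != len(scores_b) or len(scores_a) < 2:
        raise ValueError("need two equally long samples with at least 2 pairs")
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    n = len(diffs)
    mean_diff = statistics.fmean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample standard deviation
    if sd_diff == 0:
        raise ValueError("all paired differences are identical")
    return mean_diff / (sd_diff / math.sqrt(n)), n - 1
```

The test is paired because both methods are scored on the same images, so the per-image difficulty cancels out in the differences and only the consistency of the gain matters.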

Qualitative results

Figure 6 shows the visual results and absolute error maps of different methods on the TCGA dataset at the scale of \(\times\)4, the TMA dataset at the scale of \(\times\)2, and the HistoSR dataset at the scale of \(\times\)2. The proposed ISTE performs better in restoring texture information, closely approximating the ground truth. Based on the brightness levels in the absolute error maps, our method’s error maps contain more dark regions, indicating smaller errors in the reconstructed results compared to other methods. Figure 7 shows an example comparison of LIIF and our ISTE at non-integer scales. It can be seen that ISTE achieves arbitrary-scale SR with clear cell structure and texture. As shown in the red box, two cells are connected due to blurring in the image generated by LIIF, while they are still separated in the image generated by ISTE at the scale of \(\times\)7.3. Please refer to the supplementary figures for more comparisons.

Ablation study

To validate the effectiveness of each module in our proposed method, including the LFI, TL, STF, and LTD, we designed several variant networks for ablation experiments at scaling factors of \(\times\)2, \(\times\)3, and \(\times\)4 on the TCGA dataset, as shown in Table 4. To substantiate our results, we evaluate the significance of the differences between our proposed method and the variant networks using paired Student’s t-tests. \({P<0.001}\) was considered statistically significant. We observe statistically significant differences with p-values smaller than 0.001 in all cases.

Evaluation of the local feature interactor

For the features obtained from the encoder \(F_{LR}\), the LFI module enhances feature interaction within local regions. To investigate the effectiveness of the LFI module, we conducted an ablation experiment by directly removing the LFI module from the ISTE framework. As shown in Table 4, all metrics improve across all scaling factors when using the LFI.

Evaluation of the texture learner

The TL module is employed to enhance the learning of high-frequency textures in histopathology images. To investigate the effectiveness of this module, we conducted an ablation experiment by replacing the module with a convolutional layer. As shown in Table 4, all metrics become worse at all scaling factors after ablating the TL module. To better illustrate the role of the TL module, we visualized the features input to and output from the TL, denoted as \(F_{LR}\) and \(F_{TL}\), respectively, in Fig. 8. Compared to \(F_{LR}\), the output feature map \(F_{TL}\) from the TL module contains richer texture information.

Evaluation of the self-texture fusion module

The STF module globally retrieves texture features that are most similar to \(F_{LFIC}\) in \(F_{TL}\) and fuses the retrieved features to \(F_{LFIC}\). We designed a variant network without the STF module to evaluate its effectiveness. Specifically, we first take the feature \(F_{LFIC}\) obtained from the feature aggregation branch of the framework and decode it directly through the LPD to obtain \(I_{LPD}^{\prime }\). Then, we take the feature \(F_{TL}\) obtained from the texture learning branch and decode it through the LTD to obtain \(I_{LTD}^{\prime }\). We sum \(I_{LPD}^{\prime }\) and \(I_{LTD}^{\prime }\) to get the output \(I_{Pred}^{\prime }\) of the variant network. As shown in Table 4, all metrics become worse at all scaling factors after ablating the STF module.
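The global retrieval step can be pictured as a nearest-neighbor search in feature space. The sketch below assumes cosine similarity as the relevance measure and a similarity-weighted sum as the fusion rule; both are illustrative assumptions, since this section does not specify the exact similarity or fusion operations of the STF module, and the function name is hypothetical.

```python
import numpy as np

def retrieve_and_fuse(f_query, f_texture, eps=1e-8):
    """Fuse each query feature with its most similar texture feature.

    f_query:   (N, C) array, one vector per spatial position (stand-in for F_LFIC).
    f_texture: (M, C) array of texture features (stand-in for F_TL).
    Returns the fused (N, C) features and the indices of the retrieved vectors.
    """
    q = f_query / (np.linalg.norm(f_query, axis=1, keepdims=True) + eps)
    k = f_texture / (np.linalg.norm(f_texture, axis=1, keepdims=True) + eps)
    similarity = q @ k.T                        # (N, M) cosine similarities
    best = similarity.argmax(axis=1)            # search over all M positions
    score = similarity[np.arange(len(best)), best][:, None]
    fused = f_query + score * f_texture[best]   # assumed fusion rule
    return fused, best

f_lfic = np.array([[1.0, 0.0], [0.0, 2.0]])
f_tl = np.array([[0.0, 1.0], [3.0, 0.0], [1.0, 1.0]])
fused, idx = retrieve_and_fuse(f_lfic, f_tl)
```

Because the search runs over every position of the texture feature map, a query can borrow texture from anywhere in the image, which is what distinguishes this step from the local interaction performed by the LFI.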

Evaluation of the texture decoder for spatial domain-based enhancement

The feature \(F_{STF}\) is decoded into the pixel information \(I_{LPD}\) by the LPD in the spatial domain. To accomplish spatial domain-based texture enhancement in the subsequent stage, LTD is employed to decode texture features \(F_{TL}\) directly into texture information \(I_{LTD}\) in the spatial domain, and we sum \(I_{LTD}\) and \(I_{LPD}\) to obtain \(I_{Pred}\). To demonstrate the effectiveness of the designed spatial domain-based enhancement strategy, we removed the LTD from the ISTE framework and used only the pixels decoded by the LPD for the final prediction. The results in Table 4 suggest that incorporating spatial domain-based texture enhancement leads to improved results. To better illustrate the effectiveness of the spatial domain-based enhancement, we visualized the pixel information decoded by the LPD and the texture information decoded by the LTD in Fig. 9. It can be seen that the texture information \(I_{LTD}\) decoded from the LTD reveals clear outlines and texture features of the tissue cells and has more vibrant colors. This further illustrates the importance of LTD for spatial domain-based enhancement.
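The decoding path of the full model and of the two ablated variants that involve the decoders can be summarized in equation form. The function-style notation \(\mathrm{LPD}(\cdot)\) and \(\mathrm{LTD}(\cdot)\) is shorthand introduced here for illustration, and any additional decoder inputs, such as query coordinates, are omitted.

\[
\begin{aligned}
\text{Full model:}\quad & I_{LPD} = \mathrm{LPD}(F_{STF}), \quad I_{LTD} = \mathrm{LTD}(F_{TL}), \quad I_{Pred} = I_{LPD} + I_{LTD} \\
\text{Without LTD:}\quad & I_{Pred} = I_{LPD} = \mathrm{LPD}(F_{STF}) \\
\text{Without STF:}\quad & I_{LPD}^{\prime } = \mathrm{LPD}(F_{LFIC}), \quad I_{LTD}^{\prime } = \mathrm{LTD}(F_{TL}), \quad I_{Pred}^{\prime } = I_{LPD}^{\prime } + I_{LTD}^{\prime }
\end{aligned}
\]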

Evaluation of the dual-branch architecture

To further assess the effectiveness of the feature aggregation branch and texture learning branch in the proposed framework, we designed two single-branch variant networks: (1) retaining only the TL and LTD in the ISTE framework, which represents the ablation of the feature aggregation branch, and (2) retaining only the LFI and LPD in the ISTE framework, representing the ablation of the texture learning branch. As shown in Table 4, the proposed dual-branch architecture ISTE outperforms both single-branch variants, demonstrating the effectiveness of the feature aggregation and texture learning branches. Additionally, we provide visual comparisons in Fig. 10 to further validate the effectiveness of the proposed dual-branch framework. As shown in the first row, when the feature aggregation branch is removed, the reconstructed images show the loss of cellular boundaries. In the second and third rows, when the texture learning branch is removed, the model struggles to recover high-frequency details, such as intercellular gaps. In contrast, the complete dual-branch ISTE framework successfully reconstructs both cellular structures and intercellular gaps, further illustrating the effectiveness of the feature aggregation branch in capturing local details and the texture learning branch in reconstructing high-frequency textures.

Discussion

Applications in downstream pathology image analysis tasks

It is important to evaluate whether the images generated by the proposed ISTE can be used for pathology image analysis tasks. We demonstrate experimentally that ISTE effectively enhances the performance of two downstream tasks: gland segmentation and cancer detection. First, for gland segmentation, we trained and tested the state-of-the-art segmentation model U-Net56 on the GlaS dataset from the MICCAI 2015 Gland Segmentation Challenge57. The GlaS dataset includes a training set and two test sets, Test A and Test B. The training set contains 85 labeled images, Test A contains 60 labeled images, and Test B contains 20 labeled images. We performed \(\times\)4 downsampling on the HR images using bicubic interpolation to generate LR images. We compared segmentation results under the following settings: (1) Original high-resolution: training U-Net on the original HR GlaS dataset for segmentation of the original HR images; (2) SISR: directly employing the U-Net trained on the original HR GlaS dataset for segmentation of the reconstructed images produced by our ISTE; (3) HR U-Net: training U-Net on the reconstructed images produced by our ISTE for segmentation of the original HR images; (4) Bicubic: training U-Net on LR images obtained by bicubic interpolation for segmentation of the original HR images. Table 5 shows the quantitative test results, where larger values indicate better performance for the F1 score and object Dice score, while smaller values indicate better performance for the object Hausdorff distance. The U-Net model trained on the reconstructed images from our ISTE performs better than the U-Net model trained on the LR image dataset, showing higher F1 scores and object Dice scores, as well as lower object Hausdorff distances.
In particular, when evaluated on the Test B dataset, our results for segmentation of reconstructed images using the U-Net trained on the original HR GlaS training set are close to those for segmentation of the original HR images, both with an F1 score of 0.93. Figure 11 shows representative results for the different experimental setups. The U-Net trained on LR images produced the worst results: it not only failed to detect small glands but also produced poor segmentation results for large glands. In contrast, the U-Net trained on the reconstructed images effectively outlined the boundaries of the large glands and detected the tiny glands. Compared with using LR images for training, using the generated SR images improves segmentation accuracy at evaluation time.
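For readers unfamiliar with the overlap metrics reported in Table 5, the sketch below computes a plain pixel-level Dice coefficient between two binary masks. Note the simplification: the GlaS challenge metric is an object-level Dice that matches individual glands before scoring, which this example does not implement. The function name and the toy masks are assumptions.

```python
import numpy as np

def dice_score(pred_mask, true_mask):
    """Pixel-level Dice coefficient between two binary masks."""
    pred = np.asarray(pred_mask, dtype=bool)
    true = np.asarray(true_mask, dtype=bool)
    denom = pred.sum() + true.sum()
    if denom == 0:
        return 1.0  # both masks empty: treat as perfect agreement
    return float(2.0 * np.logical_and(pred, true).sum() / denom)

# Toy masks: 3 foreground pixels each, 2 of which overlap.
pred = np.array([[1, 1, 0], [0, 1, 0]])
true = np.array([[1, 0, 0], [0, 1, 1]])
score = dice_score(pred, true)
```

A value of 1 means the predicted and reference masks coincide exactly, and 0 means they do not overlap at all.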

Figure 11. Qualitative evaluation of U-Net for gland segmentation on the GlaS dataset57 with different experiment setups.

To further evaluate the contribution of our ISTE to the cancer detection task, we conducted tumor recognition on the PCam dataset58. The PCam dataset comprises 262,144 color images for training and 32,768 images for testing, with each image annotated with a binary label indicating the presence of metastatic tissue. We performed \(\times\)2 downsampling on the HR images of the test set using bicubic interpolation to generate LR images. We chose ResNet-5059 as the classifier and trained it on the original PCam dataset. We compared classification results across the following settings: (1) Original: directly testing the trained ResNet-50 model on the original HR images of the test set; (2) Low resolution: directly testing the trained ResNet-50 model on the LR images of the test set; (3) Bicubic: directly testing the trained ResNet-50 model on the bicubic-interpolated images of the test set; (4) LIIF: directly testing the trained ResNet-50 model on the images generated by LIIF from the LR test set images; (5) ISTE: directly testing the trained ResNet-50 model on the images generated by our ISTE from the LR test set images. Table 6 reports the diagnostic performance under the different experiment setups. By introducing additional prior knowledge, our ISTE leads to a performance improvement, resulting in a 4.06% accuracy increase compared to the Bicubic method. These results indicate that ISTE can improve classification performance by recovering more distinctive details.
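The protocol above keeps one trained classifier fixed and varies only how the test images are prepared. The following sketch shows that structure with toy stand-ins; the function names, the threshold "classifier", and the "degraded" transform are all hypothetical and bear no relation to ResNet-50 or to the numbers in Table 6.

```python
from typing import Callable, Dict, Sequence

def accuracy(predictions: Sequence[int], labels: Sequence[int]) -> float:
    """Fraction of predictions that equal the labels."""
    if len(predictions) != len(labels) or len(labels) == 0:
        raise ValueError("predictions and labels must be non-empty and equal in length")
    return sum(p == y for p, y in zip(predictions, labels)) / len(labels)

def evaluate_settings(classifier: Callable, images: Sequence, labels: Sequence[int],
                      settings: Dict[str, Callable]) -> Dict[str, float]:
    """Apply the same fixed classifier to differently prepared versions of the test set."""
    results = {}
    for name, prepare in settings.items():
        predictions = [classifier(prepare(image)) for image in images]
        results[name] = accuracy(predictions, labels)
    return results

# Toy stand-ins: an "image" is one number and the classifier thresholds it.
toy_images = [0.9, 0.8, 0.2, 0.1]
toy_labels = [1, 1, 0, 0]
toy_classifier = lambda x: int(x > 0.5)
toy_settings = {
    "original": lambda x: x,
    "degraded": lambda x: x * 0.6,  # hypothetical loss of detail
}
results = evaluate_settings(toy_classifier, toy_images, toy_labels, toy_settings)
```

Because the classifier is never retrained, any accuracy gap between settings is attributable to the input preparation alone.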

The impact of different encoders on ISTE

We studied the impact of different encoders on the performance of ISTE using the TCGA and TMA datasets. We compared three encoders: RDN31, EDSR17, and SwinIR41. As shown in Table 7, ISTE with the SwinIR encoder achieved the best performance. Compared to EDSR17 and RDN31, which use convolutional neural networks, SwinIR41 is built on Swin Transformer blocks and can handle long-range dependencies more effectively, which is crucial for capturing subtle texture variations in histopathology images. Specifically, for histopathology images with fine textures and complex structures, SwinIR is able to capture these details more accurately and provides stronger feature representation capabilities.

Computational consumption analysis for ISTE

Finally, we compared the computational consumption of our ISTE with other arbitrary-scale SR methods using an NVIDIA RTX 3090 with 24GB of memory. All models used SwinIR41 as the encoder. We employed LR images with the size of 96\(\times\)96 as input, computing 48\(\times\)48, 96\(\times\)96, and 192\(\times\)192 output pixels for each query. As shown in Table 8, our model has a slightly longer runtime and consumes relatively more memory than the other SR models, so it does not have a clear advantage in terms of lightweight design. To further demonstrate that the reconstruction performance of our method comes from the network design rather than an increase in the number of parameters, we simply added a number of Swin Transformer blocks to the encoders of the two baseline models, LTE and LIIF, without modifying the network after the encoders. This modification gave the baselines more parameters than our ISTE. We then compared them on the TCGA dataset. As shown in Table 9, where LIIF* and LTE* denote the models with increased parameters, our method still achieves higher PSNR and SSIM. This indicates that our network design is effective, and we will continue to work towards developing more computationally efficient models in the future.
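To make the query sizes in this comparison concrete, the sketch below builds a grid of query coordinates for a given output size and counts the queries. It assumes the pixel-center convention on [-1, 1] that is common in implicit-representation SR code; whether ISTE uses exactly this convention is an assumption, and the function name is hypothetical.

```python
import numpy as np

def make_query_coords(height, width):
    """Centers of an H x W pixel grid in normalized coordinates on [-1, 1]."""
    def centers(n):
        half = 1.0 / n  # half a pixel width in [-1, 1] units
        return -1.0 + half + 2.0 * half * np.arange(n)
    ys, xs = np.meshgrid(centers(height), centers(width), indexing="ij")
    return np.stack([ys, xs], axis=-1).reshape(-1, 2)  # (H*W, 2), ordered (y, x)

# Number of coordinate queries for each output size used in the comparison.
query_counts = {n: make_query_coords(n, n).shape[0] for n in (48, 96, 192)}
```

The number of decoder evaluations grows with the output area, from 2,304 queries at 48\(\times\)48 to 36,864 at 192\(\times\)192, which is why runtime and memory are reported per output size.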

Conclusion

In this work, we propose an innovative dual-branch framework ISTE based on implicit self-texture enhancement for arbitrary-scale histopathology image super-resolution. ISTE consists of a feature aggregation branch and a texture learning branch. We employ the feature aggregation branch to enhance the relevance of features in the local region while utilizing the texture learning branch to improve the learning of high-frequency texture details. We then design a two-stage texture enhancement strategy to fuse the features from the two branches to obtain SR images, where the first stage is feature-based texture enhancement and the second stage is spatial domain-based texture enhancement. Extensive experiments on publicly available datasets show that ISTE outperforms existing fixed-scale and arbitrary-scale SR methods across multiple scaling factors. Further experiments indicate that our method can enhance performance on two downstream tasks. In the future, we will continue to work on computationally efficient models and integrate the proposed SR models with existing diagnostic networks to improve diagnostic performance.

Data availability

The datasets used and analysed during the current study are available from the corresponding author on reasonable request.

References

Gilbertson, J. R. et al. Primary histologic diagnosis using automated whole slide imaging: a validation study. BMC Clin. Pathol. 6, 1–19 (2006).

Pantanowitz, L. et al. Review of the current state of whole slide imaging in pathology. J. Pathol. Inform. 2, 36 (2011).

Weinstein, R. S. et al. An array microscope for ultrarapid virtual slide processing and telepathology. Design, fabrication, and validation study. Hum. Pathol. 35, 1303–1314 (2004).

Wilbur, D. C. Digital cytology: current state of the art and prospects for the future. Acta Cytol. 55, 227–238 (2011).

Ghaznavi, F., Evans, A., Madabhushi, A. & Feldman, M. Digital imaging in pathology: whole-slide imaging and beyond. Annu. Rev. Pathol. 8, 331–359 (2013).

Wu, X., Chen, Z., Peng, C. & Ye, X. Mmsrnet: Pathological image super-resolution by multi-task and multi-scale learning. Biomed. Signal Process. Control 81, 104428 (2023).

Nielsen, P. S. et al. Virtual microscopy: an evaluation of its validity and diagnostic performance in routine histologic diagnosis of skin tumors. Hum. Pathol. 41, 1770–1776 (2010).

Madabhushi, A. & Lee, G. Image analysis and machine learning in digital pathology: Challenges and opportunities. Med. Image Anal. 33, 170–175 (2016).

Upadhyay, U. & Awate, S. P. A mixed-supervision multilevel gan framework for image quality enhancement. In International Conference on Medical Image Computing and Computer-Assisted Intervention, 556–564 (Springer, 2019).

Mukherjee, L., Keikhosravi, A., Bui, D. & Eliceiri, K. W. Convolutional neural networks for whole slide image superresolution. Biomed. Opt. Express 9, 5368–5386 (2018).

Juhong, A. et al. Super-resolution and segmentation deep learning for breast cancer histopathology image analysis. Biomed. Opt. Express 14, 18–36 (2023).

Chen, Z., Guo, X., Yang, C., Ibragimov, B. & Yuan, Y. Joint spatial-wavelet dual-stream network for super-resolution. In Medical Image Computing and Computer Assisted Intervention—MICCAI 2020: 23rd International Conference, Lima, Peru, October 4–8, 2020, Proceedings, Part V 23, 184–193 (Springer, 2020).

Shahidi, F. Breast cancer histopathology image super-resolution using wide-attention GAN with improved Wasserstein gradient penalty and perceptual loss. IEEE Access 9, 32795–32809 (2021).

Li, B., Keikhosravi, A., Loeffler, A. G. & Eliceiri, K. W. Single image super-resolution for whole slide image using convolutional neural networks and self-supervised color normalization. Med. Image Anal. 68, 101938 (2021).

Chen, Y., Liu, S. & Wang, X. Learning continuous image representation with local implicit image function. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 8628–8638 (2021).

Canny, J. A computational approach to edge detection. IEEE Trans. Pattern Anal. Mach. Intell. 8, 679–698 (1986).

Lim, B., Son, S., Kim, H., Nah, S. & Mu Lee, K. Enhanced deep residual networks for single image super-resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, 136–144 (2017).

Jia, Y., Chen, G. & Chi, H. Retinal fundus image super-resolution based on generative adversarial network guided with vascular structure prior. Sci. Rep. 14, 22786 (2024).

Shi, W. et al. Cardiac image super-resolution with global correspondence using multi-atlas patchmatch. In Medical Image Computing and Computer-Assisted Intervention–MICCAI 2013: 16th International Conference, Nagoya, Japan, September 22-26, 2013, Proceedings, Part III 16, 9–16 (Springer, 2013).

Thornton, M. W., Atkinson, P. M. & Holland, D. Sub-pixel mapping of rural land cover objects from fine spatial resolution satellite sensor imagery using super-resolution pixel-swapping. Int. J. Remote Sens. 27, 473–491 (2006).

Zou, W. W. & Yuen, P. C. Very low resolution face recognition problem. IEEE Trans. Image Process. 21, 327–340 (2011).

Zhang, Y., Zhou, P. & Chen, L. Dual-branch feature encoding framework for infrared images super-resolution reconstruction. Sci. Rep. 14, 9379 (2024).

Hu, L., Hu, L. & Chen, M. Edge-enhanced infrared image super-resolution reconstruction model under transformer. Sci. Rep. 14, 15585 (2024).

Li, G., Cui, Z., Li, M., Han, Y. & Li, T. Multi-attention fusion transformer for single-image super-resolution. Sci. Rep. 14, 10222 (2024).

Wang, L., Li, X., Tian, W., Peng, J. & Chen, R. Lightweight interactive feature inference network for single-image super-resolution. Sci. Rep. 14, 11601 (2024).

Sitzmann, V., Martel, J., Bergman, A., Lindell, D. & Wetzstein, G. Implicit neural representations with periodic activation functions. Adv. Neural. Inf. Process. Syst. 33, 7462–7473 (2020).

Tancik, M. et al. Fourier features let networks learn high frequency functions in low dimensional domains. Adv. Neural. Inf. Process. Syst. 33, 7537–7547 (2020).

Mildenhall, B. et al. Nerf: Representing scenes as neural radiance fields for view synthesis. Commun. ACM 65, 99–106 (2021).

Lee, J. & Jin, K. H. Local texture estimator for implicit representation function. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 1929–1938 (2022).

Dong, C., Loy, C. C., He, K. & Tang, X. Learning a deep convolutional network for image super-resolution. In Computer Vision—ECCV 2014: 13th European Conference, Zurich, Switzerland, September 6-12, 2014, Proceedings, Part IV 13, 184–199 (Springer, 2014).

Zhang, Y., Tian, Y., Kong, Y., Zhong, B. & Fu, Y. Residual dense network for image super-resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2472–2481 (2018).

Cavigelli, L., Hager, P. & Benini, L. Cas-cnn: A deep convolutional neural network for image compression artifact suppression. In 2017 International Joint Conference on Neural Networks (IJCNN), 752–759 (IEEE, 2017).

Kim, J., Lee, J. K. & Lee, K. M. Accurate image super-resolution using very deep convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 1646–1654 (2016).

Wang, X. et al. Esrgan: Enhanced super-resolution generative adversarial networks. In Proceedings of the European Conference on Computer Vision (ECCV) Workshops (2018).

Zhang, Y., Tian, Y., Kong, Y., Zhong, B. & Fu, Y. Residual dense network for image restoration. IEEE Trans. Pattern Anal. Mach. Intell. 43, 2480–2495 (2020).

Chen, Y. & Pock, T. Trainable nonlinear reaction diffusion: A flexible framework for fast and effective image restoration. IEEE Trans. Pattern Anal. Mach. Intell. 39, 1256–1272 (2016).

Deng, X., Zhang, Y., Xu, M., Gu, S. & Duan, Y. Deep coupled feedback network for joint exposure fusion and image super-resolution. IEEE Trans. Image Process. 30, 3098–3112 (2021).

Niu, B. et al. Single image super-resolution via a holistic attention network. In Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part XII 16, 191–207 (Springer, 2020).

Zhang, Y. et al. Image super-resolution using very deep residual channel attention networks. In Proceedings of the European Conference on Computer Vision (ECCV), 286–301 (2018).

Chen, H. et al. Pre-trained image processing transformer. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 12299–12310 (2021).

Liang, J. et al. Swinir: Image restoration using swin transformer. In Proceedings of the IEEE/CVF International Conference on Computer Vision, 1833–1844 (2021).

Liu, D., Wen, B., Fan, Y., Loy, C. C. & Huang, T. S. Non-local recurrent network for image restoration. Adv. Neural Inf. Process. Syst. 31 (2018).

Mei, Y., Fan, Y. & Zhou, Y. Image super-resolution with non-local sparse attention. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 3517–3526 (2021).

Chen, H.-W. et al. Cascaded local implicit transformer for arbitrary-scale super-resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 18257–18267 (2023).

Fu, H. et al. Continuous optical zooming: A benchmark for arbitrary-scale image super-resolution in real world. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 3035–3044 (2024).

Yang, J., Shen, S., Yue, H. & Li, K. Implicit transformer network for screen content image continuous super-resolution. Adv. Neural. Inf. Process. Syst. 34, 13304–13315 (2021).

Xie, L. et al. Shisrcnet: Super-resolution and classification network for low-resolution breast cancer histopathology image. In International Conference on Medical Image Computing and Computer-Assisted Intervention, 23–32 (Springer, 2023).

Ma, J. et al. Stsrnet: Self-texture transfer super-resolution and refocusing network. IEEE Trans. Med. Imaging 41, 383–393 (2021).

Zhang, Z., Wang, Z., Lin, Z. & Qi, H. Image super-resolution by neural texture transfer. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 7982–7991 (2019).

Feng, C.-M., Yan, Y., Fu, H., Chen, L. & Xu, Y. Task transformer network for joint mri reconstruction and super-resolution. In Medical Image Computing and Computer Assisted Intervention—MICCAI 2021: 24th International Conference, Strasbourg, France, September 27–October 1, 2021, Proceedings, Part VI 24, 307–317 (Springer, 2021).

Drifka, C. R. et al. Highly aligned stromal collagen is a negative prognostic factor following pancreatic ductal adenocarcinoma resection. Oncotarget 7, 76197 (2016).

Drifka, C. R. et al. Periductal stromal collagen topology of pancreatic ductal adenocarcinoma differs from that of normal and chronic pancreatitis. Mod. Pathol. 28, 1470–1480 (2015).

Bejnordi, B. E. et al. Diagnostic assessment of deep learning algorithms for detection of lymph node metastases in women with breast cancer. JAMA 318, 2199–2210 (2017).

Li, B., Li, Y. & Eliceiri, K. W. Dual-stream multiple instance learning network for whole slide image classification with self-supervised contrastive learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 14318–14328 (2021).

Weinstein, J. N. et al. The cancer genome atlas pan-cancer analysis project. Nat. Genet. 45, 1113–1120 (2013).

Ronneberger, O., Fischer, P. & Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015: 18th International Conference, Munich, Germany, October 5-9, 2015, Proceedings, Part III 18, 234–241 (Springer, 2015).

Sirinukunwattana, K. et al. Gland segmentation in colon histology images: The glas challenge contest. Med. Image Anal. 35, 489–502 (2017).

Veeling, B. S., Linmans, J., Winkens, J., Cohen, T. & Welling, M. Rotation equivariant cnns for digital pathology. In Medical Image Computing and Computer Assisted Intervention–MICCAI 2018: 21st International Conference, Granada, Spain, September 16-20, 2018, Proceedings, Part II 11, 210–218 (Springer, 2018).

He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 770–778 (2016).

Acknowledgements

This study was supported by the National Natural Science Foundation of China (Grant 82372097, 82072021, and 62471149).

Author information

Contributions

M.D. designed the methodology, conducted the experiments, and wrote the manuscript. L.Q. contributed to writing the manuscript. Z.Y., M.W., C.Z., and Z.S. revised the manuscript critically for important intellectual content. All authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Duan, M., Qu, L., Yang, Z. et al. An efficient dual-branch framework via implicit self-texture enhancement for arbitrary-scale histopathology image super-resolution. Sci Rep 15, 18883 (2025). https://doi.org/10.1038/s41598-025-02503-z

DOI: https://doi.org/10.1038/s41598-025-02503-z