Abstract

To address age and gender recognition in uncontrolled scenarios where the face is absent or severely occluded, this paper proposes a Spatial Correlation Guided Cross Scale Feature Fusion Network (SCGNet). Existing approaches rely heavily on facial features, which become unreliable under partial or complete occlusion. SCGNet integrates multi-granularity semantic features through a Cross-Scale Combination (CSC) module, enhances local detail representation with a Local Feature Guided Fusion (LFGF) module, and reorganizes features with a Spatial Correlation Composition Analysis (SCCA) module based on Getis-Ord Gi* statistics, which suppresses interference from non-informative regions. The SCCA module introduces a bipartite grouping mechanism that uses hotspot detection to preserve discriminative body features when facial cues are unavailable. Comprehensive experiments show that SCGNet achieves state-of-the-art performance, with the lowest Mean Absolute Error (MAE) of 4.01% for age estimation on IMDB-Clean (a 2.9% improvement over VOLO-D1) and the highest gender classification accuracy on the IMDB-Clean, UTKFace, and Lagenda datasets. Notably, the method maintains 97.32% gender accuracy under complete facial occlusion on the Lagenda dataset, validating the effectiveness of spatial correlation modeling for non-facial feature reasoning. In cross-___domain testing, the proposed architecture reaches 73.30% CS@5 for age prediction, demonstrating better cross-scene adaptability than VOLO (69.72%) and MiVOLO (71.27%). Ablation studies confirm the individual contributions of the CSC, LFGF, and SCCA modules. This research provides new insights for robust identity analysis in human-computer interaction and intelligent security applications.

Introduction

With the rapid development of artificial intelligence, and in particular of deep learning methods for estimating the age and gender of people in photos, computer-vision-based recognition of these attributes has become a popular research field1,2. It plays a crucial role in many real-world applications, such as retail and clothing recognition, surveillance cameras, personnel identification, and shopping malls. However, the task is very challenging in uncontrolled scenarios: image quality varies, faces appear at different angles and rotations, and faces may be partially occluded or entirely absent (for example, when only the back of the person is visible). Real-world applications also impose strict requirements on speed and accuracy. Our goal is to develop an efficient and accurate method that can estimate age and gender simultaneously, even when the face is invisible and only the back of the person is available.

In this paper, “gender recognition” refers to a well-established computer vision problem, specifically using binary classification to estimate the biological gender from photos. We acknowledge the complexity of gender recognition and related issues that cannot be resolved through the analysis of a single photo. We do not intend to cause harm or offense to anyone.

In computer vision, gender recognition is a classification task: the biological gender is estimated by analyzing photos with classification methods. Age estimation is more complex. It can be solved with regression methods or approached as classification. Many popular benchmarks and research papers3,4 treat age as a classification problem over specific age ranges. This treatment is not entirely accurate, because age is essentially a regression problem. In addition, age data are significantly imbalanced, as elaborated in reference5. Treating age as classification causes several non-negligible problems. For a classification model, misclassifying a sample into an adjacent category is penalized no differently from missing the true age by several decades. Moreover, as mentioned in5, classification models have great difficulty approximating age ranges from unseen categories. Regression models can, to some extent, approximate unseen age ranges, but they are harder to train, for two main reasons. First, because a regression model must fit the continuous change of age, it requires more data: samples must cover all age groups, and the data must reflect the age differences caused by factors such as different living environments. Second, collecting and cleaning datasets for such tasks is extremely challenging. The continuity and diversity of age make annotation and screening more delicate: it is necessary not only to annotate the age of each sample accurately but also to consider the influence of various factors on age in order to select representative and effective data.
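
The difference between the two views of age can be illustrated with a small sketch. The age bins below are hypothetical and chosen only for illustration; the cumulative score follows the usual definition (the fraction of samples whose absolute error does not exceed a threshold in years), which is our reading of the CS@5 metric rather than a definition given in this paper.

```python
# Hypothetical age bins, for illustration only; real benchmarks define their own.
AGE_BINS = [(0, 9), (10, 19), (20, 29), (30, 39), (40, 49), (50, 59), (60, 120)]


def age_to_bin(age):
    """Return the index of the bin that contains `age`."""
    for index, (low, high) in enumerate(AGE_BINS):
        if low <= age <= high:
            return index
    raise ValueError(f"age {age} is outside every bin")


def zero_one_error(true_age, predicted_age):
    """Classification view: 1 if the predicted bin is wrong, otherwise 0."""
    return int(age_to_bin(true_age) != age_to_bin(predicted_age))


def mean_absolute_error(true_ages, predicted_ages):
    """Regression view: average distance in years between truth and prediction."""
    errors = [abs(t - p) for t, p in zip(true_ages, predicted_ages)]
    return sum(errors) / len(errors)


def cumulative_score(true_ages, predicted_ages, threshold=5):
    """Fraction of samples whose absolute error is at most `threshold` years."""
    hits = [abs(t - p) <= threshold for t, p in zip(true_ages, predicted_ages)]
    return sum(hits) / len(hits)


true_age = 29
near_miss, far_miss = 31, 65  # off by 2 years and by 36 years

# Both mistakes cost the same under the classification view ...
print(zero_one_error(true_age, near_miss), zero_one_error(true_age, far_miss))
# ... while the regression metrics separate them.
print(mean_absolute_error([true_age], [near_miss]),
      mean_absolute_error([true_age], [far_miss]))
print(cumulative_score([true_age, true_age], [near_miss, far_miss]))
```

A prediction that lands in the neighbouring bin and one that is decades off are indistinguishable to the 0/1 error, whereas the absolute error and the cumulative score reflect the distance.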

In practical image detection tasks, the face is often not visible in the image6. For such images, we cannot rely on facial features alone; instead, we should extract features from other parts of the human body to assist age and gender detection, including limb movements and behaviors. Limb movements can reflect a person’s age: for example, the movements of young people may be more agile and lively, while those of the elderly may be slower and steadier. People of different ages and genders also behave differently. Extracting and analyzing this information provides additional evidence for age and gender detection and improves its accuracy and reliability.

In recent years, Vision Transformers (ViTs) have shown excellent performance in various computer vision applications. Inspired by the remarkable achievements of the Transformer architecture in Natural Language Processing (NLP), ViT divides the input image into a series of tokens and uses self-attention layers to capture the relationships between these tokens, ultimately generating rich representations for visual recognition tasks7,8,9. However, we observe that, compared with Convolutional Neural Networks (CNNs), for which spatial correlations between feature maps have been shown to exist10, ViTs have poor spatial correlations, and the features of deeper network layers carry less spatial content11,12,13. ViTs also have deficiencies in how patches are divided into tokens, especially in handling global and local information: adjacent tokens may not be spatially related in the original image, and when the dimension of the feature map changes, long-distance correlations weaken and spatial information is lost. Based on these findings, we propose Spatial Correlation Guided Cross Scale Feature Fusion for Age and Gender Estimation (SCGNet), which aims to strengthen the spatial correlation between patches and tokens, enhance the receptive field, retain local details, and strengthen the connection between local and global information. SCGNet consists of three consecutive modules designed to address these limitations of ViTs: Cross Scale Combination (CSC), Local Feature Guided Fusion (LFGF), and the Spatial Correlation based Combination and Analysis Module (SCCA). The CSC module enriches the feature representation by integrating multi-scale information: the channel dimension is expanded to a specified size while the spatial dimension is kept unchanged, which combines feature information of different scales and enriches the semantic content. This design enables comprehensive feature fusion but requires careful parameter balancing to maintain computational efficiency. The LFGF stage then reduces the spatial dimension of the feature map while keeping the channel dimension unchanged, balancing different spatial scales against local refinement and preserving the fine-grained details that are crucial for age-related feature extraction. Because the feature map entering the LFGF stage is already rich in semantic information, this stage focuses on local information and makes full use of its key features. Finally, to avoid fluctuating correlations in non-key regions, the SCCA stage uses a token-based correlation re-grouping method that prevents abnormal correlation scores in non-informative regions from degrading recognition performance. This also enhances the robustness of the ViT-based network and improves inference efficiency.

We consider the following popular benchmarks: IMDB-Clean14, UTKFace15, Adience3, FairFace4, AgeDB16, and Layer Age Gender (Lagenda)6. These are among the best-known computer vision datasets with real-world age and gender annotations. Our model was trained on images from these datasets and achieved state-of-the-art (SOTA) results. Moreover, our model can handle occlusion cases in which the face is invisible.

The main contributions of our work can be summarized as follows:

- We proposed a new network architecture named “Spatial Correlation Guided Cross Scale Feature Fusion for Age and Gender Estimation” and achieved SOTA in multiple datasets.
- The Cross Scale Combination module enriches the feature representation by integrating information from different scales, and the Local Feature Guided Fusion enhances the extraction of local detail information, achieving a dynamic balance between different spatial scales and local feature refinement.
- The SCCA module uses the spatial correlation between tokens and the method of token re-grouping to solve the interference from non-information areas and fully utilize the spatial information represented by different features.
- The model supports face-less input prediction by using features such as spatial correlation.

Related work

Transformer

Since the Transformer was introduced into visual processing tasks, it has achieved remarkable success in various aspects17,18,19. So far, most visual tasks based on the Transformer focus on improving the relevant accuracy, and many related variants have been proposed to enhance their performance18. ViTs divide the image into non-overlapping patches, linearly project these patches into a sequence of tokens, and process them using the Transformer encoder. In the evolution of the Vision Transformer (ViT) architecture, numerous innovative methods have emerged, aiming to improve its performance and efficiency. For example, the CvT, a hybrid model20, adds an additional convolutional layer before the Multi-Head Self-Attention (MHSA) module, skillfully integrating the internal inductive bias into the ViT architecture, enabling it to better capture local features and context relationships when processing image information. CeiT21, on the other hand, takes a unique approach. It uses the Image-to-Token (I2T) module to focus on extracting low-level features and replaces the traditional feed-forward network with a Local-enhanced Feed-Forward (LeFF) layer, significantly enhancing the local representation ability, allowing the model to focus more precisely on the details of the image.
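
The tokenization step described above (non-overlapping patches followed by a linear projection) can be sketched in a few lines of NumPy. This is a minimal illustration rather than the implementation of any specific model: the image size, patch size, and embedding width are assumed values, and the random matrix stands in for a learned projection.

```python
import numpy as np


def patchify(image, patch_size):
    """Split an (H, W, C) image into non-overlapping, flattened patches."""
    height, width, channels = image.shape
    if height % patch_size or width % patch_size:
        raise ValueError("image sides must be divisible by patch_size")
    rows, cols = height // patch_size, width // patch_size
    # (rows, p, cols, p, C) -> (rows, cols, p, p, C) -> (rows * cols, p * p * C)
    patches = image.reshape(rows, patch_size, cols, patch_size, channels)
    patches = patches.transpose(0, 2, 1, 3, 4)
    return patches.reshape(rows * cols, patch_size * patch_size * channels)


rng = np.random.default_rng(0)
image = rng.standard_normal((224, 224, 3))      # assumed input resolution
patches = patchify(image, patch_size=16)        # 14 x 14 = 196 patches

# Random stand-in for the learned linear projection (embedding width assumed).
projection = rng.standard_normal((patches.shape[1], 192)) * 0.02
tokens = patches @ projection                   # one token per patch

print(patches.shape, tokens.shape)
```

The resulting token sequence is what the Transformer encoder consumes; a class token and position embeddings, which ViT adds at this point, are omitted here.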

To enable ViTs to possess the excellent ability to learn multi-scale features, CrossViT22 innovatively adopts a dual-branch Transformer structure. By skillfully combining image patches of different sizes, it successfully fuses multi-scale information and then generates more powerful and rich image features, which can more comprehensively describe various features of the image. ViTAE23 effectively incorporates multi-scale context information into the model system by carefully designing reduction cells (RC) and normal cells (NC), enabling the model to flexibly respond to image content of different scales and accurately extract key features.

In the pursuit of efficient Vision Transformers, recent research has focused on optimizing the processing of tokens24,25,26,27, aiming to reduce the computational load while maintaining the model’s performance. ResT28 introduced an efficient self-attention module using overlapping depth-wise convolutions, improving computational efficiency while preserving the effectiveness of feature extraction. T2T-ViT29 uses the Token-to-Token (T2T) module to aggregate tokens, optimizing the way tokens are combined and enabling the model to process image information more efficiently. PiT30 reduces the spatial size through pooling layers, maintaining the model’s representational ability while reducing the computational complexity. Dynamic-ViT31 performs dynamic token pruning during inference, adjusting the allocation of computational resources according to the image content and further improving the model’s efficiency. CaiT32 optimizes the ViT architecture through layer scaling and class-attention mechanisms, enhancing the overall performance and generalization ability of the model. The simple token merging technique recently proposed by Bolya et al.33 can be applied without retraining the model, providing a simple and effective new idea for the optimization of the ViT architecture.

Hierarchical feature representation

In the field of dense prediction tasks, hierarchical feature representation plays a crucial role, and its importance has triggered extensive exploration and research enthusiasm in the academic community. Currently, the research methods in this field can be mainly divided into two categories: fixed-grid methods and dynamic-feature-based methods.

Fixed-grid methods such as PVT (Pyramid vision transformer: A versatile backbone for dense prediction without convolutions) and Swin (Swin transformer: Hierarchical vision transformer using shifted windows) use 2D convolutions to merge patches within adjacent window regions. Although this approach achieves a certain degree of feature integration, it has obvious limitations: because of uniform window partitioning, the receptive field is rigid and small, which makes it difficult to capture information over a wide area of the image. While dynamic methods like DynamicViT and Token Merging improve efficiency through adaptive token selection, they exhibit two fundamental limitations: (1) The token pruning criteria based on activation magnitudes (e.g., class attention scores) tend to discard low-response but semantically important features, which is particularly problematic for subtle age-related texture patterns. (2) The lack of spatial significance modeling leads to suboptimal merging of tokens from disparate anatomical regions (e.g., merging facial wrinkles with clothing textures), disrupting the spatial-semantic consistency crucial for analysis. Moreover, enlarging the grid size of fixed-grid methods to expand the receptive field sharply increases computational cost, greatly reducing the efficiency of the model in practical applications.

Among dynamic-feature-based methods, DynamicViT (DynamicViT: Efficient vision transformers with dynamic token sparsification) adaptively extracts features by eliminating redundant patches and retaining key patches, and then constructs hierarchical feature maps. On this basis, EViT (Not all patches are what you need: Expediting vision transformers via token reorganizations) further optimizes this method. By selecting the top K tokens with the highest average values among all heads for the next stage and merging the remaining tokens, the feature extraction becomes more efficient and targeted. PS-ViT (Vision transformer with progressive sampling) iteratively adjusts the center of the patch towards the object, thereby enriching the object information in the hierarchical feature map and enabling the model to more accurately grasp the features of the target object. Token Merging (Token merging: Your ViT but faster) uses cosine similarity to gradually merge similar tokens, significantly improving the model’s throughput and its efficiency when processing large-scale data.
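
The keep-and-fuse strategy described for top-K token selection can be sketched as follows. This is a simplified illustration under our own assumptions, not the published implementation of any of the cited methods: the per-token importance scores are passed in directly (in practice they would come from attention averaged over heads), and the function name `keep_top_k_tokens` is hypothetical.

```python
import numpy as np


def keep_top_k_tokens(tokens, scores, k):
    """Keep the k highest-scoring tokens and fuse the rest into one token.

    tokens: (n, d) array of token embeddings.
    scores: (n,) array of non-negative importance scores (assumed given).
    Returns the reduced token array and the indices of the kept tokens.
    """
    order = np.argsort(scores)[::-1]           # indices, highest score first
    kept, dropped = order[:k], order[k:]
    output = tokens[kept]
    if dropped.size:
        # Score-weighted average of the discarded tokens.
        weights = scores[dropped] / (scores[dropped].sum() + 1e-12)
        fused = weights @ tokens[dropped]
        output = np.vstack([output, fused])
    return output, kept


tokens = np.arange(12, dtype=float).reshape(6, 2)
scores = np.array([0.05, 0.4, 0.1, 0.3, 0.05, 0.1])
reduced, kept = keep_top_k_tokens(tokens, scores, k=2)
print(kept, reduced.shape)
```

Six tokens are reduced to three: the two with the highest scores plus one fused token. The sketch also makes the drawback discussed in this section concrete, since a low-scoring token only survives as a small contribution to the fused token.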

However, although dynamic-feature-based methods have the advantage of adaptability, they also have non-negligible disadvantages. Since they may discard some tokens that, although having low scores, actually contain valuable information during the token screening process, it causes a certain degree of information loss. Moreover, such methods often lack the ability for end-to-end training and cannot fully utilize the global information of the data for the overall optimization of the model.

In contrast, we propose the Spatial Correlation Guided Cross Scale Feature Fusion for Age and Gender Estimation (SCGNet). This method contains three key modules: The SCCA module uses the patch-correlation re-grouping method to solve the problem of correlation fluctuations in non-key areas, enhance the robustness and inference efficiency of the ViT model, and stabilize the connection between local and global information. The CSC module increases the channel dimension while maintaining the spatial dimension unchanged, combines feature information of different scales to enrich the semantics, and improves the comprehensive discrimination ability of gender and age features. The LFGF module reduces the spatial dimension of the feature map while keeping the channel dimension unchanged, and uses the semantically rich input to strengthen the processing of local features and precisely capture the subtle differences in gender and age. This method can be directly applied to recognition tasks, and it improves performance with unique advantages, providing a new and efficient solution for this field.

Age and gender recognition

In the research field of facial age and gender recognition, dealing with the gender recognition task alone often fails to attract sufficient attention in academic research and commercial application scenarios. Therefore, current mainstream research methods and related benchmark settings usually tend to deal with both age and gender recognition tasks simultaneously or focus on the single dimension of age recognition.

Convolutional Neural Networks (CNNs) have long dominated many computer vision tasks and represent advanced technical levels, although in recent years, they have faced the trend of being replaced by other technologies in some specific tasks. Levi et al.34 were the first to apply CNNs to the field of facial age and gender recognition and evaluated their method on the representative Adience dataset3, where both age and gender were set as classification categories. The network architecture they constructed was a convolutional model with two fully-connected layers, achieving a certain accuracy in age recognition.

With the continuous development and major breakthroughs of neural network technology in the field of computer vision, a large number of innovative methods have emerged. Some methods explore based on face recognition technology and related models35, strongly demonstrating the powerful effectiveness of general-purpose face models in adapting to downstream tasks. Some research papers36 even adopt more general-purpose model architectures, such as VGG1637, as encoders in ordinal regression methods for age estimation, while other methods are committed to using CNN networks to achieve direct classification or regression tasks in age recognition38,39. Up to now, the cutting-edge model for age classification on the Adience dataset uses the Attention LSTM Networks method40 and achieves an accuracy of 67.47%. However, this model has not extended its application to the field of gender prediction.

In the research direction of using facial and body images for recognition, the vast majority of age or gender estimation methods mainly rely on facial analysis technology. Although there are some studies that consider using body features for age41 or gender42 recognition, only in very limited work43 are facial and body images used for joint recognition tasks. This makes it difficult to obtain a complete baseline model as a research starting point from open-source resources. Only a few research projects use personal full-body images for recognition analysis. The earliest attempt44 can be traced back to the period when neural networks were not widely used. At that time, classical image processing techniques were mainly used to predict age. Paper45 uses facial and body images but adopts a late-fusion method and is only applied to the specific task of gender prediction. MiVOLO6 shows unique advantages in handling the joint recognition of facial and body images. It can more effectively integrate information from different modalities, further improving the accuracy and reliability of recognition and providing new ideas and methods for research in this field.

Proposed method

Overview

In this paper, we propose a method named Spatial Correlation Guided Cross Scale Feature Fusion for Age and Gender Estimation (SCGNet), aiming to strengthen the spatial correlation between patches, enhance the receptive field, retain local details, and strengthen the connection between local and global information. The SCGNet method consists of three consecutive frameworks: Cross Scale Combination (CSC), Local Feature Guided Fusion (LFGF), and Spatial Correlation-based Combination and Analysis Module (SCCA). In the CSC stage, the number of different channel dimensions is increased to a specified size while maintaining the spatial dimension unchanged, effectively combining feature information of different scales and enriching the semantic content. Subsequently, in the LFGF stage, we reduce the spatial dimension of the feature map while keeping the channel dimension unchanged. Given that the feature map input to the LFGF stage is already rich in semantic information, this stage focuses on the processing of local information and makes full use of the key features of local information. In order to avoid fluctuations in correlations in non-key information areas, in the SCCA stage, we use a token-based correlation re-grouping method to solve the problem of interference from abnormal correlation scores in non-information areas on the recognition performance. At the same time, the robustness of the network based on the ViT model is enhanced, and the inference efficiency is improved. The overall network structure is shown in Fig. 1.

Patch enhancement module

In order to strengthen the spatial correlation between patches, enhance the receptive field, retain local details, and strengthen the connection between local and global information, this study proposes a patch enhancement module (shown as the green block in Fig. 1). This innovative framework consists of two core modules: the Cross-Scale combination (CSC) module and the Local Feature Guided Fusion (LFGF) module. Its design aims to effectively address the challenges brought about by changes in the dimensions of the feature map. The CSC module enhances feature diversity by expanding the feature channel dimension and establishing long-range dependencies, effectively combining feature information of different scales and enriching the semantic content. Complementing it is the LFGF module, which innovatively introduces context-aware guiding tokens within the local receptive field, thus achieving the refinement and enhancement of local features. This dual-module collaborative architecture organically integrates the advantages of global structure understanding and local detail optimization, providing a significant performance improvement for downstream dense prediction tasks such as age and gender recognition.

Cross scale combination (CSC) module

We propose the Cross Scale Combination (CSC) module to enhance feature diversity by expanding the feature channels and establishing long-range dependencies. It models long-distance dependencies by combining feature information of different scales, and it processes local and global features with receptive fields of different sizes to enrich the semantic information. The CSC aims to effectively model long-range dependencies through a multi-level and multi-scale information processing architecture. The design of this module is based on the collaborative integration of local and global features: first, fine-grained information is captured by local feature extraction units with a smaller receptive field; then this local information is integrated across regions by high-order feature integration units with a larger receptive field, so as to capture complex spatial patterns and object features. At the same time, the long-range information interaction mechanism built into the module supports the efficient integration of features from different regions, providing an important basis for overall scene understanding. In addition, the parallel multi-scale feature extraction structure in the module can process local details and global features simultaneously. In the age estimation task, features of different body regions are sensitive at different scales: facial wrinkles (local fine-grained features), hairstyle contours (medium-scale structural features), and limb postures (global semantic features). The CSC module uses multi-scale and multi-granularity feature extraction. This design can help: (1) Capture local facial features such as the density of the texture around the eyes, which is sensitive to age differences within 10 years. (2) Identify curves such as hairstyle boundaries, solving the problem of gender feature compensation in occluded scenes. (3) Establish long-range age dependencies through large-scale content simultaneously.

Based on the above mechanism, the CSC module adopts an efficient multi-scale feature extraction strategy, as shown in Fig. 2. Specifically, the module first divides the input channels c into N independent pieces. Each piece performs convolutional operations with different receptive fields in the depth direction. This design not only significantly reduces the number of parameters and computational complexity but also realizes the effective extraction of multi-granularity features. Subsequently, the module expands the receptive field by introducing larger-scale convolutional kernels, thereby enhancing the ability to model long-range dependencies. On this basis, we designed a channel feature refinement module to refine and reorganize features of different scales among channels and achieve the deep fusion of multi-scale features through a set of linear projection layers with independent parameters. Finally, the module concatenates the outputs of N pieces (P) and adjusts the number of channels to the target dimension layer \(C'\) through the final linear projection. The number of linear projections is C/N.

Through the efficient modeling mechanism of long-range dependencies, the CSC module can more effectively capture and integrate key feature representations, thus showing significant performance advantages in complex visual recognition tasks. The mathematical expressions of this module are as follows:

where \(X = [x_1, x_2, \ldots , x_N ]\) represents the input X split into multiple pieces along the channel dimension, and \(x_{n} \in R^{B\times H\times W\times \frac{C}{N}}\) denotes the n-th piece. The kernel size of the depth-wise convolution for the n-th piece is denoted by \(c_{n} \in \{k1, k2, \ldots , kN\}\). FR represents the feature refine module. Here, \(P_{n} \in R^{B\times H\times W\times \frac{C}{N}}\) represents the n-th piece after being processed by the depth-wise convolution (with output channels = input channels) and feature refine, and \(P^{c}_{FR}\) represents the c-th channel in the n-th piece. \(W^{C} \in R^{N\times N}\) is the weight matrix of the linear projection. \(G^{C} \in R^{B\times H\times W\times N}\). Finally, \(W \in R^{C\times C'}\) is the weight matrix of the linear projection that adjusts the number of channels to the specified \(C'\). In order to achieve the purpose of refining and reorganizing features of different scales among channels, we designed a feature-refine module. By calculating the intra-region correlations, it reduces the impact of mis-segmented information, filters out useless information, and extracts significant features. The module structure is shown in Fig. 3.
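
To make the data flow of the definitions above concrete, the following NumPy sketch follows our reading of the CSC module: the channels are split into N pieces, each piece passes through a depth-wise convolution with its own kernel size, the c-th channels of all pieces are mixed by an \(N\times N\) projection, and a final projection maps C channels to \(C'\). It is an illustration under stated assumptions, not the authors' implementation: the batch dimension is dropped, the feature refine module is omitted, random weights stand in for learned ones, and the kernel sizes and function names are hypothetical.

```python
import numpy as np


def depthwise_conv(x, kernels):
    """Depth-wise 2D convolution with zero padding and unchanged output size.

    x: (H, W, C) feature map; kernels: (k, k, C) with odd k, one filter per channel.
    """
    k = kernels.shape[0]
    pad = k // 2
    padded = np.pad(x, ((pad, pad), (pad, pad), (0, 0)))
    out = np.zeros_like(x)
    for dy in range(k):
        for dx in range(k):
            window = padded[dy:dy + x.shape[0], dx:dx + x.shape[1], :]
            out += window * kernels[dy, dx, :]
    return out


def cross_scale_combination(x, kernel_sizes, out_channels, rng):
    """Sketch of the CSC data flow for one (H, W, C) feature map."""
    height, width, channels = x.shape
    n = len(kernel_sizes)
    if channels % n:
        raise ValueError("channels must be divisible by the number of pieces")
    piece_width = channels // n

    pieces = []
    for index, k in enumerate(kernel_sizes):
        piece = x[:, :, index * piece_width:(index + 1) * piece_width]
        kernels = rng.standard_normal((k, k, piece_width)) / (k * k)
        pieces.append(depthwise_conv(piece, kernels))  # P_n (feature refine omitted)

    stacked = np.stack(pieces, axis=-1)                # (H, W, C/N, N)
    # One N x N projection per channel index c, giving C/N projections in total.
    mix = rng.standard_normal((piece_width, n, n)) / np.sqrt(n)
    grouped = np.einsum("hwcn,cnm->hwcm", stacked, mix)
    fused = grouped.reshape(height, width, channels)

    # Final projection that adjusts the channel count from C to C'.
    final = rng.standard_normal((channels, out_channels)) / np.sqrt(channels)
    return fused @ final


rng = np.random.default_rng(0)
features = rng.standard_normal((8, 8, 64))
output = cross_scale_combination(features, kernel_sizes=(3, 5, 7, 9),
                                 out_channels=96, rng=rng)
print(output.shape)
```

The spatial size is unchanged while the channel dimension grows from 64 to 96, which matches the stated behaviour of the CSC stage.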

For matching tasks such as age and gender recognition, whether it is facial feature information or human body parsing parts, we hope to minimize the probability error of similar features. Therefore, we consider that the ratio of the value corresponding to a pixel in the network feature map to the corresponding category is the category contribution of the current pixel. The larger the contribution, the more significant the feature. The pixel values in the feature map output by the original network are converted into corresponding category prediction probabilities, and then the contribution value of the pixel point to the entire category prediction is calculated. The prediction contribution value is the pixel value divided by the total pixel prediction of the entire category, as shown in the following formula:

where p(x) is the output value of the pixel. First, the feature map is unfolded into a two-dimensional form and passed through the Softmax function; the contribution value at the current position is then calculated and multiplied by the current pixel value to obtain the corresponding significant feature s(x), as shown in the following formula:

where \(x_i\) and \(x_j\) are pixel values, Q(x) is the value obtained after the current pixel passes through the Softmax function, and M(x) is the new feature value after feature refinement. Feature refinement retains the pixels with large feature contributions and sets those with small contributions to zero, yielding a new feature matrix \(F'\). Then \(F'\) is multiplied by the original feature map F to obtain the significant feature matrix.
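
The refinement step can be sketched as below. This is one possible reading of the description, for a single-channel feature map: the Softmax over all positions gives each pixel's share of the total response, pixels whose share falls below a cut-off are zeroed to form \(F'\), and \(F'\) is multiplied element-wise with F. The cut-off is not specified in the text; using the uniform share 1/(HW) as the default is our assumption.

```python
import numpy as np


def feature_refine(feature_map, threshold=None):
    """Keep high-contribution pixels of an (H, W) map and zero out the rest."""
    height, width = feature_map.shape
    flat = feature_map.reshape(-1)

    # Softmax over all positions: each pixel's share of the total response.
    exp = np.exp(flat - flat.max())
    contribution = exp / exp.sum()

    if threshold is None:
        threshold = 1.0 / flat.size   # assumed cut-off: the uniform share

    # F': contributions below the cut-off are set to zero.
    refined = np.where(contribution >= threshold, contribution, 0.0)

    # Salient features: F' multiplied element-wise with the original map F.
    return (refined * flat).reshape(height, width)


feature_map = np.array([[0.0, 1.0],
                        [2.0, 3.0]])
salient = feature_refine(feature_map)
print(salient)
```

In this toy example only the strongest pixel exceeds the uniform share of 0.25, so it is the only position that remains non-zero.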

Local feature guided fusion (LFGF)

After obtaining features at different scales through the CSC module, we apply the Local Feature Guided Fusion (LFGF) module. It integrates information from local and global contexts: local features are refined using the context and feedback provided by the preceding modules, so that fine details are fully used and interpreted in the current context. This hierarchical processing connects local and global aspects and allows more refined and accurate feature extraction. LFGF thus serves as a local-global feature enhancer that retains context-feature awareness to guide tokens, as shown in Fig. 4.

Gender recognition relies on local details such as clothing textures (for example, neckline and cuff styles) and body shape contours (for example, the shoulder-to-waist ratio), but the global attention of a traditional ViT is easily disturbed by the background. The LFGF module therefore builds local-aware tokens through a guide generator module (GGM), which guides the local feature A to generate an attention token \(A'\). This token undergoes the same self-attention operation as the previous features, providing higher-level semantic support for local features in the subsequent global pooling stage and ensuring that the extracted features connect the local and the global. Formally, given an input \(X\in \mathbb{R}^{C\times H\times W}\), the GGM generates a set of feature maps through depth-wise convolution and produces the corresponding guiding feature \(X'\) through a linear operation, as shown below:

Spatial correlation-based combination and analysis module (SCCA)

The Multi-Head Self-Attention (MHSA) mechanism is a core component of the Transformer architecture, enabling dynamic context-aware interactions between input tokens. Given an input sequence \({\bf{X}}\in {\mathbb {R}}^{N\times d}\) of N tokens, each with a d-dimensional embedding, MHSA first projects X into query Q, key K, and value V matrices via learnable linear transformations. For each of the h parallel attention heads, the scaled dot-product attention is computed as:

where \(S_{QK}\) is the corresponding attention score,

where \({\bf{Q}}_i={\bf{X}}{\bf{W}}_i^Q,{\bf{K}}_i={\bf{X}}{\bf{W}}_i^K,{\bf{V}}_i={\bf{X}}{\bf{W}}_i^V\), with \({\bf{W}}_i^Q,{\bf{W}}_i^K,{\bf{W}}_i^V\in {\mathbb {R}}^{d\times d/h}\). The outputs of all heads are concatenated and linearly projected:

This multi-head design allows the model to jointly attend to diverse relational patterns from different representation subspaces.
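For reference, the standard multi-head self-attention formulation that the definitions above appear to follow can be written as below. The use of \(S_{QK}\) for the scaled score matrix of one head and the output projection symbol \({\bf{W}}^O\) are assumptions based on the usual Transformer notation, not a transcription of the original equations.

```latex
\begin{aligned}
S_{QK} &= \frac{{\bf{Q}}_i {\bf{K}}_i^{\top}}{\sqrt{d/h}}, \\
\mathrm{head}_i &= \mathrm{softmax}\left(S_{QK}\right) {\bf{V}}_i, \\
\mathrm{MHSA}({\bf{X}}) &= \mathrm{Concat}\left(\mathrm{head}_1, \dots, \mathrm{head}_h\right) {\bf{W}}^{O},
\qquad {\bf{W}}^{O} \in {\mathbb{R}}^{d \times d}.
\end{aligned}
```

Here the softmax is applied row-wise, so each token's attention weights over all N tokens sum to one, and the scaling by \(\sqrt{d/h}\) matches the per-head dimension of the projections defined above.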

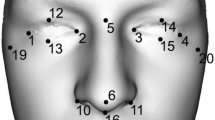

In spatial analysis of deep neural networks, explicitly modeling the concentration patterns of high-response features can enhance the model’s sensitivity to task-specific regions (e.g., facial landmarks for age/gender recognition). Getis-Ord \(G_i^*\) provides a directional measure of local concentration intensity, quantifying how strongly a ___location is surrounded by statistically significant high or low values, which is a critical property for identifying discriminative regions in visual recognition tasks. It is therefore well suited to capturing locally significant aggregation regions (such as high-response feature hotspots) and can efficiently guide the merging of key tokens.

\(G_{i}^*\)-driven token selection

First, calculate the local \(G_{i}^*\) score. For the input feature map \(X\in R^{N\times d}\), calculate its corresponding \(G_{i}^*\) score:

where \(a_j=\Vert {\bf{x}}_j\Vert _2\) is the token activation intensity and \(w_{i,j}=\textrm{softmax}({\bf{x}}_i^T{\bf{x}}_j/\sqrt{d})\) are the attention-driven spatial weights. Next, dynamic thresholds are generated: the hotspot regions are determined automatically from the distribution of the \(G_{i}^*\) scores:

where \(\mu _G\) and \(\sigma _G\) are the mean and standard deviation of the current \(G_{i}^*\) scores, and \(\lambda\) is a learnable parameter (initial value 1.0). Finally, screen the candidate tokens:

Significant hotspot (\(G_{i}^*>\tau _{\textrm{hot}}\)) or coldspot (\(G_{i}^*<\tau _{\textrm{cold}}\)) tokens are selected directly, without the need for explicit segmentation into Set A/B. Specifically, the SCCA module can dynamically identify two key kinds of body region. The first is age-related hotspots, such as the texture of hand joints, where the skin folds of elderly samples show high-frequency responses. The second is gender-related coldspots, where there are kinematic differences in gait between genders.
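The token selection procedure above can be sketched in plain Python. Several points are assumptions rather than details confirmed by the text: the score uses the standard closed form of the Getis-Ord \(G_i^*\) statistic with \(a_j\) and \(w_{i,j}\) as defined above; the thresholds are taken as \(\tau_{hot}=\mu_G+\lambda\sigma_G\) and \(\tau_{cold}=\mu_G-\lambda\sigma_G\) with a population standard deviation; \(\lambda\) is a fixed float here rather than a learnable parameter; and the function names `gi_star_scores` and `select_hot_cold` are hypothetical.

```python
import math

def gi_star_scores(tokens):
    """Getis-Ord Gi* score per token (needs at least two tokens).

    tokens: list of N equal-length float vectors.
    Weights w_ij are a softmax over scaled dot products, as in the text.
    """
    n, d = len(tokens), len(tokens[0])
    act = [math.sqrt(sum(v * v for v in t)) for t in tokens]   # a_j = ||x_j||_2
    mean_a = sum(act) / n
    var_a = max(sum(a * a for a in act) / n - mean_a ** 2, 0.0)
    std_a = math.sqrt(var_a)
    scores = []
    for xi in tokens:
        logits = [sum(p * q for p, q in zip(xi, xj)) / math.sqrt(d) for xj in tokens]
        peak = max(logits)
        exps = [math.exp(v - peak) for v in logits]
        total = sum(exps)
        w = [e / total for e in exps]                           # w_ij, sums to 1
        sum_w = sum(w)
        sum_w2 = sum(v * v for v in w)
        num = sum(wj * aj for wj, aj in zip(w, act)) - mean_a * sum_w
        spread = max((n * sum_w2 - sum_w ** 2) / (n - 1), 0.0)
        den = std_a * math.sqrt(spread)
        scores.append(num / den if den > 1e-12 else 0.0)       # guard flat inputs
    return scores

def select_hot_cold(scores, lam=1.0):
    """Return indices of hotspot and coldspot tokens under assumed thresholds."""
    n = len(scores)
    mu = sum(scores) / n
    sigma = math.sqrt(sum((s - mu) ** 2 for s in scores) / n)
    tau_hot, tau_cold = mu + lam * sigma, mu - lam * sigma
    hot = [i for i, s in enumerate(scores) if s > tau_hot]
    cold = [i for i, s in enumerate(scores) if s < tau_cold]
    return hot, cold

if __name__ == "__main__":
    toy_scores = [0.0, 0.0, 0.0, 0.0, 5.0, -5.0]
    print(select_hot_cold(toy_scores))   # token 4 is hot, token 5 is cold
```

Tokens that fall between the two thresholds are left out of both lists; in the description that follows they correspond to the retained non-significant tokens.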

\(G_i^*\)-aware bipartite grouping

1. Improvement of similarity measurement

\(G_i^*\) is introduced as a penalty term in bipartite matching with the aim of preferentially merging tokens with similar spatial significance. The similarity measurement formula is:

where \(\eta\) is the balance factor, with a default value of 0.3. This formula represents the similarity between two tokens \({\bf{x}}_i\) and \({\bf{x}}_j\). It not only takes into account their cosine similarity but also introduces the difference in \(G_i^*\) values as a penalty term. In this way, tokens with similar spatial significance are more likely to be merged, thus better capturing the consistency of spatial features.

2. Matching and merging

- Randomly divide the set \(\mathscr {A}\) into two subsets, \(\mathscr {A}_1\) and \(\mathscr {A}_2\).

- For each \({\bf{x}}_i \in \mathscr {A}_1\), find \({\bf{x}}_j\) in \(\mathscr {A}_2\) to maximize \(Sim({\bf{x}}_i, {\bf{x}}_j)\). In this step, the above-improved similarity measurement is used to find the token that best matches \({\bf{x}}_i\).

- Merge the matching pairs. The merged token \({\bf{x}}_{merged}\) is calculated as: \({\bf{x}}_{merged} = ({\bf{x}}_i + {\bf{x}}_j)/2\), that is, the average of the two matching tokens is taken as the new token.

- Unmatched tokens are retained as residuals, and these unmatched tokens will be considered separately in subsequent processing.
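The matching and merging steps listed above can be sketched as follows. This is an illustration under stated assumptions, not the authors' code: the similarity is taken to be cosine similarity minus \(\eta\) times the absolute \(G_i^*\) gap (one form consistent with the "penalty term" description), the matching is greedy and one-to-one so that leftover tokens become residuals, and the names `gi_aware_similarity` and `match_and_merge` as well as the `seed` parameter are hypothetical.

```python
import math
import random

def cosine(u, v):
    """Cosine similarity of two vectors (0.0 if either has zero norm)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm > 0 else 0.0

def gi_aware_similarity(xi, xj, gi, gj, eta=0.3):
    """Assumed form: cosine similarity penalised by the Gi* difference."""
    return cosine(xi, xj) - eta * abs(gi - gj)

def match_and_merge(tokens, gi_scores, eta=0.3, seed=0):
    """Randomly split candidates into A1/A2, match greedily, average pairs.

    Returns (merged, residual). A1 is never larger than A2, so every A1
    token finds a partner; unused A2 tokens are kept as residuals.
    """
    order = list(range(len(tokens)))
    random.Random(seed).shuffle(order)           # random split of the set
    half = len(order) // 2
    a1, a2 = order[:half], order[half:]
    unused = set(a2)
    merged = []
    for i in a1:
        # Best still-unmatched partner in A2 under the penalised similarity.
        j = max(sorted(unused), key=lambda k: gi_aware_similarity(
            tokens[i], tokens[k], gi_scores[i], gi_scores[k], eta))
        unused.remove(j)
        merged.append([(a + b) / 2 for a, b in zip(tokens[i], tokens[j])])
    residual = [tokens[j] for j in sorted(unused)]
    return merged, residual

if __name__ == "__main__":
    toks = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, 0.0], [0.0, 3.0]]
    g = [0.5, -0.5, 0.0, 1.0, -1.0]
    merged, residual = match_and_merge(toks, g)
    print(len(merged), len(residual))   # two merged pairs, one residual token
```

A greedy one-to-one assignment is only one way to realise "find the best match"; allowing several tokens of the first subset to share a partner would change which tokens end up as residuals.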

3. Output reorganization

The final output \({\bf{X}}_{out}\) is obtained by concatenating the merged token set \({\bf{X}}_{merged}\), the residual token set \({\bf{X}}_{residual}\), and the stable token set \({\bf{X}}_{stable}\): \({\bf{X}}_{out} = Concat({\bf{X}}_{merged}, {\bf{X}}_{residual}, {\bf{X}}_{stable})\), where \({\bf{X}}_{stable} = \{{\bf{x}}_j | \tau _{cold} \le G_j^* \le \tau _{hot} \}\) is the set of retained non-significant tokens, and \(\tau _{cold}\) and \(\tau _{hot}\) are the thresholds for cold spots and hot spots, respectively. This reorganization lets the model draw on information from all token types, providing richer and more effective inputs for subsequent processing. Overall, the Getis-Ord \(G_i^*\)-aware bipartite grouping takes spatial significance into account when merging tokens, which helps improve model performance on tasks such as age and gender recognition. The pseudo-code of the main process of SCGNet is as follows:

Experiments and results

Evaluation metrics

Our code is written with the PyTorch library and uses the Transformer as the base architecture. Following MiVOLO, we use classification accuracy to evaluate the performance of gender prediction and age prediction.

In the regression test of the age benchmark, the performance of the model is evaluated based on two indicators: Mean Absolute Error (MAE) and Cumulative Score (CS). MAE is calculated by computing the average absolute difference between the predicted ages and the true age labels in the test dataset. CS is calculated using the following formula:

where N represents the total number of samples in the test dataset, and \(N_{l}\) represents the number of samples for which the absolute error between the estimated age and the true age does not exceed l years.
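Both age metrics are simple to compute. The sketch below assumes the usual definition \(CS(l) = N_l / N \times 100\%\), which is consistent with the symbols defined above; the function names and the toy ages are illustrative and are not taken from the paper's experiments.

```python
def mean_absolute_error(predicted, actual):
    """Average absolute difference between predicted and true ages."""
    return sum(abs(p - t) for p, t in zip(predicted, actual)) / len(actual)

def cumulative_score(predicted, actual, l=5):
    """CS@l: percentage of samples whose absolute error is at most l years."""
    n_l = sum(1 for p, t in zip(predicted, actual) if abs(p - t) <= l)
    return 100.0 * n_l / len(actual)

if __name__ == "__main__":
    predicted = [23, 31, 40, 58]
    actual = [25, 30, 48, 60]
    # Absolute errors are 2, 1, 8 and 2 years.
    print(mean_absolute_error(predicted, actual))    # 3.25
    print(cumulative_score(predicted, actual, l=5))  # 75.0
```

A single large error (8 years here) raises MAE but only removes one sample from CS@5, which is why the two metrics are reported together.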

Experiments on open-source datasets

First, we conducted experiments on the IMDB-Clean, UTKFace, and Lagenda datasets. For data augmentation, we applied random cropping, random horizontal flipping, label smoothing regularization, and random erasing to improve the robustness and generalization ability of the model.

During training, we used the AdamW optimizer (Loshchilov and Hutter, 2017) with a momentum parameter of 0.9 and an initial learning rate of \(1\times 10^{-3}\), which was gradually decreased following the cosine annealing schedule (Loshchilov and Hutter, 2016). The model was trained from scratch for 300 epochs on each dataset with a batch size of 128. Following MiVOLO, we experimented with multiple loss functions and parameter settings, including Weighted Focal Mean Squared Error loss (WeightedFocalMSE loss) and Weighted Huber loss (WeightedHuber loss); the simple Weighted Mean Squared Error (WeightedMSE) achieved the best performance. The results for age-only prediction are shown in Table 1.
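To make the schedule and loss concrete, the following sketch evaluates a cosine annealing learning rate and a weighted MSE in plain Python. The initial rate and epoch count come from the text; the minimum learning rate of 0, the absence of warm restarts, the per-sample weights, and the normalisation by the sum of weights are assumptions, and the function names are hypothetical. In PyTorch the same schedule is typically obtained with `torch.optim.lr_scheduler.CosineAnnealingLR`.

```python
import math

def cosine_annealing_lr(epoch, total_epochs=300, base_lr=1e-3, min_lr=0.0):
    """Learning rate at a given epoch under cosine annealing (no restarts)."""
    cos_term = math.cos(math.pi * epoch / total_epochs)
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + cos_term)

def weighted_mse(predicted, target, weights):
    """Weighted mean squared error with hypothetical per-sample weights."""
    total = sum(w * (p - t) ** 2 for p, t, w in zip(predicted, target, weights))
    return total / sum(weights)

if __name__ == "__main__":
    for epoch in (0, 75, 150, 225, 300):
        print(epoch, cosine_annealing_lr(epoch))
    # Up-weighting the second sample makes its error dominate the loss.
    print(weighted_mse([30.0, 62.0], [31.0, 70.0], [1.0, 3.0]))
```

The rate starts at the initial value, passes through half of it at the midpoint of training, and reaches the assumed minimum at the final epoch.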

As demonstrated in Table 1, SCGNet achieves improvements over baseline models. For instance, when trained on Lagenda and tested on UTKFace, SCGNet reduces Age MAE by 4.62% (3.72 vs. 3.90) and improves Age CS@5 by 2.88% (74.33% vs. 72.25%) compared to VOLO-D1. Furthermore, on the UTKFace test set with the same training data, SCGNet outperforms MWR, Randomized Bins, and CORAL by 6.64%, 10.33%, and 24.30% in Age MAE, respectively.
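The percentages quoted above are relative changes with respect to the baseline, not absolute differences. The short check below reproduces the two VOLO-D1 comparisons from the values given in the text; the helper names are illustrative.

```python
def relative_reduction(baseline, ours):
    """Percentage by which `ours` is lower than `baseline` (for errors such as MAE)."""
    return 100.0 * (baseline - ours) / baseline

def relative_gain(baseline, ours):
    """Percentage by which `ours` is higher than `baseline` (for scores such as CS@5)."""
    return 100.0 * (ours - baseline) / baseline

if __name__ == "__main__":
    # Age MAE: 3.90 (VOLO-D1) vs. 3.72 (SCGNet)
    print(round(relative_reduction(3.90, 3.72), 2))   # 4.62
    # Age CS@5: 72.25% (VOLO-D1) vs. 74.33% (SCGNet)
    print(round(relative_gain(72.25, 74.33), 2))      # 2.88
```

For CS@5 this means the absolute difference is 2.08 percentage points, which corresponds to the reported 2.88% relative improvement.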

The results of predicting age and gender are shown in Table 2.

As shown in Table 2, SCGNet achieves consistent improvements over VOLO-D1 in both age estimation and gender classification. When trained on Lagenda and tested on Lagenda, SCGNet reduces Age MAE by 3.16% (3.98 vs. 4.11) and improves Gender Accuracy by 0.44% (97.32% vs. 96.89%). On the UTKFace test set with UTKFace training data, SCGNet outperforms VOLO-D1 by 3.55% in Age MAE (4.08 vs. 4.23) and 1.19% in Gender Accuracy (98.85% vs. 97.69%). Notably, SCGNet surpasses MWR, Randomized Bins, and CORAL by 6.64%, 10.33%, and 24.30%, respectively, in Age MAE on UTKFace test data. Table 3 compares the method proposed in this paper with single-input VOLO and multi-input MiVOLO.

As can be seen from Table 3, the method proposed in this paper outperforms VOLO and MiVOLO in experiments using only facial images, only body images, and combined facial and body images. SCGNet achieves 22.64% lower MAE (3.28 vs. 4.24) and 2.85% higher CS@5 (73.30% vs. 71.27%) than MiVOLO-D1 on IMDB test data. For body-only input, SCGNet shows an 18.83% MAE reduction (3.75 vs. 4.62) on UTKFace compared to MiVOLO-D1. Remarkably, when trained on Lagenda data, SCGNet attains 99.65% gender accuracy with combined body and face input (vs. 97.36% for MiVOLO-D1), demonstrating robust cross-modal synergy.

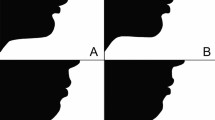

The results show that, with combined facial and body images, the spatial-correlation-guided cross-scale feature fusion proposed in this paper, which uses spatial correlations to perform Cross Scale Combination of facial and body features, achieves excellent recognition performance without cropping the face as MiVOLO does. We also removed all facial feature information from the datasets and repeated the experiments on the IMDB, UTKFace, and Lagenda datasets; the results are shown in Table 3. The spatial-correlation module allows the body feature information to be fully utilized. The relationship between age and the MAE of the model with facial and body input is shown in Fig. 5.

To verify the generalization ability of the model in age and gender recognition, we also evaluated SCGNet on the AgeDB regression benchmark and on the classification benchmarks of popular datasets such as FairFace and Adience. We directly tested the model trained on Lagenda on the test sets of these datasets, mapping the regression output to the corresponding classification ranges. The results are shown in Table 4.

Ablation experiments

We conducted ablation experiments on each of the proposed modules to verify their effectiveness; the qualitative results are shown in Fig. 6. The pictures in Fig. 6 are available in the UTKFace dataset (ref. 17).

As shown in Fig. 6, we compared the outputs of the base model and the proposed SCGNet side by side, and also examined the heatmaps produced when either the Patch Enhance module or the SCCA module was removed. The first row shows a nearly full-body image of a woman. The attention of the base model is relatively scattered and concentrates mainly on the upper garment. Without Patch Enhance (SCCA only), attention concentrates mainly on the face. Without SCCA (Patch Enhance only), attention concentrates mainly on the clothing, with little information about facial features. The attention heatmap of SCGNet essentially outlines the entire contour of the human body, including the face. The second row shows a half-body portrait; compared with the previous results, SCGNet attends more prominently to facial features. The third row shows a half-body portrait of a man. The heatmaps of the single-module variants include part of the face but do not cover the entire body frame, and the attention over the clothing and torso is relatively scattered. The variants lacking the Patch Enhance module or the SCCA module both fail, to varying degrees, to highlight facial or clothing features. The fourth row shows a close-up of a man’s head. Compared with the other results, SCGNet outlines the facial contour well.

We also conducted quantitative ablation experiments on the network modules; the results are shown in Table 5. For the Patch Enhance module, we designed two groups of experiments, removing the Cross Scale Combination and the Local Feature Guided Fusion, respectively. All models were trained on the Lagenda dataset and tested on the Lagenda test set. The results show that the full SCGNet achieves the best performance. The Cross Scale Combination module enriches the feature representation by integrating information from different scales, and the Local Feature Guided Fusion enhances the extraction of local detail information, achieving a dynamic balance between different spatial scales and local feature refinement.

To test the effectiveness of the proposed LFGF module, we replaced LFGF with a \(2\times 2\) convolution, a \(3\times 3\) average pooling, and a \(7\times 7\) average pooling, respectively. The experimental results are shown in Table 6. Compared with these alternatives, LFGF improves gender accuracy by 1.15%, 1.24%, and 1.75%, respectively.

Conclusion

We proposed a new framework for age and gender recognition, SCGNet (Spatial Correlation Guided Cross Scale Feature Fusion for Age and Gender Estimation). It aims to strengthen the spatial correlation between patches and tokens, enlarge the receptive field, retain local details, and strengthen the connection between local and global information. The method consists of three consecutive modules: Cross Scale Combination (CSC), Local Feature Guided Fusion (LFGF), and the Spatial Correlation-based Combination and Analysis module (SCCA). The Cross Scale Combination module enriches the feature representation by integrating information from different scales; the Local Feature Guided Fusion module enhances the extraction of local detail information, achieving a dynamic balance between different spatial scales and local feature refinement; and the SCCA module uses the spatial correlation between tokens together with token re-grouping to suppress interference from non-informative regions and fully utilize the spatial information represented by different features. Extensive experimental results show that our framework can accurately identify age and gender.

While SCGNet demonstrates promising results, several directions warrant further investigation: (1) Developing lightweight variants for real-time deployment on edge devices through neural architecture search and hardware-aware optimization; (2) Exploring hybrid architectures integrating graph neural networks (GNNs) to explicitly model anatomical relationships between body regions, particularly for handling complex occlusions through spatial reasoning over human pose graphs; (3) Enhancing cross-modal learning by combining visual transformers with temporal graph networks for video-based age/gender estimation, enabling temporal correlation modeling across frames.

Data availability

The datasets generated and analysed during the current study are available in the MiVOLO repository, [https://github.com/WildChlamydia/MiVOLO]. The figures showing people are taken from the UTKFace dataset, [https://susanqq.github.io/UTKFace/].

Code availability

Our code is available at https://github.com/happpyjsy/SCGNet.

References

Kaut, H. & Singh, R. A review on image segmentation techniques for future research study. Int. J. Eng. Trends Technol. 35, 504–505 (2016).

Razzaghi, P., Abbasi, K. & Ghasemi, J.B. Multivariate pattern recognition by machine learning methods. In Machine Learning and Pattern Recognition Methods in Chemistry from Multivariate and Data Driven Modeling. 47–72 (Elsevier, 2023).

Eidinger, E., Enbar, R. & Hassner, T. Age and gender estimation of unfiltered faces. IEEE Trans. Inf. For. Secur. 9, 2170–2179 (2014).

Karkkainen, K. & Joo, J. Fairface: Face attribute dataset for balanced race, gender, and age for bias measurement and mitigation. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision. 1548–1558 (2021).

Yang, Y., Zha, K., Chen, Y., Wang, H. & Katabi, D. Delving into deep imbalanced regression. In International Conference on Machine Learning. 11842–11851 (PMLR, 2021).

Kuprashevich, M. & Tolstykh, I. Mivolo: Multi-input transformer for age and gender estimation. arXiv preprint arXiv:2307.04616 (2023).

Bai, Y., Mei, J., Yuille, A. L. & Xie, C. Are transformers more robust than CNNs? Adv. Neural Inf. Process. Syst. 34, 26831–26843 (2021).

Bhojanapalli, S. et al. Understanding robustness of transformers for image classification. In Proceedings of the IEEE/CVF International Conference on Computer Vision. 10231–10241 (2021).

Naseer, M. M. et al. Intriguing properties of vision transformers. Adv. Neural Inf. Process. Syst. 34, 23296–23308 (2021).

Nikzad, N., Gao, Y. & Zhou, J. CSA-net: Channel-wise spatially autocorrelated attention networks. arXiv preprint arXiv:2405.05755 (2024).

Nikzad, N., Gao, Y. & Zhou, J. CSA-net: Channel-wise spatially autocorrelated attention networks. arXiv preprint arXiv:2405.05755 (2024).

Guo, Y., Stutz, D. & Schiele, B. Improving robustness of vision transformers by reducing sensitivity to patch corruptions. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 4108–4118 (2023).

Mao, X. et al. Towards robust vision transformer. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 12042–12051 (2022).

Lin, Y., Shen, J., Wang, Y. & Pantic, M. FP-age: Leveraging face parsing attention for facial age estimation in the wild. In IEEE Transactions on Image Processing (2022).

Zhang, Z., Song, Y. & Qi, H. Age progression/regression by conditional adversarial autoencoder. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 5810–5818 (2017).

Moschoglou, S. et al. Agedb: The first manually collected, in-the-wild age database. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops. 51–59 (2017).

Dosovitskiy, A. et al. An image is worth 16 x 16 words: Transformers for image recognition at scale. arXiv preprint arXiv:2010.11929 (2020).

Han, K. et al. A survey on vision transformer. IEEE Trans. Pattern Anal. Mach. Intell. 45, 87–110 (2022).

Mohammadzadeh-Vardin, T., Ghareyazi, A., Gharizadeh, A., Abbasi, K. & Rabiee, H. R. Deepdra: Drug repurposing using multi-omics data integration with autoencoders. Plos one 19, e0307649 (2024).

Wu, H. et al. CVT: Introducing convolutions to vision transformers. In Proceedings of the IEEE/CVF International Conference on Computer Vision. 22–31 (2021).

Yuan, K. et al. Incorporating convolution designs into visual transformers. In Proceedings of the IEEE/CVF International Conference on Computer Vision. 579–588 (2021).

Chen, C.-F. R., Fan, Q. & Panda, R. Crossvit: Cross-attention multi-scale vision transformer for image classification. In Proceedings of the IEEE/CVF International Conference on Computer Vision. 357–366 (2021).

Xu, Y., Zhang, Q., Zhang, J. & Tao, D. Vitae: Vision transformer advanced by exploring intrinsic inductive bias. Adv. Neural Inf. Process. Syst. 34, 28522–28535 (2021).

Meng, L. et al. Adavit: Adaptive vision transformers for efficient image recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 12309–12318 (2022).

Song, Z. et al. CP-VIT: Cascade vision transformer pruning via progressive sparsity prediction. arXiv preprint arXiv:2203.04570 (2022).

Fayyaz, M. et al. Adaptive token sampling for efficient vision transformers. In European Conference on Computer Vision. 396–414 (Springer, 2022).

Kong, Z. et al. Spvit: Enabling faster vision transformers via latency-aware soft token pruning. In European Conference on Computer Vision. 620–640 (Springer, 2022).

Zhang, Q. & Yang, Y.-B. Rest: An efficient transformer for visual recognition. Adv. Neural Inf. Process. Syst. 34, 15475–15485 (2021).

Yuan, L. et al. Tokens-to-token VIT: Training vision transformers from scratch on ImageNet. In Proceedings of the IEEE/CVF International Conference on Computer Vision. 558–567 (2021).

Heo, B. et al. Rethinking spatial dimensions of vision transformers. In Proceedings of the IEEE/CVF International Conference on Computer Vision. 11936–11945 (2021).

Rao, Y. et al. Dynamicvit: Efficient vision transformers with dynamic token sparsification. Adv. Neural Inf. Process. Syst. 34, 13937–13949 (2021).

Touvron, H., Cord, M., Sablayrolles, A., Synnaeve, G. & Jégou, H. Going deeper with image transformers. In Proceedings of the IEEE/CVF International Conference on Computer Vision. 32–42 (2021).

Bolya, D. et al. Token merging: Your VIT but faster. arXiv preprint arXiv:2210.09461 (2022).

Levi, G. & Hassner, T. Age and gender classification using convolutional neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops. 34–42 (2015).

Kim, T. Generalizing MLPs with dropouts, batch normalization, and skip connections. arXiv preprint arXiv:2108.08186 (2021).

Shin, N.-H., Lee, S.-H. & Kim, C.-S. Moving window regression: A novel approach to ordinal regression. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 18760–18769 (2022).

Simonyan, K. & Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556 (2014).

Hou, L., Samaras, D., Kurc, T., Gao, Y. & Saltz, J. Convnets with smooth adaptive activation functions for regression. In Artificial Intelligence and Statistics. 430–439 (PMLR, 2017).

Shaham, U., Zaidman, I. & Svirsky, J. Deep ordinal regression using optimal transport loss and unimodal output probabilities. arXiv preprint arXiv:2011.07607 (2020).

Zhang, K. et al. Fine-grained age estimation in the wild with attention LSTM networks. IEEE Trans. Circuits Syst. Video Technol. 30, 3140–3152 (2019).

Koo, J. H., Cho, S. W., Baek, N. R., Lee, Y. W. & Park, K. R. A survey on face and body based human recognition robust to image blurring and low illumination. Mathematics 10, 1522 (2022).

Baek, N. R., Cho, S. W., Koo, J. H., Truong, N. Q. & Park, K. R. Multimodal camera-based gender recognition using human-body image with two-step reconstruction network. IEEE Access 7, 104025–104044 (2019).

Bonet Cervera, E. Age & gender recognition in the wild. B.S. Thesis, Universitat Politècnica de Catalunya (2022).

Ge, Y., Lu, J., Fan, W. & Yang, D. Age estimation from human body images. In 2013 IEEE International Conference on Acoustics, Speech and Signal Processing. 2337–2341 (IEEE, 2013).

Gonzalez-Sosa, E. et al. Image-based gender estimation from body and face across distances. In 2016 23rd International Conference on Pattern Recognition (ICPR). 3061–3066 (IEEE, 2016).

Cao, W., Mirjalili, V. & Raschka, S. Rank consistent ordinal regression for neural networks with application to age estimation. Pattern Recognit. Lett. 140, 325–331 (2020).

Berg, A., Oskarsson, M. & O’Connor, M. Deep ordinal regression with label diversity. In 2020 25th International Conference on Pattern Recognition (ICPR). 2740–2747 (IEEE, 2021).

Rothe, R., Timofte, R. & Van Gool, L. Dex: Deep expectation of apparent age from a single image. In Proceedings of the IEEE International Conference on Computer Vision Workshops. 10–15 (2015).

Shin, N.-H., Lee, S.-H. & Kim, C.-S. Moving window regression: A novel approach to ordinal regression. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 18760–18769 (2022).

Hung, C.-Y. et al. Compacting, picking and growing for unforgetting continual learning. Adv. Neural Inf. Process. Syst. 32 (2019).

Acknowledgements

This research was supported by Guizhou Provincial Major Scientific and Technological Program (qian ke he cheng guo [2024] No.004).

Author information

Contributions

All authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Jiang, S., Ji, Q., Shi, H. et al. Spatial correlation guided cross scale feature fusion for age and gender estimation. Sci Rep 15, 20873 (2025). https://doi.org/10.1038/s41598-025-03081-w
