Abstract

Rock image classification plays a crucial role in geological exploration, mineral resource development, and environmental monitoring. However, rock images often exhibit high intra-class similarity and low inter-class variation, posing challenges for accurate classification. Additionally, existing models often suffer from having a large number of parameters. To address these issues, we propose an enhanced rock classification model based on EfficientNet-B0. First, the DiffuseMix algorithm is applied to the training set to increase data diversity. Second, the shuffle attention mechanism is integrated into the backbone network to enhance feature extraction while reducing model parameters. Finally, the Lion optimizer is employed to optimize the training process, improving both the accuracy and stability of the model. The experimental results demonstrate that the improved model achieves an accuracy of 94.02% on the test set, outperforming ResNet50, MobileNetV3, and SwinTransformer by 8.02%, 9.35%, and 5.02%, respectively. Additionally, the model’s parameter count is significantly reduced to 3.38 million. The proposed model reduces the number of parameters while maintaining high recognition accuracy for rock image classification, providing a novel solution for intelligent rock recognition.

Similar content being viewed by others

Introduction

Rock classification is a critical task in geological research, playing an essential role in geological investigations, modeling, and mineral exploration1,2. Different types of rocks are products of specific geological processes, reflecting the environmental changes that occurred during their formation. By studying igneous, sedimentary, and metamorphic rocks, geologists can trace the chronological sequence of geological events and understand the interactions between Earth’s internal and external processes3. Additionally, rocks serve as important hosts for mineral resources. For instance, igneous rocks such as basalt and granite are often associated with metal ore deposits4, while sedimentary rocks like sandstone and shale contain coal, oil, and natural gas5.Currently, rock image data are primarily obtained from thin sections prepared and imaged under a microscope. In typical workflows, field geologists collect rock samples, which are then processed in laboratories through specialized preparation procedures to produce thin sections. However, the preparation of thin sections requires specific equipment and is time-consuming, making automated recognition based on thin-section images insufficient to address the long turnaround time and high cost associated with rock identification. In practical exploration scenarios, enabling fast and real-time automatic rock identification would significantly enhance operational efficiency, reduce exploration costs, and minimize human error.Moreover, the diversity in formation conditions, composition, structure, and physical properties of rocks poses substantial challenges to efficient and accurate rock type classification6,7. However, the diversity in rock formation conditions, composition, structure, and properties presents a significant challenge for efficient and accurate rock type identification6,7.

To address the above challenges, this study constructs a fresh rock image dataset specifically designed for rock classification tasks and proposes an improved classification method based on EfficientNet-B0. By incorporating the DiffuseMix data augmentation strategy, the Shuffle Attention mechanism, and the Lion optimizer, the proposed approach aims to enhance classification performance while maintaining model compactness. The main contributions of this study are as follows:

-

A fresh rock image dataset comprising five representative rock types was constructed to address the lack of field-oriented datasets in existing research.

-

An improved lightweight classification model based on EfficientNet-B0 was proposed, integrating DiffuseMix augmentation, the Shuffle Attention mechanism, and the Lion optimizer to achieve high accuracy with reduced model complexity.

-

Systematic comparative experiments demonstrated that the proposed method outperforms previous approaches by jointly optimizing data augmentation, attention mechanisms, and optimization strategies for fresh rock classification, highlighting its methodological innovation.

Related work

Traditional classification methods are typically feature-based, also known as “theory-driven” or “handcrafted” approaches, relying on classical machine learning algorithms such as Support Vector Machines (SVM)8 and Random Forest (RF)9. From a technical perspective, this approach is a semi-automated method for rock classification. It requires geologists or ___domain experts to first extract representative physical or chemical features from rock samples, which are then input into a model for processing. It is worth noting that, in recent years, the process of feature extraction and selection has become increasingly automated. The features include, but are not limited to, color, texture, mineral distribution, grayscale values, and chemical composition10,11,12,13. Wenhua et al14 used conventional logging data combined with Support Vector Machine (SVM) and Random Forest (RF) algorithms to classify the lithology of volcanic rocks in the Liaohe Oilfield. Their proposed classification method achieved an accuracy of 97.46%, significantly enhancing the ability to identify the lithology of complex formations. Guohua et al15 achieved a high accuracy using the GS-LightGBM model for high-dimensional data and complex formations. Similarly, Bressan et al16 applied machine learning methods to lithology classification, testing four algorithms: multilayer perceptron, decision tree, random forest, and support vector machine. Their results indicated that the random forest algorithm performed the best, achieving a classification accuracy of over 80%. Xue et al17 applied SVM and RF algorithms to classify coal rocks in coal mine roadway images, achieving an accuracy of 90.3% using PCA for feature dimensionality reduction. However, these methods rely on manual feature extraction, which requires significant geological expertise and is time-consuming, limiting their practical application in rock classification.

In recent years, advancements in deep learning have introduced new approaches to rock identification tasks18. Deep learning-based methods can automatically learn complex features such as texture and color, reducing manual intervention while improving the speed and accuracy of rock recognition. Chen et al19 proposed a rock image classification method based on ResNet and transfer learning. By utilizing pre-trained models and data augmentation techniques, they achieved a classification accuracy of 99.1% for seven rock types. Jia et al20 proposed the SwinMin model for mineral image recognition, combining CNN and Swin Transformer architectures to enhance local feature extraction and improve overall performance through multi-scale information fusion. This approach achieves higher recognition accuracy and reliability but comes with increased computational complexity. Guo et al21 improved the ability to capture key features in rock images by introducing the squeeze and excitation(SE) attention mechanism, raising classification accuracy to 90.30%, but at the cost of higher computational demands and increased model parameters. Liu et al22 employed Faster R-CNN and a simplified VGG16 network to achieve intelligent rock type recognition, achieving an accuracy of over 96% for images containing a single rock type. Ma et al23 applied knowledge distillation and a high-precision Feature Localization Comparison Network (FPCN) to improve the accuracy of fine-grained rock image classification to 93.1%.

Additionally, due to the high cost of collecting rock images, datasets are typically small, making transfer learning and data augmentation techniques widely used in rock classification research. Zhang et al24 applied transfer learning using the Inception-v3 model to develop an image classification model for three rock types: granite, kilomagnet, and breccia, achieving over 90% accuracy on both the training and test sets. Liang et al25 improved the classification accuracy of fine-grained rock images by combining image cropping and SBV algorithms. Liu et al26 employed three GAN models-DCGAN, SRGAN, and CycleGAN-to generate images highly similar to real mineral images in terms of texture, color, and shape, demonstrating the effectiveness of GAN-based enhancement methods on models such as InceptionV3. Li et al27 proposed the RDNet model, which achieves higher accuracy and efficiency in lithology classification. The model’s generalization ability is enhanced through data augmentation techniques, such as Mix-up, Cut-out, and Cut-mix, while the number of parameters and computational complexity is significantly reduced through model pruning and batch normalization.

Although deep learning has greatly advanced the field of rock image classification, several critical challenges remain. First, the lack of publicly available datasets specifically focused on fresh rock images poses a significant obstacle to the development of rapid and practical identification methods suitable for field-based geological exploration. Second, rock images within the same category often exhibit high intra-class similarity, while inter-class differences are relatively subtle, making accurate classification inherently difficult. Third, many existing models have large parameter sizes and high computational costs, which hinder their deployment in resource-constrained environments.To address these issues, this study constructs a fresh rock image dataset comprising five representative rock types-basalt, sandstone, granite, marble, and shale-and proposes an improved rock classification model based on EfficientNet-B0 that balances accuracy and model compactness.

Methods

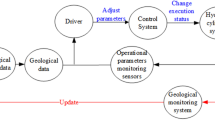

To achieve both high accuracy and model efficiency, an improved rock image classification model based on EfficientNet-B028 is proposed. First, the rock dataset was constructed and preprocessed. Second, to address the high similarity and low dissimilarity in rock images, the DiffuseMix29 data augmentation method was employed to mitigate overfitting and improve generalization. Specifically, the original dataset was first augmented using this method to generate enhanced data, which was then merged with the original dataset (rather than concatenated) to form a new training set for model training. Third, the squeeze and excitation(SE) attention mechanism, limited in spatial feature extraction and adding unnecessary parameters, was replaced with the shuffle attention(SA) mechanism30. This approach combines channel and spatial attention to enhance the capture of local features in rock images while reducing the number of model parameters. Lastly, the Lion optimizer31 was used to accelerate model convergence and improve training stability. By simplifying the parameter update process with symbolic operations, the optimizer reduces computational complexity while increasing the model’s robustness to noise and enhancing classification accuracy. The model training structure is illustrated in Fig. 1.

EfficientNet demonstrates strong capabilities in image analysis and can accurately classify different categories. However, due to the unique characteristics of rock images and certain limitations of the model, DiffuseMix, the SA mechanism, and the Lion optimizer were introduced to address these challenges. A brief overview of the methods used is provided below.

EfficientNet

EfficientNet28 is a convolutional neural network architecture proposed by Google in 2019, designed to achieve higher performance and efficiency in image classification by optimizing network structure and parameter utilization. EfficientNet incorporates techniques such as depthwise separable convolutions, group convolutions, the SE attention mechanism, the Swish activation function, and Neural Architecture Search (NAS). Its core innovation is the Compound Scaling strategy, which scales the network by considering three dimensions-depth, width, and input resolution-simultaneously. Unlike traditional methods that scale in a single dimension, Compound Scaling uses a composite coefficient \(\phi\) to coordinate all three dimensions, enabling the model to grow in size while preserving computational efficiency. The Eq. (1) that determines the expansion of each dimension is as follows.

Where, d represents the depth, w represents the width, and r represents the resolution. Constants \(\alpha\), \(\beta\) and \(\gamma\) satisfy the constraint \(\alpha \cdot {\beta ^2} \cdot {\gamma ^2} \approx 2\) and \(\alpha \ge 1,\beta \ge 1,\gamma \ge 1\) to control the increase in computational complexity.

The EfficientNet model series begins with the baseline model, EfficientNet-B0, and extends through versions EfficientNet-B1 to EfficientNet-B7 using compound scaling, enabling adaptation to different computational resources and requirements. EfficientNet-B0 is designed using Neural Architecture Search (NAS) technology, with MBConv serving as the core building block to enhance computational efficiency while maintaining strong feature representation capabilities. This study employs EfficientNet-B0 due to its lower model complexity, which requires fewer computational resources compared to more advanced versions, making it more suitable for resource-constrained scenarios.

DiffuseMix

To address the issue of high intra-class similarity and low inter-class variability in rock images, data augmentation methods were applied during the preprocessing stage. While existing techniques, such as Mix-up32, Cut-out33, Cut-mix34, can increase data diversity, they may also cause label confusion, impacting the model’s ability to accurately extract rock features. DiffuseMix enhances data diversity and improves model generalization while preserving the semantic consistency of the images. The core idea of DiffuseMix is to stitch parts of the original image with its generated counterpart, retaining essential image semantics and providing richer object details and contexts for more effective data augmentation.The DiffuseMix algorithm comprises three main steps: generation, stitching, and fractal mixing.

First, in the generation step, a pre-trained diffusion model generates the enhanced image \({\hat{I}_{ij}}\) based on a randomly selected prompt \({p_j}\) and the input image \({I_i}\). The Eq. (2) that represents the generation process is given as follows.

Where, \({I_i}\) represents the ith image in the original dataset, \({p_j}\) is the j th prompt randomly selected from \(P = \left\{ {{p_1},{p_2}, \ldots ,{p_k}} \right\}\), where k is the size of the prompt set, G is the generative function of the diffusion model, and \({\hat{I}_{ij}}\) is the jth image generated by the diffusion model corresponding to the ith original image.

Second, in the stitching step, a randomly selected mask \(M_u\) is used to stitch the original input image \({I_i}\) with the generated image \({\hat{I}_{ij}}\), forming the hybrid image \({H_{iju}}\). The Eq. (2) that represents the stitching process is given as follows.

Where, \(M_u\) is the uth mask randomly selected from the set of masks M, consisting only of 0s and 1s. The symbol \(\odot\) represents pixel-by-pixel multiplication. \({H_{iju}}\) is the hybrid image formed by combining the ith image with the generated image \({\hat{I}_{ij}}\) using the mask \(M_u\).

Finally, in the fractal blending step, a fractal image \(F_v\) is randomly selected from the fractal image dataset F and blended with the hybrid image \({H_{ijv}}\) using a blending factor \(\lambda\) to produce the final enhanced image \({A_{ijuv}}\). The Eq. (2) that represents the fractal mixing process is given as follows.

Where, \({F_v}\) is the vth fractal image randomly selected from the fractal image set F. \(\lambda\) is the fractal image mixing factor, which controls the proportion of the fractal image in the final enhanced image and is set to 0.2. \({A_{ijuv}}\) is the final enhanced image, obtained by blending the hybrid image \({H_{ijv}}\) with the fractal image \({F_v}\) using the blending factor \(\lambda\).

The DiffuseMix algorithm enhances data diversity through its generation, stitching, and fractal mixing steps, while preserving the semantic information of the original image. Experimental results demonstrate that DiffuseMix performs well on general categorization datasets (ImageNet35 and Tiny-ImageNet-20036) as well as fine-grained categorization datasets (Oxford-102 Flowers37, Stanford Cars38, and Aircraft39), significantly improving the model’s generalization capability and adversarial robustness.

Shuffle attention

In order to enhance feature extraction capabilities and reduce the number of model parameters, the shuffle attention(SA) mechanism was integrated into the backbone network. The SA mechanism combines channel attention and spatial attention, capturing both inter-channel dependencies and key spatial features in rock images. Additionally, the SA mechanism reduces the number of model parameters through group convolution. Finally, it incorporates a “channel shuffle” operation to facilitate interaction between different feature groups, enhancing the model’s ability to distinguish important features from noise and improving classification performance. The structure of the SA mechanism is illustrated in Fig. 2. The main implementation steps of the SA mechanism are as follows:

The processing flow of the SA mechanism30.

-

(1)

Feature grouping: The input feature map is divided into multiple sub-feature groups along the channel dimension, with each group gradually learning specific semantic information during the training process.

-

(2)

Application of the attention mechanism: The input sub-feature set is further divided into two parts, where spatial and channel attention mechanisms are applied in parallel.

-

(3)

Feature aggregation: The output features from the two attention modules are concatenated along the channel dimension and aggregated into a complete feature map.

-

(4)

Channel blending: The “channel shuffle” operation is applied to enable information exchange between different sub-feature groups, resulting in the final output feature map.

Lion optimizer

To further enhance the model’s training efficiency and stability, the Lion optimizer31 was employed to optimize its parameters. The Lion Optimizer is an efficient optimizer developed by Google in 2023 through extensive TPU-based search combined with human intervention. Unlike traditional adaptive optimizers such as AdamW40, the Lion Optimizer tracks only momentum and computes updates via the Sign Operation, ensuring all parameters are updated by the same magnitude. This design significantly reduces memory usage and computational complexity while demonstrating excellent performance in various tasks, including image classification, diffusion modeling, and language modeling.

The Eq. (5) that represents the Lion optimizer update process is as follows.

Among them,

Where, \({m_t}\) represents the current momentum, \({\beta _1}\) is the interpolation coefficient between the current gradient and momentum, \({\beta _2}\) is the momentum update rate, \({g_t}\) is the gradient of the loss function, \({\lambda _t}\) is the weight decay coefficient, \({\eta _t}\) is the learning rate, and \({\mu _t}\) is the update value of the model parameters.

Experiment settings and evaluation metrics

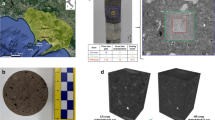

Data collection

The dataset used in this study comprises five distinct rock types: basalt, sandstone, granite, marble, and shale, each representing key categories in geological research. Fig. 3 shows the sample images of each rock type. These rock types exhibit unique physical and mineralogical properties, making them essential for both scientific investigations and practical applications such as construction materials, natural resource exploration, and educational tools. The dataset comprises 3,055 images obtained from the internet and photographs captured by the research team. The details of dataset are presented in Table 1. This dual-sourcing approach ensures a diverse and comprehensive collection of images, covering a wide range of textures, lighting conditions, and environmental settings. The inclusion of both controlled and real-world scenarios enhances the generalizability and robustness of the resulting models.

Training hyper-parameters and environmental setting

As this is a multi-class classification task, the Cross-Entropy Loss function is used in the experiment to calculate the loss for each batch.In this experiment, the batch size was set to 64. This study employed the Lion optimizer during training, with an initial learning rate of 1 \(\times\) 10-3 and a weight decay of 1 \(\times\) 10-3. The experimental platform utilized an NVIDIA 3090 GPU (24 GB VRAM) with CUDA version 11.3, running on a Linux system. The deep learning model was constructed and trained in a Python 3.8 environment using the PyTorch framework.

Evaluation metrics

To comprehensively evaluate the performance of the proposed model for rock classification, the experiments utilize accuracy (Acc), precision (P), recall (R), and \(F_1\) score as evaluation metrics. The Eqs. (8), (9), (10), and (11) that provide the formulas for these four metrics are as follows. Additionally, the number of parameters is calculated to assess the model’s efficiency in terms of its lightweight design.

Where, TP represents the number of true positives, indicating the samples correctly predicted as positive; FP represents the number of false positives, indicating the samples incorrectly predicted as positive; TN represents the number of true negatives, indicating the samples correctly predicted as negative; and FN represents the number of false negatives, indicating the samples incorrectly predicted as negative.

Results

To meet the input size requirements of the selected deep learning models, all images were resized to a standard resolution of 224\(\times\)224 pixels. The dataset was divided into three subsets: training, validation, and testing, following a 7:2:1 ratio. In this work, three methods were applied for comparison with the baseline model EfficientNet-B0: EfficientNet-B0 with DiffuseMix, EfficientNet-B0 with SA, EfficientNet-B0 with the Lion optimizer, and an improved model combining all three methods. To verify the performance of the improved model, comparative experiments were conducted using some mainstream models. The performances of our models were evaluated using four evaluation metrics: accuracy, recall, \(F_1\) score, and model parameters.The models were trained for multiple epochs, and their specific results are discussed in the following subsections.

Sensitivity analysis of hyperparameter \(\lambda\)

To evaluate the impact of the hyperparameter \(\lambda\) in Eq. (4) on model performance, a systematic sensitivity analysis was conducted. As a critical control parameter in the DiffuseMix augmentation method, the value of \(\lambda\) plays a significant role in determining the final classification performance of the model. Therefore, \(\lambda\) was selected from the set \(\{0.1, 0.2, 0.3, 0.4, 0.5\}\), and comparative experiments were conducted under identical settings. The performance was assessed using four metrics: Accuracy, Precision, Recall, and \(F_1\) score.

The experimental results are summarized in Table 2:

As shown in the results, when \(\lambda\) is set to 0.2, all performance metrics reach their highest values. Specifically, the model achieves an accuracy of 93.02%, a precision of 92.96%, a recall of 93.04%, and an \(F_1\) score of 92.94%. Compared to other settings, \(\lambda = 0.2\) enables a better balance between precision and recall, leading to overall superior performance and improved stability when utilizing fractal images. It is noteworthy that as \(\lambda\) increases beyond 0.2, model performance degrades significantly, suggesting that an excessively high \(\lambda\) may cause augmented samples to be overly influenced by fractal patterns, thereby adversely affecting classification accuracy.

Considering the performance across all evaluation metrics, \(\lambda = 0.2\) was ultimately selected in this study to maximize classification performance while maintaining robust generalization capability.

Ablation experiment

To evaluate the contribution of different components in our proposed model, we conducted an ablation study by systematically modifying key elements. Table 3 presents the results of these experiments on the test set, where we compare the baseline model (EfficientNet-B0) with various configurations, including the addition of DiffuseMix, the SA mechanism, the Lion optimizer, and a configuration integrating all three components.

Table 3 presents the results of the ablation study under different module combinations. The baseline model achieved an accuracy of 89.67% and an \(F_1\) score of 89.62%, serving as the reference for subsequent improvements. With the introduction of the DiffuseMix, the model’s accuracy increased to 93.02% and the \(F_1\) score improved to 92.94%, while maintaining the parameter count at 4.01M, demonstrating a significant enhancement in model performance. Incorporating the lightweight SA raised the accuracy to 91.30% and the \(F_1\) score to 91.32%, while reducing the parameter count to 3.38M, indicating excellent parameter efficiency. The use of the Lion optimizer alone led to limited improvements, with the accuracy reaching 90.00% and the \(F_1\) score 89.66%. The combination experiments revealed that the integration of DiffuseMix and SA yielded the best performance, achieving an accuracy of 93.68%, an \(F_1\) score of 93.60%, and reducing the parameter count to 3.38M, thus balancing performance enhancement and model compactness. Our final proposed method, which integrates all the improvements, further boosted the accuracy to 94.02% and the \(F_1\) score to 93.98%, representing an increase of approximately 4.35% and 4.36% over the baseline, respectively, while reducing the parameter count by about 15%. These results validate the effectiveness of the designed modules, particularly highlighting the critical role of DiffuseMix and SA in enhancing both model performance and efficiency.

In different geological settings, rock images exhibit significant variations in texture complexity and compositional diversity. In this study, the primary rock types, including basalt, sandstone, granite, marble, and shale, can be broadly categorized into simple and complex geological environments. Simple geological settings, such as sandstone and shale sedimentary layers, are characterized by homogeneous composition, regular particle distribution, and clearly defined bedding structures. In such scenarios, moderately reducing the number of model parameters does not compromise classification performance; rather, it mitigates the risk of overfitting and further enhances the model’s generalization capability. In contrast, complex geological environments-such as basalt development zones, marble areas with significant metamorphism, and fractured granites within fault zones-are marked by highly variable texture patterns and substantial scale differences, imposing greater demands on the model’s feature extraction capabilities. In these cases, simply reducing parameters may impair the model’s ability to perceive fine-grained features. However, by integrating efficient feature modeling modules, it is still possible to achieve effective recognition of complex texture structures while maintaining a relatively small parameter size.

EfficientNet-B0 with DiffuseMix

During data preprocessing, DiffuseMix was applied to increase the diversity of the rock dataset, expanding the training set to three times its original size. In this experiment, the DiffuseMix data augmentation method is employed to expand the training set, using the text prompt “autumn, sunset” with the InstructPix2Pix diffusion model. An example of data augmentation with DiffuseMix is presented in Fig. 4.

According to Table 3, the model with DiffuseMix demonstrated substantial improvements across all performance metrics compared to the baseline model. Accuracy, precision, recall, and \(F_1\) score each increased by approximately 3%, enhancing the model’s generalization ability. Furthermore, despite these performance gains, the number of model parameters remained unchanged.The comparison of training loss and validation accuracy for both models is shown in Fig. 5.

As shown in Fig. 5, the EfficientNet-B0 model combined with DiffuseMix outperforms the baseline model in both training and validation. In terms of training loss, DiffuseMix accelerates the convergence rate and maintains lower loss with less fluctuation in later stages, indicating better training stability. Regarding validation accuracy, the DiffuseMix model shows faster improvement in the early stages and maintains higher accuracy with more stable generalization throughout the entire training process.

EfficientNet-B0 with SA

As presented in Table 3, replacing the SE attention mechanism in the baseline model with the SA mechanism resulted in a 1.6% increase in accuracy, precision, recall, and \(F_1\)-score, demonstrating a moderate enhancement in overall model performance. Moreover, the model’s parameter count was reduced by approximately 16%, indicating that the SA mechanism not only enhances feature extraction but also improves efficiency by reducing model’s parameter.

As shown in Fig 6, the EfficientNet-B0 model with SA demonstrated superior performance compared to the baseline model during both training and validation. In terms of training loss, although both models exhibited rapid decreases in the early stages of training (within the first 50 epochs), the SA model consistently maintained lower loss values throughout the training process, especially in the later stages (after 200 epochs), where its loss curve was significantly below that of the baseline model. Regarding validation accuracy, the SA model showed a slightly faster improvement in the early stages, indicating that the SA mechanism enhances the model’s generalization ability more quickly during training.

EfficientNet-B0 with lion

According to Table 3, the Lion-optimized model exhibited slight but notable performance improvements over the baseline. Accuracy and precision increased by 0.33% and 0.48%, respectively, suggesting that the optimizer contributes to enhanced optimization efficiency. Although recall showed a minor decrease, the \(F_1\)-score remained nearly unchanged, indicating that the model effectively maintained a balance between precision and recall.

As shown in Fig 7, the EfficientNet-B0 model using the Lion optimizer outperformed the baseline model in both training and validation. During the early stages of training, the Lion optimizer significantly accelerated convergence, leading to a rapid reduction in loss and maintaining lower loss values in later stages, indicating improved stability. Regarding validation accuracy, the Lion-optimized model exhibited faster improvements in the early and middle stages and sustained higher accuracy with less fluctuation throughout training, highlighting its effectiveness in enhancing generalization.

EfficientNet-B0 with all methods

As reported in Table 3, the final model, incorporating DiffuseMix, the SA mechanism, and the Lion optimizer, achieved the highest performance across all metrics. Compared to the baseline, accuracy, precision, recall, and \(F_1\)-score increased by 4.3% to 4.4%, confirming the synergistic effect of these enhancements. Additionally, the parameter count decreased by 16%, demonstrating that the proposed model not only improves classification performance but also achieves greater efficiency.

In Fig 8, the model that incorporates all three methods shows significant improvements in both training loss and validation accuracy compared to the baseline EfficientNet-B0 model. The improved model demonstrates faster convergence in the early stages of training, with lower loss values, and maintains greater stability throughout the training process. In terms of validation accuracy, the model experiences quicker improvements in the early stages and maintains higher accuracy with smaller fluctuations later on, indicating better generalization and training stability.

Comparative experiments

To verify the performance of the improved model, comparative experiments were conducted using different variants of the ResNet41, ResNeXt42, MobileNet43,44, ShuffleNetV245, ConvNeXt46, MobileViT47, and SwinTransformer48 models. The comparative experimental results are shown in the Table 4.

In the comparative experiments, the proposed model exhibited notable advantages, achieving an excellent balance between performance and parameter efficiency. It achieved an accuracy of 94.02%, outperforming all other models and exceeding the closest competitor, SwinTransformer, by approximately 5 percentage points. Moreover, the proposed model attained an \(F_1\) score of 93.98%, further demonstrating its robustness in classification tasks and its ability to effectively handle imbalanced data. Compared with recently developed advanced models, such as the methods proposed by Zhihao H. et al.49 (91.87%), Yan Z. et al.50 (91.36%), and Weihao C. et al.19 (90.03%), the proposed approach consistently achieved superior performance. Notably, the proposed model contains only 3.38 million parameters, which is significantly fewer than those of large-scale models such as ResNet101 and SwinTransformer. Furthermore, compared to lightweight models including MobileNet and ShuffleNet, the proposed model achieves substantial performance improvements while further reducing model complexity.

Analysis of model complexity and inference efficiency

To evaluate the practical applicability and deployability of the proposed method, a quantitative comparison was conducted between the improved model and the baseline from two key perspectives: model complexity and inference efficiency. Specifically, we compared the number of parameters, computational cost (measured in MACs), and inference latency. The experimental results are presented in Table 5.

As shown in Table 5, the proposed model significantly reduces the number of parameters compared to the baseline, achieving a reduction of approximately 15.7%. This indicates the effectiveness of the structural optimization in enhancing model compactness. Although the computational cost slightly increases to 400.91 million MACs, the relative growth remains below 1%, which is within an acceptable range and does not impose a substantial computational burden.

In terms of inference performance, the proposed model achieves an average inference time of 25.19 ms, which is nearly identical to that of the baseline model (25.08 ms). The minimal difference in latency demonstrates that the proposed method maintains high inference efficiency despite the minor increase in computational complexity.

Overall, the results suggest that the proposed method successfully balances performance improvement with model compactness and computational efficiency. Its lightweight nature and low latency make it well suited for deployment in real-world scenarios, particularly those with resource constraints or stringent response time requirements.

Grad-CAM analysis

To further evaluate the neighborhood adaptability of the proposed model, this study conducted visualization experiments using Grad-CAM (Gradient-weighted Class Activation Mapping). As shown in Fig. 9, a comparative analysis between the baseline model (left) and the proposed method (right) clearly reveals significant differences in their attention patterns.

Each row in the figure corresponds to one input sample. The left three columns show the input image and the corresponding activation maps of the baseline model, while the right three columns illustrate the visualization results of the proposed method. The heatmaps highlight the key regions that the models attend to when making classification decisions.

In contrast, the proposed model produces more focused and semantically meaningful activation regions. The attention maps consistently cover the entire rock surface, effectively capturing structural and textural information. This indicates a stronger ability to perceive and adapt to local neighborhood variations. The more precise and comprehensive focus regions also imply enhanced robustness and interpretability.

In summary, the Grad-CAM visualizations provide qualitative evidence of the proposed model’s superior neighborhood adaptability in complex visual environments, further validating its effectiveness in enhancing local discriminative capacity.

Discussion

This study proposes an improved rock classification method based on EfficientNet-B0, incorporating DiffuseMix data augmentation, the Shuffle Attention mechanism, and the Lion optimizer to enhance classification performance. Although the model achieves high accuracy on the experimental dataset, certain limitations remain in practical applications.

On one hand, the dataset used in this study contains a limited number of rock types and a relatively small sample size. As a result, the model may struggle to generalize effectively to complex real-world conditions, such as variations in lighting, occlusions, and noise interference, thereby limiting its generalization capability. On the other hand, the model relies solely on static 2D visual features for classification, whereas 3D morphology, spectral analysis (XRD), and scanning electron microscopy (SEM) data are also crucial for accurate rock identification.

Beyond these limitations, the proposed model demonstrates strong potential for real-world geological applications. Its lightweight architecture and high classification accuracy make it particularly suitable for integration into existing geological exploration workflows, especially in scenarios requiring rapid on-site decision-making. For example, the model can be deployed on portable devices to assist with preliminary lithological identification during field surveys, thereby reducing reliance on traditional laboratory analyses. In addition, it can be incorporated into automated core scanning systems to provide real-time support for conventional petrographic studies, thereby improving exploration efficiency.

Future research will focus on expanding the dataset to enhance the model’s adaptability to complex geological environments. In parallel, the integration of multimodal features51,52,53, such as spectral data and three-dimensional morphological characteristics, is expected to further improve the accuracy and practical applicability of rock classification. In addition, efforts will be made to explore the deployment of the model in real-world geological applications, including optimizing the model for portable devices, integrating it into existing exploration systems, and conducting field validation to ensure reliability across diverse environmental conditions.

Conclusion

This study proposes an improved lightweight rock classification model based on EfficientNet-B0 to address common challenges in rock image classification, including high intra-class similarity, low inter-class variability, and excessive model complexity. By integrating the DiffuseMix data augmentation strategy, the Shuffle Attention mechanism, and the Lion optimizer, the proposed method enhances classification performance while maintaining a compact model structure.

To support the evaluation of the proposed approach, a new rock image dataset consisting of five representative rock types was constructed, effectively filling the gap in field-oriented datasets in existing research. Experimental results on this dataset demonstrate that the proposed model achieves a classification accuracy of 94.02% and an \(F_1\) score of 93.98%, with only 3.38 million parameters. These results surpass the performance of several mainstream models, including ResNet, MobileNet, ShuffleNet, and SwinTransformer, as well as recent advanced rock classification methods.

Ablation studies further verify the effectiveness of each component: DiffuseMix improves data diversity and model generalization; the Shuffle Attention mechanism enhances feature extraction while preserving model efficiency; and the Lion optimizer accelerates convergence and improves training stability.

In summary, the proposed model achieves an excellent trade-off between accuracy and model complexity, making it highly suitable for practical geological classification tasks, particularly in resource-constrained field environments.

Data availability

The datasets generated and analyzed during the current study are not publicly available due to requirements of our partners, but are available from the corresponding author on reasonable request. The source code used in this study is publicly available at: https://github.com/zhh-pixel/rock.

References

Xu, Z. et al. Deep learning of rock images for intelligent lithology identification. Comput. Geosci. 154, 104799 (2021).

Shirmard, H., Farahbakhsh, E., Müller, R. D. & Chandra, R. A review of machine learning in processing remote sensing data for mineral exploration. Remote Sens. Environ. 268, 112750 (2022).

Nance, R. D., Murphy, J. B. & Santosh, M. The supercontinent cycle: a retrospective essay. Gondwana Res. 25, 4–29 (2014).

Naldrett, A. From the mantle to the bank: the life of a ni-cu-(pge) sulfide deposit. South Afr. J. Geol. 113, 1–32 (2010).

Zhang, W., Bai, K., Zhan, C. & Tu, B. Parameter prediction of coiled tubing drilling based on gan-lstm. Sci. Rep. 13, 10875 (2023).

Tatar, A., Haghighi, M. & Zeinijahromi, A. Experiments on image data augmentation techniques for geological rock type classification with convolutional neural networks. J. Rock Mech. Geotech. Eng.. 17 (1), 106-125 (2024).

Ma, J. et al. A real-time intelligent classification model using machine learning for tunnel surrounding rock and its application. Georisk: Assess. Manag. Risk for Eng. Syst. Geohazards 17, 148–168 (2023).

Wang, P., Chen, X., Wang, B., Li, J. & Dai, H. An improved method for lithology identification based on a hidden markov model and random forests. Geophysics 85, IM27–IM36 (2020).

Song, Q., Jiang, H., Song, Q., Zhao, X. & Wu, X. Combination of minimum enclosing balls classifier with svm in coal-rock recognition. PLoS One 12, e0184834 (2017).

Chatterjee, S. Vision-based rock-type classification of limestone using multi-class support vector machine. Appl. intelligence 39, 14–27 (2013).

Sharif, H., Ralchenko, M., Samson, C. & Ellery, A. Autonomous rock classification using bayesian image analysis for rover-based planetary exploration. Comput. Geosci. 83, 153–167 (2015).

Wu, Y. & Misra, S. Intelligent image segmentation for organic-rich shales using random forest, wavelet transform, and hessian matrix. IEEE Geosci. Remote Sens. Lett. 17, 1144–1147 (2019).

Kalashnikov, A., Pakhomovsky, Y. A., Bazai, A., Mikhailova, J. & Konopleva, N. Rock-chemistry-to-mineral-properties conversion: Machine learning approach. Ore. Geol. Rev. 136, 104292 (2021).

Wenhua, W. et al. Lithology classification of volcanic rocks based on conventional logging data of machine learning: A case study of the eastern depression of liaohe oil field. Open Geosci. 13, 1245–1258 (2021).

Guohua, Q., Weiwei, Z. & Shuilong, S. Intelligent identification of stratum characteristics during shield tunnelling based on pca-gmm model. J. Basic Sci. Eng. 31, 1508–1518 (2023).

Bressan, T. S., de Souza, M. K., Girelli, T. J. & Junior, F. C. Evaluation of machine learning methods for lithology classification using geophysical data. Comput. Geosci. 139, 104475 (2020).

Xue, Y. Coal and rock classification with rib images and machine learning techniques. Min. Metall. Explor. 39, 453–465 (2022).

Li, J. et al. Autonomous martian rock image classification based on transfer deep learning methods. Earth Science Informatics 13, 951–963 (2020).

Chen, W., Su, L., Chen, X. & Huang, Z. Rock image classification using deep residual neural network with transfer learning. Front. Earth Sci. 10, 1079447 (2023).

Jia, L. et al. Swinmin: A mineral recognition model incorporating convolution and multi-scale contexts into swin transformer. Comput. Geosci. 184, 105532 (2024).

Guo, Y. et al. Automatic lithology identification method based on efficient deep convolutional network. Earth Sci. Informatics 16, 1359–1372 (2023).

Liu, X., Wang, H., Jing, H., Shao, A. & Wang, L. Research on intelligent identification of rock types based on faster r-cnn method. IEEE Access 8, 21804–21812 (2020).

Ma, M., Gui, Z. & Gao, Z. Research on a high-performance rock image classification method. Electronics 12, 4805 (2023).

Zhang, Y., Li, M. & Han, S. Automatic identification and classification in lithology based on deep learning in rock images. Yanshi Xuebao/Acta Petrologica Sinica 34, 333–342 (2018).

Liang, Y., Cui, Q., Luo, X. & Xie, Z. Research on classification of fine-grained rock images based on deep learning. Comput. Intell. Neurosci. 2021, 5779740 (2021).

Liu, Y., Wang, X., Zhang, Z. & Deng, F. Deep learning based data augmentation for large-scale mineral image recognition and classification. Miner. Eng. 204, 108411 (2023).

Li, D., Zhao, J. & Liu, Z. A novel method of multitype hybrid rock lithology classification based on convolutional neural networks. Sensors 22, 1574 (2022).

Tan, M. & Le, Q. E. Rethinking model scaling for convolutional neural networks. arxiv 2019. arXiv preprint arXiv:1905.11946 (1905).

Islam, K., Zaheer, M. Z., Mahmood, A. & Nandakumar, K. Diffusemix: Label-preserving data augmentation with diffusion models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 27621–27630 (2024).

Zhang, Q.-L. & Yang, Y.-B. Sa-net: Shuffle attention for deep convolutional neural networks. In ICASSP 2021-2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 2235–2239 (IEEE, 2021).

Chen, X. et al. Symbolic discovery of optimization algorithms. Advances in neural information processing systems 36 (2024).

Zhang, H. mixup: Beyond empirical risk minimization. arXiv preprint arXiv:1710.09412 (2017).

DeVries, T. Improved regularization of convolutional neural networks with cutout. arXiv preprint arXiv:1708.04552 (2017).

Yun, S. et al. Cutmix: Regularization strategy to train strong classifiers with localizable features. In: Proceedings of the IEEE/CVF international conference on computer vision, 6023–6032 (2019).

Deng, J. et al. Imagenet: A large-scale hierarchical image database. In: 2009 IEEE conference on computer vision and pattern recognition, 248–255 (Ieee, 2009).

Le, Y. & Yang, X. Tiny imagenet visual recognition challenge. CS 231N(7), 3 (2015).

Nilsback, M.-E. & Zisserman, A. Automated flower classification over a large number of classes. In: 2008 Sixth Indian conference on computer vision, graphics & image processing, 722–729 (IEEE, 2008).

Krause, J., Stark, M., Deng, J. & Fei-Fei, L. 3d object representations for fine-grained categorization. In: Proceedings of the IEEE international conference on computer vision workshops, 554–561 (2013).

Maji, S., Rahtu, E., Kannala, J., Blaschko, M. & Vedaldi, A. Fine-grained visual classification of aircraft. arXiv preprint arXiv:1306.5151 (2013).

Loshchilov, I. Decoupled weight decay regularization. arXiv preprint arXiv:1711.05101 (2017).

He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition. In: Proceedings of the IEEE conference on computer vision and pattern recognition, 770–778 (2016).

Xie, S., Girshick, R., Dollár, P., Tu, Z. & He, K. Aggregated residual transformations for deep neural networks. In: Proceedings of the IEEE conference on computer vision and pattern recognition, 1492–1500 (2017).

Sandler, M., Howard, A., Zhu, M., Zhmoginov, A. & Chen, L.-C. Mobilenetv2: Inverted residuals and linear bottlenecks. In: Proceedings of the IEEE conference on computer vision and pattern recognition, 4510–4520 (2018).

Howard, A. et al. Searching for mobilenetv3. In: Proceedings of the IEEE/CVF international conference on computer vision, 1314–1324 (2019).

Ma, N., Zhang, X., Zheng, H.-T. & Sun, J. Shufflenet v2: Practical guidelines for efficient cnn architecture design. In: Proceedings of the European conference on computer vision (ECCV), 116–131 (2018).

Liu, Z. et al. A convnet for the 2020s. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 11976–11986 (2022).

Mehta, S. & Rastegari, M. Mobilevit: light-weight, general-purpose, and mobile-friendly vision transformer. arXiv preprint arXiv:2110.02178 (2021).

Liu, Z. et al. Swin transformer: Hierarchical vision transformer using shifted windows. In: Proceedings of the IEEE/CVF international conference on computer vision, 10012–10022 (2021).

Huang, Z., Su, L., Wu, J. & Chen, Y. Rock image classification based on efficientnet and triplet attention mechanism. Appl. Sci. 13, 3180 (2023).

Zhang, Y., Ye, Y.-L., Guo, D.-J. & Huang, T. Pca-vgg16 model for classification of rock types. Earth Sci. Informatics 17, 1553–1567 (2024).

He, K. et al. Remote sensing image interpretation of geological lithology via a sensitive feature self-aggregation deep fusion network. Int. J. Appl. Earth Obs. Geoinf. 137, 104384 (2025).

He, K. et al. Improving geological remote sensing interpretation via a contextually enhanced multiscale feature fusion network. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 17, 6158-6173 (2024).

Niu, H., Fan, R., Chen, J., Xu, Z. & Feng, R. Urban informal settlements interpretation via a novel multi-modal kolmogorov-arnold fusion network by exploring hierarchical features from remote sensing and street view images. Sci. Remote. Sens. 11, 100208 (2025).

Funding

This work was supported in part by the Open Fund of Hubei Key Laboratory of Drilling and Production Engineering for Oil and Gas(Yangtze University)(YQZC202205).

Author information

Authors and Affiliations

Contributions

K.B. developed the concept, devised the methodology; Z.Z. developed the software, managed data; S.J. wrote the original draft; S.D. reviewed and edited the manuscript. All authors reviewed the manuscript. All authors have read and agreed to the published version of the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Bai, K., Zhang, Z., Jin, S. et al. Rock image classification based on improved EfficientNet. Sci Rep 15, 18683 (2025). https://doi.org/10.1038/s41598-025-03706-0

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-025-03706-0