Abstract

In this study, we utilized a comprehensive dataset comprising over 19,000 data points with inputs represented by coordinates (x, y) and the corresponding output denoted as concentration (C). The case study was analysis of mesoporous material for adsorption separation of target solute from aqueous solution. Mass transfer and machine learning evaluations were carried out to obtain separation efficiency. Our objective was to develop predictive models for C using three distinct base models: decision tree (DT), support vector regression (SVR), and Gaussian process regression (GPR). To enhance the predictive performance of these base models, we employed the ensemble method AdaBoost, which combines their outputs to yield more accurate predictions. Hyper-parameter optimization was achieved through particle swarm optimization, allowing us to fine-tune the models for optimal results. The results of our experiments demonstrate promising performance across the ensemble models. Specifically, AdaBoost combined with decision tree (ADA-DT) yielded an impressive R2 of 0.96984 and a mean squared error (MSE) of 7.9407E+00. AdaBoost combined with SVR (ADA-SVR) achieved an even higher R2 score of 0.97148 with an MSE of 7.6362E+00, while AdaBoost combined with GPR (ADA-GPR) produced a commendable R2 score of 0.95963 with an MSE of 8.16352E+00.

Similar content being viewed by others

Introduction

Various computational models have been developed for analysis and correlation of separation processes where a solute is removed from solution via mass transfer. The models are primarily based on estimation of diffusional and convective mass transfer rates and use of thermodynamics for phase equilibria in the solution and solid phase1,2,3. It is of great importance to find out the concentration distribution of solute in adsorption process so that the distribution and separation efficiency can be correlated to the process parameters.

Usually, computational fluid dynamics (CFD) method is used for determination of concentration distribution in adsorption process by which transport phenomena equations should be solved numerically in order to find the distribution of solute concentration in the solution. By calculating the concentration values at the outlet in continuous mode or at the end of process for batch mode, one can estimate the separation efficiency and the time required for the desired separation4,5,6. Although CFD is powerful in measuring the separation efficiency, the method of computation suffers from some drawbacks such as computational expenses and therefore some methods based on Machine learning (ML) can be replaced the physical models.

The use of machine learning models has gained significant traction as powerful tools for the analysis and prediction of data in various industries7,8. The application of these methodologies enables the extraction of valuable insights and complex patterns from intricate datasets, thereby empowering researchers to generate accurate predictions and well-informed decisions. There have been significant advances in the ___domain of ML over the past decade, which have led to the emergence of various algorithms and models designed to address a wide range of complex problems9,10,11. Unlike CFD method, ML can learn the data to offer quick and accurate prediction of concentration distribution of solute entire the process12,13. For adsorption, this can be done by finding solute concentration versus spatial coordinates such as x and y14. From the practical point of view, finding solute concentration versus x and y can help optimize the process and calculate the separation efficiency for various solid adsorbents, thereby saving time and cost of measurements.

The present study employed AdaBoost regression as the ensemble method, incorporating three distinct base models: decision tree (DT), support vector regression (SVR), and Gaussian process regression (GPR) for the first time to predict solute concentration in adsorption process using mesoporous silica materials. AdaBoost regression is a widely utilized and highly efficient methodology for constructing precise regression models. This is achieved by amalgamating the forecasts of numerous weak regressors through an adaptive and iterative procedure15.

The DT regression approach is a simple yet powerful way of modeling predictive data. By recursively splitting the dataset, it creates a tree-like structure where each leaf (terminal) node contains the predicted numeric value. DT regression is known for its interpretability, making it a popular choice when understanding feature importance is crucial. However, it can be prone to overfitting noisy data, and its performance may be limited for highly complex relationships within the data16.

SVR represents a distinct adaptation of support vector machines (SVMs), for regression objectives. SVR endeavors to pinpoint an ideal hyperplane that adeptly captures the inherent data patterns, all while accounting for a predefined range of acceptable deviations, referred to as the epsilon-insensitive zone17.

GPR is a versatile technique utilized for the modeling of non-linear relationships within data. GPR employs mathematical functions to model the uncertainty of predictions based on the available training set. A kernel function quantifies the similarity and correlation between data. GPR is capable of effectively managing observations that are affected by noise and intricate data patterns. The computation of large datasets can pose challenges18.

The main contribution and innovation of the current study is developing a modeling framework based on machine learning and integration of CFD and ML for prediction of solute concentration distribution in adsorption process. Furthermore, the built models are optimized using advanced algorithm including particle swarm optimization to enhance its performance. Use of boosting strategy and integration of optimizer would enhance the accuracy of models considerably which have practical application in design and optimization of adsorption process for selective removal of species from solution such as water treatment.

Material and methods

In this section, we outline the comprehensive methodology employed for the regression analysis of a concentration dataset comprising more than 19,000 data points. The primary objective of this analysis is to model and predict the concentration (C) in a given environment based on two coordinates (x and y). To ensure the robustness of our analysis, we follow a structured workflow that encompasses data preprocessing, model selection, hyper-parameter optimization, and ensemble learning. The methodology can be summarized into the following key steps:

-

1.

Data preprocessing: We commence by scrutinizing the dataset for outliers using Cook’s distance, a widely accepted method for identifying influential data points. To facilitate consistent model training, we employ min–max scaling to normalize the input features (x and y). Subsequently, we partition the dataset into a training set (75%) and a test set (25%) to enable model evaluation.

-

2.

Model selection: Three distinct regression models serve as the foundation of our analysis: DT, SVR, and GPR. Our choice of these models was based on their ability to capture nonlinear relationships and handle large datasets. In addition, we use the AdaBoost ensemble method to combine the strengths of these base models to improve prediction accuracy.

-

3.

Hyper-parameter optimization: To harness the full potential of each base model and the ensemble method, we employ particle swarm optimization (PSO) for hyper-parameter tuning. PSO is utilized to search for optimal hyper-parameter configurations, such as tree depth for DT, kernel selection for SVR, and alpha value for GPR.

This structured approach (visually shown in Fig. 1), from data preprocessing to ensemble learning, aims to uncover the best regression model for predicting chemical concentrations within the spatial ___domain defined by x and y coordinates. In the subsequent sections, we delve into the specifics of each step, presenting experimental results and insights derived from this comprehensive analysis. The building blocks of this modeling structure are described in the rest of this section.

Dataset description

The dataset under examination contains over 19,000 entries, which represent a diverse collection of data points14. The data have been collected from CFD simulation of mass transfer in continuous mode considering a single adsorbent particle which is mesoporous silica structure with high surface area for adsorption. Diffusion was considered inside the porous adsorbent, and the mass transfer in the solution was assumed to be diffusion and convection due to the fluid flow. The convection term is significant in the bulk of solution because of velocity dominance, and effect of viscous forces are simulated via momentum equation14.

Mass transfer model can be expressed as diffusion and convection for the bulk of fluid and inside the porous solid which is diffusion only. The general form of mass transfer may be written as19:

where C is the concentration of solute (mol/m3), D is diffusivity, V is velocity, and t is time. R is chemical reaction term which is zero in this case.

The fluid flow in the bulk of solution (Navier–Stokes equations) can be expressed as19:

where V is velocity, p is pressure, \(\rho\) is density of fluid, \(\mu\) is the viscosity, and F is the body force.

The coupled equations of mass transfer and Navier–Stokes were solved by finite element method and utilization of COMSOL software to determine concentration distribution of solute at various locations in the process, i.e., x and y. Indeed, two-dimensional model was assumed for the mass transfer simulation of adsorption process. Then, the concentration (C) data was used in the next step for ML modeling. As such, C as a function of x and y are used for ML analysis.

Due to its size and diversity, the dataset can be used for research and modeling, allowing a thorough exploration of various correlations and patterns. The inputs are x and y measured in meters, while the output feature is C expressed in moles per cubic meter (moles/m3)14. The histograms of variables are shown in Fig. 2.

Outlier detection: Cook’s distance

In the process of analyzing the dataset and building regression models, it is essential to identify potential outliers that might influence the model’s performance and reliability. Cook’s distance is a valuable metric for detecting influential data points in regression analysis. It measures the impact of each data point on the model’s predictions and parameter estimates.

For a given data point i, Cook’s distance \(D_{i}\) is defined as20,21:

where n stands for the total number of data points, \(Y_{j}\) denotes the observed response variable, and \(Y_{j\left( i \right)}\) denotes the predicted response variable with the i-th data point removed. Also, p represents the number of model parameters (including intercept) and \(\widehat{{{\upsigma }^{2} }}\) stands for the estimated variance of the error term.

Cook’s distance quantifies how much the model’s predictions and parameters change when the i-th data point is excluded. Larger Cook’s distances indicate that the data point significantly influences the model.

A common threshold for identifying influential points is a Cook’s distance greater than \(4/n\), which corresponds to a point having a substantial impact on the model.

In our analysis, Cook’s distance was used as a preliminary step for outlier detection. Data points with Cook’s distance values exceeding the threshold were flagged as potential outliers and considered for further investigation and preprocessing.

By identifying and addressing influential outliers, we aimed to improve the robustness and accuracy of our regression models.

Ensemble method: AdaBoost regression

AdaBoost, abbreviated from Adaptive boosting, stands as an ensemble regression method that amalgamates forecasts from numerous weak learners, typically decision trees, to craft a potent predictive model. In situations where input and output variables have complex relationships, it is particularly useful.

In the AdaBoost algorithm, the assignment of weights to each weak learner is determined by their respective accuracies in predicting the target variable. In the context of ML, weak learners that exhibit superior performance are assigned greater weights, while those that demonstrate inferior performance are assigned lesser weights. The final prediction is achieved by combining the weighted predictions contributed by each independent weak learner22.

The key equation for AdaBoost regression involves calculating the weighted sum of the weak learners’ predictions15:

In this context, the ultimate prediction, denoted as F(x), is determined by a combination of factors. \({\upalpha }_{t}\) signifies the weight attributed to the weak learner \(h_{t} \left( x \right)\), while T represents the overall count of weak learners in the ensemble.

AdaBoost incrementally adjusts the weights of training samples to emphasize those misclassified in the prior iteration, thus enhancing predictive accuracy. The steps of this model are shown in Fig. 3.

Base models

The models employed in this study comprise of DT, GPR, and SVR. The aforementioned models function as the fundamental components within the ensemble learning structure known as Adaptive Boosting Regression (AdaBoost).

The DT regression model is a versatile and interpretable ML technique used in our analysis. It constructs a tree-like structure that recursively partitions the dataset based on the input features, x and y, into regions with distinct predicted outcomes. In the context of regression, each leaf node of the tree represents a predicted numeric value for the target variable, C (concentration). DT regression identifies complex, nonlinear associations between input features and the target variable. This is especially beneficial for our analysis, as it offers insights into the hierarchical significance of input features in predicting chemical concentrations, facilitating result interpretation. The model’s hyperparameters, such as tree depth and split criteria, are optimized through PSO to ensure optimal performance in our regression task23.

In the context of regression tasks, researchers have introduced SVR, which is a specialized version derived from the general-purpose support vector machine (SVM). SVR is designed to identify an optimal hyperplane that effectively accommodates the dataset while regulating deviations within an epsilon-insensitive zone. This approach seeks to strike a delicate balance between accurately fitting the training data and averting overfitting. Leveraging the kernel trick, SVR transforms the data into higher-dimensional space, thereby enhancing its capacity to capture intricate, non-linear associations within the dataset24. SVR demonstrates proficiency in managing datasets with high dimensionality and exhibits reduced sensitivity to outliers. Nevertheless, the key to achieving peak performance lies in the judicious selection of hyperparameters and kernel functions24.

GPR represents a Bayesian approach, wherein it characterizes the connection between input attributes X and the target variable Y as a probabilistic distribution encompassing various functions. This predictive distribution takes the form of25:

Within the framework of this equation, the expression \({\upmu }\left( X \right)\) signifies the mean function, while \(k\left( {X,X^{\prime}} \right)\) serves as the kernel function, responsible for evaluating the likeness between data points X and \(X^{\prime}\). GPR exhibits the ability to capture intricate non-linear associations within the dataset and excels in managing observations tainted by noise. Nevertheless, it may encounter computational challenges when confronted with extensive datasets, primarily due to the requirement of inverting a covariance matrix. The method is described in Fig. 4.

Hyperparameter tuning: particle swarm optimization (PSO)

The PSO is a nature-inspired optimization method that emulates the social behavior of birds in flocks or particles traversing a multi-dimensional space. The PSO algorithm is utilized in hyperparameter tuning to detect the optimal hyperparameters that enhance the fitting accuracy of machine learning26,27.

PSO initializes a population, with each particle symbolizing a distinct configuration of hyperparameters. These particles systematically modify their locations in the hyperparameter space according to their present performance and the experiences of their peers. The PSO algorithm efficiently navigates the hyperparameter space and progressively approaches the optimal solution by tracking the most successful particles in the swarm28. One of the unique aspects of the PSO algorithm is its ability to strike a balance between exploration and exploitation. This adaptive behavior makes the PSO algorithm a valuable tool for hyperparameter tuning, where the objective is to discover hyperparameter configurations that yield the best model performance.

Results and discussion

In this section, the results of our work involving three base regression models (DT, SVR, and GPR) combined with the AdaBoost ensemble method are represented and analyzed. The project was built using Python 3.10, harnessing powerful libraries for data analysis and ML. Scikit-learn provided a robust set of ML algorithms for predictive modeling, while Matplotlib enabled data visualization to illustrate trends and results. NumPy and Pandas supported efficient numerical operations and data management, streamlining the analysis process.

We assessed the efficacy of each model utilizing two fundamental metrics: the Coefficient of Determination (R2-score) and the mean squared error (MSE). These metrics provide insights into the model’s goodness of fit and the magnitude of prediction errors, respectively. The models were assessed on training, fivefold cross-validation, and test sets, with results summarized in Table 1, which includes R2-score and MSE for each phase.

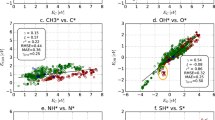

The findings illustrate the effectiveness of the AdaBoost ensemble technique in enhancing the predictive precision of the foundational regression models. AdaBoost integrated with decision tree (ADA-DT) attained a remarkable R2-score of 0.96984, indicating a robust agreement between predictions and actual data. AdaBoost combined with support vector regression (ADA-SVR) performed even better with an R2 of 0.97148, showcasing excellent predictive accuracy. AdaBoost combined with Gaussian Process Regression (ADA-GPR) also exhibited commendable performance with an R2-score of 0.95963. Finally, the ADA-SVR model is selected to do our final analysis. Figure 5 compares the observed and predicted output values. Also, partial dependencies are shown in Figs. 6 and 7 which show agreement with the results reported in literature14.

The close alignment of R2-scores and MSE values across training, fivefold cross-validation, and test sets, as shown in Table 1, indicates the absence of significant overfitting in the AdaBoost ensemble models. For instance, the ADA-SVR model’s cross-validation R2-score of 0.96629 is only marginally lower than its test R2-score of 0.97148, with a similar trend observed in MSE values. Likewise, ADA-DT and ADA-GPR exhibit consistent performance across all sets, suggesting that the models generalize well to unseen data. This robustness, achieved through careful hyper-parameter tuning via particle swarm optimization and the ensemble approach, underscores the reliability of the predictive models for practical applications.

Figure 8 displays the ultimate prediction surface in a three-dimensional representation, while Fig. 9 exhibits the contour plot of the output. The significance of the input features is demonstrated in Fig. 10. No significant concentration change was observed in the bulk of solution which is far from the solid adsorbent, and that implies the concentration change is important near the surface of porous adsorbent. It shows the formation of solute concentration boundary layer governed by molecular diffusion (see Fig. 9). It is also notable that molecular diffusion is significant inside the porous adsorbent which causes significant concentration change inside the mesoporous silica. Same variations was reported by Sun et al.14 for hybrid machine learning modeling of solute concentration in adsorption process.

In addition to assessing the accuracy of the ML models, we evaluated their computational efficiency, focusing on CPU time and memory usage. These metrics are essential for determining the feasibility of deploying the models in practical, resource-limited settings. For example, the overall training time for Model ADA-DT was approximately 531 s, Model ADA-GPR required 590 s, and Model ADA-SVR took 672 s. These differences highlight the trade-offs between model complexity and computational cost, which are critical considerations for scalability and real-world application. Future efforts could explore optimization techniques, such as parallel processing or model simplification, to reduce these computational demands while maintaining performance.

While this study offers valuable insights into the performance of the tested ML algorithms, several limitations should be noted to provide a balanced perspective. The dataset used was relatively modest in size, which may limit the generalizability of the findings to larger or more diverse scenarios. Furthermore, the study focused on a specific subset of algorithms, leaving out other potentially effective methods that could yield different results. Real-world factors, such as data imbalance or noisy inputs, were not fully addressed, potentially affecting the models’ robustness. To build on this work, future research could incorporate larger and more varied datasets, test a broader range of algorithms, and account for additional practical constraints. These steps would enhance the applicability and reliability of the models in diverse contexts.

Conclusion

Our study found that ensemble modeling, specifically AdaBoost, improves the predictive performance of base regression models (DT, SVR, GPR) on a large dataset with spatial coordinates (x, y) predicting concentration (C) in mol/m3.

Our findings indicate that AdaBoost, in combination with these base models, consistently produces highly accurate predictions. AdaBoost combined with DT and SVR yielded R2 scores exceeding 0.97, signifying strong alignment between predictions and actual observations. Furthermore, the combination of AdaBoost and GPR attained an impressive R2 score of 0.95963. These findings highlight the efficacy of AdaBoost as a formidable ensemble technique for enhancing the precision of spatial regression models.

Furthermore, our utilization of PSO for hyper-parameter tuning showcased the importance of optimizing model parameters to achieve optimal predictive performance. This fine-tuning process significantly contributed to the superior results obtained in our study.

The implications of our research extend to various domains, including environmental science, where accurate predictions of spatially distributed concentrations are critical for informed decision-making. By leveraging ensemble techniques like AdaBoost, researchers and practitioners can harness the power of diverse base models to improve the reliability of predictions in complex spatial datasets.

In summary, our study highlights the synergy between ensemble learning, hyper-parameter optimization, and spatial regression modeling, offering a valuable framework for enhancing predictive accuracy and advancing the application of ML in spatial data analysis. These insights pave the way for more precise predictions and informed decisions in fields reliant on spatial concentration modeling.

Data availability

The datasets used and analyzed during the current study are available from the corresponding author on reasonable request.

Abbreviations

- C:

-

Concentration (mol/m3)

- DT:

-

Decision tree

- GPR:

-

Gaussian process regression

- MSE:

-

Mean squared error

- R2 :

-

Coefficient of determination

- SVR:

-

Support vector regression

- T:

-

Temperature (°C)

- x, y:

-

Spatial coordinates (m)

- Δ:

-

Change or difference

- ε:

-

Epsilon (e.g., error term in regression)

- σ:

-

Standard deviation

- CFD:

-

Computational fluid dynamics

- ML:

-

Machine learning

- PSO:

-

Particle swarm optimization

References

Chen, B. et al. Removal of Cl− from contaminated acid by resin adsorption: Kinetics, isothermal model, approximate site energy distribution and adsorption mechanism. J. Environ. Chem. Eng. 13(3), 116656 (2025).

Ismail, B. B. et al. Mechanism of punicalagin adsorption on novel baobab-derived green adsorbents: Insights from a mass transfer model approach. Sep. Purif. Technol. 369, 133101 (2025).

Zuhara, S. & McKay, G. Single and binary pollutant adsorption of strontium and barium on waste-derived activated carbons: Modelling, regeneration and mechanistic insights. Environ. Technol. Innov. 39, 104220 (2025).

Fabian Ramos, H. S. et al. CFD-based model of adsorption columns: Validation. Chem. Eng. Sci. 285, 119606 (2024).

Omdehghiasi, H., Yeganeh-Bakhtiary, A. & Korayem, A. H. Comprehensive study on copper adsorption using an innovative graphene carbonate sand composite adsorbent: Batch, fixed-bed columns, and CFD modeling insights. Chem. Eng. Process. Process Intensif. 206, 110047 (2024).

Xiao, R. et al. Efficient heterogeneous adsorption of Hg0 on the Se-based sorbent (Se/SiO2): Experimental and modeling by DEM + CFD + ANOVA method. Sep. Purif. Technol. 364, 132427 (2025).

Kumar Tipu, R. et al. Shear capacity prediction for FRCM-strengthened RC beams using hybrid ReLU-activated BPNN model. Structures 58, 105432 (2023).

Tipu, R. K. et al. Enhancing load capacity prediction of column using eReLU-activated BPNN model. Structures 58, 105600 (2023).

Zhong, S. et al. Machine learning: New ideas and tools in environmental science and engineering. Environ. Sci. Technol. 55(19), 12741–12754 (2021).

Zhou, Z.-H. Machine Learning (Springer, 2021).

Lamba, P. et al. Repurposing plastic waste: Experimental study and predictive analysis using machine learning in bricks. J. Mol. Struct. 1317, 139158 (2024).

Hassan, R. & Kazemi, M. R. Machine learning frameworks to accurately estimate the adsorption of organic materials onto resin and biochar. Sci. Rep. 15(1), 15157 (2025).

Kooh, M. R. R. et al. Machine learning approaches to predict adsorption capacity of Azolla pinnata in the removal of methylene blue. J. Taiwan Inst. Chem. Eng. 132, 104134 (2022).

Sun, Y., Xia, L. & Zhang, Y. Mesosilicate materials for environmental applications: Adsorption separation analysis via hybrid computational and machine learning tools. Case Stud. Therm. Eng. 56, 104285 (2024).

Collins, M., Schapire, R. E. & Singer, Y. Logistic regression, AdaBoost and Bregman distances. Mach. Learn. 48, 253–285 (2002).

Myles, A. J. et al. An introduction to decision tree modeling. J. Chemom. A J. Chemom. Soc. 18(6), 275–285 (2004).

Schölkopf, B. et al. New support vector algorithms. Neural Comput. 12(5), 1207–1245 (2000).

Schulz, E., Speekenbrink, M. & Krause, A. A tutorial on Gaussian process regression: Modelling, exploring, and exploiting functions. J. Math. Psychol. 85, 1–16 (2018).

Qin, J.-X. et al. CFD simulation of liquid–liquid extraction mechanism and enhancement schemes in microchannels based on three mass transfer models. Chem. Eng. Res. Des. 211, 221–234 (2024).

Banerjee, M. Cook’s distance in linear longitudinal models. Commun. Stat. Theory Methods 27(12), 2973–2983 (1998).

Cook, R. D. Detection of influential observation in linear regression. Technometrics 19(1), 15–18 (1977).

Schapire, R. E. Explaining adaboost. In Empirical Inference: Festschrift in Honor of Vladimir N. Vapnik, 37–52 (2013).

Rokach, L. & Maimon, O. Decision trees. In Data Mining and Knowledge Discovery Handbook, 165–192 (2005).

Zhang, F. & O’Donnell, L. J. Support vector regression. In Machine Learning 123–140 (Elsevier, 2020).

Wang, J. An Intuitive Tutorial to Gaussian Processes Regression. arXiv preprint https://arxiv.org/abs/:2009.10862 (2020).

Shi, Y. Particle swarm optimization: Developments, applications and resources. In Proceedings of the 2001 Congress on Evolutionary Computation (IEEE Cat. No. 01TH8546) (IEEE, 2001).

Kennedy, J. & Eberhart, R. Particle swarm optimization. In Proceedings of ICNN’95-International Conference on Neural Networks (IEEE, 1995).

Sen, G. & Krishnamoorthy, M. Discrete particle swarm optimization algorithms for two variants of the static data segment ___location problem. Appl. Intell. 48(3), 771–790 (2018).

Acknowledgements

Fund support: The Scientific Research Fund of Hunan Provincial Education Department (NO. 23B0994).

Author information

Authors and Affiliations

Contributions

Minge Yang: Writing, methodology, investigation, resources, validation. Qiqing Yue: Writing, investigation, resources, software. Junyi He: Writing, methodology, investigation, visualization, supervision. All authors reviewed the manuscript.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Yang, M., Yue, Q. & He, J. Evaluation of mesoporous silica synthesized for green adsorption by modeling via machine learning and mass transfer. Sci Rep 15, 19477 (2025). https://doi.org/10.1038/s41598-025-04324-6

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-025-04324-6