Abstract

Chalcogenide glasses, renowned for their exceptional optoelectronic properties, have become the material of choice for numerous microelectronic and optical devices. With the rapid development of artificial intelligence technologies in the field of materials science, researchers have increasingly incorporated machine learning (ML) methods to accelerate the exploration of composition–structure–property relationships in chalcogenide glasses. However, traditional ML methods, which predominantly rely on single-property modelling, are often inadequate for addressing the practical demand for multiproperty collaborative optimization. To overcome this limitation, this study proposes a graph-based deep learning approach and develops a novel model for the efficient prediction of key properties of chalcogenide glasses. Furthermore, relevant data were collected from the publicly available SciGlass database to construct an experimental dataset, and the performance of the developed model was systematically evaluated. The experimental results demonstrate the model’s remarkable stability and predictive performance, highlighting its potential application value in the design and development of chalcogenide glasses.

Similar content being viewed by others

Introduction

Chalcogenide glasses are a class of nonoxide glass materials composed of one or more elements from sulfur (S), selenium (Se), and tellurium (Te) combined with other nonoxygen elements, such as those from groups IV, V, or VI1,2. Since Frerichs first discovered As₂S₃ glass in 1950, chalcogenide glasses have garnered significant attention due to their exceptional infrared transmission properties. Their unique physicochemical characteristics, including extremely high nonlinear refractive indices, low phonon energy, narrow bandgaps (1–3 eV), and compositionally tunable flexibility, have rendered them highly promising for applications in microelectronics and optical devices3,4. To date, the optimization of the material properties of sulfur-based glasses is still based on trial‒and‒error experiments, and researchers have mostly explored the synthesis of these glasses by adjusting their chemical composition. This “Edisonian” approach consumes considerable experimental resources and time and lacks a systematic modelling and prediction mechanism between compositional modulation and performance response.

In recent years, the development of artificial intelligence technologies has encouraged researchers to employ machine learning (ML) methods to predict the optical and thermal properties of chalcogenide glasses (e.g., glass transition temperature, thermal expansion coefficient, and refractive index) based on existing experimental data. The introduction of ML algorithms has been shown to be effective in accelerating the research of sulfur-based glasses, thereby accelerating the material design process5,6,7,8,9,10,11,12,13,14,15,16. For example, M. Zhang et al. developed the first ML algorithm in sulfur glasses with a multiple linear regression model that successfully predicted the glass transition temperature (Tg) of the binary AsxSe(1-x)system17. On this basis, Saulo Martiello Mastelini et al. subsequently applied multiple algorithms to construct property prediction models for chalcogenide glasses, further incorporating Shapley additive explanations (SHAPs) to interpret the decision logic of the models, thereby elucidating the effects of the glass composition on their properties and advancing related research18. To further broaden the research scope, Sayam Singla et al. developed an ML model capable of predicting 12 physical properties of chalcogenide glasses using up to 51 compositional input elements, making it the most comprehensive model in the literature regarding input diversity and property coverage. Moreover, they employed SHAP to analyse the contributions of different components to specific properties, providing valuable interpretability for compositional design. Based on the model, the authors reported that Ge increases the Tg and Young’s modulus of glass but decreases the coefficient of thermal expansion, whereas the opposite is true for Se, S, P, and antimony, all of which have different effects on the properties. Based on these conclusions, the authors also developed the Glass Selection Chart (GSC) to assist in the compositional design of sulfur glasses, which allows the user to customize sulfur glasses according to the needs of the user19.

However, efficient modelling methods for predicting the property characteristics of complex vulcanized glasses are lacking. For example, machine learning models typically focus on single-property predictions and fail to meet the practical demands for multiproperty collaborative optimization; to address this, R. Ravinder proposed a new deep learning (DL)-based design paradigm, which developed a bidirectional composition–property prediction model for oxide glasses, demonstrating the immense potential of deep learning in materials design14. Compared with traditional ML approaches, DL possesses powerful nonlinear modelling capabilities, efficiently handles high-dimensional data, and enables simultaneous optimization of multiple target properties. Furthermore, DL eliminates the need for manual feature selection, automatically extracting the complex relationships between composition and properties, making it particularly well suited for materials such as chalcogenide glasses, which exhibit pronounced nonlinear characteristics.

Based on these insights, this study proposes a graph classification-based deep learning approach for predicting key properties of chalcogenide glasses, such as the glass transition temperature (Tg), thermal expansion coefficient (CTE), and refractive index (nd). This method not only provides a more comprehensive understanding of the intricate relationships between composition and properties but also offers an efficient auxiliary tool for the multiproperty optimization and design of chalcogenide glasses, aiming to overcome the limitations of traditional approaches in materials design.

The contributions of this study are summarized as follows:

-

We first introduced a graph-coded approach to characterize the way in which objects are composed of vulcanized glass, which provides a new research idea for the study of the complex composition of vulcanized glass.

-

A novel graph classification-based deep learning model was developed to accurately predict the key attributes of chalcogenide glasses, including the glass transition temperature (Tg), thermal expansion coefficient (CTE), and refractive index (nd).

Methodology

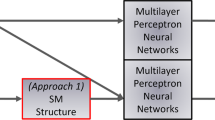

As shown in Fig. 1, each substance component generates its corresponding graphical representation. For example, the nodes in the graph represent the content labels of a certain element, which are divided into low, medium, and high labels. The generated graph represents the properties of the substance in the form of a fully connected graph. This graphical representation method helps to intuitively understand the impact of different element contents on material properties.

This article proposes a hybrid graph classification model, which is a supervised graph classification method. The central idea of this model is to use a GNN to encode the component attribute graph, thereby obtaining the internal feature representation of the data. Specifically, the hybrid graph classification model extracts the global attributes of the graph by obtaining node representations and internal representations of edges.

Graph neural networks (GNNs) utilize the graph structure and node features Xv to learn the representation vector hv of nodes or the representation vector hG of the entire graph. Modern GNNs adopt a neighbourhood aggregation strategy to update node representations by iteratively aggregating the representations of neighbouring nodes. After k iterations of aggregation, the representation of nodes can capture the structural information within their k-hop network neighbourhood. Formally, the k-th layer of the GNN is represented as:

Among them is the eigenvector of node v in k iterations on the ground, which we initialize, and it is also the degree of node v. In the GNN, various AGGREGATE architectures have been proposed, which are crucial. In the pooling variant of GraphSAGE (Hamilton et al., 2017a), AGGREGATE is formalized as

where W is a learnable matrix and MAX represents elementwise max pooling. The combined step can be a join operation followed by linear mapping. In graph convolutional networks (GCNs) (Kipf&Welling, 2017), elementwise average pooling, AGGREGATE, and combination steps are integrated as follows:

For graph classification tasks, the READOUT function aggregates the node features in the final iteration to obtain the representation vector hG of the entire graph

READOUT can be a permutation invariant function that uses summation. After obtaining the node and edge representation information of the graph, this paper adopts graph sampling to enhance the generalizability of the model. At the beginning of each epoch, we use the latest learned model to evaluate the distance or similarity between classes and then construct a graph for all classes. In this way, information sampling can be performed by utilizing the relationships between classes. Specifically, we randomly select one image from each class to construct a small subdataset. The feature embeddings of the current network are then extracted, denoted as X ∈ RC × d, where C is the total number of classes trained and d is the feature dimension. Then, for each category c, the top P-1 nearest neighbour categories can be retrieved, denoted as N (c)={xi | i = 1, 2…, P-1}, where P is the number of categories to be sampled in each small batch. Therefore, a graph G=(V, E) can be constructed, where V={c | c = 1,2…, C} represents vertices, each category is a node, E={(c1, c2) | c2 ∈ N (c1)} represents edges, and finally, for small batch sampling, for each category c as an anchor point, we retrieve all its connected categories in graph G. Then, along with the anchor category c, we obtain a set A={c} ∪ {x | (c, x) ∈ E}, where | A |=P. Next, for each category in set A, we randomly sample K instances of each category to generate a small batch containing B = P × K samples for training.

It is best to perform secondary aggregation on the sampled features of the graph through a layer of the GNN and finally map the graph features to the classification result space through fully connected (FC) methods. Finally, graph loss calculations are performed on the classification results to evaluate the reliability of the model.

This article uses cross entropy to predict the results of the model, where loss 1 is the Tg, loss 2 is the CTE, and loss 3 is the loss value of nd, with coefficients of 0.2, 0.4, and 0.4 used for gradient descent, respectively.

Experiments

Dataset

Chalcogenide glasses refer to glasses containing one or more elements from sulfur (S), selenium (Se), or tellurium (Te) while excluding oxygen (O), nitrogen (N), fluorine (F), chlorine (Cl), bromine (Br), iodine (I), silver (Ag), and gold (Au). Based on this definition, we collected relevant data from the SciGlass database. Table 1 provides a statistical summary of the data: the dataset contains a total of 556 glasses, including 355 glasses containing sulfur,350 containing selenium, 26 containing tellurium, and 339 containing germani.

Table 2 presents the number of data points in the dataset as well as the average, maximum, and minimum values of the output properties. Although the dataset is relatively small compared with those used in other studies, it is sufficient for model development and evaluation. Among the data, the maximum and minimum glass transition temperatures (Tg) are 763 K and 298 K, respectively. The maximum and minimum coefficients of thermal expansion (CTE) are − 4.18 and − 4.86, respectively, whereas the refractive index ranges from a maximum of 2.09 to a minimum of 3.05.

Table 3 provides the basic information of the dataset after it is converted into a graph-based representation. In this study, four graph-structured datasets were constructed by selecting 10%, 20%, 50%, and 100% of the labelled data, in order of increasing proportion. These datasets were then used for model development and evaluation to validate the stability of the model.

The model in this article is developed based on the idea of graph classification, so before developing the model, it is necessary to classify the input and output in a certain way. Table 4 is a classification table for the dataset used. Based on this table, the dataset and output attributes are classified into low, medium, and high ranges according to the molar percentage of input components. The output attributes are also classified into low, medium, and high ranges according to their numerical values.

Material examples

Figure 2 illustrates the workflow for predicting material properties based on elemental composition. The input data includes the elemental ratios of typical chalcogenide glass components (Ge, As, Se, Te, S). These quantitative ratios are first qualitatively classified into “high”, “medium”, or “low” levels according to predefined thresholds, resulting in an elemental classification table. Next, graphical modeling is applied to represent each material sample as a graph, where nodes and edges encode the structural relationships among elemental features. A graph-based classification model is then employed to predict key material properties, including the coefficient of thermal expansion (CTE), glass transition temperature (Tg), and refractive index at 10.6 μm. The output is presented in categorical form (high, medium, low), facilitating efficient screening and design of glass compositions, Where for the attributes that were incorrectly predicted we have indicated in red font.

-

(1)

For example, consider a chalcogenide glass composition of As₄₀Se₆₀. The constituent elements are arsenic (As) and selenium (Se), and according to a predefined classification table, their compositional labels are categorized as (Ge: low, As: high, Se: low, Te: low, S: high). The graph modeling module subsequently transforms these inputs—comprising both elemental species and their categorical labels—into a structured graph representation. This graph is then processed by the graph-based classification module to predict a set of output property labels, such as (high, high, high). By referencing the classification table, these predicted labels can be mapped to specific ranges of material properties (e.g., thermal expansion coefficient, glass transition temperature, refractive index), thereby completing the predictive cycle.

-

(2)

Another example is Ge₃₀As₁₃Se₅₇, whose input attributes are represented as (Ge: high, As: medium, Se: high, Te: low, S: low). Based on these inputs, the model predicts output property labels as (medium, high, high).

-

(3)

Another example is Ge₂₅As₂₀Se₂₅Te₃₀, with input attributes categorized as (Ge: high, As: high, Se: medium, Te: high, S: low). Based on these inputs, the model predicts output property labels of (medium, high, high). This process enables efficient and interpretable estimation of material properties, thereby supporting the targeted design of chalcogenide glasses.

Implementation

To train the model, we followed all the training conditions used in the previous study, the GHNN. We trained the classification model for 300 epochs with a batch size of 64. We used the Adam optimizer with a learning rate of 0.01. We repeated all the experiments five times and reported the average accuracy with standard deviations. All the experiments were implemented with a single NVIDIA RTX 2080 Ti with PyTorch Geometric.

Evaluation indicators

Deep learning models based on graph classification require model evaluation to verify their reliability, and three model metrics are commonly used: ACC (accuracy), recall (recall), and the F1 score (harmonic average of accuracy and recall).

ACC is the proportion of correctly predicted results among all predictions and is calculated via the following formula20:

where TP (true positive) is the number of samples correctly predicted as positive, TN (true negative) is the number of samples predicted as negative, FP (false positive) is the number of samples incorrectly predicted as positive, and FN (flame positive) is the number of samples incorrectly predicted as negative.

The second indicator is recall, which is defined as the proportion of correctly identified positive classes and represents the model’s ability to discover all positive classes. The calculation formula is21:

The third indicator is the F1 score, which is the harmonic average of precision and recall and is used to balance precision and recall ability. The calculation formula is22:

Results analysis

To verify the stability and generalization ability of the model, this paper extracts four different proportion sample sets of 10%, 20%, 50%, and 100% from the dataset for batch evaluation to comprehensively examine the performance of the model under changes in data size. In addition, to further demonstrate the superiority of the proposed model, we conducted systematic comparative experiments with various graph classification-based deep learning models in the literature, such as DGCNN23DCNN24ECC25DGK26GraphSAGE-GCN27and DeepWalk28to demonstrate the advantages of the model in processing complex graph structured data.

Table 5 shows the comparison results of the classification accuracy of the 8 models under different dataset ratios (10%, 20%, 50%, 100%). The results indicate that the model proposed in this paper (Our Model) is significantly better than the other methods in terms of all data proportions, especially in small sample scenarios (10% dataset), with an accuracy of 0.782, significantly surpassing the second-ranked GraphSAGE-GCN (0.723). For the complete dataset (100%), the accuracy of our model was further improved to 0.846, fully demonstrating its powerful learning ability for large-scale data. In addition, compared with traditional methods such as DGK and DeepWalk, deep learning methods based on graph neural networks such as GCN and GraphSAGE-GCN demonstrate significant advantages, indicating that deep learning methods can more efficiently capture the structural features of graphs. The model proposed in this article achieves a comprehensive surpassing of existing methods through a more efficient structural modelling strategy, verifying its superior performance and strong generalization ability in graph data classification tasks.

Table 6 shows the performance of the different models on the four datasets (10%, 20%, 50%, and 100% labelled data ratios) and the recall indicator test results. Specifically, the performances of the DGCNN, DCNN, ECC, DGK, GCN, GraphSAGE-GCN, DeepWalk, and the proposed model (our model) were compared.

The table shows that as the proportion of labelled data increases, the recall values show varying degrees of improvement. Among them, the model proposed in this article achieved the best performance on all datasets, demonstrating strong robustness and superiority. For example, on the 10% dataset, the model proposed in this article achieves a recall value of 0.735, which is significantly higher than those of the other models. On a 100% dataset, the recall further improved to 0.885, an increase of approximately 15.1% compared with the second highest, GraphSAGE-GCN (0.769).

In addition, from the comparison between models, the performance of traditional graph representation learning models (such as DeepWalk) is relatively low, whereas deep learning methods based on graph neural network models (such as GCN and GraphSAGE-GCN) perform better but still perform worse than our model does, indicating that our method has significant advantages in utilizing labelled data and constructing graph structures.

Table 7 presents the F1 score metric test results of 8 models at different labelled data ratios (10%, 20%, 50%, 100%), including DGCNN, DCNN, ECC, DGK, GCN, GraphSAGE-GCN, DeepWalk, and the proposed model (our model).

The table shows that the F1 score of each model generally tends to increase with increasing proportion of labelled data. The model proposed in this study achieves the best F1 score on all the datasets, indicating excellent generalizability and stability. For example, with 10% labelled data, the F1 score of our model reached 0.678, which is significantly higher than those of the suboptimal models DGK (0.637) and GraphSAGE-GCN (0.527). With 100% labelled data, the F1 score of our model reached 0.821, which is also ahead of those of other models, such as GraphSAGE-GCN (0.819) and GCN (0.716).

Further observation reveals significant performance differences among the different types of models. The F1 score of traditional graph embedding methods such as DeepWalk is consistently low, especially in the case of a low proportion of labelled data (10%), which is only 0.346. In contrast, models based on graph neural networks (such as GCN and GraphSAGE-GCN) perform better when the proportion of labelled data is high (50% and 100%), but the overall performance is still inferior to that of the model proposed in this paper. The above results indicate that the method proposed in this paper has significant advantages in fully utilizing graph structure information and labelling data.

Conclusion

This study introduces a novel methodology for modeling complex chalcogenide glasses by employing graph-based encoding to capture their compositional structures, thereby addressing the limitations of traditional single-component modeling approaches. The proposed model demonstrates improved performance and stability compared to existing methods, including Deep Graph Kernels (DGK), DeepWalk, and graph neural network models such as GCN and GraphSAGE-GCN, particularly under conditions of limited labeled data. These results highlight the model’s potential to advance research in the field of chalcogenide glasses. Nonetheless, the study acknowledges certain limitations, including the relatively small size of the initial dataset and the restriction to only five input features, despite the existence of over fifty relevant elements within chalcogenide systems. These constraints underscore the need for future research to enhance predictive accuracy and expand the model’s applicability across a broader range of compositions. The limitations of our study include the relatively small number of samples available for sulfide glasses, which constrains the model’s robustness and generalizability. To address this, future work will focus on constructing an expanded experimental dataset, thereby enhancing data diversity and promoting broader generalization. Additionally, we aim to extend the model’s prediction intervals to improve its applicability in real-world industrial settings and facilitate its practical deployment.

Data availability

The data that support the findings of this study are available upon reasonable request from Shaoyun Liu ([email protected]).Chalcogenide glasses data can be found on SciGlass database.(https://github.com/epam/SciGlass).

References

Lezal, D. Chalcogenide glasses-survey and progress. J. Optoelectron. Adv. Mater. 5, 23–34 (2003).

Varshneya, A. K. Fundamentals of Inorganic Glasses (Elsevier, 2013).

Adam, J. L. & Zhang, X. Chalcogenide Glasses: Preparation, Properties and Applications (Woodhead publishing, 2014).

Kokorina, V. F. Glasses for Infrared OpticsVol. 13 (CRC, 1996).

Alcobaça, E. et al. Explainable machine learning algorithms for predicting glass transition temperatures. Acta Mater. 188, 92–100 (2020).

Bhattoo, R., Bishnoi, S., Zaki, M. & Krishnan, N. A. Understanding the compositional control on electrical, mechanical, optical, and physical properties of inorganic glasses with interpretable machine learning. Acta Mater. 242, 118439 (2023).

Bishnoi, S., Ravinder, R., Grover, H. S., Kodamana, H. & Krishnan, N. A. Scalable Gaussian processes for predicting the optical, physical, thermal, and mechanical properties of inorganic glasses with large datasets. Mater. Adv. 2, 477–487 (2021).

Bishnoi, S. et al. Predicting young’s modulus of oxide glasses with sparse datasets using machine learning. J. Non-cryst. Solids. 524, 119643 (2019).

Cassar, D. R. & ViscNet Neural network for predicting the fragility index and the temperature-dependency of viscosity. Acta Mater. 206, 116602 (2021).

Cassar, D. R. et al. Predicting and interpreting oxide glass properties by machine learning using large datasets. Ceram. Int. 47, 23958–23972 (2021).

Cassar, D. R., Santos, G. G. & Zanotto, E. D. Designing optical glasses by machine learning coupled with a genetic algorithm. Ceram. Int. 47, 10555–10564 (2021).

Dreyfus, C. & Dreyfus, G. A machine learning approach to the Estimation of the Liquidus temperature of glass-forming oxide blends. J. Non-cryst. Solids. 318, 63–78 (2003).

Ravinder et al. Artificial intelligence and machine learning in glass science and technology: 21 challenges for the 21st century. Int. J. Appl. Glass Sci. 12, 277–292 (2021).

Ravinder, R. et al. Deep learning aided rational design of oxide glasses. Mater. Horiz. 7, 1819–1827. https://doi.org/10.1039/d0mh00162g (2020).

Singla, S., Mannan, S., Zaki, M. & Krishnan, N. A. Accelerated design of chalcogenide glasses through interpretable machine learning for composition–property relationships. J. Physics: Mater. 6, 024003 (2023).

Zaki, M. et al. Interpreting the optical properties of oxide glasses with machine learning and shapely additive explanations. J. Am. Ceram. Soc. 105, 4046–4057 (2022).

Zhang, Y. & Xu, X. Predicting as x se 1-x glass transition onset temperature. Int. J. Thermophys. 41, 149 (2020).

Mastelini, S. M. et al. Machine learning unveils composition-property relationships in chalcogenide glasses. Acta Mater. 240, 118302 (2022).

Singla, S., Mannan, S., Zaki, M. & Krishnan, N. M. A. Accelerated design of chalcogenide glasses through interpretable machine learning for composition–property relationships. J. Physics: Mater. 6 https://doi.org/10.1088/2515-7639/acc6f2 (2023).

Chicco, D. & Jurman, G. The advantages of the Matthews correlation coefficient (MCC) over F1 score and accuracy in binary classification evaluation. BMC Genom. 21, 1–13 (2020).

Cook, J. & Ramadas, V. When to consult precision-recall curves. Stata J. 20, 131–148 (2020).

Gupta, A., Gupta, S. & Katarya, R. InstaCovNet-19: A deep learning classification model for the detection of COVID-19 patients using chest X-ray. Appl. Soft Comput. 99, 106859 (2021).

Phan, A. V., Le Nguyen, M., Nguyen, Y. L. H., Bui, L. T. & Dgcnn A convolutional neural network over large-scale labeled graphs. Neural Netw. 108, 533–543 (2018).

Ma, B., Li, X., Xia, Y. & Zhang, Y. Autonomous deep learning: A genetic DCNN designer for image classification. Neurocomputing 379, 152–161 (2020).

Yang, H., Zhu, Y. & Liu, J. in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 11206–11215.

Luo, Z., Liu, L., Yin, J., Li, Y. & Wu, Z. Deep learning of graphs with Ngram convolutional neural networks. IEEE Trans. Knowl. Data Eng. 29, 2125–2139 (2017).

Zhang, T., Shan, H. R. & Little, M. A. Causal graphsage: A robust graph method for classification based on causal sampling. Pattern Recogn. 128, 108696 (2022).

Jeyaraj, R., Balasubramaniam, T., Balasubramaniam, A. & Paul, A. DeepWalk with reinforcement learning (DWRL) for node embedding. Expert Syst. Appl. 243, 122819 (2024).

Author information

Authors and Affiliations

Contributions

H .L: Complete model development and initial draft writing.P.L, Shaoyun Liu: Complete data collection and screening, as well as revise and correct the paper.B .Y: Responsible for experimental guidance and paper guidance.All authors have reviewed the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Electronic supplementary material

Below is the link to the electronic supplementary material.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Li, H., Liu, P., Liu, S. et al. Deep learning-assisted attribute prediction of chalcogenide glasses based on graph classification. Sci Rep 15, 19360 (2025). https://doi.org/10.1038/s41598-025-04391-9

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-025-04391-9