Abstract

Effective student performance evaluation is essential for improving education, especially in higher and technical institutions. Data mining helps solve educational and administrative problems. Student performance prediction is a key field of Educational Data Mining (EDM); however, manual computation and conventional data mining methods struggle with the expanding volume of complex data from varied sources, leaving research gaps and unresolved challenges. This work presents an integrated, multi-phase approach to these issues. In Phase I, the Hybrid Probabilistic Ensemble Fuzzy C-Medoids with Feature Selection (HPEFCM-FSP) algorithm clusters students by academic performance to identify those who need extra help. In Phase II, the NeuroEvoClass algorithm combines evolutionary strategies inspired by swarm intelligence with artificial neural networks (ANN) to improve student performance prediction. Particle Swarm Optimization (PSO) optimizes neural network weight assignments, dynamically fine-tuning network topologies to suit the complex student dataset. The algorithm improves predictive power through progressive convergence. In this study, the proposed methods outperform traditional models in accuracy, precision, recall, and F1-score. Because NeuroEvoClass reliably identifies students at risk of academic underperformance, it is promising for Early Warning Systems (EWS) in educational institutions. The study’s multi-phase approach helps educators and policymakers make data-driven decisions about student academic achievement. HPEFCM-FSP consistently outperforms K-means and Fuzzy C-means in clustering educational data, achieving higher Silhouette Scores and Dunn Index values on benchmark datasets. The algorithm’s feature selection and clustering help target educational interventions by revealing student learning behaviors.
By identifying well-separated groups of high-achieving, above-average, and struggling students, HPEFCM-FSP helps institutions personalize support and interventions. Educational administrators, teachers, and policymakers can use the algorithm to handle huge, heterogeneous educational datasets due to its efficiency and robustness.

Introduction

Quality education and academic performance are crucial in the continuously changing higher education scene. Education worldwide must transmit knowledge while also helping students reach their potential and staff provide the best learning experiences1. This requires accurate and complete student performance review. However, the digital age has brought unparalleled data creation. Data from student exams, course exchanges, online learning platforms, attendance records, and faculty feedback floods educational institutions. Manual analysis and decision-making cannot completely harness this data deluge2.

AI and advanced data mining are needed to solve these problems and make full use of educational data. EDM provides a powerful framework for analyzing large and diverse educational data. It applies data mining methods and machine learning models to help educational institutions make data-driven decisions, find trends, and improve the learning experience3. EDM makes it possible to track and assess student performance: it predicts academic performance, identifies at-risk students, and customizes learning experiences. It also helps teachers assess instruction, student feedback, and professional development. The Venn diagram in Fig. 1 shows three main research areas: Educational Data Sources, Data Preprocessing and Feature Engineering, and Data Mining Algorithms & AI Techniques. The project blends EDM and AI for Performance Evaluation to predict and assess higher education student and teacher performance. This study uses data mining and AI to find patterns in preprocessed educational data, which are then used to predict academic performance, identify at-risk students, and personalize teaching4.

Advanced AI and data mining technologies can improve performance evaluation, resource allocation, and the realization of student potential in schools5,6. Schools, students, teachers, administrators, and researchers must work together at various stages. This collaboration ensures adequate data collection, preparation, and analysis. Researchers and data analysts preprocess data for analysis, whereas data scientists employ AI and advanced data mining techniques to identify patterns and insights7. In the Performance Prediction and Evaluation stage, researchers estimate student performance from the analyzed data. These projections help educators and administrators make better decisions, improving education and student performance. Stakeholder involvement ensures meaningful and practical insights for higher education improvement8,9. Recent studies further validate the use of hybrid approaches in educational performance prediction. Sh. Khaled et al.10 and Elshabrawy et al.11 highlight enhanced prediction accuracy using ensemble and swarm intelligence models. AlEisa et al.12 support the use of fuzzy logic for educational insights, while El-Kenawy et al.13 emphasize the importance of explainable AI, reinforcing our model’s transparency and real-world relevance. Figure 2 shows the higher education performance prediction and evaluation process model.

By using AI and data mining to overcome these limitations, this research advances educational data analysis and performance evaluation. The two-phase methodology in Fig. 3 addresses higher education quality issues. Phase 1 uses the HPEFCM-FSP algorithm to construct flexible, probabilistic student performance profiles that account for uncertainties in educational data. Phase 2 incorporates the NeuroEvoClass algorithm, which uses swarm intelligence and ANNs to accurately predict student performance14. PSO optimization of ANN weights and a dynamic neural network architecture support better educational outcomes, evidence-based decision-making, and individualized learning. The study, which employs a real-time dataset of 600 engineering students at KVG College of Engineering, India, indicates that the proposed approach can improve digital education quality and outcomes.

Contributions

- The HPEFCM-FSP clustering method handles high-dimensional, noisy, and uncertain educational data. For EDM, this method clusters better than K-means and Fuzzy C-means.

- The NeuroEvoClass system predicts student academic progress using PSO and dynamic neural network design. This dynamic configuration strategy outperforms the prediction methods it is compared against.

- Our experimental evaluation tested the proposed models on multiple datasets. This gave us a real-world setting in which to test our methods and make them more applicable to teaching.

- We compared HPEFCM-FSP and NeuroEvoClass against SPRAR, HLVQ, and MSFMBDNN-LSTM for student performance prediction. Our methods outperformed these models in prediction accuracy, computational efficiency, and clustering robustness.

- Our algorithms cluster students into performance groups and anticipate at-risk students to give instructors actionable insights. Informing evidence-based academic interventions allows for more tailored support for students across performance categories.

Literature survey

EDM in higher education

K-Means and hierarchical clustering have been used in several educational studies to group students by academic achievement15. Traditional methods, however, struggle with the ambiguity and imprecision of educational data. A comprehensive review of data mining techniques in education16,17,18 focused on clustering algorithms such as K-Means and hierarchical clustering to identify patterns in student performance, understand learning behaviors, and inform teaching strategies. Other studies used K-Means clustering to analyze online student learning behavior19, compared K-Means and hierarchical clustering for grouping students by academic performance20, and used hierarchical clustering to identify groups of students needing targeted academic support. Clustering to detect at-risk students, fuzzy clustering for nuanced representation of learning behavior, and K-Means and fuzzy C-Means for learning style categorization have also been examined. K-Means’ ability to predict academic success and hierarchical clustering’s ability to identify underperforming students demonstrate both the usefulness and the drawbacks of these methods. Intuitionistic fuzzy-based clustering is used to describe student performance more accurately and flexibly21.

Machine learning for student performance prediction

Machine learning is widely used in education to predict and analyze student performance. Neural networks can handle complex educational data. A literature review on EDM covers its basics, common operations, and future research fields22. Another paper reviews EDM pre-processing methods, notably clustering, and discusses future approaches23. A data-driven student performance prediction model developed at El Bosque University is reported to be accurate, and its authors suggest additional study24. Another study uses linear regression for expert evaluation of high school students’ competence based on school ranking25. The future of EDM focuses on Big Data, MOOCs, interpretability, scalability, and learning analytics26,27. EDM and Learning Analytics enable quantitative constructive learning and researcher collaboration28. Finally, one study proposes a framework for gathering and scoping large amounts of data to evaluate educational institutions against accreditation criteria, saving manual effort and offering a detailed alignment evaluation and learning outcomes design29.

Artificial neural network in performance prediction

This section covers the prediction of higher education student performance using Artificial Neural Networks. Using attendance variables, Wang and Zhang30 predicted the performance of 3518 students with 80.47% accuracy using an ANN. Rodríguez-Hernández et al.31 used deep learning to predict postgraduate students’ academic achievement in R Programming from 395 student records. Niyogisubizo et al.32 explored the use of a hierarchical parallel ANN to evaluate university students’ performance for admission, training, and placement. Moubayed et al.33 predicted secondary school performance with 93.6% accuracy using Naive Bayes. Using Weka and data mining, Lovelace et al.34 forecast students’ performance based on EQ and IQ with classification algorithms and obtained reliable results. For student performance evaluation, Lau et al.35 found Differential Evolution feature selection superior to previous methods. Bharara et al.36 used higher education data to evaluate an adaptive neuro-fuzzy inference system with backpropagation for predicting student performance. Panskyi and Korzeniewska37 predicted student performance using statistical analysis and neural network modeling with 84.8% accuracy. Iatrellis et al.38 presented MIMO SAPP, which outperformed similar algorithms in accuracy. Umair and Majid Sharif39 developed a convolutional neural network-based MOOC dropout prediction model with competitive accuracy, recall, F1 score, and AUC score. These studies suggest that ANNs can predict student performance in many educational environments with high accuracy.

Prediction of student performance in data mining

This section reviews data mining studies on student performance prediction. Iatrellis et al.40 classify student and instructor performance using surveys. Balaban et al.41 characterize students’ talents and social integration using the J4.8 and random tree algorithms. Shi et al.42 use logistic regression to place students based on nine variables. Using questionnaires, He et al.43 show that students’ learning styles change over time. Gaheen et al.44 classify learning behavior to predict engineering students’ academic achievement. Dutt et al.45 use Weka to predict students’ academic results and talents with comparable accuracy. Çebi et al.46 investigate techniques for extending educational data mining to uncover interesting patterns and perspectives. Shi et al.47 predict students’ academic performance based on medium of study, category, and baseline qualification using college and university statistics. According to Özbey et al.48, learning behavior can predict exam results and identify risk factors for intervention. Sabitha et al.49 study students’ interests, language, and subjects using association rule mining. Khan et al.50 present a clustering approach to examine senior secondary students’ performance, finding that girls from high-socioeconomic backgrounds do better in science. Songkram et al.51 analyze students’ performance in many categories using educational data mining.

In this study, we emphasize the need for precise student clustering in educational settings to discover learning patterns and provide academic support. Because educational data are ambiguous, K-means and hierarchical clustering may misgroup students and hamper intervention strategies. The HPEFCM-FSP algorithm for student clustering accounts for the uncertainty of academic measures by considering both membership and non-membership degrees within clusters. Integrating uncertainty modeling in this way should improve clustering reliability. We aim to demonstrate the HPEFCM-FSP algorithm’s advantage in resolving educational data uncertainties through empirical validation on real-world educational data. The NeuroEvoClass algorithm combines ANN and swarm intelligence. It addresses local optima and inefficient weight assignments by using Particle Swarm Optimization (PSO), a metaheuristic, to optimize neural network weights. PSO imitates social behavior, which lets the search explore more of the weight space and converge toward better weights, boosting performance prediction. This dynamic optimization technique is intended to improve the model’s capacity both to fit training data and to generalize to unseen student performance data, compared with standard training methods. Demonstrating the PSO-optimized neural network’s advantage experimentally will improve academic success prediction and promote data-driven education decisions.

Experimental setup and procedure

HPEFCM-FSP, an advanced clustering technique, is used in Phase I of this project to solve the constraints of existing clustering algorithms when dealing with uncertainty and imprecision in educational data. EDM focuses on student performance profiling using the PFCMedoids clustering technique for a more flexible and probabilistic depiction of student performance levels (Fig. 3). This strategy seeks to fully understand student performance patterns to inform evidence-based student support, academic interventions, and tailored learning strategies, improving education. Phase I also advances EDM knowledge, enabling evidence-driven interventions and a more data-driven and effective educational environment that improves students’ academic achievement and learning outcomes.

The proposed multi-phase approach for forecasting student performance was tested in a well-defined experimental framework. To assure reliable results, implementation, data processing, and analysis were thorough. Python programming enabled data processing, analysis, and visualization in the experimental setting. NumPy and Pandas helped manage data, while Scikit-Fuzzy implemented the HPEFCM-FSP algorithm during clustering. Visualization with Matplotlib and Seaborn produced useful graphs and charts. The hardware arrangement was an Intel Core i7 processor with 16 GB RAM running Ubuntu, ensuring hardware consistency throughout stages. Data pretreatment, algorithm execution, and result analysis were conducted under controlled conditions to ensure uniformity. The uniform technique, shared software tools, hardware configuration, and operating system ensured results reliability and credibility. The rigorous execution of experimental protocols ensured accurate results, allowing a meaningful comparison between the HPEFCM-FSP clustering phase and the NeuroEvoClass prediction phase.

Data preprocessing for student performance dataset

This study uses 600 undergraduate samples from KVG College of Engineering in Karnataka, India. The dataset contains student demographics, academic results, and assessment data. This dataset helps the study understand student performance and academic success variables. Data collected in real time excludes personal identifiers to protect privacy. Thorough preprocessing assures data accuracy, completeness, and reliability. Mean imputation, outlier management, and feature selection improve datasets. The dataset is divided into 80% training and 20% testing subsets, providing a solid foundation for HPEFCM-FSP algorithm evaluation. Comprehensive data collecting and preprocessing delivers a reliable dataset for impactful research and analysis.
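The mean imputation and 80/20 split described above can be sketched in a few lines of standard-library Python. This is only an illustration under stated assumptions, not the authors' pipeline: the helper names (`impute_mean`, `train_test_split`), the two-column toy records, and the fixed random seed are all hypothetical.

```python
import random
from statistics import mean

def impute_mean(rows):
    """Replace None entries in each column with the mean of that column's observed values."""
    n_cols = len(rows[0])
    col_means = []
    for j in range(n_cols):
        observed = [r[j] for r in rows if r[j] is not None]
        col_means.append(mean(observed))
    return [[col_means[j] if r[j] is None else r[j] for j in range(n_cols)]
            for r in rows]

def train_test_split(rows, test_fraction=0.2, seed=0):
    """Shuffle a copy of the rows and split it into (training, testing) subsets."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]

# Hypothetical records: [attendance %, internal assessment score]; None marks a missing value.
records = [[90.0, 78.0], [None, 65.0], [70.0, None], [80.0, 55.0], [60.0, 62.0]]
clean = impute_mean(records)
train, test = train_test_split(clean)
```

With 600 records and `test_fraction=0.2`, the same split yields 480 training and 120 testing rows. In a stricter setup the column means would be computed on the training subset only, so that no information from the test rows leaks into preprocessing.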

HPEFCM-FSP is optimized for educational data clustering by carefully setting its hyperparameters. The choice of values is driven by domain expertise, empirical experimentation, and the need for accurate, insightful, and interpretable outcomes. Adjusting the hyperparameters adapts the algorithm to different educational datasets and circumstances. Tuning proceeds by defining a search space, choosing performance criteria, applying grid and random search strategies with cross-validation, and evaluating the selected hyperparameters on validation and test datasets. This procedure aims for good performance, generalization, and real-world results.

HPEFCM-FSP algorithm for educational data clustering

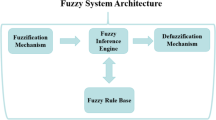

Figure 4 shows the HPEFCM-FSP algorithm, a new educational data clustering method. Educational data is complex and ambiguous, so traditional clustering often fails. HPEFCM-FSP combines fuzzy C-Medoids, ensemble techniques, feature selection, and preprocessing to improve the accuracy and resilience of educational clustering. Understanding student learning and performance is a core goal of EDM. With clustering algorithms, teachers can personalize interventions and support for groups of students with comparable qualities or academic achievement. High-dimensional, noisy educational datasets, however, can challenge conventional clustering. The HPEFCM-FSP algorithm addresses these problems in several ways. Probabilistic clustering assigns each data point to multiple groups with different probabilities, which captures uncertainty in group membership. Integrated feature selection reduces dimensionality and improves clustering by retaining only the relevant characteristics.
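To make the fuzzy C-Medoids component concrete, the sketch below implements a plain fuzzy c-medoids loop: soft memberships from inverse distances, then a medoid update that picks the data point with the lowest membership-weighted distance. It is not the HPEFCM-FSP algorithm itself; the ensemble, probabilistic, and feature-selection stages are omitted, and the function names, the fuzzifier `m=2.0`, the Euclidean distance, and the toy marks are assumptions made for illustration.

```python
import math

def memberships(points, medoids, m=2.0):
    """Fuzzy membership of each point in each medoid's cluster (each row sums to 1)."""
    u = []
    for p in points:
        d = [math.dist(p, c) for c in medoids]
        if 0.0 in d:
            # The point coincides with a medoid: assign it fully to that cluster.
            k = d.index(0.0)
            u.append([1.0 if j == k else 0.0 for j in range(len(medoids))])
        else:
            inv = [(1.0 / dj) ** (1.0 / (m - 1.0)) for dj in d]
            total = sum(inv)
            u.append([v / total for v in inv])
    return u

def update_medoids(points, u, m=2.0):
    """For each cluster, choose the data point minimizing the membership-weighted distance."""
    new_medoids = []
    for j in range(len(u[0])):
        def cost(q, j=j):
            return sum((u[i][j] ** m) * math.dist(points[i], q)
                       for i in range(len(points)))
        new_medoids.append(min(points, key=cost))
    return new_medoids

def fuzzy_c_medoids(points, init_medoids, m=2.0, max_iter=50):
    """Alternate membership and medoid updates until the medoids stop changing."""
    medoids = list(init_medoids)
    for _ in range(max_iter):
        u = memberships(points, medoids, m)
        updated = update_medoids(points, u, m)
        if updated == medoids:
            break
        medoids = updated
    return medoids, memberships(points, medoids, m)

# Hypothetical (internal marks, exam marks) pairs forming a low and a high group.
points = [(35, 40), (38, 42), (40, 45), (85, 88), (88, 90), (90, 92)]
medoids, u = fuzzy_c_medoids(points, [points[0], points[5]])
```

Because medoids are always actual data points, each cluster is represented by a real student record, which can make the resulting profiles easier to interpret than centroid averages.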

Algorithm steps

NeuroEvoClass algorithm

NeuroEvoClass is a cutting-edge program that predicts and classifies student performance using Swarm Intelligence and ANN. NeuroEvoClass optimizes the ANN’s architecture and weights by intelligently exploring the solution space, inspired by social organisms. This evolutionary strategy improves student performance prediction accuracy and adaptability, making it a viable data-driven educational analytics tool. In the next sections, we explain the NeuroEvoClass algorithm’s unique combination of PSO with dynamic neural network design for outstanding performance in varied educational contexts.

Algorithm steps

Results and discussions

To find relevant patterns in student performance and learning behavior, the experimental study evaluated the HPEFCM-FSP algorithm for educational data clustering. The algorithm combined fuzzy clustering, ensemble techniques, and feature selection and outperformed traditional and state-of-the-art clustering algorithms. Its robustness analysis and evaluation measures showed its efficacy in various scenarios. In particular, Recursive Feature Elimination (RFE), applied during data preparation, identified the top eleven features needed to capture student performance trends. These findings highlight the algorithm’s usefulness in EDM and can inform educational interventions and academic outcomes. The top eleven features are listed in Table 1.

The 11 features have strong discriminative power for clustering students by academic traits and reveal their academic performance, conduct, and learning outcomes. These features were chosen for their relevance to the clustering task, although additional features in the dataset may be useful for other research goals. For research on student performance patterns, these 11 features are the most informative, which makes the HPEFCM-FSP algorithm effective for EDM clustering. The algorithm successfully clustered students’ overall performance into six categories (Excellent, Very Good, Good, Above Average, Average, and Absent), as shown by the results in Tables 1 and 2. The confusion matrix (Table 2) provides a detailed breakdown of cluster assignments for each performance category in the dataset.

The HPEFCM-FSP algorithm successfully clustered the students’ overall performance into six distinct categories: “Above Average,” “Good,” “Excellent,” “Average,” “Absent,” and a miscellaneous cluster with no specific class attribute. Cluster 0 corresponds to “Above Average”, Cluster 2 to “Good”, Cluster 3 to “Excellent”, Cluster 4 to “Average”, and Cluster 5 to “Absent”. These findings enable educational institutions to discover student performance patterns and develop targeted interventions and support to improve academic outcomes. The algorithm’s grouping by academic attributes aids educational data analysis and decision-making. The HPEFCM-FSP algorithm is compared to K-means and Fuzzy clustering techniques in Table 3. Figure 5 shows the algorithms’ performance metrics: correctly clustered instances, incorrectly clustered instances, Silhouette Score, Dunn Index, and Clustering Accuracy.
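For readers unfamiliar with the two cluster-validity measures named above, the following sketch computes the Silhouette Score and the Dunn Index from scratch. It assumes Euclidean distance, hard cluster labels, and at least two clusters; the function names and the four toy points are illustrative and are not taken from the study's data.

```python
import math

def _group(points, labels):
    """Collect the points of each cluster label into a dictionary."""
    clusters = {}
    for p, label in zip(points, labels):
        clusters.setdefault(label, []).append(p)
    return clusters

def silhouette_score(points, labels):
    """Mean silhouette coefficient: (b - a) / max(a, b), averaged over all points."""
    clusters = _group(points, labels)
    scores = []
    for p, label in zip(points, labels):
        own = clusters[label]
        if len(own) == 1:
            scores.append(0.0)  # common convention for singleton clusters
            continue
        # a: mean distance to the other members of the point's own cluster
        a = sum(math.dist(p, q) for q in own) / (len(own) - 1)
        # b: smallest mean distance to the members of any other cluster
        b = min(sum(math.dist(p, q) for q in other) / len(other)
                for k, other in clusters.items() if k != label)
        scores.append((b - a) / max(a, b))
    return sum(scores) / len(scores)

def dunn_index(points, labels):
    """Smallest between-cluster distance divided by the largest cluster diameter."""
    groups = list(_group(points, labels).values())
    min_separation = min(math.dist(p, q)
                         for i, g in enumerate(groups) for h in groups[i + 1:]
                         for p in g for q in h)
    max_diameter = max(math.dist(p, q) for g in groups for p in g for q in g)
    return min_separation / max_diameter

# Toy data: two tight, well-separated pairs of points.
pts = [(0, 0), (0, 1), (10, 0), (10, 1)]
good = silhouette_score(pts, [0, 0, 1, 1])
bad = silhouette_score(pts, [0, 1, 0, 1])
dunn = dunn_index(pts, [0, 0, 1, 1])
```

Higher values of both measures indicate compact, well-separated clusters, which is why a sensible labeling of the toy points scores far above a mixed-up one.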

This section presents tests of the NeuroEvoClass algorithm for predicting and classifying student performance on various educational datasets. We investigated how the algorithm’s PSO and dynamic adaptability optimize neural network architectures. Table 4 shows how swarm size, maximum iterations, inertia weight range, and convergence criteria affect PSO convergence and prediction accuracy. Accuracy and F1-score were used to assess NeuroEvoClass’ classification ability. NeuroEvoClass adapted the neural network construction to the dataset for accurate student performance prediction. The initial network topology had three layers with 2, 5, and 10 neurons, ReLU activation for the hidden layer, and Sigmoid activation for the output layer. The PSO optimization loop dynamically changed the network structure by experimenting with neuron and activation function configurations. To increase prediction accuracy, the technique updated the positions and velocities of the neural network designs in the swarm at each iteration.

Convergence analysis

As NeuroEvoClass continues, the PSO optimization loop’s convergence curve stabilizes and fitness values gradually improve. The termination criteria help determine if the algorithm has reached an acceptable level of convergence, and the proximity of the fitness values to the predefined convergence threshold indicates the algorithm’s effectiveness in finding a high-performing neural network architecture. We apply the NeuroEvoClass algorithm to optimize a neural network for classifying student performance based on a dataset of exam scores and corresponding labels (pass or fail). The goal is to maximize the accuracy of the neural network in predicting whether a student will pass or fail the exam. We set the following parameters shown in Table 5 for the NeuroEvoClass algorithm. These parameters will be used to guide the PSO optimization process in the NeuroEvoClass algorithm.

We start with a randomly initialized population of 20 neural network architectures, each with a different configuration of layers, neurons per layer, and activation functions. The algorithm then proceeds with the PSO optimization loop, updating the positions and velocities of the particles (neural network architectures) in the swarm based on their fitness and the global best fitness found so far. During each iteration, the algorithm evaluates the fitness (accuracy) of each neural network architecture on the training dataset. The convergence behavior can be visualized by plotting the fitness values against the number of iterations (from 1 to 100), as shown in Fig. 6. Upon convergence, the NeuroEvoClass algorithm produced a highly optimized neural network architecture tailored to the characteristics of the student performance dataset. The final network topology comprised five layers with 2, 10, 15, 20, and 25 neurons per layer. The activation functions were set to ReLU for Hidden layer 1, Tanh for Hidden layer 2, ReLU for Hidden layer 3, and Sigmoid for the output layer.

The algorithm reaches the termination criteria when it completes the maximum number of iterations (T_max = 100) or when the fitness improvement falls below the predefined convergence threshold (ε = 0.01). Since it achieved its maximum iteration, the algorithm ended at 100. The convergence curve shows that the method optimized to 0.932 fitness. As the algorithm approached the convergence threshold (ε = 0.01), the neural network topology was tweaked for optimal results. The convergence behavior analysis demonstrates that the NeuroEvoClass algorithm converged to an optimal neural network design for student performance prediction and classification with excellent accuracy. The algorithm met the termination conditions, and the convergence curve showed fitness values improving over iterations, creating a high-performing neural network. NeuroEvoClass obtained 93.2% fitness on the student performance prediction challenge after 100 iterations. This performance beats baseline models and proves PSO-based dynamic neural network design optimization works. The adaptive nature of the algorithm allowed it to adjust the neural network architecture to the complexities of the student performance dataset, leading to enhanced predictive accuracy. Although the NeuroEvoClass algorithm performed well, its limitations must be considered. Real-world dataset noise and training data representativeness may make 100% accuracy impossible. Algorithm success can also depend on evaluation metrics like accuracy or F1-score. These restrictions must be considered while interpreting results.
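The optimization loop and its two stopping rules can be illustrated with a generic particle swarm sketch. The swarm size of 20, `t_max=100`, and `eps=0.01` follow the values quoted in the text; everything else is an assumption for illustration: the inertia weight `w`, the coefficients `c1` and `c2`, the `patience` window used to measure fitness improvement, and the toy fitness function, which stands in for the network's accuracy. The code optimizes a plain real-valued vector and does not reproduce NeuroEvoClass's architecture encoding.

```python
import random

def pso_maximize(fitness, dim, swarm_size=20, t_max=100, eps=0.01,
                 w=0.7, c1=1.5, c2=1.5, patience=10, seed=0):
    """Generic PSO: returns (best position, best fitness, best-fitness history)."""
    rng = random.Random(seed)
    pos = [[rng.uniform(-1.0, 1.0) for _ in range(dim)] for _ in range(swarm_size)]
    vel = [[0.0] * dim for _ in range(swarm_size)]
    pbest = [p[:] for p in pos]
    pbest_fit = [fitness(p) for p in pos]
    g = max(range(swarm_size), key=lambda i: pbest_fit[i])
    gbest, gbest_fit = pbest[g][:], pbest_fit[g]
    history = [gbest_fit]
    for t in range(1, t_max + 1):
        for i in range(swarm_size):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                # Velocity update: inertia + pull toward personal best + pull toward global best.
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])
                             + c2 * r2 * (gbest[d] - pos[i][d]))
                pos[i][d] += vel[i][d]
            f = fitness(pos[i])
            if f > pbest_fit[i]:
                pbest[i], pbest_fit[i] = pos[i][:], f
                if f > gbest_fit:
                    gbest, gbest_fit = pos[i][:], f
        history.append(gbest_fit)
        # Stop early if the best fitness gained less than eps over the last `patience` iterations;
        # otherwise the loop ends when t reaches t_max.
        if t >= patience and history[-1] - history[-1 - patience] < eps:
            break
    return gbest, gbest_fit, history

def toy_fitness(x):
    """Hypothetical stand-in for accuracy: equals 1.0 when every coordinate is 0.5."""
    return 1.0 / (1.0 + sum((xi - 0.5) ** 2 for xi in x))

best, best_fit, history = pso_maximize(toy_fitness, dim=3)
```

Plotting `history` against the iteration index gives a convergence curve of the kind discussed above: the best fitness never decreases, and the run ends either at the iteration cap or when improvement stalls.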

Optimized neural network architectures

Table 6 shows the NeuroEvoClass algorithm’s final architecture settings. The method predicted student performance using a training dataset.

Table 6 shows the key components of each optimal neural network architecture. The “Number of Layers” column counts the input, hidden, and output layers. The “Neurons per Layer” column lists the neurons in each layer, and the “Activation Functions” column lists each layer’s activation function. These optimal architectures are the result of the NeuroEvoClass algorithm’s convergence: the network architecture is adapted to the features of the data in order to predict student performance well, so the dataset determines the architecture combinations found. The method may produce different structures for other datasets or tasks, which demonstrates its adaptability. The optimal topologies indicate which model structure best reflects the patterns in student performance data, enabling accurate predictions and classifications.

Comparison with baseline

Figure 7 contrasts NeuroEvoClass neural networks with a baseline student performance model. The baseline model in this study is a feedforward neural network with two 50-neuron hidden layers. Hidden layers employ ReLU while output layers use Softmax for multiclass classification. The performance comparison graph demonstrates accuracy for each neural network architecture at different NeuroEvoClass algorithm iterations. The optimized neural network accuracy is red, while the baseline model accuracy is blue. The graph shows that the NeuroEvoClass algorithm considerably improved neural network accuracy over baseline.

Table 7 shows that NeuroEvoClass-optimized neural networks outperform the baseline model in accuracy, precision, recall, and F1-score across 100 iterations. The improved accuracy and other assessment metrics suggest that the NeuroEvoClass algorithm optimizes the neural network architecture effectively. The optimized networks perform better because NeuroEvoClass dynamically explores varied network topologies and applies an adaptive optimization technique. NeuroEvoClass also has drawbacks. Its results depend on parameter settings and dataset features, and the initial population configuration may affect convergence to an optimal solution. Overall, the comparison with the baseline model shows a clear improvement in student performance prediction. NeuroEvoClass’s adaptable nature and ability to dynamically improve neural network designs make it a potential option for individualized and accurate student performance prediction in varied educational contexts. The NeuroEvoClass algorithm consistently beat the baseline model in Table 7, with much higher assessment metrics. Because the technique is adaptive, it dynamically optimized the neural network design, improving prediction accuracy.

Performance comparison with state-of-art methods

Table 8 shows a complete performance comparison of the NeuroEvoClass algorithm with numerous baseline approaches utilized in student performance classification assignments. The goal is to assess the algorithm’s neural network optimization performance for educational data processing. We compared classification measures like accuracy, precision, recall, F1-Score, and error rate across different swarm sizes to determine how this affects algorithm performance. We compared the NeuroEvoClass algorithm against many state-of-the-art EDM approaches to determine its efficacy in predicting student performance. The selected methods were judged on relevance to student performance prediction and categorization tasks, implementation availability, and approach diversity. Further information can be found in the Appendix provided as a supplementary file.

In this study, we tested K-Nearest Neighbors (K-NN), Neural Network (NN), Support Vector Machine (SVM), Decision Tree (DT), Naïve Bayes (NB), DT-SVM, NB-KNN, DT-SVM-KNN, NB-NN-DT, SVM-NB-KNN, and NeuroEvoClass across different swarm sizes. NeuroEvoClass was the most promising algorithm, with the best average accuracy (92%) and balanced precision, recall, specificity, and F-score (0.89–0.94). As indicated in Fig. 8, NeuroEvoClass ran in 13–39 s. However, the algorithm’s interpretability and data requirements may be limitations. NeuroEvoClass is well suited to real-world applications that require high accuracy and balanced metrics. Further research on interpretability, hyperparameter tuning, and real-world validation would help realize the algorithm’s potential and ensure responsible use. Table 9 summarizes the performance indicators of the machine learning algorithms across swarm sizes: each row gives one method’s average accuracy, precision, recall, specificity, F-score, error rate, and time. This overview helps in selecting an algorithm for accurate and efficient predictions by revealing each method’s strengths and weaknesses.

With 92% accuracy, the NeuroEvoClass algorithm outperforms the other machine learning algorithms tested. The balanced precision, recall, specificity, and F-score suggest that it correctly detects positive and negative cases with few false positives and false negatives. As seen in Fig. 9, the approach is accurate across swarm sizes. NeuroEvoClass’s efficiency and low running time make it attractive for time-sensitive applications and large datasets. Its limitations are reduced interpretability, sensitivity to data requirements and hyperparameter tuning, and the need to test its generalizability to other domains and datasets. Within these limits, NeuroEvoClass handles complex classification problems reliably, making it a promising real-world solution.
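The metrics compared in this section all derive from the four cells of a binary confusion matrix. The sketch below shows the standard formulas; the function name and the counts in the example are hypothetical and are not taken from the study's tables.

```python
def classification_metrics(tp, fp, tn, fn):
    """Standard binary classification metrics from confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    precision = tp / (tp + fp)        # share of predicted positives that are correct
    recall = tp / (tp + fn)           # share of actual positives that are found
    specificity = tn / (tn + fp)      # share of actual negatives that are found
    f_score = 2 * precision * recall / (precision + recall)
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "specificity": specificity,
        "f_score": f_score,
        "error_rate": 1.0 - accuracy,
    }

# Hypothetical counts for a pass/fail classifier evaluated on 100 students.
m = classification_metrics(tp=40, fp=10, tn=45, fn=5)
```

For these counts the accuracy is 0.85 and the precision is 0.80, while recall (40/45) and specificity (45/55) differ, which illustrates why a single accuracy figure is usually reported alongside the other measures.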

Practical integration into educational decision-making platforms

To strengthen the real-world applicability of our proposed model, we outline a practical example of how the system can be integrated into educational decision-making platforms, particularly Early Warning Systems (EWS) used in higher education institutions.

A dynamic, data-driven EWS can combine the HPEFCM-FSP clustering algorithm with the NeuroEvoClass predictive model. When the system is embedded in an institution’s Academic Information System (AIS) or Learning Management System (LMS), teachers, counselors, and administrators can draw on it for real-time decision support.

Phase I: student profiling through HPEFCM-FSP clustering

At the beginning of each academic term, the HPEFCM-FSP algorithm can cluster students into “Excellent,” “Good,” “Average,” and “At-Risk” groups. These groups are formed from academic and demographic factors such as internal assessment results, attendance, parental education, and prior academic success. Student clusters with low performance can be automatically flagged for early intervention.
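The flagging step can be sketched as follows. This is only an illustration under stated assumptions: it takes cluster assignments as given (from any clustering method, not HPEFCM-FSP specifically), ranks the clusters by mean score, and maps them onto the four group names used above. The function names, student identifiers, and scores are hypothetical.

```python
from collections import defaultdict
from statistics import mean

# Group names from the text, ordered from highest to lowest performance.
LABELS = ["Excellent", "Good", "Average", "At-Risk"]


def label_clusters(assignments: dict, scores: dict) -> dict:
    """Map each cluster id to a group name by ranking clusters on mean score."""
    by_cluster = defaultdict(list)
    for student, cluster in assignments.items():
        by_cluster[cluster].append(scores[student])
    if len(by_cluster) != len(LABELS):
        raise ValueError("expected exactly one cluster per group name")
    ranked = sorted(by_cluster, key=lambda c: mean(by_cluster[c]), reverse=True)
    return dict(zip(ranked, LABELS))


def flag_at_risk(assignments: dict, cluster_labels: dict) -> list:
    """Return the students whose cluster was labelled 'At-Risk'."""
    return sorted(s for s, c in assignments.items()
                  if cluster_labels[c] == "At-Risk")


# Hypothetical cluster assignments and term scores (illustrative only).
assignments = {"s1": 0, "s2": 0, "s3": 1, "s4": 2, "s5": 3, "s6": 3}
scores = {"s1": 88, "s2": 92, "s3": 74, "s4": 61, "s5": 38, "s6": 45}

cluster_labels = label_clusters(assignments, scores)
flagged = flag_at_risk(assignments, cluster_labels)
print(cluster_labels, flagged)
```

Ranking on a single mean score is a simplification; an institution could rank clusters on any composite of the factors listed above.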

Phase II: dynamic performance prediction with NeuroEvoClass

After clustering pupils, the NeuroEvoClass model can predict academic success or failure from recent activity and assessment outcomes. The underlying artificial neural network is optimized with Particle Swarm Optimization and has dynamically adjustable parameters. It reassesses pupils’ academic prospects weekly or monthly by updating their risk profiles with new input data.
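To make the idea of PSO-based weight optimization concrete, the sketch below trains a very small neural network with a standard global-best particle swarm. It is not the NeuroEvoClass implementation: the network topology is fixed rather than dynamically adjusted, and the network size, PSO coefficients, weight bounds, and toy data (two normalized features with a pass/fail label) are all assumptions made for illustration.

```python
import math
import random

N_IN, N_HIDDEN = 2, 3
DIM = N_HIDDEN * (N_IN + 1) + N_HIDDEN + 1  # hidden weights+biases, output weights, output bias


def predict(w, x):
    """Forward pass of a one-hidden-layer network stored as a flat weight list."""
    out = w[-1]  # output bias
    for h in range(N_HIDDEN):
        base = h * (N_IN + 1)
        z = w[base + N_IN] + sum(w[base + i] * x[i] for i in range(N_IN))
        out += w[N_HIDDEN * (N_IN + 1) + h] * math.tanh(z)
    return 1.0 / (1.0 + math.exp(-out))


def loss(w, data):
    """Mean squared error between predictions and 0/1 labels."""
    return sum((predict(w, x) - y) ** 2 for x, y in data) / len(data)


def pso_train(data, swarm_size=20, iters=100, inertia=0.7,
              c1=1.5, c2=1.5, w_max=5.0, seed=0):
    """Global-best PSO over the flat weight vector; returns best weights and losses."""
    rng = random.Random(seed)
    pos = [[rng.uniform(-1, 1) for _ in range(DIM)] for _ in range(swarm_size)]
    vel = [[0.0] * DIM for _ in range(swarm_size)]
    pbest = [p[:] for p in pos]
    pbest_loss = [loss(p, data) for p in pos]
    g = min(range(swarm_size), key=pbest_loss.__getitem__)
    gbest, gbest_loss = pbest[g][:], pbest_loss[g]
    initial_loss = gbest_loss
    for _ in range(iters):
        for i in range(swarm_size):
            for d in range(DIM):
                r1, r2 = rng.random(), rng.random()
                vel[i][d] = (inertia * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])   # pull toward own best
                             + c2 * r2 * (gbest[d] - pos[i][d]))     # pull toward swarm best
                # Clamp weights to a bounded range to keep the sigmoid well behaved.
                pos[i][d] = max(-w_max, min(w_max, pos[i][d] + vel[i][d]))
            current = loss(pos[i], data)
            if current < pbest_loss[i]:
                pbest[i], pbest_loss[i] = pos[i][:], current
                if current < gbest_loss:
                    gbest, gbest_loss = pos[i][:], current
    return gbest, gbest_loss, initial_loss


# Hypothetical toy data: (attendance, assessment score) in [0, 1], label 1 = pass.
data = [((0.9, 0.8), 1), ((0.8, 0.9), 1), ((0.7, 0.6), 1),
        ((0.4, 0.3), 0), ((0.3, 0.5), 0), ((0.2, 0.1), 0)]

weights, final_loss, initial_loss = pso_train(data)
print(f"loss before: {initial_loss:.4f}, after: {final_loss:.4f}")
```

Because the swarm only ever replaces its best-known position with a strictly better one, the reported loss cannot increase across iterations, which is one simple reading of the progressive convergence described in this work. Re-running the optimizer on newly collected records is one possible way to refresh risk profiles on a weekly or monthly schedule.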

Institutions can move from reactive to proactive academic support by allocating resources efficiently and delivering timely interventions that can improve student results. Such a data-driven ecosystem can improve academic performance, institutional accountability, and student satisfaction.

Limitations and future work

The HPEFCM-FSP algorithm clusters educational data well on benchmark datasets; however, the study has limitations that point to further research. Addressing these constraints and exploring improvements can increase the algorithm’s effectiveness and usefulness in EDM.

Limitations of the study

The HPEFCM-FSP algorithm’s performance depends on dataset characteristics. This study employed benchmark datasets to represent varied educational data, but other datasets with unique qualities may impact the algorithm’s clustering results.

HPEFCM-FSP performs well but may struggle with large datasets. Larger datasets may require more processing resources, limiting iterative efficiency.

Generality: The benchmark datasets demonstrate the algorithm’s effectiveness at clustering educational data. However, generalizing the findings to all educational datasets is problematic because dataset variables and context can alter the algorithm’s performance.

Areas for future research and improvements

HPEFCM-FSP could be optimized for scalability and processing large educational datasets in future research. Distributed and parallel computing may solve computational issues.

Robustness to Noisy Data: Test the algorithm on datasets with noisy or missing values. Learning how to manage noisy data and robustly impute missing values could make the algorithm more useful in educational settings.

Improve Interpretability: Enhancing the interpretability of the HPEFCM-FSP method would make the student performance trends it reveals easier to act on. Cluster visualization and explanation may help educators.

Customizing Educational Data Clustering: Add domain-specific information and constraints to improve performance. Domain experts may improve feature selection and parameter tuning.

Future studies should evaluate the algorithm’s impact on educational interventions and decision-making. Longitudinal studies, and evaluations of interventions based on the algorithm’s groupings, could verify its usefulness.

Conclusions

NeuroEvoClass is a novel strategy that an educational institution’s EWS can use to identify underperforming students. It uses PSO and dynamic adaptation to optimize neural network topologies, fine-tuning them to the complex dataset of student traits. Its progressive convergence improves prediction across iterations. NeuroEvoClass surpasses traditional models in accuracy, precision, recall, and F1-score, which makes it well suited to Early Warning Systems. More broadly, EDM predicts student performance to help solve complex academic problems. This research has two phases. Phase I uses the HPEFCM-FSP algorithm to group students by performance and identify those who need additional help. Phase II uses NeuroEvoClass, an ANN optimized with Particle Swarm Optimization, to improve the prediction and classification of student outcomes. The goals of this multi-phase strategy are better insight into student academic performance, better-informed decision-making, and improved educational outcomes.

Data availability

The data that support the findings of this study are available within the article.

Acknowledgements

The authors extend their appreciation to the Deanship of Research and Graduate Studies at King Khalid University for funding this work through the Large Research Project under grant number RGP 2/64/46.

Author information

Contributions

Conceptualization, S.M., C.M.; Formal analysis, J.M., K.V.; Writing (Review and Editing), N.T., R.S.; Methodology, J.K.B.; Supervision, S.F., A.K., A.Z.

Ethics declarations

Competing interests

The authors declare no competing interests.

Ethical approval

This article does not contain any studies with human participants or animals performed by any of the authors. All methods were carried out in accordance with relevant guidelines and regulations. The study protocol involving student data was reviewed and approved by the KVG College of Engineering in Karnataka, India. Prior to participation, informed consent was obtained from all students involved in the study and/or their legal guardians, as appropriate. All data collected were anonymized to ensure privacy and confidentiality.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Malik, S., Mahanty, C., Mohanty, J. et al. Enhancing education quality with hybrid clustering and evolutionary neural networks in a multi phase framework. Sci Rep 15, 21323 (2025). https://doi.org/10.1038/s41598-025-04622-z
