Abstract

Accurate forecasting of agricultural commodity prices is essential for market planning and policy formulation, especially in agriculture-dependent economies like India. Price volatility, driven by factors such as weather variability and market demand fluctuations, poses significant forecasting challenges. This study evaluates the performance of traditional stochastic models, machine learning techniques, and deep learning approaches in forecasting the prices of 23 commodities using daily wholesale price data from January 2010 to June 2024. Models assessed include Autoregressive Integrated Moving Average, Support Vector Regression, Extreme Gradient Boosting, Multilayer Perceptron, Recurrent Neural Networks, Long Short-Term Memory Networks, Gated Recurrent Units, and Echo State Networks. Results show that deep learning models, particularly Long Short-Term Memory and Gated Recurrent Units, outperform others in capturing complex temporal patterns, achieving superior accuracy across error metrics. The results indicate that deep learning models, particularly Long Short-Term Memory (LSTM) networks and Gated Recurrent Units (GRU), demonstrate superior performance in capturing complex temporal patterns. For instance, the GRU model achieved a Root Mean Squared Error (RMSE) of 369.54 for onions and 210.35 for tomatoes, significantly outperforming the ARIMA model, which recorded RMSE values of 1564.62 and 1298.60, respectively. Furthermore, the Mean Absolute Percentage Error (MAPE) for GRU was notably lower, at 14.59% for onions and 10.58% for tomatoes. These results underscore the efficacy of deep learning approaches in addressing the inherent volatility and nonlinear dynamics of agricultural commodity prices. These findings offer valuable insights for policymakers, traders, and farmers, enabling better market interventions, crop planning, and risk management. The study recommends exploring hybrid models and incorporating external factors like weather data to further enhance forecasting reliability.

Similar content being viewed by others

Introduction

Accurate forecasting of agricultural commodity prices is a cornerstone for effective market planning, policy formulation, and risk management in agriculture-driven economies. The agricultural sector, which forms the backbone of economies like India, faces unique challenges due to its exposure to various uncertainties. Price volatility in agricultural commodities, driven by factors such as weather fluctuations, seasonal supply patterns, international trade policies, and global market dynamics, presents a highly complex forecasting challenge. This unpredictability affects a wide range of stakeholders, including farmers striving to make informed decisions about crop selection and marketing, traders managing supply chain risks, and policymakers working to stabilize markets and ensure food security. The complexity of agricultural price forecasting arises from the inherently nonlinear, nonstationary, and stochastic nature of price movements. Traditional methods, such as the Autoregressive Integrated Moving Average (ARIMA) model, have long been the go-to approach for time-series forecasting due to their simplicity and mathematical rigor. These models, while effective for capturing linear relationships in historical data, often fall short in addressing the intricate temporal dependencies and nonlinear patterns inherent in agricultural price dynamics1,2. Consequently, reliance solely on traditional statistical models can result in suboptimal forecasts, limiting their utility for real-world decision-making.

In recent years, advancements in machine learning (ML) and deep learning (DL) have paved the way for more robust forecasting methods capable of addressing the limitations of traditional approaches. Machine learning algorithms such as Support Vector Regression (SVR) and Extreme Gradient Boosting (XGBoost) offer enhanced flexibility, enabling them to model multidimensional relationships and nonlinear trends in time-series data. These models have demonstrated considerable success in applications ranging from commodity price forecasting to demand prediction. Meanwhile, deep learning techniques have emerged as a game-changer in the field, driven by their ability to model complex, high-dimensional data and uncover hidden patterns that traditional models often fail to capture. Deep learning models such as Multilayer Perceptron (MLP), Recurrent Neural Networks (RNN), Long Short-Term Memory (LSTM) networks, Gated Recurrent Units (GRU), and Echo State Networks (ESN) have further revolutionized forecasting tasks. These models are particularly well-suited to agricultural price forecasting, as they excel at capturing long-term dependencies, seasonality, and temporal dynamics in data. Their ability to learn from large datasets with minimal human intervention makes them an attractive option for stakeholders seeking to improve forecasting accuracy and reliability.

This study evaluates the effectiveness of these advanced methodologies in forecasting the prices of 23 agricultural commodities using daily wholesale price data from the Agricultural Marketing Information Network (AGMARKNET) portal. Covering a period from January 2010 to June 2024 and spanning 165 markets, this dataset provides a rich basis for analyzing and comparing different predictive models. The commodities are chosen so as to represent various classes of agricultural commodities such as - Spices (Turmeric, Coriander, Dry Chiles, Cumin), Oilseeds (Groundnut, Soyabean, Mustard, Sesamum), Cereals (Wheat, Ragi, Bajra, Maize, Jowar, Paddy), Pulses (Arhar, Bengal Gram, Lentil, Greengram, Kabuli Channa), Vegetables (Onion, Tomato, Potato) and Fiber (Cotton). The performance of stochastic models, machine learning techniques, and deep learning approaches is assessed using standard error metrics such as Root Mean Squared Error (RMSE), Relative Normalized Mean Squared Error (RNMSE), Mean Absolute Error (MAE), and Mean Absolute Percentage Error (MAPE). By examining these models’ predictive capabilities, this research aims to identify the most suitable approach for agricultural price forecasting while also exploring opportunities for model enhancement.

This study contributes to the advancement of agricultural price forecasting research by conducting a rigorous evaluation of traditional statistical methods, machine learning algorithms, and deep learning models across a diverse range of commodities in India, using daily price data spanning over a decade. The novelty of this work lies in its empirical demonstration of the consistent superiority of deep learning models, particularly Long Short-Term Memory (LSTM) networks and Gated Recurrent Units (GRU), across multiple error metrics. Additionally, the study explores the suitability of these models for capturing the complex temporal and nonlinear characteristics of highly volatile agricultural price data. Unlike existing studies, this research utilizes an extensive dataset comprising 23 commodities from 165 markets, enabling a detailed and robust validation of model performance. Furthermore, the study emphasizes the practical implications of improved forecasting accuracy, offering actionable insights for stakeholders such as policymakers, farmers, and traders. It also underscores the potential of hybrid modeling approaches and the incorporation of external variables, such as weather data, to further enhance forecasting reliability and robustness.

The rest of the paper is structured as follows. Section "Literature Review" reviews existing literature on agricultural price forecasting, focusing on the evolution of stochastic models, the rise of machine learning, and the recent impact of deep learning techniques. Section "Methodology" details the methodology, including a description of the dataset, preprocessing steps, and the mathematical underpinnings of each model evaluated in the study. The models include ARIMA, SVR, XGBoost, MLP, LSTM, GRU, and ESN, each explained in terms of their theoretical framework and application to the problem at hand. Section "Results and Discussions" presents the results and discussion, offering a comparative analysis of the models’ performance and providing insights into their strengths and limitations. Finally, Sect. "Conclusion" concludes the paper by summarizing the findings, discussing their practical implications for stakeholders, and suggesting directions for future research, such as the development of hybrid models and the integration of external factors like weather data for further improving forecast accuracy.

Literature review

The agricultural sector is highly susceptible to price volatility caused by various factors such as weather anomalies, policy changes, and market dynamics3,4,5. Accurate forecasting of agricultural commodity prices is critical for ensuring market stability, supporting farmers’ livelihoods, and enhancing food security. Traditional statistical methods, such as the Autoregressive Integrated Moving Average (ARIMA) model6, have long been the foundation of time-series forecasting. Despite their simplicity and interpretability, these models fall short in capturing the nonlinearity and abrupt changes inherent in agricultural prices7,8. Machine learning (ML) models such as Support Vector Regression (SVR) and Extreme Gradient Boosting (XGBoost) have demonstrated superior performance in time-series forecasting due to their ability to handle nonlinear relationships and high-dimensional data9,10. SVR, based on structural risk minimization, has been particularly effective in mitigating overfitting and handling noisy data. XGBoost, with its ensemble learning approach, has shown remarkable success in commodity price forecasting tasks3,11.

While these ML models provide improvements over traditional approaches, their inability to capture long-term temporal dependencies limits their forecasting accuracy in sequential data. This gap has been addressed through the advent of deep learning techniques. Deep learning (DL) models such as Recurrent Neural Networks (RNNs), Long Short-Term Memory (LSTM) networks, and Gated Recurrent Units (GRUs) have transformed time-series forecasting by capturing complex temporal patterns and long-term dependencies12,13,14,15. Patel16 demonstrated the superior performance of LSTM and GRU models in forecasting the volatile prices of vegetables in India, achieving lower error metrics compared to ARIMA and SVR. Echo State Networks (ESNs), introduced by Jaeger17, offer an alternative deep learning approach with reduced computational costs. By training only, the output layer while keeping the recurrent layer fixed, ESNs efficiently capture dynamic temporal patterns.

Recent advancements in machine learning have underscored the efficacy of neural networks and Gaussian process regression for modelling nonlinear and complex patterns across various domains. Neural networks have shown remarkable versatility and robustness in capturing intricate dependencies and temporal dynamics, as demonstrated in studies focusing on economic and energy modelling18,19. Additionally, their adaptability to diverse datasets and scalability have positioned them as powerful tools for forecasting in financial and agricultural contexts20,21,22. On the other hand, Gaussian process regression provides a probabilistic framework that excels in uncertainty quantification and capturing nonlinear relationships, making it particularly suitable for forecasting tasks in data-scarce or noisy environments23,24. Studies in operations management and environmental forecasting have demonstrated its ability to improve prediction reliability and interpretability25,26. These findings emphasize the growing relevance of machine learning models in tackling complex real-world problems, thereby motivating the present study’s exploration of their application to agricultural price forecasting.

Hybrid models have emerged as powerful tools for improving forecasting accuracy. The ARIMA-LSTM hybrid model, proposed by Zhang27, combines ARIMA’s linear pattern modeling capabilities with LSTM’s strength in capturing nonlinear dependencies. This hybrid approach has shown significant improvements in agricultural price forecasting, as highlighted by Khashei and Bijari28. Ensemble methods, such as the Particle Swarm Optimization-based Weighted Ensemble Variance (P-WEV) model, optimize model weights dynamically to leverage the strengths of different forecasting techniques.

Bonawitz29 highlighted the potential of Federated Learning (FL) in preserving data privacy while enabling collaborative learning. In the context of agricultural forecasting, FL enables regional models to be trained locally and aggregated at a central server. This approach captures region-specific patterns while leveraging global insights. A recent study by Yang et al.30 applied FL to predict crop prices across different markets, demonstrating improved forecasting accuracy and robustness against data heterogeneity. Zhu et al. explored a federated LSTM model for commodity price forecasting, where local LSTM models were trained on market-specific data and their parameters aggregated. This federated approach effectively captured local variations while benefiting from the global model’s generalized learning capabilities. FL also addresses concerns related to data privacy and compliance with regulations such as the General Data Protection Regulation (GDPR), making it an attractive solution for agricultural markets where data confidentiality is paramount31,32.

Comparative studies consistently demonstrate that deep learning models outperform traditional and ML models in terms of error metrics such as Root Mean Squared Error (RMSE) and Mean Absolute Percentage Error (MAPE)41633. Hybrid and ensemble approaches further enhance forecasting performance by integrating the strengths of individual models. Federated learning, though relatively new in this ___domain, shows great promise in improving forecasting accuracy while addressing data privacy and scalability concerns. Studies by Yang et al.30 and Zhu et al. underline the potential of FL to revolutionize agricultural price forecasting by enabling collaborative, decentralized model training.

To summarize previous studies have extensively explored traditional statistical models like ARIMA and machine learning approaches such as SVR and XGBoost to forecast agricultural commodity prices. However, these methods often fall short in handling the nonlinear and volatile nature of price data. Deep learning techniques, including LSTM and GRU, have demonstrated superior performance in capturing complex temporal patterns and long-term dependencies, but prior research typically focused on limited datasets or fewer commodities. Gaps remain in leveraging external variables like weather data and hybridizing models for improved accuracy and robustness. This study addresses these gaps by analyzing 23 commodities across 165 markets over 14 years, empirically validating the superior performance of LSTM and GRU while advocating for hybrid models and external factor integration, thus contributing to more reliable and actionable forecasting solutions.

Methodology

Data description

The daily wholesale data from 2010-02-26 to 2024-06-11 for 23 different commodities across 165 markets have been collected from the AGMARKNET Portal (http://agmarknet.gov.in/). These 23 commodities include 3 vegetables (Potato, Onion and Tomato), 4 oilseeds (Mustard, Sesamum, Groundnut and Soybean), 5 pulses (Greengram, Kabuli Channa, Lentil, Bengal Gram and Arhar), 4 spices (Turmeric, Dry Chilies, Cumin and Coriander) and 6 cereals (Paddy, Jowar, Maize, Wheat, Ragi and Bajra) and Cotton which is a fiber crop. Different classes of commodity have been selected for their different levels of volatility. The selection of the markets and commodities in each class was based on their maximum share and representation.

The commodity with the highest variation, as measured by the coefficient of variation (CV), is Onion, with a CV of approximately 0.765. None of the commodities exhibit normality based on the Jarque-Bera test, as all have probabilities indicating non-normality. The descriptive statistics can be seen in Table 1.

To ensure the validity of our time series modeling approach, we examined the stationarity of the price series using two complementary tests: the Augmented Dickey-Fuller (ADF) and Kwiatkowski-Phillips-Schmidt-Shin (KPSS) tests. The ADF test evaluates the null hypothesis that a unit root is present in the time series (indicating non-stationarity). The test is based on the following regression model which is seen in Eq. 1:

where \(\:{y}_{t}\) represents the time series, \(\:\varDelta\:{y}_{t}\) = \(\:{y}_{t}\) − \(\:{y}_{t-1}\), t is a time trend, and \(\:p\) is the lag order. The null hypothesis of a unit root corresponds to \(\:\gamma\:\) = 0, while the alternative hypothesis of stationarity requires \(\:\gamma\:\) < 0. Complementing the ADF test, we employed the KPSS test which reverses the hypotheses. The KPSS test assumes stationarity under the null hypothesis and tests for the presence of a unit root in the alternative. The test statistic is based on the residuals from the regression as seen in Eq. 2.1:

where \(\:{r}_{t}\) follows a random walk: \(\:{r}_{t}\) = \(\:{r}_{t-1}\) + \(\:{u}_{t}\), with \(\:{u}_{t}\) ~ i.i.d.(0, \(\:{\sigma\:}_{u}^{2}\)). The KPSS test statistic is defined as seen in Eq. 2.2:

where \(\:{S}_{t}=\) \({\sum}_{j=1}^{T}{\widehat{\epsilon}}_{j}\), \({\widehat{\epsilon}}_{t}\) are the residuals, and \(\:{\widehat{\sigma}}_{\epsilon}^{2}\) is a consistent estimate of the long-run variance of \({\epsilon}_{t}\).

The results for both the stationarity tests can be seen in Table 2.

The unit root test results indicate that most commodity price series are non-stationary, as confirmed by both the ADF and KPSS tests. Only onion and tomato are clearly stationary, suggesting their prices revert to a mean over time. Several commodities, including mustard, paddy, greengram, cotton, and wheat, are non-stationary according to both tests, meaning they exhibit persistent trends and require transformations like differencing for further analysis. A few commodities, such as turmeric, cumin, potato, and maize, show conflicting results—where the ADF test suggests stationarity, but KPSS indicates non-stationarity—implying potential trend stationarity or structural breaks. Overall, most series require additional preprocessing to ensure they meet stationarity assumptions for time series modeling.

Machine learning models

Autoregressive integrated moving average (ARIMA) model

The Autoregressive Integrated Moving Average (ARIMA) model is a significant tool in predictive modeling, providing enhanced flexibility in fitting time-series data by combining both autoregressive and moving average components. This combination results in the ARMA (p, q) model (Eq. 3), a concept introduced by Box and Pierce in 1970. The fundamental assumption of this model is that the time series should be time-invariant, meaning it remains constant or stationary over time.

The ARMA (p, q) model can be expressed as:

Using the backshift operator B, this can be equivalently written as:

where,

To handle nonstationary time-series data, ARMA models can be extended by incorporating a method called “differencing,” as described by Makridakis et al. in 1982. This extension results in the ARIMA (p, d, q) model (Eq. 4), which is formulated as:

where \(\:{\epsilon\:}_{t}\) are identically and independently distributed (IID) as N(0, \(\:{\sigma\:}^{2}\)), and the integration parameter ‘d’ is a non-negative integer.

The algorithm for ARIMA forecasting can be seen below:

Algorithm

ARIMA for Commodity Price Forecasting.

Input: Historical price data P, order parameters (p, d, q), forecast horizon H.

Output: Forecasted prices F.

-

1.

Perform Augmented Dickey-Fuller test to check stationarity.

-

2.

If data is non-stationary, apply differencing (d times) until stationary.

-

3.

Select optimal (p, d, q) using AIC/BIC.

-

4.

Fit ARIMA model with selected (p, d, q).

-

5.

Forecast next H time steps.

-

6.

Return forecasted prices F.

Support vector regression (SVR)

Support Vector Regression (SVR) (Eq. 5) is a machine learning technique for non-linear estimation, following the principle of structural risk minimization (Valiant, 1984). It aims to minimize the upper limit of generalization error. The SVR model can be expressed as:

where \(\:f\left(x,z\right)\) is the model’s output, and the kernel function \(\phi\:\left(.\right)\) transforms the non-linear dataset into a higher-dimensional feature space, assuming linearity.

A key feature of SVR is the ε-insensitive loss function, which introduces a margin of tolerance around the predicted output. Errors within this margin, defined by a parameter ϵ\epsilonϵ, are ignored, while deviations beyond it are penalized. This allows SVR to create a robust regression model that focuses on significant patterns without being overly sensitive to noise or outliers in the data. By tuning ϵ\epsilonϵ, users can control the trade-off between precision and generalization, making SVR particularly effective for datasets with moderate noise levels.

SVR models leverage kernels to handle nonlinear relationships in the data. A kernel function maps input features into a higher-dimensional space where a linear relationship can be established. Popular kernel functions include the linear, polynomial, and radial basis function (RBF) kernels, with the RBF kernel being widely used due to its ability to capture complex patterns. The choice of kernel and its associated hyperparameters significantly influences SVR’s performance and flexibility.

The algorithm for running SVR is shown below:

Algorithm

SVR for Commodity Price Forecasting.

Input: Historical price data P, kernel function K, hyperparameters C and epsilon.

Output: Forecasted prices F.

-

1.

Preprocess data: Normalize prices P.

-

2.

Define feature set X and target variable Y.

-

3.

Split dataset into training and test sets.

-

4.

Train SVR model with kernel K, regularization parameter C, and epsilon.

-

5.

Predict prices on test set.

-

6.

Evaluate performance using RMSE, MAE.

-

7.

Return forecasted prices F.

Multilayer perceptron (MLP)

The Multilayer Perceptron (MLP) (Eq. 6) is a foundational model in the field of artificial neural networks. It was first introduced by Marvin Minsky and Seymour Papert in their 1969 book “Perceptrons,” although the term “MLP” and its widespread application in practical tasks came later. An MLP consists of multiple layers of neurons, including an input layer, one or more hidden layers, and an output layer. Each neuron in a layer is connected to every neuron in the subsequent layer. MLPs use backpropagation, an algorithm popularized by Rumelhart, Hinton, and Williams in their seminal 1986 paper, for training. This algorithm adjusts weights to minimize the error between the predicted and actual outputs. The architecture of a MLP model can be seen in Fig. 1.

The mathematical representation of a neuron in an MLP is given by:

where \(\:Y\) is the output, \(\:{x}_{i}\) are the input values, \(\:{w}_{i}\) are the weights, \(\:b\) is the bias term, and \(\:\sigma\:\) is the activation function, typically a non-linear function like ReLU or sigmoid.

MLP is run using this algorithm:

Algorithm

MLP for Commodity Price Forecasting.

Input: Historical price data P, number of hidden layers L, neurons per layer N, activation function A.

Output: Forecasted prices F.

-

1.

Normalize price data P.

-

2.

Define input features X and target variable Y.

-

3.

Split dataset into training and test sets.

-

4.

Initialize MLP with L hidden layers, N neurons per layer, activation function A.

-

5.

Train model using backpropagation and Adam optimizer.

-

6.

Predict prices on test set.

-

7.

Evaluate performance using RMSE, MAE.

-

8.

Return forecasted prices F.

Recurrent neural networks (RNN)

Recurrent Neural Networks (RNNs) (Eq. 7) are a specialized type of neural network in which the output from a previous step serves as the input for the current step. In contrast to conventional neural networks, where inputs and outputs are treated independently, RNNs are designed to handle sequences, making them ideal for tasks like predicting the next word in a sentence, which relies on information from previous words. This distinctive feature introduces the concept of a “hidden layer” or “memory state” that retains information about past inputs. The concept of RNNs was first introduced by Elman in 1990. The architecture of a RNN model can be seen in Fig. 2.

The defining characteristic of RNNs is their ability to process input sequences of arbitrary length. At each time step, the network takes the current input and the hidden state from the previous step to compute the new hidden state. This hidden state acts as a dynamic memory, preserving context from earlier inputs. Mathematically, the hidden state is computed as:

These equations incorporate three key connection weight matrices: \(\:{W}_{U}\), \(\:{W}_{V}\), and \(\:{W}_{W}\). The network’s hidden and output units are characterized by activation functions \(\:{f}_{H}\) and \(\:{f}_{O}\).

The algorithm for RNN is as below:

Algorithm

RNN for Commodity Price Forecasting.

Input: Historical price data P, number of time steps T, number of hidden units H.

Output: Forecasted prices F.

-

1.

Normalize and reshape data into sequences of length T.

-

2.

Define RNN architecture with H hidden units.

-

3.

Train RNN using stochastic gradient descent.

-

4.

Predict prices for next time steps.

-

5.

Evaluate model performance.

-

6.

Return forecasted prices F.

Long Short-Term memory (LSTM) networks

The Long Short-Term Memory (LSTM) network is an advanced type of Recurrent Neural Network (RNN) that effectively addresses the vanishing gradient problem, which limits traditional RNNs in learning long-term dependencies. LSTM achieves this through its unique architecture comprising three key gates: the forget gate, input gate, and output gate. These gates regulate the flow of information, enabling the network to retain relevant information over extended sequences while discarding irrelevant patterns. The forget gate discards unnecessary information from the previous time step, ensuring that the model focuses only on the important aspects of the data. The input gate determines what new information from the current time step should be added to the memory, while the output gate decides what information to pass on to the next time step. This gating mechanism empowers LSTM to handle complex, nonlinear, and sequential data effectively, making it particularly suitable for forecasting agricultural commodity prices, which exhibit strong temporal dependencies and volatility. The architecture can be seen in Fig. 3. The equations that define a LSTM cell are the Eqs. 8.1 to 8.6.

The mathematical formulation of LSTM cells involves:

where \(\:{f}_{t}\), \(\:{i}_{t}\), and \(\:{o}_{t}\) are the forget, input, and output gates, respectively; \(\:{C}_{t}\) is the cell state; \(\:{h}_{t}\) is the hidden state; \(\:{x}_{t}\) is the input; and \(\:W\) and \(\:b\) are the weights and biases.

The daily wholesale price data for 23 agricultural commodities was carefully preprocessed to ensure compatibility with the LSTM model. The first step was normalization, where the price data was scaled to a range between 0 and 1. This preprocessing step is critical to ensure stable and efficient convergence during model training and to prevent larger numerical values from dominating the learning process. Next, a sliding window approach was employed to structure the data into overlapping time-series windows. Each window consisted of 1000 consecutive time steps, allowing the model to learn both short-term fluctuations and long-term trends effectively. Finally, the dataset was divided into an 80% training set and a 20% testing set, ensuring the model was validated on unseen data to assess its generalization ability. This systematic data preparation process ensured that the LSTM model had access to clean, well-structured input for learning temporal patterns.

The LSTM model was implemented using Python and the TensorFlow/Keras library. The architecture was carefully designed to balance performance and computational efficiency. The input layer was configured to accept sequences of 1000 time steps, with each step representing a single price point. This was followed by a single LSTM layer with 128 units, chosen after extensive hyperparameter tuning to ensure the model’s capacity to learn complex patterns without overfitting. To further reduce the risk of overfitting, a dropout layer with a rate of 0.2 was included. This layer randomly deactivated 20% of neurons during training, encouraging the network to learn robust representations. The final dense output layer, consisting of a single neuron with a linear activation function, was used to predict the price for the next time step. This simple yet effective architecture ensured that the LSTM model could handle the nonlinearities and temporal dependencies inherent in the price data.

The training process for the LSTM model was designed to optimize its performance while ensuring stability and efficiency. The Mean Squared Error (MSE) loss function was used, as it penalizes large deviations between predicted and actual values, making it suitable for regression tasks like price forecasting. The Adam optimizer was employed to minimize the loss, offering adaptive learning rates that accelerate convergence and improve training efficiency. The model was trained in batches of size 64 over 50 epochs, with 20% of the training data set aside for validation. This validation step allowed the model’s performance to be monitored during training and helped prevent overfitting by ensuring it generalized well to unseen data. The training process was further supported by early stopping mechanisms to halt training when validation loss stopped improving. These carefully designed training procedures ensured that the LSTM model achieved high accuracy and robustness, particularly for volatile commodities like onions and tomatoes.

To run LSTM, the following algorithm is used:

Algorithm

LSTM for Commodity Price Forecasting.

Input: Historical price data P, sequence length T, LSTM units U.

Output: Forecasted prices F.

-

1.

Normalize price data P.

-

2.

Convert data into overlapping sequences of length T.

-

3.

Define LSTM model with U units.

-

4.

Train model using backpropagation through time (BPTT).

-

5.

Predict future prices.

-

6.

Evaluate model performance.

-

7.

Return forecasted prices F.

Gated recurrent unit (GRU) networks

The Gated Recurrent Unit (GRU) (Fig. 4) is a simplified version of the Long Short-Term Memory (LSTM) (3.2.5) network, designed to address the limitations of traditional Recurrent Neural Networks (RNNs). Like LSTM, GRU is well-suited for capturing long-term dependencies in sequential data, making it a powerful tool for forecasting agricultural commodity prices. The GRU architecture replaces the multiple gates in LSTM with two primary gates: the reset gate and the update gate. These gates regulate the flow of information within the network, enabling it to selectively remember or forget information over time. The reset gate determines the degree to which the previous hidden state contributes to the current hidden state, allowing the network to disregard irrelevant information. The update gate, on the other hand, decides how much of the previous information needs to be carried forward to the next time step. This streamlined gating mechanism reduces the computational complexity of GRU compared to LSTM while maintaining similar performance in many tasks. GRU’s lightweight architecture is particularly beneficial when computational efficiency is a priority.

The mathematical representation of GRU cells includes:

where \(\:{z}_{t}\) and \(\:{r}_{t}\) are the update and reset gates, respectively; \(\:{h}_{t}\) is the hidden state; \(\:{x}_{t}\) is the input; and \(\:W\) and \(\:b\) are the weights and biases.

The daily wholesale price data for 23 agricultural commodities was meticulously prepared to ensure it was suitable for the GRU model. The first step in the process was normalization, where the price values were scaled to a range between 0 and 1. This transformation improved the stability of the training process by preventing large numerical values from dominating the learning process and ensuring all features contributed equally. Following normalization, the data was restructured using a sliding window approach, which involved splitting the dataset into overlapping sequences of 1000 time steps. Each sequence represented a continuous block of historical prices, enabling the GRU model to learn both short-term variations and long-term dependencies within the data. This approach allowed the model to extract temporal patterns effectively and handle the inherent volatility in agricultural price data. Lastly, the dataset was divided into two subsets: 80% for training and 20% for testing. This train-test split ensured that the model’s performance was evaluated on unseen data, providing a robust measure of its generalization ability. These preprocessing steps collectively ensured that the data was clean, well-structured, and ready for training the GRU model.

The GRU model was implemented using Python and TensorFlow/Keras. The architecture was carefully designed to balance simplicity and predictive accuracy. The input layer was configured to accept sequences of 1000 time steps, where each step represented a single price point. The core of the model was a single GRU layer with 128 units, selected based on hyperparameter tuning to optimize performance without introducing unnecessary complexity. Unlike LSTM, GRU does not require a separate cell state, which simplifies its architecture and reduces memory requirements. To prevent overfitting, a dropout layer with a rate of 0.2 was added, randomly deactivating neurons during training to encourage the model to learn robust patterns. The dense output layer, with one neuron and a linear activation function, was used to predict the next price in the sequence. This straightforward architecture leveraged GRU’s efficiency while maintaining high accuracy.

The GRU model was trained using the Mean Squared Error (MSE) loss function, which penalizes large deviations between predicted and actual values. The Adam optimizer was employed for optimization, offering adaptive learning rates that improve convergence speed and stability. The model was trained with a batch size of 64 over 50 epochs, and 20% of the training data was used for validation. The validation step allowed for real-time monitoring of the model’s performance during training and ensured that the model did not overfit to the training data. Early stopping mechanisms were also implemented, halting the training process if the validation loss did not improve for a specified number of epochs. This approach ensured that the model was not over-trained and could generalize well to new data. By the end of training, the GRU model demonstrated strong predictive accuracy, particularly for highly volatile commodities such as onions and tomatoes, where it achieved consistently low RMSE and MAPE values.

To run GRU, the following algorithm is used:

Algorithm

GRU for Commodity Price Forecasting.

Input: Historical price data P, sequence length T, GRU units U.

Output: Forecasted prices F.

-

1.

Normalize and reshape data into sequences of length T.

-

2.

Define GRU architecture with U units.

-

3.

Train GRU using backpropagation through time.

-

4.

Predict prices for the next time steps.

-

5.

Evaluate model performance.

-

6.

Return forecasted prices F.

Extreme gradient boosting (XGBoost)

Extreme Gradient Boosting (XGBoost) is an ensemble learning algorithm that builds multiple decision trees in a sequential manner to improve the overall predictive accuracy. XGBoost enhances the standard gradient boosting algorithm by incorporating regularization techniques, parallel processing, and efficient handling of missing values, making it particularly effective for structured data and tabular datasets. Unlike deep learning models like LSTM or GRU, XGBoost focuses on modeling nonlinear patterns through decision tree ensembles, which partition the feature space to optimize the prediction performance. Each tree in XGBoost learns from the residual errors of the previous trees, progressively refining the model’s accuracy. This iterative process is particularly useful for datasets with moderate nonlinearity and less temporal complexity, such as agricultural price data for moderately volatile commodities. XGBoost’s efficiency and scalability make it a strong candidate for forecasting tasks, especially where computational resources are limited.

XGBoost is based on the principle of gradient boosting, where the model sequentially minimizes a loss function by adding decision trees as weak learners. The objective function in XGBoost comprises two parts: the training loss and a regularization term. The objective function is defined as:

where l(yi, ŷi) is the loss function measuring the difference between the actual value yi and the predicted value ŷi, and Ω(fk) is the regularization term for the k-th tree, defined as Ω(fk) = γT + ½λ‖w‖². Here, T is the number of leaves in the tree, λ is the L2 regularization term for leaf weights, and γ controls the minimum loss reduction required to perform a split. This formulation balances model accuracy and complexity, ensuring robustness and preventing overfitting.

At each iteration t, XGBoost refines the predictions by adding a new tree ft(x) to the model, such that the prediction is updated as:

where \(\hat{y}_i^{(t-1)}\) is the prediction from the previous t − 1 trees and ft(xi) is the output of the current tree. To efficiently minimize the loss, XGBoost uses a second-order Taylor expansion to approximate the objective function:

Here, \(g_i=\partial1(y_i,\hat{y_i})/\partial\hat{y}_i\) is the first-order gradient (error) and \(h_i=\partial^21(y_i,\hat{y_i})/\partial\hat{y}_i^2\) is the second-order gradient (curvature). These gradients enable efficient optimization of the objective function by guiding the updates in the tree-building process.

The optimal weights for the leaves of a tree are computed as:

where \(\text{I}_j\) is the set of data points in leaf j. The gain from splitting a node is calculated as:

Here, I1, Ir, and Ip are the sets of data points in the left child, right child, and parent nodes, respectively, and γ is the regularization term for split complexity. This formulation ensures that splits are only performed when they result in meaningful improvements to the model.

The final prediction for a data point xi is computed as the sum of the outputs from all K trees:

The XGBoost model was configured to maximize its ability to capture the nonlinearities and patterns in the price data. The input features consisted of historical price sequences, with each feature corresponding to a specific time step. Key hyperparameters were tuned to enhance the model’s performance:

-

Number of Trees: The number of decision trees was set to 500 to ensure the model had sufficient capacity to capture complex relationships without overfitting.

-

Learning Rate: A learning rate of 0.1 was chosen to balance the speed of training and the model’s ability to converge to an optimal solution.

-

Maximum Tree Depth: The depth of each tree was set to 6, ensuring the model captured sufficient complexity while avoiding overfitting.

-

Regularization: L1 and L2 regularization terms were included to penalize overly complex models and improve generalization.

-

Early Stopping: Early stopping was implemented based on the validation loss to halt training when performance plateaued, reducing unnecessary computational expense.

This configuration allowed XGBoost to efficiently learn patterns in the agricultural price data while maintaining scalability and robustness.

The XGBoost model was trained using the Mean Squared Error (MSE) as the objective function, which minimized the squared difference between predicted and actual values. During training, the model incrementally added decision trees, each learning to predict the residual errors of the previous trees. This iterative process allowed XGBoost to refine its predictions continuously, improving accuracy with each step. The model was trained with a batch size of 128 over 100 boosting rounds, with early stopping triggered if validation loss did not improve after 10 consecutive rounds. Hyperparameter tuning was conducted using grid search to identify the optimal combination of tree depth, learning rate, and regularization parameters. Once trained, the model was evaluated on the test set using metrics such as RMSE, MAE, and MAPE.

The algorithm for running XGBoost is as below:

Algorithm

XGBoost for Commodity Price Forecasting.

Input: Historical price data P, feature set X, target Y, hyperparameters.

Output: Forecasted prices F.

-

1.

Preprocess and engineer features from P.

-

2.

Split dataset into training and test sets.

-

3.

Train XGBoost model with hyperparameters.

-

4.

Predict prices on test set.

-

5.

Evaluate model using RMSE, MAE.

-

6.

Return forecasted prices F.

Echo state networks (ESN)

Echo State Networks (ESNs) (Fig. 5) are a specialized type of Recurrent Neural Network (RNN) designed to efficiently handle time-series prediction tasks by leveraging their unique architecture. Introduced by Jaeger in 2001, ESNs address the computational challenges typically associated with training RNNs by keeping the internal recurrent structure, referred to as the “reservoir,” fixed. Instead of training the entire network, ESNs focus on optimizing only the output layer, significantly reducing training time while maintaining the ability to capture complex temporal dynamics.

The reservoir, a large and sparsely connected set of neurons, serves as the core of the ESN. It is initialized randomly and remains unchanged during training, ensuring that it provides a diverse range of dynamic responses to input sequences. These responses are mapped to the output layer, where the actual learning occurs. This separation between the untrained reservoir and the trainable output weights enables ESNs to efficiently process sequential data without the risk of overfitting or requiring extensive computational resources.

One of the defining characteristics of ESNs is their reliance on the “echo state property.” This property ensures that the influence of past inputs fades over time, allowing the network to maintain stability and focus on recent information. Mathematically, the reservoir state at time is computed as a function of the current input, the previous reservoir state, and a set of fixed weights. The output is then derived as a linear combination of these reservoir states using trainable weights.

The ESN can be mathematically described as:

where \(\:{x}_{t}\) is the state of the reservoir at time \(\:t\), \(\:{u}_{t}\) is the input, \(\:{W}_{}\) is the input weight matrix, \(\:W\) is the reservoir weight matrix, and \(\:{W}_{out}\) is the output weight matrix that is trained. The reservoir states \(\:{x}_{t}\) capture the temporal dynamics, and the readout \(\:{y}_{t}\) provides the final prediction.

ESN is run using the algorithm below:

Algorithm

ESN for Commodity Price Forecasting.

Input: Historical price data P, reservoir size R, spectral radius S, sparsity parameter Sp.

Output: Forecasted prices F.

-

1.

Normalize data P.

-

2.

Create an ESN with reservoir size R, spectral radius S, and sparsity Sp.

-

3.

Train readout layer using ridge regression.

-

4.

Predict future prices.

-

5.

Evaluate performance.

-

6.

Return forecasted prices F.

Prediction accuracy

The prediction accuracy of different models compared based on four error measures. Namely, Root Mean Squared Error (RMSE) (Eq. 12), Relative Normalized Mean Squared Error (RNMSE) (Eq. 13), Mean Absolute Error (MAE) (Eq. 14) and Mean Absolute Percentage Error (MAPE) (Eq. 15). The formulas for each are given below.

RMSE is sensitive to large errors due to the squaring. We chose RMSE because we want to heavily penalize models for large prediction misses (which, in commodity markets, could correspond to failing to predict a price surge or crash). A lower RMSE means the model’s predictions on average deviate less in a quadratic sense from actual prices. However, RMSE is scale-dependent (in units of the commodity’s price) and can be dominated by a few outliers. To counter scale-dependence, RNMSE is reported too.

MAE is the average absolute error. It is more interpretable in terms of actual price units (e.g., rupees per quintal) and is less skewed by outliers than RMSE. We examine MAE to understand typical error magnitude without the extra penalty on outliers. In some cases, we found RMSE and MAE giving different rankings for models if one model occasionally had big errors. Reporting both helps cross-check robustness: if a model has a much higher RMSE than MAE relative to another, it implies occasional large misses.

MAPE is the mean absolute percent error. We include MAPE to gauge error relative to the actual price level in percentage terms. This is crucial when comparing forecast accuracy across commodities with very different price scales. MAPE normalizes errors, allowing apples-to-apples comparison.

By using all four metrics, we satisfy multiple criteria: RMSE and RNMSE for emphasizing big errors, MAE for general reliability, and MAPE for relative error. We also considered alternatives like Theil’s U statistic and Mean Absolute Scaled Error (MASE). Theil’s U can benchmark against a no-change forecast, and MASE would allow comparison to naive seasonal forecasts, but we ultimately chose RMSE, MAE, MAPE as they are widely understood and cover the needs of our evaluation. Notably, these metrics align with those commonly used in the literature, ensuring our results can be compared to other studies. Where one model is best in RMSE but another in MAPE, it provides insight: perhaps one model handles scale better (lower MAPE) but has occasional large errors (hence higher RMSE). This nuanced evaluation helps determine the truly “best” model depending on what error profile is more important for stakeholders (e.g., avoiding large shocks vs. overall consistency).

Results and discussions

Accuracy results

The analysis evaluated the performance of various machine learning models on predicting prices of different agricultural commodities, including MLP, GRU, LSTM, RNN, XGBoost, Linear Regression, SVR, ESN, and ARIMA. The models ran on a window size of 1000 with a 80% training data and 20% test data split. The results indicated that recurrent neural network models, particularly GRU and RNN, consistently delivered superior accuracy across most commodities, as evidenced by their lower MAE and MAPE values. These models effectively captured the temporal dependencies in agricultural price data, making them highly suitable for forecasting tasks.

Conversely, while XGBoost and traditional models like ARIMA showed reasonable performance, they often lagged behind the neural network models, especially in handling complex, non-linear patterns in the data. Despite its simplicity, Linear Regression performed competitively in some cases, highlighting that simpler models can sometimes yield good results. The findings suggest prioritizing GRU and RNN models for practical forecasting applications due to their robustness and reliability while also advocating for further research into hybrid models and the integration of external factors to enhance prediction accuracy. The performance of these models for a few selected commodities is illustrated in Table 3 for RMSE, Table 4 for RNMSE, Table 5 for MAE, and Table 6 for MAPE.

Results statistical significance

The Diebold-Mariano (DM) test is a statistical method used to compare the predictive accuracy of two competing forecast models. It determines whether the difference in forecast errors is statistically significant. In this section, the DM test is conducted to compare the predictive performance of the deep learning model against another forecasting model, assessing whether the differences in their forecast errors are statistically significant. The test is based on the loss differential, which represents the difference in forecasting errors of the two models. The DM test statistic is calculated as shown in Eq. 16. The DM test results for RMSE, RNMSE, MAE and MAPE between a few of the machine learning models can be seen in Tables 7, 8, 9 and 10 respectively. While the p-values for the respective tests can be seen in Tables 11, 12, 13 and 14 respectively. Extremely small p-values been capped at 1E-300.

where:

\(\:\stackrel{-}{d}=\frac{1}{T}{\sum\:}_{t=1}^{T}{d}_{t}\) - is the mean loss difference,

\(\:\:{d}_{t}=\:g\left({e}_{\left\{1,t\right\}}\right)-\:g\left({e}_{\left\{2,t\right\}}\right)\)- represents the loss differential at time t,

\(\:{\left\{f\right\}}_{d\left(0\right))}\) - is an estimator of the long-run variance of \(\:{d}_{t}\),

\(\:T\) - is the number of observations.

The P-Values of the DM test, at a significance level of 5%, being less than 0.05 show that the comparisons of errors across models are statistically significant. The Diebold-Mariano (DM) test evaluates whether the predictive accuracy of two competing models differs significantly. The null hypothesis (\(\:{H}_{0})\) states that there is no significant difference in forecast errors between the two models, implying that they have equal predictive accuracy. The alternative hypothesis (\(\:{H}_{A})\) suggests that one model has significantly lower forecast errors than the other. A low P-value indicates that the null hypothesis can be rejected, confirming that the difference in forecasting performance between the models is statistically significant.

Discussion on accuracy results

Deep learning models, particularly LSTM and GRU, demonstrated outstanding accuracy across all error metrics, including Root Mean Squared Error (RMSE), Mean Absolute Error (MAE), and Mean Absolute Percentage Error (MAPE). These models are inherently designed to capture long-term dependencies and nonlinear patterns, which are prevalent in agricultural commodity prices due to factors like weather variability and market fluctuations. The LSTM model, for instance, consistently delivered lower RMSE and MAPE values, particularly for highly volatile commodities such as onions and tomatoes. GRU models, though slightly less robust than LSTM, also performed exceptionally well, with the added benefit of a simpler architecture that reduces computational overhead. The Echo State Network (ESN) emerged as another strong contender in the deep learning category. Unlike LSTM and GRU, ESN utilizes a fixed recurrent structure, training only the output layer. This approach not only improves computational efficiency but also provides strong performance in capturing dynamic temporal patterns. However, for certain commodities with highly complex price dynamics, ESN’s error metrics were marginally higher than those of LSTM and GRU, suggesting that while ESN is effective, it might require further tuning for optimal performance in such scenarios.

Traditional models like ARIMA and Linear Regression, while historically significant in time-series forecasting, exhibited clear limitations in this study. ARIMA, which relies on linear assumptions and stationarity, struggled to handle the nonlinear and non-stationary nature of agricultural price data. This limitation was reflected in its consistently higher RMSE and MAE values across most commodities. Linear Regression, a simplistic model that fits a straight line to data points, also failed to account for the inherent complexities and volatilities in the dataset, leading to suboptimal forecasting accuracy. Despite their limitations, these models occasionally delivered competitive results for commodities with relatively stable price patterns, such as paddy and wheat. This suggests that for less volatile commodities, the simplicity of ARIMA and Linear Regression may still provide acceptable performance, particularly when computational resources or model interpretability are prioritized.

Machine learning models like XGBoost and SVR occupied a middle ground in terms of performance. XGBoost, a powerful ensemble method based on gradient boosting, was particularly effective in handling moderate nonlinearities. Its performance across metrics like MAE and MAPE was commendable, especially for moderately volatile commodities such as coriander and soybean. However, XGBoost fell short of the deep learning models in capturing long-term temporal dependencies, which limited its effectiveness for highly volatile commodities. Support Vector Regression (SVR) also demonstrated decent performance, leveraging its kernel-based approach to model complex relationships within the data. However, its error metrics were consistently higher than those of LSTM and GRU, indicating a gap in capturing intricate patterns and dependencies. Additionally, SVR’s performance was sensitive to hyperparameter tuning, which could pose challenges in practical applications.

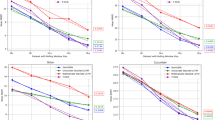

As shown in Fig. 6, GRU achieved the lowest RMSE values for onions (369.54) and tomatoes (210.35), significantly outperforming ARIMA, which recorded RMSE values of 1564.62 and 1298.60, respectively. Similarly, RNMSE comparisons in Fig. 7 reveal that GRU and LSTM models consistently maintained lower values across commodities, with GRU recording an RNMSE of 0.08 for onions and 0.06 for tomatoes, compared to ARIMA’s much higher RNMSE of 0.32 and 0.39 for the same commodities. These results underscore the ability of GRU and LSTM to handle price volatility effectively. MAE values, illustrated in Fig. 8, further emphasize the superiority of deep learning models. For instance, GRU and LSTM achieved MAE values of 217.73 and 229.70 for onions, respectively, while ARIMA recorded a much higher MAE of 1234.25. For tomatoes, GRU and LSTM showed MAE values of 164.28 and 139.30, respectively, compared to ARIMA’s 1138.77. This significant reduction in MAE highlights the precision of GRU and LSTM in forecasting commodity prices with minimal deviation from actual values. The MAPE analysis in Fig. 9 also provides critical insights into model performance. GRU recorded a MAPE of 16.17% for onions and 14.81% for tomatoes, significantly outperforming ARIMA’s MAPE values of 92.66% and 92.17%, respectively. These improvements are particularly important for highly volatile commodities, where accurate percentage error reductions can have substantial practical implications for stakeholders. The Echo State Network (ESN) also performed well, demonstrating competitive error metrics in capturing temporal patterns. However, as indicated in Figs. 6, 7, 8 and 9, its metrics were consistently marginally higher than those of GRU and LSTM, particularly for highly complex price dynamics, suggesting room for further optimization.

Traditional models like ARIMA and Linear Regression revealed significant limitations across all metrics, particularly for volatile commodities. While ARIMA achieved reasonable accuracy for relatively stable commodities like paddy and wheat, its RNMSE (0.48 for paddy) and MAPE (53.19% for paddy) remained considerably higher than those of GRU and LSTM. This limitation is evident across Figs. 7, 8 and 9, where ARIMA consistently lags behind deep learning models. XGBoost, as a machine learning model, occupied an intermediate performance level. While it performed better than ARIMA and Linear Regression in RNMSE, MAE, and MAPE, it could not match the performance of GRU and LSTM, particularly for volatile commodities. For instance, its RNMSE for onions was 0.09, slightly higher than GRU’s 0.08, as shown in Fig. 8.

The results highlight significant variations in model performance across different commodities. Highly volatile commodities like onions and tomatoes benefited the most from the advanced capabilities of LSTM and GRU models. These models effectively captured the sudden price spikes and drops, providing more accurate and reliable forecasts. For moderately volatile commodities such as wheat and soybean, machine learning models like XGBoost offered competitive performance, though deep learning models still held a slight edge. Interestingly, even for relatively stable commodities like paddy and maize, the advanced models outperformed traditional ones, underscoring the versatility and robustness of deep learning techniques. The superior performance of LSTM and GRU in these cases indicates their ability to generalize well across different volatility levels, making them suitable for a wide range of forecasting applications.

The superior performance of LSTM is due to its innovative memory cell architecture and gating mechanisms that allow it to capture both short-term fluctuations and long-term dependencies inherent in agricultural commodity price forecasting. LSTMs incorporate input, forget, and output gates that enable the network to selectively store and discard information, effectively mitigating the vanishing gradient problem and ensuring that crucial historical context—such as seasonal trends, policy shifts, and climatic events—remains influential over extended periods. This capability to model non-linear dynamics directly from raw data makes LSTM particularly adept at handling the complex interactions present in agricultural markets, where factors like weather variability and global economic conditions lead to unpredictable price movements. In contrast, while GRUs utilize a streamlined gating approach that offers computational efficiency, they may sometimes fall short in capturing very long-term dependencies due to their reduced complexity. Similarly, XGBoost, although powerful in handling structured data and complex feature interactions, relies heavily on manual feature engineering to incorporate temporal information and lacks an inherent mechanism to model sequential relationships. ARIMA, a classical statistical approach, is limited by its assumptions of linearity and stationarity, rendering it less effective in addressing the non-linear and volatile nature of agricultural price series.

The enhanced findings of this research have significant policy implications for agricultural market planning and regulation. Firstly, accurate price forecasting through advanced deep learning models like GRU and LSTM can empower policymakers to design more effective market stabilization strategies. Commodity price forecasting plays a crucial role in global markets, influencing trade policies, food security, and investment strategies34. By leveraging precise, timely predictions, authorities can implement dynamic pricing policies, such as minimum support prices (MSP) and targeted subsidies, ensuring that farmers receive fair compensation while minimizing the risk of market distortions. Historical examples in India underscore the critical need for reliable forecasting. For instance, during the 2013 pulses crisis, pulse prices in certain regions surged by as much as 200%, leading to an estimated loss in consumer surplus and market inefficiencies valued at roughly INR 3,500 crores. Similarly, the 2016 onion crisis saw prices in key producing states, such as Maharashtra, spike by nearly 300%, with market disruptions and losses estimated at around INR 12,000 crores. In both cases, the absence of robust forecasting tools contributed to reactive policy measures that amplified the economic fallout for both farmers and consumers.

For farmers, price forecasts can guide planting and marketing strategies. Research has shown that accurate forecasting models help stabilize agricultural markets and reduce risks for smallholder farmers26. A farmer armed with a forecast of low future prices might diversify crops or delay selling harvest (if storage is possible) to avoid losses. Conversely, a forecast of favorable prices could encourage planting more of that crop or selling forward to lock in profits. There are case studies where providing price outlooks to farmers improved their bargaining position – for example, some cooperative platforms share predictive insights, so farmers know if prices are likely to rise, helping them decide whether to bring produce to market immediately or hold off. Such decisions can materially affect farm income. Moreover, better forecasts reduce uncertainty, which can encourage investment in the agriculture sector. If both farmers and lenders trust price forecasts, credit and insurance products (like crop insurance or futures contracts) can be structured more effectively. In essence, forecast accuracy has an economic multiplier effect: it leads to more efficient market functioning. Stable and predictable prices allow for better planning of sowing area and crop rotation, optimal storage and logistics planning, and more stable consumer prices.

Smallholder farmers often lack access to sophisticated market information; improved forecasting (especially if disseminated via advisory services or apps) can level the playing field, enabling them to anticipate market gluts or scarcities. For example, a predictive model might warn of an expected bumper crop leading to price drops – farmers could then choose to process the crop or switch to an alternative to avoid a crash. On the policy side, accurate forecasts of cereal prices help governments ensure food policy decisions (like releasing public grain stocks or adjusting subsidies) are well-timed, preventing crises. A notable scenario is food price inflation: early prediction of a spike in a staple’s price lets policymakers act preemptively.

Furthermore, improved forecasting models enable policymakers to anticipate market volatility and supply chain disruptions, thereby enhancing food security planning. With better insights into potential price spikes or shortages, governments can proactively adjust import-export policies, manage strategic food reserves, and mobilize support for regions at risk of food insecurity. This proactive approach not only stabilizes markets but also protects consumers from sudden price hikes on essential commodities. Additionally, integrating these predictive analytics into national agricultural strategies can foster a more resilient and adaptive policy framework. By incorporating real-time data, including weather patterns and global market trends, into forecasting systems, governments can better coordinate multi-sectoral responses that mitigate the impact of both climatic and economic shocks. This comprehensive, data-driven approach will support sustainable agricultural growth, enhance market transparency, and ultimately contribute to long-term economic stability and food security.

Conclusion

This study utilized three categories of models to predict the prices of various commodities: stochastic, machine learning, and deep learning. The stochastic models, such as ARIMA, are limited to short-term memory and cannot adequately capture dataset volatility. In contrast, the data-driven machine learning and deep learning models’ approaches are capable of handling the data’s nonlinearity and complexity. The 23 commodities analyzed exhibit unique price behavior patterns. The exploration of these techniques showed that deep learning methods consistently outperformed both machine learning and stochastic models across all patterns. This superiority is attributed to deep learning’s ability to capture both long-term and short-term memory patterns in the data, as well as nonlinear complexities.

The findings of this research also have significant policy implications for agricultural market planning and regulation. Accurate price forecasting can help policymakers design more effective interventions to stabilize markets and support farmers. For instance, timely and reliable price predictions enable the implementation of minimum support prices (MSP) and other subsidies that ensure farmers receive fair compensation for their produce, reducing their vulnerability to market volatility. Moreover, enhanced forecasting accuracy can improve food security planning by helping authorities anticipate and mitigate the effects of price spikes and shortages on consumer access to essential commodities. Policies that leverage advanced predictive models, such as GRU and LSTM networks, can be more proactive and adaptive, providing better tools for managing supply chain disruptions and enhancing overall market resilience.

Despite the promising results, this study has limitations that warrant further investigation. Firstly, the predictive models used rely heavily on historical price data, which may not fully capture sudden, unforeseen market shocks caused by extreme weather events, geopolitical tensions, or pandemics. Incorporating real-time data and integrating exogenous variables—such as weather forecasts, global market trends, policy changes, and socio-political disruptions—into the forecasting models could significantly enhance their robustness and adaptability to dynamic market conditions. Additionally, while deep learning models like GRU and LSTM have shown superior performance, their computational requirements and data preprocessing demands may pose challenges for certain stakeholders, particularly in resource-constrained settings or regions with limited access to advanced computing infrastructure.

Future research will explore the development of hybrid models that combine the strengths of traditional statistical methods with advanced machine learning and deep learning techniques. Hybrid approaches could leverage the interpretability and simplicity of stochastic models while integrating the nonlinear learning capabilities of deep learning methods. Moreover, these models could incorporate diverse data types, including both quantified variables (e.g., historical prices, weather data, and market indices) and non-quantified inputs (e.g., text data from market reports, news sentiment analysis, and policy announcements). Such enhancements could improve prediction reliability, computational efficiency, and scalability for a wider range of users.

Expanding the scope of analysis to include a broader set of agricultural commodities, regional market data, and cross-border trading patterns would also provide deeper insights into global and regional agricultural dynamics. This broader scope could enable more comprehensive models that account for interdependencies across markets and commodities. Furthermore, collaborative efforts to make advanced forecasting tools more accessible to policymakers, small-scale farmers, and other stakeholders through user-friendly interfaces and educational outreach could maximize the practical utility of these models. By addressing these gaps, future studies can pave the way for more resilient, adaptive, and equitable agricultural markets.

Data availability

The datasets used and/or analysed during the current study available from the corresponding author on reasonable request.

References

Manogna, R. L. & Kulkarni, N. Does the financialization of agricultural commodities impact food security? An empirical investigation. Borsa Istanbul Rev. 24(2), 280–291 (2024).

Manogna, R. L., Kulkarni, N. & Krishna, A. Nexus between Financialization of Agricultural Products and Food Security Amid Financial Crisis: Empirical Insights from BRICS. J. Agribus. Dev. Emerg. Econ. https://doi.org/10.1108/JADEE-06-2023-0147 (2024).

Gouel, C., Gautam, M. & Martin, W. J. Managing food price volatility in a large open country: the case of wheat in india. Oxf. Econ. Pap. 68, 811–835 (2016).

Rakshit, D., Paul, R. K. & Panwar, S. Asymmetric price volatility of onion in india. Indian J. Agric. Econ. 76, 245–260 (2021).

Manogna, R. L. & Mishra, A. K. Price discovery and volatility spillover: an empirical evidence from spot and futures agricultural commodity markets in india. J. Agribus Dev. Emerg. Econ. 10, 447–473. https://doi.org/10.1108/JADEE-10-2019-0175 (2020).

Box, G. E. P. & Pierce, D. A. Distribution of residual autocorrelations in Autoregressive-Integrated moving average time series models. J. Am. Stat. Assoc. 65, 1509–1526 (1970).

Manogna, R. L. & Mishra, A. K. Forecasting spot prices of agricultural commodities in india: application of deep-learning models. Intell. Syst. Acc. Finance Manag. 28, 72–83 (2021).

Manogna, R. L. Innovation and firm growth in agricultural inputs industry: empirical evidence from india. J. Agribus Dev. Emerg. Econ. 11, 506–519 (2021).

Cao, L. J. & Tay, F. E. H. Support vector machine with adaptive parameters in financial time series forecasting. IEEE Trans. Neural Netw. 14, 1506–1518 (2003).

Chen, T., Guestrin, C. & XGBoost A scalable tree boosting system. Proc. 22nd ACM SIGKDD Int. Conf. Knowl. Discov. Data Min. 785–794 (2016).

Manogna, R. L. & Desai, D. Nexus of Monetary Policy and Productivity in an Emerging Economy: Supply-Side Transmission Evidence from India. J. Quant. Econ. https://doi.org/10.1007/s40953-023-00380-9

Cho, K., Van Merriënboer, B., Bahdanau, D. & Bengio, Y. On the properties of neural machine translation: Encoder-decoder approaches. ArXiv Preprint arXiv:14091259 (2014).

Elman, J. L. Finding structure in time. Cogn. Sci. 14, 179–211 (1990).

Hochreiter, S. & Schmidhuber, J. Long short-term memory. Neural Comput. 9, 1735–1780 (1997).

Manogna, R. L. & Mishra, A. K. Does investment in innovation impact firm performance in emerging economies? An empirical investigation of the indian food and agricultural manufacturing industry. Int. J. Innov. Sci. 13, 233–248 (2021).

Patel, R., Zeinali, M. & Passi, K. Deep learning-based robot control using recurrent neural networks (LSTM; GRU) and adaptive sliding mode control. Proc. 8th Int. Conf. Control Syst. Robot. (CDSR’21), Virtual (2021).

Jaeger, H. The echo state approach to analyzing and training recurrent neural networks. GMD Rep. 148, German National Research Center for Information Technology (2001).

Kim, J. & Moon, S. The application of neural networks in economic modeling: A review. Asian J. Econ. Behav. 12, 25–40 (2024).

Zhang, H., Li, Y. & Chen, X. Neural network-based energy consumption forecasting in the industrial sector. Meas. Energy. 56, 100001 (2024).

Chandra, A. & Patel, R. Deep learning models in financial forecasting: an indian context. Glob Finance Rev. 6, 15–27 (2024).

Mehta, K., Sharma, R. & Singh, P. Evaluating neural network scalability for agricultural price prediction. J. Model. Manag. 19, 230–245 (2024).

Manogna, R. L. & Mishra, A. K. Market efficiency and price risk management of agricultural commodity prices in india. J. Model. Manag. 18, 190–211 (2023).

Wang, T. & Zhao, H. Gaussian process regression for uncertainty quantification in price forecasting. Neural Comput. Appl. 36, 2507–2520 (2024).

Wang, T. & Zhao, H. Advances in gaussian process regression for energy demand prediction. Neural Comput. Appl. 37, 3011–3025 (2024).

Johnson, K. Applications of gaussian process regression in environmental modeling. Environ. Model. Softw. 32, 101–115 (2024).

Ma, W., Nowocin, K., Marathe, N. & Chen, G. H. An Interpretable Produce Price Forecasting System for Small and Marginal Farmers in India using Collaborative Filtering and Adaptive Nearest Neighbors. arXiv preprint arXiv:1812.05173, (2018).

Zhang, G. P. Time series forecasting using a hybrid ARIMA and artificial neural network model. Neurocomputing 50, 159–175 (2003).

Khashei, M. & Bijari, M. A novel hybridization of artificial neural networks and ARIMA models for time series forecasting. Appl. Soft Comput. 11, 2664–2675 (2011).

Bonawitz, K. et al. Towards federated learning at scale: System design. Proc. Mach. Learn. Syst. (2019).

Yang, Q., Liu, Y., Chen, T. & Tong, Y. Federated machine learning: concept and applications. ACM Trans. Intell. Syst. Technol. 10, 1–19 (2021).

McMahan, H. B. et al. Communication-efficient learning of deep networks from decentralized data. arXiv preprint arXiv 170106011 (2017).

Manogna, R. L. & Mishra, A. K. Financialization of indian agricultural commodities: the case of index investments. Int. J. Soc. Econ. 49, 73–96 (2022).

Manogna, R. L. & Mishra, A. K. Agricultural production efficiency of indian states: evidence from data envelopment analysis. Int. J. Finance Econ. 27, 4244–4255 (2022).

World Bank. Commodity Markets Outlook, World Bank Publications, (2024).October (2024).

Funding

Open access funding provided by Birla Institute of Technology and Science.

Author information

Authors and Affiliations

Contributions

The corresponding author, Prof. Manogna RL has contributed towards conceptualization, literature, data, results discussion and drafting the paper. The co-authors Mr. Vijay and Mr. Sarang has contributed towards data extraction, methodology, analysis and conclusion along with editing the draft. All authors have read and approved the manuscript, and ensure that this is the case.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Manogna, R.L., Dharmaji, V. & Sarang, S. Enhancing agricultural commodity price forecasting with deep learning. Sci Rep 15, 20903 (2025). https://doi.org/10.1038/s41598-025-05103-z

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-025-05103-z