Abstract

Evaporation is a fundamental process of the hydrological cycle, and dependable methods for quantifying its fluctuation are needed to support sustainable agriculture, irrigation systems, and overall water resource management. Meteorological variables such as relative humidity, temperature, wind speed, and sunshine hours affect evaporation non-linearly, which makes prediction models difficult to develop. To address this, the study aimed to develop robust models for estimating evaporation in semi-arid environments by applying machine learning techniques. Daily meteorological datasets (from January 2000 to December 2010) for the above input variables were collected from the Sidi Yakoub meteorological station in the Wadi Sly basin, Algeria. A conventional deep neural network (DNN) and DNN coupled with support vector machine (SVM), Bayesian additive regression trees (BART), random subspace (RSS), M5 pruned, and random forest (RF) were used to develop prediction models from various input variable combinations. Model performance was compared using mean absolute error (MAE), root mean square error (RMSE), determination coefficient (R²), Nash–Sutcliffe efficiency (NSE) coefficient, and percentage bias (PBIAS). The hybrid models (DNN-SVM, DNN-BART, DNN-RSS, DNN-M5 pruned, and DNN-RF) performed comparatively better than the conventional standalone DNN. Among the hybrid models, DNN-SVM gave the most accurate daily pan evaporation predictions during the testing phase (R² = 0.65, RMSE = 3.00 mm, MAE = 2.13, NSE = 0.65, and PBIAS = 3.54). DNN-RF ranked second, with R² of 0.64, RMSE of 3.00 mm, MAE of 2.16, NSE of 0.64, and PBIAS of 0.41. The standalone DNN model gave the poorest results, with MAE of 4.87, RMSE of 5.00 mm, and NRMSE of 0.65.
The present framework’s success in Algeria’s Wadi Sly basin highlights its potential for scalable adoption in irrigation scheduling and drought resilience strategies, yielding implementable steps for policymakers, addressing climate-driven water scarcity. Future research should explore integrating real-time climate projections and socio-hydrological variables to improve predictive adaptability across diverse agroecological zones.

Introduction

Evaporation losses have significant global implications, particularly in the context of climate change, human interventions, and natural climate oscillations1. Rising global temperatures have intensified evaporation rates, exacerbating water scarcity issues in many regions, especially arid and semi-arid areas2. Increased evaporation from reservoirs, lakes, and river basins threatens freshwater availability, impacting agriculture, drinking water supplies, and hydropower generation3. Additionally, anthropogenic activities such as land-use changes, deforestation, and urbanization alter local climate patterns, further influencing evaporation rates and disrupting regional water cycles4. Climate oscillations, such as El Niño and La Niña, also play a crucial role in modifying evaporation patterns, leading to extreme droughts or intense precipitation events that impact water storage and distribution5. Given these challenges, accurately quantifying evaporation losses is essential for sustainable water resource management, agricultural planning, and climate adaptation strategies6.

Evaporation is measured using either direct methods, such as the pan evaporation method, or indirect methods, such as semi-empirical and empirical models7. Pan evaporation is commonly used for measuring evaporation because of its simplicity and cost-effectiveness8,9. The Class A pan is the most widely used method of assessing surface evaporation because it allows researchers to compare the evaporation rates in different locations10. However, it cannot be deployed everywhere, especially in difficult areas where instrumentation cannot be installed or maintained11. Its implementation cost is also a disadvantage in developing nations12. As a result, studies have suggested developing indirect evaporation estimation methods (i.e., empirical and semi-empirical models) from various meteorological parameters, such as mean temperature (Tmean, ℃), maximum temperature (Tmax, ℃), minimum temperature (Tmin, ℃), wind speed (WSP, m s−1), solar radiation (Rs, MJ m−2), extra-terrestrial radiation (Ra, MJ m−2), precipitation (P, mm), sunshine hours (SSH, hours), vapor pressure (VP, hPa), rainfall (R, mm), and relative humidity (RH, %)13. Penman-Monteith, Thornthwaite, and Priestley-Taylor equations are examples of empirical models14. However, due to the stochasticity of the meteorological variables and the location-specific nature of these models, the empirical approaches might underestimate or overestimate pan evaporation (Ep) under a wide range of climatic conditions, particularly during extreme weather events15. Hence, developing robust machine learning (ML) models for predicting evaporation becomes imperative16,17,18.

Over the last two decades, ML models have made significant improvements in several hydrological and climatological fields, including drought19,20, rainfall21,22, evapotranspiration23,24, surface water quality25, and streamflow26,27. This is owing to the capacity of these models to handle complex and stochastic problems28. A survey of related articles is conducted to grasp an overview of the most recent work in this field, as depicted in Table 1.

After reviewing the above-mentioned research studies, it has been recognized that ML models have been widely applied to estimate Ep across various regions worldwide. Compared to traditional empirical methods, ML-based approaches demonstrate superior predictive accuracy due to their ability to capture complex, nonlinear relationships between meteorological parameters29. The evolution of ML models in earth and atmospheric sciences has seen a transition from conventional statistical and empirical methods to more sophisticated deep learning frameworks. Early studies primarily relied on artificial neural network (ANN) and support vector machine (SVM) for hydrological modeling, benefiting from their ability to learn from data without explicit physical assumptions30. However, these models often required extensive parameter tuning and were limited in their capacity to handle high-dimensional datasets effectively. Recent advancements in deep learning have introduced more powerful architectures, such as deep neural networks (DNNs) and hybrid models, which integrate multiple ML techniques for improved accuracy and generalization31. Studies have shown that hybrid approaches, such as combining DNN with SVM or random forest (RF), can enhance predictive performance by leveraging the strengths of multiple algorithms32. Additionally, ensemble learning techniques and Bayesian frameworks, such as Bayesian additive regression trees (BART), have gained traction in atmospheric and hydrological studies due to their robustness in handling uncertainties and complex spatial-temporal patterns.

Despite significant advancements in ML applications for hydrological modeling, the generalization capability of ML models across diverse climatic regions remains a challenge due to the stochastic and region-specific nature of meteorological conditions. To address this limitation, the present study evaluates six ML models—conventional DNN, DNN coupled with SVM, BART, random subspace (RSS), M5 pruned trees, and RF—for predicting daily pan evaporation rates in semi-arid regions. The study utilizes daily meteorological data from the Sidi Yakoub meteorological station in the Wadi Sly basin, Algeria, incorporating key climatic variables such as sunshine hours, wind speed, and relative humidity and temperature (mean, maximum, and minimum). The novelty of this research lies in its comparative assessment of hybrid and ensemble ML models, integrating advanced techniques such as DNN-SVM and ensemble learning methods like BART and RF to enhance predictive accuracy. Unlike previous studies that primarily focus on standalone models, this study systematically evaluates multiple approaches to improve evaporation estimation. Additionally, it provides the first application of these models in Algeria’s semi-arid regions, addressing a critical gap in hydrological modeling for arid and semi-arid climates. The research also employs sensitivity analysis to optimize input variable selection, ensuring that the most influential meteorological parameters are identified for precise prediction. Finally, a rigorous performance evaluation using multiple statistical metrics strengthens the reliability of the findings, contributing valuable insights for sustainable water resource management in regions with high evaporation rates. By addressing these aspects, this study contributes to improving the reliability and adaptability of ML-based evaporation prediction models, offering valuable insights for sustainable water resource management in semi-arid regions.

Materials and methods

Study area and data collection

As shown in Fig. 1, the study area is the Wadi Sly basin, located in northwest Algeria. It has an area of 1,400 km² with coordinates of 35° 36’ 5”–36° 5’ 53” N and 1° 8’ 16”–1° 44’ 56” E. The basin has a maximum width and length of 30 and 70 km, respectively. It is narrow and elongated, with a large hydrographic network. The Sidi Yakoub dam, built for agricultural purposes, influences flows in the lower section of the basin. According to the Köppen-Geiger classification, the basin’s climate is Mediterranean, with extremely hot summers and moderate winters. The coldest month is often January, whose temperature is typically above 0 °C. In addition, the average temperature is above 22 °C in at least one month and above 10 °C in at least four months. In this environment, rainfall in the wettest month of the year is generally three times that of the driest month of the year.

The Wadi Sly basin in northwest Algeria was chosen as the study area for several reasons. Firstly, it represents a typical semi-arid environment with a Mediterranean climate, characterized by hot summers and mild winters, making it an ideal location to study evaporation. The basin’s hydrological characteristics, including its extensive hydrographic network and the presence of the Sidi Yakoub dam, provide a complex yet controlled environment to assess the impact of meteorological variables on evaporation. Additionally, the region’s reliance on irrigation for agriculture underscores the importance of accurate evaporation modeling for sustainable water resource management. The availability of comprehensive meteorological data from the Sidi Yakoub meteorological station further facilitated the selection of this site for the study.

The daily meteorological data, including minimum, maximum, and mean air temperature (Tmin, Tmax, and Tmean), minimum, maximum, and mean relative humidity (RHmin, RHmax, and RHmean), wind speed (WSP), sunshine hours (SSH), and pan evaporation (Ep) are collected from the Sidi Yakoub meteorological station. The dataset comprises daily records for 11 years from January 2000 to December 2010 (Figure S1). Table 2 shows the statistical characteristics of the meteorological data.

Machine learning models

For modeling evaporation in Algeria, the present study applies six ML models: a conventional DNN and five hybrids (DNN-SVM, DNN-BART, DNN-RSS, DNN-M5 pruned, and DNN-RF). Stacking has been adopted to hybridize the models. Stacking is a way to ensemble multiple regression models: it is a general procedure in which a learner is trained to combine the individual learners. Here, the individual learners are called the first-level learners, while the combiner is called the second-level learner, or meta-learner. The data were divided into training and testing sets at a ratio of 75:25, meaning that 75% of the data is used for training the model, while 25% is reserved for testing and evaluating the model’s performance. The architecture of each model is described in the following sub-sections.

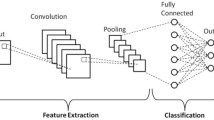

Deep neural network (DNN)

Hinton et al.49 proposed the concept of deep learning and networks that subsequently rejuvenated DNN. The DNN has emerged as a central and powerful deep learning model in the ongoing decade, given its added advantage over the single-hidden-layer ANN model50,51. DNN models can learn complex non-linear relationships between inputs (features) and outputs (targets) via multiple hidden layers. These multiple layers allow models to understand complex features more efficiently and perform more intensive computational operations. The higher efficiency and computation ability of DNN are due to deep learning algorithms’ ability to learn from their own errors, such that DNN can validate the model prediction accuracy. On the other hand, classical ML models require varying degrees of human intervention to determine output accuracy. Therefore, the DNN model became the natural choice for evaporation estimates and predictions for the present investigation. In this study, a 4-layer DNN model with a rectified linear unit (\(\:ReLU\)) activation function is employed, as shown in Eq. 1. For this DNN architecture, the loss function (\(\:Loss\)) is estimated using Eqs. 2–3. To minimize the loss, a classical gradient-descent method is applied. The DNN model was implemented in Python 3.6 using TensorFlow.

.

where \(\:n\) is the number of observations or data records, and \(\:{Ep}_{0}\) and \(\:{Ep}_{DNN}\) are the observed evaporation and the evaporation predicted by the DNN model, respectively. In the networks, weights are represented as \(\:{w}_{1}\), \(\:{w}_{2}\), \(\:{w}_{3}\), \(\:{w}_{4}\), and \(\:{w}_{5}\), while bias terms are represented as \(\:{b}_{1}\), \(\:{b}_{2}\), \(\:{b}_{3}\), \(\:{b}_{4}\), and \(\:{b}_{5}\).
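For orientation, the symbols defined above are consistent with the following standard forms. This is a sketch under stated assumptions, not a reproduction of the original Eqs. 1–3: the ReLU definition is standard, whereas the mean-squared-error form of the loss and the nested forward pass with five weight and bias sets (input vector \(\:x\), observation index \(\:i\)) are assumed from the listed symbols.

\[
ReLU(z) = \max(0,\, z)
\]

\[
Loss = \frac{1}{n}\sum_{i=1}^{n}\left({Ep}_{0,i} - {Ep}_{DNN,i}\right)^{2}
\]

\[
{Ep}_{DNN} = w_{5}\,ReLU\!\left(w_{4}\,ReLU\!\left(w_{3}\,ReLU\!\left(w_{2}\,ReLU\!\left(w_{1}x + b_{1}\right) + b_{2}\right) + b_{3}\right) + b_{4}\right) + b_{5}
\]

Under this reading, gradient descent updates each \(\:{w}_{k}\) and \(\:{b}_{k}\) in the direction that reduces \(\:Loss\).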

Support vector machine (SVM)

Cortes and Vapnik52 established the kernel-based SVM model, a supervised soft computing technique proficient in reducing complexities alongside errors in the estimation. SVM classifier models are applied to problems in which data are assigned to different classes. Another group of SVMs is support vector regression, which is used in regression prediction problems. The regression function is approximated as shown in Eq. 4. Here, the kernel function implicitly transforms the input vector \(\:x\) from the lower-dimensional input space to a higher-dimensional feature space [\(\:\beta\:\left(x\right)\)], wherein \(\:w\) is the weighting vector, and \(\:b\) is a bias. These two parameters are estimated using a regularized risk function [\(\:R\left(P\right)\)], as shown in Eqs. 5, 6.

.

where \(\:P\) is a penalty parameter, \(\:\frac{1}{2}{\left|\left|w\right|\right|}^{2}\) is a regularization term, \(\:{d}_{i}\) is the desired value, \(\:P\frac{1}{n}{\sum\:}_{i=1}^{n}L({d}_{i},{y}_{i})\) is the error term, and \(\:\epsilon\:\) is the tube size of SVM in \(\:{L}_{\epsilon\:}\). One advantage of SVM is that it finds a hyperplane in an N-dimensional space that distinctly separates the data points, so it works comparatively well when there is a clear margin of separation between classes, as observed for the variables influencing evaporation processes. Furthermore, because the present study employs SVM in a higher-dimensional space where the target classes do not overlap much and the data size is appropriate, SVM performs efficiently and effectively, making it a sound choice for predicting evaporation.
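The definitions above match the standard \(\:\epsilon\:\)-insensitive support vector regression formulation, which can be written as follows. This is a sketch of the textbook form using the symbols named in the text; it is assumed, not confirmed, to correspond to the original Eqs. 4–6.

\[
f(x) = w \cdot \beta(x) + b
\]

\[
R(P) = P\,\frac{1}{n}\sum_{i=1}^{n} L_{\epsilon}(d_{i}, y_{i}) + \frac{1}{2}{\left\| w \right\|}^{2}
\]

\[
L_{\epsilon}(d, y) =
\begin{cases}
\left| d - y \right| - \epsilon, & \left| d - y \right| \ge \epsilon \\
0, & \text{otherwise}
\end{cases}
\]

Errors smaller than the tube size \(\:\epsilon\:\) incur no penalty, so \(\:P\) controls the trade-off between fitting errors outside the tube and keeping \(\:w\) small.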

Bayesian additive regression tree (BART)

In recent years, BART has gained popularity among the research community due to its widespread applications53,54. BART models overcome one limitation of many ML methods: the lack of uncertainty quantification for individual predictions. In a regression framework, BART quantifies uncertainty through a sum-of-trees approach, in which many decision trees contribute to each prediction within a probabilistic, model-based method. BART provides prediction standard errors and prediction intervals, which makes it well suited to predicting evaporation, where uncertainty is inherent. This study applied BART for additive regression using MATLAB. It has a continuous outcome for pan evaporation (say \(\:y\)) and \(\:p\) covariates \(\:x\:=\:({x}_{1},\dots\:,\:{x}_{p})\). The BART prediction model can define complex relations between \(\:x\) and \(\:y\) by estimating \(\:f\left(x\right)\) as follows: \(\:y\:=\:f\:\left(x\right)\:+\:\epsilon\:\), where \(\:\epsilon\:\:\sim\:\:N(0,\:{\sigma\:}^{2})\). A sum of \(\:m\) regression trees, i.e., \(\:f\left(x\right)\:=\:\sum\:\:g\:(x;\:{T}_{j},\:{M}_{j})\) for \(\:j\:=\:1,\dots\:,m\), is used to estimate \(\:f\left(x\right)\). The expression for BART is shown in Eq. 7.

.

Random subspace (RSS)

Ho55 introduced the RSS ensemble, which combines the outputs (predictions) of multiple classifiers built from multiple decision trees (DTs) via a voting approach. It overcomes one of the grave shortcomings of conventional DTs56 by addressing the overfitting issue of the decision tree classifier (i.e., high variance and low bias), and it helps ensure the precision of the training results. Skurichina and Duin57 classified the inputs of the RSS algorithm into four categories: the training dataset, the base classifier, the size of the subspaces, and the number of subspaces. In the first step, a subset of input features (columns) is randomly selected for each model in the ensemble. In the second step, each model is fitted to the entire training dataset; to achieve this, bootstraps or random samples (rows) are drawn from the training dataset. RSS generally attempts to reduce the correlation between estimators in an ensemble by training them on random samples of features instead of the entire feature set. Consequently, RSS prevents individual learners from over-focusing on variables that appear highly predictive in the training set but fail to be as predictive for points outside that set. Therefore, RSS has gained popularity for high-dimensional problems where the number of variables is significantly larger than the number of training points. Hence, this study employed the RSS model hybridized with DNN to exploit the benefits of both techniques while predicting evaporation.

M5 pruned

Quinlan58 introduced the M5 algorithm, which was further reconstructed to develop the M5 pruned model tree. This integrates the traditional DT with linear regression functions. Wang and Witten59 described the four steps in the M5 pruned algorithm: (1) input space splitting, (2) linear regression model development, (3) pruning procedure, and (4) smoothing process. This algorithm has also shown robustness when dealing with missing data. Since M5 pruned can efficiently handle and process large datasets to ensure reduced errors in the output, it has been considered for analyzing and predicting the evaporation in the current study area.

In the present study, the splitting measure for the M5 pruned model tree is based on the error calculated at each node (linear regression functions are assigned to terminal nodes). The standard deviation of the class values reaching a node is used as the measure of error at that node. Each attribute is tested at the node to select the one used for splitting. The attribute chosen is the one that maximizes the expected error reduction, which is obtained as the standard deviation reduction shown in Eq. 8.

.

where \(\:A\) represents the set of instances that reach the node; \(\:{A}_{i}\) represents the subset of instances that have the \(\:{i}^{th}\) outcome of the potential set, and \(\:SD\) represents the standard deviation.
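The splitting criterion described above can be illustrated with a short sketch. It assumes the commonly used form of standard deviation reduction, SDR = SD(A) − Σ (|A_i| / |A|) · SD(A_i), and uses the population standard deviation; the function name `sd_reduction` and the sample values are hypothetical and are not taken from the study.

```python
from statistics import pstdev


def sd_reduction(parent, subsets):
    """Standard deviation reduction for one candidate split.

    parent  -- target values of the instances reaching the node (A)
    subsets -- one list of target values per split outcome (A_i)
    """
    n = len(parent)
    # Size-weighted standard deviation of the child subsets
    weighted = sum(len(s) / n * pstdev(s) for s in subsets if s)
    return pstdev(parent) - weighted


# Hypothetical pan evaporation values (mm/day) reaching one node
ep = [2.0, 2.5, 3.0, 9.0, 9.5, 10.0]

# A split that separates low from high values removes most of the spread
good = sd_reduction(ep, [ep[:3], ep[3:]])
# A split that mixes low and high values removes very little
poor = sd_reduction(ep, [ep[::2], ep[1::2]])
```

The attribute whose split gives the largest reduction would be chosen at the node; here `good` exceeds `poor` because the first split produces two nearly homogeneous subsets.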

Random forest (RF)

The RF model yields comparatively high performance when constructing ensembles. Its DT learning algorithms rely on classification and regression trees. In terms of architecture, RF comprises a set of DTs in which the space occupied by each variable is repeatedly subdivided into smaller sub-spaces until each is uniform. In the DT structure, a branching point from which two sub-branches originate is called a node; the root is the first node in the tree, while a leaf is a terminal node60. Each tree is grown on a bootstrap sample of the original data. To find the best split, the search is made over ‘m’ randomly selected variables61. In the selection process, data discrepancy, in terms of data similarity, is estimated from the assignment of data to the final subspaces (leaves of the same kind) rather than from distance functions. Equation 9 quantifies the similarity between data \(\:a\) and \(\:b\) [\(\:s\left(a,\:b\right)\)] as the proportion of times the data [\(\:d(a,\:b)\)] are located in the same final subspaces. To convert the similarity matrix into a dissimilarity matrix, a forest similarity matrix, which is characteristically random, symmetric, and positive, is employed as per Eq. 9. In general, since the values of the variables do not influence the classification tree formation process, the RF dissimilarity can be applied to various variable categories. Once the predefined stop condition is attained, the splitting procedure stops62.

.

As far as the advantages of RF models are concerned, past studies indicated their high efficiency in learning target class samples retrieved from training data alongside unique characteristics from unclassified data6,35,63. As a result, RF develops better prediction ability as a hybrid method and overcomes the limitations of non-supervised classifier methods. Therefore, the present study hybridized RF with DNN for modeling pan evaporation.

Model performance evaluation indicators

In this research, five statistical metrics are applied to evaluate the performance of the developed models: determination coefficient (R²), root mean square error (RMSE), mean absolute error (MAE), Nash–Sutcliffe efficiency (NSE), and percentage bias (PBIAS). These metrics are as follows: (1) R² [unitless], which evaluates the linear relationship between predicted and observed Ep values; (2) RMSE [mm/day], which measures the mean error of all estimates; (3) MAE [mm/day], which is commonly used to compute model error or residual; (4) NSE [unitless], which is a metric for determining the accuracy of a model; and (5) PBIAS [%], which quantifies the average tendency of the predicted values to be either overestimated or underestimated compared to the observed values.

Lower RMSE, MAE, and PBIAS values approaching 0 indicate better performance accuracy. Higher R2 and NSE values, on the other hand, imply a greater degree of agreement between estimated and observed values. The five error quantification measures are defined as per Eqs. 10–14, respectively. The readers may refer to already published literature to see their applications64,65,66,67,68,69,70,71,72.

.

where \(\:{\text{x}}_{\text{i}}\) and \(\:{\text{y}}_{\text{i}}\) are observed and predicted data, respectively; \(\:\stackrel{-}{x}\) and \(\:\stackrel{-}{y}\) are the mean observed and predicted data, respectively; \(\:{{s}_{x}}^{2}\) and \(\:{{s}_{y}}^{2}\) are observed and predicted variances, respectively.
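The five indicators can be sketched in a few lines using their widely used definitions. Two choices here are assumptions, since the original Eqs. 10–14 are not reproduced in this text: R² is computed as the squared Pearson correlation between observed and predicted values, and PBIAS uses the sign convention 100 · Σ(observed − predicted) / Σ observed, under which negative values indicate overestimation. The function name `evaluate` and the sample values are hypothetical.

```python
import math


def evaluate(obs, pred):
    """Return R2, RMSE, MAE, NSE and PBIAS for paired observed/predicted values."""
    n = len(obs)
    mean_o = sum(obs) / n
    mean_p = sum(pred) / n
    sse = sum((o - p) ** 2 for o, p in zip(obs, pred))       # squared errors
    ss_o = sum((o - mean_o) ** 2 for o in obs)               # spread of observations
    ss_p = sum((p - mean_p) ** 2 for p in pred)              # spread of predictions
    cov = sum((o - mean_o) * (p - mean_p) for o, p in zip(obs, pred))
    return {
        "R2": cov ** 2 / (ss_o * ss_p),          # squared Pearson correlation (assumed form)
        "RMSE": math.sqrt(sse / n),
        "MAE": sum(abs(o - p) for o, p in zip(obs, pred)) / n,
        "NSE": 1 - sse / ss_o,
        "PBIAS": 100 * sum(o - p for o, p in zip(obs, pred)) / sum(obs),  # assumed sign convention
    }


# Hypothetical daily Ep values (mm/day): every prediction is 0.5 mm too high
observed = [1.0, 2.0, 3.0, 4.0]
predicted = [1.5, 2.5, 3.5, 4.5]
scores = evaluate(observed, predicted)
```

In this example the constant offset leaves R² at 1 while NSE drops to 0.8 and PBIAS is −20%, which illustrates why R² alone cannot reveal systematic bias.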

Methodology

The main objective of this research is to estimate evaporation using several ML models in semi-arid regions. The flowchart of the research study is illustrated in Fig. 2, outlining the key steps involved in the methodology. The process begins with collecting daily meteorological datasets, including temperature, relative humidity, wind speed, insolation, and daily evaporation data. Using regression analysis and sensitivity tests, these datasets are then subjected to statistical analysis and data preparation to identify the best subset of input variables. This step is crucial for determining the optimal combination of meteorological factors influencing evaporation.

After selecting the best input combination, the data are split into training and testing sets with a ratio of 75:25. This division is essential for training the models and evaluating their performance. The stacking process used in this study involves two layers: the first layer consists of multiple base models (DNN, SVM, BART, RSS, M5 pruned, and RF), and the second layer is a meta-model that combines the predictions from the base models. This approach allows for integrating diverse models, leveraging their strengths to improve overall performance. The training set is used to train the base models, and their outputs are then used as inputs for the meta-model, which is trained to optimize the final predictions.
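The two-layer procedure described above can be sketched with the standard library alone. Everything specific here is an assumption made for illustration: two single-feature linear regressions stand in for the study's base learners, a linear regression serves as the meta-model, the data are synthetic, and the 75:25 split simply takes the first 75% of records. The helper names (`fit_linear`, `level_one`, `rmse`) are hypothetical.

```python
import math
import random


def fit_linear(X, y):
    """Least-squares fit with intercept, solved by Gauss-Jordan on the normal equations."""
    rows = [[1.0] + list(r) for r in X]
    k = len(rows[0])
    A = []
    for i in range(k):
        lhs = [sum(r[i] * r[j] for r in rows) for j in range(k)]
        rhs = sum(r[i] * t for r, t in zip(rows, y))
        A.append(lhs + [rhs])
    for c in range(k):
        p = max(range(c, k), key=lambda q: abs(A[q][c]))  # partial pivoting
        A[c], A[p] = A[p], A[c]
        for r in range(k):
            if r != c:
                f = A[r][c] / A[c][c]
                A[r] = [a - f * b for a, b in zip(A[r], A[c])]
    coef = [A[i][k] / A[i][i] for i in range(k)]
    return lambda x: coef[0] + sum(c * v for c, v in zip(coef[1:], x))


def rmse(model, X, y):
    return math.sqrt(sum((model(x) - t) ** 2 for x, t in zip(X, y)) / len(y))


# Synthetic stand-in data: (temperature, relative humidity) -> evaporation
rng = random.Random(0)
X = [(rng.uniform(10, 40), rng.uniform(20, 90)) for _ in range(200)]
y = [0.4 * t - 0.1 * rh + rng.gauss(0, 0.5) for t, rh in X]

# 75:25 split (taking the first 75% of records is an assumption)
cut = int(0.75 * len(X))
X_tr, y_tr, X_te, y_te = X[:cut], y[:cut], X[cut:], y[cut:]

# First level: each base learner sees only one feature
fit_a = fit_linear([(t,) for t, _ in X_tr], y_tr)
fit_b = fit_linear([(rh,) for _, rh in X_tr], y_tr)


def level_one(x):
    """Base-model predictions that become the meta-model's inputs."""
    return (fit_a((x[0],)), fit_b((x[1],)))


# Second level: meta-model trained on the base models' training-set outputs
meta = fit_linear([level_one(x) for x in X_tr], y_tr)

rmse_a = rmse(lambda x: level_one(x)[0], X_te, y_te)
rmse_b = rmse(lambda x: level_one(x)[1], X_te, y_te)
rmse_stack = rmse(lambda x: meta(level_one(x)), X_te, y_te)
```

In this toy setting the stacked model has a lower test RMSE than either base learner because each base learner captures a different part of the signal. A common refinement, not described in the text, is to train the meta-model on out-of-fold base predictions to limit leakage from the training set.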

The architecture of the individual models is designed to capitalize on their respective strengths. The DNN model used in this study consists of multiple hidden layers with a rectified linear unit activation function. The input layer receives the meteorological variables (Tmin, Tmax, RHmin, RHmax, insolation, and wind speed), and the output layer provides the predicted evaporation values. The number of neurons in each hidden layer was optimized through a grid search to minimize overfitting and improve model performance. SVM is a supervised max-margin model that maps the original finite-dimensional space into a higher-dimensional space to improve separability. It uses kernel functions, such as the Gaussian kernel, to compute dot products efficiently. The effectiveness of SVM depends on the selection of kernel parameters and the soft margin parameter, which are optimized using cross-validation.

BART is a flexible model for regression problems, combining multiple regression trees to model complex relationships between variables. This study uses BART as a base model in the stacking process to leverage its ability to handle non-linear interactions. RSS is an ensemble method that selects random subsets of features to train multiple models, reducing overfitting by averaging predictions across different feature spaces. The M5 model tree is a decision tree-based model incorporating linear regression functions at each leaf node, providing a robust framework for predicting evaporation. RF is an ensemble model that combines multiple decision trees to improve prediction accuracy, with each tree trained on a random subset of features and samples.

The hybrid models integrate the strengths of these base models. The DNN-SVM model combines the feature extraction capabilities of DNNs with the robust classification power of SVMs. The DNN-BART model integrates the non-linear modeling capabilities of BART with the DNN’s ability to extract complex features. The DNN-RSS model leverages the feature extraction of DNNs and the ensemble diversity provided by RSS. The DNN-M5 pruned model combines the strengths of DNNs with the linear regression capabilities of the M5 model tree, enhancing the model’s ability to handle continuous data. Finally, the DNN-RF model integrates the feature extraction of DNNs with the ensemble averaging of RF, providing robust and accurate predictions. For additional understanding of the methodological steps and ML architectures, readers may refer to relevant studies such as those by Samantaray and Ghose73,74,75, Masood et al.76 and Elbeltagi et al.77.

After developing and running these models, their performance is evaluated using metrics such as MAE, RMSE, R², NSE, and PBIAS. This evaluation stage is critical for selecting the best evaporation forecasting model. A Taylor diagram is also used to supplement the performance assessment measures, comprehensively verifying the optimum prediction model. The process concludes with selecting the most accurate model based on these evaluations, which can be used for practical applications in water resource management.

Results

Sensitivity analysis and best subset regression

The following sub-sections cover the best subset regression and sensitivity analyses for determining the optimum input combination for Ep prediction.

Input selection using the best subset model

Identifying the correct input parameters is crucial for achieving the best model performance. Eight meteorological variables (Tmin, Tmax, Tmean, RHmin, RHmax, RHmean, insolation, and wind speed) are employed in this study to determine the best input combination based on several performance indicators, as shown in Table 3. Overfitting is more likely when there are many input variables and a low correlation between input and output23,78,79. For daily Ep prediction, seven statistical criteria were used to identify the optimal input combination: MSE, R², adjusted R², Mallows’ Cp, Amemiya prediction criterion (PC), Schwarz Bayesian criterion (SBC), and Akaike information criterion (AIC). The bold row in Table 3 is the optimal input combination (Tmin, Tmax, RHmin, RHmax, insolation, and wind speed), since it has the lowest Mallows’ Cp (6.18) and Amemiya’s PC (0.34) values, as well as the greatest R² (0.66) and adjusted R² (0.66) values of all input combinations. This scenario is followed by the 6th input combination (Tmin, Tmax, Tmean, RHmean, insolation, and wind speed). In contrast, using a single input variable (Tmean) results in the lowest R² and adjusted R² (0.59) and the highest values of the other statistical criteria, making it the worst input scenario for estimating daily Ep. Following normalization, the entire dataset is divided into two groups, with 75% of the dataset used for training and the remaining 25% used for testing and validating the models.
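Several of the selection criteria named above can be computed from the residual sum of squares of each candidate regression. The sketch below uses common textbook forms, which are assumptions because the study does not state its exact formulas and software packages differ by additive constants: adjusted R² = 1 − (1 − R²)(n − 1)/(n − p − 1), AIC = n ln(SSE/n) + 2k, SBC = n ln(SSE/n) + k ln n, and Mallows’ Cp = SSE/MSE_full − n + 2k, with k = p + 1 parameters including the intercept. The function name and the numbers are hypothetical.

```python
import math


def selection_criteria(sse, sst, n, p, mse_full):
    """Subset-selection criteria for a linear model with p predictors.

    sse      -- residual sum of squares of the candidate model
    sst      -- total sum of squares of the target
    n        -- number of observations
    p        -- number of predictors (intercept excluded)
    mse_full -- residual mean square of the model containing all predictors
    """
    k = p + 1  # parameters including the intercept
    r2 = 1 - sse / sst
    return {
        "R2": r2,
        "adj_R2": 1 - (1 - r2) * (n - 1) / (n - p - 1),
        "AIC": n * math.log(sse / n) + 2 * k,
        "SBC": n * math.log(sse / n) + k * math.log(n),
        "Cp": sse / mse_full - n + 2 * k,
    }


# Hypothetical numbers: the candidate is itself the full model, so
# mse_full = sse / (n - k) and Mallows' Cp equals k by construction
n, p = 100, 6
sse, sst = 34.0, 100.0
crit = selection_criteria(sse, sst, n, p, mse_full=sse / (n - (p + 1)))
```

A candidate subset is usually preferred when its Cp is small and close to k while AIC and SBC are low and adjusted R² is high, which is the pattern the text reports for the selected six-variable combination.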

Sensitivity analysis

The combinations of input variables significantly influence the performance of data-driven models. Some variables contribute positively to model accuracy, while others may have a negative impact. To identify the most influential input parameters for predicting daily Ep, we conducted a regression analysis. The results in Table 4 and Fig. 3 highlight the importance of Tmin, Tmax, RHmin, RHmax, insolation, and wind speed.

The statistical significance of each variable was assessed using t-tests, with the p-values indicating the probability of observing the test statistic under the null hypothesis that the coefficient is zero. A p-value less than 0.05 indicates that the variable has a statistically significant effect on the model. Tmin, with a coefficient of 0.433 and a p-value of less than 0.0001 (t = 23.220), is highly significant, suggesting that Tmin has a strong positive influence on the prediction of Ep. Tmax also positively affects Ep predictions, though to a lesser extent than Tmin, with a coefficient of 0.101 and a p-value of less than 0.0001 (t = 4.731).

In contrast, RHmin and RHmax have negative coefficients, negatively impacting Ep predictions. RHmin has a coefficient of −0.130 with a p-value of less than 0.0001 (t = −7.142), while RHmax has a coefficient of −0.097 with a p-value of less than 0.0001 (t = −6.644). Insolation, with a coefficient of 0.202 and a p-value of less than 0.0001 (t = 16.291), is a highly significant positive predictor of Ep. Wind speed also positively affects Ep, though to a lesser extent, with a coefficient of 0.027 and a p-value of 0.005 (t = 2.842). Tmean and RHmean were found to have no significant impact, as indicated by their coefficients of 0.000 and associated p-values.

This analysis demonstrates that Tmin, Tmax, RHmin, RHmax, insolation, and wind speed are the most influential input parameters for predicting daily Ep, with Tmin and insolation being particularly significant.
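The relationship between the reported coefficients, t statistics, and p-values can be checked with a short sketch. It assumes a two-sided test and approximates the t distribution by the standard normal, which is reasonable only because roughly eleven years of daily records give several thousand degrees of freedom; the exact degrees of freedom are not stated in the text. The helper names are hypothetical.

```python
import math


def two_sided_p(t):
    """Two-sided p-value for a t statistic under a standard normal approximation."""
    return math.erfc(abs(t) / math.sqrt(2))


def implied_std_error(coef, t):
    """Standard error implied by t = coefficient / standard error."""
    return coef / t


# Values reported in the text for Tmin and wind speed
p_tmin = two_sided_p(23.220)
p_wind = two_sided_p(2.842)
se_tmin = implied_std_error(0.433, 23.220)
```

Under this approximation the Tmin p-value is far below 0.0001 and the wind speed p-value is of the order of 0.005, in line with the significance levels reported above, while the implied standard error for Tmin is about 0.019.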

Implementation of machine learning models for daily Ep estimation

The estimation of Ep at Sidi Yakoub station in the Wadi Sly basin is carried out using a standalone DNN and its hybrids with SVM, BART, RSS, M5 pruned, and RF. The parameters of the ML models used for pan evaporation modeling are listed in Table 5. Comparative performances are evaluated using statistical performance indicators such as R2, NSE, RMSE, and MAE. The model with R2 and NSE values closest to one and RMSE and MAE values closest to zero is considered the best predictive model for Ep.
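The performance indicators used for the comparison can be written compactly. The sketch below uses standard definitions, with R2 taken as the squared Pearson correlation and PBIAS computed as 100 × Σ(observed − predicted) / Σ(observed); the sign convention for PBIAS varies between authors and is an assumption here, as are the function names and the toy series.

```python
import math

def mae(obs, pred):
    """Mean absolute error."""
    return sum(abs(o - p) for o, p in zip(obs, pred)) / len(obs)

def rmse(obs, pred):
    """Root mean square error."""
    return math.sqrt(sum((o - p) ** 2 for o, p in zip(obs, pred)) / len(obs))

def nse(obs, pred):
    """Nash-Sutcliffe efficiency: 1 minus residual variance over observed variance."""
    mean_obs = sum(obs) / len(obs)
    residual = sum((o - p) ** 2 for o, p in zip(obs, pred))
    spread = sum((o - mean_obs) ** 2 for o in obs)
    return 1.0 - residual / spread

def r2(obs, pred):
    """Determination coefficient as the squared Pearson correlation."""
    mean_obs = sum(obs) / len(obs)
    mean_pred = sum(pred) / len(pred)
    cov = sum((o - mean_obs) * (p - mean_pred) for o, p in zip(obs, pred))
    var_obs = sum((o - mean_obs) ** 2 for o in obs)
    var_pred = sum((p - mean_pred) ** 2 for p in pred)
    return cov ** 2 / (var_obs * var_pred)

def pbias(obs, pred):
    """Percentage bias; positive means underestimation under this convention."""
    return 100.0 * sum(o - p for o, p in zip(obs, pred)) / sum(obs)

# Toy series (hypothetical values, not data from the study).
observed = [2.0, 4.0, 6.0, 8.0]
predicted = [3.0, 4.0, 5.0, 8.0]
```

For the toy series, MAE is 0.5, NSE is 0.9, and PBIAS is 0 because the over- and underestimates cancel, which illustrates why PBIAS should be read together with RMSE and MAE.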

The values of R2, NSE, RMSE, and MAE for the predictive models during the training and testing periods are presented in Table 6. As shown in Table 6, coupling RF with DNN enhances its performance, and the DNN-RF model shows the best statistical performance criteria during the training period (RMSE = 1.000, MAE = 0.958, and NSE = 0.922). However, during the testing period, the DNN-SVM model shows superiority over the other models, with statistical performance criteria values of RMSE = 3.000, MAE = 2.127, and NSE = 0.649. Therefore, the DNN-SVM is selected as the optimum predictive model because it is associated with the best statistical criteria (i.e., minimum RMSE and MAE as well as maximum NSE) in the testing stage.
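The selection rule applied here (prefer the lowest testing-phase errors) can be expressed as a simple ranking. The sketch below includes only the three models whose testing values are quoted in the text of this article, with the DNN-RF and standalone DNN values taken from the abstract; the remaining models are reported in Table 6. Sorting by RMSE first and breaking ties by MAE is an assumption made for illustration, not a rule stated by the authors.

```python
# Testing-phase errors quoted in the article text (RMSE in mm).
test_metrics = {
    "DNN-SVM": {"rmse": 3.000, "mae": 2.127},
    "DNN-RF": {"rmse": 3.00, "mae": 2.16},
    "DNN": {"rmse": 5.00, "mae": 4.87},
}

def rank_models(metrics):
    """Order model names from best to worst: lowest RMSE first,
    with MAE as the tie-breaker (an illustrative choice)."""
    return sorted(metrics, key=lambda name: (metrics[name]["rmse"], metrics[name]["mae"]))

ranking = rank_models(test_metrics)
best_model = ranking[0]
```

Because DNN-SVM and DNN-RF share the same rounded RMSE, the MAE tie-breaker is what places DNN-SVM first in this sketch.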

The scatter plots (right side) and temporal variation (left side) of predicted and observed daily evaporation values are plotted in Figs. 4 and 5 for the training and testing periods, respectively. In the scatter plots, the regression line gives R2 values of 0.668 for the DNN model, 0.651 for the DNN-SVM model, 0.630 for the DNN-BART model, 0.631 for the DNN-RSS model, 0.635 for the DNN-M5 pruned model, and 0.638 for the DNN-RF model during the testing stage. The fitted regression line and the perfect line of fit (1:1) are almost identical for all the hybrid models, but not for the standalone DNN model. This reveals that all the constructed hybrid models can enhance the performance of DNN.

In addition, the Taylor diagram is used to visually assess the efficiency of the data-driven DNN and hybrid models. The fundamental advantage of this graphical approach is that it summarizes three important statistical criteria in a single chart: RMSE, correlation coefficient, and standard deviation23,80. Furthermore, it displays the model’s correctness and realism when compared to the observed values. The standard deviation measures how widely the values are dispersed around their mean81. A predicted standard deviation close to the observed standard deviation therefore indicates high precision, whereas a predicted standard deviation that departs markedly from the observed one denotes inferior accuracy. As shown in Fig. 6, the Taylor diagram is used to conduct a comparative analysis of models in this study. For the training phase, the DNN-RF model is closest to the observed point and shows superiority. For the testing phase, all the hybrid models perform comparably; however, the DNN-SVM model provides a slightly better result than the other models as it has the highest correlation coefficient and lowest RMSE and standard deviation values.
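The three quantities summarized by a Taylor diagram are tied together by a law-of-cosines relation, E'² = σo² + σp² − 2σoσp·r, where E' is the centered root mean square difference (the RMSE after removing the mean bias), σo and σp are the observed and predicted standard deviations, and r is the correlation coefficient. The sketch below computes these statistics for a toy series; the function name and the values are hypothetical and are not taken from the study.

```python
import math

def taylor_statistics(obs, pred):
    """Return (std_obs, std_pred, correlation, centered RMS difference)."""
    n = len(obs)
    mean_obs = sum(obs) / n
    mean_pred = sum(pred) / n
    std_obs = math.sqrt(sum((o - mean_obs) ** 2 for o in obs) / n)
    std_pred = math.sqrt(sum((p - mean_pred) ** 2 for p in pred) / n)
    cov = sum((o - mean_obs) * (p - mean_pred) for o, p in zip(obs, pred)) / n
    corr = cov / (std_obs * std_pred)
    # Centered difference: means are removed before comparing the series.
    crmsd = math.sqrt(
        sum(((p - mean_pred) - (o - mean_obs)) ** 2 for o, p in zip(obs, pred)) / n
    )
    return std_obs, std_pred, corr, crmsd

# Toy series (hypothetical values).
observed = [2.0, 4.0, 6.0, 8.0]
predicted = [3.0, 4.0, 5.0, 8.0]
std_o, std_p, r, e_centered = taylor_statistics(observed, predicted)

# Law-of-cosines identity underlying the diagram's geometry.
identity_rhs = std_o ** 2 + std_p ** 2 - 2.0 * std_o * std_p * r
```

A model plots close to the observed point when its correlation is high and its standard deviation is close to the observed one, which by the identity also means a small centered RMS difference.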

Discussion

According to the subset regression analysis results, the best input combination for Ep prediction included Tmin, Tmax, RHmin, RHmax, insolation, and wind speed. Previous studies showed that these factors physically impacted Ep79,82. The best subset combination was used to predict the daily Ep by constructing DNN, DNN-SVM, DNN-BART, DNN-RSS, DNN-M5 pruned, and DNN-RF models. The results showed that DNN-RF performed better during the training period; however, DNN-SVM showed superiority during the testing period. Therefore, the DNN-SVM model could be utilized as a prediction tool for daily evaporation at the selected station under semi-arid conditions. Applying the model in other contexts would require recalibration with new data. Combining DNN and SVM models integrates the feature extraction capabilities of DNNs and the robust classification power of SVMs. For instance, Huynh et al.83 proposed a hybrid model that utilizes DNNs for automatic feature extraction from high-dimensional gene expression data, followed by SVM for classification, resulting in improved accuracy over standalone models. Similarly, Díaz-Vico et al.84 developed deep SVM models by integrating the non-linear feature extraction capabilities of DNN while incorporating the loss functions of SVM. This approach achieved comparable performance to standard SVM but with the added benefit of improved scalability for larger datasets. Other studies applying such hybrids in different fields (beyond hydrology) include Ahmad et al.85, Ma et al.86, Jo et al.87, Elbeltagi et al.88 and Prasunna et al.89. These findings suggest that a DNN-SVM hybrid model could effectively combine the strengths of both approaches, potentially leading to superior performance in daily evaporation prediction under semi-arid conditions as well.

Other recent research3,79,90,91 conducted on different continents corroborates the findings of the current study. Lin et al.91 compared two ML algorithms (SVM and backpropagation network) for estimating daily evaporation values. The results showed that SVM has a high capacity to predict daily Ep values and could be a viable alternative for Ep estimates. Malik et al.3 compared the accuracy of five ML algorithms (i.e., multivariate adaptive regression spline, SVM, multi-gene genetic programming, multiple model-ANN, and M5 model tree) in predicting the monthly Ep in India. The authors found that the multi-gene genetic programming and multiple model-ANN algorithms outperformed the SVM, multivariate adaptive regression spline, and M5 model tree approaches in terms of prediction performance, as evidenced by their low RMSE values. Kushwaha et al.79 tested four ML algorithms (SVM, RSS, random tree, and reduced error pruning tree) in Northern India under various climate circumstances. According to the study, SVM outperformed the other applied algorithms because it had high R and Willmott index values and low MAE and RMSE values. Chen et al.90 studied the efficacy of SVM for monthly Ep prediction at six distinct sites along the Yangtze River in China. The SVM was superior to standard approaches for estimating Ep in the study. The current study also shows that the DNN-SVM hybrid ML method predicts daily Ep more accurately than the other algorithms. Overall, the findings show that hybrid models are more predictive in real-world scenarios and may be used effectively in watersheds with limited data. These models could forecast a wide range of hydrological and water resource phenomena besides Ep.

Despite its contributions, this study has certain limitations. Since the primary objective was to evaluate the comparative performance of standalone and hybrid DNN models, alternative deep learning architectures such as convolutional neural networks, gated recurrent units, and other hybrid frameworks were not explored. Future studies could extend this research by incorporating these advanced models to improve prediction accuracy further and assess their generalizability across diverse climatic conditions. Additionally, while the current study addresses Ep estimation, multi-step ahead forecasting was not within its scope. Future research should explore long-term Ep prediction using hybrid models integrated with temporal learning mechanisms such as long short-term memory and transformer-based architectures, which have demonstrated superior sequence modeling capabilities (e.g., Roy et al.92). Expanding the model to diverse climatic regions will help validate its robustness, while integrating remote sensing and satellite data can improve spatial accuracy. Moreover, the study’s findings should be validated across other semi-arid regions worldwide to assess their adaptability under different environmental settings, as suggested by El-Kenawy et al.93. Comparative studies across multiple geographical contexts would boost the credibility and applicability of hybrid models in hydrological forecasting. Additionally, uncertainty quantification through Bayesian learning can improve model reliability and refine interpretability. Coupling artificial intelligence (AI) models with climate change projections can predict future evaporation trends under different scenarios. Furthermore, real-time applications such as AI-driven decision support systems and early warning mechanisms can aid water resource management. Evolutionary optimization techniques like genetic algorithms and swarm intelligence can refine model efficiency, while policy-driven studies can assess the socioeconomic impacts of evaporation variability. Integrating hybrid deep learning models with soil moisture indices and large-scale climate predictors such as the El Niño-Southern Oscillation and the Madden-Julian Oscillation could improve evaporation estimation under changing climate scenarios. These advancements will strengthen AI-driven hydrological modeling for sustainable water management in arid and semi-arid regions.

Conclusions

This study evaluated the effectiveness of standalone and hybrid DNN models in estimating Ep at the semi-arid Sidi Yakoub meteorological station, Wadi Sly basin, Algeria. Hybrid models were constructed by integrating DNN with advanced ML algorithms, including SVM, BART, RSS, M5 pruned, and RF. A comprehensive dataset spanning 2000–2010 was utilized, with 75% designated for training and 25% for testing. Subset regression analysis identified the most influential meteorological variables (i.e., wind speed, sunshine hours, and maximum and minimum temperature and relative humidity), followed by sensitivity analysis to determine their predictive significance. Performance evaluation using multiple statistical metrics revealed that hybrid models consistently outperformed the standalone DNN model. Among them, the DNN-SVM model demonstrated the highest accuracy (R2 = 0.651, RMSE = 3.000, MAE = 2.127, NSE = 0.649, and PBIAS = 3.540), highlighting its ability to capture complex nonlinear relationships between meteorological parameters and Ep variations. Notably, models incorporating maximum and minimum temperature and humidity, rather than average values, exhibited superior predictive capabilities, reinforcing the importance of precise meteorological inputs. The findings underscore the potential of DNN-SVM as a robust and reliable predictive tool for Ep estimation in semi-arid environments, with broader applicability across Algeria. The study contributes to improving hydrological modeling and water resource management by providing a data-driven, machine-learning-based framework for evaporation forecasting. Future research could refine model performance by integrating wavelet packet decomposition and complete ensemble empirical mode decomposition, further enhancing predictive accuracy in diverse climatic conditions.

Data availability

The authors confirm that the data supporting the findings of this study are available within the article.

Abbreviations

- AI: Artificial intelligence
- AIC: Akaike information criterion
- ANN: Artificial neural network
- BART: Bayesian additive regression trees
- DNN: Deep neural network
- DT: Decision tree
- Ep: Pan evaporation
- MAE: Mean absolute error
- ML: Machine learning
- NSE: Nash–Sutcliffe efficiency
- P (mm): Precipitation
- PBIAS: Percentage bias
- PC: Amemiya prediction criterion
- R2: Determination coefficient
- Ra (MJm2): Extra-terrestrial radiation
- RF: Random forest
- RHmax (%): Maximum relative humidity
- RHmean (%): Mean relative humidity
- RHmin (%): Minimum relative humidity
- RMSE: Root mean square error
- Rs (MJm2): Solar radiation
- RSS: Random subspace
- SBC: Schwarz Bayesian criterion
- SSH (hours): Sunshine hours
- SVM: Support vector machine
- Tmax (℃): Maximum temperature
- Tmean (℃): Mean temperature
- Tmin (℃): Minimum temperature
- VP (hPa): Vapor pressure
- WSP (ms−1): Wind speed

References

Kanwar, N., Kuniyal, J. C., Rautela, K. S., Singh, L. & Pandey, D. C. Longitudinal assessment of extreme climate events in Kinnaur district, Himachal pradesh, north-western himalaya, India. Environ. Monit. Assess. 196 (6), 557 (2024).

Ghiat, I., Mackey, H. R. & Al-Ansari, T. A review of evapotranspiration measurement models, techniques and methods for open and closed agricultural field applications. Water 13 (18), 2523 (2021).

Malik, A. et al. Modeling monthly pan evaporation process over the Indian central himalayas: application of multiple learning artificial intelligence model. Eng. Appl. Comput. Fluid Mech. 14 (1), 323–338 (2020).

Pande, C. B. et al. Characterizing land use/land cover change dynamics by an enhanced random forest machine learning model: a Google Earth Engine implementation. Environ. Sci. Eur. 36, 84 (2024).

Qasem, S. N. et al. Modeling monthly pan evaporation using wavelet support vector regression and wavelet artificial neural networks in arid and humid climates. Eng. Appl. Comput. Fluid Mech. 13 (1), 177–187 (2019).

Shabani, S. et al. Modeling pan evaporation using Gaussian process regression k-nearest neighbors random forest and support vector machines; comparative analysis. Atmosphere 11 (1), 66 (2020).

Ghorbani, M. A., Deo, R. C., Yaseen, Z. M., Kashani, M. H. & Mohammadi, B. Pan evaporation prediction using a hybrid multilayer perceptron-firefly algorithm (MLP-FFA) model: case study in North Iran. Theoret. Appl. Climatol. 133, 1119–1113 (2017).

Majhi, B., Naidu, D., Mishra, A. P. & Satapathy, S. C. Improved prediction of daily pan evaporation using deep-LSTM model. Neural Comput. Appl. 32 (12), 7823–7783 (2020).

Wu, L. et al. Hybrid extreme learning machine with meta-heuristic algorithms for monthly pan evaporation prediction. Comput. Electron. Agric. 168, 105115 (2020).

Masoner, D. I. Differences in evaporation between a floating pan and class a pan on land 1. J. Am. Water Resour. Assoc. 44 (3), 552–556 (2008).

Kişi, Ö. Daily pan evaporation modelling using multi-layer perceptrons and radial basis neural networks. Hydrol. Processes: Int. J. 23 (2), 213–223 (2009).

Ashrafzadeh, A., Ghorbani, M. A., Biazar, S. M. & Yaseen, Z. M. Evaporation process modelling over Northern iran: application of an integrative data-intelligence model with the Krill herd optimization algorithm. Hydrol. Sci. J. 64 (15), 1843 –185 (2019).

Lu, X. et al. Daily pan evaporation modeling from local and cross-station data using three tree-based machine learning models. J. Hydrol. 566, 668–684 (2018).

Al-Mukhtar, M. Modeling of pan evaporation based on the development of machine learning methods. Theoret. Appl. Climatol. 146 (3), 961–979 (2021).

Salih, S. Q. et al. Viability of the advanced adaptive neuro-fuzzy inference system model on reservoir evaporation process simulation: case study of Nasser lake in Egypt. Eng. Appl. Comput. Fluid Mech. 13 (1), 878–891 (2019).

Khan, N., Shahid, S., Ismail, T. & Wang, X. J. Spatial distribution of unidirectional trends in temperature and temperature extremes in Pakistan. Theoret. Appl. Climatol. 136 (3–4), 899–913 (2018).

Naganna, S. R. et al. Dew point temperature estimation: application of artificial intelligence model integrated with nature-inspired optimization algorithms. Water 11 (4), 742 (2019).

Abdollahpour, S., Kosari-Moghaddam, A. & Bannayan, M. Prediction of wheat moisture content at harvest time through ANN and SVR modeling techniques. Inform. Process. Agric. 7 (4), 500–551 (2020).

Khan, N. et al. Prediction of droughts over Pakistan using machine learning algorithms. Adv. Water Resour. 139, 103562 (2020).

Jehanzaib, M., Bilal Idrees, M., Kim, D. & Kim, T. W. Comprehensive evaluation of machine learning techniques for hydrological drought forecasting. J. Irrig. Drain. Eng. 147 (7), 0402102 (2021).

Salih, S. Q. et al. Integrative stochastic model standardization with genetic algorithm for rainfall pattern forecasting in tropical and semi-arid environments. Hydrol. Sci. J. 65 (7), 1145–1157 (2020).

Elshaboury, N., Elshourbagy, M., Al-Sakkaf, A. & Abdelkader, E. M. Rainfall forecasting in arid regions using an ensemble of artificial neural networks. J. Phys: Conf. Ser. 1900, 012015 (2021).

Elbeltagi, A. et al. Data intelligence and hybrid metaheuristic algorithms-based Estimation of reference evapotranspiration. Appl. Water Sci. 12 (7), 1–18 (2022).

Kushwaha, N. L. et al. Evaluation of data-driven hybrid machine learning algorithms for modelling daily reference evapotranspiration. Atmos. Ocean. 60 (5), 1–22 (2022).

Rezaie-Balf, M. et al. Physicochemical parameters data assimilation for efficient improvement of water quality index prediction: comparative assessment of a noise suppression hybridization approach. J. Clean. Prod. 271, 122576 (2020).

Ghimire, S. et al. Streamflow prediction using an integrated methodology based on convolutional neural network and long short-term memory networks. Sci. Rep. 11 (1), 1–26 (2021).

He, S. et al. Machine learning improvement of streamflow simulation by utilizing remote sensing data and potential application in guiding reservoir operation. Sustainability 13 (7), 3645 (2021).

Chia, M. Y., Huang, Y. F. & Koo, C. H. Support vector machine enhanced empirical reference evapotranspiration Estimation with limited meteorological parameters. Comput. Electron. Agric. 175, 105577 (2020).

Malik, A. et al. Deep learning versus gradient boosting machine for pan evaporation prediction. Eng. Appl. Comput. Fluid Mech. 16 (1), 570–587 (2022).

Mehr, A. D. et al. Genetic programming in water resources engineering: A state-of-the-art review. J. Hydrol. 566, 643–667 (2018).

Jing, W. et al. Implementation of evolutionary computing models for reference evapotranspiration modeling: short review, assessment and possible future research directions. Eng. Appl. Comput. Fluid Mech. 13 (1), 811–823 (2019).

Al-Mukhtar, M. Random forest, support vector machine, and neural networks to modelling suspended sediment in Tigris River-Baghdad. Environ. Monit. Assess. 191 (11), 1–12 (2019).

Adnan, R. M., Malik, A., Kumar, A., Parmar, K. S. & Kisi, O. Pan evaporation modeling by three different neurofuzzy intelligent systems using Climatic inputs. Arab. J. Geosci. 12 (20), 60 (2019).

Ghaemi, A., Rezaie-Balf, M., Adamowski, J., Kisi, O. & Quilty, J. On the applicability of maximum overlap discrete wavelet transform integrated with MARS and M5 model tree for monthly pan evaporation prediction. Agric. For. Meteorol. 278, 107647 (2019).

Khosravi, K. et al. Meteorological data mining and hybrid data-intelligence models for reference evaporation simulation: A case study in Iraq. Comput. Electron. Agric. 167, 105041 (2019).

Kisi, O. & Heddam, S. Evaporation modelling by heuristic regression approaches using only temperature data. Hydrol. Sci. J. 64 (6), 653–672 (2019).

Rezaie-Balf, M., Kisi, O. & Chua, L. H. C. Application of ensemble empirical mode decomposition based on machine learning methodologies in forecasting monthly pan evaporation. Hydrol. Res. 50 (2), 498–451 (2019).

Sebbar, A., Heddam, S. & Djemili, L. Predicting daily pan evaporation (E pan) from dam reservoirs in the mediterranean regions of algeria: OPELM VS OSELM. Environ. Processes. 6 (1), 309–331 (2019).

Alsumaiei, A. A. Utility of artificial neural networks in modeling pan evaporation in hyper-arid climates. Water 12 (5), 1508 (2020).

Yaseen, Z. M. et al. Prediction of evaporation in arid and semi-arid regions: A comparative study using different machine learning models. Eng. Appl. Comput. Fluid Mech. 14 (1), 70–78 (2020).

Abed, M., Imteaz, M. A., Ahmed, A. N. & Huang, Y. F. Modelling monthly pan evaporation utilising random forest and deep learning algorithms. Sci. Rep. 12 (1), 13132 (2022).

Ehteram, M., Graf, R., Ahmed, A. N. & El-Shafie, A. Improved prediction of daily pan evaporation using bayesian model averaging and optimized kernel extreme machine models in different climates. Stoch. Env. Res. Risk Assess. 36 (11), 3875–3910 (2022).

Novotná, B. et al. Machine learning for pan evaporation modeling in different agroclimatic zones of the Slovak Republic (Macro-Regions). Sustainability 14 (6), 347 (2022).

El Bilali, A. et al. An interpretable machine learning approach based on DNN, SVR, extra tree, and XGBoost models for predicting daily pan evaporation. J. Environ. Manage. 327, 116890 (2023).

Elbeltagi, A., Al-Mukhtar, M., Kushwaha, N. L., Al-Ansari, N. & Vishwakarma, D. K. Forecasting monthly pan evaporation using hybrid additive regression and data-driven models in a semi-arid environment. Appl. Water Sci. 13 (2), 4 (2023).

Fu, T. & Li, X. Estimating the monthly pan evaporation with limited Climatic data in dryland based on the extended long short-term memory model enhanced with meta-heuristic algorithms. Sci. Rep. 13 (1), 5960 (2023).

Mohammed, A. S., Al-Hadeethi, B. & Almawla, A. S. Daily evapotranspiration prediction at arid and semiarid regions by using multiple linear regression technique at Ramadi City in Iraq region. IOP Conf. Series: Earth Environ. Sci. 1222, p012033 (2023).

Rajput, J. et al. Development of machine learning models for estimation of daily evaporation and mean temperature: a case study in New Delhi, India. Water Pract. Technol. 1, 2024144 (2024).

Hinton, G. E., Osindero, S. & Teh, Y. W. A fast learning algorithm for deep belief Nets. Neural Comput. 18 (7), 1527–1554 (2006).

Achieng, K. O. Modelling of soil moisture retention curve using machine learning techniques: artificial and deep neural networks vs support vector regression models. Comput. Geosci. 133, 104320 (2019).

Miikkulainen, R. et al. Evolving deep neural networks. In Artificial Intelligence in the Age of Neural Networks and Brain Computing 293–312 (Academic Press, 2019).

Cortes, C. & Vapnik, V. Support-vector networks. Mach. Learn. 20 (3), 273–297 (1995).

Tan, Y. V. & Roy, J. Bayesian additive regression trees and the general BART model. Stat. Med. 38 (25), 5048–5069 (2019).

Sparapani, R., Spanbauer, C. & McCulloch, R. Nonparametric machine learning and efficient computation with bayesian additive regression trees: the BART R package. J. Stat. Softw. 97, 1–66 (2021).

Ho, T. K. The random subspace method for constructing decision forests. IEEE Trans. Pattern Anal. Mach. Intell. 20 (8), 832–844 (1998).

Lasota, T., Łuczak, T., Niemczyk, M., Olszewski, M. & Trawiński, B. Investigation of property valuation models based on decision tree ensembles built over noised data. In International Conference on Computational Collective Intelligence 417–426 (Springer, 2013).

Skurichina, M. & Duin, R. P. Bagging, boosting and the random subspace method for linear classifiers. Pattern Anal. Appl. 5 (2), 121–135 (2002).

Quinlan, J. R. Learning with continuous classes. In 5th Australian Joint Conference on Artificial Intelligence, vol. 92, 343–348 (1992).

Wang, Y. & Witten, I. H. Induction of Model Trees for Predicting Continuous Classes (University of Waikato, 1996).

Franklin, J. The elements of statistical learning: data mining, inference and prediction. Math. Intelligencer. 27 (2), 83–85 (2005).

Breiman, L. Random forests. Mach. Learn. 45 (1), 5–32 (2001).

Shi, T. & Horvath, S. Unsupervised learning with random forest predictors. J. Comput. Graphical Stat. 15 (1), 118–138 (2006).

Niemeyer, J., Rottensteiner, F. & Soergel, U. Contextual classification of lidar data and Building object detection in urban areas. ISPRS J. Photogrammetry Remote Sens. 87, 152–165 (2014).

Legates, D. R. & McCabe, G. J. Jr. Evaluating the use of ‘goodness-of-fit’ measures in hydrologic and hydroclimatic model validation. Water Resour. Res. 35 (1), 233–241 (1999).

Chai, T. & Draxler, R. R. Root mean square error (RMSE) or mean absolute error (MAE)? Arguments against avoiding RMSE in the literature. Geosci. Model Dev. 7 (3), 1247–1250 (2014).

Tao, H. et al. Hybridized artificial intelligence models with nature-inspired algorithms for river flow modeling: A comprehensive review, assessment, and possible future research directions. Eng. Appl. Artif. Intell. 129, 107559 (2024).

Samantaray, S. & Sahoo, A. Groundwater level prediction using an improved ELM model integrated with hybrid particle swarm optimisation and grey Wolf optimisation. Groundw. Sustainable Dev. 26, 101178 (2024).

Al-Mukhtar, M., Srivastava, A., Khadke, L., Al-Musawi, T. & Elbeltagi, A. Prediction of irrigation water quality indices using random committee, discretization regression, reptree, and additive regression. Water Resour. Manage. 38 (1), 343–368 (2024).

Samantaray, S., Sahoo, A. & Baliarsingh, F. Groundwater level prediction using an improved SVR model integrated with hybrid particle swarm optimization and firefly algorithm. Clean. Water. 1, 100003 (2024).

Hameed, M. M. et al. Investigating a hybrid extreme learning machine coupled with Dingo optimization algorithm for modeling liquefaction triggering in sand-silt mixtures. Sci. Rep. 14 (1), 10799 (2024).

Mahakur, V., Mahakur, V. K., Samantaray, S. & Ghose, D. K. Prediction of runoff at ungauged areas employing interpolation techniques and deep learning algorithm. HydroResearch 8, 265–275 (2025).

Samantaray, S., Sahoo, A., Yaseen, Z. M. & Al-Suwaiyan, M. S. River discharge prediction based multivariate Climatological variables using hybridized long short-term memory with nature inspired algorithm. J. Hydrol. 649, 132453 (2025).

Tulla et al. Daily suspended sediment yield estimation using soft-computing algorithms for hilly watersheds in a data-scarce situation: a case study of Bino watershed, Uttarakhand. Theor Appl Climatol. 155, 4023–4047 (2024).

Elbeltagi et al. Forecasting vapor pressure deficit for agricultural water management using machine learning in semi-arid environments. Agric. Water Manag. 283, 108302 (2023).

Samantaray, S. & Ghose, D. K. Prediction of S12-MKII Rainfall Simulator Experimental Runoff Data Sets (2022).

Masood, A. et al. Improving PM2.5 prediction in new Delhi using a hybrid extreme learning machine coupled with snake optimization algorithm. Sci. Rep. 13 (1), 2105 (2023).

Elbeltagi, A. et al. Forecasting actual evapotranspiration without climate data based on stacked integration of DNN and meta-heuristic models across China from 1958 to 2021. J. Environ. Manage. 345, 118697 (2023).

Kumar, M. et al. The superiority of data-driven techniques for Estimation of daily pan evaporation. Atmosphere 12 (6), 701 (2021).

Kushwaha, N. L. et al. Data intelligence model and meta-heuristic algorithms-based pan evaporation modelling in two different agro-climatic zones: A case study from Northern India. Atmosphere 12 (12), 1654 (2021).

Khosravi, K., Mao, L., Kisi, O., Yaseen, Z. M. & Shahid, S. Quantifying hourly suspended sediment load using data mining models: case study of a glacierized Andean catchment in Chile. J. Hydrol. 567, 165–179 (2018).

Taylor, K. E. Summarizing multiple aspects of model performance in a single diagram. J. Geophys. Research: Atmos. 106 (D7), 7183–7192 (2001).

Vishwakarma, D. K. et al. Evaluation of CatBoost Method for Predicting Weekly Pan Evaporation in Subtropical and Sub-Humid Regions. Pure Appl. Geophys. 181, 719–747 (2024).

Huynh, P. H., Nguyen, V. H. & Do, T. N. A coupling support vector machines with the feature learning of deep convolutional neural networks for gene expression data classification. In Modern Approaches for Intelligent Information and Database Systems (eds. Sieminski, A. et al.) 233–243 (Springer, 2018).

Díaz-Vico, D. et al. Deep support vector classification and regression. In From Bioinspired Systems and Biomedical Applications to Machine Learning. IWINAC 2019. Lecture Notes in Computer Science (eds. Ferrández Vicente, J.), vol. 11487, 33–43 (Springer, 2019).

Ahmad, J. et al. Determining speaker attributes from stress-affected speech in emergency situations with hybrid SVM-DNN architecture. Multimedia Tools Appl. 77, 4883–4907 (2018).

Ma, T., Yu, Y., Wang, F., Zhang, Q. & Chen, X. A hybrid methodologies for intrusion detection based deep neural network with support vector machine and clustering technique. In Frontier Computing. FC 2016. Lecture Notes in Electrical Engineering (eds Yen, N. & Hung, J.), vol. 422 (Springer, 2018).

Jo, G. R., Baek, B., Kim, Y. S. & Lim, D. H. Transfer learning based DNN-SVM hybrid model for breast cancer classification. J. Korea Soc. Comput. Inform. 28 (11), 1–11 (2023).

Elbeltagi, A. et al. Advanced stacked integration method for forecasting long-term drought severity: CNN with machine learning models. J. Hydrology: Reg. Stud. 53, 101759 (2024).

Prasunna, D. L. N. & Ashesh, K. Advancements in skin cancer diagnosis: A literature survey and hybrid approach employing SVM and DNN models with results analysis. In 2024 IEEE 6th International Conference on Cybernetics, Cognition and Machine Learning Applications (ICCCMLA) 73–77 (IEEE, 2024).

Chen, J. L., Yang, H., Lv, M. Q., Xiao, Z. L. & Wu, S. J. Estimation of monthly pan evaporation using support vector machine in three Gorges reservoir area, China. Theoret. Appl. Climatol. 138 (1), 1095–1107 (2019).

Lin, G. F., Lin, H. Y. & Wu, M. C. Development of a support-vector‐machine‐based model for daily pan evaporation Estimation. Hydrol. Process. 27 (22), 3115–3312 (2013).

Roy, D. K. et al. Daily prediction and multi-step forward forecasting of reference evapotranspiration using LSTM and Bi-LSTM models. Agronomy 12 (3), 594 (2022).

El-Kenawy, E. S. M. et al. Improved weighted ensemble learning for predicting the daily reference evapotranspiration under the semi-arid climate conditions. Environ. Sci. Pollut. Res. 1, 1–2 (2022).

Funding

Open access funding provided by University of Pécs.

Author information

Contributions

Mohammed Achite, Manish Kumar, Nehal Elshaboury, Aman Srivastava, Ali Salem and Ahmed Elbeltagi contributed to the study conception and design, data collection, analysis and interpretation of results, and manuscript drafting. All authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Achite, M., Kumar, M., Elshaboury, N. et al. Comparative assessment of standalone and hybrid deep neural networks for modeling daily pan evaporation in a semi-arid environment. Sci Rep 15, 20179 (2025). https://doi.org/10.1038/s41598-025-05985-z

DOI: https://doi.org/10.1038/s41598-025-05985-z